The Internet never began as a single creation or entity, like the invention of the telephone. It was an outgrowth of ARPANet, a project of the Advanced Research Projects Agency (ARPA), of the Department of Defense, an attempt to link our military, defense contractors, and universities together.

Prior to the Internet, computer networks were “hooked up” in a linear fashion, and every network had to be operating. If you had three network computers in a row and the middle one went down for repair, the first and last computer couldn’t talk to each other.

In 1969, ARPA set up the first network that was not centralized; there wasn’t any single computer running the show. With the new system, any number of computers could talk to each other and information was automatically rerouted should any computer go off-line. The fledgling network linked four universities together, with computers from the National Science Foundation also joining in.

By 1983, ARPANet had switched to the Transmission Control Protocol/Internet Protocol (TCP/IP), still in use today. TCP/IP specifies how data is formatted, addressed, transmitted, routed, and received at the destination. The word “Internet” appeared at about the same time, and commercial activity on the Internet started shortly after. The first countries to participate outside the United States were England and Norway.

How big is the Internet? Very big! An estimated four out of every five Americans will use the Internet this week. Worldwide, about two billion, or about two of every seven people on planet Earth, will log on. The biggest users of the Internet are China, the United States, India, Japan, and Brazil. Of course, that has much to do with the population of these countries. If we go by the percentage of the population that uses the Internet, four countries (Japan, the United States, France, and South Korea) are about the same, right around 80 percent.

The World Wide Web is the most popular part of the Internet. It started in 1989 with fifty people sharing Web pages. Today it seems that everybody and her brother has a website.

Advertising is also big business on the Internet. And at this point, many kinds of companies do most of their business on the Internet.

Now that the Internet has grown so large, there are around twenty major search engines out there to help us navigate it, and competition is fierce. The top five, in order of popularity, are Google, Bing, Yahoo!, Ask, and AOL.

A helicopter is an amazing machine. A train can go forward and backward. A car goes forward and back plus left and right. A plane can move forward, left and right, and up and down. A helicopter can do everything an airplane can do and three additional things: A helicopter can hover motionless in the air, rotate in place, and move sideways, backward, and straight up and down.

A helicopter gets its lift in much the same way an airplane does (see question 111). Air that goes over a wing at a faster speed than air below the wing creates less pressure on the top of the wing and more pressure on the bottom of the wing. That greater pressure on the bottom of the wing is called lift. A helicopter has two or more wings mounted on a central shaft, much like the blades on a ceiling fan. Indeed, the propeller on a small aircraft is really a rotating wing. The same Bernoulli’s principle applies. Helicopter blades are thinner and narrower than wings on an airplane, because they have to go much faster. Helicopter blades rotate rapidly to create sufficient lift, whereas the wings on an airplane move at the speed of the plane itself.

The helicopter’s primary assembly of rotating wings is the main rotor. Increase the angle of those blades, called the angle of attack, and the blades generate more lift. A gasoline reciprocating engine (the same type of engine in our cars) or a jet engine turns the main rotor.

So far, we have a vehicle that generates enough lift to get off the ground. But as soon as it became airborne, the whole machine would rotate in the opposite direction from the main rotor, in accordance with Newton’s law of action and reaction. Rotor blades turn one way (action), the machine turns the other way (reaction).

There are several solutions. One is to put two main rotors on the helicopter, one in the front and one in the back, then turn them in opposite directions. An example is Boeing’s CH-47 Chinook, flown by the military. You see these Chinooks in the news because they’ve been used in Iraq and Afghanistan.

But the most common solution is to attach another, smaller rotor on the tail and power it with a long shaft, or boom, attached to the engine. The tail rotor produces the force, or thrust, just like an airplane propeller. The rotor is mounted vertically to produce sideways thrust, counteracting the tendency of the vehicle to spin in the opposite direction from the main rotor. By varying the amount of this sideways thrust, the pilot is able to turn the helicopter left and right. The two “rudder pedals” control the pitch, or blade angle, of the tail rotor.

But it gets even more complex! The main rotor must control the directions up and down and also laterally (sideways). For this, a swash plate assembly does the job, because it allows a pilot to control both movements at once. Pilots use a collective control in their left hands to control the overall pitch of the main blades. This makes the helicopter go up and down. And in their right hands, they hold the cyclic pitch control, which changes the angles of the blades individually as they go around. This makes the copter move in any horizontal direction: forward, backward, left, or right.

Igor Sikorsky, an immigrant from Ukraine, is credited with the first successful and practical helicopter. First flown in May 1940, his VS-300 paved the way for most modern helicopters, which are still based on his design.

Just about any indirect injection diesel engine can run on vegetable oil—with a conversion kit, of course. An indirect injection engine is one in which the fuel is not injected directly into the cylinders for combustion, as it is in a typical diesel engine. In an indirect injection engine, the fuel is delivered to a pre-chamber where combustion begins.

Vegetable oil falls into the biodiesel fuel category, and with crude oil prices over one hundred dollars a barrel, biodiesel looks more promising. Biodiesel can be made from corn, animal fats, kitchen cooking oil, and soybeans.

Biodiesel is made from biological matter. It is nontoxic and renewable. Gasoline, on the other hand, is made from petroleum. It is both toxic and nonrenewable.

Biodiesel fuels can be used in pure form, but they are usually blended with petroleum-based diesel fuels. B20 is the most common blend, with 20 percent biodiesel blended with 80 percent standard diesel fuel. B100 refers to pure biodiesel.
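The blend names translate directly into volumes. Here is a minimal sketch of that arithmetic; the function name `blend_volumes` and the ten-gallon tank are made up for illustration and are not taken from any fuel standard.

```python
def blend_volumes(total_gallons, biodiesel_percent):
    """Split a tank of blended fuel into biodiesel and petroleum diesel.

    biodiesel_percent is the number after the "B": 20 for B20, 100 for B100.
    """
    biodiesel = total_gallons * biodiesel_percent / 100
    petroleum_diesel = total_gallons - biodiesel
    return biodiesel, petroleum_diesel


# A hypothetical ten-gallon fill-up of B20.
bio, petro = blend_volumes(10, 20)
print(f"B20: {bio} gallons biodiesel, {petro} gallons standard diesel")
```

For B100, the same function returns the whole tank as biodiesel and zero gallons of standard diesel.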

Biodiesel has some advantages over gasoline and pure diesel. It helps reduce dependency on foreign oil, is environmentally friendly, lubricates the engine, reduces engine wear, and has fewer emissions. Furthermore, biodiesel is safer. It degrades faster than gasoline, so in a spill, cleanup is faster and easier. Biodiesel has a higher flashpoint than conventional diesel, which means it takes a higher temperature to make it burn, so it’s less likely to accidentally combust or explode.

Biodiesel does have some downsides. Even though there is a decrease in particulate matter in emissions, burning it does emit more nitrogen oxides (NOx), which contribute to smog. The new biodiesel fuels can loosen deposits built up in the system, which then clog filters and fuel pumps. Some reports show that there can also be a decrease in engine power and fuel economy. Biodiesels can also cost more.

At the present time, it seems that E85 has a toehold in the market that biodiesel does not share. E85 is 85 percent ethanol blended with 15 percent regular gasoline. Biodiesel use has been restricted mainly to enthusiasts: people who have, on their own, converted older diesel vehicles to run on cooking oil from deep-fat fryers used in restaurants.

It seems hard to believe that an aircraft, like the Air Force’s C-5A cargo plane, with a gross weight of eight hundred thousand pounds, can stay aloft. How can something so big and so heavy actually get off the ground?

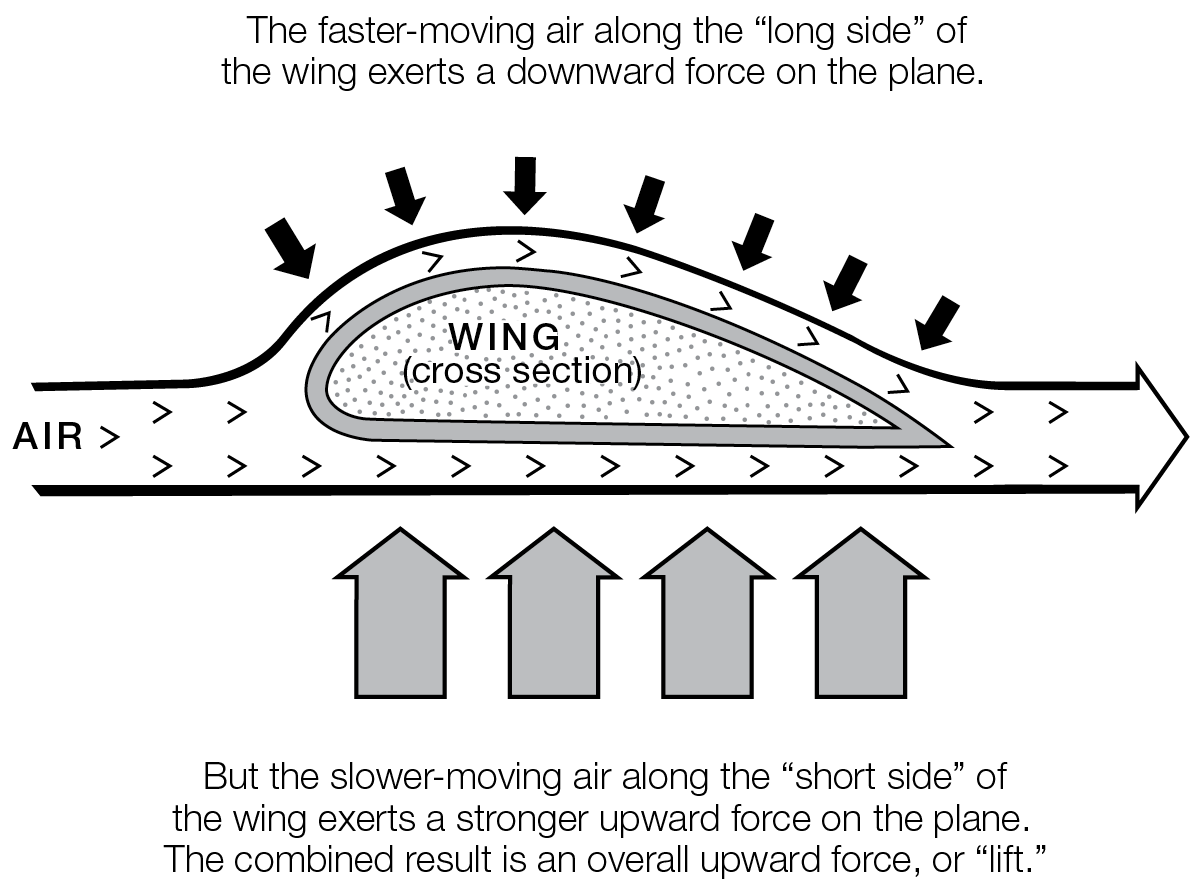

There are two theories of how lift occurs on a wing. Both are correct and both are useful in explaining the forces on an airplane.

The conventional and classic Longer Path explanation uses the Bernoulli effect. The top surface of the wing is more curved than the bottom side. Air traveling over the top of the wing has a greater distance to go—a longer path—than air passing underneath the wing. So the air over the top of the wing must travel faster than the air under the wing. The air passing over the top of the wing and from below the wing must meet behind the wing, otherwise a vacuum would be left in space.

The Bernoulli principle states that faster-moving airflow develops less pressure, while the slower-moving air has more pressure. That greater pressure on the bottom of the wing is termed “lift.” Basically, this pressure difference creates an upward suction on the top of the wing.

The competing but complementary theory is based on Isaac Newton’s third law of motion, the law of action and reaction, which states that “for every action there is an equal and opposite reaction.” When air molecules hit the bottom surface of the wing at a glancing angle, they bounce off and are pushed downward. The opposite reaction is the wing’s being pushed upward. Hence, we have lift. It’s similar to BBs hitting a metal plate—they’ll rebound backward. BBs go in one direction and the metal plate goes in the opposite direction.

As it turns out, the Bernoulli Longer Path theory is a better explanation of lift for slower-speed planes, including jet airliners. Newton’s third law is best suited for hypersonic planes that fly high in the thin air at more than five times the speed of sound.

The navy’s Korean War–era Douglas AD Skyraider was the first military plane to carry a load equal to its own weight. The Douglas DC-7, put into passenger service in the early 1960s, was the first commercial plane to lift a load equal to its empty weight.

Eye surgeons have used lasers in retinal surgery for over forty years. They use them to zap bleeding blood vessels on the retina and also to repair a detached retina. The retina is the layer of cells in the back of the eye that receives light and converts it into electrical impulses that are sent to the brain. Eye surgeons also use lasers to correct nearsightedness. The most common case is the LASIK procedure that we see advertised in newspapers and on television. LASIK is an acronym for laser-assisted in-situ keratomileusis.

We call a person nearsighted if their eyeball is too long, so the cornea and lens in their eye focus light on a point in front of the retina. Nearsighted people see nearby objects clearly, but distant objects are blurry to them. For farsighted people, it’s the other way around. Younger people tend to be nearsighted, whereas older people tend to be farsighted. In addition, older people who were nearsighted when they were young don’t necessarily have any improvement in their distance vision when they lose their near vision.

LASIK surgery is very effective for treating nearsightedness. LASIK flattens the cornea, the tough, transparent outer covering of the eye. The cornea does about two-thirds of the refracting, or bending, of light that enters the eye, and the lens does about one-third. Flattening the cornea lets the eye focus light farther back, right on the retina, just like a normal eye does.

Laser eye surgery removes a little of the cornea to flatten out the curvature. In the LASIK procedure, the surgeon folds a flap of the outer corneal tissue out of the way and uses the laser to reshape the underlying corneal tissue, then replaces the flap over the reshaped areas to let it heal to the new shape. Those big, colorful ads in newspapers and magazines do a nice job of showing how the LASIK surgery is performed. Healing is fast, and results are excellent. The cost is several thousand dollars per eye.

What are the problems? There are three main possibilities. One is undercorrecting. The surgeon doesn’t remove enough underlying tissue, and the person retains some nearsightedness. The second is overcorrecting. The surgeon removes too much tissue, and the person becomes slightly farsighted. The third is wrinkling. The surgeon leaves a small fold or wrinkle under the flap, causing a blurry area in the person’s vision.

IBM developed the excimer laser that made laser eye surgery possible. The reason the laser doesn’t hurt the eye is twofold. First, it operates in the ultraviolet (UV) region. The wavelengths of the excimer laser beam are just slightly shorter than the waves we can see. Second, the beam can be focused to less than one-hundredth the diameter of a human hair. The beam penetrates less than a billionth of a meter into the surface, which absorbs the UV light on contact, vaporizing the corneal tissue one thin layer at a time.

Select a doctor who has done hundreds of these operations. Success depends on the skill of the surgeon as well as the precision of the instruments. It is highly recommended that you talk to patients who have already had the procedure. Check the reputation of the clinic. Vision is priceless.

Horsepower is a term that describes how much work an engine or other source does over a specific period of time. The precise definition is that a single unit of horsepower is thirty-three thousand foot-pounds per minute. If you lifted thirty-three thousand pounds one foot in one minute (a rate of 550 foot-pounds per second for sixty seconds), you would be working at the rate of one horsepower.

We give James Watt credit for naming the horsepower. Steam engines were replacing horses in the late 1700s, and there had to be a way to rate the power of steam engines. So Watt calculated the work an average horse could do. He figured a horse could raise 330 pounds up one hundred feet in one minute. That is equivalent to 550 foot-pounds per second or thirty-three thousand foot-pounds per minute.

We measure the power of modern engines by hooking up the engine to a machine called a dynamometer. The dynamometer applies a load to the engine and converts its torque (turning effect) to horsepower. We also describe modern car and truck engines in terms of engine displacement, usually in liters. Engine displacement is the total volume inside all the cylinders of an engine. It is difficult to make an exact conversion from liters to horsepower, because there are so many variables, such as number of cylinders, bore diameter, stroke length, and revolutions per minute (rpm) of the engine. My 2005 Dodge Caravan has a 3.3-liter, six-cylinder engine. It is rated at 180 horsepower. So for this car, one liter is about fifty-five horsepower. The popular Ford Focus can come equipped with a two-liter Duratec engine that is rated at 136 horsepower. One liter on the Ford Focus is about sixty-eight horsepower. We still use the horsepower rating for many of our smaller engines, such as lawn mowers, snowblowers, leaf blowers, and chainsaws, so people are familiar with the unit.
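The horsepower-per-liter figures are simple division, and a few lines of Python make them easy to check. This is only a sketch: the function name `horsepower_per_liter` is invented here, and the ratings are the two quoted above.

```python
def horsepower_per_liter(rated_hp, displacement_liters):
    """Rated horsepower divided by engine displacement in liters."""
    return rated_hp / displacement_liters


# Ratings quoted in the text: (horsepower, liters).
engines = {
    "2005 Dodge Caravan": (180, 3.3),
    "Ford Focus Duratec": (136, 2.0),
}

for name, (hp, liters) in engines.items():
    print(f"{name}: {horsepower_per_liter(hp, liters):.1f} hp per liter")
```

The ratio differs from engine to engine, which is the point: displacement alone does not fix horsepower.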

Humans can generate the equivalent of about one horsepower for a short period of time. Unlike an engine, our muscles tire, so we are limited in the amount of time we can expend power. Each year physics students across the country time themselves running up a flight of stairs. Then they retreat to the classroom and calculate their horsepower. Here’s an example. A 150-pound student runs up a flight of steps that is fifteen feet high in a time of four seconds. That works out to 563 foot-pounds per second, which is a bit over one horsepower. Most students are surprised by how little horsepower they can develop compared to the machines we can build. A good athlete can develop a third of a horsepower for an extended period of time, say, at least a half an hour.
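The stair-climb calculation, and Watt's original horse estimate, can both be run through the same formula: weight times height divided by time, compared against 550 foot-pounds per second. The sketch below assumes nothing beyond the numbers in the text; the function and constant names are made up for illustration.

```python
FOOT_POUNDS_PER_MINUTE_PER_HP = 33_000
FOOT_POUNDS_PER_SECOND_PER_HP = FOOT_POUNDS_PER_MINUTE_PER_HP / 60  # 550


def horsepower(weight_lb, height_ft, seconds):
    """Power needed to lift weight_lb through height_ft in the given time."""
    foot_pounds_per_second = weight_lb * height_ft / seconds
    return foot_pounds_per_second / FOOT_POUNDS_PER_SECOND_PER_HP


# Watt's horse: 330 pounds raised one hundred feet in one minute.
watt_horse = horsepower(330, 100, 60)

# The student: 150 pounds up a fifteen-foot flight of steps in four seconds.
student = horsepower(150, 15, 4)

print(f"Watt's horse: {watt_horse:.2f} hp")
print(f"Student on the stairs: {student:.2f} hp")
```

Students can plug in their own weight, stair height, and time to get their own rating.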

We all want sources of electricity that are plentiful, cheap, and nonpolluting. Unfortunately, when it comes to energy production, there is no free lunch. All energy sources have advantages and disadvantages, good points and bad points. There are many factors to consider: availability, effect on the environment, safety, cost, reliability, site selection, and transmission capability.

Using the wind has enticing advantages. Wind power is clean. There are few polluting by-products. The cost of wind power has been coming down so that some wind farms are producing electricity at five cents per kilowatt-hour.

But it certainly is not free, as some would have us believe. If there were potent advantages to wind power, we would be using more of it. So why aren’t we? Since the wind does not blow all the time, wind power does not have the reliability associated with coal, nuclear, or natural gas. Remember, we don’t have any way to store appreciable amounts of electricity. When it is generated, it must be used immediately.

I’ve seen acres of windmills in the San Francisco area and also near Palm Springs. It’s quite a sight! But if you talk to the local people, they tell you they don’t want to live next to them, because they can be ugly and noisy. Wind generators also require a lot of acreage in regions where the wind blows on a steady basis. (Most wind generators need a wind speed of at least 7 mph.) The towers and generators use a lot of steel and aluminum, all of which requires processing and possibly mining, which means more pollution. Also, the generators depend on considerable labor-intensive maintenance.

Wind power has its place. It should be part of the mix. But in the foreseeable future, we will get only a small fraction of our electricity from wind. The total installed wind capacity in the entire United States is sixty thousand megawatts, which is equivalent to the rated output of sixty large, thousand-megawatt power plants.
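The comparison to sixty power plants is a single division, sketched below. The 1,000-megawatt plant size is an assumption implied by the sixty-to-one ratio, and the `capacity_factor` parameter is a hypothetical knob added only to show how the comparison shrinks when the wind is not blowing at full strength; no real capacity factor is claimed here.

```python
INSTALLED_WIND_MW = 60_000  # figure quoted in the text
ASSUMED_PLANT_MW = 1_000    # assumption: one large conventional plant


def equivalent_plants(capacity_mw, plant_mw=ASSUMED_PLANT_MW, capacity_factor=1.0):
    """Number of conventional plants matching the given wind capacity.

    capacity_factor is the hypothetical fraction of rated output
    actually delivered (1.0 means running flat out all the time).
    """
    return capacity_mw * capacity_factor / plant_mw


print(equivalent_plants(INSTALLED_WIND_MW))                       # rated capacity
print(equivalent_plants(INSTALLED_WIND_MW, capacity_factor=0.5))  # made-up example
```

The second line uses 0.5 purely as an illustration of the effect, not as a measured value.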

The world’s first remote control devices were those used by the German Navy in World War I to steer their radio-controlled motorboats into Allied boats. In World War II, both the United States and Germany used remote controls to detonate bombs. The Era Meter Company of Chicago offered an automatic garage door opener to the public in 1948.

In 1950, Zenith developed a TV remote control appropriately called the Lazy Bones. A long cable attached the remote to the TV set. The remote would activate a motor that would rotate the channel tuner in the set. In those days, tuners were mechanical, not electronic. Eugene Polley invented the first wireless TV remote, called the Flash-Matic, in 1955. Shining a regular flashlight on photocells placed in the four corners of the set operated the functions of on-off, volume, and channel selection. But people forgot which corner to use, plus sunlight would wreak havoc by activating the controls. Then Zenith brought out the Space Command in 1956, which used ultrasonic waves. Unfortunately, clinking metal would affect it and it made dogs bark.

Today, almost all remote controls for TVs, VCRs, DVD players, CD players, and home-entertainment systems use light. But it’s a kind of light we can’t see: infrared (IR) light, whose waves are longer than those of red light but shorter than radio, television, or microwaves. The IR remote control that we hold is the transmitter, and it sends a binary code that represents the commands we enter. If you look at the end of the remote control that you point at the TV, stereo, or other such device, you can see the opening, or port, that emits the IR light. There is a receiving device on the TV that picks up the IR light from the transmitter, sorts out the binary signal (a series of 0s and 1s), and carries out the command, such as volume control or channel change. IR remotes are limited to line of sight and have a range of about forty feet, which is sufficient for most purposes.
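To see what "a binary code that represents the commands" might look like, here is a toy sketch. The command table and the eight-bit format are entirely hypothetical; real remotes use manufacturer-specific codes and timing schemes that are not described here.

```python
# Hypothetical command table: the names and numbers are invented for illustration.
COMMANDS = {
    "power": 0x01,
    "volume_up": 0x02,
    "volume_down": 0x03,
    "channel_up": 0x04,
}


def encode(command):
    """Transmitter side: turn a command name into a string of eight 0s and 1s."""
    return format(COMMANDS[command], "08b")


def decode(bits):
    """Receiver side: turn the 0s and 1s back into a command name, or None."""
    code = int(bits, 2)
    for name, value in COMMANDS.items():
        if value == code:
            return name
    return None


signal = encode("volume_up")
print(signal, "->", decode(signal))
```

In an actual remote, each 0 and 1 would be sent as a pattern of IR flashes rather than as text.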

Remotes to open garage doors use radio waves of between 300 and 400 megahertz (MHz). Key fobs to open car doors and entry doors use 315 MHz for cars made in North America and 434 MHz for cars made in Europe and Asia. The same frequencies are used for most remote-controlled toys. Radio waves can go through materials such as walls and glass, which means the waves can go through our windshield to open our garage door. And the range is usually at least one hundred feet.

Yes, remotes have made life easier and, you might say, “handier.” But what about the contention over who gets the remote? Have remotes contributed to obesity because we don’t have to get up to change the channels? And terrorists use remotes to detonate bombs and improvised explosive devices (IEDs), another name for roadside bombs.

The Mach number of a moving object is the ratio of the speed of the object to the speed of sound. The Mach number is named after the Austrian physicist and philosopher Ernst Mach (1838–1916).

Sound travels at about 750 mph. So a plane flying at 750 mph is doing Mach 1. If it is moving at 1,500 mph, it is going at Mach 2. Mach 3 would be about 2,250 mph. A speed of 750 mph is about the same as eleven hundred feet per second, or roughly 340 meters per second in the metric system. So it takes a little under five seconds for sound to travel one mile. This makes it an easy task to calculate how far away from us lightning has struck. Count the seconds between lightning flash and thunder and divide by five to get the distance in miles. We say “about” because the speed of sound depends on the temperature of the air. Sound travels a bit faster in warm air. So if you want to break the sound barrier, do it on a cold day. You don’t have to go quite as fast!
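The divide-by-five rule and the temperature effect can be sketched in a few lines. The lightning rule comes straight from the text. The temperature formula is not from the text: it is the common linear approximation for dry air, 331.3 meters per second at 0 degrees Celsius plus about 0.606 for each degree above that, and the function names are made up for illustration.

```python
def lightning_distance_miles(seconds_between_flash_and_thunder):
    """Rule of thumb: sound needs about five seconds to cover one mile."""
    return seconds_between_flash_and_thunder / 5


def speed_of_sound_m_per_s(temperature_celsius):
    """Linear approximation for dry air (an assumption, not from the text)."""
    return 331.3 + 0.606 * temperature_celsius


print(f"Thunder after 10 seconds: about {lightning_distance_miles(10)} miles away")
for temp in (-10, 15, 35):
    print(f"{temp:>4} degrees C: {speed_of_sound_m_per_s(temp):.1f} m/s")
```

The loop shows the speed of sound rising with temperature, which is why the sound barrier sits at a slightly lower speed on a cold day.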

Anything traveling below the speed of sound is said to be subsonic. Supersonic refers to objects moving faster than the speed of sound. The British-French supersonic transport (SST) plane Concorde, which is no longer in passenger service, flew at Mach 2. Most of our top-line military fighter planes, such as the F-14 Tomcat (retired from service), F-15 Eagle, F-16 Fighting Falcon, F/A-18 Hornet, and F-22 Raptor, fly at about Mach 2. The F-117 Nighthawk stealth fighter is listed as 0.92 Mach, just below the speed of sound. The former space shuttle orbited the Earth at a speed of over 17,000 mph, and the current International Space Station still does; this calculates out to Mach 23.
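Because a Mach number is just a ratio, the figures above are easy to reproduce. This sketch uses the round figure of 750 mph for the speed of sound, as the text does; the function name `mach_number` is made up for illustration.

```python
SPEED_OF_SOUND_MPH = 750  # round figure used in the text


def mach_number(speed_mph, sound_mph=SPEED_OF_SOUND_MPH):
    """Ratio of an object's speed to the speed of sound."""
    return speed_mph / sound_mph


def regime(mach):
    """Label a Mach number as subsonic or supersonic."""
    return "supersonic" if mach > 1 else "subsonic"


for label, mph in [("Mach 2 jet", 1_500), ("Orbital speed", 17_000)]:
    m = mach_number(mph)
    print(f"{label}: {mph} mph is Mach {m:.1f} ({regime(m)})")

print(f"F-117 at Mach 0.92: about {0.92 * SPEED_OF_SOUND_MPH:.0f} mph ({regime(0.92)})")
```

Dividing 17,000 by 750 gives a little under 23, which rounds to the Mach 23 quoted for orbital speed.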

We usually speak of the speed of an aircraft when we use the term “Mach number,” but a Mach number can describe the speed of any object traveling through air. When we hear the sharp crack of a bullwhip, it means the tip of the whip is breaking the sound barrier. When someone snaps a towel, a playful but painful locker-room trick, the end of the towel is moving faster than sound moves.

When an aircraft exceeds Mach 1, high pressure builds up in front of the plane. The shock wave spreads backward and outward in a cone shape. It is this shock wave that we hear as a sonic boom.

Before 1947, most people thought that no plane could fly through this so-called sound barrier. Combat fighter pilots in World War II would go into a dive, and the air moving over the top of the wings would exceed the speed of sound; then their controls would freeze up and the plane would crash. Captain Chuck Yeager, at the controls of the Bell X-1, broke the sound barrier on October 14, 1947. Shaped like a .50-caliber bullet, the four-chambered rocket plane was named Glamorous Glennis, in tribute to his wife. It now hangs in the Smithsonian Air and Space Museum in Washington, DC.

Bluetooth is a technology that allows devices such as cell phones, cordless phones, television sets, personal computers, stereos, DVD players, entertainment centers, and TV and satellite radio to talk to each other. These devices can communicate with each other without stringing messy wires and cords between them. It is all done with radio signals.

Bluetooth creates a small area network that operates on a frequency of about 2.45 gigahertz (GHz). The US government has set this frequency band aside for industrial, scientific, and medical use. It is the same band used for baby monitors and some garage door openers and cordless phones.

Bluetooth devices send out very weak radio signals—in some cases, as little as about one milliwatt. The coverage distance is typically about thirty feet. That extremely low power ensures that a Bluetooth device won’t interfere with other devices and is also very easy on batteries.

Bluetooth uses a technique called frequency hopping spread spectrum. It uses seventy-nine randomly chosen frequencies, changing from one to another on a regular basis. The transmitters change frequencies sixteen hundred times every second. So it is unlikely that two transmitters will be on the same frequency at the same time.
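A quick simulation shows why two transmitters rarely land on the same frequency. This is a deliberately simplified sketch: it assumes each transmitter picks one of the seventy-nine channels independently and uniformly at random on every hop, which is not how real Bluetooth devices generate their hopping sequences. All names are made up for illustration.

```python
import random

CHANNELS = 79
HOPS_PER_SECOND = 1_600
SECONDS_PER_HOP = 1 / HOPS_PER_SECOND  # time spent on each frequency


def collision_fraction(hops, seed=0):
    """Fraction of hops on which two independent transmitters share a channel."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    collisions = 0
    for _ in range(hops):
        first = rng.randrange(CHANNELS)
        second = rng.randrange(CHANNELS)
        if first == second:
            collisions += 1
    return collisions / hops


fraction = collision_fraction(100_000)
print(f"Time on each frequency: {SECONDS_PER_HOP * 1_000_000:.0f} microseconds")
print(f"Simulated overlap: {fraction:.2%} (theory: {1 / CHANNELS:.2%})")
```

Under these assumptions the overlap should come out near one hop in seventy-nine, and any clash lasts only a fraction of a millisecond before both devices move on.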

Bluetooth allows an operator to set up a personal area network (PAN) in their house and car. This “piconet” may tie together their cell phone, stereo, computer, DVD player, and satellite TV and radio receivers.

Ease of setup is one of the big attractions of Bluetooth. No need to wade through a ton of instruction manuals. When any Bluetooth-ready device is turned on, it automatically sends out radio signals to other devices. Then it “listens” for radio signals in response. Once it identifies a signal, it locks it in and remains active with other devices within that thirty-foot distance.

Bluetooth allows hands-free cell phone use. That’s a big advantage for car drivers, as many states have passed laws banning cell phone use and/or texting while driving. Users can be jogging or fishing or relaxing on the veranda while listening to music on their Bluetooth headset. The jawbone-shaped headset is now a common sight.

Bluetooth was developed by Ericsson, a large telecommunications company in Sweden. In Nordic lore, Harald “Bluetooth” Gormsson was king of Denmark from 958 AD to 985 or 986 AD, when, according to some, he was killed in a rebellion led by his son. He had united Denmark and parts of present-day Sweden and Norway into a single kingdom, and he introduced Christianity into his kingdom. He erected a large runestone, a stone with an inscription, in memory of his parents in Jelling, Denmark. Thus, the name Bluetooth was chosen both as a testament to Nordic pride in having developed this form of wireless communication and because Bluetooth unites different communication protocols, as King Harald united his kingdom.

Isn’t human progress truly amazing? Seventeenth-century philosopher Thomas Hobbes described the life of early humans as “solitary, poore, nasty, brutish, and short.” We humans have come so far in so little time. It is hard to imagine what our daily life would be like if we were back in those times.

The caveman is a popular character based on ideas of how early humans may have looked and behaved. They’re usually pictured as hairy creatures clothed in animal skins, armed with clubs and spears, and oftentimes dumb or aggressive. Pop culture promotes this view, for example, in comics like B.C., Alley Oop, The Far Side, and the animated television series The Flintstones. Some of these depictions show cave people living at the same time as dinosaurs. There is strong evidence that dinosaurs died out about 65 million years ago. The only mammals at that time were small, furry, four-legged creatures.

We can picture early humans searching for food, trying to stay warm, protecting their territory, tending to their sick and injured, learning to use tools, and burying their dead. It must have been a nearly full-time struggle just to stay alive. Two developments allowed our early ancestors to dominate their environment: growth of the frontal lobes of the brain and evolution of the opposable thumb. These were instrumental in the human race’s long progress from cave to castle. The frontal lobes gave us the capabilities of impulse control, judgment, language, memory, motor function, socialization, and problem solving. They are responsible for planning, controlling, and executing behavior. In other words, the frontal lobes give us all the functions we ascribe to humans. I recommend the Carl Sagan book Broca’s Brain, which describes how the frontal lobe is concerned with reasoning, planning, parts of speech, movement (motor cortex), emotions, and problem solving. Another fine read is Stephen Jay Gould’s The Mismeasure of Man.

The opposable thumb allowed us to make and manipulate tools with great dexterity. If you are tempted to disregard the importance of an opposable thumb, try these tasks: tie your shoelaces, or blow up a balloon and tie it, without using your thumbs. Stereoscopic vision gave us depth perception, a great aid in using tools and in hunting.

Another key feature of our long march of progress is the role of a written language. Knowledge exploded when people were able to permanently record what they learned and pass it on to the next generation. The oral tradition is very limited, and could be somewhat responsible for the differences in the development of civilization between Europe and North America in the time leading up to the 1500s.

But there is an element of the human ascent that is troubling: our penchant for destruction of our own kind and of our environment. Warfare seems to be the scourge of humanity. Many of us now have unprecedented wealth, comfort, longevity, medical care, and leisure time. Yet we have trouble getting along with each other. To make things worse, we have the capability to destroy other humans on a massive scale. It is “only the dead who have seen the end of war.” Some attribute this quote to Plato, an ancient Greek philosopher (428 BC–348 BC), while others say it’s from the writings of Spanish-American writer George Santayana (1863–1952). Whatever the case may be, I’m afraid the phrase may hold some truth.

What is the future of science and humankind? Science is a cultural pursuit, in the same vein as music, art, and literature. It is difficult to comprehend the mysterious beginnings of atoms, stars, galaxies, and planets. It is humbling to be able to understand how life emerged, advanced, and developed into a biosphere containing creatures like us, that have brains able to ponder the wonder of it all. This common understanding should rise above all national differences and all religions. Human beings are the only creatures that can ask the questions “Why am I here?” and “What is the meaning of my existence?” and “What does the future hold?”

But that complexity comes at a price: ignorance of the things around us. Many modern gadgets are like magic black boxes. Take apart a cell phone, look at the miniaturized parts, and there is not a clue as to how it works. That intricacy and complexity will only increase. Some people discuss implanting chips and circuitry in the brain that will augment its computing power, making us part human and part machine.

There is every reason to believe that the steady progression of scientific knowledge and implementation of that information will continue. It is progress that gives ever more people access to greater comfort, food, clothing, and shelter and to longer life, increased leisure time, and easing of pain. But we must be aware of the limitations of science. Just because we know more, that does not mean we behave any better or treat others in a humane manner. Some people knowingly ingest carcinogens, take illicit drugs, and drink too much alcohol. Some people are in poverty because they make bad choices, or because of others’ self-interested choices. We know so much better than we do.

We are great at solving technical problems. Putting a man on the moon was an engineering task. We understood rocket propulsion, celestial mechanics, navigation, and life-support systems. Our country had the money, will, and focus to succeed. But we are not very good at “people” problems. We have difficulty convincing people to make good decisions in use of food, alcohol, driving, drugs, etc. A free society cannot compel people to live or behave in a healthful manner. It must persuade them that it is in their best interest. And that is not an easy task. Just because science and technology can give us all these “goodies” does not mean people use them in a constructive manner. They don’t necessarily make people happier or lead them to live more productive lives. Scientific progress does not advance equally in all areas of human endeavor. The war on cancer is improving. But there remains much that we do not understand about cell biology. We’ve been able to predict lunar and solar eclipses centuries in advance at least since the time of the ancient Mayans. But predicting clear skies or cloudy skies only one day ahead is iffy. There is room for pessimism and for optimism. On the dark side, there are groups that will use science and technology to commit mass murder and eliminate those who hold ideas different from their own. On the positive side, there is hope that third-world countries will narrow the gap with the developed countries and enjoy the prospects of clean air and water, sufficient foodstuffs, and the freedom of choice we have in the Western world.

In 1883 or 1884 (accounts differ), Hermann Einstein brought home a compass and gave it to his four- or five-year-old son, Albert. Young Albert Einstein observed that the compass needle always pointed in the same direction and moved without anything touching it. He later stated it was at that moment he realized “something deeply hidden had to lie behind things.”

Like most compasses, Albert Einstein’s compass was just a bar magnet allowed to swivel on a pivot. Magnets truly are fascinating devices. We know much about how magnets operate, yet much about magnetism, especially at the atomic level, remains a mystery more than one hundred years after Albert Einstein played with that simple compass.

We do know that a magnet is any object that has a magnetic field around it and attracts objects made from iron, nickel, and cobalt and their compounds; these substances exhibit the property of ferromagnetism. (“Ferro-” comes from “ferrum,” the Latin word for iron.) The Greek philosopher Thales of Miletus recorded that natural magnets were found in the region of Magnesia, hence the name magnet.

An early word for magnet is “lodestone,” a Middle English word meaning “leading stone” or “course stone,” from its use in keeping ships on course. Ship navigation was often accomplished by placing a natural magnet (lodestone) on a piece of wood and then floating the combination in a bucket of water. The lodestone acted as a crude compass.

The most common magnet is a bar magnet, often marked “north” on one end and “south” on the other. Another familiar magnet is the horseshoe magnet, which is merely a bar magnet bent around into the shape of a horseshoe. A horseshoe magnet is stronger than a bar magnet the same size, because both poles can pull on metal objects instead of just one pole.

“Like” poles, such as north and north or south and south, repel each other. “Unlike” poles, north and south, attract each other. Magnets are strongest at their poles. The force of attraction varies according to the inverse square law: twice the distance away, one-fourth the pull. The north pole of a free-swinging magnet points to the geomagnetic North Pole (which is magnetically a south pole, even though we call its direction magnetic north), which is located in the far north, above the Arctic Circle. The position of the magnetic North Pole moves around from year to year.

But why are iron, nickel, and cobalt the only elements attracted to a magnet? These atoms have unpaired electrons in their outer orbits. Not only do these electrons go around the nucleus, they also spin on their axes, something like the way the Earth spins on its axis. The spin on an electron creates its own tiny magnetic field. If all the electrons are spinning with their axes pointed in the same direction, each one will exert a little tug, or pull. Adding them all together, you get a big tug. Groups of these atoms in which the electrons are spinning in the same direction are called domains. In the presence of a strong magnetic field, created by a current, the effect moves up a level: more and more of these domains align in the same direction. After the strong magnetic field is removed, the magnetic domains of materials that are not good magnet candidates revert to a random orientation. The domains of strong, or good, magnetic materials remain in a structured orientation; thus a permanent magnet is born.

Loudspeakers typically use an alnico magnet, a combination of aluminum, nickel, and cobalt. Refrigerator magnets are usually ceramic. Neodymium magnets are some of the strongest permanent magnets and are used in high-quality products such as microphones, professional loudspeakers, in-ear headphones, and computer hard disks, where low mass, small volume, or strong magnetic fields are required.

Hans Christian Oersted, a Danish physics teacher, discovered the relationship between electricity and magnetism. On April 21, 1820, Oersted was giving demonstrations to students in a classroom. He laid a wire carrying current near a compass and noticed that the compass needle moved. The needle deflected when electric current from a battery was switched on and off. Oersted had been looking for a relationship between electricity and magnetism for several years. The operation of all motors and generators is based on the association between magnetism and electricity.

Legos have reached cult status in the toy world. The popularity of the colorful interlocking plastic bricks has surpassed that of Erector Sets, Lincoln Logs, and Tinkertoys.

Ole Kirk Christiansen, a Billund, Denmark, carpenter, started making small wooden toys and playthings in 1934. Ole, a stickler on quality, named his company Lego, after the Danish expression leg godt, meaning “play well.” In Latin, “lego” means “I put together.”

In 1949, Lego came out with an early version of the familiar plastic stackable hollow rectangular blocks with round studs on top. The precision-made blocks would snap together and hold, but not too tightly. A three-year-old could pull them apart.

Like many plastic devices, Legos are made by injection molding. The process starts with heating the acrylonitrile butadiene styrene (ABS) plastic the bricks are made of to 450°F to give it the consistency of hot fudge, then forcing it into a mold at very high pressure and allowing it to cool for fifteen seconds. A mold is a steel or aluminum cavity having the shape of the desired part. The finished figure is ejected out of the mold.

Lego pieces have studs on top and tubes on the inside. The brick’s studs are slightly bigger than the space between the tubes and the walls. When bricks are pressed together, the studs push the walls out and the tubes in. The material is resilient and wants to hold its original shape, so the walls and tubes press back against the studs. Friction prevents the two bricks from sliding apart.

The popularity of the Lego system stems from its versatility. The design encourages creativity, as children can construct any number of machines, figures, and devices, then tear them down and reuse the parts.

In the late 1960s, Lego introduced the Duplo line of products. These bricks are twice the length, width, and height of the standard Lego block. Often dubbed Lego Preschool, the larger blocks are harder for tykes to swallow and easier for small hands to manipulate. The Lego people have introduced numerous ancillary enterprises, including six amusement parks, competitions, games, and movies. They also put out new lines of Lego sets, Clikits and Belville, which are targeted to young girls. Gears, motors, lights, sensors, switches, battery packs, and cameras are incorporated in the Power Functions line. Those smart Danish people have made sure that newer products are compatible with the older brick-type connections.

Lego claims they have manufactured 400 billion blocks in the last fifty years. That amounts to about fifty-seven Lego blocks for every person on planet Earth.
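The arithmetic behind that per-person figure can be checked in a few lines. This is only a rough sketch: the variable names are made up for illustration, and the world population is not stated in the text, so the code works backward from the two quoted numbers to see what population they imply.

```python
# Figures quoted in the text
blocks_made = 400e9        # 400 billion blocks
blocks_per_person = 57     # "about fifty-seven" per person

# Population implied by those two figures (an inference, not a quoted number)
implied_population = blocks_made / blocks_per_person

print(f"Implied world population: {implied_population / 1e9:.1f} billion")
```

The result lands close to seven billion people, which is consistent with the "every person on planet Earth" claim.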

Rockets can be simple or very complex, depending on their size. On a small scale, they’re so simple that you and I can order parts from a hobby store, build a rocket, and send it up into the wild blue yonder ourselves. On a more massive scale, rockets are so complex that only a handful of countries, with billions of dollars in resources, have been able to use them to send humans or other objects into space.

Rockets work on the principle of Newton’s third law: For every action, there is an equal and opposite reaction. We can experience this fundamental law by letting air out of an inflated balloon. The action of the air rushing out of the balloon propels the balloon in the opposite direction. Rockets work the same way. The gases produced when they burn either liquid or solid fuel rush out the back—that’s the action. The reaction is the rocket’s moving in the opposite direction. The thrust depends on several factors, including the type and amount of fuel and the length of time it’s burned.

The concept of momentum also comes into play in rocketry. Momentum is defined as mass multiplied by velocity. The mass of the gases multiplied by the velocity at which they are ejected is exactly equal to the mass of the rocket times its velocity; therefore, by definition, the momentum of the ejected gases is the same as the momentum of the rocket going up. Even though the gases have very little mass but tremendous velocity, while the rocket has a huge mass but a small velocity compared to that of the gases, the product when you multiply them is the same.
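The momentum balance described above can be tried out with made-up numbers. The sketch below is a simplification: it treats the gases as leaving all at once, ignores gravity and air resistance, and assumes the rocket's mass does not change as fuel burns. The function name and the sample values are hypothetical, chosen only for illustration.

```python
def rocket_velocity(gas_mass_kg, gas_velocity_m_s, rocket_mass_kg):
    """Rocket speed whose momentum equals the momentum of the ejected gases."""
    gas_momentum = gas_mass_kg * gas_velocity_m_s   # mass times velocity
    return gas_momentum / rocket_mass_kg

# Hypothetical values: a small mass of gas moving very fast,
# and a much heavier rocket moving slowly
gas_mass_kg = 2.0
gas_velocity_m_s = 2500.0
rocket_mass_kg = 1000.0

v_rocket = rocket_velocity(gas_mass_kg, gas_velocity_m_s, rocket_mass_kg)

print("Gas momentum:   ", gas_mass_kg * gas_velocity_m_s, "kg*m/s")
print("Rocket momentum:", rocket_mass_kg * v_rocket, "kg*m/s")
print("Rocket velocity:", v_rocket, "m/s")
```

The light, fast gases and the heavy, slow rocket end up with the same product of mass and velocity, which is the point of the paragraph above.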

Model rockets are simple and safe. They utilize solid propellants that burn quickly without exploding. You can store model rocket engines for many years and they won’t deteriorate, and the fuel does not have to be handled. On the opposite end of the spectrum is the most powerful rocket ever built, the Saturn V, which sent Americans to the Moon in the late 1960s and early 1970s. Today, the most powerful rocket is NASA’s Delta IV, which can send fourteen tons into orbit around the Earth. The first use of the Delta IV, which burns liquid hydrogen and liquid oxygen, was in June 2012, to put a spy satellite into space.

The Chinese are credited with developing the very first rockets in the early 1100s; they were an extension of their development of gunpowder. It’s widely believed that the Chinese used rockets against the invading Mongol hordes in 1232 AD. In the early 1800s, William Congreve, in conjunction with England’s Royal Arsenal, prepared an efficient propellant mixture and made a rocket motor with a strong iron tube and a cone-shaped nose. During the War of 1812, Congreve rockets, launched by the British Navy, rained down on Fort McHenry near Baltimore in September 1814. This was the origin of the “rockets’ red glare” that Francis Scott Key alluded to in his poem “Defence of Fort McHenry.” The words were later used in our national anthem, “The Star-Spangled Banner.”

Ion propulsion is something quite new and very exciting. In ion engines, electrons are stripped off xenon atoms, leaving ions that carry an electrical charge. Magnetic fields fire the ions out the back of a thruster. Ion engines do not use heavy, bulky fuels, and while they generate very low thrust, they can be “burned” for many hours. This makes them valuable for deep space travel and for sending payloads from a low Earth orbit to a geosynchronous orbit, which is the distance at which satellites like those used by Dish Network for TV transmission are in orbit (see question 104). NASA is working on ion propulsion systems with higher speed and more thrust.

Styrofoam is actually a Dow Chemical trademark for a foamed plastic made from polystyrene, derived from crude oil, which Dow introduced in 1954. Early Styrofoam was formed by blowing hazardous chlorofluorocarbons (CFCs) into a glob of resinous material made of styrene.

Styrene is an organic compound made from benzene, a colorless oily liquid that evaporates easily. Styrofoam is a trademark name for expanded polystyrene that goes into disposable coffee cups, coolers, and cushioning material in packaging.

But most of the world phased out CFCs in the later 1980s because of concern about these gases destroying the ozone layer that protects us from excessive exposure to ultraviolet radiation. Now manufacturers make Styrofoam products by blowing pentane or carbon dioxide gas into a plastic melt. Heating it causes the gas to expand in bubbles of the polystyrene, forming the foam the product is named for. As the resin cools it traps these tiny bubbles of gas inside, forming a cellular plastic structure. Polystyrene foam products are about 98 percent air. That’s why they’re so lightweight and have such excellent insulating properties.

Some of the most common Styrofoam products are coffee cups, egg cartons, meat and produce trays, soup and salad bowls, “peanuts” used in packaging, and lightweight molded pieces that cushion appliances and electronics in their packaging for shipping.

Tests prove that the use of disposable Styrofoam plates and cups in schools and hospitals prevents diseases. Styrofoam resists moisture and remains strong and sturdy over long periods of time. Styrofoam can be molded into shapes easily, and it costs less than paper products.

Styrofoam has taken some knocks over the years. It can fill up landfills quickly. The polystyrene industry will tell you that Styrofoam makes up less than one percent of the weight of solid waste in a landfill. What they don’t say is that the percent by volume is much higher. Other disadvantages of Styrofoam are that it is not biodegradable or easy to recycle, and that burning the stuff gives off toxic gases.

Like so many products in our modern life, Styrofoam has its good side and its bad side. It seems to me that the positives outweigh the negatives.

There are five main alternatives to gasoline, each with its own set of pluses and minuses. But the big advantage of all five is that they are less polluting and produce lower amounts of greenhouse gases than gasoline or diesel fuel.

The one we hear the most about is ethanol, or ethyl alcohol. E10 fuel is 10 percent ethyl alcohol and 90 percent gasoline. Most any car sold in the United States can use E10. The cost of E10 is about ten cents higher than regular gasoline in most areas. E85 is 85 percent alcohol and 15 percent gasoline. Not just any old car can use E85. It must have an engine specifically designed for E85; cars that use these engines are called flexible-fuel, or flex-fuel, vehicles. This kind of car can run off regular gasoline if E85 is not available.

E85 can be produced locally, causes less engine knock, and is usually considered less polluting than gasoline. In some places it costs less than regular gasoline, while in others it costs more. E85 is not widely available and reduces fuel economy compared to regular gasoline. About 3 percent of US cars are E85 powered.

Biodiesel is made from vegetable oils or animal fats (see question 110). It can even be made from used cooking oil, which can sometimes make it smell like french fries. B5 is 5 percent biodiesel, and B100 is 100 percent biodiesel. The most popular blend is B20, or 20 percent biodiesel, promoted by singer Willie Nelson. He calls it BioWillie. Biodiesel is not widely available and tends to solidify into a gel in bitter-cold winters, and car manufacturers won’t warrant their cars for anything higher than B5. Despite these drawbacks, experts say there may be a place for biodiesel fuels in the future.

Natural gas, in the form of compressed natural gas (CNG) or liquefied natural gas (LNG), is the third alternative to gasoline; it became an option with Honda’s Civic CNG, which began production in 2008. Natural gas is far less polluting to burn than gasoline, so it’s attractive for use in taxicabs and utility vehicles in crowded cities. Natural gas can be cheaper than gasoline, but the gas tank has to be rather large, taking up the whole trunk space. The rate of miles driven per fill-up is dismal, so you won’t see natural-gas cars in wide-open country.

Propane is the fourth possibility. It burns quite cleanly in engines and is also less polluting than gasoline. Propane, in the form of liquefied petroleum gas (LPG), is ideal for fleets of taxis, buses, delivery vehicles, and forklifts. Zion National Park in Utah has a fleet of thirty buses powered by propane. LPG is stored as a liquid under about two hundred pounds per square inch of pressure. Canisters of LPG are also popular for barbecue units and camp stoves. Liquid propane flashes to a vapor when no longer under pressure. A quick release, say, from a ruptured tank, yields a fine white mist as moisture in the air condenses on the gas particles.

It should be pointed out that LPG is usually considered to be 100 percent propane in the United States and Canada. However, in Europe, the propane content of LPG can be less than 50 percent. Propane is third on the list of most widely used motor fuels in the world.

The fifth alternative fuel is hydrogen, which has enormous potential, mainly because there is no pollution to speak of, since the by-product of combustion is water vapor. Hydrogen can come from water and is used in fuel cells for electric cars, but it can also be used to power internal combustion vehicles. Hydrogen has the potential to be produced locally and anywhere in the world, but the technology is simply not quite there yet.

To be sure, there are significant drawbacks. Fuel cells are expensive. Storing hydrogen in a car is also dicey, the cost is high, and there is no widespread distribution system in place.

You see it on the news every summer. Someplace on this good planet Earth, train tracks buckle during a spell of hot weather, causing a train wreck. The reason is well understood. Metals expand when heated; thus, the metal rails in the train tracks get longer. In the old days, there were gaps between the thirty-nine-foot rails that made the familiar clickity-clack sound as the train went over the tracks. A thirty-nine-foot train rail can expand as much as a half inch when the temperature goes from 70 to 100°F. The gaps allow the rails to expand.

This process changed a few years ago. Railroad companies now have tracks laid in segments of about fifteen hundred feet or more. This so-called continuous track, or continuous welded rail, eliminates the expansion joints. At the mill, shorter tracks are welded together and carried lying on two flatcars to the intended site. There, the sections are welded together in a thermite fusion-welding process, which involves placing a mixture of aluminum powder and iron oxide in a crucible and igniting them with magnesium. The combustion raises the temperature of the reaction of aluminum powder and iron oxide to more than 5,000°F, and this mixture is poured into the gap between the two preheated rails to weld them together.

There are advantages to employing rails that may be a half mile in length instead of the standard thirty-nine feet. The longer joined rails provide a smoother ride, less maintenance, and less friction than the shorter rails. Trains can travel at a higher speed.

Railroad companies always schedule the process of laying the long sections of track for one of the locally hottest days of the year, so that the rails are not likely to expand further. On cooler days, the rails will be under tension because they will try to contract and pull apart. But the maximum stress on the rail, even if the temperature drops 100°F, is less than one-fourth the allowable stress for the steel in a continuous track.

As a matter of curiosity, the distance between the rails is 4 feet, 8.5 inches, believed to be the same distance as the wheels of Roman chariots. Ben Hur was actually “riding the rails.”

The length of airport runways depends on several factors, including the types of aircraft the airport will serve, the airplanes’ itineraries, the altitude of the airport, the speed and direction of the winds, the surrounding terrain, and the proximity of tall buildings and towers.

Large, heavy aircraft need a longer runway to achieve the high speed required to give the wings enough lift for takeoff. As for itineraries, more fuel is needed for longer flights, so a higher takeoff speed is used to lift the greater weight of the additional fuel. Some fully loaded Boeing 747 planes weigh close to a million pounds.

Another factor is the elevation difference between airports near sea level, like the one in San Francisco, and those in the mountains, like Denver’s airport. The air in Denver, the Mile High City, is quite a bit less dense than the air in San Francisco, so it provides less lift during takeoff. In addition, the less-dense Denver air provides less air for jet engines, which means the engine is not generating as much power as it would at a lower-level airport.

So an aircraft in Denver must reach a higher speed on the ground to be able to take off, requiring a longer runway to give the aircraft time to reach that speed. As a rule of thumb, the runway length is increased by 7 percent for each one thousand feet of elevation above sea level.

For Denver, which is over five thousand feet above sea level, the figuring goes like this: 1.07 (the runway length needed at sea level plus 7 percent) to the fifth power (adding 7 percent to each new total five times, once for each thousand feet) equals 1.4. That is, we can multiply 1.07 times itself five times and we come up with 1.4. So the runway lengths at Denver should be 40 percent greater than the airport runway lengths at San Francisco. That holds with reality: The new runway at Denver is 16,000 feet long, while the longest runway at San Francisco is 11,870 feet in length. The required takeoff distance for the fully loaded Boeing 747-400 at sea level is 11,100 feet, and at Denver’s high altitude, a plane needs nearly 5,000 feet more runway to generate the required lift.
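The rule of thumb above is easy to put into a short calculation. The sketch below is only an illustration of that 7-percent-per-thousand-feet rule, not an aviation planning tool; the function name and its parameters are made up for this example, and it counts only whole thousands of feet, as the text does for Denver.

```python
def runway_length_ft(sea_level_length_ft, elevation_ft, increase_per_1000_ft=0.07):
    """Apply the rule of thumb: add 7 percent for each full 1,000 ft of elevation."""
    thousands = int(elevation_ft // 1000)             # whole thousands of feet
    factor = (1 + increase_per_1000_ft) ** thousands  # 1.07 multiplied by itself
    return sea_level_length_ft * factor

# Figures from the text: a fully loaded 747-400 needs 11,100 ft at sea level,
# and Denver is treated as five thousand feet above sea level.
denver_factor = 1.07 ** 5
denver_length = runway_length_ft(11_100, 5_000)

print(f"Multiplier for Denver: {denver_factor:.2f}")
print(f"Estimated takeoff distance at Denver: {denver_length:,.0f} ft")
```

The multiplier rounds to the 1.4 quoted above, and the estimated distance comes out several thousand feet longer than the sea-level figure.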

Additional reasons to need a longer runway are based on air temperature and humidity. Warmer air is less dense than cold air, so warm air gives less lift. Pilots refer to this as density altitude. Dry air is slightly more dense than moist air. Ideally, pilots want to take off in air that is low (low altitude), cold, and dry.

The first gun was essentially a hand cannon. It consisted of a strong metal tube open at one end and with a hole drilled into the tube near the closed end. The user loaded gunpowder into the tube and rammed a ball, or bullet, down the barrel, then stuck a fuse (or poured a bit of powder) into the drilled hole. Igniting the fuse turned the gunpowder into a gas and pushed the ball out of the open end of the barrel with great speed. Mongolians used hand cannons in the 1200s. The barrel was long, the accuracy was terrible, and those early guns were extremely heavy. Today, these hand cannons are called pistols.

The blunderbuss, a gun associated with the Pilgrims, had a short barrel and a flared muzzle. The wide barrel speeded loading and spread the shot. The ignition mechanism for a gun is called a lock. The lock in a blunderbuss held a slow-burning rope that was ignited ahead of time and then moved into position by the trigger to ignite the gunpowder housed in a pan. This gun had some disadvantages; for example, rain would put out the burning rope. Also, the burning end of the rope glowed at night, betraying the shooter’s position.

The matchlock was the first weapon that allowed the shooter to keep both hands on the gun. Just as important, it permitted the shooter to keep an eye on the target while firing the weapon.

The flintlock was a big breakthrough and lasted for over three hundred years. Flint is a kind of rock, and when it strikes steel, it creates hot sparks. In a flintlock, that spark ignited gunpowder in the lock. The flintlock was the soldier’s main firearm during America’s Revolutionary War.

The percussion cap was perfected by the time of our Civil War. The cap, about the size of a pencil eraser, was a short, hollow tube made of metal and closed at one end, loaded with a chemical compound called mercuric fulminate, which is highly explosive. The open end of the cap fit over a “nipple” from which a tube extended to the powder, waiting to be lit. Pulling the trigger made a hammer strike the cap, setting off the explosive.

The cartridge came along about the time of the Civil War, too. A cartridge has the powder and bullet enclosed in a metal casing, with the powder sitting right behind the bullet. A sharp blow to either the rim or the center of the shell ignites the powder.

Not so many years ago, we marked the two hundredth anniversary of the beginning of the Lewis and Clark Expedition to explore the lands acquired by the Louisiana Purchase. The thirty-three frontiersmen ran out of trading goods, whiskey, and food, but during the twenty-eight-month journey to the Pacific and back, the Corps of Discovery never ran out of weapons, bullets, and gunpowder.

A regular incandescent lightbulb consists of a thin, frosted, glass enclosure with a tungsten filament inside. When the bulb is turned on, the filament becomes white hot at about 4,500°F. These lightbulbs are not very efficient, because the process of incandescence emits large amounts of heat. Most of the electrical energy put into the incandescent lightbulb goes into heat and not light. And the bulbs only last about a thousand hours. As the tungsten filament evaporates away, depositing particles on the inside of the glass, the bulb darkens (see question 135).

A halogen lightbulb has the same kind of tungsten filament but is housed in a much smaller quartz glass envelope. Inside the envelope is a small amount of gas from the halogen group (fluorine, chlorine, bromine, iodine, and astatine); lamps typically use iodine or bromine. The gas combines with the tungsten that is evaporating from the filament; this causes the tungsten to be redeposited on the filament via a halogen cycle chemical reaction.

How does this halogen cycle work? At the moderate temperatures found away from the filament, the evaporating tungsten reacts with the halogen to produce a halide gas. At the higher temperatures close to the filament, the halide gas dissociates, releasing the tungsten and the halogen. The tungsten is deposited back on the filament to be reused instead of accumulating on the glass, and the halogen gas is free to circulate again. This halogen cycle gives the bulb a longer life.

This setup also allows the filament to run hotter, which makes the bulb more efficient. The hotter the filament, the more light produced per watt of electricity consumed. In a smaller bulb, the filament is closer to the surface, which makes the glass bulb hotter. Regular glass would melt at such high temperatures, so manufacturers use quartz.

It is interesting to know the story of the development of halogen lights. In the 1950s, engineers wanted small, powerful lights to fit on the wing tips of jet planes. So General Electric came up with the halogen light to fill that need.

Perpetual motion machines violate the most sacred law in all of science, the law of conservation of energy. A perpetual motion machine would have to have more output than input. In other words, it would have to produce more energy than it uses. You don’t get something for nothing!

Every machine has a supply of energy, of which it uses some proportion to do work; it gives off the rest as waste heat. The starting energy equals the sum of the energy used to do the work and the energy expended as waste heat. In other words, energy is conserved. Forms of energy include mechanical, solar, electrical, magnetic, chemical, and thermal (heat) energy.

The law of conservation of energy states that one cannot create energy from nothing. No one has ever found an exception to this rule. Energy is always conserved—it just changes from one form to another. Those forms include mechanical, electrical, magnetic, thermal, chemical, and nuclear energy.

Any perpetual motion machine would have to take energy at some point, use it for work, and yet return everything to its original state, unchanged, at the end of the cycle. Real-world machines and processes leave things changed permanently. Engineers measure this change as entropy. And the second law of thermodynamics requires that any real process increase the entropy of the universe. For these reasons, no genuine perpetual motion machine currently exists, has ever existed, or could ever exist. As early as 1775, the Royal Academy of Sciences in Paris issued a statement that it would “no longer accept or deal with proposals concerning perpetual motion.”

In the past, an inventor could apply for and be issued a patent for a drawing, idea, or scheme. They didn’t have to produce or build any real device. Some charlatans would find people to invest in a supposed perpetual motion machine, take their money, and invest it in banks or the stock market. Indeed, the investors got their money back when the perpetual motion machine was shown to be a fraud, but the crooks got the interest on that money. The US Patent and Trademark Office (PTO) issued a decree in 2001 that it will not issue a patent for a perpetual motion machine unless the person applying for the patent demonstrates a working model. One can go on the Internet and find all kinds of so-called perpetual motion machines, but they don’t have patents, because none of them actually work.

Consider a bow and arrow. By pulling the arrow back, a person does work in bending the bow. The bent bow has potential energy. When the person releases the arrow, it has kinetic energy in its motion. The arrow embeds itself into a straw bull’s-eye target. No more potential energy. No more kinetic energy. But the arrowhead and the straw target have a slightly higher temperature: the mechanical energy (in the form of kinetic energy) has been converted to heat energy. Nothing lost and nothing gained, only a transfer of energy.
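The bow-and-arrow bookkeeping can be sketched with a little arithmetic. The numbers here are made up for illustration (a bow storing 50 joules and a 25-gram arrow), and the sketch assumes an ideal bow that hands all of its stored energy to the arrow, which a real bow does not quite do.

```python
import math

def arrow_speed_m_per_s(stored_energy_j, arrow_mass_kg):
    """Speed at release if all stored energy becomes kinetic: E = 1/2 * m * v^2."""
    return math.sqrt(2.0 * stored_energy_j / arrow_mass_kg)

def kinetic_energy_j(mass_kg, speed_m_per_s):
    """Kinetic energy of a moving mass."""
    return 0.5 * mass_kg * speed_m_per_s ** 2

stored = 50.0   # joules of potential energy in the bent bow (hypothetical)
mass = 0.025    # arrow mass in kilograms (hypothetical)

speed = arrow_speed_m_per_s(stored, mass)
# When the arrow stops in the target, its kinetic energy ends up as heat.
heat = kinetic_energy_j(mass, speed)
print(f"arrow speed: {speed:.1f} m/s, heat delivered to target: {heat:.1f} J")
```

The heat at the end equals the energy put into the bow at the start; the energy only changed form along the way.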

Here’s another example: A car uses more fuel, whether gasoline, diesel, or an alternative, when the air conditioner is running, when the headlights are on, and when the radio is playing. The energy to run these functions comes from the battery, which must get it from the alternator, which is turned by the engine, which burns the fuel. You can’t run the air-conditioning, the headlights, and the radio for nothing.

James Prescott Joule, son of a British brewmaster, was instrumental in developing the law of conservation of energy. He proved that for any amount of work done, a definite quantity of heat always appears. Putting it in scientific terms, the total energy of an isolated system remains constant regardless of changes within the system. That’s why there can never be a perpetual motion machine. Even in the modern age, there are people who claim to have come up with these machines that have a greater output than input. Can’t happen!

The best known of these perpetual-motion people is inventor Joseph Newman. For years he claimed his “energy machine” produced more energy than it consumed. He appeared on the Johnny Carson show. He took millions of bucks from investors. He applied for a patent, but the PTO rejected his application. He sued them. The National Bureau of Standards was asked to test his claims. Its report came back in June 1986, stating that at no time did the output exceed the input. No free energy or perpetual motion. Go online and view his website, www.josephnewman.com, which is very impressive, and the Web page from what is now the National Institute of Standards and Technology that debunks his claims.

Dissolvable stitches, also called absorbable sutures, can be used for both internal and external wounds and surgical incisions. There is no need for a follow-up trip to the hospital or doctor to have the stitches removed. The sutures naturally decompose in the body.

Dissolvable stitches are usually made from natural material, such as processed collagen from an animal intestine. “Catgut” is a common name for this material, although it is actually made from sheep and beef intestines. The stitches dissolve over time, and by the time they do, the wound or incision has healed. Your body treats stitches as a foreign substance, and it is programmed to destroy them. Sometimes a stitch is left on the outside of the body and will not decompose because it is not in contact with body fluids. In these cases, the surgeon can remove any remaining pieces of suture material. Dissolvable sutures are available with a chromium salt coating to make them last longer in the body (chromic gut) or heat treated for more rapid absorption (fast gut). Surgeons choose one of these or untreated sutures (plain gut) depending on how long they expect the stitches will need to remain in place for complete wound healing.

A major portion of dissolvable sutures are now made from synthetic polymer fibers, similar to tennis racket strings or fishing line. Some are braided; others are monofilament. They are easier to handle, cost less, cause less tissue reaction, and are nontoxic. Some stitches are made of cotton, which is cheap and is used where the wound is less prone to infection.

Nonabsorbable sutures, made of materials that cannot dissolve in the body, are usually used for skin wound closures, because sutures on the surface are easy to remove. Sutures for this purpose are made from polyester, nylon, or silk. Stainless-steel sutures are used in orthopedic surgery and to close the chest after cardiac and abdominal surgery.

Stitches, or sutures, vary in thickness, elasticity, and decomposition rates. An extremely thin suture is used for cosmetic surgery, in which scarring is a major concern. An elastic suture is useful for knee surgery, so the knee can bend without tearing the stitches. A deep, wide gash needs stitches that will last longer, since it will take more time to heal than a simple surface wound.

There was a time when very bright people thought that steel or iron ships couldn’t float. After all, steel is about eight times as dense as water. Put a hunk of steel in water, and down it goes. It wasn’t until the mid-1850s that steel was used extensively for shipbuilding. Prior to the Civil War, ships were made of wood. Everyone knew that wood floats in water.

Ships float because of their shape. A hunk of steel will sink. But that same hunk of steel can be shaped to make a lot of hollow space inside. When in water, the ship, with its outward-curving sides, is able to push out of the way, or displace, a weight of water equal to its own weight.

The Titanic, for example, weighed about sixty-six thousand tons, so the floating Titanic displaced sixty-six thousand tons of water. Put ten thousand tons of people and cargo on board, and the Titanic would displace seventy-six thousand tons of water. Of course, then the Titanic would sink lower in the water. If the cargo weighs too much, or if a lot of water comes in the sides or over the top, bad things happen. Suddenly, the Titanic weighs more than the water it can displace, and down it goes!
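The displacement arithmetic above can be sketched in a few lines. The sixty-six thousand and ten thousand ton figures come from the text; the seawater density, the use of metric tons, and the hull volumes in the usage lines are assumptions added only for illustration.

```python
SEAWATER_DENSITY_KG_PER_M3 = 1025.0  # assumed typical value for seawater
KG_PER_TON = 1000.0                  # assumes metric tons

def displaced_water_tons(ship_tons, cargo_tons=0.0):
    """A floating ship displaces a weight of water equal to its total weight."""
    return ship_tons + cargo_tons

def displaced_volume_m3(total_tons):
    """Volume of seawater that weighs as much as the loaded ship."""
    return total_tons * KG_PER_TON / SEAWATER_DENSITY_KG_PER_M3

def stays_afloat(total_tons, hull_volume_m3):
    """The ship floats only if its hull can push aside enough water."""
    return displaced_volume_m3(total_tons) <= hull_volume_m3

empty = displaced_water_tons(66_000)
loaded = displaced_water_tons(66_000, 10_000)
print(f"empty: {empty:,.0f} tons of water, loaded: {loaded:,.0f} tons of water")
print(f"loaded ship pushes aside about {displaced_volume_m3(loaded):,.0f} cubic meters")
```

Calling `stays_afloat(loaded, hull_volume_m3)` with a made-up hull volume shows the tipping point: once the ship's total weight needs more water pushed aside than the hull has room for, the ship sinks.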

Archimedes, living in the third century BC, is credited with discovering the principle of buoyancy. King Hiero of Syracuse had commissioned Archimedes to determine if a gold crown was really made of pure gold or if the goldsmith had mixed in some baser material, like silver or lead. Sitting in the bath one day, Archimedes noticed the upward buoyant force of the water. He didn’t weigh as much in the water as he did standing beside the tub. Archimedes reasoned that if the gold crown actually had silver mixed in it, it would take up more space than a pure gold crown of the same weight. Archimedes compared the amount of water displaced by the crown and the amount of water displaced by an equal mass of pure gold. Sure enough, the crown displaced more water, indicating it was a fraud. The goldsmith literally lost his head. A very good read about Archimedes’s experiment can be found at http://www.bellarmine.edu/eureka/about/.
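Archimedes’s reasoning can be checked with a short calculation. The densities are standard values for gold and silver; the crown’s mass and the share of silver are hypothetical numbers chosen only to show the effect.

```python
GOLD_DENSITY_G_PER_CM3 = 19.3
SILVER_DENSITY_G_PER_CM3 = 10.5

def displaced_volume_cm3(mass_g, silver_fraction=0.0):
    """Water displaced by a submerged crown of the given mass.

    silver_fraction is the share of the crown's mass that is silver.
    """
    gold_mass = mass_g * (1.0 - silver_fraction)
    silver_mass = mass_g * silver_fraction
    return gold_mass / GOLD_DENSITY_G_PER_CM3 + silver_mass / SILVER_DENSITY_G_PER_CM3

crown_mass = 1000.0  # grams (hypothetical)
pure = displaced_volume_cm3(crown_mass)
mixed = displaced_volume_cm3(crown_mass, silver_fraction=0.3)  # 30% silver (hypothetical)
print(f"pure gold: {pure:.1f} cubic cm, gold with silver: {mixed:.1f} cubic cm")
```

Because silver is less dense than gold, the mixed crown needs more volume to reach the same mass, so it pushes aside more water.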

Lightning strikes on aircraft are quite common, but also usually harmless. According to the US Federal Aviation Administration (FAA), on average, every airliner gets hit about once a year. Of course, some planes get hit more than once and some not at all. Yes, indeed, that adds up to a lot of struck planes.

Modern planes have an aluminum “skin,” and aluminum is a very good conductor of electricity. When lightning strikes a plane, the current flows over the skin and off into the air. However, lightning could damage very sensitive electronic instruments on board, so airplanes require built-in lightning protection systems.

The last time an airplane crash was blamed on lightning was in 1963, when lightning struck a Boeing 707 that was in a holding pattern over Elkton, Maryland. The lightning spark ignited vapors in a fuel tank, causing an explosion and killing the eighty-one people on board. The very next week, on orders from the FAA, suppressors were required to be installed in aircraft flying within US airspace. These static dischargers are wicks or rods intended to drain off a charge of electricity in the event of a lightning strike. They are installed on the wing tips and horizontal and vertical stabilizers.

Pilots do try to avoid thunderstorms, not only because of lightning but also because the high shear winds (updrafts and downdrafts) can tear an aircraft apart. Also, if a jet engine pulls in a huge amount of water, the engine will quit (flame out). In addition, an airplane flying into a cloud that has built up an electric charge can actually trigger a lightning strike, but as explained, modern planes can handle it.

Prisms are blocks of glass or plastic with flat polished sides arranged at precise angles to one another. Prisms can refract or deviate a beam of light and invert or rotate an image.