ROBOTS BEYOND

While many of the robots already in service are awesome, they still fall a long way short of the robots dreamed of in science fiction – Commander Data, Terminator, the inhabitants of Westworld. But researchers are working hard to bridge that gap.

Robots do not need to look like humans, so most of them have functional shapes, like industrial robot arms. But when machines work in a human environment, opening doors and negotiating staircases, a humanoid form can make sense. Examples include Atlas, Boston Dynamics’ machine for human-type mobility, NASA’s Robonaut for space missions alongside humans, and the US Navy’s SAFFiR shipboard firefighting robot. While they are not quite Olympic athletes yet, they have come a long way in a few years. Robot bodies will not always be halting, inferior copies of human ones.

Robots are natural explorers. Unmanned systems were the first into space and machines such as NASA’s Curiosity Rover are digging into the surface of Mars in search of life, decades before humans are likely to reach the Red Planet. The Flying Sea Glider can explore the oceans for months on end, while the Vishwa Extensor, a remote-controlled robotic hand for deep-sea divers, operates at greater depths than any human.

The human form is not the only one researchers want to copy. Dolphins can swim faster than submarines, and even leap clean out of the water. The best way to match them was to build a robot that mimicked nature’s design – the amazing Dolphin, first of a new type of efficient swimming robot. The Octobot is a robot octopus, and although the resemblance is whimsical rather than practical, it highlights the technology of ‘soft robotics’. Just as the boneless octopus represents an alternative to vertebrates like us as a way of building a mobile, dextrous body, soft robotics explores the possibilities of machines with no rigid components.

Exploration is the story of sheer human persistence against the elements. A sea voyage lasting several months is an epic calling for tremendous endurance – unless you are a machine, in which case it is business as usual. Extremes of temperature, or even being underwater for prolonged periods, mean nothing to robots that are natural submariners. The Flying Sea Glider can fly for prolonged periods as well as swim. The Vishwa Extensor, designed as a remote-controlled robotic hand for human deep-sea divers, might end up equipping all sorts of unmanned craft.

Space is a better habitat for machines than for humans. Unmanned systems were the first into space and the first to land on the Moon, ahead of the astronauts. Machines such as NASA’s Curiosity Rover are digging into the surface of Mars in search of life, and will have been working there for decades before humans set foot on the Red Planet.

The Kilobot might seem like the least impressive machine of all, with less capability than a clockwork toy. It is in fact a research platform for testing software that controls swarms of cooperating robots. This approach may ultimately dominate all forms of robotics, whether they are cleaning windows, performing surgery or working in fields or factories.

Nanobots are so far confined to science fiction: microscopic robots working in teams of millions to create or destroy. Nanobots in our bloodstream may one day detect and eradicate tumours before they can become a threat; others might tear down old buildings and reassemble them as new houses, efficiently recycling every particle of metal, stone and plastic. Progress in this area has been slower than some expected, but that does not mean nanobots are not coming.

This book gives some idea of what robots can already do, and what they will be able to do in the near future, based on what is already in the public domain. Government laboratories, and giant corporations like Google, may already have more advanced machines under wraps. As to where robots will go next, there are no fixed limits.

One thing we can say is that the technologies developed by individual programmes – manipulators, mobility, swarming or social interaction software – will be combined to create more effective robots. Expect to see dolphin-like machines with hands like the Vishwa Extensor, or Roombas with swarming software that allows several to cover a large house efficiently. Realistic but essentially static humanoids like Sophia may be matched with highly mobile bodies like Atlas to create a true android.

The other great unknown is artificial intelligence (AI). Ray Kurzweil, Google’s director of engineering, anticipates that computers will exceed human intelligence in the 2040s. When that happens, current machines will look as primitive as da Vinci’s mechanical knight.

Robots may be changing the world now, but they have barely started.

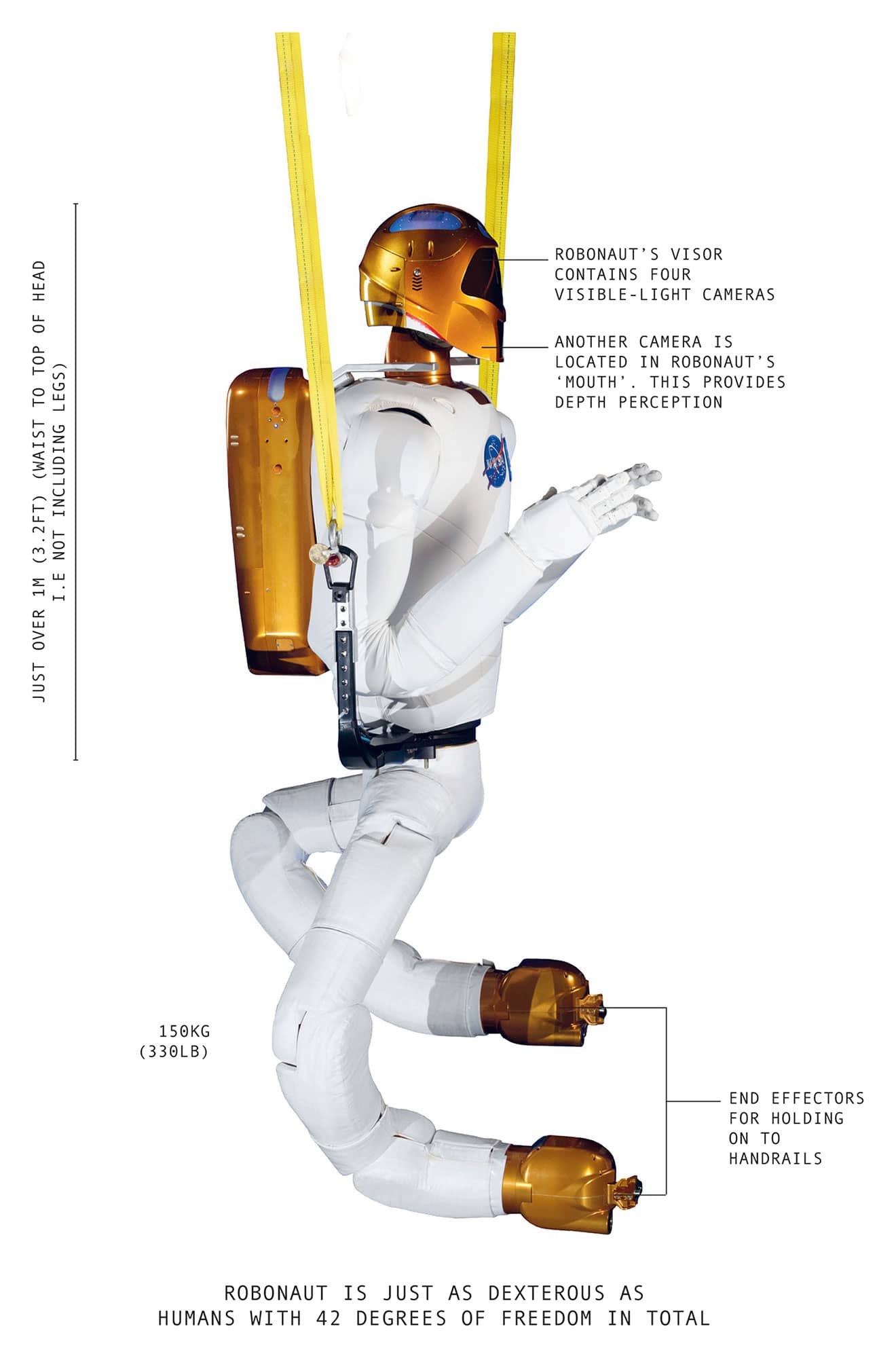

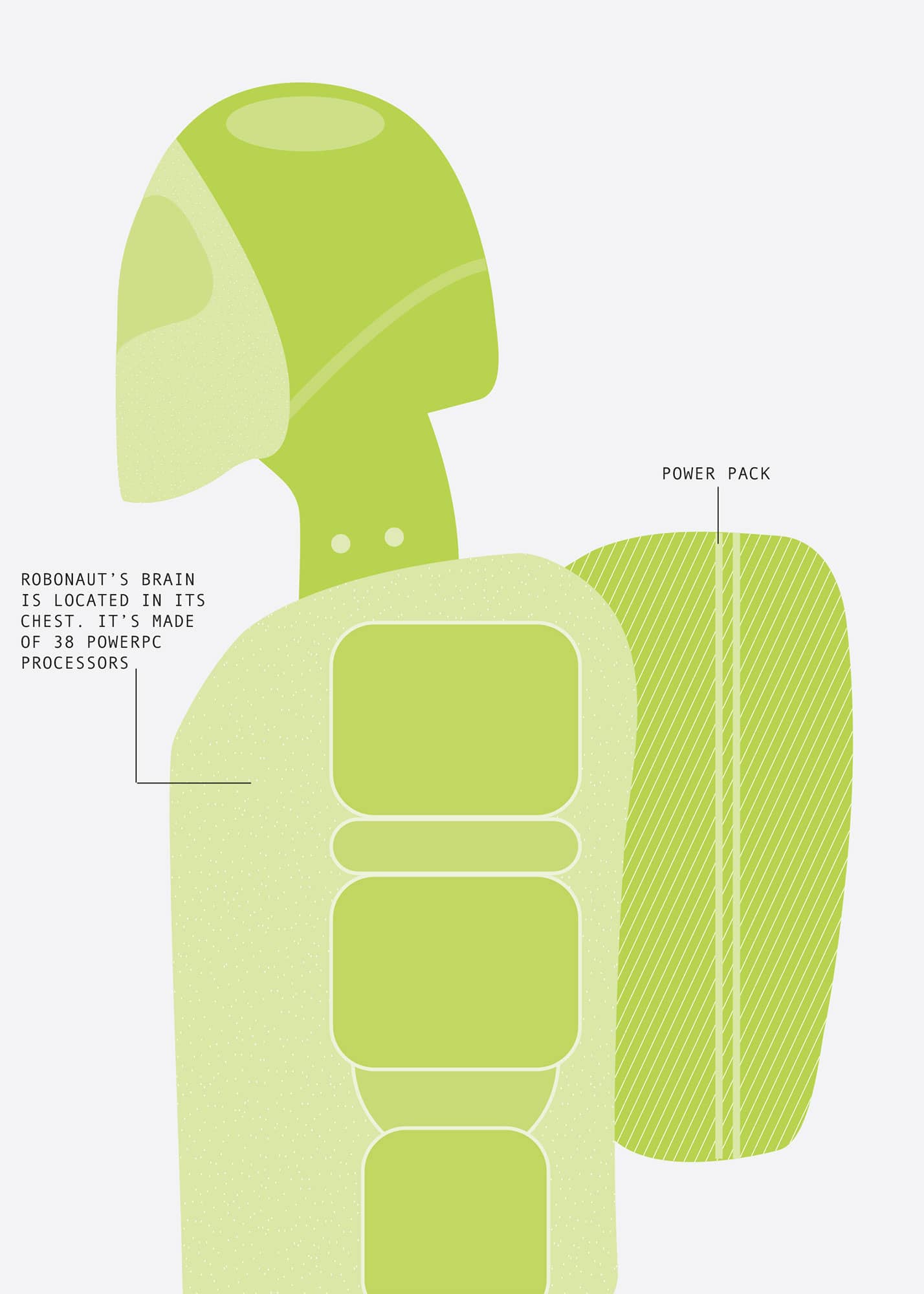

ROBONAUT 2

| Specification | Value |
| --- | --- |
| Height | 2.4m (7.9ft) |
| Weight | 150kg (330lb) |
| Year | 2011 |
| Construction material | Composite |
| Main processor | PowerPC processors |
| Power source | Battery pack/external power |

Robonaut is a robotic astronaut, not a replacement for humans in space, but a tireless helper. This autonomous, if unimaginative, assistant carries out simple, repetitive tasks so human astronauts can concentrate on more important things than housekeeping.

Extra-Vehicular Activity or EVA, which involves the astronaut donning a bulky space suit and leaving the International Space Station (ISS) to work on the outside, is demanding and exhausting. Astronauts only carry out EVA for a few hours at a time. It is also extremely hazardous: the slightest tear in a spacesuit lets the air inside escape into the vacuum of space, putting the astronaut’s life in immediate danger. Even if an astronaut is careful, micrometeoroids and orbiting debris are a constant threat. EVA is also impossible during solar storms due to high radiation levels.

By contrast, Robonaut does not mind vacuum. It is the same size and shape as a human, so it can use the full range of existing tools developed for astronauts, and is the ideal candidate for jobs that nobody else wants to do. The current version, Robonaut 2, was delivered to the ISS in 2011, although the Robonaut project dates back to 1997.

Robonaut’s main talent is its dexterity, boasting the same skill at manipulation as a human. Its arms have seven degrees of freedom, while each five-fingered hand has twelve degrees of freedom and a gripping power of over 2kg (4.4lb). Even Robonaut’s neck has three degrees of freedom, allowing it to rotate and tilt its head to look around, with four visible-light cameras and an infrared camera. Robonaut’s backpack contains a power-conversion system that plugs into the ISS’s electricity supply.

Robonaut’s brain, which includes thirty-eight PowerPC processors, is located in its torso. It handles the input from over three hundred sensors around its body. Many of these sensors are in the hands: every joint has two position sensors, a pressure sensor to provide a sense of touch and four temperature sensors.

The eyes provide full 3D vision, although at present this works well only in good lighting and struggles when conditions are less than ideal. The developers are seeking to improve it with better object-recognition algorithms. While terrestrial robots work in unpredictable surroundings, everything in Robonaut’s environment has been manufactured to a known size and shape, which should make it easier to catalogue and classify every object it needs to recognise.

Robonaut originally consisted of an upper body only, about 1m (3.3ft) tall, as what mattered was providing a pair of hands. It weighed 150kg (330lb), but getting around was not considered to be a problem in zero gravity. In 2014, a pair of legs, technically known as climbing manipulators, arrived at the ISS to turn Robonaut into a complete humanoid. Each leg has seven joints, and instead of a foot it has an ‘end effector’ like a pincer, which can take hold of handrails like a climbing monkey. A vision system will be added to each of these, so that Robonaut can see the footholds it is reaching for.

The developers have experimented with other configurations, including a version of Robonaut for planetary exploration with a humanoid torso mounted on a wheeled chassis. This combination is known as Centaur.

For the present, Robonaut only takes on the most menial of tasks in the ISS: cleaning handrails and carrying out routine measurements of air circulation within the space station using a handheld meter. The robot also has its own ‘taskboard’, like a child’s playset with buttons to push and switches to flip, as a practice device. Robonaut is not yet permitted to press real buttons.

Astronauts require a mass of life-support equipment to provide them with food, water and breathable air; robots need none of these. A long-duration mission, such as a manned expedition to Mars, would therefore rely heavily on robot labour to support the small human crew, so there would probably be more robonauts than astronauts. Unlike the robots of science fiction, which invariably seem to rebel, they will probably be the most reliable members of the team.

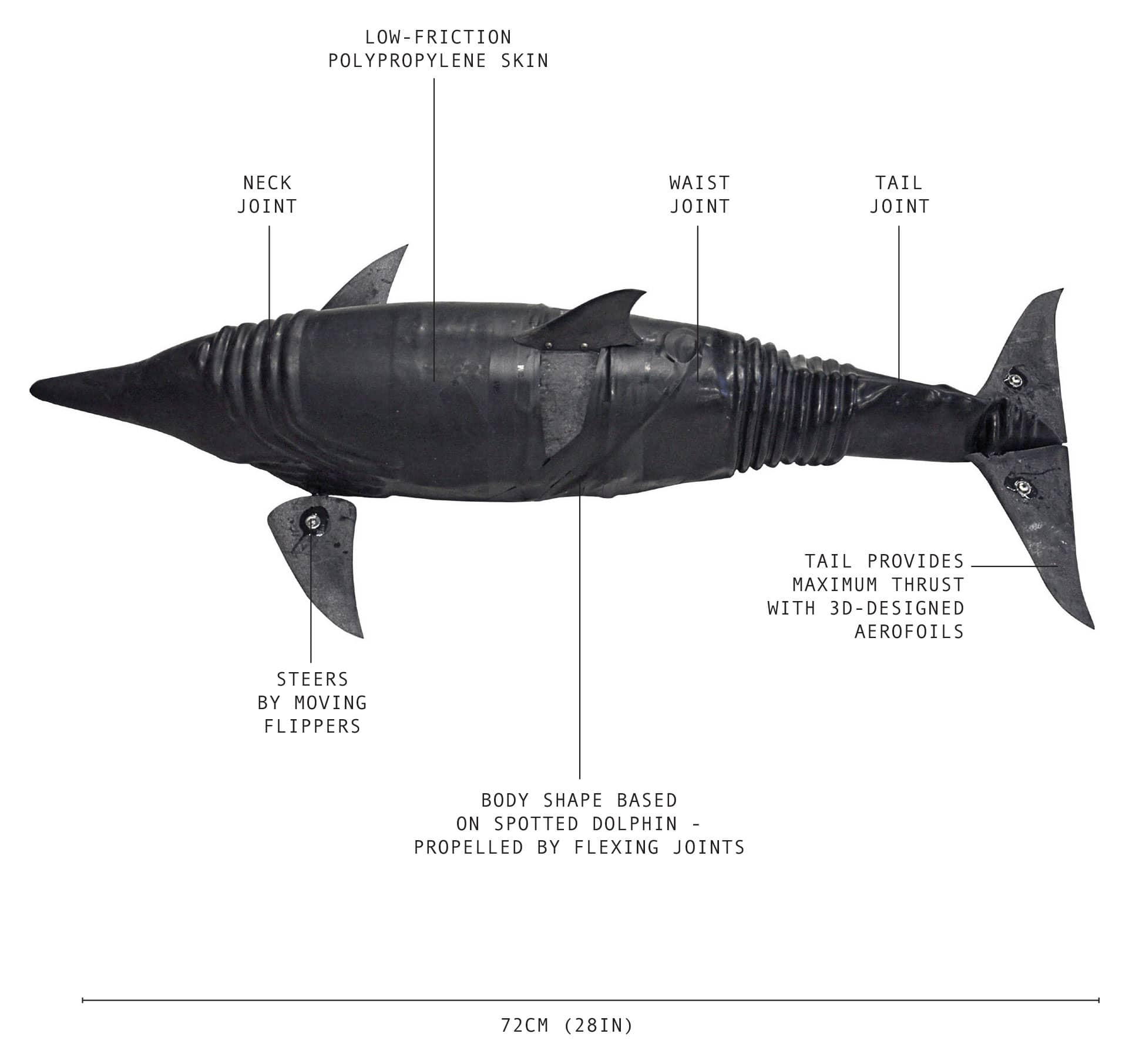

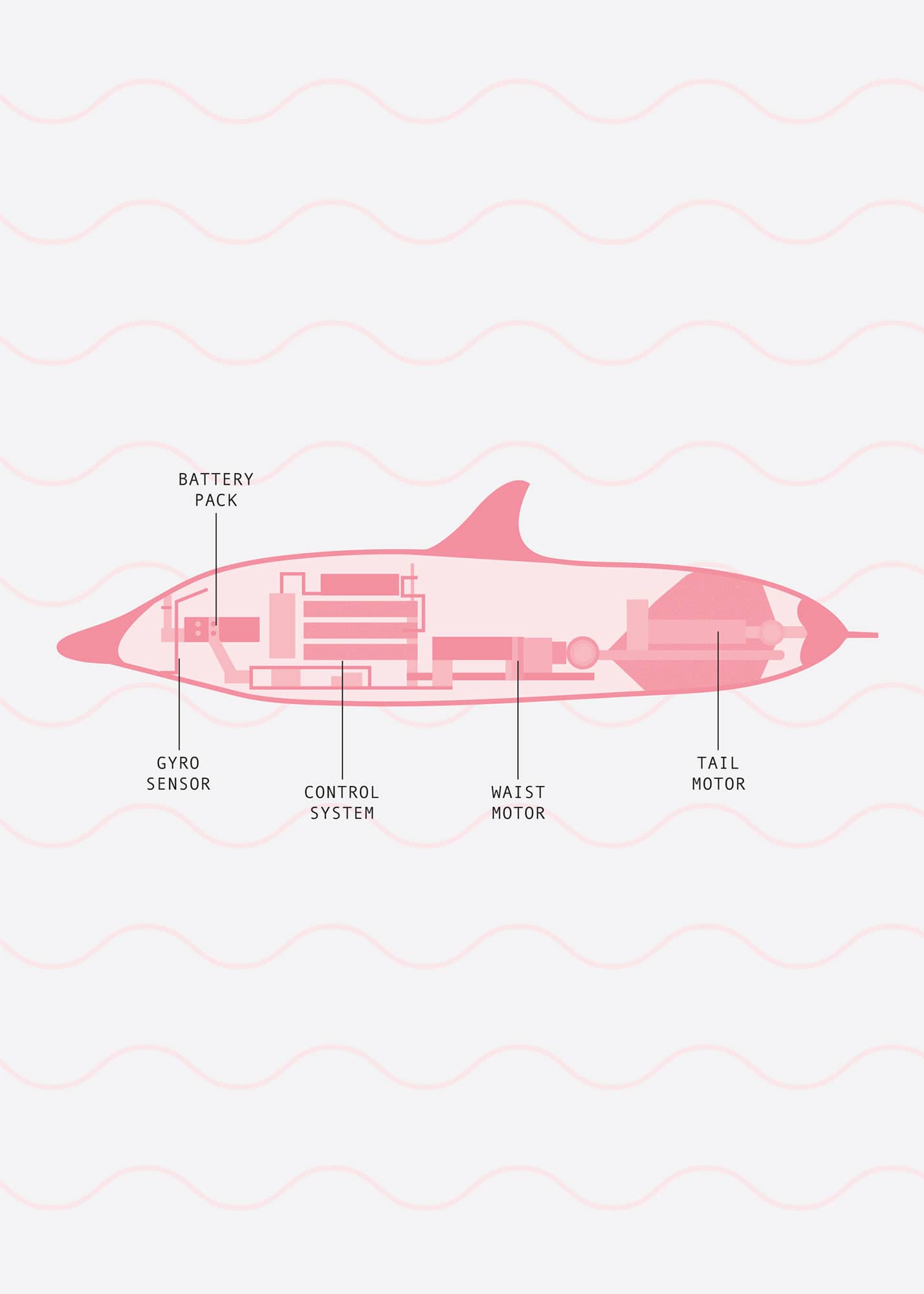

DOLPHIN

| Specification | Value |
| --- | --- |
| Length | 72cm (28in) |
| Weight | 4.7kg (10.4lb) |
| Year | 2016 |
| Construction material | Titanium and polypropylene |
| Main processor | ARM Cortex M3 |
| Power source | Battery pack/external power |

A Chinese underwater robot shaped like a dolphin, which moves by flexing its body and tail, is much faster than conventional rigid designs with propellers. It looks just like a real dolphin, and can even jump out of the water, pointing the way to future generations of agile, fish-like machines.

Water is roughly eight hundred times denser than air, and the drag it exerts makes submarines much slower than surface vessels. Boat designers improve speed by minimising contact between the hull and the water, and sailing yachts even have ‘flying keels’ with hydrofoils to lift them clear of the waves. This approach is useless underwater, so unmanned underwater vehicles are limited to a few knots, and reaching even that speed requires a disproportionate amount of power.

Nature is way ahead of us. Fish flick past effortlessly underwater, and dolphins can be seen ‘porpoising’, making a series of high-speed leaps out of the water. In 1936, biologist James Gray calculated that it ought to be impossible for dolphins to swim at 20mph (32kmh) because the underwater drag was too great. The puzzle was not solved until 2008, when another biologist – one Frank Fish – showed that the dolphin’s tail is far more efficient at producing thrust than anyone had realised.

Roboticists have been borrowing ideas from nature ever since Leonardo da Vinci, and a team led by Professor Junzhi Yu at the Chinese Academy of Sciences in Beijing modelled its machine on a spotted dolphin. It is a scaled-down version, at 72cm (28in) long and weighing under 5kg (11lb). The design emphasises streamlining, with a motor-driven tail shaped for maximum thrust. The dolphin’s tail fins, known as flukes, and its flippers are designed as 3D hydrofoils.

The robot dolphin’s body has three flexible, powered joints: a neck joint, a waist joint and a caudal joint between the body and the tail. These flex to propel the robot, while the small side fins provide steering. The parts of the skeleton requiring strength are made of titanium and the rest is aluminium and nylon, with a polypropylene skin and flippers that give the body its flexibility. A lithium-ion battery provides power for three hours of operation.

The dolphin swims at an impressive 4.5mph (7.2kmh), or almost three body-lengths a second. From a design perspective, what matters is the robot’s ‘swimming number’, the distance it travels with each stroke of its tail, measured in body lengths. The robot’s swimming number is close to the value for a real dolphin, a good indication that Yu’s team has successfully reverse-engineered the dolphin’s swimming technique. The robot dolphin can even copy the dolphin’s feat of jumping clear of the water, something no underwater robot had achieved before. The advanced design required both a thorough understanding of dolphin hydrodynamics and the ability to translate that understanding into a machine that could be built using existing materials.
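The figures above can be checked with a little arithmetic. The sketch below converts the quoted top speed into body lengths per second and defines the swimming number in its usual form, speed divided by (tail-beat frequency times body length). The tail-beat frequency is not given in the text, so the 4Hz used here is a hypothetical value chosen purely for illustration, as are the function names.

```python
MPH_TO_M_PER_S = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def body_lengths_per_second(speed_mph: float, body_length_m: float) -> float:
    """Convert a top speed in mph into body lengths per second."""
    return speed_mph * MPH_TO_M_PER_S / body_length_m


def swimming_number(speed_m_per_s: float, tail_beat_hz: float, body_length_m: float) -> float:
    """Distance travelled per tail stroke, in body lengths: U / (f * L)."""
    return speed_m_per_s / (tail_beat_hz * body_length_m)


ROBOT_LENGTH_M = 0.72   # 72cm, from the specification table
ROBOT_SPEED_MPH = 4.5   # top speed quoted in the text

print(f"{body_lengths_per_second(ROBOT_SPEED_MPH, ROBOT_LENGTH_M):.2f} body lengths per second")

# Hypothetical tail-beat frequency, for illustration only (not a published figure).
ASSUMED_TAIL_BEAT_HZ = 4.0
speed_m_per_s = ROBOT_SPEED_MPH * MPH_TO_M_PER_S
print(f"swimming number: {swimming_number(speed_m_per_s, ASSUMED_TAIL_BEAT_HZ, ROBOT_LENGTH_M):.2f}")
```

The first line confirms the ‘almost three body-lengths a second’ claim (about 2.8). Because the swimming number is normalised by body length, it lets a 72cm robot be compared directly with a full-sized dolphin.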

The current robot dolphin is an early version, but can already run rings around propeller-driven underwater vehicles as easily as a playful dolphin circles a human swimmer. Yu’s team is now looking at energy expenditure and the relationship between power and speed. This should lead to further increases in speed and efficiency, and longer, higher leaps. Maybe they will be the stars of a dolphinarium show soon!

More seriously, this type of propulsion should lead to underwater robots that swim as quickly and smoothly as fish, rather than churning the water inefficiently with propellers. They will be able to swim greater distances, manoeuvre more easily and manage bursts of speed that are impossible for current robots.

As well as carrying out scientific research, robot dolphins could take on industrial tasks, such as checking pipelines, locating fish and monitoring pollution. This form of propulsion is also far quieter than a propeller, so it is likely to be appealing to the military. Future submarines may find themselves being stalked by schools of stealthy, fish-like underwater robots.

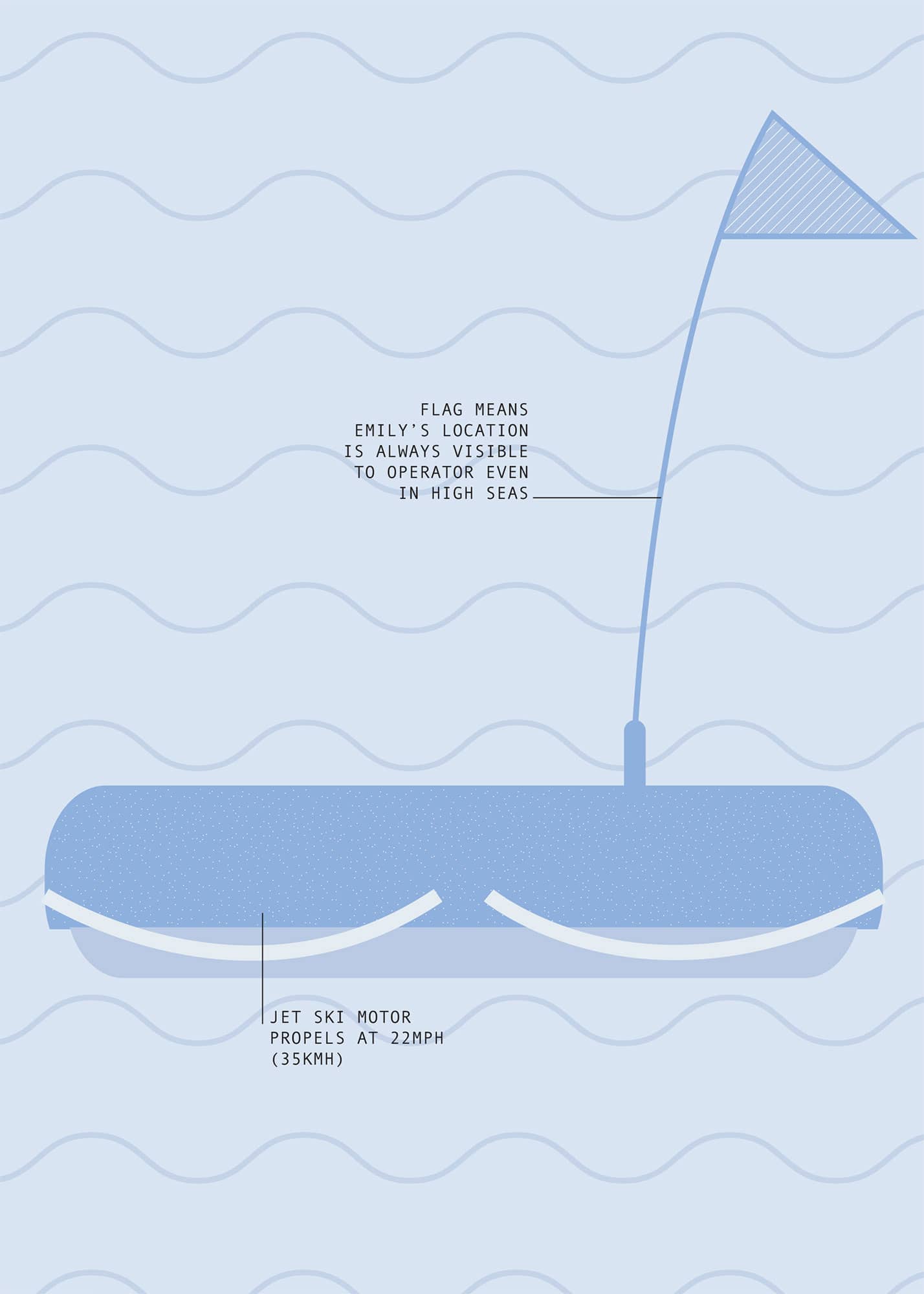

EMILY

| Specification | Value |
| --- | --- |
| Length | 1.2m (3.9ft) |
| Weight | 11kg (24lb) |
| Year | 2011 |
| Construction material | Kevlar and composites |
| Main processor | Commercial processors |
| Power source | Battery pack |

EMILY is a remote-controlled lifeguard that can swim faster than any human and that has already saved hundreds of lives. EMILY’s name is short for Emergency Integrated Lifesaving Lanyard; it is 1.2m (3.9ft) long and weighs just over 11kg (24lb). Its size belies its power: EMILY has an engine like a jet ski, which sends the robot flying through the water at 22mph (35kmh), four times faster than the best Olympic swimmer. The design has no external propeller blades to tangle or cause injuries.

Made of Kevlar and aircraft-grade composite materials, EMILY is, according to inventor Bob Lautrup of marine robotics company Hydronalix Inc., ‘virtually indestructible’. Its rugged construction means that it can survive almost anything; collisions with rocks or reefs at full speed cause no damage. Conditions that would be dangerous for a human lifeguard, such as 10m (33ft) waves, pose no problem for EMILY.

The robot can be thrown off a pier or from a boat, or even dropped from a helicopter, then piloted by remote control to a person having trouble in the water. Being highly buoyant, with grab handles to hold on to, it can act as an emergency flotation device for up to six people as well as carrying additional life jackets in the case of large groups.

Finished in a vivid orange, red and yellow colour scheme and carrying a flag that is visible above the waves, the robot also has lights for night rescues. A two-way radio allows rescuers to talk to people in the water – often necessary when they are panicking – and a video camera lets rescuers see them. This is supplemented by a thermal imager, which helps to find people in the water at night or in severe weather. The robot can take a 700m (2,300ft) rescue line to a boat or person, and can even tow boats itself, a powerful attribute that helped rescue 300 Syrian refugees in 2015.

Lautrup says that EMILY was invented in 2009, when his team was testing a highly mobile waterborne robot at a beach in Malibu, California. They saw Los Angeles County Fire Department lifeguards making rescues and realised that the robot would be ideal for sending flotation to a swimmer in trouble. The first version was fielded the next year.

There are already almost three hundred EMILY robots in service with coastguards and navies around the world. More sophisticated versions are on the way. One upgrade is a sonar sensor that can sense what is happening underneath the water, as the victim may not be easily visible from the surface. The challenge is to make the interface simple enough that the operator can see clearly what is happening in churning water. The team is also looking at other sensors to help rescue teams quickly locate someone who has fallen in the water from a ship or pier.

A project known as ‘smartEMILY’ is aiming to provide the robot with artificial intelligence (AI). This would help it to distinguish between people in the water who are conscious and active – and who would be able to hold onto the robot – and those who are unconscious and will need help from a human lifeguard.

Looking further forward, EMILY might be fitted with a soft manipulator arm or an inflatable device that can be placed under a swimmer who is unconscious or otherwise unable to hold on for themselves.

EMILY may be only one element of a team of robots. Some lifeguards are experimenting with using drones to patrol beaches and even to drop flotation devices. These could help locate swimmers and ensure that they stay afloat until EMILY can reach them and tow them to safety.

EMILY may not have quite the same appeal as the buff, bronzed members of the Baywatch team, and being rescued by a robot may seem a little unromantic. But when it is a matter of saving lives, EMILY is a star to match any human.

ATLAS

| Specification | Value |
| --- | --- |
| Height | 1.88m (6.2ft) |
| Weight | 75kg (165lb) |
| Year | 2013 |
| Construction material | Aluminium and titanium |
| Main processor | Commercial processors |
| Power source | Battery |

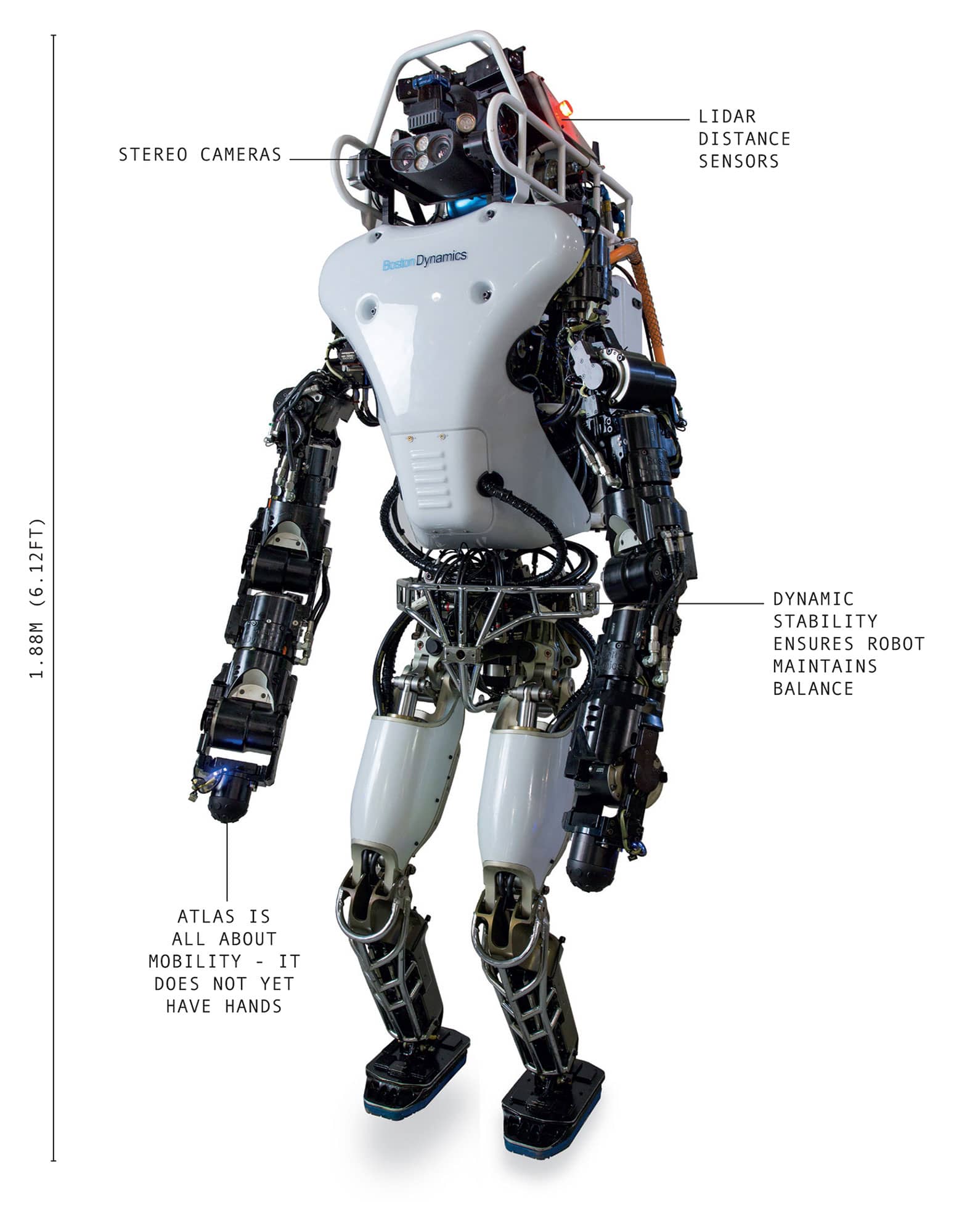

Boston Dynamics is famous for machines that move and react to their surroundings in the same ways that living things do, and Atlas is their most advanced humanoid robot to date. Atlas is the heir to Leonardo da Vinci’s robot knight, a step towards a general-purpose humanoid robot, with a level of agility that Leonardo could only dream of.

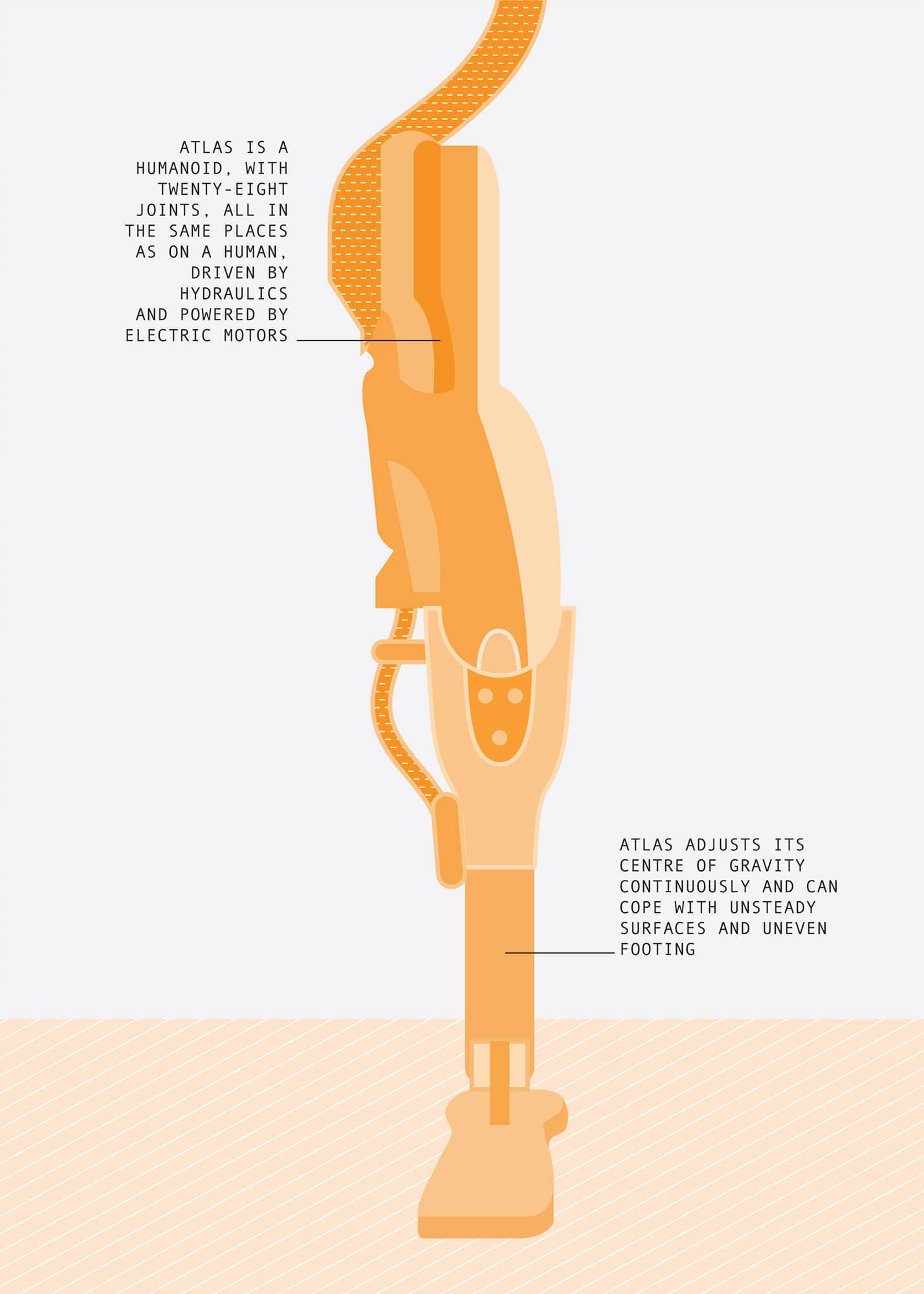

Many of the company’s previous machines, such as the celebrated BigDog, have been quadrupeds. Staying upright is much easier on four legs than two. Atlas is humanoid, with twenty-eight joints, all in the same places as those of a human, driven by hydraulics and powered by electric motors.

Marc Raibert, the company’s CEO, says that they do not directly copy nature, but rather they replicate what it does, an approach he refers to as ‘biodynotics’. Most robots have static stability, staying upright by keeping their centre of mass directly over the support provided by their feet. But to move fast, you need to anticipate your forward motion and put your feet where they will be needed next.

‘Think of the runner who wants to accelerate, putting their feet way behind them at the start of a race’, says Raibert. ‘Or the runner slowing down at the end of the race, putting their feet way in front of themselves, and leaning back. That is dynamic stability.’
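Raibert’s distinction between static and dynamic stability can be made concrete with the linear inverted pendulum, a standard textbook model of walking in which the body is treated as a point mass balanced on a stiff leg. Boston Dynamics has not published Atlas’s controller, so this is not how Atlas works; it is a one-dimensional sketch, and the function names and numbers are hypothetical. A statically stable robot keeps its centre of mass over its feet; a dynamically stable one works out where to put its next foot, the so-called capture point, so that its forward motion comes to rest over it.

```python
import math

G = 9.81  # gravitational acceleration at Earth's surface, m/s^2


def statically_stable(com_x: float, foot_back: float, foot_front: float) -> bool:
    """Static stability: the centre of mass sits over the support area of the feet."""
    return foot_back <= com_x <= foot_front


def capture_point(com_x: float, com_velocity: float, com_height: float) -> float:
    """Dynamic stability in the linear inverted pendulum model (1D, metres).

    Returns the spot where a foot must land so that the moving centre of mass
    comes to rest over it: x + v / omega, with omega = sqrt(g / z).
    """
    omega = math.sqrt(G / com_height)
    return com_x + com_velocity / omega


# A walker whose centre of mass is 0.9m high, moving forward at 1m/s,
# needs to step about 0.30m ahead of where its centre of mass is now.
step = capture_point(com_x=0.0, com_velocity=1.0, com_height=0.9)
print(f"place foot {step:.2f} m ahead")
```

This matches the runner analogy: the faster the body is moving, the further ahead the foot must land to stop it, which is why the slowing runner puts their feet well in front. Stepping short of the capture point instead lets the body keep tipping forward, which is how the accelerating runner gains speed.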

Atlas has a somewhat peculiar gait, taking short, quick steps at regular intervals. It never seems to stand quite still. Even when it is not going anywhere it seems to march on the spot as it adjusts its footing. Jumping, running and hopping all rely on dynamic stability, as do the actions of dancers or gymnasts. Atlas has a good – though not perfect – sense of balance, and can recover itself and stay upright if it slips or is shoved. Like a human, Atlas adjusts its centre of gravity continuously and can cope with unsteady surfaces and uneven footing. If Atlas does fall over, it gets back to its feet without assistance. It can also pick up a heavy box and place it on a high shelf, a feat that requires a good mastery of balance.

Stereo vision and LIDAR range sensors allow Atlas to assess its environment and avoid obstacles. It requires a human operator, and Boston Dynamics is not specific about how much autonomy Atlas currently possesses.

Atlas can deal with the roughest of terrain, using its hands for support and balance where it needs to go on all fours to climb over or crawl under obstacles. The developers hope Atlas will eventually be able to swing from one handhold to the next, and ultimately climb rock faces or negotiate obstacle courses better than a human.

Atlas has not reached that stage yet, judging by the 2015 DARPA Robotics Challenge in which it competed. The challenge was inspired by the Fukushima disaster, and involved entering an area that might be contaminated by radiation. Robots had to operate controls that would stabilise a reactor, open and close valves and clear away debris. This required driving a vehicle, climbing a ladder, using a tool to break a concrete panel, and opening a door – all things that are easy for humans but difficult for robots. The results suggest that robots are not quite ready yet – for example, they had difficulty with some obstacles because they were unable to use handrails.

A new, more capable version of Atlas was unveiled in 2016, after the Robotics Challenge. It is a work in progress; like many of Boston Dynamics’ machines, Atlas is a testbed rather than a finished product. Atlas itself may never be commercial, but there is no doubt that its technology – dynamic stability, agility and the ability to carry out human tasks – will feature in future robots.

‘Our long-term goal is to make robots that have mobility, dexterity, perception and intelligence comparable to humans’, said Raibert. Such machines could be emergency-response robots, versatile factory workers, household helpers … or almost anything else.

CURIOSITY

| Specification | Value |
| --- | --- |
| Height | 2.2m (7.2ft) |
| Weight | 899kg (1,981lb) |
| Year | 2012 |
| Construction material | Titanium |
| Main processor | BAE RAD750 |
| Power source | Radioisotope |

If robots are good for jobs that are ‘dirty, dangerous and dull’, then they are perfectly suited for missions to Mars. Dirty in the sense of continuous exposure to harmful radiation and dangerous because of the risks of space travel – twenty-six Martian missions have ended in failure – and dull, with the trip typically taking seven months. Robots also do not need to return to Earth, whereas a return leg would add tremendously to the cost and complexity of a crewed mission. This is why the only Martian explorers to date have been robots.

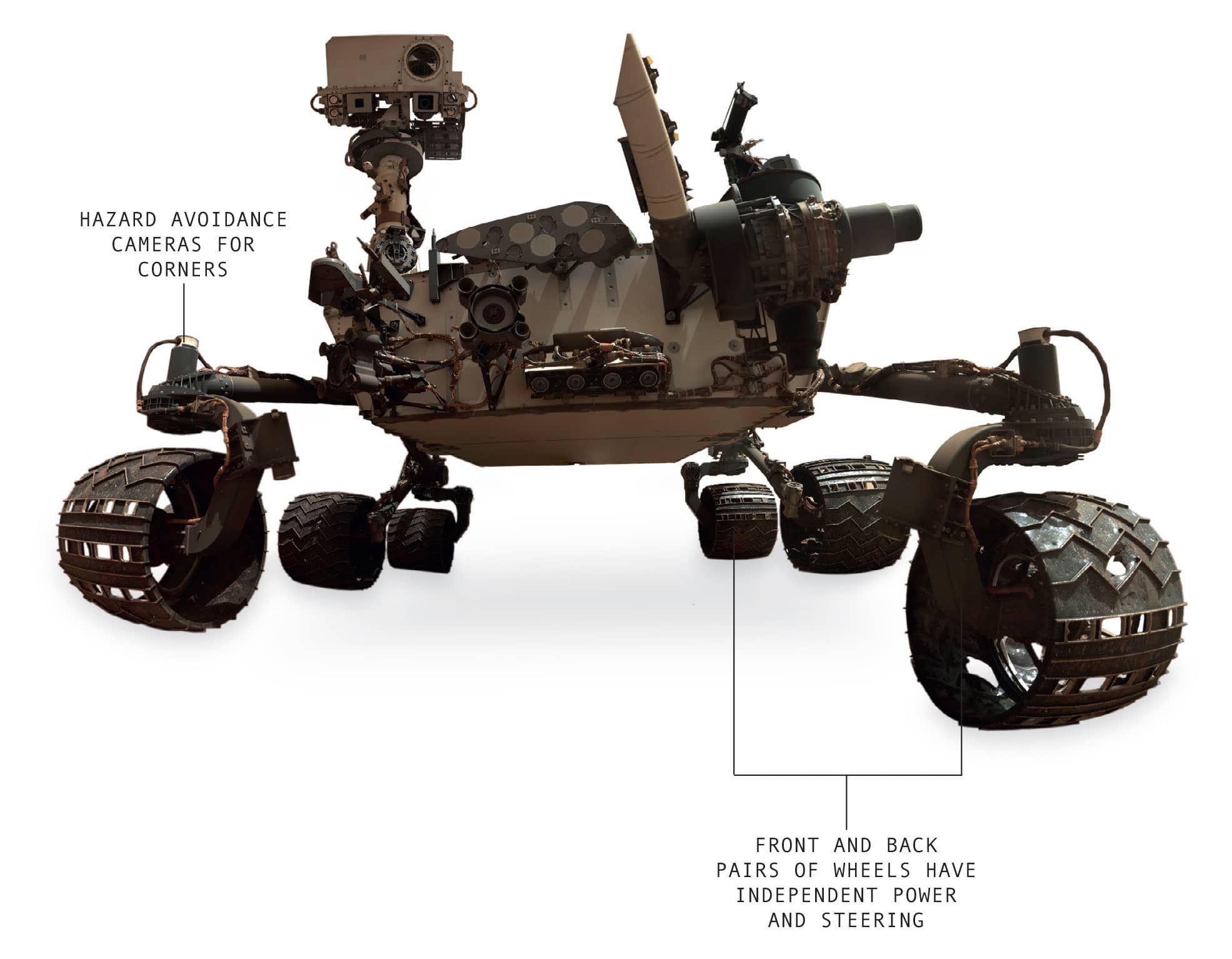

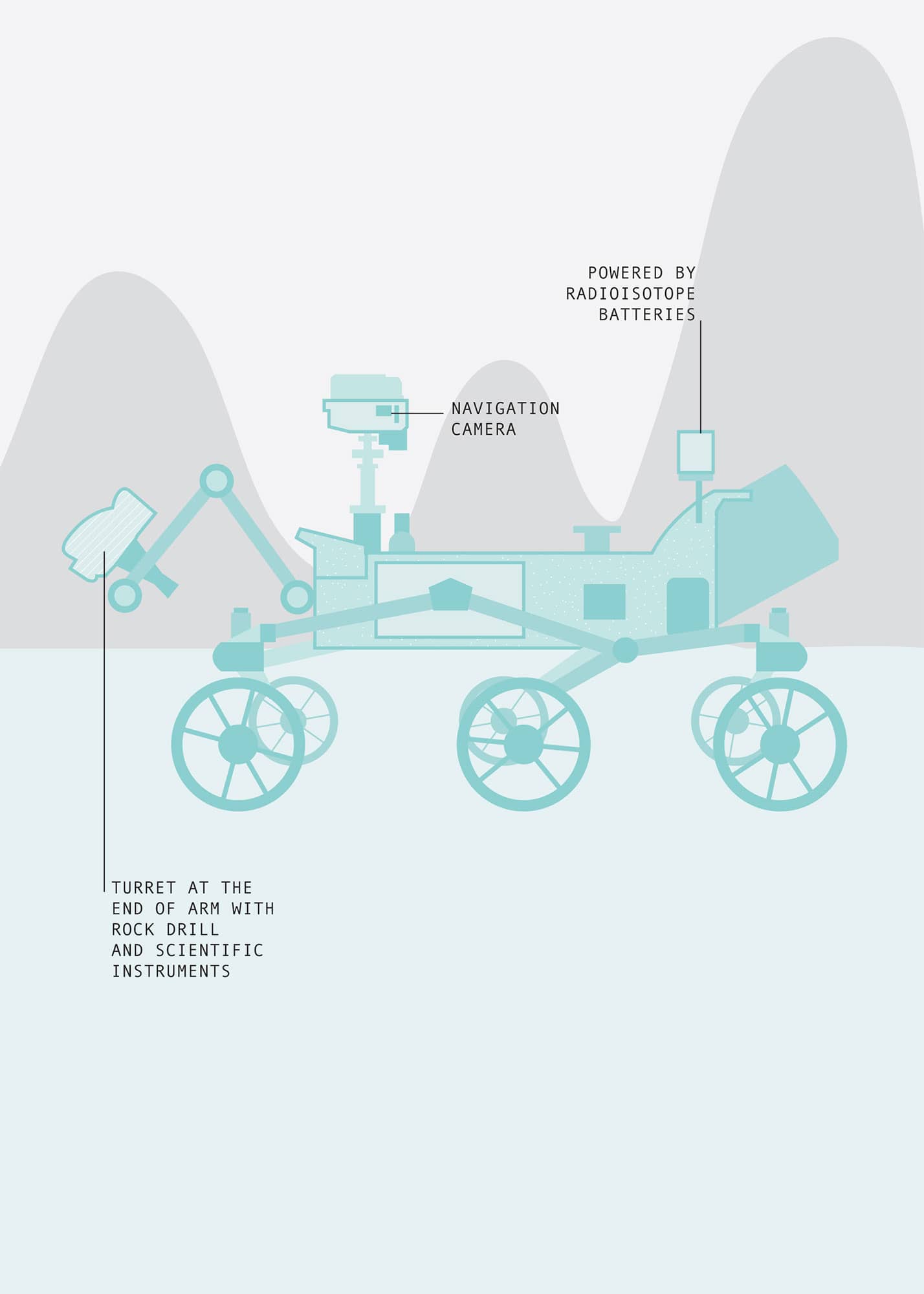

Curiosity landed on Mars in August 2012 and is still going strong. It is the most advanced planetary exploration robot ever, with a wide array of scientific instruments to search for traces of life on Mars. It follows NASA’s Sojourner, which landed in 1997, and Spirit and Opportunity, launched in 2003. Sojourner weighed just 11kg (24lb), Spirit and Opportunity 180kg (396lb), but Curiosity is the size of a small car at 899kg (1,981lb) and 3m (9.8ft) long. It is also vastly more capable than its predecessors.

Curiosity has six large wheels, each with its own motor, and the front and back pairs can be steered independently. Unusually, it is powered by a radioisotope thermoelectric generator, a kind of nuclear battery that converts heat from radioactive decay into electricity. Such power sources would be too expensive and too environmentally hazardous for Earth, but they suit Mars because they keep working for many years. Despite the nuclear power source, Curiosity moves agonisingly slowly: its top speed is about 4cm (1.6in) per second, less than half as fast as a tortoise.

Getting Curiosity to Mars took years of work and billions of dollars. One careless move might overturn the robot, get it stuck, or damage it, ending the mission. The distance between Earth and Mars means the time taken for radio signals to travel between them is between four and twenty-four minutes, so Curiosity cannot be controlled in real time. Each move is planned well in advance, and operations are conducted at a slow and careful pace.
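The delay follows directly from the distance divided by the speed of light. The sketch below makes the calculation explicit; the 225 million km figure is an assumed round number for a typical Earth–Mars separation, used only for illustration, and the function names are hypothetical. It also shows why real-time driving is out of the question: an operator has to wait for a full round trip before seeing the result of any command.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # radio waves travel at the speed of light


def one_way_delay_minutes(distance_km: float) -> float:
    """Time for a radio signal to cross the given distance, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S / 60


def round_trip_minutes(distance_km: float) -> float:
    """Command sent, result seen: the signal has to make the journey twice."""
    return 2 * one_way_delay_minutes(distance_km)


# Assumed round-number Earth-Mars distance, for illustration only.
distance_km = 225e6
print(f"one way: {one_way_delay_minutes(distance_km):.1f} min")
print(f"round trip: {round_trip_minutes(distance_km):.1f} min")
```

At that distance a command takes about twelve and a half minutes to arrive, and the operator waits around 25 minutes to see whether it worked, which is why each move is planned so carefully in advance.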

Curiosity has no fewer than eleven sets of cameras, including a pair of hazard-avoidance cameras (hazcams) at each corner to help it steer clear of unexpected obstacles automatically. Two pairs of navigation cameras (navcams) on the mast give a longer view for route planning. There are various science cameras too, including a wide-angle panoramic camera.

Curiosity carries some 80kg (176lb) of scientific instruments. One device fires a tiny laser beam that can vaporise rock from 7m (23ft) away; amazingly, the composition of the material it strikes can be determined from the spectrum of light emitted by the resulting puff of smoke. Curiosity’s arm, which has shoulder, elbow, and wrist joints, can collect and examine mineral samples. The arm ends in a rotating turret with a set of tools. One is the Mars Hand Lens Imager, Curiosity’s version of a magnifying glass, giving views of minerals detailed enough to distinguish features smaller than the thickness of a human hair. There are also a rock drill, a brush, and a device for scooping up samples of powdered rock and soil. Onboard tools include a neutron source to help detect water, believed to be an essential ingredient for life. There is even a miniature laboratory to analyse mineral specimens and sample the Martian atmosphere.

Everything on Curiosity is designed for robustness, with extensive redundancy. For example, there are two central control computers: if one breaks down, the other takes over automatically.

Every discovery leads to new questions. Curiosity has literally only scratched the surface of Mars, drilling holes 5cm (2in) deep. Scientists now believe that they will have to go much further down to find any traces of ancient Martian life. More sophisticated rovers are already on the drawing board and in development.

Human astronauts will reach Mars one day. And when they do, it will have been explored, mapped, and analysed by robots like Curiosity.

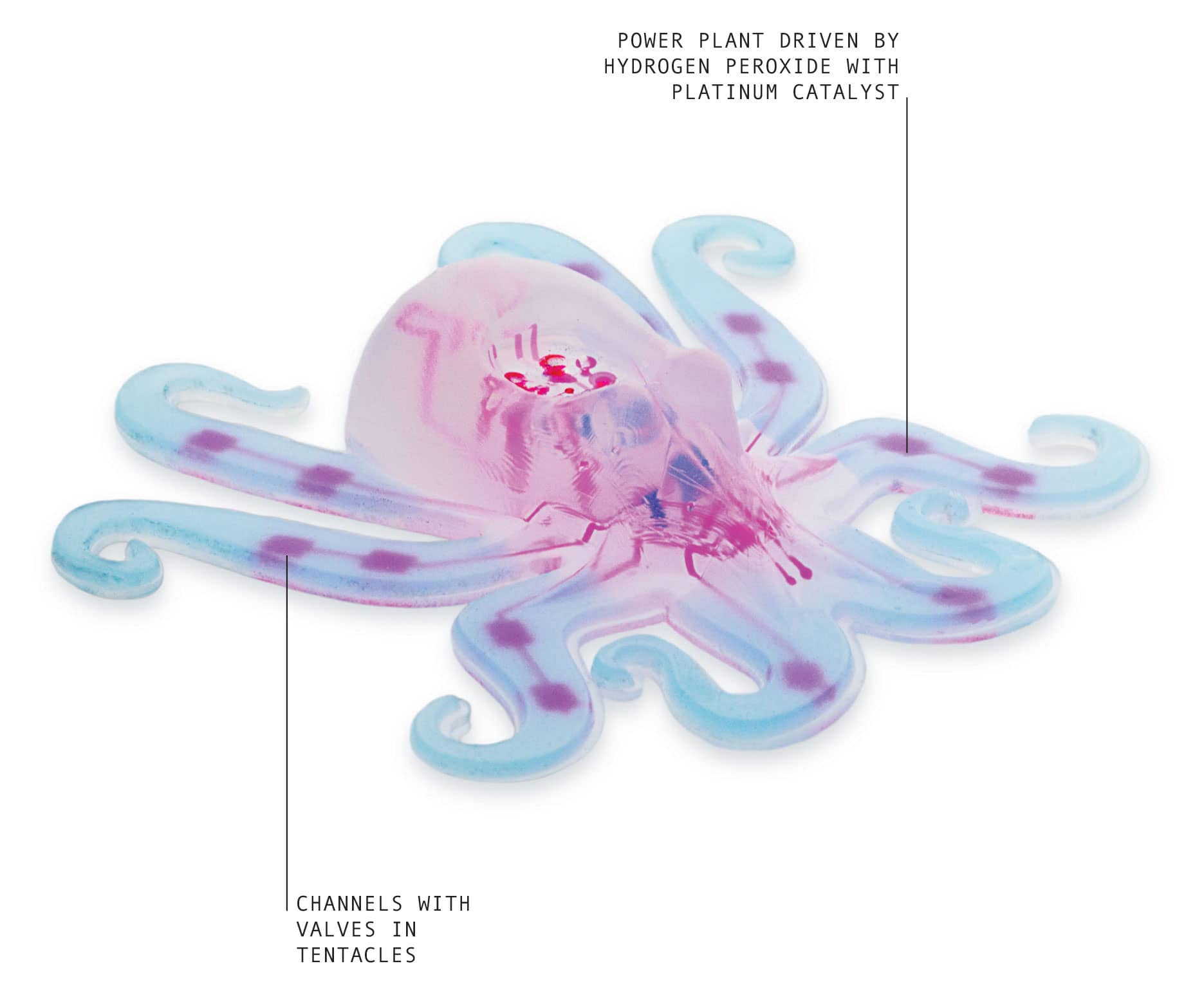

OCTOBOT

| Specification | Value |
| --- | --- |
| Height | 2cm (0.8in) approx. |
| Weight | 6g (0.2oz) |
| Year | 2016 |
| Construction material | Silicone rubber |
| Main processor | None (soft computer) |
| Power source | Chemical reaction |

Robots, like other machines, tend to be constructed of hard materials, such as metal and plastic. The only flexibility is in their joints, which is why so much effort goes into how those joints work and how to control them. Not everything in nature is hard though, and there are plenty of soft manipulators, from octopus tentacles to elephant trunks, and even long tongues used to snag insects or tear up vegetation.

Soft manipulators can be much simpler and easier to control than their mechanical counterparts. Programming a robot hand to take hold of a doorknob is tricky, as the hand must be positioned in a certain way with all the fingers in the right places. A tentacle, though, can simply wrap around the doorknob and envelop it. Soft robots are also better for handling delicate items, whether produce such as fruit or human patients in a hospital.

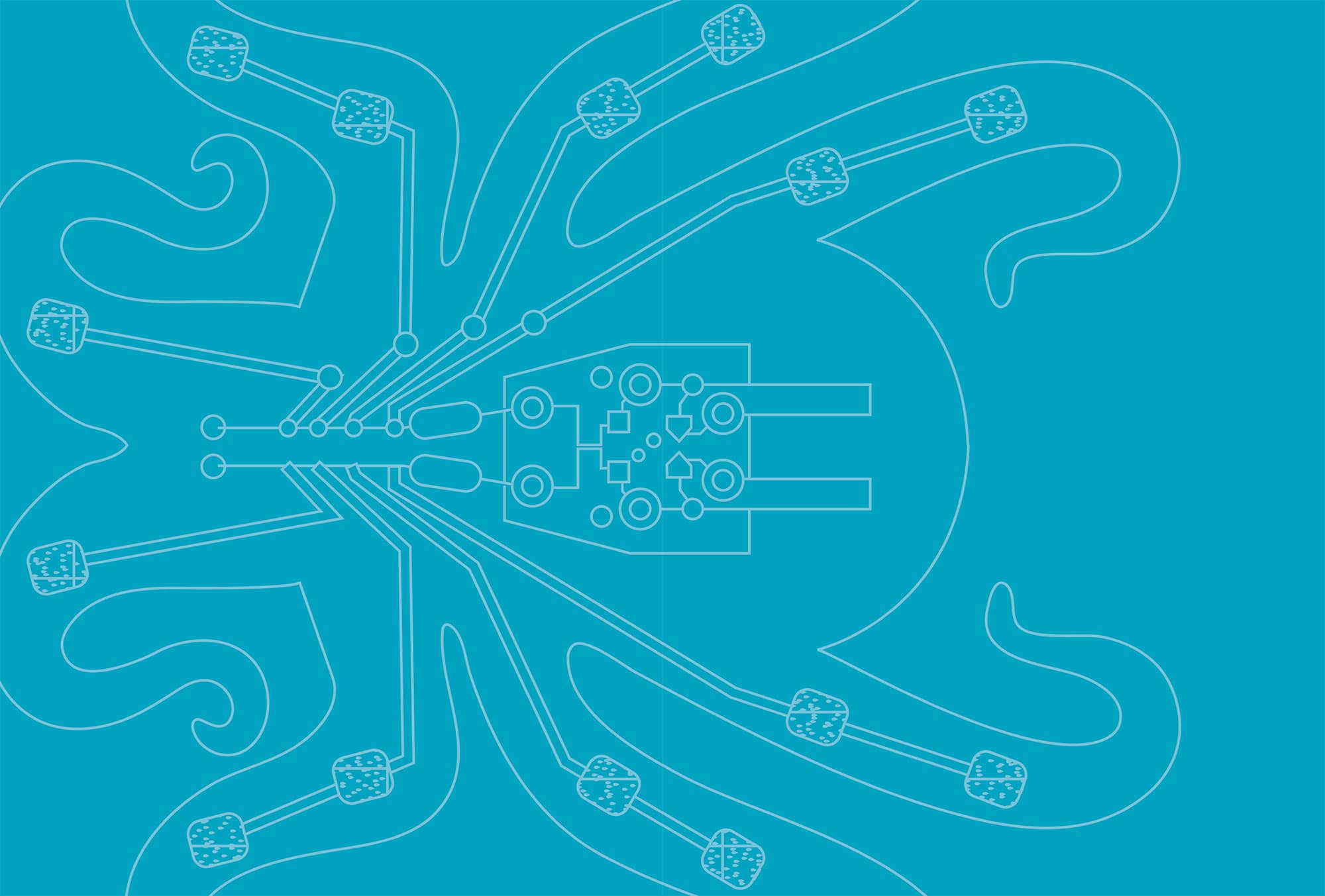

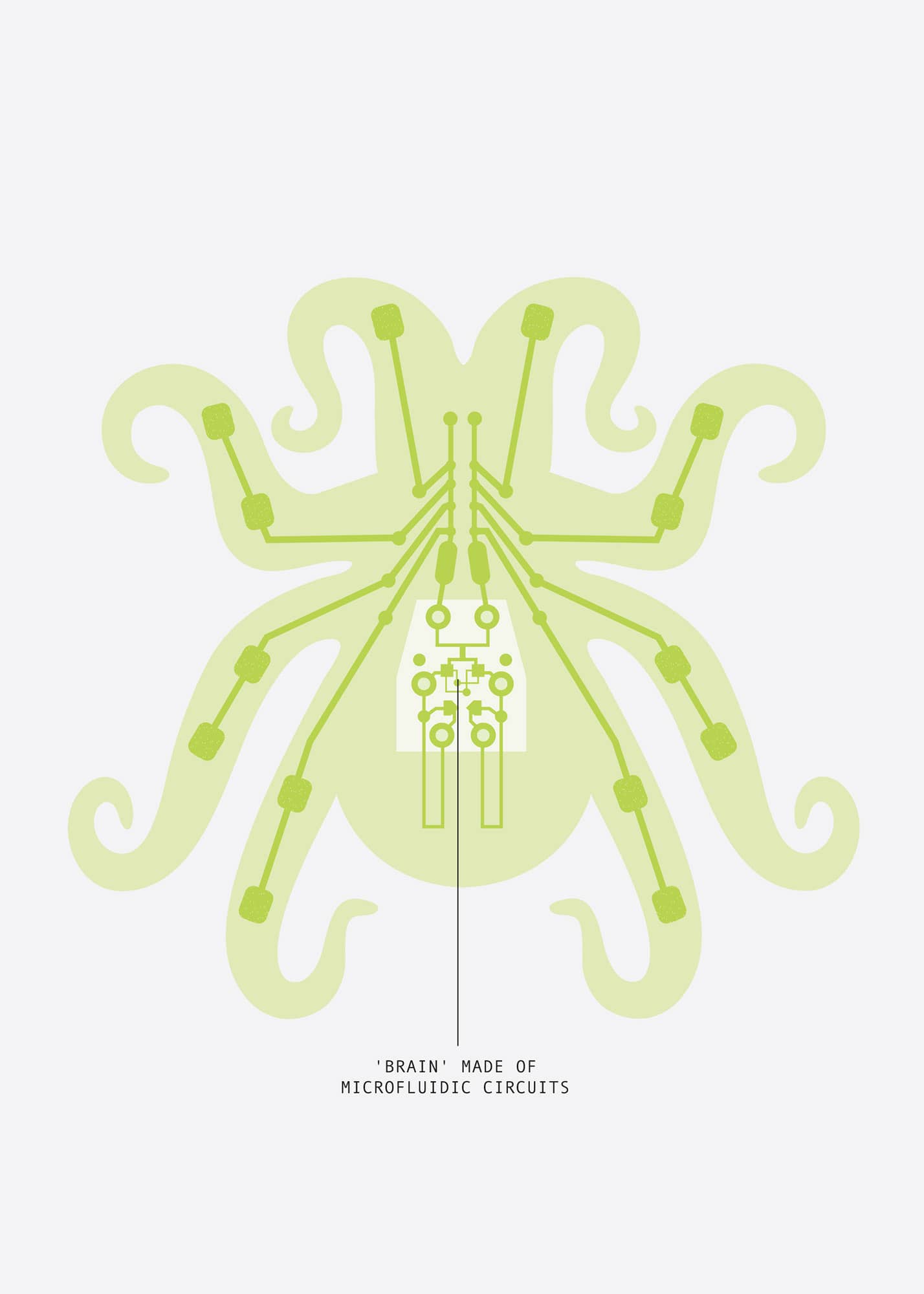

The most sophisticated soft robot to date is the Octobot, developed by Robert Wood and colleagues at Harvard University. At first glance it looks like a toy octopus, small enough to hold in your hand, and is made of 3D-printed silicone rubber components. Fluorescent dyes inside the translucent robot show up the tiny channels running down its limbs, making it look like a pop art sculpture. The Octobot does not just have soft limbs, but is an entirely soft machine with no electronic components. There are no batteries or motors or computer chips. Even the Octobot’s ‘brain’ is a set of flexible microfluidic circuits composed of pressure-activated valves and switches. Instead of electrons flowing around an electrical circuit, fluid runs around piping.

While previous soft robots have been tethered to a hydraulic or pneumatic tube, the Octobot is free roaming. It is powered chemically: hydrogen peroxide decomposes over a platinum catalyst into water and oxygen gas. The resulting pressure inflates and extends the limbs, like water pressure inflating a rolled-up hose. Valves and switches inside Octobot’s brain extend the arms in two alternating groups. When pressure rises at certain points, it closes one set of valves and opens another, directing flow into one half of the robot at a time. Once the second half has inflated, the circuit switches back to the first.
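The alternating behaviour of Octobot’s fluidic ‘brain’ works like a simple oscillator: fill one side until a pressure threshold is reached, then switch to the other. The toy simulation below captures only that switching logic. It is a hypothetical sketch in arbitrary units, not a model of the real microfluidic circuit; the fill rate, threshold and the labels ‘A’ and ‘B’ for the two groups of arms are all assumptions.

```python
def simulate_octobot(steps: int, fill_rate: float = 1.0, switch_pressure: float = 5.0) -> list[str]:
    """Toy model of a two-group alternating oscillator.

    Gas from the fuel reaction pressurises the currently open group of arms.
    When that group reaches the switching pressure, the 'valves' flip: the
    group vents and the other group starts to fill.
    """
    active = "A"      # which group of arms is currently inflating
    pressure = 0.0    # pressure in the active group, arbitrary units
    history = []
    for _ in range(steps):
        pressure += fill_rate
        history.append(active)
        if pressure >= switch_pressure:
            active = "B" if active == "A" else "A"  # flip to the other group
            pressure = 0.0                          # the new group starts empty
    return history


print("".join(simulate_octobot(20)))  # prints AAAAABBBBBAAAAABBBBB
```

The point of the sketch is that no processor decides when to switch: the threshold alone produces the rhythm, which is what lets Octobot move its arms without any electronics.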

Octobot can carry out a preprogrammed series of actions, with up to eight minutes of power provided by one millilitre of hydrogen peroxide. It has no practical applications, but is intended as a demonstrator to inspire other researchers with the potential of soft robotics and to show how challenges such as self-contained propulsion can be overcome. It may also be the precursor to more sophisticated Octobots. ‘Any future version would involve more complex behaviours, including the addition of sensors, and target simple modes of locomotion by introducing more articulation in the limbs’, says project leader Rob Wood.

The other striking feature of Octobot is that its simplicity makes it very cheap to mass-produce: the components cost less than £2, most of which is for the platinum catalyst. Developer Michael Wehner says that this could open the door to applications that require large numbers of robots – cooperative search and rescue for example, with a swarm of soft robots squeezing through rubble to find survivors.

Part of the motivation for developing soft robots is that they are conformal: they can slip through narrow openings and shape themselves into whatever space is available. DARPA has looked at soft robots for infiltration – machines that might slither through letterboxes or under doors, or travel through air ducts. Tiny versions may have medical applications, as they can move more safely and easily inside a body than hard robots.

While a completely soft robot may not be practical, soft manipulators may be a viable alternative to hard robotic limbs. They will be safer to have working around humans than unyielding, rigid arms, and so may prove useful for applications with the elderly, for example. In a future where robots are likely to be rubbing shoulders more often with humans, the softer those shoulders, the better.

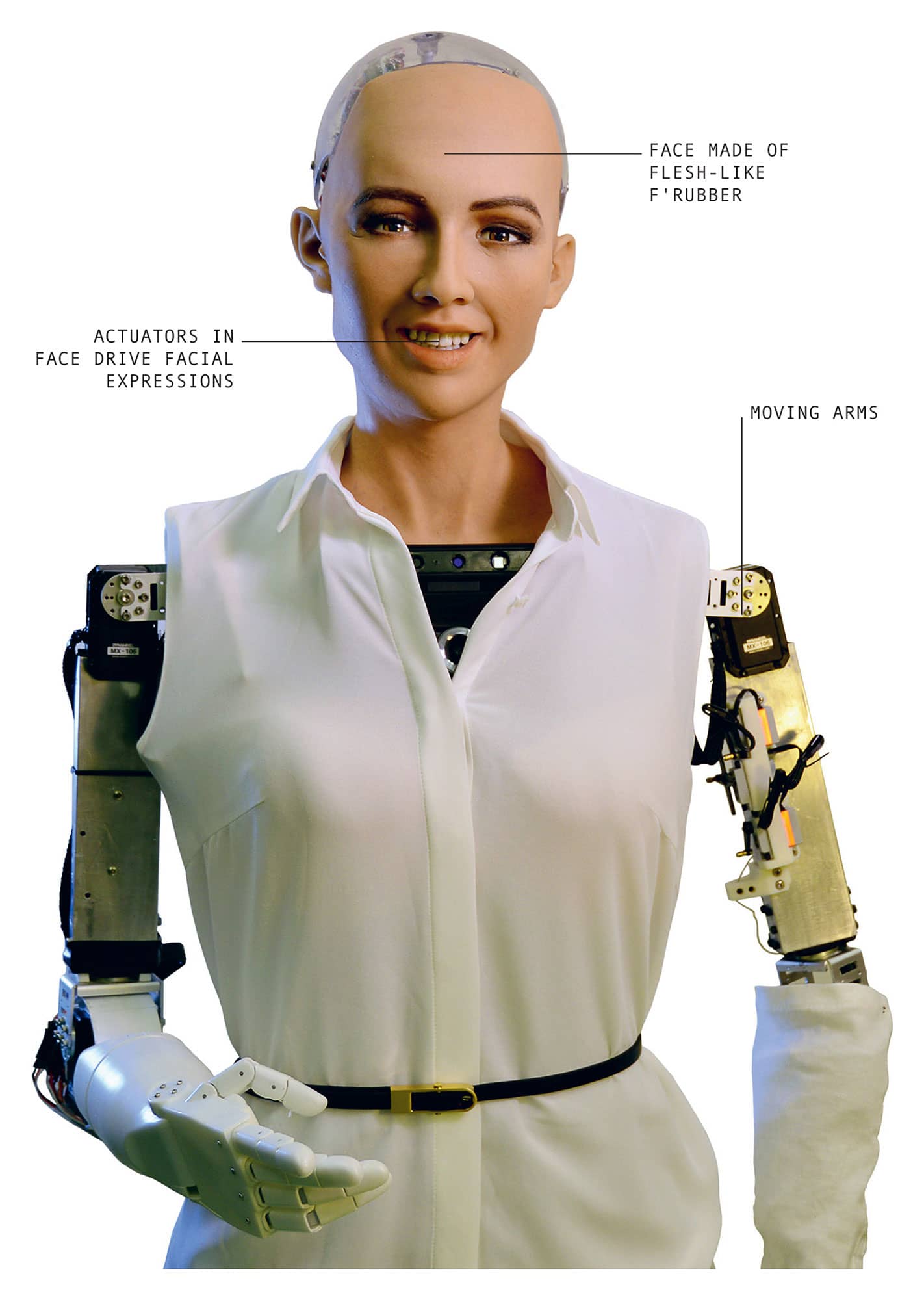

SOPHIA

| Specification | Value |
| --- | --- |
| Height | 1.75m (5.75ft) |
| Weight | 20kg (44lb) |
| Year | 2015 |
| Construction material | F’rubber face |
| Main processor | Commercial processors |
| Power source | Battery |

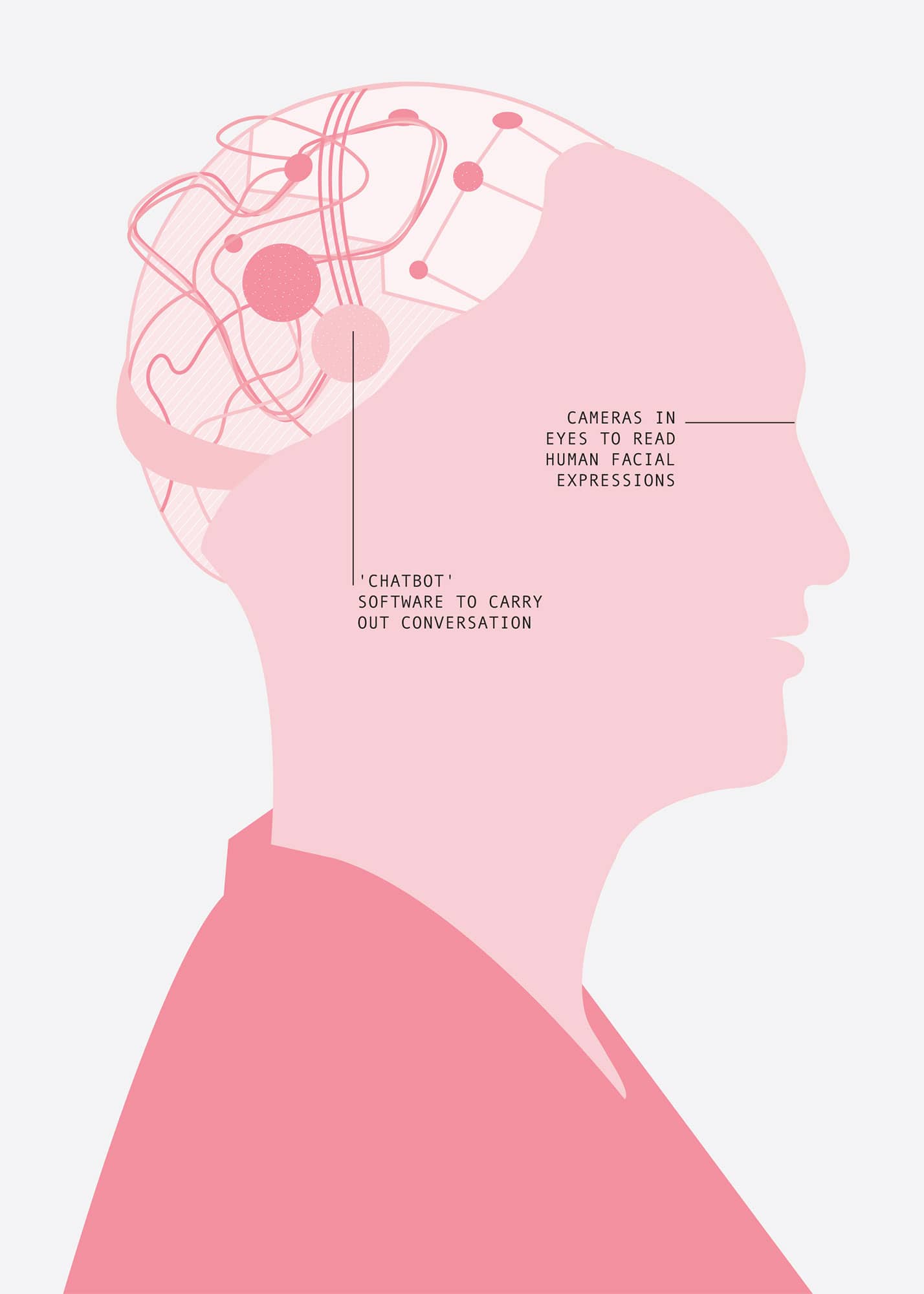

There is something uncanny about Sophia. Her face is reminiscent of a movie star, specifically Audrey Hepburn, with – according to makers Hanson Robotics – ‘high cheekbones, an intriguing smile, and deeply expressive eyes’. Sophia can convey emotion and has shown off her conversational skills on numerous chat shows. She is an interactive, entertaining robot.

Robots that imitate humans have a long pedigree, going back to Hero of Alexandria’s automata in the first century. The art was further developed in medieval times with ‘Jacks’, mechanical figures that appeared to strike clocks or carry out other actions. Leonardo’s robot knight is a particularly sophisticated version of this genre.

Walt Disney used the term ‘animatronics’ for the lifelike, moving machines he developed to populate his theme parks. One of Disney’s first creations, in the 1960s, was an animatronic Abraham Lincoln, which delivered speeches prerecorded by an actor. The machine’s lips moved in sync with the words, and it was programmed to produce facial expressions and gestures at appropriate points in the speech.

Sophia is the latest incarnation of the human-like robot. While the Geminoids are remote controlled and have no brainpower of their own, Sophia has artificial intelligence (AI) – technology of the sort used by Internet chatbots – and aims to provide something like a natural human conversation with a machine.

David Hanson, CEO of Hanson Robotics, notes that large areas of the human brain are devoted to recognising and responding to facial expressions. While the factoid that ninety per cent of communication is non-verbal is misleading, a robot that can communicate through facial expression, and that can understand another’s facial expressions, should be an improvement on a simple voice from a screen. This may be especially true for children and the elderly who are not familiar with computers.

Appropriately enough, Hanson started out at Walt Disney Imagineering, and he has been perfecting the look of his robots since 2003, developing a special skin material that he calls Frubber, along with a system of actuators that produce facial expressions and synchronise lip movements with speech. Hanson has not released details of Sophia’s inner workings, but his previous robots had as many as twenty facial actuators. Sophia can move her head and neck and gesture with her hands, which is all she needs for conversation, and for playing rock, paper, scissors.

Sophia is essentially the hardware interface for a chatbot. Put simply, a chatbot is software that can understand and mimic conversation, and chatbots have proliferated across the Internet since 2016. Apple’s Siri and Microsoft’s Cortana are both chatbot-based assistants that help millions of people every day. Chatbots are typically based either on a set of complex rules, which take a major programming effort to write, or on a learning approach, which needs a huge database of conversational interactions. Like the voices of Siri and Cortana, Sophia’s speech is machine-like and lacks the inflections of a human speaker.
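To make the rule-based approach concrete, here is a toy sketch in Python. It is not how Sophia, Siri or Cortana work: the rules, the replies and the `reply` function are hypothetical, and a real rule-based system would need thousands of rules. Each rule pairs a regular-expression pattern with a reply template, and the first rule that matches wins.

```python
import re

# Hypothetical rule table: (pattern, reply template) pairs, checked in order.
RULES = [
    (re.compile(r"\bmy name is (\w+)", re.IGNORECASE), "Nice to meet you, {0}."),
    (re.compile(r"\bhow are you\b", re.IGNORECASE), "I am functioning normally, thank you."),
    (re.compile(r"\bi feel (\w+)", re.IGNORECASE), "Why do you feel {0}?"),
]

# Reply used when no rule matches, to keep the conversation going.
FALLBACK = "Tell me more."


def reply(utterance: str) -> str:
    """Return the reply from the first rule whose pattern matches the utterance."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Slot any words captured by the pattern into the reply template.
            return template.format(*match.groups())
    return FALLBACK


if __name__ == "__main__":
    for line in ["Hello, my name is Piers", "How are you today?", "I feel uneasy", "Nice weather"]:
        print(reply(line))
```

Every new topic means writing and ordering more rules by hand, which is the ‘major programming effort’ that the learning approach tries to avoid.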

Armed with scripted replies, added to her usual repertoire for each occasion, Sophia delighted, amazed and slightly appalled many TV hosts. ITV’s Good Morning Britain presenters were clearly disconcerted in June 2017, with a rattled Piers Morgan commenting ‘This is really freaking me out’. Host Jimmy Fallon made an almost identical comment on The Tonight Show, suggesting the uncanny valley effect is at work (see Geminoids).

Judging from Hanson’s background, and the way that Sophia has been presented so far, show business may be her natural habitat. Disney’s animatronic Abraham Lincoln was built to entertain more visitors per day than any actor could manage, and robots like Sophia could make ideal television presenters and interviewers. Thousands of organisations produce a steady stream of corporate videos for the Internet. A presenter who is wooden but flawlessly professional, and who can be rented along with the cameras, looks like competition for human hosts. Judging from the public reaction, though, Sophia is not yet ready for a wider audience. That may well change in a few years.

FLYING SEA GLIDER

| Specification | Value |
|---|---|
| Length | 2m (6.5ft) |
| Weight | 25kg (55lb) |
| Year | 2017 |
| Construction material | Composite |
| Main processor | Not specified |
| Power source | Battery |

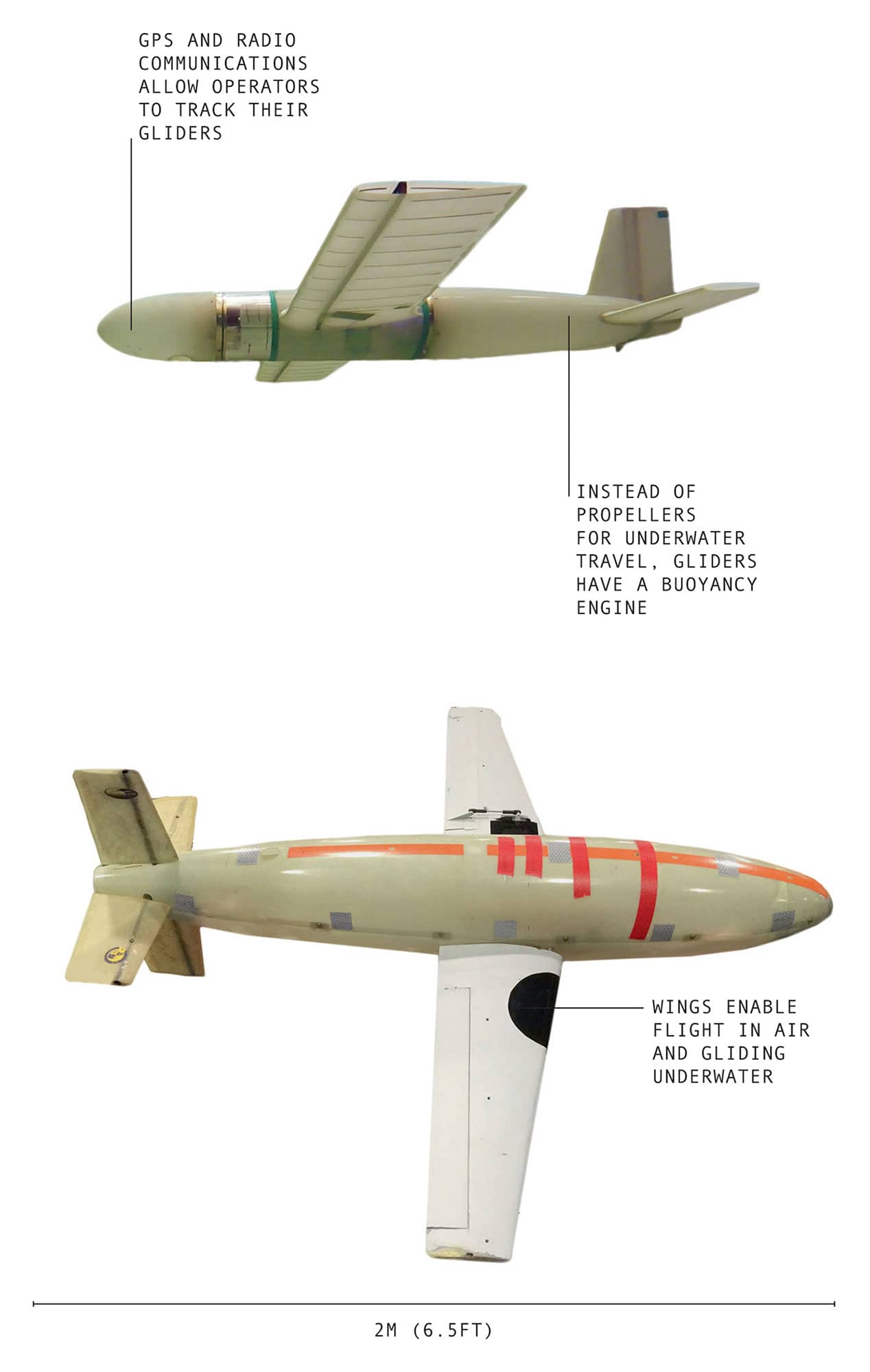

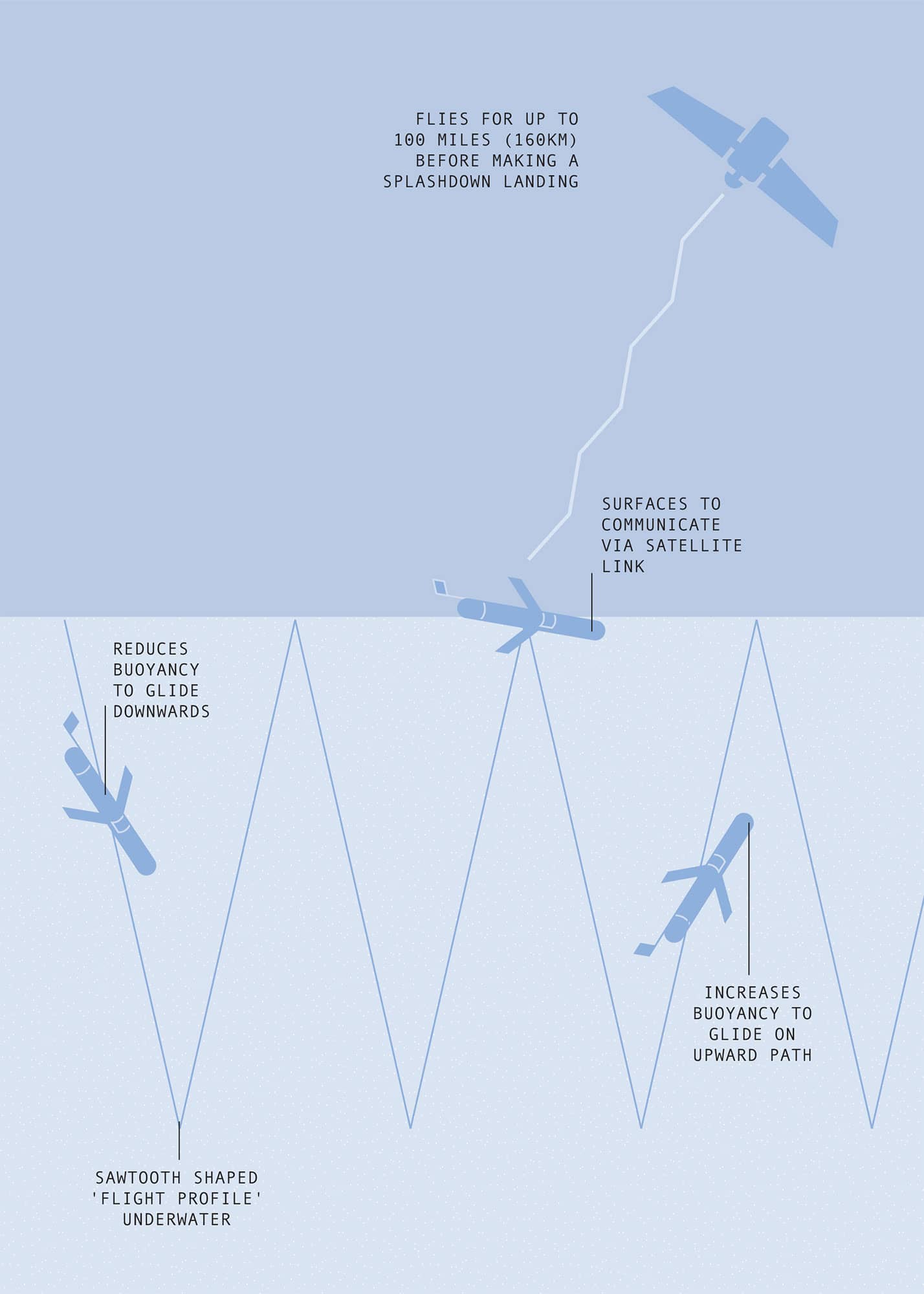

Underwater gliders are slow, steady unmanned submarines, carrying sensors to the furthest reaches of the oceans. They generally look like winged torpedoes about 2m (6.5ft) long. While most underwater robots are tethered and operated by remote control from a short distance away, gliders roam freely, surfacing occasionally to exchange data with an operator via satellite. They can travel on battery power for weeks or months at a time. A recent version can also fly, giving it speed as well as endurance.

Instead of propellers, gliders have a buoyancy engine. This pumps a small quantity of oil from an external bladder to an internal one, shrinking the volume of water the glider displaces and so reducing its buoyancy, and the glider starts to sink. Thanks to its wings, rather than simply falling, the robot glides forward several metres for every metre it descends, typically at less than 1mph (1.6km/h). It may drop to a depth of 1,000m (3,280ft) before pumping oil back into the external bladder, making the glider buoyant enough to start rising, this time gliding upwards at the same slow rate. It is a slow but frugal means of travel. In 2009, the Scarlet Knight glider operated by Rutgers University completed an Atlantic crossing on one battery charge, taking seven months.
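The sawtooth path lends itself to simple arithmetic. The Python sketch below works out how far a glider moves forward in one dive-and-climb cycle, and how many cycles a route takes. The glide ratio of 3 and the 100km route are illustrative assumptions, not figures for any particular glider.

```python
import math


def cycle_distance_m(max_depth_m: float, glide_ratio: float) -> float:
    """Horizontal distance (m) covered in one dive-and-climb cycle.

    glide_ratio: metres travelled forward per metre of depth lost or gained.
    The value used below is an assumption; the text says only 'several'.
    """
    # Down to max_depth_m, then back up the same height, gliding forward both ways.
    return 2 * max_depth_m * glide_ratio


def cycles_for_route(route_km: float, max_depth_m: float, glide_ratio: float) -> int:
    """Whole dive-and-climb cycles needed to cover route_km (rounded up)."""
    per_cycle_km = cycle_distance_m(max_depth_m, glide_ratio) / 1000
    return math.ceil(route_km / per_cycle_km)


if __name__ == "__main__":
    # Hypothetical mission: 1,000m dives, assumed glide ratio of 3, 100km survey line.
    print(cycle_distance_m(1000, 3))
    print(cycles_for_route(100, 1000, 3))
```

With these assumed figures, each 1,000m cycle covers 6km, so a 100km survey line takes 17 cycles. Shallower dives mean more frequent pumping, which is one reason gliders dive deep when they can.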

Gliders are tough, sometimes returning from missions with multiple shark bites. Storms and high seas mean nothing to them. Fishing nets are the biggest hazard, but GPS and radio communications allow operators to track their gliders and they can often be reclaimed after some negotiation.

To date, gliders have watched erupting underwater volcanoes, inspected icebergs at close range and survived hurricanes. They tracked the Deepwater Horizon oil spill over an extended period, and carried radiation sensors to spot leakage from the Fukushima nuclear reactors. Gliders with acoustic sensors pinpoint tagged fish or listen in to whale song. Others carry out climate change research, measuring temperatures in different layers of seawater and mapping the abundance of algae. The Storm Glider lurks in hurricane-prone areas, bobbing up to take readings during extreme weather, while Chinese engineers have built a glider that can explore depths of 6,000m (19,685ft).

Gliders can be traced back to Doug Webb and Henry Stommel of the Woods Hole Oceanographic Institution. Since their beginnings in 1991, gliders have matured. In 2002 there were about thirty gliders worldwide. Now there are several hundred, with plans to build thousands more.

Their low speed is a limitation, but the Flying Sea Glider developed by the US Naval Research Laboratory could overcome that. As the name suggests, this is a glider that flies above the water as well as gliding through it. The same sort of aerodynamics applies in air and water, so it was a matter of finding a design that combined both into one airframe.

The Flying Sea Glider can be released from an aircraft or launched from a ship. A propeller driven by an electric motor can carry it to an operational area over 100 miles (160km) away. When it reaches the desired location, it dives vertically into the water, like a pelican diving for fish. Hollow wings and other spaces in the glider fill with water through a series of small holes, and it soon reaches neutral buoyancy. It can then proceed like any other underwater glider.

Flying gliders are ideal where several gliders need to be in place rapidly, for example to monitor an oil spill, a radiation leak or the path of a hurricane. The gliders could take measurements for as long as needed before being recovered. In the military world, large numbers of air-dropped gliders would be useful for locating submarines.

Navy researchers are looking at the possibility of gliders that can expel water and take flight again, something that has not yet been achieved. This would give them almost unlimited mobility and endurance, especially if fitted with solar cells. Researchers are also looking at networking gliders together via acoustic communications so they form an underwater sensing net, opening a large and permanent window into the underwater world.

KILOBOT

| Specification | Value |
|---|---|
| Height | 3.4cm (1.3in) |
| Weight | 4g (0.14oz) |
| Year | 2015 |
| Construction material | Metal |
| Main processor | ATmega 328P (8bit @ 8MHz) |
| Power source | Battery |

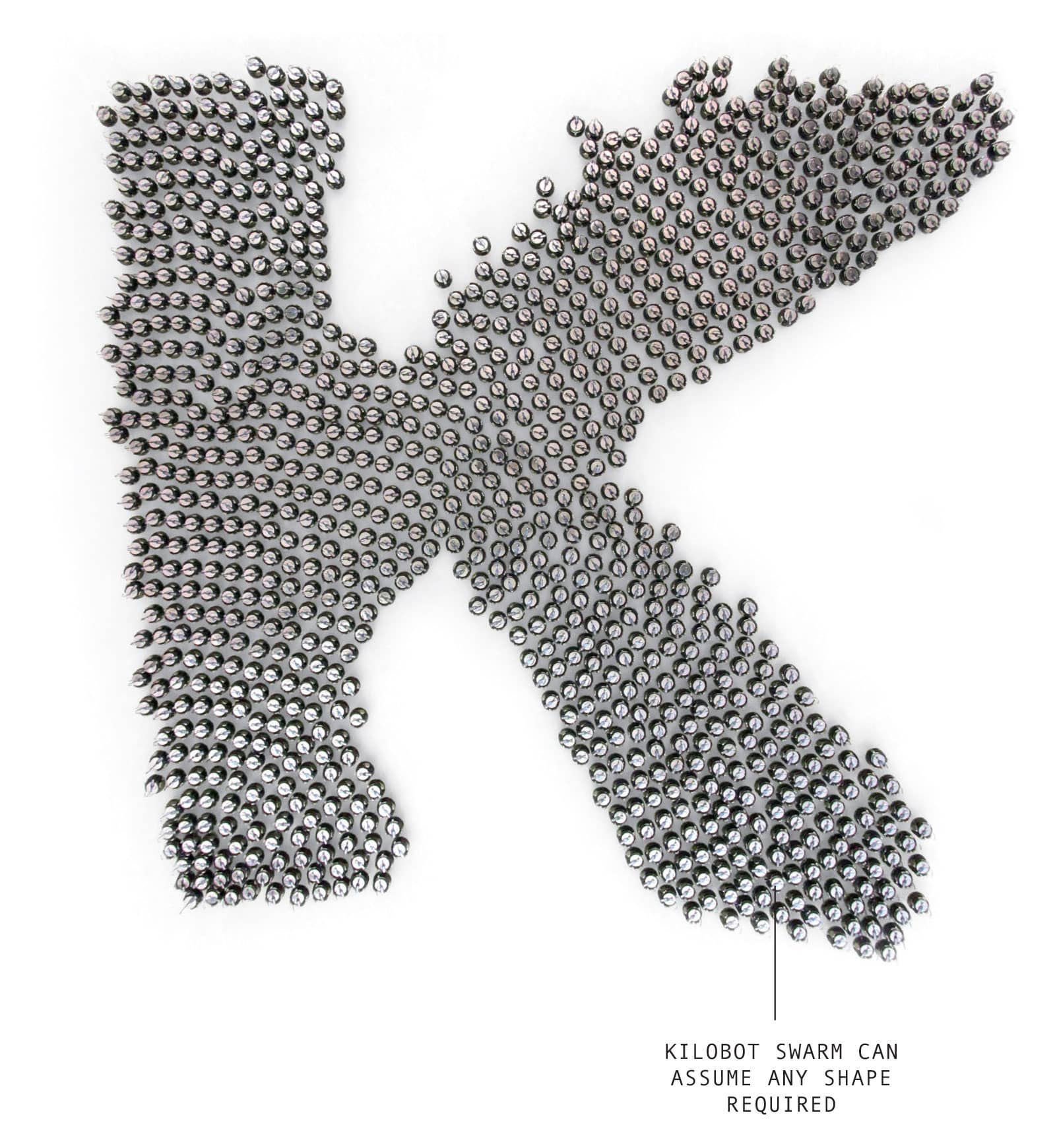

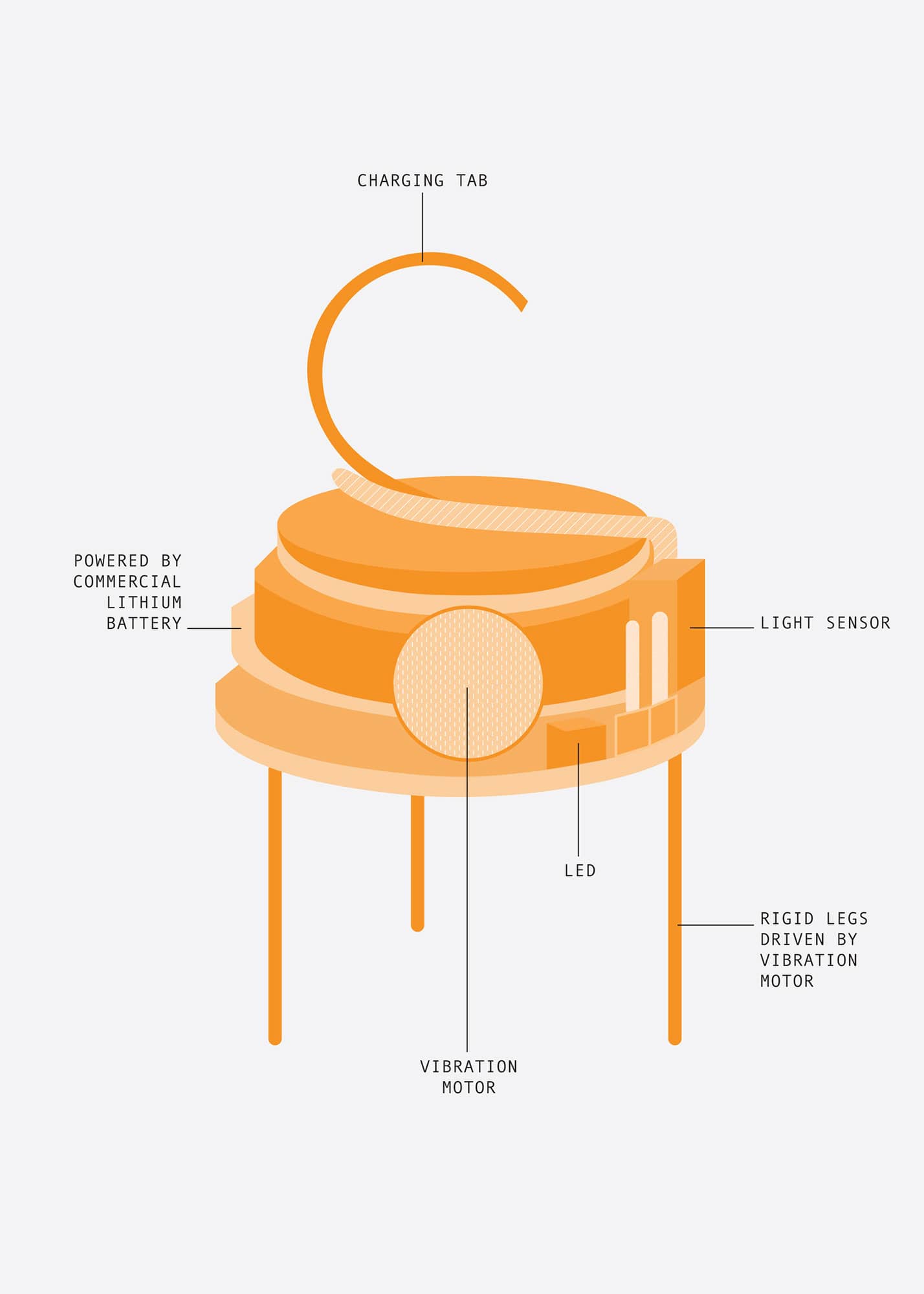

The Kilobot is a battery-powered widget the size of a table-tennis ball that moves at about a centimetre a second by vibrating its three legs. It may not look impressive, but an individual Kilobot is really a building block, part of a bigger entity. Kilobots become interesting when they swarm.

There are plenty of different types of insect swarm in the natural world. Bees forage efficiently over a wide area, with successful individuals sharing their finds with others. Desert ants work together to carry loads fifty times their own weight. Termites just a few millimetres long cooperate to build giant mounds metres high with complex air-circulation systems. In each of these cases, the individual carries out relatively simple actions, but the net result is ‘intelligent’ behaviour by the group as a whole.

Small, cheap robots using a swarming approach could combine for tasks impossible for larger robots. Swarms can cover a large area. They are robust; even if some members break down, the swarm can still function, whereas one broken motor or loose cable can bring a single robot to a halt.

Swarming approaches are well established in software, with some search engines using swarms of ‘software agents’ that spread out to locate data. Robot swarms are harder to develop than software ones, partly because of the cost. The other problem is the complexity of dealing with so many machines at the same time. Every robot in a swarm needs to be charged up and switched on, and the appropriate software loaded onto it. The more robots, the longer this takes, and laboratory swarms are typically limited to a few tens of machines.

Harvard researchers, led by Michael Rubenstein, wanted to work with much larger swarms, and so developed an affordable robot designed for use in large numbers. Hence Kilobot, the kilo- prefix meaning one thousand. Kilobot is powered by a small lithium button battery. This drives the vibrating legs, which have a forward speed of about 1cm (0.4in) per second, and it can make a ninety-degree turn in two seconds. The Kilobot has a basic commercial processor for a brain, an infrared transmitter/receiver for communication and sensing, LEDs for signalling and a light sensor. The parts for one cost just £10, and it can be assembled in about five minutes.

Once assembled, Kilobots are ‘scalable’; they do not require individual attention and ten thousand can be managed as easily as ten. The whole collective can be turned on or off remotely via an infrared communicator. The same method is used to download new software. To recharge, the robots are placed en masse between two charging plates.

The key to collective behaviour is having a set of rules followed by all members of a swarm. The behaviour of each member affects all the others – for example, when they are trying to spread out or cluster together. This can create complex interactions, so it is important to test with real hardware rather than just computer models to find out whether the rules work in real life.
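To illustrate how one shared rule can give a whole swarm structure, here is a Python sketch of a hop-count ‘gradient’, a common building block in swarm robotics. One seed robot holds the value 0, and every other robot applies the same rule: take the smallest value heard from robots within infrared range and add one. The function name, the neighbour map and the synchronous update rounds are assumptions for illustration; this is not the Harvard team’s Kilobot code.

```python
from typing import Dict, List

INFINITY = float("inf")


def run_gradient(neighbours: Dict[int, List[int]], seed: int,
                 max_rounds: int = 100) -> Dict[int, float]:
    """Let every robot apply the same local rule until the swarm settles.

    neighbours: hypothetical map from each robot to the robots within its
    communication range. Rule: the seed reports 0; every other robot reports
    one more than the smallest value it hears from its neighbours.
    """
    value = {robot: INFINITY for robot in neighbours}
    value[seed] = 0
    for _ in range(max_rounds):
        new_value = {}
        for robot, nearby in neighbours.items():
            if robot == seed:
                new_value[robot] = 0
            else:
                # A robot only sees its neighbours' values from the previous round.
                heard = [value[n] for n in nearby]
                new_value[robot] = min(heard, default=INFINITY) + 1
        if new_value == value:
            break  # no robot changed its value, so the swarm has settled
        value = new_value
    return value


if __name__ == "__main__":
    # Five robots: a chain 0-1-2-3, plus robot 4 within range of robots 1 and 3.
    swarm = {0: [1], 1: [0, 2, 4], 2: [1, 3], 3: [2, 4], 4: [1, 3]}
    print(run_gradient(swarm, seed=0))
```

After a few rounds each robot knows roughly how many hops it is from the seed, with no central controller involved; behaviours such as shape-forming or spreading out can be layered on values like these.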

A Kilobot collective can follow a path, forming itself into a specific shape, or spread out evenly to cover an area, filling in any gaps. These simple tasks are the basis of what a robot swarm will do in the field, whether they are agricultural droids looking for weeds, or military robots on patrol. The Kilobot gives researchers a convenient testbed for software – for example, processes for splitting and reforming swarms – rather than having to jump straight in with something costing millions.

Swarming robots are already starting to appear outside the laboratory. Intel’s Shooting Star quadcopters are an alternative to fireworks, producing colourful aerial displays of flashing LEDs; Lady Gaga performed with three hundred swarming drones at the Super Bowl halftime show in 2017. Meanwhile, the US military are testing swarms of small Perdix air-launched drones that could overwhelm defences.

Swarms of small robots may take over tasks now carried out by larger machines, such as mowing lawns and cleaning windows, and tiny swarming medical robots may one day carry out operations inside a patient. That is why it is important to have a good test platform like Kilobot to ensure the basic software works reliably.

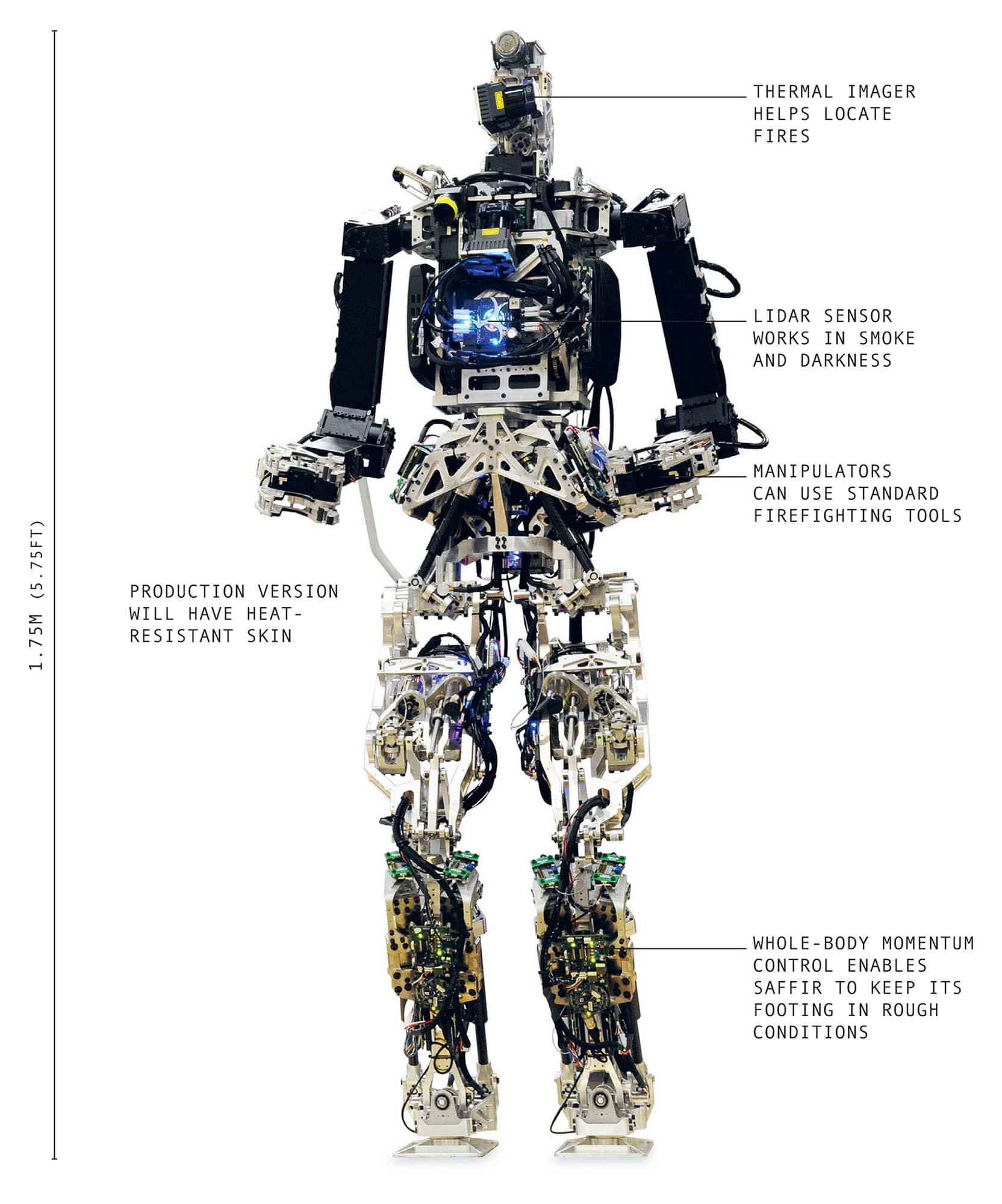

SAFFIR

| Specification | Value |
|---|---|
| Height | 1.75m (5.75ft) |
| Weight | 64kg (141lb) |
| Year | 2015 |
| Construction material | Steel |
| Main processor | Not known |
| Power source | External power |

‘Damage control’ is an innocuous phrase describing what a warship’s crew does to keep it afloat and in operational condition after it has been hit. The reality is a chaos of noise, smoke and torrents of seawater. Flooding is a hazard, but fire is more dangerous. Ships are loaded with flammable fuel and ammunition, and any fire can be catastrophic. Of the five US aircraft carriers lost during the Second World War, only one went down through flooding; the other four succumbed to out-of-control fires. To help prevent this in future, ships could have a new crew member. Step forward the Shipboard Autonomous Firefighting Robot, or SAFFiR, developed by the US Office of Naval Research to assist with damage control.

The prototype looks like a classic mechanical man. Like Atlas and Robonaut (see their entries), it is a two-legged humanoid, designed to work in human spaces and with human tools. It is able to open and close doors and hatches, and use existing firefighting gear.

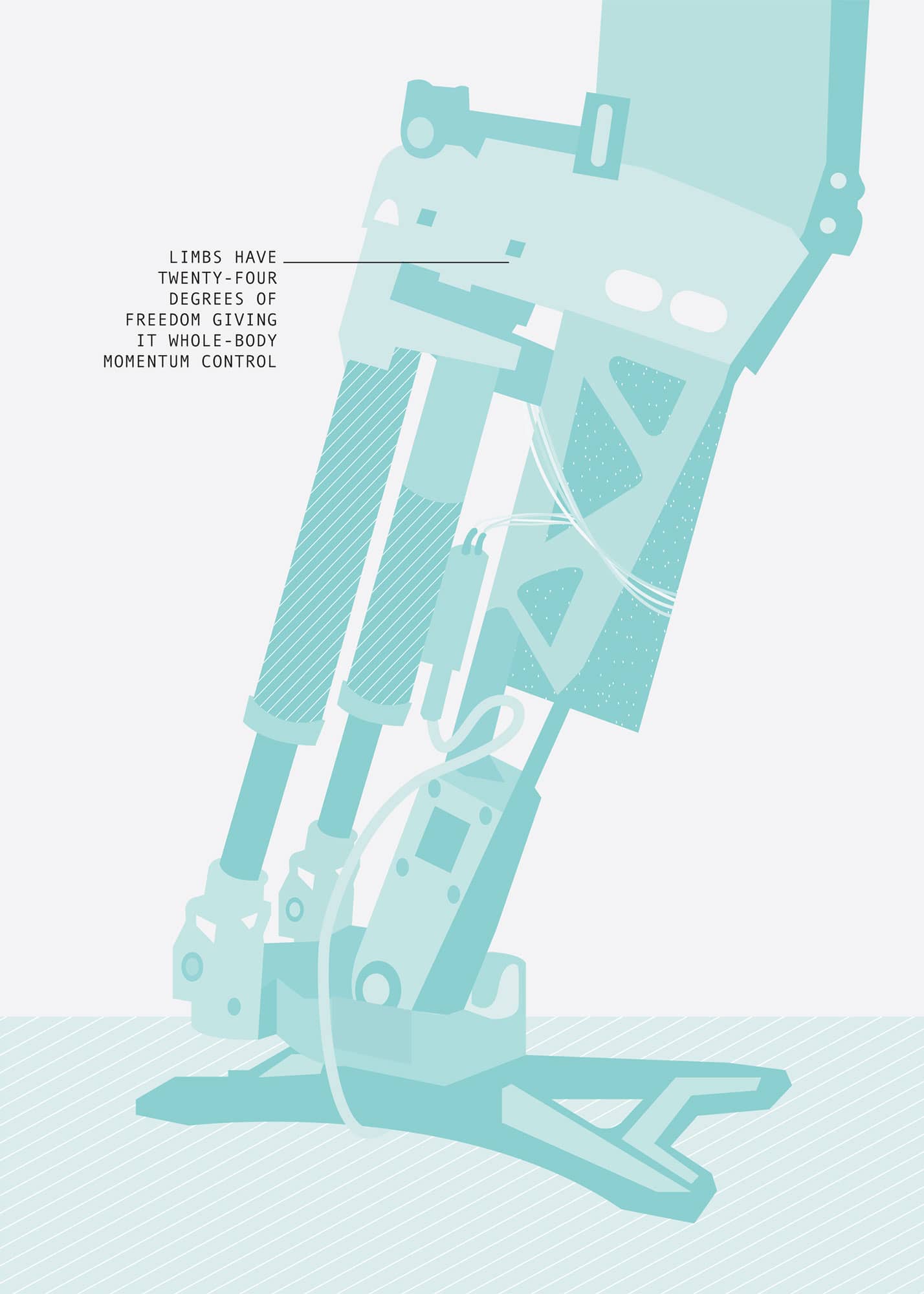

SAFFiR’s limbs have twenty-four degrees of freedom, and because it has to work even in stormy conditions, SAFFiR needs sea legs, or what the developers call ‘whole-body momentum control’. This is a control system that moves all of the robot’s joints together to keep its centre of mass supported on uncertain and unstable surfaces. Humans naturally take short steps and use their arms for support without thinking, but a robot has to be explicitly engineered to do the same.
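Whole-body momentum control is far more sophisticated than anything that fits here, because it reasons about the robot’s momentum while it moves. The simplest form of the goal it serves, though, is static balance: the point on the deck directly below the centre of mass should lie inside the ‘support polygon’ outlined by the feet. The Python sketch below checks that condition for a convex polygon; the function name and the example foot outline are hypothetical, not taken from SAFFiR’s software.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position on the deck, in metres


def com_is_supported(com_xy: Point, support_polygon: List[Point]) -> bool:
    """Static-balance test: is the centre of mass above the support polygon?

    support_polygon: vertices of a convex polygon (the outline around the
    feet), listed counter-clockwise. Points exactly on an edge count as supported.
    """
    x, y = com_xy
    n = len(support_polygon)
    for i in range(n):
        x1, y1 = support_polygon[i]
        x2, y2 = support_polygon[(i + 1) % n]
        # Cross product of the edge with the vector to the CoM: a negative value
        # means the CoM lies to the right of a counter-clockwise edge, i.e. outside.
        cross = (x2 - x1) * (y - y1) - (y2 - y1) * (x - x1)
        if cross < 0:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical stance: both feet enclosed by a 30cm x 25cm rectangle.
    feet = [(0.0, 0.0), (0.3, 0.0), (0.3, 0.25), (0.0, 0.25)]
    print(com_is_supported((0.15, 0.12), feet))  # centred over the feet
    print(com_is_supported((0.35, 0.10), feet))  # deck rolls, CoM drifts outside
```

When the deck pitches and the centre of mass drifts outside the polygon, a controller must step, shift weight or brace with an arm to enlarge the polygon, which is the behaviour the text describes in humans.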

The inside of a burning ship is an extremely difficult environment for firefighters. Thick smoke makes it impossible to breathe or to see anything. There is no question of staying well back and playing a firehose from tens of metres away as firefighters can on land. As it does not breathe, SAFFiR cannot be choked by smoke or poisoned by toxic fumes. The prototype does not have thermal shielding, but the finished SAFFiR will be able to withstand higher temperatures for longer periods than human firefighters. It will also be waterproof and may function underwater in flooded sections of the ship. And, unlike human firefighters, SAFFiR is expendable. If someone has to go into an inferno on a one-way mission to close a hatch and stop flooding, a robot is the best candidate.

The robot has two sensing systems, a thermal imager and LIDAR (see here), both able to see through smoke. The thermal imager can locate and assess fires. Being able to see temperatures allows SAFFiR to detect a fire on the other side of a hatch or bulkhead. Its sophisticated thermal-vision system distinguishes the texture and motion of heat sources, so that SAFFiR can tell actual fire from hot material, and can separate heat reflections from heat sources. It can pinpoint the source of the fire and direct an extinguisher at it.
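The details of SAFFiR’s thermal-vision algorithms are not given here, but one plausible motion cue is easy to illustrate: flames flicker, so their temperature fluctuates from frame to frame, while a hot bulkhead stays steady. The Python sketch below labels each pixel of a short stack of thermal frames using that single cue. The function name, the 150°C ‘hot’ threshold and the 20°C flicker threshold are illustrative assumptions, and a real system would also analyse texture and reflections.

```python
from statistics import mean, pstdev
from typing import List

Frame = List[List[float]]  # one thermal image: temperature in °C per pixel


def classify_pixels(frames: List[Frame], hot_c: float = 150.0,
                    flicker_c: float = 20.0) -> List[List[str]]:
    """Label each pixel 'cool', 'hot' (steady heat) or 'fire' (flickering heat).

    frames: consecutive thermal images of the same scene, all the same size.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    labels = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Temperature history of this pixel across the frames.
            series = [frame[r][c] for frame in frames]
            if mean(series) < hot_c:
                row.append("cool")
            elif pstdev(series) >= flicker_c:
                row.append("fire")  # hot and fluctuating, like a flame
            else:
                row.append("hot")   # hot but steady, like heated metal
        labels.append(row)
    return labels


if __name__ == "__main__":
    # Three frames of a 1 x 3 strip: cool wall, hot steady pipe, flickering flame.
    frames = [[[25, 300, 200]], [[26, 302, 400]], [[25, 301, 250]]]
    print(classify_pixels(frames))
```

A steady reading, however high, is treated as hot material rather than fire, which is the kind of distinction the text attributes to SAFFiR.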

While the prototype SAFFiR is still remote-controlled, ultimately the robot will be autonomous, able to navigate through the ship, clambering over obstacles on its own. It will work as part of a team alongside human firefighters, taking orders verbally or by gestures, or even by touch. Navy research found that in damage-control situations, crews communicate by pushing or pulling each other to warn of danger or the need to move.

SAFFiR will not be the only robot on the team. The same project is also developing microflyers, small quadcopters that can negotiate the narrow confines of a ship at high speed and beam back sensor data. These will help assess damage and direct SAFFiRs to where they are most needed.

SAFFiR will be very much at home inside a fire, and a land-based version is a natural extension. But such a capable piece of kit should not sit idle in a locker, waiting for an emergency. Developers are looking at ways for SAFFiR to carry out routine shipboard tasks. If decks are swabbed and rails polished in the 21st century, SAFFiR’s relatives will be doing the work. But this robot’s real value will not be revealed until the ship becomes an inferno and more than human toughness and coolness are required.

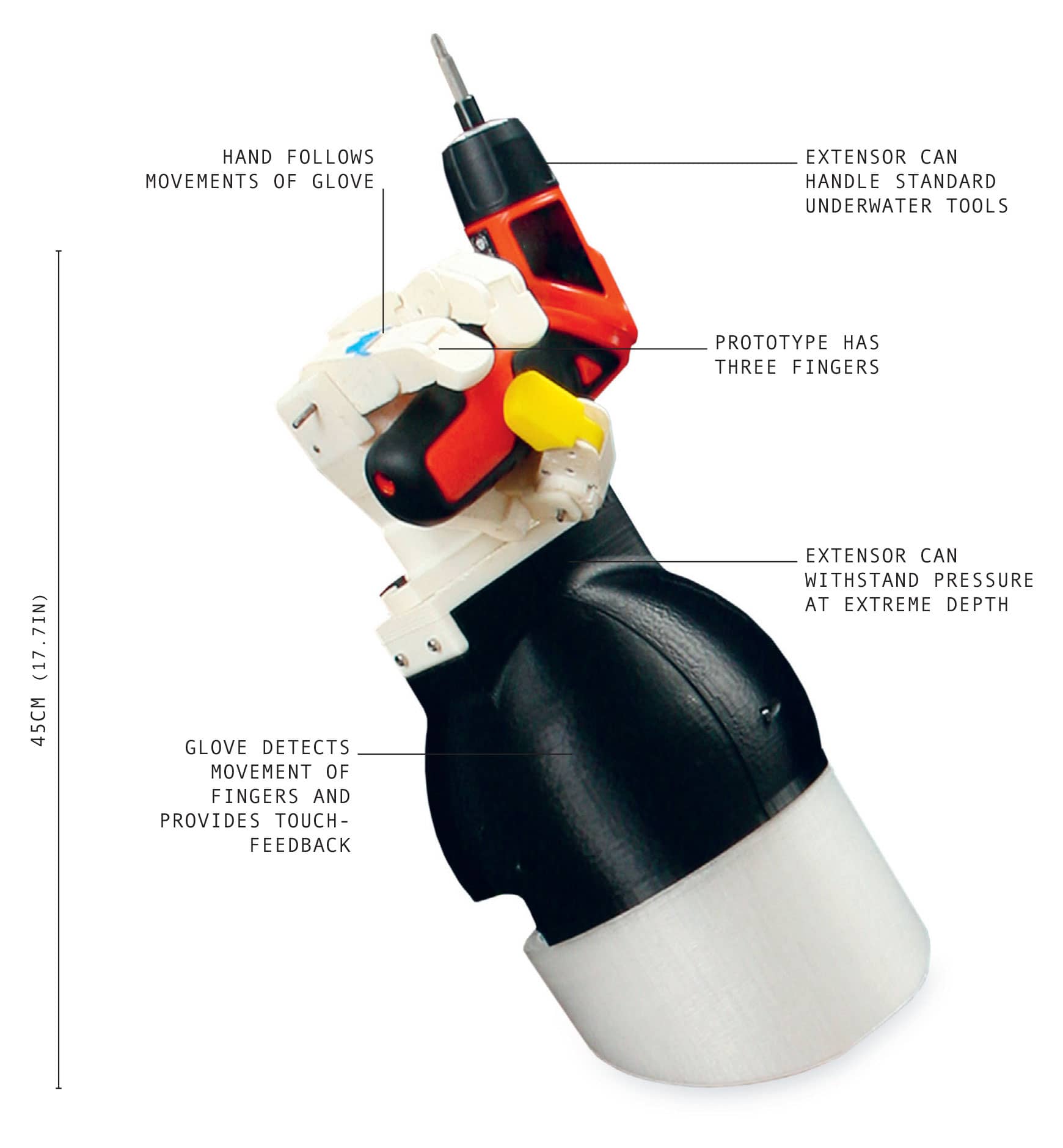

VISHWA EXTENSOR

| Specification | Value |
|---|---|
| Length | 45cm (17.7in) |
| Weight | 1.8kg (4lb) |
| Year | 2014 |
| Construction material | Titanium |
| Main processor | Commercial processors |
| Power source | External power |

The human hand is a uniquely capable manipulator, and reproducing its capabilities is hugely difficult. The challenge is even tougher in the deep sea and similar extreme environments, where handling often has to take place at a distance.

Scuba divers can descend to about 100m (328ft) at most; beyond that, the pressures become too great for humans to bear. For deeper dives, divers are encased in an Atmospheric Dive Suit, a hard shell like a human-shaped submarine containing a bubble of air at normal atmospheric pressure. The pressure and thickness of the suit make it impossible to use gloves. Instead the suit has ‘prehensors’ resembling lobster claws. These are crude tools, with only open and closed positions – a single degree of freedom, in robot terms – which makes it difficult to grip irregular objects. It is impossible to use normal tools with prehensors, and even the most basic tasks, such as unscrewing a bolt, can be time-consuming and frustrating.
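Why do divers hit a depth limit? Water pressure rises linearly with depth, P = P_atm + ρgh, adding roughly one atmosphere for every 10m. The short Python sketch below evaluates that formula, assuming a typical seawater density of 1,025 kg/m³ and g = 9.81 m/s².

```python
SEAWATER_DENSITY = 1025.0   # kg/m^3, typical seawater value (assumption)
GRAVITY = 9.81              # m/s^2
ATMOSPHERE_PA = 101_325.0   # standard atmospheric pressure at the surface, Pa


def pressure_at_depth_atm(depth_m: float) -> float:
    """Absolute pressure at depth_m, in atmospheres: P = P_atm + rho * g * h."""
    pascals = ATMOSPHERE_PA + SEAWATER_DENSITY * GRAVITY * depth_m
    return pascals / ATMOSPHERE_PA


if __name__ == "__main__":
    for depth in (0, 10, 100, 300):
        print(depth, "m:", round(pressure_at_depth_atm(depth), 1), "atm")
```

At 100m the absolute pressure is about 11 atmospheres, which is why deeper work calls for a rigid suit that keeps the diver at surface pressure.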

This problem inspired Bhargav Gajjar, CEO of Vishwa Robotics in Cambridge, Massachusetts, to develop a new type of remote-controlled robotic hand. The operator puts their hand inside a glove, and the robot hand moves according to the movements of the glove. Sensors in the hand provide force feedback, so the operator feels the resistance that the robot hand is meeting. This touch sensation, called ‘haptic sense’, allows the operator to handle objects as gently or as firmly as they need to.
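The glove-and-feedback arrangement can be sketched as a single control cycle: the robot finger copies the glove’s angle, and any resistance it meets is scaled and pushed back to the operator’s fingers. In the Python sketch below the gripped object is modelled as a simple spring; the function name, the feedback gain and the force cap are hypothetical, since the Extensor’s actual control scheme is not described in the text.

```python
from dataclasses import dataclass


@dataclass
class TeleopStep:
    robot_angle_deg: float  # angle commanded to the robot finger
    feedback_n: float       # force pushed back into the operator's glove


def teleop_step(glove_angle_deg: float, contact_angle_deg: float,
                object_stiffness_n_per_deg: float,
                feedback_gain: float = 0.5,
                max_feedback_n: float = 10.0) -> TeleopStep:
    """One cycle of a simple position-forward, force-back teleoperation loop.

    The robot finger follows the glove. Once it closes past the angle where it
    touches the object (contact_angle_deg), the object resists in proportion to
    how far it is squeezed, and a scaled, capped copy of that force is sent back.
    """
    robot_angle = glove_angle_deg
    squeeze = max(0.0, robot_angle - contact_angle_deg)
    contact_force = object_stiffness_n_per_deg * squeeze
    # The cap stops the glove ever pushing on the operator's hand too hard.
    feedback = min(feedback_gain * contact_force, max_feedback_n)
    return TeleopStep(robot_angle, feedback)


if __name__ == "__main__":
    for glove_angle in (30, 45, 60):
        print(teleop_step(glove_angle, contact_angle_deg=40, object_stiffness_n_per_deg=2.0))
```

Before contact the operator feels nothing; squeezing harder produces proportionally more feedback until the cap is reached, which is what lets the operator judge how gently or firmly they are gripping.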

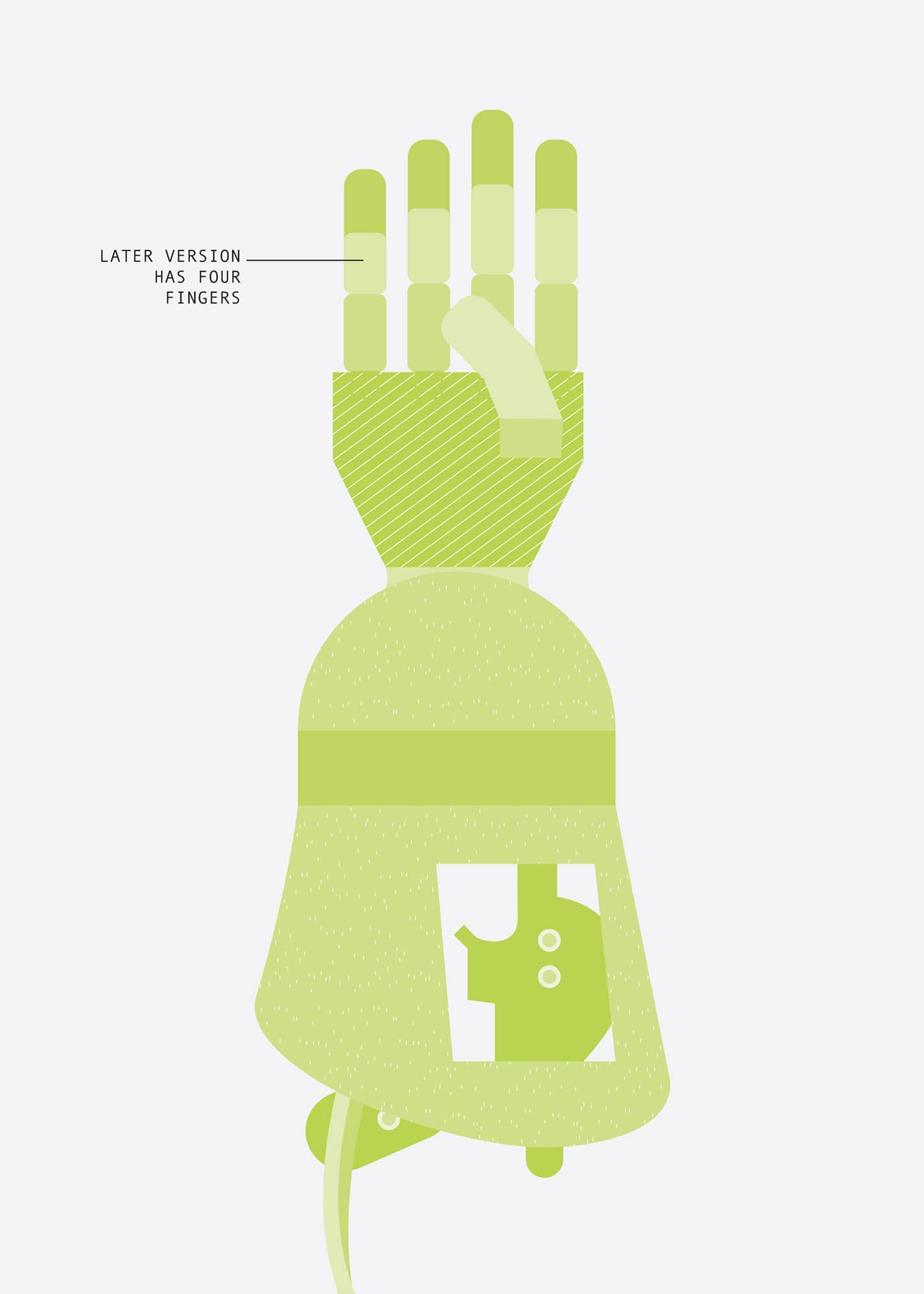

The prototype Vishwa Extensor had three fingers and an opposable thumb. Gajjar initially decided against giving it four fingers, as the ring finger and little finger tend to move together as one, but later versions have four fingers for added strength. Each finger has four degrees of freedom, and the wrist has three, so the hand can follow the movements of the operator. Like the bebionic hand, it is the realisation of Leonardo’s dream of a machine that matches human articulation.

The Extensor can handle all the normal hand tools used by deep-sea divers. It is easy for this robot to use a wrench, pick up a nut and attach it to a bolt, or hold an electric drill and operate the trigger, all tasks that are difficult, or impossible, with claw-like prehensors. Opening submarine hatches, a crucial task, is child’s play with the Extensor.

Gajjar developed the hand for US Navy divers and unmanned submarines. A diver would use the hand to manipulate objects at close range, or an operator on a ship could use a robot fitted with hands to work remotely with objects on the seabed.

The military are likely to use the Extensor for mine disposal, salvage and crash retrievals, as well as installing and maintaining underwater infrastructure such as sensors and communication cables. Nonmilitary users are also likely to appreciate the new possibilities offered by the Extensor. Marine biology and archaeology both require very careful handling of delicate structures – for example, when picking up undersea creatures like corals – for which the Extensor will be useful. In commercial diving, the Extensor allows divers to use tools such as chipping hammers and welding torches, which are difficult to handle with prehensors.

In future, the Extensor may find applications far removed from diving. Gajjar suggests that it will be equally valuable for the remote handling of dangerous materials in laboratories or industrial settings, such as radioactive substances or hazardous biological samples. In space, this type of extensor would turn a machine like NASA’s Robonaut into a remote-controlled pair of hands that could be used from inside the ISS, removing the need for astronauts to go outside into danger for jobs too tricky for the robot.

There is no reason for an Extensor to be the size of a human hand. A scaled-down pair of hands could be positioned inside a patient’s body, allowing a surgeon to carry out an operation. These would be more dexterous than the surgical tools used by the da Vinci Surgical System (see its entry). Or the Extensor might be scaled up to giant size, with strength to match, for large jobs underwater or in space. The only limit will be the users’ imagination.

GEMINOID HI-4

| Specification | Value |
|---|---|
| Height | 1.4m (4.6ft) sitting |
| Weight | 20kg (44lb) estimated |
| Year | 2013 |
| Construction material | Metal skeleton |
| Main processor | None (remote control) |
| Power source | External electric power and pneumatic air |

Geminoids are eerily accurate robot copies of living people, built to explore the ways in which robots and humans interact. That eeriness is a key part of what they are about.

In 1970, Japanese robotics professor Masahiro Mori proposed the idea of the ‘uncanny valley’, a dramatic dip in the acceptance of robots as they gradually become more human-like. Mori noted that people are happy to accept industrial robots and other machines that look like machines, and even happier with androids that appear perfectly human. But in between these two peaks of acceptance is a dip, what he called the uncanny valley. Anything that is almost, but not quite, human tends to create strong revulsion.

Makers of dolls and puppets have always been wary of this effect. They know that to be likeable, their creations should be cartoonish rather than strictly realistic. Mannequins in shop windows are fine when they are abstract or stylized, but when they get too close to being human, the lifeless, staring faces start to look creepy. Animatronics can be highly realistic, but, as Mori observed, there is a moment when we suddenly see that they are not human, and that can trigger a plunge into uncanny valley.

The uncanny valley is a fundamental problem for designers and makers of ‘social robots’ that are meant to interact with people, especially robots that care for the elderly or deal with children. Developers want their robots to be human-like enough to communicate in friendly, relatable ways, but not so human-like that they make people uncomfortable, or even freak them out.

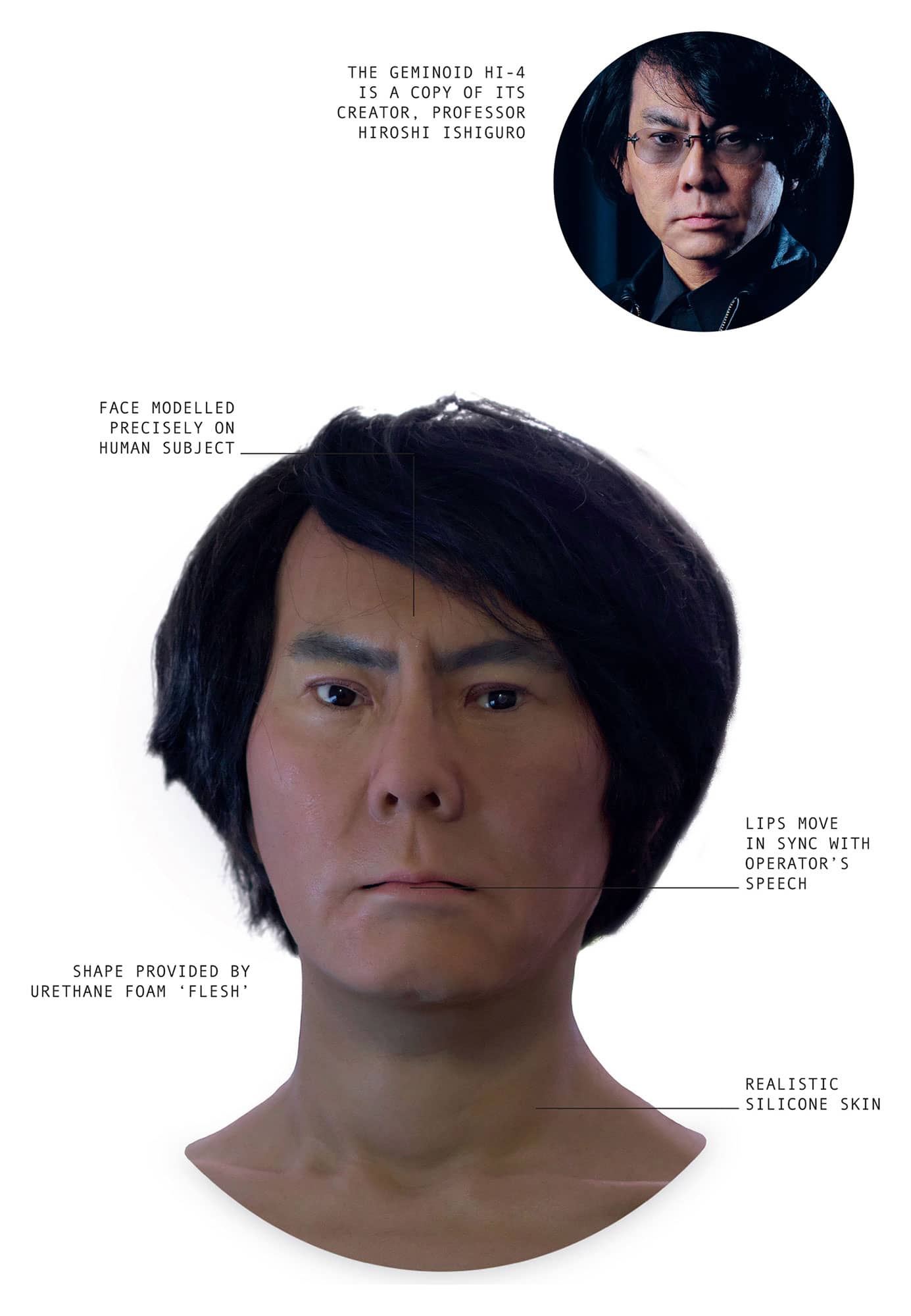

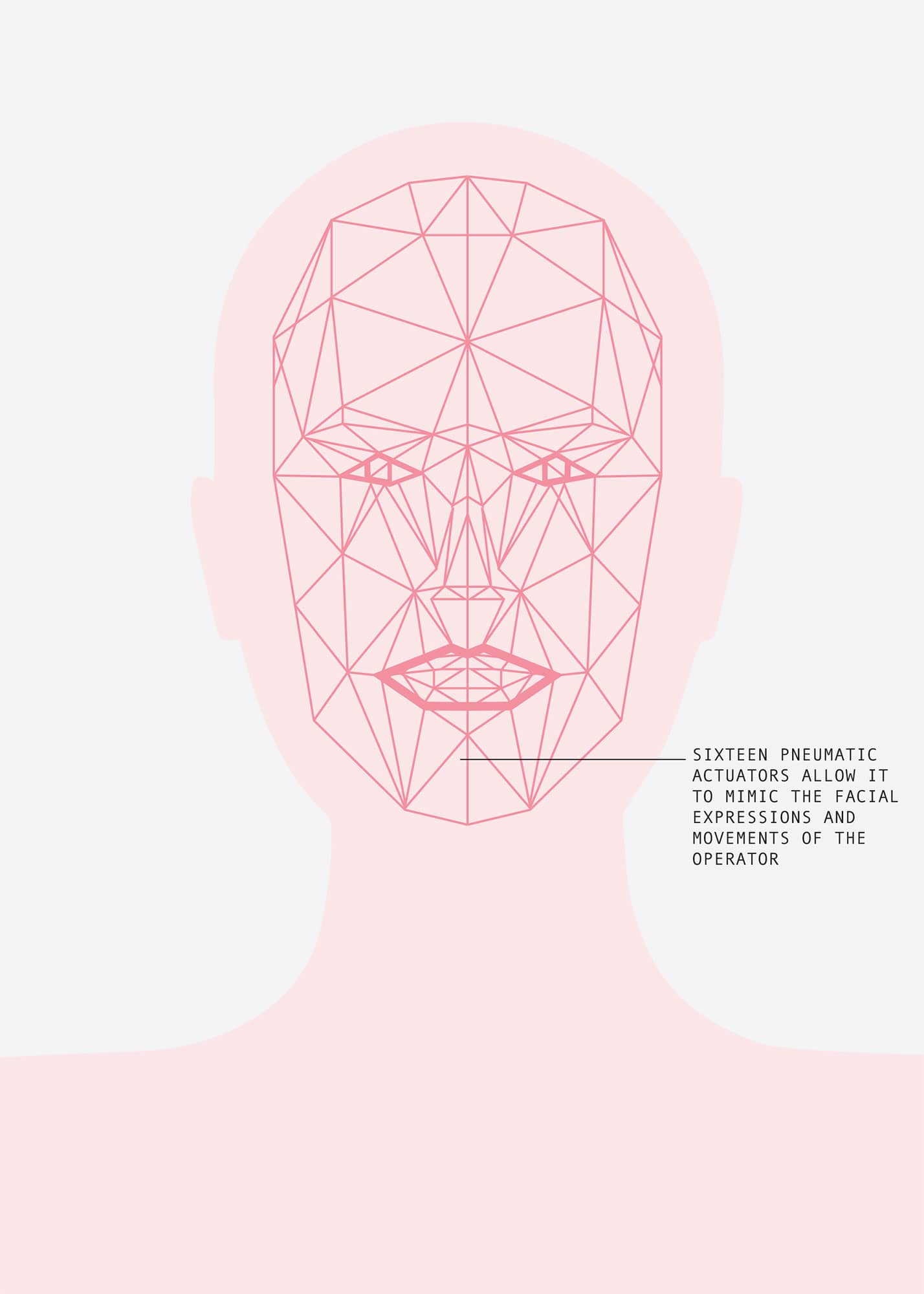

Hiroshi Ishiguro, a professor at the School of Engineering Science at Osaka University, is at the forefront of research in this area. Ishiguro introduced the idea of the Geminoid, a robot that is not simply like a human, but that is an exact replica of a specific person. The Geminoid HI-4 is a copy of Ishiguro himself. In one sense, the HI-4 Geminoid is a cheat. While it may appear to be human-like, the HI-4 is a remote-controlled device with no intelligence of its own. Sixteen pneumatic actuators, twelve in the head and four in the body, allow it to mimic facial expressions and movements of the operator via a telepresence rig.

One of the aims of the HI-4 is to explore human presence: how we get the sense that someone is in the room with us. Because of the limitations of AI, remote control was the easiest way to study this effect in the laboratory and to provide a testbed for human-robot interaction.

The HI-4’s sculpted urethane foam flesh and silicone skin give a close approximation to the original. Ishiguro says that the HI-4 provides a genuine sense of his being present, and people who know him react to the robot as they do to him. The effect works both ways; when people talk to the robot, he feels they are talking to him directly even though the experience is remote.

Geminoids are being used to explore the uncanny valley directly. Following on from Mori’s original paper, researchers are looking at two key factors, eeriness and likeability, and at whether they change after repeated encounters with the robot. Likeability depended mainly on the way the robot behaved, and was not affected by exposure. However, repeated exposure to the Geminoid did gradually reduce the sense of eeriness. As with other new pieces of technology, an android might seem strange at first, but becomes part of the furniture after continued contact.

In one set of tests, around forty per cent of people who had a conversation with the Geminoid reported an uncanny feeling, while twenty-nine per cent enjoyed the conversation. The results from these tests, and the particular aspects of the robot that people picked up on as uncanny (its bodily movements, its facial expressions and the way it directed its gaze), are being fed into the design of the next generation of robots.

Whether it will ever be possible to build a robot that will pass as human remains to be seen. From the researchers’ point of view, it would be a big enough prize to come up with a design that, while not necessarily human, is in no way uncanny, and that everyone is happy to talk to.

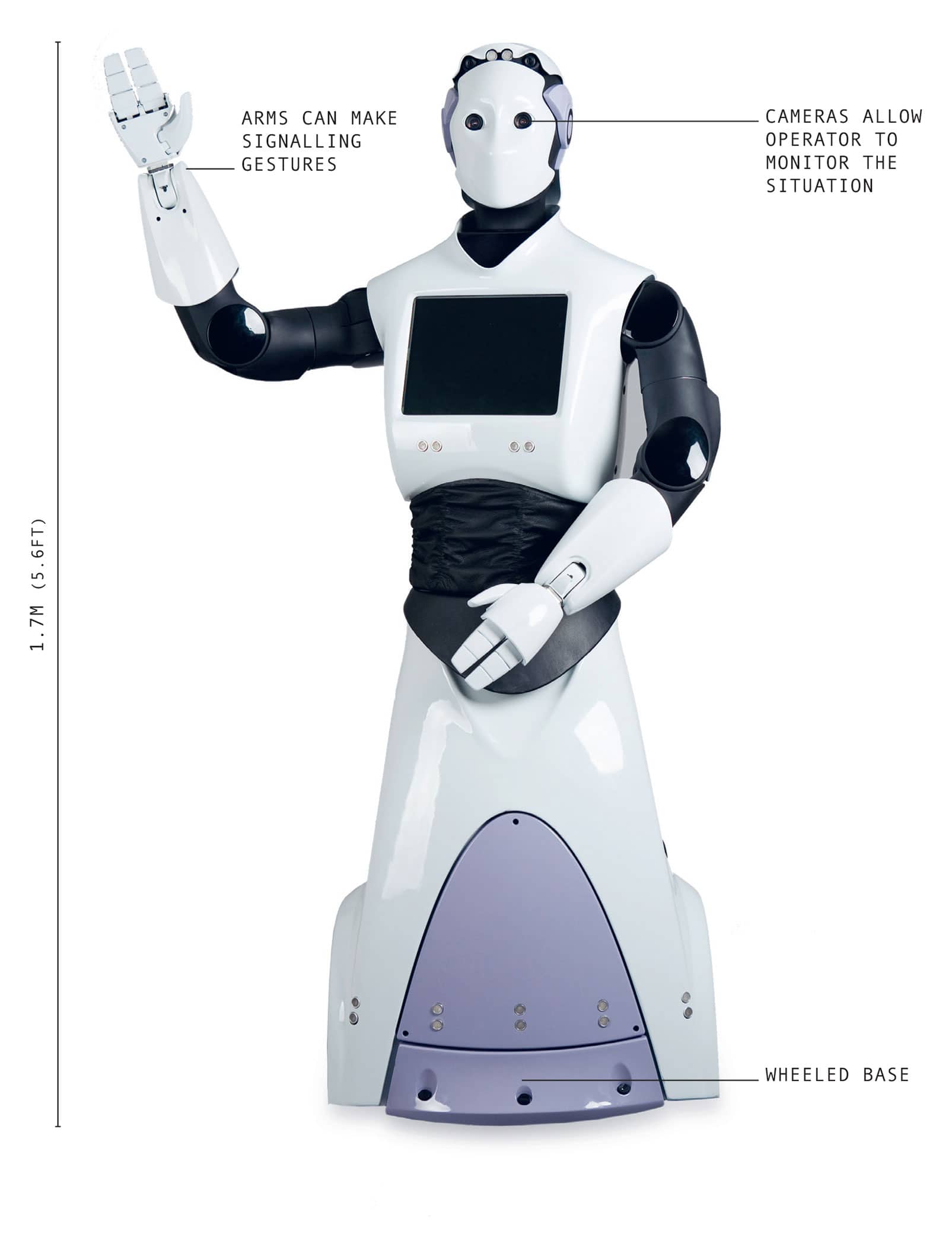

REEM DUBAI POLICE

| Specification | Value |
|---|---|
| Height | 1.7m (5.6ft) |
| Weight | 100kg (220lb) estimated |
| Year | 2017 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

The 1987 movie Robocop made a huge impression on the public psyche. The title character was a cyborg, part human and part machine, who tackled armed gangs using massive firepower. Robocop was an effective enforcer, but not your friendly neighbourhood bobby. This has not deterred the government of Dubai from calling its own police droid Robocop.

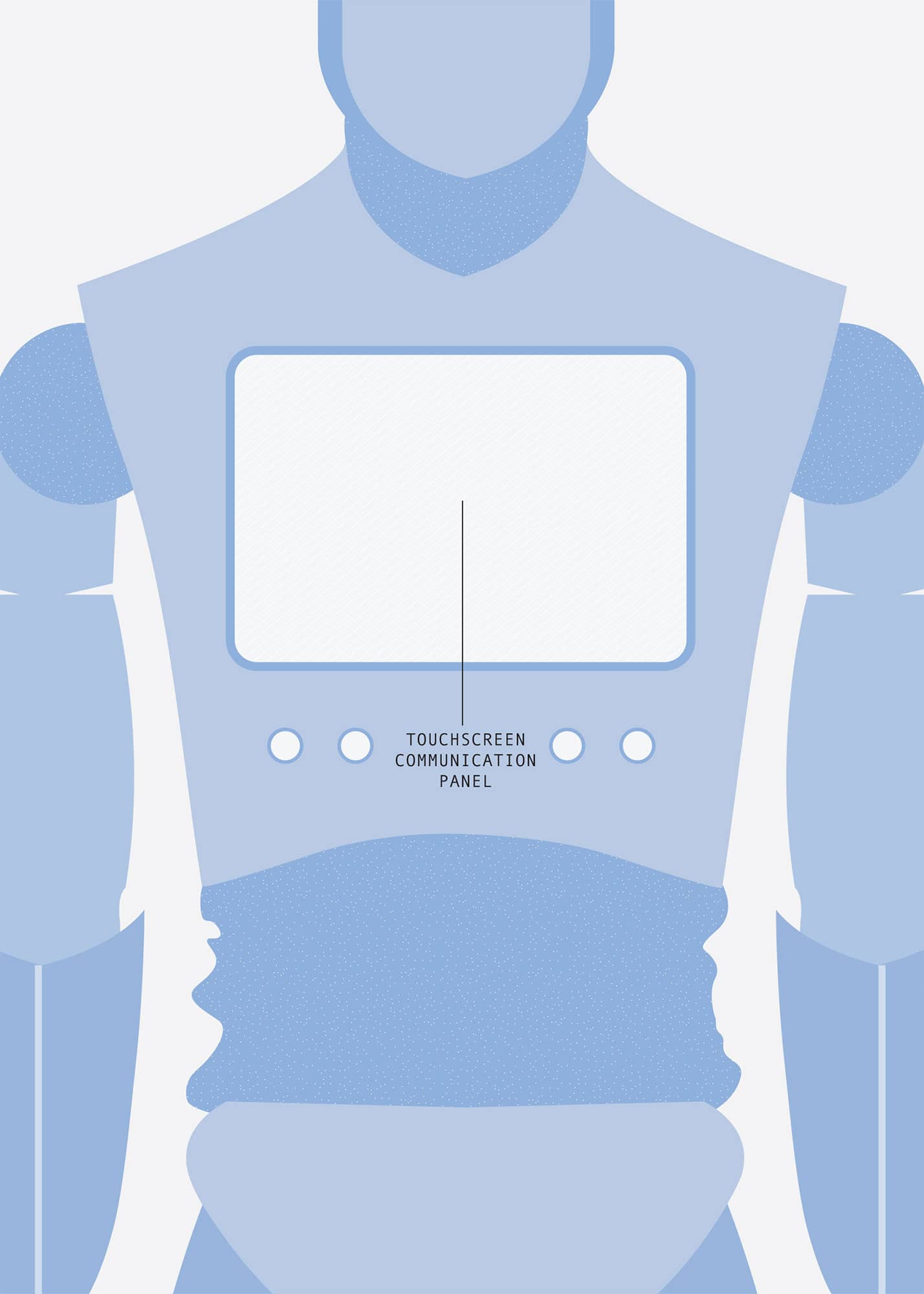

Dubai’s Robocop is nothing like the armed and armoured movie character. Based on an existing robot made by the Spanish company PAL Robotics, it is more like a mobile information kiosk: it is derived from the company’s general-purpose machine designed for exhibitions and conferences, with a touch-screen communication panel on its chest.

The idea is that people will be able to report crimes, get information and pay traffic fines. The Dubai police are reportedly working with IBM’s Watson technology so that Robocop can carry out a conversation, for example interviewing a witness and asking intelligent questions rather than simply following a set script.

It is doubtful how much of Robocop’s workload really requires a physical presence. A decent website or mobile phone application could provide the same crime-reporting and fine-paying facilities. Such an app could also talk to people and interview them wherever they were, handle any number of conversations at the same time, and be accessible to everyone with a smartphone rather than being limited to just one location.

However, Robocop’s main purpose seems to be public relations. By being seen in shopping malls and public places, it will get people used to the idea of robotic policing. Specialist police units have used robots for bomb disposal for decades, and there are tentative moves towards establishing police drone units, but these have met considerable public resistance. People do not quite trust robots, a fear that was reflected and amplified by the original Robocop movie.

Hence the need for a gentle introduction, in the form of the REEM robot. As well as acting as an information point, it will patrol the streets, using its cameras as mobile CCTV. It can watch, record and report criminals, but cannot detain or pursue them, especially if they escape by the stairs.

Dubai wants one-quarter of its police to be robots by 2030. Robocops cannot replace humans on a one-for-one basis, but there will be other types of robot, too. In June 2017, a few weeks after announcing Robocop, the Dubai government showed off a miniature self-driving police car. This is the O-R3, an electric vehicle developed by the Singaporean company OTSAW Digital, equipped with 360-degree cameras and automatic ‘anomaly detection’ software to keep an eye out for crime. It can even launch a quadcopter to follow suspects.

It is a short step from this technology to machines that can subdue suspects. Quadcopters equipped with Tasers, pepper spray and other weapons have already been tested. Fielding them has more to do with political will and public acceptance than with the limits of robotics. Some suspect a sinister motive for pushing this controversial technology: a corps of robot officers would break up demonstrations or arrest protesters mechanically, without qualms. Robot police would be ideal for a police state.

The issue is not the robots themselves but how they are used. When dealing with a potential bomb, police send in a robot from a safe distance. It makes just as much sense for a robot to tackle a potentially dangerous suspect. Talking to the suspect through the robot’s microphone and speaker, and with no threat to their own safety, an officer could respond coolly and rationally. At worst, a drone or robot would be damaged.

Patrolling robots could make sure that there is always a police presence nearby, and could extend the capacity of overstretched forces. With built-in cameras, everything robot police do is recorded and could be used in evidence. They cannot turn a blind eye, and are as impartial as justice is supposed to be.

The use of Robocops needs to be monitored closely, but we should not ignore their possibilities and benefits.