Webbots are sometimes easier to use when they're packaged as functions. These functions are simply interfaces to webbots that download and parse information and return the desired data in a predefined structure. For example, the National Oceanic and Atmospheric Administration (NOAA) provides weather forecasts on its website (http://www.noaa.gov). You could write a function that executes a webbot to download and parse a forecast. This interface could also return the forecast in an array, as shown in Example 16-1.

Example 16-1. Simplifying webbot use by creating a function interface

# Get weather forecast
$forecast_array = get_noaa_forecast($zip=89109);

# Display forecast
echo $forecast_array['MONDAY']['TEMPERATURE']."<br>";
echo $forecast_array['MONDAY']['WIND_SPEED']."<br>";
echo $forecast_array['MONDAY']['WIND_DIRECTION']."<br>";

Although Example 16-1 is hypothetical, you can see that interfacing with a webbot in this manner conceals the dirty details of downloading and parsing web pages, yet the programmer retains full access to the online information and services that the webbots provide. From the programmer's perspective, it isn't even obvious that webbots are used.

When a programmer accesses a webbot from a function interface, he or she gains the ability to use the webbot both programmatically and in real time. This is a departure from the traditional method of launching webbots.[52] Customarily, you schedule a webbot to execute periodically, and if the webbot generates data, that information is stored in a database for later retrieval. With a function interface to a webbot, you don’t have to wait for a webbot to run as a scheduled task. Instead, you can directly request the specific contents of a web page whenever you need them.

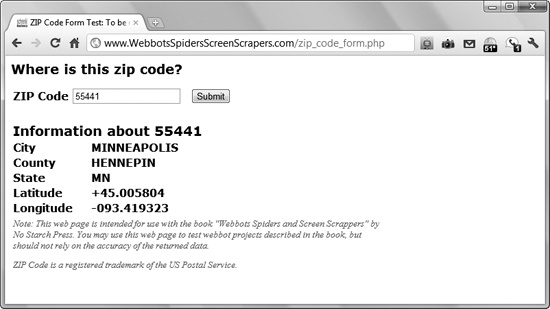

This project takes a web page that decodes ZIP codes and converts that operation into a function that any PHP program can call. This particular web page finds the city, county, state, and geo coordinates (latitude and longitude) of the post office located in a specific ZIP code. In theory, you could use this function to validate ZIP codes or to plot locations on a map from the latitude and longitude information. Figure 16-1 shows the target website for this project.

The sole purpose of the web page in Figure 16-1 is to serve as a target for your webbots. (A link to this page is available at this book's website.) This target web page uses a standard form to capture a ZIP code. Once you submit that form, the page returns, in a table below the form, a variety of information about the ZIP code you entered.

This example function uses the interface shown in Example 16-2, in which a function named describe_zipcode() accepts a five-digit ZIP code as an input parameter and returns an array that describes the area serviced by that ZIP code.
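Because the interface expects a five-digit ZIP code, a calling program might reject malformed input before spending a page request on it. The following is a minimal sketch; the helper name is_valid_zipcode() is hypothetical and is not part of LIB_http or LIB_parse. It checks only the format, not whether the ZIP code actually exists.

```php
<?php
// Hypothetical helper (not from the book's libraries): checks that a value
// looks like a five-digit ZIP code before it is handed to describe_zipcode().
function is_valid_zipcode($zipcode)
{
    // \A and \z anchor the whole string, so a trailing newline is rejected too.
    return preg_match('/\A[0-9]{5}\z/', (string)$zipcode) === 1;
}

// Example usage (format check only; existence is not verified).
var_dump(is_valid_zipcode("89109"));   // bool(true)
var_dump(is_valid_zipcode("8910"));    // bool(false)
```

Passing ZIP codes as strings is the safer habit: an integer such as 02134 loses its leading zero and would fail the check.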

Since this webbot needs to submit a ZIP code to a form, you will need to use the techniques you learned in Chapter 6 to emulate someone manually submitting the form. As you learned, you should always pass even simple forms through a form analyzer (similar to the one used in Chapter 6) to ensure that you will submit the form in the manner the server expects. This is important because web pages commonly insert dynamic fields or values into forms that can be hard to detect by just looking at a page.

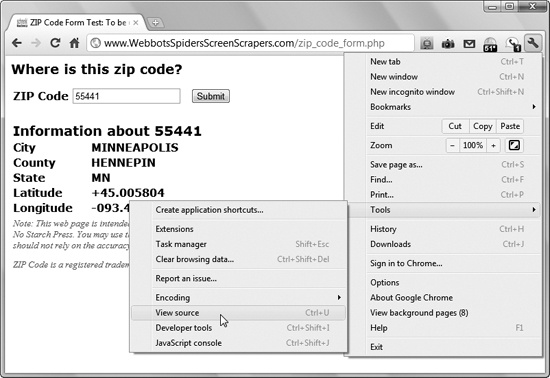

To use the form analyzer, simply load the web page into a browser and view the source code, as shown in Figure 16-2.

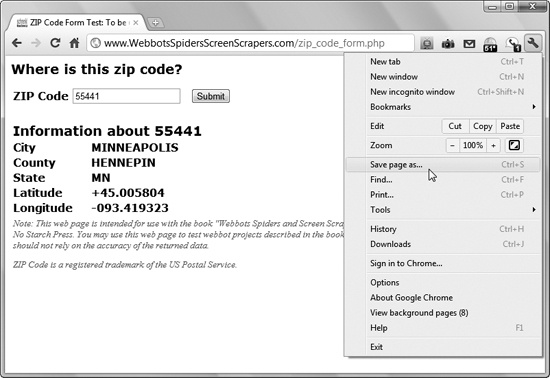

Once you have the target's source code, save the HTML to your hard drive, as shown in Figure 16-3.

Once the form’s HTML is on your hard drive, you must edit it to make the form submit its content to the form analyzer instead of the target server. You do this by changing the form’s action attribute to the location of the form analyzer, as shown in Figure 16-4.

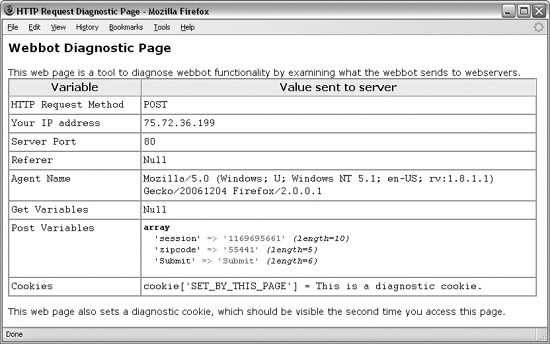

Now you have a copy of the target form on your hard drive, with the form’s original action attribute replaced with the web address of the form analyzer. The final step is to load this local copy of the form into a browser, manually fill in the form, and submit it to the analyzer. Once submitted, you should see the analysis performed by the form analyzer, as shown in Figure 16-5.

The analysis tells us that the method is POST and that there are three required data fields. In addition to the zipcode field, there is also a hidden session field (which looks suspiciously like a Unix timestamp) and a Submit field, which is actually the name of the Submit button. To emulate the form submission, it is vitally important to correctly use all the field names (with appropriate values) as well as the same method used by the original form.
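To make "correctly use all the field names" concrete, the three fields from the analysis can be collected in an array and URL-encoded the way a browser encodes a POST body. PHP's built-in http_build_query() does the encoding. This is a sketch: the function name build_zipcode_post_body() is hypothetical, the session value below is a placeholder, and the default Submit value "Submit" is an assumption; use whatever value the form analyzer reports for the button.

```php
<?php
// Build the POST body for the ZIP code form from its three fields.
// Field names come from the form analysis; the values are supplied by the caller.
function build_zipcode_post_body($zipcode, $session, $submit_value = "Submit")
{
    $data_array = array(
        "zipcode" => $zipcode,
        "session" => $session,
        "Submit"  => $submit_value,   // assumed button value
    );
    // application/x-www-form-urlencoded, with an explicit "&" separator
    return http_build_query($data_array, '', '&');
}

echo build_zipcode_post_body("89109", "1234567890"), "\n";
// zipcode=89109&session=1234567890&Submit=Submit
```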

Once you write your webbot, it’s a good idea to test it by using the form analyzer as a target to ensure that the webbot submits the form as the target webserver expects it to. This is also a good time to verify the agent name your webbot uses.
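If the Chapter 6 form analyzer isn't at hand, a rough local stand-in can report the three things this test cares about: the request method, the submitted fields, and the agent name. This is a hypothetical sketch, not the book's analyzer; the function name analyze_request() is illustrative. Place it on a local web server and point the webbot's form submission at it.

```php
<?php
// Hypothetical stand-in for a form analyzer (not the Chapter 6 tool).
// It reports the request method, the agent name, and every submitted field.
function analyze_request($method, array $fields, $agent)
{
    $report  = "METHOD: " . $method . "\n";
    $report .= "AGENT: " . $agent . "\n";
    foreach ($fields as $name => $value) {
        // Array-style fields (e.g. name="x[]") are shown as JSON.
        $shown = is_array($value) ? json_encode($value) : $value;
        $report .= "FIELD: " . $name . " = " . $shown . "\n";
    }
    return $report;
}

// Under a web server, analyze the live request; under the CLI, do nothing.
if (PHP_SAPI !== "cli") {
    header("Content-Type: text/plain");   // echo submitted values as text, not HTML
    $method = $_SERVER["REQUEST_METHOD"];
    $fields = ($method === "POST") ? $_POST : $_GET;
    $agent  = isset($_SERVER["HTTP_USER_AGENT"]) ? $_SERVER["HTTP_USER_AGENT"] : "(none)";
    echo analyze_request($method, $fields, $agent);
}
```

Serving the report as text/plain keeps any submitted markup from being rendered by the browser.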

The script that interfaces the target web page to a PHP function, called describe_zipcode(), is available in its entirety at this book’s website. It is broken into smaller pieces and annotated here for clarity.

It is not uncommon to find dynamically assigned values, like this target's session value, in forms. Since the session value is assigned dynamically, the webbot must first request the page to get the session value before it can submit the form. This mimics normal browser use, since a browser must also download a form before submitting it. The webbot captures the session value with the script shown in Example 16-3.

Example 16-3. Downloading the target to get the session variable

# Start interface describe_zipcode($zipcode)
function describe_zipcode($zipcode)
{
    # Get required libraries and declare the target
    # (include_once avoids a "cannot redeclare function" error on repeat calls)
    include_once("LIB_http.php");
    include_once("LIB_parse.php");
    $target = "http://www.WebbotsSpidersScreenScrapers.com/zip_code_form.php";

    # Download the target
    $page = http_get($target, $ref="");

    # Parse the session hidden tag from the downloaded page
    # <input type="hidden" name="session" value="xxxxxxxxxx">
    $session_tag = return_between($string = $page['FILE'],
                                  $start  = "<input type=\"hidden\" name=\"session\"",
                                  $end    = ">",
                                  $type   = EXCL
                                  );

    # Remove the "'s and "value=" text to reveal the session value
    $session_value = str_replace("\"", "", $session_tag);
    $session_value = str_replace("value=", "", $session_value);
    $session_value = trim($session_value);    # drop the leading space left by EXCL

The script in Example 16-3 is a classic screen scraper. It downloads the page and parses the session value from the form's <input> tag. The str_replace() calls then strip the quotation marks and the value= text, and trim() removes the leftover leading space, leaving only the session value. Notice that the webbot uses LIB_parse and LIB_http, described in previous chapters, to download and parse the web page.[53]
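The same extraction can be done with regular expressions, which tolerate extra whitespace, either quote style, or a change in attribute order that would defeat a fixed start string. This is a minimal sketch using PHP's built-in preg_match() and preg_match_all() rather than the book's return_between(); the function name parse_session_value() is hypothetical, and the patterns assume the value attribute is quoted. It is not a full HTML parser.

```php
<?php
// Hypothetical alternative to return_between() + str_replace():
// find the <input> tag whose name is "session" and return its value attribute.
function parse_session_value($html)
{
    // Collect every <input ...> tag in the page.
    if (!preg_match_all('/<input\b[^>]*>/i', $html, $tags)) {
        return null;
    }
    foreach ($tags[0] as $tag) {
        // Only the tag named "session" (single or double quotes) is of interest.
        if (!preg_match('/\bname\s*=\s*["\']session["\']/i', $tag)) {
            continue;
        }
        // Capture the quoted value; \1 requires the same closing quote.
        if (preg_match('/\bvalue\s*=\s*(["\'])(.*?)\1/i', $tag, $m)) {
            return $m[2];
        }
    }
    return null;   // no session field found
}

$html = '<form method="POST"><input type="hidden" name="session" value="1234567890">'
      . '<input type="text" name="zipcode"></form>';
echo parse_session_value($html), "\n";   // 1234567890
```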

Once you know the session value, the script in Example 16-4 may be used to submit the form. Notice the use of http_post_form() to emulate the submission of a form with the POST method. The form fields are conveniently passed to the target webserver in $data_array[].
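Example 16-4 relies on http_post_form() from LIB_http. For readers working without that library, a rough equivalent can be assembled from PHP's stream functions. The sketch below only prepares the request; the function name make_post_context() and the agent string are assumptions, and $action_url is a placeholder for the form's action attribute, which this section does not show.

```php
<?php
// Hypothetical substitute for LIB_http's http_post_form(): prepare a POST
// request whose body carries the form fields from $data_array.
function make_post_context(array $data_array, $agent, $referer)
{
    $body = http_build_query($data_array, '', '&');
    $headers = "Content-Type: application/x-www-form-urlencoded\r\n"
             . "Content-Length: " . strlen($body) . "\r\n"
             . "User-Agent: " . $agent . "\r\n"
             . "Referer: " . $referer . "\r\n";
    return stream_context_create(array(
        "http" => array(
            "method"  => "POST",
            "header"  => $headers,
            "content" => $body,
        ),
    ));
}

// Usage (performs a network request, so it is shown as a comment):
// $context = make_post_context($data_array, "My Test Webbot", $target);
// $html    = file_get_contents($action_url, false, $context);
```

Setting the User-Agent explicitly here is also what lets you confirm the agent name against the form analyzer.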

The remaining step is to parse the desired city, county, state, and geo coordinates from the web page obtained from the form submission in the previous listing. The script that does this is shown in Example 16-5.

Example 16-5. Parsing and returning the data

    $landmark = "Information about ".$zipcode;
    $table_array = parse_array($form_result['FILE'], "<table", "</table>");
    $ret = array();    # Start empty so an array is returned even if no table matches

    for($xx=0; $xx<count($table_array); $xx++)
    {
        # Parse the table containing the parsing landmark
        if(stristr($table_array[$xx], $landmark))
        {
            $ret['CITY'] = return_between($table_array[$xx], "CITY", "</tr>", EXCL);
            $ret['CITY'] = strip_tags($ret['CITY']);
            $ret['STATE'] = return_between($table_array[$xx], "STATE", "</tr>", EXCL);
            $ret['STATE'] = strip_tags($ret['STATE']);
            $ret['COUNTY'] = return_between($table_array[$xx], "COUNTY", "</tr>", EXCL);
            $ret['COUNTY'] = strip_tags($ret['COUNTY']);
            $ret['LATITUDE'] = return_between($table_array[$xx], "LATITUDE", "</tr>", EXCL);
            $ret['LATITUDE'] = strip_tags($ret['LATITUDE']);
            $ret['LONGITUDE'] = return_between($table_array[$xx], "LONGITUDE", "</tr>", EXCL);
            $ret['LONGITUDE'] = strip_tags($ret['LONGITUDE']);
        }
    }

    # Return the parsed data
    return $ret;
} # End Interface describe_zipcode($zipcode)

This script first uses parse_array() to create an array containing all the tables in the downloaded web page, which is stored in $form_result['FILE']. The script then looks for the table that contains the parsing landmark "Information about" followed by the ZIP code. Once the webbot finds that table, it parses each value with return_between(), using unique strings that mark the beginning and end of the desired data: the field label (such as CITY) and the closing </tr> tag. The parsed data is then cleaned up with strip_tags(), placed into the array described earlier, and returned to the calling program.
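The landmark-and-delimiter approach depends on the exact strings around each value. If the result table uses one label cell followed by one value cell per row, PHP's DOM extension offers a sturdier alternative. This sketch assumes that row layout (the section does not show the result page's markup), and parse_zip_table() is a hypothetical name; it returns the same keys as Example 16-5.

```php
<?php
// Hypothetical DOM-based alternative to Example 16-5. Assumes each row of the
// result table holds a label cell (e.g. "CITY") followed by a value cell.
function parse_zip_table($html, $zipcode)
{
    $ret = array();
    if ($html === "") {
        return $ret;                               // loadHTML() rejects empty input
    }
    $doc = new DOMDocument();
    $prev = libxml_use_internal_errors(true);      // tolerate sloppy real-world HTML
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($prev);

    $wanted = array("CITY", "STATE", "COUNTY", "LATITUDE", "LONGITUDE");
    foreach ($doc->getElementsByTagName("table") as $table) {
        // Same parsing landmark as Example 16-5.
        if (stripos($table->textContent, "Information about " . $zipcode) === false) {
            continue;
        }
        foreach ($table->getElementsByTagName("tr") as $row) {
            $cells = $row->getElementsByTagName("td");
            if ($cells->length < 2) {
                continue;                          // skip header or malformed rows
            }
            // Normalize the label: trim whitespace and a trailing colon.
            $label = strtoupper(rtrim(trim($cells->item(0)->textContent), ":"));
            if (in_array($label, $wanted, true)) {
                $ret[$label] = trim($cells->item(1)->textContent);
            }
        }
    }
    return $ret;
}
```

Called as parse_zip_table($form_result['FILE'], $zipcode), it would produce an array shaped like the one describe_zipcode() returns, while ignoring whitespace and tag changes inside each cell.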