CHAPTER 27

Cryptographic Algorithms

In this chapter, you will

• Identify the different types of cryptography

• Learn about current cryptographic methods

• Understand how cryptography is applied for security

• Given a scenario, utilize general cryptography concepts

Cryptographic systems are composed of two main elements, an algorithm and a key. The key exists to provide a means to alter the encryption from message to message, while the algorithm provides the method of converting plaintext to ciphertext and back. This chapter examines the types and characteristics of cryptographic algorithms.

Certification Objective This chapter covers CompTIA Security+ exam objective 6.2, Explain cryptography algorithms and their basic characteristics.

Symmetric Algorithms

Symmetric algorithms are characterized by using the same key for both encryption and decryption. Symmetric encryption algorithms are used for bulk encryption because they are comparatively fast and have few computational requirements. Common symmetric algorithms are DES, 3DES, AES, Blowfish, Twofish, RC2, RC4, RC5, and RC6.

EXAM TIP Ensure you understand DES, 3DES, AES, Blowfish, Twofish, and RC4 symmetric algorithms for the exam.

DES

The Data Encryption Standard (DES) was developed in response to the National Bureau of Standards (NBS), now known as the National Institute of Standards and Technology (NIST), issuing a request for proposals for a standard cryptographic algorithm in 1973. NBS specified that DES had to be recertified every five years. While DES passed without a hitch in 1983, the National Security Agency (NSA) said it would not recertify it in 1987. However, since no alternative was available for many businesses, many complaints ensued, and the NSA and NBS were forced to recertify DES. The algorithm was then recertified in 1993. NIST has now certified the Advanced Encryption Standard (AES) to replace DES.

3DES

Triple DES (3DES) is a follow-on implementation of DES. Depending on the specific variant, it uses either two or three keys instead of the single key that DES uses. It also spins through the DES algorithm three times via what’s called multiple encryption.

Multiple encryption can be performed in several different ways. The simplest method of multiple encryption is just to stack algorithms on top of each other: take the plaintext, encrypt it with DES, then encrypt the first ciphertext with a different key, and then encrypt the second ciphertext with a third key. 3DES instead uses an encrypt-decrypt-encrypt (EDE) sequence: encrypt with one key, then decrypt with a second, and then encrypt with a third. Using decryption as the middle step keeps 3DES backward compatible with single DES, because when all three keys are the same, the first two operations cancel out.
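The encrypt-decrypt-encrypt structure can be sketched in Python. The standard library has no DES, so this sketch substitutes a hypothetical toy 64-bit Feistel cipher (`toy_encrypt`/`toy_decrypt`, four rounds with a truncated HMAC-SHA256 round function) for DES. It is neither DES nor secure, but it shows how the three keys are applied and why three identical keys collapse to a single encryption.

```python
import hashlib
import hmac

BLOCK = 8         # 64-bit blocks, the same block size as DES
HALF = BLOCK // 2
ROUNDS = 4        # toy value; real DES uses 16 rounds


def _f(key: bytes, rnd: int, half: bytes) -> bytes:
    """Toy Feistel round function: truncated HMAC-SHA256 (not the DES F-function)."""
    return hmac.new(key, bytes([rnd]) + half, hashlib.sha256).digest()[:HALF]


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def toy_encrypt(key: bytes, block: bytes) -> bytes:
    left, right = block[:HALF], block[HALF:]
    for rnd in range(ROUNDS):
        left, right = right, _xor(left, _f(key, rnd, right))
    return left + right


def toy_decrypt(key: bytes, block: bytes) -> bytes:
    # Undo the rounds in reverse order
    left, right = block[:HALF], block[HALF:]
    for rnd in reversed(range(ROUNDS)):
        left, right = _xor(right, _f(key, rnd, left)), left
    return left + right


def triple_encrypt(k1: bytes, k2: bytes, k3: bytes, block: bytes) -> bytes:
    """EDE: encrypt with k1, decrypt with k2, encrypt with k3."""
    return toy_encrypt(k3, toy_decrypt(k2, toy_encrypt(k1, block)))


def triple_decrypt(k1: bytes, k2: bytes, k3: bytes, block: bytes) -> bytes:
    """Inverse of EDE: decrypt with k3, encrypt with k2, decrypt with k1."""
    return toy_decrypt(k1, toy_encrypt(k2, toy_decrypt(k3, block)))


if __name__ == "__main__":
    k1, k2, k3 = b"key-one!", b"key-two!", b"key-3333"   # hypothetical keys
    block = b"8 bytes!"
    ciphertext = triple_encrypt(k1, k2, k3, block)
    assert triple_decrypt(k1, k2, k3, ciphertext) == block
    # With k1 == k2 == k3 the first two steps cancel, leaving one encryption
    assert triple_encrypt(k1, k1, k1, block) == toy_encrypt(k1, block)
    print(ciphertext.hex())
```

The same backward-compatibility property is why real 3DES implementations can interoperate with single-DES peers by using one key three times.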

This greatly increases the number of attempts needed to retrieve the key and is a significant enhancement of security. The additional security comes with a price, however. It can take up to three times longer to compute 3DES than to compute DES. However, the advances in memory and processing power in today’s electronics make this problem irrelevant in all devices except for very small, low power devices.

The only weaknesses of 3DES are those that already exist in DES. However, because different keys are used with the same algorithm in 3DES, the effective key length is longer than the 56-bit key of single DES, which results in greater resistance to brute force attack, making 3DES stronger than DES against a wide range of attacks. While 3DES has continued to be popular and is still widely supported, AES has taken over as the symmetric encryption standard.

AES

Because of the advancement of technology and the progress being made in quickly retrieving DES keys, NIST put out a request for proposals (RFP) for a new Advanced Encryption Standard (AES). NIST called for a block cipher using symmetric key cryptography and supporting key sizes of 128, 192, and 256 bits. After evaluation, NIST had five finalists:

• MARS (IBM)

• RC6 (RSA)

• Rijndael (Joan Daemen and Vincent Rijmen)

• Serpent (Ross Anderson, Eli Biham, and Lars Knudsen)

• Twofish (Bruce Schneier, John Kelsey, Doug Whiting, David Wagner, Chris Hall, and Niels Ferguson)

In the fall of 2000, NIST picked Rijndael to be the new AES. It was chosen for its overall security as well as its good performance on limited-capacity devices. AES uses a 128-bit block and has three standard key sizes, 128, 192, and 256 bits, designated AES-128, AES-192, and AES-256, respectively.

While no efficient attacks currently exist against AES, more time and analysis will tell if this standard can last as long as DES did.

EXAM TIP In the world of symmetric cryptography, AES is the current gold standard of algorithms. It is considered secure and is computationally efficient.

RC4

RC is a general term for several ciphers all designed by Ron Rivest—RC officially stands for Rivest Cipher. RC1, RC2, RC3, RC4, RC5, and RC6 are all ciphers in the series. RC1 and RC3 never made it to release, but RC2, RC4, RC5, and RC6 are all working algorithms.

RC4 was created before RC5 and RC6, but it differs in operation. RC4 is a stream cipher, whereas all the symmetric ciphers we have looked at so far have been block-mode ciphers. A stream cipher works by enciphering the plaintext in a stream, usually bit by bit. This makes stream ciphers faster than block-mode ciphers. Stream ciphers accomplish this by performing a bitwise XOR with the plaintext stream and a generated key stream. RC4 can use a key length of 8 to 2048 bits, though the most common versions use 128-bit keys. The algorithm is fast, sometimes ten times faster than DES. The most vulnerable point of the encryption is the possibility of weak keys. One key in 256 can generate bytes closely correlated with key bytes. Proper implementations of RC4 need to include weak key detection.
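The key-scheduling and keystream-generation steps of RC4 are short enough to sketch directly. This is an educational Python sketch only: it omits the weak-key detection recommended above, and RC4 should not be chosen for new systems. The sample key and message (`Key`, `Plaintext`) are the widely published RC4 test input.

```python
def rc4_keystream(key: bytes):
    """Yield RC4 keystream bytes (educational sketch; RC4 is deprecated)."""
    # Key-scheduling algorithm (KSA): permute the 256-entry state under the key
    S = list(range(256))
    j = 0
    for i in range(256):
        j = (j + S[i] + key[i % len(key)]) % 256
        S[i], S[j] = S[j], S[i]
    # Pseudo-random generation algorithm (PRGA): one keystream byte per step
    i = j = 0
    while True:
        i = (i + 1) % 256
        j = (j + S[i]) % 256
        S[i], S[j] = S[j], S[i]
        yield S[(S[i] + S[j]) % 256]


def rc4(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR the data with the keystream (same operation both ways)."""
    return bytes(b ^ k for b, k in zip(data, rc4_keystream(key)))


if __name__ == "__main__":
    ciphertext = rc4(b"Key", b"Plaintext")
    print(ciphertext.hex())
    print(rc4(b"Key", ciphertext))
```

Because encryption is just an XOR with the keystream, the function that encrypts is also the function that decrypts, which is the defining trait of this kind of stream cipher.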

EXAM TIP RC4 has been the most widely used stream cipher and was used in popular protocols such as Transport Layer Security (TLS) and Wi-Fi Protected Access (WPA). Because of biases in its keystream, RC4 cipher suites are now prohibited in TLS (RFC 7465).

Blowfish/Twofish

Blowfish was designed in 1994 by Bruce Schneier. It is a block-mode cipher using 64-bit blocks and a variable key length from 32 to 448 bits. It was designed to run quickly on 32-bit microprocessors and is optimized for situations with few key changes. The only successful cryptanalysis to date against Blowfish has been against variants that used reduced rounds. There does not seem to be a weakness in the full 16-round version.

Twofish was one of the five finalists in the AES competition. Like other AES entrants, it is a block cipher utilizing 128-bit blocks with a variable-length key of up to 256 bits. This algorithm is available for public use, and no practical attack against the full cipher has been published. Twofish is an improvement over Blowfish in that it is less vulnerable to certain classes of weak keys.

Cipher Modes

Block algorithms must deal with messages that contain multiple blocks of identical data. Encrypted naively, identical input blocks produce identical ciphertext blocks that reveal the repetition to an observer. There are multiple methods of handling multi-block messages, called modes of operation. This section describes the common modes listed in exam objective 6.2: ECB, CBC, CTM, and GCM.

CBC

Cipher Block Chaining (CBC) is a block mode where each plaintext block is XORed with the previous ciphertext block before being encrypted. To obfuscate the first block, an initialization vector (IV) is XORed with the first block before encryption. CBC is one of the most common modes used, but it has two major weaknesses. First, because each block depends on the previous ciphertext block, encryption cannot be parallelized for speed and efficiency. Second, because of the nature of the chaining, each plaintext block is recovered from just two adjacent ciphertext blocks, which lets an attacker who can modify the ciphertext make predictable changes to the decrypted result. An example of this is the POODLE (Padding Oracle On Downgraded Legacy Encryption) attack. This type of padding attack works because a one-bit change to a ciphertext block completely corrupts the corresponding block of plaintext and inverts the corresponding bit in the following block of plaintext, while the rest of the blocks remain intact.
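The chaining, and the bit-flipping behavior that padding attacks exploit, can be demonstrated in a few lines. This sketch assumes a hypothetical toy 128-bit Feistel block cipher (truncated HMAC-SHA256 round function) standing in for a real cipher such as AES. It uses a fixed all-zero IV only to keep the demo deterministic (real IVs must be random and unpredictable) and skips padding by requiring whole blocks.

```python
import hashlib
import hmac

BLOCK = 16
HALF = BLOCK // 2


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key: bytes, rnd: int, half: bytes) -> bytes:
    # Toy round function (truncated HMAC-SHA256); stands in for AES here
    return hmac.new(key, bytes([rnd]) + half, hashlib.sha256).digest()[:HALF]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:HALF], block[HALF:]
    for rnd in range(4):
        left, right = right, _xor(left, _f(key, rnd, right))
    return left + right


def toy_decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:HALF], block[HALF:]
    for rnd in reversed(range(4)):
        left, right = _xor(right, _f(key, rnd, left)), left
    return left + right


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    assert len(plaintext) % BLOCK == 0, "padding is out of scope for this sketch"
    prev, out = iv, b""
    for i in range(0, len(plaintext), BLOCK):
        # XOR with the previous ciphertext block (the IV for block 1), then encrypt
        prev = toy_encrypt_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out += prev
    return out


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    prev, out = iv, b""
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        # Decrypt, then XOR with the previous ciphertext block
        out += _xor(toy_decrypt_block(key, block), prev)
        prev = block
    return out


if __name__ == "__main__":
    key, iv = b"hypothetical key", bytes(BLOCK)
    plaintext = b"A" * (3 * BLOCK)
    ciphertext = cbc_encrypt(key, iv, plaintext)
    assert cbc_decrypt(key, iv, ciphertext) == plaintext
    tampered = bytearray(ciphertext)
    tampered[0] ^= 0x01                                  # flip one bit in ciphertext block 1
    result = cbc_decrypt(key, iv, bytes(tampered))
    print(result[:BLOCK] != plaintext[:BLOCK])           # block 1: garbled
    print(result[BLOCK] == plaintext[BLOCK] ^ 0x01)      # block 2: same bit flipped
    print(result[2 * BLOCK:] == plaintext[2 * BLOCK:])   # block 3: intact
```

Decryption, unlike encryption, only needs the current and previous ciphertext blocks, so it can be parallelized; the predictable bit flip in the following block is what makes unauthenticated CBC ciphertext malleable.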

GCM

Galois/Counter Mode (GCM) is an extension of CTM with the addition of a Galois mode of authentication. Galois (finite) fields are algebraic structures with significant utility in practical coding and cryptography. The Galois mode adds an authentication function to the cipher mode, so GCM provides integrity as well as confidentiality. Because the Galois field multiplication used in the process can be parallelized, GCM provides an efficient method of adding this capability. GCM is employed in many international standards, including IEEE 802.11ad and 802.1AE. NIST standardized GCM, along with its authentication-only variant GMAC, in Special Publication 800-38D, and AES-GCM is a commonly used combination.

ECB

Electronic Code Book (ECB) is the simplest mode of operation of all. The message to be encrypted is divided into blocks, and each block is encrypted separately. This has several major issues, most notable of which is that identical plaintext blocks yield identical encrypted blocks, telling the attacker that the blocks are identical. ECB is not recommended for use in cryptographic protocols.
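The pattern leak is easy to see by encrypting repeated blocks. This sketch assumes a hypothetical toy Feistel block cipher as a stand-in for AES (only the encryption direction is needed here) and contrasts ECB with CBC on the same input; the key is hypothetical.

```python
import hashlib
import hmac

BLOCK = 16
HALF = BLOCK // 2


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """Toy 4-round Feistel cipher with an HMAC-SHA256 round function (stand-in for AES)."""
    left, right = block[:HALF], block[HALF:]
    for rnd in range(4):
        f = hmac.new(key, bytes([rnd]) + right, hashlib.sha256).digest()[:HALF]
        left, right = right, _xor(left, f)
    return left + right


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    # Every block is encrypted on its own with the same key: no IV, no chaining
    return b"".join(toy_encrypt_block(key, plaintext[i:i + BLOCK])
                    for i in range(0, len(plaintext), BLOCK))


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    # For contrast: chain each block to the previous ciphertext block
    prev, out = iv, b""
    for i in range(0, len(plaintext), BLOCK):
        prev = toy_encrypt_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out += prev
    return out


if __name__ == "__main__":
    key = b"hypothetical key"
    plaintext = b"YELLOW SUBMARINE" * 2 + b"DIFFERENT BLOCK!"
    for name, ct in (("ECB", ecb_encrypt(key, plaintext)),
                     ("CBC", cbc_encrypt(key, bytes(BLOCK), plaintext))):
        blocks = [ct[i:i + BLOCK].hex()[:12] for i in range(0, len(ct), BLOCK)]
        print(name, blocks)   # ECB repeats its first block; CBC does not
```

Decrypting ECB would apply the inverse cipher to each block in the same independent way, which is also why an attacker can reorder or replay ECB blocks without detection.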

EXAM TIP ECB is not recommended for use in any cryptographic protocol because it does not provide protection against input patterns or known blocks.

CTM/CTR

Counter Mode (CTM) combines a nonce with a counter that increments for each block, producing a unique input for every block encryption. The sequence of operations is to take the counter block (nonce plus counter value), encrypt it using the key, and then XOR the result with the plaintext. Because each block can be processed independently, the work can be multithreaded. CTM is also abbreviated CTR in some circles.
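Counter mode can be sketched by building each keystream block from the nonce and counter and XORing it with the plaintext. As an assumption, this sketch uses truncated HMAC-SHA256 in place of a block cipher as the keyed function; counter mode only ever runs the cipher in the encrypt direction, so a keyed pseudorandom function is enough to show the structure. The key and nonce values are hypothetical.

```python
import hashlib
import hmac

BLOCK = 16


def _keystream_block(key: bytes, nonce: bytes, counter: int) -> bytes:
    # Stand-in for E_K(nonce || counter); real CTR mode uses a block cipher such as AES
    counter_block = nonce + counter.to_bytes(8, "big")
    return hmac.new(key, counter_block, hashlib.sha256).digest()[:BLOCK]


def ctr_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt (same operation): XOR each block with its own keystream block.
    Each iteration depends only on its block index, so blocks could run in parallel."""
    out = bytearray()
    for index in range(0, len(data), BLOCK):
        keystream = _keystream_block(key, nonce, index // BLOCK)
        chunk = data[index:index + BLOCK]          # last chunk may be short: no padding needed
        out += bytes(p ^ k for p, k in zip(chunk, keystream))
    return bytes(out)


if __name__ == "__main__":
    key, nonce = b"hypothetical key", b"nonce-01"
    plaintext = b"B" * 32 + b"tail"
    ciphertext = ctr_crypt(key, nonce, plaintext)
    assert ctr_crypt(key, nonce, ciphertext) == plaintext
    print(ciphertext[:16] != ciphertext[16:32])    # identical blocks, different ciphertext
```

The nonce and counter pair must never repeat under the same key: two messages encrypted with the same nonce share a keystream, so XORing their ciphertexts cancels it and exposes the XOR of the two plaintexts.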

EXAM TIP CBC and CTM/CTR are considered to be secure and are the most widely used modes.

Stream vs. Block

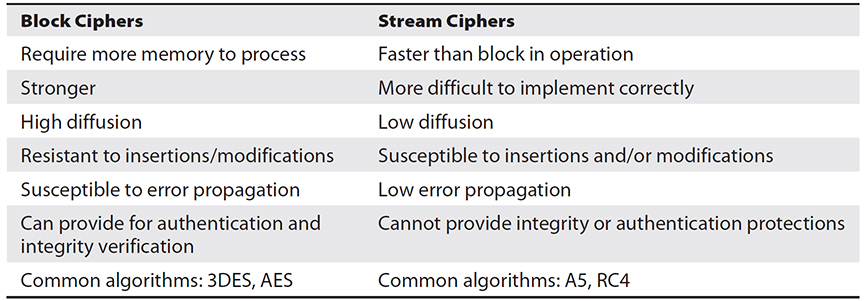

When encryption operations are performed on data, there are two primary modes of operation, block and stream. Block operations are performed on blocks of data, enabling both transposition and substitution operations. This is possible when large pieces of data are present for the operations. Stream operations have become more common with the streaming of audio and video across the Web. The primary characteristic of stream data is that it is not available in large chunks, but rather either bit by bit or byte by byte, pieces too small for block operations. Stream ciphers operate using substitution only and therefore offer less robust protection than block ciphers. Table 27-1 compares and contrasts block and stream ciphers.

Table 27-1 Comparison of Block and Stream Ciphers

Asymmetric Algorithms

Asymmetric cryptography is in many ways completely different from symmetric cryptography. Also known as public key cryptography, asymmetric algorithms are built around hard-to-reverse math problems. The strength of these functions is very important: because an attacker is likely to have access to the public key, he can encrypt any plaintext he chooses and examine the resulting ciphertext. This allows instant checking of guesses that are made about the keys of the algorithm. RSA, DSA, Diffie-Hellman, elliptic curve cryptography (ECC), and PGP/GPG are all popular asymmetric protocols. We will look at all of them and their suitability for different functions.

EXAM TIP Asymmetric methods are significantly slower than symmetric methods and thus are typically not suitable for bulk encryption.

RSA

RSA, one of the first public key cryptosystems ever invented, can be used for both encryption and digital signatures. RSA is named after its inventors, Ron Rivest, Adi Shamir, and Leonard Adleman, and was first published in 1977. This algorithm uses the product of two very large prime numbers and works on the principle of difficulty in factoring such large numbers. The two primes should be chosen at random and be of roughly equal length; older guidance called for primes of 100 to 200 digits each, whereas a modern 2048-bit RSA modulus is built from two primes of about 1,024 bits (roughly 309 decimal digits) each.
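The arithmetic behind RSA can be traced with the small textbook primes 61 and 53. This sketch is textbook RSA with no padding and a trivially factorable modulus, for illustration only; real implementations use padding schemes such as OAEP (encryption) and PSS (signatures) and sign a hash of the message rather than the message itself.

```python
from math import gcd

# Textbook RSA with tiny, hypothetical primes (real keys use primes of about 1,024+ bits)
p, q = 61, 53
n = p * q                  # public modulus: 3233
phi = (p - 1) * (q - 1)    # Euler's totient of n: 3120
e = 17                     # public exponent; must share no factor with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)        # private exponent, inverse of e mod phi (Python 3.8+): 2753


def encrypt(m: int) -> int:
    return pow(m, e, n)    # anyone holding the public key (e, n) can encrypt


def decrypt(c: int) -> int:
    return pow(c, d, n)    # only the holder of d can decrypt


def sign(m: int) -> int:
    return pow(m, d, n)    # signing uses the private exponent


def verify(m: int, signature: int) -> bool:
    return pow(signature, e, n) == m   # anyone can verify with (e, n)


if __name__ == "__main__":
    c = encrypt(65)
    print(c, decrypt(c))
```

Anyone who can factor 3233 back into 61 and 53 can recompute d, which is exactly why the security of real RSA rests on the primes being too large to factor.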

This is a simple method, but its security has withstood the test of more than 30 years of analysis. Considering the effectiveness of RSA’s security and the ability to have two keys, why are symmetric encryption algorithms needed at all? The answer is speed. RSA in software can be 100 times slower than DES, and in hardware, it can be even slower.

As mentioned, RSA can be used for both regular encryption and digital signatures. Digital signatures try to duplicate the functionality of a physical signature on a document using encryption. Typically, RSA and the other public key systems are used in conjunction with symmetric key cryptography. Public key, the slower protocol, is used to exchange the symmetric key (or shared secret), and then the communication uses the faster symmetric key protocol. This process is known as electronic key exchange.

DSA

A digital signature is a cryptographic implementation designed to demonstrate authenticity and identity associated with a message. Using public key cryptography, the digital signature algorithm (DSA) allows traceability to the person signing the message through the use of their private key. The addition of hash codes allows for the assurance of integrity of the message as well. The operation of a digital signature is a combination of cryptographic elements to achieve a desired outcome. The steps involved in digital signature generation and use are illustrated in Chapter 26.

The common implementation of DSA is a derivative of the ElGamal signature method and is detailed in the Federal Information Processing Standard 186 series. It is covered by a patent that the U.S. government has released royalty free worldwide. The most important element in the signature is the per-message random signature value k, which needs to change with every signed message and must be kept secret. Reusing this value can lead to the discovery of the private key, as demonstrated by the attack on Sony's improper implementation of ECDSA, the elliptic curve variant of DSA, which Sony used when signing software for the PS3.
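The nonce-reuse failure is pure algebra: two signatures made with the same k share the same r, and s1 - s2 = k^-1(h1 - h2) mod q reveals k and then the private key x. The sketch below assumes hypothetical toy domain parameters (p = 607, q = 101) and uses small integers as stand-ins for message hashes; real DSA uses parameters from FIPS 186 and real hash functions.

```python
# Hypothetical toy DSA domain parameters, far too small for real use:
# q = 101 is prime and divides p - 1 = 606, and g = 2^((p-1)/q) mod p has order q.
P, Q = 607, 101
G = pow(2, (P - 1) // Q, P)


def dsa_sign(x, h, k):
    """Sign hash value h with private key x and per-message secret k."""
    r = pow(G, k, P) % Q
    s = (pow(k, -1, Q) * (h + x * r)) % Q
    if r == 0 or s == 0:
        raise ValueError("choose a different k")
    return r, s


def dsa_verify(y, h, signature):
    """Check a signature against public key y = G^x mod P."""
    r, s = signature
    if not (0 < r < Q and 0 < s < Q):
        return False
    w = pow(s, -1, Q)
    u1, u2 = (h * w) % Q, (r * w) % Q
    return (pow(G, u1, P) * pow(y, u2, P)) % P % Q == r


def recover_private_key(h1, sig1, h2, sig2):
    """Given two signatures that reused k (so they share r), solve for k and then x."""
    (r, s1), (_, s2) = sig1, sig2
    k = ((h1 - h2) * pow((s1 - s2) % Q, -1, Q)) % Q   # from s1 - s2 = k^-1 (h1 - h2)
    return ((s1 * k - h1) * pow(r, -1, Q)) % Q        # from s1 * k = h1 + x * r


if __name__ == "__main__":
    x = 57                      # hypothetical private key
    y = pow(G, x, P)            # matching public key
    k = 13                      # the mistake: the same k for two messages
    sig1, sig2 = dsa_sign(x, 10, k), dsa_sign(x, 20, k)   # 10 and 20 stand in for hashes
    assert dsa_verify(y, 10, sig1) and dsa_verify(y, 20, sig2)
    print("recovered private key:", recover_private_key(10, sig1, 20, sig2))
```

Both signatures verify normally, so nothing looks wrong to a casual observer; only the repeated r value betrays the reuse, and that is all an attacker needs.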

Diffie-Hellman

Diffie-Hellman, introduced in Chapter 26, is one of the most common key exchange protocols in use today. It plays a role in the electronic key exchange method of the Secure Sockets Layer (SSL) and TLS protocols. It is also used by the Secure Shell (SSH) and IP Security (IPsec) protocols. Diffie-Hellman is important because it enables the sharing of a secret key between two people who have not contacted each other before.
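The exchange can be followed with the small numbers commonly used in textbook examples (p = 23, g = 5). This is a sketch only; real deployments use groups of 2048 bits or more, or elliptic curves.

```python
# Public parameters, agreed in the open (tiny textbook values, hypothetical here)
p, g = 23, 5

# Each party picks a private exponent that never leaves its machine
alice_secret, bob_secret = 6, 15

# Only these public values cross the untrusted network
alice_public = pow(g, alice_secret, p)   # 8
bob_public = pow(g, bob_secret, p)       # 19

# Each side raises the other's public value to its own secret exponent
alice_shared = pow(bob_public, alice_secret, p)
bob_shared = pow(alice_public, bob_secret, p)

assert alice_shared == bob_shared        # both arrive at 2
print("shared secret:", alice_shared)
```

An eavesdropper sees p, g, 8, and 19 but not 6 or 15; recovering either exponent from those values is the discrete logarithm problem, which is infeasible at real key sizes.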

EXAM TIP Diffie-Hellman is the gold standard for key exchange, and for the exam, you should understand the subtle differences between the forms DHE and ECDHE.

DH Groups

Diffie-Hellman (DH) groups determine the strength of the key used in the key exchange process. Higher group numbers are more secure, but require additional time to compute the key. DH group 1 consists of a 768-bit key, group 2 consists of a 1024-bit key, and group 5 comes with a 1536-bit key. Higher number groups are also supported, with correspondingly longer keys.

DHE

There are several variants of the Diffie-Hellman key exchange. Diffie-Hellman Ephemeral (DHE) is a variant where a temporary key is used in the key exchange rather than reusing the same key over and over.

ECDHE

Elliptic Curve Diffie-Hellman (ECDH) is a variant of the Diffie-Hellman protocol that uses elliptic curve cryptography. ECDH can also be used with ephemeral keys, becoming Elliptic Curve Diffie-Hellman Ephemeral (ECDHE), to enable perfect forward secrecy (described in Chapter 26).
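Elliptic curve Diffie-Hellman follows the same pattern, with scalar multiplication of a curve point in place of modular exponentiation. This sketch assumes a tiny textbook curve (y^2 = x^3 + 2x + 2 over GF(17), generator (5, 1)) chosen only so the numbers stay readable. Generating a fresh private scalar for every session, as the hypothetical `ephemeral_keypair` helper does, is what makes the exchange ephemeral.

```python
import secrets

# Tiny textbook curve y^2 = x^3 + 2x + 2 over GF(17) with generator G = (5, 1),
# whose group order is 19. Hypothetical demo parameters only: real ECDHE uses
# standardized curves such as P-256 or Curve25519.
P_MOD, A_COEF = 17, 2
G = (5, 1)
ORDER = 19


def ec_add(P, Q):
    """Add two curve points; None stands for the point at infinity."""
    if P is None:
        return Q
    if Q is None:
        return P
    (x1, y1), (x2, y2) = P, Q
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None                                              # P + (-P) = infinity
    if P == Q:
        lam = (3 * x1 * x1 + A_COEF) * pow(2 * y1, -1, P_MOD)   # tangent slope
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD)     # chord slope
    lam %= P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    return (x3, (lam * (x1 - x3) - y1) % P_MOD)


def ec_mul(k, P):
    """Scalar multiplication k*P using double-and-add."""
    result = None
    while k:
        if k & 1:
            result = ec_add(result, P)
        P = ec_add(P, P)
        k >>= 1
    return result


def ephemeral_keypair():
    """A fresh private scalar for each session is what makes the exchange ephemeral."""
    private = secrets.randbelow(ORDER - 1) + 1   # 1 .. ORDER-1
    return private, ec_mul(private, G)


if __name__ == "__main__":
    a_priv, a_pub = ephemeral_keypair()
    b_priv, b_pub = ephemeral_keypair()
    # Each side multiplies the other's public point by its own private scalar
    assert ec_mul(a_priv, b_pub) == ec_mul(b_priv, a_pub)
    print("shared point:", ec_mul(a_priv, b_pub))
```

Because the private scalars are discarded after each session, a later compromise of a long-term key does not reveal the shared points of earlier sessions, which is the forward secrecy property described above.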

Elliptic Curve

Elliptic curve cryptography (ECC) was covered in detail in Chapter 26. What is important to note from a use perspective is that ECC is well suited for platforms with limited computing power, such as mobile devices.

The security of elliptic curve systems has been questioned, mostly because of lack of analysis. However, all public key systems rely on the difficulty of certain math problems. It would take a breakthrough in math for any of the mentioned systems to be weakened dramatically, but research into these problems has shown that the elliptic curve problem has been more resistant to incremental advances. Again, as with all cryptography algorithms, only time will tell how secure they really are. The big benefit of ECC systems is that they require less computing power for a given bit strength. This makes ECC ideal for use in low power mobile devices. The surge in mobile connectivity has brought secure voice, e-mail, and text applications that use ECC and AES algorithms to protect a user’s data.

EXAM TIP Ensure you understand RSA, DSA, ECC, and Diffie-Hellman variants of asymmetric algorithms for the exam.

PGP/GPG

Pretty Good Privacy (PGP), created by Philip Zimmermann in 1991, passed through several versions that were available for free under a noncommercial license. PGP is now a commercial enterprise encryption product offered by Symantec. It can be applied to popular e-mail programs to handle the majority of day-to-day encryption tasks. One of the distinguishing features of PGP is its combined use of symmetric and asymmetric encryption methods, drawing on the strengths of each method while avoiding the weaknesses of each. Symmetric keys are used for bulk encryption, taking advantage of the speed and efficiency of symmetric encryption. The symmetric keys are passed using asymmetric methods, capitalizing on the flexibility of this method.

Gnu Privacy Guard (GPG), also called GnuPG, is an open source implementation of the OpenPGP standard. This command-line–based tool is a public key encryption program designed to protect electronic communications such as e-mail. It operates similarly to PGP and includes a method for managing public/private keys.

Hashing Algorithms

Hashing algorithms are cryptographic methods that are commonly used to store computer passwords and to ensure message integrity.

MD5

Message Digest (MD) is the generic version of one of several algorithms that are designed to create a message digest or hash from data input into the algorithm. MD algorithms work in the same manner as SHA (discussed next) in that they use a secure method to compress the file and generate a computed output of a specified number of bits. The MD algorithms were all developed by Ronald L. Rivest of MIT. The current version is MD5, while previous versions were MD2 and MD4. MD5 was developed in 1991 and is structured after MD4 but with additional security to overcome the problems in MD4. In November 2007, researchers published their findings on the ability to have two entirely different Win32 executables with different functionality but the same MD5 hash. This discovery has obvious implications for the development of malware. These collision problems with MD5 have pushed people to adopt a strong SHA version for security reasons.

SHA

Secure Hash Algorithm (SHA) refers to a set of hash algorithms designed and published by the National Institute of Standards and Technology (NIST) and the National Security Agency (NSA). These algorithms are specified in Federal Information Processing Standards (FIPS) 180-2 and 180-3. Individually, the algorithms are named SHA-1, SHA-224, SHA-256, SHA-384, and SHA-512; the latter four variants are collectively referred to as SHA-2. Because of collision-based weaknesses found in SHA-1, and concern that SHA-2 shares a similar design, NIST conducted a competition for a new algorithm, the result of which is known as SHA-3.

SHA-1

SHA-1, developed in 1993, was designed as the algorithm to be used for secure hashing in the U.S. Digital Signature Standard (DSS). It is modeled on the MD4 algorithm and implements fixes in that algorithm discovered by the NSA. It creates a message digest 160 bits long that can be used by the Digital Signature Algorithm (DSA), which can then compute the signature of the message. This is computationally simpler, as the message digest is typically much smaller than the actual message—smaller message, less work. SHA-1 works, as do all hashing functions, by applying a compression function to the data input.

At one time, SHA-1 was one of the more secure hash functions, but it has been found vulnerable to a collision attack. Thus, most implementations of SHA-1 have been replaced with one of the other, more secure SHA versions. The added security and resistance to attack that SHA-1 offered over earlier hash functions such as MD4 and MD5 does require more processing power to compute the hash. In spite of these improvements, SHA-1 is still vulnerable to collisions and is no longer approved for use by government agencies.

SHA-2

SHA-2 is a collective name for SHA-224, SHA-256, SHA-384, and SHA-512. SHA-2 is similar to SHA-1 in that it also accepts input of less than 2^64 bits (less than 2^128 bits for SHA-384 and SHA-512) and reduces that input to a hash. Each SHA-2 algorithm produces a hash length equal to the number after SHA, so SHA-256 produces a digest of 256 bits. The SHA-2 series became more common after SHA-1 was shown to be potentially vulnerable to a collision attack.
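Python's standard `hashlib` module can confirm the digest sizes of the MD5, SHA-1, SHA-2, and SHA-3 functions discussed in this section. The `digest_bits` helper is a hypothetical convenience wrapper, not part of `hashlib`.

```python
import hashlib


def digest_bits(name: str, data: bytes = b"") -> int:
    """Output size in bits of a hashlib algorithm; it does not depend on the input."""
    return hashlib.new(name, data).digest_size * 8


if __name__ == "__main__":
    for name in ("md5", "sha1", "sha224", "sha256", "sha384", "sha512", "sha3_256"):
        print(f"{name:>8}: {digest_bits(name)} bits")
    print(hashlib.sha256(b"abc").hexdigest())
```

Whatever the input length, each function always emits the same fixed-size digest, which is what makes hashes convenient to store, compare, and sign.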

SHA-3

SHA-3 is the name for the SHA-2 replacement. In 2012, the Keccak hash function won the NIST competition and was chosen as the basis for the SHA-3 method. Because the algorithm is completely different from the previous SHA series, it has proved to be more resistant to attacks that are successful against them. Because the SHA-3 series is relatively new, it has not been widely adopted in many cipher suites yet.

EXAM TIP The SHA-2 and SHA-3 series are currently approved for use. SHA-1 has been discontinued.

HMAC

HMAC, or Hash-based Message Authentication Code, is a special subset of hashing technology. Message authentication codes are used to determine if a message has changed during transmission. Using a hash function for message integrity is common practice for many communications. When you add a secret key to the hash computation, the MAC becomes an HMAC, and you also have the ability to determine authenticity in addition to integrity. Popular hash algorithms are Message Digest (MD5), the Secure Hash Algorithm (SHA) series, and the RIPEMD algorithms.
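A minimal HMAC sketch using Python's standard `hmac` module, assuming a hypothetical shared key and message: the sender computes a tag, and the receiver recomputes it and compares the two in constant time.

```python
import hashlib
import hmac

key = b"shared-secret-key"                 # hypothetical key known only to sender and receiver
message = b"Transfer 100 to account 42"

# Sender computes the tag and sends it alongside the message
tag = hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, received_tag: str) -> bool:
    """Receiver recomputes the tag; a changed message or a wrong key gives a mismatch."""
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking, through timing, how many characters matched
    return hmac.compare_digest(expected, received_tag)


if __name__ == "__main__":
    print(verify(key, message, tag))                             # unchanged message
    print(verify(key, b"Transfer 900 to account 42", tag))       # tampered message
```

A plain hash of the message would not be enough here, because an attacker who alters the message can simply recompute an unkeyed hash; without the key, a valid HMAC tag cannot be forged.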

EXAM TIP The commonly used hash functions in HMAC are MD5, SHA-1, and SHA-256. Although MD5 has been deprecated because of collision attacks, when used in the HMAC function, the attack methodology is not present and the hash function still stands as useful.

RIPEMD

RACE Integrity Primitives Evaluation Message Digest (RIPEMD) is a hashing function developed by the RACE Integrity Primitives Evaluation (RIPE) consortium. It originally provided a 128-bit hash and was later shown to have problems with collisions. RIPEMD was strengthened to a 160-bit hash known as RIPEMD-160 by Hans Dobbertin, Antoon Bosselaers, and Bart Preneel.

RIPEMD-160

RIPEMD-160 is an algorithm based on MD4, but it uses two parallel channels with five rounds. The output consists of five 32-bit words to make a 160-bit hash. There are also larger output extensions of the RIPEMD-160 algorithm. These extensions, RIPEMD-256 and RIPEMD-320, offer outputs of 256 bits and 320 bits, respectively. While these offer larger output sizes, this does not make the hash function inherently stronger.

Key Stretching Algorithms

As described in Chapter 26, key stretching is a mechanism that takes what would otherwise be weak keys and “stretches” them to make the system more secure against brute force attacks. A typical methodology used for key stretching involves increasing the computational complexity by adding iterative rounds of computations. To extend a password into a longer key, you can run it (usually together with a salt) through many rounds of hashing, feeding each round's output into the next, so that every guess an attacker makes costs the same large amount of work. This may take hundreds or thousands of rounds, but for single-use computations, the time is not significant. Two common forms of key stretching employed in use today include BCRYPT and Password-Based Key Derivation Function 2.

BCRYPT

BCRYPT is a key-stretching mechanism that uses the Blowfish cipher and salting, and adds an adaptive function to increase the number of iterations. The result is the same as other key-stretching mechanisms (single use is computationally feasible), but when attempting to brute force the function, the billions of attempts make it computationally unfeasible.

PBKDF2

Password-Based Key Derivation Function 2 (PBKDF2) is a key derivation function designed to produce a key derived from a password. This function uses a password or passphrase and a salt and applies an HMAC to the input thousands of times. The repetition makes brute force attacks computationally unfeasible.
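Python's standard library exposes PBKDF2 as `hashlib.pbkdf2_hmac`. This sketch stores a random salt alongside the derived key; the iteration count and the helper names (`derive_key`, `new_record`, `check_password`) are illustrative assumptions, not recommendations from this chapter.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000   # illustrative work factor; tune for your own hardware and policy


def derive_key(password: str, salt: bytes, iterations: int = ITERATIONS, length: int = 32) -> bytes:
    """Stretch a password into `length` bytes with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=length)


def new_record(password: str):
    """Return (salt, derived_key) to store; a fresh random salt defeats precomputed tables."""
    salt = os.urandom(16)
    return salt, derive_key(password, salt)


def check_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    # Recompute with the stored salt and compare in constant time
    return hmac.compare_digest(derive_key(password, salt), stored_key)


if __name__ == "__main__":
    salt, stored = new_record("correct horse battery staple")
    print(check_password("correct horse battery staple", salt, stored))
    print(check_password("wrong guess", salt, stored))
```

The legitimate user pays the iteration cost once per login, while an attacker testing candidate passwords pays it for every single guess.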

Obfuscation

Obfuscation is the purposeful hiding of the meaning of a communication. By itself, obfuscation is weak, because once the method/algorithm used for hiding is discovered, the protection is gone. But it still has use in increasing the complexity of solving the hidden message problem.

XOR

XOR (exclusive OR) is a simple cipher operation and is performed by the addition of the text and the key, using modulus 2 arithmetic. A string of text can be encrypted by applying the bitwise XOR operator to every character using a given key. To decrypt the output, merely reapplying the XOR function with the key will remove the cipher. Both of these operations are exceedingly fast on chips, making this a true line-speed method. XOR is a common component inside many of the more complex cipher algorithms.

The weakness of the XOR method appears when the text is significantly longer than the key, forcing reuse of the key across the length of the ciphertext. If the key is truly random, as long as the text being encrypted, and never reused, then this forms a perfect cipher (a one-time pad) from a mathematical perspective.
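A repeating-key XOR cipher takes only a few lines, and the same code shows both the self-inverse property and the key-reuse weakness described above. The key and messages are hypothetical.

```python
from itertools import cycle


def xor_cipher(data: bytes, key: bytes) -> bytes:
    """XOR each byte with the key, cycling the key when it is shorter than the data.
    Applying the function a second time with the same key undoes it."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


if __name__ == "__main__":
    key = b"k3y"                                   # hypothetical, deliberately short key
    p1, p2 = b"attack at dawn", b"retreat at ten"
    c1, c2 = xor_cipher(p1, key), xor_cipher(p2, key)
    assert xor_cipher(c1, key) == p1               # decryption is the same operation
    # Key reuse: XORing two ciphertexts cancels the key and leaks p1 XOR p2
    assert xor_cipher(c1, c2) == xor_cipher(p1, p2)
    # Known plaintext hands the attacker the repeating key directly
    print(xor_cipher(c1, p1)[: len(key)])
```

Both attacks disappear only under one-time-pad conditions: a truly random key as long as the message, used once.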

ROT13

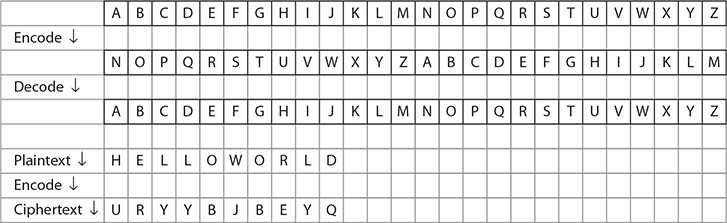

ROT13 is a special case of a Caesar substitution cipher where each character is replaced by a character 13 places later in the alphabet. Because the basic Latin alphabet has 26 letters, ROT13 has the property of undoing itself when applied twice. The following illustration demonstrates ROT13 encoding of “HelloWorld.” The top two rows show encoding, while the bottom two show decoding replacement.
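ROT13 can be written as a translation table over the Latin alphabet. This minimal sketch cross-checks the result against Python's built-in `rot13` text codec.

```python
import codecs
import string

LOWER, UPPER = string.ascii_lowercase, string.ascii_uppercase
# Map each Latin letter to the letter 13 places later, wrapping around; others unchanged
ROT13_TABLE = str.maketrans(
    LOWER + UPPER,
    LOWER[13:] + LOWER[:13] + UPPER[13:] + UPPER[:13],
)


def rot13(text: str) -> str:
    return text.translate(ROT13_TABLE)


if __name__ == "__main__":
    encoded = rot13("HelloWorld")
    print(encoded)                                          # UryybJbeyq
    assert rot13(encoded) == "HelloWorld"                   # applying it twice undoes it
    assert encoded == codecs.encode("HelloWorld", "rot13")  # matches the built-in codec
```

Because there is no key at all, anyone who recognizes the scheme can reverse it instantly.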

Substitution Ciphers

Substitution ciphers substitute characters on a character-by-character basis via a specific scheme, and the order of the characters in each block is maintained. By contrast, a transposition cipher is one where the order of the characters is changed per a given algorithm. A simple substitution cipher replaces each character with a corresponding substitute character throughout the length of the message. Although its key space of 26! possible alphabets gives an entropy of about 88 bits, because of structures in language, this cipher is relatively easily broken using frequency analysis of the substituted characters.

A more complex method is polyalphabetic substitution, of which the Vigenère cipher is an example, where the substitution alphabet changes with each character position. This increases the complexity and thwarts basic frequency analysis, because it obscures repeated letters and flattens letter-frequency patterns across a message.
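The contrast between the two approaches shows up in letter counts. This sketch, with a hypothetical randomly shuffled substitution alphabet and the classic `LEMON` Vigenère key, checks the 88-bit key-space figure, shows that simple substitution preserves the letter-frequency profile, and shows that Vigenère does not.

```python
import math
import random
import string
from collections import Counter

ALPHABET = string.ascii_uppercase

# A simple substitution key is any permutation of the alphabet: 26! keys, about 88.4 bits
KEYSPACE_BITS = math.log2(math.factorial(26))


def substitute(text: str, key_alphabet: str) -> str:
    """Monoalphabetic substitution: A -> key_alphabet[0], B -> key_alphabet[1], ..."""
    return text.translate(str.maketrans(ALPHABET, key_alphabet))


def vigenere(text: str, key: str) -> str:
    """Polyalphabetic (Vigenere) encryption; text and key are uppercase A-Z only."""
    out = []
    for i, ch in enumerate(text):
        shift = ord(key[i % len(key)]) - ord("A")   # each key letter selects a Caesar shift
        out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
    return "".join(out)


def frequency_profile(text: str):
    """Letter counts sorted from most to least common, ignoring which letter is which."""
    return sorted(Counter(text).values(), reverse=True)


if __name__ == "__main__":
    plaintext = "ATTACKATDAWN"
    key_alphabet = "".join(random.sample(ALPHABET, len(ALPHABET)))   # hypothetical key
    print(f"substitution key space: {KEYSPACE_BITS:.1f} bits")
    # Substitution only renames letters, so the frequency profile survives intact
    assert frequency_profile(substitute(plaintext, key_alphabet)) == frequency_profile(plaintext)
    # Vigenere sends the four A's to different letters, flattening the profile
    print(vigenere(plaintext, "LEMON"), frequency_profile(vigenere(plaintext, "LEMON")))
```

The large key space does not help the simple substitution cipher, because frequency analysis never has to search it: the surviving letter counts point straight at the mapping.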

Chapter Review

In this chapter, you became acquainted with cryptographic algorithms and their application. The chapter opened with an examination of the symmetric algorithms, AES, DES, 3DES, RC4, and Blowfish/Twofish. Next, the concept of block cipher modes was covered, including ECB, CBC, CTM, and GCM.

The chapter further explored encryption through asymmetric methods, RSA, DSA, Diffie-Hellman, ECC, and PGP/GPG. Hashing algorithms were then covered, including MD5, SHA, and RIPEMD. The chapter then presented key stretching algorithms and closed with an examination of obfuscation using XOR and ROT13.

Questions

To help you prepare further for the CompTIA Security+ exam, and to test your level of preparedness, answer the following questions and then check your answers against the correct answers at the end of the chapter.

1. Your organization wants to deploy a new encryption system that will protect the majority of data with a symmetric cipher of at least 256 bits in strength. What is the best choice of cipher for large amounts of data at rest?

A. RC4

B. 3DES

C. AES

D. Twofish

2. A colleague who is performing a rewrite of a custom application that was using 3DES encryption asks you how 3DES can be more secure than the DES it is based on. What is your response?

A. 3DES uses a key that’s three times longer.

B. 3DES loops through the DES algorithm three times, with different keys each time.

C. 3DES uses transposition versus the substitution used in DES.

D. 3DES is no more secure than DES.

3. What cipher mode is potentially vulnerable to a POODLE attack?

A. ECB

B. CBC

C. CTR

D. GCM

4. What cipher mode is used in the IEEE 802.1AE standard and recognized by NIST?

A. CTR

B. GCM

C. CBC

D. ECB

5. Your manager wants you to spearhead the effort to implement digital signatures in the organization and to report to him what is needed for proper security of those signatures. You likely have to study which algorithm?

A. RC4

B. AES

C. SHA-1

D. RSA

6. Hashing is most commonly used for which of the following?

A. Digital signatures

B. Secure storage of passwords for authentication

C. Key management

D. Block cipher algorithm padding

7. A friend at work asks you to e-mail him some information about a project you have been working on, but then requests you “to hide the e-mail from the monitoring systems by encrypting it using ROT13.” What is the weakness in this strategy?

A. ROT13 is a very simple substitution scheme and is well understood by anyone monitoring the system, providing no security.

B. ROT13 is not an algorithm.

C. The monitoring system will not allow anything but plaintext to go through.

D. ROT13 is more secure than is needed for an internal e-mail.

8. Why would you use PBKDF2 as part of your encryption architecture?

A. To use the speed of the crypto subsystems built into modern CPUs

B. To increase the number of rounds a symmetric cipher has to perform

C. To stretch passwords into secure-length keys appropriate for encryption

D. To add hash-based message integrity to a message authentication code

9. Why are hash collisions bad for malware prevention?

A. Malware could corrupt the hash algorithm.

B. Two different programs with the same hash could allow malware to be undetected.

C. The hashed passwords would be exposed.

D. The hashes are encrypted and cannot change.

10. What has made the PGP standard popular for so long?

A. Its flexible use of both symmetric and asymmetric algorithms

B. Simple trust model

C. The ability to run on any platform

D. The peer-reviewed algorithms

11. What is a key consideration when implementing an RC4 cipher system?

A. Key entropy

B. External integrity checks

C. Checks for weak keys

D. Secure key exchange

12. Why are ephemeral keys important to key exchange protocols?

A. They are longer than normal keys.

B. They add entropy to the algorithm.

C. They allow the key exchange to be completed faster.

D. They increase security by using a different key for each connection.

Answers

1. C. The most likely utilized cipher is AES. It can be run at 128-, 192-, and 256-bit strengths and is considered the gold standard of current symmetric ciphers, with no known attacks, and is computationally efficient.

2. B. 3DES can be more secure because it loops through the DES algorithm three times, with a different key each time: encrypt with key 1, decrypt with key 2, and then encrypt with key 3.

3. B. Cipher Block Chaining (CBC) mode is vulnerable to a POODLE (Padding Oracle On Downgraded Legacy Encryption) attack, where the system freely responds to a request about a message’s padding being correct. Manipulation of the padding is used in the attack.

4. B. Galois Counter Mode (GCM) is recognized by NIST and is used in the 802.1AE standard.

5. D. Digital signatures require a public key algorithm, so most likely you need to study RSA to provide the asymmetric cryptography.

6. B. Hashing is most commonly used to securely store passwords on systems so that users can authenticate to the system.

7. A. ROT13 is a simple substitution cipher that is very well known and will be simple for any person or system to decode.

8. C. PBKDF2 is a key stretching algorithm that stretches a password into a key of suitable length by adding a salt and then applying an HMAC to the input thousands of times.

9. B. The ability to create a program that has the same hash as a known-good program would allow malware to be undetected by detection software that uses a hash list of approved programs.

10. A. Pretty Good Privacy (PGP) is a popular standard because of its use of both symmetric and asymmetric algorithms when best suited to the type of encryption being done.

11. C. As RC4 is susceptible to weak keys, one key in 256 is considered weak and should not be utilized. Any implementation should have a check for weak keys as part of the protocol.

12. D. Ephemeral keys are important to key exchange protocols because they ensure that each connection has its own key for the symmetric encryption, so if an attacker compromises one key, he can decrypt only the traffic for that connection and not the traffic of other connections.