EC-GSM-IoT performance

Abstract

This chapter presents the performance of EC-GSM-IoT in terms of coverage, throughput, latency, power consumption, system capacity, and device complexity. It is shown that EC-GSM-IoT meets the commonly accepted 3GPP targets for these metrics, even in a system bandwidth as low as 600 kHz. Besides the results themselves, the chapter gives an overview of the methods and assumptions used to derive them. This gives a deeper understanding of both the scenarios in which EC-GSM-IoT is expected to operate and the applications it is intended to serve.

Keywords

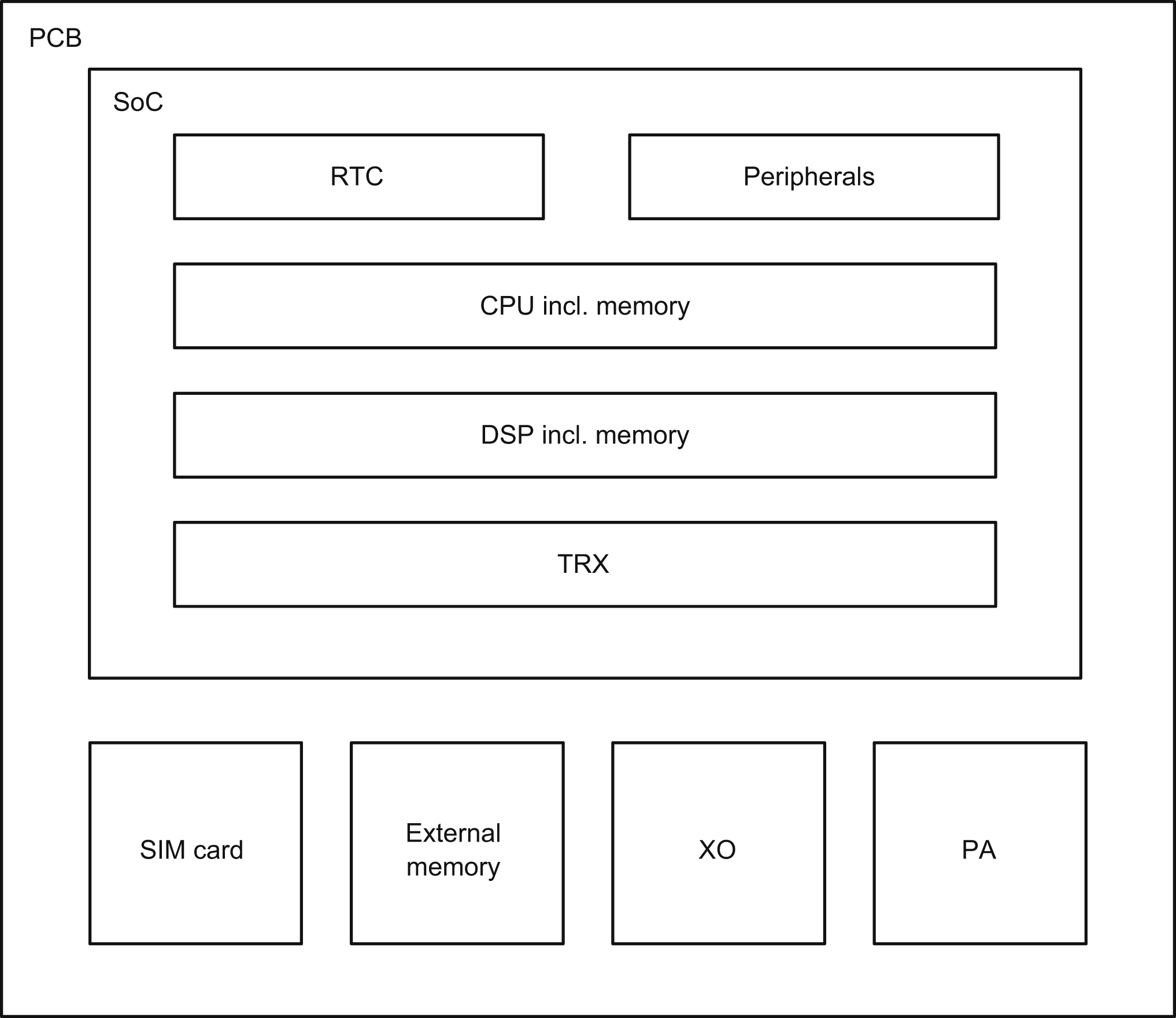

4.1. Performance objectives

4.2. Coverage

4.2.1. Evaluation assumptions

4.2.1.1. Requirements on logical channels

4.2.1.1.1. Synchronization channels

4.2.1.1.2. Control and broadcast channels

4.2.1.1.3. Traffic channels

4.2.1.2. Radio-related parameters

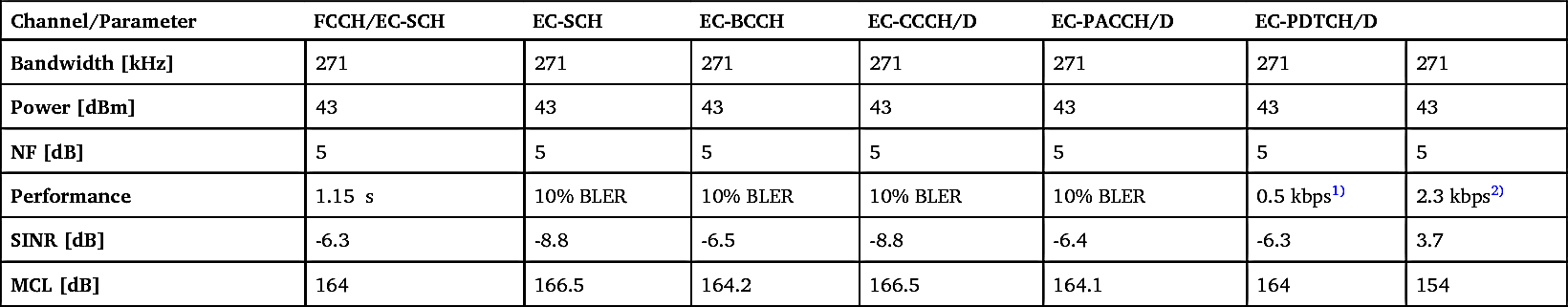

Table 4.1

4.2.1.3. Coverage performance

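$$\mathrm{MCL} = P_{\mathrm{TX}} - \left(\mathrm{SINR} + \mathrm{NF} + N_0 + 10\log_{10}(\mathrm{BW})\right) \qquad (4.1)$$

where $P_{\mathrm{TX}}$ is the transmit power [dBm], SINR is the required signal-to-interference-plus-noise ratio [dB], NF is the receiver noise figure [dB], $N_0 = -174$ dBm/Hz is the thermal noise power spectral density, and BW is the signal bandwidth [Hz].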

Table 4.2

| Channel/Parameter | FCCH/EC-SCH | EC-SCH | EC-BCCH | EC-CCCH/D | EC-PACCH/D | EC-PDTCH/D | EC-PDTCH/D |
|---|---|---|---|---|---|---|---|
| Bandwidth [kHz] | 271 | 271 | 271 | 271 | 271 | 271 | 271 |
| Power [dBm] | 43 | 43 | 43 | 43 | 43 | 43 | 43 |
| NF [dB] | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| Performance | 1.15 s | 10% BLER | 10% BLER | 10% BLER | 10% BLER | 0.5 kbps 1) | 2.3 kbps 2) |
| SINR [dB] | -6.3 | -8.8 | -6.5 | -8.8 | -6.4 | -6.3 | 3.7 |
| MCL [dB] | 164 | 166.5 | 164.2 | 166.5 | 164.1 | 164 | 154 |

Table 4.3

| Channel/Parameter | EC-RACH | EC-RACH | EC-PACCH/U | EC-PACCH/U | EC-PDTCH/U | EC-PDTCH/U |
|---|---|---|---|---|---|---|
| Bandwidth [kHz] | 271 | 271 | 271 | 271 | 271 | 271 |
| Power [dBm] | 33 | 23 | 33 | 23 | 33 | 23 |
| NF [dB] | 3 | 3 | 3 | 3 | 3 | 3 |
| Performance | 20% BLER | 20% BLER | 10% BLER | 10% BLER | 0.5 kbps | 0.6 kbps |
| SINR [dB] | -15 | -15 | -14.3 | -14.3 | -14.3 | -14.3 |
| MCL [dB] | 164.7 | 154.7 | 164.0 | 154.0 | 164.0 | 154.0 |
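The MCL rows in Tables 4.2 and 4.3 follow from a standard link budget: transmit power minus receiver sensitivity, where sensitivity is the thermal noise over the signal bandwidth plus the noise figure plus the required SINR. The sketch below recomputes a few table entries under that assumption; the thermal noise density of -174 dBm/Hz is the usual room-temperature value and is not stated explicitly in this section.

```python
import math

# Thermal noise power spectral density at room temperature, dBm/Hz (assumed standard value).
THERMAL_NOISE_DBM_PER_HZ = -174.0

def mcl_db(tx_power_dbm: float, sinr_db: float, nf_db: float, bandwidth_hz: float) -> float:
    """Maximum coupling loss = transmit power - receiver sensitivity.

    Sensitivity is the noise floor over the bandwidth, plus the receiver
    noise figure, plus the SINR required to meet the performance target.
    """
    noise_floor_dbm = THERMAL_NOISE_DBM_PER_HZ + 10 * math.log10(bandwidth_hz)
    sensitivity_dbm = noise_floor_dbm + nf_db + sinr_db
    return tx_power_dbm - sensitivity_dbm

# (transmit power dBm, required SINR dB, noise figure dB) taken from Tables 4.2 and 4.3.
examples = {
    "EC-SCH (downlink)": (43, -8.8, 5),
    "EC-PDTCH/D at 2.3 kbps": (43, 3.7, 5),
    "EC-RACH, 33 dBm device": (33, -15.0, 3),
    "EC-PACCH/U, 23 dBm device": (23, -14.3, 3),
}

for name, (p_tx, sinr, nf) in examples.items():
    # All channels occupy 271 kHz in the tables.
    print(f"{name}: MCL = {mcl_db(p_tx, sinr, nf, 271e3):.1f} dB")
```

Each printed value agrees with the corresponding table entry to within 0.1 dB, which suggests the tables use this budget with a 271 kHz noise bandwidth.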

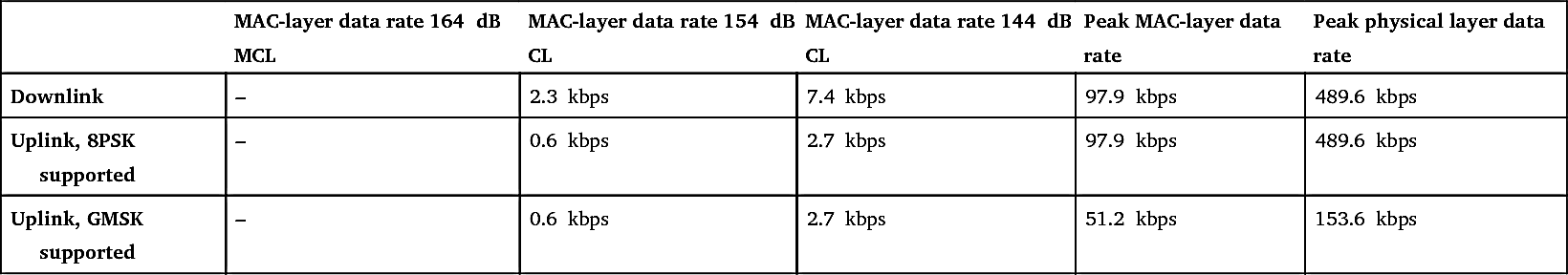

4.3. Data rate

Table 4.4

| | MAC-layer data rate, 164 dB MCL | MAC-layer data rate, 154 dB CL | MAC-layer data rate, 144 dB CL | Peak MAC-layer data rate | Peak physical layer data rate |
|---|---|---|---|---|---|
| Downlink | 0.5 kbps | 3.7 kbps | 45.6 kbps | 97.9 kbps | 489.6 kbps |
| Uplink, 8PSK supported | 0.5 kbps | 2.7 kbps | 39.8 kbps | 97.9 kbps | 489.6 kbps |
| Uplink, GMSK supported | 0.5 kbps | 2.7 kbps | 39.8 kbps | 51.2 kbps | 153.6 kbps |

Table 4.5

| | MAC-layer data rate, 164 dB MCL | MAC-layer data rate, 154 dB CL | MAC-layer data rate, 144 dB CL | Peak MAC-layer data rate | Peak physical layer data rate |
|---|---|---|---|---|---|
| Downlink | – | 2.3 kbps | 7.4 kbps | 97.9 kbps | 489.6 kbps |
| Uplink, 8PSK supported | – | 0.6 kbps | 2.7 kbps | 97.9 kbps | 489.6 kbps |
| Uplink, GMSK supported | – | 0.6 kbps | 2.7 kbps | 51.2 kbps | 153.6 kbps |
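To put the MAC-layer rates in perspective, the sketch below estimates how long the 96-byte exception report of Table 4.6 occupies the uplink at the Table 4.4 rates. It is a simplification: it assumes a constant MAC-layer rate and ignores synchronization, random access, and control signaling, so it is a lower bound on transfer time, not a latency estimate.

```python
# Total size of one exception report, application data plus headers (Table 4.6).
REPORT_BYTES = 96

# Uplink MAC-layer data rates from Table 4.4, in bits per second.
UL_MAC_RATE_BPS = {"164 dB MCL": 500, "154 dB CL": 2_700, "144 dB CL": 39_800}

def transfer_time_s(size_bytes: int, rate_bps: float) -> float:
    """Time to deliver size_bytes at a constant rate; no access or signaling delay."""
    return size_bytes * 8 / rate_bps

for coupling_loss, rate in UL_MAC_RATE_BPS.items():
    print(f"{coupling_loss}: {transfer_time_s(REPORT_BYTES, rate):.3f} s")
```

At 164 dB MCL the payload alone takes about 1.5 s, which shows why extended coverage dominates both latency and device energy consumption.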

4.4. Latency

4.4.1. Evaluation assumptions

Table 4.6

| Type | Exception report [bytes] |
|---|---|
| Application data | 20 |
| CoAP | 4 |
| DTLS | 13 |
| UDP | 8 |
| IP | 40 |
| SNDCP | 4 |
| LLC | 7 |
| Total | 96 |
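The protocol stack in Table 4.6 adds considerable overhead to a small report. The sketch below simply totals the per-layer sizes listed in the table and computes the share taken by headers.

```python
# Per-layer sizes in bytes for one exception report, from Table 4.6.
EXCEPTION_REPORT_BYTES = {
    "Application data": 20,
    "CoAP": 4,
    "DTLS": 13,
    "UDP": 8,
    "IP": 40,
    "SNDCP": 4,
    "LLC": 7,
}

total = sum(EXCEPTION_REPORT_BYTES.values())
# Everything except the application payload is protocol overhead.
overhead = total - EXCEPTION_REPORT_BYTES["Application data"]
print(f"Total: {total} bytes, of which {overhead} bytes ({overhead / total:.0%}) are headers")
```

Roughly four fifths of the transmitted bytes are headers, with the 40-byte IP header as the largest single contributor.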

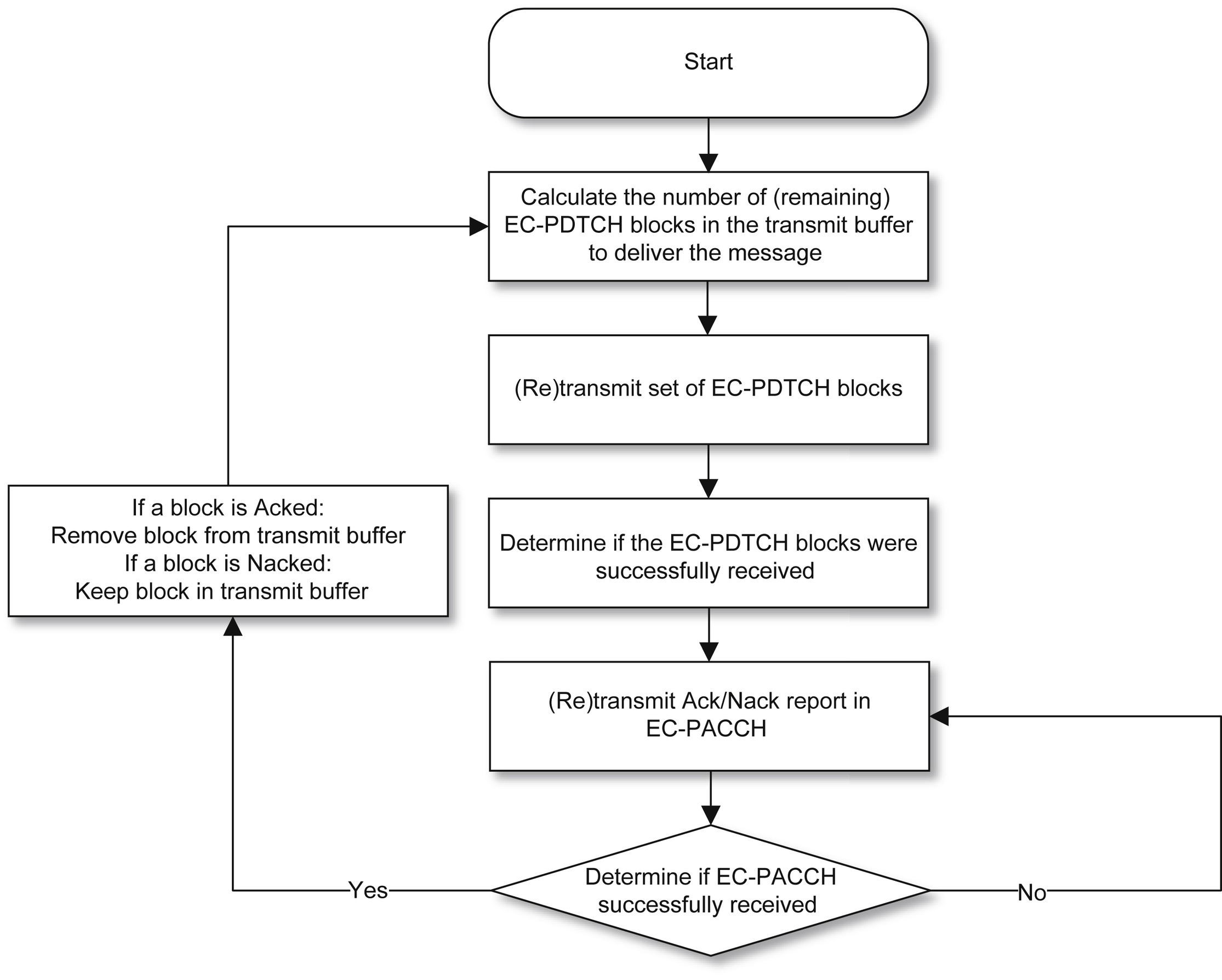

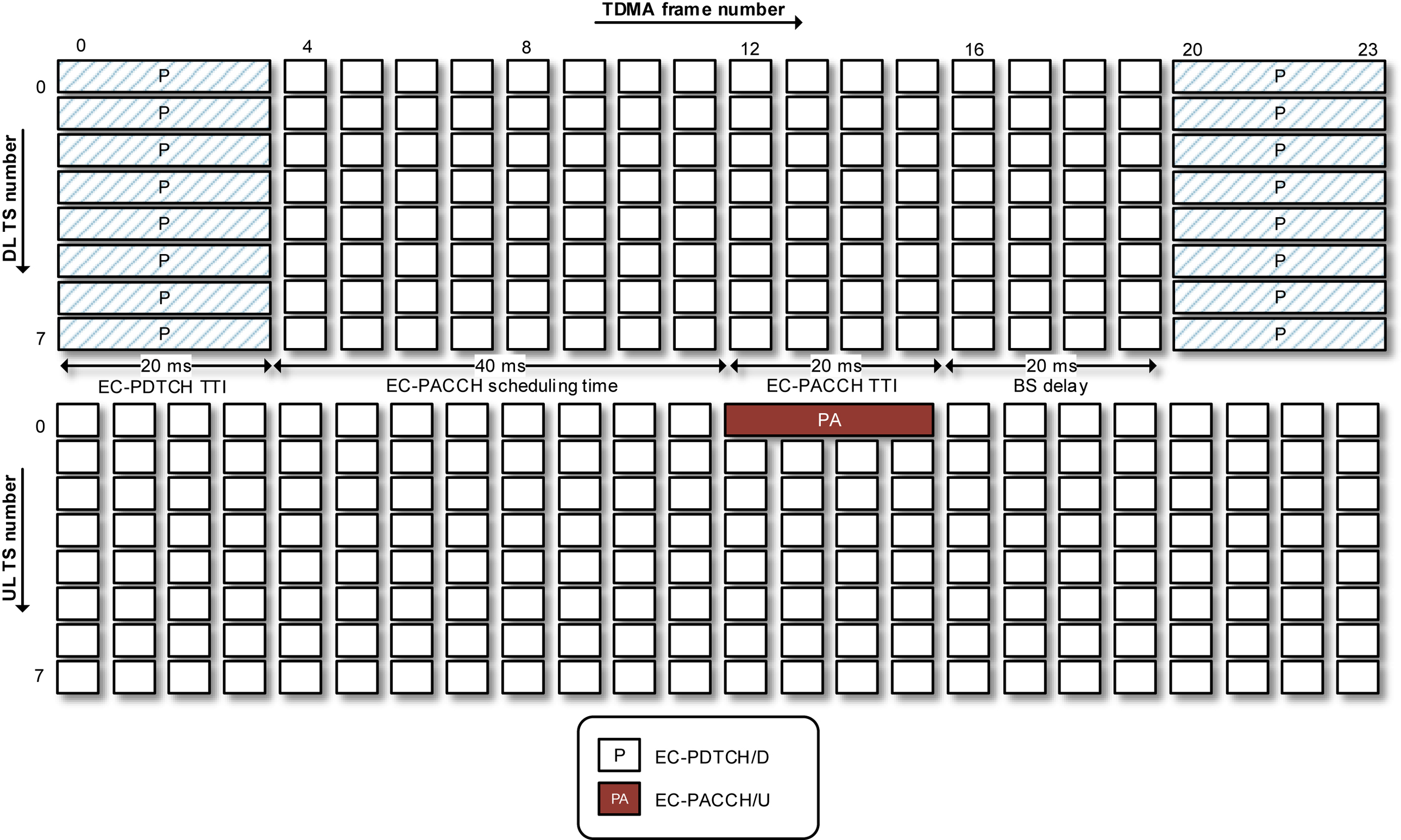

4.4.2. Latency performance

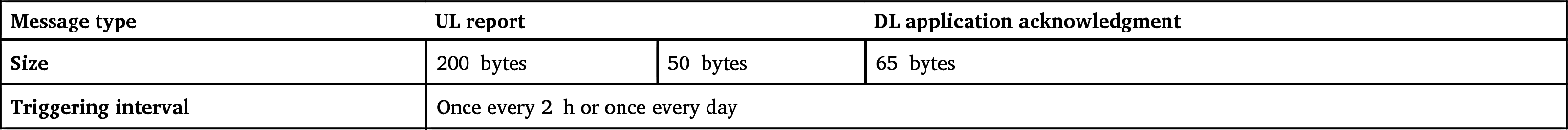

4.5. Battery life

4.5.1. Evaluation assumptions

Table 4.7

| Coupling loss | 23 dBm device | 33 dBm device |
|---|---|---|
| 144 dB | 1.2 s | 0.6 s |
| 154 dB | 3.5 s | 1.8 s |
| 164 dB | – | 5.1 s |

Table 4.8

| Message type | UL report | UL report | DL application acknowledgment |
|---|---|---|---|
| Size | 200 bytes | 50 bytes | 65 bytes |
| Triggering interval | Once every 2 h or once every day | Once every 2 h or once every day | Once every 2 h or once every day |

Table 4.9

| TX, 33 dBm | TX, 23 dBm | RX | Idle mode, light sleep | Idle mode, deep sleep |
|---|---|---|---|---|
| 4.051 W | 0.503 W | 99 mW | 3 mW | 15 µW |
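Battery life can be estimated by weighting each power level in Table 4.9 by the time spent in that state during one reporting cycle. The sketch below is a minimal duty-cycle energy model under stated assumptions. The 5 Wh battery capacity and all per-state durations are hypothetical inputs chosen for illustration, not values from this evaluation, so the sketch is not expected to reproduce Tables 4.10 and 4.11.

```python
# Power consumption per device state, from Table 4.9 (watts).
POWER_W = {
    "tx_33dbm": 4.051,
    "tx_23dbm": 0.503,
    "rx": 0.099,
    "light_sleep": 3e-3,
    "deep_sleep": 15e-6,
}
HOURS_PER_YEAR = 365.25 * 24

def battery_life_years(battery_wh: float, interval_h: float, tx_s: float, rx_s: float,
                       light_sleep_s: float, tx_state: str = "tx_33dbm") -> float:
    """Battery life for a device that repeats the same cycle every interval_h hours.

    Per cycle the device spends tx_s seconds transmitting, rx_s seconds receiving,
    light_sleep_s seconds in light sleep, and the rest of the interval in deep sleep.
    """
    interval_s = interval_h * 3600.0
    deep_sleep_s = interval_s - tx_s - rx_s - light_sleep_s
    if deep_sleep_s < 0:
        raise ValueError("active time exceeds the reporting interval")
    energy_j = (POWER_W[tx_state] * tx_s
                + POWER_W["rx"] * rx_s
                + POWER_W["light_sleep"] * light_sleep_s
                + POWER_W["deep_sleep"] * deep_sleep_s)
    avg_power_w = energy_j / interval_s
    # Wh divided by W gives hours; convert to years.
    return battery_wh / avg_power_w / HOURS_PER_YEAR

# Assumed 5 Wh battery. With no activity, deep sleep alone bounds the lifetime.
print(f"{battery_life_years(5.0, 24, 0.0, 0.0, 0.0):.1f} years")
# Hypothetical per-report durations, not taken from the evaluation.
print(f"{battery_life_years(5.0, 2, tx_s=0.1, rx_s=0.5, light_sleep_s=5.0):.1f} years")
```

Under the 5 Wh assumption, the deep-sleep floor alone caps battery life at about 38 years. This is consistent with the largest values (about 36 years) in Tables 4.10 and 4.11, where reports are infrequent and the device spends almost all of its time in deep sleep.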

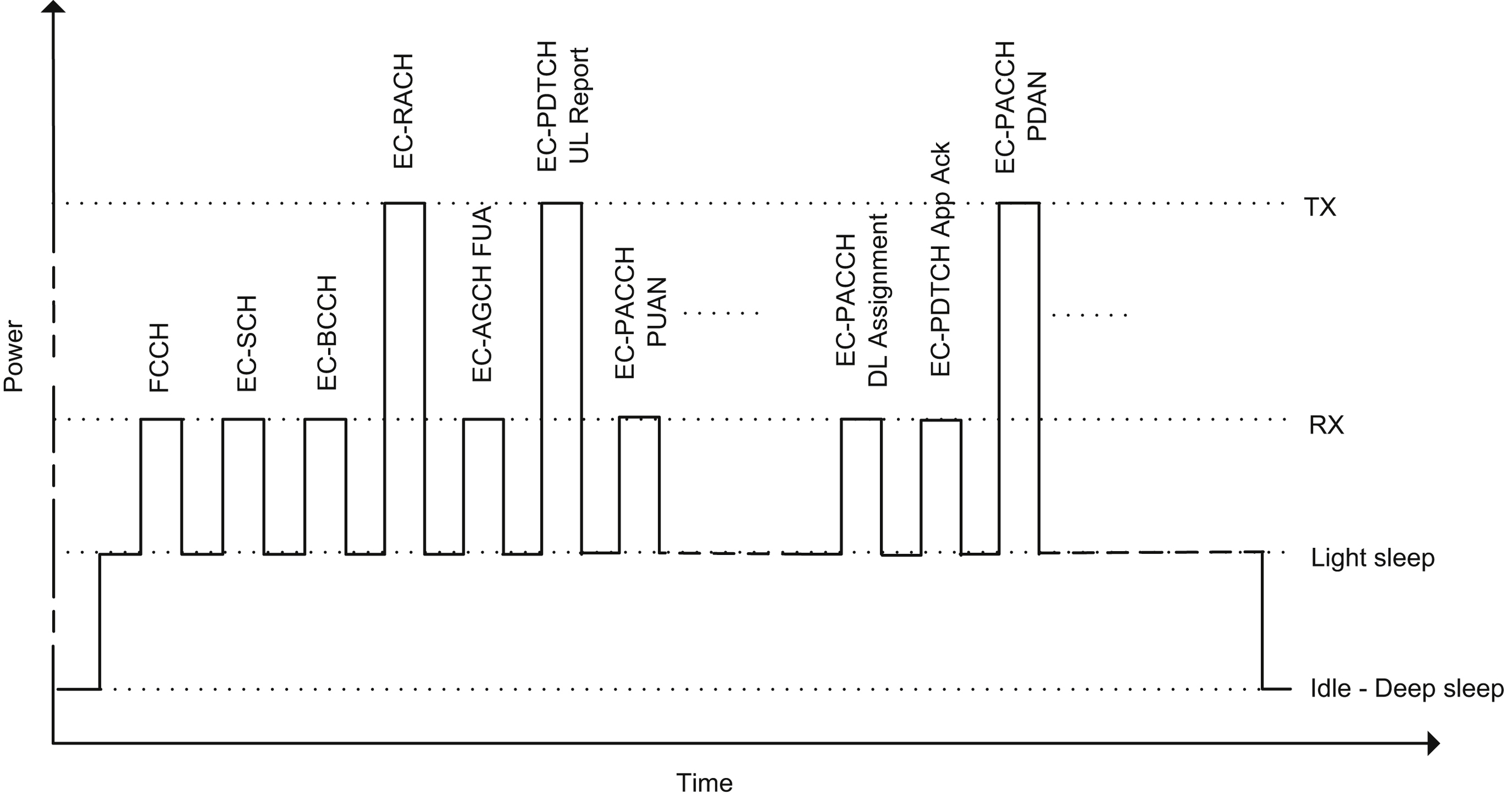

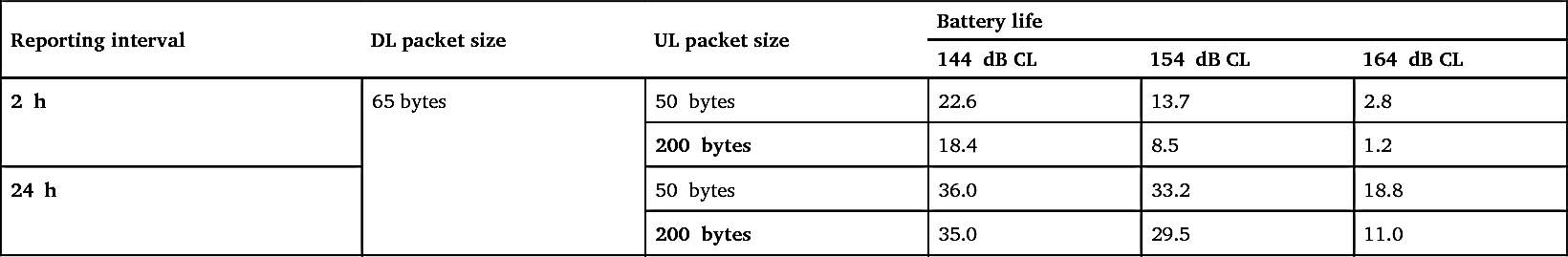

4.5.2. Battery life performance

Table 4.10

| Reporting interval | DL packet size | UL packet size | Battery life [years], 144 dB CL | Battery life [years], 154 dB CL | Battery life [years], 164 dB CL |
|---|---|---|---|---|---|
| 2 h | 65 bytes | 50 bytes | 22.6 | 13.7 | 2.8 |
| 2 h | 65 bytes | 200 bytes | 18.4 | 8.5 | 1.2 |
| 24 h | 65 bytes | 50 bytes | 36.0 | 33.2 | 18.8 |
| 24 h | 65 bytes | 200 bytes | 35.0 | 29.5 | 11.0 |

Table 4.11

| Reporting interval | DL packet size | UL packet size | Battery life [years], 144 dB CL | Battery life [years], 154 dB CL |
|---|---|---|---|---|
| 2 h | 65 bytes | 50 bytes | 26.1 | 12.5 |
| 2 h | 65 bytes | 200 bytes | 22.7 | 7.4 |
| 24 h | 65 bytes | 50 bytes | 36.6 | 32.5 |
| 24 h | 65 bytes | 200 bytes | 36.0 | 28.3 |

4.6. Capacity

4.6.1. Evaluation assumptions

Table 4.12

| Household density [homes/km2] | Devices per home | Devices per km2 | Inter-site distance [m] | Devices per hexagonal cell |
|---|---|---|---|---|
| 1517 | 40 | 60,680 | 1732 | 52,547 |
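The device count per cell in Table 4.12 follows from the household density and the cell area. The sketch below reproduces it, assuming a regular hexagonal site grid in which each site covers $(\sqrt{3}/2)\,D^2$ for inter-site distance $D$ and is split into three equal sectors (cells), matching the 3-sector layout in Table 4.13.

```python
import math

HOUSEHOLDS_PER_KM2 = 1517
DEVICES_PER_HOME = 40
ISD_KM = 1.732           # inter-site distance, Table 4.12
SECTORS_PER_SITE = 3     # Table 4.13

devices_per_km2 = HOUSEHOLDS_PER_KM2 * DEVICES_PER_HOME
# Area of one hexagonal site in a regular grid with inter-site distance D.
site_area_km2 = math.sqrt(3) / 2 * ISD_KM ** 2
cell_area_km2 = site_area_km2 / SECTORS_PER_SITE
devices_per_cell = devices_per_km2 * cell_area_km2

print(f"{devices_per_km2} devices/km2, {devices_per_cell:.0f} devices per cell")
```

Each cell covers about 0.87 km2, which yields the 52,547 devices per cell listed in the table.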

Table 4.13

| Parameter | Model |

|---|---|

| Cell structure | Hexagonal grid with 3 sectors per size |

| Cell inter site distance | 1732 m |

| Frequency band | 900 MHz |

| System bandwidth | 2.4 MHz |

| Frequency reuse | 12 |

| Frequency channels per cell | 1 |

| Base station transmit power | 43 dBm |

| Base station antenna gain | 18 dBi |

| Channel mapping |

TS0: FCCH, SCH, BCCH, CCCH

TS1: EC-SCH, EC-BCCH, EC-CCCH

TS2-7: EC-PACCH, EC-PDTCH

|

| Device transmit power | 33 dBm or 23 dBm |

| Device antenna gain | -4 dBi |

| Device mobility | 0 km/h |

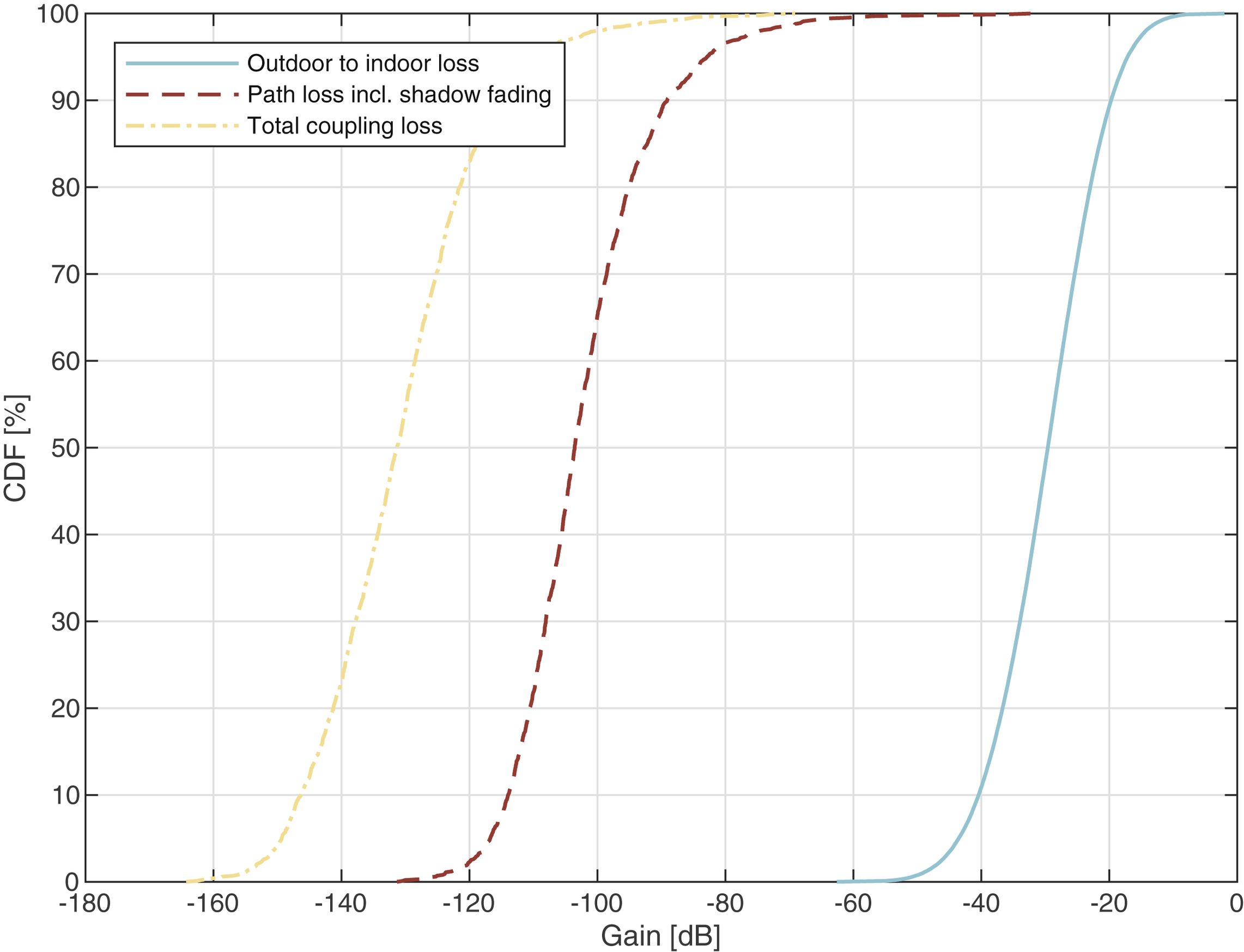

| Path loss model | 120.9 + 37.6∙LOG10(d), with d being the base station to device distance in km |

| Shadow fading standard deviation | 8 dB |

| Shadow fading correlation distance | 110 m |
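A few entries in Table 4.13 can be checked or exercised directly. The sketch below derives the carrier count from the system bandwidth, assuming the 200 kHz GSM carrier spacing, and evaluates the path loss model. The coupling loss function is a hypothetical helper. It uses a common definition (path loss plus shadow fading minus both antenna gains), draws independent shadow fading, and ignores the 110 m correlation distance, so it is not the simulator used for the results.

```python
import math
import random

SYSTEM_BW_KHZ = 2400   # Table 4.13
CARRIER_BW_KHZ = 200   # GSM carrier spacing (assumed)
REUSE = 12             # Table 4.13

def path_loss_db(d_km: float) -> float:
    """Table 4.13 path loss model; d_km is the base station to device distance in km."""
    return 120.9 + 37.6 * math.log10(d_km)

def coupling_loss_db(d_km: float, rng: random.Random, bs_gain_dbi: float = 18.0,
                     dev_gain_dbi: float = -4.0, sf_std_db: float = 8.0) -> float:
    """Hypothetical coupling loss: path loss plus one independent log-normal
    shadow fading draw, minus both antenna gains."""
    shadow_db = rng.gauss(0.0, sf_std_db)
    return path_loss_db(d_km) + shadow_db - bs_gain_dbi - dev_gain_dbi

n_carriers = SYSTEM_BW_KHZ // CARRIER_BW_KHZ
carriers_per_cell = n_carriers // REUSE
print(n_carriers, carriers_per_cell)          # 12 carriers, 1 per cell
print(f"{path_loss_db(1.0):.1f} dB at 1 km")   # 120.9 dB
rng = random.Random(1)
print(f"{coupling_loss_db(1.0, rng):.1f} dB sample coupling loss at 1 km")
```

The carrier arithmetic shows why a reuse of 12 in 2.4 MHz leaves exactly one frequency channel per cell, as listed in the table.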

4.6.1.1. Autonomous reporting and network command

Table 4.14

| Device report and network command periodicity [hours] | Device distribution [%] |
|---|---|
| 24 | 40 |
| 2 | 40 |
| 1 | 15 |
| 0.5 | 5 |
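Combining the periodicity mix in Table 4.14 with the device density from Table 4.12 gives the average offered load per cell. The sketch below counts one device report per device per period. It does not model the network commands or their interaction with the reports, and it assumes reports are spread uniformly in time.

```python
# Table 4.14: (report and command periodicity in hours, share of devices).
PERIODICITY_MIX = [(24, 0.40), (2, 0.40), (1, 0.15), (0.5, 0.05)]
DEVICES_PER_CELL = 52_547  # Table 4.12

# Each device produces one report per period, so its rate is share / period.
reports_per_device_per_hour = sum(share / period_h for period_h, share in PERIODICITY_MIX)
reports_per_cell_per_second = reports_per_device_per_hour * DEVICES_PER_CELL / 3600

print(f"{reports_per_device_per_hour:.4f} reports per device per hour")  # 0.4667
print(f"{reports_per_cell_per_second:.2f} reports per cell per second")  # 6.81
```

Although 40% of devices report only daily, the minority reporting every 30 or 60 minutes contributes more than half of the load, and the cell must absorb close to seven reports per second on average.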

Table 4.15

| Periodicity [days] | Device distribution [%] |
|---|---|
| 180 | 100 |

4.6.1.2. Software download

4.6.2. Capacity performance

4.7. Device complexity

4.7.1. Peripherals and real time clock

4.7.2. CPU

- Circuit-switched voice is not supported.
- The only mandated modulation and coding schemes are MCS-1 to MCS-4.
- The RLC window size is only 16 (compared with 64 for GPRS and 1024 for EGPRS).
- The number of supported RLC/MAC messages and procedures is significantly reduced compared with GPRS.
- Concurrent uplink and downlink data transfers are not supported.

4.7.3. DSP and transceiver

4.7.4. Overall impact on device complexity

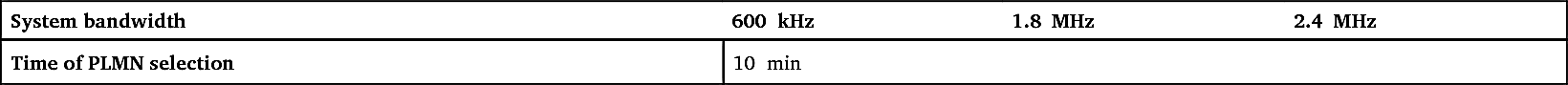

4.8. Operation in a narrow frequency deployment

4.8.1. Idle mode procedures

4.8.1.1. PLMN and cell selection

Table 4.16

| System bandwidth | 600 kHz | 1.8 MHz | 2.4 MHz |
|---|---|---|---|
| Time of PLMN selection | 10 min | | |

Table 4.17

| System bandwidth | 600 kHz | 1.8 MHz | 2.4 MHz |
|---|---|---|---|
| Probability of selecting strongest cell as serving cell | 89.3 % | 89.7 % | 90.1 % |

Table 4.18

| System bandwidth | 600 kHz | 1.8 MHz | 2.4 MHz |
|---|---|---|---|
| Probability of reconfirming serving cell | 98.7 % | 99.9 % | 99.9 % |
| Synchronization time, 99th percentile | 0.32 s | 0.12 s | 0.09 s |

4.8.1.2. Cell reselection

4.8.2. Data and control channel performance