What is the value of code? Agile developers value “working software over comprehensive documentation.”[21] Does that mean a requirements document has no value? Does it mean unfinished code has no value?

Like a rock at the top of a hill, code has potential—potential energy for the rock and potential value for the code. It takes a push to realize that potential. The rock has to be pushed onto a slope in order to gain kinetic energy; the software has to be pushed into production in order to gain value.

It’s easy to tell how much you need to push a rock. Big rock? Big push. Little rock? Little push. Software isn’t so simple—it often looks ready to ship long before it’s actually done. It’s my experience that teams underestimate how hard it will be to push their software into production.

To make things more difficult, software’s potential value changes. If nothing ever pushes that rock, it will sit on top of its hill forever; its potential energy won’t change. Software, alas, sits on a hill that undulates. You can usually tell when your hill is becoming a valley, but if you need weeks or months to get your software rolling, it might be sitting in a ditch by the time you’re ready to push.

In order to meet commitments and take advantage of opportunities, you must be able to push your software into production within minutes. This chapter contains seven practices that give you leverage to turn your big release push into a 10-minute tap:

“Done done” ensures that completed work is ready to release.

No bugs allows you to release your software without a separate testing phase.

Version control allows team members to work together without stepping on each other’s toes.

A ten-minute build builds a tested release package in under 10 minutes.

Continuous integration prevents a long, risky integration phase.

Collective code ownership allows the team to solve problems no matter where they may lie.

Post-hoc documentation decreases the cost of documentation and increases its accuracy.

Note

Whole Team

We’re done when we’re production-ready.

“Hey, Liz!” Rebecca sticks her head into Liz’s office. “Did you finish that new feature yet?”

Liz nods. “Hold on a sec,” she says, without pausing in her typing. A flurry of keystrokes crescendos and then ends with a flourish. “Done!” She swivels around to look at Rebecca. “It only took me half a day, too.”

“Wow, that’s impressive,” says Rebecca. “We figured it would take at least a day, probably two. Can I look at it now?”

“Well, not quite,” says Liz. “I haven’t integrated the new code yet.”

“OK,” Rebecca says. “But once you do that, I can look at it, right? I’m eager to show it to our new clients. They picked us precisely because of this feature. I’m going to install the new build on their test bed so they can play with it.”

Liz frowns. “Well, I wouldn’t show it to anybody. I haven’t tested it yet. And you can’t install it anywhere—I haven’t updated the installer or the database schema generator.”

“I don’t understand,” Rebecca grumbles. “I thought you said you were done!”

“I am,” insists Liz. “I finished coding just as you walked in. Here, I’ll show you.”

“No, no, I don’t need to see the code,” Rebecca says. “I need to be able to show this to our customers. I need it to be finished. Really finished.”

“Well, why didn’t you say so?” says Liz. “This feature is done—it’s all coded up. It’s just not done done. Give me a few more days.”

Important

You should be able to deploy the software at the end of any iteration.

Wouldn’t it be nice if, once you finished a story, you never had to come back to it? That’s the idea behind “done done.” A completed story isn’t a lump of unintegrated, untested code. It’s ready to deploy.

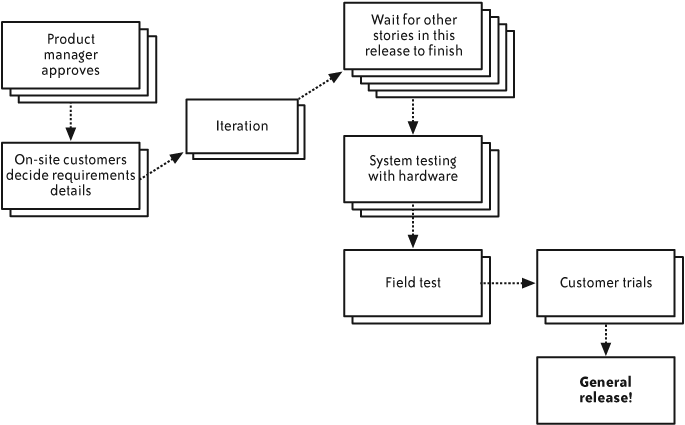

Partially finished stories result in hidden costs to your project. When it’s time to release, you have to complete an unpredictable amount of work. This destabilizes your release planning efforts and prevents you from meeting your commitments.

To avoid this problem, make sure all of your planned stories are “done done” at the end of each iteration. You should be able to deploy the software at the end of any iteration, although normally you’ll wait until more features have been developed.

What does it take for software to be “done done”? That depends on your organization. I often explain that a story is only complete when the customers can use it as they intended. Create a checklist that shows the story completion criteria. I write mine on the iteration planning board:

Tested (all unit, integration, and customer tests finished)

Coded (all code written)

Designed (code refactored to the team’s satisfaction)

Integrated (the story works from end to end—typically, UI to database—and fits into the rest of the software)

Builds (the build script includes any new modules)

Installs (the build script includes the story in the automated installer)

Migrates (the build script updates database schema if necessary; the installer migrates data when appropriate)

Reviewed (customers have reviewed the story and confirmed that it meets their expectations)

Fixed (all known bugs have been fixed or scheduled as their own stories)

Accepted (customers agree that the story is finished)
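As a rough illustration, the checklist above could be tracked per story as in the following sketch. The `Story` class, its fields, and the example story name are hypothetical, invented here for illustration; the criteria names come from the list above, and your team's list may differ.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Criteria taken from the checklist above; adapt the list to your organization.
DONE_DONE_CRITERIA = (
    "Tested", "Coded", "Designed", "Integrated", "Builds",
    "Installs", "Migrates", "Reviewed", "Fixed", "Accepted",
)


@dataclass
class Story:
    """Hypothetical story record (illustrative only, not part of XP itself)."""
    name: str
    estimate: int
    checked: Set[str] = field(default_factory=set)

    def check(self, criterion: str) -> None:
        """Mark one completion criterion as satisfied."""
        if criterion not in DONE_DONE_CRITERIA:
            raise ValueError(f"unknown criterion: {criterion}")
        self.checked.add(criterion)

    def remaining(self) -> List[str]:
        """Return the unmet criteria, in checklist order."""
        return [c for c in DONE_DONE_CRITERIA if c not in self.checked]

    def is_done_done(self) -> bool:
        """A story is 'done done' only when every criterion is met."""
        return not self.remaining()


if __name__ == "__main__":
    story = Story("Export report", estimate=2)  # hypothetical story
    for criterion in ("Tested", "Coded", "Designed"):
        story.check(criterion)
    print(story.is_done_done())  # False
    print(story.remaining())
    # ['Integrated', 'Builds', 'Installs', 'Migrates', 'Reviewed', 'Fixed', 'Accepted']
```

A story that is merely coded, like Liz's feature in the opening dialogue, would still report most of the list as remaining.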

Important

Some teams add “Documented” to this list, meaning that the story has documentation and help text. This is most appropriate when you have a technical writer as part of your team.

Other teams include “Performance” and “Scalability” in their “done done” list, but these can lead to premature optimization. I prefer to schedule performance, scalability, and similar issues with dedicated stories (see “Performance Optimization” in Chapter 9).

Important

Make a little progress on every aspect of your work every day.

XP works best when you make a little progress on every aspect of your work every day, rather than reserving the last few days of your iteration for getting stories “done done.” This is an easier way to work, once you get used to it, and it reduces the risk of finding unfinished work at the end of the iteration.

Use test-driven development to combine testing, coding, and designing. When working on an engineering task, make sure it integrates with the existing code. Use continuous integration and keep the 10-minute build up to date. Create an engineering task (see “Incremental Requirements” in Chapter 9 for more discussion of customer reviews) for updating the installer, and have one pair work on it in parallel with the other tasks for the story.

Just as importantly, include your on-site customers in your work. As you work on a UI task, show an on-site customer what the screen will look like, even if it doesn’t work yet (see “Customer review” in Chapter 9). Customers often want to tweak a UI when they see it for the first time. This can lead to a surprising amount of last-minute work if you delay any demos to the end of the iteration.

Similarly, as you integrate various pieces, run the software to make sure the pieces all work together. While this shouldn’t take the place of testing, it’s a good check to help prevent you from missing anything. Enlist the help of the testers on occasion, and ask them to show you exploratory testing techniques. (Again, this review doesn’t replace real exploratory testing.)

Throughout this process, you may find mistakes, errors, or outright bugs. When you do, fix them right away—then improve your work habits to prevent that kind of error from occurring again.

When you believe the story is “done done,” show it to your customers for final acceptance review. Because you reviewed your progress with customers throughout the iteration, this should only take a few minutes.

This may seem like an impossibly large amount of work to do in just one week. It’s easier to do if you work on it throughout the iteration rather than saving it up for the last day or two. The real secret, though, is to make your stories small enough that you can completely finish them all in a single week.

Many teams new to XP create stories that are too large to get “done done.” They finish all the coding, but they don’t have enough time to completely finish the story—perhaps the UI is a little off, or a bug snuck through the cracks.

Remember, you are in control of your schedule. You decide how many stories to sign up for and how big they are. Make any story smaller by splitting it into multiple parts (see “Stories” in Chapter 8) and only working on one of the pieces this iteration.

Creating large stories is a natural mistake, but some teams compound the problem by thinking, “Well, we really did finish the story, except for that one little bug.” They give themselves credit for the story, which inflates their velocity and perpetuates the problem.

Important

If a story isn’t “done done,” don’t count it toward your velocity.

If you have trouble getting your stories “done done,” don’t count those stories toward your velocity (see “Velocity” in Chapter 8). Even if the story only has a few minor UI bugs, count it as a zero when calculating your velocity. This will lower your velocity, which will help you choose a more manageable amount of work in your next iteration. (“Estimating” in Chapter 8 has more details about using velocity to balance your workload.)

You may find this lowers your velocity so much that you can only schedule one or two stories in an iteration. This means that your stories were too large to begin with. Split the stories you have, and work on making future stories smaller.
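To make the arithmetic concrete, here is a minimal sketch of the velocity rule described above: a story that isn't “done done” contributes zero, no matter how close it is. The `StoryResult` type, the function name, and the story names and estimates are all hypothetical, chosen only for illustration.

```python
from typing import Iterable, NamedTuple


class StoryResult(NamedTuple):
    """Hypothetical end-of-iteration record for one story."""
    name: str
    estimate: int
    done_done: bool


def iteration_velocity(stories: Iterable[StoryResult]) -> int:
    """Sum the estimates of 'done done' stories; all others count as zero."""
    return sum(s.estimate for s in stories if s.done_done)


if __name__ == "__main__":
    iteration = [
        StoryResult("Search by date", 3, True),
        StoryResult("Export to CSV", 2, True),
        StoryResult("Bulk import", 5, False),  # coded, but minor UI bugs remain
        StoryResult("Password reset", 1, True),
    ]
    print(iteration_velocity(iteration))  # 6, not 11
```

In this made-up iteration, giving partial credit for the unfinished story would report a velocity of 11 and invite the team to sign up for nearly twice as much work as it actually completed.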

How does testers’ work fit into “done done”?

In addition to helping customers and programmers, testers are responsible for nonfunctional testing and exploratory testing. Typically, they do these only after stories are “done done.” They may perform some nonfunctional tests, however, in the context of a specific performance story.

Regardless, the testers are part of the team, and everyone on the team is responsible for helping the team meet its commitment to deliver “done done” stories at the end of the iteration. Practically speaking, this usually means that testers help customers with customer testing, and they may help programmers and customers review the work in progress.

What if we release a story we think is “done done,” but then we find a bug or stakeholders tell us they want changes?

If you can absorb the change with your iteration slack, go ahead and make the change. Turn larger changes into new stories.

This sort of feedback is normal; expect it. The product manager should decide whether the changes are appropriate and, if they are, modify the release plan. If you are constantly surprised by stakeholder changes, consider whether your on-site customers truly reflect your stakeholder community.

When your stories are “done done,” you avoid unexpected batches of work and spread wrap-up and polish work throughout the iteration. Customers and testers have a steady workload through the entire iteration. The final customer acceptance demonstration takes only a few minutes. At the end of each iteration, your software is ready to demonstrate to stakeholders with the scheduled stories working to their satisfaction.

This practice may seem advanced. It’s not, but it does require self-discipline. To be “done done” every week, you must also work in iterations and use small, customer-centric stories.

In addition, you need a whole team—one that includes customers and testers (and perhaps a technical writer) in addition to programmers. The whole team must sit together. If they don’t, the programmers won’t be able to get the feedback they need in order to finish the stories in time.

Finally, you need incremental design and architecture and test-driven development in order to test, code, and design each story in such a short timeframe.

This practice is the cornerstone of XP planning. If you aren’t “done done” at every iteration, your velocity will be unreliable. You won’t be able to ship at any time. This will disrupt your release planning and prevent you from meeting your commitments, which will in turn damage stakeholder trust. It will probably lead to increased stress and pressure on the team, hurt team morale, and damage the team’s capacity for energized work.

The alternative to being “done done” is to fill the end of your schedule with make-up work. You will end up with an indeterminate amount of work to fix bugs, polish the UI, create an installer, and so forth. Although many teams operate this way, it will damage your credibility and your ability to deliver. I don’t recommend it.

[21] The Agile Manifesto, http://www.agilemanifesto.org/.

[22] The value stream map was inspired by [Poppendieck & Poppendieck].