Perceptual Organization

Flip It Video: Gestalt Psychology

Flip It Video: Gestalt Psychology

It’s one thing to understand how we see colors and shapes. But how do we organize and interpret those sights (or sounds, or tastes, or smells) so that they become meaningful perceptions—a rose in bloom, a familiar face, a sunset?

Early in the twentieth century, a group of German psychologists noticed that people who are given a cluster of sensations tend to organize them into a gestalt, a German word meaning a “form” or a “whole.” As we look straight ahead, we cannot separate the perceived scene into our left and right fields of view (each as seen with one eye closed). Our conscious perception is, at every moment, a seamless scene—an integrated whole.

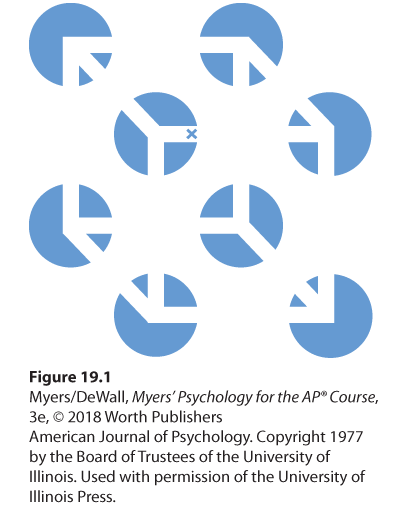

Consider Figure 19.1: The individual elements of this figure, called a Necker cube, are really nothing but eight blue circles, each containing three converging white lines. When we view these elements all together, however, we see a cube that sometimes reverses direction. This phenomenon nicely illustrates a favorite saying of Gestalt psychologists: In perception, the whole may exceed the sum of its parts.

Figure 19.1 A Necker cube

What do you see: circles with white lines, or a cube? If you stare at the cube, you may notice that it reverses location, moving the tiny X in the center from the front edge to the back. At times, the cube may seem to float forward, with circles behind it. At other times, the circles may become holes through which the cube appears, as though it were floating behind them. There is far more to perception than meets the eye. (From Bradley et al., 1976.)

Over the years, the Gestalt psychologists demonstrated many principles we use to organize our sensations into perceptions (Wagemans et al., 2012a,b). Underlying all of them is a fundamental truth: Our brain does more than register information about the world. Perception is not just opening a shutter and letting a picture print itself on the brain. We filter incoming information and construct perceptions. Mind matters.

Form Perception

Imagine designing a video-computer system that, like your eye-brain system, could recognize faces at a glance. What abilities would it need?

Figure and Ground

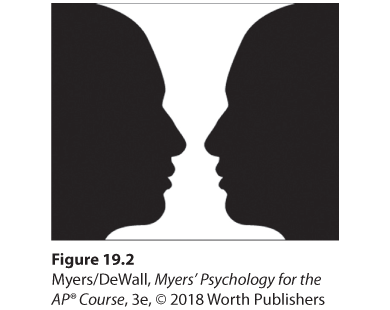

To start with, the system would need to perceive figure-ground—to separate faces from their backgrounds. In our eye-brain system, this is our first perceptual task—perceiving any object (the figure) as distinct from its surroundings (the ground). As you read, the words are the figure; the white space is the ground. This perception applies to our hearing, too. As you hear voices at a party, the one you attend to becomes the figure; all others are part of the ground. Sometimes the same stimulus can trigger more than one perception. In Figure 19.2, the figure-ground relationship continually reverses. First we see the vase, then the faces, but we always organize the stimulus into a figure seen against a ground.

Figure 19.2 Reversible figure and ground

Grouping

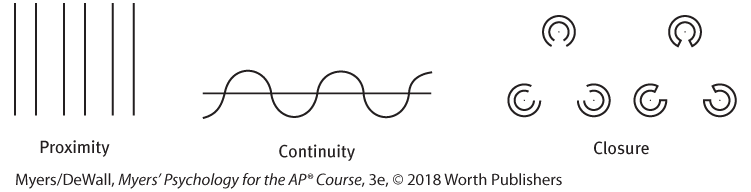

Having discriminated figure from ground, we (and our video-computer system) must also organize the figure into a meaningful form. Some basic features of a scene—such as color, movement, and light-dark contrast—we process instantly and automatically (Treisman, 1987). Our mind brings order and form to other stimuli by following certain rules for grouping, also identified by the Gestalt psychologists. These rules, which we apply even as infants and even in our touch perceptions, illustrate how the perceived whole differs from the sum of its parts, rather as water differs from its hydrogen and oxygen parts (Gallace & Spence, 2011; Quinn et al., 2002; Rock & Palmer, 1990). Three examples:

Proximity: We group nearby figures together. We see not six separate lines, but three sets of two lines:

Continuity: We perceive smooth, continuous patterns rather than discontinuous ones. This pattern could be a series of alternating semicircles, but we perceive it as two continuous lines—one wavy, one straight:

Closure: We fill in gaps to create a complete, whole object. Thus we assume that the circles on the left are complete but partially blocked by the (illusory) triangle. Add nothing more than little line segments to close off the circles and your brain stops constructing a triangle.

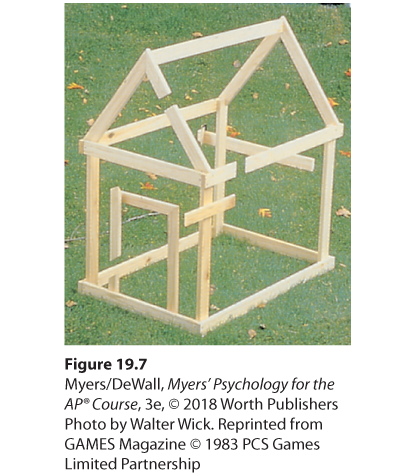

Such principles usually help us construct reality. Sometimes, however, they lead us astray, as when we look at the doghouse in Figure 19.3.

Figure 19.3 Grouping principles

What’s the secret to this impossible doghouse? You probably perceive this doghouse as a gestalt—a whole (though impossible) structure. Actually, your brain imposes this sense of wholeness on the picture. As Figure 19.7 shows, Gestalt grouping principles such as closure and continuity are at work here.

Depth Perception

Our eye-brain system performs many remarkable feats, among which is depth perception. From the two-dimensional images falling on our retinas, we somehow organize three-dimensional perceptions that let us estimate the distance of an oncoming car or the height of a faraway house. How do we acquire this ability? Are we born with it? Did we learn it?

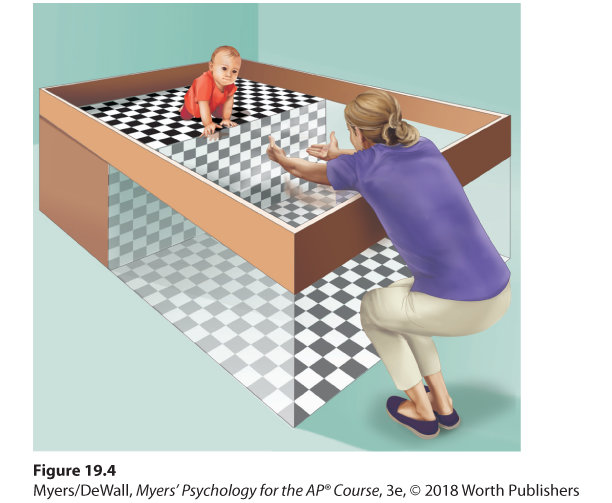

As Eleanor Gibson picnicked on the rim of the Grand Canyon, her scientific curiosity kicked in. She wondered: Would a toddler peering over the rim perceive the dangerous drop-off and draw back? To answer that question and others, Gibson and Richard Walk (1960) designed a series of experiments in their Cornell University laboratory using a visual cliff—a model of a cliff with a “drop-off” area that was actually covered by sturdy glass. They placed 6- to 14-month-old infants on the edge of the “cliff” and had the infants’ mothers coax them to crawl out onto the glass (Figure 19.4). Most infants refused to do so, indicating that they could perceive depth.

Figure 19.4 Visual cliff

Eleanor Gibson and Richard Walk devised this miniature cliff with a glass-covered drop-off to determine whether crawling infants and newborn animals can perceive depth. Even when coaxed, infants are reluctant to venture onto the glass over the cliff.

Had they learned to perceive depth? Learning is surely part of the answer because crawling, no matter when it begins, seems to increase an infant’s fear of heights (Adolph et al., 2014; Campos et al., 1992). But depth perception is also partly innate. Mobile newborn animals—even those with no visual experience (including young kittens, a day-old goat, and newly hatched chicks)—also refuse to venture across the visual cliff. Thus, biology prepares us to be wary of heights, and experience amplifies that fear.

If we were to build the ability to perceive depth into our video-computer system, what rules might enable it to convert two-dimensional images into a single three-dimensional perception? A good place to start would be the depth cues our brain receives from information supplied by one or both of our eyes.

Binocular Cues

People who see with two eyes perceive depth thanks partly to binocular cues. Here’s an example: With both eyes open, hold two pens or pencils in front of you and touch their tips together. Now do so with one eye closed. A more difficult task, yes?

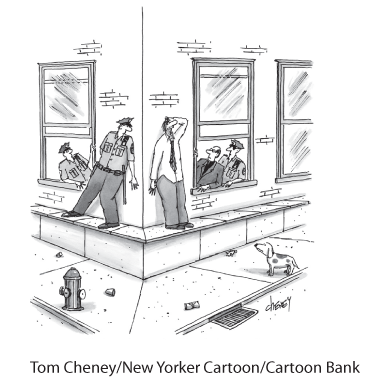

“I can’t go on living with such lousy depth perception!”

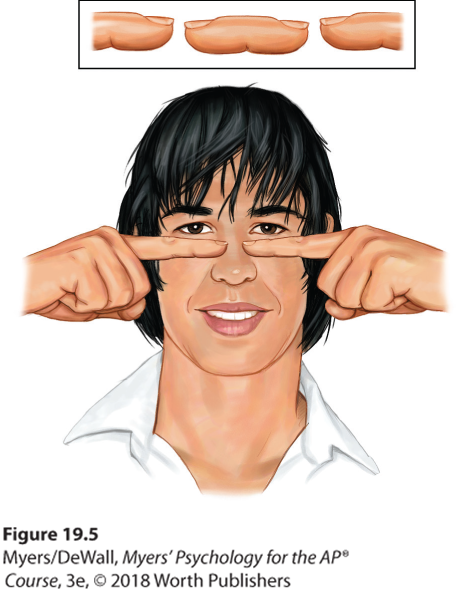

We use binocular cues to judge the distance of nearby objects. One such cue is convergence, the inward angle of the eyes focusing on a near object. Another is retinal disparity. Because your eyes are about 2½ inches apart, your retinas receive slightly different images of the world. By comparing these two images, your brain can judge how close an object is to you. The greater the disparity (difference) between the two retinal images, the closer the object. Try it. Hold your two index fingers, with the tips about half an inch apart, directly in front of your nose, and your retinas will receive quite different views. If you close one eye and then the other, you can see the difference. (Bring your fingers close and you can create a finger sausage, as in Figure 19.5.) At a greater distance—say, when you hold your fingers at arm’s length—the disparity is smaller.

Figure 19.5 The floating finger sausage

Hold your two index fingers about 5 inches in front of your eyes, with their tips half an inch apart. Now look beyond them and note the weird result. Move your fingers out farther and the retinal disparity—and the finger sausage—will shrink.

We could easily include retinal disparity in our video-computer system. Moviemakers sometimes film a scene with two cameras placed a few inches apart. Viewers then watch the film through glasses that allow the left eye to see only the image from the left camera, and the right eye to see only the image from the right camera. The resulting effect, as 3-D movie fans know, mimics or exaggerates normal retinal disparity, giving the perception of depth.

Monocular Cues

Flip It Video: Monocular Cues

Flip It Video: Monocular Cues

How do we judge whether a person is 10 or 100 meters away? Retinal disparity won’t help us here, because there won’t be much difference between the images cast on our right and left retinas. At such distances, we depend on monocular cues (depth cues available to each eye separately). See Figure 19.6 for some examples.

Figure 19.6 Monocular depth cues

Motion Perception

Imagine that, like Mrs. M. described earlier, you could perceive the world as having color, form, and depth but that you could not see motion. Not only would you be unable to bike or drive, you would have trouble writing, eating, and walking.

Normally your brain computes motion based partly on its assumption that shrinking objects are retreating (not getting smaller) and enlarging objects are approaching. But you are imperfect at motion perception. In young children, this ability to correctly perceive approaching (and enlarging) vehicles is not yet fully developed, which puts them at risk for pedestrian accidents (Wann et al., 2011). But it’s not just children who have occasional difficulties with motion perception. An adolescent or adult brain is also sometimes tricked into believing what it is not seeing. When large and small objects move at the same speed, the large objects appear to move more slowly. Thus, trains seem to move slower than cars, and jumbo jets seem to land more slowly than little jets.

“ Sometimes I wonder: Why is that Frisbee getting bigger? And then it hits me.”

Anonymous

Our brain also perceives a rapid series of slightly varying images as continuous movement (a phenomenon called stroboscopic movement). As film animators know well, a superfast slide show of 24 still pictures a second will create an illusion of movement. We construct that motion in our heads, just as we construct movement in blinking marquees and holiday lights. We perceive two adjacent stationary lights blinking on and off in quick succession as one single light moving back and forth. Lighted signs exploit this phi phenomenon with a succession of lights that creates the impression of, say, a moving arrow.

Perceptual Constancy

So far, we have noted that our video-computer system must perceive objects as we do—as having a distinct form, location, and perhaps motion. Its next task is to recognize objects without being deceived by changes in their color, brightness, shape, or size—a top-down process called perceptual constancy. Regardless of the viewing angle, distance, and illumination, we can identify people and things in less time than it takes to draw a breath. This feat, which challenges even advanced computers, would be a monumental challenge for a video-computer system.

Color and Brightness Constancies

Our experience of color depends on an object’s context. This would be clear if you viewed an isolated tomato through a paper tube over the course of a day. As the light—and thus the tomato’s reflected wavelengths—changed, the tomato’s color would also seem to change. But if you discarded the paper tube and viewed the tomato as one item in a salad bowl, its perceived color would remain essentially constant. This perception of consistent color we call color constancy.

Figure 19.7 The solution

Another view of the impossible doghouse in Figure 19.3 reveals the secrets of this illusion. From the photo angle in Figure 19.3, the grouping principle of closure leads us to perceive the boards as continuous.

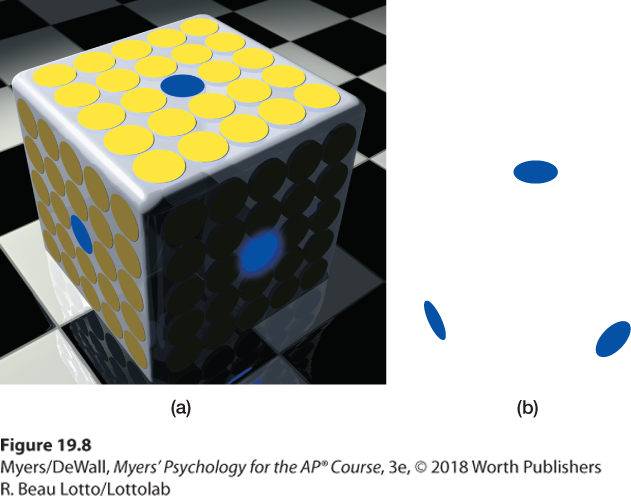

Though we take color constancy for granted, this ability is truly remarkable. A blue poker chip under indoor lighting reflects wavelengths that match those reflected by a sunlit gold chip (Jameson, 1985). Yet bring a goldfinch indoors and it won’t look like a bluebird. The color is not in the chip or the bird’s feathers. We see color thanks to our brain’s computations of the light reflected by an object relative to the objects surrounding it. Figure 19.8 dramatically illustrates the ability of a blue object to appear very different in three different contexts. Yet we have no trouble seeing these disks as blue. Nor does knowing the truth—that these disks are identically colored—diminish our perception that they are quite different. Because we construct our perceptions, we can simultaneously accept alternative objective and subjective realities.

Figure 19.8 Color depends on context

(a) Believe it or not, these three blue disks are identical in color. (b) Remove the surrounding context and see what results.

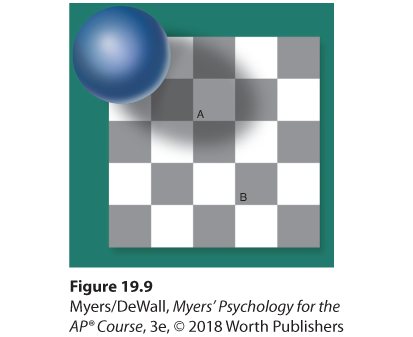

Brightness constancy (also called lightness constancy) similarly depends on context. We perceive an object as having a constant brightness even as its illumination varies. This perception of constancy depends on relative luminance—the amount of light an object reflects relative to its surroundings (Figure 19.9). White paper reflects 90 percent of the light falling on it; black paper, only 10 percent. Although a black paper viewed in sunlight may reflect 100 times more light than does a white paper viewed indoors, it will still look black (McBurney & Collings, 1984). But if you view sunlit black paper through a narrow tube so nothing else is visible, it may look gray, because in bright sunshine it reflects a fair amount of light. View it without the tube and it is again black, because it reflects much less light than the objects around it.

Figure 19.9 Relative luminance

Because of its surrounding context, we perceive Square A as lighter than Square B. But believe it or not, they are identical. To channel comedian Richard Pryor, “Who you gonna believe: me, or your lying eyes?” If you believe your lying eyes—actually, your lying brain—you can photocopy (or screen-capture and print) the illustration, then cut out the squares and compare them. (Information from Edward Adelson.)

This principle—that we perceive objects not in isolation but in their environmental context—matters to artists, interior decorators, and clothing designers. Our perception of the color and brightness of a wall or of a streak of paint on a canvas is determined not just by the paint in the can but by the surrounding colors. The take-home lesson: Context governs our perceptions.

Shape and Size Constancies

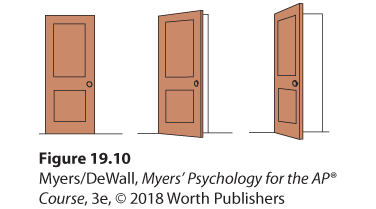

Sometimes an object whose actual shape cannot change seems to change shape with the angle of our view. More often, thanks to shape constancy, we perceive the form of familiar objects, such as the door in Figure 19.10, as constant even while our retinas receive changing images of them. Our brain manages this feat because visual cortex neurons rapidly learn to associate different views of an object (Li & DiCarlo, 2008).

Figure 19.10 Shape constancy

A door casts an increasingly trapezoidal image on our retinas as it opens. Yet we still perceive it as rectangular.

Thanks to size constancy, we perceive an object as having an unchanging size, even while our distance from it varies. We assume a car is large enough to carry people, even when we see its tiny image from two blocks away. This assumption also illustrates the close connection between perceived distance and perceived size. Perceiving an object’s distance gives us cues to its size. Likewise, knowing its general size—that the object is a car—provides us with cues to its distance.

Even in size-distance judgments, however, we consider an object’s context. This interplay between perceived size and perceived distance helps explain several well-known illusions, including the Moon illusion: The Moon looks up to 50 percent larger when near the horizon than when high in the sky. Can you imagine why?

For at least 22 centuries, scholars have wondered (Hershenson, 1989). One reason is that monocular cues to an object’s distance make the horizon Moon seem farther away. If it’s farther away, our brain assumes, it must be larger than the Moon high in the night sky (Kaufman & Kaufman, 2000). But again, if you use a paper tube to take away the distance cue, the horizon Moon will immediately seem smaller.

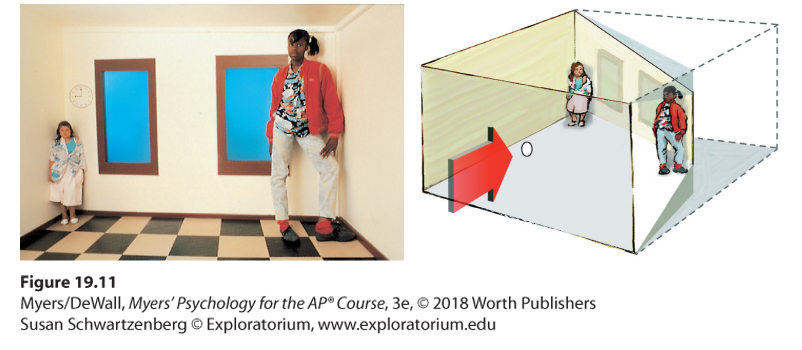

Size-distance relationships also explain why, in Figure 19.11, the two same-age girls seem so different in size. As the diagram reveals, the girls are actually about the same size, but the room is distorted. Viewed with one eye through a peephole, the Ames room’s trapezoidal walls produce the same images you would see in a normal rectangular room viewed with both eyes. Presented with the camera’s one-eyed view, your brain makes the reasonable assumption that the room is normal and each girl is therefore the same distance from you. Given the different sizes of the girls’ images on your retinas, your brain ends up calculating that the girls must be very different in size.

Figure 19.11 The illusion of the shrinking and growing girls

This distorted room, designed by Adelbert Ames, appears to have a normal rectangular shape when viewed through a peephole with one eye. The girl in the right corner appears disproportionately large because we judge her size based on the false assumption that she is the same distance away as the girl in the left corner.

Perceptual illusions reinforce a fundamental lesson: Perception is not merely a projection of the world onto our brain. Rather, our sensations are disassembled into information bits that our brain, using both bottom-up and top-down processing, then reassembles into its own functional model of the external world. During this reassembly process, our assumptions—such as the usual relationship between distance and size—can lead us astray. Our brain constructs our perceptions.

* * *

Form perception, depth perception, motion perception, and perceptual constancies illuminate how we organize our visual experiences. Perceptual organization applies to our other senses, too. Listening to an unfamiliar language, we have trouble hearing where one word stops and the next one begins. Listening to our own language, we automatically hear distinct words. This, too, reflects perceptual organization. But it is more, for we even organize a string of letters—THEDOGATEMEAT—into words that make an intelligible phrase, more likely “The dog ate meat” than “The do gate me at” (McBurney & Collings, 1984). This process involves not only the organization we’ve been discussing, but also interpretation—discerning meaning in what we perceive.