In this second chapter on information security behaviour, our focus moves from understanding behaviour to changing behaviour. It isn’t enough to comprehend why our colleagues and co-workers act in certain ways; we also need to be able to improve their performance. In other words, having gained some insight into what is happening on a psychological level, we now must try to use that knowledge to direct their behaviour in a more security conscious direction.

As such, this chapter is structured with regard to the most recognisable cybersecurity areas, where we most often require end users to change their behaviour. In each of the areas described, you will be presented with ideas that you can apply to your own workplace, which include published scientific research and findings from our workshops.

However, per the last chapter, it isn’t really very useful to talk about ‘cybersecurity behaviour’ in general. That might be the case in academic theory, but not in everyday practice. If your superiors task you with ‘improving cybersecurity’ in the workforce, then you will be hard-pressed to achieve success. You need to focus on specific cybersecurity behaviours if you are going to change anything for the better.

That means that this chapter will include sections on access control, password management, anti-phishing, workstation locking, removable media and mobile security, updating, and anti-virus. Admittedly, this doesn’t cover every aspect of end user security activity, and arguably some aspects are more important than others, but it is hoped that this broad overview will give you a good foundation from which to start changing particular behaviours in your workplace.

INFORMATION SECURITY POLICY

First, once again it is worth discussing a little policy before we begin. At the outset, based on the Next steps section of the previous chapter, we’re assuming that you have had a good look at your organisation’s policy documents, and made certain recommendations about what is and isn’t included. Whatever behaviour we are interested in changing, we need end users to be more aware of their organisation’s information security policy than they currently are, not to mention following it, and contributing to it. Crucially, we also need end users to report incidents to their information security team.

In the Secure Summits survey of 2017, we asked participants about behaviour and policy. As mentioned in Chapter 4, these are intimately linked. Essentially, it is unfair to criticise end users for doing something incorrectly, if that behaviour has not been explicitly prohibited. It therefore is essential that end users are aware of their organisation’s information security policies, and what behaviours are disallowed by them.

From our survey results, it seems that the InfoSec community has some work to do here. When presented with the following statement, our participants responded as follows:

Every end user in my organisation knows which behaviours are violations of information security policy:

| Response | Share of respondents |
| --- | --- |
| Strongly disagree | 7% |
| Disagree | 25% |
| Neither agree nor disagree | 25% |
| Agree | 40% |
| Strongly agree | 3% |

That’s a stark figure: only 3 per cent of information security professionals could ‘strongly agree’ that their end users knew which behaviours were violations of policy. At the other end of the scale, a total of 32 per cent disagreed or strongly disagreed with the statement. This supports the idea that the very first step in changing end user security behaviour is simply educating users about their organisation’s information security policy, and what behaviours it prohibits.

At the same time, when we asked participants a related question, we received more heartening results:

My organisation has a clear, simple and effective process for reporting cybersecurity incidents:

| Response | Share of respondents |
| --- | --- |
| Strongly disagree | 3% |
| Disagree | 11% |
| Neither agree nor disagree | 27% |
| Agree | 41% |
| Strongly agree | 18% |

So, with nearly 60 per cent of respondents agreeing or strongly agreeing that their organisation has a good process for reporting incidents, we get to see where information security professionals’ priorities lie: less on policy, more on process. This would suggest that we need to do more work on educating end users about which behaviours are forbidden (and why) rather than on reporting those behaviours.

However, when we asked security professionals about the sort of incidents that such processes are designed to defend against, the results were not exactly encouraging:

End user errors or violations of information security policy are certain to be detected:

| Response | Share of respondents |
| --- | --- |
| Strongly disagree | 10% |
| Disagree | 31% |
| Neither agree nor disagree | 40% |
| Agree | 17% |
| Strongly agree | 2% |

Less than 20 per cent of respondents could agree that violations of policy in their organisation would be certain to be detected. In a way, this isn’t surprising – maintaining absolute visibility over an internal network, not to mention all human behaviour in an organisation too, is a tall order. But nevertheless, it does reflect the scale of the task in front of us. And to carry out that task – of defending our organisations to the best of our ability – we need our fellow end users to work with us. You might say that the best way to improve the information security team’s visibility over the network is to actually involve the end users as another line of defence.

In that regard, we also asked our respondents about the system of justice in their organisation with regard to information security policy violations:

End user errors or violations are disciplined fairly and transparently, regardless of seniority:

| Response | Share of respondents |
| --- | --- |
| Strongly disagree | 11% |
| Disagree | 24% |
| Neither agree nor disagree | 36% |
| Agree | 25% |
| Strongly agree | 4% |

These results are exceptionally concerning from an organisational psychology perspective. Less than 30 per cent of the information security professionals we surveyed could agree that errors and violations were dealt with fairly by their employers. That is a disappointing and worrying statistic, as getting end users involved with positive information security behaviour requires, as an essential element, their understanding that their mistakes will be handled impartially.
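The combined figures quoted for these four survey statements can be checked with a few lines of arithmetic. The following is only a minimal sketch: the percentages are transcribed from the results above, while the dictionary keys and the `summarise` function are names I have made up for illustration.

```python
# Response percentages transcribed from the four survey statements above.
# Order: strongly disagree, disagree, neither, agree, strongly agree.
# The dictionary keys are hypothetical labels, not wording from the survey.
SURVEY = {
    "knows_policy_violations": (7, 25, 25, 40, 3),
    "clear_reporting_process": (3, 11, 27, 41, 18),
    "violations_detected": (10, 31, 40, 17, 2),
    "disciplined_fairly": (11, 24, 36, 25, 4),
}


def summarise(responses):
    """Collapse a five-point scale into disagree / neutral / agree totals."""
    strongly_disagree, disagree, neither, agree, strongly_agree = responses
    return {
        "disagree": strongly_disagree + disagree,
        "neutral": neither,
        "agree": agree + strongly_agree,
    }


for statement, responses in SURVEY.items():
    # Each distribution should account for every respondent.
    assert sum(responses) == 100
    print(statement, summarise(responses))
```

Collapsing the scale this way makes it easier to compare statements at a glance, though it does hide how few respondents chose the strongest options.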

A couple of research studies are worth discussing here. There is one carried out by a Canadian team of researchers in 2009 that is a good starting point in examining how user behaviour is linked to organisational policy, and ultimately culture. Bulgurcu, Cavusoglu and Benbasat (2009) used the theory of planned behaviour, which you will recall from Chapter 4, to examine what factors influenced whether or not employees intended to comply with their information security policies. This study used a panel of 464 adults resident in the United States, employed by a diverse set of organisations.

In the light of that theory, and the answers to the first question in our Secure Summits survey above, it is probably unsurprising that Bulgurcu and colleagues found that participants’ awareness of information security positively predicted their attitude towards complying with their organisation’s policies. In other words, generally speaking, the more someone is aware of information security and information security policies, the more likely they are to comply with those policies. But Bulgurcu et al. also asked their participants about another factor: how fair they thought those policies were. This proved to be an equally powerful predictor of attitudes and intention to comply with information security policy. In other words, users are more likely to comply with policies that they feel are fair and transparent.

This paper concluded that ‘creating a fair environment and ensuring procedural justice in regards to implementing security rules and regulations is the key to effective information security management’ (Bulgurcu et al., 2009, p. 3273). We can’t expect to change users’ behaviour in a more positive way if they do not feel that they are treated impartially.

However, let’s look in the mirror for a minute, too. Another study of interest comes from Ashenden and Sasse, published in 2013. Again, as with the previous paper, the industry may have changed since then, but its insights have stood the test of time. This team of UK researchers used a qualitative methodology and conducted in-depth, semi-structured interviews with CISOs working at large international organisations. Strikingly, this paper is sub-titled: ‘Their own worst enemy?’ which gives you some idea as to its findings.

In these interviews, Ashenden and Sasse were primarily interested in information security awareness campaigns, and how CISOs used them to effect cultural change – in other words, a large chunk of what this book is also about. Among the many themes that emerged in these interviews, including issues around business strategy and marketing, those that are most striking centre on the CISOs’ struggle to gain credibility. Ashenden and Sasse surmise that this is because CISOs seem to lack organisational power, experience a certain amount of confusion about their role identity and are often unable to engage effectively with employees. Crucially, the researchers point out that while each of the interviewees worked at large organisations that were perceived to be at the forefront of information security practice, none of them had a clear idea whether or not their awareness-raising activities and tools actually had any effect in changing end user behaviour, despite spending lots of time promoting them. Ashenden and Sasse liken this to parents giving children toys and pizza simply to keep them quiet.

As such, let your #1 take-home message from this part of the book be to interact with end users more: try to understand what they think is fair, and what they think works – this is the only way you will know whether or not your efforts to change behaviour are actually working. More to the point, this is a crucial part of practice in developing and updating your organisation’s information security policy – if end users are consulted about it, they are more likely to adhere to it.

TECHNIQUES FOR CHANGING BEHAVIOUR

There are a number of basic techniques at your disposal as a security professional in trying to change the behaviour of your colleagues. In the examples that follow, I have made particular recommendations as to which to use in particular circumstances. However, your mileage may vary: these techniques will have varying efficacy in different organisational contexts. This is not an exact science: think of yourself as an artist or an engineer trying to choose the right tool for the job at hand, rather than pointlessly searching for a magic bullet that doesn’t exist.

Automated tools

First, as you surely know, there are automated tools. These essentially force users to execute certain behaviours or prevent them from doing things. For example, you could conceivably run a script over the network to log out every workstation after, say, three minutes of inactivity. The advantages of such tools are that they are very cheap, will work all day, every day and equally apply across every level of seniority.

However, if these tools are not perceived to be fair, you can be sure that someone will try to find a way around them. Take the auto-log-out script mentioned above. Three minutes might be seen as an unreasonably short period of time. I’ve heard of employees using all kinds of vibrating contraptions to keep their mouse moving while they were away from their desk – all so that they don’t have to suffer the indignity of having to re-enter their password. Consequently, you might come under pressure to increase the time limit to 5, 6 or even 10 minutes. Which may actually defeat the purpose of the script in the first place, and back to square one we go!
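To make the trade-off concrete, here is a minimal sketch of the decision such a script has to make. It is not a real network tool: the workstation names, the timestamps and the `workstations_to_lock` function are all hypothetical, and an actual deployment would rely on your operating system's own policy settings rather than code like this.

```python
from datetime import datetime, timedelta


def workstations_to_lock(last_activity, now, idle_limit=timedelta(minutes=3)):
    """Return the names of workstations idle for at least idle_limit, sorted."""
    return sorted(
        name for name, seen in last_activity.items()
        if now - seen >= idle_limit
    )


# Hypothetical snapshot of the last keyboard or mouse activity per workstation.
now = datetime(2024, 1, 8, 12, 0, 0)
last_activity = {
    "ws-finance-01": now - timedelta(minutes=1),
    "ws-hr-02": now - timedelta(minutes=4),
    "ws-sales-03": now - timedelta(minutes=8),
}

# With the three-minute limit, two of the three machines would be locked.
print(workstations_to_lock(last_activity, now))

# Relaxing the limit to ten minutes leaves every machine in this snapshot open.
print(workstations_to_lock(last_activity, now, idle_limit=timedelta(minutes=10)))
```

The second call shows why giving in to pressure on the time limit matters: the same unattended machines that were caught at three minutes are left exposed at ten.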

Human behavioural tools

Second, there are human behavioural tools – namely, the carrot and the stick. On the one hand, when a user does something that we don’t want them to do, we can punish them, in order to try to convince them not to do it again. On the other hand, when they do the right thing, we can reward them, in order to encourage them to do it more often.

To be frank, in the security industry there is way too much of the former, whereas the latter has long been preferred by psychological scientists and practitioners. That is, instead of trying to stop users from doing ‘bad things’, we should try to encourage them to do ‘good things’.

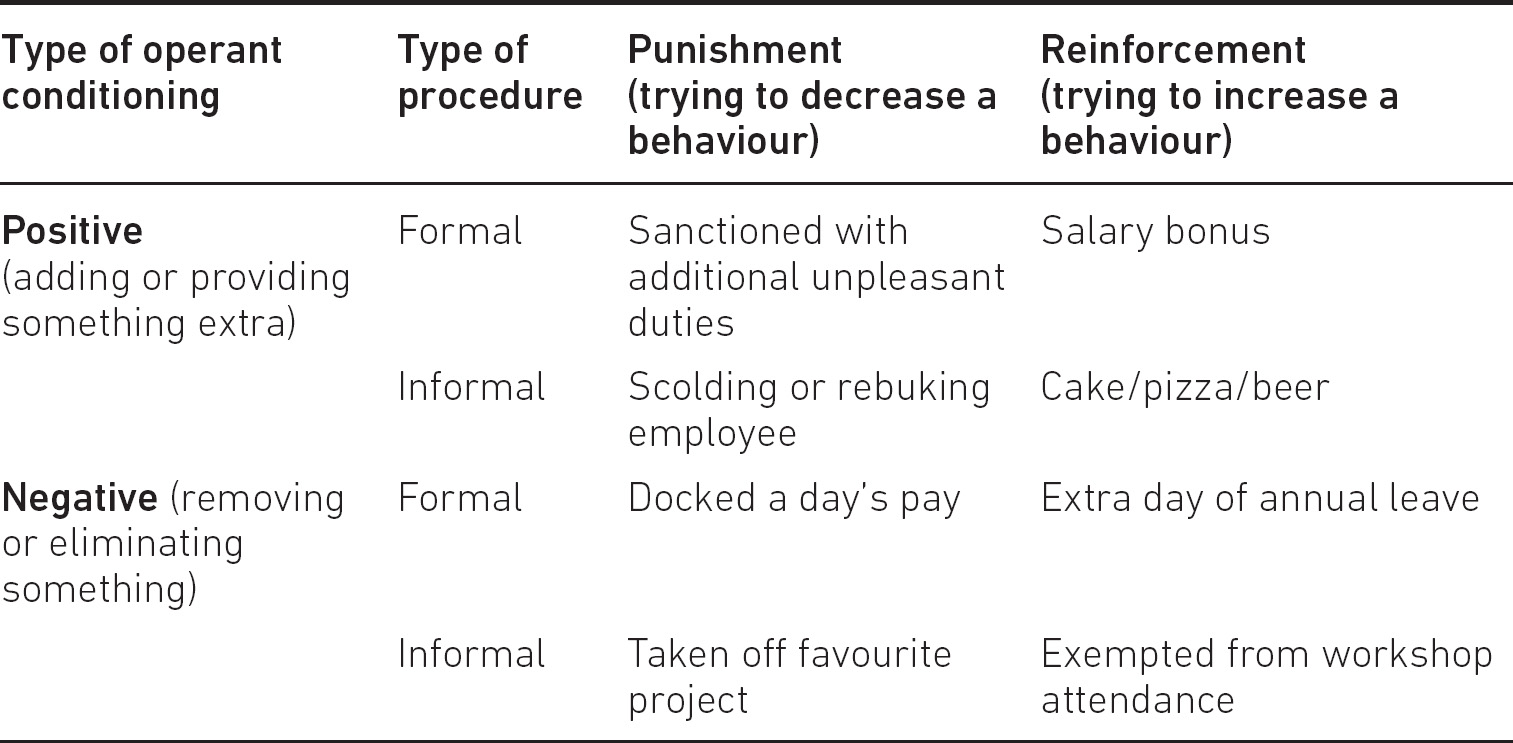

Crucially, there are positive and negative variations of both of these – in psychological science this is known as operant, or instrumental, conditioning. For example, a negative punishment would involve removing something from the user in order to discourage them from performing a particular behaviour again, whereas a positive reinforcement would mean giving the user something in order to convince them to repeat a behaviour.
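If the terminology feels slippery, it may help to see that the four variations come from just two yes-or-no questions: is something being given or taken away, and do we want the behaviour to happen more or less often? The sketch below encodes that standard two-by-two scheme; the function and parameter names are my own, chosen purely for illustration.

```python
def conditioning_type(stimulus_added: bool, behaviour_should_increase: bool) -> str:
    """Name the operant conditioning variation for a given intervention.

    'positive' means something is given, 'negative' means something is removed;
    'reinforcement' aims to increase a behaviour, 'punishment' to decrease it.
    """
    sign = "positive" if stimulus_added else "negative"
    kind = "reinforcement" if behaviour_should_increase else "punishment"
    return f"{sign} {kind}"


# Removing something to discourage a behaviour.
print(conditioning_type(stimulus_added=False, behaviour_should_increase=False))

# Giving something to encourage a behaviour.
print(conditioning_type(stimulus_added=True, behaviour_should_increase=True))
```

Note that 'positive' and 'negative' here say nothing about whether the experience is pleasant; they only describe whether something is added or taken away.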

Furthermore, it is worth noting that in terms of both punishment and reinforcement, we can talk about both formal and informal procedures. Some behaviour is serious enough to merit written organisational policy documentation, whereas others can be handled unofficially. Again, this is a kind of toolbox you can pick and choose from, depending on the security behaviour you are interested in at any given time. Let’s sketch this out with a few examples in Table 5.1 to make it a bit clearer.

Table 5.1 Types of operant conditioning, with formal and informal examples

It is crucial to note that with any of these behavioural techniques, for them to be effective, they must act like the automated tools mentioned previously. In other words, they must be applied both immediately and consistently. I must stress, however, that while punishment certainly has its place, in general it is not preferred by behavioural scientists. And while there is a certain amount of sentimentality involved in that preference, it also boils down to efficacy: when a positive punishment is removed or a negative punishment is restored, the unwanted behaviour will often return. This is less the case with reinforcements of either kind. As such, CISOs should simply think ‘less stick, more carrot’ if they want to change end user behaviour in the long run.

Specific cybersecurity behaviours

Now let’s examine the core areas of cybersecurity behaviour in detail. In the subsections that follow, I have drawn on the advice outlined in Coventry et al. (2014). In this report on trying to improve cybersecurity practices in the general public, published by the UK Government Office for Science, the authors proposed the following basic advice that everyone should follow:

- Use strong passwords and manage them securely.

- Use anti-virus software and firewalls.

- Log out of sites after you have finished and shut down your computer.

- Use only trusted and secure connections, computers and devices (including Wireless Fidelity (Wi-Fi)).

- Use only trusted and secure sites and services.

- Stay informed about risks (knowledge, common sense, intuition). Try to avoid scams and phishing.

- Always opt to provide the minimal amount of personal information needed for any online interaction and keep your identity protected.

- Be aware of your physical surroundings when online.

- Report cybercrimes and criminals to the authorities (Coventry et al., 2014).

Most likely, none of this advice will be unfamiliar to anyone working in cybersecurity. However, as our data shows, in training some of these pointers are more commonly focused on than others.

In the Secure Summits survey of 2017, we asked respondents which aspects of security user behaviour they focused on most in training. As you can see from Table 5.2, there is considerable variation across these specific behaviours in terms of how often they are addressed. This should give the CISO some pause for thought: are we trying to change the behaviours that are actually the riskiest to our organisations? Or simply the ones that are easiest to deal with? This is something you need to think about and discuss with your board.

Table 5.2 Frequency of inclusion of cybersecurity behaviours in user training by information security professionals

Access control

At the crossover between physical security and cyber, or information security, lies access control. In the modern workplace, the behaviours we are talking about here include maintaining good door access and key card practices, properly storing sensitive printed documentation and being wise to shoulder-surfing (i.e. an adversary literally looking over your shoulder as you enter your password or other sensitive information in order to steal it). The core behaviour we need to get through to users according to Coventry et al.’s report is to get them to be aware of their physical surroundings – not only when online, but when around sensitive information in general. In this hyperconnected world, we tend to forget that information is not purely digital. Valuable information can take the form of printed paper, which can easily be left behind or picked up by individuals with nefarious intent. Moreover, even when important documents are in digital format, they require physical security – tape backups must be under lock and key, server rooms need to be robustly secured – this is the basis of access control.

To be frank, there is very little research of any kind available to give evidence-based psychological advice here. However, it strikes me that unless the issue is of a very serious nature, then probably only informal strategies of punishment or reinforcement should be used. For example, a quick trip around a given team’s office space during their lunchtime (or perhaps a colleague could do this for you while you are giving them a workshop) can reveal who follows the clear desk policy, and you can leave them a chocolate or some other treat. The dirty desks get nothing! Hence, you are rewarding good behaviour, not punishing bad. Make sure to randomise this by team too – so nobody actually knows when they will be observed: remember the Hawthorne effect from Chapter 4.
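Randomising which teams get a visit need not be elaborate. As a rough sketch, assuming you keep a simple list of team names (the names below are invented, as is the `pick_teams_for_spot_check` function), something like the following would do:

```python
import random


def pick_teams_for_spot_check(teams, how_many, rng=None):
    """Pick a random selection of teams, without repeats, for a clear desk check."""
    if rng is None:
        rng = random.Random()
    # Never ask for more teams than exist.
    return rng.sample(teams, k=min(how_many, len(teams)))


# Hypothetical team list; substitute your own.
teams = ["Finance", "HR", "Sales", "Legal", "Engineering"]
print(pick_teams_for_spot_check(teams, how_many=2))
```

Run it afresh each week rather than publishing a rota, so that nobody can predict when their desks will be observed.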

Workstation locking

Locking workstations is a behaviour that our survey indicated many security professionals are trying to change, with almost three in five of our respondents reporting that they focused on it in training either ‘often’ or ‘constantly’.

Of course, you can double up the reinforcement of the clear desk policy mentioned above for workstation locking too: one treat or reward for a clear desk, one for workstation locked – and any other behaviours you care to reinforce.

However, I’d also like to share some advice from a workshop participant. A simple way to improve user behaviour here may require only two fingers. On a Windows computer, encourage users to get into the habit of placing their left index finger on the Windows key, and their right index finger on the L key, which locks their computers, as they stand up and push off their desk. On a Mac, the equivalent shortcut requires just one more key: Command + Shift + Q. What we’re doing here is pairing the new and desired behaviour – logging out of the workstation – with an old and familiar behaviour – standing up and pushing off from the desk.

You might call this ‘muscle memory’ but this is just classic psychology. In the punishment and reinforcement model mentioned earlier, a behaviour is followed by certain conditions, depending on whether or not we want it increased or diminished. Here we are doing something much simpler – less voluntary and more immediate. Instead of following the behaviour we are interested in with either a reward or a punishment, here we pair the behaviour we want the user to perform with another behaviour that they already have to do anyway – such as standing up to go to the bathroom. In theory, this means that workstation locking should then become psychologically habitual, and every time the user stands up, they should feel the need to hit the keyboard with two fingers – so long as your awareness-raising activities have been successful.

In conclusion, while you can set network policies on automatically logging users out, it is far better in the long run to simplify good security culture into small behaviours that users can incorporate into their daily routine with little fuss.

Password management

Highly popular across the industry, password management was unsurprisingly reported by over 70 per cent of our survey respondents as a training aim. This naturally reflects how crucial passwords are in cybersecurity, but also how complex the psychology behind them is.

In a survey carried out on 263 Brazilian adults, Pilar et al. (2012) examined some factors that are often discussed with regard to memory abilities, namely education and age. On the one hand, this research found interesting differences in password behaviour, with younger and more educated participants using longer passwords than older and less educated individuals. However, when it came to difficulty in remembering passwords, this study did not find any significant differences.

What actually did predict difficulty in password memory was simply the number of passwords that respondents used. In other words, it doesn’t seem to matter much if you are old or young, educated or not, but if you have too many passwords to remember, you will struggle. In general, across the various groups, difficulties in remembering passwords seemed to steeply increase beyond five passwords. As such, Pilar and co-workers (2012) recommend mnemonics and reusing passwords by category of use. This would probably fly in the face of much practice in the cybersecurity industry, but that’s what the psychological research suggests. Ultimately, it is worth bearing in mind that most people have more passwords to remember than they can probably cope with.

A UK study examined a related problem – password sharing. In a 2015 survey of 497 adults, researchers Whitty et al. were interested in the type of person most likely to engage in this behaviour. Similar to Pilar and colleagues above, this team were interested in age as a factor, but also personality characteristics and, wait for it, cybersecurity knowledge.

The results were quite surprising. Overall, a whopping 51.1 per cent of participants admitted that they shared passwords – a statistic that should make a CISO’s blood run cold. Moreover, given that this is self-reported data, it’s likely that the actual level of this behaviour is much higher. Lots of work to do here!

Just like the Brazilian study, expectations were confounded. Yet again, the ‘forgetful old person’ did not appear in the data – in fact, it was younger people who emerged as more likely to share passwords. Calling to mind the Pilar paper above, this gives us some idea about how changing password behaviour should be targeted. It isn’t the case that older people are more forgetful or prone to misusing passwords and therefore need special attention. In actual fact, it’s probably the younger end users that we should focus on. Improving password behaviour may seem a little daunting when you think about all the end users on your network as a whole. Consequently, it might be more worthwhile to think about segmenting them psychologically for different campaigns. Can you work out which of your end users need to stop reusing passwords and which ones need to use more complex passwords – and target these groups accordingly?

Moreover, calling to mind the Dunning–Kruger effect mentioned in the last chapter, Whitty and colleagues found that knowledge about cybersecurity did not distinguish between those who did and did not share passwords. In other words, people who claimed to understand cybersecurity did not actually practise cybersecurity any better than those who didn’t.

Hence, when we are trying to change behaviour in our own organisations, we should not take colleagues’ claims of knowing about cybersecurity at face value: these people should be given as much, if not more, attention than everyone else. There are no easy solutions to dealing with the Dunning–Kruger effect as it surfaces in various aspects of trying to change cybersecurity behaviour. However, it is recommended that when engaging in any behaviour change project, you encourage an atmosphere of intellectual curiosity and humility. Cybersecurity behaviour is a field where there is always something more to learn: people should feel comfortable with answering any question with ‘I don’t know’.

Anti-phishing

Phishing attacks seem to be the main vector for so many cyberattacks these days, and, as a result, CISOs have to design their behaviour change projects accordingly. As shown in Table 5.2, close to four-fifths of our survey respondents reported that they concentrated on phishing in training either ‘often’ or ‘constantly’.

Like password usage, phishing is one aspect of information security behaviour where there is relatively more scientific research. One 2017 study is noteworthy. An American team led by Carella carried out a user study experiment with 150 university students that aimed to establish an educational standard for anti-phishing campaigns (Carella et al., 2017). Over several weeks, participants received a variety of simulated phishing emails, and data was gathered on which of those emails led them to click on a link.

Participants were split into three groups that received different levels of anti-phishing training: a control group, which received no training at all; a presentation group, which received an in-class anti-phishing training presentation; and a documents group, who were directed to anti-phishing awareness documentation each time they clicked on a link in a simulated phishing email. Notably, the actual information received by both the presentation group and the documents group was essentially the same, only the manner of its communication differed. As you can probably deduce, the documents group were being treated with a form of positive punishment: they were being given something extra in order to try to decrease a behaviour.

Seven waves of phishing emails were sent out to each of these groups. In the first week, each group performed quite similarly, with click-through rates of over 50 per cent. This is shocking enough in and of itself – before any intervention took place, the participants were highly likely to click through on a link in a phishing email.

In the second week, the presentation group received their in-class anti-phishing training presentation. Thereafter this group’s click-through rate fell substantially – for a while. In waves 2, 3 and 4, the presentation group performed in the mid-30 per cent range, but by wave 7, the final week, their click-through rate was basically back where it started from, at 50 per cent. By the end of the experiment, the presentation group was performing on anti-phishing detection at the same rate as the control group, who had received no training at all. This kind of rebound may be familiar to anyone who’s ever carried out cybersecurity workshops in an attempt to change behaviour.

On the other hand, those in the documents group performed very well, with their click-through rates dropping from one week to the next. By the last wave, this group were clicking on a mere 8 per cent of links in phishing emails. Hence this method of phishing training – that is, redirecting to anti-phishing resources after clicking on a phishing link in a simulated attack – appears to have a solid scientific basis and is more effective than a classroom exercise.
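If you run simulated phishing waves of your own, the click-through rate per group and per wave is the figure to track. The sketch below shows one way it might be computed from a simple log of sent emails. The record format and the `click_through_rates` function are assumptions of mine, and the sample records are made up purely to exercise the code; they are not data from the study.

```python
from collections import defaultdict


def click_through_rates(events):
    """Compute the fraction of emails whose link was clicked.

    events: iterable of (group, wave, clicked) tuples, one per email sent.
    Returns a dict mapping (group, wave) to a rate between 0 and 1.
    """
    sent = defaultdict(int)
    clicked = defaultdict(int)
    for group, wave, was_clicked in events:
        sent[(group, wave)] += 1
        clicked[(group, wave)] += int(was_clicked)
    return {key: clicked[key] / sent[key] for key in sent}


# Made-up records for illustration only; not results from Carella et al.
events = [
    ("documents", 1, True), ("documents", 1, False),
    ("documents", 2, False), ("documents", 2, False),
    ("control", 1, True), ("control", 1, True),
]

for (group, wave), rate in sorted(click_through_rates(events).items()):
    print(f"{group}, wave {wave}: {rate:.0%}")
```

Tracking the rate wave by wave, rather than as a single overall figure, is what would let you spot the kind of rebound seen in the presentation group.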

However, given what we outlined above regarding the effects of punishment, one would not be surprised to find that the performance of the documents group declined once the punishment stimulus was removed. This study did not include a follow-up procedure, so we don’t know how well this group performed in the weeks and months thereafter. The effect on the presentation group wore off after a couple of weeks, so perhaps it would take longer than that, but one would expect that it would wear off at some point. The key for CISOs attempting to change behaviour in this way is to have a plan that is not simply a one-off: treatment has to be repeated at regular intervals.

Paradoxically, however, as engineers get better at automating the detection (and quarantining) of phishing emails, end users have less material to learn from. Hence, as the network gets better at preventing phishing, the end users get worse. More to the point, given that the signal in question here – phishing emails – changes from one day to the next, phishing training will always be challenging. It is therefore crucial that CISOs remain in close contact with end users and ensure that their office is seen as approachable and engaged.

Again, with regard to policy development, and indeed also in relation to culture as will be explained in the next chapters, we need to think about phishing far more broadly than simply stopping users from clicking on links. We need to have joined-up thinking from the end user right up to the boardroom; from what should happen after the risky link is actually clicked, through to how that policy is developed and who is involved in that discussion, all the way to how senior executives decide on how these risks should be managed.

Removable media and mobile security

An inescapable element of the modern office is its portability. From mobile phones to USB keys and public Wi-Fi, 21st-century technology now allows us to move while we work – which presents a whole new range of cybersecurity challenges. As a result, it is unsurprising that in our survey only 1 per cent had never covered these topics in end user training.

Consequently, it is worthwhile trying to understand why, for example, users plug in stray memory keys. One study, carried out in 2016 by Tischer and colleagues, was specifically focused on this question: why do people plug in thumb drives that they find lying around?

To answer this, the research team dropped 297 USB keys on a campus of the University of Illinois. They used a variety of different types of devices, with different labels, and dropped them at different locations on the campus, and at different times of the day. Each memory key contained files consistent with how they were externally labelled – however, every file was actually a Hypertext Markup Language (HTML) file that contained an <img> tag for an image located on a centrally controlled server. This allowed the researchers to detect when the file was opened, and also popped up a survey that explained the study and encouraged whoever had plugged in the device to answer some questions about why they had done so.
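For readers unfamiliar with the trick, the detection works because opening the HTML file makes the viewer's browser request the image, and that request shows up on the server. The following is a rough sketch of how such a file might be generated for an authorised internal exercise. Everything specific here is an assumption of mine rather than a detail from the study: the `build_beacon_page` function, the example.org address, the URL layout and the idea of a per-drive identifier are all hypothetical.

```python
from html import escape
from urllib.parse import quote


def build_beacon_page(drive_id, server="https://tracking.example.org"):
    """Return HTML whose <img> tag requests a per-drive URL when opened.

    The server address and the /opened/ path are placeholders for illustration.
    """
    # Percent-encode the identifier so it is safe inside a URL path.
    src = f"{server}/opened/{quote(drive_id, safe='')}.png"
    return (
        "<!DOCTYPE html>\n"
        "<html><body>\n"
        f'<img src="{escape(src, quote=True)}" alt="">\n'
        "</body></html>\n"
    )


print(build_beacon_page("drive-042"))
```

The server-side half, which would simply log each request for an image along with its timestamp, is left out here; any ordinary web server's access log would serve that purpose.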

The authors report some eye-catching statistics. Checking the locations where the drives were dropped after a week, 290 of the 297 drives had disappeared. In total, 135 drives (45 per cent of those dropped) ended up being plugged in – hence the authors describe the attack’s success as being in the 45–98 per cent range (though only 58 people agreed to participate in the study).
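The 45–98 per cent range follows directly from the counts reported, taking the 297 drives dropped as the denominator. A quick check, with variable names of my own choosing:

```python
dropped = 297      # drives left around the campus
picked_up = 290    # drives that had disappeared a week later
plugged_in = 135   # drives known to have been connected and opened

# Lower bound: drives that were definitely plugged in.
lower = plugged_in / dropped
# Upper bound: every drive that was picked up, whether or not a file was opened.
upper = picked_up / dropped

print(f"{lower:.0%} to {upper:.0%}")
```

The gap between the two bounds exists because a drive that was picked up but never phoned home may still have been plugged in somewhere without a file being opened.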

In terms of motivation, a large majority of those who participated in the study said that they connected the drive simply in order to locate its owner (68 per cent). So, there, maybe, is your answer: people stick in stray USB keys because – being nice people – they want to get them back to their owners. To be blunt: your colleagues are going to plug in USB keys that they find lying around – no amount of scare messaging is going to stop them, so don’t try. It is likely impossible, and indeed objectionable, to try to remove altruism from ordinary human beings via mandatory workplace training. Instead, why not try positively reinforcing a behaviour that you actually want them to do? So, continue dropping USB keys throughout your organisation, but make sure that you have a clear procedure of what you want users to do with them when they find them. Maybe you want them to take them to the IT desk, or to plug them into a designated, air-gapped machine – make sure they are rewarded as soon as they do.

Updates and anti-virus

In most organisations, issues such as software updates and anti-virus have been automated at a network level, so the results we received when we asked our survey respondents about them are perhaps understandable. As you can see from Table 5.2, these are things that many security professionals rarely or never focus on in training. However, it is still important that end users understand these processes and do not become complacent.

Don’t forget, while you may be able to push out updates for most devices that your end users operate, that doesn’t cover everything. Mobile security remains a worrying area in which CISOs have less technical control than they would like. While ‘bring your own device’ (BYOD) practices may have reduced spending on IT equipment, they have markedly increased cybersecurity risk exposure. To be clear, these are risks that many organisations have decided to ignore, which is not a wise move at all. However, with subtle changes to organisational culture you can improve security behaviour, simply by spending a little bit of time nudging routines.

My advice here is to start small, with short coffee-break style standing meetings, where teams are encouraged to whip out their phones and compare operating systems. So, while Apple can push out security updates to iOS devices and generally badger users about installing them, users can still ignore these warnings. Security updates are even easier to ignore with Android devices. Arguably, end users will not deem this to be a security priority until it is made a business priority. Even with partitioned devices and advanced enterprise device management policies, essentially the user is the perimeter and should be treated as such. Get users’ attention for as little as 15 minutes a month with their smartphones and simply get them to update their devices over a coffee. And that’s it – making a little bit of time to get the end users to consciously engage with improving their cybersecurity behaviour.

Again, this feeds into workplace culture, which will be explored in the next two chapters, but it should also be seen as an important element of habitual behaviour. In other words, as a security professional, it is not enough that you encourage your colleagues to have anti-virus on, and always run the latest version of software by updating regularly. You must also advocate that employees are always given enough time in their workday to carry out these tasks too.

SUMMARY

In concluding this chapter let’s summarise what we have learned about changing cybersecurity behaviour. To begin with, we started this chapter with a brief discussion of policy. If we want to change behaviour, it is important that this is specified in official policy, and that end users are aware of what is prohibited by policy. But more crucially, an atmosphere of fairness should be developed around cybersecurity, and end users should be part of the process of writing policy. If we are to positively influence behaviour, we must understand end users’ perspectives on what works and what doesn’t. This chapter also covered the relative effectiveness of those human behavioural tools known as punishment and reinforcement. In making the point that while the former seems to be far more popular in information security, the latter is preferred by behavioural scientists, we also considered the distinction between formal and informal reactions to violations of policies. Following this we moved on to explore strategies for changing specific cybersecurity behaviours and dealt in turn with access control, workstation locking, password management, anti-phishing, removable media and mobile security, and finally updates and anti-virus. In each of these cases, we evaluated peer-reviewed research and best practice advice. Overall, readers are advised to start small, make changes gradually and foster an atmosphere of fairness and intellectual humility. Naturally, this feeds into workplace culture, which will be examined in Chapters 6 and 7.

NEXT STEPS

Let’s wrap up with a few suggestions for where you might think about changing your colleagues’ cybersecurity behaviours. Again, as with the last chapter, take baby steps with these interventions:

- Think about your own role. Are you leading by example with your own cybersecurity behaviour? Or is there anything you need to change?

- Additionally, how are you personally going to change behaviour within your organisation? What are the strengths and weaknesses of your position? Who do you need help from or buy-in from, before you even begin?

- What is the most effective method you can think of for making your colleagues more aware of your organisation’s information security policy? And if it’s not fair and equitable in its current state, how are you going to fix that?

- Think about how you will approach the two sides of the Dunning–Kruger effect with regard to claimed cybersecurity knowledge. How will you deal with these different types of people? How will you encourage an atmosphere of intellectual humility around cybersecurity behaviour?

- Using the ‘positive reinforcement with chocolate’ idea, plan out how you are going to change workstation lockout behaviour in your organisation. What is the best way of raising awareness of the ‘muscle memory at loo break’ method? Don’t forget though about the complexity of measuring behaviours as described in Chapter 4!

- Consider what you will encourage end users to do with stray USB keys and how you will reward, rather than punish, this behaviour.

- Can you make it a company-wide policy to set aside a short timeslot for updating operating systems for smartphones and other mobile devices, whether iOS or Android?