If every communication process must be explained as relating to a system of significations, it is necessary to single out the elementary structure of communication at the point where communication may be seen in its most elementary terms. Although every pattern of signification is a cultural convention, there is one communicative process in which there seems to be no cultural convention at all, but only – as was proposed in 0.7 – the passage of stimuli. This occurs when so-called physical ‘information’ is transmitted between two mechanical devices.

When a floating buoy signals to the control panel of an automobile the level reached by the gasoline, this process occurs entirely by means of a mechanical chain of causes and effects. Nevertheless, according to the principles of information theory, there is an ‘informational’ process that is in some way considered a communicational process too. Our example does not consider what happens once the signal (from the buoy) reaches the control panel and is converted into a visible measuring device (a red moving line or an oscillating arm): this is an undoubted case of sign-process in which the position of the arm stands for the level of the gasoline, in accordance with a conventionalized code.

But what is puzzling for a semiotic theory is the process which takes place before a human being looks at the pointer: although at the moment when he does so the pointer is the starting point of a signification process, before that moment it is only the final result of a preceding communicational process. During this process we cannot say that the position of the buoy stands for the movement of the pointer: instead of ‘standing-for’, the buoy stimulates, provokes, causes, gives rise to the movement of the pointer.

It is then necessary to gain a deeper knowledge of this type of process, which constitutes the lower threshold of semiotics. Let us outline a very simple communicative situation (1). An engineer, downstream, needs to know when a watershed located in a basin between two mountains, and closed by a watergate, reaches a certain level of saturation, which he defines as ‘danger level’.

Whether there is water or not; whether it is above or below the danger level; how much above or below; at what rate it is rising: all these are pieces of information which can be transmitted from the watershed, which will therefore be considered as a source of information.

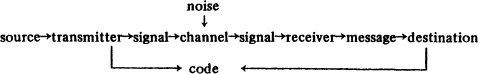

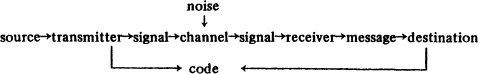

So the engineer puts in the watershed a sort of buoy which, when it reaches danger level, activates a transmitter capable of emitting an electric signal which travels through a channel (an electric wire) and is picked up downstream by a receiver, this device converts the signal into a given string of elements (i.e. releases a series of mechanical commands) that constitute a message for a destination apparatus. The destination, at this point, can release a mechanical response in order to correct the situation at the source (for instance opening the watergate so that the water can be slowly evacuated). Such a situation is usually represented as follows:

In this model the code is the device which assures that a given electric signal produces a given mechanical message, and that this elicits a given response. The engineer can establish the following code: presence of signal (+ A) versus absence of signal (- A). The signal + A is released when the buoy sensitizes the transmitter.

But this ‘Watergate Model’ also foresees the presence of potential noise on the channel, that is, any disturbance that could alter the nature of the signals, making them difficult to detect or producing + A when - A is intended, and vice versa. The engineer therefore has to complicate his code. For instance, if he establishes two different levels of signal, namely + A and + B, he then has three signals at his disposal (2), and the destination may accordingly be instructed to release three kinds of response:

+ A produces ‘state of rest’

+ B produces ‘feedback’

- AB (and + AB) produces an emergency signal (meaning that something does not work)
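This three-way code can be sketched as a lookup from the state of the channel to a destination response. The following is a minimal Python illustration, assuming the destination simply registers whether each signal level is present; the function name `respond` and the response strings are hypothetical labels for the responses listed above.

```python
def respond(a_present: bool, b_present: bool) -> str:
    """Map the received channel state to a destination response.

    Only a signal at exactly one level is a well-formed message; no signal
    at all (-AB) or both levels at once (+AB) means something does not work.
    """
    if a_present and not b_present:
        return "state of rest"   # + A
    if b_present and not a_present:
        return "feedback"        # + B
    return "emergency"           # - AB or + AB


# Enumerate every possible state of the channel.
for a in (False, True):
    for b in (False, True):
        print(a, b, "->", respond(a, b))
```

The point of the sketch is that two of the four channel states are reserved for detecting trouble rather than for conveying the state of the water.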

This complication of the code increases the cost of the entire apparatus but makes the transmission of information more secure. Nevertheless there can be so much noise as to produce + A instead of + B. To avoid this risk, the code must be considerably complicated. Suppose that the engineer now has four positive signals at his disposal and establishes that every message must be composed of two signals. The four positive signals can be represented by four different levels, but in order to better control the entire process the engineer decides to represent them by four electric bulbs as well. They can be set out in a positional series, so that A is recognizable inasmuch as it precedes B, and so on; they can also be designed as four bulbs of different colors, following a wave-length progression (green, yellow, orange, red). It must be made absolutely clear that the destination apparatus does not need to ‘see’ the bulbs (for it has no sensory organs): the bulbs are useful to the engineer, so that he can follow what is happening.

I should add that the correspondence between the electric signals (picked up by the receiver and translated into mechanical messages) and the lighting of the bulbs (obviously activated by another receiver) undoubtedly constitutes a new coding phenomenon that would need separate attention; but for the sake of convenience I shall treat the message to the destination and the bulbs as two aspects of the same phenomenon. At this point the engineer has, at least in theory, 16 possible messages at his disposal:

AA BA CA DA

AB BB CB DB

AC BC CC DC

AD BD CD DD

Since AA, BB, CC and DD are simply repetitions of a single signal, and therefore cannot be emitted as a simultaneous pair, and since six messages are simply the reverse of six others (for instance, BA is the reverse of AB, and the temporal succession of two signals is not being considered in this case), the engineer actually has six messages at his disposal: AB, BC, CD, AD, AC and BD. Suppose that he assigns to the message AB the task of signalling “danger level”. He then still has five ‘empty’ messages at his disposal.
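The reduction from sixteen to six messages is a matter of simple combinatorics, and can be reproduced in a few lines of Python. This sketch only restates the counting in the paragraph above; the variable names are illustrative.

```python
from itertools import product

SIGNALS = "ABCD"

# All 16 ordered two-signal strings (the grid above).
ordered = ["".join(pair) for pair in product(SIGNALS, repeat=2)]

# Drop repetitions (AA, BB, ...) and treat a pair and its reverse as one
# message, since temporal succession is not being considered.
usable = sorted({"".join(sorted(m)) for m in ordered if m[0] != m[1]})

# Assigning AB to "danger level" leaves the rest unassigned.
empty = [m for m in usable if m != "AB"]

print(len(ordered))  # 16
print(usable)        # ['AB', 'AC', 'AD', 'BC', 'BD', 'CD']
print(len(empty))    # 5
```

Sorting each pair before inserting it into a set is what collapses BA into AB; the result is exactly the six two-element combinations of four signals.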

Thus the engineer has achieved two interesting results: (i) it is highly improbable that noise will activate two wrong bulbs, and it is probable that any wrong activation will give rise to a ‘senseless’ message, such as ABC or ABCD, so that a malfunction is easier to detect; (ii) since the code has been complicated and the cost of transmission has increased, the engineer may amortize this investment through a more informative exploitation of the code.

In fact with such a code he can get a more comprehensive range of information about what happens at the source and he can better instruct the destination, selecting more events to be informed about and more mechanical responses to be released by the apparatus in order to control the entire process more tightly. He therefore establishes a new code, able to signal more states of the water in the watershed and to elicit more articulated responses (Table 4).

Table 4

| (a) bulbs | | (b) states of water, or notions about the states of water | | (c) responses of the destination |
|---|---|---|---|---|
| AB | = | danger level | = | water dumping |
| BC | = | alarm level | = | state of alarm |
| CD | = | security level | = | state of rest |
| AD | = | insufficiency level | = | water make-up |

Complicating the code has introduced redundancy into it: two signals are used to give one piece of information. But the redundancy has also provided a supply of messages, enabling the engineer to recognize a larger array of situations at the source and to establish a larger array of responses at the destination. In fact, redundancy has also provided two more messages (AC and BD) that the engineer has chosen not to use, and by means of which he could signal other states within the watershed (combined with appropriate additional responses); they could also be used to introduce synonymies (danger level being signalled both by AB and by AC). In any case, the code adopted would seem to be optimal for the engineer’s purposes, and it would be unwise to complicate it too much.(3)
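The code of Table 4, with its two reserved messages and its built-in detection of senseless messages, can be sketched as a dictionary that couples each bulb pair with a state of the water and a response. This Python sketch is an illustration under stated assumptions: the names `CODE`, `RESERVED` and `decode` are hypothetical, and the choice to answer AC and BD with "no response" is an assumption, since the text only says the engineer does not use them.

```python
# Hypothetical encoding of Table 4: each bulb pair is coupled with a
# state of the water (system b) and a destination response (system c).
CODE = {
    frozenset("AB"): ("danger level", "water dumping"),
    frozenset("BC"): ("alarm level", "state of alarm"),
    frozenset("CD"): ("security level", "state of rest"),
    frozenset("AD"): ("insufficiency level", "water make-up"),
}
# Well-formed but unassigned messages, held in reserve (assumed behavior).
RESERVED = {frozenset("AC"), frozenset("BD")}


def decode(lit_bulbs: str) -> tuple[str, str]:
    """Return (state, response) for a set of lit bulbs.

    Anything other than exactly two distinct lit bulbs among A-D is a
    'senseless' message and is reported as a malfunction.
    """
    bulbs = frozenset(lit_bulbs)
    if len(bulbs) != 2 or not bulbs <= set("ABCD"):
        return ("senseless message", "report malfunction")
    if bulbs in RESERVED:
        return ("unassigned message", "no response")
    return CODE[bulbs]


print(decode("AB"))   # ('danger level', 'water dumping')
print(decode("ABC"))  # ('senseless message', 'report malfunction')
```

Using `frozenset` keys makes BA and AB the same message, in line with the decision to ignore temporal succession.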

Once the Watergate Model is established and the engineer has finished his project, a semiotician could ask him a few questions, such as: (i) what do you call a ‘code’? The device by which you know that a given state in the watershed corresponds to a given set of illuminated bulbs? (ii) If so, does the mechanical apparatus possess a code, that is, does the destination recognize the ‘meaning’ of the received message, or does it simply respond to mechanical stimuli? (iii) Is the fact that the destination responds to a given array of stimuli by means of a given sequence of responses based on a code? (iv) Who is that code for: you or the apparatus? (v) In any case, is it not true that many people would call the internal organization of the system of bulbs a code, irrespective of the states of things that can be signalled through its combinational articulation? (vi) Finally, is not the segmentation of the infinite number of potential positions of the water within the watershed into four, and only four, ‘pertinent’ states sometimes called a ‘code’?

One could carry on like this for a long time. But it seems unnecessary, since it will already be quite clear that under the name of /code/ the engineer is considering at least four different phenomena:

(a) A set of signals ruled by internal combinatory laws. These signals are not necessarily connected or connectable with the states of the water that they conveyed in the Watergate Model, nor with the destination responses that the engineer decided they should be allowed to elicit. They could convey different notions about things and they could elicit a different set of responses: for instance, they could be used to communicate the engineer’s love for the next-watershed girl, or to persuade the girl to return his passion. Moreover, these signals can travel through the channel without conveying or eliciting anything, simply in order to test the mechanical efficiency of the transmitting and receiving apparatuses. Finally, they can be considered as a pure combinational structure that only takes the form of electric signals by chance, an interplay of empty positions and mutual oppositions, as will be seen in 1.3. They could be called a syntactic system.

(b) A set of states of the water which are taken into account as a set of notions about the state of the water and which can become (as happened in the Watergate Model) a set of possible communicative contents. As such, they can be conveyed by signals (bulbs), but are independent of them: in fact they could be conveyed by any other type of signal, such as flags, smoke, words, whistles, drums and so on. Let me call this set of ‘contents’ a semantic system.

(c) A set of possible behavioral responses on the part of the destination. These responses are independent of the (b) system: they could be released in order to make a washing-machine work or (supposing that the engineer were a ‘mad scientist’) to admit more water into the watershed just when danger level was reached, thereby provoking a flood. They can also be elicited by another (a) system: for example, the destination can be instructed to evacuate the water only when, by means of a photoelectric cell, it detects an image of Fred Astaire kissing Ginger Rogers. Communicationally speaking, the responses are the proofs that the message has been correctly received (and many philosophers maintain that ‘meaning’ is nothing more than this detectable disposition to respond to a given stimulus; see Morris, 1946): but this side of the problem can be disregarded, for at present the responses are being considered independently of any conveying element.

(d) A rule coupling some items from the (a) system with some from the (b) or the (c) system. This rule establishes that a given array of syntactic signals refers back to a given state of the water, or to a given ‘pertinent’ segmentation of the semantic system; that both the syntactic and the semantic units, once coupled, may correspond to a given response; or that a given array of signals corresponds to a given response even though no semantic unit is supposed to be signalled; and so on.

Only this complex form of rule may properly be called a ‘code’. Nevertheless in many contexts the term /code/ covers not only the phenomenon (d) – as in the case of the Morse code – but also the notion of purely combinational systems such as (a), (b) and (c). For instance, the so-called ‘phonological code’ is a system like (a); the so-called ‘genetic code’ seems to be a system like (c); the so-called ‘code of kinship’ is either an underlying combinational system like (a) or a system of pertinent parenthood units very similar to (b).

Since this homonymy has empirical roots and can in some circumstances prove itself very useful, I do not want to challenge it. But in order to avoid the considerable theoretical damage that its presence can produce, one must clearly distinguish the two kinds of so-called ‘codes’ that it confuses: I shall therefore call a system of elements such as the syntactic, semantic and behavioral ones outlined in (a), (b) and (c) an s-code (or code as system); whereas a rule coupling the items of one s-code with the items of another or several other s-codes, as outlined in (d), will simply be called a code.

S-codes are systems or ‘structures’ that can also subsist independently of any sort of significant or communicative purpose, and as such may be studied by information theory or by various types of generative grammar. They are made up of finite sets of elements oppositionally structured and governed by combinational rules that can generate both finite and infinite strings or chains of these elements. However, in the social sciences (as well as in some mathematical disciplines), such systems are almost always recognized or posited in order to show how one such system can convey all or some of the elements of another such system, the latter being to some extent correlated with the former (and vice versa). In other words these systems are usually taken into account only insofar as they constitute one of the planes of a correlational function called a ‘code’.

Since an s-code deserves theoretical attention only when it is inserted within a significant or communicational framework (the code), the theoretical attention is focused on its intended purpose: therefore a non-significant system is called a ‘code’ by a sort of metonymical transference, being understood as part of a semiotic whole with which it shares some properties.

Thus an s-code is usually called a ‘code’, but this habit relies on a rhetorical convention that it would be wise to eliminate. On the contrary, the term /s-code/ can legitimately be applied to the semiotic phenomena (a), (b) and (c) without any danger of rhetorical abuse, since all of these are, technically speaking, ‘systems’ submitted to the same formal rules even though composed of very different elements, namely (a) electric signals, (b) notions about states of the world and (c) behavioral responses.

Taken independently of the other systems with which it can be correlated, an s-code is a structure; that is, a system (i) in which every value is established by positions and differences and (ii) which appears only when different phenomena are mutually compared with reference to the same system of relations. “That arrangement alone is structured which meets two conditions: that it be a system, ruled by an internal cohesiveness; and that this cohesiveness, inaccessible to observation in an isolated system, be revealed in the study of transformations, through which the similar properties in apparently different systems are brought to light” (Lévi-Strauss, 1960).

In the Watergate Model, systems (a), (b) and (c) are homologously structured. Let us consider system (a): there are four elements (A, B, C, D) which can be either present or absent:

A = 1000

B = 0100

C = 0010

D = 0001

The messages they generate can be represented in the same way:

AB = 1100

CD = 0011

BC = 0110

AD = 1001

AB is recognizable because the order of its features is oppositionally different from that of BC, CD and AD and so on. Each element of the system can be submitted to substitution and commutation tests, and can be generated by the transformation of another element; furthermore the whole system could work equally well even if it organized four fruits, four animals or the four musketeers instead of four bulbs.
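The positional coding just described lends itself to a mechanical check. The Python sketch below treats each bulb as one bit of a four-place vector and uses Hamming distance (the number of positions in which two vectors differ), a standard coding-theory measure brought in here for illustration rather than one the text itself employs. It shows that any two pertinent messages differ in at least two positions, so a single spurious or missing bulb never turns one valid message into another. The names `FEATURES`, `encode` and `hamming` are hypothetical.

```python
# Each bulb is one position in a four-place vector (A B C D).
FEATURES = {"A": 0b1000, "B": 0b0100, "C": 0b0010, "D": 0b0001}
MESSAGES = ["AB", "BC", "CD", "AD"]


def encode(message: str) -> int:
    """Combine the features of a message by bitwise OR (AB -> 1100)."""
    value = 0
    for feature in message:
        value |= FEATURES[feature]
    return value


def hamming(x: int, y: int) -> int:
    """Number of positions in which two four-place vectors differ."""
    return bin(x ^ y).count("1")


codes = {m: encode(m) for m in MESSAGES}
for m, v in codes.items():
    print(m, format(v, "04b"))   # AB 1100, BC 0110, CD 0011, AD 1001

# Smallest distance between any two distinct pertinent messages.
min_distance = min(hamming(codes[x], codes[y])
                   for x in MESSAGES for y in MESSAGES if x < y)

# Flip each single position of each valid message: none lands on another
# valid message, because the result has one or three bulbs lit.
valid = set(codes.values())
flips = [v ^ (1 << i) for v in valid for i in range(4)]
undetected = [f for f in flips if f in valid]
print(min_distance, undetected)  # 2 []
```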

The (b) system relies upon the same structural mechanism. Taking 1 as the minimal pertinent unit of water, the increase of water from insufficiency to danger might follow a sort of ‘iconic’ progression whose opposite would be the regression represented by the (c) system, in which 0 represents the minimal pertinent unit of evacuated water:

| (b) | (c) |
|---|---|
| (danger) 1111 | 0000 (evacuation) |
| (alarm) 1110 | 0001 (alarm) |
| (security) 1100 | 0011 (rest) |
| (insuff.) 1000 | 0111 (admission) |

By the way, if an inverse symmetry appears between (b) and (c), this is because the two systems are in fact considered as balancing each other out; whereas the representation of the structural properties of system (a) does not look homologous to the other two because the correspondence between the strings in (a) and the units of (b) and (c) was arbitrarily chosen. One could have chosen the message ABCD (1111) to signal “danger” and to elicit “evacuation”. But, as was noted in 1.1.3, this choice would have exposed the informational process to a greater risk of noise. Since the three systems are not considered here according to their possible correlation, I am only concerned to show how each can, independently of the others, rely on the same structural matrix, which is able to generate different combinations following diverse combinational rules. When the formats of the three systems are compared, their differences and their potential for mutual transformation become clear, precisely because they share the same underlying structure.
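The inverse symmetry between (b) and (c) is, concretely, a bitwise complement: every 1 in a (b) row becomes a 0 in the facing (c) row and vice versa. A short Python check of the table above follows; the dictionary names are illustrative, and the (c) strings are keyed by the (b) row they face (danger faces evacuation, security faces rest, insufficiency faces admission).

```python
# Rows of the (b) and (c) systems as four-place strings.
WATER = {"danger": "1111", "alarm": "1110",
         "security": "1100", "insufficiency": "1000"}
# (c) rows keyed by the (b) row they face in the table.
RESPONSE = {"danger": "0000", "alarm": "0001",
            "security": "0011", "insufficiency": "0111"}


def complement(bits: str) -> str:
    """Swap every 1 for 0 and every 0 for 1."""
    return bits.translate(str.maketrans("01", "10"))


# Every (c) row is the complement of its (b) row.
for level, bits in WATER.items():
    assert RESPONSE[level] == complement(bits), level
print("(c) is the complement of (b) in every row")
```

Nothing comparable holds between (a) and the other two systems, which is the arbitrariness of the correlation the paragraph points out.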

The structural arrangement of a system has an important practical function and shows certain properties(4). It makes a situation comprehensible and comparable to other situations, therefore preparing the way for a possible coding correlation. It arranges a repertoire of items as a structured whole in which each unit is differentiated from the others by means of a series of binary exclusions. Thus a system (or an s-code) has an internal grammar that is properly studied by the mathematics of information. The mathematics of information, in principle, has nothing to do with engineering the transmission of information, insofar as it only studies the statistical properties of an s-code. These statistical properties permit a correct and economic calculation as to the best transmission of information within a given informational situation, but the two aspects can be considered independently.

What is important, on the other hand, is that the elements of an informational ‘grammar’ explain the functioning not only of a syntactic system, but of every kind of structured system, such as for example a semantic or a behavioral one. What information theory does not explain is the functioning of a code as a correlating rule. In this sense information theory is neither a theory of signification nor a theory of communication but only a theory of the abstract combinational possibilities of an s-code.

Let us summarize the present methodological situation:

The term /information/ has two basic senses: (a) it means a statistical property of the source; in other words, it designates the amount of information that can be transmitted; (b) it means a precise amount of selected information which has actually been transmitted and received. Information in sense (a) can be viewed either as (a, i) the information at one’s disposal at a given natural source or as (a, ii) the information at one’s disposal once an s-code has reduced the equi-probability of that source. Information in sense (b) can be computationally studied either as (b, i) the passage through a channel of signals which have no communicative function and are thus simply stimuli, or as (b, ii) the passage through a channel of signals which do have a communicational function, which, in other words, have been coded as the vehicles of some content units.

Therefore we must take into account four different approaches to four different formal objects, namely:

(a, i) the results of a mathematical theory of information as a structural theory of the statistical properties of a source (see 1.4.2); this theory does not directly concern a semiotic approach except insofar as it leads to approach (a, ii);

(a, ii) the results of a mathematical theory of information as a structural theory of the generative properties of an s-code (see 1.4.3); such an approach is useful for semiotic purposes insofar as it provides the elements for a grammar of functives (see 2.1);

(b, i) the results of studies in informational engineering concerning the process whereby non-significant pieces of information are transmitted as mere signals or stimuli (see 1.4.4); these studies do not directly concern a semiotic approach except insofar as they lead to approach (b, ii);

(b, ii) the results of studies in informational engineering concerning the processes whereby significant pieces of information used for communicational purposes are transmitted (see 1.4.5); such an approach is useful from a semiotic point of view insofar as it provides the elements for a theory of sign production (see chapter 3).

Thus a semiotic approach is principally interested in (a, ii) and (b, ii); it is also interested in (a, i) and (b, i) – these constituting the lower threshold of semiotics – inasmuch as the theory and the engineering of information offer it useful and more effective categories.

As will be shown in chapter 2, a theory of codes, which studies the way in which a system of type (a, ii) becomes the content plane of another system of the same type, will use categories such as ‘meaning’ or ‘content’. These have nothing to do with the category of ‘information’, since information theory is not concerned with the contents that the units it deals with can convey but, at best, with the internal combinational properties of the system of conveyed units, insofar as this too is an s-code.(5)

According to sense (a, i), information is only the measure of the probability of an event within an equi-probable system. The probability is the ratio between the number of cases that turn out to be realized and the total number of possible cases. The relationship between a series of events and the series of probabilities connected to it is the relationship between a geometrical progression and an arithmetical one, the latter representing the binary logarithm of the former: each doubling of the number of equi-probable alternatives adds one bit. Thus, given an event to be realized among n equi-probable alternatives, the amount of information represented by the occurrence of that event, once it has been selected, is given by

$$\log_2 n = x$$

In order to isolate that event, x binary choices are necessary and the realization of the event is worth x bits of information. In this sense the value ‘information’ cannot be identified with the possible content of that event when used as a communicational device. What counts is the number of alternatives necessary to define the event without ambiguity.
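The correspondence between log₂ n and the number of binary choices can be made concrete: isolating one of n alternatives by repeatedly halving the field takes log₂ n yes/no questions when n is a power of two. The Python sketch below assumes the halving strategy as the way of ‘isolating’ the event; the function names `bits_for` and `isolate` are hypothetical.

```python
import math


def bits_for(n: int) -> float:
    """Information, in bits, of selecting one event among n equi-probable ones."""
    return math.log2(n)


def isolate(target: int, n: int) -> int:
    """Count the yes/no questions needed to pin down `target` in range(n)
    by halving the remaining field at each step."""
    lo, hi, questions = 0, n, 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        questions += 1
        if target < mid:   # "is it in the lower half?"
            hi = mid
        else:
            lo = mid
    return questions


print(bits_for(16))                        # 4.0
print({isolate(t, 16) for t in range(16)})  # {4}
```

Every one of the sixteen events is isolated by exactly four binary choices, which is what it means for the occurrence of such an event to be worth 4 bits.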

Nevertheless the event, inasmuch as it is selected, is already a detected piece of information, ready to be transmitted, and in this sense it concerns theory (b, i) more specifically.

On the contrary, information in the sense (a, i) is not so much what is ‘said’ as what can be ‘said’. Information represents the freedom of choice available in the possible selection of an event and therefore it is first of all a statistical property of the source. Information is the value of equi-probability among several combinational possibilities, a value which increases along with the number of possible choices: a system where not two or sixteen but millions of equi-probable events are involved is a highly informative system. Whoever selected an event from a source of this kind would receive many bits of information. Obviously the received information would represent a reduction, an impoverishment of that endless wealth of possible choices which existed at the source before the event was chosen.

Insofar as it measures the equi-probability of a uniform statistical distribution at the source, information – according to its theorists – is directly proportional to the ‘entropy’ of a system (Shannon and Weaver, 1949), since the entropy of a system is the state of equi-probability to which its elements tend. If information is sometimes defined as entropy and sometimes as ‘neg-entropy’ (and is therefore considered inversely proportional to the entropy) this is because in the former case information is understood in sense (a, i), while in the latter information is understood in sense (b, i), that is, information as a selected, transmitted and received piece of information.
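The claim that information in sense (a, i) is greatest when the source is equi-probable can be checked with Shannon’s entropy formula, H = −Σ p log₂ p, which the text alludes to but does not write out. In this Python sketch the skewed distribution is a made-up example chosen only for contrast, not data from any source.

```python
import math


def entropy(probabilities: list[float]) -> float:
    """Shannon entropy H = -sum(p * log2 p), in bits.

    Zero-probability events contribute nothing and are skipped.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


uniform = [1 / 4] * 4          # four equi-probable states
skewed = [0.7, 0.1, 0.1, 0.1]  # hypothetical, for contrast only

print(entropy(uniform))  # 2.0, equal to log2(4)
print(entropy(skewed))   # about 1.36, lower than the uniform case
```

Any departure from equi-probability lowers H below log₂ n; this is the sense in which information as a property of the source grows with entropy.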

Nevertheless in the preceding pages information has instead appeared to be the measure of freedom of choice provided by the organized structure known as an s-code. And in the Watergate Model the s-code appeared as a reductive network, superimposed on the infinite array of events that could have taken place within the watershed in order to isolate a few pertinent events.

I shall now try to demonstrate how such a reduction is usually due to a project for transmitting information (sense b, i), and how this project gives rise to an s-code that can in itself be considered a new type of source endowed with particular informational properties – which are the object of a theory of s-codes in the sense (a, ii).

Examples of this kind of theory are represented by structural phonology and many types of distributional linguistics, as well as by some structural theories of semantic space (for instance Greimas, 1966, 1970), by theories of generative grammar (Chomsky & Miller, 1968; etc.) and by many theories of plot structure (Bremond, 1973) and of text-grammar (Van Dijk, 1970; Petöfi, 1972).

If all the letters of the alphabet available on a typewriter keyboard were to constitute a system of very high entropy, we would have a situation of maximum information. Following an example of Guilbaud’s: on a typewritten page I can predict the existence of 25 lines, each with 60 spaces; the typewriter keyboard has (in this case) 42 keys, each of which can produce 2 characters; and with the addition of spacing (which has the value of a sign), the keyboard can thus produce 85 different signs. The result is the following problem: given that 25 lines of 60 spaces make 1,500 spaces available, how many different sequences of 1,500 spaces can be produced by filling each space with one of the 85 signs provided by the keyboard?

We obtain the total number of messages of length L provided by a keyboard of C signs by raising C to the power of L. In our case we would be able to produce $85^{1500}$ possible messages. This is the situation of equi-probability which exists at the source; the number of possible messages has 2,895 digits.

But how many binary choices are necessary to single out one of the possible messages? An extremely large number, the transmission of which would require an impressive expense of time and energy.
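Guilbaud’s figures can be verified exactly, since Python integers have arbitrary precision. The sketch below computes the number of possible pages, counts its decimal digits, and takes the number of binary choices needed to single out one page as ⌈log₂ N⌉, obtained here via `int.bit_length`; the variable names are illustrative.

```python
SIGNS = 85               # 42 keys x 2 characters + the space
LENGTH = 25 * 60         # 1,500 spaces on a typewritten page

pages = SIGNS ** LENGTH  # C to the power of L, as an exact integer
digits = len(str(pages))             # decimal digits of that number
choices = (pages - 1).bit_length()   # ceil(log2(pages)) binary choices

print(LENGTH, digits, choices)  # 1500 2895 9615
```

Roughly 9,600 binary choices for a single page gives some measure of the ‘impressive expense of time and energy’ that transmitting an unconstrained selection would require.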

The information as freedom of choice at the source would be noteworthy, but the possibility of transmitting this potential information so as to realize finished messages is very limited (Guilbaud, 1954). Here is where an s-code’s regulative function comes into play.

The number of elements (the repertoire) is reduced, as are their possible combinations. Into the original situation of equi-probability is introduced a system of constraints: certain combinations are possible and others less so. The original information diminishes, the possibility of transmitting messages increases.

Shannon (1949) defines the information of a message, which implies N choices among h symbols, as:

$$I = N \log_2 h$$

(a formula which is reminiscent of that of entropy). A message selected from a very large number of symbols (among which an astronomical number of combinations may be possible) would consequently be very informative, but would be impossible to transmit because it would require too many binary choices.
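Shannon’s formula can be applied directly to the two examples in this section. The Python sketch below evaluates I = N log₂ h for the Watergate Model (two choices among four bulbs) and for Guilbaud’s page (1,500 choices among 85 signs); the function name `shannon_information` is hypothetical.

```python
import math


def shannon_information(n_choices: int, h_symbols: int) -> float:
    """I = N log2 h: bits carried by a message of N choices among h symbols."""
    return n_choices * math.log2(h_symbols)


# Watergate Model: two signals chosen among four bulbs.
print(shannon_information(2, 4))               # 4.0
# Guilbaud's typewritten page: 1,500 choices among 85 signs.
print(round(shannon_information(1500, 85)))    # 9614
```

The gap between the two results shows why reducing N and h is what makes transmission practicable.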

Therefore, in order to make it possible to form and transmit messages, one must reduce the values of N and h. It is easier to transmit a message which is to provide information about a system of elements whose combinations are governed by a system of established rules. The fewer the alternatives, the easier the communication.

The s-code, with its criteria of order, introduces these communicative possibilities: the s-code represents a system of discrete states superimposed on the equi-probability of the original system, in order to make it more manageable.

However, it is not the statistical value ‘information’ which requires this element of order, but ease of transmission.

When the s-code is superimposed upon a source of extreme entropy like the typewriter keyboard, the possibilities for choice that the latter offers are reduced; as soon as I, possessing an s-code such as English grammar, begin to write, the source has a lower entropy. In other words, the keyboard cannot produce all of the $85^{1500}$ messages that are possible on one page, but a much smaller number, selected according to rules of probability which correspond to a system of expectations and are therefore much more predictable. Even though the number of possible messages on a typed page is of course still very high, the system of rules introduced by the s-code prevents my message from containing a sequence of letters such as /Wxwxscxwxscxwxx/ (except in metalinguistic formulations such as the present one).

Given, for instance, the syntactic system of signals in the Watergate Model, the engineer had a set of distinctive features (A, B, C, D) to combine in order to produce as many pertinent larger units (messages like AB) as possible.(6)

Since the probability of the occurrence of a given feature among four is 1/4, and the probability of the co-occurrence of two features is 1/16, the engineer had at his disposal (as shown in 1.1) sixteen possible messages, each of them amounting to 4 bits of information. This system constitutes a convenient reduction of the information possible at the source (so that the engineer no longer has to control and predict an infinite set of states of the water), and is at the same time a rich (although reduced) source of equi-probabilities. Nevertheless, we have already seen that accepting all 16 possible messages would have led to many ambiguous situations. The engineer has therefore drastically reduced his field of probabilities, selecting as pertinent only four states of the water (as well as four mechanical responses and four conveying signals). By reducing the number of probabilities in his syntactic system, the engineer has also reduced the number of events he can detect at the source. The s-code of signals, entailing two other structurally homologous s-codes (the semantic and behavioral systems), has superimposed a restricted system of possible states on the larger one which an information theory in sense (a, i) might have considered a property of an indeterminate source. Now every message transmitted and received according to the rules of the syntactic system, even though it is always theoretically worth 4 bits, can technically be selected by means of two binary choices, given that these are limited to four pre-selected combinations (AB, BC, CD, AD); it therefore ‘costs’ only 2 bits.
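The difference between the theoretical 4 bits and the actual cost of 2 bits can be sketched in Python. The function `select` is hypothetical: it shows one possible pair of binary choices that picks out each of the four pertinent messages, with the first question splitting {AB, BC} from {CD, AD}; any other even split would do equally well.

```python
import math
from itertools import product

SIGNALS = "ABCD"
all_messages = ["".join(p) for p in product(SIGNALS, repeat=2)]  # the 16 of 1.1
pertinent = ["AB", "BC", "CD", "AD"]  # the four the engineer keeps

print(math.log2(len(all_messages)))  # 4.0 bits per message in principle
print(math.log2(len(pertinent)))     # 2.0 bits once the field is restricted


def select(message: str) -> tuple[int, int]:
    """Two yes/no answers that single out one of the four pertinent messages.

    First answer: AB/BC (0) versus CD/AD (1); second answer: which of the pair.
    """
    index = pertinent.index(message)
    return divmod(index, 2)


for m in pertinent:
    print(m, select(m))  # AB (0, 0), BC (0, 1), CD (1, 0), AD (1, 1)
```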

By means of the same structural simplification, the engineer has brought three different systems under semiotic control, and it is because of this that he has been able to correlate the elements of one system with the elements of the others, thus instituting a code. Certain technical communicative intentions (b, ii), relying on certain technical principles of the type (b, i), have led him, on the basis of the principles of (a, i), to establish systems of the type (a, ii) in order to set up a system of sign-functions called a ‘code’(7).
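The idea of a code as a correlation between homologous systems can be sketched as a mapping keyed on the syntactic units. The four signals below are those named in the text. The labels for the states of the water and for the mechanical responses are hypothetical placeholders, because this passage does not restate which signal corresponds to which state. The only point of the sketch is that each element of one system is paired with exactly one element of each of the others.

```python
# Syntactic s-code: the four pertinent signals named in the text.
SIGNALS = ("AB", "BC", "CD", "AD")

# Semantic and behavioral s-codes: hypothetical placeholder labels, one per signal.
STATES = ("state 1", "state 2", "state 3", "state 4")
RESPONSES = ("response 1", "response 2", "response 3", "response 4")

# The three systems must be structurally homologous: same number of elements.
assert len(SIGNALS) == len(STATES) == len(RESPONSES)

# The code correlates the elements of the three systems term by term.
CODE = {
    signal: {"content": state, "response": response}
    for signal, state, response in zip(SIGNALS, STATES, RESPONSES)
}


def interpret(signal: str) -> dict:
    """Return the content and the behavioral response correlated with a signal.

    A combination outside the syntactic system (e.g. 'AC') has no correlate,
    so the lookup raises KeyError instead of guessing.
    """
    return CODE[signal]


def is_homologous(code: dict) -> bool:
    """True if the correlation is one-to-one across all three systems."""
    contents = [entry["content"] for entry in code.values()]
    responses = [entry["response"] for entry in code.values()]
    return len(set(contents)) == len(code) == len(set(responses))


print(interpret("BC"))
print(is_homologous(CODE))
```

A dictionary keyed on the signals is used only because it makes the term-by-term correlation explicit. Whether the engineer built the three systems first or correlated units first, as the next paragraph discusses, is not something the data structure decides.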

This chapter may justifiably leave unexplained, as a pseudo-problem, the question of whether the engineer first produced three organized s-codes in order to correlate them within the framework of a code, or whether he correlated, step by step, scattered and unorganized units from different planes of reality and only then structured them into homologous systems. Choosing between these two hypotheses demands, in the case of the Watergate Model, a psychological study or a biographical sketch of the engineer; but for more complicated cases such as the natural languages, it demands a theory of the origins of language, a matter which linguists have so far avoided. In the final analysis, what is needed is a theory of intelligence, which is not my particular concern here, even though a semiotic enquiry must continuously keep in view the entire range of its possible correlations with such a theory.

What remains undisputed is that, not without reason, a code is continually confused with the s-codes: whether the code has determined the format of the s-codes or vice versa, a code exists because the s-codes exist, and the s-codes exist because a code exists, has existed or has to exist. Signification encompasses the whole of cultural life, even at the lower threshold of semiotics.

1. The following model is borrowed from De Mauro, 1966 (now in De Mauro, 1971). It is one of the clearest and most useful introductions to the problems of coding in semiotics.

2. The absence of one of the signals is no longer a signal, as it was in the preceding case (+A vs. -A), for now the absence of one signal is the condition for the detected presence of the other. On the other hand, both their concurrent absence and their concurrent presence can be taken as synonymous devices, both of which reveal something wrong with the apparatus.
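The logic of this note can be written as a small decision table. The sketch assumes two boolean inputs for the presence of A and of B. The returned strings are hypothetical labels, since the note does not restate which state of the water each signal stands for.

```python
def read_signals(a_present: bool, b_present: bool) -> str:
    """Decode the A-vs-B opposition described in note 2.

    Exactly one signal present: a meaningful reading, since the absence of the
    other is only the condition for detecting it. Both present or both absent:
    synonymous outcomes, each revealing a fault in the apparatus.
    """
    if a_present != b_present:
        return "state signalled by A" if a_present else "state signalled by B"
    return "apparatus fault"


for a in (True, False):
    for b in (True, False):
        print(a, b, read_signals(a, b))
```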

3. Clearly, from now on the code is valid even if the machine (whether by mistake or under the influence of an evil demon, Descartes’s malin génie) lies: the signals are supposed to refer to actual states of the water, but what they convey are not actual states but notions about actual states.

4. A problem appears at this point: is structure, thus defined, an objective reality or an operational hypothesis? In the following pages the term ‘structure’ will be used in accordance with the following epistemological presupposition: a structure is a model built and posited in order to standardize diverse phenomena from a unified point of view. One is entitled to suspect that, as long as these simplifying models succeed in explaining many phenomena, they may well reproduce some ‘natural’ order or reflect some ‘universal’ functioning of the human mind. The methodological fault it seems to me important to avoid is the ultimate assumption that, having succeeded in explaining some phenomena by unified structural models, one has grasped the format of the world (or of the human mind, or of social mechanisms) as an ontological datum. For arguments against this kind of ontological structuralism see Eco, 1968.

5. Thus it is correct to say that in the Watergate Model the destination apparatus does not rely on a code, that is, does not receive any communication, and therefore does not ‘understand’ any sign-function. For the destination apparatus is the formal object of a theory (b, i) which studies the amount of stimuli that pass through a channel and arrive at a destination. By contrast, the engineer who has established the model is also concerned with a theory (b, ii) according to which, as far as he is concerned, signals convey contents and are therefore signs. The same holds for the so-called ‘genetic code’. It is the object of theories of both types (a, i) and (b, i); it could be the object of a theory of type (b, ii) only for God or for some other being able to design a system for transmitting genetic information. As a matter of fact, the description geneticists give of genetic phenomena, superimposing an explanatory structure on an imprecise array of biological processes, is an s-code: therefore the ‘genetic code’ can be the object of a theory of the type (a, ii), thus allowing metaphorical and didactic explanations of the type (b, ii). See note 4 and the discussion in 0.7. For a semiotic ‘reading’ of the genetic code see also Grassi, 1972.

6. In linguistics, features such as A, B, C, D are elements of second articulation, devoid of meaning (like the phonemes in verbal language), that combine to form elements of first articulation (such as AB), endowed with meaning (like the morphemes, or monemes in Martinet’s sense). According to Hjelmslev, when pertinent and non-significant features such as A, B, C, D are elements of a non-verbal system, they can be called ‘figurae’.

7. The ambiguous relation between source, s-code, and code arises because an s-code is posited in order to enable some syntactic units to convey semantic units that are supposed to coincide with events happening at a given source. In this sense a syntactic code is so strongly conditioned by its final purpose (and a semantic system so heavily marked by its supposed capacity to reflect what actually happens in the world) that it is easy to understand (though less easy to justify) why all three formal objects of the three different theories are naively called simply ‘code’.