Chapter 13. AI Key Considerations

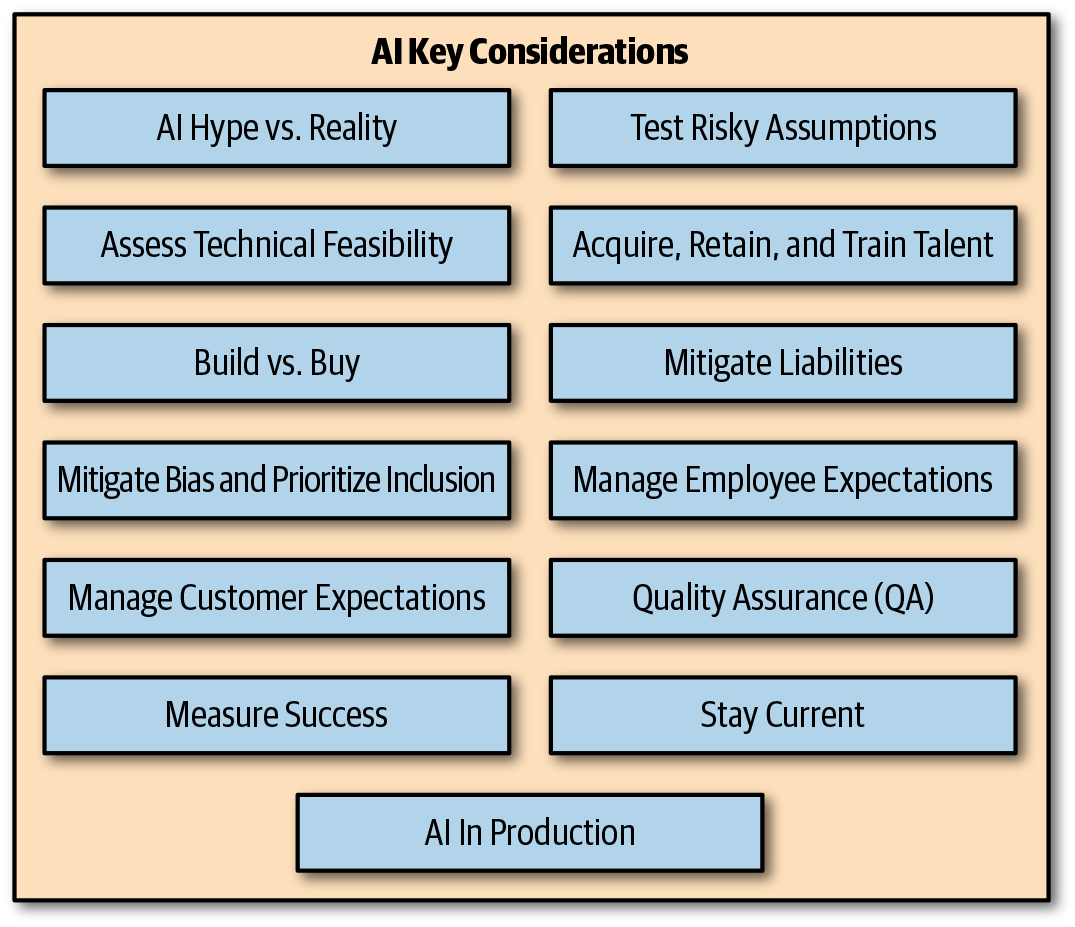

This chapter covers the third and final category of the AIPB Assessment Component: the many key considerations that you need to take into account, and plan for, when developing an AI strategy. Readiness, maturity, and key consideration assessments should be completed as part of the assess methodology phase to create your assessment strategy. Figure 13-1 shows specific key considerations that we cover in this chapter.

Figure 13-1. AI key considerations

Other critical considerations, not noted in Figure 13-1, are ethics and human values. You should never lose sight of these two things. AI-based solutions should be ethically designed and built to benefit people. With that as our foundational key consideration, let’s discuss the other key AI considerations.

AI Hype versus Reality

Many people are under the impression that AI is very close to, or has already achieved, AGI. This is partly due to some of what we see in science-fiction TV shows, movies, and comic books. The Terminator movie franchise is a classic example, and other good examples include Ex Machina, Westworld, and C-3PO from Star Wars.

These incorrect impressions are also largely due to product marketing, over-promising, and the tendency of many people and companies to call just about anything AI—what I collectively refer to as AI hype. You see this a lot with software products, particularly SaaS. Having a few metrics in a dashboard does not qualify as being AI powered, and yet many companies continue to say that they “do” or “have” AI with analytics not much more sophisticated than that. “Real” AI is intelligence exhibited by machines. If a machine doesn’t learn, generate some degree of understanding, and then use the knowledge learned to do something, it’s not AI.

The reality of AI is that, as discussed earlier in the book, it is currently a one-trick pony that is mainly used to solve highly specialized problems, and as of this writing, AGI is still a long way off. AGI might never become a reality in our lifetimes.

AI as a field is also still in its infancy, and the real-world use cases and applications are growing daily. AI is advancing at a rapid pace, and there is a lot of important research being done. One of the main questions around AI is whether current techniques such as deep learning can ever be adapted to multitasking and, ultimately, AGI, or whether we need to find and develop a completely new approach to AI that doesn’t yet exist.

AGI is also related to a question of AI for human automation versus AI for augmented intelligence, and currently most executives and companies are interested in augmented intelligence over automation. Also, there is something very interesting known as the Paradox of Automation. The paradox says that the more efficient an automated system becomes, the more critical the contribution of the human operator becomes.

For example, if an automated system experiences an error (machines are never perfect; bugs exist), the error can multiply or spiral out of control until it is fixed or the system shuts down. Humans are needed to handle this situation. Think about all of the movies or TV shows you’ve seen in which an automated process (e.g., on a spaceship, on an airplane, in a nuclear power plant) fails and humans are deployed to find workarounds and heroically solve the problem.

Lastly, given all of the AI hype and proliferation of AI tools, there are some people who seem to think that starting an AI project should be relatively easy and that they can quickly expect big gains. The reality is that planning and building AI solutions is difficult, and there’s a significant talent shortage. There’s a major shortage of data scientists and machine learning engineers in general, particularly those who have AI-specific expertise and skills.

Even when you have the right talent, AI initiatives can still be very difficult to execute successfully. That said, achieving success with highly custom and difficult-to-build AI solutions will likely generate significant differentiation and competitive advantage. This was highlighted by the Innovation Uncertainty Risk versus Reward Model covered in Chapter 12.

Also, there aren’t many simple automated ways to build many types of AI applications, or to access, move, and prepare data. That said, luckily there are new automation tools, techniques such as transfer learning, and shared models that can offer sufficient accuracy out of the box, or with only minor modifications.

In addition, large gains from AI can take significant time, effort, and cost. Some of those costs become sunk costs when projects are unsuccessful. Also, although there are many people working on simplifying AI for general use, AI research and techniques are still quite complex. Take a quick look at some of the latest AI research papers published to Cornell’s arXiv (pronounced “archive”) digital library to see for yourself.

A concept worth mentioning is that of the AI winter, which refers to periods of time in which financial and practical interest in AI is reduced, and sometimes significantly. There have been multiple rounds of AI winters since the early 1970s, due to many factors that we won’t discuss here. The AI winter concept is associated with the fact that technologies and products are subject to hype cycles that relate to their maturity, adoption, and applications.

This pattern certainly applies to AI and is the reason why we’re seeing so much hype about AI as of this writing. Ultimately, AI has enormous potential to drive real value for people and companies, but we are nowhere near the full realization of all of the potential capabilities, applications, benefits, and impacts. Regarding another AI winter possibly caused by unmet expectations due to the hype, renowned AI expert Andrew Ng does not think there will be one of any significance. This is simply because AI has come a long way and is now proving its value in innovative, exciting, and expanding ways in the real world.

Testing Risky Assumptions

Risky assumptions are made by people all of the time about many things. When building companies, products, and services, assumptions often represent potential risks that increase depending on the degree to which they’re incorrect, including potential costs (e.g., time and money) and lack of product-market fit and adoption, for example. Common assumptions and risk types associated with technology and innovation are around value, usability, feasibility, and business viability. Related questions include: for [insert any technology-based product or service here], will anyone find enough value to buy or use it, will users know how to use it and understand it without assistance, can it be built given our resources and time available, will this product achieve product-market fit and be profitable, and can we execute a successful go-to-market strategy?

The Segway is a great example of this. Dean Kamen and many others assumed that the product was going to disrupt and transform transportation for a very large number of people, and therefore quickly built a huge number of units. A lot of the public hype around the Segway’s release seemed to support that assumption, as well. Although Segway vehicles are still sold for highly specialized applications, they certainly did not have the expected massive impact and sales that Kamen and his company were hoping for.

By the time it was determined that the Segway assumptions were incorrect, a large amount of time, money, and other resources had been spent: approximately $100 million in R&D costs, according to some estimates.

For an AI-specific example, a six-year-old girl in early 2017 accidentally ordered a $170 dollhouse and four pounds of cookies just by having a conversation with Alexa about dollhouses and cookies. In this case, a potentially risky assumption is that consumers are aware of and will set up safety settings (e.g., add a passcode or turn off voice activated ordering) for these devices to prevent accidental orders by children.

The concept of the minimum viable product (MVP) stems from Lean manufacturing and software development, and it provides a mechanism to help mitigate risk and minimize unnecessary expenditure of time and cost. The primary idea is that the minimum amount of UX and software functionality should be built and put in users’ hands as quickly as possible to properly test the riskiest assumptions and validate product–market fit and user delight. Feedback and metrics from MVP pilots are then used to drive iterative improvements until a product or feature is “de-risked.” You should use this framework, or similar Agile and Lean methodologies, when developing AI solutions.

Assess Technical Feasibility

We discussed different goals for people and business stakeholders at length earlier in the book. The discussion was kept at an appropriately high level, although in reality, high-level goals driving real-world AI solutions must be broken into more granular goals or initiatives that are better suited to guiding the technical approaches to be used.

Machine learning models don’t fundamentally increase profits or reduce costs as outputs, for example. Machine learning models make predictions, determine classifications, assign probabilities, and produce other outputs based on the chosen technique as well as the data being leveraged, which must be appropriate and adequate for each of the possible output types (i.e., the “right” data, which we covered earlier in the book). Further, each of these output types could be used to achieve the same goal, albeit from a different angle—there’s usually more than one way to get the job done. Determining which approach and output type to use to best achieve a more granular goal (e.g., personalized podcast recommendations), which in turn will help achieve the top-level business goal (customer retention), along with having the “right” data on hand are what technical feasibility is all about.

Let’s assume that our top-level business goal is customer retention. Let’s further assume that our collaborative team of business folks, domain experts, and AI practitioners decide that AI-based personalization of a podcast app’s feed is likely to have the most potential ROI and retention lift out of the options being considered. In this case, the podcasts shown in each individual user’s feed can be displayed and ordered based on a predictive model that calculates the likelihood that the particular user will like it (a probability), or listed without a specific order based on a classifier that classifies each podcast as relevant or not relevant (only the ones classified as relevant are shown), or through some other method.
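The two feed options just described can be sketched in a few lines. This is only an illustration under stated assumptions: the podcast titles, the `like_probability` values, and the 0.5 relevance threshold are all hypothetical, standing in for whatever a trained model would actually produce.

```python
from typing import NamedTuple


class ScoredPodcast(NamedTuple):
    title: str
    like_probability: float  # hypothetical model output in [0, 1]


def rank_feed(scored):
    """Option 1: order the feed by predicted probability, highest first."""
    ordered = sorted(scored, key=lambda p: p.like_probability, reverse=True)
    return [p.title for p in ordered]


def filter_feed(scored, threshold=0.5):
    """Option 2: keep only podcasts classified as relevant, in original order."""
    return [p.title for p in scored if p.like_probability >= threshold]


# Made-up scores for one user; a real system would get these from a model.
scored = [
    ScoredPodcast("History Hour", 0.35),
    ScoredPodcast("Data Talk", 0.90),
    ScoredPodcast("Cooking Daily", 0.60),
]

ranked = rank_feed(scored)
relevant = filter_feed(scored)
print(ranked)    # ['Data Talk', 'Cooking Daily', 'History Hour']
print(relevant)  # ['Data Talk', 'Cooking Daily']
```

Both functions serve the same retention goal from different angles. Which one works better in practice is exactly the kind of question a technical feasibility assessment is meant to answer.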

The point is that a technical feasibility assessment involving an appropriately assembled, cross-functional team must be used to determine which approach will work best and whether the data can get the job done for the given choice. Think of a technical feasibility assessment as being the next, more granular, step of opportunity identification. This might require preparing data, and testing many possible options (remember this is scientific innovation!).

Acquire, Retain, and Train Talent

An incredibly important consideration is acquiring and retaining talent. AI, machine learning, and data science are difficult. There’s a lot of work being done by people and organizations to try to simplify and automate aspects of these fields, but they are still challenging and require very specialized expertise and experience.

The ideal data scientist is an expert programmer, statistician and mathematician, effective communicator, and MBA-level businessperson. This is a uniquely difficult (read, unicorn) combination to find in any one person. The reality is that people are often good at some of these things and less so at others. In addition, although most universities offer computer science degrees, education has not yet caught up to the demand for data science and advanced analytics talent, and therefore not many quality degree programs exist.

The end result is a significant shortage of highly competent and experienced talent. This includes leaders, managers, and practitioners alike. LinkedIn reported in August of 2018 that there was a national shortage of approximately 152,000 people with data science skills, and that the data science shortage was growing faster (accelerating) than the shortage for software development skills.1 Not having the necessary internal expertise, skills, and leadership while executing your AI strategy can result in unsuccessful initiatives, sunk costs, and wasted time.

As someone who has spent a lot of time recruiting data scientists and machine learning engineers, I can honestly say that it’s not easy. The vast majority of applicants that I’ve seen are either fresh out of college or in the process of switching careers. This type of applicant is often well suited for large organizations that have the infrastructure needed to nurture and train very junior talent. This can be more difficult for smaller to midsized data science teams, and for startups in general. Smaller companies also need to fiercely compete with larger and established tech companies for AI talent.

The good news for many small- to midsized companies is that many data scientists are very interested in working with a promising company that has a great value proposition and culture. In addition, people are often driven by the need to have a direct impact on a company’s success and are therefore attracted to smaller companies and data science teams. Companies that fit this description should tailor their messaging accordingly to better attract and retain top talent.

Another consideration is that data science and advanced analytics talent is expensive, and companies might need to spend a substantial amount of money depending on their needs and the talent market at the time. It is very important to ensure that you have the best talent and therefore the best chance for success and most return on dollars spent. Companies should thus prepare and budget accordingly. AI talent is not cheap. Multiple prominent articles have been published by the New York Times and others on the relatively high costs of AI talent.2

Given the shortage, competition, and relatively high costs for AI talent as described, a company must develop a strong recruiting, hiring, and/or staffing augmentation strategy. There are multiple options to consider when it comes to finding and hiring talent. Many include typical business considerations such as hiring contractors versus full-time employees, staff augmentation using a specialized firm, and hiring on-shore versus off-shore.

It’s worth noting that for talent hired outside of your organization and country, there can be a nontrivial risk of exposing and sharing your intellectual property and data outside of your organization, particularly in a different country with different laws, where you are not well equipped to monitor everything. This is becoming especially important given the focus on data privacy and security these days, including needing to be compliant according to regulations such as Europe’s GDPR.

Some of these regulations and standards are very specific about who can have access to sensitive data, and how and where they can access it. This can be exponentially more challenging to monitor and control with off-shore companies, so you must take extra care to ensure trust, compliance, and accountability. Whichever way you find and hire talent, be sure to properly vet candidates and potential partner firms, and assess and mitigate any potential risks. Strong leadership and expertise in data and advanced analytics is key here.

Another option to consider is an acquihire; that is, buying a company primarily to acquire its data science and advanced analytics talent. Acquihires can require a significant amount of money and present a unique set of challenges, but they might be a viable option for obtaining talent in a hurry, and they have been a strategy employed by many companies.

The last potential option is training talent internally, which can be an excellent choice for overcoming talent shortage challenges. In many ways, this option is ideal but can require a lot of upfront training infrastructure setup and development, talent, cost, and time, particularly when developing an effective and structured curriculum.

Creating an internal advanced analytics training program allows a company to train for exactly what’s needed, both in terms of expertise and tools. It should include training for programming, mathematics, statistics, data science principles, machine learning algorithms, and more.

As someone who has taught and trained thousands of people, and developed curriculum from scratch, I can say that this is easier said than done, although it is certainly doable. Going this route requires making certain decisions, as well. What education, experience, skills, and background are required to get into the training program? How are those things assessed? Ultimately, you are trying to assess potential more than actual skills and expertise given that your company will be the one filling those gaps.

Other things to determine are how to assess progress and knowledge learned throughout the training process, which includes assessing hands-on projects, optimal training length, and whether trainees should work on actual business projects. You must also determine what the path for the employee looks like following training and whether there will be ongoing training.

Developing an effective and successful training program like this would be amazing and hugely powerful. This can also become increasingly necessary as AI becomes more predominant in the workplace, and people might need to be re-allocated to new jobs that require specialized training as a result.

An alternative, or an option for curriculum augmentation, would be to take advantage of many existing online training tools and courses (e.g., MOOCs).

One final thing to note. In addition to hiring and/or staffing augmentation, a strong emphasis should also be placed on employee onboarding, training, engagement, mentorship, development, and retention. Given the relative shortage and competition around talent, care should be given to providing the best work environment (e.g., safe, diverse, inclusive), culture, and opportunities possible in order to keep your best talent around for a long time.

Build Versus Buy

When it comes to employing technology for products, services, and operations, companies often need to determine whether to build versus buy. This is a very legitimate question.

To come to the “right” answer and make the corresponding decision, you can use common business and financial analysis techniques such as cost-benefit analysis (CBA), total cost of ownership (TCO), return on investment (ROI), and opportunity cost estimation. These techniques might require financial estimates and forecasting that can be imprecise and difficult to obtain. I would argue that you can answer the build versus buy question in a much simpler way in most cases.

First, is there a buy option available? If not, the answer is simple: you must build. Second, do you want to develop specific technologies as a core part of your business, or use technology to innovate, differentiate, and generate competitive advantage? If yes, you must build some or all of the total solution. It’s definitely worth determining, however, whether anything existing is available to use without you having to reinvent the wheel. When building technology solutions, it’s often best to use open source software and other tools such as APIs when available and at a reasonable cost, especially when free.

If innovation isn’t your goal and you’re mainly interested in applying technology to help improve business KPIs only, or to facilitate business operations and processes, there might be off-the-shelf solutions available that make more sense to buy. In this case, you need to very seriously weigh the buy benefits against potential disadvantages such as vendor lock-in, very high costs, data ownership, data portability, and vendor stability. There are few, if any, cases in which I would recommend any technology solution in which you do not own your data, or have the ability to move your data at will.

Generally speaking, I find that buying most often results in an inferior product that costs far more than building and is not customized to your specific business or needs. It also means that you’re buying a commoditized product that’s available to every one of your competitors, too, which means you have absolutely no advantage beyond being better at configuration or being more of a power user.

I’m always amazed at how many company executives will say that they must increase sales and profits, cut costs, and truly differentiate themselves from their competitors, and yet at the same time want to buy off-the-shelf solutions and avoid pursuing innovation. More and more every day, you can’t have both. They also realize how unhappy they are with aspects of a specific product or vendor and migrate to another as a result. I’ve seen this over and over. In many cases, the product or vendor that these executives migrate to presents some of the same problems they had before, and thus the cycle repeats. That tends to become pretty expensive pretty quickly, and incurs a ton of nonfinancial costs, as well. When the dust settles, they would have been better off building.

Ultimately, it comes down to whether you want to lead, differentiate, and truly strengthen your unique value proposition, or just simply follow and do what everyone else is doing. This is analogous to the ideas behind red ocean and blue ocean strategies for those familiar. Red oceans are characterized by fierce and dense competition and commoditization. Blue oceans, on the other hand, represent new and uncontested markets, free of competition, and where the demand is created as opposed to being competed for.

In that respect, it’s less of a question of build versus buy, but rather lead or follow. Leaders build and followers buy, almost always. Likewise, leaders are successful at finding new profit and growth opportunities through innovation and differentiation, whereas followers fight to stay afloat and maintain the status quo. I think that in today’s technology age, it’s become abundantly clear that innovators and leaders continue to disrupt and displace incumbents and those slow to innovate and embrace emerging technology.

One final note. We discussed scientific innovation already at length as well as the scientific, empirical, and nondeterministic nature of AI and machine learning. If these are insurmountable challenges for your company, it can be very difficult to pursue AI initiatives. In that case, using data is still critical and should be centered more on commoditized business intelligence and descriptive analytics until you’re able to innovate and build versus buy.

Mitigate Liabilities

There are many potential liabilities and risks when it comes to data and analytics, including those associated with regulations and compliance, data security and privacy, consumer trust, algorithmic opaqueness, lack of interpretability or explainability, and more.

Starting with regulation and compliance, most new initiatives are primarily centered on increasing data security and privacy. It is standard practice for companies to publish privacy policies, but very few people read these policies, and those who do often cannot understand all the details, given the legal jargon.

The EU’s GDPR is a major change in data privacy regulation that went into effect on May 25, 2018. GDPR currently applies only to the EU, but many US-based tech companies operate globally, and therefore need to be in compliance in the EU. According to the official GDPR website, stronger rules on data protection mean that people have more control over their personal data, and businesses benefit from a level playing field.

It remains to be seen whether the GDPR will be adopted by the United States or if it will create its own comparable data privacy regulation. In 2018, the state of California passed the California Consumer Privacy Act (AB 375). This could certainly be a step closer to the United States adopting stricter consumer privacy regulation in general.

In addition, trust is very important to most consumers. Consumer trust with respect to data means that consumers do not want their data to be used in ways they are unaware of or would not approve of. Examples include using people’s data in ways that benefit only business and not the consumer, and selling data to third parties without oversight or regard for its use after it’s transferred.

Customer inquiries and complaints can occur when people feel that their data is not properly secured or used. Lack of consumer trust can be a form of liability. It is critical that we use data in ethical ways while respecting consumer privacy, security, and trust. Again, the goal should always be to benefit people and business, not just business.

We can establish trust through transparency and disclosure. Potential areas of transparency for customers include transparency into a business in general—how and why certain business decisions are made—as well as transparency into technologies, algorithms, and third-party partners that use consumer data. This is particularly important in highly regulated industries like insurance, financial services, and health care.

In addition to the considerations discussed so far, explainability, algorithmic transparency, and interpretability can be critical in certain scenarios. These are not the same things, and it’s important to understand the differences. Explainability is the ability to describe very complex concepts, such as those very common with AI, in simple terms that almost anyone can understand. This means abandoning complex statistical, mathematical, and computer science jargon in exchange for easy-to-understand descriptions and analogies.

Algorithmic transparency means that any algorithms used, along with their intention, underlying structure, and outputs (e.g., decisions, predictions) should be made visible to any stakeholders (e.g., business, user, regulator) as needed. This can help build trust and provide accountability.

Interpretability refers to the ability of people to interpret exactly how certain algorithms and machine learning models make predictions, classifications, and decisions. Some algorithms and models are very difficult, if not impossible, to interpret and are usually referred to as “black boxes.” This can cause a problem, for example, when someone decides to sue a company over a decision or action that was carried out by an algorithm.

In court, for example, it can be very easy to explain why a person was algorithmically turned down for a financial loan if the decision was made using a highly interpretable decision tree–based algorithm. If the loan decision was made using a neural network or deep learning approach (covered more in Appendix A), on the other hand, all bets are off. You likely wouldn’t be able to justify and convince the judge as to why the decision was made exactly—a situation that would not be in your favor. It is for these reasons that highly interpretable and explainable algorithms are often chosen over their black-box counterparts in highly regulated industries, despite the potential model performance penalty.

Additional potential issues with black-box algorithms are lack of verifiability and diagnostic difficulty, as noted by Erik Brynjolfsson and Andrew McAfee in their Harvard Business Review article. Lack of verifiability simply means that it can be almost impossible to ensure that a given neural network will work in all situations, including those outside of how it was trained. Depending on the application, this could present a serious problem (e.g., in a nuclear power plant).

Diagnostic difficulty refers to potential issues with diagnosing errors, some of which might arise from similar factors that cause model drift, and subsequently being able to fix them. This is largely due to the complexity and lack of interpretability with neural network algorithms, as discussed.

Despite the aforementioned potential downsides of black-box algorithms, neural networks and deep learning techniques have many significant benefits that can outweigh the downsides. Some of these benefits include potentially much better performance, the ability to produce outcomes not possible using other techniques, and also the ability to do something that most other machine learning algorithms are unable to do (e.g., automatic feature extraction). This means that the algorithm removes the need for a human machine learning engineer to manually select features or create new features for model training. This can be a huge benefit, although it might not be clear what features a given neural network automatically generates and leverages, and therefore adds to the degree to which the neural network is a black box. In either case, the trade-offs between potential lack of interpretability and the benefits discussed present key considerations for which you must account.

Lastly, errors in general present a potential liability that you must consider, and in some cases, certain errors can have life-and-death consequences. Let’s discuss error types and potential consequences a bit more. As discussed earlier in the book, most AI and machine learning models are error based, which means that the models are trained using a training dataset until the chosen performance metric (and accompanying error) is within acceptable range when the model is tested against a test dataset.

Depending on the application, acceptable range can mean predicting something correctly 85% of the time, or it might mean choosing to favor a lower false positive (type 1 error) rate over a lower false negative (type 2 error) rate, or vice versa. Let’s look at two examples to explain the difference between these error types and their potential impact. The first example looks at email spam detection; the second examines diagnosing cancer from a medical test. In the spam detection case, an email is considered positive if it is spam, and in the cancer case, the test results are considered positive when actual cancer is diagnosed. False positive (type 1) errors occur when a predictive model incorrectly predicts a positive result (spam or cancer in our example), whereas false negative (type 2) errors occur when a predictive model incorrectly predicts a negative result (not spam or not cancer in our example).
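To make the two error types concrete, here is a minimal sketch that counts them from a handful of made-up labels. The `actual` and `predicted` lists are hypothetical (think of `True` as "spam" or "cancer"), and the function name is an illustrative choice, not something from a particular library.

```python
def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for boolean labels."""
    tp = fp = tn = fn = 0
    for is_positive, predicted_positive in zip(actual, predicted):
        if predicted_positive and is_positive:
            tp += 1  # correctly predicted positive
        elif predicted_positive and not is_positive:
            fp += 1  # false positive (type 1 error)
        elif not predicted_positive and is_positive:
            fn += 1  # false negative (type 2 error)
        else:
            tn += 1  # correctly predicted negative
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn}


# Hypothetical ground truth and model predictions for eight cases.
actual = [True, True, True, False, False, False, False, False]
predicted = [True, True, False, True, False, False, False, False]

counts = confusion_counts(actual, predicted)

# Share of actual negatives that were wrongly flagged as positive.
false_positive_rate = counts["fp"] / (counts["fp"] + counts["tn"])
# Share of actual positives that were missed.
false_negative_rate = counts["fn"] / (counts["fn"] + counts["tp"])

print(counts)  # {'tp': 2, 'fp': 1, 'tn': 4, 'fn': 1}
```

With these numbers, one of five actual negatives is wrongly flagged and one of three actual positives is missed. The 85% figure in the text corresponds to overall accuracy, which is a separate measure from either of these rates.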

In email spam detection, it’s more important to ensure important emails wind up in the inbox, even if that means letting a little bit of spam in, as well. This means that those building and optimizing the model will tune it to favor making false negative (type 2) errors over false positive (type 1) errors, and therefore some emails might be incorrectly classified as being not spam and sent to the inbox when they should have been sent to the spam folder.

In cancer detection and diagnosis, however, this trade-off and decision is significantly more important and carries potential life or death consequences. In this case, it’s much better to falsely diagnose someone with cancer (false positive—type 1 error) and ultimately find out it was a mistake, as opposed to telling a person they don’t have cancer when they actually do (false negative—type 2 error). Although the former case can cause the patient undue stress, additional expenses and medical tests, the latter case can cause the patient to leave the doctor’s office and go about their lives with the disease undiscovered and untreated until it’s too late.
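One common way this kind of tuning is done is by moving the decision threshold applied to a model's predicted probability. The sketch below assumes a hypothetical model that outputs a score between 0 and 1; the scores, labels, and the two thresholds are invented purely for illustration.

```python
def error_counts(scores, labels, threshold):
    """Return (false_positives, false_negatives) at a given decision threshold."""
    false_positives = sum(
        1 for score, is_positive in zip(scores, labels)
        if score >= threshold and not is_positive
    )
    false_negatives = sum(
        1 for score, is_positive in zip(scores, labels)
        if score < threshold and is_positive
    )
    return false_positives, false_negatives


# Hypothetical model scores and the true labels for eight cases.
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, True, False, False, False]

# Spam-filter style: only flag when very confident, accepting more misses.
strict = error_counts(scores, labels, threshold=0.70)
# Screening-test style: flag readily, accepting more false alarms.
lenient = error_counts(scores, labels, threshold=0.25)

print(strict)   # (0, 2)
print(lenient)  # (2, 0)
```

The same model produces both results; only the threshold changes. That is why the choice of which error to favor is as much a business and ethical decision as a technical one.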

In other applications, another type of error trade-off is referred to as precision versus recall, and similarly, decisions must be made about which is more important to favor. Google’s Jess Holbrook advises making a determination about whether “it’s important to include all the right answers even if it means letting in more wrong ones (optimizing for recall), or minimizing the number of wrong answers at the cost of leaving out some of the right ones (optimizing for precision).”3
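The standard definitions behind this trade-off are precision = TP / (TP + FP) and recall = TP / (TP + FN), where TP, FP, and FN are the counts of true positives, false positives, and false negatives. The sketch below applies them to two made-up result sets, assuming ten right answers exist in total; the counts are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Compute (precision, recall) from raw counts, guarding against empty sets."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Broad result set: finds 9 of 10 right answers but lets in 6 wrong ones.
broad = precision_recall(tp=9, fp=6, fn=1)
# Narrow result set: no wrong answers, but only 6 of 10 right ones.
narrow = precision_recall(tp=6, fp=0, fn=4)

print(broad)   # (0.6, 0.9)  -> optimized for recall
print(narrow)  # (1.0, 0.6)  -> optimized for precision
```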

There are many types of performance metrics and errors associated with machine learning, which can sometimes carry significant consequences if not tuned for correctly. It is very important that executives and managers understand the different types of potential errors and their impacts, as most practitioners (e.g., data scientists) are usually not in a position to properly assess, manage, or make key decisions around the potential risks involved.

Mitigating Bias and Prioritizing Inclusion

Another key consideration, and one that is becoming much more widely discussed, is the potential for AI applications to learn and exhibit bias. This is commonly called algorithmic bias and can include biases and potential discrimination based on race, ethnicity, gender, and demographics. Clearly, you need to avoid this.

Algorithmic bias is largely a data problem. Data can be biased because of the factors and conditions that it stems from (e.g., socioeconomic factors and low-income communities). In that sense it isn’t an AI problem per se, but a real-world systemic issue that is represented in the data and, through training, infused into AI solutions. The data might be biased in a way that reflects very poorly and inaccurately on a certain subset of people and therefore might not model reality. This data, when used without careful consideration of bias, can result in trained models that make very poor and unfair decisions. These decisions can further bias both reality and subsequent data, and therefore create a harmful, self-reinforcing feedback loop.

Inclusion, or lack thereof, is related to algorithmic bias as well. It is very important to prioritize inclusion from the outset of a project. This means making sure to gather and use as diverse and inclusive a dataset as possible. The ultimate goal is to ensure that a given AI solution provides benefits to all people, and not just one or a few select groups.
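One simple way to start looking for algorithmic bias is to compare how often a model produces a favorable outcome for different groups. The sketch below is only one of many possible checks and does not by itself establish that a model is fair or unfair; the group labels, decisions, and the helper name `approval_rate_by_group` are all hypothetical.

```python
from collections import defaultdict


def approval_rate_by_group(records):
    """Return the share of favorable decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


# Made-up model decisions: (group label, favorable decision?).
records = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, "gap:", gap)
```

A large gap between groups is a signal to investigate the training data and the model further, not a final verdict.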

Another consideration is known as confirmation bias. Confirmation bias is the tendency of people to see what they want to see if they look hard enough. An example of confirmation bias is when you’re interested in buying a new car and are considering a specific make and model. Often, people in this situation begin to notice that exact make and model on the roads much more than previously, and this helps confirm that they are making the right decision. This happens despite the fact that the number of cars of that make and model hasn’t changed, and that many other car models might be equally, if not more, represented on the roads.

I have seen confirmation bias many times when it comes to data. This type of bias can occur in different ways. One is that people simply think the data supports an idea or hypothesis they have even though that support is not concrete and largely subject to interpretation. Another possibility is that the data is manipulated in some way to include only supporting data while discarding the rest.

Regardless of how confirmation bias can occur, it is critical to let the data tell you what it has to say, assuming that you or your data science colleagues have the requisite expertise and skill set to extract key information and insights from the data. It is just as important to learn that a given hypothesis is wrong as it is to learn that it is right. Understanding what nonmanipulated deep insights the data contains is a critical step in utilizing the data in meaningful, high value, and optimal ways.

The final type of bias covered here is selection (aka sampling, or sample selection) bias. Selection bias occurs when data is not properly randomized prior to use with techniques such as statistics, machine learning, and predictive analytics. Lack of proper randomization can result in unrepresentative data that does not properly reflect the full data population.
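The effect of selection bias is easy to demonstrate with a small simulation. In this sketch the "population" is simply the numbers 1 through 1,000 (an assumption made purely for illustration, standing in for something like customer spend), and a sample made up of only the largest values is compared with a properly randomized sample of the same size.

```python
import random
from statistics import mean

# Hypothetical population: the values 1 through 1,000.
population = list(range(1, 1001))
population_mean = mean(population)

# Biased selection: only the 100 largest values (e.g., only top customers).
biased_sample = sorted(population, reverse=True)[:100]
biased_mean = mean(biased_sample)

# Randomized selection: 100 values drawn at random (seeded for repeatability).
rng = random.Random(42)
random_sample = rng.sample(population, 100)
random_mean = mean(random_sample)

print("population mean:", population_mean)
print("biased sample mean:", biased_mean)
print("random sample mean:", random_mean)
```

The biased sample’s mean lands far from the population mean, whereas the random sample’s mean should land reasonably close to it. Any statistic or model built on the biased sample inherits that distortion.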

Managing Employee Expectations

AI is good at making some people feel like either killer robots will take over the world, or in the less dramatic case, that they will be replaced at work by AI robots or automation. As such, it is quite possible that there will be deep employee concern about, and resistance to, AI initiatives, particularly those that could potentially automate parts of jobs or entire jobs.

Another potential employee concern is how and why AI is being used in certain applications, which ultimately stems from some people’s ethics, morals, and values. In some cases employees can feel very strongly against a particular AI application and protest the company as a result.

The main point here is that empathy and sensitivity should be given to these potential concerns and addressed accordingly. Again, trust often results from transparency, so it is very wise to educate employees on why certain AI initiatives are being pursued as part of an overall vision and strategy, and what employees can expect as a result. Great internal data and advanced analytics leadership is critical here, particularly in terms of the messaging (conveying value and vision), impact, rollout, and timing.

Managing Customer Expectations

As discussed, AI hype largely outpaces the current state of the art and actual real-world use. As a result, many people’s expectations of AI are unrealistic, and this can lead to disappointment and lack of appreciation for the many already-existing benefits of AI.

The impact of hype on people’s perception and expectations of AI solutions is especially notable for personal assistants such as Amazon’s Alexa, Google’s Assistant, and Apple’s Siri. Tons of hype and marketing around these technologies when initially launched gave people the impression that these tools were able to carry out a large number of useful tasks and also provide accurate information and answers on demand. It wasn’t long before people realized that their capabilities were actually quite limited, and that a large proportion of requests couldn’t be fulfilled or the results were simply wrong. Additionally, for many people the user experience of these devices, particularly the conversational experience, leaves something to be desired, although it is getting better over time.

For these personal assistants to truly rise to the hype and meet people’s expectations, they will need significant improvements in natural language understanding (NLU), which if you recall is a very difficult problem in AI. Expectations should be set accordingly; personal assistants currently provide very useful features and functionality that are relatively limited at the moment, but new capabilities are being actively developed and overall functionality and usefulness should improve significantly over time.

AI and machine learning are capable of many things, and the list is growing, although many people are still not sure what these technologies can do specifically or how we can use them in real-world applications. In addition, many people think of AI in very narrow and often industry- or business function–specific terms, which is usually a function of the person’s role in a company. If you speak with marketing people about AI, for example, they might be aware of, and focus on, segmentation, targeting, and personalization. There are, however, many other potential uses of AI and machine learning in marketing, and that’s not always obvious.

Additionally, people and businesses often do not know exactly what they want or what is possible in software, which is something I’ve encountered especially in consulting. A well-known quote attributed to Henry Ford is, “If I had asked people what they wanted, they would have said faster horses.”4 This can be a result of many things, including lack of technical background, lack of imagination, or not knowing what’s technically feasible.

This means that most nontechnical people tend to have a limited ability to influence the way technology impacts their experiences and lives. People must therefore rely on those of us who do understand how to design and build technological solutions, including those using AI. It is then up to us to make sure that we respect that and put people at the center of our solutions.

A potential outcome of this, and another consideration around customer expectations, is that customers might believe that off-the-shelf software can get the job done. In this context, you might hear things like, “My CRM can do that.” In most cases the reality is that the CRM cannot do that. Software built for the masses, such as SaaS CRM applications, is usually built to appeal to the average of the masses, although this might include some specific feature customizability in the form of plug-ins and modules.

In either case, most built-in analytics are very generic and not customizable enough. Data can also become potentially much more useful when combined with other data, which is usually not possible with off-the-shelf solutions without extensive export and ETL processes. Everything discussed in the section on building versus buying is pertinent here.

Quality Assurance

All software applications must be properly tested for quality. The industry term for this is quality assurance, or QA. This is no different for AI-based solutions (e.g., generating deep actionable insights, augmenting human intelligence, automation). In particular, QA is paramount to ensuring intended and high-performing outcomes as well as inspiring confidence in the results and deliverables among key stakeholders and end users.

Have you ever been given a report full of metrics, data visualizations, and data aggregations in which you or a colleague noticed that some of the values didn’t seem to be correct? Often the person who generated the report looks into it and finds that a set of feature values was counted twice, left out altogether, or something similar. Alternatively, the aggregation and data transformation logic might have been incorrect, and therefore the software that generated the report was buggy. In either case, nothing shakes people’s confidence in data applications more than being presented with incorrect and error-ridden results. In these cases, creating a successful AI solution in which people rely on data for insights can become an uphill battle.

Finding errors like these when doing complex data queries, ingestions, transformations, and aggregations can be very difficult, and often requires substantial QA and even hand calculations to replicate the analytics being automated to ensure that everything is calculated properly. That’s assuming that it can even be done.
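Some of these checks can be partially automated. The following sketch shows one such check under stated assumptions: the rows, field names (`order_id`, `amount`), and helper functions are hypothetical, and the "report total" is deliberately computed in a buggy way so that a duplicated row is counted twice. The idea is to recompute the figure independently and compare it with what the report shows.

```python
from collections import Counter


def find_duplicate_ids(rows, key="order_id"):
    """Return the IDs that appear more than once in the rows."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def deduplicated_total(rows, key="order_id", field="amount"):
    """Sum a field while counting each ID only once."""
    seen = set()
    total = 0.0
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            total += row[field]
    return total


# Made-up rows; order 2 was duplicated, for example by a faulty join.
rows = [
    {"order_id": 1, "amount": 100.0},
    {"order_id": 2, "amount": 250.0},
    {"order_id": 2, "amount": 250.0},
    {"order_id": 3, "amount": 50.0},
]

report_total = sum(row["amount"] for row in rows)  # buggy: double counts
duplicates = find_duplicate_ids(rows)
expected_total = deduplicated_total(rows)

if report_total != expected_total:
    print(f"Mismatch: report {report_total} vs expected {expected_total}; "
          f"duplicate IDs: {duplicates}")
```

A check like this does not replace careful hand calculation, but it can catch common problems such as double counting before a report reaches stakeholders.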

Previously, we explored black-box and complex algorithms. In those cases, it can be virtually impossible to do QA and reproduce what they are doing in any other way. The only measure of correctness might be the model’s performance itself, which is subject to change over time, and not even applicable in the case of unsupervised learning. In either case, ensuring accurate analytics, predictions, and other data-based deliverables is critical for maintaining stakeholder confidence and for promoting a data-informed and data-driven cultural shift; in other words, for getting people to become reliant on data for insights and decision making.

Measure Success

The purpose of becoming data informed or data driven is to transition from historical precedent, simple analytics, and gut feel, as discussed, but also to gain much deeper actionable insights and maximize outcomes and benefits from key business initiatives. Using data in decision making should be done on both sides of the decision-making process. You should use data to inform better decisions and suggest or automate actions, and you also should use it to measure the effectiveness and value of actions taken.

Given the importance of data in the decision-making process, there is not much point in pursuing and deploying AI solutions without having a way to measure impact and success. In other words, be sure to know whether you achieved your goals, and whether the intended benefits were realized and by how much.

After a particular AI-based solution is chosen, built, and deployed in the real world, it is very important to be able to measure success. There are a variety of business and product metrics (KPIs) that are often used. Let’s discuss business metrics first. Business metrics are direct measures of the state and health of a business, either as a snapshot, or tracked over time to make comparisons, determine trends, and enable forecasting. These metrics are based primarily on company financials and operations, and are measured outside of the context of specific products and services.

Top examples include total revenue (top line), gross margin, net profit margin (bottom line), earnings before interest, taxes, depreciation, and amortization (EBITDA), ROI, growth (both revenue and new customers), utilization rate (for billable time), operating productivity (e.g., revenue per sales employee), variable cost percentage, overhead costs, and compound annual growth rate (CAGR). Business metrics include funnel and post sales metrics as well; things like qualified lead rate, cost of customer acquisition (CAC), monthly and annual recurring revenue (MRR, ARR), annual contract value (ACV), customer lifetime value (LTV), customer retention and churn, and lead conversion rate.
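A few of these metrics reduce to simple formulas. The sketch below uses commonly cited definitions for CAGR, churn rate, and CAC; companies often define these slightly differently (for example, which costs count toward acquisition), so treat the function names and inputs as illustrative assumptions rather than standard definitions.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


def churn_rate(customers_at_start, customers_lost):
    """Share of customers lost during a period."""
    return customers_lost / customers_at_start


def customer_acquisition_cost(sales_and_marketing_spend, new_customers):
    """Average spend required to acquire one new customer."""
    return sales_and_marketing_spend / new_customers


# Made-up figures for illustration.
revenue_growth = cagr(1_000_000, 1_210_000, years=2)
monthly_churn = churn_rate(customers_at_start=200, customers_lost=10)
cac = customer_acquisition_cost(50_000, new_customers=100)

print(f"CAGR: {revenue_growth:.1%}")
print(f"Monthly churn: {monthly_churn:.1%}")
print(f"CAC: ${cac:,.2f}")
```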

Product metrics are those intended to measure the efficacy and value of specific products and services, often for both the business and user. These metrics are ideal for better understanding customer engagement (type and frequency of product interaction), customer satisfaction and likelihood to recommend, customer loyalty, and sales driven by product interactions (conversions).

Dave McClure coined the phrase “Startup Metrics for Pirates: AARRR!!!” This phrase represents a model that he created for a metrics framework to drive product and marketing efforts. The “AARRR” part stands for acquisition, activation, retention, referral, and revenue. This is a type of funnel that represents the journey of a new, exploring, nonpaying customer through conversion to becoming a paying customer.

Acquisition is the first site visit or app download. Activation is a result of an initial positive experience with the product, and willingness to return. Retention is repeated visits and use. Referral is the act of telling others about a product because of liking it so much, and revenue means that the user performs actions with the product that result in money spent and business revenue received.
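One way to work with the AARRR funnel is to track how many users reach each stage and compute the conversion rate from one stage to the next. The counts below are made up, and `stage_conversion` is a hypothetical helper; the sketch also assumes, for simplicity, that the stages are strictly sequential.

```python
def stage_conversion(funnel):
    """Return the conversion rate between each pair of consecutive stages."""
    rates = {}
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        rates[f"{prev_name} -> {name}"] = count / prev_count if prev_count else 0.0
    return rates


# Made-up user counts for each AARRR stage.
funnel = [
    ("acquisition", 10_000),
    ("activation", 4_000),
    ("retention", 2_000),
    ("referral", 500),
    ("revenue", 250),
]

rates = stage_conversion(funnel)
overall = funnel[-1][1] / funnel[0][1]

for step, rate in rates.items():
    print(f"{step}: {rate:.0%}")
print(f"overall acquisition -> revenue: {overall:.1%}")
```

The stage with the lowest conversion rate is usually a good place to focus product and marketing efforts first.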

Although not all stemming from the AARRR model, specific product metrics include net promoter score (NPS), app downloads, number of accounts registered, average revenue per user, active users, app traffic and interaction (web and mobile), conversions (both revenue and nonrevenue generating), usability test metrics, A/B and multivariate testing metrics, and many quality- and support-related metrics.
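As an example of how one of these product metrics is calculated, net promoter score is conventionally computed from answers to a 0-to-10 "how likely are you to recommend" question: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 through 6). The survey responses below are made up, and the function name is hypothetical.

```python
def net_promoter_score(scores):
    """Return NPS on a -100 to 100 scale from 0-10 survey scores."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up survey responses.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
nps = net_promoter_score(responses)
print("NPS:", nps)
```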

These metrics are not only important for measuring success, but you can use some of them as features for training machine learning models. We can use certain metrics, such as those associated with user engagement, to predict future sales, for example. These metrics can also be used to create new AI visions, drive decisions, and guide AI strategy modifications in order to improve success metrics and maximize desired outcomes.

One thing worth mentioning is that you should avoid emphasizing vanity metrics. These are metrics that look great and make people feel good, but have very little quantifiable impact on business outcomes. A great example is the number of followers you or your company have on a social media platform. The more the better, of course, but that doesn’t mean that your company’s top line grows in proportion to your followers.

I want to finish this section by briefly mentioning the concept of customer delight. This is arguably the most difficult thing to measure, and yet has the most potential to make or break a product or service. People pay for products and services they like, and avoid those they don’t. Not only that, liking something isn’t good enough, especially for premium and relatively more expensive products. The additional cost must be justified, particularly when there is a lot of competition, by going from like to delight. People will give up bells and whistles (features) for a product that is delightful and pleasurable to use.

Stay Current

It is incredibly important to stay current when it comes to AI, particularly for those involved in creating a company’s AI vision and strategy, those making related decisions, and those involved in execution (i.e., practitioners). This is true in terms of specific fields such as deep learning, natural language, reinforcement learning, and transfer learning, all of which are being actively developed at a very fast rate, with advancements being made almost daily. This is also the case for AI-related hardware, tools, and compute resources.

How best to stay current? Because I can’t speak to your individual needs and optimal way of learning, I can simply let you know my preferences and primary sources of information. I have Twitter lists and Reddit feeds that I follow. I am also subscribed to many relevant email newsletters, delivering great and current content directly to my email inbox. I also regularly read books and take online courses when I have the time. For staying updated on very technical and academic research and advancements, I use Cornell University Library’s arXiv.org. I also try to keep up with general industry and market research. Finally, I do good old-fashioned research, as needed.

My advice is to find what works best for you and stick with that. It’s very difficult to keep up with everything, so pick a manageable set of topics and filter everything else out in order to stay up to date on your interests.

AI in Production

There is a very big difference between exploratory machine learning and AI development on the one hand, and creating production-ready AI solutions that must actually be deployed, monitored, maintained, and optimized on the other. This is represented by the build, deliver, and optimize phases of the AIPB Methodology Component.

There are many key differences and challenges that you should consider, which deserve a chapter of their own. As such, and given the more technical, subject matter-specific nature of this topic, a thorough discussion of considerations and differences associated with AI in production and development can be found in Appendix C.

Summary

This chapter covered key considerations that represent the third and final subphase of the assess phase of the AIPB Methodology Component and final category of AIPB assessment. The three AIPB assessments should be completed to create your AIPB assessment strategy, which should be incorporated into your overall AI strategy.

At this point, we’ve covered a lot when it comes to planning for and executing a vision-aligned AI strategy. Chapter 14 presents an example of developing an AI strategy and the resulting outputs: a solution strategy and prioritized roadmap.

1 https://economicgraph.linkedin.com/resources/linkedin-workforce-report-august-2018

2 https://www.nytimes.com/2018/04/19/technology/artificial-intelligence-salaries-openai.html and https://www.nytimes.com/2017/10/22/technology/artificial-intelligence-experts-salaries.html

3 https://medium.com/google-design/human-centered-machine-learning-a770d10562cd

4 It turns out that there’s no actual proof that he ever said this, but it makes a good point.