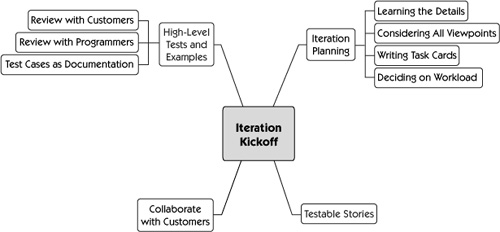

Most teams kick off their new iteration with a planning session. This might be preceded by a retrospective, or “lessons learned” session, to look back to see what worked well and what didn’t in the previous iteration. Although the retrospective’s action items or “start, stop, continue” suggestions will affect the iteration that’s about to start, we’ll talk about the retrospective as an end-of-iteration activity in Chapter 19, “Wrap Up the Iteration.”

While planning the work for the iteration, the development team discusses one story at a time, writing and estimating all of the tasks needed to implement that story. If you’ve done some work ahead of time to prepare for the iteration, this planning session will likely go fairly quickly.

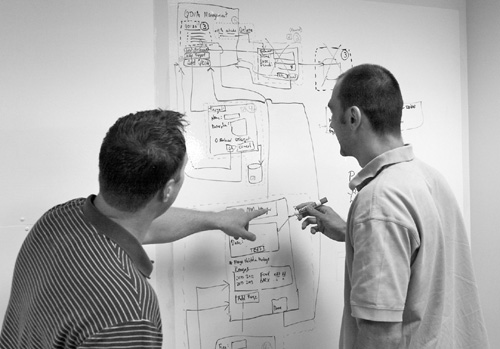

Teams new to agile development often need a lot of time for their iteration planning sessions. Iteration planning often took a whole day when Lisa’s team first started out. Now the sessions take two or three hours, including time for the retrospective. Lisa’s team uses a projector to display user acceptance test cases and conditions of satisfaction from their wiki so that everyone on the team can see them. They also project their online story board tool, where they write the task cards. Another traditional component of their planning meetings is a plate of treats that they take turns providing. Figure 17-1 shows an iteration planning meeting in progress.

Ideally, the product owner and/or other customer team members participate in the iteration planning, answering questions and providing examples describing requirements of each story. If nobody from the business side can attend, team members who work closely with the customers, such as analysts and testers, can serve as proxies. They explain details and make decisions on behalf of the customers, or take note of questions to get answered quickly. If your team went over stories with the customers in advance of the iteration, you may think you don’t need them on hand during the iteration planning session. However, we suggest that they be available just in case you do have extra questions.

As we’ve emphasized throughout the book, use examples to help the team understand each story, and turn these examples into tests that drive coding. Address stories in priority order. If you haven’t previously gone over stories with the customers, the product owner or other person representing the customer team first reads each story to be planned. They explain the purpose of the story, the value it will deliver, and give examples of how it will be used. This might involve passing around examples or writing on a whiteboard. UI and report stories may already have wire frames or mock-ups that the team can study.

A practice that helps some teams is to write user acceptance tests for each story together, during the iteration planning. Along with the product owner and possibly other stakeholders, they write high-level tests that, when passing, will show that the story is done. This could also be done shortly in advance of iteration planning as part of the iteration “prep work.”

Stories should be sized so they’ll take no more than a few days to complete. When we get small stories to test on a regular basis, we do not have them all finished at once and stacked up at the end of the iteration waiting to be tested. If a story has made it past release planning and pre-iteration discussions and is still too large, this is the final chance to break it up into smaller pieces. Even a small story can be complex. The team may go through an exercise to identify the thin slices or critical path through the functionality. Use examples to guide you, and find the most basic user scenarios.

Agile testers, along with other team members, are alert to “scope creep.” Don’t be afraid to raise a red flag when a story seems to be growing in all directions. Lisa’s team makes a conscious effort to point out “bling,” or “nice to have” components, which aren’t central to the story’s functionality. Those can be put off until last, or postponed, in case the story takes longer than planned to finish.

Considering All Viewpoints

As a tester, you’ll try to put each story into the context of the larger system and assess the potential of unanticipated impacts on other areas. As you did in the release planning meeting, put yourself in the different mind-sets of user, business stakeholder, programmer, technical writer, and everyone involved in creating and using the functionality. Now you’re working at a detailed level.

In the release planning chapter, we used this example story:

As a customer, I want to know how much my order will cost to ship based on the shipping speed I choose, so I can change if I want to.

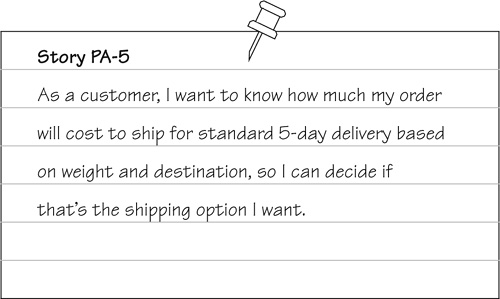

We decided to take a “thin slice” and change this story to assume there is only one shipping speed. The other shipping speeds will be later stories. For this story, we need to calculate shipping cost based on item weight and destination, and we decided to use BigExpressShipping’s API for the calculation. Our story is now as shown in Figure 17-2.

The team starts discussing the story.

Tester: “Does this story apply for all items available on the site? Are any items too heavy or otherwise disqualified for 5-day delivery?”

Product Owner: “5-day ground is available for all our items. It’s the overnight and 2-day that are restricted to less than 25 lbs.”

Tester: “What’s the goal here, from the business perspective? Making it easy to figure the cost to speed up the checkout? Are you hoping to encourage them to check the other shipping methods—are those more profitable?”

Product Owner: “Ease of use is our main goal, we want the checkout process to be quick, and we want the user to easily determine the total cost of the order so they won’t be afraid to complete the purchase.”

Programmer: “We could have the 5-day shipping cost display as a default as soon as the user enters the shipping address. When we do the stories for the other shipping options, we can put buttons to pop up those costs quickly.”

Product Owner: “That’s what we want, get the costs up front. We’re going to market our site as the most customer friendly.”

Tester: “Is there any way the user can screw up? What will they do on this page?”

Product Owner: “When we add the other shipping options, they can opt to change their shipping option. But for now, it’s really straightforward. We already have validation to make sure their postal code matches the city they enter for the shipping address.”

Tester: “What if they realize they messed up their shipping address? Maybe they accidentally gave the billing address. How can they get back to change the shipping address?”

Programmer: “We’ll put buttons to edit billing and shipping addresses, so it will be very easy for the user to correct errors. We’ll show both addresses on this page where the shipping cost displays. We can extend this later when we add the multiple shipping addresses option.”

Tester: “That would make the UI easy to use. I know when I shop online, it bugs me to not be able to see the shipping cost until the order confirmation. If the shipping is ridiculously expensive and I don’t want to continue, I’ve already wasted time. We want to make sure users can’t get stuck in the checkout process, get frustrated, and just give up. So, the next page they’ll see is the order confirmation page. Is there any chance the shipping cost could be different when the user gets to that page?”

Programmer: “No, the API that gives us the estimated cost should always match the actual cost, as long as the same items are still in the shopping cart.”

Product Owner: “If they change quantities or delete any items, we need to make sure the shipping cost is immediately changed to reflect that.”

As you can see by the conversation, a lot of clarification came to light. Everyone on the team now has a common understanding of the story. It’s important to talk about all aspects of the story. Writing user acceptance tests as a group is a good way to make sure the development team understands the customer requirements. Let’s continue monitoring this conversation.

Tester: “Let’s just write up some quick tests to make sure we get it right.”

Customer: “OK, how’s this example?”

I can select two items with a 5-day shipping option and see my costs immediately.

Tester: “Great start, but we won’t know where to ship it to at that point. How about a more generic test like this?”

Verify the 5-day shipping cost displays as the default as soon as the user enters a shipping address.

Customer: “That works for me.”

Asking questions based on different viewpoints will help to clarify the story and allow the team to do a better job.
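The generic test the tester proposed could later be turned into an executable check. What follows is only a minimal sketch in Python, not the team's actual FitNesse or WebTest implementation. `CheckoutPage`, `fake_five_day_cost`, and the rate numbers are hypothetical names and values invented for illustration; the real cost would come from the shipper's API.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Optional


def fake_five_day_cost(weight_lbs: Decimal, postal_code: str) -> Decimal:
    """Hypothetical stand-in for the shipper's calculation (made-up rates)."""
    return Decimal("4.00") + Decimal("0.50") * weight_lbs


@dataclass
class CheckoutPage:
    """Hypothetical model of the page where the shipping cost displays."""
    cart_weight_lbs: Decimal
    cost_lookup: Callable[[Decimal, str], Decimal]
    shipping_postal_code: Optional[str] = None

    def enter_shipping_address(self, postal_code: str) -> None:
        self.shipping_postal_code = postal_code

    @property
    def displayed_shipping_cost(self) -> Optional[Decimal]:
        # Nothing can be shown until we know where the order is going.
        if self.shipping_postal_code is None:
            return None
        return self.cost_lookup(self.cart_weight_lbs, self.shipping_postal_code)


def test_five_day_cost_displays_after_address_entered() -> None:
    page = CheckoutPage(cart_weight_lbs=Decimal("2"), cost_lookup=fake_five_day_cost)
    assert page.displayed_shipping_cost is None
    page.enter_shipping_address("80202")
    assert page.displayed_shipping_cost == Decimal("5.00")


if __name__ == "__main__":
    test_five_day_cost_displays_after_address_entered()
```

Notice that the sketch also captures the tester's objection to the customer's first example: no cost can display before a shipping address exists.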

When your team has a good understanding of a story, you can start writing and estimating task cards. Because agile development drives coding with tests, we write both testing and development task cards at the same time.

If you have done any pre-planning, you may have some task cards already written out. If not, write them during the iteration planning meeting. It doesn’t matter who writes the task cards, but everyone on the team should review them and get a chance to give their input. We recognize that tasks may be added as we begin coding, but recognizing most of the tasks and estimating them during the meeting gives the team a good sense of what is involved.

Janet uses an approach similar to this, but the programmer’s coding card stays in the “To Test” column until the testing task has been completed. Both cards move at the same time to the “Done” column.

Three test cards for Story PA-5 (Figure 17-2), displaying the shipping cost for 5-day delivery based on weight and destination, that Lisa’s team might write are:

Write FitNesse tests for calculating 5-day ship cost based on weight and destination.

Write WebTest tests for displaying the 5-day ship cost.

Manually test displaying the 5-day delivery ship cost.

Some teams prefer to write testing tasks directly on the development task cards. It’s a simple solution, because the task is obviously not “done” until the testing is finished. You’re trying to avoid a “mini-waterfall” approach where testing is done last, and the programmer feels she is done because she “sent the story to QA.” See what approach works best for your team.

If the story heavily involves outside parties or shared resources, write task cards to make sure those tasks aren’t forgotten, and make the estimates generous enough to allow for dependencies and events beyond the team’s control. Our hypothetical team working on the shipping cost story has to work with the shipper’s cost calculation API.

Tester: “Does anyone know who we work with at BigExpressShipping to get specs on their API? What do we pass to them, just the weight and postal code? Do we already have access for testing this?”

Scrum Master: “Joe at BigExpressShipping is our contact, and he’s already sent this document specifying input and output format. They still need to authorize access from our test system, but that should be done in a couple of days.”

Tester: “Oh good, we need that information to write test cases. We’ll write a test card just to verify that we can access their API and get a shipping cost back. But how do we know the cost is really correct?”

Scrum Master: “Joe has provided us with some test cases for weight and postal code and expected cost, so we can send those inputs and check for the correct output. We also have this spreadsheet showing rates for some different postal codes.”

Tester: “We should allow lots of time for just making sure we’re accessing their API correctly. I’m going to put a high estimate on this card to verify using the API for testing. Maybe the developer card for the interface to the API should have a pretty conservative estimate, too.”
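The shipper's test cases (weight, postal code, expected cost) lend themselves to a table-driven check. The sketch below is one possible shape for it, written in Python under stated assumptions: the rows in `SAMPLE_CASES` are placeholders rather than real rates, and `stub_get_cost` is a hypothetical stand-in for the call to the shipper's API, which the conversation above does not specify.

```python
from decimal import Decimal
from typing import Callable, List, NamedTuple


class RateCase(NamedTuple):
    weight_lbs: Decimal
    postal_code: str
    expected_cost: Decimal


CostLookup = Callable[[Decimal, str], Decimal]


def find_mismatches(cases: List[RateCase], get_cost: CostLookup) -> List[str]:
    """Return one message per case whose actual cost differs from the expected cost."""
    mismatches = []
    for case in cases:
        actual = get_cost(case.weight_lbs, case.postal_code)
        if actual != case.expected_cost:
            mismatches.append(
                f"{case.weight_lbs} lbs to {case.postal_code}: "
                f"expected {case.expected_cost}, got {actual}"
            )
    return mismatches


# Placeholder rows; the real ones would come from the shipper's document.
SAMPLE_CASES = [
    RateCase(Decimal("1"), "80202", Decimal("4.50")),
    RateCase(Decimal("10"), "80202", Decimal("9.00")),
]


def stub_get_cost(weight_lbs: Decimal, postal_code: str) -> Decimal:
    """Hypothetical stand-in, usable until test access to the API is authorized."""
    return Decimal("4.00") + Decimal("0.50") * weight_lbs


if __name__ == "__main__":
    for message in find_mismatches(SAMPLE_CASES, stub_get_cost):
        print(message)
```

Passing the lookup in as a parameter means the same cases can run against the stub now and against the real interface once access is granted.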

When writing programmer task cards, make sure that coding task estimates include time for writing unit tests and for all necessary testing by programmers. A card for “end-to-end” testing helps make sure that programmers working on different, independent tasks verify that all of the pieces work together. Testers should help make sure all necessary cards are written and that they have reasonable estimates. You don’t want to second-guess estimates, but if the testing estimates are twice as high as the coding estimates, it might be worth talking about.

Some teams keep testing tasks to a day’s work or less and don’t bother to write estimated hours on the card. If a task card is still around after a day’s work, the team talks about why that happened and writes new cards to go forward. This might cut down on overhead and record-keeping, but if you are entering tasks into your electronic system, it may not. Do what makes sense for your team.

Estimating time for bug fixing is always tricky as well. If existing defects are pulled in as stories, it is pretty simple. But what about the bugs that are found as part of the iteration?

However your team chooses to estimate time spent for fixing defects during the iteration, whether it is included in the story estimate or tracked separately, make sure it is done consistently.

Another item to consider when estimating testing tasks is test data. The beginning of an iteration is almost too late to think about the data you need to test with. As we mentioned in Chapter 15, “Tester Activities in Release or Theme Planning,” think about test data during release planning, and ask the customers to help identify and obtain it. Certainly think about it as you prep for the next iteration. When the iteration starts, whatever test data is missing must be created or obtained, so don’t forget to allow for this in estimates.

We, as the technical team, control our own workload. As we write tasks for each story and post them on our (real or virtual) story board, we add up the estimated hours or visually check the number of cards. How much work can we take on? In XP, we can’t exceed the number of story points we completed in the last iteration. In Scrum, we commit to a set of stories based on the actual time we think we need to complete them.
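The two commitment checks described here come down to simple arithmetic. The following Python sketch is illustrative only: the `Story` fields and function names are hypothetical, and your team's tool or card wall may track estimates quite differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Story:
    name: str
    points: int
    coding_hours: float
    testing_hours: float

    @property
    def total_hours(self) -> float:
        # A story's cost includes its testing tasks, not just its coding tasks.
        return self.coding_hours + self.testing_hours


def within_last_velocity(stories: List[Story], last_velocity: int) -> bool:
    """XP-style check: planned points must not exceed points completed last iteration."""
    return sum(story.points for story in stories) <= last_velocity


def fits_available_hours(stories: List[Story], available_hours: float) -> bool:
    """Scrum-style check: estimated task hours must fit the time the team has."""
    return sum(story.total_hours for story in stories) <= available_hours
```

For example, two stories estimated at 3 and 5 points, with 18 and 26 total task hours, pass the first check against a previous velocity of 8 but fail the second if only 40 hours are available.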

Lisa’s current team has several years of experience in their agile process and finds they sometimes waste time writing task cards for stories they may not have time to do during the iteration. They start with enough stories to keep everyone busy. As people start to free up, they pull in more stories and plan the related tasks. They might have some stories ready “on deck” to bring in as soon as they finish the initial ones. This sounds easy, but it is difficult to do until you’ve learned enough to be more confident about story sizes and team velocity, and know what your team can and cannot do in a given amount of time and in specific circumstances.

Your job as tester is to make sure enough time is allocated to testing, and to remind the team that testing and quality are the responsibility of the whole team. When the team decides how many stories they can deliver in the iteration, the question isn’t “How much coding can we finish?” but “How much coding and testing can we complete?” There will be times when a story is easy to code but the testing will be very time consuming. As a tester, it is important that you only accept as many stories into the iteration as can be tested.

If you have to commit, commit conservatively. It’s always better to bring in another story than to have to drop one. If you have high-risk stories that are hard to estimate, or some tasks are unknown or need more research, write task cards for an extra story or two and have them ready on the sidelines to bring in mid-iteration.

As a team, we’re always going to do our best. We need to remember that no story is done until it’s tested, so plan accordingly.

When you are looking at stories, and the programmers start to think about implementation, always think about how you can test them. An example goes a long way toward “testing the testability.” Ask yourself what impact the implementation will have on your testing. Part III, “The Agile Testing Quadrants,” gives a lot of examples of how to design the application to enable effective testing. This is your last opportunity to think about testability of a story before coding begins.

During iteration planning, think about what kind of variations you will need to test. That may drive other questions.

When testability is an issue, make it the team’s problem to solve. Teams that start their planning by writing test task cards probably have an advantage here, because as they think about their testing tasks, they’ll ask how the story can be tested. Can any functionality be tested behind the GUI? Is it possible to do the business-facing tests at the unit level? Every agile team should be thinking test-first. As your team writes developer task cards for a story, think about how to test the story and how to automate testing for it. If the programmers aren’t yet in the habit of practicing TDD or automating unit tests, try writing an “xUnit” task card for each story. Write programming task cards for any test automation fixtures that will be needed. Think about application changes that could help with testing, such as runtime properties and APIs.
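To make the idea of a runtime property concrete, here is a small Python sketch of one way an application might let tests swap the live shipping lookup for a stub and exercise the order total behind the GUI. Everything in it is an assumption made for illustration: the `SHIPPING_RATE_SOURCE` property name, the function names, and the fixed stub rate are all hypothetical.

```python
import os
from decimal import Decimal
from typing import Callable, Mapping, Optional

RateSource = Callable[[Decimal, str], Decimal]


def stub_rate(weight_lbs: Decimal, postal_code: str) -> Decimal:
    """Fixed, made-up rate so tests get a predictable answer."""
    return Decimal("9.99")


def live_rate(weight_lbs: Decimal, postal_code: str) -> Decimal:
    """Placeholder for the real call to the shipper's service."""
    raise NotImplementedError("would call the shipper's service")


def choose_rate_source(env: Optional[Mapping[str, str]] = None) -> RateSource:
    """Pick the rate source from a runtime property (hypothetical name)."""
    env = os.environ if env is None else env
    if env.get("SHIPPING_RATE_SOURCE", "live") == "stub":
        return stub_rate
    return live_rate


def order_total(item_total: Decimal, weight_lbs: Decimal,
                postal_code: str, rate_source: RateSource) -> Decimal:
    """Business logic with no GUI dependency, so it can be tested directly."""
    return item_total + rate_source(weight_lbs, postal_code)
```

A test can then call `order_total` with `choose_rate_source({"SHIPPING_RATE_SOURCE": "stub"})` and never touch a browser or the shipper's system.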

If you have similar issues because other teams are developing parts of the system, write a task card to discuss the problem with the other team and come up with a coordinated solution. If working with the other team isn’t an option, budget time to brainstorm another solution. At the very least, be mindful of the limitations, and adjust testing estimates accordingly and manage the associated risk.

When you’re embarking on something new to the team, such as a new templating framework or reporting library, remember to include it as a risk in your test plan. Hopefully, your team considered the testability before choosing a new framework or tool, and selected one that enhanced your ability to test. Be generous with your testing task estimates with everything new, including new domains, because there are lots of unknowns. Sometimes new domain knowledge or new technology means a steep learning curve.

High-Level Tests and Examples

We want “big picture” tests to help the programmers get started in the right direction on a story. As usual, we recommend starting with examples and turning them into tests. You’ll have to experiment to see how much detail is appropriate at the acceptance test level before coding starts. Lisa’s team has found that high-level tests drawn from examples are what they need to kick off a story.

High-level tests should convey the main purpose behind the story. They may include examples of both desired and undesired behavior. For our earlier Story PA-5 (Figure 17-2) that asks to show the shipping cost for 5-day delivery based on the order’s weight and destination, our high-level tests might include:

Verify that the 5-day shipping cost displays as the default as soon as the user enters a shipping address.

Verify that the estimated shipping cost matches the shipping cost on the final invoice.

Verify that the user can click a button to change the shipping address, and when this is done, the updated shipping cost displays.

Verify that if the user deletes items from the cart or adds items to the cart, the updated shipping cost is displayed.

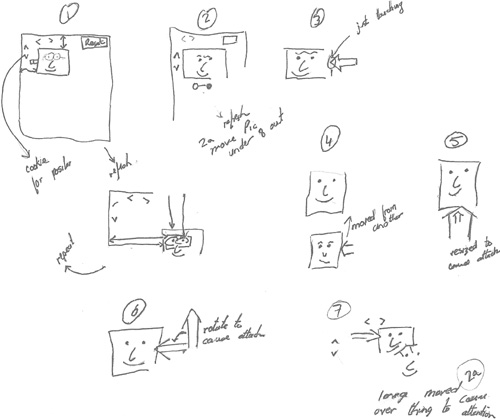

Don’t confine yourself to words on a wiki page when you write high-level tests. For example, a test matrix such as the one shown in Figure 15-7 might work better. Some people express tests graphically, using workflow drawings and pictures. Brian Marick [2007] has a technique to draw graphical tests that can be turned into Ruby test scripts. Model-driven development provides another way to express high-level scope for a story. Use cases are another possible avenue for expressing desired behavior at the “big picture” level.

See the bibliography for links to more information on graphical tests and model-driven development.

Mock-ups can convey requirements for a UI or report quickly and clearly. If an existing report needs modifying, take a screenshot of the report and use highlighters, pen, pencil, or whatever tools are handy. If you want to capture it electronically, try the Windows Paint program or other graphical tool to draw the changes and post it on the wiki page that describes the report’s requirements.

Distributed teams need high-level tests available electronically, while co-located teams might work well from drawings on a whiteboard, or even from having the customer sit with them and tell them the requirements as they code.

What’s important as you begin the iteration is that you quickly learn the basic requirements for each story and express them in context in a way that works for the whole team. Most agile teams we’ve talked to say their biggest problem is to understand each story well enough to deliver exactly what the customer wanted. They might produce code that’s technically bug-free but doesn’t quite match the customer’s desired functionality. Or they may end up doing a lot of rework on one story during the iteration as the customer clarifies requirements, and run out of time to complete another story as a result.

Put time and effort into experimenting with different ways to capture and express the high-level tests in a way that fits your domain and environment. Janet likes to say that a requirement is a combination of the story + conversation + a user scenario or supporting picture if needed + a coaching test or example.

Earlier in this chapter we talked about the importance of constant customer collaboration. Reviewing high-level tests with customers is a good opportunity for enforced collaboration and enhanced communication, especially for a new agile team. After your team is in the habit of continually talking about stories, requirements, and test cases, you might not need to sit down and go over every test case.

If your team is contracting to develop software, requirements and test cases might be formal deliverables that you have to present. Even if they aren’t, it’s a good idea to provide the test cases in a format that the customers can easily read on their own and understand.

Reviewing with Programmers

You can have all of the diagrams and wiki pages in the world, but if nobody looks at them, they won’t help. Direct communication is always best. Sit down with the programmers and go over the high-level tests and requirements. Go over whiteboard diagrams or paper prototypes together. Figure 17-4 shows a tester and a programmer discussing a diagram of thin slices or threads through a user workflow. If you’re working with a team member in another location, find a way to schedule a phone conversation. If team members have trouble understanding the high-level tests and requirements, you’ll know to try a different approach next time.

Programmers with good domain knowledge may understand a story right away and be able to start coding even before high-level tests are written. Even so, it’s always a good idea to review the stories from the customer and tester perspective with the programmers. Their understanding of the story might be different than yours, and it’s important to look at mismatches. Remember the “Power of Three” rule and grab a customer if there are two opinions you can’t reconcile. The test cases also help put the story in context with the rest of the application. Programmers can use the tests to help them to code the story correctly. This is the main reason you want to get this done as close to the start of the iteration as you can—before programmers start to code.

Don’t forget to ask the programmers what they think you might have missed. What are the high-risk areas of the code? Where do they think the testing should be focused? Getting more technical perspective will help with designing detailed test cases. If you’ve created a test matrix, you may want to review the impacted areas again as well.

One beneficial side effect of reviewing the tests with the programmers is the cross-learning that happens. You as a tester are exposed to what they are thinking, and they learn some techniques for testing that they would not have otherwise encountered. As programmers, they may get a better understanding of what high-level tests they hadn’t considered.

Test Cases as Documentation

High-level test cases, along with the executable tests you’ll write during the iteration, will form the core of your application’s documentation. Requirements will change during and after this iteration, so make sure your executable test cases are easy to maintain. People unfamiliar with agile development often have the misconception that there’s no documentation. In fact, agile projects produce usable documentation that contains executable tests and thus is always up to date.

The great advantage of having executable tests as part of your requirements document is that it’s hard to argue with their results.
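As a small illustration of documentation that executes, the sketch below uses Python's standard `doctest` module, where the example in the docstring is both the description and the test. This is a different tool from the FitNesse tests Lisa's team uses, shown only because it fits in a few lines. The function name and the rate numbers are made up for the example.

```python
import doctest
from decimal import Decimal


def five_day_cost(weight_lbs: Decimal, rate_per_lb: Decimal, base_fee: Decimal) -> Decimal:
    """Return an illustrative 5-day shipping cost.

    The rate and fee are made-up numbers, not real shipper prices.

    >>> five_day_cost(Decimal("2"), Decimal("0.50"), Decimal("4.00"))
    Decimal('5.00')
    """
    return base_fee + weight_lbs * rate_per_lb


if __name__ == "__main__":
    # Runs every docstring example in this module and reports any that fail.
    doctest.testmod()
```

If someone changes the calculation without updating the example, the run fails, which is what keeps this kind of documentation from drifting out of date.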

Organizing the test cases and tests isn’t always straightforward. Many teams document tests and requirements on a wiki. The downside to a wiki’s flexibility is that you can end up with a jumble of hierarchies. You might have trouble finding the particular requirement or example you need.

Lisa’s team periodically revisits its wiki documentation and FitNesse tests, and refactors the way they’re organized. If you’re having trouble organizing your requirements and test cases, budget some time to research new tools that might help. Hiring a skilled technical writer is a good way to get your valuable test cases and examples into a usable repository of easy-to-find information.
