7

The Cycle of Thought

Despite being a pudgy bundle of fibres powered by a modest 20 watts of energy, the human brain is by far the most powerful computer in the known universe. Our brains are, it is true, forgetful, error-prone, and can struggle to sustain attention on anything for more than a few minutes. Indeed, we struggle with elementary arithmetic, let alone logic and mathematics, and we read, talk and reason painstakingly slowly. But our brains have stunning abilities too: they can interpret a bafflingly complex sensory world, perform a huge variety of skilled actions, and communicate and navigate a vastly complex physical and social world. And our brains do all this at a level that far exceeds the power of anything yet created in artificial intelligence. Our brain is a remarkable computer, but it is a very unconventional computer.

The power of the familiar digital computers – including our PCs, laptops and tablets – comes, largely, from carrying out simple calculation steps at phenomenal speeds: many billions of operations per second. By comparison, the brain is dreadfully slow. Neurons – the basic computational unit of the brain – calculate by sending electrical pulses to each other across hugely complex electro-chemical networks. The very highest rate of neural ‘firing’ is about 1,000 pulses per second; neurons, even when directly recruited to the task in hand, fire mostly at far more leisurely rates, from five to fifty times per second.1 So our neurons are no match for the astonishing processing speed of silicon chips. But while neurons may be slow, they are numerous. A PC has one or at most a few processing chips, each running at tremendous speed, while the human brain has roughly one hundred billion sluggish neurons, linked by roughly one hundred trillion connections.

So the spectacular cleverness of the human mind must come not from the frenzied sequences of simple calculations that underpin silicon computation. Instead, brain-style computation must result from cooperation across the highly interconnected, but slow, neural processing units, leading to coordinated patterns of neural activity across whole networks or perhaps entire regions of the brain.2

But it is hard to see how a vast population of interconnected neurons can coordinate on more than one thing at a time, without suffering terrible confusion and interference. Each time a neuron fires, it sends an electrical pulse to all the other neurons it is linked to (typically up to 1,000). This is a good mechanism for helping neurons cooperate, as long as they are all working on different aspects of the same problem (e.g. building up different parts of a possible meaningful organization of a face, word, pattern or object). Then, by linking together, cross-checking, correcting and validating different parts of an organization (the parts of a face, the letters making up a word), it is possible gradually to build up a unified whole. But if interconnected neurons are working on entirely different problems, then the signals they pass between them will be hopelessly at cross-purposes – and neither task will be completed successfully: each neuron has no idea which of the signals it receives are relevant to the problem it is working on, and which are just irrelevant junk.

So we have a general principle. If the brain solves problems through the cooperative computation of vast networks of individually sluggish neurons, then any specific network of neurons can work on just one solution to one problem at a time. To a rough approximation, the brain seems to be pretty close to one giant, highly interconnected network (although the connections between different regions of the brain are not equally dense). So we should expect that a network of neurons in the brain will be able to cooperate on just one problem at a time.

This gives us the beginning of an explanation of the slow, step-by-step nature of perception and thought that we came across in Part One: our ability to process only one word, face, or even colour, at a time. Attending to a set of information to be explained is setting the problem that the brain has to solve. The problems can be very diverse: to find the ‘meaning’ in a pattern of black and white shapes; to figure out what is being said in a stream of speech; to visualize a cube balanced on one point; to recall the last time you went to the cinema, and so on. We can think of this as specifying the values of some subset of the population of neurons. Each step in a sequence of thoughts then involves cooperative computation, drawing on everything else we know, to find the most meaningful organization: the answer that best fits that ‘question’. A single step may take many hundredths of a second, but the computational power achieved by drawing on the knowledge and processing power across a network of billions of neurons can be enormous.

The computational abilities of the brain are, then, both severely limited and remarkably powerful. The problem of interference implies that the cycle of thought is limited to proceeding one step at a time, and working on just one problem at a time. Yet by cooperatively drawing on a vast population of interconnected neurons – each contributing only a little to the overall solution to the problem in hand – each step has the potential to answer questions of enormous difficulty: for example, decoding a facial expression, predicting what will happen next in a complex physical and social situation, integrating a fast-flowing input of speech or text, planning and initiating the spectacularly complex sequence of actions to return a tennis serve thundering down at more than 100 mph. Each of these processes would, to the extent that it can ever be successfully simulated on a conventional computer at all, correspond to millions or even billions of tiny steps, implemented with almost unimaginable speed, one after the other. But the brain takes a different tack: its slow neural units split up the problem into myriad tiny fragments and share their tentative solutions in parallel across the entire, densely interconnected network.

The crucial point is that the very fact that the brain uses cooperative computation across vast networks of neurons implies that these networks proceed by one giant, coordinated step at a time rather than, as in a conventional computer, by a myriad of almost infinitesimally tiny information-processing steps. I shall call this sequence of giant, cooperative steps, running at an irregular pulse of several ‘beats’ per second, the cycle of thought.3

The comparison between conventional computers and the brain can, therefore, be highly misleading. We can write a document, or watch a movie, on our PC, while in the background it is searching for large prime numbers, downloading music, crunching away on astronomical calculations, or carrying out any number of other tasks. So the notion that, as our conscious mind is focused on making breakfast or reading a novel, all manner of profound and abstruse thoughts might be running along below conscious awareness, seems plausible. But brains are very different from conventional computers – rather than being able to time-share a super-fast central processor, the brain works by cooperative computation across most or all of its neurons – and cooperative computation can only lock onto, and solve, one problem at a time.

If each network of cooperating neurons in the brain can only focus on a single problem, then we should be able to consider only one chess move at a time; read one word at a time; recognize one face at a time; or listen to one conversation at a time. Thus the cooperative style of computation used by the brain imposes severe limitations upon us – limitations that are indeed evident in the light of decades of careful psychological experiments.

If each brain network can take on a single task at a time, a crucial question becomes how far the brain may naturally be divided into many independent networks, each with its own task, and whether such divisions are fixed or whether the brain can actively reconfigure itself into distinct networks to address the challenges in hand. But whatever the nature of the division of the brain into cooperating networks of nerve cells (and we shall briefly touch on the question of how flexibly this can be done later), the key point is that each cooperative network of neurons can address precisely one problem at a time.

Moreover, analysis of brain pathways, through tracing the ‘wiring diagram’ of the brain as well as monitoring the flow of brain activity when we engage in specific tasks, suggests that the networks of the brain are highly interconnected. One consequence of this is that multitasking will be the exception rather than the rule.

It turns out that whichever object or task is engaging our conscious attention will typically engage large swathes of the brain.4 Accordingly, there will typically be severe interference between any two tasks or problems that engage our conscious attention – because the cooperative style of brain computation precludes a single brain network carrying out two distinct tasks. This means not only that we can consciously attend to just one problem at a time, but also that if we are consciously thinking about one problem, we cannot even unconsciously be thinking about another – because the brain networks involved would be likely to overlap. Tasks and problems needing conscious attention engage swathes of our neural machinery; and each part of that machinery can only do one thing at a time. In particular, the unconscious cannot be working away on, say, tricky intellectual or creative challenges, while we are consciously attending to some other task – because the brain circuits that would be needed for such sophisticated unconscious thoughts are ‘blocked’ by the conscious brain processes of the moment. We shall return to the far-reaching implications of ‘no background processing’ below, leading us to revise our intuitions about ‘unconscious thoughts’ and ‘hidden motives’, and eliminate any suspicion that our behaviour is the product of a battle between multiple selves (e.g. Freud’s id, ego and superego).

The brain is a set of highly interconnected, and cooperative, networks, but the structure of these networks turns out to be particularly revealing: there is a relatively narrow neural bottleneck through which sensory information flows. This bottleneck restricts the possibility of doing too many things at once – but, as we shall see, it also gives some fascinating clues about the nature of conscious experience.

PRODDING THE CONSCIOUS BRAIN

The eminent neurosurgeon Wilder Penfield pioneered brain investigations and brain surgery on people who were wide awake.5 From the patient’s point of view, a little local anaesthetic to deal with any pain of the incision through the skull is all that is required. Though the brain detects pains of many and varied types throughout the body (pokes, abrasions, twists, excesses of heat and cold), the brain has no mechanism for detecting damage to itself. So Penfield’s brain operations were entirely painless for his patients.

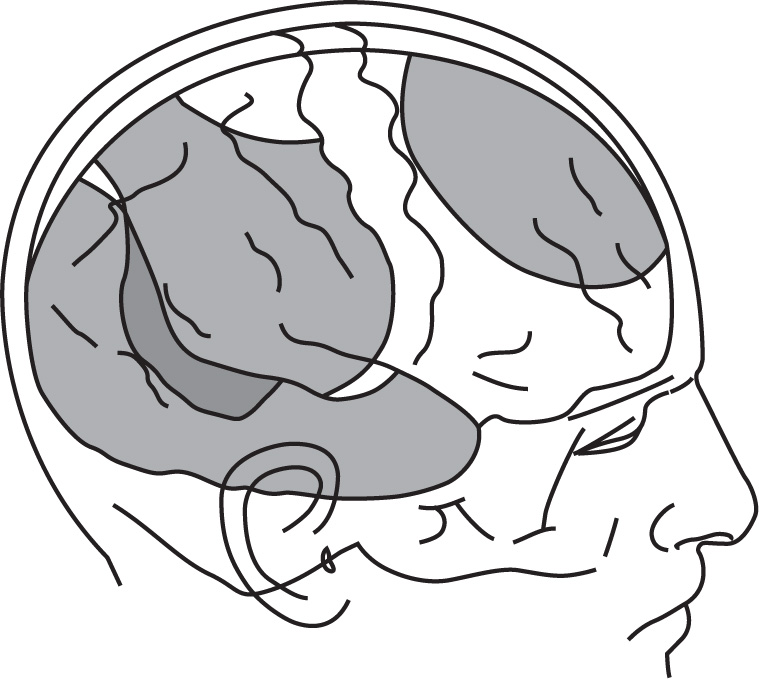

The purpose of Penfield’s surgery was to relieve severe epilepsy by attempting to isolate, and remove, portions of the brain from which the seizures originated. In an epileptic attack, the cells across large areas cease any complex cooperative computation to solve the problem of the moment and start instead to ‘fire’ in slow synchronized waves and hence, being entrained by each other, become disengaged from their normal information-processing function. A slightly fanciful parallel is to imagine the population of a busy city suddenly dropping their varied and highly interconnected activities (buying, selling, chatting, building, making) to join in a single, continuous, coordinated, but entirely involuntary Mexican wave – wherever the wave spreads, work will come to a complete halt. In severe epileptic attacks, the whole of, or large areas of, the cerebral cortex become entrained and hence entirely non-functional, until the brain is somehow able to reset itself; people with severe epilepsy can suffer such debilitating attacks many times each day. The entrainment in epilepsy typically starts in a specific region of the cortex. It is as if the inhabitants of one district are particularly prone to spontaneously launching into Mexican waves – and the nearby neighbourhoods are then drawn in, and the wave spreads inexorably across the city. Penfield’s logic was that, if only the troublesome district could somehow be isolated from the rest of the city, the Mexican wave would be unable to spread – and normal life would continue unhindered. In practice, the most effective treatment often turned out to be rather extreme: rather than a few subtle surgical cuts to key regions of the cortex, the equivalent of closing a few bridges or main roads, Penfield was often driven to remove large regions of the cortex, analogous to flattening huge areas of a city (see Figure 28).

I can scarcely imagine anything more alarming than having areas of one’s brain removed with nothing more than a local anaesthetic. But the use of a local anaesthetic turned out to be crucial: because the patient was awake, Penfield could electrically stimulate different parts of the cortex, and the patient could report a good deal of information about the function of each area. Some areas of the cortex are more crucial than others, and Penfield was therefore able to operate, as far as possible, without inadvertently causing paralysis or loss of language. The surgeon was in the uncanny position of being able to converse with patients throughout the period that substantial areas of their brains were being removed. The patients not only maintained consciousness throughout, but typically maintained a fluid conversation, neither exhibiting nor reporting any disruption to their conscious experience.

One might conclude that perhaps these areas of cortex are simply not relevant to conscious thought. But a wide range of other considerations ruled out this possibility. For example, recall that the visual neglect patients we described in Chapter 3 frequently had no conscious experience of one half of the visual field – and the area of absence of consciousness maps neatly onto the area of visual cortex that had been damaged. Similarly, patients who have, often through a localized stroke, suffered damage to cortical regions known to process colour, motion, taste, and so on, show the consequences of such damage in their conscious experience: they no longer perceive colour normally, may view the world as intermittently frozen and moving jerkily, or report that they have lost their sense of taste. In short, the cortical processing machinery we possess seems to map directly onto the conscious phenomenology we experience. Penfield himself was able to collect new and very direct evidence of the close connection between cortex and consciousness, through his electrical stimulation of different parts of the cortical surface. Such stimulation often did intrude on conscious experience, and in very striking ways. Depending on the area and the stimulation, patients would often report visual experiences, sounds, dreamlike strands, or even what appeared to be entire flashes of memory (most famously, one patient volunteered the strangely specific ‘I can smell burnt toast!’ in response to a particular electrical probing of the brain).

So how could it be that stimulating a brain area leads to conscious phenomenology, but removing that very same area appears to leave conscious experience entirely unaffected? Penfield’s answer was that consciousness isn’t located in the cortical surface of the brain, but in the deeper brain areas into which the cortex projects – in particular, in the collection of evolutionarily old ‘sub-cortical’ structures that lie at the core of the brain and which the cortex enfolds.

The anatomy of the brain gives, perhaps, an initial clue. Sub-cortical structures (such as the thalamus) have rich neural projections that fan out into the cortex that surrounds them, and these connections allow information to pass both ways. Intriguingly, most information from our senses passes through the thalamus before projecting onto the cortex; and information passes in the opposite direction from the cortex through to deep sub-cortical structures to drive our actions. So what we may loosely call the ‘deep’ brain serves as a relay station between the sensory world and the cortex, and from the cortex back to the world of action. Here, perhaps, somewhere in these deep brain structures, is the crucial bottleneck of attention; and whatever passes through the bottleneck is consciously experienced.

Penfield’s viewpoint was more recently elaborated on and extended by Swedish neuroscientist Björn Merker.7 Merker highlights a number of further observations that fit with Penfield’s suspicion that conscious experience requires linkage between diverse cortical areas and a narrow processing bottleneck deep in the brain. If conscious experience is controlled by the deep, sub-cortical brain structures, for example, then one might expect those structures to control the very presence of consciousness; in particular, to act as a switch between wakefulness and sleep. Indeed, such a switch does appear to exist – or, at least, electrically stimulating highly localized deep brain structures in animals (specifically the reticular formation) can lead to a sharp reduction in activity across the whole of the cortex. The animal lapses into a quiescent state.8 Moreover, if this brain area is surgically removed, the animal is comatose – as if unable to wake up. By contrast, wakefulness is unaffected in either animals or humans by removal of large areas of cortex.

Might temporary disruption deep in the human brain cause the switch momentarily to be thrown – so that consciousness ceases abruptly, perhaps just for a matter of seconds or minutes? Following Penfield, Merker points out that so-called ‘petit mal’ or absence epilepsy seems to have just this character. A petit mal episode involves a person, during everyday activity, suddenly adopting a vacant stare and becoming completely unresponsive to their surroundings. If the patient is walking, she will slow and freeze in position, while remaining upright; if she is speaking, speech may continue briefly, although typically slowing and then ceasing entirely; if eating, a forkful of food may hover in space between the plate and the mouth. Attempts to rouse the patient during a period of ‘absence’ are usually ineffective, although they can on occasion cause the patient to ‘awake’ suddenly. Usually, though, consciousness returns spontaneously, often within seconds. The sufferer typically has no immediate knowledge of having suffered an epileptic episode – conscious experience appears, bizarrely, to pick up uninterrupted from where it left off, as if time, from the point of view of the patient, has stood still. In particular, patients have complete amnesia for the period of ‘absence’.

Recordings of the electrical activity in the cortex during periods of ‘absence’ show the typical slow-wave pattern – but this pattern appears to synchronize simultaneously across the cortical surface from the very beginning of the episode, rather than, as with many other forms of epileptic seizure, propagating from one area to the next. It is as if a Mexican wave were to begin across the entire city, rather than rippling from one district to the next – and this would suggest some external communication signal, perhaps a radio broadcast, simultaneously instructing the population to act in unison. Penfield’s conclusion is that the deep sub-cortical brain structures, with their rich fan of nervous connections to the cortical surface that enfolds them, play precisely this role.

A further clue from Penfield’s neurosurgical investigations comes from results obtained by electrical stimulation as he operated. Such electrical stimulation would frequently trigger epileptic episodes. Patients were, after all, suffering epileptic attacks of the most extreme and frequent form – or they would never have been referred to Penfield for such radical surgery – and so it is not surprising that their brains were readily triggered into an epileptic state. Yet Penfield reports that the one form of epilepsy that was never induced, whichever region of the cortex was stimulated, was ‘absence’ or petit mal epilepsy – no electrical stimulation of the cortex itself could trigger the crisp, immediate, total shutdown of the cortical system, because, he suggested, the ‘switch’ of consciousness lies not in the cortical surface but deep within the brain.

When we think of the human brain, and the astonishing intelligence it supports, we conjure up an image of the tightly folded walnut-like surface of the cortex, lying just beneath our skull. Indeed, in humans, the cortex is of primary importance. While in many mammals, such as rats, the cortex is of rather modest size in comparison with other brain regions, in primates such as chimpanzees and gorillas it dominates the brain; and it is expanded spectacularly in humans. But the cortex receives its input, and sends its output, through deeper, sub-cortical brain structures – and these sub-cortical structures may determine the contents of the ‘flow’ of consciousness and, indeed, whether we are conscious at all.

To see how this might work, the link between perception and action may be especially illuminating. Suppose that we are picking apples from a tree. Our brain needs to alight on the next apple to be picked, determine whether it is sufficiently ripe and not yet rotten, perhaps identifying it through a veil of foliage, and then needs to plan a sequence of movements which will successfully grasp the apple and twist it from its stem. Or, in the case of a person, the action might consist merely of suggesting that someone else pick the apple, or describing it, perhaps inwardly. But in any case, it is crucial that the action is connected to the visual input concerned with this particular apple, and that the different pieces of the visual input (the different fragments of apple visible through foliage, perhaps) are integrated into a whole. If we reach out to the apple and touch it, then information about the location of our arm, and the sensations as we brush through the leaves and then feel the surface of the apple we grasp, must be linked up with our visual input (so that we know that we are reaching and grasping the very apple that we are looking at). And all of this information must be linked, in turn, to memory: to our earlier decision to pick apples (and, perhaps, only particularly ripe apples) and to the past visual experience that allows us to identify apples, leaves and branches; and we may, in turn, be reminded of apple-picking incidents in our childhood, agricultural or biological facts about apples, and so on. The action of reaching and grasping an apple is itself potentially complex, requiring coordination not just of a single arm and hand, but potentially of stretching, standing on tiptoe if necessary, and making a suite of postural compensations to maintain balance.

Now actions occur, roughly, one at a time; but each action requires the integration of potentially large amounts of information from the senses, from memory, and from our motor system. So perhaps the role of one or more structures deep in our brains is to serve as the focal point for such integration; to probe the diverse areas of the surrounding cortex, devoted to the processing of sensory information, memory or the control of movement, and to bring them to bear on the same problem. Thus, the sequential nature of action will be mirrored by the sequential flow of thought.

While deep brain structures, rather than the cortex, may be the bottleneck through which conscious experience flows, the activation of the cortex, for example by stimulation using an electrode, should be able to intrude on conscious experience: connections between cortex and deep brain areas are two-way. A sudden surge of activity in a particular area of the cortex may lead to signals into deep brain areas which disturb, and even override, their current activity, generating, for example, strange sensory experiences and fragments of memory. But crucially, the complete disappearance of an area of cortex, unless it happens to be engaged directly in some current mental activity, will pass entirely unnoticed, without even the merest ripple in conscious experience. And this is, of course, precisely what Penfield observed: patients reported strange fragments of conscious experience, such as the smell of burnt toast, when a piece of cortex was electrically stimulated, but no anomalous conscious experience at all as Penfield removed entire areas of brain.

This perspective explains, too, why patients with visual neglect, where the cortex corresponding to a large area of the visual field may be damaged or entirely inoperative, can none the less be entirely unaware of their deficit. We are consciously aware, perhaps, only of the specific task on which we are currently focused. So, if engaged in fruit-picking, a person with visual neglect will focus their attention only on visual information in parts of the visual cortex which are intact, and link with memory and action systems through the coordinating power of structures deep in the brain, just as for a person with normal visual processing. Because those deep brain structures are unaffected, conscious experience may be entirely normal. They will not, of course, pick or describe fruit whose visual positions project into the ‘blind’ area of visual cortex – so their visual phenomenology, while entirely normal moment by moment, will be restricted to, say, the right half of their visual field.

Our brain is fully engaged with making sense of the information it is confronted with at each moment. Consciousness, and indeed the entire activity of thought, appears to be guided, sequentially, through the narrow bottleneck: deep, sub-cortical structures search for, and coordinate, patterns in sensory input, memory and motor output, one at a time. The brain’s task is, moment by moment, to link together different pieces of information, and to integrate and act on them right away. Our brain will, of course, lay down fresh memories as this processing proceeds; and draw on the richness of memories of past processing.

So our no background processing slogan is reinforced. Or, at least, if there are brain processes which are scurrying about behind the scenes, contemplating, evaluating and reasoning about matters that we appear not to be thinking about at all, then neuroscience has found no trace of them. The brain appears, instead, to be concentrating on making sense of immediate experience, and generating sequences of actions, including language (whether spoken aloud or inner speech), through the narrow bottleneck of conscious thought. This is why it can only integrate and transform information to solve one problem at a time.

We now have some tentative answers about how the cooperative style of brain computation operates. In the Penfield/Merker vision of the brain, both the questions that the brain faces and the answers that it provides are represented in sub-cortical structures, including the thalamus, which serves as a relay station between the cerebral cortex and our sensory and motor systems – essentially as a gateway between the hemispheres of the brain and the outside world. And, we might suspect, both the questions and their answers are concerned primarily with the organization of sensation and movement; and the rich interconnections between these structures and the cortex provide the networks of cooperative computation that are able to solve the problems posed by these sub-cortical structures. Yet while the cortex is crucial in processing visual information, planning movement and drawing on memories, we are aware of the results of the vast cooperative enterprise across the brain only in so far as the results of such computations reach the sub-cortical ‘gateway’ structures – these, and not the cortex itself, are the locus of conscious experience.

FOUR PRINCIPLES OF THE CYCLE OF THOUGHT

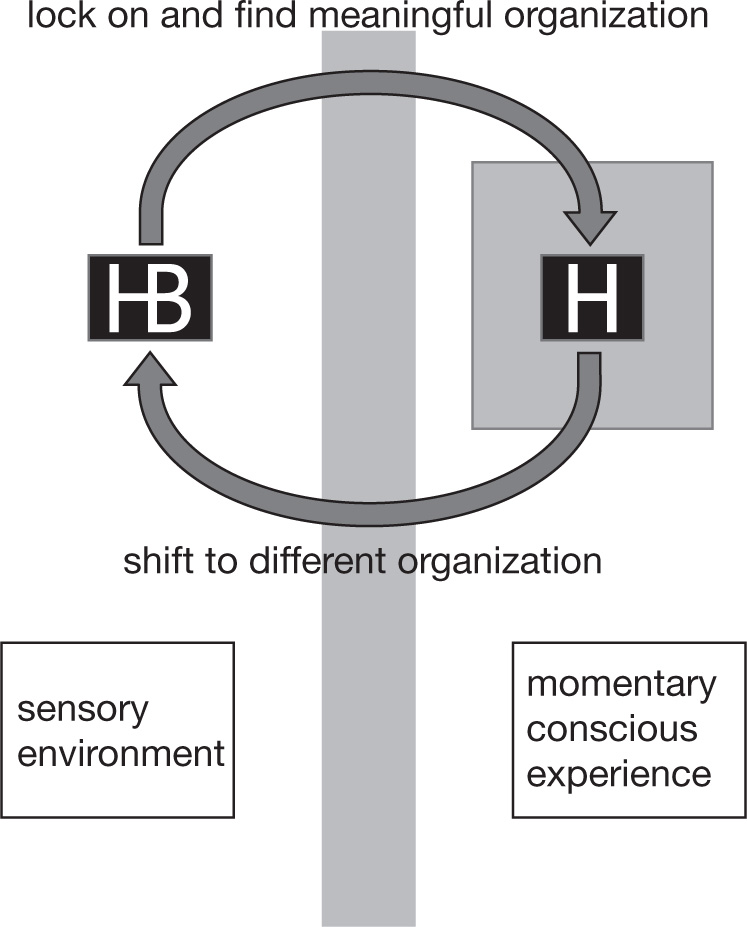

Here is an outline of how the mind works, in the form of a picture (Figure 29) and four proposals. The first principle is that attention is the process of interpretation. At each moment, our brain ‘locks onto’ (or, in everyday terms, pays attention to) a target set of information, which the brain then attempts to organize and interpret. The target might be aspects of sensory experience, a fragment of language, or a memory.9 Figure 29 illustrates a case where the brain has momentarily locked onto the ‘H’ in the complex stimulus. Moments later, it might alight on the ‘B’. Crucially, our brain locks onto one target at a time. This implies that the line shared between the ‘H’ and ‘B’ in the stimulus is, at any given moment, interpreted as belonging to one or the other – but not both. Following Penfield and Merker’s conjectures, we might suspect that such information is represented in sub-cortical structures buried deep in the brain, with connections across the entire cortex, so that the full range of past experience and knowledge can be brought to bear on finding meaning in the current target. Remember that we can lock onto, and integrate, information of all kinds. We can use any and all pieces of information and great leaps of ingenuity and imagination to find meaning in the world, but we can only create one pattern at a time.

Our second principle concerns the nature of consciousness: our only conscious experience is our interpretation of sensory information. The result of the brain’s ‘interpretations’ of sensory input is conscious – we are aware of the brain’s interpretation of the world – but the ‘raw materials’ from which this interpretation is constructed, and the process of construction itself, are not consciously accessible. (Conscious experience is the organization represented in deep brain structures, with inputs from across the cortex – we are not directly conscious of cortical activity itself.) So in Figure 29, we perceive the ‘H’ or the ‘B’, but have absolutely no awareness of the process through which these were constructed.

Perception always works this way: we ‘see’ objects, people, faces as a result of a pattern of firing from light-sensitive cells triggered by light falling on our retina; we ‘hear’ voices, musical instruments and traffic noise as a result of picking up the complex patterns of firing of vibration-detecting cells in our inner ear. But we have no idea, from introspection alone, where such meaningful interpretations spring from – how our brain makes the jump from successive waves of cacophonous chatter from our nervous system to a stable and meaningful world around us. All we ‘experience’ is the stable and meaningful world: we experience the result, not the process.10

So far, we have focused on our consciousness of meaning in sensory information. The third principle is that we are conscious of nothing else: all conscious thought concerns the meaningful interpretation of sensory information. But while we have no conscious experience of non-sensory information, we may be conscious of its sensory ‘consequences’ (e.g. I have no conscious experience of the abstract number 5, although I may conjure up a sensory representation of five dots, or the shape of the symbol ‘5’). Deep brain regions are, after all, relay stations for conveying sensory information to the cortex – so if they are locations of conscious experience, then we should expect conscious experience to be sensory experience, and nothing more.

The claim that we are aware of nothing more than meaningful organization of sensory experience isn’t quite as restrictive as it sounds. Sensory information need not necessarily be gathered by our senses, but may be invented in our dreams or by active imagery. And much sensory information comes, of course, not from the external world but from our own bodies – including many of our pains, pleasures, and sensations of effort or boredom. We are conscious of the sounds or shapes of the words we use to encode abstract ideas; or the imagery which accompanies them. But we are not conscious of the abstract ideas themselves, whatever that might mean. I can imagine (just about) three apples or the symbols ‘3’, ‘iii’ or ‘three’; and I can imagine various rather indistinct triangles and the word ‘triangle’. But I surely can’t imagine, or in any sense be conscious of, the abstract number 3; or the abstract mathematical concept of ‘triangularity’. I can hear myself say ‘Triangles have three straight sides’ or ‘The internal angles of a triangle add up to 180 degrees’ – but I surely don’t have any additional conscious experience of these abstract truths.

Similarly, as we have seen already, it is a mistake to think that we are conscious of any of our beliefs, desires, hopes or fears. I can say to myself ‘I’m terrified of water’ or I can have visions of myself struggling desperately as a rip-tide pulls me out to sea. But it is words and images that are the objects of consciousness – not the ‘abstract’ belief. Just in case you doubt this viewpoint, reflect on what beliefs you are conscious of right now. How many are there, exactly? Can you feel when one belief leaves your consciousness or a fresh belief ‘comes into mind’? I suspect not.11

Now we can tie together our three proposals into a fourth principle. I have proposed that an individual conscious thought is the process of creating a meaningful organization of sensory input. So what is the stream of consciousness? Nothing more than a succession of thoughts: an irregular cycle of experiences, each the result of organizing a different aspect of sensory input in turn – the shifting contents of the right-hand box in Figure 29. This fits with the Penfield/Merker story about the brain: sub-cortical structures deep in the brain form a ‘crucible’ onto which the resources of the whole cortex can be focused to impose meaning on fragments of sensory information – but only one pattern can be placed in the crucible at a time.

Note, in particular, that the cycle of thought is sequential: we lock onto and impose meaning on one set of information at a time. Now of course your brain can control your breathing, heart-rate and balance independently of the cycle of thought – to some extent at least (we don’t topple over when particularly engrossed in a problem). But the brain’s activities beyond the sequential cycle of thought are, we shall see, surprisingly limited – we can manage, roughly speaking, just one thought at a time.

From this point of view, many of the strange phenomena we saw in Part One fall into place:

- The brain is continually scrambling to link together scraps of sensory information (and has the ability, of course, to gather more information, with a remarkably quick flick of the eye). We ‘create’ our perception of an entire visual world from a succession of fragments, picked up one at a time (see Chapter 2). Yet our conscious experience is merely the output of this remarkable process; we have little or no insight into the relevant sensory inputs or how they are combined.

- As soon as we query some aspects of the visual scene (or, equally, of our memory), the brain immediately locks onto relevant information and attempts to impose meaning upon it. The process of creating such meaning is so fluent that we imagine ourselves merely to be reading off pre-existing information, to which we already have access. Likewise, when scrolling through a document in a word processor, or exploring a virtual reality game, we have the illusion that the entire document, or labyrinth, pre-exists in all its glorious pixel-by-pixel detail (somewhere ‘off-screen’). But, of course, it is created for us by the computer software at the very moment it is needed (e.g. when we scroll down or ‘run’ headlong down a virtual passageway). This is the sleight of hand that underlies the grand illusion (see Chapter 3).

- In perception, we focus on fragments of sensory information and impose what might be quite abstract meaning: the identity, posture, facial expression, intentions of another person, for example. But we can just as well reverse the process. We can focus on an abstract meaning, and create a corresponding sensory image: this is the basis of mental imagery. So just as we can recognize a tiger from the slightest of glimpses, we can also imagine a tiger – although, as we saw in Chapter 4, the sensory image we reconstruct is remarkably sketchy.

- Feelings are just one more thing we can pay attention to. An emotion is, as we saw in Chapter 5, the interpretation of a bodily state. So experiencing an emotion requires attending to one’s bodily state as well as relevant aspects of the outer world: the interpretation imposes a ‘story’ linking body and world together. Suppose, for example, that Inspector Lestrade feels the physiological traces of negativity (perhaps he draws back, hunches his shoulders, turns down his mouth and looks at the floor) as Sherlock Holmes explains his latest triumph. The observant Watson attends, successively, to Lestrade’s demeanour and Holmes’s words, searching for the meaning of these snippets, perhaps concluding: ‘Lestrade is jealous of Holmes’s brilliance.’ But Lestrade’s reading of his own emotions works in just the same way: he too must attend to, and interpret, his own physiological state and Holmes’s words in order to conclude that he is jealous of Holmes’s brilliance. Yet Lestrade may be thinking nothing of the kind – he may be trying (with frustratingly little success) to find flaws in Holmes’s explanation of the case. If so, while Watson may interpret Lestrade as being jealous, Lestrade is not experiencing jealousy (of Holmes’s brilliance, or anything else). Experiencing jealousy results from a process of interpretation in which jealous thoughts are the ‘meaning’ generated; but Lestrade’s mind is attending to other matters entirely – in particular, the details of the case.

- Finally, consider choices (see Chapter 6). Recall how the left hemisphere of a split-brain patient fluently, though often completely spuriously, ‘explains’ the mysterious activity of the left hand – even though that hand is actually governed by the brain’s right hemisphere. This is the left, linguistic brain’s attempt to impose meaning on the left-hand movements: creating such a meaningful (though, in the case of the split-brain patient, entirely illusory) explanation requires locking onto the activity of the left hand in order to make sense of it. It does not, in particular, involve locking onto any hidden inner motives lurking within the right hemisphere (the real controller of the left hand), because the corpus callosum, the main bundle of fibres linking the two hemispheres, has of course been surgically cut. But notice that, even if the hemispheres were connected, the left hemisphere would not be able to attend to the right hemisphere’s inner workings – because the brain can only attend to the meaning of perceptual input (including the perception of one’s own bodily state), not to any aspect of its own inner workings.

We are, in short, relentless improvisers, powered by a mental engine which is perpetually creating meaning from sensory input, step by step. Yet we are only ever aware of the meaning created; the process by which it arises is hidden. Our step-by-step improvisation is so fluent that we have the illusion that the ‘answers’ to whatever ‘questions’ we ask ourselves were ‘inside our minds all along’. But, in reality, when we decide what to say, what to choose, or how to act, we are, quite literally, making up our minds, one thought at a time.