These guidelines are primarily based on the work of Haladyna and Rodriguez (2013), reflecting decades of item-writing research and guidance. In addition, we reviewed dozens of guidelines from offices of teaching and learning at a variety of colleges and universities to secure the most comprehensive guidance available. Some of these guidelines have a research basis; the empirical evidence for them is comprehensively reviewed by Haladyna, Downing, and Rodriguez (2003) and Haladyna and Rodriguez (2013). When a guideline is research based, we simply say so in its introduction.

Each guideline is described here, including examples of MC items with poor and better versions. In Chapter 6, we provide many examples of items from different fields, again including poor and better versions.

Content Concerns

1. Base each item on one aspect of content and cognitive task.

The main goal here is to make each item as direct and precise as possible, clearly stating the question, challenge, or problem being posed to the student. Many classroom test items are flawed because their writers fail to understand and follow this guideline.

- Poor: A very high positive correlation between academic aptitude and achievement is estimated with a random sample of college students, but we should expect a ____________ correlation among a random sample of ____________ students.

- A. negative—high school

- B. lower—honors

- C. zero—first-year

- D. higher—a larger sample of

The poor version is a common format, but it asks for two different elements of content. In addition, option C is not plausible, as “zero” is too absolute. This item is less problematic than most, since both pieces of information are unique in each option. Consider this version, in which each piece of information appears in two options:

- Poor: A very high positive correlation between academic aptitude and achievement is estimated with a random sample of college students, but we should expect a ____________ correlation among a random sample of ____________ students.

- A. lower—high school

- B. lower—honors

- C. higher—high school

- D. higher—honors

In both cases, we can improve measurement by focusing on one aspect of the task, which lets us be more certain about the nature of student understanding or misunderstanding.

- Better: A correlation between academic aptitude and achievement is estimated from a random sample of college students. How will a second correlation from a random sample of honors students compare? The second correlation will be

- A. lower.

- B. similar.

- C. higher.

Sometimes the complexity is subtle. The following item presents two different scenarios when only one is required.

- Poor: What is the most likely shape of a distribution if it is unimodal, but the mean is different than the median or the median is different than the mode?

- A. normal

- B. skewed

- C. leptokurtic

- Better: What is the most likely shape of a distribution if it is unimodal, but the mean is different than the mode?

- A. normal

- B. skewed

- C. leptokurtic

2. Use new material and contexts to elicit higher-order cognitive skills.

Avoid using direct quotes, examples, and other materials from textbooks, reading assignments, and lectures from class. Such materials invite simple recall. Novel material and contexts let you tap cognitive tasks that are more important and that measure students’ ability to generalize their knowledge and skills. The poor example that follows is based on the correlation item from guideline 1. With the context now removed, we’re assessing recall of information rather than application to a new situation.

- Poor: A correlation is estimated using a heterogeneous sample of people. How will a second correlation from a more homogeneous sample compare? The second correlation will be

- A. lower.

- B. similar.

- C. higher.

At the end of this chapter, we provide a list of recommendations and question templates that can be used to measure higher-order thinking.

3. Keep the content of items independent of one another.

This concern is more common with constructed-response items, particularly in mathematics, where the solution from one question is used to solve the next question. But this is also important in MC items.

The correct response to one item should not depend on the response to another item. If the student gets the first item incorrect, and the next item depends on this response, the next item will likely be answered incorrectly as well. You want each item to provide unique and independent information about a student’s KSAs.

- Poor: If the mean is 50, the median is 40, and the mode is 30, what is the shape of the distribution?

- A. positively skewed

- B. normal

- C. negatively skewed

- D. unknown

Based on this distribution (from the previous question), is the distribution symmetric?

Another way dependent items can create problems is by providing clues to the correct answer. If the following question accompanied the first poor question, it might help the student identify the correct option for the preceding item.

A distribution that is positively skewed has one tail “pulled” or extended in which direction?

It is best to create unique and independent items. Often, several items must be written to tap important content, but they should not be connected in any way.

4. Test important content. Avoid overly specific and overly general content.

This guideline should really state: Test your course and instructional learning objectives. And those learning objectives should declare the important elements of knowledge, skills, and abilities that result from taking the class. There are many possible examples of overly specific questions about details that students should not be required to memorize.

Consider the following question from a course on educational and psychological measurement, which focuses on application of the principles of measurement.

- Poor: The reliability of the Hypochondria scale of the MMPI-2 is

There is no real answer to this question (it is hypothetical). Anyway, we should all cry when faced with such a question. But we can reclaim it.

- Better: Consider the following test question:

The reliability of the Hypochondria scale of the MMPI-2 is

The item-writing flaw in this item is that it

- A. tests multiple cognitive tasks.

- B. is a trick item.

- C. includes overly specific content.

A better option might be to offer a series of MC items, each with an item-writing flaw, with a list of guidelines. The students can then match or identify the guideline being violated in each MC item.

Sometimes the content is simply too general. Not only do such items tend to be easy, but they also cost us the opportunity to test content that is more important: content we want students to understand and be able to apply, analyze, and so on.

- Poor: Validity is important for

- A. test taker experience.

- B. test score interpretation.

- C. test-retest reliability.

- Better: Dropping poor items from a test on the basis of item analysis data alone will likely lower

- A. content-related validity.

- B. score reliability.

- C. average item difficulty.

The poor question is very general and doesn’t really tap specific knowledge or understanding about validity—hopefully we all know now that we validate test score interpretations and uses. It is much more important to inquire about students’ understanding of the characteristics and principles of validity and what affects it.

5. Avoid opinions and trick items.

Opinion items often don’t have a clear and consistent best option, because the answer depends on whom you ask. However, if the point is to connect the opinion with a specific person, then the item must include the source of the opinion. This is one of the dangers of true-false items: they are often written so that they appear to be opinions.

- Poor: School accountability testing should only be reported at the level of

- A. students.

- B. teachers.

- C. schools.

- D. school districts.

- Better: According to Professor Rodriguez, school accountability test results should be reported at the level of

- A. students.

- B. teachers.

- C. schools.

This item still has a problem since the word “school” appears in both the stem and the options (clang association). If the term “school” is not used in the stem, then the level of accountability is left ambiguous, and any option could be correct. Unfortunately, it is sometimes not easy (or even possible) to ask questions we want without providing some level of clue to students.

Trick items are a different story. There is very little research on the use of trick items, but it is a common complaint from students. Dennis Roberts (1993) surveyed 174 college students and 41 college faculty, asking them to define trick questions. Nearly half reported that MC items can be trick items, with fewer reporting that true-false (20%), short-answer (3%), matching (1%), essay (1%), or any item format (27%) could be tricky. Most participants also reported that when trick questions appear on the test, it is deliberate. There were several themes that emerged from the definitions of trick items from these college students and faculty:

- The test developer intends to confuse or mislead the student.

- The content is trivial and not important.

- The content is at a level of precision not discussed in class—options are too similar to each other.

- The stem contains extra irrelevant information.

- There are multiple correct answers.

- The item presents knowledge in the opposite way from how it was presented in class.

- The item is so ambiguous that even the most knowledgeable student has to guess.

Roberts then created a 25-item test in which 13 of the items were trick items with many of the characteristics in the list above (irrelevant content, ambiguous stems, principles presented in the opposite way from how they were introduced in class, and others). More than 100 students were asked not to answer the items but simply to rate each one on a scale from 1 (not a trick item) to 4 (definitely a trick item). Students were not effective at distinguishing trick items from non-trick items: they were relatively accurate in identifying the non-trick items as such but far less able to correctly identify the trick items.

Here are two of the example trick items from Roberts:

- Tricky: A researcher collected some data on 15 students and the mean value was 30 and the standard deviation was .3. What is the sum of X if the variance is 9 and the median is 29?

This item is tricky because it is testing trivial content (knowing the sum is the mean times the sample size), but more importantly, it contains irrelevant information about other descriptive statistics.
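Worked out, the item needs only the sample size and the mean, because the sum of the scores equals the number of scores times their mean:

$$\sum_{i=1}^{n} X_i = n\bar{X} = 15 \times 30 = 450$$

The standard deviation, variance, and median play no part in the calculation.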

- Tricky: Someone gives a test and also collects some attitude data on a group of parents in a city. The test has an average value of 30 with a variability value of 4 while the attitude average score is 13 with a variance of 18. The relationship between the two variables is .4. What is the regression equation using X to predict Y?

- A. Y = .9 + .2 X

- B. Y = −32 − 1.3 X

- C. Y = 3.2 + 3.2 X

- D. None of the above

This item is tricky because of the ambiguity in the stem. Are the test scores X or Y? Is the relationship described by .4 a correlation? Is “variability” the same as variance?
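To see how much the ambiguity matters, the Python sketch below computes the least-squares line $\hat{Y} = a + bX$ from summary statistics, using $b = r\,s_Y/s_X$ and $a = \bar{Y} - b\bar{X}$, under each reading of the stem. It assumes (the stem does not say) that .4 is a Pearson correlation; the two open questions it varies are which variable is X and whether the “variability value of 4” is a standard deviation or a variance.

```python
import math
from itertools import product


def regression_line(mean_x, sd_x, mean_y, sd_y, r):
    """Least-squares line Y = a + bX computed from summary statistics."""
    b = r * sd_y / sd_x
    a = mean_y - b * mean_x
    return a, b


# Summary statistics given in the stem.
TEST_MEAN, TEST_VARIABILITY = 30, 4
ATTITUDE_MEAN, ATTITUDE_VARIANCE = 13, 18
R = 0.4  # assumed to be a Pearson correlation

lines = {}
for test_is_x, variability_is_sd in product([True, False], repeat=2):
    # Read "variability value of 4" either as an SD or as a variance.
    test_sd = TEST_VARIABILITY if variability_is_sd else math.sqrt(TEST_VARIABILITY)
    attitude_sd = math.sqrt(ATTITUDE_VARIANCE)
    if test_is_x:
        a, b = regression_line(TEST_MEAN, test_sd, ATTITUDE_MEAN, attitude_sd, R)
    else:
        a, b = regression_line(ATTITUDE_MEAN, attitude_sd, TEST_MEAN, test_sd, R)
    lines[(test_is_x, variability_is_sd)] = (round(a, 2), round(b, 2))
    print(f"test as X = {test_is_x}, 4 as SD = {variability_is_sd}: "
          f"Y = {a:.2f} + {b:.2f} X")
```

The four readings produce four different equations, and none of them reproduces options A through C, which is exactly why a knowledgeable student is left guessing.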

Another example of a tricky item is one similar to an item found in an educational measurement textbook item bank. Consider the following item.

- Tricky: A measure of four different social-emotional skills was correlated with high school grades (GPA), with results as shown below. Which measure permits the most accurate prediction of GPA?

- A. Positive Identity r = +.35

- B. Social Competence r = −.20

- C. Mental Distress r = −.50

- D. Commitment to Learning r = +.40

This is a tricky item because the list invites two different considerations. One is the construct: which characteristic might be the most useful predictor of school grades? We probably believe successful students are committed to learning. Note that positive identity is a strange characteristic, typically meaning self-assured and motivated. And of course we hope that successful students are not mentally distressed (although it may depend on the school!). The other consideration is statistical, and it is the one the item actually asks about: the most accurate prediction is simply a function of the largest correlation, regardless of its direction or sign or the construct being measured. We often think of “prediction” as positive, but statistically, we get the most accurate prediction from the correlation with the largest absolute value; it doesn’t matter what the predictor is.
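The keyed answer can be checked by ranking the measures on the absolute value of r, or equivalently on r², the proportion of GPA variance that a linear prediction from each measure accounts for. A short Python sketch using the item’s own (hypothetical) correlations:

```python
# Correlations with GPA as given in the item.
correlations = {
    "Positive Identity": 0.35,
    "Social Competence": -0.20,
    "Mental Distress": -0.50,
    "Commitment to Learning": 0.40,
}

# Prediction accuracy depends on |r|; r squared is the share of GPA
# variance explained by a linear prediction from that measure.
ranked = sorted(correlations.items(), key=lambda item: abs(item[1]), reverse=True)
for name, r in ranked:
    print(f"{name:24} r = {r:+.2f}   r^2 = {r * r:.2f}")

best = max(correlations, key=lambda name: abs(correlations[name]))
print("Most accurate single predictor:", best)
```

Mental Distress (r² = .25) outranks Commitment to Learning (r² = .16) even though its correlation is negative.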

Formatting and Style Concerns

6. Format each item vertically instead of horizontally.

MC items can take up a fair amount of room on a page, especially when four, five, or more options are listed one per line. But this is the preferred formatting, as it avoids potential errors caused by formatting the item horizontally, which we are sometimes tempted to do to save room. Consider the following examples:

- Poor: If 64% of the test’s total score variance is error variance, what is the test’s reliability coefficient?

- A. .36     B. .56     C. .64     D. .80

- Better: If 64% of the test’s total score variance is error variance, what is the test’s reliability coefficient?

- A. .36

- B. .56

- C. .64

- D. .80

In the horizontal format, it’s possible to read through the row of letters and numbers and inadvertently select the letter that follows the intended answer choice rather than the letter that precedes it. It can be confusing to tell which letter goes with which option. This is especially likely if the space between options is reduced:

- Poor: Expectancy tables provide what form of validity evidence?

- A. Concurrent B. Predictive C. Content D. Construct

- Better: Expectancy tables provide what form of validity evidence?

- A. Concurrent

- B. Predictive

- C. Content

- D. Construct

7. Edit and proof items.

This should go without saying. If you write items throughout the term, then when you start to put them together in a test form, you can review your earlier work and edit items with fresh eyes. Even we occasionally administer class tests that contain typos, often discovered by students during the exam. This is when we reluctantly get up in front of the class and announce the correction, or write it on the board. But the best way to minimize errors is to have a colleague or teaching assistant review the test before you administer it to the class.

In mathematics and other technical fields, the potential for typographical errors is great, and small errors can change the meaning entirely. Consider the following:

Poor: $(2.5 \times 10^{3)}$

Here, the closing parenthesis is in the superscript. Is this supposed to be $(2.5 \times 10^{3})$, which equals 2,500, or $(2.5 \times 10)^{3}$, which equals 15,625?

Poor: The concepts of reliability and validity of test scores is …

Subject and verb must agree (“is” should be “are”).

- Poor: Why would a student’s local percentile score decrease on a standardized achievement test when the student moved from Minneapolis to Saint Paul?

- A. Different courses are offered in each district.

- B. Percentile norms are ordinal measures.

- C. The ability of the comparison group changed.

The word “each” in option A should not be underlined. If you do underline key words, there should be one underlined in each option. But such writing techniques tend to be distracting rather than helpful.

8. Keep the language complexity of items at an appropriate level for the class being tested.

This is a challenging guideline. We know from a great deal of research that language complexity often interferes with our ability to measure substantive KSAs. This is particularly important for students with some kinds of disabilities and for students who are nonnative speakers of the language of the test. Unless you are testing language skills, and the complexity of reading passages and test questions is relevant to instruction and reflects the learning objectives, language should be as simple and direct as possible. We don’t want unnecessarily complex language in our test items to interfere with our measurement of KSAs in mathematics, science, business, education, or other areas. Even in language-based courses (e.g., literature), the complexity of the language used in test items should be appropriate for the students and reflect the learning objectives.

- Poor: Why would a student’s local percentile score attenuate on a standardized achievement test when the student relocated from Minneapolis to Saint Paul?

Unless it was part of the curriculum in the course, the term “attenuate” is not commonly understood to be synonymous with “decrease.” A better version of this stem is provided as an example for guideline 7.

- Poor: What will help ameliorate the negative effects of global warming?

- Better: What will help reduce the negative effects of global warming?

- Poor: What is the plausible deleterious result of poor item writing?

- Better: What is the likely harmful result of poor item writing?

- Poor: What would be the result if scientists would put forth effort to promulgate their work?

- Better: When scientists publish their work, they contribute to …

Words matter, particularly in diverse classrooms that are likely to include first-generation college students, nonnative speakers of the language of instruction (for most of us, nonnative English speakers), and even students who are not majoring in the field of the course. Terms and language that we might take for granted can make the difference between understanding a question and not understanding it. If we want to test for understanding, application, or other higher-order skills, we don’t want language to get in the way, unless of course the point of the question is knowledge of language or terminology.

Things to avoid in item writing, unless they are part of the learning objective:

- Technical jargon; use common terms or define special words.

- Passive voice; use active voice.

- Acronyms; spell them out.

- Multiple conditional clauses; be direct and test one condition at a time.

- Adjectives and adverbs; be direct and succinct.

- Abstract contexts; provide concrete examples or contexts.

- Prepositional phrases; if necessary, use only one, and make it important to answering the question.

For more information on creating test items that minimize irrelevant complex language, particularly for nonnative English speakers, see the work of Jamal Abedi (2016), who provides many examples of how language can interfere with measuring KSAs.

9. Minimize the amount of reading in each item. Avoid window dressing.

This is related to but different from the previous guideline on minimizing language complexity. Another way to address the same issue is to reduce the amount of reading in the context materials and test items—unless of course reading is the target of measurement. If the learning objectives are about reading skills and abilities, then the reading load should be appropriate. But in most subject-matter tests, reading skills may interfere with our ability to measure KSAs in the (nonreading) subject matter. Reducing the amount of reading also has the effect of reducing the cognitive load, so that the task presented in the item is focused and allows us to interpret the response as a function of the intended content and cognitive level. In this way, we know how to interpret responses. And it reduces the amount of time required to take the test, possibly making room for additional test items, improving our coverage of the content and learning objectives.

- Poor: Because of the federal education accountability law in the United States of America, known as the Every Student Succeeds Act, every state is required to administer exams to test achievement of reading, mathematics, and science standards. Some states require students to pass one or more of these tests in high school in order to graduate. What makes these high school graduation tests be considered criterion-referenced?

- A. It was built with a test blueprint.

- B. Most students will pass a graduation test.

- C. It is based on a clearly defined domain.

- D. It has a passing score required for graduation.

- Better: A high school graduation test is criterion referenced if

- A. it was built with a test blueprint.

- B. most students pass the test.

- C. it is based on a clearly defined domain.

- D. it is required to receive a diploma.

The better version has just over one third as many words (36) as the poor version (95). Notice that the options are also more direct in the better version.

- Poor: Some current standardized tests report test score results as percentile bands, which include a range of percentile values. One advantage that is claimed for this procedure is that it

- A. expresses results in equal units across the scale.

- B. emphasizes the objective nature of test scores.

- C. reduces the tendency to overinterpret small differences.

- Better: What is one advantage of reporting test results as percentile bands?

- A. They express results in equal units.

- B. They emphasize the objective nature of scores.

- C. They reduce overinterpretation of small differences.

In this case, the number of words was reduced from 51 (poor) to 30 (better). In both cases, the poor versions started with context information that is irrelevant to the target of measurement.

Context is often used in mathematics and science tests, as we want students to be able to apply mathematical and scientific principles in context. But in many cases, the context is not required to solve the problem. If context is used, it must be essential to the question. Otherwise, it introduces irrelevant information that may interfere with measuring the intended learning objective.

- Poor: What is an example of criterion-referenced measurement?

- A. Santiago’s score is three standard deviations above the state mean.

- B. Hattie scored above the 95th percentile requirement to win the scholarship.

- C. Jean Luc answered 80 percent of the items correctly.

- D. Eighty percent of the class scored above a T-score of 55.

- Better: Which phrase represents criterion-referenced measurement?

- A. 80 percent correct

- B. T-score of 55

- C. 95th percentile

Now, the poor version may be more interesting than the better version, but it contains irrelevant information and requires more time and effort to read; the number of words was reduced from 48 to 13.

- Poor: Maria José did a study of barriers to student achievement and she measured student test anxiety with a brief questionnaire. She found that most students were similar, with a low amount of anxiety, but there were a few students with extremely high levels of anxiety. Because the anxiety scores were positively skewed, she decided to standardize the scores by transforming them into z-scores. What will the shape of the resulting score distribution be?

- A. Negatively skewed

- B. Normal

- C. Positively skewed

- D. Cannot tell from this information

- Better: If a score distribution is positively skewed, what is the shape of the distribution after the scores are transformed into z-scores?

- A. Negatively skewed

- B. Normal

- C. Positively skewed

The fact that Maria José is doing a study of test anxiety is interesting but not needed to answer the question. Both versions of this item also have a “clang association,” described later (guideline 22c), where “positively skewed” appears in the stem and is also one of the options. But in this case, it is an important element of the question: there is a common misconception that standardizing scores also normalizes distributions, which is not true. Avoiding this clang association would make the item awkward:

- Better: If a score distribution has a heavier right tail, what is the shape of the distribution after the scores are transformed into z-scores?

- A. Negatively skewed

- B. Normal

- C. Positively skewed

Introducing “a heavier right tail” also suggests a strange variation in the kurtosis of the distribution rather than a simple focus on skewness. It also requires an additional inference: when one tail is heavier, the distribution is probably skewed. But the focus of the item is on the fact that transforming scores to z-scores does not change the shape of the distribution.
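Because a z-score is a linear transformation, $z = (X - \bar{X})/s$, it moves the mean to 0 and rescales the spread to 1 but leaves the shape of the distribution, including its skewness, unchanged. The Python sketch below checks this on simulated, positively skewed scores; the data, the exponential shape, and the helper names are illustrative assumptions, not part of the item.

```python
import random
import statistics


def skewness(values):
    """Population (moment) skewness: mean cubed deviation divided by SD cubed."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum((v - mean) ** 3 for v in values) / (len(values) * sd ** 3)


def z_scores(values):
    """Standardize with the population SD: z = (x - mean) / sd."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


# Hypothetical anxiety scores: most students low, a few very high.
# An exponential distribution is used only as a convenient skewed shape.
rng = random.Random(7)
anxiety = [rng.expovariate(1.0) * 10 for _ in range(5_000)]
z = z_scores(anxiety)

print(f"raw skewness: {skewness(anxiety):.3f}")
print(f"z skewness:   {skewness(z):.3f}")
print(f"z mean = {statistics.fmean(z):.3f}, z SD = {statistics.pstdev(z):.3f}")
```

The two skewness values agree (up to floating-point rounding), so a positively skewed set of scores remains positively skewed after standardization.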

Writing the Stem

10. Write the stem as a complete question or a phrase to be completed by the options.

There is some research on this guideline. The evidence suggests that either format is effective for MC items. The following examples illustrate the same item in both formats.

Complete: What is the difference between the observed score and the error score?

- A. The derived score

- B. The standard score

- C. The true score

- D. The underlying score

Phrase: The difference between the observed score and the error score is the

- A. derived score.

- B. standard score.

- C. true score.

Notice that when we use the phrase-completion format, each option starts with a lowercase word and ends with a period. Each option is written so that it completes the stem. We often see other formats that are not appropriate.

- Poor: If 30 out of 100 students answer a question correctly, the item difficulty is:

- A. .30.

- B. .70.

- C. Not enough information is provided to compute item difficulty.

- Better: If 30 out of 100 students answer a question correctly, the item difficulty is

- Poor: Internal consistency is a more appropriate form of reliability for:

- A. Criterion-referenced tests

- B. Norm-referenced tests

- Better: Internal consistency is a more appropriate form of reliability for

- A. criterion-referenced tests.

- B. norm-referenced tests.

Ideas for questions or statements for test items can come from many places, including textbook test banks, items in online resources, and course materials, lectures, and discussions. Our most valuable sources of test items are the interactions we have as instructors with our students. Discussions, questions, and comments made during class are full of potential test items. Unfortunately, textbook item banks are often not carefully constructed, and frequently the majority of their items contain item-writing flaws. We use textbook item banks as a source for many of the example items in Chapter 6.

If you use textbook test banks for your test items, be sure to modify the item to be consistent with these guidelines.

11. State the main idea in the stem clearly and concisely and not in the options.

The goal is to make the question clear and unambiguous. This is a challenge when the stem is a phrase or part of a statement that is completed in the options. When the item stem does not clearly convey the intended content, too much is left up to the student for interpretation, leaving greater ambiguity in the item and the student’s response. In these cases, there are often multiple correct answers, since the distractors are written to be plausible.

- Poor: Percentile norms are

- A. reported as percentile ranks.

- B. expressed in interval units of measurement.

- C. based on a group relevant to the individual.

- Better: In order for norms to provide meaningful information about an individual’s performance, they must be

- A. reported as percentile ranks.

- B. expressed in interval units of measurement.

- C. based on a relevant group.

This item was also flawed because the word “percentile” appears in both the stem and the first option. To correct that, we simply removed the word “percentile” from the stem.

We’ve seen examples such as the following four. Each of these shows the entire stem.

These stems are simply inadequate and create a great deal of ambiguity and possibly multiple correct options. A good stem is one that allows a student to hypothesize or produce the correct response directly from the stem without reading the options.

12. Word the stem positively; avoid negative phrasing.

This guideline has some empirical research behind it. The trouble with negatively worded stems occurs when students overlook the negative word and respond incorrectly because of that rather than responding in a way consistent with their ability. In the health sciences, negatively worded stems are more common and serve an important purpose. In these cases, it is important for the test taker to distinguish among a set of conditions, contexts, symptoms, tests, or related options to identify the one that is not appropriate or relevant. In such cases, the negative word should be emphasized (underlined, italicized, bolded, or even set in all caps) so the test taker understands what is being asked and doesn’t overlook the negative term.

- Poor: What is NOT meant by “the test item is highly discriminating”?

- A. Students who had instruction on the topic are more able to answer it correctly.

- B. Many more high-ability students than low-ability students answer it correctly.

- C. It is biased against some groups, as they are less likely to answer it correctly.

- Better: What is meant by “the test item is highly discriminating”?

- A. Only students who had a particular training are able to answer it correctly.

- B. Many more high-ability students than low-ability students answer it correctly.

- C. Only a few of the students are able to answer it correctly.

This revised item is also flawed because two of the options start with a common word, “only,” making them a pair, and “only” is an absolute term that is extreme and would rarely be true. Usually this can be corrected by simply removing the word “only.”

Negative phrasing of the stem tends to make items slightly more difficult and also reduces test score reliability, suggesting that it introduces more measurement error in item responses and ultimately in total test scores.

13. Move into the stem any words that are repeated in each option.

This helps achieve earlier guidelines by minimizing the amount of reading. Repetitive words are unnecessary and can usually be consolidated in the stem, reducing the amount of reading effort and time for the student.

- Poor: Classical test theory includes the assumption that random error

- A. is uncorrelated with the observed score.

- B. is uncorrelated with the true score.

- C. is uncorrelated with the latent score.

- Better: Classical test theory includes the assumption that random error is uncorrelated with the

- A. observed score.

- B. true score.

- C. latent score.

This reduced the number of words from 27 to 19 (a 30% reduction). This adds up quickly across items in a test.

- Poor: What will likely result in a zero discrimination index?

- A. less able students answer the item correctly

- B. nearly everyone answers the item correctly

- C. more able students answer the item correctly

- Better: What group of students is more likely to answer the item correctly to produce a zero discrimination index?

- A. high ability

- B. nearly all

- C. low ability
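To see why “nearly all” is the keyed answer, the Python sketch below uses one common discrimination index, the upper-lower index $D = p_{\text{upper}} - p_{\text{lower}}$, where each $p$ is the proportion of a group answering correctly (the same proportion-correct value used as item difficulty). The function names and the 0/1 response vectors are hypothetical.

```python
def proportion_correct(responses):
    """Item difficulty (p): proportion of 0/1 responses that are correct."""
    return sum(responses) / len(responses)


def upper_lower_d(upper, lower):
    """Upper-lower discrimination index: p(upper group) - p(lower group)."""
    return proportion_correct(upper) - proportion_correct(lower)


# Hypothetical responses (1 = correct) from 10 high- and 10 low-scoring students.
scenarios = {
    "high ability mostly correct": ([1] * 9 + [0], [1] * 3 + [0] * 7),
    "nearly all correct": ([1] * 9 + [0], [1] * 9 + [0]),
    "low ability mostly correct": ([1] * 3 + [0] * 7, [1] * 8 + [0] * 2),
}

for label, (upper, lower) in scenarios.items():
    print(f"{label:28} D = {upper_lower_d(upper, lower):+.2f}")
```

An item that nearly everyone answers correctly cannot separate the two groups, so D sits at or near zero, while an item that low scorers answer correctly more often produces a negative D. The same `proportion_correct` helper gives .30 for the earlier example of 30 out of 100 students answering correctly.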

Writing the Options

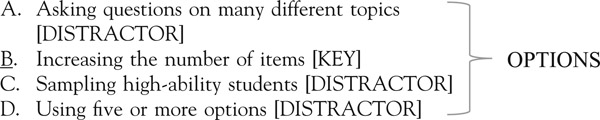

14. Write as many options as are needed, given the topic and cognitive task; three options are usually sufficient.

The quality of the distractors is more important than the number of distractors. But nearly 100 years of experimental research on this topic is unanimous in its finding: Three options are sufficient (Rodriguez, 2005). If three is the optimal number of options, why do most tests have items with four or five options? In large part, this is because of the fear of increasing the chance of randomly guessing correctly. But this same body of research has shown that the effect of guessing does not materialize, particularly in classroom tests and tests that matter to the test taker. Moreover, the chance of obtaining a high score on a test of three-option items by random guessing alone is low, especially if there are enough items (see Table 4.1).

However, in some cases four options are the better choice, particularly when trying to achieve balance in the options. For quantitative options especially, balance can be achieved only by including two positive and two negative options, two odd and two even options, or two high and two low options. We shouldn’t strive to write three-option items simply for the sake of writing three-option items, especially if doing so creates an unbalanced set of options. The real goal is to write distractors that are plausible and relevant to the content of the item and that might provide us with feedback about the nature of student errors.

Table 4.1 Chances That a Student Will Score 70% or Higher From Random Guessing

| Number of test items | Chance to score 70% or higher |
|---|---|
| 10 | 1 out of 52 |
| 20 | 1 out of 1,151 |
| 30 | 1 out of 22,942 |
| 40 | 1 out of 433,976 |
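Table 4.1 does not say exactly how its figures were obtained. One simple model, which we assume here, treats every item as having three options and every response as an independent blind guess, so the number correct follows a binomial distribution. Under that assumption the exact chances come out close to, though not identical with, the table's values (about 1 in 51 for 10 items and about 1 in 1,138 for 20 items); the function name is our own.

```python
from fractions import Fraction
from math import ceil, comb


def prob_at_least(n_items, n_options=3, cutoff=Fraction(7, 10)):
    """Exact chance that blind guessing on every item reaches a
    proportion-correct score of at least `cutoff` (binomial model)."""
    p = Fraction(1, n_options)            # chance of guessing one item right
    k_min = ceil(cutoff * n_items)        # fewest correct answers that reach the cutoff
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(k_min, n_items + 1)
    )


for n in (10, 20, 30, 40):
    chance = prob_at_least(n)
    print(f"{n} items: about 1 in {round(1 / chance):,}")
```

Exact fractions are used so that the very small probabilities for longer tests are not affected by floating-point rounding.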

You do not need to use the same number of options for every item. The number of options should reflect the nature of the item, the important plausible distractors, and a single best option. In Chapter 6, nearly all of the example items from various fields have four or five options, and a common edit is to reduce the number of options. Reducing the number of options to three saves the time needed to develop one or two more plausible distractors (see the next guideline) and reduces the reading time for students, particularly reading that is mostly irrelevant to the task at hand. In addition, the reading time saved makes room for a few more items, which does far more to improve content coverage and the validity of inferences about what students know and can do.

15. Make all distractors plausible.

To ensure distractors are plausible, they should reflect common misconceptions, typical errors, or careless reasoning. Some of the best distractors are based on uninformed comments that come from students during class discussions or errors students repeatedly make on assignments and other class projects.

- Poor: If we knew the exact error of measurement in a student’s score and subtracted it from their observed score, we would obtain the

- A. ultimate score.

- B. absolute score.

- C. true score.

We should be able to justify each distractor as plausible. Here, the distractors are not plausible:

- A. The “ultimate” score is not a term used in measurement.

- B. The “absolute” score is also not a term used in measurement, although the word “absolute” has uses in mathematics, such as absolute values.

- Better: If we knew the exact error of measurement in a student’s score and subtracted it from their observed score, we would obtain the

- A. deviation score.

- B. standard score.

- C. true score.

Again, each distractor should be justifiable. Here, the distractors are plausible:

- A. The stem describes a subtraction (observed score minus error), which is also called a deviation. But the deviation score in measurement is the observed score minus the mean.

- B. A standard score is a type of score in measurement, but it is the difference between an observed score and the mean, divided by the standard deviation.

Both distractors are plausible and related to the stem, but neither is the best answer. This gives us a great deal of control over item difficulty. To make the item easier, we could write distractors that are more different from one another (more heterogeneous) but still real (plausible) scores, such as T-score or z-score. To make the item more difficult, we could write distractors that are more similar (more homogeneous), such as the latent score, the trait score, or something else that is a kind of true score. These other types of scores may be plausible and even correct, but only under certain kinds of measurement models that are not described in the item. If we use such closely related terms, we may need to add context to the stem, such as “In classical test theory …,” so that “true score” remains the only best option.
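The three kinds of scores in this item can be contrasted with a few hypothetical numbers. The sketch assumes the error of measurement is known (it never is in practice, which is what makes the item a thought experiment) and uses population statistics for the mean and standard deviation; the function name and data are illustrative.

```python
from statistics import fmean, pstdev


def describe_scores(observed, errors):
    """For each student: true score (observed minus known error),
    deviation score (observed minus the mean), and standard (z) score."""
    mean, sd = fmean(observed), pstdev(observed)
    return [
        {"true": x - e, "deviation": x - mean, "z": (x - mean) / sd}
        for x, e in zip(observed, errors)
    ]


observed = [42, 50, 58]   # hypothetical observed scores
errors = [-3, 2, 1]       # hypothetical (normally unknowable) errors of measurement
rows = describe_scores(observed, errors)
for row in rows:
    print(row)
```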

- Poor: The best way to improve content-related validity evidence for a test is to increase the

- A. sample size of the validity study.

- B. number of items on the test.

- C. amount of time to complete the test.

- D. statistical power of each item.

Option A contains a word from the stem (validity), which is a clang association (see guideline 22c), although a larger validity-study sample would yield more stable estimates. Option C should improve test score reliability, since students will not have to guess if they run out of time. Option D is not plausible, since statistical power does not apply to individual items.

- Better: The best way to improve content-related validity evidence for a test is to increase the

- A. sample of students taking the test.

- B. number of items on the test.

- C. amount of time to complete the test.

Now each option will improve the quality of test scores, but only one affects content-related validity evidence.

Many of the example items in Chapter 6 illustrate this guideline. Very few four- or five-option items have three or four plausible distractors.

16. Make sure that only one option is the correct answer.

This is a challenge. Make the distractors plausible but not the best answer. The correct option should be unequivocally correct. This is another reason why it is important to have your test reviewed by a colleague, an advanced student, or the teaching assistant.

- Poor: If a raw score distribution is positively skewed, standardizing the scores will result in what type of distribution?

- A. Normal

- B. Positively skewed

- C. Cannot tell from this information

- Better: If a raw score distribution is positively skewed and transformed into z-scores, this will result in what type of distribution?

- A. Normal

- B. Positively skewed

- C. Cannot tell from this information

In the context of what was discussed in class (and in most measurement textbooks), standardizing scores does not change the skewness of the distribution, only its location and spread on the score scale. However, some rarely used methods of standardizing scores are nonlinear and can change the shape of the distribution. One such method is the normal-curve equivalent (NCE) transformation, which normalizes the scores and so produces a normal distribution.
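The claim that a linear standardization leaves skewness unchanged can be checked numerically. This sketch uses hypothetical, positively skewed raw scores and the moment definition of skewness (the mean of the cubed z-scores); it is not the NCE transformation, which would need a nonlinear, rank-based step not shown here.

```python
from statistics import fmean, pstdev


def z_scores(xs):
    """Linear standardization: subtract the mean, divide by the SD."""
    mean, sd = fmean(xs), pstdev(xs)
    return [(x - mean) / sd for x in xs]


def skewness(xs):
    """Moment skewness: the mean of the cubed z-scores."""
    return fmean(z ** 3 for z in z_scores(xs))


raw = [2, 3, 3, 4, 4, 4, 5, 5, 6, 9, 12, 15]   # hypothetical, positively skewed
standardized = z_scores(raw)
# The two values agree (up to floating-point error): the shape is unchanged.
print(round(skewness(raw), 3), round(skewness(standardized), 3))
```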

- Poor: In survey research, the set of all possible observations of a social condition is the

- A. frame.

- B. sample.

- C. population.

- D. study.

Option A is also correct, since the sampling frame represents the accessible population from which the sample is drawn. Option D is not of the same kind as the others and is not plausible.

- Better: In survey research, the set of all possible observations of a social condition is the

- A. strata.

- B. sample.

- C. population.

This is one of the most common item-writing flaws we find in textbook item banks. It commonly results from limited knowledge of the subject matter or from hasty item writing. We identify this flaw in the example items in the next chapter. How do we catch such errors? Subject-matter expertise is one step, but even then, peer review is still helpful.

17. Place options in logical or numerical order.

In order to avoid clues in how we order the options, particularly the location of the correct option, it is best to be systematic and adopt a simple rule when we order the options. This also helps support the student’s thinking and reading clarity. It’s distracting and confusing when a list of numbers or dates or names is ordered randomly—without structure. Using logical systems for ordering options reduces cognitive load and allows the student to focus on the important content issues in the item rather than trying to reorder or decipher the order of the options. Not to mention, it’s just a nice thing to do.

- Poor: Making judgments about the worth of a program from objective information is called

- A. validation.

- B. assessment.

- C. measurement.

- D. evaluation.

- Better: Making judgments about the worth of a program from objective information is called

- A. assessment.

- B. evaluation.

- C. measurement.

- D. validation.

- Poor: The proportion of scores less than z = 0.00 is

- A. .00

- B. 1.00

- C. −.50

- D. .50

- Better: In the unit normal distribution, the proportion of scores less than z = 0.00 is

- A. −.50

- B. .00

- C. .50

Notice we also removed one option, 1.00, as it is likely to be the least plausible. In addition to ordering the options numerically, we provided more white space between the option letters and the numeric values, so the period after each letter does not run into the decimal point. When using numbers as options, it is best to align them at the decimal point, again to support the reading effort and to remove additional irrelevant challenges.
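When options are generated or typeset programmatically, ordering and decimal alignment can be automated. The helper names are hypothetical, and dropping the leading zero is an assumption that suits proportions (values between −1 and 1), as in this item.

```python
def format_proportion(value):
    """Two decimals; drop the leading zero for values between -1 and 1 (e.g. -.50)."""
    text = f"{value:.2f}"
    return text.replace("0.", ".", 1) if abs(value) < 1 else text


def numeric_options(values, width=6):
    """Sort numeric options in ascending order and right-align them so that
    the decimal points line up; extra spaces separate letter and value."""
    return [
        f"{letter}.   {format_proportion(value):>{width}}"
        for letter, value in zip("ABCDE", sorted(values))
    ]


for line in numeric_options([0.00, -0.50, 0.50]):
    print(line)
```

Right-aligning values that all have two decimals is what lines up the decimal points; in a monospaced font each line has the same length.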

- Poor: Which measurement is more precise?

- A. 35.634 meters

- B. 1,152.501 kilometers

- C. 3.823 centimeters

- Better: Which measurement is most precise?

- A. 3.823 centimeters

- B. 35.634 meters

- C. 1,152.501 kilometers

There are a few problems with the poor version. First, “more precise” is a comparative, but with three options, two of the measurements are each “more” precise than another one; the superlative “most” is needed. Second, the measurements are not ordered by the magnitude of their units (centimeters to kilometers). Sorting out the units might be part of the problem you hope to have students address, but recognize that doing so violates the first guideline: Test one aspect of content at a time. But the real issue is the ordering and alignment of the numeric values. The better version eliminates visual challenges and makes the options more accessible to the student.

- Poor: By doubling the sample size in a study, a correlation will most likely

- A. increase.

- B. decrease.

- C. stay the same.

- Better: If the sample size doubles, a correlation will most likely

- A. decrease.

- B. stay the same.

- C. increase.

18. Vary the location of the right answer evenly across the options.

The measurement community recommends that each option position be the correct answer about equally often. This avoids the tendency to make the middle or the last option correct (which some of us have done). It also counters something many students believe: “If you don’t know, guess C!”

Traditionally, the guideline was to vary the location of the right answer according to the number of options. Tests were often built so that every item had the same number of options, such as five; in that case, each option would be correct about 20% (1/5) of the time. But we now know that the number of options should fit the demands of the item: what is plausible and what fits the specific question. So that version of the guideline doesn’t really work. We just need to make sure that every option position has about the same probability of being correct, so that no position is obviously more likely to be the right answer.

By ordering the options logically or numerically, according to guideline 17, we likely avoid these tendencies. But even then, check through the answer key and make adjustments if necessary. We also don’t want a string of As being correct, or a string of Bs, and so on; we want to mix it up and spread the correct answer across all positions. Some students, particularly those who are less prepared, will look for patterns to improve their chances of guessing correctly.
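Checking the key can be partly automated. The sketch below, with hypothetical function names and a made-up eight-item key, compares how often each position is keyed with how often it would be keyed if answers were spread evenly within each item (one over the number of options, so items with different numbers of options are handled), and reports the longest run of identical keys.

```python
from collections import Counter
from itertools import groupby

LETTERS = "ABCDE"


def key_balance(items):
    """items: (number_of_options, keyed_letter) pairs, one per item.
    Returns {letter: (times keyed, times expected if keys were spread evenly)}."""
    observed = Counter(answer for _, answer in items)
    expected = Counter()
    for n_options, _ in items:
        for letter in LETTERS[:n_options]:
            expected[letter] += 1 / n_options   # each offered position equally likely
    return {letter: (observed[letter], expected[letter]) for letter in sorted(expected)}


def longest_run(items):
    """Length of the longest streak of consecutive items with the same key."""
    return max(len(list(run)) for _, run in groupby(answer for _, answer in items))


test_key = [(3, "B"), (4, "D"), (3, "A"), (3, "C"), (3, "C"),
            (4, "B"), (4, "A"), (3, "B")]          # hypothetical 8-item key
for letter, (seen, expected) in key_balance(test_key).items():
    print(f"{letter}: keyed {seen}, expected about {expected:.1f}")
print("longest run:", longest_run(test_key))
```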

19. Keep options independent; options should not overlap.

This is another way of saying: Make sure only one option is the best answer. If the options overlap, then multiple options may be correct.

- Poor: If you are one standard deviation below the mean in a normal distribution, your approximate percentile rank will be

- A. less than the 10th percentile.

- B. greater than the 10th percentile.

- C. greater than the 20th percentile.

- Better: If you are one standard deviation below the mean in a normal distribution, your approximate percentile rank will be

- A. less than the 10th percentile.

- B. between the 10th and 20th percentiles.

- C. greater than the 20th percentile.

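The problem with the poor version is that B includes C: any percentile rank greater than the 20th is also greater than the 10th, so a student who estimates the percentile rank only roughly could defend both B and C. This issue arises from the use of the term “approximate” in the stem.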
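The keyed answer in the better version can be checked against the standard normal distribution. This sketch uses Python's standard-library `statistics.NormalDist`; the result, about the 16th percentile, falls between the 10th and 20th percentiles.

```python
from statistics import NormalDist

# Percentile rank of a score one standard deviation below the mean (z = -1)
# in a normal distribution: 100 times the cumulative proportion below it.
percentile_rank = 100 * NormalDist(mu=0, sigma=1).cdf(-1.0)
print(f"{percentile_rank:.1f}")  # 15.9
```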

- Poor: In item response theory, if a student’s ability is at the same level as the location of an item, what is the probability the student will get the item right?

- A. less than chance

- B. less than 50%

- C. about 50%

- D. greater than 50%

- Better: In item response theory, if a student’s ability is at the same level as the location of an item, what is the probability the student will get the item right?

- A. less than 50%

- B. about 50%

- C. greater than 50%

In the poor version, A (less than chance) is also part of B (less than 50%). However, there is a more significant technical flaw in this item that only a well-versed item response theory expert will recognize (or a well-read graduate student). The correct answer is 50% for one- and two-parameter IRT models, but not for three-parameter models, in which case the probability of a correct response is greater than 50% (because of the influence of a nonzero lower asymptote).
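The difference between the models follows from the logistic item response function. This sketch assumes the common three-parameter logistic (3PL) form, P(θ) = c + (1 − c) / (1 + exp(−a(θ − b))), without the optional 1.7 scaling constant (which does not matter when θ = b). Setting c = 0 gives the two-parameter model, and additionally holding a constant across items gives the one-parameter model; the function name is our own.

```python
import math


def p_correct(theta, b, a=1.0, c=0.0):
    """Three-parameter logistic (3PL) item response function.
    c = 0 gives the 2PL; a common a across items gives the 1PL."""
    return c + (1 - c) / (1 + math.exp(-a * (theta - b)))


# Student ability equal to the item location (theta == b):
print(p_correct(theta=0.0, b=0.0))                   # 0.5  (1PL/2PL)
print(p_correct(theta=0.0, b=0.0, a=1.8))            # 0.5  (discrimination does not matter here)
print(round(p_correct(theta=0.0, b=0.0, c=0.2), 3))  # 0.6  (3PL: (1 + c) / 2)
```

At θ = b the logistic term equals 1/2, so the 3PL probability is (1 + c)/2, which exceeds 50% whenever the lower asymptote c is above zero.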

20. Avoid using the options none of the above, all of the above, and I don’t know.

This guideline is one of the most studied empirically. The evidence suggests that these options tend to make items slightly less discriminating, that is, less correlated with the total score.

When we use none of the above as the correct option, students who do not know the correct answer can select it simply by recognizing that each listed option is wrong. We want students to answer items correctly because they have the KSAs being tapped by the item, not because they know what’s not correct.

- Poor: What is NOT a possible modification to a multiple-choice item?

- A. Requiring students to justify their selected response

- B. Underlining important words

- C. Referring to a graphical display

- D. None of the above

- Better: What is a multiple-choice item modification that promotes higher-order thinking?

- A. Requiring students to justify their selected response

- B. Underlining important words

- C. Referring to a graphical display

This is a challenging item. The poor version has a negative term in the stem (NOT) and then in the options (none), creating a double negative. More importantly, the question isn’t a particularly useful one, since there are potentially many possible modifications to MC items; if a modification is not possible, then it’s probably not possible for any item. Even the better version is not ideal, since supporting higher-order thinking depends on the context, and C could also be correct for some graphical displays, depending on what is being asked of the student.

When we use all of the above as the correct option, students need only recognize that two of the options are correct, again allowing them to respond correctly with partial knowledge. Conversely, if a student recognizes that at least one of the options is incorrect, they can rule out all of the above. Either way, this option provides clues, making it a bad choice in item writing.

- Poor: What evidence is useful for building a validity argument about the measurement of a construct?

- A. Correlations with similar measures

- B. Mean differences among groups

- C. Confirmatory factor analysis results

- D. Expert judgment about item content

- E. All of the above

- Better: What evidence is useful for building a validity argument about subscore usefulness?

- A. Correlations with similar measures

- B. Mean differences among groups

- C. Confirmatory factor analysis results

- D. Expert judgment about item content

The problem with the poor version is that a student only needs to know that two of the four options are correct to realize E is the correct answer. Another technical flaw is that, according to Samuel Messick (a prominent validity theorist), all validity evidence supports the construct interpretation of scores.

Finally, you may be tempted to use I don’t know as an option, but this leaves us without any information about student understanding, since students who choose it do not select the option that best reflects their interpretation of the item. If the distractors are common errors or misconceptions, we can obtain diagnostic information about student abilities. The I don’t know option eliminates this function of testing and wastes the effort we put into developing plausible distractors.

21. Word the options positively; avoid negative words such as “not.”

We’ve seen examples already of using negative words in the stem and sometimes in the options. Using negative words in the options simply increases the possibility that students will inadvertently miss the negative term and get the answer wrong, not because of misinformation or lack of knowledge but because of reading too quickly.

- Poor: What is a significant limitation of the multiple-choice item format?

- A. It is easy to develop many items

- B. It tends to measure recall

- C. It takes a long time to read and respond

- D. It does not allow for innovative responses

- Better: What is the most critical limitation of the multiple-choice item format?

- A. It is time consuming to develop many items.

- B. It tends to measure recall.

- C. It takes a long time to read and respond to.

- D. It excludes innovative responses.

The poor version has a number of problems. First, it uses a vague quantifier in “a significant limitation.” But the primary problem is the use of NOT within the correct option; its use is unique among the options, which makes that option stand out. Moreover, the first option is not a limitation, and it is also not true (since it is challenging to develop many MC items). The better version is still problematic, since all of the options are limitations, so we rely on the clarity of instruction to identify the most critical limitation; we trust that this is clear to students.

Furthermore, when it comes to developing MC items, we argue that the first three options in the better item can be addressed, but the exclusion of innovative responses is nearly impossible to avoid.

22. Avoid giving clues to the right answer.

There are several ways that clues can be given to the right answer. These tend to be clues for testwise students—students who are so familiar with testing that they are able to find clues to the correct answer without the appropriate content knowledge. The six most common clues are included here.

22a. Keep the length of options about equal.

This guideline has been studied empirically. The evidence consistently indicates that making the correct option longer makes items easier (due to it providing a clue to the correct answer) and significantly reduces validity (correlations with other similar measures).

The most common error committed by classroom teachers and college instructors is to make the correct option longer than the distractors. We tend to make the correct option longer by including details that help us defend its correctness. Testwise students recognize that the longest option is often the correct option.

- Poor: The major difference between teacher-made and standardized achievement tests is in their

- A. relevance to specific instructional objectives for a specific course.

- B. item difficulty.

- C. item discrimination.

- D. test score reliability.

- Better: What is the major difference between teacher-made and standardized tests?

- A. blueprints

- B. item difficulties

- C. number of items

- D. score reliabilities

The wording of this guideline is deliberate. The options don’t need to be exactly the same length, which is not practical; they just need to be “about equal,” with no noticeably large differences in option length.
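A rough screen for this clue can be run before a test is assembled. The sketch below is hypothetical: it counts words, treats an item as suspect when the keyed option is more than 1.5 times as long as the longest distractor (an arbitrary threshold), and assumes option A is keyed in both versions of the example item.

```python
def longest_option_flag(options, key_index, ratio=1.5):
    """Return True if the keyed option has more than `ratio` times as many
    words as the longest distractor (a rough, arbitrary threshold)."""
    lengths = [len(option.split()) for option in options]
    longest_distractor = max(n for i, n in enumerate(lengths) if i != key_index)
    return lengths[key_index] > ratio * longest_distractor


poor = ["relevance to specific instructional objectives for a specific course.",
        "item difficulty.", "item discrimination.", "test score reliability."]
better = ["blueprints", "item difficulties", "number of items", "score reliabilities"]
print(longest_option_flag(poor, key_index=0))    # True
print(longest_option_flag(better, key_index=0))  # False
```

A screen like this only catches the obvious cases; "about equal" remains a judgment for the item writer and reviewers.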

22b. Avoid specific determiners including “always,” “never,” “completely,” and “absolutely.”

Words such as “always,” “never,” “all,” “none,” and others that are specific and extreme are rarely, if ever, true. These words clue testwise students, who know that these terms are rarely defensible.

- Poor: In general, the test item that is more likely to have a zero discrimination index is the one that

- A. all the less able students get correct.

- B. nearly everyone gets correct.

- C. all the more able students get correct.

- Better: In general, the test item that is more likely to have a zero discrimination index is the one that

- A. low-ability students are more likely to get correct.

- B. nearly all students get correct.

- C. high-ability students are more likely to get correct.

The poor version has at least two issues. One is that A and C use the term “all.” The other is that A and C form a pair of options, since they share the same structure, which differs from B. This second issue remains somewhat of a problem in the better item.

- Poor: One benefit of learning more about educational measurement is that

- A. people have respect for measurement specialists.

- B. it brings complete credibility to our work.

- C. it improves our teaching and assessment practice.

- D. teachers generally have little assessment literacy.

- Better: One benefit of learning more about educational measurement is that

- A. it brings some credibility to our work.

- B. it improves our teaching and assessment practice.

- C. teachers generally have little assessment literacy.

Here, the poor version includes “complete” in option B, which was changed to “some” in the better version. Also, option A in the poor version is probably not true (especially these days with the negative environment around testing) and is likely the least plausible option, so it was removed.

This is also a common flaw in true-false items. In the following item, the term “only” is very difficult to justify, potentially cueing students to select False.

- Poor: According to social exchange theory, trust is the only factor needed to maintain relationships. (True or False)

- Better: According to social exchange theory, trust is more important than the ratio between costs and benefits to maintain relationships. (True or False)

22c. Avoid clang associations, options identical to or resembling words in the stem.

Another clue to testwise students (most students actually) is when a word that appears in the stem also appears in one or more options. This is also a problem when a different form or version of the same word appears in both the stem and an option. There were multiple examples of this in some of the other item-writing guidelines, including example items for guidelines 9 and 15 (it’s a common error).

A clang association is a speech characteristic of some psychiatric patients: the tendency to choose words because of their sound rather than their meaning. Sometimes words are chosen because they rhyme or share the same beginning sound. A related speech pattern is what psychiatrists call “word salad,” a random string of words that do not make sense but might sound interesting together. We don’t want items to contain clang associations or take the form of a word salad!

The key point here is that we don’t want students to select options or discredit options because of a similar word or words that sound the same in the stem and the options.

- Poor: What can we use to report content-related validity evidence for test interpretation?

- A. Reliability coefficients

- B. Validity coefficients

- C. The test blueprint

- D. Think-aloud studies

- Better: What can we use to report content-related validity evidence for test interpretation?

- A. Criterion correlations

- B. Reliability coefficients

- C. The test blueprint

- D. Think-aloud studies

The poor version contains the term “validity” in both the stem and option B. In addition, in the poor version, options A and B present a pair (see guideline 22d), since they are both “coefficients.” All of the distractors are forms of validity evidence, but only C directly addresses the content of the test.
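Screening for clang associations can be partly automated with a crude heuristic. The sketch below is hypothetical: it flags options that share a word "root" with the stem, where a root is simply the first five letters of any word at least five letters long, so “validity” and “validation” match. Shorter shared words are ignored by this rule, so review by a colleague is still needed.

```python
import re


def word_roots(text, min_len=5):
    """Crude 'roots': the first min_len letters of each word that has at
    least min_len letters (so 'validity' and 'validation' both give 'valid')."""
    return {w[:min_len] for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= min_len}


def clang_candidates(stem, options, min_len=5):
    """Map each option that shares a root with the stem to the shared roots."""
    stem_roots = word_roots(stem, min_len)
    flagged = {}
    for option in options:
        shared = stem_roots & word_roots(option, min_len)
        if shared:
            flagged[option] = sorted(shared)
    return flagged


stem = ("What can we use to report content-related validity evidence "
        "for test interpretation?")
poor = ["Reliability coefficients", "Validity coefficients",
        "The test blueprint", "Think-aloud studies"]
better = ["Criterion correlations", "Reliability coefficients",
          "The test blueprint", "Think-aloud studies"]
print(clang_candidates(stem, poor))    # {'Validity coefficients': ['valid']}
print(clang_candidates(stem, better))  # {}
```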

22d. Avoid pairs or triplets of options that clue the test taker to the correct choice.

When two or three options are similar in structure, share common terms, or are similar in other ways, students can often see which options to eliminate or which options might be correct.

- Poor: Concepts about validity have continuously evolved for decades. The prevailing conceptualization of validity in educational measurement suggests that it is

- A. a property of the test.

- B. a unitary characteristic of the test.

- C. a subjective decision.

- D. a property of score interpretation.

- Better: The educational measurement validity framework described by Kane includes a focus on

- A. decisions about test content.

- B. test score interpretation.

- C. internal structure of the test.

The poor version has a number of problems, including the pair of options in A and B: both are about the test itself, and “property” and “characteristic” are synonyms. If you know one is wrong, you know the other is wrong. In addition, the first sentence of the stem is unnecessary window dressing. Also, in the better version, the distractors A and C are both forms of validity evidence but not the focus of validity itself.

Other examples of option pairs can be found in the example items presented in guidelines 12, 22b, and 22c.

22e. Avoid blatantly absurd, ridiculous, or humorous options.

There is no experimental research on this guideline itself, but humor in testing has been written about. Berk (2000) found evidence that humor can reduce anxiety, tension, and stress in the college classroom. The bulk of the research on humor in testing has occurred in undergraduate psychology classes, and its results regarding the effects of humor on anxiety and stress have been mixed. Berk conducted a set of experiments using humorous test items and measuring anxiety among students in an undergraduate statistics course. Students reported that the use of humor in exams was effective in reducing anxiety and encouraging their best performance on the exam. Here are some examples of how Berk illustrated his recommendations to use humor in the stem or in the options.

- Example 1: The artist formerly known as MS-DOS is

- A. Linux.

- B. X Window.

- C. Windows.

- D. Unix.

- E. Mac OS.

- Example 2: What is the most appropriate politically correct term for ex-spouse (not necessarily yours)?

- A. cerebrally challenged

- B. parasitically oppressed

- C. insignificant other

- D. socially misaligned

Another example he provided uses the matching format. Although matching is not an MC format, the item is a humorous example of how this guideline can be applied to other formats. It is slightly modified from the item presented by Berk (2000).

- Example 3: Match each questionnaire item with the highest level of measurement it captures. Mark your answer on the line preceding each item. Each level of measurement may be used once, more than once, or not at all.

| Questionnaire Item | Level of Measurement |
|---|---|
| B Wait time to see your doctor | |
| ☐ 10 minutes or less | A. Nominal |
| ☐ more than 10 but less than 30 minutes | B. Ordinal |
| ☐ between 30 minutes and 1 hour | C. Interval |
| ☐ more than 1 hour and less than 1 day | D. Ratio |
| ☐ I'm still waiting | |
| B Degree of frustration | |
| ☐ Totally give up | |
| ☐ Might give up | |
| ☐ Thinking about giving up | |
| ☐ Refuse to give up | |
| ☐ Don't know the meaning of "give up" | |
| D Scores on the "Where's Waldo" final exam (0-100) | |
| A Symptoms of exposure to statistics | |
| ☐ Vertigo | |
| ☐ Nausea | |
| ☐ Vomiting | |
| ☐ Numbness | |
| ☐ Hair loss | |
| D Quantity of blood consumed by Dracula per night (in ml) | |

Berk (2000) wisely recommended evaluating the use of humor if you decide to use it in your tests. He suggested giving a brief anonymous survey to students, including questions such as:

- Did you like the humorous items on the test?

- Were they too distracting?

- Did they help you perform your best on the test?

- Should humorous items be included on the next test?

- Do you have any comments about the use of humor on class tests?

We recognize that Berk himself is a very humorous writer and measurement specialist. He is known for taking complex materials and controversial topics and making them understandable through humor. Think about how many social controversies have been clarified through cartoons (especially political cartoons). Sometimes it works. But we also recognize that one person’s humor may not be so humorous to another. We adopt the guidelines of McMorris, Boothroyd, and Pietrangelo (1997), who did an earlier review of research on the use of humor in testing. They recommended that if humor is used in testing, the following conditions should be ensured:

- It is consistent with the use of humor during instruction and class activities.

- The test has no time limit, or a very generous one.

- The humor is positive, avoids sensitive content, and is appropriate for the group.

- The item writer (instructor) understands the cultural contexts of all students.

- It is used sparingly and does not count against students’ performance.

22f. Keep options homogeneous in content and grammatical structure.

When options cover a variety of content, it becomes easier to detect the correct answer, even without much content knowledge. Similarly, a common clue to students is inconsistent or incorrect grammar. These are common item-writing flaws that can be avoided through good editing and review of the test items by peers.

This can also be an effective way to control item difficulty. As described in the section on item difficulty that follows, the extent to which options are similar and homogeneous often determines the difficulty of the task presented to students.

- Poor: What information does the mean score provide?

- A. The spread of scores on the score scale

- B. The shape of the score distribution

- C. The location of the distribution on the score scale

- Better: What information does the mean score provide?

- A. Where most of the scores are concentrated

- B. The value of the most common score

- C. The location of the distribution on the score scale

The poor version includes distractors that are related to score distributions but not to central tendency—A is about variability and B is about shape. In the better version, the two distractors are both about central tendency.

Items with absurd options also violate this guideline, since those options are not homogeneous with the others. But judging whether an option is absurd can be subjective; sometimes an option is simply not plausible.

- Poor: In what year was Ronald Reagan elected?

- A. 1971

- B. 1984

- C. 1979

- D. 2000

This item has a number of problems. First, Ronald Reagan was elected governor of California twice (1966 and 1970) and president twice (1980 and 1984), so which election is the question asking about? Second, these are not all election years; there is a difference between when one is elected and when one takes office. Third, the years are not in order. Fourth, 2000 is not plausible for students who are studying the national leadership timeline. Finally, this item taps simple recall and is not particularly informative.

- Better: Which Ronald Reagan election was considered a landslide?

- A. 1966 election as California governor

- B. 1970 reelection as California governor

- C. 1980 election as U.S. president

- D. 1984 reelection as U.S. president