Table 19.1 Common Data Rates

| Format | Raster Size | Frames/Sec | Scan | Approximate Data Rate* |
|---|---|---|---|---|
| SD Video | 720 × 486 | 29.97 | Interlace | 270 Mb/s |
| HD 720p 60 | 1280 × 720 | 59.94 | Progressive | 1.5 Gb/s |
| HD 1080i | 1920 × 1080 | 29.97 | Interlace | 1.5 Gb/s |
| HD 1080p | 1920 × 1080 | 59.94 | Progressive | 3 Gb/s |
| UHD | 3840 × 2160 | 59.94 | Progressive | 12 Gb/s |

Throughout this book, media is described as a continuous flow of information. When produced as digital data, this flow requires an enormous amount of bandwidth and storage space. A high definition signal traveling along an SDI path produces one and a half billion bits of data each second, and 4K (UHD) images in the same form require data paths that carry 12 billion bits each second (Table 19.1). These large data flows need to be managed as discrete sections that can be saved and moved in the file-based structure of computers. To keep this information flowing smoothly and efficiently within computer-based systems, it is important to prepare files by choosing the best codec, or compression scheme, for each file’s use, as well as the most appropriate container to ensure it reaches its destination intact.
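The rates in Table 19.1 can be roughly reproduced with a short calculation. The Python sketch below assumes 10-bit 4:2:2 sampling (20 bits per pixel) and uses the total raster, including the blanking intervals that the serial link also carries, rather than the active picture size shown in the table. The raster counts are the standard SDI totals; the names `TOTAL_RASTERS` and `serial_data_rate` are illustrative only.

```python
from fractions import Fraction

# Assumption: 10-bit 4:2:2 video, i.e. 20 bits per pixel
# (10 bits of luma plus 10 bits of alternating Cb/Cr).
BITS_PER_PIXEL = 20

# name: (total samples per line, total lines per frame, frames per second)
# Totals include horizontal and vertical blanking, which SDI also transmits.
TOTAL_RASTERS = {
    "SD Video": (858, 525, Fraction(30000, 1001)),
    "HD 720p 60": (1650, 750, Fraction(60000, 1001)),
    "HD 1080i": (2200, 1125, Fraction(30000, 1001)),
    "HD 1080p": (2200, 1125, Fraction(60000, 1001)),
    "UHD": (4400, 2250, Fraction(60000, 1001)),
}


def serial_data_rate(samples_per_line, lines_per_frame, frame_rate,
                     bits_per_pixel=BITS_PER_PIXEL):
    """Return the uncompressed serial data rate in bits per second."""
    return float(samples_per_line * lines_per_frame * frame_rate * bits_per_pixel)


if __name__ == "__main__":
    for name, (width, lines, fps) in TOTAL_RASTERS.items():
        rate = serial_data_rate(width, lines, fps)
        print(f"{name:12s} {rate / 1e9:6.3f} Gb/s")
```

Run as a script, it gives roughly 0.27, 1.48, 1.48, 2.97 and 11.87 Gb/s, which round to the approximate figures in the table.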

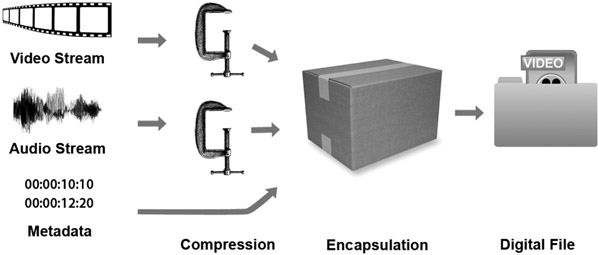

File Containers

Media files combine audio, video and metadata. Just as you need an envelope to use the U.S. Postal Service, media files need a container, or wrapper, to encapsulate the data so it can be stored and moved within computer systems. And just as physical envelopes come in different shapes, sizes and materials suited to various tasks, there are a number of media containers that store and move media data (Figure 19.1).

One of the most important things a media container does is arrange and interleave the data so that the audio and video play out in sync. If all the video data were at the start of the file, with the audio at the end, the entire file would need to be loaded into the computer’s memory before it could be played. Most computers don’t have enough memory for this, nor does the audience have the patience to wait for it to happen. Let’s take a look at different file containers.
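Interleaving can be pictured as merging each track’s packets into a single sequence ordered by play-out time, so that audio and video needed at the same moment sit near each other in the file. The Python sketch below is a simplified model, not any real container’s layout: the `Packet` class and helper names are hypothetical, and the packet durations (29.97 fps video frames, 1,024-sample audio frames at 48 kHz) are assumptions chosen for illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """A hypothetical compressed packet: which track it belongs to and when it plays."""
    track: str   # "video" or "audio"
    pts: float   # presentation time in seconds
    size: int    # payload size in bytes


def interleave(*tracks):
    """Merge per-track packet lists (each already sorted by pts) into one
    time-ordered sequence, the order in which a container would lay them out."""
    return list(heapq.merge(*tracks, key=lambda packet: packet.pts))


def make_track(name, duration_s, packet_duration_s, size):
    """Build evenly spaced packets for one track (illustrative data only)."""
    count = round(duration_s / packet_duration_s)
    return [Packet(name, i * packet_duration_s, size) for i in range(count)]


if __name__ == "__main__":
    video = make_track("video", 0.2, 1001 / 30000, 40_000)  # 29.97 fps frames
    audio = make_track("audio", 0.2, 1024 / 48000, 600)     # 1,024-sample audio frames
    for packet in interleave(video, audio)[:8]:
        print(f"{packet.pts:7.4f}s  {packet.track}")
```

Because the merged list alternates between tracks, a player can start as soon as the first few packets arrive instead of waiting for the whole file.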

QuickTime

One of the most familiar container formats is QuickTime, which was created by Apple. Files with the extension .MOV are QuickTime files. In addition to audio and video tracks, QuickTime files also support tracks for time code, text and some effects information.

Because QuickTime hosts a wide variety of audio and video codecs, and because Apple offers a free player for several operating systems, it is a very popular choice for web-based distribution. It is also a common format for files exchanged among content creators as review and approval copies.

There are several related containers that are very similar in structure to QuickTime but were designed for special purposes. MP4 files (including .mp4, the audio-only version .m4a, and others) use a container developed by the MPEG committee to work specifically with MPEG-4 compression features not supported by QuickTime itself. 3GP is another QuickTime-based format, in this case optimized for mobile delivery over cellular networks.

NOTE Remember that QuickTime, MP4 and 3GP are different types of containers. They don’t control or determine the type of compression they contain and carry. The compression type is determined by the codec. Codecs are described later in this chapter.

Windows Media

Files ending in .WMV and .ASF are Windows Media files. This Microsoft design uses the same name for both a compression codec and a container format. Normally, a file with the .WMV extension is a Windows Media container holding video compressed with the Windows Media codec. The .ASF extension is the general form of the same container, and such a file may hold a different codec.

Flash

Flash format (.flv, .f4v) is another popular container format. This Adobe product was originally developed to deliver animations built in a format called SWF, and it was later extended to carry audio and video, including the H.264 codec discussed later in this chapter. It is mostly seen as files playing in a web browser through a plug-in that the user must download. Because Adobe makes the browser plug-in available for free, Flash is a very popular container for web distribution of video content.

WebM

This container is a more recent development for distribution of web files in association with HTML5 initiatives. While it supports only a limited set of codecs, it is making headway in some areas, with large services like YouTube making their content available in this format (Figure 19.2). (WebM is discussed in more detail later in this chapter.)

Material Exchange Format

The MXF format is perhaps the most popular in large-scale media production organizations. It was designed from the beginning as a SMPTE standard that equipment manufacturers could use to exchange content among their products. Computerized systems used in television can require media to be wrapped in different ways, so the standard allows for several variations of the format, categorized as Operational Patterns, or OPs. While there are currently 10 different OP specifications, the two most common are OP-Atom and OP-1a. The biggest difference between the two is how the audio and video are stored on disk.

OP-Atom is designed for editing systems that store the audio and video as different files. Early computer editing systems had difficulty getting all the data needed to edit from a single disk, so the audio and video were stored on different drives. While that is no longer the case, some edit systems still use separate files for each element.

OP-1a is a single file in which all the audio and video are stored in the same container. Devices such as video servers use this format.

Other Containers

There are many other containers beyond those discussed. Some are legacy formats that are fading from use. Others are brand new and have yet to build a large user base. Some are special purpose and limited to particular equipment and workflows.

TIP One hint that is nearly universal is that a media file’s extension indicates the container format it uses.
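Applying the tip in code amounts to a lookup table keyed on the extension. The Python sketch below covers only the containers described in this chapter; the table and function name are illustrative, and the result is just a hint, since an extension says nothing about which codec is inside and can occasionally be wrong.

```python
from pathlib import PurePath

# Extension-to-container hints for the formats described in this chapter.
# The extension identifies the wrapper only, never the codec inside it.
CONTAINER_BY_EXTENSION = {
    ".mov": "QuickTime",
    ".mp4": "MP4",
    ".m4a": "MP4 (audio only)",
    ".3gp": "3GP",
    ".wmv": "Windows Media (ASF)",
    ".asf": "Windows Media (ASF)",
    ".flv": "Flash",
    ".f4v": "Flash",
    ".webm": "WebM",
    ".mxf": "MXF",
}


def guess_container(filename: str) -> str:
    """Return the container suggested by a file's extension, or 'unknown'."""
    suffix = PurePath(filename).suffix.lower()  # case-insensitive: .MOV == .mov
    return CONTAINER_BY_EXTENSION.get(suffix, "unknown")
```

For example, `guess_container("interview_take3.MOV")` returns `"QuickTime"`, while a file with an unlisted extension returns `"unknown"`.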

Switching Containers

The process of moving media between containers is referred to as re-wrapping. The audio and video stay the same, still in the original codecs. The material is just reorganized in a different container better suited to the target device. For example, this process frequently happens when files are moved from the camera to an editing system.

NOTE If the codec needs to be changed as well, the process becomes one of transcoding. Transcoding can affect the quality of a file, especially when going from a less compressed codec to a more compressed one. Because re-wrapping does not change the codec of a file, it is a clean, lossless process.
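The difference between re-wrapping and transcoding shows up clearly in a command line. The Python sketch below builds an argument list for ffmpeg, a tool the chapter does not mention and which is used here only as an assumption; the function name is hypothetical. In ffmpeg, `-c copy` copies every stream unchanged into the new container, while naming an encoder with `-c:v` forces the video to be re-encoded.

```python
def build_ffmpeg_command(source, target, video_codec=None):
    """Return an ffmpeg argument list (hypothetical helper).

    With no video_codec, '-c copy' copies all streams unchanged into the
    container implied by the target extension: a re-wrap. Naming an encoder
    such as 'libx264' re-encodes the video (a transcode) while the audio
    is still copied untouched.
    """
    command = ["ffmpeg", "-i", source]
    if video_codec is None:
        command += ["-c", "copy"]                          # re-wrap only
    else:
        command += ["-c:v", video_codec, "-c:a", "copy"]   # transcode video
    command.append(target)
    return command
```

The returned list could be passed to `subprocess.run()`. A re-wrap only succeeds when the target container is able to carry the source codec; otherwise a transcode is required.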

Codecs

As you learned in Chapter 14, data is normally compressed to reduce the amount of information with which our computers have to cope. There are many different algorithms, or codecs, that can be used for this job. Some are best suited for use when capturing images, while others are meant for editing. Some work well for distribution, with variants for the kind of medium that will carry them. Often codecs developed for one purpose are also used in other areas.

NOTE Remember that the word codec is derived from the words compression and decompression.

Hundreds of codecs have been created, with newer, more efficient ones replacing previous generations. Let’s take a look at some of the more common codecs used in the production and post production process, as well as those used to stream media.

Acquisition Codecs

Image capture comes in many forms, from the camera phone to cameras designed for digital cinema. Low-end cameras favor codecs that allow long recordings to be stored in a small space. The goal at the high end is to capture as much detail as possible to allow the greatest flexibility in post production image processing.

At the high end are codecs such as ArriRaw and Redcode. The Arri format for the cameras it produces is not compressed at all and is, as the name implies, the raw data from the image sensor. Redcode, from the makers of the Red camera series, is gently compressed with a codec that uses JPEG 2000 wavelet compression. Sony also offers a gently compressed acquisition codec in its SR format. While the files these codecs produce are huge, they retain all, or nearly all, of the data captured by the sensor. For later post production manipulations like compositing and color grading, all that available detail gives a greater range of creative options.

NOTE These cameras, like those of the other high-end camera makers, can also be set to shoot in several other formats as well.

Adobe created the CinemaDNG format as an open, non-proprietary format for image capture. It is a moving-image version of Adobe’s Digital Negative (DNG) format, which supports gentle lossless compression. Several camera makers support this format, including Blackmagic Design.

A somewhat more compressed image can be captured using codecs that were originally designed for editing. Avid DNxHD and Apple ProRes are two examples. Both formats allow captures at various quality levels by choosing different data rates. Because they were created for editing, these codecs store each frame as a separate element. Both have top data rates of about 200–250 Megabits per second (Mbps), roughly a 7:1 compression ratio compared with a 1.5 Gb/s HD signal. In addition to on-camera recording, these are popular choices for video servers recording studio television shows, often at data rates as low as 100 Megabits per second. An additional motivation to use these formats is that the captured material is edit-ready: no additional processing is needed to prepare it for the edit room, and the clip files can simply be copied to the edit system. This is tremendously helpful for workflows with short deadlines.
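The 7:1 figure is simply the ratio of the uncompressed data rate to the coded data rate. A minimal Python sketch, taking the roughly 1,485 Mb/s HD-SDI rate behind Table 19.1 as the uncompressed source; the 220, 145 and 100 Mb/s values are illustrative edit-codec rates, not a definitive list of DNxHD or ProRes settings.

```python
HD_SDI_RATE_MBPS = 1485  # approximate uncompressed HD-SDI rate (Table 19.1: 1.5 Gb/s)


def compression_ratio(source_mbps, coded_mbps):
    """Ratio of the uncompressed data rate to the coded data rate."""
    return source_mbps / coded_mbps


if __name__ == "__main__":
    for coded in (220, 145, 100):  # illustrative edit-codec data rates in Mb/s
        ratio = compression_ratio(HD_SDI_RATE_MBPS, coded)
        print(f"{coded} Mb/s -> about {ratio:.1f}:1")
```

At 220 Mb/s the ratio is 6.75:1, the "about 7:1" quoted above; dropping to 100 Mb/s nearly doubles it to almost 15:1.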

The next step down the quality ladder is cameras that record in more heavily compressed formats such as Sony XDCAM and Panasonic AVCHD. Data rates for these formats run from roughly 20 to 50 Megabits per second. Both use long-GOP compression: XDCAM HD is based on MPEG-2, while AVCHD is based on MPEG-4 Part 10, or H.264. Cameras in this class are often used in newsgathering and other projects that do not require heavy image compositing or color grading. Since much of the detail is compressed out of these images, they are not suited to heavy post production manipulation.

Finally, at the lower end of the image capture spectrum are cameras that record in very long GOP versions of codecs like H.264. Compression for these images is very lossy, with data rates that can drop to only a few Megabits per second. While these cameras can produce acceptable images, the footage is very difficult to work with in post production and often must be transcoded to a different format for editing. Camera phones and inexpensive consumer digital video cameras fall into this group.

Codecs Used in Post Production

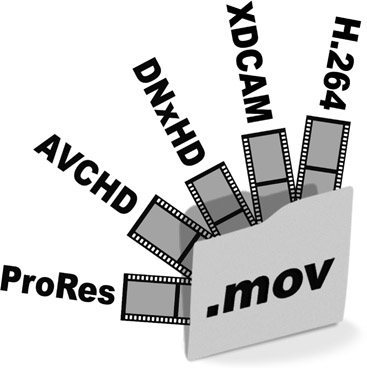

As mentioned earlier, some codecs were designed specifically for the unique requirements of editing. For example, Avid created a group of codecs called DNxHD, while the engineers at Apple have produced several versions of their ProRes codecs (Figure 19.3). Both are intraframe codecs, meaning they do not require information from adjacent frames to produce images.

Many codecs achieve high compression ratios by taking advantage of redundant information in adjacent frames, and this makes editing a challenge. To make a cut at a specific frame, the computer must reconstruct that frame, and the frame that follows the cut, by decoding them from neighboring frames. While this is possible, it is taxing on the system and can make the experience less responsive for the person making the edits.
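The extra work can be estimated with a simplified model. The Python sketch below assumes each group of pictures (GOP) starts with an I-frame and every other frame is a P-frame predicted from the one before it, so decoding must begin at the most recent I-frame; real long-GOP streams also use B-frames, which this model ignores. The function names are hypothetical, and a GOP length of 1 stands in for an intraframe codec such as DNxHD or ProRes.

```python
def frames_to_decode(target_frame: int, gop_length: int) -> int:
    """Frames that must be decoded to display target_frame (0-based).

    Simplified I/P-only model (an assumption): each GOP begins with an
    I-frame, and every later frame depends on the frame before it.
    gop_length=1 models an intraframe codec.
    """
    if gop_length < 1 or target_frame < 0:
        raise ValueError("gop_length must be >= 1 and target_frame >= 0")
    return target_frame % gop_length + 1


def average_decode_cost(gop_length: int) -> float:
    """Average number of frames decoded per cut point across one GOP."""
    total = sum(frames_to_decode(i, gop_length) for i in range(gop_length))
    return total / gop_length
```

Under this model, cutting at the last frame of a 15-frame GOP means decoding all 15 frames, and an average cut costs 8 decoded frames, while an intraframe codec always needs just 1.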

Figure 19.3 Various Codecs Using .MOV File Container Format

While there are codecs designed just for editing, most edit programs will work with many other codecs as well. Editors often choose to work in the codec their material was acquired in, for speed and simplicity of workflow. For example, some larger networks and program producers have settled on XDCAM at 50 Megabits per second as their common format. This offers an acceptable compromise among quality, speed and interoperability.

Contribution and Mezzanine Codecs

Frequently there is a need for compression that is intermediate between uncompressed footage and distribution codecs. For example, say the cameras at a sports event are capturing footage RAW or uncompressed. When it’s time to uplink that footage or send it over a fiber optic line back to the network, the signal has to be compressed to reduce its data rate. The encoder that does this is set to the highest data rate, and therefore the least compression, that the channel can handle. That level of compression is referred to as contribution quality. When the footage reaches the network, it might be further compressed to be recorded on a server, or it might be decompressed to be switched with other material as a live feed. Other uses of contribution-quality compression include storing and playing out program content and commercials, and archiving finished work.
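Choosing a contribution setting comes down to picking the least compression that still fits the link. The Python sketch below chooses the highest encoder data rate that fits within a channel’s capacity; the list of encoder rates and the 10% headroom reserved for overhead are hypothetical values for illustration, not figures from any particular encoder.

```python
def pick_contribution_rate(channel_mbps, encoder_rates_mbps, headroom=0.1):
    """Return the highest encoder rate (Mb/s) that fits the channel.

    headroom is the fraction of channel capacity held back for overhead;
    the default of 10% is an assumption. Returns None if nothing fits.
    """
    usable_mbps = channel_mbps * (1 - headroom)
    fitting = [rate for rate in encoder_rates_mbps if rate <= usable_mbps]
    return max(fitting) if fitting else None
```

For example, on a 50 Mb/s link with encoder settings of 8, 15, 25, 40 and 60 Mb/s, about 45 Mb/s is usable, so the 40 Mb/s setting is chosen: the highest quality the channel can carry.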

NOTE Examples of this group are codecs such as H.264, HEVC (H.265), MPEG and JPEG 2000. When used at contribution quality levels, the codecs are adjusted to higher bit rates than when used for distribution.

Another name used for this type of compression is mezzanine. Much as a mezzanine is a level halfway between floors in a building, you can think of mezzanine compression as a middle level of compression. One common use is preparing files for distribution channels such as YouTube. The finished edit of an hour-long show in an edit codec can run to many Gigabytes, far too large to send easily to the web host, so it is compressed into a file that is easier to upload. The web host then processes that file into several variations at different bit rates to make it publicly available, and the website offers either automatic or manual selection of the best bit rate for each user’s connection. Thus, what you have sent to be re-encoded is a middle, or mezzanine, format.
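The size difference is straightforward arithmetic: bit rate multiplied by duration, divided by 8 to get bytes. A minimal Python sketch, assuming a constant bit rate and ignoring audio and container overhead; the 220 Mb/s edit-codec rate and 20 Mb/s mezzanine rate are illustrative assumptions.

```python
def file_size_gb(bitrate_mbps, duration_s):
    """Approximate file size in gigabytes (10**9 bytes) at a constant bit rate."""
    bits = bitrate_mbps * 1_000_000 * duration_s
    return bits / 8 / 1_000_000_000


if __name__ == "__main__":
    one_hour = 3600
    print(f"Edit codec at 220 Mb/s: {file_size_gb(220, one_hour):.1f} GB")
    print(f"Mezzanine at 20 Mb/s:   {file_size_gb(20, one_hour):.1f} GB")
```

For a one-hour show, this works out to about 99 GB in the edit codec versus about 9 GB for the mezzanine file.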

Digital Distribution Codecs

For digital distribution such as Internet streaming, discussed in more detail in Chapter 21, some formats are more effective than others. One in particular, MPEG-4, was developed especially for streaming media to computers that have much lower throughput, or bandwidth, than digital television or DVDs. It has heavily influenced three areas: interactive multimedia (products distributed on disks and via the web), interactive graphics applications (for example, the mobile app “Angry Birds”) and digital television (DTV).

MPEG-4 Codecs

MPEG-4 is the most common digital multimedia format used to stream video and audio at much lower data rates and smaller file sizes than the earlier MPEG-1 and MPEG-2 schemes. It can carry everything included in those schemes, but it was expanded greatly to support video and audio objects, 3D content, subtitles, still images and other media types, all with a low bit rate encoding scheme. It supports a great many devices, from cell phones to satellite television distributors such as DISH Network and DIRECTV to broadcast digital television. MPEG-4 has many subdivisions, called profiles, that address applications ranging from surveillance cameras with low image quality and low resolution to more sophisticated HDTV broadcasting and Blu-ray discs with much higher image quality and greater resolution. Let’s take a closer look at some MPEG-4 profiles.

H.264/AVC (Advanced Video Coding) is defined in Part 10 of the MPEG-4 standard. It is one of the most prevalent video compression formats in the MPEG-4 family and is used for everything from Internet streaming applications to HDTV broadcast, Blu-ray discs and even digital cinema. Although it is a lossy codec, H.264 maintains excellent video quality at roughly half the bit rate of its MPEG-2 predecessor. In 2008, H.264 was approved for use in broadcast television in the United States. This was especially helpful to digital satellite TV, which is constrained by bandwidth.

High Efficiency Video Coding, also known as HEVC or H.265, is the successor to H.264/AVC. Compared with H.264, it roughly doubles the compression ratio, saving about another 50% in bit rate while maintaining the same level of video quality. Alternatively, it can provide much better video quality at the same bit rate as H.264.
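The "half the bit rate per generation" rule of thumb can be applied directly to estimate stream sizes. The Python sketch below is only that rule expressed in code: real savings vary widely with content and encoder settings, and the 18 Mb/s MPEG-2 starting point in the example is a hypothetical HD stream.

```python
# Codec generations in order, as described in the text.
GENERATIONS = ("MPEG-2", "H.264/AVC", "HEVC/H.265")


def estimated_bitrates(mpeg2_mbps, savings=0.5):
    """Estimate the bit rate each generation needs for similar quality,
    assuming each one saves `savings` (50% by default) over its predecessor."""
    rates = {}
    rate = mpeg2_mbps
    for codec in GENERATIONS:
        rates[codec] = rate
        rate *= 1 - savings
    return rates
```

Starting from an 18 Mb/s MPEG-2 HD stream, the rule suggests about 9 Mb/s for H.264 and about 4.5 Mb/s for HEVC at similar quality.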

Like H.264, H.265 is a codec rather than a container, so it can be carried in several wrappers, including MP4, QuickTime and MPEG transport streams. HEVC is the newest of these video compression standards; its first version was completed and published in 2013. It can support 8K UHD (Ultra High Definition) televisions and resolutions up to 8192 × 4320. Still in its early stages as of the writing of this book, H.265 promises to deliver support for enhanced video formats, scalable coding and 3D video extensions.

Google’s WebM

Google’s WebM is quite a different story. Unlike proprietary formats, WebM is an audio-video media format for use with HTML5 that is not only royalty free but also open source: its container carries openly licensed video compression (VP8 or VP9) and audio (Vorbis or Opus). The format is being developed through a community-driven process that is fully documented and publicly available for viewing. WebM does not contain proprietary extensions, and all users are granted a worldwide, non-exclusive, no-charge, royalty-free patent license.

One of the most important aspects of HTML5 video is that it includes tags that allow browsers to play video natively, without requiring a plug-in such as Flash or Silverlight. HTML5 itself, however, does not specify a standard codec to be used.

Audio Codecs

Just as there is a variety of video codecs, there are numerous audio codecs that have been created for different purposes.

MP3 is a lossy data compression scheme for encoding digital audio. MP3 was the de facto digital audio compression scheme used to transfer and play back music on most digital audio players, such as iPods. It is an audio-specific format designed by the Moving Picture Experts Group (MPEG) to greatly reduce the amount of data required to faithfully reproduce an audio recording as perceived by the human ear. The method used to produce these results is called perceptual encoding.

In perceptual encoding, compression algorithms reduce the bandwidth of the audio data stream by dropping audio data that cannot be perceived by the human ear. For example, a soft sound immediately following a loud sound is masked by it, so it can be dropped from the audio signal, saving bandwidth.
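The loud-then-soft example describes temporal masking. The Python sketch below is a deliberately toy version of that one idea, not MP3’s actual psychoacoustic model: each value is the level in dB of one short block of audio, and a block is dropped (replaced with `None`) if any of the preceding `window` blocks was more than `threshold_db` louder. The function name, window and threshold values are all assumptions chosen for illustration.

```python
def apply_temporal_masking(levels_db, window=4, threshold_db=20.0):
    """Toy temporal (post-)masking over a list of per-block levels in dB.

    A block is treated as inaudible, and dropped as None, when one of the
    previous `window` blocks was more than `threshold_db` louder. Comparisons
    use the original levels, so a dropped block can still mask later ones.
    """
    kept = []
    for i, level in enumerate(levels_db):
        previous = levels_db[max(0, i - window):i]
        masked = any(prev - level > threshold_db for prev in previous)
        kept.append(None if masked else level)
    return kept
```

Given the levels `[90, 40, 45, 85, 80]`, the two soft blocks right after the 90 dB burst are dropped, while the 85 and 80 dB blocks, which are close in level to what precedes them, are kept.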

NOTE MP3 is formally known as MPEG-1 (or MPEG-2) Audio Layer III.

AAC (Advanced Audio Coding) is a lossy compression and encoding scheme for digital audio that is replacing MP3. Because it uses a more sophisticated compression algorithm, AAC generally delivers better audio quality than MP3 at similar bit rates.

As part of the pervasive MPEG-2 and MPEG-4 family of standards, AAC has become the standard audio format for devices and delivery systems such as the iPhone, iPod, iTunes, YouTube, Nintendo 3DS and DSi, Wii, the PlayStation 3 and the DivX Plus Web Player (Figure 19.3). The manufacturers of in-dash car audio systems capable of receiving Sirius XM are also moving away from MP3 and embracing AAC audio.

Windows Media Audio (WMA) is a proprietary lossy audio codec developed by Microsoft to compete with MP3 and the RealAudio codecs. It is used by the Windows Media Player, and variations of it are compatible with several other Windows or Linux players. This audio format can be played on a Mac using the QuickTime framework, but only with a third-party QuickTime component called Flip4Mac WMV.

Distribution Codecs

Distribution codecs are the ones tuned for delivering content to the audience. Often they are more heavily compressed versions of codecs such as MPEG-2, H.264 and HEVC. Here the selection is based on reducing bandwidth as much as possible while providing robust error recovery for less-than-perfect signal paths. The codec that is selected depends on the type of delivery channel.

For Over the Air (OTA) television, the ATSC chose MPEG-2 at just over 19 Megabits per second. A 2009 revision of the ATSC standard also allowed the more efficient H.264 codec to be used. As this book was being written, the ATSC was working to standardize the next broadcast format to include UHD, or 4K video. The codec being considered is HEVC, or H.265, for what will be known as ATSC 3.0.

When delivered over cable and satellite to the home, the video is compressed using MPEG codecs. However, compared with OTA, cable and satellite companies often provide more highly compressed content in an effort to deliver more channels to subscribers.

Optical disks most often use MPEG-2 or H.264. MPEG-2 is normally seen on DVDs, which are always standard definition. Blu-ray discs can use several codecs: MPEG-2, H.264 or Windows Media (VC-1).

YouTube compresses content in MPEG-4. Several copies of each submitted video are made at different sizes, and the user can select the quality of the playback supported by their connection and computer. Some online services, such as Netflix, dynamically change between different compression levels based on bandwidth conditions. Other online distribution can use a broad range of codecs including Windows Media, all the MPEG variants, H.264, HEVC, and many others.
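Switching among several copies at different bit rates, whether by the user or automatically, comes down to picking the best rendition the connection can sustain. The Python sketch below shows one simple rule: choose the highest rendition whose bit rate fits within a safety margin of the measured bandwidth. The rendition ladder, the 80% safety factor and the function name are hypothetical and do not describe how YouTube or Netflix actually make this choice.

```python
# A hypothetical rendition ladder: (label, video bit rate in Mb/s).
LADDER = [("240p", 0.4), ("360p", 0.8), ("480p", 1.5), ("720p", 3.0), ("1080p", 6.0)]


def choose_rendition(measured_mbps, ladder=LADDER, safety=0.8):
    """Return the highest rendition whose bit rate fits within `safety` times
    the measured bandwidth; fall back to the lowest rendition otherwise."""
    budget = measured_mbps * safety
    affordable = [r for r in ladder if r[1] <= budget]
    if not affordable:
        return min(ladder, key=lambda r: r[1])
    return max(affordable, key=lambda r: r[1])
```

A player that re-measures bandwidth every few seconds and calls a function like this each time would step down to a lower rendition when the connection slows and back up when it recovers.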

The development of compression standards, codecs and containers is an ongoing process. As mathematicians and engineers take advantage of computer and network speed, new and better compression technologies will be developed.

NOTE Due to the ever-changing nature of this technology, consider the codecs described in this chapter as a foundation for understanding how codecs evolve and how they are used in the different stages of video production, post production and distribution.