Learning theory is often thought of as the backbone of psychology and, more broadly, learning is bound up with life itself. From the day life begins to the day that it ends, learning never ceases. There is no doubt that humans and many other organisms learn to walk or locomote, think, and interact with each other, although controversy continues to this day over which model or theory of learning fully explains the mechanism(s) of learning. We begin our treatment of learning with a brief look at the roots of behaviorism and three overarching models employed in the study of learning, namely, stimulus–response (S–R), stimulus–organism–response (S–O–R), and response (R).

We continue our inquiry into learning theory with a discussion of John B. Watson (1878–1951) who, in 1913, proclaimed that psychology is a division of the natural sciences in which objective study rules over earlier subjective methods of study in psychology, especially introspection or verbal self-reports of the contents of consciousness. Watson viewed psychology as the measurement of glandular secretions and muscular movements, which gave rise to behaviors and three fundamental emotions expressed through hereditary pattern reactions. Karl Lashley (1890–1958) furthered Watson’s strict behaviorist theory by mapping levels and types of learning across the cortex of the brain. Lashley found that the cortex follows the rules of mass action and equipotentiality for simple learned tasks, whereas for complex learned tasks such as shape discrimination, very specific parts of the brain mediate responses. Thereafter, we examine basic and applied Pavlovian conditioning, especially regarding contemporary studies of treatment strategies for addictions and anxiety.

In general, Watsonian behaviorism and Pavlovian learning exemplify an S–R model while most of the neobehaviorists that follow embrace an S–O–R model of learning. Although many psychologists fall under this S–O–R heading, we focus upon Clark Hull (1884–1952). Hull created a complex mathematical system of learning known as the hypothetico-deductive theory of behavior, which incorporated numerous operationally defined bundles of variables into relatively complex equations or general principles with the intent of understanding, predicting, and controlling all behaviors. Hull’s work served as a major impetus for numerous studies of animal learning during the period from about 1940 to 1960.

Edward C. Tolman (1886–1959) thought Watson’s view of behaviorism was too mechanistic, which caused Tolman to include cognitive mechanisms such as cognitive maps, thinking, memory, and reward expectations as central concepts to explain human and infrahuman learning. Tolman’s cognitive molar view of learning (versus Clark Hull’s mechanical molecular view) led him to distinguish between place learning and response learning.

We examine next Orval Hobart Mowrer (1907–1983) who believed that learning was mediated by two types of responses, namely, autonomic emotional responses and instrumental behavioral responses. In Mowrer’s theory, there are two fundamental emotions, fear and hope, that arise from drive induction and drive reduction, respectively.

The concept of learning, especially operant learning, was addressed at length by Burrhus Fredric Skinner (1904–1990). Operant conditioning differs from Pavlov’s classical conditioning most notably because with operant conditioning the response is emitted (action arises in the absence of a specific stimulus) while Pavlov’s respondent conditioning requires a specific stimulus to elicit a specific response from the organism.

Martin Seligman’s (1942–) work is then examined in relation to biological predisposition to conditioning, particularly in terms of learned helplessness and learned optimism. We briefly touch upon Seligman’s work on explanatory style and his contributions to the recent emergence of positive psychology, which stresses the scientific study of ordinary human strengths and virtues.

We examine the research of Albert Bandura (1925–) and his foundational work focused upon perceived self-efficacy. Bandura is a major advocate of social learning theory, which generated a great deal of initial attention especially arising from his famous study known as the Bobo doll study. In this study, Bandura showed that the modeling of a learned behavior without specific overt response learning during observation of the behaviors to be learned was sufficient for learning, although the addition of reinforcement increased the speed of learning. Bandura’s work has a great deal of clinical application through the use of modeling, exploring a client’s levels of perceived self-efficacy, and providing the skills for self-regulation, another area he investigated extensively. We conclude this chapter with a brief treatment of positive psychology, which is built in large measure upon the fundamental psychological mechanism of learning.

When you finish studying this chapter, you will be prepared to:

- Identify the three overarching paradigms of learning and the specific theories of learning derived from them

- Describe the conflict in psychology between subjective and objective methods of study and how this controversy gave rise to the school of behaviorism

- Distinguish between mass action and equipotentiality and how these concepts contributed to early studies of the mapping of the cerebral cortex

- Understand the benefits of a molar versus a molecular approach to the study of learning and performance and the distinction between “place” (molar–cognitive model) versus “response” (molecular–mechanical model) learning

- Differentiate between drive reduction and drive induction and the effects they exercise upon the acquisition and maintenance of learned behaviors

- Describe the four defining features of neobehaviorism and how they shaped Clark Hull’s hypothetico-deductive theory of learning

- Distinguish between respondent (classical) and operant (instrumental) learning

- Identify behaviors that organisms are biologically prepared to learn

- Understand the effect that learning has on self-referential processes such as perceived self-efficacy and explanatory style

- Give specific examples of the application of Pavlovian conditioning in clinical settings

- Identify the defining characteristics of positive psychology and how this 21st-century school of psychology is shaping current and future psychological research and interventions

The influence that behaviorism has had and continues to have on the field of psychology is profound and extensive. From the beginning, behaviorists set out to move away from the methodology of introspection and its primary focus upon consciousness toward objective scientific methods, stressing the operational definitions of independent and dependent variables while measuring muscular motions and glandular secretions. The main goal of behaviorism, as outlined by the founder, John B. Watson, was to predict a response when given a stimulus and the stimulus when given a response. After almost 60 years, starting with the 1940s, the systematic behavioral study of human and nonhuman or animal learning began to reach this goal with a new challenge emerging today, namely, to establish a link between findings from laboratory studies and the application of these findings to add value for people from varied cultures around the globe in the domains of education, health, and productive lives.

There were many repercussions within psychology after Watson’s formal introduction of behaviorism in 1913 given the temporary derailment and loss of primary emphasis on the systematic study of the content (voluntarism and structuralism) and the utilities of consciousness (functionalism). In short, some said that “behaviorism caused psychology to lose its mind.” Psychology was depersonalized because of the focus upon measuring muscular movements and glandular secretions rather than studying verbal self-reported consciousness.

Multiple models of learning are presented in this chapter, each of which is unique, although some common features can be identified that yield a meaningful grouping or taxonomy of the models of learning. Although there may seem to be a limitless range of explanations of learning, they can be broken down into three types of models or theories. The models of learning revolve around the emphasis upon some combination of three variables, namely, stimulus, organism, and response variables. The stimulus is the object in the organism’s environment that elicits a behavior while the response is an observable behavior. The understanding of learning is not only about the systematic study of the presence and/or absence of a particular stimulus eliciting a particular response (S–R model), but also, according to some, the host of internal cognitive and emotional activities that take place between a stimulus and an observable response, the S–O–R model. Lastly, some investigators have focused only upon the response(s) of the organism (R model). Thus, the three models of learning include the stimulus–response (S–R), stimulus–organism–response (S–O–R), and response (R) models.

The S–R model, as its name implies, is concerned strictly with the stimulus and the subsequent response. The first name that may come to mind when speaking of a stimulus–response model of learning is Ivan P. Pavlov (1849–1936). The S–R model took off with Watson’s (1913) Psychological Review article in which he threw down the gauntlet by defining the goal of psychology to achieve a model of learning by which “given the response the stimulus can be predicted; given the stimuli the response can be predicted.” Watson proclaimed the uselessness of studying consciousness by introspection and he sought to describe higher psychological processes such as thinking as merely a complex chain of stimuli and responses.

Many learning psychologists came to view the S–R model of learning as too simplistic, reductionistic, and mechanistic, and argued for a Stimulus–Organism–Response model of learning. They believed that understanding only the stimulus–response relationship is not sufficient because in almost all cases of drive reduction, cognitive and emotional states of the organism intervene between the stimulus and response. The S–O–R model of learning was championed by psychologists such as Hull, Tolman, Mowrer, Seligman, and many practitioners of behavior therapy. Tolman factored into his S–O–R model of learning the cognitive processes that intervene between the stimulus and response. Likewise, Mowrer believed that the emotional state of the organism was important, and he developed his two-factor theory of learning, which includes emotional and instrumental responses. Seligman’s theory of biological predisposition to learning and his studies of explanatory style, learned helplessness, and learned optimism are also presented in the context of an S–O–R model of learning.

A strict response-based model of learning is the most reductionist view of learning. B. F. Skinner promoted this model of learning, advocating a primary focus only upon the consequences that follow a response or chain of responses by an organism. Skinner believed strongly that Type R (response) conditioning, also known as operant conditioning, was the dominant type of conditioning or learning over Type S (stimulus) conditioning, also known as classical or Pavlovian conditioning, in which a specific stimulus elicits a specific response. Operant conditioning is exemplified when a food-deprived rat presses a lever to receive a food pellet and the frequency of this emitted action increases as a consequence of the contingent reinforcement. The Skinnerian R-based model of learning aims to predict and control behavior, and excludes intervening variables such as cognitive processes or emotional states because they contribute to explanatory fictions (hypothetical internal factors that serve as non-causal explanations of learned behaviors).

The mind takes a different form for the behaviorist than it did for the earlier introspective psychologists. Those who employed the introspective model to study consciousness framed the mind as much like a black box. Accordingly, it was argued that the mysterious contents of mind could be studied only through the verbal reflections and reports derived from detailed descriptions of conscious experiences in response to specific stimuli. This methodological perspective stands in direct opposition to the behaviorist view of psychology, which embraces objective methodology to study publicly observable learned behaviors as indexed exclusively by muscular movements and glandular secretions.

Watson saw psychology as a science equivalent to physics because both study scientifically bodies in motion, which for psychology were muscular movement and/or glandular secretions. If overt behaviors can be explained and quantified by muscular movements and/or glandular secretion, then it follows, according to Watson, that even highly private and personal responses can be studied scientifically and objectively. In effect, an organism, human and/or infrahuman, is nothing more than a bundle of muscular twitches and glandular secretions shaped primarily by the environment and refined further through the mechanism of classical conditioning.

Watson was born in Greenville, South Carolina, to Pickens Butler Watson and Emma Roe, who had visions of her son becoming a Baptist minister. However, luckily for the field of psychology, Watson directed his future goals away from his mother’s. Watson earned his undergraduate degree from Furman University without exhibiting much enthusiasm for his studies. Surprisingly, he continued his education at the University of Chicago, where he studied under distinguished faculty such as John Dewey and Henry Donaldson and graduated magna cum laude in 1903 with a PhD.

Watson began his academic career at Johns Hopkins University, and his controversial paper, “Psychology as the Behaviorist Views It,” which attacked the current method of introspection used in psychology, was published in Psychological Review (1913), although further advancement of his work was cut short by the calling of World War I. Upon his return to Johns Hopkins University, Watson’s career in academia came to an abrupt end due to his intimate affair with one of his graduate students, Rosalie Rayner, while he was married to Elizabeth Watson, a noted philanthropist and socialite. After leaving Johns Hopkins, Watson began a new career in business that provided him with a large amount of wealth serving as the vice president of the J. Walter Thompson Company.

Historically, up until Watson’s Behaviorist Doctrine, as his 1913 paper is often called, psychology focused upon studies of adult human consciousness utilizing the method of introspection that was grounded in the verbal reports of highly trained participants. Watson proposed a change of subject matter and method of study for psychology to the behaviorist view of human behavior as bodies in motion studied through the scientific method utilizing objective observations (Watson, 1924). Watson proposed a reductionistic analysis of responses to stimuli. As a reductionist, he asserted that complex behavior patterns such as food preferences, and even thinking as well as almost any learned behavior, could arise from classical, respondent, or Pavlovian conditioning reflected by muscular movement and/or glandular secretions. This reductionist view of responses including only muscular movement and/or glandular secretion allowed Watson to systematically study behavior in quantifiable terms. Behavioral analysis could be applied to all animals as well as humans, could be easily replicated, and could yield specific hypotheses not possible from introspective psychology.

When behaviorism began to win over its opponents there were still many who were hesitant to embrace it because they found it difficult to see the translation of findings derived from animal studies of learning as applicable to improving the quality of life of humans. Although Watson had made claims about the capacity of behaviorism to improve the quality of human life, the animal studies he conducted had yet to link the findings from these studies to enhancement of the well-being of humans. Watson saw an opportunity to sway the skeptics, including university administrators who were reluctant to supply him with adequate funding, and, accordingly, he accepted an invitation to set up a laboratory in Adolf Meyer’s clinic at Johns Hopkins University (Buckley, 1989).

At his new laboratory, Watson studied primarily reflexes, basic emotional responses, and conditioned emotional responses of infants in search of the fundamental emotional responses that we all have in common. He identified three fundamental unconditioned emotions: fear, rage, and love. Fear was defined as a response including catching of the breath, clutching of the hand, blinking of the eye, puckering of the lips, and crying, with the stimulus being a sudden loud noise and/or loss of support or any abrupt change in a pattern of stimulation (Watson & Morgan, 1917). The second emotion, rage, is a response characterized by crying, screaming, stiffening of the body, and slashing movements of the arms and hands in response to hindering or restricting severely an infant’s movement. Love, the third identified emotion, is smiling, gurgling, and/or cooing resulting from gently stroking or rocking an infant. These three emotions were observed in newborn infants, while studies with slightly older persons made plain that there was a much wider range of experienced emotions. Watson conducted the famous Little Albert Study in an attempt to understand the expanding range of emotions associated with increased age. The subject of Watson’s study, Albert B., was a good candidate for this particular study because he was reared from birth in a “stable environment,” allowing a clean slate, if you will, for conditioning. The study culminated in the fundamental finding that classical or Pavlovian conditioning was the process by which previously neutral stimuli could give rise to additional emotions.

The Little Albert Study as well as the balance of Watson’s work rested on the presumption that psychology exists without attention to subjective mental events and only muscular movements and/or glandular secretions verified through objective observation are the true subject matter of psychology. This presumption could pose a problem in explaining the phenomenon of thinking, something that is inherently intangible and mental; however, Watson even had an explanation for thinking. He conceptualized both speech and thought as forming through the association of thought patterns resulting from each experience over a lifespan (Watson, 1924). When one learns to speak he or she learns the associated muscular habits ranging from the larynx to the rest of the body (e.g., hands, shoulders, tongue, facial muscles, and throat). To further illustrate the view that thinking and speech are paired with muscular movements, he pointed out that the young, deaf, and speechless use bodily motions and talking to themselves when executing thinking patterns and that there are bodily movements such as the shrug of the shoulders that can replace words themselves. Watson then believed that eventually overt speech or movement of the lips and other laryngeal muscular movements became implicit or not readily observable, with thinking still taking place in the absence of overt movement of the human body.

Instinct, as well as emotion and thought, was a topic to which Watson paid attention over the years. To illustrate his changing views concerning the existence of instincts, Watson redefined an instinct as a hereditary pattern reaction composed primarily of movements of the striped muscle. He observed that animals have visibly identifiable instincts; however, human instincts are not as easily defined. Human habit quickly becomes the director of actions and it is in this way that instincts and emotions are similar in that both are hereditary modes of actions.

Watson was a determinist who interpreted all behavior in physical terms, that is, that behavior is essentially bodies in motion, in contrast to William McDougall’s view that instincts were key to understanding human behavior. McDougall (1871–1938), an English psychologist who came to the United States in 1920, believed that all human behavior arose from innate tendencies of thought or action. This instinctual theory, as presented in his book titled An Introduction to Social Psychology, directly opposed Watson’s objective and scientific view of behaviorism (McDougall, 1908). The two different explanations of behavior continued to develop independently until the two men were asked to discuss their differences in a public debate. The Psychology Club of Washington, DC, brought the two men together on February 5, 1924, to debate their differences (Watson & McDougall, 1929). The debate, which occurred at a time when there were only 464 members of the APA, attracted 1,000 persons, and when it was all said and done the judges sided with McDougall. The judges decided in favor of McDougall’s position because they focused upon the negative social consequences associated with Watson’s view that people are not responsible for their actions, which are instead determined by the environment.

Lashley, a psychologist who earned his doctorate from Johns Hopkins in 1914 and served as the APA president in 1929, spent the majority of his career between the Yerkes Laboratory of Primate Biology at Orange Park, Florida, and Harvard University studying the relationship between brain functioning and behavior. More specifically, he studied brain localization in rats from a behaviorist perspective. Lashley succeeded in bringing behavioral techniques together with physiological techniques, and used these two techniques to study the effects of cortical ablation or removal on learned behaviors both in mazes and discrimination tasks so as to better understand the acquisition, retention, and reacquisition of learned behaviors.

Two main concepts emerged from Lashley’s systematic program of research examining the effects of cortical ablation upon learning in rats, namely, mass action and equipotentiality. Mass action, simply stated, means that the more cortical tissue available, the more rapid and accurate specific task learning. Equipotentiality states that learning does not depend on a particular patch of cortical tissue, thus making “all cortical tissue equal” with such equality moderated only by task complexity. Thus, for “simple tasks” any cortical tissue is satisfactory to mediate learning while for “complex tasks,” such as language production or speaking, specific cortical localization is critical (e.g., Broca’s area for speech production); as task complexity increases, equipotentiality or substitutability of one location of cortex for another particular location decreases. However, almost regardless of task complexity, mass action holds so that the more cortical tissue, the faster and more accurate the learning.

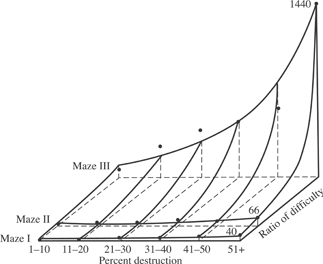

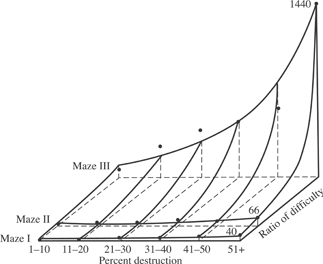

Lashley’s (1929) work, reported in Brain Mechanisms and Intelligence, showed findings focused upon the study of brain localization and maze learning. Three different mazes were created, namely, Mazes I, II, and III, for the study of equipotentiality and mass action (Figure 10.1). He found that there was a positive relationship between the magnitude of cortical removal or injury and learning capacity for mazes of varying task difficulty. For example, he found that the more cerebral cortex removed or injured the lower the learning for all three mazes and especially for the relatively difficult task (Maze III). Although for complex mazes the rate and accuracy of learning was positively related to task difficulty, acquisition as well as retention of learned maze behaviors was not influenced by the locus of cortical lesions for relatively simpler tasks (Mazes I and II; Lashley, 1929). Thus, a 50% reduction in the anterior portion of the cortex produces the same result as a 50% reduction in the posterior cortex for a relatively simple task (Mazes I and II) but not for more complex maze learning tasks only (Maze III). Put simply, the cerebral cortex exhibits mass action for all levels of task complexity and equipotentiality primarily for relatively simple tasks (see Figure 10.2).

The principles of mass action and equipotentiality explain well the neurophysiological components for simple learning tasks yet leave unanswered questions about more specific and complex tasks. Accordingly, Lashley set out to identify the limits of equipotentiality for more complex learning tasks such as the acquisition and retention of brightness and shape discrimination compared to maze learning. Lashley first turned to the study of the relationships between brain localization and brightness discrimination. He (1929) found that rats whose occipital cortices were destroyed before they learned a brightness discrimination task showed only a decrement in the speed and accuracy with which they learned the task. However, if a rat had learned brightness discrimination before experiencing cortical insult the original learning was destroyed temporarily so that the rat was able

Figure 10.1 Floor Diagrams of Karl Lashley’s Three Mazes

Maze I: least complex (only one choice point to reach food (F) from start box (S); Maze II: three choice points; and Maze III: most complex, involves eight choice points.

Figure 10.2

Karl Lashley’s Three-Dimensional Surface Showing the Relationships Among the Percent of Destruction, Ratio Difficulty, and Errors in the Maze

to relearn the task. This is to say that if an insult occurs before learning then the speed of acquisition of the learned behaviors (i.e., brightness discrimination) is reduced although the capacity to learn the brightness discrimination endures. Likewise, if learning occurs after an insult the learned behavior is temporarily lost although the ability to relearn is retained. These findings indicate that the occipital cortex, although involved in the acquisition of brightness discrimination, is not essential to such a learning task. Rather, there are other brain mechanisms (i.e., the superior colliculi) that mediate the acquisition, and retention of brightness discrimination (Lashley, 1929). The findings on brightness discrimination supported Lashley’s theory that habits (simple and perhaps complex ones as well) could be relearned utilizing different cortical and sub-cortical mechanisms following a brain injury.

Lashley found that rats lacking the striate occipital cortex could not learn or acquire form or shape discrimination (e.g., distinguish between a circle and a triangle). Unlike with brightness discrimination, when the animal learned form or shape discrimination before the cortical lesion was imposed, permanent postoperative amnesia resulted, indicating that no amount of retraining could reestablish the learned form discrimination. These findings make plain that no brain mechanism other than the striate cortex could mediate form discrimination. Thus, equipotentiality did not hold for form discrimination although it did hold for brightness discrimination learning tasks. When partial rather than complete lesions of the striate occipital cortex were employed after discrimination learning, form or shape discrimination was only partially abolished. Thus, unlike the findings for brightness discrimination, not all learned behaviors can be reacquired after brain injury. Task complexity moderates equipotentiality whereas mass action applies across task complexity in that the larger the cortical insult the slower and less accurate the learning.

We suspect that most of our readers have some fundamental understanding of Pavlov’s work or classical conditioning; however, there may not be an immediate understanding of how Pavlov supported Watsonian behaviorism. As a young boy growing up in the small Russian town of Ryazan, Ivan P. Pavlov (1849–1936) was greatly influenced by his mother, who was the daughter of a Russian Orthodox priest; his paternal grandfather, who was the village sexton; and his father, who was the parish priest. Pavlov grew up with the intent of following in his family’s footsteps by becoming a priest until he read Darwin’s (1899/1859) On the Origin of Species and Sechenov’s (1965/1866) Reflexes of the Brain. These two works influenced him to enroll in the University of St. Petersburg in 1870. Pavlov earned his MD in 1883 and his career leapt forward when he was appointed to the chair of pharmacology at the St. Petersburg Military Academy in 1891, where he organized the Institute of Experimental Medicine in St. Petersburg. Thereafter, he was appointed professor of physiology at the University of St. Petersburg in 1895. Pavlov (1902/1897) was awarded the Nobel Prize in physiology in 1904 for his Lectures on the Work of the Digestive Glands, and finally in 1907 was elected a full member of the Russian Academy of Sciences. (See Chapter 16 for further details about I. P. Pavlov.)

For his Nobel Prize address, Pavlov, rather than describe his research on the digestive tract, for which he was awarded the prize, focused upon his latest work on what he called psychical stimuli. Pavlov came to realize through his studies concerning the pairing of neutral stimuli with feeding that he was working with two types of salivary reflexes, both of which were caused by physiological responses of the nervous system. The foundational physiological response was the unconditioned response (UCR) caused by the natural stimulation of the oral cavity. The second reflex, the conditioned response (CR), could be triggered through receptors other than those of the oral cavity (e.g., eyes, ears, and/or nose; Pavlov, 1955). The next step was to explain the relationship between the two types of responses. He described the paradigm in terms of an unconditioned stimulus (US), a biological stimulus that has the capacity to elicit automatically a reflex activity that yields the UCR, whereas a conditioned stimulus (CS) is a stimulus that was at one time a neutral stimulus but through repeated pairings with the US elicits a CR similar to the UCR. This basic paradigm, as we will see, has been applied to explain many learning phenomena such as delayed conditioning, trace conditioning, stimulus conditioning, extinction, spontaneous recovery, disinhibition, stimulus generalization, discrimination, and temperament.

Pavlovian conditioning or classical conditioning is no longer viewed strictly as the pairing of a conditioned stimulus with an unconditioned stimulus, but rather involves circumstances around the learning, that is, the context of the learning and other pertinent variables (Rescorla, 1988). An area of psychology that has undergone major changes as well as flourished as a result of the systematic application of the principles of Pavlovian conditioning is psychotherapy. In fact, the work of Rescorla (1988) and others has extended Pavlovian conditioning so that we now have a better understanding, treatment, and even prevention of pathology, especially in the areas of anxiety disorders and drug addiction.

Recent theories of panic disorder (PD) with and without agoraphobia (fear of going out of the home) have been based in classical conditioning. Pavlovians argue that the conditioning of anxiety and/or panic to interoceptive (e.g., increased heart rate or lightheadedness) and exteroceptive (e.g., physical location) cues upon exposure to an episode of a panic attack can be understood as emotional conditioning. Anxiety is the emotional state that prepares one for the next panic, and panic is the emotional state that is designed to aid coping with a trauma in progress. After the exposure to a panic attack, a previously neutral stimulus (now the CS) such as a place (e.g., a room, a car, and/or an airplane) or an interoceptive cue becomes paired with the panic attack (US and UCR) for which anxiety is the CR (Bouton, Mineka, & Barlow, 2001). After the conditioning of the stimulus has occurred, the mere presence of the conditioned stimulus may evoke anxiety and lead to a panic attack that spirals the subject into the development of PD. The classical conditioning perspective of PD, by taking both the emotions and the contextual cues into consideration, provides an excellent illustration of how the evolution of Pavlovian conditioning has led to broad and useful application of this specific learning paradigm.

Another solid example of the application of Pavlovian conditioning is in the process of understanding drug addiction. The ingestion of a drug constitutes a US and it may be paired with other stimuli such as objects, behaviors, or emotions present at the time of taking the drug (CS). The CR (conditioned response) to the conditioned stimulus is often opposite of the unconditioned response, that is to say that when the ingestion of morphine, which usually elicits a decrease in pain sensitivity, is paired with a CS, the CR is an increase in pain sensitivity (Siegel, 1989). This phenomenon has been illustrated with other drugs such as alcohol and is thought to be the body’s compensatory reaction: the body is preparing to neutralize itself against the drug.

The conditioned stimulus (CS) can act as a motivator in instances of drug dependence and panic disorder. In the case of drug dependence, the CS can increase tolerance to the drug through the compensatory nature of the CR. The compensatory CR (e.g., feeling more pain) may also be aversive and motivate the user to take the drug again. In the case of anxiety, the CS may increase the vigor of the instrumental behavior of avoidance of the US (unconditioned stimulus) because fear motivates avoidance behavior. With this understanding, it is possible to alter behaviors to free the client from this vicious cycle. Thus, for example, if increased heart rate is the CS for anxiety, it may be possible to free the client from the CR by exposing him or her to the CS under alternative conditions. In this case increased heart rate (CS) is paired with exercise instead of a panic attack.

There are significant implications for classical conditioning in the treatment of pathology using behavioral therapy. For learning to occur it is thought that the CS must supply new information about the US; for example, if there is a second CS present that already predicts the US then there will be no new conditioning (Kamin, 1969). This may limit the number of CSs that will elicit anxiety or other CRs. Pavlov also discovered two phenomena that have clinical implications, namely, extinction and counterconditioning. Extinction can reduce the CR if the CS is repeatedly presented in the absence of the US after conditioning, and counterconditioning can eliminate the effects of the CS by pairing the CS with a significantly different US and UCR. Counterconditioning is the basis of the common clinical tool, systematic desensitization (Wolpe, 1958). Although these forms of behavior therapy illustrate promise in treating persons with psychological disorders such as panic attacks or drug dependence, it is important to remember two points. The first point is that the original learning is not destroyed but only lies dormant, and in or with the correct context or timing there is potential for relapse (Bouton, 2002). The second key point is that the treatment of anxiety disorder can be challenging when identifying the CS because emotional learning can occur without any conscious recollection or awareness of the process, making it difficult for the subject to remember the initial panic attack and the details around the conditioning of the CS (LeDoux, 1996; Ohman, Flykt, & Lundqvist, 2000). Although there are still challenges to be overcome in improving behavioral therapy as a treatment for pathology, Pavlovian conditioning and its potential for enhancing the effectiveness of behavioral therapy can be expected to grow in the future.
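The blocking and extinction effects described above can be illustrated with a small simulation. The sketch below uses the Rescorla-Wagner learning rule, a model that the passage itself does not name, so it should be read as one possible formalization rather than as the account endorsed by the authors cited. The learning rate, the asymptote, the trial counts, and the cue names `A` and `B` are all illustrative assumptions.

```python
from typing import Dict, Iterable, Tuple


def rw_trial(strengths: Dict[str, float], present: Iterable[str],
             us_present: bool, learning_rate: float = 0.3,
             asymptote: float = 1.0) -> None:
    """Update associative strength for every CS present on one trial.

    All cues present share one prediction error (assumed Rescorla-Wagner rule).
    """
    cues = list(present)
    target = asymptote if us_present else 0.0
    prediction = sum(strengths[cue] for cue in cues)
    error = target - prediction
    for cue in cues:
        strengths[cue] += learning_rate * error


def train(strengths: Dict[str, float], present: Iterable[str],
          us_present: bool, trials: int) -> None:
    """Repeat the same kind of trial a fixed number of times."""
    cues = list(present)
    for _ in range(trials):
        rw_trial(strengths, cues, us_present)


def blocking_experiment(trials: int = 30) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Blocked group: A alone, then A+B. Control group: A+B only."""
    blocked = {"A": 0.0, "B": 0.0}
    train(blocked, ["A"], True, trials)        # A already predicts the US
    train(blocked, ["A", "B"], True, trials)   # B adds no new information
    control = {"A": 0.0, "B": 0.0}
    train(control, ["A", "B"], True, trials)   # B is informative here
    return blocked, control


def extinction_experiment(trials: int = 30) -> Tuple[float, float]:
    """Condition A, then present A repeatedly without the US."""
    strengths = {"A": 0.0}
    train(strengths, ["A"], True, trials)
    after_acquisition = strengths["A"]
    train(strengths, ["A"], False, trials)
    return after_acquisition, strengths["A"]


if __name__ == "__main__":
    blocked, control = blocking_experiment()
    print("B after blocking:", round(blocked["B"], 4))
    print("B in control group:", round(control["B"], 4))
    print("A after acquisition, after extinction:", extinction_experiment())
```

This simple rule treats extinction as unlearning, so it does not capture the point made above that the original learning lies dormant and can return with a change of context or timing (Bouton, 2002).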

Watson’s behaviorism and the S–R model of learning finally established the systematic study of behavior, especially learned behavior, as the subject matter of psychology. Neobehaviorism developed further a coherent set of four key principles to guide the study of learning under the S–O–R model of learning. First, the neobehaviorists believed that data derived from animal learning are applicable to our understanding of human learning, and, second, that an explanatory system to account for all learning data could be developed. Third, neobehaviorists endorsed completely the concept of operationism, according to which all variables (i.e., independent, intervening, and dependent variables) must be expressed in a manner that can be measured. Fourth, the neobehaviorists, like Watson, focused on learning as the core of psychology. Many of these principles and future developments in neobehaviorism, which spanned the period from about 1930 to the early 1960s, were grounded in the work of Hull, Tolman, Mowrer, and Skinner.

Hull was marked as a man of perseverance from the time he was a young boy growing up in rural New York, as he had to overcome the ravages of typhoid fever and poliomyelitis. He quickly expressed other uses for his perseverance by graduating from the University of Michigan in 1913 with a bachelor’s degree and then from the University of Wisconsin at Madison in 1918 with a PhD. He remained in Madison for ten years focusing his research and teaching primarily on aptitude testing before moving to Yale University’s Institute of Human Relations. It was at Yale that he pursued in earnest new interests in suggestibility and hypnosis as well as methodological behaviorism.

Hull, while at Yale University, published a total of 32 papers and one book on hypnosis. These works described the nature of hypnosis as a state of hypersuggestibility that facilitates the recall of earlier memories more so than the recall of more recent ones, and the posthypnotic state as one in which suggestions are ineffective (Hull, 1933). In addition to describing the nature of hypnosis, Hull went on to describe the susceptibility to hypnosis as normally distributed although it has been assumed that children and women were more susceptible to hypnosis than men. Unfortunately, as a consequence of litigation surrounding an alleged incident of sexual harassment associated with one of his studies of hypnosis, which was settled out of court, Yale University mandated that Hull discontinue his excellent work on hypnosis and focus upon new research interests in psychology.

Hull, despite the change of research program, had a long-standing commitment to the importance of systematic methodology in psychology. Accordingly, Hull’s quantitative skills and their application to behaviorism emerged as a natural transition to the study of learning. Hull’s behavioral approach became more and more evident as the years progressed, as reflected clearly in his APA presidential address in 1937, “Mind, Mechanism, Adaptive Behavior,” which many refer to as Hull’s Principia. Sir Isaac Newton (1642–1727) was Hull’s hero, and he had all of his graduate students read Newton’s Principia, which he kept on his desk at all times so that they would understand that his work mirrored Newton’s mechanistic theory of the physical world (Newton, 1999/1687). Newtonian theory, translated in terms of behaviorism, stated that human beings are merely machines and the relationships between the variables generating behaviors could be described mathematically. Following his APA presidential address, Hull (1943, 1951) published his Principles of Behavior and Essentials of Behavior, indicating clearly his intent to pursue the application of his quantitative skills to the field of learning to better understand human behavior. Hull, like his hero Newton, was considered a fundamental force in the science of psychology as reflected by the fact that his work became so well respected that 40% of all experimental papers between 1941 and 1950 in the Journal of Experimental Psychology and Journal of Comparative and Physiological Psychology made reference to his work and 70% of all articles dealing with learning cited his work (Spence, 1952).

In the style of a true neobehaviorist, Hull agreed with Watson in his reductionist position that when studying behavior there is no need to consider consciousness, purpose, intentionality, or the emotional state of the organism. Instead, an organism is viewed as being in a continuing state of interaction with the environment in which specific biological needs must be met for survival, and when they are not met the organism behaves in a manner to reduce the specific need. Accordingly, from this view of animal and human learning, drive reduction or essentially a reinforcement theory was marked as the key mechanism for explaining all of infrahuman and human learning.

Hull developed his hypothetico-deductive system based on Newton’s work. This system entailed the development of sophisticated postulates or principles, which were then tested, modified if needed, and tested again in their revised form. This cycle of forming hypotheses and then testing them through experimental observations was conducted on Hull’s set of 18 postulates, which are mathematical statements about behaviors shaped by the operation of three sets of variables (Hull, 1951). Inasmuch as the postulates could not be tested directly, specific testable hypotheses were derived from them, and the postulates were then modified as dictated by the experimental results. This hypothetico-deductive theory focused on studies conducted using three major sets of variables, namely, input or stimulus variables, intervening or organismic variables, and response or output variables. Each of these three sets of variables was operationally defined. Input or stimulus variables are defined as the number of reinforced trials and/or the amount of reward. For the second set of variables, the intervening variables, three are presented here. First, habit strength (SHR) is defined as the tendency for a particular response and varies directly as a function of the number of reinforced trials of that response. Second, drive (D) is defined as the number of hours of deprivation (e.g., 24 hours food deprived). Finally, reaction potential (SER) is defined as the tendency of any response to occur, which is a function of SHR and D minus any negative reaction tendencies (SIR). In other words, one’s reaction potential (the probability of any response) could be mathematically defined as a function of the number of reinforced trials for a particular response (SHR) combined multiplicatively with level of drive. The final set of variables was the response variables. Hull believed that there were four different measures of output or response variables: latency (STR), amplitude (A), number of responses to extinction (N), and probability (P). These three sets of variables and their operational definitions allowed Hull to create a hypothetical quantitative connection between intervening variables such that all human and nonhuman behavior could be explained through equations linking the three sets of variables together and, in particular, one equation that we now examine briefly.

Ultimately, Hull believed that he could, by means of his hypothetico-deductive system, explain all instances of animal and human learning grounded in the mechanism of drive reduction. For example, Hull’s drive reduction theory proposed that the readiness to respond for any behavior (SER) is a direct function of habit strength for a particular behavior (SHR), an acquired habit or specific learned response, multiplied by the drive state of the organism (D), which energizes behavior and transforms response readiness to behavior through a defined number of hours of deprivation:

$$\mathrm{SER} = \mathrm{SHR} \times D$$

This equation indicates that habit strength and drive combine multiplicatively to determine reaction potential. According to Hull’s model, pairing a stimulus with a particular response that leads to positive reinforcement (e.g., food) increases habit strength (SHR), and the reinforcement also serves to reduce drive. With each pairing, drive is reduced while habit strength is increased, so that habit strength and drive state are inversely related. Learning can then be defined as an increase in habit strength that is incremental rather than abrupt and is mathematically defined as a function of the number of reinforced trials, a relationship also known as the learning curve. The drive reduction theory of learning makes plain that a response is most likely when habit strength and drive are both at heightened levels, whereas if either drive or habit strength (also known as learning) is zero, there will be no reaction or expressed behavior.
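The multiplicative relationship between habit strength and drive can be made concrete with a few lines of Python. This is a minimal sketch, not Hull’s own formulation: the text says only that habit strength grows incrementally with the number of reinforced trials, so the particular growth curve and the `growth_rate` parameter below are assumptions chosen for illustration. Drive is expressed in hours of deprivation, following the operational definition given earlier, and negative reaction tendencies (SIR) are omitted.

```python
def habit_strength(reinforced_trials: int, growth_rate: float = 0.1) -> float:
    """SHR as an assumed incremental learning curve bounded between 0 and 1.

    Each reinforced trial closes a fixed fraction of the remaining gap,
    so gains are gradual and shrink as training continues.
    """
    return 1.0 - (1.0 - growth_rate) ** reinforced_trials


def reaction_potential(reinforced_trials: int, drive_hours: float,
                       growth_rate: float = 0.1) -> float:
    """SER = SHR x D, with D given as hours of deprivation."""
    return habit_strength(reinforced_trials, growth_rate) * drive_hours


if __name__ == "__main__":
    for trials in (0, 5, 20):
        for drive_hours in (0.0, 12.0, 24.0):
            ser = reaction_potential(trials, drive_hours)
            print(f"trials={trials:2d}  D={drive_hours:4.1f}  SER={ser:6.2f}")
```

Running the loop shows the two boundary cases described above: reaction potential is zero whenever either the number of reinforced trials or the hours of deprivation is zero, and it is largest when both are high.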

Although Hull’s work and theory of drive reduction were referenced in a large number of publications at one point in time, many believe that the complexity and number of assumptions required by his hypothetico-deductive theory of behavior limited the widespread adoption of his model.

Tolman grew up in Newton, a suburb of Boston, Massachusetts, in a middle-class family. After graduating from Massachusetts Institute of Technology in 1911 with a degree in electrochemistry, he continued his education at Harvard University and eventually earned a PhD in 1915 under the eminent psychologist E. B. Holt (1873–1946). Tolman believed that learned behavior is purposeful and goal-directed and can be understood by the operation of intervening cognitive variables studied under carefully controlled experiments. Tolman, following his graduation from Harvard, taught at Northwestern University before moving to the University of California at Berkeley where he remained for the balance of his professional career (Crutchfield, 1961).

Tolman promoted a psychology in the S–O–R model that respected the objective nature of behaviorism while at the same time including cognitive components of thinking, remembering, and goal-directedness as mediators of behavior because he found Watson’s mechanistic behaviorism too restrictive and unable to account for some important types of learning such as latent and insight learning. Tolman stressed five main points in his work. First, he studied behavior from a molar perspective. He defined his molar perspective as large units of behavior directed toward a goal as opposed to Watson’s molecular focus upon muscular movements and glandular secretions as the initial engines for learning. Second, Tolman believed behavior was purposeful. He argued and later demonstrated that goal-directed behavior of humans and infrahumans involves expectancy of a reward, which can be operationally defined and measured in laboratory-based studies of learning. Third, Tolman employed the concept of intervening variables to demonstrate that learning cannot be attributed exclusively to stimulus–response connections (as Watson proposed) without considering what may be going on inside the organism. Cognitions, expectancies, purposes, hypotheses, and appetite were, for Tolman, all examples of intervening or mediating variables reflecting psychological processes going on within the organism. Thus, he promoted a stimulus–organism–response model to explain learning (S–O–R model). Fourth, Tolman thought that there were two distinctive types of learning, namely, place and response learning. Place learners learn by means of cognitive maps, which are mental representations of the relative position of stimulus objects in their environment, while response learners were thought to learn through repetition and reinforcement of specific responses. Finally, Tolman saw behavior as arising from five independent variables. Regardless of the type of learning, place or response, Tolman thought that environmental stimuli (S), physiological drive (P), heredity (H), prior training (T), and age (A) all ultimately contributed to the acquisition and retention of learned behavior (B). He expressed this through a simple equation, B = f(S, P, H, T, A):

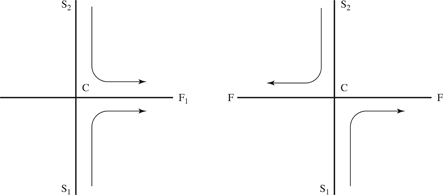

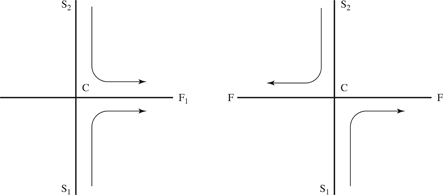

Tolman argued that cognitive maps were created through an overall knowledge of the structure and spatial patterns of elements in the learning environment of the organism. For example, Tolman, Ritchie, and Kalish (1946a, 1946b) demonstrated in a series of experiments that rats learned faster when utilizing place learning than when utilizing response learning. In the prototypical experiment, Tolman created a maze in which the rats started from either of two points, S1 or S2. Place learners always found food in the same place regardless of where they started, whereas response learners found food by always turning to their right, whether they started from S1 or S2 (Figure 10.3). Tolman and his colleagues found that place learners learned more rapidly than response learners. In 1948, Tolman published a paper titled “Cognitive Maps in Rats and in Men,” describing the application of these findings to human behavior by arguing that environments that are too limited have negative effects upon learning due to the inability of the learner to perceive the entire environment and consequently determine a path to the goal. On the other hand, comprehensive or complex environments are preferred because they facilitate the creation of complex cognitive maps that connect different place elements in the learning environment.
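The difference between the two training conditions can be sketched in code. The layout below is an assumption made for illustration, not a reproduction of the figure: a cross-shaped maze in which S1 is the south arm, S2 is the north arm, and food can be placed in the east or west arm. The function names are likewise hypothetical.

```python
# Direction a rat faces after turning right, keyed by its current heading.
RIGHT_OF = {"north": "east", "east": "south", "south": "west", "west": "north"}
# Turning left is the inverse mapping of turning right.
LEFT_OF = {after: before for before, after in RIGHT_OF.items()}

# Assumed layout: a rat leaving S1 (south arm) runs north toward the choice
# point, and a rat leaving S2 (north arm) runs south toward it.
HEADING_FROM = {"S1": "north", "S2": "south"}


def goal_arm_for_response_learner(start: str) -> str:
    """Response condition: food is wherever a right turn leads."""
    return RIGHT_OF[HEADING_FROM[start]]


def turn_for_place_learner(start: str, food_arm: str) -> str:
    """Place condition: food stays in one arm, so the required turn varies."""
    heading = HEADING_FROM[start]
    if RIGHT_OF[heading] == food_arm:
        return "right"
    if LEFT_OF[heading] == food_arm:
        return "left"
    raise ValueError(f"{food_arm!r} cannot be reached with a single turn")


if __name__ == "__main__":
    for start in ("S1", "S2"):
        print(start, "response learner finds food in the",
              goal_arm_for_response_learner(start), "arm")
        print(start, "place learner must turn",
              turn_for_place_learner(start, "east"))
```

Under these assumptions the response learner makes the same movement on every trial but ends up in different places, while the place learner ends up in the same place but must make different movements, which is why only a spatial representation of the maze can guide the place learner from both starting points.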

Figure 10.3 Mazes for Studying Place and Response Learners

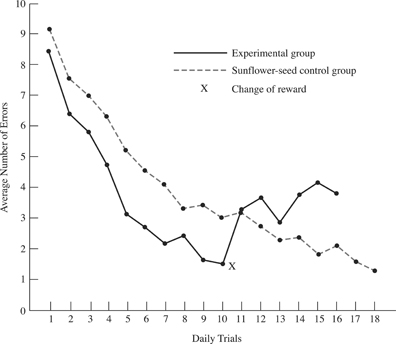

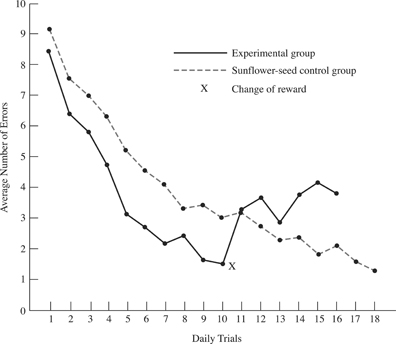

In addition to his systematic studies of learning through cognitive maps, Tolman also studied extensively the impact of reward expectancy upon learned behaviors and latent learning. Using a T-maze, both Elliott (1928) and Tolman (1932) were able to study objectively the phenomenon of reward expectancy (see Figure 10.4). Tolman believed that reward expectancy was an important intervening variable that had a significant effect on animal learned behaviors (e.g., expecting preferred bran mash versus less preferred sunflower seeds) and human learned behaviors (e.g., expecting a $100 reward versus a $10 reward). Thus, for example, Tolman found that the experimental group of rats that were rewarded with mashed bran for nine consecutive days and then on the tenth day were rewarded with sunflower seeds exhibited disrupted behavior over the following six-day period (number of errors increased on the 11th and subsequent days) while the control group that received sunflower seeds throughout the entire experiment experienced no disruptive changes in behavior (Figure 10.4). Tolman explained the rats’ change of behavior in the experimental group by means of the intervening, mediating, or organismic variable of reward expectancy: the rats expected a specific appetitive reward, and when the reward was changed to a less appealing one there was a decrement in learned behavior.

Latent learning concerns the relationship between reinforcement and the expression of learning in performance. Latent learning can be defined as hidden learning that is only revealed under specific conditions. In this instance, Tolman and Honzik (1930) employed two groups of rats in which one group received a food reinforcement at the end of running a maze while the other group received no food reinforcement after running the same maze. Over time, the first group improved significantly in both accuracy and running time, whereas the second group showed modest, although not significant, improvement on both measures. Such findings would lead one to believe that the first group had learned and the second group had not learned to traverse quickly and smoothly through the maze, although Tolman saw something else in the data. He believed that the second group of rats had learned but had no motivation to perform as there was no reward in the goal box. He decided to test this hypothesis by putting the second group of rats back in the maze with a food reinforcement, and to his excitement the previously unreinforced rats showed a dramatic decrease in the time to complete the maze and an increase in accuracy. These startling results indicated that there had been previous learning by these rats that had not been expressed in performance due to a lack of reinforcement.

Figure 10.4 Results of Tolman’s Study of Reward Expectancy

Tolman succeeded in crafting an objective behaviorism coupled with cognitive mechanisms that related more closely to everyday learning situations for animals and humans. As a result, he helped psychology “find again its mind,” which was temporarily lost during the reign of Watson’s revolution focused only on mechanistic behaviorism. The Tolmanian consideration of cognitive mechanisms with methodological behaviorism changed dramatically the field of learning and set the stage for the emergence of many subfields that would later develop into modern psychology, including motivation, neuropsychology, and mathematical theories of learning.

Mowrer approached the explanation of learning from a different perspective compared to Watson and Tolman. Throughout his undergraduate study at the University of Missouri, his doctoral study at Johns Hopkins University, where he earned his PhD in 1932, and his work with Hull at Yale University, Mowrer came to believe that there was much more to learning than merely the mechanical pairing of stimulus–response units as promoted by Watson, Lashley, and Pavlov. His S–O–R theory, unlike Tolman’s, incorporated the emotional state of the organism rather than the cognitive processes of the organism as central to the acquisition and retention of learned behaviors.

Mowrer developed a two-factor theory of learning, which explained learning as contingent upon reinforcement of instrumental responses mediated by the central nervous system coupled with emotional responses mediated by the autonomic nervous system (Mowrer, 1947).

Mowrer (1960) introduced his emotional learning theory in his book Learning Theory and Behavior. Although Mowrer spent a significant portion of his career studying the conditioning of emotions as the foundation of learning, he outlined two types of learning. One type of learning, which Mowrer referred to as sign learning, is that which arises when the conditioned stimulus precedes drive induction or drive reduction. In effect, sign learning is classical or Pavlovian conditioning. Sign learning is mediated by the autonomic nervous system and represents an automatic elicited response of the organism to a stimulus. The second type of learning that Mowrer focused upon was solution learning, which involves a voluntary or instrumental response leading to a reduction of a drive, for example, to avoid shock (aversive learning) or to seek food (appetitive learning).

Mowrer’s learning theory focused primarily upon the emotional state of the organism during learning and he studied extensively drive induction and reduction. Mowrer believed that there were two basic emotions: fear arising from drive induction, and hope arising from drive reduction, and it was these two foundational emotional states that were conditioned or linked to specific stimuli and responses, respectively. Drive induction causes an increase in a drive and gives rise to the emotional experience of fear, while drive reduction causes a diminution of drive and is associated with the emotional state of hope. An example of drive induction would be sitting in the dental chair and the dentist saying, as she starts the drilling, “this will only take a few seconds”; or at an extreme, realizing that you may not have a safe place to sleep the next night if you are unfortunately a homeless person. Drive reduction, on the other hand, is exemplified when the dentist says, “just another few seconds and I am done,” or a person finds a secure place to be safe, sleep, and rest. Drive induction promotes fear and the organism will learn instrumental responses to attenuate or reduce this fear, which is an engine for learning. For example, when a stimulus precedes an increase in drive then that stimulus serves as a cue or signal to elicit fear and appropriate avoidance behavior to the unconditioned stimulus (US). Likewise, if a stimulus precedes or signals a decrease in drive then that stimulus serves as a cue to elicit hope and the appropriate instrumental response. Mowrer’s study of positive emotions like hope has been examined along with other positive emotions more recently in a field known as “positive psychology.” We will discuss in more detail positive psychology and other contemporary S–O–R theorists after a brief look at Skinner’s work as a response (R) theorist.

B. F. Skinner was and still is a foundational force in psychology as a result of his high intelligence, creativity, and hard work. While he was growing up in a small town in Pennsylvania during the Progressive era (1890s–1920s), his mother instilled in the young Skinner the so-called Protestant ethic or the value of hard work. He went to Hamilton College in New York where he read Watson’s Behaviorism and Pavlov’s Conditioned Reflexes, both of which motivated Skinner to move to Cambridge, Massachusetts, where he studied psychology at Harvard University under E. G. Boring and earned his PhD in 1931. Skinner began his professional career at Harvard, moved to the University of Minnesota in 1936, and then was appointed as chair of the psychology department at Indiana University in 1945. After a three-year stay at Indiana he moved back to Harvard (Lattal, 1992). Skinner spent the majority of his career developing a descriptive and atheoretical system of behaviorism. At the annual meeting of the American Psychological Association in Boston, Massachusetts, a few days before his death from leukemia, Skinner pleaded with his colleagues to move closer to descriptive behaviorism or an R model of learning and away from the misguided field of cognitive psychology (Holland, 1992).

Skinner presented his theory of experimental descriptive behaviorism in his 1938 book The Behavior of Organisms: An Experimental Analysis, which described two types of conditioning: Type S and Type R. Type S conditioning is the same as Pavlovian or classical conditioning or what he called respondent conditioning, according to which the unconditioned stimulus elicits the specific unconditioned response and pairing a neutral stimulus (CS) with the UCS could eventually, after a few pairings, elicit the conditioned response by the CS alone. Skinner thought that this type of conditioning could not explain all behaviors. He thought that most behaviors are emitted by the organism and controlled by the immediate consequence of the response. Type R conditioning, also known as operant conditioning, arises when an emitted behavior occurs and the immediate consequences affect the likelihood of the repetition of that behavior. Skinner studied extensively this form of conditioning devoted entirely to the study of responses (R), in direct opposition to the stimulus–organism–response (S–O–R) models advanced by Hull, Tolman, Mowrer, and their students. Skinner’s emphasis on responses was apparent in his definition of an operant as a behavior operating on the environment thus producing a given consequence. Skinner was not interested in the study of intervening variables because they left the door open to pseudo-explanations of learned behavior while responses could be observed and measured. He believed that the subjective states of “feeling” could be expressed only through verbal reinforcement contingencies and are thus nothing more than additional behavior (Delprato & Midgley, 1992).

Skinner studied different schedules of reinforcement because they increase or decrease the rate of response, depending upon the particular schedule of reinforcement. He identified two broad categories of reinforcement schedules: continuous reinforcement (CRF) and intermittent reinforcement (IRF) schedules. CRF is used with contingent reinforcement to condition or acquire a specific operant, while IRF is used to maintain the operant behavior once learned. In CRF, reinforcement always follows the target response to be learned; when reinforcement never follows the response, the result is extinction. Within the intermittent reinforcement schedules there are two subsets, interval and ratio schedules, each of which is further divided into fixed and variable types. With an interval schedule the critical element is time. Interval schedules consist of a fixed interval schedule that delivers reinforcement for the first response after a constant interval of time, say every two minutes, and a variable interval schedule that delivers reinforcement aperiodically but, when averaged over the experimental session, at a specific interval of time, say every two minutes. Ratio schedules, on the other hand, make the number of responses, rather than the passage of time, the critical variable for delivery of reinforcement. Ratio schedules consist of a fixed ratio schedule that delivers reinforcement after a constant number of responses, say every tenth response, and a variable ratio schedule that delivers reinforcement aperiodically but, when averaged over the experimental session, after a specific number of responses, say every tenth response. According to Skinner, the types of reinforcement schedules are critical as they govern the rate of acquisition, maintenance, and extinction of all learned behaviors, with continuous schedules employed during the initial acquisition stage of learning and intermittent schedules then used to maintain the newly acquired behavior (Lattal, 1992).
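The four intermittent schedules differ only in the rule that decides whether a given response earns a reinforcer, which a short simulation can make explicit. The sketch below is illustrative rather than a description of Skinner’s apparatus: the function names, the use of uniform random draws for the variable schedules, and the convention of timing intervals from the previous reinforcer are all assumptions.

```python
import random
from typing import List


def fixed_ratio(n_responses: int, ratio: int) -> List[bool]:
    """Reinforce every `ratio`-th response (a ratio of 1 is continuous reinforcement)."""
    return [i % ratio == 0 for i in range(1, n_responses + 1)]


def variable_ratio(n_responses: int, mean_ratio: int,
                   rng: random.Random) -> List[bool]:
    """Reinforce after a varying number of responses that averages `mean_ratio`."""
    outcomes = []
    required = rng.randint(1, 2 * mean_ratio - 1)  # mean of this draw is mean_ratio
    count = 0
    for _ in range(n_responses):
        count += 1
        if count >= required:
            outcomes.append(True)
            count = 0
            required = rng.randint(1, 2 * mean_ratio - 1)
        else:
            outcomes.append(False)
    return outcomes


def fixed_interval(response_times: List[float], interval: float) -> List[bool]:
    """Reinforce the first response at least `interval` seconds after the last reinforcer."""
    outcomes = []
    last_reinforcer = 0.0
    for t in response_times:
        if t - last_reinforcer >= interval:
            outcomes.append(True)
            last_reinforcer = t
        else:
            outcomes.append(False)
    return outcomes


def variable_interval(response_times: List[float], mean_interval: float,
                      rng: random.Random) -> List[bool]:
    """Like fixed_interval, but the required wait varies around `mean_interval`."""
    outcomes = []
    last_reinforcer = 0.0
    wait = rng.uniform(0.0, 2.0 * mean_interval)
    for t in response_times:
        if t - last_reinforcer >= wait:
            outcomes.append(True)
            last_reinforcer = t
            wait = rng.uniform(0.0, 2.0 * mean_interval)
        else:
            outcomes.append(False)
    return outcomes


if __name__ == "__main__":
    rng = random.Random(0)
    times = [float(t) for t in range(1, 601)]  # one response per second for 10 minutes
    print("FR 10 reinforcers:", sum(fixed_ratio(len(times), 10)))
    print("VR 10 reinforcers:", sum(variable_ratio(len(times), 10, rng)))
    print("FI 120 s reinforcers:", sum(fixed_interval(times, 120.0)))
    print("VI 120 s reinforcers:", sum(variable_interval(times, 120.0, rng)))
```

On the ratio schedules the number of reinforcers earned depends on how many responses are made, whereas on the interval schedules responding faster than the interval allows earns nothing extra.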

Skinner thought that psychology should have two main goals: the prediction of behavior and the control of behavior through the experimental analysis of behavior. In an effort to achieve these goals he invented the operant chamber for bar pressing with rats (Skinner, 1956), and eventually light pecking by pigeons (Skinner, 1960). He believed that these two inventions, which would later be referred to as the Skinner Box, would facilitate progress toward the above two goals. According to Skinner, these learning environments allowed the identification of laws determining first animal and ultimately human learning because, for example, the target response is relatively easy, is not crucial for the organism’s survival, and is unambiguous. For example, a rat will on average press a bar about six times per hour in the chamber, which is an ideal baseline for operant conditioning. Rate of response was the key measurement (dependent variable) in the process of operant conditioning. For example, to document that learning occurred the rate of responding must increase over time, while for extinction of the learned behavior the rate of response must approach or equal zero over time. Skinner observed that after a few reinforced trials the rate of operant responding, bar pressing or pecking at an illuminated circular disk, is extremely rapid, which spawned the development of the law of acquisition. This law stated that if the occurrence of an operant is followed by a reinforcing stimulus, the rate of response increases, exhibiting learning (Lattal, 1992).

Finally, Skinner’s interests turned toward an integration of his earlier love for writing and his then current passion for the study of behavior. As a college student, he aspired to be a writer and sent copies of his work to Robert Frost, receiving much praise for his pieces; however, he changed fields after feeling as though freelance writing was a dead-end career choice. As his interests in learning moved more to applied research he desired to create a technology of behavior based on his extensive findings regarding the impact of reinforcement and especially schedules of reinforcement for modifying behaviors, believing that a society could be engineered thus creating a utopian community. Throughout his career his interests continued to evolve causing him to branch out into studies of language, parenting, education, and military applications of learning principles. However, his most notable works in social engineering focused upon applied behavioral technology and included Walden Two and Beyond Freedom and Dignity in which he argues for the translation of his behavioral research findings to individual and societal living situations (Skinner, 1948, 1971). He described the present order of living where it is assumed that the person will rationally choose between right and wrong by means of directive laws. However, Skinner argues that because the law stresses individual autonomy and freedom rather than survival of the human species, the laws of our society need to be reoriented to stress the survival of the species rather than focus solely upon the freedom and choices of the individual. The reorientation of the social value system would arise from education thereby creating a society in which basic materialism, arts, and the sciences necessary for a decent life would flourish while factors such as individualism, rampant technology, and individual greed would be reduced by the application of the principles of operant conditioning.

Martin Seligman is a foundational leader in the field of contemporary psychology. His work, like that of many other modern psychologists, does not fit neatly into the category of neobehaviorism, although it evolved from neobehaviorism and retains an S–O–R model of learning. Seligman (1970) found that not all behaviors could be conditioned equally well through either Pavlovian or operant conditioning; rather, animals seemed to learn best those associations that they were biologically prepared to learn.

Seligman (1975) investigated the types of learning that animals were biologically prepared to acquire, one of which he termed learned helplessness. In his studies, he observed that when outcomes appeared to be independent of the animals’ behaviors, many of the lab animals learned to be helpless in that situation. Thus, for example, as applied to learning in the classroom, suppose one worked extra hard for a semester by going to every class and every review session and by completing all of the assigned readings, and even the supplemental readings, to meet one’s goal of earning a 4.0 grade point average (GPA). If at the end of the semester one received one’s usual 3.2 GPA, one may learn rather readily that there is probably no systematic connection between the extra behaviors one engaged in and a higher grade, and that one is thus helpless. This type of learning, learned helplessness, has been applied to the study of depression and has yielded effective clinical interventions that minimize noncontingent reinforcement conditions.

On a more positive note, Seligman is also well known for his work on learned optimism (Seligman, 1991). He has argued that psychology in recent years is finally turning to the systematic study of positive emotions such as hope, which have been left out of psychology for too long. Seligman has focused upon the study of explanatory style, which is an individual’s interpretation of events and of the naturally occurring reinforcement schedules in daily life, to explain the origins of learned optimism and pessimism. Learned optimism involves the partial reinforcement extinction effect (PREE), a phenomenon tied to the previously discussed continuous reinforcement (CRF) and partial reinforcement (PRF) schedules. As mentioned before, behaviors acquired under CRF extinguish more readily than behaviors acquired under PRF, which makes CRF particularly useful when previously reinforced behaviors of animals are to be extinguished. For humans, behavior is determined not only by the reinforcement schedule but also by the relationship between the reinforcement schedule and explanatory style. For example, a person who thinks that a naturally occurring reinforcement schedule is an extinction schedule without any further reinforcement is likely to give up immediately in the absence of reinforcement, while a person who thinks the absence of reinforcement is temporary continues to respond. The optimist is inclined to take a chance and persist somewhat longer at a task than a pessimist and, most likely but not always, gains access to reinforcement.

Explanatory style as a concept can be broken down into three important components: permanence, pervasiveness, and personalization. The first dimension, permanence, holds that those who believe that the cause of bad events is permanent give up more easily than those who are more optimistic and believe that the cause of bad events is only temporary. In a permanent or pessimistic explanatory style, one might say, “You are always mad at me” or “I always do poorly in school.” An optimistic explanatory style, by contrast, might sound like the following: “You get mad at me when I don’t respect your space” or “I don’t do well in school when my priorities lie elsewhere.” Here setbacks or negative outcomes are stated specifically and focus on actions. The take-home message concerning the two types of explanatory style in this category is that if one tends to use words such as always or never, one most likely has a pessimistic style, whereas if one tends to use words such as sometimes or lately, blaming bad events on temporary conditions, one has an optimistic explanatory style.
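The take-home rule for permanence can be illustrated with a toy sketch that tallies the marker words named above. The function name, the word lists as a scoring device, and the "unclear" fallback are assumptions made for illustration; this is a crude keyword count, not a validated instrument for assessing explanatory style.

```python
import re

# Marker words taken from the text; treating them as a scoring key is an assumption.
PERMANENT_MARKERS = {"always", "never"}
TEMPORARY_MARKERS = {"sometimes", "lately"}


def classify_permanence(statement: str) -> str:
    """Label a statement by counting permanent versus temporary marker words."""
    words = re.findall(r"[a-z']+", statement.lower())
    permanent = sum(word in PERMANENT_MARKERS for word in words)
    temporary = sum(word in TEMPORARY_MARKERS for word in words)
    if permanent > temporary:
        return "pessimistic"
    if temporary > permanent:
        return "optimistic"
    return "unclear"  # no markers, or an equal number of each


for sentence in (
    "You are always mad at me",
    "Lately I have not done well in school",
    "You get mad at me when I don't respect your space",
):
    print(sentence, "->", classify_permanence(sentence))
```

The third sentence contains no marker word, so the sketch returns "unclear" even though the text treats it as optimistic; recognizing action-specific explanations would require more than a word count.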

The second component of explanatory style, pervasiveness, is further divided into two dimensions, namely, specific and universal pervasiveness. Pervasiveness in general is concerned with space or range of affected area as opposed to time. Universal explanations of failures tend to yield pessimism, and the person is most likely to give up on everything when something in one area goes awry. However, those persons who construct specific explanations of setbacks in one area of their lives and see them as temporary will most likely tend to carry on with the rest of their lives, being challenged or incapacitated in only one area, and will tend to be optimistic.

Personalization, the third and final category of explanatory style, can also be broken down into two dimensions, namely, internal versus external causation of a setback. The pessimistic assessment of blaming oneself (internalization) when bad things happen tends to lead to low self-esteem, while the optimistic view of realistically attributing negative events to other people or circumstances (externalization) does not lead to low self-esteem. Imagine the impact of this attribution process when practiced regularly: it means the difference between a self-image of being increasingly worthless, talentless, and unlovable and a self-image of enhanced worthiness, talent, and lovableness.

Although personalization controls how one feels about oneself, the other two factors, permanence and pervasiveness, control what one does: how long an explanation of positive or negative events endures and whether it extends across specific or universal dimensions. Seligman’s view was that helplessness, which in this discussion can be thought of as being similar to pessimism, and optimism are both behaviors with biological predispositions to be learned. Seligman thought that by studying positive concepts, such as hope, in the laboratory, the field of psychology could be expanded to focus not only upon disheartening subjects such as depression and suicide but also, in part, upon uplifting features of the human spirit such as hope and love.

Professor Albert Bandura, another contemporary S–O–R theorist and foundational psychologist, graduated from the University of Iowa in 1952 with a PhD in clinical psychology. His first and last full-time position, which he received only a year after his graduation, was and is still at Stanford University. Bandura has become noted for his opposition to radical behaviorism, reflected in his emphasis upon cognitive factors as important controlling influences on human behavior. His primary interests focus on the three-way interaction among cognition, behavior, and the environment as the determinants of learned behavior and personality development.

Bandura has spent the majority of his career studying social learning, which involves observational learning. Social learning combines the theories of cognitive and behavioral psychologies anchored upon the three-way interaction of cognitive processes, the environment, and behavior as the determinants of learning (Bandura, 1986). As a result of the inclusive nature of social learning, it is often considered as the most integrative explanation of learning, and has been applied in clinical settings to address a variety of psychological problems.

Social learning focuses upon the operation of four psychological processes essential for understanding learning, namely, attention, retention, reproduction, and motivation. In the first step, attention, an individual notices something in his or her environment and thus focuses on features of particular behaviors exhibited by the model. This stage can vary depending on the characteristics of the observer, the modeled behavior, and competing stimuli. For example, an individual learning to shoot a basketball may pay attention to the trajectory used by the model or instructor (i.e., shooting directly at the hoop or using the backboard). The second step in the process of social learning, according to Bandura, is retention, in which an individual remembers the modeled behaviors observed during the attention phase. In this step, imagery and language aid retention by facilitating the recall of mental images and verbal cues associated with the behavior to be learned. For example, the individual who paid attention to the trajectory of a basketball shot in the first step now remembers a mental image and words associated with that basketball shot. Reproduction is the third step in Bandura’s social learning model, in which an individual produces the behavior that was modeled. This step involves the conversion of a symbolic representation of the modeled behavior into actual performance of that behavior. Thus, after observing and retaining information about the trajectory of the basketball shot, the individual produces the behavior and shoots the basketball. The fourth and final step in the social learning process is motivation. Here, the environment presents a consequence that changes the probability that the targeted behavior will be emitted again. Consequently, the key determinant of subsequent attempts to shoot basketballs (and, one hopes, to make a basket or score points) is motivation.

Bandura’s research indicates clearly that the process of modeling plays a significant role in the formation of thoughts, feelings, and behaviors. An extremely important feature of Bandura’s social learning theory is that behavior that can be learned through actual execution of the target behavior during the acquisition phase can also be learned through modeling. Importantly, Bandura has argued that exposure to a model performing a target behavior can yield learning without reinforcement. This is a bold position because it directly opposes the principles behind Hullian, Skinnerian, and Pavlovian models of learning, not to mention the fourth step of Bandura’s learning theory (i.e., motivation). Bandura does not hold that reinforcement and/or punishment is required for learning; however, he recognizes that the presence of reinforcement or punishment changes the speed or rate at which the modeled behavior is learned. Bandura’s theory of social learning provides a framework for understanding learning that arises in the absence of explicit rewards or punishment, especially the acquisition of learned behaviors by children.

Bandura’s studies of modeling are perhaps his best-known work (Bandura, 1973). In a series of studies known as the Bobo doll studies, Bandura found that children would change their behavior without directly experiencing reinforcement, simply by watching others perform a behavior that the observer attends to in a given situation. The Bobo doll is an inflated doll approximately four feet high and weighted at the bottom so that, when struck, it falls over and then returns to the upright position. In these experiments, children were asked to watch a video in which a child behaved aggressively toward a Bobo doll. The participant children were randomly assigned to one of three groups, with each group viewing a different ending to a brief video of the model acting aggressively toward the doll. The first group saw the child in the video get praised for his aggressive behavior, the second group saw the child in the video get punished for hitting the Bobo doll by having to sit in a corner without any toys, and the third group (the control) saw the child receive no response to aggressive behavior toward the Bobo doll. After viewing the video, the participant children were allowed to play with toys, including the Bobo doll, and their behaviors, especially acts of aggression, were recorded. The results indicated that the children who watched the video in which the child was rewarded for aggressive behaviors emitted significantly more acts of aggression than did the children who saw the video in which there were no consequences associated with the aggressive behavior.

Bandura extended his social learning theory, which arose originally from the Bobo doll studies, by introducing the concept of perceived self-efficacy (PSE) to the field of psychology (Bandura, 1982). He continued to research and analyze the concept extensively, as reflected in his important work titled Social Foundations of Thought and Action (Bandura, 1986). Perceived self-efficacy is defined by Bandura as a person’s judgment of her or his capabilities to execute a specific task.