When we give a char variable the value 'A', what exactly is that value?

We’ve already alluded to the fact that there is some kind of encoding going on—remember that we mentioned the IBM-derived Latin1 scheme when we were discussing escaped character literals.

Computers work with binary values, typically made up of one or more bytes, and we clearly need some kind of mapping between the binary values in these bytes and the characters we want them to represent. We’ve all got to agree on what the binary values mean, or we can’t exchange information. To that end, the American Standards Association convened a committee in the 1960s which defined (and then redefined, tweaked, and generally improved over subsequent decades) a standard called ASCII (pronounced ass[22]-key): the American Standard Code for Information Interchange.

This defined 128 characters, represented using 7 bits of a byte. The first 32 values, 0x00–0x1F, and also the very last value, 0x7F, are called control characters, and include things like the tab character (0x09), backspace (0x08), bell (0x07), and delete (0x7F).

The rest are called the printable characters, and include space (0x20), which is not a control character, but a “blank” printable character; all the upper and lowercase letters; and most of the punctuation marks in common use in English.
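To make the opening question concrete, here is a minimal sketch that prints the numeric values behind a few char literals. The class name CharValueDemo and the helper CodeOf are illustrative names, not part of the framework.

```csharp
using System;

static class CharValueDemo
{
    // Hypothetical helper: returns the numeric value stored in a char.
    public static int CodeOf(char c)
    {
        return (int)c;
    }

    static void Main()
    {
        Console.WriteLine(CodeOf('A'));              // 65 (0x41)
        Console.WriteLine(CodeOf('\t'));             // 9: the tab control character
        Console.WriteLine(CodeOf('\b'));             // 8: backspace
        Console.WriteLine(char.IsControl('\u007F')); // True: delete is a control character
        Console.WriteLine(char.IsControl(' '));      // False: space is a printable character
    }
}
```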

This was a start, but it rapidly became apparent that ASCII did not have enough characters to deal with a lot of the common Western (“Latin”) scripts; the accented characters in French, or Spanish punctuation marks, for example. It also lacked common characters like the international copyright symbol ©, or the registered trademark symbol ®.

Since ASCII uses only 7 bits, and most computers use 8-bit bytes, the obvious solution was to put the necessary characters into byte values not used by ASCII. Unfortunately, different mappings between byte values and characters emerged in different countries. These mappings are called code pages. If you bought a PC in, say, Norway, it would use a code page that offered all of the characters required to write in Norwegian, but if you tried to view the same file on a PC bought in Greece, the non-ASCII characters would look like gibberish because the PC would be configured with a Greek code page. IBM defined Latin-1 (much later updated and standardized as ISO-8859-1) as a single code page that provides most of what is required by most of the European languages that use Latin letters. Microsoft defined the Windows-1252 code page, which is mostly (but not entirely) compatible. Apple defined the Mac-Roman encoding, which has the same goal, but is completely different again.

All of these encodings were designed to provide a single solution for Western European scripts, but they all fall short in various different ways—Dutch, for example, is missing some of its diphthongs. This is largely because 8 bits just isn’t enough to cover all possible characters in all international languages. Chinese alone has well over 100,000 characters.

In the late 1980s and early 1990s, standardization efforts were underway to define an internationally acceptable encoding that would allow characters from all scripts to be represented in a reasonably consistent manner. This became the Unicode standard, and is the one that is in use in the .NET Framework.

Unicode is a complex standard, as might be expected from something that is designed to deal with all current (and past) human languages, and have sufficient flexibility to deal with most conceivable future changes, too. It uses numbers to define more than 1 million code points in a codespace. A code point is roughly analogous to a character in other encodings, including formal definitions of special categories such as graphic characters, format characters, and control characters. It’s possible to represent a sequence of code points as a sequence of 16-bit values.

You might be wondering how we can handle more than 1 million characters, when there are only 65,536 different values for 16-bit numbers. The answer is that we can team up pairs of characters. The first is called a high surrogate; if this is then followed by a low surrogate, it defines a character outside the normal 16-bit range.
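The following sketch shows a surrogate pair in practice, using U+1D11E (the musical G clef symbol) as an arbitrary example of a code point outside the 16-bit range. The class name SurrogateDemo and the helper FromCodePoint are illustrative names.

```csharp
using System;

static class SurrogateDemo
{
    // Hypothetical helper: converts a code point to its UTF-16 form (one or two chars).
    public static string FromCodePoint(int codePoint)
    {
        return char.ConvertFromUtf32(codePoint);
    }

    static void Main()
    {
        string clef = FromCodePoint(0x1D11E); // MUSICAL SYMBOL G CLEF

        Console.WriteLine(clef.Length);                   // 2: one code point, two chars
        Console.WriteLine(char.IsHighSurrogate(clef[0])); // True
        Console.WriteLine(char.IsLowSurrogate(clef[1]));  // True
        Console.WriteLine("{0:X4} {1:X4}", (int)clef[0], (int)clef[1]); // D834 DD1E

        // Recombine the pair into the original code point.
        Console.WriteLine("{0:X}", char.ConvertToUtf32(clef, 0));       // 1D11E
    }
}
```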

Unicode also defines complex ways of combining characters. Characters and their diacritical marks can appear consecutively in a string, with the intention that they become combined in their ultimate visual representation; or you can use multiple characters to define special ligatures (characters that are joined together, like Æ).
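As a rough illustration of combining characters, the sketch below builds an e followed by a combining acute accent and counts how many chars and how many displayed text elements it contains. It assumes the StringInfo class from System.Globalization; the class name CombiningDemo and the helper TextElementCount are illustrative names.

```csharp
using System;
using System.Globalization;

static class CombiningDemo
{
    // Hypothetical helper: counts text elements (base character plus any combining marks).
    public static int TextElementCount(string s)
    {
        return new StringInfo(s).LengthInTextElements;
    }

    static void Main()
    {
        string decomposed = "e\u0301"; // 'e' followed by COMBINING ACUTE ACCENT
        string precomposed = "\u00E9"; // the single code point for e-acute

        Console.WriteLine(decomposed.Length);            // 2 chars in the string
        Console.WriteLine(TextElementCount(decomposed)); // 1 text element when displayed
        Console.WriteLine(decomposed == precomposed);    // False: == compares the chars themselves
    }
}
```

The two strings look the same on screen but contain different char sequences, which is worth remembering when comparing text from different sources.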

The .NET Framework Char, then, is a 16-bit value that represents a Unicode code point, or one half of a surrogate pair for code points outside the 16-bit range.

Note

This encoding is called UTF-16, and is the common in-memory representation for strings in most modern platforms. Throughout the Windows API, this format is referred to as “Unicode”. This is somewhat imprecise, as there are numerous different Unicode formats. But since none were in widespread use at the time Windows first introduced Unicode support, Microsoft apparently felt that “UTF-16” was an unnecessarily confusing name. But in general, when you see “Unicode” in either Windows or the .NET Framework, it means UTF-16.

From that, we can see that those IsNumber, IsLetter, IsHighSurrogate, and IsLowSurrogate methods correspond to tests for particular Unicode categories.
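One way to see the categories behind these tests is to ask for them directly. This sketch assumes the char.GetUnicodeCategory method and the UnicodeCategory enumeration; the class name CategoryDemo and the helper CategoryOf are illustrative names.

```csharp
using System;
using System.Globalization;

static class CategoryDemo
{
    // Hypothetical helper: returns the Unicode general category of a char.
    public static UnicodeCategory CategoryOf(char c)
    {
        return char.GetUnicodeCategory(c);
    }

    static void Main()
    {
        Console.WriteLine(CategoryOf('A'));      // UppercaseLetter
        Console.WriteLine(CategoryOf('7'));      // DecimalDigitNumber
        Console.WriteLine(CategoryOf('\u00BD')); // OtherNumber (the one-half fraction)
        Console.WriteLine(CategoryOf('\uD834')); // Surrogate

        // IsNumber accepts any of the number categories; IsDigit only decimal digits.
        Console.WriteLine(char.IsNumber('\u00BD')); // True
        Console.WriteLine(char.IsDigit('\u00BD'));  // False
    }
}
```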

You may ask: why do we need to know about encodings when “it just works”? That’s all very well for our in-memory representation of a string, but what happens when we save some text to disk, encrypt it, or send it across the Web as HTML? We may not want the 16-bit Unicode encoding we’ve got in memory, but something else. These encodings are really information interchange standards, as much as they are internal choices about how we represent strings.

Most XML documents, for example, are encoded using the UTF-8 encoding. This is an encoding that lets us represent any character in the Unicode codespace, and is compatible with ASCII for the characters in the 7-bit set. It achieves this by using variable-length characters: a single byte for the ASCII range, and two to four bytes for the rest (the original design allowed sequences of up to six bytes, but the current standard stops at four). It takes advantage of special marker values (with the high bit set) to indicate the start of a multibyte sequence.
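The variable-length nature of UTF-8 can be observed by counting bytes for a few sample code points. This is a minimal sketch; the class name Utf8LengthDemo and the helper Utf8Length are illustrative names, and the sample characters are arbitrary choices.

```csharp
using System;
using System.Text;

static class Utf8LengthDemo
{
    // Hypothetical helper: number of bytes UTF-8 needs for the given text.
    public static int Utf8Length(string text)
    {
        return Encoding.UTF8.GetByteCount(text);
    }

    static void Main()
    {
        Console.WriteLine(Utf8Length("A"));      // 1: ASCII range
        Console.WriteLine(Utf8Length("\u00C9")); // 2: capital E with acute accent
        Console.WriteLine(Utf8Length("\u20AC")); // 3: the euro sign
        Console.WriteLine(Utf8Length(char.ConvertFromUtf32(0x1D11E))); // 4: outside the 16-bit range
    }
}
```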

Warning

While UTF-8 and ASCII are compatible in the sense that any file that contains ASCII text happens to be a valid UTF-8 file (and has the same meaning whether you interpret it as ASCII or UTF-8), there are two caveats. First, a lot of people are sloppy with their terminology and will describe any old 8-bit text encoding as ASCII, which is wrong. ASCII is strictly 7-bit. Latin1 text that uses characters from the top-bit-set range is not valid UTF-8. Second, it’s possible to construct a valid UTF-8 file that only uses characters from the 7-bit range, and yet is not a valid ASCII file. (For example, if you save a file from Windows Notepad as UTF-8, it will not be valid ASCII.) That’s because UTF-8 is allowed to contain certain non-ASCII features. One is the so-called BOM (Byte Order Mark), which is a sequence of bytes at the start of the file unambiguously representing the file as UTF-8. (The bytes are 0xEF, 0xBB, 0xBF.) The BOM is optional, but Notepad always adds it if you save as UTF-8, which is likely to confuse any program that only understands how to process ASCII.
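If you need to check whether some bytes begin with the UTF-8 BOM, a sketch along these lines might do. It relies on Encoding.UTF8.GetPreamble to supply the three BOM bytes; the class name BomDemo and the helper StartsWithUtf8Bom are illustrative names.

```csharp
using System;
using System.Text;

static class BomDemo
{
    // Hypothetical helper: true if the data begins with the UTF-8 BOM (EF BB BF).
    public static bool StartsWithUtf8Bom(byte[] data)
    {
        byte[] bom = Encoding.UTF8.GetPreamble();
        if (data.Length < bom.Length)
        {
            return false;
        }
        for (int i = 0; i < bom.Length; i++)
        {
            if (data[i] != bom[i])
            {
                return false;
            }
        }
        return true;
    }

    static void Main()
    {
        Console.WriteLine(BitConverter.ToString(Encoding.UTF8.GetPreamble())); // EF-BB-BF

        // GetBytes encodes only the text; it does not prepend the BOM.
        byte[] plain = Encoding.UTF8.GetBytes("Listen up!");
        Console.WriteLine(StartsWithUtf8Bom(plain));   // False

        byte[] withBom = new byte[] { 0xEF, 0xBB, 0xBF, 76, 105 };
        Console.WriteLine(StartsWithUtf8Bom(withBom)); // True
    }
}
```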

We’re not going to look at any more details of these specific encodings. If you’re writing an encoder or decoder by hand, you’ll want to refer to the relevant specifications and vast bodies of work on their interpretation.

Fortunately, for the rest of us mortals, the .NET Framework provides us with standard implementations of most of the encodings, so we can convert between the different representations fairly easily.

Encoding is the process of turning a text string into a sequence of bytes. Conversely, decoding is the process of turning a byte sequence into a text string. The .NET APIs for encoding and decoding represent these sequences as byte arrays.

Let’s look at the code in Example 10-80 that illustrates this. First, we’ll encode some text using the UTF-8 and ASCII encodings, and write the byte values we see to the console.

Example 10-80. Encoding text

    static void Main(string[] args)
    {
        string listenUp = "Listen up!";
        byte[] utf8Bytes = Encoding.UTF8.GetBytes(listenUp);
        byte[] asciiBytes = Encoding.ASCII.GetBytes(listenUp);

        Console.WriteLine("UTF-8");
        Console.WriteLine("-----");
        foreach (var encodedByte in utf8Bytes)
        {
            Console.Write(encodedByte);
            Console.Write(" ");
        }

        Console.WriteLine();
        Console.WriteLine();

        Console.WriteLine("ASCII");
        Console.WriteLine("-----");
        foreach (var encodedByte in asciiBytes)
        {
            Console.Write(encodedByte);
            Console.Write(" ");
        }

        Console.ReadKey();
    }

The framework provides us with the Encoding class. This has a set of static properties that provide us with specific instances of an Encoding object for a particular scheme. In this case, we’re using UTF8 and ASCII, which actually return instances of UTF8Encoding and ASCIIEncoding, respectively.

Note

Under normal circumstances, you do not need to know the actual type of these instances; you can just talk to the object returned through its Encoding base class.

GetBytes returns a byte array containing the contents of the string, encoded using the relevant scheme.

If we build and run this code, we see the following output:

    UTF-8
    -----
    76 105 115 116 101 110 32 117 112 33

    ASCII
    -----
    76 105 115 116 101 110 32 117 112 33

Notice that our encodings are identical in this case, just as promised. For basic Latin characters, UTF-8 and ASCII are compatible. (Unlike Notepad, the .NET UTF8Encoding does not choose to add a BOM by default, so unless you use characters outside the ASCII range this will in fact produce files that can be understood by anything that knows how to process ASCII.)

Let’s make a quick change to the string we’re trying to encode, and translate it into French. Replace the first line inside the Main method with Example 10-81, which sets the string to "Écoute-moi!". Notice that we’ve got a capital E with an acute accent at the beginning.

If you don’t have a French keyboard and you’re wondering how to insert that E-acute character, there are a number of ways to do it.

If you know the decimal representation of the Unicode code point, you can hold down the Alt key and type the number on the numeric keypad (and then release the Alt key). So Alt-0163 will insert the symbol for the UK currency, £, and Alt-0201 produces É. This doesn’t work for the normal number keys, though, so if you don’t have a numeric keypad (most laptops don’t), this isn’t much help.

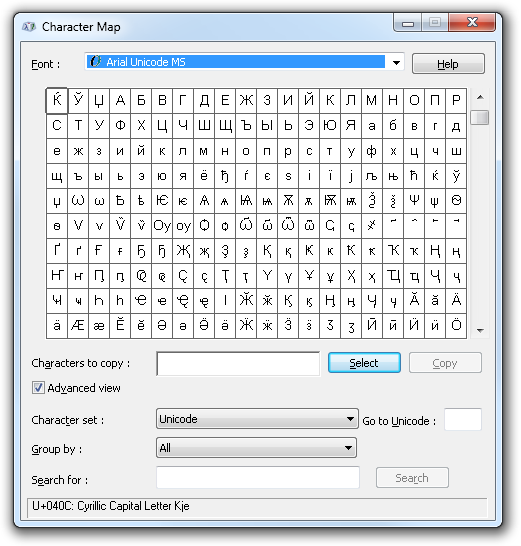

Possibly the most fun, though, is to run the charmap.exe application. The program icon for it in the Start menu is buried pretty deeply, so it’s easier to type charmap into a command prompt, the Start→Run box, or the Windows 7 Start menu search box. This is very instructive, and allows you to explore the various different character sets and (if you check the “Advanced view” box) encodings. You can see an image of it in Figure 10-2.

Alternatively, you could just escape the character: the string literal "\u00C9coute-moi!" will produce the same result. And this has the advantage of not requiring non-ASCII values in your source file. Visual Studio is perfectly able to edit various file encodings, including UTF-8, so you can put non-ASCII characters in strings without having to escape them, and you can even use them in identifiers. But some text-oriented tools are not so flexible, so there may be advantages in keeping your source code purely ASCII.
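As a quick check that an escaped literal really does produce the accented character, consider this small sketch. It assumes the French string used in this example's output, and the class name EscapeDemo and the helper FirstCodeUnit are illustrative names.

```csharp
using System;

static class EscapeDemo
{
    // Hypothetical helper: numeric value of the first char in a string.
    public static int FirstCodeUnit(string text)
    {
        return (int)text[0];
    }

    static void Main()
    {
        // The source line below contains only ASCII characters.
        string escaped = "\u00C9coute-moi!";

        Console.WriteLine(FirstCodeUnit(escaped)); // 201, the code point for capital E with acute
        Console.WriteLine(escaped.Length);         // 11: the escape sequence is a single char
    }
}
```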

Now, when we run again, we get the following output:

    UTF-8
    -----
    195 137 99 111 117 116 101 45 109 111 105 33

    ASCII
    -----
    63 99 111 117 116 101 45 109 111 105 33

We’ve quite clearly not got the same output in each case. The UTF-8 case starts with 195, 137, while the ASCII starts with 63. After this preamble, they’re again identical.

So, let’s try decoding those two byte arrays back into strings, and see what happens.

Insert the code in Example 10-82 before the call to Console.ReadKey.

Example 10-82. Decoding text

    string decodedUtf8 = Encoding.UTF8.GetString(utf8Bytes);
    string decodedAscii = Encoding.ASCII.GetString(asciiBytes);

    Console.WriteLine();
    Console.WriteLine();
    Console.WriteLine("Decoded UTF-8");
    Console.WriteLine("-------------");
    Console.WriteLine(decodedUtf8);

    Console.WriteLine();
    Console.WriteLine();
    Console.WriteLine("Decoded ASCII");
    Console.WriteLine("-------------");
    Console.WriteLine(decodedAscii);

We’re now using the GetString method on our Encoding objects, to decode the byte array back into a string. Here’s the output:

    UTF-8
    -----
    195 137 99 111 117 116 101 45 109 111 105 33

    ASCII
    -----
    63 99 111 117 116 101 45 109 111 105 33

    Decoded UTF-8
    -------------
    Écoute-moi!


    Decoded ASCII
    -------------
    ?coute-moi!

The UTF-8 bytes have decoded back to our original string. This is because the UTF-8 encoding supports the E-acute character, and it does so by inserting two bytes into the array: 195 137.

On the other hand, our ASCII bytes have been decoded and we see that the first character has become a question mark.

If you look at the encoded bytes, you’ll see that the first byte is 63, which (if you look it up in an ASCII table somewhere) corresponds to the question mark character. So this isn’t the fault of the decoder. The encoder, when faced with a character it didn’t understand, inserted a question mark.

Warning

So, you need to be careful that any encoding you choose is capable of supporting the characters you are using (or be prepared for the information loss if it doesn’t).
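If silent substitution is not acceptable, one option is to ask for an encoding that throws instead of inserting a question mark. The sketch below assumes the Encoding.GetEncoding overload that accepts fallback objects; the class name StrictAsciiDemo and the helper CanEncodeAsAscii are illustrative names.

```csharp
using System;
using System.Text;

static class StrictAsciiDemo
{
    // Hypothetical helper: true if every character in the text survives ASCII encoding.
    public static bool CanEncodeAsAscii(string text)
    {
        // An ASCII encoding configured to throw rather than substitute '?'.
        Encoding strictAscii = Encoding.GetEncoding(
            "us-ascii",
            new EncoderExceptionFallback(),
            new DecoderExceptionFallback());
        try
        {
            strictAscii.GetBytes(text);
            return true;
        }
        catch (EncoderFallbackException)
        {
            return false;
        }
    }

    static void Main()
    {
        Console.WriteLine(CanEncodeAsAscii("Listen up!"));       // True
        Console.WriteLine(CanEncodeAsAscii("\u00C9coute-moi!")); // False: E-acute is not ASCII
    }
}
```

Whether to throw or substitute depends on the application; throwing at least makes the information loss visible at the point where it happens.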

OK, we’ve seen an example of the one-byte-per-character ASCII representation, and the at-least-one-byte-per-character UTF-8 representation. Let’s have a look at the underlying at-least-two-bytes-per-character UTF-16 encoding that the framework uses internally—Example 10-83 uses this.

Example 10-83. Using UTF-16 encoding

    static void Main(string[] args)
    {
        string listenUpFR = "Écoute-moi!";
        byte[] utf16Bytes = Encoding.Unicode.GetBytes(listenUpFR);

        Console.WriteLine("UTF-16");
        Console.WriteLine("-----");
        foreach (var encodedByte in utf16Bytes)
        {
            Console.Write(encodedByte);
            Console.Write(" ");
        }

        Console.ReadKey();
    }

Notice that we’re using the Unicode encoding this time.

If we compile and run, we see the following output:

    UTF-16
    -----
    201 0 99 0 111 0 117 0 116 0 101 0 45 0 109 0 111 0 105 0 33 0

It is interesting to compare this with the ASCII output we had before:

    ASCII
    -----
    63 99 111 117 116 101 45 109 111 105 33

The first character is different, because UTF-16 can encode the E-acute correctly; thereafter, every second byte in the UTF-16 array is zero, and the byte before it matches the corresponding ASCII value. As we said earlier, the Unicode standard is highly compatible with ASCII: for characters in the ASCII range, each 16-bit value (i.e., pair of bytes) has the same numeric value as the equivalent 7-bit value in the ASCII encoding.

There’s one more note to make about this byte array, which has to do with the order of the bytes. This is easier to see if we first update the program to show the values in hex, using the formatting function we learned about earlier, as Example 10-84 shows.

Example 10-84. Showing byte values of encoded text

    static void Main(string[] args)
    {
        string listenUpFR = "Écoute-moi!";
        byte[] utf16Bytes = Encoding.Unicode.GetBytes(listenUpFR);

        Console.WriteLine("UTF-16");
        Console.WriteLine("-----");
        foreach (var encodedByte in utf16Bytes)
        {
            Console.Write(string.Format("{0:X2}", encodedByte));
            Console.Write(" ");
        }

        Console.ReadKey();
    }

If we run again, we now see our bytes written out in hex format:

    UTF-16
    -----
    C9 00 63 00 6F 00 75 00 74 00 65 00 2D 00 6D 00 6F 00 69 00 21 00

But remember that each UTF-16 code point is represented by a 16-bit value, so we need to think of each pair of bytes as a character. So, our second character is 63 00. This is the 16-bit hex value 0x0063, represented in the little-endian form. That means we get the least-significant byte (LSB) first, followed by the most-significant byte (MSB).

For good (but now largely historical) reasons of engineering efficiency, the Intel x86 family is natively a little-endian architecture. It always expects the LSB followed by the MSB, so the default Unicode encoding is little-endian. On the other hand, platforms like the 680x0 series used in “classic” Macs are big-endian—they expect the MSB, followed by the LSB. Some chip architectures (like the later versions of the ARM chip used in most phones) can even be switched between flavors!

Note

Another historical note: one of your authors is big-endian (he used the Z80 and 68000 when he was a baby developer) and the other is little-endian (he used the 6502, and early pre-endian-switching versions of the ARM when he was growing up).

Consequently, one of us has felt like every memory dump he’s looked at since about 1995 has been “backwards”. The other takes the contrarian position that it’s so-called “normal” numbers that are written backwards. So take a deep breath and count to 01.

Should you need to communicate with something that expects its UTF-16 in a big-endian byte array, you can ask for it. Replace the line in Example 10-84 that initializes the utf16Bytes variable with the code in Example 10-85.

Example 10-85. Using big-endian UTF-16

byte[] utf16Bytes = Encoding.BigEndianUnicode.GetBytes(listenUpFR);

As you might expect, we get the following output:

    UTF-16
    -----
    00 C9 00 63 00 6F 00 75 00 74 00 65 00 2D 00 6D 00 6F 00 69 00 21
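To see why the byte order matters to whoever reads the data, this sketch encodes a single character both ways and then decodes the big-endian bytes with the wrong encoding. The class name EndianDemo and the helper ToHex are illustrative names.

```csharp
using System;
using System.Text;

static class EndianDemo
{
    // Hypothetical helper: formats bytes as space-separated hex pairs.
    public static string ToHex(byte[] bytes)
    {
        return BitConverter.ToString(bytes).Replace("-", " ");
    }

    static void Main()
    {
        byte[] little = Encoding.Unicode.GetBytes("c");
        byte[] big = Encoding.BigEndianUnicode.GetBytes("c");

        Console.WriteLine(ToHex(little)); // 63 00
        Console.WriteLine(ToHex(big));    // 00 63

        // Decoding with the matching encoding recovers the text.
        Console.WriteLine(Encoding.BigEndianUnicode.GetString(big) == "c"); // True

        // Decoding with the wrong byte order yields a different character entirely.
        string wrong = Encoding.Unicode.GetString(big);
        Console.WriteLine("{0:X4}", (int)wrong[0]); // 6300
    }
}
```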

And let’s try it once more, but with Arabic text, as Example 10-86 shows.

Example 10-86. Big-endian Arabic

    static void Main(string[] args)
    {
        string listenUpArabic = "أنصت إليّ";
        byte[] utf16Bytes = Encoding.BigEndianUnicode.GetBytes(listenUpArabic);

        Console.WriteLine("UTF-16");
        Console.WriteLine("-----");
        foreach (var encodedByte in utf16Bytes)
        {
            Console.Write(string.Format("{0:X2}", encodedByte));
            Console.Write(" ");
        }

        Console.ReadKey();
    }

And our output is:

    UTF-16
    -----
    06 23 06 46 06 35 06 2A 00 20 06 25 06 44 06 4A 06 51

(Just to prove that you do get values bigger than 0xFF in Unicode!)

In the course of the chapters on file I/O (Chapter 11) and networking (Chapter 13), we’re going to see a number of communications and storage APIs that deal with writing arrays of bytes to some kind of target device. The byte format in which those strings go down the wires is clearly very important, and, while the framework default choices are often appropriate, knowing how (and why) you might need to choose a different encoding will ensure that you’re equipped to deal with mysterious bugs—especially when wrangling text in a language other than your own, or to/from a non-Windows platform.[23]