24. The Arithmetic of Reasoning: Boolean Algebra

Do computers think? They often appear to. They ask us questions, offer suggestions, correct our grammar, keep track of our finances, and calculate our taxes. Sometimes they seem maddeningly perverse, misunderstanding what we're sure we told them, losing our precious work, or refusing to respond at all to our reasonable requests! However, the more a computer appears to think, the more it is actually a tribute to the thinking ability of humans, who have found ways to express increasingly complex rational activities entirely as strings of 0s and 1s.

Attempts to reduce human reason to mechanical processes date back at least to the logical syllogisms of Aristotle in the 4th century B.C. A more recent instance occurs in the work of the great German mathematician Gottfried Wilhelm Leibniz. One of Leibniz's many achievements was the creation in 1694 of a mechanical calculating device that could add, subtract, multiply, and divide. This machine, called the Stepped Reckoner, was an improvement on the first known mechanical adding machine, Blaise Pascal's Pascaline of 1642, which could only add and subtract. Leibniz was also an early advocate of the binary numeration system, which expresses all numbers as sequences of 1s and 0s, although the Stepped Reckoner itself calculated in decimal.

Leibniz's Stepped Reckoner

(Photo courtesy of IBM Archives)

Leibniz, who also invented calculus independently of Isaac Newton,1 became intrigued by the vision of a "calculus of logic." He set out to create a universal system of reasoning that would be applicable to all science. He wanted his system to work "mechanically" according to a simple set of rules for deriving new statements from ones already known, starting with only a few basic logical assumptions. In order to implement this mechanical logic, Leibniz saw that statements would have to be represented symbolically in some way, so he sought to develop a universal characteristic, a universal symbolic language of logic. Early plans for this work appeared in his 1666 publication, De Arte Combinatoria; much of the rest of his efforts remained unpublished until early in the 20th century. Thus, many of his prophetic insights about abstract relations and an algebra of logic had little continuing influence on the mathematics of the 18th and 19th centuries.

The symbolic treatment of logic as a mathematical system began in earnest in the 19th century with the work of Augustus De Morgan and George Boole, two friends and colleagues who personified success in the face of adversity.

De Morgan was born in Madras, India, blind in one eye. Despite this disability, he graduated with honors from Trinity College in Cambridge and was appointed a professor of mathematics at London University at the age of 22. He was a man of diverse mathematical interests and had a reputation as a brilliant, if somewhat eccentric, teacher. He wrote textbooks and popular articles on logic, algebra, mathematical history, and various other topics and was a co-founder and first president of the London Mathematical Society. De Morgan believed that any separation between mathematics and logic was artificial and harmful, so he worked to put many mathematical concepts on a firmer logical basis and to make logic more mathematical. Perhaps thinking of the handicap of his partial blindness, he summarized his views by saying:

The two eyes of exact science are mathematics and logic: the mathematical sect puts out the logical eye, the logical sect puts out the mathematical eye: each believing that it can see better with one eye than with two.2

The son of an English tradesman, George Boole began life with neither money nor privilege. Nevertheless, he taught himself Greek and Latin and acquired enough of an education to become an elementary-school teacher. Boole was already twenty years old when he began studying mathematics seriously. Just eleven years later he published The Mathematical Analysis of Logic (1847), the first of two books that laid the foundation for the numerical and algebraic treatment of logical reasoning. In 1849 he became a professor of mathematics at Queen's College in Cork, Ireland. Before his sudden death at the age of 49, Boole had written several other mathematics books that are now regarded as classics. His second, more famous book on logic, An Investigation of the Laws of Thought (1854), elaborates and codifies the ideas explored in the 1847 book. This symbolic approach to logic led to the development of Boolean algebra, the basis for modern computer logic systems.

The key element of Boole's work was his systematic treatment of statements as objects whose truth values can be combined by logical operations in much the same way as numbers are added or multiplied. For instance, if each of two statements P and Q can be either true or false, then there are only four possible truth-value cases to consider when they are combined. The fact that the statement "P and Q" is true if and only if both of those statements are true can then be represented by the first table in Display 1. Similarly, the second table in Display 1 captures the fact that "P or Q" is true whenever at least one of the two component statements is true. The final table shows the fact that a statement and its negation always have opposite truth values.

Display 1
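The three tables just described can be generated mechanically, because two statements admit only four truth-value combinations. The following is a minimal Python sketch of that idea, not a reproduction of the display itself; the names `truth_table` and `show` are illustrative choices, and Python's built-in `and`, `or`, and `not` stand in for the logical operations.

```python
from itertools import product

def truth_table(op):
    """Return rows (P, Q, result) for an operation on two statements."""
    return [(p, q, op(p, q)) for p, q in product([True, False], repeat=2)]

def show(value):
    """Render a truth value as T or F."""
    return "T" if value else "F"

# The four cases for each two-statement operation.
and_rows = truth_table(lambda p, q: p and q)
or_rows = truth_table(lambda p, q: p or q)
# Negation involves a single statement, so it has only two cases.
not_rows = [(p, not p) for p in (True, False)]

for title, rows in (("P and Q", and_rows), ("P or Q", or_rows)):
    print(f"P Q | {title}")
    for p, q, result in rows:
        print(f"{show(p)} {show(q)} | {show(result)}")
    print()

print("P | not P")
for p, result in not_rows:
    print(f"{show(p)} | {show(result)}")
```

In the "and" table only the row with both statements true yields T, while in the "or" table only the row with both statements false yields F.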

From there it was an easy step to translate T and F into 1 and 0, and to regard the logical operation tables (shown in Display 2) as the basis for a somewhat unusual but perfectly workable arithmetic system, a system with many of the same algebraic properties as addition, multiplication, and negation of numbers.

Display 2
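One way to see this arithmetic at work is to write each logical operation as an ordinary numerical formula on the values 0 and 1. The formulas in the sketch below are an assumption chosen for illustration (a common modern encoding, not necessarily the form used in the display or by Boole himself): "and" is multiplication, "or" is addition with the overlap subtracted so that the result never exceeds 1, and "not" is subtraction from 1. The function names are hypothetical.

```python
def logical_and(x, y):
    """'and' as ordinary multiplication of 0s and 1s."""
    return x * y

def logical_or(x, y):
    """'or' as addition, minus the overlap so that 1 or 1 is still 1."""
    return x + y - x * y

def logical_not(x):
    """'not' as subtraction from 1."""
    return 1 - x

bits = (0, 1)

# Print the two-statement tables in 0/1 form.
print("x y | and or")
for x in bits:
    for y in bits:
        print(f"{x} {y} |  {logical_and(x, y)}   {logical_or(x, y)}")

# Check a few familiar algebraic properties over every possible case.
commutative = all(
    logical_and(x, y) == logical_and(y, x) and logical_or(x, y) == logical_or(y, x)
    for x in bits for y in bits
)
distributive = all(
    logical_and(x, logical_or(y, z))
    == logical_or(logical_and(x, y), logical_and(x, z))
    for x in bits for y in bits for z in bits
)
print("commutative:", commutative)
print("and distributes over or:", distributive)
```

Because every variable takes only the values 0 and 1, each property can be confirmed by checking all cases rather than by algebraic proof.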

De Morgan, too, was an influential, persuasive proponent of the algebraic treatment of logic. His publications helped to refine, extend, and popularize the system started by Boole. Among De Morgan's many contributions to this field are two laws, now named for him, that capture clearly the symmetric way in which the logical operations "and" and "or" behave with respect to negation:

not-(P and Q) is equivalent to (not-P) or (not-Q)

not-(P or Q) is equivalent to (not-P) and (not-Q)
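Since P and Q have only four truth-value combinations between them, De Morgan's laws can be checked exhaustively rather than argued for. The Python sketch below does so; the function name `de_morgan_holds` is illustrative, and the check compares not (P and Q) with (not P) or (not Q), and not (P or Q) with (not P) and (not Q).

```python
from itertools import product

def de_morgan_holds():
    """Check both De Morgan laws for every truth-value combination."""
    for p, q in product([True, False], repeat=2):
        # First law: negating an "and" gives the "or" of the negations.
        first = (not (p and q)) == ((not p) or (not q))
        # Second law: negating an "or" gives the "and" of the negations.
        second = (not (p or q)) == ((not p) and (not q))
        if not (first and second):
            return False
    return True

print(de_morgan_holds())  # True
```

The two checks differ only in swapping "and" with "or", which is the symmetry the laws express.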

Among De Morgan's important contributions to the mathematical theory of logic was his emphasis on relations as objects worthy of detailed study in their own right. Much of that work remained largely unnoticed until it was resurrected and extended by Charles Sanders Peirce in the last quarter of the 19th century. C. S. Peirce, a son of Harvard mathematician and astronomer Benjamin Peirce, made contributions to many areas of mathematics and science. However, as his interests in philosophy and logic became more and more pronounced, his writings migrated in that direction. He distinguished his interest in an algebra of logic from that of his father and other mathematicians by saying that mathematicians want to get to their conclusions as quickly as possible and so are willing to jump over steps when they know where an argument is leading; logicians, on the other hand, want to analyze deductions as carefully as possible, breaking them down into small, simple steps.

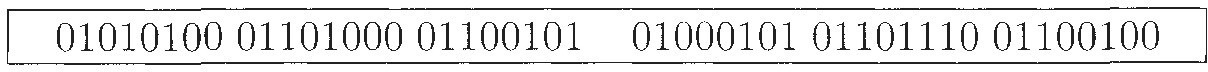

This reduction of mathematical reasoning to long strings of tiny, mechanical steps was a critical prerequisite for the "computer age." Twentieth-century advances in the design of electrical devices, along with the translation of 1 and 0 into on-off electrical states, led to electronic calculators that more than fulfilled the promise of Leibniz's Stepped Reckoner. Standard codes for keyboard symbols allowed such machines to read and write words. But it was the work of Boole, De Morgan, C. S. Peirce, and others in transforming reasoning from words to symbols and then to numbers that has led to the modern computer, whose rapid calculation of long strings of 1s and 0s empowers it to "think" its way through the increasingly intricate applications of mathematical logic.

For a Closer Look: A good place to start is pages 1852–1931 of [135]. An accessible book-length treatment is [38]. See also [126] for more on Leibniz, Boole, De Morgan, and C. S. Peirce.