So far, we have considered operant and respondent behavior as separate domains. Respondent behavior is elicited by the events that precede it, and operants are strengthened (or weakened) by stimulus consequences that follow them. Assume that you are teaching a dog to sit and you are using food reinforcement. You might start by saying “sit,” push the animal into a sitting position, and follow this posture with food. After training, you present the dog with the discriminative stimulus “sit,” and it quickly sits. This sequence nicely fits the operant paradigm—the SD “sit” sets the occasion for the response of sitting, and food reinforcement strengthens this behavior (Skinner, 1953).

In most circumstances, however, both operant and respondent conditioning occur. If you look closely at what the dog does, it is apparent that the “sit” command also elicits respondent behavior. Specifically, the dog salivates just after you say “sit.” This occurs because the “sit” command reliably preceded and was paired with the presentation of food, so it became a conditioned stimulus that elicits respondent salivation. For this reason, the stimulus “sit” is said to have a dual function: It is an SD in the sense that it sets the occasion for operant responses, and it is a CS that elicits respondent behavior. Shapiro (1960) demonstrated that respondent salivation in dogs was positively correlated with operant lever pressing reinforced with food on a fixed-interval schedule.

Similar effects are seen when a rat is trained with a warning stimulus (a tone) that signals imminent shock unless the rat presses a lever. The tone is a discriminative stimulus that increases the probability of lever pressing, but it is also a CS that elicits changes in heart rate, hormone levels, and other physiological responses (all of which can be called fear). Now suppose that you are out for a before-breakfast walk and you pass a doughnut and coffee shop. The aroma from the shop may be a CS that elicits salivation and also an SD that sets the occasion for entering the store and ordering a doughnut. These examples should make it clear that in many settings respondent and operant conditioning are intertwined, probably sharing common neural pathways in the brain.

One reason for the interrelationship of operant and respondent conditioning is that whatever happens to an organism affects the whole neurophysiological system, not just one set of effectors or the other. That is, we analytically separate respondents (smooth-muscle and gland activity) from operants (striated skeletal-muscle activity), but the contingencies are applied to the entire organism. When operant reinforcement occurs, it is contiguous with everything going on inside the individual. When a CS or US is presented, all kinds of operant behavior will be going on as well. The separation of operant and respondent models is for analytical convenience and control, but the organism's interaction with the environment is more complicated than these basic distinctions suggest.

In some circumstances, the distinction between operant and respondent behavior becomes even harder to draw. Experimental procedures (contingencies) can be arranged in which responses that are typically considered reflexive increase when followed by reinforcement; that is, respondents are treated as operants. Other research has shown that behavior usually thought to be operant may be elicited by the stimuli that precede it; that is, operants are treated as respondents. People sometimes incorrectly classify behavior as operant or respondent on the basis of its form or topography. That is, pecking a key is automatically called an operant, and salivation, no matter how it comes about, is labeled a reflex. Recall, though, that operants and respondents are defined by the experimental procedures that produce them, not by their topography. When a pigeon pecks a key for food, pecking is an operant. If another bird reliably strikes a key when it is lit up, and does this because the light has been paired with food, pecking is a respondent.

When biologically relevant stimuli like food are contingent on an organism's behavior, species-characteristic behavior is occasionally elicited at the same time. One class of species-characteristic responses that is elicited (when operant behavior is expected) is unconditioned reflexive behavior. This intrusion of reflexive behavior occurs because respondent procedures are sometimes embedded in operant contingencies of reinforcement. These respondent procedures cause species-characteristic responses that may interfere with the regulation of behavior by operant contingencies.

At one time, this intrusion of respondent conditioning in operant situations was used to question the generality of operant principles and laws. The claim was that the biology of an organism overrode operant principles (Hinde & Stevenson-Hinde, 1973; Schwartz & Lacey, 1982; Stevenson-Hinde, 1983) and behavior was said to drift toward its biological roots.

Operant (and respondent) conditioning is, however, part of the biology of an organism. Conditioning arose on the basis of species history; organisms that changed their behavior as a result of life experience had an advantage over animals that did not change their behavior. Behavioral flexibility by operant conditioning allowed for rapid adaptation to an altered environment. As a result, organisms that evolved the neural mechanisms for operant learning were more likely to survive and produce offspring.

Both evolution and learning involve selection by consequences. Darwinian evolution has developed species-characteristic behavior (reflexes, fixed action patterns, and reaction chains) and basic mechanisms of learning (operant and respondent conditioning) through natural selection of the best fit. Operant conditioning during an organism's lifetime selects response topographies, rates of response, and repertoires of behavior through the feedback from consequences. A question is whether unconditioned responses (UR) in the basic reflex model (US → UR) also are selected by consequences. In this regard, it is interesting to observe that the salivary glands are activated when food is placed in the mouth; as a result, the food can be tasted, ingested (or rejected), and digested. That is, there are notable physiological effects following reflexive behavior. If the pupil of the eye constricts when a bright light is shone, the result is an escape from retinal pain and the avoidance of retinal damage. It seems that reflexes have come to exist and operate because they do something; it is the effects of these responses that maintain the operation of the reflexive behavior. One might predict that if salivating for food or blinking at a puff of air did not result in improved performance (ease of ingestion or protection of the eye), then neither response would continue, as this behavior would not add to the fitness of the organism.

Marion and Keller Breland worked with B. F. Skinner as students and later established a successful animal training business. They conditioned a variety of animals for circus acts, arcade displays, advertising, and movies. In an important paper (Breland & Breland, 1961), they documented occasional instances in which species-specific behavior interfered with operant responses. For example, when training a raccoon to deposit coins in a box, they noted:

The response concerned the manipulation of money by the raccoon (who has “hands” rather similar to those of primates). The contingency for reinforcement was picking up the coins and depositing them in a 5-inch metal box.

Raccoons condition readily, have good appetites, and this one was quite tame and an eager subject. We anticipated no trouble. Conditioning him to pick up the first coin was simple. We started out by reinforcing him for picking up a single coin. Then the metal container was introduced, with the requirement that he drop the coin into the container. Here we ran into the first bit of difficulty: he seemed to have a great deal of trouble letting go of the coin. He would rub it up against the inside of the container, pull it back out, and clutch it firmly for several seconds. However, he would finally turn it loose and receive his food reinforcement. Then the final contingency: we put him on a ratio of 2, requiring that he pick up both coins and put them in the container.

Now the raccoon really had problems (and so did we). Not only could he not let go of the coins, but he spent seconds, even minutes rubbing them together (in a most miserly fashion), and dipping them into the container. He carried on the behavior to such an extent that the practical demonstration we had in mind—a display featuring a raccoon putting money in a piggy bank—simply was not feasible. The rubbing behavior became worse and worse as time went on, in spite of non-reinforcement.

(Breland & Breland, 1961, p. 682)

Breland and Breland documented similar instances of what they called instinctive drift in other species. Instinctive drift refers to species-characteristic behavior patterns that become progressively more invasive during training. The term instinctive drift is problematic because the concept suggests a conflict between nature (biology) and nurture (environment). Behavior is said to drift toward its biological roots. There is, however, no need to talk about behavior “drifting” toward some end. Behavior is appropriate to the operating contingencies. Recall that respondent procedures may be embedded in an operant contingency, and this seems to be the case for the Brelands' raccoon.

In the raccoon example, the coins were presented just before the animal was reinforced with food. For raccoons, food elicits rubbing and manipulating food items. Since the coins preceded food delivery, they became a CS that elicited the respondent behavior of rubbing and manipulating (coins). This interpretation is supported by the observation that the behavior increased as training progressed. As more and more reinforced trials occurred, there were necessarily more pairings of coins and food. Each pairing increased the strength of the CS(coin) → CR(rubbing) relationship, and the behavior became more and more prominent.

Respondent processes also occur as by-products of operant procedures with rats and kids. Rats will hold on to marbles longer than you might expect when they receive food pellets for depositing the marble in a hole. Kids will manipulate tokens or coins prior to banking or exchanging them. The point is that what the Brelands found is neither unusual nor a challenge to an operant account of behavior. Today, we talk about the interrelationship of operant and respondent contingencies rather than label these observations as a conflict between nature and nurture.

Suppose that you have trained a dog to sit quietly on a mat, and you have reinforced the animal's behavior with food. Once this conditioning is accomplished (the dog sits quietly on the mat), you start a second training phase. During this phase, you turn on a buzzer located on the dog's right side. A few seconds after the sound of the buzzer, a feeder delivers food to a dish that is placed 6 ft in front of the dog. Figure 7.1 is a diagram of this sort of arrangement.

When the buzzer goes off, the dog is free to engage in any behavior it is able to emit. From the perspective of operant conditioning, it is clear what should happen. When the buzzer goes off, the dog should stand up, walk over to the dish, and eat. This is because the sound of the buzzer is an SD that sets the occasion for the operant of going to the dish, and this response has been reinforced by food. In other words, the three-term contingency, SD: R → Sr, specifies this outcome, and there is little reason to expect any other result. A careful examination of the contingency, however, suggests that the sign (sound) could be either an SD (operant) that sets the occasion for approaching and eating the reinforcer (food), or a CS+ (respondent) that is paired with the US (food). In this latter case, the CS would be expected to elicit food-related conditioned responses.

Jenkins, Barrera, Ireland, and Woodside (1978) conducted an experiment very much like the one described here. Dogs were required to sit on a mat, and a light/tone stimulus compound was presented either on the left or right side of the animal. When the stimulus was presented on one side it signaled food, and on the other side it signaled extinction. As expected, when the extinction stimulus came on, the dogs did not approach the food tray and for the most part ignored the signal. However, when the food signal was presented, the animals unexpectedly approached the signal (light/speaker) and made what the researchers judged to be “food-soliciting responses” to the stimulus. Some of the dogs physically contacted the signal source, and others seemed to beg at the stimulus by barking and prancing. This behavior is called sign tracking because it involves approaching a sign (or stimulus) that has signaled a biologically relevant event (in this case, food).

FIG. 7.1 Diagram of apparatus used in sign tracking. When the signal for food is given, the dog approaches the signal and makes “food-soliciting” responses rather than go directly to the food dish.

The behavior of the dogs is not readily understood in terms of operant contingencies of reinforcement. As stated earlier, the animals should simply trot over to the food and eat it. Instead, the dogs' behavior appears to be elicited by the signal that precedes food delivery. Importantly, the ordering of stimulus → behavior resembles the CS → CR arrangement that characterizes classical conditioning. Of course, SD: R follows the same timeline, but in this case the response should be a direct approach to the food, not to the signal. Additionally, behavior in the presence of the stimulus appears to be food directed. When the tone/light comes on, the dog approaches it, barks, begs, prances, licks the signal source, and so on. Thus, both the temporal arrangement of stimulus followed by response and the form or topography of the animal's behavior suggest respondent conditioning. Apparently, in this situation the unconditioned stimulus (US) features of the food are stronger (in the sense of regulating behavior) than the operant-reinforcement properties of the food. Because of this, the light/tone gains strength as a CS with each pairing of light/tone and food. One caution is that the occurrence of operant behavior in this experiment cannot be entirely dismissed. If the dog engages in a chain of responses that is followed by food, you can expect the sequence to be maintained, and this seems to be the case in such experiments.

Shaping is the usual way that a pigeon is taught to strike a response key. In the laboratory, a researcher operating the feeder with a hand-switch reinforces closer and closer approximations to the final performance (key pecking). Once the bird makes the first independent peck on the key, electronic programming equipment activates a food hopper and the response is reinforced. The contingency between behavior and reinforcement, both during shaping and after the operant is established, is clearly operant (R → Sr). This method of differential reinforcement of successive approximations requires considerable patience and a fair amount of skill on the part of the experimenter.

Brown and Jenkins (1968) reported a way to automatically teach pigeons to peck a response key. In one experiment, they first taught birds to approach and eat grain whenever a food hopper was presented. After the birds were magazine trained, automatic programming turned on a key light 8 s before the grain was delivered. Next, the key light went out and the grain hopper was presented. After 10–20 pairings of key light and food, the birds started to orient and move toward the lighted key. Eventually, all 36 pigeons in the experiment began to strike the key even though pecking did not produce food. Figure 7.2 shows the arrangement between key light and food presentation. Notice that the light onset precedes the presentation of food and appears to elicit the key peck.

The researchers called this effect autoshaping, an automatic way to teach pigeons to key peck. Brown and Jenkins offered several explanations for their results. In their view, the most likely explanation had to do with species characteristics of pigeons. They noted that pigeons have a tendency to peck at things they look at. The bird notices the onset of the light, orients toward it, and “the species-specific look—peck coupling eventually yields a peck to the [key]” (Brown & Jenkins, 1968, p. 7). In their experiment, after the bird made the response, food was presented, and this contiguity could have accidentally reinforced the first peck.

Another possibility was that key pecking resulted from respondent conditioning. The researchers suggested that the lighted key had become a CS that elicited key pecks. This could occur because pigeons make unconditioned pecks (UR) when grain (US) is presented to them. In their experiment, the key light preceded grain presentation and may have elicited a conditioned peck (CR) to the lighted key (CS). Brown and Jenkins comment on this explanation and suggest that although it is possible, it “seem[s] unlikely because the peck appears to grow out of and depend upon the development of other motor responses in the vicinity of the key that do not themselves resemble a peck at grain” (1968, p. 7). In other words, the birds began to turn toward the key, stand close to it, and make thrusting movements with their heads, all of which led eventually to the key peck. It does not seem likely that all these are reflexive responses. They seem more like operant approximations that form a chain culminating in pecking.

FIG. 7.2 Autoshaping procedures are based on Brown and Jenkins (1968). Notice that the onset of the light precedes the presentation of food and appears to elicit the key peck.

Notice that respondents such as salivation, eye blinks, startle, knee jerks, pupil dilation, and other reflexes do not depend on the conditioning of additional behavior. When you touch a hot stove, you rapidly and automatically pull your hand away. This response simply occurs when a hot object is contacted. A stove does not elicit approach to it, orientation toward it, movement of the hand and arm, or other responses. All of these additional responses seem to be clearly operant, forming a chain or sequence of behavior that avoids contact with the hot stove.

Autoshaping extends to other species and other types of reinforcement and responses. Chicks have been shown to make autoshaped responses when heat was the reinforcer (Wasserman, 1973). When food delivery is signaled for rats by lighting a lever or by inserting it into the operant chamber, the animals lick and chew on the bar (Peterson, Ackil, Frommer, & Hearst, 1972; Stiers & Silberberg, 1974). These animals also direct social behavior toward another rat that signals the delivery of food (Timberlake & Grant, 1975). Rachlin (1969) showed autoshaped key pecking in pigeons using electric shock as negative reinforcement. The major question that these and other experiments raise is: What is the nature of the behavior that is observed in autoshaping and sign-tracking experiments?

In general, research has shown that autoshaped behavior is at first respondent, but when the contingency is changed so that pecks are followed by food, the pecking becomes operant. Pigeons reflexively peck (UR) at the sight of grain (US). Because the key light reliably precedes grain presentation, it acquires a CS function that elicits the CR of pecking the key. However, when pecking is followed by grain, it comes under the control of contingencies of reinforcement and it is an operant. To make this clear, autoshaping produces respondent behavior that can then be reinforced. Once behavior is reinforced, it is regulated by consequences that follow it and it is considered to be operant.

In discussing their 1968 experiments on autoshaping, Brown and Jenkins report that:

Experiments in progress show that location of the key near the food tray is not a critical feature [of autoshaping], although it no doubt hastens the process. Several birds have acquired the peck to a key located on the wall opposite the tray opening or on a side wall.

(p. 7)

This description of autoshaped pecking by pigeons sounds similar to sign tracking by dogs. Both autoshaping and sign tracking involve species-characteristic behavior that is elicited by food presentation. Instinctive drift also appears to be reflexive behavior that is elicited by food. Birds peck at grain and make similar responses to the key light. That is, birds sample or taste items in the environment by the only means available to them, beak or bill contact. Dogs make food-soliciting responses to the signal that precedes food reinforcement. For example, this kind of behavior is clearly seen in pictures of wolf pups licking the mouth of an adult returning from a hunt. Raccoons rub and manipulate food items and make similar responses to coins that precede food delivery. And we have all seen humans rubbing dice together between their hands before throwing them. It is likely that autoshaping, sign tracking, and instinctive drift represent the same (or very similar) processes (for a discussion, see Hearst & Jenkins, 1974).

One proposed possibility is that all of these phenomena (instinctive drift, sign tracking, and autoshaping) are instances of stimulus substitution. That is, when a CS (e.g., light) is paired with a US (e.g., food), the conditioned stimulus is said to substitute for, or generalize from, the unconditioned stimulus. This means that the responses elicited by the CS (rubbing, barking and prancing, pecking) are similar to the ones elicited by the US. While this is a parsimonious account, there is evidence that it is wrong.

Recall from Chapter 3 that the laws of the reflex (US → UR) do not hold for the CS → CR relationship, suggesting that there is no universal substitution of the CS for the US. Also, in many experiments, the behavior evoked by the US is opposite in direction to the responses elicited by the CS (see Chapter 3, ON THE APPLIED SIDE). Additionally, there are experiments conducted within the autoshaping paradigm that directly refute the stimulus substitution hypothesis.

In an experiment by Wasserman (1973), chicks were placed in a very cool enclosure. In this situation, a key light was occasionally turned on, and this was closely followed by the activation of a heat lamp. All the chicks began to peck the key light in an unusual way. The birds moved toward the key light and rubbed their beaks back and forth on it, behavior described as snuggling. These responses resemble the behavior that newborn chicks direct toward their mothers when soliciting warmth. Chicks peck at their mothers' feathers and rub their beaks from side to side, behavior that results in snuggling up to their mother.

At first glance, the “snuggling to the key light” seems to illustrate an instance of stimulus substitution. The chick behaves to the key light as it does toward its mother. The difficulty is that the chicks in Wasserman's (1973) experiment responded completely differently to the heat lamp than to the key light. In response to heat from the lamp, a chick extended its wings and stood motionless, behavior that it might direct toward intense sunlight. In this experiment, it is clear that the CS does not substitute for the US because these stimuli elicit completely different responses (also see Timberlake & Grant, 1975).

An alternative to stimulus substitution has been proposed by Timberlake (1983, 1993), who suggested that each US (food, water, sexual stimuli, heat lamp, and so on) controls a distinct set of species-specific responses, or behavior system. That is, for each species there is a behavior system related to procurement of food, another related to obtaining water, still another for securing warmth, and so on. For example, the presentation of food to a raccoon activates the behavior system for procurement and ingestion of food. When coins signal food, only one of these behaviors, rubbing and manipulating the item, is evoked by the coins; other behaviors in the system, such as bringing the item to the mouth, salivating, chewing, and swallowing, are not elicited. Timberlake goes on to propose that the particular responses elicited by the CS depend, in part, on the physical properties of the stimulus. Presumably, in the Wasserman experiment, properties of the key light (a visual stimulus raised above the floor) were more closely related to snuggling than to standing still and extending wings.

At the present time, it is not possible to predict which responses in a behavior system a given CS will elicit. That is, a researcher can predict that the CS will elicit one or more of the responses controlled by the US, but cannot specify which responses will occur. One possibility is that the salience of the US affects which responses are elicited by the CS. For example, as the intensity of the heat source increases (approximating a hot summer day) the chicks' response to the CS key light may change from snuggling to behavior appropriate to standing in the sun (open wings and motionless).

As you have seen, there are circumstances in which both operant and respondent conditioning occur. Moreover, responses that are typically operant on the basis of topography are occasionally regulated by respondent processes (and, as such, are respondents). There are also occasions in which behavior that, in form or topography, appears to be reflexive is regulated by the consequences that follow it.

In the 1960s, a number of researchers attempted to show that involuntary reflexive or autonomic responses could be operantly conditioned (Kimmel & Kimmel, 1963; Miller & Carmona, 1967; Shearn, 1962). Miller and Carmona (1967) deprived dogs of water and monitored their respondent level of salivation. The dogs were separated into two groups. One group was reinforced with water for increasing salivation and the other group was reinforced for a decrease. Both groups of animals showed the expected change in amount of salivation. That is, the dogs that were reinforced for increasing saliva flow showed an increase, and the dogs reinforced for less saliva flow showed a decrease.

At first glance, this result seems to demonstrate the operant conditioning of salivation. However, Miller and Carmona (1967) noticed an associated change in the dogs' behavior that could have produced the alteration in salivation. Dogs that increased saliva flow appeared to be alert, and those that decreased it were described as drowsy. For this reason, the results are suspect—salivary conditioning may have been mediated by a change in the dogs' operant behavior. Perhaps drowsiness was operant behavior that resulted in decreased salivation, and being alert increased the reflex. In other words, the change in salivation could have been part of a larger, more general behavior pattern that was reinforced. Similar problems occurred with other experiments. For example, Shearn (1962) showed operant conditioning of heart rate, but this dependent variable can be affected by a change in pattern of breathing.

It is difficult to rule out operant conditioning of other behavior as a mediator of reinforced reflexes. However, Miller and DiCara (1967) conducted a classic experiment in which this explanation was not possible. The researchers reasoned that operant behavior could not mediate conditioning if the subject had its skeletal muscles immobilized. To immobilize their subjects, which were white rats, they used the drug curare. This drug paralyzes the skeletal musculature and interrupts breathing, and the rats were maintained by artificial respiration. When given curare the rats could not swallow food or water; Miller and DiCara solved this problem by using electrical stimulation of the rats' pleasure center in the brain as reinforcement for visceral reflexes.

Before starting the experiment, the rats had electrodes permanently implanted in their hypothalamus. This was done in a way that allowed the experimenters to connect and disconnect the animals from the equipment that stimulated the pleasure center. To make certain that the stimulation was reinforcing, the rats were trained to press a bar in order to turn on a brief microvolt pulse. This procedure demonstrated that the pulse was, in fact, reinforcing since the animals pressed a lever for the stimulation.

At this point, Miller and DiCara administered curare to the rats and reinforced half of them with electrical stimulation for decreasing their heart rate. The other animals were reinforced for an increase in heart rate. Figure 7.3 shows the results of this experiment. Both groups start out with heart rates in the range of 400–425 beats per minute. After 90 min of contingent reinforcement, the groups are widely divergent. The group that was reinforced for slow heart rate is at about 310 beats per minute, and the fast rate group is at approximately 500 beats per minute.

FIG. 7.3 Effects of curare immobilization of skeletal muscles and the operant conditioning of heart rate are shown (Miller & DiCara, 1967). Half of the rats received electrical brain stimulation for increasing heart rate and the other half for decreasing heart rate.

Miller and Banuazizi (1968) extended this finding. They inserted a pressure-sensitive balloon into the large intestine of rats, which allowed them to monitor intestinal contractions. At the same time, the researchers measured the animals' heart rate. As in the previous experiment, the rats were administered curare and reinforced with electrical brain stimulation. In different conditions, reinforcement was made contingent on increased or decreased intestinal contractions. Also, the rats were reinforced on some occasions for a decrease in heart rate, and at other times for an increase.

The researchers showed that reinforcing intestinal contractions or relaxation changed them in the appropriate direction. The animals also showed an increase or decrease in heart rate when this response was made contingent on brain stimulation. Finally, Miller and Banuazizi (1968) demonstrated that a change in intestinal contractions did not affect heart rate and, conversely, changes in heart rate did not affect contractions.

In these experiments contingent reinforcement modified behavior usually considered to be reflexive, under conditions in which skeletal responses could not affect the outcome. Also, the effects were specific to the response that was reinforced, showing that brain stimulation was not generating general physiological changes that produced the outcomes of the experiment. It seems that responses that are usually elicited can be conditioned using an operant contingency of reinforcement. Greene and Sutor (1971) extended this conclusion to humans, showing that a galvanic skin response (GSR) could be regulated by negative reinforcement (for more on operant autonomic conditioning, see DiCara, 1970; Engle, 1993; Jonas, 1973; Kimmel, 1974; Miller, 1969).

Although this conclusion is probably justified, the operant conditioning of autonomic responses like blood pressure, heart rate, and intestinal contraction has run into difficulties. Miller even had problems replicating the results of his own experiments (Miller & Dworkin, 1974), concluding “that the original visceral learning experiments are not replicable and that the existence of visceral learning remains unproven” (Dworkin & Miller, 1986). The weight of the evidence does suggest that reflexive responses are, at least in some circumstances, affected by the consequences that follow them. This behavior, however, is also subject to control by contiguity or pairing of stimuli. It is relatively easy to change heart rate by pairing a light (CS) with electric shock; the light then elicits a change in heart rate. By contrast, controlling heart rate with an operant contingency is no easy task. Thus, autonomic behavior may not be exclusively tied to respondent conditioning, but respondent conditioning is particularly effective with these responses.

Clearly, the fundamental distinction between operant and respondent conditioning is operational. The distinction is operational because conditioning is defined by the operations that produce it. Operant conditioning involves a contingency between behavior and its consequences. Respondent conditioning entails the pairing of stimuli.

Autonomic responses are usually respondents and are best modified by respondent procedures. When these responses are changed by the consequences that follow them, they are operants. Similarly, skeletal responses are usually operant and most readily changed by contingencies of reinforcement, but when modified by the pairing of stimuli they are respondents. The whole organism is impacted by contingencies (environmental arrangement of events), whether these contingencies are designed as operant or respondent procedures. That is, most contingencies of reinforcement activate respondent processes and Pavlovian contingencies often involve the reinforcement of operant behavior.

As we stated in Chapter 1, the evolutionary history, ontogenetic history, and current physiological status of an organism are the context for conditioning. Edward Morris (1992) has described the way we use the term context:

Context is a funny word. As a non-technical term, it can be vague and imprecise. As a technical term, it can also be vague and imprecise—and has been throughout the history of psychology. In what follows, I mean to use it technically and precisely. … First, the historical context—phylogenetic and ontogenetic, biological and behavioral—establishes the current structure and function of biology (anatomy and physiology) and behavior (form and function). Second, the form or structure of the current context, organismic or environmental, affects (or enables) what behavior can physically or formally occur. Third, the current context affects (actualizes) the functional relationships among stimuli and response (i.e., their “meaning” for one another).

(p. 14)

Context is a way of noting that the probability of behavior depends on certain conditions. Thus, the effective contingencies (stimuli, responses, and reinforcing events) may vary from species to species. A hungry dog can be reinforced with meat for jumping a hurdle, and a pigeon will fly to a particular location to get grain. These are obvious species differences, but there are more subtle effects of the biological context. The rate of acquisition and level of behavior once established may be influenced by an organism's physiology, as determined by species history. Moreover, within a species, reinforcers, stimuli, and responses can be specific to particular situations.

Behavior that is observed with any one set of responses, stimuli, and reinforcers may change when different sets are used. In addition, different species may show different environment-behavior relationships when the same set of responses and events is investigated. Although the effects of contingencies sometimes depend on the particular events and responses, principles of behavior like extinction, discrimination, and spontaneous recovery show generality across species. The behaviors of schoolchildren working at math problems for teacher attention and of pigeons pecking keys for food are regulated by the principles of reinforcement even though the particular responses and reinforcers vary over species. With regard to generalization of behavior principles, human conduct is probably more sensitive to environmental influence than the behavior of any other species. In this sense, humans may actually be the organisms best described by the principles of behavior.

As early as 1938, Skinner recognized that a comprehensive understanding of the behavior of organisms required the study of more than “arbitrary” stimuli, responses, and reinforcers (Skinner, 1938, pp. 10–11). By using simple stimuli, responses that are easy to execute and record, and precise reinforcers, Skinner hoped to identify general principles of operant conditioning. By and large, this same strategy is used today in the modern operant laboratory.

In an experiment by Wilcoxon, Dragoin, and Kral (1971), quail and rats were given blue salty water. After the animals drank the water, they were made sick. Following recovery, the animals were given a choice between water that was not colored but tasted salty and plain water that was colored blue. The rats avoided the salty-flavored water, and the quail would not drink the colored solution. This finding is not difficult to understand: when feeding or drinking, birds rely on visual cues, whereas rats are sensitive to taste and smell. In the natural habitat, drinking liquids that produce illness should be avoided, and this has obvious survival value. Because quail typically select food on the basis of its appearance, they avoided the colored water. Rats, on the other hand, select food largely by taste and smell, so they avoided the salty taste.

In the Wilcoxon et al. (1971) experiment, the taste and color of the water formed a compound CS that was paired with the US of illness. Both species showed taste aversion learning; that is, the animals came to avoid one or the other of the CS elements based on biological history. In other words, the biology of the organism dictated which cue became a CS, but the conditioning of the aversion, or CS-US pairing, was the same for both species. Of course, a bird that relied on taste for food selection would be expected to associate taste and illness. This phenomenon has been called preparedness: quail are more biologically prepared to discriminate critical stimulus features when sights are associated with illness, and rats respond best to a flavor-illness association. Other experiments have shown that within a species the set of stimuli, responses, and reinforcers may be affected by the biology of the organism.

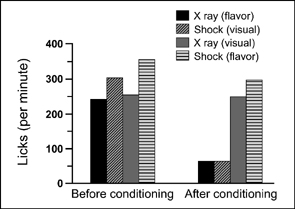

Garcia and colleagues conducted several important experiments that were concerned with the conditions that produce taste aversion in rats.1 Garcia and Koelling (1966) had thirsty rats drink tasty (saccharin-flavored) water, or unflavored water accompanied by flashing lights and gurgling noises (bright-noisy water). After the rats drank the water, half of each group was immediately given an electric shock for drinking. The other animals were made ill by injecting them with lithium chloride or by irradiating them with X-rays. Lithium chloride and high levels of X-rays produce nausea roughly 20 min after administration. Figure 7.4 shows the four conditions of the experiment.

After aversion training and recovery, the rats were allowed to drink and their water intake was measured. The major results of this experiment are shown in Figure 7.5. Baseline measures of drinking were compared to fluid intake after shock, lithium, or X-rays had been paired with a visual or flavor stimulus (CS). Both shock and illness induced by X-ray exposure suppressed drinking. Those rats that received shock after drinking the bright-noisy water and the ones that were made sick after ingesting the flavored water substantially reduced their fluid intake. Water intake in the other two groups was virtually unaffected. The animals made sick after drinking the bright-noisy water and those shocked for ingesting the flavored water did not show a conditioned aversion.

These results are unusual for several reasons. During traditional respondent conditioning, the CS and US typically overlap or are separated by only a few seconds. In the Garcia and Koelling (1966) experiment, the taste CS was followed much later by the US (drug or X-ray). Also, it is often assumed that the choice of CS and US is irrelevant for respondent conditioning. Pavlov claimed that the choice of CS was arbitrary; he said anything would do. However, taste paired with gastrointestinal malaise produced an aversion, whereas taste paired with shock did not. Therefore, it appears that for some stimuli the animal is prepared by nature to make a connection, and for others it may even be contraprepared (Seligman, 1970). Generally, other kinds of classical conditioning require many CS-US pairings, but an aversion to taste is conditioned after a single flavor-illness pairing. In fact, the animal need not even be conscious in order for an aversion to be formed (Provenza, Lynch, & Nolan, 1994). Finally, it appears necessary for the animal to experience nausea in order for taste aversion to condition. Being poisoned by strychnine, which inhibits spinal neurons but does not cause sickness, will not work (Cheney, van der Wall, & Poehlmann, 1987).

FIG. 7.4 Conditions used to show taste aversion conditioning by rats in an experiment by Garcia and Koelling (1966). From a description given in the same study.

FIG. 7.5 Major results are presented from the taste-aversion experiment by Garcia and Koelling (1966). Based on data from the same study.

These results can be understood by considering the biology of the rat. The animals are omnivorous and as such they eat a wide range of meat and vegetable foods. Rats eat 10–16 small meals each day and frequently ingest novel food items. The animals are sensitive to smell and taste but have relatively poor sight, and they cannot vomit. When contaminated, spoiled, rotten, or naturally toxic food is eaten, it typically has a distinctive smell and taste. For this reason, taste and smell but not visual cues are associated with illness. Conditioning after a long time period between CS and US occurs because there is usually a delay between ingestion of a toxic item and nausea. It would be unusual for a rat to eat and then have this quickly followed by an aversive stimulus (flavor-shock); hence there is little conditioning. The survival value of one-trial conditioning, or quickly avoiding food items that produce illness, is obvious: eat that food again and it may kill you.

Behavior is why the nervous system exists. Those organisms with a nervous system that allowed for behavioral adaptation and reproduction survived. To reveal the neural mechanisms that interrelate brain and behavior, neuroscientists often look for changes in behavior as a result of functional changes in the brain as the organism solves problems posed by its environment. This approach has been used to understand how organisms acquire aversions to food tastes and avoidance of foods with these tastes.

A recent study investigated the sites in the brain responsive to lithium chloride (LiCl), a chemical often used in conditioned taste aversion learning to induce nausea. The researchers used brain imaging to measure the presence of c-fos, a protein with short-lived expression in neurons that is implicated in the neural reward system (Andre, Albanos, & Reilly, 2007). The gustatory area of the thalamus showed elevated levels of c-fos following LiCl treatment, implicating this brain region as central to conditioned taste aversion. Subsequent research by Yamamoto (2007) showed that two regions of the amygdala were also involved in taste aversion conditioning. One region is concerned with detecting the conditioned stimulus (e.g., a distinctive taste), and the other is involved with the hedonic shift from positive to negative that results from taste aversion experience. Thus, brain research is showing that several areas of the brain are involved in the detection of nausea from toxic chemicals and the subsequent aversion to tastes predictive of such nausea.

There are also brain sites that relate to urges, cravings, and excessive behavior. The cortical brain structure called the insula appears to be an area that turns physical reactions into sensations of craving. A recent investigation in Science showed that smokers with strokes that injured or destroyed this area lost their craving for cigarettes (Naqvi, Rudrauf, Damasio, & Bechara, 2007). The insula area appears critical for behaviors whose bodily effects are experienced as pleasurable, like cigarette smoking. Specific brain sites seem to code for the physiological reactions to stimuli and “upgrade” the integrated neural responses into awareness, allowing the person to act on the urges of an acquired addiction. Because people can learn to modulate their own brain activity to reduce the sensation of pain, they may also be able to learn to deactivate the insula and thus reduce cravings associated with excessive use of drugs.

Behavioral neuroscience is progressively relating behavior to the neurological mechanisms of the brain. Eventually, scientists will have a more complete account of how the external environment and the neurophysiology of the organism interrelate to produce specific behavior in a given situation.

Taste aversion learning has been replicated and extended in many different experiments (see Barker, Best, & Domjan, 1977; Rozin & Kalat, 1971). Revusky and Garcia (1970) showed that the interval between a flavor CS and an illness-inducing US could be as much as 12 h. Other findings indicate that a novel taste is more easily conditioned than one with which an animal is familiar (Cheney & Eldred, 1980; Revusky & Bedarf, 1967). A novel setting (as well as taste) has also been shown to increase avoidance of food when a toxin is the unconditioned stimulus. For example, Mitchell, Kirschbaum, and Perry (1975) fed rats in the same container at a particular location for 25 days. Following this, the researchers changed the food cup and made the animals ill. After this experience, the rats avoided eating from the new container (see Parker, 2003, for the difference between taste aversion and avoidance). Taste aversion learning also occurs in humans, of course (Arwas, Rolnick, & Lubow, 1989; Logue, 1979, 1985, 1988a). Alexandra Logue at the State University of New York, Stony Brook, has concluded:

Conditioned food aversion learning in humans appears very similar to that in other species. As in other species, aversions can be acquired with long CS-US delays, the aversion most often forms to the taste of food, the CS usually precedes the US, aversions frequently generalized to foods that taste qualitatively similar, and aversions are more likely to be formed to less preferred, less familiar foods. Aversions are frequently strong. They can be acquired even though the subject is convinced that the food did not cause the subject's illness.

(1985, p. 327)

Imagine that on a special occasion you spend an evening at your favorite restaurant. Stimuli at the restaurant include your companion, waiters, candles on the table, china, art on the wall, and many more aspects of the setting. You order several courses, most of them familiar, and “just to try it out” you have pasta primavera for the first time. What you do not know is that a flu virus has invaded your body and is percolating away while you eat. Early in the morning, you wake up with a clammy feeling, rumbling stomach, and a hot acid taste in the back of your throat. You spew primavera sauce, wine, and several other ugly bits and pieces on the bathroom mirror.

The most salient stimulus at the restaurant was probably your date. Alas, is the relationship finished? Will you get sick at the next sight of your lost love? Is this what the experimental analysis of behavior has to do with romance novels? Of course, the answer to these questions is no. It is very likely that you will develop a strong aversion only to pasta primavera. Interestingly, you may clearly be aware that your illness was caused by the flu, not the new food. You may even understand taste aversion learning but, as one of the authors of this book (Cheney) can testify, it makes no difference. The novel-taste CS, because of its single pairing with nausea (even when delayed by several hours), is likely to be avoided in the future.

Taste aversion learning may be involved in human anorexia. In activity anorexia (Epling & Pierce, 1992, 1996a), food restriction increases physical activity and mounting physical activity reduces food intake (see ON THE APPLIED SIDE in this chapter). Two researchers, Bo Lett and Virginia Grant (Lett & Grant, 1996), at Memorial University in Newfoundland, Canada, suggested that human anorexia induced by physical activity could involve taste aversion learning. Basically, it is known that physical activity like wheel running suppresses food intake in rats (e.g., Epling, Pierce, & Stefan, 1983; Routtenberg & Kuznesof, 1967). Lett and Grant proposed that suppression of eating could be due to a conditioned taste aversion (CTA) induced by wheel running. According to this view, a distinctive taste becomes a conditioned stimulus (CS) for reduced consumption when followed by the unconditioned stimulus (US) of nausea from wheel running. In support of this hypothesis, rats exposed to a flavored liquid that was paired with wheel running drank less of the liquid than control rats that remained in their home cages (Lett & Grant, 1996; see also Heth, Inglis, Russell, & Pierce, 2001).

Further research by Sarah Salvy, who worked with Pierce (an author of this textbook) and is now in Pediatrics at the State University of New York (Buffalo), indicates that CTA induced by wheel running involves respondent processes (Salvy, Heth, Pierce, & Russell, 2004; Salvy, Pierce, Heth, & Russell, 2002, 2003), although operant components have not been ruled out. Salvy conducted “bridging experiments” in which a distinctive food rather than a flavored liquid is followed by wheel running (Salvy et al., 2003). Rats that ate novel food snacks (flavored cat treats) followed by bouts of wheel running consumed less of the food compared with control rats receiving the food followed by access to a locked wheel. That is, CTA induced by wheel running extended to novel food stimuli. In our laboratory at the University of Alberta we have consistently obtained wheel-running-induced CTA to novel food, but have not yet been able to establish CTA with familiar food (laboratory chow). Familiar laboratory chow, however, is used in the activity anorexia procedure. One possibility is that CTA occurs during activity anorexia but does not explain the suppression of eating during the cycle (see Sparks, Grant, & Lett, 2003). Another possibility is that CTA and other nonassociative processes combine to produce activity anorexia. Further research is necessary to clarify the role of CTA for the onset and maintenance of activity anorexia.

On time-based and interval schedules, organisms may emit behavior patterns that are not required by the contingency of reinforcement (Staddon & Simmelhag, 1971). If you received $5 for pressing a lever once every 10 min, you might start to pace, twiddle your thumbs, have a sip of soda, or scratch your head between payoffs on a regular basis. Staddon (1977) has noted that during a fixed time between food reinforcers animals engage in three distinct types of behavior. Immediately after food reinforcement, interim behavior like drinking water may occur; next, an organism may engage in facultative behavior that is independent of the schedule of reinforcement (e.g., rats may groom themselves); finally, as the time for reinforcement gets close, animals engage in food-related activities called terminal behavior, such as orienting toward the lever or food cup. The first of these categories, interim or adjunctive behavior,2 is of most interest for the present discussion as it is behavior not required by the schedule but induced by reinforcement. Because the behavior is induced as a side effect of the reinforcement schedule, it is also referred to as schedule-induced behavior.

When a hungry animal is placed on an interval schedule of reinforcement, it will ingest an excessive amount of water if allowed to drink. Falk (1961, 1964, 1969) suggested that this polydipsia or excessive drinking is adjunctive behavior induced by the time-based delivery of food. A rat that is working for food on an intermittent schedule may drink as much as half its body weight during a single session (Falk, 1961). This drinking occurs even though the animal is not water deprived. The rat may turn toward the lever, press for food, obtain and eat the food pellet, drink excessively, groom itself, and then repeat the sequence. Pressing the lever is required for reinforcement, and grooming may occur in the absence of food delivery, but polydipsia appears to be induced by the schedule.

In general, adjunctive behavior refers to any excessive and persistent behavior pattern that occurs as a side effect of reinforcement delivery. The schedule may require a response for reinforcement, or it may simply be time based, as when food pellets are given every 30 s no matter what the animal is doing. Additionally, the schedule may deliver reinforcement on a fixed time basis (e.g., every 60 s) or it may be constructed so that the time between reinforcers varies (e.g., 20 s, then 75, 85, 60 s, and so on).
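The difference between fixed-time and variable-time delivery can be made concrete with a short sketch. Assuming Python 3.9 or later, the hypothetical helpers below (`fixed_time_schedule` and `variable_time_schedule`, names invented for illustration) only compute when food would arrive during a session; because delivery on these schedules is response-independent, no behavior is modeled or checked. The variable-time helper simply cycles through a supplied list of intervals such as the 20, 75, 85, 60 s mentioned above; that cycling is an assumption made for simplicity, since laboratory variable-time schedules usually draw from a longer list or a distribution.

```python
def fixed_time_schedule(interval_s: float, session_s: float) -> list[float]:
    """Delivery times (s) on a fixed-time (FT) schedule.

    Food arrives every `interval_s` seconds no matter what the animal does.
    """
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    times = []
    t = interval_s
    while t <= session_s:
        times.append(t)
        t += interval_s
    return times


def variable_time_schedule(intervals_s: list[float], session_s: float) -> list[float]:
    """Delivery times (s) on a variable-time (VT) schedule.

    The time between deliveries varies; here we cycle through a fixed
    list of intervals (an illustrative simplification).
    """
    if not intervals_s or any(i <= 0 for i in intervals_s):
        raise ValueError("intervals must be positive and non-empty")
    times = []
    t = 0.0
    k = 0
    while True:
        t += intervals_s[k % len(intervals_s)]
        if t > session_s:
            break
        times.append(t)
        k += 1
    return times


if __name__ == "__main__":
    # FT 60 s over a 300-s session: food at 60, 120, 180, 240, 300 s.
    print(fixed_time_schedule(60, 300))
    # VT built by cycling through the intervals 20, 75, 85, 60 s.
    print(variable_time_schedule([20, 75, 85, 60], 300))
```

A response-contingent schedule such as fixed interval would additionally require a response after each interval elapses; that check is deliberately left out here.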

Schedules of food reinforcement have been shown to generate such adjunctive behavior as attack against other animals (Flory, 1969; Hutchinson, Azrin, & Hunt, 1968; Pitts & Malagodi, 1996), licking at an airstream (Mendelson & Chillag, 1970), drinking water (Falk, 1961), chewing on wood blocks (Villareal, 1967), and preference for oral cocaine administration (Falk & Lau, 1997; Falk, D'Mello, & Lau, 2001). Adjunctive behavior has been observed in pigeons, monkeys, rats, and humans; reinforcers have included water, food, shock avoidance, access to a running wheel, money, and for male pigeons the sight of a female (see Falk, 1971, 1977; Staddon, 1977, for reviews). Muller, Crow, and Cheney (1979) induced locomotor activity in college students and adolescents with intellectual disabilities with fixed-interval (FI) and fixed-time (FT) token delivery. Stereotypic and self-injurious behavior of humans with developmental disabilities also has been viewed as adjunctive to the schedule of reinforcement (Lerman, Iwata, Zarcone, & Ringdahl, 1994). Thus, adjunctive behavior occurs in different species, is generated by a variety of reinforcement procedures, and extends to a number of induced responses.

A variety of conditions affect adjunctive behavior, but the schedule of reinforcement delivery and the deprivation status of the organism appear to be the most important. As the time between reinforcement deliveries increases from 2 to 180 s, adjunctive behavior increases. Beyond 180 s, adjunctive behavior drops off and reaches low levels at 300 s. For example, a rat may receive a food pellet every 10 s and drink only a bit more than a normal amount of water between pellet deliveries. When the schedule is changed to 100 s, drinking increases; polydipsia goes up again if the schedule is stretched to 180 s. As the time between pellets is further increased to 200, 250, and then 300 s, water consumption goes down. This increase, peak, and then drop in schedule-induced behavior is illustrated in Figure 7.6 and is called a bitonic function. The function has been observed in species other than the rat and occurs for other adjunctive behavior (see Keehn & Jozsvai, 1989, for contrary evidence).
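A bitonic function rises to a single peak and then falls. The Python sketch below uses a hypothetical helper (`bitonic_peak`, not from any library) that checks whether a series of measurements has this shape and, if so, returns the x value at the peak. It assumes strict increases and decreases; real data are noisy and would need smoothing or a statistical test before such a check means much. The intake numbers in the example are made up purely to show the shape and are not data from any study.

```python
from typing import Optional, Sequence


def bitonic_peak(xs: Sequence[float], ys: Sequence[float]) -> Optional[float]:
    """Return the x at which ys peaks if ys strictly rises, then strictly falls.

    Returns None when the series is not bitonic (e.g., only rising,
    only falling, flat, or with more than one peak).
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(ys)
    if n < 3:
        return None
    i = 0
    # Climb the rising limb.
    while i + 1 < n and ys[i + 1] > ys[i]:
        i += 1
    peak = i
    # A bitonic curve needs both a rising and a falling limb.
    if peak == 0 or peak == n - 1:
        return None
    # Descend the falling limb; it must reach the last point.
    while i + 1 < n and ys[i + 1] < ys[i]:
        i += 1
    return xs[peak] if i == n - 1 else None


if __name__ == "__main__":
    intervals = [10, 100, 180, 250, 300]    # seconds between food pellets
    intake = [1, 4, 6, 3, 2]                # hypothetical values, not real data
    print(bitonic_peak(intervals, intake))  # 180
```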

In addition to the reinforcement schedule, adjunctive behavior becomes more and more excessive as the level of deprivation increases. A rat that is at 80% of its normal body weight and is given food pellets every 20 s will drink more water than an animal at 90% weight and on the same schedule. Experiments have shown that food-schedule-induced drinking (Falk, 1969), airstream licking (Chillag & Mendelson, 1971), and attack (Dove, 1976) go up as an animal's body weight goes down. Thus, a variety of induced activities escalate when deprivation for food is increased and when food is the scheduled reinforcer.

Falk (1977) has noted that “on the surface” adjunctive behavior does not seem to make sense:

[Adjunctive activities] are excessive and persistent. A behavioral phenomenon which encompasses many kinds of activities and is widespread over species and high in predictability ordinarily can be presumed to be a basic mechanism contributing to adaptation and survival. The puzzle of adjunctive behavior is that, while fulfilling the above criteria its adaptive significance has escaped analysis. Indeed, adjunctive activities have appeared not only curiously exaggerated and persistent, but also energetically quite costly.

(p. 326)

FIG. 7.6 A bitonic relationship is presented, showing time between food pellets and amount of adjunctive water drinking.

In fact, Falk (1977) goes on to argue that induced behavior does make biological sense.

The argument made by Falk is complex and beyond the scope of this book. Simply stated, adjunctive behavior may be related to what ethologists call displacement behavior. Displacement behavior is seen in the natural environment and is “characterized as irrelevant, incongruous, or out of context. … For example, two skylarks in combat might suddenly cease fighting and peck at the ground with feeding movements” (Falk, 1971, p. 584). The activity of the animal does not make sense given the situation, and the displaced responses do not appear to follow from immediately preceding behavior. Like adjunctive behavior, displacement activities arise when consummatory activities (e.g., eating or drinking) are interrupted or prevented. In the laboratory, a hungry animal is interrupted from eating when small bits of food are intermittently delivered.

Adjunctive and displacement activities occur at high strength when biologically relevant behavior (e.g., eating or mating) is blocked. Recall that male pigeons engage in adjunctive behavior when reinforced with the sight of (but not access to) female members of the species. These activities may increase the chance that the animal contacts other opportunities in the environment. A bird that pecks at tree bark when prevented from eating may find a new food source. Armstrong (1950) has suggested that “a species which is able to modify its behavior to suit changed circumstances by means of displacements, rather than by the evolution of ad hoc modifications starting from scratch will have an advantage over other species” (Falk, 1971, p. 587). Falk, however, goes on to make the point that evolution has probably eliminated many animals that engage in nonfunctional displacement activities.

Adjunctive behavior is another example of activity that is best analyzed by considering the biological context. Responses that do not seem to make sense may ultimately prove adaptive. The conditions that generate and maintain adjunctive and displacement behavior are similar. Both types of responses may reflect a common evolutionary origin, and this suggests that our understanding of adjunctive behavior will be improved by analyzing the biological context.

In 1967, Carl Cheney (who was then at Eastern Washington State University) ran across a paper (Routtenberg & Kuznesof, 1967) that reported self-starvation in laboratory rats. Cheney (coauthor of this textbook) thought that this was an unusual effect since most animals are reluctant to kill themselves for any reason. Because of this, he decided to replicate the experiment and he recruited W. Frank Epling (former coauthor of this textbook), an undergraduate student at the time, to help run the research. The experiment was relatively simple. Cheney and Epling (1968) placed a few rats in running wheels and fed them for 1 h each day. The researchers recorded the daily number of wheel turns, the weight of the rat, and the amount of food eaten. Surprisingly, the rats increased wheel running to excessive levels, ate less and less, lost weight, and, if allowed to continue in the experiment, died of starvation. Importantly, the rats were not required to run and they had plenty to eat, but they stopped eating and ran as much as 10 miles a day.

Twelve years later, Frank Epling (who was then an assistant professor of psychology at the University of Alberta) began to do collaborative research with David Pierce (coauthor of this textbook), a professor of sociology at the same university. They wondered if anorexic patients were hyperactive like the animals in the self-starvation experiments. If they were, it might be possible to develop an animal model of anorexia. Clinical reports indicated that indeed many anorexic patients were excessively active. For this reason, Epling and Pierce began to investigate the relationship between wheel running and food intake (Epling & Pierce, 1988; Pierce & Epling, 1991; Pierce, 2001). The basic finding is that physical activity decreases food intake and that decreased food intake increases activity. Epling and Pierce call this feedback loop activity-based anorexia or just activity anorexia, and argue that a similar cycle occurs in anorexic patients (see Epling & Pierce, 1992; Epling, Pierce, & Stefan, 1983).

This analysis of eating and exercise suggests that these activities are interrelated. Depriving an animal of food should increase the reinforcing value of exercise. Rats that are required to press a lever in order to run on a wheel should work harder for wheel access when they are deprived of food. Additionally, engaging in exercise should reduce the reinforcing value of food. Rats that are required to press a lever for food pellets should not work as hard for food following a day of exercise. Pierce, Epling, and a graduate student, Doug Boer, designed two experiments to test these ideas (Pierce, Epling, & Boer, 1986).

We asked whether food deprivation increased the reinforcing effectiveness of wheel running. If animals worked harder for an opportunity to exercise when deprived of food, this would show that running had increased in its capacity to support behavior. That is, depriving an animal of food should increase the reinforcing effectiveness of running. This is an interesting implication because increased reinforcement effectiveness is usually achieved by withholding the reinforcing event. Thus, to increase the reinforcement effectiveness of water a researcher typically withholds access to water, but (again) in this case food is withheld in order to increase the reinforcing effectiveness of wheel access.

We used nine young rats of both sexes to test the reinforcing effectiveness of wheel running as food deprivation changed. The animals were trained to press a lever to obtain 60 s of wheel running. When the rat pressed the lever, a brake was removed and the running wheel was free to turn. After 60 s, the brake was again activated and the animal had to press the lever to obtain more wheel access for running. The apparatus that we constructed for this experiment is shown in Figure 7.7.

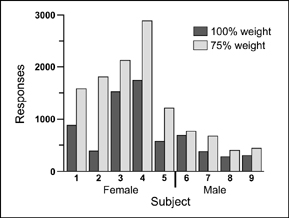

Once lever pressing for wheel running was stable, each animal was tested when it was food deprived (75% of normal weight) and when it was at free-feeding weight. Recall that the animals were expected to work harder for exercise when they were food deprived. To measure the reinforcing effectiveness of wheel running the animals were required to press the lever more and more for each opportunity to run: a progressive ratio schedule. Specifically, the rats were required to press 5 times to obtain 60 s of wheel running, and then 10, 15, 20, 25, and so on. The point at which they gave up pressing for wheel running was used as an index of the reinforcing effectiveness of exercise.
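The progressive ratio arithmetic is easy to misjudge, so here is a minimal Python sketch assuming, as described above, that the requirement starts at 5 presses and grows by 5 after each 60-s bout of running. The helper names are hypothetical. The breakpoint is taken here to be the largest ratio completed; how the original study decided that an animal had given up (for example, a set period without pressing) is not modeled.

```python
def ratio_requirement(n: int, step: int = 5) -> int:
    """Presses required for the n-th reinforcer (n = 1, 2, 3, ...): 5, 10, 15, ..."""
    return step * n


def cumulative_presses(k: int, step: int = 5) -> int:
    """Total presses needed to earn the first k reinforcers.

    Sum of step * n for n = 1..k, i.e. step * k * (k + 1) / 2.
    """
    return step * k * (k + 1) // 2


def breakpoint_ratio(total_presses: int, step: int = 5) -> int:
    """Largest ratio completed with `total_presses` presses (0 if none)."""
    k = 0
    while cumulative_presses(k + 1, step) <= total_presses:
        k += 1
    return ratio_requirement(k, step)


if __name__ == "__main__":
    # Earning four bouts of running takes 5 + 10 + 15 + 20 = 50 presses.
    print(cumulative_presses(4))  # 50
    print(breakpoint_ratio(50))   # 20
    print(breakpoint_ratio(49))   # 15
```

Because the requirement grows linearly, total presses grow roughly with the square of the number of reinforcers earned, so a modest difference in breakpoint corresponds to a large difference in total responding.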

The results of this experiment are shown in Figure 7.8. All animals lever pressed for wheel running more when they were food deprived than when at normal weight. In other words, animals worked harder for exercise when they were hungry. Further evidence indicated that the reinforcing effectiveness of running went up and down as an animal's weight was made to decrease and increase. For example, one rat pressed the bar 1567 times when food deprived, 881 times at normal weight, and 1882 times when again food deprived. This indicated that the effect was reversible and was tied to the level of food deprivation (see Belke, Pierce, & Duncan, 2006, on substitutability of food and wheel running).

FIG. 7.7 Wheel-running apparatus used in the Pierce, Epling, and Boer (1986) experiment on the reinforcing effectiveness of physical activity as a function of food deprivation. From “Deprivation and Satiation: The Interrelations between Food and Wheel Running,” by W. David Pierce, W. Frank Epling, and D. P. Boer, 1986, Journal of the Experimental Analysis of Behavior, 46, 199–210. Copyright 1986 held by the Society for the Experimental Analysis of Behavior. Republished with permission.

FIG. 7.8 The graph shows the number of bar presses for 60 s of wheel running as a function of food deprivation. From “Deprivation and Satiation: The Interrelations between Food and Wheel Running,” by W. David Pierce, W. Frank Epling, and D. P. Boer, 1986, Journal of the Experimental Analysis of Behavior, 46, 199–210. Copyright 1986 held by the Society for the Experimental Analysis of Behavior. Republished with permission.

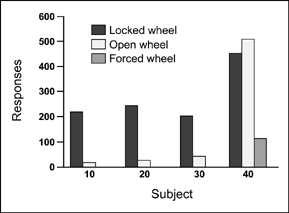

In a second experiment, we investigated the effects of exercise on the reinforcing effectiveness of food. Four male rats were trained to press a lever for food pellets. When lever pressing occurred reliably, we tested the effects of exercise on each animal's willingness to work for food. In this case, we expected that a day of exercise would decrease the reinforcement effectiveness of food on the next day.

Test days were arranged to measure the reinforcing effects of food. One day before each test, animals were placed in their wheels without food. On some of the days before a test, the wheel was free to turn, and on other days it was not. Three of the four rats ran moderately in their activity wheels on exercise days. One lazy rat did not run when given the opportunity. This animal was subsequently forced to exercise on a motor-driven wheel. All animals were well rested (3–4 h of rest) before each food test. This ensured that any effects were not caused by fatigue.

FIG. 7.9 The graph shows the number of bar presses for food when rats were allowed to run on a wheel as compared with no physical activity. From “Deprivation and Satiation: The Interrelations between Food and Wheel Running,” by W. David Pierce, W. Frank Epling, and D. P. Boer, 1986, Journal of the Experimental Analysis of Behavior, 46, 199–210. Copyright 1986 held by the Society for the Experimental Analysis of Behavior. Republished with permission.

The reinforcement effectiveness of food was assessed by counting the number of lever presses for food as food became more and more difficult to obtain. For example, an animal had to press 5 times for the first food pellet, 10 for the next, then 15, 20, 25, and so on. As in the first experiment, the giving-up point was used to measure reinforcement effectiveness. Presumably, the more effective the reinforcer (i.e., food), the harder the animal would work for it.

Figure 7.9 shows that when test days were preceded by a day of exercise, the reinforcing effectiveness of food decreased sharply. Animals pressed the lever more than 200 times when they were not allowed to run but no more than 38 times when running preceded test sessions. Food no longer supported lever presses following a day of moderate wheel running, even though a lengthy rest period preceded the test. Although wheel running was moderate, it represented a large change in physical activity because the animals were previously sedentary.
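To see what these totals mean in pellets, the cumulative cost of the food schedule described above (5, 10, 15, ... presses) can be written out. This is a worked illustration, assuming each reported total counts every press in the session, including presses toward an unfinished ratio; the symbol $P(k)$, the number of presses needed to earn $k$ pellets, is our notation rather than the original study's.

$$
\begin{aligned}
P(k) &= \sum_{i=1}^{k} 5i = \frac{5k(k+1)}{2} \\
P(3) &= 30 \le 38 < 50 = P(4) &&\Rightarrow \text{no more than 38 presses earns at most 3 pellets} \\
P(7) + (40 - 1) &= 140 + 39 = 179 < 200 &&\Rightarrow \text{more than 200 presses earns at least 8 pellets}
\end{aligned}
$$

Under this assumption, the animals earned at most three pellets after a day of running, compared with at least eight when running was prevented.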

Prior to each test, the animals spent an entire day without food. Because of this, the reinforcing effectiveness of food should have increased. Exercise, however, seemed to override the effects of food deprivation, since responding for food went down rather than up. Other evidence from these experiments suggested that the effects of exercise were similar to those of feeding the animal. Although exercise reduces the reinforcement effectiveness of food, the effect is probably not because wheel running serves as an economic substitute for food consumption (Belke, Pierce, & Duncan, 2006).

The rat that was forced to run also showed a sharp decline in lever pressing for food (see Figure 7.9). Exercise was again moderate but substantial relative to the animal's sedentary history. Because the reinforcement effectiveness of food decreased with forced exercise, we concluded that both forced and voluntary physical activity produce a decline in the value of food reinforcement. This finding suggests that people who increase their physical activity because of occupational requirements (e.g., ballet dancers) may value food less.

In our view, the motivational interrelations between eating and physical activity have a basis in natural selection. Natural selection favored those animals that increased travel in times of food scarcity. During a famine, organisms can either stay and conserve energy or become mobile and travel to another location. The particular strategy adopted by a species depends on natural selection. If travel led to reinstatement of food supply and staying put resulted in starvation, then those animals that traveled gained reproductive advantage.

A major problem for an evolutionary analysis of activity anorexia is accounting for the decreased appetite of animals that travel to a new food patch. The fact that increasing energy expenditure is accompanied by decreasing caloric intake seems to violate common sense. From a homeostatic (i.e., energy balance) perspective, food intake and energy expenditure should be positively related. In fact, this is the case if an animal has the time to adjust to a new level of activity and food supply is not greatly reduced.

When depletion of food is severe, however, travel should not stop when food is only infrequently contacted. This is because the gain from stopping to eat must be weighed against the gain from reaching a more abundant food patch. Frequent contact with food would signal a replenished food supply, and this should reduce the tendency to travel. Recall that a decline in the reinforcing effectiveness of food means that animals will not work hard for nourishment. When food is scarce, considerable effort may be required to obtain it. For this reason, animals ignore food and continue to travel. However, as food becomes more plentiful and the effort to acquire it decreases, the organism begins to eat. Food consumption lowers the reinforcement effectiveness of physical activity and travel stops (see also Belke, Pierce, & Duncan, 2006, on the partial substitution of food for physical activity). On this basis, animals that expend large amounts of energy on a migration or trek become anorexic.

When scientists are confronted with new and challenging data, they are typically loath to accept the findings. This is because researchers have invested time, money, and effort in experiments that may depend on a particular view of the world. Consider a person who has made a career of investigating the operant behavior of pigeons, with rate of pecking a key as the major dependent variable. The suggestion that key pecking is actually respondent rather than operant behavior would not be well received by such a scientist. If key pecks are reflexive, then conclusions about operant behavior based on these responses are questionable. One possibility is to go to some effort to explain the data within the context of operant conditioning.

In fact, Brown and Jenkins (1968) suggested just this sort of explanation for their results. Recall that these experimenters pointed to the species-specific tendency of pigeons to peck at stimuli they look at. When the light is illuminated, there is a high probability that the birds will look and peck. Some of these responses are followed by food, and pecking increases in frequency. Other investigators noted that when birds are magazine trained they stand in the general area of the feeder and the response key is typically at head height just above the food tray. Anyone who has watched pigeons knows that they bob their heads frequently. Since they are close to the key and are making pecking (or bobbing) motions, it is possible that a strike at the key is inadvertently followed by food delivery. From this perspective, key pecks are superstitious in the sense that they are accidentally reinforced. The superstitious explanation has an advantage because it does not require postulating a look-peck connection, and it is entirely consistent with operant conditioning.

Although these explanations of pecking as an operant are plausible, the possibility remains that autoshaped pecking is respondent behavior. An ingenious experiment by Williams and Williams (1969) was designed to answer this question. In their experiment on negative automaintenance, pigeons were placed in an operant chamber and key illumination was repeatedly followed by food. This is, of course, the same procedure that Brown and Jenkins (1968) used to show autoshaping. The twist in the Williams and Williams procedure was that if the bird pecked the key when it was illuminated, food was not presented. This is called omission training: if the pigeon pecks the key, the reinforcer is omitted; if the pigeon omits the response, the reinforcer is delivered.

The logic of this procedure is that if pecking is respondent, then it is elicited by the key light and the pigeon will reflexively strike the disk. If, on the other hand, pecking is operant, then striking the key prevents reinforcement and responses should not be maintained. Thus, the clear prediction is that pecking is respondent behavior if the bird continues to peck with the omission procedure in place. Using the omission procedure, Williams and Williams (1969) found that pigeons frequently pecked the key even though responses prevented reinforcement. This finding suggests that the sight of grain is an unconditioned stimulus for pigeons, eliciting an unconditioned response of pecking at the food. When a key light stimulus precedes grain presentation, it becomes a CS that elicits pecking at the key (CR). Figure 7.10 shows this arrangement between stimulus events and responses. It is also the case that when a peck cancels the food (US), the key light (CS) is not paired with the US on that trial, and the response (CR) undergoes extinction.
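The contrast between the standard autoshaping procedure and the omission (negative automaintenance) procedure can be written as a rule that decides whether food follows a key-light trial. This is a minimal sketch with hypothetical names (`Procedure`, `food_delivered`); it encodes only the peck-food contingencies described above, not the timing parameters of the original experiments, which are not given here.

```python
from enum import Enum


class Procedure(Enum):
    AUTOSHAPING = "autoshaping"  # key light -> food, regardless of pecking
    OMISSION = "omission"        # key light -> food only if the bird does NOT peck


def food_delivered(procedure: Procedure, pecked: bool) -> bool:
    """Return True if grain follows the key-light trial."""
    if procedure is Procedure.AUTOSHAPING:
        return True          # pecking has no programmed consequence
    if procedure is Procedure.OMISSION:
        return not pecked    # a peck cancels the food on this trial
    raise ValueError(f"unknown procedure: {procedure}")


if __name__ == "__main__":
    for pecked in (True, False):
        outcome = food_delivered(Procedure.OMISSION, pecked)
        print(f"omission, pecked={pecked}: food={outcome}")
```

Under the omission rule a peck is never followed by food, so an operant account predicts that pecking should drop out; continued pecking is the result that points to respondent control.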

In discussing their results, Williams and Williams state that “the stimulus-reinforcer pairing overrode opposing effects of … reinforcement indicat[ing] that the effect was a powerful one, and demonstrat[ing] that a high level of responding does not imply the operation of … [operant] reinforcement” (1969, p. 520). The puzzling aspect of this finding is that in most cases pecking to a key is regulated by reinforcement and is clearly operant. Many experiments have shown that key pecks increase or decrease in frequency depending on the consequences that follow behavior.

FIG. 7.10 Omission procedures are based on Williams and Williams (1969). The birds pecked the key even though these responses prevented reinforcement.

Because of this apparent contradiction, several experiments were designed to investigate the nature of autoshaped pecking. Schwartz and Williams (1972a) preceded grain reinforcement for pigeons by turning on a red or white light on two separate keys. The birds responded by pecking the illuminated disk (i.e., they were autoshaped). On some trials, the birds were presented with both the red and white keys. Pecks on the red key prevented reinforcement, as in the omission procedure used by Williams and Williams (1969). Pecks to the white key, however, did not prevent reinforcement.

On these choice trials, the pigeons showed a definite preference for the white key that did not stop the delivery of grain. In other words, the birds more frequently pecked the key that was followed by the presentation of grain. Because this is a description of behavior regulated by an operant contingency (peck → food), autoshaped key pecks cannot be exclusively respondent. In concluding their paper, Schwartz and Williams wrote:
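The choice trials of Schwartz and Williams (1972a) can be sketched the same way: each key carries its own peck-food contingency, and the question is which key the birds come to favor. The names below (`KEY_RULES`, `food_after_peck`, `operant_prediction`) are hypothetical, and the sketch encodes only the contingencies described in the text, not the experiment's trial structure or timing.

```python
# Contingency for a peck on each key during choice trials, as described above:
# red-key pecks cancel grain (omission), white-key pecks do not.
KEY_RULES = {
    "red": False,   # peck -> no food on this trial
    "white": True,  # peck -> food still delivered
}


def food_after_peck(key: str) -> bool:
    """Return True if grain follows a peck on the given key."""
    try:
        return KEY_RULES[key]
    except KeyError:
        raise ValueError(f"unknown key: {key!r}") from None


def operant_prediction() -> str:
    """Key that an operant (peck -> food) account predicts the birds will prefer."""
    # max over booleans picks the key whose pecks are followed by food.
    return max(KEY_RULES, key=KEY_RULES.get)


if __name__ == "__main__":
    print(operant_prediction())  # white
```

The operant contingency favors the white key, which is the preference the pigeons actually showed.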

A simple application of respondent principles cannot account for the phenomenon as originally described … and it cannot account for the rate and preference results of the present study. An indication of the way operant factors can modulate the performance of automaintained behavior has been given. … The analysis suggests that while automaintained behavior departs in important ways from the familiar patterns seen with arbitrary responses, the concepts and procedures developed from the operant framework are, nevertheless, influential in the automaintenance situation.

(Schwartz & Williams, 1972a, p. 356)

Schwartz and Williams (1972b) went on to investigate the nature of key pecking by pigeons in several other experiments. The researchers precisely measured the contact duration of each peck that birds made to a response key. When the omission procedure was in effect, pigeons produced short-duration pecks. If the birds were autoshaped but key pecks did not prevent the delivery of grain, the duration of the pecks was long. These same long-duration pecks occurred when the pigeons responded for food on a schedule of reinforcement. Generally, it appears that there are two types of key pecks: short-duration pecks evoked (perhaps elicited) by the presentation of grain, and long-duration pecks that occur when the bird's behavior is brought under operant control.

Other evidence also suggests that both operant and respondent conditioning are involved in autoshaping. It is likely that the first autoshaped pecking is respondent behavior elicited by light-food pairings. Once pecking produces food, however, it comes under operant control. Even when the omission procedure is in effect, both operant and respondent behavior are conditioned, and there is probably no uniform learning process underlying autoshaped responses (Papachristos & Gallistel, 2006). During omission training, a response to the key turns off the key light and food is not delivered. If the bird does not peck the key, the light is eventually turned off and food is presented. Because on these trials turning the light off is associated with reinforcement, a dark key becomes a conditioned reinforcer. Thus, the bird pecks the key and is reinforced when the light goes off. Hursh, Navarick, and Fantino (1974) provided evidence for this view. They showed that birds quit responding during omission training if the key light did not immediately go out when a response was made.

This chapter has considered several areas of research on respondent-operant interactions. Autoshaping showed that an operant response (key pecking for food) could actually be elicited by respondent procedures. Before this research, operants and respondents had been treated as separate systems subject to independent controlling procedures. The Brelands' animal training demonstrations provided a hint that the two systems were not distinct—with species-specific behavior being elicited by operant contingencies. Their work revealed the biological foundations of conditioning as well as the contributions made by biologically relevant factors. Animals are prepared by evolution to be responsive to specific events and differentially sensitive to various aspects of the environment.