Chapter 1

Implement and monitor an Azure Infrastructure

Working as a Microsoft Azure cloud solutions architect, you will be designing solutions and engaging with IT professionals who will implement your design. So, why does the AZ-303 certification exam require you to know how to deploy and configure resources? As an architect, you must understand how resources are linked to create a solution that meets your customers’ requirements, while adhering to the pillars of great architecture:

■ Cost optimization

■ Operational excellence

■ Performance efficiency

■ Reliability

■ Security

Achieving this requires a deep understanding of how each underlying resource is implemented and configured. The AZ-303 exam expects you to demonstrate this knowledge through hands-on labs, using both the Azure portal and the command line.

Once your design is implemented and starts to move through the stages of development and testing, you need feedback to ensure that these pillars are maintained. There is little point in having a solution in production that is expensive, consistently fails, and is insecure. Monitoring the infrastructure throughout development and testing and into production provides continuous feedback at every stage, and it helps ensure that your product does not fail or become insecure.

Each resource in Azure can be configured for monitoring to return feedback to centralized locations. The AZ-303 certification exam expects you to demonstrate a solid understanding of monitoring. You must know how to configure your resources for monitoring, how to collate the data, and how it can be visualized to pinpoint possible issues and faults.

The Azure Solutions Architect certification is an expert-level title, so you are expected to have at least intermediate-level Azure configuration abilities. You are also expected to have basic scripting skills with the Azure CLI and the Azure PowerShell modules.

Skills covered in this chapter:

Skill 1.1: Implement cloud infrastructure monitoring

Continuously monitoring applications and infrastructure will enable your customers to be timelier in their responses to issues and changes. Responses to alerts generated from a well-monitored system can be automated, meaning in some circumstances, an application can self-heal. There are many monitoring solutions within Azure, each with its own use cases and configurability. As a solution architect, you need an excellent understanding of which monitoring solution fits which use case. This skill looks at some of the monitoring options available to you, what they monitor, and how to configure them.

This skill covers how to:

Monitor security

Your customers’ reputation is linked with the security of their systems; therefore, as an architect, you must know how to design secure systems. This is only one part of the puzzle; you cannot assume that your design is bulletproof. You must also be able to instruct your customers how to monitor systems continuously for potential attacks and mitigate the threats before data becomes at risk.

There are multiple security examinations available for Azure, though for this exam, you need to know the options available to you to monitor security and their high-level use cases.

Azure Security Center

When architecting solutions in Azure, there is a shared responsibility between the customer and Azure to ensure the resources are kept safe. Azure Security Center is an infrastructure security management system designed to help mitigate the security challenges that moving workloads to the cloud brings:

■ Lack of security skills. Your customers might not have the traditional in-house skills and capital needed to secure a complex infrastructure.

■ Increasing attack sophistication. Attacks are becoming more sophisticated, whether your workloads are in the cloud, are on-premises, or are part of a hybrid cloud and on-premises setup.

■ Frequently changing infrastructure. Because of the flexibility of the cloud, architecture can rapidly change, bringing ever-moving attack vectors.

Security Center comes in two tiers, Free and Standard:

■ Free tier. The free tier is enabled by default and provides security recommendations on Azure VMs and App Service.

■ Standard tier. The standard tier increases monitoring to any cloud VM and hybrid VM workloads. The standard tier also includes some of the most frequently utilized PaaS services, such as data, storage, and containers.

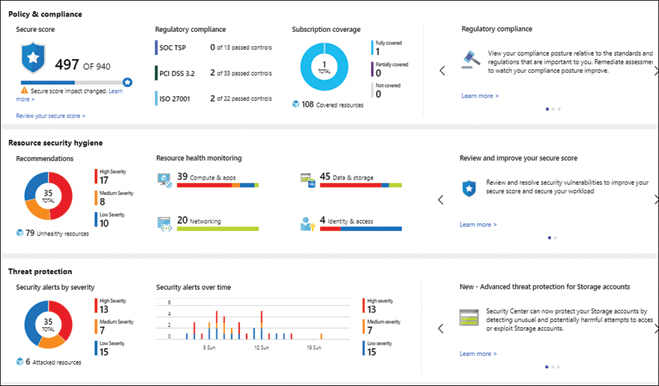

When you activate Security Center for either tier, a monitoring agent is required for most of the security assessments. You can configure Security Center to automatically deploy the Log Analytics Agent onto Azure virtual machines, though PaaS (Platform as a Service) services require no extra configuration. For on-premises VMs and VMs hosted in other clouds, the Log Analytics Agent must be manually installed. Once the agents are installed and configured, Security Center begins assessing the security state of all your VMs, networks, applications, and data. The Security Center analytics engine analyzes the data returned from the agents to provide a security summary, as displayed in Figure 1-1.

FIGURE 1-1 Security Center Overview blade

Figure 1-1 provides an excellent overview of the Security Center standard tier core features:

■ Policy & Compliance. This section includes the Secure Score, which is a key indicator of how well your infrastructure is secured. Security Center assesses resources across your subscriptions and organization for security issues. The Secure Score is an aggregation of identified security issues and their corresponding risk levels. The higher the Secure Score, the lower the risk. The compliance section tracks whether regulations for standards such as ISO 27001 and PCI DSS are being followed.

■ Resource Security Hygiene. This section provides resource and network recommendations. Drill through the menus to view recommendations and remediate them to improve your security posture and the Secure Score.

■ Threat Protection. Logs from data and compute resources are passed through algorithms for behavioral analysis, anomaly detection, and integrated threat intelligence to look for possible threats. Alerts are created depending on severity.

The standard tier also includes just-in-time (JIT) access for Azure VMs. With JIT access enabled on a VM, an administrator can request access from an IP range for a specified length of time. If the administrator has the correct RBAC permissions, Azure creates a network rule, and the administrator is granted access. Once the specified time has passed, Azure removes the network rule to revoke access.
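The JIT flow described above can be modeled in a few lines of Python. This is only a sketch of the logic, not the Security Center API: the JitRule class, the request_access function, and the example IP ranges are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class JitRule:
    """Hypothetical stand-in for the temporary network rule Azure creates."""
    source_range: str      # CIDR range the administrator requested access from
    opens_at: datetime     # when the request was approved
    duration: timedelta    # requested access window

    def allows(self, source_ip: str, at: datetime) -> bool:
        # Access needs both a matching source IP and an unexpired window.
        in_range = ip_address(source_ip) in ip_network(self.source_range)
        in_window = self.opens_at <= at < self.opens_at + self.duration
        return in_range and in_window


def request_access(has_rbac_permission, source_range, now, hours):
    """Return a rule only if the requester holds the right RBAC permissions."""
    if not has_rbac_permission:
        return None
    return JitRule(source_range, now, timedelta(hours=hours))


approved = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
rule = request_access(True, "203.0.113.0/24", approved, hours=3)
print(rule.allows("203.0.113.10", approved + timedelta(hours=1)))  # inside the window
print(rule.allows("203.0.113.10", approved + timedelta(hours=4)))  # window has expired
```

The point of the model is that access is the conjunction of three checks (permission, source range, and time window), and that revocation needs no action from the administrator because the window simply lapses.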

Azure Sentinel

Azure Sentinel is a security orchestration, automation, and response (SOAR) and security information and event management (SIEM) solution. Security Center is used to collect data and detect security vulnerabilities. Azure Sentinel extends beyond this by bringing in tools to help your customers hunt for threats, and then investigate and respond to them, all at enterprise scale:

■ Collect data at cloud scale. Data collected includes other cloud, on-premises, Microsoft 365, and Advanced Threat Protection data.

■ Detect previously undetected threats. Threats are detected using Microsoft analytics and threat intelligence.

■ Investigate threats with AI. You can hunt for suspicious activities at scale.

■ Respond to incidents. Azure Sentinel includes built-in orchestration and automation of common tasks.

Azure Sentinel requires a Log Analytics workspace when it is enabled and is billed based on the amount of data ingested from the workspace.

Monitor performance

Once the applications your customers have architected go into production, response time is likely to be one of the main KPIs your users are interested in. Performance needs to be monitored so that your customers know about potential issues before the application users do. This section looks at how to configure resources for performance monitoring and how Azure Monitor can use this data to look for performance issues.

Configure diagnostic settings on resources

Azure automatically generates audit and diagnostic information across the platform in the form of platform logs. Platform logs are invaluable to an architect because they contain information generated across different layers of Azure:

■ Activity Log. All write operations (PUT, POST, DELETE) on a resource (the management plane). Tracked at the subscription level, this log contains who made the change, from where a change was made, and when a change was made.

■ Azure Active Directory Log. This is a full audit trail and tracking of sign-in activity for Azure Active Directory.

■ Resource Logs. Resource logs are available for operations that were performed within a resource (the data plane). For example, a request on a WebApp or the number of times a logic app has run can both be logged. The resource log detail varies with resource type because each resource delivers a different service.

This information gives an architect a view of what is currently happening on their customers’ application(s) and what happened previously.

Activity Log and Azure Active Directory Log are automatically available to view within the Azure portal. Resource logs must be configured at the resource level through diagnostic settings before they can be viewed. Configuring diagnostic settings has the same generic steps, regardless of the resource type.

Platform as a Service (PaaS)

Follow these steps on a platform as a service (PaaS) resource to enable diagnostic settings:

Navigate to the menu blade for a PaaS resource in the Azure portal. Scroll down to Monitoring and click Diagnostic Settings. The Diagnostic Settings blade opens, which shows a list of settings that can be streamed to other destinations. Click Add Diagnostic Setting to configure data collection.

Clicking Add Diagnostic Setting opens the Diagnostic Setting configuration blade, as shown in Figure 1-2.

FIGURE 1-2 Configure diagnostic settings

In the Diagnostic Setting Name field, add a unique name for this diagnostic setting at the resource.

Under Category Details, select all categories of data you want to collect:

■ Log. These are the resource logs. The categories of log will differ depending on the resource type chosen. The example in Figure 1-2 is from a Logic App.

■ Metric. Choosing this option will stream numerical metric data in a time series format about this resource.

Under Destination Details, select at least one destination for the chosen categories to stream to:

■ Log Analytics. Check this to stream data to a Log Analytics workspace. For more information about Log Analytics see “Configure a Log Analytics Workspace,” later in this chapter. If Log Analytics is selected, it becomes mandatory to select the Subscription and Log Analytics Workspace, which will receive the data, as shown in Figure 1-2.

■ Archive To A Storage Account. Check this to archive your chosen categories into a storage account; this option is most useful if future auditing of the resource is required. Once you have chosen this option, the Retention (Days) field is enabled with a value of 0 for each selected category, as shown previously in Figure 1-2. Edit this value to set the number of days each category should be retained. If you change this value later, it will only take effect on new logs and metrics stored. Old logs and metrics will continue to be retained for the original retention period. If Archive To A Storage Account is selected, a Subscription and Storage Account must be selected from the respective drop-down menus, as shown previously in Figure 1-2.

■ Stream To An Event Hub. Select this option to send diagnostics data to an Event Hub. Sending data to an Event Hub enables streaming of the data outside of Azure to third-party applications, such as security information and event management (SIEM) software. If Stream To An Event Hub is selected, the Subscription and Event Hub Namespace fields must be populated.

Once the diagnostic settings are chosen, click Save at the top left to save the choices. The categories and destinations selected are now displayed on the Diagnostic Settings blade, and data will automatically be sent to the chosen destinations.
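The retention behavior described for the Archive To A Storage Account option (a changed value applies only to newly stored data) can be pictured with a small model. The sketch below is an illustration of that rule only; the ArchivedRecord and ArchiveCategory classes and the 30-day and 90-day values are hypothetical and are not part of any Azure SDK.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ArchivedRecord:
    written_on: date
    retention_days: int   # captured at write time, never updated afterward

    def expires_on(self) -> date:
        return self.written_on + timedelta(days=self.retention_days)


class ArchiveCategory:
    """Hypothetical model of one log category archived to a storage account."""

    def __init__(self, retention_days: int):
        self.retention_days = retention_days
        self.records = []

    def write(self, written_on: date) -> ArchivedRecord:
        # Each record is stamped with the retention value in force when it is stored.
        record = ArchivedRecord(written_on, self.retention_days)
        self.records.append(record)
        return record


category = ArchiveCategory(retention_days=30)
old_record = category.write(date(2024, 1, 1))

category.retention_days = 90            # the setting is edited later
new_record = category.write(date(2024, 1, 10))

print(old_record.expires_on())          # still governed by the original 30 days
print(new_record.expires_on())          # governed by the new 90 days
```

For your customers, the practical consequence is that shortening the retention period does not immediately reduce the data already archived.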

Diagnostic settings also can be managed on the command line through PowerShell using the Set-AzDiagnosticSetting cmdlet or the az monitor diagnostic-settings Azure CLI command. For example, to enable specified log categories for an Azure SQL database, execute this command in PowerShell:

Set-AzDiagnosticSetting -Name sqldb112-diagsettings `
    -ResourceId $dbResource.ResourceId `
    -Category QueryStoreRuntimeStatistics, QueryStoreWaitStatistics, Errors, DatabaseWaitStatistics, Deadlocks `
    -Enabled $true `
    -StorageAccountId $storageResource.ResourceId `
    -WorkspaceId $workspaceResource.ResourceId

Azure Virtual Machines

Azure VMs are not part of PaaS. Instead, they form part of Azure’s Infrastructure as a Service (IaaS) offering, and you manage them. For an Azure VM to log data, the Azure diagnostics extension must be installed. Doing so sets up an Azure Monitor agent on the VM. The diagnostic extension is an Azure VM extension, meaning it can be installed via an ARM template or on the command line. It can also be installed through the Azure portal. The name of the extension differs between operating systems. For Windows, it is the Windows Azure diagnostics extension (WAD); for Linux, it is the Linux diagnostic extension (LAD).

To install either extension through the Azure portal, navigate to the Diagnostic Settings menu item of any Azure virtual machine. You will have the option to choose Enable Guest-Level Monitoring if the diagnostic extension has not already been installed. Once installed, tabs for metrics and logging are enabled within the Diagnostic Settings blade. The number of tabs and their configurable contents depend on the operating system of the VM. For a Windows VM, these tabs are displayed:

■ Overview. This is a summary page that shows the options selected in the other tabs.

■ Performance Counters. Choose Basic to pick from groupings of counters to be collected, such as CPU, Memory, and Disk. Choose Custom to pick specific counters.

■ Logs. Choose Basic to pick from groupings of Application, Security, and System logs to be collected, or choose Custom to select specific logs and levels using an XPath expression. IIS logs, .NET application logs, and Event Tracing for Windows (ETW) logs can also be selected for collection.

■ Crash Dumps. Collect full or mini dumps for selected processes.

■ Sinks. Optionally, you can send data to Azure Monitor or Application Insights.

■ Agent. If the diagnostics agent is malfunctioning, it can be removed from this tab and can then be reinstalled. You can also edit the Log Level, maximum local disk space (Disk Quota), and Storage Account for the agent.

If you have created a Linux VM, you will see the following tabs:

■ Overview. This is a summary page that displays the options selected from the other tabs.

■ Metrics. Choose Basic to pick from groupings of metrics to be collected, such as Processor, Memory, and Network and their sample rates. If you choose Custom, you can then choose Add New Metric or Delete specific metrics, and you can set individual sample rates.

■ Syslog. On this tab, you can choose which syslog facilities to collect and the severity level at which you want to collect them.

■ Agent. If the diagnostics agent is malfunctioning, it can be removed from this tab, and can then be reinstalled. Also, you can Pick A Storage Account for the agent.

Note Diagnostic Settings and Diagnostics Extension

Not all services have the Diagnostic Settings menu item in their menu blades. When the Diagnostic Settings option is missing, navigate to the resource group and click Diagnostic Settings. If Diagnostic Settings can be enabled for the service, it will be listed. For example, VPN gateways must be configured in this way. If you are planning to use the Log Analytics extension on a Linux VM, it must be installed before the diagnostic extension.

Create a performance baseline for resources

Baselining resources gives your customers a view of what expected resource behavior looks like. When performance degradation occurs, your customers can use their resource baselines to aid in their analyses and fault resolution.

Azure Monitor collects two main types of data:

■ Metrics. Numerical values or counts measured over time (a time series), such as CPU usage or waits

■ Logs. Events and trace files

The metrics in Azure Monitor form the baseline, giving a time-series view of your resources. You can see a metrics view for most single resources by choosing the Metrics menu against the resource itself. You can use this to build up a view of how your resource is performing. Here is an example for an Azure VM:

1. In the Azure portal, navigate to any virtual machine and click Metrics on the Virtual Machine menu blade.

2. You must now choose the metrics to add to the chart. The scope has already been selected for you; it is the VM. Select a metric from the Metrics menu and then select an aggregation from the Aggregation menu. Click away from the metric, and it will be added to the chart.

3. To add a new metric, click Add Metric and repeat step 2. Repeat this process until all metrics you require are present on the chart.

Figure 1-3 shows a metrics chart, with the CPU performance for the last 24 hours suggesting this VM might need to be scaled up.

FIGURE 1-3 Metrics chart for VM CPU usage

Once completed, you can choose to add the chart to your Azure portal dashboard by clicking Pin To Dashboard. To navigate to a dashboard page, click the menu icon at the top left of any Azure page, click Dashboard, and choose the name of the dashboard to which you added the chart.
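One way to picture how a metric history becomes a baseline is the following Python sketch. It assumes CPU samples have already been exported from the Metrics chart; the sample values, the function names, and the two-standard-deviation tolerance are illustrative assumptions rather than anything Azure Monitor prescribes.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize historical samples as the expected value and its spread."""
    return {"mean": mean(samples), "stdev": stdev(samples)}


def deviates(value, baseline, tolerance=2.0):
    """Flag a reading that sits more than `tolerance` standard deviations from the mean."""
    return abs(value - baseline["mean"]) > tolerance * baseline["stdev"]


# Hypothetical hourly CPU percentages taken during normal operation.
cpu_history = [22.0, 25.0, 24.0, 23.0, 26.0, 24.0]
baseline = build_baseline(cpu_history)

print(baseline["mean"])             # expected CPU level
print(deviates(25.0, baseline))     # a reading within normal variation
print(deviates(80.0, baseline))     # a reading worth investigating
```

A statistical summary like this is only one possible definition of a baseline; a pinned chart that your customers review regularly serves the same purpose visually.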

Monitor for unused resources

Because of the flexibility of Azure, it can be easy for your customers to have unused or under-utilized resources hidden within their subscriptions. With pay-by-the-minute or hourly billing, the cost of an unused resource could affect spending considerably. Azure Advisor contains cost recommendations that cover the following:

■ Underutilized VMs. These are VMs that can be downsized or deallocated.

■ Right-sizing database resources. These are Azure SQL, MySQL, or MariaDB resources that can be downsized.

■ Idle network gateways. These are VNet gateways that have not been used for 90 or more days and could be deleted.

■ Reserved VM / PaaS instances. You can buy capacity up front to save costs based on PaaS (Platform as a Service) and VM usage.
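The idle gateway recommendation in the list above is a simple age check, which the Python sketch below makes concrete. It is not how Azure Advisor is implemented; the gateway names, the last-used dates, and the idle_gateways function are hypothetical and exist only to illustrate the 90-day rule.

```python
from datetime import date

IDLE_THRESHOLD_DAYS = 90   # taken from the recommendation described above


def idle_gateways(last_used, today):
    """Return the names of gateways unused for 90 or more days."""
    return sorted(
        name
        for name, used_on in last_used.items()
        if (today - used_on).days >= IDLE_THRESHOLD_DAYS
    )


# Hypothetical inventory: gateway name mapped to the date it last carried traffic.
inventory = {
    "gw-prod": date(2024, 6, 25),
    "gw-test": date(2024, 3, 1),
}

print(idle_gateways(inventory, today=date(2024, 6, 30)))
```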

The Azure Advisor recommendations are free, and you can access Azure Advisor through the Azure portal:

Search for Azure Advisor in the search resources bar in the Azure portal. Select Azure Advisor in the drop-down menu that opens from the search bar as you type the resource name. The Azure Advisor overview page loads, as shown in Figure 1-4; the Cost summary is shown at the top left.

FIGURE 1-4 Azure Advisor Overview blade

Click the Cost square to drill into the recommendations. Each recommendation is shown as High Impact, Medium Impact, or Low Impact, and the number and type of Impacted Resources are shown at the bottom of each recommendation. Potential yearly cost savings are shown in the top right of the Cost square.

Monitor performance capacity

Azure Monitor can collate data from many different sources through a variety of agents. In a hybrid environment, your customers will need a single view across their organization. To deliver this functionality, your customers will need to combine on-premises workload metrics with those from Azure. The diagnostic agent for VMs will only collect data from Azure VMs; it does not support on-premises VMs.

The Log Analytics Agent will collect data from Azure VMs, on-premises VMs, and VMs managed by System Center Operations Manager (SCOM). The Log Analytics Agent is also referred to as “OMS Linux Agent” or “Microsoft Monitoring Agent (Windows).” The Log Analytics Agent can be installed on an Azure VM from the Virtual Machines section of a Log Analytics workspace. The installation on an on-premises machine requires the agent to be downloaded and installed from the command line.

The VM data collected by the Log Analytics Agent can be viewed in Azure Monitor Logs. Azure Monitor Logs uses KQL (Kusto Query Language) to create reports on the data using queries. Azure Monitor Logs comes with built-in queries to help you get started. To view your data using these queries, log in to the Azure portal and follow these steps:

1. Search for Monitor in the search resources bar at the top of the Azure portal. (Note that Azure Monitor is listed in the Azure portal as Monitor.) Select Monitor in the drop-down menu that opens from the search bar as you type the resource name.

2. Choose Logs from the left pane. Azure Monitor Logs opens with the Example Queries page. Scroll through the All Queries section, where you can see the list of Azure resources that have example queries.

3. Scroll to the bottom and choose Other > Memory And CPU Usage. The example KQL is loaded into the query pane. Click Run to execute the query and view results.

4. Click Select Scope, which is located to the left of Run. Here, you can choose the scope at which your query will run. Select a resource group that contains virtual machines that are sending data to Log Analytics. Click Apply; you are returned to the Query Editor, where the selected scope is now to the left of Select Scope. Click Run; the data returned is restricted to your selected scope, which is the resource group you just selected.

5. Now alter the KQL by editing it directly. In Figure 1-5, the KQL has been edited from the one selected in Step 3 above. The TimeGenerated > ago(2h) predicate filter restricts the results to the last 2 hours, and the summarization of values returned, bin(TimeGenerated, 2m), groups them into 2-minute intervals.

FIGURE 1-5 Viewing capacity through Azure Monitor logs
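If you have no workspace to query, it can help to see what the two edited expressions in Figure 1-5 do to the data. The Python sketch below mimics a TimeGenerated > ago(2h) filter followed by an average summarized by bin(TimeGenerated, 2m). It is an approximation for study purposes: the sample readings are invented, and the helper names bin_time and summarize_avg are hypothetical rather than part of KQL or any Azure library.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def bin_time(timestamp, size):
    """Round a timestamp down to the start of its bucket, as bin() does."""
    return EPOCH + ((timestamp - EPOCH) // size) * size


def summarize_avg(samples, now, lookback, bucket):
    """Average the values per bucket for samples newer than now - lookback."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        if timestamp > now - lookback:                      # TimeGenerated > ago(2h)
            buckets[bin_time(timestamp, bucket)].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
samples = [
    (datetime(2024, 1, 1, 11, 0, 30, tzinfo=timezone.utc), 10.0),
    (datetime(2024, 1, 1, 11, 1, 30, tzinfo=timezone.utc), 20.0),
    (datetime(2024, 1, 1, 11, 2, 10, tzinfo=timezone.utc), 30.0),
    (datetime(2024, 1, 1, 9, 30, 0, tzinfo=timezone.utc), 99.0),   # older than 2 hours
]

result = summarize_avg(samples, now, timedelta(hours=2), timedelta(minutes=2))
for start, average in result.items():
    print(start.isoformat(), average)
```

The first two readings fall into the same 2-minute bucket and are averaged together, the third starts a new bucket, and the fourth is dropped by the time filter. Widening the bin smooths the chart; narrowing it exposes short spikes.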

Exam Tip KQL

It is important to have a basic understanding of the KQL language for the exam, though this can be difficult without access to the infrastructure that is creating data to query. Microsoft provides a tutorial database and a demo log analytics portal. These can be accessed for practice at https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/tutorial and https://portal.loganalytics.io/demo.

Need More Review? Azure Monitor Logs

The example in Figure 1-5 shows a single use case for Azure Monitor Logs. To learn more about the vast number of services whose data can be mined through logs and metrics, visit https://docs.microsoft.com/en-us/azure/azure-monitor/platform/data-platform-logs.

Visualize diagnostics data using Azure Monitor

Azure Monitor can collate data from many different sources through a variety of agents. Your customers will find the vast amount of data almost impossible to analyze without a graphical representation of the data. You have already seen how Azure Monitor can pin charts to your dashboard, but you can have more visualization capabilities by using Azure Monitor workbooks, which read metrics and logs from Log Analytics to create visualizations of data across multiple sources. Azure Monitor comes pre-loaded with workbook templates, which allow your customers to view insights about their resources, such as identifying VMs with low memory or high CPU usage or viewing the storage capacity of their storage accounts. All templates can report across a subscription. To view the Key Metrics workbook template in Azure Monitor, follow these steps:

1. Search for monitor in the search resources bar at the top of the Azure portal. (Note that Azure Monitor is listed in the Azure portal as Monitor.) Select Monitor in the drop-down menu that opens from the search bar as you type the resource name.

2. In the Azure Monitor menu, click Workbooks, and from the Gallery under Virtual Machines, click Key Metrics.

3. Choose the subscription you want to view and the Log Analytics workspace to which your VMs are logging metrics. Choose a Time Range to further filter the data. The workbook visualization loads with the Overview tab selected. The Overview tab displays the CPU utilization for all VMs in the selected subscription.

4. Click the Key Metrics tab to view the key metrics of CPU, disk, and network usage in a tabular format, as shown in Figure 1-6.

FIGURE 1-6 Key Metrics workbook template showing the CPU, disk, and network usage for virtual machines

5. Click through the other tabs. The Regions tab displays the highest CPU usage in each Azure region where the subscription contains a virtual machine. The Resource Health tab displays the health of each virtual machine in the subscription. Clicking the virtual machine in the Resource Health tab will drill through to the Resource Health blade of the virtual machine.

6. Return to the Gallery you navigated to in Step 2 and explore the other Workbooks available for virtual machines.

If your charts do not load, it is because VM insights has not been configured for the VMs. This is also indicated by a red exclamation mark and a Not Onboarded message to the right of the Time Range drop-down menu. To configure VM insights, see “Configure logging for workloads,” later in this chapter.

Azure Monitor can also send log and metric data to other sources for analysis, such as Power BI, where further sources of data can be combined to create business reporting. Operational dashboards can be created using Grafana. (You can do this by installing the Azure Monitor plugin from within Grafana.) Grafana is an open-source platform primarily used for detecting and triaging operations incidents.

Monitor health and availability

Understanding how to monitor the health of your customers’ application infrastructure is key for detecting potential issues and reducing downtime. Because your customers’ application infrastructure uses Azure services and these services can be affected by service-related downtime, your customers might require alerts if an underlying service becomes unavailable. This section looks at methods to do just that.

Monitor service health

Azure Service Health tracks the health of Azure services across the world, reporting the health of resources in the regions where you are using them. Azure Service Health is a free service that automatically tracks events that can affect resources.

To view Azure Service Health, log in to the Azure portal and search for service health in the search resources bar at the top of the portal. Select the Service Health menu option, which will be shown as an option in the drop-down menu as you type the resource name. The menu options on the left-hand side under Active Events correspond to the types of events tracked in Azure Service Health:

■ Service Issues. Azure services with current problems in your regions

■ Planned Maintenance. Maintenance events that can affect your resources in the future

■ Health Advisories. Notifications about the deprecation of features that you use or about upgrade requirements

■ Security Advisories. Security violations and notifications for Azure services that you are using

Choosing Health History from the Service Health menu lists all historical health events that have happened in the regions you use over a specified time period.

Selecting the Resource Health menu option lists resources by resource type and shows where service issues are affecting your resources. You can click the listed resource to drill down for the resource health history or to read more about a current issue affecting the resource.

Navigating back to the Service Health menu, you can create an alert for Service Health events in Health Alerts. Health Alerts monitors the activity log and sends an alert if Azure issues a Service Health notification. Therefore, diagnostic logs must be configured at the subscription level to include Service Health; otherwise, Health Alerts will not be configured.

Monitor networking

Monitoring network health for IaaS products is performed with Azure Network Watcher. Azure Network Watcher has tools to view metrics, enable logging, and diagnose and monitor resources attached to an Azure Virtual Network (VNet). Azure Network Watcher is automatically activated for a region as soon as a VNet is created in your subscription. To understand the monitoring capabilities of Azure Network Watcher, you need to explore three tools: Network Watcher Topology, Connection Monitor, and Network Performance Monitor.

Topology

Azure Network Watcher topology gives an overview of all VNets and their connected resources within a resource group. To view a topology, open the Azure portal and search for network watcher in the search resources bar at the top of the page. Select Network Watcher from the drop-down menu that is displayed as you type the resource name. Topology can be selected in the menu on the left side of the portal in the Network Watcher menu. Select a Subscription and Resource Group that contains at least one VNet. The topology will automatically load, as shown in Figure 1-7.

FIGURE 1-7 Network Watcher Topology for a specified resource group

Figure 1-7 shows two VNets: vnet2vnet-vnet1 and vnet2vnet-vnet2. This is the network topology of the infrastructure created in “Implement VNet-to-VNet connections,” later in this chapter. An additional virtual machine (151-vnet-win) has also been added to the default1 subnet. You can see the mandatory gateway subnets, their VPN Gateways (VNetGW1, VNetGW2) and the connections for each VPN Gateway (VNet1-VNet2 and VNet2-Vnet1).

Connection Monitor

Connection Monitor is generally used to view latency; it can provide the minimum, average, and maximum latency observed over time or at a point in time. This data can be used to monitor whether moving Azure resources to new regions might decrease latency. Connection Monitor can also monitor for topology changes that can affect communication between a VM and an endpoint. If an endpoint becomes unreachable, the Connection Troubleshoot feature of Network Watcher can identify the reason as being DNS resolution, VM capacity, firewall, or routing issues.
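To see how the minimum, average, and maximum latency figures support a decision about moving resources between regions, consider the Python sketch below. The round-trip samples and region labels are invented for illustration, and latency_summary is a hypothetical helper, not a Network Watcher API.

```python
from statistics import mean


def latency_summary(samples_ms):
    """Reduce a series of round-trip times to the three figures Connection Monitor reports."""
    return {"min": min(samples_ms), "avg": mean(samples_ms), "max": max(samples_ms)}


# Hypothetical round-trip times in milliseconds from the same VM to two endpoints.
current_region = latency_summary([42, 38, 55, 41])
candidate_region = latency_summary([21, 19, 30, 22])

print(current_region)
print(candidate_region)
print(candidate_region["avg"] < current_region["avg"])   # would the move reduce latency?
```

Comparing averages alone can hide occasional slow responses, which is why the maximum is reported alongside it.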

Network Performance Monitor

Network Performance Monitor (NPM) monitors network performance between points in your infrastructure. NPM detects network issues and can be configured to generate alerts based on thresholds set for a network link. NPM has the following capabilities:

■ Performance Monitor. Detect network issues across your cloud and hybrid environments

■ Service Connectivity Monitor. Identify bottlenecks and outages between your users and their services

■ ExpressRoute Monitor. Monitor end-to-end connectivity over Azure ExpressRoute

To use Performance Monitor in Azure, at least one VM on your network will require the Log Analytics Agent to be installed. Network Performance Monitor is enabled in Network Watcher.

Need More Review? Network Watcher

To learn more about monitoring IaaS networks using Network Watcher, see https://docs.microsoft.com/en-us/azure/network-watcher/.

Monitor cost

Azure charges your customers for the resources and technologies they use and the data that flows between the resources and their users. In most cases, as soon as a resource is created, your customers will start being charged for the resource. Without controlling and monitoring spend, your customers could be in for a shocking bill at the end of the month! The cost management features of Azure Cost Management and Billing enable your customers to control costs by analyzing spend and receiving alerts based on spend thresholds.
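An alert based on a spend threshold comes down to simple arithmetic: a percentage of a budget amount is compared with the spend to date. The Python sketch below shows that calculation under stated assumptions. The budget of 1,000, the 75 percent threshold, and the use of "greater than or equal to" as the firing condition are illustrative choices, and neither function is part of the Cost Management API.

```python
def alert_threshold(budget_amount, percent_of_budget):
    """Spend level at which the alert should fire."""
    return budget_amount * percent_of_budget / 100


def alert_fires(current_spend, budget_amount, percent_of_budget):
    """Assumes the alert fires once spend reaches the threshold."""
    return current_spend >= alert_threshold(budget_amount, percent_of_budget)


budget = 1000          # hypothetical monthly budget in the subscription currency
percent = 75           # hypothetical alert condition

print(alert_threshold(budget, percent))     # spend level that triggers the alert
print(alert_fires(700, budget, percent))    # spend still under the threshold
print(alert_fires(760, budget, percent))    # threshold reached, alert raised
```

The gap between the threshold and the full budget is the headroom your customers have to react, so a lower percentage buys more time at the cost of earlier and more frequent alerts.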

Monitor spend

Azure Cost Management uses budgets to control costs and alert your customers when budgets are about to be breached. When a budget is about to be breached, Cost Management and Billing can raise an alert to enable your customers to act. To create a budget, use Cost Management in the Azure portal, and follow these steps:

Open the Azure portal and search for cost management in the search resources bar at the top of the Azure portal. Select Cost Management + Billing in the drop-down menu that opens as you start to type the resource name.

Select Cost Management in the left-hand menu. The Cost Management menu now loads. Choose Budgets from the Cost Management section of the Cost Management menu.

If you have any budgets, they will be listed on the Budgets blade which is now displayed. Click Add at the top left to add a budget.

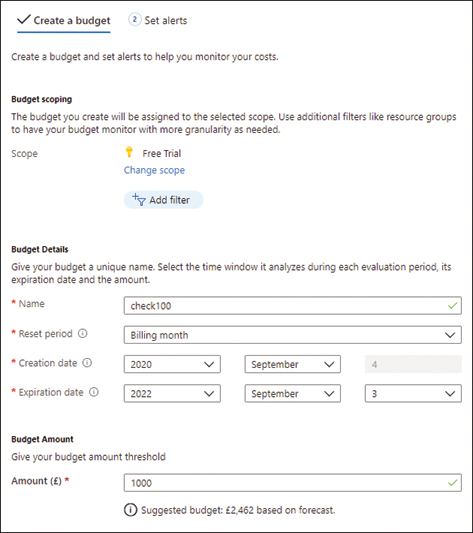

The Create Budget tab is opened, which has the configuration sections in the following list. Once you have chosen your options, your budget should appear as shown in Figure 1-8.

FIGURE 1-8 Creating a budget in Cost Management

■ Scope. You can set a budget at the management group, subscription, or resource group levels. For example, set the Scope to the subscription level.

■ Filter. This is often used to filter to a taxonomic tag, such as a department, to provide cross-organization budgetary views. For this example, do not add a filter.

■ Name. Enter a Name for your budget.

■ Reset Period. Choose how often the budget resets. For this example, set the Reset Period to Billing Month.

■ Creation Date. This is the date to start the budget. You can choose options from the start of the current billing month or options that extend into the future. For this example, leave the default setting.

■ Expiration Date. This is when the budget will end. For this example, leave this as the default setting.

■ Budget Amount. The limit you require to be set for the budget. This will be in your subscription currency, which may differ from your local currency. Enter a value that is just above your current spend. Click Next at the bottom of the page.

The Set Alerts tab is now active, which is where you can configure an alert on your budget. For a budget alert, you have the following configuration options:

■ Alert Conditions. Enter the % Of Budget upon which you would like the alert to fire. Your customers will need to set this to a value that will give them time to remediate possible overspend before the limit is breached. For this example, choose 75%. Leave Action Group empty, as you will explore action groups later in this chapter in “Initiate automated responses by using Action Groups.”

■ Alert Recipients. Enter the email addresses of the people who should receive the alert.

Click Create; the budget is created along with its corresponding alert.
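The budget from the steps above can also be sketched on the command line. The following is a minimal sketch that assumes the preview az consumption budget create command is available in your version of the Azure CLI. The helper names create_monthly_budget and alert_threshold, and every value passed to them, are illustrative placeholders rather than values from this chapter. The sketch creates only the budget; the alert condition and recipients from the Set Alerts tab are not configured here.

```shell
# Sketch only: wraps the preview `az consumption budget create` command.
# create_monthly_budget is a hypothetical helper name; all values are placeholders.
create_monthly_budget() {
  budget_name="$1"   # name of the budget
  amount="$2"        # budget amount in the subscription currency
  start_date="$3"    # creation date, YYYY-MM-DD
  end_date="$4"      # expiration date, YYYY-MM-DD

  az consumption budget create \
    --budget-name "$budget_name" \
    --amount "$amount" \
    --category cost \
    --time-grain monthly \
    --start-date "$start_date" \
    --end-date "$end_date"
}

# Spend at which an alert set to a percentage of the budget would fire.
# Uses integer arithmetic, so the result is rounded down.
alert_threshold() {
  budget_amount="$1"
  percent="$2"
  echo $(( budget_amount * percent / 100 ))
}
```

For example, create_monthly_budget az303-budget 500 2021-01-01 2021-12-31 would request a monthly cost budget of 500, and alert_threshold 500 75 prints 375, the spend at which the 75% alert from this example would fire.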

When a cost alert is triggered, the notifications are fired, and an active alert is created for the budget. The alerts can be viewed in the Cost Alerts menu option displayed on the left of Cost Management. In Cost Alerts, you have the option of dismissing the alerts or re-activating a dismissed alert.

Report on spend

Azure Cost Management is also the best place to report on spend. Navigate back to the Cost Management menu in the Azure portal and choose Cost Analysis. The Cost Analysis blade is preconfigured with a summary dashboard of your current and past spend, as shown in Figure 1-9.

FIGURE 1-9 Cost analysis to report on spend in Azure Cost Management

Figure 1-9 shows the spend on the current billing month, with accumulated costs broken down into services, locations, and resource groups. You can change the scope to management group, subscription, or resource group. The ability to filter by tag is considered a best practice, and it is one of the key features of cost analysis. For example, if you tag by department, you can produce an analysis of each department’s spend. Click Download at the top of the page to manually download the chart data or to schedule spend data for extraction to a storage account.

Configure advanced logging

Advanced monitoring is done through Insights, which is part of Azure Monitor. Insights provides your customers with a specialized monitoring experience for their applications and services. Insights leverages Azure Monitor Logs, which sits on a Log Analytics workspace. Therefore, before you explore Insights, you will need to create and configure a workspace.

Configure a Log Analytics workspace

To create a Log Analytics workspace in the portal, search for log analytics in the search resources bar at the top of the Azure portal. Select Log Analytics Workspaces in the drop-down menu that opens as you type in the resource name. To add and configure a workspace, follow these steps:

Click Add at the top-left of the Workspaces blade. Enter a name for the workspace, choose a resource group, and select the region where you need your workspace to reside. Click Review + Create, and then click Create to create the workspace.

Once created, your new workspace is listed. Click the workspace name to look at the configuration options.

In the left-hand menu under Settings, choose Agents Management. At the top of the page are Windows Servers and Linux Servers tabs. To manually onboard a VM to Log Analytics, you will require the ID and keys from these tabs. You will explore on-boarding VMs in “Configure logging for workloads,” later in this skill.

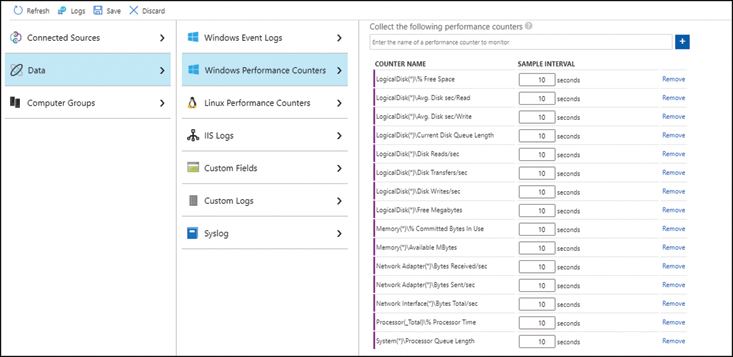

On the Log Analytics Workspaces menu, choose Advanced Settings. The Data section is where you can configure which counters and log files are collected for your resources. For example, click Data > Windows Performance Counters. The counters available are listed, but until you select Add The Selected Performance Counters, the data will not be collected for any Windows VM connected to this workspace. Once selected, the screen is updated, as shown in Figure 1-10.

FIGURE 1-10 Configuring the Log Analytics workspace to collect Windows Performance Counters

When the VM Log Analytics Agents refresh their configurations, the agents pick up the new counter configurations and send the selected data back to the Log Analytics workspace.

You will need to repeat this exercise for event logs, Linux performance counters, and other data sources you require.

Staying in the Log Analytics workspace that you have just created, click Virtual Machines in the left-side menu. A table is displayed, which lists the VMs that could be connected to the Log Analytics workspace you have just created. The performance counter configuration you made in steps 3 through 5 will only affect VMs listed as Connected in this table.
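The workspace creation in the first step above can also be scripted. The following is a minimal sketch using az monitor log-analytics workspace create; the helper name create_workspace and the names and region you pass to it are placeholders. The counter and event log configuration from the later steps is not covered by this sketch.

```shell
# Sketch only: create a Log Analytics workspace and print its workspace ID.
# create_workspace is a hypothetical helper; names and region are placeholders.
create_workspace() {
  resource_group="$1"
  workspace_name="$2"
  location="$3"

  # customerId is the workspace ID shown on the Agents Management page.
  az monitor log-analytics workspace create \
    --resource-group "$resource_group" \
    --workspace-name "$workspace_name" \
    --location "$location" \
    --query customerId \
    --output tsv
}
```

A call such as create_workspace $resourceGroupName az303workspace westeurope would print the workspace ID. The keys needed for manual on-boarding can be read separately with az monitor log-analytics workspace get-shared-keys.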

Implement and configure Azure Monitor insights, including app insights, networks, and containers

Modern applications hosted within the cloud are often complex, combining multiple PaaS and IaaS services. Monitoring, maintaining, and diagnosing such applications can be an almost impossible task if tools to analyze application data and alert on key metrics are not implemented. Azure Monitor provides Insights, which brings full stack observability across applications and infrastructure, thus enabling deep alerting and diagnostic capabilities. This section looks at the Insights available for applications, networking, and containers in Azure Monitor.

Application Insights

Application Insights is an Application Performance Management (APM) service for developers to monitor their live applications. Application Insights will automatically detect anomalies across hybrid, on-premises, or public cloud applications where you need to

■ Analyze and address issues and problems that affect your application’s health

■ Improve your application’s development lifecycle

■ Analyze users’ activities to help understand them better

To integrate Application Insights with your applications, you must set up an Application Insights resource in the Azure portal. To do this, navigate to Application Insights in the portal and click Add. Choose a name, resource group, and region to create the resource. Once the resource is created, an Instrumentation Key is available on the Overview page. Your customers give this key to their developers. Developers use a software development kit (SDK) to add an instrumentation package to their applications. The instrumentation package uses the Application Insights instrumentation key to route telemetry to the Application Insights resource for analysis.
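The resource creation described above can be sketched with the Azure CLI. This sketch assumes the application-insights CLI extension has been installed (az extension add --name application-insights); the helper name create_app_insights and its arguments are placeholders.

```shell
# Sketch only: create an Application Insights resource and print its instrumentation key.
# Assumes the application-insights CLI extension is installed.
# create_app_insights is a hypothetical helper; names and region are placeholders.
create_app_insights() {
  resource_group="$1"
  app_name="$2"
  location="$3"

  az monitor app-insights component create \
    --app "$app_name" \
    --location "$location" \
    --resource-group "$resource_group" \
    --application-type web \
    --query instrumentationKey \
    --output tsv
}
```

The printed key is the same Instrumentation Key shown on the Overview page, which developers place in their SDK configuration.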

Once telemetry is flowing to Application Insights, there are built-in visualizations that allow you to analyze your environment. Figure 1-11 shows two of the visualizations: Application Map and Live Metrics.

FIGURE 1-11 The Application Map and Live Metrics, which are part of Application Insights

Application Map displays an overview of your application, where each node is an application component. The links between nodes are the dependencies. The Application Map shows health KPIs and alert statuses on each node, which you can drill into for detailed analyses.

Live Metrics provides a real-time view of your application without having to configure settings, which might affect availability. You can plot metric counters live, and you can drill through to failed requests and exceptions.

Two other commonly used insights are Availability and Failures; example output for both is shown in Figure 1-12.

FIGURE 1-12 Failures and Availability insights

Failures are displayed in the top-left portion of Figure 1-12. Failures are plotted over a time range and are grouped by type. You can click the Successful and Failed buttons under Drill Into to investigate operations, dependencies, and exceptions.

Availability must be configured by adding an availability test to the Availability page of Application Insights. You enter the URL of the endpoint you want to be monitored, and Azure tests availability from five different locations. You can also configure the availability test to check the response time for downloading page dependencies, such as images, style sheets, and scripts. Azure plots the responses on the availability page charts, which include latency. You can set up alerts from the availability tests for immediate notification of possible downtime.

Network Insights

Network Insights provides a comprehensive overview of your network inventory without any configuration. You can view the health and metrics for all network resources and identify their dependencies. The following Insights are available through Network Insights:

■ Search And Filtering. You might have thousands of network resources. Viewing the analysis data for a single resource can be tricky. With Search And Filtering, you can enter a single resource name, and the resource along with its dependencies will be returned.

■ Alerts. This shows all alerts generated for the selected resources across all subscriptions.

■ Resource And Health Metric. Grouped by resource type, this is a summary view of the selected components. The summaries are displayed as tiles.

■ Dependency View. Drill through the health and metric tiles to view dependencies and metrics for the chosen resource type, as shown in Figure 1-13 for two VNet gateways in a VNet-to-VNet configuration.

FIGURE 1-13 The dependency view of two VNet gateways in a VNet-to-VNet configuration in Network Insights

Azure Monitor for Containers

When you are architecting solutions with containers, monitoring them is critical. Azure Monitor for containers collects processor and memory metrics from container workloads. The workloads can be deployed on-premises to Kubernetes on Azure Stack, or they can be deployed on Azure Kubernetes Service (AKS), Azure Container Instances (ACI), or other Azure-based, third-party container orchestrators.

When enabled, the Kubernetes Metrics API sends metrics for controllers, nodes, and containers. Container logs are also collected. The metric and log data are sent to a Log Analytics workspace, which is a requirement for Azure Monitor for containers. The method for enabling Azure Monitor for containers differs depending on the service it is to be enabled on. Here is an example command to create an AKS cluster with Azure CLI:

az aks create --resource-group $resourceGroupName --name myAKSCluster --node-count 1 --enable-addons monitoring --generate-ssh-keys

The --enable-addons monitoring option enables Azure Monitor for containers. If you want to use an existing Log Analytics workspace, you must pass its resource ID with --workspace-resource-id; otherwise, a Log Analytics workspace will be created for you. You can also enable monitoring on an existing cluster using the following Azure CLI command:

az aks enable-addons --addons monitoring --name myAKSCluster --resource-group $resourceGroupName

The --workspace-resource-id can be specified to use an existing workspace. Once the metrics and logs are being collected, you can access the data from the AKS cluster’s Insights menu or through the Azure Monitor Containers menu. If you are using Azure Monitor, you will need to select the Monitored Clusters tab at the top of the window and then select the cluster you want to view. The Cluster view is a summary of counters for the cluster, as shown in Figure 1-14.

FIGURE 1-14 The Cluster summary view for AKS in Azure Monitor for containers
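Returning to the --workspace-resource-id option described above, the following is a minimal sketch of enabling monitoring on an existing cluster against an existing workspace. The helper name enable_aks_monitoring is hypothetical, and the sketch assumes the workspace lives in the same resource group as the cluster, which need not be true in your environment.

```shell
# Sketch only: enable Azure Monitor for containers using an existing workspace.
# enable_aks_monitoring is a hypothetical helper. It assumes the workspace is
# in the same resource group as the AKS cluster.
enable_aks_monitoring() {
  resource_group="$1"
  cluster_name="$2"
  workspace_name="$3"

  # Look up the full resource ID of the existing workspace.
  workspace_id=$(az monitor log-analytics workspace show \
    --resource-group "$resource_group" \
    --workspace-name "$workspace_name" \
    --query id \
    --output tsv)

  # Pass the resource ID to the monitoring add-on.
  az aks enable-addons \
    --addons monitoring \
    --name "$cluster_name" \
    --resource-group "$resource_group" \
    --workspace-resource-id "$workspace_id"
}
```

For example, enable_aks_monitoring $resourceGroupName myAKSCluster az303workspace would attach myAKSCluster to a workspace named az303workspace.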

In Figure 1-14, the top-left chart shows the Node CPU Utilization % of the cluster. The application running on AKS in this example contains an HTML front end (azure-vote-front) with a Redis instance on the back end (azure-vote-back). To deploy this infrastructure, follow the Azure quickstart: https://docs.microsoft.com/en-us/azure/aks/kubernetes-walkthrough. When the application is first deployed, there is one replica of azure-vote-front, which is being stressed by multiple concurrent requests. Across the top of the window are six tabs: What’s New, Cluster, Nodes, Controllers, Containers, and Deployments (Preview). In Figure 1-15, the Nodes tab has been selected. The top node listed in the table in Figure 1-15 is named azure-vote-front. The Trend 95th % column displays a single small green bar followed by eight red, full-height bars, which suggests a large increase in application traffic.

FIGURE 1-15 Nodes in Azure Monitor for containers

The number of replicas for azure-vote-front is then increased to ten. This can be seen from the nine listings of azure-vote-front underneath the original node. These new nodes have no data for the period in which the top node in the table was at full capacity. The yellow bars for the new nodes and the original node show the load has been distributed equally between each of the 10 nodes. Looking back to Figure 1-14, the manual scale to 10 azure-vote-front nodes corresponds quite nicely to the bottom-right chart, Active Pod Count. You can also see the increased CPU demand of the 10 nodes displayed on the Node CPU Utilization % chart. Switching back to Figure 1-15, the number of azure-vote-front nodes is scaled back to 7, and then shortly afterward, it is scaled to 3. This corresponds to the stop in data of the bottom 3 azure-vote-front nodes in the Trend 95th % column, and then to the stop in data for all but the top three nodes. You can also see the increase in stress on the top 3 nodes as the Trend 95th % column bars increase in size and go from yellow to orange to red.

Need More Review? Azure Monitor Insights

Insights is an immense tool; to learn more, visit https://docs.microsoft.com/en-us/azure/azure-monitor/insights/insights-overview.

Configure logging for workloads

When architecting VMs at scale, monitoring their workloads and dependent resources has been historically complex. Azure Monitor for VMs is designed for scale, and it analyzes Windows and Linux VMs and VM scale sets through its health and performance metrics. Azure Monitor for VMs monitors the VMs and application dependencies on workloads that are in Azure, on-premises, or in other clouds.

VMs in Azure can be on-boarded one at a time in the Azure portal by navigating to a VM, scrolling down in the VM’s menu to Monitoring, choosing Insights, and clicking Enable. The Azure portal sends a deployment request to the VM to install the Log Analytics and Dependency agents. The Dependency agent is required for mapping dependencies, and the Log Analytics agent is required for collecting performance and log data. Azure Monitor for VMs is designed for monitoring workloads at scale; if you are deploying to hundreds of VMs, you will need to automate the task. Azure Policy can be configured to deploy the agents, report on compliance, and remediate non-compliant VMs. For on-premises and other cloud VMs, the agents can be deployed manually or pushed out through a desired state-management tool.

Once the data is collected, it can be viewed in the Insights blade of a single VM, or for a rolled-up aggregated view at the subscription level from within Azure Monitor. To view the aggregated data and explore the output in the Azure portal, follow these steps:

Search for azure monitor in the search resources bar at the top of the Azure portal. Choose Monitor from the drop-down menu that is displayed once you start to type the resource name.

In the Insights menu, click Virtual Machines.

The Getting Started tab for VM Insights is displayed. From this tab, the following configuration options are shown:

■ Monitored. This option shows the machines being monitored by Azure Monitor for VMs. You can choose to view the data at subscription, resource group and single VM level by clicking on the listed names.

■ Not Monitored. This option lists VMs in your subscriptions that are not monitored. From here, you can enable the VMs.

■ Workspace Configuration. From here, you can configure the Log Analytics workspaces that have been enabled for Azure Monitor for VMs.

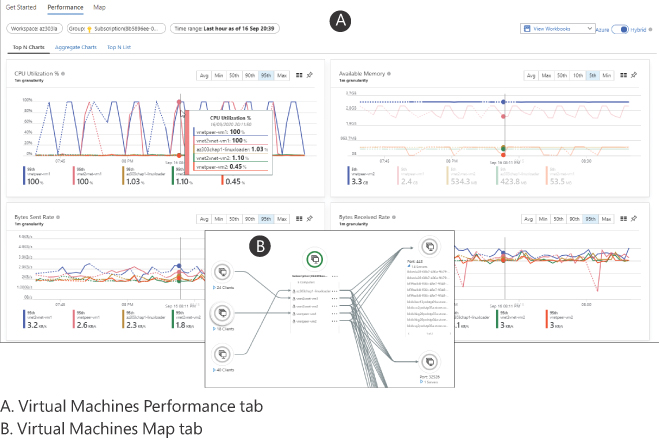

Click the subscription name to view the performance of all enabled VMs. This view includes CPU, memory, network, and disk metrics. Just below the Performance tab are further analysis view tabs; click through these to view the aggregate charts and lists (see image A of Figure 1-16).

FIGURE 1-16 Azure Monitor for VMs using the Performance and Map tabs

Go back to the Getting Started tab and choose a resource group with multiple VMs. Next, click Map to view the dependencies for the application, as shown in the inset (B) portion of Figure 1-16.

Need More Review? Azure Monitor for VMs

To learn more about monitoring workloads at scale, visit https://docs.microsoft.com/en-us/azure/azure-monitor/insights/vminsights-overview.

Initiate automated responses by using Action Groups

Throughout this chapter, alerts have been referenced and set up by specifying single email accounts. For your customers’ deployments, it is highly unlikely that a single individual will be responsible for an alert or set of alerts. Also, an email might not guarantee a quick enough response to an issue. When you are looking to mitigate slow responses to an alert, you should recommend configuring action groups. An action group is a collection of notifications and automation tasks that are triggered when an alert is fired. You can set up multiple action groups that notify different groups or trigger different responses, depending on the alert. To examine the options available in an action group, follow these steps to create an action group in the Azure portal. Note that action groups can also be created on the command line and with an ARM template.

Navigate to azure monitor using the search resources bar at the top of the Azure portal. Choose Monitor from the drop-down menu that is displayed once you start to type the resource name.

Click Alerts in the Monitor menu, and then click Manage Actions at the top of the Alerts blade. The Manage Actions blade will open.

If you have any action groups, they will be listed in the Manage Actions blade. Click Add Action Group at the top-right to add a new action group.

The Create Action Group configuration page is displayed with the Basics tab open. Configure the following options on the Basics tab, and then click Next: Notifications > at the bottom of the page:

■ Subscription. Choose the subscription into which you want to save the action group.

■ Resource Group. Choose a resource group from the subscription or create a new default resource group for action groups.

■ Action Group Name. This is the name for the action group, and it must be unique within the resource group.

■ Display Name. This is included in email and SMS messages.

The Create Action Group page switches to the Notifications tab. Here, you can configure how users are alerted if the Action Group is triggered:

■ Notification Type. This is the type of notification that will be sent to the receiver. You can choose from:

■ Email Azure Resource Manager Role. Choosing this option emails all subscription members of the role.

■ Email / SMS message / Push / Voice. A push notification is sent to the Azure app that is linked to an Azure AD account. Voice calls a number, which can be a landline. There are limits to these actions: 1 SMS every 5 minutes, 1 Voice call every 5 minutes, and 100 emails an hour.

■ Name. The name of the notification. It must be unique from other notification names and from action names.

Click Next: Actions > at the bottom of the page.

The Create Action Group page switches to the Actions tab. Here, you can configure automated actions if the Action Group is triggered:

■ Action type. This is the automated action that will be performed when the action group is triggered:

■ ITSM. Automatically log a ticket in a specified IT Service Management (ITSM) software.

■ Logic App. Create a logic flow to automate a response such as posting a message to Microsoft Teams.

■ Secure Webhook / Webhook. This option sends a JSON payload to an external REST API.

■ Azure Automation Runbook. Use this option to create a runbook to run code in response to an alert, such as stopping an Azure VM following a budget breach.

■ Azure Function. Use this option to invoke an Azure function to run in response to an alert, such as starting a VM that has been stopped.

■ Name. The name of the action. It must be unique from other action names and from Notification names.

■ Configure. This option is activated once the Action Type is chosen. Here, you enter the notification details, Webhook URL, Logic App Name or Function App Name. You can also enable the common alert schema, which provides the following functionality:

■ SMS. This creates a consistent SMS template for all alerts.

■ Email. This creates a consistent email template for all alerts.

■ JSON. This creates a consistent JSON schema for integrations to webhooks, logic apps, Azure functions, and automation runbooks.

Once you are happy with the action group configuration, click Review + Create to add the action group.
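As noted earlier in this section, action groups can also be created on the command line. The following is a minimal sketch using az monitor action-group create with a single email receiver and the common alert schema enabled. The helper name create_email_action_group and all argument values are placeholders.

```shell
# Sketch only: create an action group with one email notification.
# create_email_action_group is a hypothetical helper; all values are placeholders.
create_email_action_group() {
  resource_group="$1"
  group_name="$2"      # Action Group Name
  short_name="$3"      # Display Name used in email and SMS messages
  receiver_name="$4"   # name of the notification
  email="$5"           # address that receives the alert

  az monitor action-group create \
    --resource-group "$resource_group" \
    --name "$group_name" \
    --short-name "$short_name" \
    --action email "$receiver_name" "$email" usecommonalertschema
}
```

For example, create_email_action_group $resourceGroupName az303-ops az303ops ops-team ops@example.com would create an action group that emails a hypothetical operations mailbox. Further receivers, such as webhooks, can be added with additional --action arguments.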

Configure and manage advanced alerts

Throughout this skill, you have explored how to monitor resources for a wide range of issues and anomalies. The sheer scale of the data that can be produced while monitoring solutions architected in the public and hybrid cloud is vast. This means trying to sift through the data manually to detect problems will be almost impossible or take an unreasonable and expensive amount of labor. Creating alerts based on the underlying metric and log data will automate some of these tasks for your customers.

Collect alerts and metrics across multiple subscriptions

Azure Monitor alerts give you the ability to trigger alerts on resources for a subscription. The alerting experience is unified for the three types of alert: Metric, Log, and Activity Log. For example, you might want to know whenever a VM is stopped in your production subscription, so that you can try to restart it. Follow these directions to create the VM stopped example alert in the Azure portal:

Navigate to Azure Monitor, choose Alerts in the left-side menu. At the top of the page, click New Alert Rule.

The Create Alert Rule blade loads, which allows you to select a subscription, resource group, resource, or set of resources. Choose all virtual machines in your subscription by using the Virtual Machines filter and a single location.

Now, to select all the VMs in the same location, select the subscription (as shown in Figure 1-17). At the bottom right, you can see the available signal types, which are resources within the same location; Metric and Activity Log are both available.

FIGURE 1-17 Select the target scope in unified alerts

Now, change the Filter By Resource Type and Filter By Location to All and select the subscription once more. Note that the only available signal type is now Activity Log because Metric cannot be used for alerts across regions. Click Done.

Click Select Condition, which opens the Configure Signal Logic page. The signal types available will depend on the Scope selected in the previous step:

Log. Create a KQL query for data in Log Analytics; if the query returns rows, then the alert is fired.

Metric. Set a threshold value against a metric, such as “greater than an average of X.” If the threshold is breached, the alert is fired.

Activity Log. If a matching Activity Log type is created in the subscription’s activity log, the alert is fired.

The signal type available at the Subscription level is Activity Log. Enter virtual machine in the search box to filter the data. Scroll down on the same blade and select Deallocate Virtual Machine (Microsoft.Compute/virtualMachines). Leave Alert Logic set to All. Click Done.

Click Action Group to pick an action group. Recall from the previous section that this is a grouping of notifications and automated responses. For this example, create an action group that emails you.

Enter an Alert Rule Name and Description, and then select a Resource Group to save it to.

Click Create Alert Rule to create the alert rule.

Test the alert by stopping a virtual machine within your selected subscription.

If you need to collect alerts across multiple subscriptions, you can automate the process using ARM templates to deploy an alert configuration to each subscription.
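As an alternative to ARM templates, the same rollout can be sketched with the Azure CLI. The following is a minimal sketch, not the method described above. It assumes that the operation name Microsoft.Compute/virtualMachines/deallocate/action corresponds to the Deallocate Virtual Machine signal, and that an action group with the same name already exists in the same resource group in every subscription. The helper names and the alert name vm-deallocated are hypothetical.

```shell
# Sketch only: create the "VM deallocated" activity log alert in one subscription.
# Assumes an action group named $3 already exists in resource group $2 of that
# subscription. Helper names and the alert name are hypothetical.
create_vm_deallocate_alert() {
  subscription_id="$1"
  resource_group="$2"
  action_group_name="$3"
  action_group_id="/subscriptions/$subscription_id/resourceGroups/$resource_group/providers/microsoft.insights/actionGroups/$action_group_name"

  az monitor activity-log alert create \
    --subscription "$subscription_id" \
    --resource-group "$resource_group" \
    --name vm-deallocated \
    --scope "/subscriptions/$subscription_id" \
    --condition "category=Administrative and operationName=Microsoft.Compute/virtualMachines/deallocate/action" \
    --action-group "$action_group_id"
}

# Repeat the alert rule for every subscription ID passed after the first two arguments.
deploy_alert_to_subscriptions() {
  resource_group="$1"
  action_group_name="$2"
  shift 2
  for subscription_id in "$@"; do
    create_vm_deallocate_alert "$subscription_id" "$resource_group" "$action_group_name"
  done
}
```

A call such as deploy_alert_to_subscriptions monitoring-rg az303-ops followed by a list of subscription IDs would create one alert rule per subscription, each scoped to its own subscription.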

View alerts in Azure Monitor Logs

All alerts that have been triggered, regardless of where they are set up, can be viewed in the unified alerts experience in Azure Monitor. To access this information, navigate to Azure Monitor and click Alerts in the left-hand menu. The Alerts blade is displayed listing all alerts for the last 24 hours. The alerts are grouped by severity. For example, all alerts of severity 0 are grouped into a severity line titled Sev 0. Clicking the line for a severity will drill down to the alerts that are contained within that severity rating. Choosing a specific alert in the detail view gives you the option to change the status of an alert to acknowledged.

A similar view of the data is available through Azure Monitor Logs, within Workbooks. From Azure Monitor, select Workbooks in the left-hand menu. Scroll down in the Workbooks Gallery and select the Alerts workbook template under Azure Resources. A similar view to that of the unified alerts experience is shown. You have the option to filter by Subscriptions, Resource Groups, Resource Types, Resources, Time Range, and State. Clicking an alert in the Alert Summary list drills through to the Alert Details, as shown in Figure 1-18.

FIGURE 1-18 The Alerts Workbook template for Azure Monitor logs

Skill 1.2: Implement storage accounts

Azure Storage is a managed data store. It is secure, durable, massively scalable, and highly available out of the box. You can configure Azure Storage to withstand a local outage or natural disaster by using replication. Azure Storage can accommodate a vast variety of data use cases across its core services and is accessible worldwide. As an Azure architect, you need to know how a storage account and its core services can be configured to suit your customers’ requirements.

This skill covers how to:

Select storage account options based on a use case

Configuring a storage account during the creation process determines the features that are available for use. This configuration governs which core services, performance tiers, and access tiers are accessible after account creation. Therefore, when architecting storage for your applications, careful consideration must be given to the storage account options.

All storage accounts are encrypted at rest using Storage Service Encryption (SSE), with Microsoft-managed encryption keys by default.

Core services

To explore storage accounts further, it is important to understand the core services available in Azure Storage and how they can be used:

■ Azure Blobs. Azure blobs are optimized for storing massive amounts of unstructured data—either binary or text based. Azure blobs can be used for images, documents, backup files, streaming video, and audio. Blobs come in three types:

■ Block blobs. Binary and text data, up to 4.7TB.

■ Append blobs. Block blobs that are optimized for appends and are good for logging.

■ Page blobs. Random read/write blobs used for VM VHD files or disks and can be up to 8TB.

■ Azure Files. Server Message Block (SMB)–based fileshare service. Use as a replacement for a traditional on-premises fileshare or share configuration files between multiple Azure workloads. Azure Files can be synchronized to an on-premises server for hybrid fileshare scenarios.

■ Azure Queues. Stores messages of up to 64KB. Typically used for first-in first-out (FIFO) asynchronous processing scenarios.

■ Azure Tables. A structured NoSQL data service. It is a key/value store that has a schema-less design, which can be used to hold large amounts of flexible data. (Azure Cosmos DB is recommended for all unstructured flexible data.)

■ Azure Disks. Disks for virtual machines. Although listed as a core service, it is not configurable; instead, it is fully managed by Azure.

Storage account type

The core services available for use depend on the storage account type chosen. The default type for a storage account on creation is General-Purpose V2, which is the Microsoft-recommended storage account type and supports all core services listed in the previous section.

The following Azure CLI command creates a General-purpose V2 account called az303defaultsa.

az storage account create --name az303defaultsa --resource-group $resourceGroupName

To change the storage account type, add the --kind parameter, which has the following options:

■ StorageV2. Also known as General-purpose V2, this is the default for a storage account and the Microsoft-recommended account type. Access to all core services and their associated performance tiers and access tiers is available.

■ Storage. Also known as General-purpose V1, this is provided for legacy support of older deployments. Access to all core services and performance tiers is available but no access tiers are available for selection. It is possible to upgrade from V1 to V2 using the command line.

■ BlobStorage. This is provided for legacy support of blobs. All access tiers are available, but only standard performance is available for selection. Use General-purpose V2 instead of BlobStorage when possible.

■ BlockBlobStorage. Low latency storage for blobs with high transaction rates, premium performance with no access tiers.

■ FileStorage. Files only, premium performance and no access tiers. This option can be specifically configured for file-related performance enhancements such as IOPS bursting.

The following Azure CLI command creates a BlockBlobStorage account called az303blockblob:

az storage account create --name az303blockblob --resource-group $resourceGroupName --kind BlockBlobStorage
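The Storage entry in the list above notes that a General-purpose V1 account can be upgraded to V2 from the command line. The following is a minimal sketch of that upgrade using the generic --set argument of az storage account update. The helper name upgrade_to_v2 is hypothetical, and the access tier is passed in because a V2 account needs a default tier.

```shell
# Sketch only: upgrade a General-purpose V1 account to General-purpose V2.
# upgrade_to_v2 is a hypothetical helper; the account name is a placeholder.
upgrade_to_v2() {
  resource_group="$1"
  account_name="$2"
  access_tier="$3"   # Hot or Cool; becomes the account's default access tier

  az storage account update \
    --resource-group "$resource_group" \
    --name "$account_name" \
    --set kind=StorageV2 \
    --access-tier "$access_tier"
}
```

For example, upgrade_to_v2 $resourceGroupName az303legacysa Hot would upgrade a hypothetical V1 account named az303legacysa.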

Access tier

Blobs support three access tiers, Hot, Cool, and Archive. The access tiers are optimized for specific patterns of data usage. These patterns correspond to the frequency of access to the underlying data. This means by selecting your access tier carefully, you can reduce your costs. Examining this further:

■ Hot tier. Highest storage costs, lowest access costs. Used for frequently accessed data and is the default tier.

■ Cool tier. Lower storage costs than hot, higher access costs. Use for data that will be stored “as is” and not accessed for at least 30 days.

■ Archive tier. This is at the blob level only. Lowest storage costs, highest access costs. Only use for data that will remain “as is” that will not be accessed for at least 180 days and can stand high retrieval latency of several hours. Great for long term backups and archival data.

The following Azure CLI command creates a General-purpose V2 account called az303blobaccesstier with a Hot access tier:

az storage account create --name az303blobaccesstier --resource-group $resourceGroupName --kind StorageV2 --access-tier hot

An access tier can be changed at any time using the command line or Azure portal. To change az303blobaccesstier to the Cool tier in Azure CLI, issue the following command:

az storage account update --name az303blobaccesstier --resource-group $resourceGroupName --access-tier cool

Note Early Deletion Penalty

Changing the tier from Archive or Cool before the respective 180-day or 30-day periods will incur an early deletion penalty equivalent to the remaining days’ cost of the storage.
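The note above can be turned into a small calculation. The following bash sketch estimates how many days of storage would still be charged if a blob leaves the Cool or Archive tier early. The function name early_deletion_days is hypothetical, and the sketch assumes the charge is prorated by whole days, as the note describes; check current Azure pricing before relying on it.

```shell
#!/usr/bin/env bash
# Estimate the number of days still billed when a blob leaves a tier early.
# Minimum periods (30 days for Cool, 180 days for Archive) come from the text.
early_deletion_days() {
  local tier="$1" days_stored="$2" minimum remaining
  case "$tier" in
    cool)    minimum=30 ;;
    archive) minimum=180 ;;
    *)       minimum=0 ;;   # Hot has no minimum storage period
  esac
  remaining=$(( minimum - days_stored ))
  if [ "$remaining" -lt 0 ]; then
    remaining=0             # the minimum period has already elapsed
  fi
  echo "$remaining"
}

early_deletion_days cool 10      # prints 20
early_deletion_days archive 45   # prints 135
```

Multiplying the result by the daily storage price of the tier gives a rough estimate of the penalty.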

Exam Tip Azure Storage Configuration

Understanding which core services, access tiers, and performance tiers are available for the storage account types is an important area for this certification. See https://docs.microsoft.com/en-us/azure/storage/common/storage-account-overview#types-of-storage-accounts for further review.

Configure Azure Files and Blob Storage

Once you have chosen your storage account options, you need to set up use case–specific “containers” for your data. These are the Azure Storage core services, as previously listed. The method of creating these containers depends on the core service you are configuring. The AZ-303 certification requires you to understand the configuration for Azure Files and Blob Storage.

Azure Files

Azure Files can be configured on the command line and within the Azure portal. Follow these steps to configure Azure Files by executing cmdlets in PowerShell:

Use these cmdlets to create a storage account:

$resourceGroupName = "12storage"
$location = "northeurope"
$storageAccountName = "az303fsdemosa"
New-AzResourceGroup -Name $resourceGroupName -Location $location `
  -Tag @{department="development";env="dev"}
$sacc = New-AzStorageAccount `
  -ResourceGroupName $resourceGroupName `
  -Name $storageAccountName `
  -Location $location `
  -Kind StorageV2 `
  -SkuName Standard_LRS `
  -EnableLargeFileShare

These PowerShell cmdlets create a storage account named az303fsdemosa that supports the Azure Files core service. If you compare them to the Azure CLI command from the “Access tier” section, they are similar except for the -EnableLargeFileShare parameter. This parameter instructs Azure to enable file shares of more than 5 TiB in this storage account. The storage account object is stored in the $sacc variable, which enables you to use the storage account context later in your configuration without having to retrieve it again. You will explore storage account contexts in “Manage access keys,” later in this chapter.

Create a file share named az303share and set a maximum size of 1 TiB (1,024 GiB) using -QuotaGiB in this PowerShell cmdlet:

$shareName = "az303share"
New-AzRmStorageShare `
  -StorageAccount $sacc `
  -Name $shareName `
  -QuotaGiB 1024

Note Changing Quotas

Quotas can be changed with Update-AzRmStorageShare.

At this point, you could start uploading files to your share, but first create a folder structure, which is called a “directory structure” in Azure Files. Execute the following commands in PowerShell to create a folder named topLevelDir:

$dirName = "topLevelDir"
New-AzStorageDirectory `
  -Context $sacc.Context `
  -ShareName $shareName `
  -Path $dirName

PowerShell returns the URL for the directory, as shown in the following output:

Directory: https://az303fsdemosa.file.core.windows.net/az303share

Type       Length  Name
Directory  0       topLevelDir

This URL can be used from inside an application to access the directory from anywhere, providing the application is authenticated and authorized to do so.

You should still have the storage account context in your PowerShell session. You can now use this instead of the directory URL to upload a file to your new directory. Execute this cmdlet to upload a file named file.txt:

"AZ-303 Azure Files share example" | Out-File -FilePath "file.txt" -Force
Set-AzStorageFileContent `
  -Context $sacc.Context `
  -ShareName $shareName `
  -Source "file.txt" `
  -Path "$($dirName)\file.txt"

Use the Azure portal to explore the storage account fileshare and check for the file’s existence.
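For comparison, the share, directory, and upload steps above can also be sketched with the Azure CLI. This is a minimal sketch, not the chapter's own method: it assumes you are signed in with az login, that the CLI can obtain credentials for the data-plane commands (for example through the AZURE_STORAGE_KEY environment variable), and that file.txt exists in the current folder. The run helper and the create_share_and_upload function are hypothetical names; by default the helper only prints each command so you can review it before setting DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Names reuse the values from the PowerShell steps above.
resourceGroupName="12storage"
storageAccountName="az303fsdemosa"
shareName="az303share"
dirName="topLevelDir"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_share_and_upload() {
  # Create the file share with a 1 TiB (1,024 GiB) quota.
  run az storage share-rm create \
    --resource-group "$resourceGroupName" \
    --storage-account "$storageAccountName" \
    --name "$shareName" \
    --quota 1024
  # Create the top-level directory inside the share.
  run az storage directory create \
    --account-name "$storageAccountName" \
    --share-name "$shareName" \
    --name "$dirName"
  # Upload file.txt into the new directory.
  run az storage file upload \
    --account-name "$storageAccountName" \
    --share-name "$shareName" \
    --source "file.txt" \
    --path "$dirName/file.txt"
}

create_share_and_upload
```

Running the script as shown prints the three commands; running it with DRY_RUN=0 executes them against your subscription.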

Blob Storage

Blobs are stored in a container; you can think of a container as a grouping of blobs. A container works for blobs in much the same way a folder does for files. As previously discussed, an Azure Storage account can support multiple core services. Therefore, this example reuses the az303fsdemosa storage account to store blobs. Follow the steps below, executing the commands in PowerShell, to configure a blob container, upload a file, and further explore the blob configuration options. This example assumes you are continuing from step 4 in the previous section (“Azure Files”) with the storage account context available in the $sacc object. If this is not the case, read the “Manage access keys” section later in this chapter to learn how to obtain the storage account context:

In PowerShell, execute the following cmdlet to create a blob container named images:

$containerName = "images"
New-AzStorageContainer `
  -Name $containerName `
  -Context $sacc.Context `
  -Permission blob

Note the -Permission parameter, which sets the public access level of the container; there are three values for this parameter:

■ Off. This value means no public access is allowed; containers with this value are private. To use this container, a service must authenticate and be authorized to do so.

■ Blob. This parameter grants read access to the blobs in the container when directly accessed. Container contents or other data cannot be accessed without authentication and authorization.

■ Container. This parameter grants read access to the blobs and the container. The contents of the container can be listed.

You can now use the storage account context to upload files to the container. Execute the following commands in PowerShell to upload a file to the images container created above.

Set-AzStorageBlobContent -File "D:\az303files\uploadTest.jpg" `
  -Container $containerName `
  -Blob "uploadTest.jpg" `
  -Context $sacc.Context

Note Edit the Code Block

You will need to edit this code block to set the -File parameter to an image file that exists on your client. You might also want to change the -Blob parameter so that the file names match after upload.

Open the Azure portal and navigate to the az303fsdemosa storage account. In the Storage Account menu, under Blob Service, choose Containers. Click the Images container name to view the file stored within it.

On the Storage Account menu, click Data Protection. Here, you can configure Blob Soft Delete, which enables a mechanism for recovering accidentally deleted blobs. The retention period can be set between 1 and 365 days. Blob Soft Delete is a storage account–level property that affects all blob containers. To enable Blob Soft Delete using PowerShell, set a retention policy on the storage account object using the following command:

$sacc | Enable-AzStorageDeleteRetentionPolicy -RetentionDays 7

Switch back to the Azure portal and click through the other blob service options to further examine them:

■ Lifecycle Management. This option allows you to set rules to automatically transition blobs through the Cool and Archive tiers to possible deletion after a specified number of days since modification.

■ Custom Domain. Blob storage can be configured to use custom domain names.

■ Azure CDN. This option provides integration with Azure CDN to give consistent latency for access from anywhere in the world.

■ Azure Search. This option adds full text search to blobs using Azure Cognitive Search.
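The container, upload, and soft delete steps in this section can also be sketched with the Azure CLI. As before, this is only an illustration under stated assumptions: you are signed in with az login, the CLI can obtain credentials for the storage account, and ./uploadTest.jpg is a hypothetical local path that you must replace. The run helper and configure_blob_container function are hypothetical names, and the helper prints commands by default rather than executing them.

```shell
#!/usr/bin/env bash
storageAccountName="az303fsdemosa"
containerName="images"
localFile="./uploadTest.jpg"   # hypothetical path; point this at a real image

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

configure_blob_container() {
  # Create the container with blob-level public read access.
  run az storage container create \
    --account-name "$storageAccountName" \
    --name "$containerName" \
    --public-access blob
  # Upload the image as a blob named uploadTest.jpg.
  run az storage blob upload \
    --account-name "$storageAccountName" \
    --container-name "$containerName" \
    --file "$localFile" \
    --name "uploadTest.jpg"
  # Enable Blob Soft Delete with a 7-day retention period.
  run az storage blob service-properties delete-policy update \
    --account-name "$storageAccountName" \
    --enable true \
    --days-retained 7
}

configure_blob_container
```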

Manage access keys

When you create a storage account, Azure also creates two access keys, which can be used to programmatically access the account. For example, in the “Azure Files” section, “context” was mentioned on multiple occasions. An Azure PowerShell context object holds authentication information, which allows you to run PowerShell cmdlets against resources. In the “Azure Files” section, the context is a storage context, which allows you to run storage cmdlets on a storage account resource that requires a context. To retrieve the context for the account in PowerShell, you must first retrieve the access key for the storage account. The context is retrieved using the key. For example, on the az303fsdemosa account used in the “Azure Files” section, you would use this code:

$key1 = (Get-AzStorageAccountKey `
  -Name $storageAccountName `
  -ResourceGroupName $resourceGroupName `
).Value[0]
$key1
$ctx = New-AzStorageContext `
  -StorageAccountName $storageAccountName `
  -StorageAccountKey $key1

$key1 stores the primary access key, and the storage context is in $ctx. The context can be used to manage the storage account configuration and access the stored data.

Microsoft recommends that the access keys be regularly rotated. Rotating the keys helps to keep the storage accounts secure by invalidating old keys. To manually rotate the keys, the following process must be followed:

1. Alter service connections to use the secondary key.

2. Rotate the primary key in the Azure portal or on the command line. For example, to rotate key1 for the az303fsdemosa storage account in PowerShell, execute the following command:

New-AzStorageAccountKey `
  -ResourceGroupName $resourceGroupName `
  -Name $storageAccountName `
  -KeyName key1

3. Alter service connections to use the primary key again.

4. Rotate the secondary key using the same method as shown in step 2.

The switch between primary and secondary in this process is why Microsoft recommends that all services use the same key, either the primary or the secondary, at any one time. Otherwise, services still using the key being regenerated will lose their connections to the storage account when you rotate the keys.
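The rotation process can be sketched in the Azure CLI as follows. This is a minimal sketch under stated assumptions: repointing your services between keys happens outside the script, so it appears here only as comments, and the run helper and rotate_keys function are hypothetical names. The helper prints the commands by default; set DRY_RUN=0 only when your services are ready for the key change.

```shell
#!/usr/bin/env bash
resourceGroupName="12storage"
storageAccountName="az303fsdemosa"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

rotate_keys() {
  # Before this point: switch service connections to the secondary key.
  # Regenerate the primary key.
  run az storage account keys renew \
    --resource-group "$resourceGroupName" \
    --account-name "$storageAccountName" \
    --key primary
  # Before continuing: switch service connections back to the primary key.
  # Regenerate the secondary key.
  run az storage account keys renew \
    --resource-group "$resourceGroupName" \
    --account-name "$storageAccountName" \
    --key secondary
}

rotate_keys
```

In a real rotation you would pause between the two renew commands until every service has been moved back to the new primary key.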

Need More Review? Manage Access Keys

To learn about using Azure Key Vault to manage access keys, see https://docs.microsoft.com/en-us/azure/storage/common/storage-account-keys-manage.

Configure network access to the storage account

Each configurable core service is bound to an endpoint, and each endpoint has a unique address based on a well-known URI:

■ Blob. https://<storage-account-name>.blob.core.windows.net

■ File. https://<storage-account-name>.file.core.windows.net

■ Table. https://<storage-account-name>.table.core.windows.net

■ Queue. https://<storage-account-name>.queue.core.windows.net
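Because the addresses follow a fixed pattern, they can be derived from the storage account name. The bash sketch below builds the four endpoint URLs for the az303fsdemosa account used earlier. The storage_endpoint function is a hypothetical helper, and the sketch assumes HTTPS and the default public Azure cloud domain; accounts with custom domains or in sovereign clouds use different addresses.

```shell
#!/usr/bin/env bash
# Build the public endpoint URL for one core service of a storage account.
storage_endpoint() {
  local account="$1" service="$2"
  echo "https://${account}.${service}.core.windows.net"
}

# Print the endpoint for each configurable core service.
for service in blob file table queue; do
  storage_endpoint "az303fsdemosa" "$service"
done
```

To see the endpoints Azure actually assigned to an account, you can query the primaryEndpoints property with az storage account show.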

The endpoints are public, and by default, the storage account is configured to accept all traffic to the public endpoints, even traffic from the Internet. However, you cannot gain access to an endpoint without proper authorization through an access key, shared access signature (SAS) token, or via Azure AD. It is likely that your customers’ use cases will require the public endpoint to be secured to a range of IP addresses or to a specific VNet. This is configured using storage firewalls and virtual networks. You may use the command line or the Azure portal to configure network access. To explore settings in the Azure portal, follow these steps:

Using the Azure portal, search for storage account in the search resources bar. Select Storage Accounts in the drop-down menu that is displayed as you type the resource name into the search. Select az303fsdemosa (in the 12storage resource group) from the storage account list. This step assumes you still have available the storage account you created earlier in this chapter. If not, pick any newly created storage account.

In the Storage Account menu, scroll down and click Firewalls And Virtual Networks to open the blade.

The default configuration of All Networks is selected. As discussed, this means all traffic, even Internet traffic, can access the endpoint. Choose Selected Networks. The configuration options for VNets and the storage account firewall are shown in Figure 1-19.

FIGURE 1-19 Configure the storage account firewall and virtual networks

By choosing Selected Networks, the default network rule is now set to “deny,” which means no traffic is allowed access to the storage account public endpoints by default. To allow access to your services, specific rules must be added in the Firewall or Virtual Networks sections of the Firewalls And Virtual Networks blade.

The Firewall section governs which public IP address ranges can be granted access to the storage account. You have the option to configure the following settings:

■ Add Your Client IP Address. The Azure portal picks up your public Internet-facing IP address from your browser. Choosing this option will add your client to the access list. For this demo, leave this option unchecked.

■ Address Range. Individual IP addresses, such as your customers’ static public Internet-facing IP addresses or a range of addresses in CIDR notation, can be added.

Access to the storage account can be secured to specific subnets within a VNet, which further isolates access to your storage account. The VNet can be in a different subscription. From the Virtual Networks section on the same blade, you have the following options:

■ Subscription. This is where you choose the subscription in which your VNet resides.

■ Virtual Networks. This is where you choose a VNet, though only networks in the storage account's region or its paired region will be listed.

■ Subnets. This is where you choose the subnets of the chosen VNet that require access.

Click Enable. This will create a service endpoint for storage in the VNet.

Click Add. This allows you to add the VNet and selected subnet for access to the storage account.
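The portal steps above have command-line equivalents. The following Azure CLI sketch sets the default rule to deny, allows one public IP range, enables the storage service endpoint on a subnet, and then allows that subnet. It is a sketch under stated assumptions: the VNet name az303vnet, the subnet name default, and the address range 203.0.113.0/24 (a documentation-only range) are hypothetical placeholders, as are the run helper and restrict_network_access function. The helper prints the commands by default; set DRY_RUN=0 to execute them.

```shell
#!/usr/bin/env bash
resourceGroupName="12storage"
storageAccountName="az303fsdemosa"
vnetName="az303vnet"            # hypothetical VNet name
subnetName="default"            # hypothetical subnet name
allowedRange="203.0.113.0/24"   # placeholder range; use your own public IPs

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

restrict_network_access() {
  # Equivalent of choosing Selected Networks: deny traffic by default.
  run az storage account update \
    --resource-group "$resourceGroupName" \
    --name "$storageAccountName" \
    --default-action Deny
  # Firewall section: allow a public IP range in CIDR notation.
  run az storage account network-rule add \
    --resource-group "$resourceGroupName" \
    --account-name "$storageAccountName" \
    --ip-address "$allowedRange"
  # Equivalent of clicking Enable: add the storage service endpoint.
  run az network vnet subnet update \
    --resource-group "$resourceGroupName" \
    --vnet-name "$vnetName" \
    --name "$subnetName" \
    --service-endpoints Microsoft.Storage
  # Equivalent of clicking Add: allow the subnet through the firewall.
  run az storage account network-rule add \
    --resource-group "$resourceGroupName" \
    --account-name "$storageAccountName" \
    --vnet-name "$vnetName" \
    --subnet "$subnetName"
}

restrict_network_access
```

The sketch assumes the VNet lives in the same resource group as the storage account; if it does not, pass the subnet's full resource ID to --subnet instead of its name.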