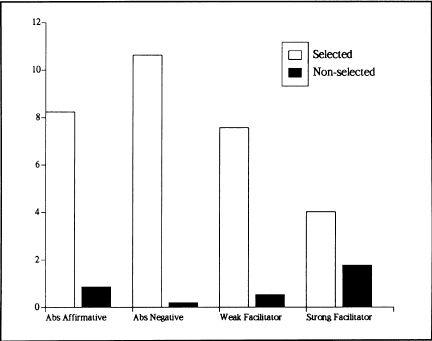

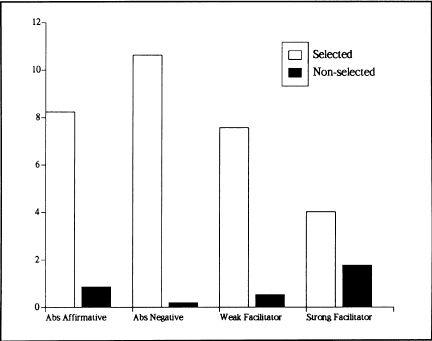

FIG. 3.1. Mean inspection times for selected and non-selected cards on each conditional in the study of Evans (in press, Experiment 1).

Relevance, rationality, and tacit processing

In the previous chapters, we have introduced our basic thesis that people’s reasoning and decision making help them to achieve their basic goals within cognitive constraints. Rationality1 is not an all or nothing matter: it comes in degrees. People will tend to be more rational in this sense in their practical thought about basic goals and sub-goals, than in their theoretical reasoning that is not directly related to practical goals. They will tend to be better at achieving their goals when they are relying on basic, preconscious processes, such as those that process visual information, than when relying on the explicit use of representations in their conscious reasoning, where their cognitive constraints can be serious. This view of rationality is the first of two basic theoretical foundations for the arguments advanced in this book. The second is that all human thought is highly subject to what we term relevance effects. For us this means that people reason only about a highly selective representation of problem information and prior knowledge, and that this selection is determined preconsciously. This idea connects with what some other authors in the field are describing as “focusing”, as we shall see.

The purpose of this chapter is to explore this notion of relevance and associated issues. In particular we consider the extent to which reasoning and decision making are determined by conscious and unconscious processes. We also begin to explore the relationship between relevance and rationality.

There is a difference between relevance and what has been called “the principle of relevance”. Both concepts have origins in the study of pragmatics—the study of how people use their background beliefs and their understanding of each other to communicate successfully. Grice (1975, 1989) pointed out that it is only possible to understand discourse by going beyond what can be logically derived from it, and that communication is generally a goal-directed activity in which those taking part co-operate to achieve common ends. For instance, an underlying reason for a group to communicate might be the building of a house, and to do that, ideas about the design would have to be shared and orders for materials issued before the necessary sub-goals could be achieved (cf. Wittgenstein, 1953). In our terms, Grice held that people display a high degree of rationality1 when they communicate with each other. He argued that speakers and hearers could understand each other well because each presumed the other to be acting in line with a set of informal pragmatic maxims or principles. For example, a hearer expects a speaker to be as informative as is required to attain their presupposed goal but no more so.

One of Grice’s maxims is “be relevant”. This idea was developed into the principle of relevance by Sperber and Wilson (1986) and ceased to be a maxim in Grice’s sense. Sperber and Wilson argued that all acts of communication, whether by speech, gesture, or other means, carry a guarantee of relevance from the communicator to the audience. It is fairly easy to show that we would have little chance of communicating with one another without this principle. Let us consider some example utterances:

3.2 A: I don’t think I will bother to take my raincoat.

B: They’re forecasting rain for this afternoon.

The speaker of 3.1 produces two utterances which, in purely linguistic terms, are entirely unconnected. However, the speaker would be violating relevance if the two sentences were indeed unrelated. An utterance such as 3.1 would probably occur in a context where the speaker had cakes baking in an oven and would be out at the time they were due to finish. So the communication to the listener is really to the effect that he/she should take the cakes out of the oven in half an hour’s time.

Sperber and Wilson reinforce Grice’s view that understanding communication goes way beyond what people could logically extract from the content of statements made. In particular, they develop the notion of “mutual manifestness”. The cognitive environment for an individual consists of facts that are manifest. A fact is manifest if the individual is capable of representing it mentally. In the case of utterance 3.1, manifest facts might include (a) that the listener was present when the cakes were put in the oven, (b) the smell of cooking cakes, or (c) an earlier conversation in which the speaker has told the listener that he/she will need to take the cakes out. A relevant discourse takes account of what is mutually manifest in the cognitive environment. In this case it may be mutually manifest that there was a similar previous occasion on which the listener forgot the cakes and they burnt. This would permit the speaker to add some additional comment such as “Remember what happened last time” whilst maintaining relevance.

In example 3.2, the relevance of weather is established by the utterance of A, even though no explicit reference to weather is made. This is because A has raised the issue of whether to take a raincoat and it is mutually manifest that such decisions are normally based on judgements about the weather. The reply of B which relays the weather forecast is not only therefore relevant in the context established but also communicates an implicature of advice to A that A should in fact take the raincoat. Again, it is perfectly clear that without these pragmatic principles the discourse in 3.2 makes no sense. There is no purely semantic analysis that can connect the two sentences.

The distinction between language comprehension and explicit deductive inference is hazy indeed. As our examples indicate, all discourse comprehension calls for inferences, often of an implicit nature. In a deductive reasoning task, of course, subjects are instructed explicitly to draw inferences. However, they are presented with sentences that have to be understood. These will be subject to the same kinds of influence of pragmatic factors as we have already discussed. Then there is the question of whether instructions for explicit reasoning do indeed elicit a conscious deductive process of a different nature from that of implicit inference. This may be the case (we return to this problem in Chapter 6) but we would argue that the cognitive representations to which such processes are applied are both highly selective and preconsciously determined.

Sperber and Wilson’s theory is intended not simply as a pragmatic theory of communication but as a general theory of cognitive processing. This is made clearer by the recent reformulation of the theory (see, for example, Sperber, Cara, & Girotto, 1995) in which two principles of relevance are distinguished as follows:

The first (cognitive) principle of relevance:

Human cognitive processes are aimed at processing the most relevant information in the most relevant way.

The second (communicative) principle of relevance:

Every utterance conveys a presumption of its own relevance.

Note that the second principle corresponds to what was previously described as the principle of relevance. Sperber et al. also provide the following definition of relevance:

The greater the cognitive effect resulting from processing the information, the greater the relevance.

The greater the processing effort required for processing the information, the lesser the relevance.

In support of this cognitive theory of relevance, Sperber et al. (1995) present a new interpretation of work on the Wason selection task, which is discussed in Chapter 4. We agree broadly with their approach and in particular the emphasis upon processing effort, which is one of the bounding conditions on rational thought. However, following Evans (1989), our use of the term “relevance”, unless otherwise indicated, refers more generally to what determines the focus of subjects’ attention or the content of thought. Our basic idea is that explicit or conscious thinking is focused on highly selected representations which appear “relevant” but that this relevance is determined mostly by preconscious and tacit processes. This notion of relevance is related to, but subtly different from, that of availability in reasoning.

The availability heuristic was introduced by Tversky and Kahneman (1973) in order to account for judgements of frequency and probability. It was argued that people judge frequency by the ease with which examples can be “brought to mind” and that psychological factors influencing availability result in a number of cognitive biases. This idea of availability has also been applied to reasoning, for example by Pollard (1982) who argued that both the salience of presented information and the retrieval of associated knowledge could influence response on reasoning tasks. However, there are a number of examples in the reasoning literature that show that the availability of information does not necessarily influence responding. A good example is the so-called base rate fallacy, which we discuss in detail in Chapter 5. In this case the base rate statistic is presented in tasks requiring posterior probability judgement, but not seen as relevant in the standard versions of the problem, and thus disregarded by most subjects. Thus availability is necessary but not sufficient for relevance. Note that the concept of availability is broadly equivalent to manifestness in Sperber and Wilson’s system.

Relevance and tacit processing

Evans (1989) presented a discussion of preconsciously cued relevance in order to account for biases in reasoning. By “bias” we mean systematic errors relative to some impersonal normative standard, such as that of formal logic or probability theory. This work, presented prior to the analysis of Evans (1993a), made little reference to the issue of rationality. In our present terminology, biases—by definition—limit rationality2. Whether they limit rationality1 also, however, is a moot question and one to which we will devote much attention in this book. In general, we shall argue that many biases reflect processes that are adaptive in the real world if not in the laboratory—a good example being the belief bias effect discussed in Chapter 5. Where biases do not appear to be goal-serving in a natural setting, they may reflect some basic cognitive constraints on human information-processing ability.

The Evans (1989) work was based upon application of the “heuristic-analytic” (H-A) theory of reasoning. This theory was originally presented by Evans (1984) but is a development of an earlier “dual process” theory (Wason & Evans, 1975; Evans & Wason, 1976). The major development in the H-A theory is the explication of the concept of relevance. Unconscious heuristic processes are proposed to produce a representation of relevant information, which is a combination both of that selected from the present information and that retrieved from memory. Analytic processes are assumed to introduce some form of explicit reasoning that operates upon the relevant information in a second stage.

The current book builds upon the heuristic-analytic theory, extending and revising it in important respects. The H-A theory, as presented by Evans (1989), had two notable weaknesses that failed to elude several critics of the theory. One problem was that the issue of rationality was insufficiently addressed. We have already indicated that this is to be a central concern in the present work. The second problem is that the nature of the analytic stage was entirely unspecified, and hence the issue of how deductive competence is achieved was not addressed. This problem will not be avoided in the present volume, although its discussion is largely deferred until Chapters 6 and 7. For the time being we will content ourselves with the assumption that explicit reasoning does occur in an analytic stage, but only in application to information selected as relevant.

The word “heuristic” in the H-A theory is open to misinterpretation. The term is often applied to short-cut rules that may be applied in a conscious strategy in order to produce an output of some kind, such as the solution to a problem, or a decision taken. In the H-A theory, heuristic processes refer only to preconscious processes whose output is the explicit representation of relevant information. In the Evans (1989) theory, further, analytic processing is required before a behavioural outcome is observed. We now take a somewhat different view, which is explicated in detail in Chapter 7 of this book. Although tacit processes are certainly responsible for relevance and focusing, we believe that they may also lead directly to judgements and actions. Our position now is that explicit reasoning processes may determine decisions but need not. The central idea of the Evans (1989) theory, however, remains. Such explicit thinking as we engage in is mostly directed and focused by tacit, preconscious processes. These processes strongly constrain our ability to be rational2. For example, our understanding of logical principles will avail us little if our attention is focused on the wrong part of the problem information.

Although we are not concerned in this book with the mechanisms of “heuristic” or tacit cognitive processes, we would like to note the similarity between our view of such processes and the characterisation of cognition by connectionist or neural network theorists (see Smolensky, 1988, on connectionist approaches to cognition). In network models, there are many units that have multiple interconnections, rather like neurons in the human brain—hence the term “neural net”. Activity in the network consists of excitatory and inhibitory links between units. Learning occurs by modification to the set of mathematical weights that determine these connections. Hence, knowledge in a network is tacit or implicit. There is no need to postulate propositions or rules, just a set of weightings. The knowledge contained in a network—like the tacit knowledge contained in a human being—can only be inferred by observation of its behaviour.1

We are attracted to the neural network characterisation of heuristic processes for several reasons. We believe that what is relevant often “pops out” into consciousness very rapidly, despite great complexity in the nature of pragmatic processes and the very large amount of stored knowledge that has to be searched. For those who would argue that this is an implausible model of thinking, consider that we already know that much cognitive processing is of this kind—rapid, preconscious, and computationally very powerful. Visual perception provides an astonishing example in which our conscious percepts are immediately “given” in real time, despite the incredible amount of information processing required. Similarly, we (or rather our brains) effortlessly and immediately apply a combination of linguistic and pragmatic knowledge to derive, without any apparent conscious effort of thought, the meaning of everyday discourse. Such processes are almost unimaginably complex to model by sequential, propositional means but significant progress in both of these domains is being made by use of computer simulations based on neural networks.

Hence, our characterisation of the processes responsible for relevance in reasoning and decision making is entirely consistent with the nature of cognition in general and is plausibly modelled by the connectionist approach. We are not precluding the possibility of sequential and conscious reasoning at the analytic stage (see Chapter 6), but we are arguing that much important processing has been completed prior to or independently of conscious thought. We also see links between our proposals and those of researchers in the implicit learning tradition (see Berry & Dienes, 1993; Evans, 1995a; Reber, 1993). Research in this area suggests that there are separate explicit and implicit cognitive systems: people may hold knowledge at a tacit but not explicit level or vice versa. Tacit knowledge may reflect innate modules or compilation of once conscious knowledge but it may also be acquired implicitly without ever being in the explicit system. The defining characteristic of tacit knowledge is that people can demonstrate it in their behaviour—i.e. they have procedural knowledge of how to do something, but cannot verbalise it. We take this to mean that they are not conscious of the truth of some explicit propositional representation of this knowledge. (Ryle, 1949, provides the philosophical background to much contemporary discussion of the difference between knowing how and knowing that or propositional knowledge.) With tacit knowledge, there can also be a lack of meta-knowledge—people may not be aware that they possess knowledge held tacitly.

The rational function of relevance

Why is it adaptive, or rational1, for relevant representations to pop up from preconscious processes and for our explicit reasoning to be limited by such representations? Reber (1993) presents an evolutionary argument for the “primacy of the implicit” in which he suggests that the implicit system is highly adaptive, and that much of it evolved first in our early human and pre-human ancestors, and is shared with other animals. But whether our tacit abilities are old in evolutionary terms, as aspects of our visual system must be, or relatively young in these terms, as some of our tacit linguistic abilities must be, the evidence shows that these abilities are far more robust in the face of neurological insult than are explicit processes (see also Berry & Dienes, 1993). This is evidence not simply for their primacy but also for their distributed, connectionist nature. The reason that implicit processes serve rationality1, however, is that they are so enormously computationally powerful, allowing extremely rapid real time processing of vision, language, and memory. The explicit system, by contrast, is an inherently sequential processing system of highly limited channel capacity. For example, the amount of information that can be held in verbal working memory and thus reported (see Ericsson & Simon, 1980) is the merest fraction of that which the brain must process in order to allow us to execute the simplest of behaviours.

Although almost all cognitive psychologists accept that tacit processing underlies much cognition, there is still a widespread identification of reasoning and decision making with conscious explicit processes. We hold that this is mistaken, though we do agree that explicit processes have an important part to play in intelligent thought. The principal problem for consciousness is the vast overload of information both in the environment and in memory that is potentially available. Without very rapid and effective selection of relevant information, intelligent thought would be virtually impossible. The network approach manifests rationality1 in that networks essentially acquire their knowledge through interaction with the environment. For example, a network classifying patterns is doing so by implicit learning of previous exemplars, and not by the application of explicit rules or principles.

We do not take the epiphenomenalist position of arguing that conscious thought serves no purpose. On the contrary, we believe that explicit processes can produce logical reasoning and influence decision making (see Chapters 6 and 7). Our point is that explicit reasoning can only be effectively applied to a very limited amount of information, the representation of which must derive from preconscious, implicit relevance processes.2 Otherwise, as Oaksford and Chater (1993, 1995) have indicated, real life reasoning based on a large set of beliefs would be hopelessly intractable.

In previous chapters we have stated that effectively pursuing goals makes for rationality1. We have stressed the fundamental importance of practical thought, motivated by the need to solve problems and make decisions in order to achieve everyday objectives. Also of importance is the basic theoretical reasoning closely tied to such practical thought. When effective, this reasoning is motivated by an epistemic goal, such as inferring whether some tree has apples on it, that will help to attain some practical objective, such as getting an apple to eat. As indicated earlier, Sperber and Wilson argue that relevant information is that which will have the most cognitive effects for the smallest processing effort. We would stress that not just any cognitive effects, for however little effort, determine relevance. What is subjectively relevant is what is taken to further the currently active goals, be they epistemic, practical, or a combination of both. With the practical goal of getting an apple to eat, we have the epistemic sub-goal of inferring whether a tree we are looking at in the distance has apples on it. Anything we can remember that will help us infer whether it does or it does not will be relevant to us in this context. Anything anyone else can say to help us perform either inference will also be relevant.

Thus relevance is partly to be explained in terms of what has epistemic utility, but there is more to it than that, except in the case of pure, academic theoretical reasoning, not aimed at any practical goal. The defining characteristic of relevance processes, as we understand them, is that they selectively retrieve information from memory, or extract it from a linguistic context, with the object of advancing some further goal. Nor would we wish to restrict our notion of what is retrieved to verbal as opposed to procedural knowledge. Previously learned rules and heuristics, for example, may be retrieved as relevant in the given context.

As noted in the previous chapter, Anderson’s (1990) rational analysis of cognition has similarities with our own approach, and we would regard him essentially as a theorist of personal rationality. He has recently revised his earlier ACT* model into ACT-R, which is a theory of adaptive cognition based upon production systems (see Anderson, 1993). Such systems are programmed with a set of production rules of the form “if <condition> then <action>”. Like ourselves, Anderson relies on an extension of the distinction between knowing how and knowing that. He distinguishes procedural knowledge, which he tries to account for using production rules, and declarative knowledge. People can only display the former in their behaviour, but can give a verbal report of the latter. Clearly his distinction is close to the one we have made between tacit or implicit states or processes, and explicit ones that can be reported. Sometimes explicit, conscious representations can be difficult to describe, as Anderson points out. For example, some people are good at forming mental images that they find hard to describe fully. We discuss the problem of introspective report and explicit processes in Chapter 7. In general, we believe that explicit processes are identifiable through verbalisations, but not necessarily by self-describing introspective reports.
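The general form of a production rule, “if <condition> then <action>”, can be illustrated with a toy sketch. This is not ACT-R and makes no claim about Anderson’s implementation; the function `run`, the rule contents, and the working-memory strings are all hypothetical, borrowed loosely from the apple example used earlier in this chapter.

```python
def run(rules, memory, max_cycles=10):
    """Repeatedly fire the first rule whose condition is satisfied by memory
    and whose action would add something not already present."""
    memory = set(memory)
    for _ in range(max_cycles):
        for condition, action in rules:
            if condition <= memory and not action <= memory:
                memory |= action  # the "then <action>" part: update working memory
                break
        else:
            break  # no rule fired on this cycle, so stop
    return memory


# Hypothetical rules: each is a (condition, action) pair of sets of facts.
rules = [
    ({"goal: eat apple", "tree has apples"}, {"action: pick apple"}),
    ({"goal: eat apple"}, {"subgoal: check tree for apples"}),
]

without_apples = run(rules, {"goal: eat apple"})
with_apples = run(rules, {"goal: eat apple", "tree has apples"})
print(sorted(without_apples))
print(sorted(with_apples))
```

The point of the sketch is only that the knowledge is carried by condition-action pairs that are displayed in behaviour, rather than by statements the system could report.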

We are then in agreement with Anderson about the importance of a number of issues. We usually prefer to speak of implicit or explicit states and processes, rather than of knowledge, because these states or processes can go wrong, and then they do not embody or yield knowledge. This can happen in unrealistic psychological experiments, because of general cognitive limitations, or quite simply because people make mistakes. Anderson’s main interests are in memory, skill acquisition, and other cognition that for the most part is not directly related to reasoning and decision making. The tacit, implicit, or procedural is of widely recognised importance in these other areas of cognition, but our argument is that it is of equal importance in reasoning and decision making. These states and processes are essential, we argue, for selecting relevant information for explicit representation and processing in explicit inference.

Conditional reasoning is the study of how people make inferences using “if” and other ways of expressing conditionality. In the deductive reasoning literature, relevance effects have been most clearly demonstrated on conditional reasoning tasks. We will give a brief historical survey in this section of how such effects were discovered, as well as pointing to some of the most recent evidence concerning relevance and reasoning.

The conditional truth table and selection tasks

In textbooks on propositional logic, the sentence “if p then q” is treated as an extensional conditional, with the truth table:

| Case | Truth of rule |
|---|---|
| p, q | true |
| p, not-q | false |
| not-p, q | true |
| not-p, not-q | true |

Thus this conditional is considered to be true whenever its antecedent condition is false. For example, “If that animal is a dog then it has four legs” is true both of a cat (which has four legs) and a goldfish (which does not). Wason (1966) suggested that this is psychologically implausible and that people actually think that conditionals are irrelevant when the antecedent condition is false. For example, the above conditional only applies to dogs. He called this hypothesis the “defective truth table”.
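The contrast between the extensional truth table and Wason’s “defective” truth table can be set out in a short sketch. The function names are our own, and representing “irrelevant” as `None` is simply a convenient assumption for illustration.

```python
from itertools import product


def material_conditional(p: bool, q: bool) -> bool:
    """Extensional "if p then q": false only when p is true and q is false."""
    return (not p) or q


def defective_conditional(p: bool, q: bool):
    """Defective reading: a false antecedent makes the case irrelevant (None)."""
    if not p:
        return None
    return q


def case_label(p: bool, q: bool) -> str:
    return f"{'p' if p else 'not-p'}, {'q' if q else 'not-q'}"


# One row per logical case: (label, extensional value, defective value).
rows = [
    (case_label(p, q), material_conditional(p, q), defective_conditional(p, q))
    for p, q in product([True, False], repeat=2)
]

for label, extensional, defective in rows:
    shown = "irrelevant" if defective is None else defective
    print(f"{label:14} extensional={extensional!s:5} defective={shown}")
```

The two readings agree on the cases with a true antecedent and differ only in how the two false-antecedent cases are treated.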

The first experiment to test Wason’s hypothesis was conducted by Johnson-Laird and Tagart (1969), who gave subjects a pack of cards, each with a letter-number pair printed on it. They were then given a conditional such as “If the letter is a B then the number is a 7” and asked to sort the cards into three piles, “true”, “false”, and “irrelevant”. As Wason predicted, most false antecedent cards (ones with a letter which was not a B) were classified as irrelevant to the truth of the conditional.

Evans (1972) ran a variant on this experiment in which subjects were asked to construct in turn cases that made the conditional statement true or made it false. His materials were different from those of Johnson-Laird and Tagart, but if we imagine that the same kind of conditional was tested, the task of the subject was effectively to construct a letter-number pair to verify or to falsify a conditional. The key point of this experiment, however, was that subjects were instructed to perform these tasks exhaustively—i.e. to construct all logically possible ways of verifying and of falsifying the statement. Thus any case that was not selected was inferred by the experimenter to be irrelevant. The idea was to avoid demand characteristics that cue the subject to think that some cards are irrelevant.

The method of Johnson-Laird and Tagart has become known as the truth table evaluation task and that of Evans as the truth table construction task. Not only did Evans (1972) replicate the basic findings of Johnson-Laird and Tagart, but a later study (Evans, 1975) demonstrated that the results of using the construction and evaluation forms of the truth table task are effectively identical. In other words, subjects omit on the construction task precisely the same cases that they judge to be irrelevant on the evaluation task. This quite surprising task independence was an early indication of the power of relevance effects in conditional reasoning.

A more significant feature of the Evans (1972) study was the introduction of the “negations paradigm” into the truth table task. In this method, four types of conditionals of the following kind are compared:

| Form | Example |
|---|---|
| If p then q | If the letter is A then the number is 3 |
| If p then not q | If the letter is D then the number is not 7 |
| If not p then q | If the letter is not N then the number is 1 |
| If not p then not q | If the letter is not G then the number is not 5 |

This task led to the accidental discovery of a second relevance effect in conditional reasoning, which Evans termed “matching bias”. In addition to being more likely to regard a case as irrelevant to any of these statements when the antecedent condition was false, subjects were also more likely to regard as irrelevant cases in which there was a mismatch between the elements referred to in the statements and those on the cards. For example, consider the case where the antecedent is false and the consequent true (the FT case). For the conditional “If the letter is A then the number is not 4” such a case is created by an instance such as G6, i.e. by pairing a letter that is not an A with a number that is not a 4 (a double mismatch). On the other hand, for the conditional “If the letter is not A then the number is 4” the FT case is produced by A4, a double match. In the former case, the great majority of subjects failed to construct the FT case (and in later evaluation tasks reported in the literature, rated it as irrelevant). However, the dominant response in the second case is to construct the case as a falsifier of the statement (or to rate such a case as false).

It was subsequently discovered that the same two causes of relevance apply on the better known and much more investigated problem known as the Wason selection task (Wason, 1966). There are many published experiments on the selection task, which have most recently been reviewed by Evans et al. (1993a, Chapter 4). In what is known as the standard abstract form of the task, subjects are shown four cards displaying values such as:

T    J    4    8

together with a statement such as:

If there is a T on one side of the card then there is a 4 on the other side of the card.

Subjects are instructed that each card has a letter on one side and a number on the other. They are then asked which cards need to be turned over in order to discover whether the statement is true or false. Wason’s original findings, which have been replicated many times, were that few intelligent adult subjects (typically less than 10%) find the logically correct answer which is the T and the 8 (p and not-q). Most choose either T alone (p) or T and 4 (p and q). T and 8 are the logically necessary choices, because only a card which has a T on one side and does not have a 4 on the other could falsify the conditional. Wason’s original interpretation was that subjects were exhibiting a confirmation bias by looking for the case T and 4. However, from a strictly logical point of view, turning the 4 to find a T does not constitute confirmation because the conditional is consistent with this card no matter what is on the back.
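The logic of the correct selection can be checked mechanically. The sketch below assumes, purely for illustration, that the only possible letters are T and J and the only possible numbers are 4 and 8; the names `falsifies` and `must_turn` are ours.

```python
RULE_P, RULE_Q = "T", "4"  # "If there is a T on one side then there is a 4 on the other"
LETTERS = ["T", "J"]       # assumed set of possible letters
NUMBERS = ["4", "8"]       # assumed set of possible numbers


def falsifies(letter: str, number: str) -> bool:
    """Only a card pairing p with not-q can falsify the conditional."""
    return letter == RULE_P and number != RULE_Q


def must_turn(visible: str) -> bool:
    """A card needs turning only if some hidden value could make it a falsifier."""
    if visible in LETTERS:
        return any(falsifies(visible, number) for number in NUMBERS)
    return any(falsifies(letter, visible) for letter in LETTERS)


cards = ["T", "J", "4", "8"]
needed = [card for card in cards if must_turn(card)]
print(needed)
```

Under these assumptions the 4 card is never selected, because nothing on its reverse side could produce the falsifying combination.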

Application of the negations paradigm to the selection task (first done by Evans & Lynch, 1973) shows that matching bias provides a much better account of the data than does confirmation bias. Consider, for example, the case of the conditional with a negated consequent: “If there is a B on one side of the card, then there is not a 7 on the other side”. If subjects have a confirmation bias, then they should choose the B and the card that is not a 7, in order to find the confirming TT case (true-antecedent and true-consequent). If they have a matching bias, however, they should choose the B and 7, which are the logically correct choices. The latter is what the great majority of subjects actually do. In fact, on all four logical cases, subjects choose cards that match the lexical content of the conditional far more often than cards that do not (see Evans et al., 1993a, for a review of the experiments).
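The way the negations paradigm separates the two hypotheses can be made explicit by computing, for each of the four conditionals, the logically correct cards, the cards a confirmation bias would pick, and the cards a matching bias would pick. This is a sketch of the predictions only; the mismatching cards “K” and “2” and all identifiers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    neg_antecedent: bool
    neg_consequent: bool
    letter: str = "B"   # letter named in the conditional
    number: str = "7"   # number named in the conditional


OTHER_LETTER, OTHER_NUMBER = "K", "2"  # assumed mismatching cards


def card_for(named: str, other: str, negated: bool, want_true: bool) -> str:
    """Card that makes a (possibly negated) component true or false.

    An affirmative component is true on the named value; a negated
    component is true on the other value.
    """
    shows_named = want_true != negated
    return named if shows_named else other


def predictions(rule: Rule) -> dict:
    ta = card_for(rule.letter, OTHER_LETTER, rule.neg_antecedent, True)
    tc = card_for(rule.number, OTHER_NUMBER, rule.neg_consequent, True)
    fc = card_for(rule.number, OTHER_NUMBER, rule.neg_consequent, False)
    return {
        "logical": {ta, fc},        # true antecedent and false consequent
        "confirmation": {ta, tc},   # true antecedent and true consequent
        "matching": {rule.letter, rule.number},
    }


affirmative = predictions(Rule(False, False))      # If B then 7
negated_consequent = predictions(Rule(False, True))  # If B then not 7
print(affirmative)
print(negated_consequent)
```

On the affirmative rule the confirmation and matching predictions coincide, which is why that rule alone cannot distinguish them; on the negated-consequent rule they come apart, and matching coincides with the logically correct choice.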

When selection task choices are averaged over the four conditionals, so that matching bias is controlled, what is found is that the true-antecedent is the most popular and the false-antecedent the least popular choice. This, of course, links with Wason’s defective truth table hypothesis and the findings on the truth table task. In summary, the two tasks provide evidence of two (independent) sources of relevance:

Cases appear more relevant when the antecedent condition is fulfilled.

Cases appear more relevant when their features match those named in the conditional.

Evans (1989) attributes these two findings to the if- and not-heuristics respectively, which determine relevance in the preconscious manner discussed earlier. The linguistic function of “if” is to direct the listener’s attention to the possible state of affairs in which the antecedent condition applies, hence enhancing relevance for true antecedent cases. The linguistic function of “not” is to direct attention to (i.e. heighten the relevance of) the proposition it denies. We do not normally use negation in natural language to assert new information; rather we use it to deny presuppositions. If we say “We are going to choir practice tonight” or “We are not going to choir practice tonight”, the topic of the discourse is the same in either case. In the latter case, the listener will be thinking about us going to choir practice, not any of the many things we may be doing instead. The statement will also only be relevant in a context where we can presume that the listener did think that we were going and needed to know that we were not—a fellow member of the choir, perhaps, who could give apologies.

Experimental evidence of relevance in conditional reasoning

Some critics complain that the relevance account of the selection task and matching bias does little more than describe the phenomena. However, several types of evidence have now been produced in support of the relevance explanation of the truth table and selection task data. First, let us consider the role of explicit and implicit negation.

Evans (1983) suggested that the use of explicit negations in truth table cases should reduce or eliminate the matching bias effect. He slightly modified the task by using conjunctive sentences to describe the cases. For example, for the statement “If the letter is A then the number is not 4” the control group received a description of a letter-number pair using implicit negation, as is used in the standard task: “The letter is B and the number is 7” was used to convey the FT case, instead of just “B7”. In the experimental group, however, explicit negations were used, so that this case became “The letter is not A and the number is not 4”.

Note that with the explicit negation form, all cases now match the statements in terms of the letters and numbers. Evans (1983) found a substantial and significant reduction of the matching bias in this condition and a corresponding increase in correct responding. We should, of course, expect that the use of explicitly negative cases on the cards would similarly inhibit matching bias on the selection task. Several recent studies have shown that explicit negatives do not by themselves facilitate correct choices on the affirmative abstract selection task (see Girotto, Mazzocco, & Cherubini, 1992; Griggs & Cox, 1993; Jackson & Griggs, 1990; Kroger, Cheng, & Holyoak, 1993). However, none of these studies used the negations paradigm to allow a proper measure of matching.

A relevant recent study was conducted by Evans, Clibbens, and Rood (in press a) in which the negations paradigm was employed throughout. In the first experiment they attempted to replicate the findings of Evans (1983) using the truth table task, and also to extend the effect from the usual if then to other forms of conditionals—namely only if conditionals, i.e. “(Not) p only if (not) q” and reverse conditionals, i.e. “(Not) q if (not) p”. With all three forms of conditionals, very substantial matching bias was found with implicit negations. With explicit negations, however, the effect was all but eliminated. Thus the findings both very strongly replicated and extended the findings of Evans (1983). In two further experiments, Evans et al. (in press a) generalised this finding to the selection task where explicit negative cases completely removed the matching bias effect.

The latter results do not conflict with those of other recent studies quoted earlier, however, because this reduced matching bias was not accompanied by a facilitation in correct responding. The previously mismatching cards were selected more frequently regardless of their logical status. Moreover, it is consistent with the H-A theory that release from matching should facilitate logically correct responses on the truth table task but not the selection task. This is because the truth table task is proposed to elicit analytic as well as heuristic processes, whereas the selection task generally reflects only relevance judgements (Evans, 1989). The issue of whether analytic reasoning can affect selection task choices, however, is dealt with in Chapter 6.

A different approach to providing evidence for relevance on the selection task is to try to show directly that subjects attend specifically to the cards they select. As noted above, Evans (1989) has argued that on the selection task (but not the truth table task) the data can be accounted for by relevance or heuristic processes alone. The argument is that the cards that are not selected are simply not thought about, rather than thought about and rejected after analysis of the consequence of turning them over. In this account, in fact, there is no reason to suppose that any analytic reasoning about the backs of the cards occurs at all. The first evidence of attentional bias was presented by Evans, Ball, and Brooks (1987) who used a computer version of the task in which subjects had to signal a decision for every card—Yes to select, No to reject—by pressing a key. They found a correlation between decision order and choice. Matching cards, for example, were not only chosen more often but decided about earlier.

A much stronger method was devised recently by Evans (1995b and in press). This also used a computer-presented version of the selection task. In this case subjects had to choose cards by pointing with a mouse at the card on the screen and then clicking the mouse button. The innovation was an instruction to subjects to point at the card they were considering selecting, but not to press the button until they were sure. The time spent pointing before selecting was recorded by the computer as the card inspection time. Evans reported several experiments that used this method and that permitted the testing of two predictions:

P1: Those cards that have a higher mean selection frequency will also have a higher mean inspection time.

P2: On any given card, those subjects who choose the card will have higher inspection times than those who do not.

Prediction P1 is at the usual theoretical level of overall performance. P2, however, is stronger because it attempts to differentiate the subjects who choose a given card from those who do not. No current theory of reasoning attempts to account for these kinds of individual differences. In the experiments, a variety of abstract and thematic versions of the selection task were employed and in all cases strong support was found for both predictions. To illustrate P2, the predicted difference between choosers and non-choosers, we present the data from one of the experiments in Fig. 3.1. The results, as can be seen, are quite dramatic. Subjects scarcely inspect at all the cards that they do not choose.

FIG. 3.1. Mean inspection times for selected and non-selected cards on each conditional in the study of Evans (in press, Experiment 1).

A commonly asked question about these findings is: Why do subjects spend 15 or 20 seconds looking at a card that they will choose anyway? What are they thinking about? The answer to this was provided by an experiment of Evans (1995b) in which concurrent verbal protocols were recorded. First, it was found—in confirmation of the inspection time data—that people tended only to refer to the cards that they ended up selecting. (A similar finding is reported by Beattie & Baron, 1988.) More interestingly, when subjects chose a card they also referred quite frequently to the value on the back of the card. This finding suggests that subjects do engage in analytic reasoning on the selection task but that this does not affect the choices they make. First, cards that are not selected are not attended to. Hence, analytic reasoning is not used to reject cards. Secondly, when subjects do think about a card, they generally convince themselves to select it. This may be a peculiarity of the selection task and its instructions. For example, Evans and Wason (1976) presented four groups of subjects with alternative purported “solutions” to the problem and found that subjects would happily justify the answer they were given. In fact, none complained they had been given the wrong answer!

Relevance, focusing and rationality

Recently, Sperber, Cara, and Girotto (1995) have presented a new relevance theory of conditional reasoning, based on the Sperber and Wilson (1986) theory and different in some significant ways from the Evans (1989) account. For example, they do not accept the distinction between heuristic and analytic processes, arguing instead that all levels of representation and process in human cognition are guided by relevance. What the results of their experiments and those of other recent researchers (Green & Larking, 1995; Love & Kessler, 1995) show is that manipulations that cause people to focus on the counter-example case of p and not-q facilitate correct responding on the selection task.

What other authors refer to as “focusing” is also closely related to what we call relevance effects. Legrenzi, Girotto, and Johnson-Laird (1993), for example, suggest that subjects focus on what is explicitly represented in mental models. Other psychologists are using the term focusing without necessarily linking it with mental model theory (e.g. Love & Kessler, 1995). A useful terminological approach here might be to use the term focusing to refer to the phenomenon of selective attention in reasoning in a theoretically neutral manner. In this spirit we are happy to use the term ourselves, but will argue theoretically that relevance is the main cause of focusing. The further theoretical argument that focusing reflects what is explicitly represented in mental models is not incompatible with this position, because pragmatic considerations such as relevance are required in any case to explain what people represent in their mental models.3 We will discuss the relation between relevance and model theory further in Chapter 6, but suffice it to say that Evans (e.g. 1991, 1993b) has already suggested that what is subjectively relevant might be that which has explicit representation in a mental model.

A final point to consider before moving on is this: How can relevance effects be rational if they lead to biases such as matching bias? As well as being irrational2, matching bias may appear to be irrational1—it certainly does not serve the subjects’ goal of getting the right answer in the reasoning experiments. However, the notion of rationality1 is embedded in the concept that we have evolved in an adaptive manner that allows us to achieve real world goals, not to solve laboratory problems. Indeed, central to our theoretical view is the argument that subjects cannot consciously adjust their tacit or procedural mechanisms for the sake of an experiment. Matching bias is, in fact, a consequence of generally useful mechanisms that lead us to direct attention appropriately when understanding natural language. The if-heuristic enables us to expand our beliefs efficiently, or the information we extract from discourse, when we believe, or have been told, that a conditional is true. The not-heuristic helps us to co-operate on a topic for discourse and to determine how much confidence we should have in what we are told.

Suppose someone says to us that we are not looking at an apple tree. To understand this and how believable it is, we are better off thinking about apple trees and engaging our, largely tacit, recognition ability for them. We could not have such an ability for the unlimited set of completely heterogeneous things that are not apple trees.

Relevance in Decision Making and Judgement

We now turn to consideration of some recent studies in the psychology of decision making, which in our view provide evidence of relevance effects, even though they are not presented in these terms by their authors. We start by consideration of some of the very interesting experiments summarised by Shafir, Simonson, and Tversky (1993).

Relevance and reason-based choice

The theoretical emphasis of Shafir et al. is on reason-based choice, although they wisely avoid a mentalistic definition of “reasons”, which would imply that these are found in propositions that people explicitly represent and can report. Shafir et al. recognise the evidence in the literature of the unreliability of introspective accounts of the reasons for decision making (see Chapter 6). They define “reasons” as the “factors or motives that affect decision, whether or not they can be articulated or recognised by the decision maker” (p. 13). When we examine the phenomena they discuss, we find that this analysis of reason-based choice is closely related to what we hold to affect relevance. That is, factors that induce selective attention or focusing on certain aspects of the available information, and that explain why people make particular choices.

Perhaps the best example discussed by Shafir et al. is a study of “reasons pro and con” (Shafir, 1993). In this experiment subjects consider two choices that have a number of attributes. One choice is fairly average on all dimensions; the other is positive on some and negative on others. One group of subjects are asked to decide which option to choose and the other which option to reject: logically, but not psychologically equivalent tasks. For example, here are the positive and negative versions of one of Shafir’s problems:

Prefer

Imagine that you are planning a vacation in a warm spot over spring break. You currently have two options that are reasonably priced. The travel brochure gives only a limited amount of information about the two options. Given the information available, which vacation spot would you prefer?

Cancel

Imagine that you are planning a vacation in a warm spot over spring break. You currently have two options that are reasonably priced, but you can no longer retain your reservations on both. The travel brochure gives only a limited amount of information about the two options. Given the information available which would you decide to cancel?

The subjects of either group are then shown the following information:

| Spot A | Spot B |
|---|---|
| average weather | lots of sunshine |
| average beaches | gorgeous beaches and coral reefs |
| medium-quality hotel | ultra-modern hotel |
| medium-temperature water | very cold water |
| average nightlife | very strong winds |
| | no nightlife |

Subjects who were asked which they would prefer showed a clear preference for Spot B (67%). However, this dropped sharply in the cancel condition where only 48% chose B (by cancelling A). The difference seems to imply a violation of impersonal principles for rationality2, but strikes us as a clear example of a relevance effect on a decision-making task. Note first that the subjects do not make a choice arising from their own desires in a case of actual decision making. They are asked to make a hypothetical choice, and pragmatic factors affecting relevance should have an effect. When asked for a preference, the relevance of the positive aspects of the options is highlighted. There are more positive reasons to choose B (more sunshine, better beaches and hotels). When asked to make a decision to cancel, however, the negative aspects of the options come into focus. B is now less attractive because it has some clear negative features (cold water, no nightlife, etc.), which could justify a decision to cancel.

Another phenomenon of interest to us discussed by Shafir et al. is the “disjunction effect” (see also Shafir & Tversky, 1992; Tversky & Shafir, 1992). A set of experiments show a further violation of an impersonal standard, as captured by Savage’s (1954) sure-thing principle, which informally states the following: If you would prefer A to B when you know that C has occurred, and if you would prefer A to B when you know that C has not occurred, then you should prefer A to B when you do not know whether C has occurred or not. One experiment providing evidence of violation was also about a possible holiday, this time at the Christmas break and in Hawaii (Tversky & Shafir, 1992). All groups were told that they had just taken a tough qualifying examination and had three choices: to buy, not buy or (at a small cost) to defer their decision. The result was that 54% of the subjects told they had passed the examination chose to buy and 30% to defer. For the group told that they had failed the exam, the figures were very similar: 57% buy and 31% defer. However, of the group who were told that they did not know the result, but would know it after the period of deferral, only 32% chose to buy with deferral being the most popular option (61%). The interpretation of this finding offered by Shafir et al. is that the reasons for taking the holiday are different in the case of passing (celebration) and failing (consolation) but uncertain in the case of the group with the unknown result. Lacking a clear reason, they suggest, subjects prefer to defer the decision until they know the result of the examination.
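The structure of the sure-thing principle can be written out as a simple check. The sketch below is only an illustration: the principle strictly concerns the preferences of a single decision maker, whereas here it is applied, as an assumption of ours, to the most popular choice in each group. The "not buy" figures are not quoted above and are computed as the remainder of each group's percentages.

```python
# Percentages of subjects buying or deferring, as reported above
# (Tversky & Shafir, 1992). "not buy" is derived as the remainder.
choices = {
    "passed": {"buy": 54, "defer": 30},
    "failed": {"buy": 57, "defer": 31},
    "unknown": {"buy": 32, "defer": 61},
}
for group in choices.values():
    group["not buy"] = 100 - group["buy"] - group["defer"]


def modal_choice(group):
    """Most popular option in a group (a group-level stand-in for a preference)."""
    return max(choices[group], key=choices[group].get)


def violates_sure_thing(if_c, if_not_c, if_unknown):
    """True if one option is preferred whether or not C occurred,
    yet a different option is preferred when C is unknown."""
    return if_c == if_not_c and if_unknown != if_c


violation = violates_sure_thing(
    modal_choice("passed"), modal_choice("failed"), modal_choice("unknown")
)
print(modal_choice("passed"), modal_choice("failed"), modal_choice("unknown"), violation)
```

Buying is the most popular option both for those told they passed and for those told they failed, yet deferral is the most popular option when the result is unknown, which is the pattern the principle rules out.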

Notice again that the subjects are asked to make a hypothetical choice. One reason why people might opt to defer a decision like this in real life until they know all the relevant facts, is difficulty in predicting how they will feel. That is, they may think it only fairly probable that they will want a consolation holiday if they hear of failure in the examination, and wonder as well if they will then be too depressed to enjoy a holiday. That would give them a good reason, as Shafir et al. are aware, to put off their decision until they do hear the result and know exactly how they feel. Many more factors can be relevant in real life than should strictly be taken into account in a controlled experiment. Even the way one hears whether one passed or failed—e.g. from a sympathetic or an unsympathetic lecturer—could affect how one feels about the holiday.

The general psychological significance of the disjunction effect, we believe, is that subjects’ choices are determined more by past experience and available experience than by calculation of future, uncertain possibilities. This is the aspect stressed by Shafir and Tversky (1992) who themselves discuss a possible connection between the disjunction effect and the characteristic failure of subjects on the Wason selection task. The selection task also requires subjects to cope with an uncertain disjunction of hidden values on the back of cards. Recall our argument (in Chapter 2) that no one could make most ordinary decisions by considering all the possible states of affairs that might result from alternative actions, and by combining computations of probability and utility in order to choose optimally. Our view is that actual decision making is affected more by past experience and learning, e.g. about one’s feelings in similar cases, than by reasoning about uncertain possibilities. In many real world situations, it would be hard to distinguish these hypotheses, because past experience would also form the basis of any reasoning and calculation of outcomes. However, on novel experimental problems, such as the selection task or the disjunction problems of Shafir and Tversky, the absence of decision making based on forward reasoning becomes apparent.

Relevance in data selection tasks

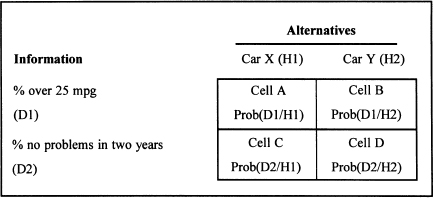

Due to the fame of the Wason selection task, it has perhaps been overlooked in the literature that there is a class of experimental problems that might be defined as selection tasks, of which Wason’s famous problem is just one. A generic selection task, we suggest, is one on which subjects are required actively to seek or choose information in order to make a decision. A task that fits this description is that associated with the pseudo-diagnosticity effect (Doherty, Mynatt, Tweney, & Schiavo, 1979), which we discussed briefly in Chapter 2. In this task, subjects are trying to decide between two hypotheses (H1 and H2) with respect to two alternative pieces of evidence (D1, D2). To illustrate with one of the problems used in a recent paper by Mynatt, Doherty, and Dragan (1993), subjects are trying to decide whether a car is type X or Y. Two types of information that are potentially available concern whether the car does better than 25 miles per gallon and whether it has major mechanical problems within the first two years of ownership. The two types of information about the two hypotheses can be described in the form of a 2×2 table with cells A, B, C, and D (see Fig. 3.2).

FIG. 3.2. Pseudo-diagnosticity problem from Mynatt et al. (1993).

In the standard experiment subjects are given information A, Prob(D1/H1), and asked which of B, C, or D they would choose to know in order to decide if the car is X or Y. Note the close structural similarity to the Wason selection task in which subjects have to choose which card to turn over (to reveal information) in order to decide whether the statement is true or false. The main difference is that on Mynatt et al.’s problem subjects are restricted to one choice, whereas subjects on the Wason selection task can choose all or any cards. The correct choice would appear to be cell B because information can only be diagnostic, in a Bayesian approach, if it gives the likelihood ratio of two hypotheses, i.e. Prob(D1/H1)/Prob(D1/H2). However, the classic finding is that subjects reason pseudo-diagnostically, by seeking more information about the hypothesis under consideration, in this case from cell C, giving Prob(D2/H1). For example, in Experiment 1 of the Mynatt et al. paper, choices were: B 28%, C 59%, and D 13%.
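Why cell B is the diagnostic choice can be seen in a short worked sketch. The value 0.65 for Prob(D1/H1) is the figure given to subjects in Experiment 1 of Mynatt et al.; the three values tried for Prob(D1/H2) and the equal prior of 0.5 are hypothetical, chosen by us only to show how the conclusion depends on cell B.

```python
def posterior_h1(prior_h1, p_d_given_h1, p_d_given_h2):
    """Bayes' rule for two mutually exclusive and exhaustive hypotheses."""
    numerator = prior_h1 * p_d_given_h1
    return numerator / (numerator + (1 - prior_h1) * p_d_given_h2)


cell_a = 0.65  # Prob(D1/H1): proportion of X cars doing over 25 mpg
prior = 0.5    # assumed equal prior belief in X and Y

# Hypothetical values for cell B, Prob(D1/H2)
for cell_b in (0.35, 0.65, 0.95):
    likelihood_ratio = cell_a / cell_b
    print(cell_b, round(likelihood_ratio, 2), round(posterior_h1(prior, cell_a, cell_b), 2))
```

The same cell A supports car X, is neutral, or supports car Y depending on what cell B turns out to be. Cell C, by contrast, says nothing about how well the first datum discriminates between the two hypotheses.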

In discussing this effect, Mynatt et al. put forward an important psychological hypothesis. They argue that subjects can only think about one hypothesis at a time, or, putting it more generally, only entertain one mental model of a possible state of affairs at once. This notion of highly selective focus of thinking is of course compatible with our general theoretical approach. In support of their claim, Mynatt et al. compare the above problem with a similar one in which subjects have to decide instead on which car they would choose to buy: an action as opposed to inference version of the problem. This task does not require hypothetical reasoning about alternative states of the world, and so the authors suggest will be less susceptible to pseudo-diagnostic reasoning. (There is an analogy here to the distinction between the indicative and deontic versions of the Wason selection task, to be discussed in Chapter 4.) Choices in the action version were B 51%, C 40%, and D 9%.

Taking the effect of relevance into account, we would say that, in the inference problem, hypothesis 1 (car X) is foregrounded by presentation of information A. This relates to hypothesis 1, directing attention to it and so leading to choice C. A slightly different interpretation of the shift of responding in the action version, and one more in line with relevance effects, is that in this version the attribute of fuel consumption becomes foregrounded, leading to the greater frequency of B choices. The preamble to this problem reads:

You are thinking of buying a new car. You’ve narrowed it down to either car X or car Y. Two of the things you are concerned about are petrol consumption and mechanical reliability.

The wording here indicates the concern with the attributes. When the information A is presented, petrol consumption is given pragmatic prominence, and attention is focused on it rather than mechanical reliability. Mynatt et al. assume that the proportion choosing B on this task is simply a function of their different utility judgements for the two dimensions. Because they did not have a condition in which A described reliability information, we cannot tell whether this is right. It is plausible, however, that a significant difference in utility between the two attributes could cause people to focus on the one with the greater value, whichever attribute was given in A. In any case, consider now the preamble to the inference version:

Your sister bought a car a couple of years ago. It’s either a car X or a car Y, but you can’t remember which. You do remember that her car does over 25 miles per gallon and has not had any major mechanical problems in the two years since she owned it.

This is quite different psychologically, because instead of stressing the dimensions of fuel consumption and reliability, specific information about the car is given. This encourages subjects to form a concrete mental model of the sister’s car. When information A is then presented it is actually the following: 65% of X cars do over 25 miles per gallon.

Because the majority of X cars are like the sister’s, the subject will tend to attach X to the mental model to form the hypothesis that this car is an X, leading to the characteristic choice of C, with more information about X cars. Suppose that the evidence in A disconfirms that the car is an X. This should then lead to subjects attaching Y to the model, forming the hypothesis that the car is a Y, and so choosing predominantly choice B. However, because attention is focused on the attribute of fuel consumption in the action problem, this manipulation should not affect this version. This way of looking at the findings is supported by Experiment 2 of Mynatt et al. in which the information A was: 35% of X cars do over 25 miles per gallon. Choices for the inference version were now B 46%, C 43%, and D 10%, a big shift from C to B compared with Experiment 1, whereas the action choices were B 52%, C 38%, and D 10%, very similar to those of Experiment 1.4

A recent experiment reported by Legrenzi et al. (1993) in their study of focusing effects also required subjects to seek information relevant to a decision. Subjects were asked to imagine themselves as tourists in one of several capital cities and asked to decide in turn whether or not to attend a particular event (sporting event, film, etc.). Subjects were then instructed to request information from the experimenter before making a decision. What happened was that most subjects asked several questions about the event itself, but hardly any about alternative actions that might be available to them. For example, if deciding whether or not to view a film on a one day visit to Rome, subjects asked about the director of the film, price of admission, and so on, but rarely asked about other ways they could spend their day in Rome. This fits with the claims of Mynatt et al. that, due to limitations on working memory, people can only have a representation of one object at a time—or a single mental model. This may not be a severe limitation in practical thought about action if people can focus on what has reasonable utility for them, or on what has been pragmatically given utility by someone else in a piece of discourse. It may, however, be more of a problem when the object is to infer, in theoretical reasoning, which one of two or more hypotheses is most likely to be true. Certainly, accurate deductive reasoning usually requires consideration of multiple mental models, although subjects are known to find this very difficult (see Johnson-Laird & Bara, 1984).

In this chapter, we have started to develop our psychological theory of how people reason and make decisions, building on our preference for a personal, rather than an impersonal, view of rationality. In the previous chapters this was principally an argument concerning the appropriate normative methods for assessing rationality, but the discussion of relevance and selective thinking in the present chapter has added psychological distinctions to the argument. In both the study of reasoning and decision making, psychologists who presuppose an impersonal view of rationality expect people to be rational by virtue of conscious, explicit reasoning. For example, the classic “rational man” of behavioural decision theory makes choices by constructing decision trees in which future events are analysed with utilities and probabilities, and by consciously calculating the action most likely to maximise utility.

What is significant is that people are reasonably good at achieving many of their basic goals, thus appearing to be sensitive, to some extent, to the probabilities and utilities of outcomes. However, this results more from experience, learning, and the application of preconscious heuristics than from conscious reasoning about hypothetical alternatives, and almost never from the explicit representation of probabilities and utilities as numbers. We have discussed a number of sources of evidence in this chapter showing that people have rather little ability to reason in this way. On the Wason selection task, subjects appear to think only about certain cards, and to be influenced not at all by hypothetical reasoning about hidden values; the disjunction effect of Shafir and Tversky shows that people can hesitate to make a decision to perform an action that they may have different reasons for; and the pseudo-diagnosticity effect also suggests that people are only able to think about one hypothesis—or mental model—at a time. It appears that people tend to build a single mental model in their working memories and have little capacity for the consideration of alternative, hypothetical possibilities there.

The reasons that we believe these tight constraints on conscious reasoning to be compatible with rationality1 lie in the power and adaptiveness of what we term relevance processes. These are the essentially preconscious processes responsible for building our mental representations or directing the focus of our attention. We have discussed our general conception of such processes as reflecting computationally powerful and parallel processes of the kind envisaged in neural network or connectionist theories of cognition. We have also emphasised the crucial role of pragmatic principles and mechanisms in the determination of relevance.

We are not dismayed by the experimental evidence of reasoning biases shown in experiments described in this chapter and elsewhere in the literature. Most of these experiments require people to engage in conscious hypothetical reasoning in order to find the correct answers—precisely the weakest area of human cognition in our view. The fallacy is to infer that this will necessarily lead to highly irrational decision making in the everyday world. Many ordinary decisions derive from highly efficient processes that, in effect, conform to the pragmatic and relevance principles discussed in this chapter. In the following two chapters (4 and 5), we will consider the experimental literature on reasoning and decision making from the complementary perspectives of rationality1 and relevance theory. We will show that much behaviour in the laboratory, including that which is normally described as biased, can be viewed as rational1 especially when we assume that normally effective processes are carried over, sometimes inappropriately, into the laboratory setting. We will also continue to emphasise the largely preconscious nature of human thinking and the importance of pragmatically determined relevance. We then address the issues of deductive competence and explicit reasoning in Chapter 6.

1. For an argument that tacit learning in networks is rational in a sense similar to our use of the term rationality1 see Shanks (1995).

2. Jonathan Lowe (personal communication) suggests that we overstate the problem of information overload because the system need only respond to significant changes in the background state. However, to our understanding, the ability to detect and respond to potentially many such changes implies a highly developed, pre-attentive mechanism.

3. We are grateful to Paolo Legrenzi for a useful discussion with us about the connection between the terms “focusing” and “relevance”.

4. Preliminary results from some current research in collaboration between Legrenzi, Evans, & Girotto are indicating support for the relevance interpretation of the Mynatt et al. results advanced here.