Figure 30.1 A Typology of Research Designs

Thad Dunning

Confounding poses pervasive problems in the social sciences. For example, does granting property titles to poor land squatters boost access to credit markets, thereby fostering broad socioeconomic development (De Soto 2000)? To investigate this question, researchers might compare poor squatters who possess land titles to those who do not. However, differences in access to credit markets could in part be due to factors, such as family background, that also make certain poor squatters more likely to acquire titles to their property. Investigators may seek to control for such confounders by comparing titled and untitled squatters with similar family backgrounds. Yet, even within strata defined by family background, there may be other difficult-to-measure confounders, such as determination, that are associated with obtaining titles and that also influence economic and political behaviors. A further difficulty is that conventional quantitative methods for dealing with confounding, such as multivariate regression, require additional modeling assumptions that are essentially unverifiable.

Social scientists have thus sharply increased their use of field and lab experiments and of observational studies such as natural experiments, which may provide a way to address confounding while limiting reliance on the assumptions of conventional quantitative methods (Gerber and Green 2008; Morton and Williams 2010; Dunning 2008a, 2010a). Researchers working on Latin American politics are no exception. In fact, some of the most interesting recent exemplars of this style of research arguably come from Latin America, where natural and field experiments have increasingly been used to investigate questions of broad substantive import. Moreover, these methods are sometimes combined with extensive qualitative fieldwork and contextual knowledge that, as I shall argue below, are in fact indispensable for their persuasive use. Natural and field experiments may therefore provide ready complements to the traditional strengths of many researchers working on Latin American politics.

Yet field and natural experiments can also have significant limitations, which are important to explore. In this chapter, I describe the growing use of these methods in the study of Latin American politics. After introducing an evaluative framework developed in previous research (Dunning 2010a), I ask whether these methods can answer the kinds of “big” causal questions typical of research on Latin American politics, such as the relationship of democratization to redistribution, the causes and consequences of federalist transfers, or the relationship of electoral rules to policy-making. I argue that while these methods may often be insufficient to answer such questions on their own, they can usefully complement other approaches, and they could be employed with greater intellectual profit in a number of substantive domains.

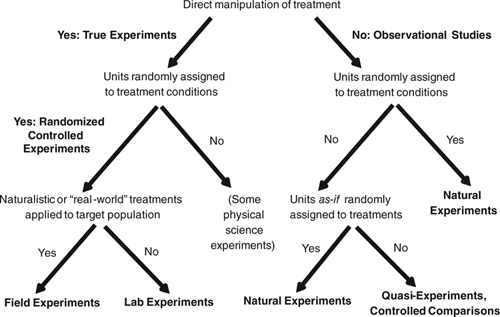

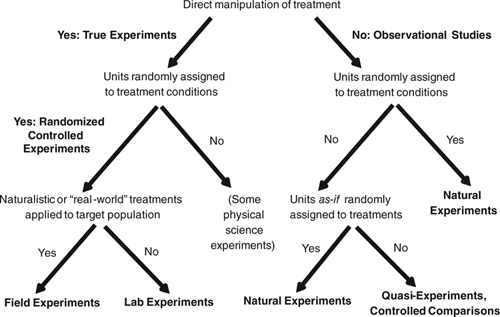

It is useful to begin by distinguishing natural and field experiments from other research designs, such as lab experiments, quasi-experiments, or conventional controlled comparisons. Though regrettable from the point of view of terminological clarity, only two of these labels—field and lab experiments—in fact refer to true experiments; natural experiments, quasi-experiments, and controlled comparisons instead refer to observational studies, a distinction I discuss further below. Figure 30.1 charts the conceptual relationship between these various research designs.

Direct manipulation of treatment conditions is the first hallmark of true experiments (left side of Figure 30.1). Thus, in an experiment to estimate the effects of land titles, researchers would extend land titles to some poor squatters (the treatment group), while other squatters would only retain their de facto claims to plots (the control group).1 Such experimental manipulation of treatments plays a key role in experimental accounts of causal inference (Holland 1986).

In randomized controlled experiments, the second hallmark is randomization, which also plays a crucial role. To continue the example above, in a hypothetical randomized controlled experiment, assignment of squatters to de jure land titles or the status quo property claims would be done through an actual randomizing device, such as a lottery.2 Randomization implies that more determined squatters are just as likely to be assigned to the control group—and continue with their status quo property claims—as they are to go into the treatment group and thereby obtain land titles. Thus, because of randomization, possible confounders such as family background or determination would be balanced across the assigned-to-treatment and assigned-to-control groups, up to random error (Fisher 1935). Large post-titling differences across these groups would then provide reliable evidence for a causal effect of property titles.
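To make the role of randomization concrete, a small simulation can contrast self-selection into titles with assignment by lottery. This is a hypothetical sketch: the outcome scale, the assumed title effect of 1.0, and the way determination drives self-selection are all invented for illustration and do not describe any study discussed here.

```python
import random
import statistics

# Hypothetical simulation: every parameter below is an illustrative assumption.
TRUE_EFFECT = 1.0  # assumed effect of a title on a credit-access index


def simulate(n, assign, seed=0):
    """Return (difference in mean outcomes, difference in mean determination)."""
    rng = random.Random(seed)
    outcomes = {True: [], False: []}
    determination_by_group = {True: [], False: []}
    for _ in range(n):
        determination = rng.gauss(0, 1)  # unobserved confounder
        titled = assign(determination, rng)
        # The outcome depends on determination as well as on the title.
        outcome = 2.0 * determination + TRUE_EFFECT * titled + rng.gauss(0, 1)
        outcomes[titled].append(outcome)
        determination_by_group[titled].append(determination)
    diff = statistics.fmean(outcomes[True]) - statistics.fmean(outcomes[False])
    gap = (statistics.fmean(determination_by_group[True])
           - statistics.fmean(determination_by_group[False]))
    return diff, gap


def self_selection(determination, rng):
    # More determined squatters are more likely to obtain titles.
    return rng.random() < (0.8 if determination > 0 else 0.2)


def lottery(determination, rng):
    # A fair coin flip: assignment ignores determination entirely.
    return rng.random() < 0.5


naive_diff, naive_gap = simulate(20_000, self_selection)
rand_diff, rand_gap = simulate(20_000, lottery)
print(f"self-selection: diff={naive_diff:.2f}, determination gap={naive_gap:.2f}")
print(f"lottery:        diff={rand_diff:.2f}, determination gap={rand_gap:.2f}")
```

Under self-selection, the titled group is more determined on average, so the naive difference in means mixes the title effect with the effect of determination; under the lottery, determination is balanced up to random error and the difference in means lands close to the assumed effect.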

Randomized controlled experiments can be further classified as field experiments or lab experiments.3 Field experiments are randomized controlled experiments in which target populations (or samples thereof) are exposed to naturalistic or “real-world” treatments (bottom-left of Figure 30.1). These are sometimes distinguished from laboratory experiments both by the nature of the experimental stimuli and by the types of units exposed to them: the treatments in lab experiments are sometimes seen as less “naturalistic” and are administered to subjects who may not be drawn from a target population of direct interest.4 However, as Gerber and Green (2008) point out, whether any given study should be thought of as a lab or a field experiment depends on the research question being posed. For example, a canonical lab experiment in psychology or behavioral economics might ask college students to play stylized games as a way to assess attitude formation or decision-making processes. Yet if one seeks to understand the decision-making of college students in abstract economic settings, such lab experiments, in which undergraduates make allocative decisions, could be regarded as field experiments. Notwithstanding these conceptual gray areas, I will describe the randomized controlled experiments discussed in this chapter as field experiments, since they largely involve the exposure of Latin American citizens to real-world stimuli, for instance, corruption allegations against politicians involved in actual political campaigns.5

Such true experiments can be contrasted with observational studies in at least two ways. First, in observational studies there is no experimental intervention or manipulation (right side of Figure 30.1); this is the definitional criterion. Here, the researcher studies the world as she encounters it. Observational studies remain the dominant form of research in most social-science disciplines; one reason for this is that in many political or economic settings, direct experimental manipulation may be expensive or unethical. Moreover, institutional variables and other treatments of interest to political scientists may often not be amenable to direct experimental manipulation.

Second, many observational studies also lack randomization. Standard controlled comparisons such as “matching” designs, for example, do not involve randomization to treatment conditions (bottom-right of Figure 30.1). In the “quasi-experiments” discussed by Campbell and Stanley (1963), non-random assignment to treatments is also a central feature (Achen 1986: 4).6 In many observational studies, subjects self-select into treatment or control groups. For instance, some squatters may exert effort that allows them to obtain land titles, while others do not. Politicians may also choose to extend titles to favored constituents. Such selection processes raise standard concerns about confounding, because squatters who obtain land titles (perhaps by cultivating favors from politicians) may be more determined than those who do not, or may differ in other ways that matter for socioeconomic outcomes. Thus, post-titling differences between titled and untitled squatters are difficult to attribute to the effects of titles: they could be due to the titles, the confounders, or both.

Natural experiments differ from other observational studies in this second respect, however. While there is no direct manipulation by the researcher of assignment to treatment conditions (and thus natural experiments are observational studies), in a natural experiment a researcher can make a credible claim that treatment assignment is random, or as good as random. In some natural experiments, as Figure 30.1 suggests, a lottery or other true randomizing device assigns subjects at random to treatment and control conditions. In this case, the distinction between a randomized controlled experiment and a natural experiment with true randomization concerns only whether the investigator has planned and manipulated a particular treatment, as in a randomized controlled experiment, or has instead found that the treatment was applied at random by policy makers or other actors.7

In other natural experiments, by contrast, social and political processes instead are claimed to have assigned units as-if at random to a key intervention—even though there is no true randomizing device involved. With such natural experiments, much attention should be focused on evaluating the central assumption and definitional criterion of as-if random assignment.

Social scientists increasingly seek to discover and use natural experiments because of their potential usefulness in overcoming standard problems of confounding; another advantage of natural as well as field experiments is that data analysis can be substantially simpler and can rest on more credible assumptions than conventional quantitative tools such as multivariate regression (Dunning 2008a, 2010a; Sekhon 2009). Yet there can be substantial limitations as well, which I discuss below.

Table 30.1 provides a non-exhaustive list of unpublished, forthcoming, or recently published studies of Latin American politics that use natural experiments. The listed studies suggest the breadth of substantive topics that have been investigated using these methods, as well as the range of countries in which such studies have been undertaken. In this section, I survey several recent examples of natural experiments in Latin America, before turning to a discussion of field experiments.

As Table 30.1 suggests, natural experiments come in several types: what I call “standard” natural experiments, as well as specialized types such as regression-discontinuity (RD) designs and instrumental-variables (IV) designs. Standard natural experiments involve random or as-if random assignment to treatment and control conditions but may otherwise encompass a variety of specific designs; the more specific features of RD and IV designs are discussed below. One may also distinguish natural experiments by whether treatment assignment is truly randomized or is only claimed to be as good as random, as noted in the second column of Table 30.1.

An example of a natural experiment with true randomization is the study by De La O (2010), who compares Mexican villages randomly selected to receive conditional cash transfers through the government’s PROGRESA program twenty-one months before the 2000 presidential elections with villages randomly selected to enter the program only six months before the elections. She finds that early enrollment in PROGRESA caused an increase in electoral turnout of 7 percent and an increase in incumbent vote share of 16 percent, a finding that sheds light on the electoral efficacy of federal transfers in this context.

Similarly, Angrist and colleagues (Angrist et al. 2002; Angrist, Bettinger, and Kremer 2006) provide an example of a natural experiment in which authorities used randomized lotteries to distribute vouchers for private schools in Bogotá, Colombia.8 These authors compare school completion rates and test scores among lottery winners, who received vouchers at random, with those of lottery losers, finding that winners were more likely to finish eighth grade as well as secondary school and scored 0.2 standard deviations higher on achievement tests. While the focus here is on the educational impacts of vouchers, researchers could certainly take advantage of such lotteries to ask political questions: for example, how does exposure to private schooling influence political attitudes towards the proper role of government in the economy?
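Comparing all lottery winners with all lottery losers is an intention-to-treat contrast, and effects like “0.2 standard deviations” are differences in means rescaled by a standard deviation. A minimal sketch follows; it assumes the common convention of scaling by the control group's (here, the losers') standard deviation, which may not be the convention used in the studies cited, and the scores are invented.

```python
import statistics


def standardized_itt(winner_scores, loser_scores):
    """Intention-to-treat effect of winning a lottery, in control-group SD units.

    Everyone is compared by lottery outcome, whether or not a winner actually
    used the voucher, so the comparison keeps the balance that the lottery
    provides. Scaling by the losers' standard deviation is an assumed convention.
    """
    itt = statistics.mean(winner_scores) - statistics.mean(loser_scores)
    return itt / statistics.stdev(loser_scores)


# Invented test scores, for illustration only.
winners = [52, 55, 49, 58, 51, 54]
losers = [50, 53, 47, 56, 49, 52]
print(round(standardized_itt(winners, losers), 2))
```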

Other standard natural experiments, however, rely on the assumption of as-if random assignment. Galiani and Schargrodsky (2004) provide an interesting example on the effects of land titling in Argentina. In 1981, a group of squatters organized by the Catholic Church occupied an urban wasteland in the province of Buenos Aires, dividing the land into similar-sized parcels that were allocated to individual families. A 1984 law, adopted after the return to democracy in 1983, expropriated this land with the intention of transferring title to the squatters. However, some of the original owners then challenged the expropriation in court, leading to long delays in the transfer of titles to their parcels, while titles to the other parcels were ceded and transferred to squatters immediately.

The legal action therefore created a “treatment” group—squatters to whom titles were ceded immediately—and a control group—squatters to whom titles were not ceded. Galiani and Schargrodsky (2004) find significant differences across the treatment and control groups in average housing investment, household structure, and educational attainment of children—though not in access to credit markets, which contradicts De Soto’s theory that the poor will use titled property to collateralize debt.

Yet was the assignment of squatters to these treatment and control groups really as good as random, as must be true in a valid natural experiment? In 1981, these authors assert, neither squatters nor Catholic Church organizers could have successfully predicted which particular parcels would eventually have their titles transferred in 1984 and which would not. On the basis of extensive interviews and other qualitative fieldwork, the authors argue convincingly that idiosyncratic factors explain the decision of some owners to challenge expropriation; moreover, the government offered very similar compensation in per-meter terms to the original owners in both the treatment and the control groups, which helps make the assertion of as-if random assignment compelling. Galiani and Schargrodsky (2004) also show that pre-treatment characteristics of squatters, such as age and sex, as well as characteristics of the parcels themselves, such as distance from polluted creeks, are statistically unrelated to the granting of titles, just as they would be, in expectation, if squatters were truly assigned titles at random. The natural experiment therefore plays a key role in making causal inferences persuasive: without it, confounders could explain ex post differences between squatters with and without titles.

In regression-discontinuity (RD) designs, assignment to treatment is determined by the value of a covariate, sometimes called a forcing variable; there is a sharp discontinuity in the probability of receiving treatment at a particular threshold value of this covariate (Campbell and Stanley 1963: 61–64; Rubin 1977).9 For example, Thistlethwaite and Campbell (1960) compared students who scored just above the qualifying score on a national merit scholarship program, and thus received public recognition for their scholastic achievement, with those who scored just below the required score and thus did not receive recognition from the scholarship program. Because there is an element of unpredictability and luck in exam performance, and so long as the threshold is not manipulated ex post to include particular exam-takers, students just above the threshold are likely to be very similar to students just below it. Comparisons of students just above and just below the critical threshold may thus be used to estimate the effect of public recognition of scholastic achievement among this group of students.
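The core comparison in a sharp RD design can be sketched as a difference in mean outcomes within a narrow window on either side of the threshold. This is a hypothetical illustration: the qualifying score of 70, the assumed jump of 5 points, the smooth slope of 0.3, and the bandwidth are all invented.

```python
import random
import statistics


def rd_difference_in_means(units, threshold, bandwidth):
    """Compare mean outcomes just above vs. just below a threshold.

    `units` is a list of (forcing_value, outcome) pairs; treatment is assumed
    to be assigned exactly when forcing_value >= threshold (a sharp design).
    """
    above = [y for x, y in units if threshold <= x < threshold + bandwidth]
    below = [y for x, y in units if threshold - bandwidth <= x < threshold]
    return statistics.fmean(above) - statistics.fmean(below)


# Hypothetical data: the outcome rises smoothly with the score, plus an
# assumed jump of 5 points for units at or above the qualifying score of 70.
rng = random.Random(1)
data = []
for _ in range(50_000):
    score = rng.uniform(0, 100)
    outcome = 0.3 * score + (5.0 if score >= 70 else 0.0) + rng.gauss(0, 2)
    data.append((score, outcome))

print(round(rd_difference_in_means(data, threshold=70, bandwidth=1.0), 1))
```

Narrowing the bandwidth shrinks the bias from the underlying slope (roughly 0.3 times the bandwidth in this setup) but leaves fewer units in each window; local linear regression on each side of the threshold is a common refinement.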

As Table 30.1 suggests, regression-discontinuity designs have found especially wide use in recent work on Latin American politics. Fujiwara (2009) and Hidalgo (2010), for instance, use regression-discontinuity designs to study the impact of electronic voting on de facto enfranchisement, patterns of partisan support, and fiscal policy in Brazil. In the 1998 elections, municipalities with more than 40,500 registered voters used electronic ballots, while municipalities with fewer than 40,500 voters continued to use traditional paper ballots. The electronic voting machines displayed candidates’ names, party affiliations, and photographs; this was thought to ease the voting process for illiterate voters in particular. Municipalities “just above” and “just below” the threshold of 40,500 registered voters should on average be highly similar; indeed, since the threshold was announced in May of 1998 and the number of registered voters was recorded in the municipal elections of 1996, municipalities should not have been able to manipulate their position in relation to the threshold. Nor is there any evidence that the particular threshold was chosen to include or exclude particular municipalities. (The threshold was applied uniformly throughout the country, with the exception of four states.) Thus, comparisons around the threshold can plausibly be used to estimate the impact of the voting technology.

Hidalgo (2010) and Fujiwara (2009) find that the introduction of electronic voting increased the effective franchise in legislative elections by about 13–15 percentage points, or about 33 percent, a massive effect that appears more pronounced in poorer municipalities with higher illiteracy rates. While Fujiwara (2009) finds that this de facto enfranchisement had a large and positive effect on votes received by the Workers’ Party (PT) and presents some evidence that states in which a larger proportion of the electorate voted electronically had more progressive fiscal policies, Hidalgo (2010) finds that expansion of the franchise did not greatly tilt the ideological balance in the national Chamber of Deputies and may even have favored center-right candidates, on average. Yet the voting reforms also raised the proportion of voters who voted for party lists only (rather than choosing individual candidates, as allowed under Brazil’s open-list proportional representation electoral rules) and also boosted the vote shares of parties with clear ideological identities. Moreover, the introduction of voting machines led to substantial declines in the vote shares of incumbent “machine” parties in several northeastern states. Hidalgo (2010) suggests that the introduction of voting machines contributed to the strengthening of programmatic politics in Brazil, an outcome noted by many scholars studying the country. Here, then, is an example of a natural-experimental study that contributes decisively to a very “big” question of broad importance in developing democracies: the sources of transitions from more clientelistic to more programmatic forms of politics.
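Taking the rounded figures at face value, and assuming both describe the same change, a percentage-point change and a percent change together imply the baseline level against which they are measured. This is only arithmetic on the numbers as reported here, not a figure taken from either study:

$$
\text{baseline} \approx \frac{\Delta\ (\text{percentage points})}{\text{relative change}} = \frac{13 \text{ to } 15}{0.33} \approx 39 \text{ to } 45 \text{ percent}
$$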

Regression-discontinuity research designs have also been used extensively to study the economic and political impacts of federal transfers in Latin America. Green (2005), for example, calculates the electoral returns of the Mexican conditional cash-transfer program, PROGRESA (the same program studied by De La O 2010), using a regression-discontinuity design based on a municipal poverty ranking. Similarly, Manacorda, Miguel, and Vigorito (2009) use a discontinuity in program assignment based on a pre-treatment eligibility score to study the effects of cash transfers on support for the incumbent Frente Amplio government in Uruguay. They find that program beneficiaries are much more likely than non-beneficiaries to support the incumbent, by around 11 to 14 percentage points. Litschig and Morrison (2009) study the effect of federal transfers on municipal incumbents’ vote shares in Brazil, while Brollo, Nannicini, Perotti, and Tabellini (2010) study the effect of such transfers on political corruption and on the qualities of political candidates. Both of these latter studies take advantage of the fact that the size of some federal transfers in Brazil depends on given population thresholds, so they can construct regression-discontinuity designs in which municipalities just on either side of the relevant thresholds are compared.

A different kind of regression-discontinuity design, which has also found growing use in Latin America, takes advantage of the fact that in very close and fair elections, there is an element of luck and unpredictability in the outcome; thus, underlying attributes of near-winners may not differ greatly from near-losers. As Lee (2008) suggested in his study of the U.S. Congress, this may allow for natural-experimental comparisons: since near-winners and near-losers of close elections should be nearly identical, on average, comparisons of these groups after an election can be used to estimate the effects of winning office.
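In close-election designs, the forcing variable is a party's signed margin of victory and the threshold is a margin of zero. A hypothetical sketch of how near-winners and near-losers might be selected, assuming vote shares are available for every race; the race data, party labels, and bandwidth are invented.

```python
def party_margin(vote_shares, party):
    """Signed margin of victory for `party` in one race.

    Positive if the party won (its share minus the runner-up's), negative if
    it lost (its share minus the winner's). Zero is the RD threshold.
    """
    others = [share for p, share in vote_shares.items() if p != party]
    return vote_shares[party] - max(others)


def close_races(races, party, bandwidth):
    """Return (near_winners, near_losers): races with 0 < |margin| < bandwidth."""
    near_winners, near_losers = [], []
    for race_id, shares in races.items():
        if party not in shares:
            continue
        margin = party_margin(shares, party)
        if 0 < margin < bandwidth:
            near_winners.append(race_id)
        elif -bandwidth < margin < 0:
            near_losers.append(race_id)
    return near_winners, near_losers


# Hypothetical municipal races (vote shares); all labels are invented.
races = {
    "A": {"X": 0.51, "Y": 0.49},
    "B": {"X": 0.48, "Y": 0.40, "Z": 0.12},
    "C": {"X": 0.30, "Y": 0.70},
    "D": {"X": 0.49, "Y": 0.51},
}
print(close_races(races, party="X", bandwidth=0.03))
```

Races with a margin of exactly zero are dropped here; how to treat ties, and how wide the bandwidth should be, are design choices the sketch does not settle.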

For instance, Titiunik (2009) studies the incumbency advantage of political parties in Brazil’s municipal mayor elections, comparing municipalities where a party barely lost the 2000 mayor elections to municipalities where it barely won. Contrary to findings in the United States, she finds evidence of a strong negative effect of incumbency on both the vote share and the probability of winning in the following election; the significant estimated effect sizes range from around negative 4 percentage points of the vote share for the Liberal Front Party (PFL) to around negative 19 percentage points for the Party of the Brazilian Democratic Movement (PMDB).

Using a related research design, Boas, Hidalgo, and Richardson (2010) study the effect of campaign contributions on government contracts received by donors. In their working paper, these authors compare the returns to donations made to near-winners and near-losers of campaigns, showing that public-works companies that rely on government contracts may receive a substantial monetary return on electoral investments. The effect size is striking: public-works firms that donate to winners receive about 2,300 times their donation in additional federal contracts.

Next, in a study of the relationship between political incumbency and media access, Boas and Hidalgo (2010) show that near-winners of city council elections are much more likely than near-losers to have their applications for community radio licenses approved by the federal government, a finding that reinforces previous research on the political control of the media in Brazil. Finally, Brollo and Nannicini (2010) use an RD design to study the effect of partisan affiliation on federal transfers to municipalities in Brazil, comparing winners and losers of close elections and stratifying on whether the winner is a member of the president’s coalition.

Regression-discontinuity designs have also been used to study the political and economic impact of electoral rules. For instance, Fujiwara (2008) exploits the fact that the Brazilian Federal Constitution requires municipalities with fewer than 200,000 registered voters to elect their mayors under a single-ballot plurality rule (a first-past-the-post system in which the candidate with the most votes is elected), while municipalities with more than 200,000 voters must use a dual-ballot plurality rule (a second-round “runoff” system, in which voters may vote twice). He finds that in the neighborhood of this threshold, the change from single-ballot to runoff systems increases voting for third-place finishers and decreases the difference between third-place and first- and second-place finishers, a finding consistent both with strategic voting and with the observation of Duverger (1954) and Cox (1997) that in elections for m seats, m+1 candidates should command most of the votes. Chamon, de Mello, and Firpo (2009) extend this same idea, finding that the greater political competition induced by the discontinuous change in electoral rules at the threshold of 200,000 voters increases investment and reduces current expenditures, particularly personnel expenditures. Finally, Ferraz and Finan (2010) take advantage of a constitutional amendment in Brazil that caps the wages of local legislators as a function of municipal population size. Using this rule to construct an RD design, they find that higher wages increase legislative productivity and political entry but also increase reelection rates among incumbent politicians.

A final kind of natural experiment worthy of mention is the instrumental-variables (IV) design. Consider the challenge of inferring the impact of a given independent variable on a particular dependent variable—where this inference is made more difficult, given the strong possibility that reciprocal causation or omitted variable bias may pose a problem for causal inference. The solution offered by the IV design is to find an additional variable—an instrument—that is correlated with the independent variable but could not be influenced by the dependent variable or correlated with its other causes. That is, the instrumental variable is treated as if it “assigns” units to values of the independent variable in a way that is as-if random, even though no explicit randomization occurred.

For this IV approach to be valid, several conditions must typically hold. First, and crucially, assignment to the instrument must be random or as-if random. Second, while the instrument must be correlated with the treatment variable of interest, it must not independently affect the dependent variable, above and beyond its effect on the treatment variable. For further discussion of these and other assumptions, see Sovey and Green (2011) or Dunning (2008b).
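With one instrument, the simplest IV estimator (often called the Wald estimator) divides the covariance of the instrument with the outcome by its covariance with the treatment variable. The simulation below is a hypothetical sketch loosely patterned on the rainfall example discussed next: the variable names, the coefficients, and the assumed negative effect of growth on invasions are invented, and the instrument is built to satisfy the as-if random and exclusion conditions by construction.

```python
import random
import statistics


def covariance(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def iv_estimate(z, x, y):
    """IV (Wald) estimator with a single instrument: cov(z, y) / cov(z, x)."""
    return covariance(z, y) / covariance(z, x)


def ols_slope(x, y):
    """Bivariate least-squares slope, for comparison."""
    return covariance(x, y) / covariance(x, x)


# Hypothetical data-generating process; every coefficient is an assumption.
TRUE_EFFECT = -0.5  # assumed effect of growth on land invasions
rng = random.Random(2)
z, x, y = [], [], []
for _ in range(50_000):
    rain = rng.gauss(0, 1)          # instrument: an as-if random shock
    institutions = rng.gauss(0, 1)  # unmeasured confounder
    growth = 0.8 * rain + 1.0 * institutions + rng.gauss(0, 1)
    invasions = TRUE_EFFECT * growth + 1.5 * institutions + rng.gauss(0, 1)
    z.append(rain)
    x.append(growth)
    y.append(invasions)

print(f"OLS: {ols_slope(x, y):.2f}  IV: {iv_estimate(z, x, y):.2f}")
```

In this setup the unmeasured confounder pushes the least-squares slope far from the assumed effect (here it even has the wrong sign), while the IV estimate recovers it, because rainfall is independent of institutions and affects invasions only through growth.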

One example of an IV design in research on Latin America is Hidalgo, Naidu, Nichter, and Richardson (2010), who study the effects of economic growth on land invasions in Brazil. Arguing that reverse causality or omitted variables could be a concern—for instance, land invasions could influence growth, and unmeasured institutions could influence both growth and invasions—these authors use rainfall growth as an instrumental variable for economic growth.10 The idea is that annual changes in rainfall provide as-if random shocks to economic growth. The authors find that decreases in growth, instrumented by rainfall, indeed encourage land invasions.

This application illuminates characteristic strengths and limitations of IV designs. Rainfall certainly appears to affect growth, as the IV approach requires; yet rainfall may or may not influence land invasions only through its effect on growth, which is also required for the approach to be valid. Any direct effect of rainfall (for instance, if floods make it harder to organize invasions) would violate the assumptions of the instrumental-variables regression model. Variation in rainfall may also influence growth only in particular sectors, such as agriculture, and growth in distinct economic sectors may have idiosyncratic effects on the likelihood of invasions (Dunning 2008b).
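The consequence of a direct rainfall effect can be illustrated with a simulation in which rain also affects invasions on its own. This is a hypothetical sketch: the true growth effect of -0.5, the first-stage coefficient of 0.8, and the direct effect of -0.3 (standing in for the flood scenario) are assumptions. With a single instrument, the IV estimate then converges to the true effect plus the direct effect divided by the first-stage coefficient, here -0.5 + (-0.3 / 0.8) = -0.875.

```python
import random
import statistics


def iv_estimate(z, x, y):
    """IV (Wald) estimator with a single instrument: cov(z, y) / cov(z, x)."""
    mz, mx, my = statistics.fmean(z), statistics.fmean(x), statistics.fmean(y)
    czy = sum((a - mz) * (b - my) for a, b in zip(z, y))
    czx = sum((a - mz) * (b - mx) for a, b in zip(z, x))
    return czy / czx


def simulate(direct_effect, n=50_000, seed=3):
    """Hypothetical DGP in which rain may also affect invasions directly."""
    rng = random.Random(seed)
    z, x, y = [], [], []
    for _ in range(n):
        rain = rng.gauss(0, 1)
        institutions = rng.gauss(0, 1)
        growth = 0.8 * rain + institutions + rng.gauss(0, 1)
        # direct_effect != 0 violates the exclusion restriction.
        invasions = (-0.5 * growth + 1.5 * institutions
                     + direct_effect * rain + rng.gauss(0, 1))
        z.append(rain)
        x.append(growth)
        y.append(invasions)
    return iv_estimate(z, x, y)


valid = simulate(direct_effect=0.0)      # exclusion restriction holds
violated = simulate(direct_effect=-0.3)  # e.g. floods hinder organizing
print(f"valid: {valid:.2f}  violated: {violated:.2f}  (assumed truth: -0.50)")
```

Nothing in the data flags the problem: the violated case still produces a precise-looking estimate, which is why the exclusion restriction has to be defended on substantive grounds.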

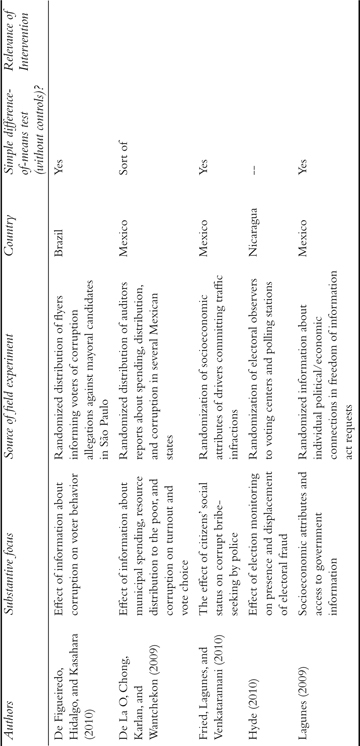

Other studies of Latin American politics have instead deployed field experiments, in which the manipulation is under investigators’ control. Recent field experiments on Latin American politics have also addressed a variety of substantive questions. However, as the shorter list in Table 30.2 (compared to Table 30.1) may imply, it is fair to say that this methodological approach is in its infancy in this substantive realm.11

Table 30.2 Field Experiments in the Study of Latin American Politics

For instance, do voters hold politicians accountable when exposed to information about politicians’ performance in office? To investigate this question, De Figueiredo, Hidalgo, and Kasahara (2010) sent flyers to voters in randomly selected precincts during the 2008 mayoral elections in São Paulo, describing the corruption convictions of the center-right candidate (first treatment) or of the center-left candidate (second treatment). They then compared electoral outcomes in these sets of precincts with those in precincts where no flyers were distributed (the control), finding that information about corruption convictions reduced turnout and the vote share of the center-left candidate, but had no such effect for the center-right candidate.
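A design with two treatment arms and a control can be implemented by shuffling the list of units and dealing them into arms. The sketch below is hypothetical: the precinct IDs, the number of precincts, the equal arm sizes, and the seed are invented, and it is not the procedure used in the study.

```python
import random


def assign_arms(units, arms, seed):
    """Complete random assignment of units to arms in (near-)equal numbers.

    Shuffles a copy of the unit list with a seeded generator, then deals the
    units round-robin into arms, so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    return {unit: arms[i % len(arms)] for i, unit in enumerate(shuffled)}


# Hypothetical precinct IDs; the arm labels mirror the design described above.
precincts = [f"precinct-{k:03d}" for k in range(300)]
arms = ["control", "flyer: center-right", "flyer: center-left"]
assignment = assign_arms(precincts, arms, seed=2008)
counts = {arm: sum(1 for a in assignment.values() if a == arm) for arm in arms}
print(counts)
```

Fixing the seed makes the assignment reproducible and auditable, which is useful when the randomization must be documented before outcomes are observed.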

Similarly, Chong, De La O, Karlan, and Wantchekon (2011) randomly assigned precincts in several Mexican states to receive information about municipalities’ overall spending, distribution of resources to the poor, and corruption, or to receive no such information. Like De Figueiredo et al. (2010), they found that corruption information suppressed turnout in municipal elections, while expenditure information increased electoral participation and incumbent parties’ vote share.

Other field experiments in Latin America have investigated how wealth or political connections may affect citizens’ access to key services of the state. For example, Fried, Lagunes, and Venkataramani (2010) employed an experiment in Mexico City, in which automobile drivers committed identical traffic infractions but socioeconomic characteristics of the drivers and their cars were varied at random; they found some evidence that officers solicited bribes disproportionately from poorer individuals. Lagunes (2009), on the other hand, found that requests for documents under Freedom of Information laws in Mexico were about equally attended to, regardless of any information provided about the wealth and political connections of applicants.

Finally, Hyde (2010) randomly assigned election monitors to voting centers, as well as to polling stations within those centers, in the 2006 Nicaraguan general elections. The experimental design allowed her to detect whether electoral monitoring suppressed fraud (by comparing centers with monitors to those without monitors) or displaced fraud (by comparing polling stations with and without monitors, within voting centers assigned to monitoring). Hyde did not find strong evidence of either suppression or displacement in this election.
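The two-level logic of this design can be sketched as follows. This is a hypothetical illustration: the IDs, the 50 percent assignment probabilities, and the use of independent coin flips at each level are assumptions, not a description of how the study drew its assignments.

```python
import random


def two_stage_assignment(centers, p_center, p_station, seed):
    """Hypothetical two-level randomization of election monitors.

    `centers` maps each voting-center ID to a list of polling-station IDs.
    Stage 1 assigns each center to monitoring with probability `p_center`;
    stage 2 assigns stations to monitors with probability `p_station`, but
    only inside centers selected at stage 1.
    Returns {station: (center, center_monitored, station_monitored)}.
    """
    rng = random.Random(seed)
    plan = {}
    for center, stations in centers.items():
        center_monitored = rng.random() < p_center
        for station in stations:
            station_monitored = center_monitored and rng.random() < p_station
            plan[station] = (center, center_monitored, station_monitored)
    return plan


# Invented IDs: 20 centers with 4 stations each.
centers = {f"C{c}": [f"C{c}-S{s}" for s in range(4)] for c in range(20)}
plan = two_stage_assignment(centers, p_center=0.5, p_station=0.5, seed=2006)

# Suppression contrast: stations in monitored vs. unmonitored centers.
in_monitored = [s for s, (_, cm, _) in plan.items() if cm]
in_unmonitored = [s for s, (_, cm, _) in plan.items() if not cm]
# Displacement contrast: within monitored centers, stations with vs. without monitors.
with_monitor = [s for s in in_monitored if plan[s][2]]
without_monitor = [s for s in in_monitored if not plan[s][2]]
print(len(in_monitored), len(in_unmonitored), len(with_monitor), len(without_monitor))
```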

Beyond these natural and field experiments, other experimental work in Latin America can also be mentioned. Of particular interest is Gonzalez-Ocantos, Kiewiet de Jonge, Meléndez, Osorio, and Nickerson (2010), who estimate the incidence of vote-buying using a list experiment embedded in a survey.12 This technique provides an innovative way to overcome problems of social desirability bias in studies of vote-buying and indicates that the prevalence of this practice may be much greater than traditional surveys suggest. Dunning (2010b) uses an experiment to compare the effects of candidate race and class on voter preferences in Brazil, while Finan and Schechter (2010) use survey-experimental measures of reciprocity and survey data on vote-buying to argue that individuals with greater internalized norms of reciprocity (who are thus less likely to renege on implicit vote-buying contracts) are disproportionately targeted for clientelist transfers in Paraguay. Finally, Desposato (2007) uses an experiment to study the effects of positive and negative campaigning, comparing the responses of survey respondents to videos containing negative, positive, or no campaign messages and finding strong effects on vote and turnout intentions.
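The basic list-experiment estimate reduces to a difference in means. A minimal sketch, assuming respondents are randomly assigned to the short (control) or long (treatment) list and report their item counts truthfully; the counts are invented, and the sensitive item is only an example.

```python
import statistics


def list_experiment_prevalence(treatment_counts, control_counts):
    """Estimated share answering 'yes' to the sensitive item.

    Control respondents see J non-sensitive items; treatment respondents see
    the same J items plus the sensitive one (e.g. receiving a gift for a vote).
    Respondents report only HOW MANY items apply, so the difference in mean
    counts estimates the prevalence of the sensitive item.
    """
    return statistics.mean(treatment_counts) - statistics.mean(control_counts)


# Hypothetical item counts, invented for illustration.
control = [1, 2, 2, 1, 3, 2, 1, 2]
treatment = [2, 2, 3, 1, 3, 2, 2, 2]
print(round(list_experiment_prevalence(treatment, control), 3))
```

Because no individual ever reveals which items apply, the estimate is only available in aggregate, which is the price paid for reducing social desirability bias.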

The growing use of field and natural experiments in the study of Latin American politics raises the questions: What are their strengths and limitations? Can these methods make important contributions to answering the broad substantive questions that tend to animate scholars of Latin American politics?

Elsewhere, I have suggested three criteria along which natural experiments (as well as other research designs) may be evaluated: the plausibility of as-if random assignment, the credibility of statistical models, and the substantive relevance of the intervention (Dunning 2010a). In Latin America as elsewhere, specific field and natural experiments may vary with respect to these three criteria. Tradeoffs between these dimensions may routinely arise in any particular study, and good research can be understood as the process of managing these tradeoffs astutely.

With respect to the first criterion—the plausibility of as-if random assignment—field experiments tend to be very strong, barring some failure of randomization. So do natural experiments with true randomization, such as the Colombia voucher studies. Strong regression-discontinuity designs and some “standard” natural experiments (such as the Argentina land titling study) can be quite compelling on these grounds as well.

However, other studies may fall short in this respect, which can undercut the claim that they are natural experiments at all (see Dunning 2008a, 2010a). In an alleged natural experiment, the claim of as-if random assignment should therefore be supported both by the available empirical evidence—for example, by showing that treatment and control groups are statistically equivalent on measured pre-treatment covariates, just as they would likely be with true randomization—and by a priori reasoning and substantive knowledge about the process by which treatment assignment took place.13 As I discuss further below, this kind of knowledge is often gained only through intensive and close-range fieldwork.
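One way to check the statistical equivalence of treatment and control groups on a measured pre-treatment covariate is a randomization (permutation) test: the observed difference in covariate means is compared to the differences produced by every other way the same number of units could have been assigned to treatment. The sketch below assumes complete random assignment of a fixed number of units and enumerates all possible assignments, which is feasible only for small samples; the function name is hypothetical.

```python
from itertools import combinations
from statistics import mean


def balance_permutation_pvalue(covariate, treated_idx):
    """Exact two-sided p-value for the difference in covariate means.

    covariate:   pre-treatment covariate values, one per unit
    treated_idx: indices of the units actually assigned to treatment
    """
    n, k = len(covariate), len(treated_idx)

    def abs_diff(treated):
        t = [covariate[i] for i in treated]
        c = [covariate[i] for i in range(n) if i not in treated]
        return abs(mean(t) - mean(c))

    observed = abs_diff(set(treated_idx))
    # Every assignment of k units to treatment is equally likely under randomization.
    draws = [abs_diff(set(combo)) for combo in combinations(range(n), k)]
    # A small tolerance guards against floating-point ties.
    extreme = sum(d >= observed - 1e-12 for d in draws)
    return extreme / len(draws)


print(balance_permutation_pvalue([1, 2, 3, 4], [0, 3]))  # 1.0
print(balance_permutation_pvalue([1, 2, 3, 4], [2, 3]))  # 0.3333333333333333
```

A large p-value is what one would expect under true randomization; a very small one would cast doubt on the claim of as-if random assignment, though with many covariates a few small p-values will arise by chance alone.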

On the second criterion—the credibility of statistical models—field and natural experiments should in principle be strong as well. After all, random or as-if random assignment should ensure that treatment assignment is statistically independent of other factors that influence outcomes. Thus, the need to adjust for confounding variables is minimal, and a simple difference-of-means test—without control variables—suffices to assess the causal effect of treatment assignment.14
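A minimal sketch of such an unadjusted analysis, assuming two lists of outcome values: the estimator is the difference in mean outcomes, and the standard error shown is the conservative variance estimator commonly associated with the Neyman model mentioned in note 14 (each group's sample variance divided by its size, summed over groups). The function name and numbers are illustrative only.

```python
from math import sqrt
from statistics import mean, variance


def difference_of_means(treated, control):
    """Unadjusted effect estimate and conservative (Neyman) standard error."""
    estimate = mean(treated) - mean(control)
    # Sample variances (n - 1 denominator) of each group, scaled by group size.
    se = sqrt(variance(treated) / len(treated) + variance(control) / len(control))
    return estimate, se


est, se = difference_of_means([3, 5, 7], [1, 2, 3])
print(f"{est:.1f} {se:.3f}")  # 3.0 1.291
```

No control variables enter at any step; in large samples, the ratio of the estimate to its standard error can be compared to a standard normal distribution.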

Unfortunately, this analytic simplicity is not always realized in experimental or natural experimental research. Indeed, many studies analyzing experimental or natural experimental data present only the results of fitting large multivariate regression models, whose statistical assumptions are difficult to explicate and defend, let alone validate, and whose use invites data mining when analysts fit multiple statistical specifications. Studies of Latin American politics are no exception. In the final column of Table 30.1, I indicate whether a simple, unadjusted difference-of-means test is used to evaluate the null hypothesis of no effect of treatment. Although I use quite permissive coding here, only a bare majority of the studies in the table report unadjusted difference-of-means tests, in addition to any auxiliary analyses.15 For further discussion of these points, see Dunning (2010a).
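Note 15 codes a bivariate regression of the outcome on a constant and a treatment dummy as a simple difference-of-means test. The reason is that the ordinary least squares slope in that regression is numerically identical to the difference in group means, as the sketch below checks on toy data (the function names are hypothetical). The caveat in the note concerns the standard errors: the default OLS formula assumes equal error variances in the two groups, whereas the Neyman-style formula does not.

```python
from statistics import mean


def ols_slope_on_dummy(y, d):
    """Slope from regressing y on a constant and a 0/1 dummy d (closed form)."""
    y_bar, d_bar = mean(y), mean(d)
    cov = sum((di - d_bar) * (yi - y_bar) for yi, di in zip(y, d))
    var = sum((di - d_bar) ** 2 for di in d)
    return cov / var


def difference_in_group_means(y, d):
    """Mean of y where d == 1 minus mean of y where d == 0."""
    treated = [yi for yi, di in zip(y, d) if di == 1]
    control = [yi for yi, di in zip(y, d) if di == 0]
    return mean(treated) - mean(control)


y = [3, 5, 7, 1, 2, 3]
d = [1, 1, 1, 0, 0, 0]
print(ols_slope_on_dummy(y, d), difference_in_group_means(y, d))  # 3.0 3
```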

Finally, a third dimension along which research designs may vary involves the theoretical and substantive relevance of the intervention. Roughly speaking, this criterion corresponds to answers to the question: does the intervention assigned by Nature shed light on the effects of a treatment in which we are interested for substantive, policy, or social-scientific purposes? Answers to this question might be more or less affirmative for a number of distinct reasons. For instance, the type of subjects or units exposed to the intervention might be more or less like the populations in which we are most interested (Campbell and Stanley 1963). Next, the particular treatment that has been manipulated by Nature might have idiosyncratic effects that are possibly distinct from the effects of treatments we care about most (Dunning 2008a, 2008b). Finally, natural-experimental interventions (like the interventions in some true experiments) may “bundle” many distinct treatments or components of treatments, limiting the extent to which a natural experiment isolates the effect of a treatment that we care most about for particular substantive or social-scientific purposes. Below, I will comment further on the success of field and natural experiments in Latin America along this dimension.

Underlying all three of these dimensions is a fourth that is crucial for achieving success on the first three dimensions: qualitative methods and substantive knowledge. Indeed, the persuasive use of natural experiments often relies on extensive contextual knowledge—for example, in the Argentina squatters study, on the process by which treatment assignment took place. Case-based knowledge is also often necessary to recognize and validate a potential natural experiment, and the skills of many qualitative researchers are well-suited to the implementation of field experiments. In both field and natural experiments, “causal process observations” (Collier, Brady, and Seawright 2010) can enrich understanding of both mechanisms and outcomes, perhaps through the kind of “experimental ethnography” recommended by Sherman and Strang (2004; see Paluck 2008).16 Indeed, the act of collecting the original data used in natural and field experiments—rather than using off-the-shelf data, as is often the case in conventional quantitative analysis—virtually requires scholars to do fieldwork in some form, which may make scholars aware of the process of treatment assignment and of details that may be important for interpreting causal effects.17 Many fieldwork-oriented researchers working in Latin America may thus be well-positioned to exploit natural and field experiments as one methodological tool in an overall research program.

Research on Latin American politics has often raised important substantive questions that end up stimulating research on other regions as well—such as the sources of democratic breakdown and democratization, the causes and consequences of federalism, or the relationship between political parties and social movements. It is therefore natural for a survey of natural and field experiments in Latin America to ask: can these methods help answer such “big” questions? In this respect, assessing the substantive relevance of the intervention—the third dimension of the evaluative framework discussed above—seems particularly relevant for studies of Latin American politics.

A first point to make in this respect is that many of the studies discussed above address topics that are far from trivial. For instance, these studies investigate such questions as the relationship of voting technologies to de facto enfranchisement of illiterates (Fujiwara 2009); the impact of electoral rules on policy-making (Fujiwara 2008; Chamon et al. 2009); the effect of federal transfers on support for political incumbents (De La O 2010; Manacorda et al. 2009); and the economic returns to campaign donations (Boas et al. 2010). They also estimate the party-based electoral advantage provided by incumbency (Titiunik 2009) and investigate other topics that may be key for democratic “deepening,” such as the ability of voters to hold politicians accountable for corrupt acts (De Figueiredo, Hidalgo, and Kasahara 2010; Chong et al. 2011). These are important topics, and investigating them empirically has previously posed considerable challenges: after all, confounding factors are typically associated with electoral rules, federal transfers, or campaign donations, which makes it difficult to infer the causal effects of such variables. Thus, it seems that these field and natural experiments usefully contribute to the study of the topics discussed by other contributors to this volume, such as the role of political parties in Latin America (chapter 4), the causes and consequences of decentralization and federalism (chapter 3), and the nature of accountability and representation (chapter 8).

Yet, my survey of the recent rise of natural and field experiments in Latin America also suggests some weaknesses. For example, one apparent limitation is the absence to date of large-scale comparison across the various “micro” studies discussed here. Notice, for instance, that all of the studies listed in Table 30.1 are single-country studies.18 This is not necessarily bad for all research questions. After all, within-country comparisons have been praised for a number of different reasons (Snyder 2001), and they are increasingly prevalent in comparative politics. Yet, the apparent “one-off” character of some of these experimental and natural experimental studies may limit the extent to which their results are integrated in an explicitly comparative framework.

It is important to point out that this defect is not inherent in these methods. Indeed, the results from some of the separate studies listed in Table 30.1 could allow for interesting comparisons across countries. For instance, Manacorda et al.’s (2009) study of the electoral effects of government transfers in Uruguay is similar to a host of studies on the political impact of PROGRESA in Mexico (Green 2005; De La O 2010), as well as of conditional cash-transfer programs in other countries. In principle, one could also replicate the field experiments listed in the table across multiple countries. Of course, rigorous causal inferences may not be easy to draw from divergent results across countries, since many factors vary from one context to another. Yet, interesting insights might nonetheless be obtained from integrating experiments or natural experiments in a more systematically comparative framework.

This chapter’s survey also suggests that while analysts increasingly employ several particular kinds of natural experiments—such as regression-discontinuity designs in which near-winners and near-losers of elections are compared—there may also be many ways to extend such designs in interesting and novel directions, as in the RD study by Boas, Hidalgo, and Richardson (2010) on the economic returns to campaign contributions in Brazil. In addition, many distinct kinds of “standard” natural experiments (i.e., those in which a regression-discontinuity or instrumental-variables strategy is not used) may await researchers alert to their possible existence. The published, forthcoming, and working papers listed in Table 30.1 may represent only the beginning of efforts to use such methods, together with other approaches, to investigate substantively important topics.
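In a close-election regression-discontinuity design, one transparent analysis (in keeping with note 9's point that regression modeling need not be the best way to analyze RD data) compares mean outcomes for near-winners and near-losers within a narrow window around the zero vote-margin threshold. The sketch below assumes a signed win margin for each unit (positive for winners) and an analyst-chosen bandwidth `h`; the function and the toy numbers are hypothetical, and in practice the choice of `h` trades off comparability of the two groups against the number of observations.

```python
from statistics import mean


def close_election_contrast(margins, outcomes, h):
    """Mean outcome of near-winners minus near-losers with |margin| < h.

    Units with a margin of exactly zero (ties) are excluded from both groups.
    """
    winners = [y for m, y in zip(margins, outcomes) if 0 < m < h]
    losers = [y for m, y in zip(margins, outcomes) if -h < m < 0]
    if not winners or not losers:
        raise ValueError("bandwidth leaves an empty group")
    return mean(winners) - mean(losers)


margins = [-0.10, -0.02, -0.01, 0.01, 0.03, 0.20]
outcomes = [10, 4, 6, 9, 11, 50]
print(close_election_contrast(margins, outcomes, h=0.05))  # 5
```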

In sum, the increasing use of natural and field experiments does appear promising in many ways. After all, strong research design can play a vital role in bolstering valid causal inference. Random or as-if random assignment to treatment plays a key role in overcoming pervasive issues of confounding. Strong research designs also permit simple and transparent data analysis, at least in principle if not always in practice. Finally, field and natural experiments can sometimes be used to address big, important questions, though the extent to which an intervention is deemed theoretically relevant may depend on the nature of the question and the state of scientific progress in answering it.

Yet, research design alone is also rarely sufficient. Many modes of inquiry seem to be involved in successful causal inference, and field and natural experiments may be most likely to be useful when combined with other methods, as one part of an overall research agenda. For scholars of Latin American politics, particularly those oriented towards intensive fieldwork, incorporating natural or field experiments into a broader project may allow successful multi-method work that leverages traditional strengths in the field while also helping to bolster valid causal inference. In this way, natural and field experiments may usefully inform the broad debates discussed by other contributors to this volume.

1 In some experiments, what is manipulated is assignment to treatment conditions; I do not focus further attention on this subtlety here.

2 Though many experiments are randomized controlled experiments, some are not; for instance, in some physical science experiments, stimuli are under the control of the experimental researcher, but there is no randomization of units to treatment conditions (and there may be no “control” group).

3 There are, of course, other varieties of randomized controlled experiments, such as survey experiments, in which the effects of variation in question order, the content of vignettes posed to respondents, or other treatments in the context of survey questionnaires are studied.

4 Thus, a hypothetical experiment in which squatters are assigned at random to receive de jure titles or instead retain only de facto property claims can be described as a field experiment, if researchers are interested in the effect of actual land titles on the real-world behavior of poor squatters.

5 A number of recent studies might also be described as “lab-in-the-field” experiments, in which subjects from a target population of interest are recruited—for example, through door-to-door interviews with a probability sample of residents—but play behavioral games or are exposed to other treatments that are standard in lab experiments.

6 The lack of randomization involved in this research design helped generate Campbell’s famous check-list of threats to internal validity in quasi-experiments.

7 In some settings, the distinction may not imply a major difference: if scholars are interested in the effects of lotteries for school vouchers in Colombia (an example discussed below), whether the lotteries are conducted by policy makers or by researchers may be trivial. In other settings, however, the greater degree of researcher control over the nature of the manipulation afforded by randomized controlled experiments may be crucial for testing particular theoretical or substantive claims. It is thus useful to retain the distinction between observational studies—even those that feature true randomization—and randomized controlled experiments.

8 Such natural experiments are like true experiments in that the treatment is randomized, yet the manipulation is not typically introduced by the researcher, making these observational studies.

9 If the treatment has an effect, there may be a sharp discontinuity in the regression lines relating the outcome to the forcing variable on either side of the threshold, which is what gives the method its name. This name does not imply that regression modeling is the best way to analyze data from an RD design.

10 The approach is similar to Miguel, Satyanath, and Sergenti’s (2004) use of rainfall growth to instrument for economic growth, in a study of the effect of growth on civil war in Africa.

11 Of course, the studies in Tables 30.1 and 30.2 are not intended as exhaustive censuses of existing natural and field experiments in the study of Latin American politics.

12 In this list experiment, respondents are shown a list of activities that might have happened during the last election; the treatment condition includes a mention of vote-buying, while the control condition omits this item. Respondents are asked to report how many of the items on the list occurred during the last election, not which ones. The experiment may thus circumvent the social desirability bias that discourages respondents from admitting to vote-buying when asked about it directly. In fact, Gonzalez-Ocantos et al. (2010) show that using the list experiment can sharply raise estimates of the incidence of vote-buying.

13 “Pre-treatment covariates” are those whose values are thought to have been determined before the intervention of interest took place. In particular, they are not themselves seen as outcomes of the treatment.

14 The causal and statistical model that justifies such simple comparisons is the Neyman (also called the Neyman-Rubin-Holland) model; see Dunning (2010a) for further discussion.

15 If an analyst reports results from a bivariate regression of the outcome on a constant and a dummy variable for treatment, without control variables, this is coded as a simple difference-of-means test—even though estimated standard errors can be misleading (Freedman 2008, Dunning 2010a).

16 Experimental ethnography may refer to the deep or extensive interviewing of selected subjects assigned to treatment and control groups. The focus may be qualitative and interpretive in nature, with the meaning subjects attribute to the treatment (or its absence) being a central topic of concern.

17 This seems true to some extent even when scholars hire survey firms, as they must still interact with a local firm to design the study, train investigators, and so on.

18 For apparently fortuitous reasons, Brazil appears quite over-represented, with fully 13 of 22 or nearly 60 percent of studies in Table 30.1 focused on this country.

Achen, Christopher. 1986. The Statistical Analysis of Quasi-Experiments. Berkeley: University of California Press.

Angrist, Joshua, Eric Bettinger, Erik Bloom, Elizabeth King, and Michael Kremer. 2002. “Vouchers for Private Schooling in Colombia: Evidence from a Randomized Natural Experiment.” American Economic Review 92 (5): 1525–57.

Angrist, Joshua, Eric Bettinger, and Michael Kremer. 2006. “Long-Term Consequences of Secondary School Vouchers: Evidence from Administrative Records in Colombia.” American Economic Review 96 (3): 847–62.

Boas, Taylor, and F. Daniel Hidalgo. 2010. “Controlling the Airwaves: Incumbency Advantage and Community Radio in Brazil.” Working paper, Boston University and University of California Berkeley.

Boas, Taylor, F. Daniel Hidalgo, and Neal Richardson. 2010. “The Returns to Political Investment: Campaign Donations and Government Contracts in Brazil.” Working paper, Boston University and University of California Berkeley.

Brollo, Fernanda, and Tommaso Nannicini. 2010. “Tying Your Enemy’s Hands in Close Races: The Politics of Federal Transfers in Brazil.” Working paper, Bocconi University.

Brollo, Fernanda, Tommaso Nannicini, Roberto Perotti, and Guido Tabellini. 2010. “The Political Resource Curse.” Working paper, Bocconi University.

Campbell, Donald T. and Julian C. Stanley. 1963. Experimental and Quasi-Experimental Designs for Research. Boston, MA: Houghton Mifflin.

Chamon, Marcos, João M. P. de Mello, and Sergio Firpo. 2009. “Electoral Rules, Political Competition, and Fiscal Expenditures: Regression Discontinuity Evidence from Brazilian Municipalities.” IZA Discussion Paper No. 4658.

Chong, Alberto, Ana De La O, Dean Karlan, and Leonard Wantchekon. 2011. “Information Dissemination and Local Governments’ Electoral Returns: Evidence from a Field Experiment in Mexico.” Unpublished manuscript.

Collier, David, Henry E. Brady, and Jason Seawright. 2010. “Sources of Leverage in Causal Inference: Toward an Alternative View of Methodology.” Chapter 13 in David Collier and Henry Brady, eds., Rethinking Social Inquiry: Diverse Tools, Shared Standards. Lanham, MD: Rowman & Littlefield.

Cox, David R. 1958. Planning of Experiments. New York: Wiley.

Cox, Gary W. 1997. Making Votes Count: Strategic Coordination in the World’s Electoral Systems. Cambridge: Cambridge University Press.

De Figueiredo, Miguel F. P., and F. Daniel Hidalgo. 2010. “When Do Voters Punish Corrupt Politicians? Experimental Evidence from Brazil.” Working Paper, University of California Berkeley.

De Figueiredo, Miguel F. P., F. Daniel Hidalgo, and Yuri Kasahara. 2010. “When Do Voters Punish Corrupt Politicians? Experimental Evidence from Brazil.” Working paper, University of California Berkeley.

Deaton, Angus. 2009. “Instruments of Development: Randomization in the Tropics, and the Search for the Elusive Keys to Economic Development.” The Keynes Lecture, British Academy, October 9, 2008.

De la O, Ana. 2010. “Do Conditional Cash Transfers Affect Electoral Behavior? Evidence from a Randomized Experiment in Mexico.” Working paper, Yale University.

De Soto, Hernando. 2000. The Mystery of Capital: Why Capitalism Triumphs in the West and Fails Everywhere Else. New York: Basic Books.

Desposato, Scott. 2007. “The Impact of Campaign Messages in New Democracies: Results From An Experiment in Brazil.” Working paper, University of California San Diego.

Di Tella, Rafael, Sebastian Galiani, and Ernesto Schargrodsky. 2007. “The Formation of Beliefs: Evidence from the Allocation of Land Titles to Squatters.” Quarterly Journal of Economics 122: 209–41.

Druckman, James N., Donald P. Green, James H. Kuklinski, and Arthur Lupia. 2006. “The Growth and Development of Experimental Research in Political Science.” American Political Science Review 100 (4): 627–35.

Dunning, Thad. 2008a. “Improving Causal Inference: Strengths and Limitations of Natural Experiments.” Political Research Quarterly 61 (2): 282–93.

Dunning, Thad. 2008b. “Model Specification in Instrumental-Variables Regression.” Political Analysis 16 (3): 290–302.

Dunning, Thad. 2010a. “Design-Based Inference: Beyond the Pitfalls of Regression Analysis?” In David Collier and Henry Brady, eds., Rethinking Social Inquiry: Diverse Tools, Shared Standards. Lanham, MD: Rowman & Littlefield, 2nd edition.

Dunning, Thad. 2010b. “Race, Class, and Voter Preferences in Brazil.” Working Paper, Yale University.

Duverger, Maurice. 1954. Political Parties. London: Methuen.

Ferraz, Claudio, and Frederico Finan. 2008. “Exposing Corrupt Politicians: The Effect of Brazil’s Publicly Released Audits on Electoral Outcomes.” Quarterly Journal of Economics 123 (2): 703–45.

Ferraz, Claudio, and Frederico Finan. 2010. “Motivating Politicians: The Impacts of Monetary Incentives on Quality and Performance.” Working paper, University of California Berkeley.

Finan, Frederico, and Laura Schechter. 2010. “Vote-Buying and Reciprocity.” Working paper, Departments of Economics, University of California Berkeley and University of Wisconsin-Madison.

Fisher, Sir Ronald A. 1935. “The Design of Experiments.” In J. H. Bennett, ed., Statistical Methods, Experimental Design, and Scientific Inference. Oxford: Oxford University Press.

Freedman, David A. 2009. Statistical Models: Theory and Practice. Cambridge: Cambridge University Press, 2nd edition.

Fried, Brian J., Paul Lagunes, and Atheender Venkataramani. 2010. “Corruption and Inequality at the Crossroad: A Multimethod Study of Bribery and Discrimination in Latin America.” Latin American Research Review 45 (1): 76–97.

Fujiwara, Thomas. 2008. “A Regression Discontinuity Test of Strategic Voting and Duverger’s Law.” Working paper, University of British Columbia.

Fujiwara, Thomas. 2009. “Can Voting Technology Empower the Poor? Regression Discontinuity Evidence from Brazil.” Working paper, University of British Columbia.

Galiani, Sebastian, and Ernesto Schargrodsky. 2004. “The Health Effects of Land Titling.” Economics and Human Biology 2: 353–72.

Gerber, Alan S., and Donald P. Green. 2008. “Field Experiments and Natural Experiments.” In Janet Box-Steffensmeier, Henry E. Brady, and David Collier, eds., The Oxford Handbook of Political Methodology. New York: Oxford University Press, 357–81.

Gonzalez-Ocantos, Ezequiel, Chad Kiewiet de Jonge, Carlos Meléndez, Javier Osorio, and David W. Nickerson. 2010. “Vote Buying and Social Desirability Bias: Experimental Evidence from Nicaragua.” Working paper, Notre Dame University.

Green, Tina. 2005. “Do Social Transfer Programs Affect Voter Behavior? Evidence from Progresa in Mexico.” Working Paper, University of California Berkeley.

Green, Donald. 2009. “Regression Adjustments to Experimental Data: Do David Freedman’s Concerns Apply to Political Science?” Working paper, Yale University.

Heckman, James J. 2000. “Causal Parameters and Policy Analysis in Economics: A Twentieth Century Retrospective.” Quarterly Journal of Economics 115: 45–97.

Hidalgo, F. Daniel. 2010. “Digital Democratization: Suffrage Expansion and the Decline of Political Machines in Brazil.” Manuscript, Department of Political Science, University of California Berkeley.

Hidalgo, F. Daniel, Suresh Naidu, Simeon Nichter, and Neal Richardson. 2010. “Occupational Choices: Economic Determinants of Land Invasions.” Review of Economics and Statistics 92 (3): 505–23.

Holland, Paul W. 1986. “Statistics and Causal Inference.” Journal of the American Statistical Association 81 (396): 945–60.

Hyde, Susan D. 2010. “Election Fraud Deterrence, Displacement, or Both? Evidence from a Multi-Level Field Experiment in Nicaragua.” Working paper, Yale University.

Imbens, Guido. 2009. “Better LATE Than Nothing: Some Comments on Deaton (2009) and Heckman and Urzua (2009).” Working paper, Harvard University.

Lagunes, Paul. 2009. “Irregular Transparency? An Experiment Involving Mexico’s Freedom of Information Law.” Social Science Research Network. http://ssrn.com/abstract=1398025.

Lee, David S. 2008. “Randomized Experiments from Non-random Selection in U.S. House Elections.” Journal of Econometrics 142 (2): 675–97.

Litschig, Stephan, and Kevin Morrison. 2009. “Local Electoral Effects of Intergovernmental Fiscal Transfers: Quasi-Experimental Evidence from Brazil, 1982–1988.” Working paper, Universitat Pompeu Fabra and Cornell University.

Manacorda, Marco, Edward Miguel, and Andrea Vigorito. 2009. “Government Transfers and Political Support.” Working Paper, Department of Economics, University of California Berkeley.

Miguel, Edward, Shanker Satyanath, and Ernest Sergenti. 2004. “Economic Shocks and Civil Conflict: An Instrumental Variables Approach.” Journal of Political Economy 112 (4): 725–53.

Morton, Rebecca B., and Kenneth C. Williams. 2010. Experimental Political Science and the Study of Causality: From Nature to the Lab. New York: Cambridge University Press.

Paluck, Elizabeth Levy. 2008. “The Promising Integration of Qualitative Methods and Field Experiments.” Qualitative and Multi-Method Research 6 (2): 23–30.

Richardson, Benjamin Ward. 1887 [1936]. “John Snow, M.D.” The Asclepiad 4: 274–300, London. Reprinted in Snow on Cholera. London: Oxford University Press, 1936.

Rosenzweig, Mark R., and Kenneth I. Wolpin. 2000. “Natural ‘Natural Experiments’ in Economics.” Journal of Economic Literature 38 (4): 827–74.

Rubin, Donald B. 1977. “Assignment to Treatment on the Basis of a Covariate.” Journal of Educational Statistics 2: 1–26.

Sekhon, Jasjeet S. 2009. “Opiates for the Matches: Matching Methods for Causal Inference.” Annual Review of Political Science 12: 487–508.

Sherman, Lawrence, and Heather Strang. 2004. “Experimental Ethnography: The Marriage of Qualitative and Quantitative Research.” The Annals of the American Academy of Political and Social Science 595: 204–22.

Snow, John. 1855. On the Mode of Communication of Cholera. London: John Churchill, 2nd edition. Reprinted in Snow on Cholera, London: Oxford University Press, 1936.

Sovey, Allison J., and Donald P. Green. 2011. “Instrumental Variables Estimation in Political Science: A Readers’ Guide.” American Journal of Political Science 55 (1): 188–200.

Thistlethwaite, Donald L., and Donald T. Campbell. 1960. “Regression-discontinuity Analysis: An Alternative to the Ex-post Facto Experiment.” Journal of Educational Psychology 51 (6): 309–17.

Titiunik, Rocío. 2009. “Incumbency Advantage in Brazil: Evidence from Municipal Mayor Elections.” Working paper, University of Michigan.