11

Optimality and Evolution

- 11.1 Optimality in Systems Biology Models

- 11.2 Optimal Enzyme Concentrations

- 11.3 Evolution and Self-Organization

- 11.4 Evolutionary Game Theory

- Exercises

- References

- Further Reading

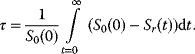

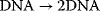

Evolution is an open process without any goals. However, there is a general trend toward increasing complexity, and phenotypes arising from mutation and selection often look as if they were optimized for specific biological functions. The evolution toward optimal solutions can be studied experimentally: in a competition among bacteria, faster growing mutants are likely to outcompete the wild-type population until bacterial genomes, after many such changes, become enriched with features needed for fast growth. Dekel and Alon [1] grew Escherichia coli bacteria under different combinations of lactose levels and under artificially induced expression levels of the Lac operon (which includes the gene lacZ). From the measured growth rates, they predicted that there exists, for each lactose level, an optimal LacZ expression level that maximizes growth and, therefore, the fitness advantage in a direct competition. The prediction was tested by having bacteria evolve in serial dilution experiments at given lactose levels. After a few hundred generations, the wild-type population was in fact replaced by mutants with the predicted optimal LacZ expression levels.

To picture the mechanism of evolution, we can imagine individuals as points in a phenotype space (see Figure 11.1), and a population forming a cloud (or distribution) of points. Individuals' chances to replicate (or their expected number of descendants) depend on the phenotype and can be described by a fitness function. Due to mutations and recombination of genes, offspring may show new phenotypes and may spread in this fitness landscape. Due to a selection for high fitness, a population of individuals will change from generation to generation, tending to move toward a fitness maximum. The fitness landscape can have local optima, each surrounded by a basin of attraction. If rare mutations are needed to jump into another basin of attraction, the population may be confined to a current local optimum even if better optima exist elsewhere. The fitness function can also be drawn directly as a landscape in genotype space. Since the same phenotype, on which selection primarily acts, can emerge from various genotypes, movements in genotype space will often be fitness-neutral. Moreover, analogous traits can evolve, under similar selective pressures, in organisms that are genetically very distant.

Figure 11.1 Evolution in a fitness landscape. (a) Evolution by mutation and selection. A population, shown as a frequency density in phenotype space (gray), changes by mutation and selection. A higher fitness value (shown at the bottom) represents a selection advantage and leads to an increase in frequency. As the different phenotypes change in their frequencies, the distribution gradually moves along the fitness gradient (dashed line). (b) Stationary population around a local fitness optimum. Mutation and selection are balanced and the population is stable. (c) Fitness landscape with two optima and their basins of attraction (marked by (i) and (ii)).

Evolution as a Search Strategy

Evolution by mutation and selection is an efficient search strategy for optima in complex fitness landscapes and can lead to relatively predictable outcomes. This may sound surprising because mutations are random events. Any innovation emerges from individuals in which certain mutations first occurred and which had to survive and pass them on. However, the number of possible favorable mutations is usually large, and so is the number of individuals in which these mutations can occur: thus, even if a certain change is very unlikely to occur in a single individual, it may be very likely to occur in a species. This is how evolution, the “tinkerer,” can act as an engineer [2].

Biomechanical problems solved by evolution are numerous and complex, and the solutions are often so good that engineers try to learn from them. Evolution has even inspired the development of very successful algorithms for numerical optimization [3]. Genetic algorithms (see Section 6.1) can tackle general, not necessarily biological optimization problems: metaphorically, candidate solutions to a problem are described as “genotypes” (usually encoded by numbers). A population of such potential solutions is then optimized in a series of mutation and selection steps. Since genetic algorithms simulate populations of individuals and not single individuals (as in, for example, simulated annealing; see Section 6.1), they allow for recombination of advantageous “partial solutions” from different individuals. This can considerably speed up the convergence toward optimal solutions.
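The mutation, recombination, and selection steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the algorithm of Section 6.1: the bit-string genotype, the toy fitness function (the number of 1-bits), truncation selection, and all parameter values are hypothetical choices made only for this example.

```python
import random

def fitness(genotype):
    # Hypothetical toy objective: count the 1-bits in the genotype.
    return sum(genotype)

def mutate(genotype, rate, rng):
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genotype]

def recombine(parent_a, parent_b, rng):
    # One-point crossover: combine "partial solutions" of two parents.
    cut = rng.randrange(1, len(parent_a))
    return parent_a[:cut] + parent_b[cut:]

def evolve(pop_size=40, length=30, generations=60, rate=0.02, seed=1):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # Truncation selection: the fitter half survives unchanged.
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(recombine(a, b, rng), rate, rng))
        population = parents + children
    return best_per_generation

history = evolve()
print("best fitness, first and last generation:", history[0], history[-1])
```

Because the fitter half of each generation is carried over unchanged, the best fitness in this sketch can never decrease. Real genetic algorithms often use softer selection schemes.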

What makes mutation and selection such a powerful search strategy? One reason is that the search for alternatives happens in a massively parallel way. A bacterial population produces huge numbers of mutants in every generation, and there is a constant selection for advantageous mutations that can be integrated into the complex physiology and genetics of existing cells. In constant environments, well-adapted genotypes are continuously tested and advantageous traits are actively conserved. At the same time, the population remains genetically flexible: when conditions are changing, it can move along a fitness gradient toward the next local optimum (see Figure 11.1a).

During evolution, there were long periods of relatively stable conditions, in which species could adapt to specific ecological niches. These periods, however, were interrupted by sudden environmental changes in which specialists went extinct and new species arose from the remaining generalists. Thus, to succeed in evolution, species should be not only well adapted, but also evolvable, that is, able to further adapt genetically [4]. As we saw in Sections 8.3 and 10.2, evolvability can be improved by the existence of robust physiological modules. Simulations suggest that varying external conditions can speed up evolution toward modular, well-adapted phenotypes [5]. Evolvability is further facilitated by exaptation – the reuse of existing traits to perform new functions. Examples of exaptation are the crystallin proteins, which were originally enzymes but adopted a second, unrelated function, namely, to increase the refractive index of the eye lens. Also in metabolism, potentially useful traits can emerge as by-products: for instance, the metabolic networks that enable cells to grow on one carbon source will, typically, also allow them to live on many other carbon sources. Even if a carbon source has never been present during evolution, the evolutionary step to using it as a nutrient can be relatively small [6].

Finally, we should remember that genotypes and phenotypes are not linked by abstract rules, but through very concrete physiological processes – the processes we attempt to understand in systems biology. Accordingly, what appears to be a selection for phenotypic traits may actually be a selection for regulation mechanisms that shape and control these traits and adapt them to an individual's needs and environment. An example is given by the way in which bones grow to their functional shapes, adapt to typical mechanical stresses, and reinforce their structures after a fracture. This adaptation is based on feedback mechanisms. Higher stresses will cause bone growth and a strengthening of internal structures, while stresses below average cause a remodeling, that is, a removal of material [7]. The result of this regulation, described by the mechanostat model [8], is a set of well-adapted bone shapes and microstructures. The physiological processes underlying this adaptation have been studied in detail [9] and the resulting microstructures of bones can be simulated [10]. Similar principles hold for other structures that emerge from self-organized processes, for example, cell organelles [11]: an evolutionary selection for good shapes may, effectively, consist in a selection for regulation mechanisms that produce the right shapes under the right circumstances.

Control of Evolution Processes

Evolution can proceed quite predictably. We saw an example in the artificial evolution of Lac operon expression levels, where the expression level after evolution could be predicted from previous experiments. Another known example is the evolution from C3 to C4 carbon fixation systems in plants, which occurred independently more than 60 times in evolution. The evolution toward C4 metabolism involves a number of changes in metabolism. In theory, these changes could take place in various orders. However, simulations of the evolutionary process, based on fitness evaluation in metabolic models, showed that the evolutionary trajectory (i.e., the best sequence of adaptation steps) is almost uniquely determined [12]. Even if individual gene mutations cannot be foreseen, the order of phenotypic changes seems to be quite predictable.

Can evolutionary dynamics, for example, the evolution of microbes in experiments, be steered by controlling the environment or by applying genetic modifications that constrain further evolution? Avoiding unintended evolution is important in biotechnology: if genetic changes, meant to increase the production of chemicals, burden cells excessively, the cells' growth rate will be severely reduced and the engineered cells may be outcompeted by faster growing mutants. This can be avoided, for instance, by applying genetic changes that stoichiometrically couple biomass production to production of the desired product.

Another case in which controlling evolution would matter is the prevention of bacterial resistances. Resistant bacteria carry mutations that make them less sensitive to antibiotic drugs. The resistant mutants have better chances to survive, so their resistance genes will be selected for. Chait et al. [13] have developed a method to prevent this. A combined administration of antibiotics can either increase or reduce antibiotics' effects on cell proliferation (synergism or antagonism). In extreme cases of antagonism, drug combinations can even have a weaker effect than either of the drugs alone. This phenomenon, known as suppressive drug combination, has a paradoxical consequence: mutants that have become resistant to one drug will suffer more strongly from the other one (because the first drug cannot exert its suppressive effect) and will be selected against. Thus, a suppressive combination of antibiotics traps bacteria in a local fitness optimum where resistances cannot spread. In experiments, this was shown to prevent the emergence of resistances [13,14].
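The selection argument can be made concrete with a toy calculation. All growth rates below are hypothetical numbers chosen only to illustrate a suppressive combination; they are not data from [13,14]. The sketch also assumes, as an idealization, that a mutant resistant to drug A grows as if A were absent, and it considers only resistance to the suppressing drug A.

```python
# Hypothetical relative growth rates of the wild type (1.0 = no drug).
# Suppressive combination: growth under A+B is HIGHER than under B alone,
# because drug A weakens the effect of drug B.
WILD_TYPE_GROWTH = {
    frozenset(): 1.0,
    frozenset({"A"}): 0.6,
    frozenset({"B"}): 0.3,
    frozenset({"A", "B"}): 0.5,
}

def growth(drugs, resistant_to=None):
    # Idealized assumption: a resistant mutant behaves as if the drug
    # it resists were not present at all.
    ignored = {resistant_to} if resistant_to else set()
    return WILD_TYPE_GROWTH[frozenset(drugs) - ignored]

def selection_advantage(drugs, resistant_to):
    # Growth rate difference between resistant mutant and wild type.
    return growth(drugs, resistant_to) - growth(drugs)

advantage_single = selection_advantage({"A"}, "A")         # drug A alone
advantage_combined = selection_advantage({"A", "B"}, "A")  # A and B together
print("A alone:", advantage_single, " A+B:", advantage_combined)
```

With these assumed numbers, resistance to A is favored when A is given alone, but disfavored under the combination, because the mutant loses the protection that A provides against B.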

There is, however, another lesson to be learned from this: if both drugs are applied, their effect decreases. For somebody who does not care about resistances spreading, taking only one of the drugs would be more effective. The fight against bacterial resistance – from which we all benefit – can thus be undermined by everyone's desire to get the most effective drug treatment now. This means that we are also trapped in a dilemma called the “tragedy of the commons,” which we will encounter again in Section 11.4.

11.1 Optimality in Systems Biology Models

Optimality principles play a central role in many fields of research and development, including engineering, economics, and physics. Whenever we build a machine, steer a technical process, or produce goods, we want to do this effectively (i.e., realize some objective, which we define) and efficiently (i.e., do so with the least possible effort). Often, we need to deal with trade-offs, for instance, if machines that are built to be more durable and energy-efficient become more costly. Moreover, what is optimal for one person may be detrimental for society. In such cases, the solution to a problem depends crucially on how exactly the optimality problem is posed.

In physics, optimality principles are central, but they are used in a more abstract sense and without referring to human intentions. Many physical laws can be formulated as if nature maximized or minimized certain functions. In classical mechanics, laws as basic as Newton's laws of motion can be derived from variational principles [15]: in Lagrangian mechanics, instead of integrating the system equations in time, we consider the system's entire trajectory and compare it with other conceivable (but unphysical) trajectories. Each trajectory is scored by a so-called action functional, and the real, physical trajectory is a trajectory for which this functional shows an extremal value. Variational principles exist for many physical laws, and they sometimes connect different theories of the same phenomenon. For instance, Fermat's principle states that light rays from point A to point B follow paths of extremal duration: small deviations from the path would always increase (or, in some cases, always decrease) the time to reach point B. Fermat's principle entails not only straight light rays, but also the law of refraction. An explanation comes from wave optics: light waves following extremal paths and the paths surrounding them show constructive interference, allowing for light to be detected at the end point; in quantum electrodynamics, the same principle holds for the wave function of photons [16]. Another variational principle, defining thermodynamically feasible flux distributions [17], is described in Section 15.6.
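Fermat's principle can be checked numerically. The sketch below assumes a hypothetical setup: a light ray travels from a point A in one medium to a point B in a second medium and crosses a flat interface at position x. The speeds, the coordinates, and the golden-section search are illustrative choices. Minimizing the travel time over x should reproduce Snell's law, sin θ1 / v1 = sin θ2 / v2.

```python
import math

# Hypothetical geometry: interface at y = 0, A above it, B below it.
A = (0.0, 1.0)
B = (1.0, -1.0)
V1, V2 = 1.0, 0.75   # assumed light speeds in the upper and lower medium

def travel_time(x):
    # Time along the two straight segments A -> (x, 0) -> B.
    d1 = math.hypot(x - A[0], A[1])
    d2 = math.hypot(B[0] - x, B[1])
    return d1 / V1 + d2 / V2

def golden_section_min(func, lo, hi, tol=1e-10):
    # Golden-section search for the minimum of a unimodal function.
    inv_phi = (math.sqrt(5) - 1) / 2
    while hi - lo > tol:
        c = hi - inv_phi * (hi - lo)
        d = lo + inv_phi * (hi - lo)
        if func(c) < func(d):
            hi = d
        else:
            lo = c
    return (lo + hi) / 2

x_cross = golden_section_min(travel_time, 0.0, 1.0)

# Sines of the angles between each ray segment and the interface normal.
sin_1 = (x_cross - A[0]) / math.hypot(x_cross - A[0], A[1])
sin_2 = (B[0] - x_cross) / math.hypot(B[0] - x_cross, B[1])
print("sin(theta1)/v1 =", sin_1 / V1, " sin(theta2)/v2 =", sin_2 / V2)
```

The two printed ratios agree up to numerical precision, so the path of least time is the refracted path.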

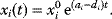

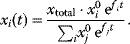

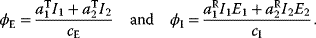

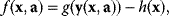

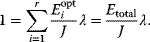

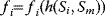

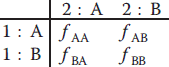

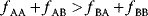

The idea of optimality is also common in biology and bioengineering. On the one hand, we can assume that phenotypic traits of organisms, for example, the body shapes of animals, are optimized during evolution. On the other hand, engineered microorganisms are supposed to maximize, for instance, the yield of some chemical product. In both cases, optimality assumptions can be translated into a mathematical model. Optimality-based (or “teleological”) models follow a common scheme: we consider different possible variants $x$ of a biological system, score them by a fitness function $f(x)$, and select variants of maximal fitness. In studies of cellular networks, model variants considered can differ in their structure, kinetic parameters, or regulation and may be constrained by physical limitations. The fitness function can represent either the expected evolutionary success or our goals in metabolic engineering. In models, the fitness is expressed as a function of the system's behavior and, possibly, of costs caused by the system.

Teleological models not only describe how a system works, but also explore why it works in this way and why it shows its specific dynamics. Thus, these models incorporate mechanisms, but their main focus is on how a system may have been optimized, according to what principles, and under what constraints. Optimality studies can also tell us about potential limits of a system. We may ask: if a known fitness function had to be maximized in evolution or biotechnology, what fitness value could be maximally achieved, and how? Turning this around, if a system is close to its theoretical optimum, we may suspect that natural selection, with fitness criteria similar to the ones assumed, has acted upon the system. Finally, optimality studies force us to think about the typical environment to which systems are adapted. A general assumption is that species are optimized for routine situations, but also rare severe events can exert considerable selective pressures. Generally, variable environments entail different types of selective pressure (e.g., toward robustness or versatility) than constant environments (adaptation to minimal effort and specialization).

Teleological Modeling Approaches

How does the concept of optimality fit into the mechanistic framework of molecular biology? As noted in Section 8.1.5, observations can be explained not only by cause and effect (Aristotle's causa efficiens), but also by objectives (Aristotle's causa finalis). In our languages, we do this quite naturally, for instance, when saying “E. coli bacteria produce flagella in order to swim.” The sentence not only describes a physical process, but also relates it to a task. This does not mean that we ascribe intentions to cells or to evolution – it simply expresses the fact that flagella can increase cells' chances to proliferate, and this is a reason why E. coli bacteria – which have outcompeted many mutants in the past – can express flagella.

Teleological statements are statements about biological function. But then, what exactly is meant by “function,” and why do we need such a concept? Can't we simply describe what is happening inside cells? In fact, cell biology combines two very different levels of description: on the one hand, the physical dynamics within cells and, on the other hand, the evolutionary mechanisms that lead to these cells and can be seen at work in evolution experiments. The two perspectives inform each other: the cellular dynamics we observe today is the one that has succeeded in evolution, and changes in this dynamics will affect the species' further evolution. The entanglement of long-term selection and short-term cell physiology is subsumed in the concept of biological function.

Thus, “cells express flagella in order to swim” can be read as a short form of “cells that cease to produce flagella may be less vital due to shortage of nutrients” or, one step further, “without producing flagella in the right moment, cells would have been outcompeted by other cells (maybe, by cells producing flagella).” Optimality-based models translate such hypotheses into mathematical formulas. Here, “in order to” is represented by scoring a system by an objective function, and optimizing it. Dynamic models relate cause and mechanistic effect; optimality models, in contrast, relate mechanisms and fitness effects.

Epistemologically, the concept of “function” has a similar justification as the concept of randomness. Random noise can be used as a proxy for processes that we omit from our models, either because they are too difficult to describe or because their details are unknown. Since we cannot neglect them completely, we account for them in a simple way – by random noise. Similarly, a functional objective can be a proxy for evolutionary selection, which by itself has no place in biochemical models. Since we cannot neglect evolution completely, we may account for it in a simple way – by a hypothetical optimization with some presumable objective. The biological justification lies in the assumption that evolution would already have selected out system variants that are clearly suboptimal. How much nonoptimality can be tolerated depends on many factors, including the strictness of selection (reflected by the steepness of the fitness function in models), population size, and the evolutionary time span considered.

Optimality and Model Fitting

Another point in systems biology modeling where optimization matters is parameter estimation. As mathematical problems, estimation and optimization of biochemical parameters are very similar: to find the most likely model, parameters are optimized for goodness of fit, and to find the most performant one, they are optimized for some fitness function. Biochemical constraints play a central role in both cases. Algorithms for numerical optimization are described in Section 6.1. They range from an optimization of structural model variants to linear flux optimization (as in flux balance analysis (FBA)) or a nonlinear optimization of enzyme levels or rate constants.

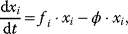

11.1.1 Mathematical Concepts for Optimality and Compromise

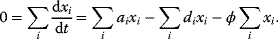

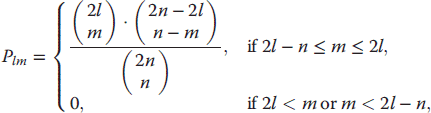

In an optimality perspective, all biological traits result from compromise. In the case of the Lac operon, an increased expression level increases the cell's energy supply (if lactose is present), but also demands resources (e.g., energy and ribosomes) and causes a major physiological burden due to side effects of the Lac permease activity [18]. To capture such trade-offs, optimality models typically combine four components: a system to be optimized, a fitness function (which may comprise costs and benefits), ways in which the system can be varied, and constraints under which these variations take place. We will now see how such trade-offs can be treated mathematically (Figure 11.2 gives an overview).

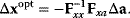

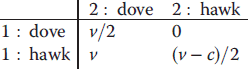

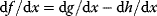

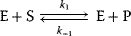

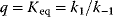

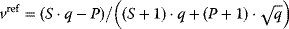

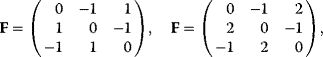

Figure 11.2 Optimization under constraints. (a) Cost–benefit optimization. A fitness function, defined as the difference $f(x) = b(x) - c(x)$ between benefit and cost, becomes maximal where both curves have the same slope (dashed). (b) Local optimum under an equality constraint (black line). A fitness function $f(x)$ in two dimensions is shown by contour lines. The constraint is defined by an equality $g(x) = \text{const}$. In the constrained optimum point (red circle), contour lines and constraint line are parallel, so the gradients must satisfy an equation $\nabla f = \lambda\, \nabla g$ with a Lagrange multiplier $\lambda$. (c) Linear programming: a feasible region (white) is defined by linear inequality constraints for the arguments $x_1$ and $x_2$. The linear fitness function is shown by contour lines and gradient (arrow). (d) Pareto (multi-objective) optimization with two objectives $f_1$ and $f_2$ (shown on the axes). Feasible combinations $(f_1, f_2)$ lie within the white region. States in which none of the objectives can be increased without decreasing the other objective are Pareto-optimal (red). Pareto optimization yields a continuous set of solutions, the so-called Pareto front.

Cost–Benefit Models

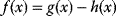

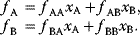

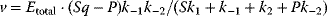

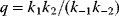

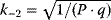

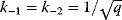

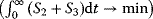

In cost–benefit calculations for metabolic systems [1,19–21], a difference

between a benefit  and a cost

and a cost  is assumed as a fitness function (see Figure 11.2a). The variable

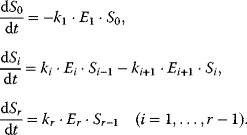

is assumed as a fitness function (see Figure 11.2a). The variable  is a vector describing quantitative traits to be varied, for example, enzyme abundances in a kinetic model. In the model for Lac expression [1], bacterial growth is treated as a function of two variables: external lactose concentration (an external parameter) and expression of LacZ (regarded as a control parameter, optimized by evolution). The function was first fitted to measured growth rates and then used to predict the optimal LacZ expression levels for different lactose concentrations. A local optimum of the variable

is a vector describing quantitative traits to be varied, for example, enzyme abundances in a kinetic model. In the model for Lac expression [1], bacterial growth is treated as a function of two variables: external lactose concentration (an external parameter) and expression of LacZ (regarded as a control parameter, optimized by evolution). The function was first fitted to measured growth rates and then used to predict the optimal LacZ expression levels for different lactose concentrations. A local optimum of the variable  – in this case, the enzyme level – implies a vanishing fitness slope

– in this case, the enzyme level – implies a vanishing fitness slope  . The marginal benefit

. The marginal benefit  and the marginal cost

and the marginal cost  must therefore be equal. In an optimal state, the benefit obtained from expressing an additional enzyme molecule and the cost for producing this molecule must exactly be balanced. Figure 11.2a illustrates this: since the benefit saturates at high

must therefore be equal. In an optimal state, the benefit obtained from expressing an additional enzyme molecule and the cost for producing this molecule must exactly be balanced. Figure 11.2a illustrates this: since the benefit saturates at high  values while the cost keeps increasing, the fitness function shows a local maximum. If the cost function were much steeper, the marginal cost would exceed the marginal benefit already at the level

values while the cost keeps increasing, the fitness function shows a local maximum. If the cost function were much steeper, the marginal cost would exceed the marginal benefit already at the level  . Since enzyme levels cannot be negative, this point would be a boundary optimum, and in this case the enzyme should not be expressed.

. Since enzyme levels cannot be negative, this point would be a boundary optimum, and in this case the enzyme should not be expressed.
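The balance of marginal benefit and marginal cost can be illustrated with a small calculation. The functional forms here are assumptions made for this sketch only, not the fitted functions from [1]: a saturating benefit b(x) = b_max·x/(K + x) and a linear cost c(x) = price·x, with hypothetical parameter values.

```python
import math

B_MAX = 1.0   # assumed maximal benefit
K = 1.0       # assumed enzyme level of half-maximal benefit

def benefit(x):
    # Saturating benefit of enzyme level x (hypothetical form).
    return B_MAX * x / (K + x)

def marginal_benefit(x):
    # Derivative of the benefit function with respect to x.
    return B_MAX * K / (K + x) ** 2

def fitness(x, price):
    # Fitness = benefit minus a linear cost with slope `price`.
    return benefit(x) - price * x

def optimal_level(price):
    # Interior optimum where marginal benefit equals marginal cost;
    # if that point would be negative, the optimum is the boundary x = 0.
    x_interior = math.sqrt(B_MAX * K / price) - K
    return max(0.0, x_interior)

for price in (0.25, 2.0):
    x_opt = optimal_level(price)
    print(f"price={price}: x_opt={x_opt:.3f}, "
          f"marginal benefit={marginal_benefit(x_opt):.3f}")
```

With the shallow cost (price 0.25), the optimum lies in the interior and the marginal benefit equals the price. With the steep cost (price 2.0), the marginal benefit at x = 0 is already below the price, so the optimum is the boundary point at which the enzyme is not expressed.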

Inequality Constraints

Which constraints need to be considered in optimization? This depends very much on our models and questions. Typically, there are nonnegativity constraints (e.g., for enzyme levels) and physical restrictions (e.g., upper bounds on substance concentrations, bounds on rate constants due to diffusion limitation, or Haldane relationships between rate constants to be optimized). However, inequality constraints can also represent biological costs. In fact, various important constraints on cell function, including limits on cell sizes and growth rates, the dense packing of proteins in cells, and cell death at higher temperatures, can be derived from basic information such as protein sizes, stabilities, and rates of folding and diffusion, as well as the known protein length distribution [22].

Mathematically, costs and constraints are, to an extent, interchangeable, which provides some freedom in how to formulate a model. As an example, consider a cost–benefit problem as in Eq. (11.1), but with several variables $x_i$. In a unique global optimum $x^{\mathrm{opt}}$, the cost function has a certain value $c(x^{\mathrm{opt}}) = c^{\mathrm{opt}}$. We can now reformulate the model: we drop the cost terms and require that $b(x)$ be maximized under the constraint $c(x) \le c^{\mathrm{opt}}$. Our state $x^{\mathrm{opt}}$ will be a global optimum of the new problem. Likewise, if we start from a local optimum $x^{\mathrm{loc}}$ with cost $c(x^{\mathrm{loc}}) = c^{\mathrm{loc}}$ and introduce the equality constraint $c(x) = c^{\mathrm{loc}}$, the optimum $x^{\mathrm{loc}}$ will also be a local optimum to the new problem. In this way, a quantitative cost term for enzyme levels, for instance, can formally be replaced by a bound on enzyme levels. The advantage of a cost constraint over an additive cost term is that benefits and costs can be measured on different scales and in different units. There is no need to quantify their “relative importance.”
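The claim for the global optimum follows in one step. In the sketch below, the symbols are notation assumed for this illustration: $b$ and $c$ denote benefit and cost, $f = b - c$ the fitness, $x^{\mathrm{opt}}$ the global optimum of $f$, and $c^{\mathrm{opt}} = c(x^{\mathrm{opt}})$ its cost.

```latex
\begin{align}
b(x) &= f(x) + c(x) \\
     &\le f(x^{\mathrm{opt}}) + c^{\mathrm{opt}}
      = b(x^{\mathrm{opt}})
      \qquad \text{for all } x \text{ with } c(x) \le c^{\mathrm{opt}} .
\end{align}
```

The inequality uses $f(x) \le f(x^{\mathrm{opt}})$, which holds because $x^{\mathrm{opt}}$ is a global optimum of $f$, together with the constraint $c(x) \le c^{\mathrm{opt}}$. No feasible state can therefore yield a larger benefit than $x^{\mathrm{opt}}$.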

In some case, however, it can be practical to do exactly the opposite: to replace a constraint by a cost term. If our system state hits some upper bound  , we can express this by an equality constraint

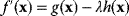

, we can express this by an equality constraint  . This constraint can be treated by the method of Lagrange multipliers: instead of maximizing

. This constraint can be treated by the method of Lagrange multipliers: instead of maximizing  under the constraint

under the constraint  , we search for a number

, we search for a number  , called the Lagrange multiplier, such that

, called the Lagrange multiplier, such that  becomes maximal with respect to

becomes maximal with respect to  (where the constraint

(where the constraint  must still hold). The necessary optimality condition reads

must still hold). The necessary optimality condition reads  . Effectively, the original constraint is replaced by a virtual cost term that is subtracted from the objective function. The relative importance of costs and benefits is determined by the value of the Lagrange multiplier in the original optimal state.

. Effectively, the original constraint is replaced by a virtual cost term that is subtracted from the objective function. The relative importance of costs and benefits is determined by the value of the Lagrange multiplier in the original optimal state.
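A small numerical example shows how the Lagrange multiplier arises. The objective f(x1, x2) = x1·x2 and the constraint function g(x1, x2) = x1 + 2·x2 with bound 4 are hypothetical choices for illustration (for instance, two enzymes that are both required, under a weighted budget). The sketch scans the constraint line for the best state and then checks that the gradients of f and g are parallel there.

```python
G_MAX = 4.0   # assumed value of the active bound, g(x1, x2) = G_MAX

def fitness(x1, x2):
    # Hypothetical objective: both variables are needed.
    return x1 * x2

def grad_fitness(x1, x2):
    return (x2, x1)

GRAD_G = (1.0, 2.0)   # gradient of g(x1, x2) = x1 + 2*x2 (constant)

# Scan states on the constraint line x1 = G_MAX - 2*x2 with x1, x2 >= 0.
candidates = [i / 1000.0 for i in range(0, 2001)]
x2_opt = max(candidates, key=lambda x2: fitness(G_MAX - 2.0 * x2, x2))
x1_opt = G_MAX - 2.0 * x2_opt

# Lagrange multiplier from the first gradient component ...
grad_f = grad_fitness(x1_opt, x2_opt)
lam = grad_f[0] / GRAD_G[0]
# ... and the residual of grad f = lam * grad g in both components.
residual = tuple(gf - lam * gg for gf, gg in zip(grad_f, GRAD_G))

print("optimum:", (x1_opt, x2_opt), "lambda:", lam, "residual:", residual)
```

At the constrained optimum, the residual vanishes, so the function f − λ·g is stationary there. The value of λ plays the role of a price per unit of the constrained quantity.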

Pareto Optimality

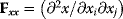

If an organism is subject to multiple objectives, its potential phenotypes can be depicted as points in a space spanned by these objective functions (see Figure 11.3a). If there are trade-offs between the objectives, only a limited region of this space will be accessible. Boundary points of this region in which none of the objectives can be improved without compromising the others form the Pareto front. We will see an example – a Pareto front of metabolic fluxes in bacterial metabolism – further below. Pareto optimality problems allow for many solutions, and unlike local minima in single-objective problems, these solutions are not directly comparable: one solution is not better or worse than another one – it is just different. Moreover, each objective can be scored on a different scale – there is no need to make them directly comparable.
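The definition of the Pareto front translates directly into a filter over candidate phenotypes. The sketch below assumes that both objectives are to be maximized; the list of variants is made up for illustration.

```python
def dominates(q, p):
    # q dominates p if q is at least as good in every objective
    # and strictly better in at least one.
    at_least_as_good = all(q_i >= p_i for q_i, p_i in zip(q, p))
    strictly_better = any(q_i > p_i for q_i, p_i in zip(q, p))
    return at_least_as_good and strictly_better

def pareto_front(points):
    # Keep the points that are not dominated by any other point.
    return [p for p in points
            if not any(dominates(q, p) for q in points)]

# Hypothetical variants, each scored by two objectives (f1, f2).
variants = [(1.0, 5.0), (2.0, 4.0), (2.0, 2.0),
            (3.0, 3.0), (4.0, 1.0), (1.5, 1.5)]

front = pareto_front(variants)
print(front)
```

The variants that remain are mutually incomparable: moving from one to another improves one objective only at the expense of the other.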

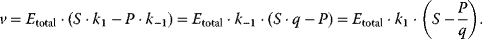

Figure 11.3 Pareto optimality for a system with multiple objectives. (a) Variants of a system, shown as points in the space of objective functions. (b) The same system variants, shown in phenotype space (axes correspond to phenotypical traits) [23]. The two objective functions are shown by contour lines; their optimum points are called archetypes. In a Pareto-optimal point (red), the gradients of both objective functions are parallel, but with opposite directions: neither objective can be improved without compromising the other one. In a nonoptimum point (gray), both objectives could still be improved. (c) In a problem with three objectives, the archetypes form a triangle in phenotype space. The criterion for a Pareto optimum (red) is that the gradients, weighted by positive prefactors, must sum to zero. This resembles the balance between objective and constraint in Lagrange optimization (Figure 11.2b). With simple objective functions (circular contour lines, not shown), this condition is satisfied exactly within the triangle, so the Pareto optima are also convex combinations of the archetypes.

Pareto optimality can be depicted very intuitively with the concept of archetypes, introduced by Shoval et al. to refer to phenotypes that optimize, individually, one of the different objectives [23,24]. The role of archetypes becomes clear when phenotypes are displayed in another space, the space of phenotype variables (Figure 11.3b). In a problem with three objectives and two phenotypical traits, the three archetypes would define a triangle in a plane. If the objectives, as functions in this space, have circular contour lines, as we assume as an approximation, the possible Pareto optima will fill this triangle. Each of them will thus be a convex combination of the archetype vectors. The same concept applies also to higher dimensions (i.e., more objectives and traits).

Shoval et al. applied this approach to different kinds of data, for instance, Darwin's data on the body shapes of ground finches. When plotting body size against a quantitative measure of beak shape, they obtained a triangle of data points. The three archetypes, with their extreme combinations of the traits, could be explained as optimal adaptations to particular diets. All other species, located within the triangle, represent less specialized adaptations, possibly adaptations to mixed diets. In the theoretical model, triangles are expected to appear for simple objective functions with circular contour lines. With more general objective functions, the triangles will be distorted [24]. In general, whether a cloud of data points forms a triangle (or, more generally, a convex polytope in a higher-dimensional space) needs to be tested statistically.
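Whether a phenotype is a convex combination of three archetypes in a two-trait plane can be checked with barycentric coordinates. The following is a minimal sketch; the archetype coordinates are hypothetical, and this geometric check is not the statistical test mentioned above.

```python
from typing import Tuple

Vec = Tuple[float, float]


def barycentric_weights(p: Vec, a: Vec, b: Vec, c: Vec) -> Tuple[float, float, float]:
    """Weights (wa, wb, wc) with wa + wb + wc = 1 and p = wa*a + wb*b + wc*c."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        raise ValueError("archetypes are collinear and do not span a triangle")
    wa = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    wb = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return wa, wb, 1.0 - wa - wb


def is_convex_combination(p: Vec, a: Vec, b: Vec, c: Vec, tol: float = 1e-12) -> bool:
    """A point lies in the triangle exactly if all three weights are non-negative."""
    return all(w >= -tol for w in barycentric_weights(p, a, b, c))


# Hypothetical archetypes in a plane of two traits (arbitrary units).
A, B, C = (0.0, 0.0), (4.0, 0.0), (0.0, 4.0)
inside = barycentric_weights((1.0, 1.0), A, B, C)
print(inside, is_convex_combination((1.0, 1.0), A, B, C))
print(is_convex_combination((3.0, 3.0), A, B, C))
```

The weights can be read as the relative importance of the three tasks for the phenotype in question: a weight close to 1 marks a specialist near one archetype, and similar weights mark a generalist.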

Compromises in the Choice of Catalytic Constants

As an example of optimality considerations, including compromises and possibly nonoptimality, let us consider the choice of $k_{\mathrm{cat}}$ values in metabolic systems. Empirically, $k_{\mathrm{cat}}$ values tend to be higher in central metabolism, where fluxes are large and enzyme levels are high [25]. This suggests that $k_{\mathrm{cat}}$ values in other regions stay below their biochemically possible maximum, contradicting the optimality assumption whereby each $k_{\mathrm{cat}}$ value should be as high as possible. Thus, what prevents some $k_{\mathrm{cat}}$ values from increasing? In some cases, enzymes with higher $k_{\mathrm{cat}}$ values become larger and therefore more costly. In other cases, high $k_{\mathrm{cat}}$ values could compromise substrate specificity (which may be more relevant in secondary than in central metabolism), allosteric regulation (as in the case of phosphofructokinase, a highly regulated enzyme with a rather low catalytic constant), or a favorable temperature dependence of kinetic properties. Finally, enzymes can also exert additional functions (e.g., metabolize alternative substrates or act as a signaling, structural, or cell cycle protein), which may compromise their $k_{\mathrm{cat}}$ values.

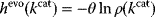

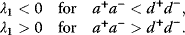

However, we may also assume an evolutionary balance between a selection for high  values and a tendency toward lower

values and a tendency toward lower  values caused by random mutations. Formally, this nonoptimal balance can be treated in an optimality framework by framing the effect of mutations as an effective evolutionary cost. This cost appears as a fitness term, but it actually reflects the fact that random mutations of an enzyme are much more likely to decrease than to increase its

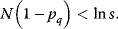

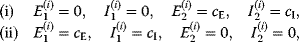

values caused by random mutations. Formally, this nonoptimal balance can be treated in an optimality framework by framing the effect of mutations as an effective evolutionary cost. This cost appears as a fitness term, but it actually reflects the fact that random mutations of an enzyme are much more likely to decrease than to increase its  value. The prior probability

value. The prior probability  , that is, the number of gene sequences that realize a certain

, that is, the number of gene sequences that realize a certain  value, defines the evolutionary cost

value, defines the evolutionary cost  . The constant parameter

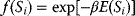

. The constant parameter  describes the strictness of selection [25]. The most likely

describes the strictness of selection [25]. The most likely  value after an evolution is thus not expected to maximize an organism's actual fitness

value after an evolution is thus not expected to maximize an organism's actual fitness  , but the apparent fitness

, but the apparent fitness  .

.

The concept of apparent fitness is analogous to the concept of free energy in thermodynamics. To describe systems at given temperature $T$, the principle of minimal energy $E$ (a stability condition from classical mechanics) is replaced by a principle of minimal free energy $F = E - TS$, where the entropy $S$ refers to the number of microstates showing the energy $E$. The term $-TS$ appears formally as an energy, but it actually reflects the fact that macrostates of higher energy contain many more microstates (Section 15.6).
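The analogy can be written side by side. The thermodynamic expressions are the standard ones. The notation on the fitness side is an assumption made here for illustration and may differ from that of Ref. [25]: $f$ denotes the actual fitness, $p$ the prior probability of a $k_{\mathrm{cat}}$ value, $\beta$ the strictness of selection, and $f^{\mathrm{app}}$ the apparent fitness; $\Omega(E)$ denotes the number of microstates with energy $E$.

```latex
\begin{align}
  \text{Thermodynamics (minimize):}\quad
    & F = E - T\,S, \qquad S = k_{\mathrm{B}} \ln \Omega(E) \\
  \text{Evolution (maximize):}\quad
    & f^{\mathrm{app}}(k_{\mathrm{cat}}) = f(k_{\mathrm{cat}})
      + \frac{1}{\beta}\,\ln p(k_{\mathrm{cat}})
\end{align}
```

In this sketch, the evolutionary cost is the term $-\frac{1}{\beta}\ln p(k_{\mathrm{cat}})$: it is large for $k_{\mathrm{cat}}$ values realized by few sequences, and it vanishes in the limit of very strict selection ($\beta \to \infty$), just as the entropy term vanishes at low temperature.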

Of course, the notion of evolutionary costs is not restricted to $k_{\mathrm{cat}}$ values, but applies very generally. A similar type of effective cost is the evolutionary effort, quantified by counting the number of mutations or events necessary to attain a given state [26].

Table 11.1 Some optimality approaches used in metabolic modeling.

| Name | Formalism | Objective | Control variables | Main constraints | Reference |
| --- | --- | --- | --- | --- | --- |
| FBA | Stoichiometric | Flux benefit | Fluxes | Stationary fluxes | [27] |
| FBA with minimal fluxes | Stoichiometric | Flux cost | Fluxes | Stationary, flux benefit | [28] |
| Resource balance analysis | Stoichiometric | Growth rate | Fluxes | Stationary, whole cell | [29] |
| Enzyme rate constants | Reaction kinetics | Different objectives | Rate constants | Haldane relations | [30] |
| Enzyme allocation | Kinetic | Pathway flux | Enzymes (static) | Total enzyme | [31] |
| Temporal enzyme allocation | Kinetic | Substrate conversion | Enzymes (dynamic) | Total enzyme | [32] |
| Transcriptional regulation | Kinetic | Substrate conversion | Rate constants | Feedback structure | [33] |
| Enzyme adaptation | Kinetic | General fitness | Enzymes (changes) | Model structure | [20] |
| Growth rate optimization | Kinetic, growing cell | Growth rate | Protein allocation | Total protein | [34] |

11.1.2 Metabolism Is Shaped by Optimality

A field within systems biology in which optimality considerations are central is metabolic modeling. Aside from standard methods such as FBA, which take optimality as one of their basic assumptions, there are dedicated optimality-based studies on pathway architecture, choices between pathways, metabolic flux distributions, the enzyme levels supporting them, and the regulatory systems controlling these enzyme levels (Table 11.1). In the forthcoming sections, we shall see a number of examples of these approaches.

When describing metabolic systems as optimized, we first need to specify an objective function. The objective does not reflect an absolute truth, but is related to the evolution scenario we consider. In natural environments, organisms will experience times of plenty, where fast growth is possible and maybe crucial for evolutionary success, and times of limitation, where efficient, sustainable usage of resources is most important. Both situations can be mimicked by artificial evolution experiments and studied with models. If bacteria are experimentally selected for fast growth – and nothing else – their growth rate will be a meaningful fitness function: mutants that grow faster will be those that survive, and those are also the ones we are going to model as optimized. However, setting up an experiment that selects for growth and nothing else is difficult: in a bioreactor with continuous dilution, cells could evolve to stick to the walls of the bioreactor, which would prevent them from being washed out. Even if these cells replicate slowly, their strategy provides a selection advantage in this setting. In serial dilution experiments, this drawback is avoided by transferring the microbial population between different flasks every day. However, if the cultures are grown as batch cultures, the cells will experience different conditions during the course of each day and may switch between growth and stationary phases. Again, many more traits, not just fast growth under constant conditions, will be selected for in the experiment.

Thus, it is the experimental setup that determines what is selected for and what objective should be used in our models. There is also a version of serial dilution that can select for nutrient efficiency instead of fast inefficient growth [35]. Unlike normal serial dilution experiments, this experiment requires – and allows for – models in which nutrient efficiency is the objective function. Not surprisingly, selection processes in natural environments are even more complicated: a species' long-term survival depends on many factors, including the populating of new habitats, defenses against pathogens, and complex social behavior. How evolution depends on interactions between organisms and environment can be studied through evolutionary game theory, which we discuss in Section 11.4.

Given a fitness function for an entire cell, how can we derive from it objectives for individual pathways? Metabolic pathways contribute to a cell's fitness by performing some local function (e.g., production of fatty acids), which contributes to more general cell functions (e.g., maintenance of cell membranes) and thereby to cell viability in general. Compromises between opposing subobjectives may be inevitable, for example, between high production fluxes, large product yields, and low enzyme investment. The relative importance of the different objectives may depend on the cell's current environment [36]. Once we have identified an objective, we can ask how pathway structure, flux distribution, or enzyme profile should be chosen, and also how regulation systems can ensure optimal behavior under various physiological conditions.

What Functional Reasons Can Explain Metabolic Network Structures?

If the laws of chemistry (e.g., conservation of atom numbers) were the only restriction, an enormous number of metabolic network structures could exist (see Section 8.1). The actual network structures, however, are determined by the set of enzymes encoded in cells' genomes or, more specifically, by the set of enzymes expressed by cells. The sizes and capabilities of metabolic networks vary widely, but their structures follow some general principles. Important precursors (such as nucleotides and amino acids in growing cells) form the central nodes that are connected by pathways. Many reactions involve cofactors, which connect these pathways very tightly. A relatively small set of cofactors has probably been used since the early times of metabolic network evolution [37].

By which chemical reactions will two substances be connected? In fact, the number of possibilities is vast: already for the route from chorismate to tyrosine, realized by three reactions in E. coli, a computational screen yielded more than 350 000 potential pathway variants [38]. Out of numerous possible pathways, only very few are actually used by organisms, and we may wonder what determines this selection. Some of the choices may be due to specific functional advantages [39]: for instance, glucose molecules are phosphorylated at the very beginning of glycolysis; this prevents them from diffusing through membranes, thus keeping them inside the cell. But there are also more general reasons informing pathway structure. We shall discuss three important principles: the preferences for yield-efficient, short, and thermodynamically efficient pathways [38,40].

Yield Efficiency

Alternative pathways may produce different amounts of product from the same substrate amount. At given substrate uptake rates, high-yield pathways produce more product per unit time and should therefore be preferable in evolution. However, there may be a trade-off between a high product yield and a high production rate per amount of enzyme needed to catalyze the pathway flux. The reason is that yield-efficient pathways tend to operate closer to equilibrium, where enzymes are used inefficiently. At equal enzyme investment, yield-inefficient pathways may thus provide larger fluxes, enabling faster cell growth. This is why some metabolic pathways exist in alternative versions with different yields, even in the same organism. We will come back to this point, using glycolysis as an example.

Preference for Short Pathways

The preference for short pathways is exemplified by the non-oxidative part of the pentose phosphate pathway, a pathway with the intricate task of converting six pentose molecules into five hexose molecules. If we additionally require that intermediates have at least three carbon atoms and that enzymes can transfer two or three carbon atoms, the pentose phosphate pathway solves this task in a minimal number of enzymatic steps [41] (Figure 11.4). A trend toward minimal size is also visible more generally in central metabolism: Noor et al. showed that glycolysis, pentose phosphate pathway, and TCA cycle are composed of reaction modules that connect a number of central precursor metabolites and that, in each case, consist of minimal possible numbers of enzymatic steps [42].

Figure 11.4 A metabolic pathway of minimal size. The non-oxidative phase of the pentose phosphate pathway converts six pentose molecules into five hexose molecules in a minimal number of reactions. Metabolites shown (numbers of carbon atoms in parentheses): D-ribose 5-phosphate and D-xylulose 5-phosphate (5); D-glyceraldehyde 3-phosphate (3); D-sedoheptulose 7-phosphate (7); D-fructose 6-phosphate and D-fructose 1,6-bisphosphate (6); D-erythrose 4-phosphate (4). Enzymes: transketolase (TK); transaldolase (TA); aldolase (AL).

Pathways Must Be Thermodynamically Feasible

A third general factor that affects pathway structure is thermodynamic efficiency. The metabolic flux directions, in each single reaction, are determined by the negative Gibbs energies of reactions (also called reaction affinity or thermodynamic driving force; see Section 15.6). To allow for a forward flux, the driving force must be positive. This holds not only for an entire pathway, but also for each single reaction within a pathway. However, the driving force also has a quantitative meaning: it determines the percentage of enzyme capacity that is wasted by the backward flux within that reaction. If the force approaches zero (i.e., a chemical equilibrium), the enzyme demand for realizing a given flux rises steeply. Therefore, a sufficiently large positive driving force must exist in every reaction (see Section 15.6).
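The quantitative role of the driving force can be illustrated with the flux-force relation $v^{+}/v^{-} = e^{-\Delta_{\mathrm{r}}G/RT}$ between forward and backward flux, which holds for a broad class of rate laws. The relation itself, the temperature, and the function names below are assumptions of this sketch and are not taken from the text above. Under this relation, the net flux is the fraction $1 - e^{\Delta_{\mathrm{r}}G/RT}$ of the forward flux, so the enzyme demand for a fixed net flux scales with the inverse of this fraction if all other kinetic factors stay the same.

```python
import math

R = 8.314e-3  # gas constant in kJ/(mol K)
T = 298.15    # temperature in K (assumed value)


def forward_efficiency(delta_g: float) -> float:
    """Net flux as a fraction of the forward flux, 1 - exp(dG/RT).

    delta_g is the reaction Gibbs energy in kJ/mol and must be negative
    for a net flux in the forward direction.
    """
    if delta_g >= 0:
        raise ValueError("a forward net flux requires a negative reaction Gibbs energy")
    return 1.0 - math.exp(delta_g / (R * T))


def relative_enzyme_demand(delta_g: float) -> float:
    """Enzyme needed per unit net flux, relative to a fully irreversible reaction."""
    return 1.0 / forward_efficiency(delta_g)


for dg in (-0.1, -1.0, -5.0, -20.0):
    print(f"dG = {dg:6.1f} kJ/mol   efficiency = {forward_efficiency(dg):.3f}   "
          f"relative enzyme demand = {relative_enzyme_demand(dg):.2f}")
```

Close to equilibrium, almost all enzyme capacity is spent on the backward flux and the relative enzyme demand diverges, whereas at strongly negative Gibbs energies it approaches 1.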

Glycolysis exemplifies the role of thermodynamics in determining pathway structure. The typical chemical potential difference between glucose and lactate would allow for producing four molecules of ATP per glucose molecule; however, this would leave practically no energy to be dissipated and thus to drive a flux in the forward direction. Assuming that the flux is proportional to the thermodynamic force along the pathway – which, far from equilibrium, is only a rough approximation – a maximal rate of ATP production will be reached by glycolytic pathways that produce only half of the maximal number of ATP molecules [43]. In glycolytic pathways found in nature, this is indeed the typical case. The two ATP molecules, however, are not produced directly. First, two ATP molecules are consumed in upper glycolysis, and then four ATP molecules are produced in lower glycolysis. A comparison with other potential pathway structures shows that this procedure allows for a maximal glycolytic flux at given enzyme investment [40,44].

A systematic way to assess the thermodynamic efficiency of a pathway is the max–min driving force (MDF) method [45]. Based on equilibrium constants and bounds on metabolite concentrations, it distributes the reaction driving forces in such a way (by choosing the metabolite concentrations) that small forces are avoided. Specifically, concentrations are arranged in such a way that the lowest reaction driving force in the pathway is as high as possible. The minimal driving force can be used as a quality criterion to compare pathway structures. If the minimal driving force is low, a pathway is unfavorable in terms of enzyme demand. Like shorter pathways, pathways with favorable thermodynamics allow cells to reduce their enzyme investments. The ATP investment in upper glycolysis can also be explained with this concept: without the early phosphorylation by ATP, a sufficient driving force in the pathway would require very high concentrations of the first intermediates. With the phosphorylation, the chemical potentials of the first intermediates can be high, but their concentrations will be much lower.
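The max–min idea can be sketched for the simplest possible case, an unbranched chain with 1:1 stoichiometry in which all metabolites share the same concentration bounds. This is a deliberately reduced illustration, not the method of Ref. [45], which handles general stoichiometries by linear programming. Here the largest achievable minimal force is found by bisection; Gibbs energies are given in units of $RT$, and all numbers and function names are hypothetical.

```python
import math
from typing import List


def chain_is_feasible(g0: List[float], force: float, ln_min: float, ln_max: float) -> bool:
    """Can every step of a linear chain reach a driving force >= force?

    g0[i] is the standard reaction Gibbs energy of step i in units of RT.
    With x = ln(concentration), the driving force of step i is
    -(g0[i] + x[i+1] - x[i]), so the product must satisfy
    x[i+1] <= x[i] - g0[i] - force.
    """
    x = ln_max  # start with the first metabolite as concentrated as allowed
    for g in g0:
        # Keep each product as concentrated as the constraints permit;
        # this leaves the most room for all later steps.
        x = min(ln_max, x - g - force)
        if x < ln_min:
            return False
    return True


def max_min_driving_force(g0: List[float], ln_min: float, ln_max: float,
                          tol: float = 1e-9) -> float:
    """Largest force (in units of RT) that all steps can reach simultaneously."""
    lo, hi = -1000.0, 1000.0  # bracket: lo is always feasible, hi never is
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if chain_is_feasible(g0, mid, ln_min, ln_max):
            lo = mid
        else:
            hi = mid
    return lo


# Concentrations bounded between 1 uM and 10 mM (assumed bounds).
LN_MIN, LN_MAX = math.log(1e-6), math.log(1e-2)
mdf_balanced = max_min_driving_force([-2.0, 3.0, -4.0], LN_MIN, LN_MAX)
mdf_bottleneck = max_min_driving_force([12.0, -20.0], LN_MIN, LN_MAX)
print(mdf_balanced, mdf_bottleneck)
```

In the first chain, the concentrations can be arranged so that the three steps share the total available force equally. In the second chain, the first step cannot be driven forward within the concentration bounds, no matter how favorable the second step is, so the minimal driving force is negative and the pathway is infeasible as a whole.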

Enzyme Investments

The preferences for short pathways and the avoidance of small driving forces may be explained by a single requirement: the need to realize flux distributions at a minimal enzyme cost. If enzyme investments are a main factor driving the choice of pathways, how can we quantify them? Typically, the notion of protein investment captures marginal biological costs, that is, a fitness reduction caused by an additional expression of proteins. For growing bacteria, the costs can be defined as the measured reduction in growth rate caused by artificially induced proteins. To ensure that the measurement concerns only costs, and not benefits caused by the proteins, one studies proteins without a normal physiological function (e.g., fluorescent marker proteins) or enzymes without available substrate [1,46].

For use in models, various proxies for enzyme cost have been proposed, including the total amount of enzyme in cells or specific pathways [19] or the total energy utilization [47]. To define simple cost functions, we can assume that protein cost increases linearly with the protein production rate, that is, with the amount of amino acids invested per time in translation [48]. With these definitions, protein cost will increase with protein abundance, protein chain length, and the effective protein turnover rate (which may include dilution in growing cells) [49].
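A linear cost function of this kind can be sketched in a few lines. The function name, the units, and the example numbers are hypothetical; the only assumption taken from the text is that cost is proportional to the amino acids invested per time, with dilution by growth added to the turnover rate.

```python
def amino_acid_investment_rate(copies: float, chain_length: int,
                               degradation_rate: float, growth_rate: float) -> float:
    """Amino acids polymerized per cell and hour to keep a protein at a fixed level.

    copies            protein molecules per cell
    chain_length      amino acids per protein molecule
    degradation_rate  protein degradation rate in 1/h
    growth_rate       cell growth rate in 1/h (causes dilution)
    """
    effective_turnover = degradation_rate + growth_rate
    return copies * chain_length * effective_turnover


# Hypothetical enzyme: 1000 copies, 300 amino acids, slow degradation, fast growth.
cost = amino_acid_investment_rate(copies=1000, chain_length=300,
                                  degradation_rate=0.1, growth_rate=0.5)
print(cost)
```

In this sketch, doubling the abundance, the chain length, or the effective turnover rate doubles the cost, which is the linear behavior assumed above.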

11.1.3 Optimality Approaches in Metabolic Modeling

Based on known requirements for efficient metabolism (high product yields, short pathways, sufficient thermodynamic forces, and low enzyme investment), one may attempt to predict metabolic flux distributions as well as the enzyme levels driving them.

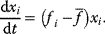

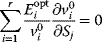

Flux Optimization

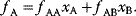

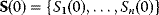

Flux prediction has many possible applications: for instance, as a sanity check in network reconstruction or for scoring possible pathway variants in metabolic engineering [50,51]. Understanding how flux distributions are chosen in nature can also shed light on the allocation of protein resources, for instance, on the enzyme investments in different metabolic pathways (see Figure 8.15). Flux distributions in metabolic networks can be predicted by FBA (see Chapter 3). In a typical FBA, flux distributions are predicted by maximizing a linear flux benefit under the stationarity constraint $\mathbf{N}\,\mathbf{v} = 0$ and bounds on individual fluxes. FBA can predict optimal yields and the viability of cells after enzyme deletions. The flux distribution itself, however, is usually underdetermined, and flux predictions are often unreliable. One reason is that FBA, by placing a bound on the substrate supply, effectively maximizes the product yield per substrate invested. When cells need to grow fast, however, it is more plausible to assume that cells maximize the product yield per enzyme investment.

To account for this fact, some variants of FBA suppress unnecessary pathway fluxes in their solutions. The principle of minimal fluxes [28] states that a flux distribution $\mathbf{v}$ must achieve a given benefit at a minimal sum of absolute fluxes $\sum_i |v_i|$. In this sum, the fluxes can also be individually weighted (see Chapter 3). This flux cost may be seen as a proxy for the (unknown) enzyme costs required to realize the fluxes. In contrast to standard FBA, FBA with flux minimization resolves many of the underdetermined fluxes and yields more realistic flux predictions [36,52]. FBA with molecular crowding [53], an alternative method, pursues a similar, yet converse approach: it translates absolute fluxes into approximate enzyme demands and puts upper bounds on the latter while maximizing the linear flux benefit.

Resource balance analysis (RBA), finally, not only covers metabolism, but also brings macromolecule production into the picture [29]. It assumes a growing cell in which macromolecules, including enzymes, are diluted. Therefore, metabolic reactions depend on a continuous production of enzymes and, indirectly, on ribosome production. Macromolecule synthesis, in turn, depends on precursors and energy to be supplied by metabolism. RBA checks, for different possible growth rates, whether a consistent cell state can be sustained, and determines the maximal possible growth rate and the corresponding metabolic fluxes and macromolecule concentrations.
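Standard FBA and the principle of minimal fluxes can be put side by side as two optimization problems. The symbols are assumptions chosen for this sketch and may differ from the notation of Chapter 3: $\mathbf{N}$ is the stoichiometric matrix, $\mathbf{v}$ the flux vector, $\mathbf{b}$ a vector of benefit weights, $b^{*}$ the prescribed benefit value, and $w_i$ optional positive flux weights.

```latex
\begin{align}
  \text{FBA:}\quad
    & \max_{\mathbf{v}} \; \mathbf{b}^{\top}\mathbf{v}
      \quad \text{subject to} \quad
      \mathbf{N}\,\mathbf{v} = 0, \quad
      \mathbf{v}^{\min} \le \mathbf{v} \le \mathbf{v}^{\max} \\
  \text{Minimal fluxes:}\quad
    & \min_{\mathbf{v}} \; \sum_i w_i\,|v_i|
      \quad \text{subject to} \quad
      \mathbf{N}\,\mathbf{v} = 0, \quad
      \mathbf{b}^{\top}\mathbf{v} = b^{*}, \quad
      \mathbf{v}^{\min} \le \mathbf{v} \le \mathbf{v}^{\max}
\end{align}
```

The two problems swap the roles of benefit and cost: in the first, the benefit is the objective and costs enter only implicitly through flux bounds; in the second, the benefit is fixed as a constraint and the flux cost is the objective. Both remain linear programs, since the absolute values can be handled by splitting each flux into non-negative forward and backward parts.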

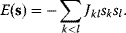

All these methods assume, in one way or another, a trade-off between flux benefits and flux costs, which sometimes appear in the form of constraints. However, this trade-off can be studied more directly by adopting a multi-objective approach. Instead of being optimized in only one way, flux distributions may be chosen to optimize different objectives under different growth conditions [36] or compromises between different objectives. For flux distributions in the central metabolism of E. coli, measured by isotope labeling of carbon atoms, this has been demonstrated [52]. Each flux distribution can be characterized by three objectives: ATP yield and biomass yield (both to be maximized), and the sum of absolute fluxes (to be minimized). By assessing all possible flux distributions in an FBA model, the Pareto surface for these objectives can be computed. As shown in Figure 11.5, the measured flux distributions are close to the Pareto surface; each of them represents a different compromise under a different experimental condition. The fact that the flux distributions are not exactly on the Pareto surface can mean two things: either that these are not optimized or that other optimality criteria, aside from those considered in the analysis, play a role. One such possibility could be anticipation behavior: by deviating from the momentary optimum, flux distributions could facilitate future flux changes and thus the adaptation to new environmental conditions.

Figure 11.5 Metabolic flux distributions in E. coli central metabolism are close to being Pareto-optimal. Axes correspond to three metabolic objectives: biomass yield, ATP yield, and the sum of absolute fluxes. Dots represent measured flux distributions under different growth conditions. The polyhedron comprises all possible combinations of objective values, determined from a stoichiometric model. The Pareto surface is marked in red. The measured flux distributions are close to, but not exactly on the Pareto surface. (From Ref. [52].)

Optimization of Enzyme Levels

If flux distributions are thought to be optimized, optimality should also hold for enzyme levels and the regulation systems behind them. In models, the question of optimal enzyme levels can be addressed in two ways. Either we assume that some predefined flux distribution must be realized or we directly optimize cell fitness as a function of enzyme levels. In both cases, fluxes and enzyme investments are linked by enzyme kinetics.

In the first approach, we use flux analysis to determine a flux distribution and then search for enzyme profiles (and corresponding metabolite profiles) that can realize this flux distribution with a given choice of rate laws [54,55]. Since the fluxes do not uniquely determine the enzyme profile, an additional optimality criterion is needed to pick a solution, for instance, minimization of total enzyme cost. Since reactions with low driving forces would automatically imply larger enzyme levels, they will automatically be avoided: either by shutting down the enzyme or by an arrangement of enzyme profiles that ensures sufficient reaction affinities in all reactions.

Effectively, this approach translates a given flux distribution into an optimal enzyme profile and thereby into an overall enzyme cost. In theory, cost functions of this sort could also be used to define nonlinear flux costs for FBA, but these would be hard to compute in practice. It can be generally proven, though, that such flux cost functions, at equal flux benefits, are minimized by elementary flux modes [56,57].
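The first approach can be sketched for a chain of two reactions, S → X → P, with a fixed flux and fixed concentrations of S and P. The sketch makes strong simplifying assumptions that are not taken from the text: each rate law contains only a thermodynamic factor, $v = E\,k_{\mathrm{cat}}\,(1 - e^{-\theta})$ with driving force $\theta$ in units of $RT$ (enzyme saturation is ignored), enzyme cost is the plain sum of enzyme levels, and the optimum is found by a grid scan over the logarithmic concentration of X. All names and numbers are hypothetical.

```python
import math
from typing import Tuple


def enzyme_demand(flux: float, kcat: float, theta: float) -> float:
    """Enzyme level needed for a net flux if only the thermodynamic factor limits the rate."""
    if theta <= 0:
        return math.inf  # no forward flux is possible without a positive driving force
    return flux / (kcat * (1.0 - math.exp(-theta)))


def total_enzyme(ln_x: float, flux: float, kcats: Tuple[float, float],
                 g0: Tuple[float, float], ln_s: float, ln_p: float) -> float:
    """Sum of both enzyme levels for a given log-concentration of the intermediate X."""
    theta1 = -g0[0] + ln_s - ln_x  # driving force of S -> X
    theta2 = -g0[1] + ln_x - ln_p  # driving force of X -> P
    return enzyme_demand(flux, kcats[0], theta1) + enzyme_demand(flux, kcats[1], theta2)


def optimal_intermediate(flux: float, kcats: Tuple[float, float], g0: Tuple[float, float],
                         ln_s: float, ln_p: float, ln_min: float, ln_max: float,
                         steps: int = 2000) -> float:
    """Grid scan for the log-concentration of X that minimizes the total enzyme level."""
    grid = [ln_min + (ln_max - ln_min) * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda ln_x: total_enzyme(ln_x, flux, kcats, g0, ln_s, ln_p))


# Two steps with equal standard Gibbs energies (in RT); log-concentrations are relative.
best_equal = optimal_intermediate(1.0, (10.0, 10.0), (-1.0, -1.0), 0.0, -2.0, -3.0, 1.0)
best_slow_first = optimal_intermediate(1.0, (1.0, 100.0), (-1.0, -1.0), 0.0, -2.0, -3.0, 1.0)
print(best_equal, best_slow_first)
```

With identical enzymes, the total driving force is split evenly between the two reactions. If the first enzyme is slow, the optimum shifts toward a lower concentration of X: the costly first reaction receives a larger driving force, at the expense of the cheap second one. In both cases, concentrations that would bring either reaction close to equilibrium are avoided because the enzyme demand diverges there.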

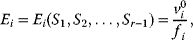

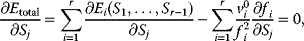

In the second approach, the metabolic objective is directly written as a function of the enzyme levels, mediated through enzyme-dependent steady-state concentrations and fluxes. The enzyme levels are optimized for a high fitness value, either at a given total enzyme investment or with a cost term for enzymes (following Eq. (11.1), with a metabolic objective scoring the stationary fluxes and concentrations and a cost function scoring the enzyme investments). Given the fitness function, we can compute optimal protein profiles and study how they should be adapted after perturbations. Modeling approaches of this sort will be discussed further below.

Measurements of Enzyme Fitness Functions

The fitness as a function of enzyme levels can also be explored by experiments. If the fitness function is given by the cell growth rate, the fitness landscape can be experimentally screened by varying the expression of a protein and recording the resulting growth rates [1]. The protein levels in E. coli, for instance, can be varied by manipulating the ribosome binding sites. By modifying the ribosome binding sites of several genes combinatorially, one can produce a library of bacterial clones with different expression profiles of these genes. In this way, suitable expression profiles for a recombinant carotenoid synthesis pathway in E. coli have been determined [58]. A similar approach was applied to constitutive gene promoters in Saccharomyces cerevisiae. A large number of variants of the engineered violacein biosynthetic pathway, differing in their combinations of promoter strengths, were obtained by combinatorial assembly. Based on measured production rates, a statistical model could be trained to predict the preferential production of different pathway products depending on the promoter strengths [59].

Another way to measure the benefits from single genes is the genetic tug-of-war (gTOW) method [60], which allows for systematic measurements of maximal tolerable gene copy numbers, that is, copy numbers at which the selective pressure against an even higher expression begins to be strong. The method, established for the yeast S. cerevisiae, uses a plasmid vector whose copy number in cells typically ranges from 10 to 40. In a yeast strain with a deletion of the gene leu2 (coding for a leucine biosynthesis enzyme needed for growth on leucine-free media) and with plasmids containing a low-expression variant of the same gene, larger copy numbers of the plasmid lead to a growth advantage. Due to a selection for higher copy numbers, the typical numbers rise to more than 100. Now a second gene, the target gene under study, is inserted into the plasmid and is thus expressed depending on the plasmid's copy number. The gene's expression can have beneficial or (especially at very high copy numbers) deleterious fitness effects, which are overlaid with the (mostly beneficial) effect of leu2 expression. Eventually, the cells arrive at an optimal copy number, the number at which a small further change in copy number has no net effect because the marginal advantage (due to increased leu2 expression) and the marginal disadvantage (from increased target gene expression) cancel out. By measuring this optimal copy number, one can compare the fitness effects (or, a bit simplified, the maximal tolerable gene copy numbers) of different target genes.
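The balance between marginal advantage and marginal disadvantage can be illustrated with a small numerical sketch. The functional forms below (a saturating benefit from the leu2 marker and a linear burden from the target gene), the parameter values, and all function names are assumptions chosen for illustration only; they are not taken from the gTOW study.

```python
def fitness(copies, target_cost, k_half=50.0):
    """Toy fitness as a function of plasmid copy number (assumed forms)."""
    benefit = copies / (k_half + copies)  # assumed saturating benefit of the leu2 marker
    burden = target_cost * copies         # assumed linear burden of the target gene
    return benefit - burden


def optimal_copy_number(target_cost, max_copies=500):
    """Copy number with the highest toy fitness, found by exhaustive search."""
    return max(range(1, max_copies + 1), key=lambda n: fitness(n, target_cost))


# Two hypothetical target genes: one mildly and one strongly deleterious.
mild_gene = optimal_copy_number(target_cost=0.001)
toxic_gene = optimal_copy_number(target_cost=0.004)
print("optimal copy number, mild target gene: ", mild_gene)
print("optimal copy number, toxic target gene:", toxic_gene)
```

In this toy setting, the more burdensome target gene settles at a lower optimal copy number, which is the kind of comparison the method is designed to make.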

11.1.4 Metabolic Strategies

Fermentation and Respiration as Examples of High-Rate and High-Yield Strategies

The trade-off between high-flux and high-yield strategies is exemplified by two metabolic strategies in central metabolism, fermentation and respiration. Fermentation, realized by glycolysis, produces incompletely oxidized substances such as lactate or ethanol. In terms of yield, this is rather inefficient: only two ATP molecules are produced per glucose molecule. Exactly because of this, however, large amounts of Gibbs energy are dissipated, which suppresses backward fluxes and thereby increases the metabolic flux. Respiration, performed by the TCA cycle and oxidative phosphorylation, uses oxygen to oxidize carbohydrates completely into CO2. It has a much higher yield than fermentation, producing up to 36 molecules of ATP per glucose molecule. However, this leaves little Gibbs energy for driving the reactions, so more enzyme may need to be invested to obtain the same ATP production flux. The details depend on the concentrations of extracellular compounds (e.g., oxygen and carbon dioxide), which affect the overall Gibbs free energy of the pathway.

Cells tend to use high-yield strategies under nutrient limitation and in multicellular organisms (although microbes such as E. coli can also be engineered to use high-yield strategies [61]). Low-yield strategies, such as additional fermentation on top of respiration in yeast (Crabtree effect) [62] and the Warburg effect in cancer cells [63], are preferred when nutrients are abundant and in situations of strong competition. Low-yield strategies may be profitable when enzyme levels are costly or constrained, or when the capacity for respiration is limited. For instance, when the maximal capacity of oxidative phosphorylation has been reached (because of limited space on mitochondrial membranes), additional fermentation could increase the ATP production flux [64]. Moreover, a higher thermodynamic driving force may lead to a higher ATP production per time at the same total enzyme investment, which in turn would allow for faster growth [53,65].

Paradoxically, low-yield strategies can outcompete high-yield strategies even though they perform worse on their own. Grown separately on equal substrates, a high-yield strategy leads to higher cell densities (in continuous cultures) and longer survival (in batch cultures). However, when grown on a shared food source, microbes using a low-yield strategy initiate a competition in which they use their waste of nutrients as a weapon to outperform yield-efficient microbes. Therefore, inefficient metabolism can provide a selection advantage for individual cells that is paid for by a permanent loss for the cell population as a whole. But this is not the end of the story: excreted fermentation products, such as lactate or ethanol, can serve as nutrients for other cells or for later times. Thus, depending on the frame of description, fermentation can also appear as a cooperative strategy. In Section 11.4, we will see how the social behavior of microbes is framed by evolutionary game theory.
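The difference between separate and shared cultures can be made concrete with a minimal batch-culture simulation. The sketch below assumes Monod-type uptake with identical saturation constants, a simple Euler integration, and made-up yields and uptake rates; the function `batch_culture` and all numbers are hypothetical and serve only to illustrate the argument.

```python
def batch_culture(n_high, n_low, substrate=10.0, t_end=30.0, dt=0.001):
    """Euler simulation of two strains feeding on one substrate pool.

    Assumed toy parameters: the high-yield strain takes up substrate slowly,
    the low-yield strain takes it up three times faster at half the yield.
    """
    yield_high, uptake_high_max = 1.0, 1.0
    yield_low, uptake_low_max = 0.5, 3.0
    k_m = 1.0  # assumed common saturation constant
    for _ in range(round(t_end / dt)):
        saturation = substrate / (k_m + substrate)
        uptake_high = uptake_high_max * saturation * n_high
        uptake_low = uptake_low_max * saturation * n_low
        n_high += yield_high * uptake_high * dt
        n_low += yield_low * uptake_low * dt
        substrate = max(substrate - (uptake_high + uptake_low) * dt, 0.0)
    return n_high, n_low


# Each strain alone, then both strains sharing the same substrate pool.
high_alone, _ = batch_culture(0.01, 0.0)
_, low_alone = batch_culture(0.0, 0.01)
high_mixed, low_mixed = batch_culture(0.01, 0.01)

print("final density alone  (high-yield, low-yield):", high_alone, low_alone)
print("final density shared (high-yield, low-yield):", high_mixed, low_mixed)
```

Under these assumptions, the high-yield strain reaches the higher density when grown alone, whereas in the shared culture the low-yield strain dominates and the total density stays below what the high-yield strain would have reached by itself.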

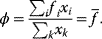

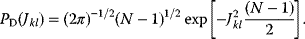

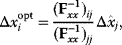

Trade-off between Product Yield and Enzyme Cost

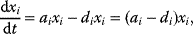

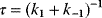

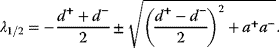

Aside from the most typically studied variant of glycolysis, called Embden–Meyerhof–Parnas (EMP) pathway, there are other variants of glycolysis with different ATP yields. EMP glycolysis produces two ATP molecules from one molecule of glucose and is common in eukaryotes. An alternative variant, the Entner–Doudoroff (ED) pathway, produces one ATP molecule only (see Figure 11.6). If the two variants are compared at equal ATP production rates, the ED pathway consumes twice as much glucose. Nevertheless, many bacteria use the ED pathway, either as an alternative or in addition to the EMP pathway.

Figure 11.6 Comparison between two variants of glycolysis. The EMP pathway shows a higher ATP yield (two ATP molecules per molecule of glucose) than the ED pathway (one ATP molecule per glucose molecule). However, its enzyme demand per ATP flux is more than twice as high [45].

We already saw that pathways with lower yields may be justified by their larger energy dissipation, which can lower the enzyme demand. By modeling the energetics of both pathways, Flamholz et al. [55] showed that the enzyme demand of the EMP pathway, at equal ATP production rates, is about twice as high. Thus, the choice between the two pathways will depend on what is more critical in a given environment – an efficient usage of glucose or a low enzyme demand. If ATP production matters less (e.g., because other ATP sources, such as respiration or photosynthesis, exist), or if glucose can be assumed to be abundant (as in the case of Zymomonas mobilis, which is adapted to very large glucose concentrations), the ED pathway will be preferable. This prediction has been confirmed by a broad comparison of microbes with different lifestyles [55].
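The condition under which the ED pathway becomes preferable can be written down in a back-of-the-envelope form. The following is only a sketch under assumptions that are not part of the cited analysis: costs are taken to be linear, with a hypothetical weight $\alpha$ per unit of glucose consumed and a weight $\beta$ per unit of enzyme invested, and the enzyme demand per ATP flux is written as $\varepsilon$ for the ED pathway and $\rho\,\varepsilon$ for the EMP pathway (with $\rho \approx 2$ according to the text above). Per unit of ATP production flux, the EMP pathway consumes $1/2$ glucose and the ED pathway consumes $1$ glucose, so the costs are

$$C_{\mathrm{EMP}} = \frac{\alpha}{2} + \beta\,\rho\,\varepsilon, \qquad C_{\mathrm{ED}} = \alpha + \beta\,\varepsilon .$$

The ED pathway is cheaper whenever $C_{\mathrm{ED}} < C_{\mathrm{EMP}}$, that is, whenever

$$\alpha < 2\,(\rho - 1)\,\beta\,\varepsilon ,$$

which for $\rho = 2$ reduces to $\alpha < 2\,\beta\,\varepsilon$. In words, the ED pathway wins if glucose is cheap (small $\alpha$, e.g., at abundant glucose) or if enzyme is expensive (large $\beta$).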

Molenaar et al. modeled the choice between high-yield and low-yield strategies as a problem of optimal resource allocation [34]. In their models, cells can choose between two pathways for biomass precursor production: one with high cost and high yield and another one with low cost and low yield. Given a fixed substrate concentration and aiming at maximal growth, cells can allocate a fixed total amount of protein resources between the two pathways. After numerical optimization, the simulated cells employ the high-yield pathway when substrate is scarce, and the low-yield pathway when the substrate level increases. The two cases correspond, respectively, to slow-growth and fast-growth conditions.
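The qualitative switch between strategies can be reproduced with a much simpler toy problem than the models of Ref. [34]. The sketch below is not their model: it assumes a fixed, saturable substrate supply, two pathways with made-up yields and catalytic efficiencies, and a protein budget normalized to 1 that is split between the pathways by brute-force search. All names and numbers are hypothetical.

```python
def substrate_supply(s, v_max=20.0, k_m=1.0):
    """Assumed saturable substrate supply as a function of concentration s."""
    return v_max * s / (k_m + s)


def best_allocation(s, y_high=1.0, k_high=1.0, y_low=0.3, k_low=10.0, steps=200):
    """Scan the protein fraction given to the high-yield pathway.

    Returns (growth, phi_high, v_high, v_low) for the best allocation found.
    The high-yield pathway is costly (low flux per protein, k_high), the
    low-yield pathway is cheap (high flux per protein, k_low).
    """
    supply = substrate_supply(s)
    best = None
    for i in range(steps + 1):
        phi_high = i / steps
        # Substrate goes first to the high-yield pathway, the rest to the other.
        v_high = min(k_high * phi_high, supply)
        v_low = min(k_low * (1.0 - phi_high), supply - v_high)
        growth = y_high * v_high + y_low * v_low
        if best is None or growth > best[0]:
            best = (growth, phi_high, v_high, v_low)
    return best


for s in (0.05, 0.5, 5.0, 100.0):
    growth, phi_high, v_high, v_low = best_allocation(s)
    share = v_high / (v_high + v_low)
    print(f"s = {s:6.2f}  growth = {growth:.3f}  high-yield flux share = {share:.2f}")
```

With these assumed parameters, the optimum uses only the high-yield pathway when substrate is scarce (growth is substrate-limited) and only the low-yield pathway when substrate is abundant (growth is protein-limited), with mixed usage in between.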

11.1.5 Optimal Metabolic Adaptation

Metabolic enzymes show characteristic expression patterns across the metabolic network, but also characteristic temporal patterns during adaptation. Mechanistically, expression patterns follow, for instance, from the regulation functions encoded in the genes' promoter sequences (see Section 9.3). If a pathway is controlled by a single transcription factor, the enzymes in that pathway will tend to show a coherent expression. However, we may still ask why the regulation system works in this way: why is it that transcription factor regulons and metabolic pathway structures match, even if the metabolic network and its transcriptional regulation evolve independently? In terms of function, coherent expression patterns may simply be the best way to regulate metabolism. This hypothesis can be studied by models in which enzyme profiles control the metabolic state and are chosen to optimize the metabolic performance.

Optimal Adaptation of Enzyme Activities

Using such models, we can address a number of questions. If a metabolic state is perturbed, how should cells adapt their enzyme levels? And, in particular, if an enzyme level itself is perturbed, how should the other enzymes respond? To predict such adaptations, we may consider a kinetic pathway model and determine its enzyme levels from an optimality principle as in Eq. (11.1). We start in an initially optimal state. When an external perturbation is applied, this will change the fitness landscape in enzyme space and shift the optimum point. Similarly, when a knockdown forces one enzyme level to a lower value, this will lead to a new, constrained optimum for the other enzymes. In both cases, the cell should adapt its enzyme activities to reach the new optimum. Given a model, the necessary enzyme adaptations can be computationally optimized.
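As a minimal sketch of the knockdown scenario, consider a hypothetical two-enzyme pathway. The flux formula (a series-resistance form), the saturating benefit, the linear enzyme cost, and the grid search are all assumptions made for illustration and do not correspond to a specific model from the text.

```python
def fitness(e1, e2, cost_per_enzyme=0.05):
    """Toy fitness: saturating benefit of the pathway flux minus enzyme cost."""
    flux = e1 * e2 / (e1 + e2)     # assumed flux of a two-step chain
    benefit = flux / (1.0 + flux)  # assumed saturating benefit
    return benefit - cost_per_enzyme * (e1 + e2)


GRID = [i / 100 for i in range(1, 501)]  # candidate enzyme levels 0.01 ... 5.00


def unconstrained_optimum():
    """Best (e1, e2) on the grid when both enzyme levels are free."""
    return max(((e1, e2) for e1 in GRID for e2 in GRID),
               key=lambda levels: fitness(*levels))


def optimum_given_e1(e1):
    """Best e2 on the grid when e1 is forced to a given value."""
    return max(GRID, key=lambda e2: fitness(e1, e2))


e1_opt, e2_opt = unconstrained_optimum()
e2_after_knockdown = optimum_given_e1(1.0)  # knockdown forces e1 down to 1.0

print("initial optimum (e1, e2):", e1_opt, e2_opt)
print("optimal e2 after knockdown of e1:", e2_after_knockdown)
```

In this toy pathway, forcing the first enzyme to a lower level makes the second enzyme less useful, so the constrained optimum also has a lower level of the second enzyme (an adaptive downregulation).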

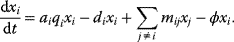

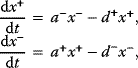

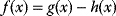

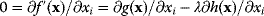

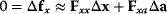

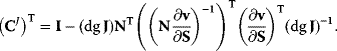

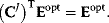

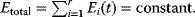

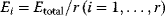

A mathematical approach for such scenarios was developed in Ref. [20]: under the assumption of small perturbations, the optimal adaptations can be directly computed from the metabolic response coefficients and the local curvatures of the fitness landscape (i.e., the Hessian matrix). Consider a kinetic model with steady-state metabolite concentrations and fluxes (in a state vector  ) depending on control variables (e.g., enzyme activities, in a vector

) depending on control variables (e.g., enzyme activities, in a vector  ) and environment variables (in a vector

) and environment variables (in a vector  ). The possible control profiles

). The possible control profiles  are scored by a fitness function

are scored by a fitness function

which describes the trade-off between a metabolic objective  and a cost function

and a cost function  . The optimality principle requires that, given the environment

. The optimality principle requires that, given the environment  , the control profile

, the control profile  must be chosen to maximize

must be chosen to maximize  . As a condition for a local optimum, the gradient

. As a condition for a local optimum, the gradient  must vanish and the Hessian matrix

must vanish and the Hessian matrix  must be negative definite. Now we assume a change

must be negative definite. Now we assume a change  of the environment parameters, causing a shift in the optimal enzyme profile. The adaptation

of the environment parameters, causing a shift in the optimal enzyme profile. The adaptation  that brings the cell from the old to the new optimal state can be determined as follows. To provide an optimal adaptation, the change

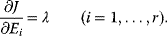

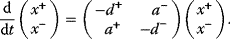

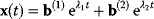

that brings the cell from the old to the new optimal state can be determined as follows. To provide an optimal adaptation, the change  of the fitness gradient during the adaptation must vanish. We approximate the fitness landscape to second order around the initial optimum and obtain the optimality condition

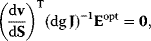

of the fitness gradient during the adaptation must vanish. We approximate the fitness landscape to second order around the initial optimum and obtain the optimality condition

and thus

The Hessian matrices  and

and  can be obtained from gradients and curvatures of

can be obtained from gradients and curvatures of  and

and  and from the first- and second-order response coefficients of the state variables in

and from the first- and second-order response coefficients of the state variables in  with respect to

with respect to  or

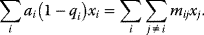

or  . In the second adaptation scenario, we assume that one control variable

. In the second adaptation scenario, we assume that one control variable  is constrained to some higher or lower value (in the case of enzyme levels, by overexpression or knockdown). How should the other control variables be adapted? If a change

is constrained to some higher or lower value (in the case of enzyme levels, by overexpression or knockdown). How should the other control variables be adapted? If a change  is enforced on the

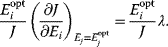

is enforced on the  enzyme, the optimal adaptation profile of all enzymes can be written as

enzyme, the optimal adaptation profile of all enzymes can be written as

where  automatically assumes its perturbed value

automatically assumes its perturbed value  . Even if the Hessian matrix

. Even if the Hessian matrix  is unknown, its symmetry implies a general symmetry between perturbation and response. We can see this as follows: in the initial optimum state, the matrix

is unknown, its symmetry implies a general symmetry between perturbation and response. We can see this as follows: in the initial optimum state, the matrix  must be negative definite, so the elements

must be negative definite, so the elements  will all be negative. Since

will all be negative. Since  itself is symmetric, the coefficients

itself is symmetric, the coefficients  must form a matrix with a symmetric sign pattern. This means that whenever a knockdown of enzyme A necessitates an adaptive upregulation of enzyme B, a knockdown of B should also necessitate an adaptive upregulation of A. An analogous relationship holds for cases of adaptive downregulation. Symmetric behavior, as predicted, has been found in expression data.

must form a matrix with a symmetric sign pattern. This means that whenever a knockdown of enzyme A necessitates an adaptive upregulation of enzyme B, a knockdown of B should also necessitate an adaptive upregulation of A. An analogous relationship holds for cases of adaptive downregulation. Symmetric behavior, as predicted, has been found in expression data.
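Both adaptation formulas can be checked numerically on a small example. In the sketch below, `F_UU` stands for the Hessian of the fitness with respect to the control variables and `F_UX` for the mixed second derivatives with respect to control and environment variables; this notation, the made-up matrix entries, and all function names are assumptions for illustration. The linear solver is a plain Gaussian elimination, so the example needs nothing beyond the standard library.

```python
def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        known = sum(aug[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (aug[i][n] - known) / aug[i][i]
    return x


def environment_adaptation(f_uu, f_ux, dx):
    """Optimal adaptation to an environment change: du = -F_uu^{-1} F_ux dx."""
    rhs = [-sum(f_ux[i][k] * dx[k] for k in range(len(dx)))
           for i in range(len(f_uu))]
    return solve(f_uu, rhs)


def knockdown_adaptation(f_uu, j, du_j):
    """Optimal adaptation of all enzymes when enzyme j is forced to change by du_j."""
    n = len(f_uu)
    unit = [1.0 if i == j else 0.0 for i in range(n)]
    column_j = solve(f_uu, unit)  # j-th column of the inverse Hessian
    return [column_j[i] / column_j[j] * du_j for i in range(n)]


# Hypothetical negative definite Hessian for three enzymes, one environment variable.
F_UU = [[-2.0, 0.5, 0.0],
        [0.5, -1.5, -0.3],
        [0.0, -0.3, -1.0]]
F_UX = [[0.4], [-0.2], [0.1]]

du_env = environment_adaptation(F_UU, F_UX, [0.1])
du_kd = knockdown_adaptation(F_UU, j=1, du_j=-0.5)

# ratios[i][j] is the adaptation of enzyme i per unit enforced change of enzyme j.
ratios = [[knockdown_adaptation(F_UU, j, 1.0)[i] for j in range(3)]
          for i in range(3)]

print("adaptation to environment change:", du_env)
print("adaptation to knockdown of enzyme 1:", du_kd)
print("sign-symmetric responses:",
      all(ratios[i][j] * ratios[j][i] > 0 for i in range(3) for j in range(3)))
```

In this example, a knockdown of the middle enzyme calls for a downregulation of the first enzyme and an upregulation of the third, and the matrix of response ratios has the symmetric sign pattern derived above.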

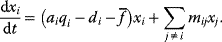

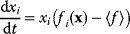

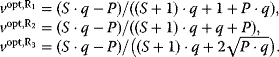

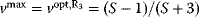

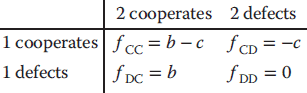

Optimal Control Profiles Reflect the Objective and the System Controlled