12

Models of Biochemical Systems

- 12.1 Metabolic Systems

- 12.2 Signaling Pathways

- 12.3 The Cell Cycle

- 12.4 The Aging Process

- Exercises

- References

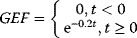

Systems biology attempts to understand structure, function, regulation, or development of biological systems by combining experimental and computational approaches. We must acknowledge that different parts of cellular organization are studied and understood in different ways and to different extent. This is related to diverse experimental techniques that can be used to measure the abundance of metabolites, proteins, mRNA, or other types of compounds. For example, enzyme kinetic measurements are performed for more than a century, while mRNA measurements (e.g., RNAseq or microarray data) or protein measurements (e.g., as mass spectrometry analysis) have been developed more recently. Not all data can be provided with the same accuracy and reproducibility. These and other complications in studying life caused a nonuniform progress in modeling different parts of cellular life. Moreover, the diversity of scientific questions and the availability of computational tools to tackle them provoked the development of very different types of models for different biological processes. Stimulating or modeling of biochemical networks is the recent development to assemble parts lists for various networks, such as genome-scale metabolic reconstructions, compilations of all compounds in signaling networks, lists of all transcription factors, and many more. These can often be found in specialized databases such as Reactome, KEGG, and BioModels (see Chapter 16). In this chapter, we will introduce a number of classical and more recent areas of systems biological research. Specifically, we discuss modeling of metabolic systems, signaling pathways, cell-cycle regulation, and development and differentiation, primarily with ODE systems.

12.1 Metabolic Systems

12.1.1 Basic Elements of Metabolic Modeling

Metabolic networks are on the one side defined by the enzymes converting substrates into products in a reversible or irreversible manner. Without enzymes, those reactions are essentially impossible or too slow. On the other side, the networks are characterized by the metabolites that are converted by the various enzymes. Biochemical studies have revealed a number of important pathways including catabolic pathways and pathways of the energy metabolism, such as glycolysis, the pentose-phosphate pathway, the tricarboxylic acid (TCA) cycle, and oxidative phosphorylation, and anabolic pathways including gluconeogenesis, amino acid synthesis pathways, synthesis of fatty acids, synthesis of nucleic acids, and synthesis and degradation of more complex molecules. Databases such as the Kyoto Encyclopedia of Genes and Genomes Pathway (KEGG, http://http://www.genome.jp/kegg/pathway.html) provide a comprehensive overview of the composition of pathways in various organisms [3].

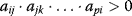

Here, we will focus on their characteristics that are essential for modeling. Chapter 2 provides a summary of the first steps to build a model. First, we sketch the metabolites and the converting reactions in a cartoon to get an overview and an intuitive understanding (Figure 12.1). Based on that cartoon and on further information, we set the limits of our model. To this end, we consider what kind of question we want to answer with our model, what information in terms of qualitative and quantitative data is available, and how can we make the model as simple as possible but as comprehensive as necessary. Then, for every compound, which is part of the system, we formulate the balance equations (see also Section 3.1) summing up all reactions that produce the compound (with a positive sign) and all reactions that degrade the compound (with a negative sign). At this stage, the model is suited for a network analysis, such as the stoichiometric analysis (Section 3.1) or, with some additional information, flux balance analysis (Section 3.2). In order to study the dynamics of the system, we must add kinetic descriptions to the individual reactions (Chapter 4). Keep in mind that the reaction kinetics may depend on

- the concentrations of substrates and products (in Figure 12.1: G1P and G6P),

- specific parameters such as KM-values,

- the amount and activity of the catalyzing enzyme (here hidden in the Vmax values, see Chapter 4), and

- the activity of modifiers, which are not shown in the example in Figure 12.1.

Figure 12.1 Designing metabolic models. (a) Basic elements of metabolic networks comprise metabolites and the connecting reactions. The reactions can be specific for one enzyme (e.g., the phosphoglucomutase) or they can lump several processes such as transport or branch to synthesis. (b) Basic steps for designing structured dynamic models: first, one has to choose the system of interest and its limits. Second, all metabolite changes have to be balanced according to the contribution reactions (Chapter 3). Next, all reactions are described with kinetic expressions. Note that the shown expressions are a reasonable choice; other expressions can be appropriate depending on the available knowledge and the purpose of the model.

In the following, we will discuss in more detail models for three example pathways: the upper glycolysis, the full glycolysis, and the threonine synthesis.

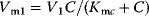

12.1.2 Toy Model of Upper Glycolysis

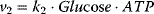

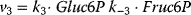

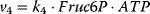

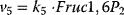

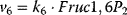

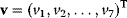

A first model of the upper part of glycolysis is depicted in Figure 12.2. It comprises six reactions and six metabolites. Note that we neglect the formation of phosphate Pi here.

Figure 12.2 Toy model of the upper glycolysis. The model involves the reactions glucose uptake (v1), the phosphorylation of glucose under conversion of ATP to ADP by the enzyme hexokinase (v2), intramolecular rearrangments by the enzyme phosphoglucoisomerase (v3), a second phosphorylation (and ATP/ADP conversion) by phosphofructokinase (v4), dephosphorylation without involvement of ATP/ADP by fructose-bisphosphatase (v5), and splitting of the hexose (6-C-sugar) into two trioses (3-C-sugars) by aldolase (v6). Abbreviations: Gluc-6P – glucose-6-phosphate, Fruc-6P – fructose-6-phosphate, Fruc-1,6P2 – fructose-1,6-bisphosphate.

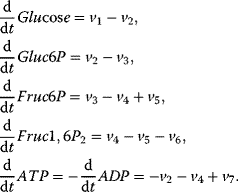

The corresponding ODE system reads

With mass action kinetics, the rate equations read  ,

,  ,

,  ,

,  ,

,  ,

,  , and

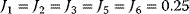

, and  . Given the values of the parameters

. Given the values of the parameters  and the initial concentrations, one may simulate the time behavior of the network as depicted in Figure 12.3.

and the initial concentrations, one may simulate the time behavior of the network as depicted in Figure 12.3.

Figure 12.3 Dynamic behavior of the upper glycolysis model (Figure 12.2 and Eq. (12.1)). Initial conditions at t = 0 Glucose(0) = Gluc-6P(0) = Fruc-6P(0) = Fruc-1,6P2(0) = 0 and ATP(0) = ADP(0) =0.5 (arbitrary units). Parameters: k1 = 0.25, k2 = 1, k3 = 1, k-3 = 1, k4 = 1, k5 = 1, k6 = 1, and k7 = 2.5. The steady-state concentrations are Glucosess = 0.357, Gluc-6Pss = 0.964, Fruc-6Pss = 0.714, Fruc-1,6P2ss = 0.25, and ATPss = 0.7, and ADPss = 0.2. The steady-state fluxes are  ,

,  , and

, and  . (a) Time course plots (concentration versus time), (b) phase plane plot (concentrations versus concentration of ATP with varying time); all curves start at ATP = 0.5 for t = 0.

. (a) Time course plots (concentration versus time), (b) phase plane plot (concentrations versus concentration of ATP with varying time); all curves start at ATP = 0.5 for t = 0.

We see starting with zero concentrations of all hexoses (here, glucose, fructose, and their phosphates), that they accumulate until production and degradation are balanced. They approach a steady state. ATP rises and decreases to the same extent as ADP decreases and rises, and their total remains constant. This is due to the conservation of adenine nucleotides, which could be revealed by stoichiometric analysis (Section 3.1).

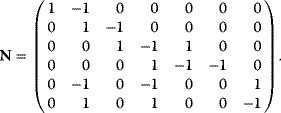

For this upper glycolysis model, the concentration vector is S = (Glucose, Gluc6P, Fruc6P, Fruc1,6P2, ATP, ADP)T, the vector of reaction rates is  , and the stoichiometric matrix reads

, and the stoichiometric matrix reads

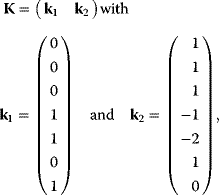

It comprises  reactions and has

reactions and has  . Thus, the kernel matrix (see Chapter 3) has two linear independent columns. A possible representation is

. Thus, the kernel matrix (see Chapter 3) has two linear independent columns. A possible representation is

Figure 12.4 shows the flux and concentration control coefficients (see Section 4.2) for the model of upper glycolysis in gray scale (see scaling bar). Reaction v1 has a flux control of 1 over all steady-state fluxes, reactions v2, v3, v4, and v7 have no control over fluxes; they are determined by v1. Reactions v5 and v6 have positive or negative control over J4, J5, and J7, respectively, since they control the turnover of fructose phosphates.

Figure 12.4 Flux and concentration control coefficients for the glycolysis model given by the equation system (12.1) with the parameters given in the legend to Figure 12.3. Values of the coefficients are indicated in gray scale: gray means zero control, white or light gray indicates positive control, and dark gray or black negative control, respectively.

The concentration control shows a more interesting pattern. As a rule of thumb, it holds that producing reactions have a positive control and degrading reactions have a negative control, such as v1 and v2 for glucose. But also distant reactions can exert concentration control, such as v4 to v6 over Gluc6P.

More comprehensive models of glycolysis can be used to study details of the dynamics, such as the occurrence of oscillations or the effect of perturbations. Examples are the models of Hynne et al. [4] or the Reuss group [5,6] or a model based on genome-scale reconstructions [3]. An overview of the most important reactions in glycolysis is given in Figure 12.5.

Figure 12.5 Full glycolysis models. (a) Main reactions and metabolites. 1-inGlc – influx of glucose, 2-GlcTrans – transport of glucose, 3-HK – hexokinase, 4-storage/synthesis, 5-PGI – phosphoglucoisomerase, 6-PFK – phosphofructokinase, 7-FBP – fructosbisphosphatase, 8-ALD – aldolase, 9-TIM – triosephosphate isomerase, 10-GPD – NAD-dependent glycerol-3-phosphate dehydrogenase, 11-GPP – glycerol-3-phosphate phosphatase, 12-difGlyc – (facilitated) diffusion of glycerol over membrane, 13-GAPDH – glyceraldehyde phosphate dehydrogenase, 14-phosphoglycerate kinase + phosphoglycerate mutase + enolase, 15-PK pyruvate kinase, 16-synthesis, 17-PDC – pyruvate decarboxylase, 18-diffusion of acetate, 19-ADH – alcohol dehydrogenase, 20-difEth – ethanol diffusion, 21-ATP consumption, 22-AK – adenylate kinase. (b) Network of reactions connected by common metabolites, (c) network of metabolites connected by common reactions.

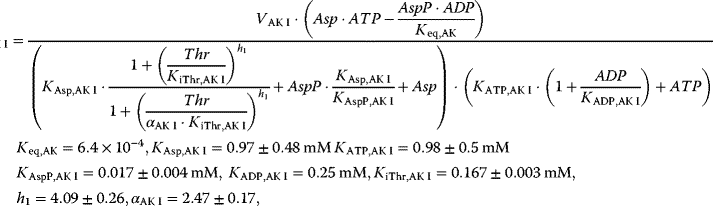

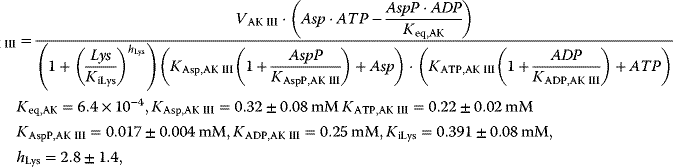

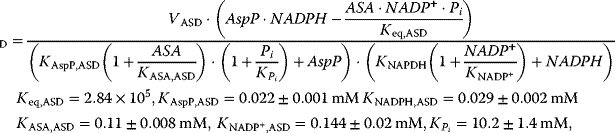

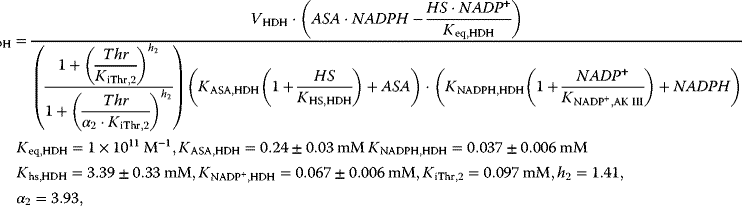

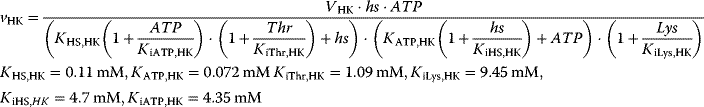

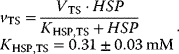

12.1.3 Threonine Synthesis Pathway Model

Threonine is an amino acid and it is essential for birds and mammals. The synthesis pathway from aspartate involves five steps. It is known for a long time and has attracted some interest with respect to its economic industrial production for a variety of uses. The kinetics of all the five enzymes from E. coli have been studied extensively, the complete genome sequence of this organism is now known and there is an extensive range of genetic tools available. The intensive study and the availability of kinetic information make it a good example for metabolic modeling of the pathway (Figure 12.6).

Figure 12.6 Model of the threonine pathway. Aspartate is converted into threonine in five steps. Threonine exerts negative feedback on its producing reactions. The pathway consumes ATP and NADPH.

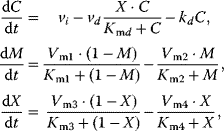

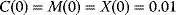

The reaction system can be described with the following set of differential equations:

with

This system has no nontrivial steady state, that is, no steady state with nonzero flux, since aspartate is always degraded, while threonine is only produced. The same imbalance holds for the couples ATP/ADP and NADPH+H+/NADP+. The dynamics is shown in Figure 12.7.

Figure 12.7 Dynamics of the threonine pathway model. The parameters are given in the text.

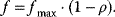

Threonine exerts feedback inhibition on the pathway producing it. This is illustrated in Figure 12.8. The higher the threonine concentration, the lower the rates of the inhibited reactions. The effect is that production of threonine is downregulated as long as its level is sufficient, thereby saving aspartate and energy.

Figure 12.8 Effect of feedback inhibition in the model depicted in Figure 12.6. Threonine is a product of the pathway but exhibits negative feedback on its producing reactions. Consequently, their rates decrease with increasing threonine concentration leading to a homeostasis.

12.2 Signaling Pathways

12.2.1 Function and Structure of Intra- and Intercellular Communication

On the molecular level, signaling involves the same type of processes as metabolism: production or degradation of substances, molecular modifications (mainly phosphorylation, also methylation, acetylation), localization of compounds to specific cell compartments, and activation or inhibition of reactions. From a modeling point of view, there are some important differences between signaling and metabolism. (i) Signaling pathways serve for information processing and transfer of information, while metabolism provides mainly mass transfer. (ii) The metabolic network is determined by the present set of enzymes catalyzing the reactions. Signaling pathways involve compounds of different type; they may form highly organized complexes, and may dynamically assemble upon occurrence of the signal. (iii) The quantity of converted material is high in metabolism (amounts are usually given in concentrations in the order of µM or mM) compared to the number of molecules involved in signaling processes (in yeast or E. coli cells, typical abundance of proteins in signal cascades is in the order of 10–104 molecules per cell). (iv) The different amounts of components have an effect on the concentration ratio of catalysts and substrates. In metabolism, this ratio is usually low, that is, the enzyme concentration is much lower than the substrate concentration, which gives rise to the quasi-steady state assumption used in Michaelis–Menten kinetics (Section 4.1). In signaling processes, amounts of catalysts and their substrates are frequently in the same order of magnitude.

Cells have a broad spectrum of receiving and processing signals; not all of them can be considered here. A typical sequence of events in signaling pathways is shown in Figure 12.9 and proceeds as follows.

Figure 12.9 Visualization of the signaling paradigm. The receptor is stimulated by a ligand or another kind of signal and becomes active. The active receptor initiates the internal signaling cascade: Level I response comprises processes at the cellular membrane such as receptor phosphorylation, G protein cycle, recruitment of specific proteins, establishment of transient interactions, and modifications. Level II response includes all interactions and mutual modifications of signaling proteins in the cytosol. They lead primarily to the activation of downstream components, but can also exert positive or negative feedback within the current signaling cascade or crosstalk to other areas of the signaling network. Level III response denotes immediate actions of signaling molecules on functional proteins such as metabolic enzymes or cell-cycle proteins leading to immediate regulatory effects. These interactions can be fast, i.e. within a few minutes in yeast. For level IV response, an activated protein enters the nucleus, transcription factors are activated or deactivated. The transcription factors regulate the transcription rate of a set of genes. The absolute amount or the relative changes in protein concentrations alter the state of the cell and trigger the long-term response to the signal.

The signal – a substance acting as ligand, hormones, growth factors or a physical stimulus, oxidative stress, and osmotic stress – approaches the cell surface and is perceived by a transmembrane receptor. The receptor changes its state from susceptible to active and triggers subsequent processes within the cell. The active receptor stimulates an internal signaling cascade.

In order to distinguish between fast and slow processes and to characterize the main location of events, we discriminate between responses on levels I–IV. Level I response comprises all processes that take place at the membrane due to receptor activation. These processes may include the recruitment of a number of proteins to the vicinity of the receptor that were previously located either at the membrane or in the cytosol. These proteins may form large heteromeric complexes and modify each other and the receptor and they form a local environment of information transmission. They may also serve as anchor for cell structures such as actin cables.

Level II response includes all downstream interaction of signaling molecules in the cytoplasm. There occur different types of protein–protein interactions such as complex formation, phosphorylation and dephosphorylation, or phosphotransfer, as well as targeting for degradation. The effects include both forward signaling as well as positive and negative feedback. Also crosstalk between signaling pathways that are primarily considered responsible for transmission of specific signals is observed. Note that the concept of crosstalk is strongly related to the perception of signaling by the investigator. If we consider separate signaling pathways that transmit the information about a specific cue, we can also consider their crosstalk, that is, interactions between components of diverse signaling pathways that lead to a divergence of the information to other targets than those of the specific pathway (measures for crosstalk are discussed below). If we consider all signaling processes as one large signaling network, then we simply observe various interactions in this network.

Level III response comprises all direct effects of active signaling molecules on other cellular networks such as phosphorylation of cell-cycle components or metabolic enzymes. These effects proceed on fast time scales and may lead to immediate cellular responses.

Level IV response is comparatively slow. The sequence of state changes within the signaling pathway crosses the nuclear membrane. Eventually, transcription factors are activated or deactivated. Such a transcription factor changes its binding properties to regulatory regions on the DNA upstream of a set of genes; the transcription rate of these genes is altered (typically increased). The either newly produced proteins or the changes in protein concentration cause an adaptation response of the cell to the signal. Also on level IV, signaling pathways are regulated by a number of control mechanisms including feedback and feed-forward modulation.

This is somehow the typical picture; many pathways may work in completely different manner. As example, an overview on signaling pathways that are stimulated in yeast stress response is given in Figure 12.10. More extended versions of the yeast signaling network with focus on the precise types of interactions can be found in Ref. [7].

Figure 12.10 Overview of signaling pathways in yeast: the HOG pathway is activated by osmotic shock, the pheromone pathway is activated by a pheromone from cells of opposite mating type, and the filamentous growth pathway is stimulated by starvation conditions. In each case, the signal interacts with the receptor. The receptor activates a cascade of intracellular processes including complex formations, phosphorylations, and transport steps. A MAP kinase cascade is a particular part of many signaling pathways. Eventually, transcription factors are activated that regulate the expression of a set of genes. Beside the indicated connections further interactions of components are possible. For example, crosstalk may occur, that is, the activation of the downstream part of one pathway by a component of another pathway. This is supported by the frequent incidence of some proteins like Ste11 in the scheme.

With respect to the activating or inhibiting effect of an external cue on cellular response features, signaling pathways may exhibit different types of logical behavior. Positive and negative logic of signaling systems can be found:

- Before activation, the signaling pathway is OFF (or the activity of all components is on a basic level). Ligand binding or physical cue leads to activation or switch-on of the pathway's components. Examples: MAPK pathway, G proteins.

- Before activation, some components of the signaling pathway are ON or have high activity or are produced and degraded with high rates. Ligand binding or a physical cue reduces the activity or interrupts the production process. Examples: Phosphorelay system (constant consumption and relay of ATP-derived phosphate group is interrupted by osmotic stress), Wnt signaling pathway (constant production and destruction of β-catenin is modified as a consequence of Wnt binding through interruption of destruction and, hence, accumulation of β-catenin).

Figure 12.11 Schematic representation of receptor activation: the binding of the ligand at the external side leads to conformational changes at the internal side allow for new interactions or further modifications.

These examples are explained below in more detail along with the general structure of signal pathway building blocks (Section 12.2.3).

12.2.2 Receptor–Ligand Interactions

Many receptors are transmembrane proteins. They receive the signal and transmit it. Upon signal sensing they change their conformation. In the active form, they are able to initiate a downstream process within the cell (Figure 12.11).

The simplest concept of the interaction between receptor R and ligand L is reversible binding to form the active complex LR:

The dissociation constant is calculated as

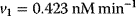

Typical values for  are

are

Cells have the ability to regulate the number and the activity of specific receptors, for example in order to weaken the signal transmission during long-term stimulation. Balancing production and degradation regulates the number of receptors. Phosphorylation of serine, threonine or tyrosine residues of the cytosolic domain by protein kinases mainly regulates the activity.

Hence, a more realistic scenario for ligand–receptor interaction is depicted in Figure 12.12.

Figure 12.12 Receptor activation by ligand. (a) Schematic representation: L – ligand, Ri – inactive receptor, Rs – susceptible receptor, Ra – active receptor. vp* - production steps, vd* - degradation steps, other steps – transistion between inactive, susceptible, and active state of receptor. (b) Time course of active (red line) and susceptible (blue line) receptor after stimulation with  α-factor at

α-factor at  . The total number of receptors is 10.000. The concentration of the active receptor increases immediately and then declines slowly, while the susceptible receptor is effectively reduced to zero.

. The total number of receptors is 10.000. The concentration of the active receptor increases immediately and then declines slowly, while the susceptible receptor is effectively reduced to zero.

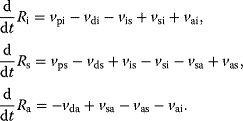

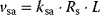

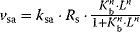

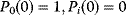

We assume that the receptor may be present in an inactive state Ri or in a susceptible state Rs. The susceptible form can interact with the ligand to form the active state Ra. The inactive or the susceptible forms are produced from precursors ( ), all three forms may be degraded (

), all three forms may be degraded ( ). The rates of production and degradation processes as well as the equilibria between the different states are influenced by the cell state, for example, by the cell-cycle stage. In general, the dynamics of this scenario can be described by the following set of differential equations:

). The rates of production and degradation processes as well as the equilibria between the different states are influenced by the cell state, for example, by the cell-cycle stage. In general, the dynamics of this scenario can be described by the following set of differential equations:

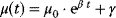

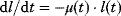

For the production terms, we may either assume constant values or (as mentioned above) rates that depend on the actual cell state. The degradation terms might be assumed to be linearly dependent on the concentration of their substrates ( ). This may also be a first guess for the state changes of the receptor (e.g.,

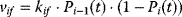

). This may also be a first guess for the state changes of the receptor (e.g.,  ). The receptor activation is dependent on the ligand concentration (or any other value related to the signal). A linear approximation of the respective rate is

). The receptor activation is dependent on the ligand concentration (or any other value related to the signal). A linear approximation of the respective rate is  . If the receptor is a dimer or oligomer, it might be sensible to include this information into the rate expression as

. If the receptor is a dimer or oligomer, it might be sensible to include this information into the rate expression as  , where

, where  denotes the binding constant to the monomer and n denotes the Hill coefficient (Eq. (4.44)).

denotes the binding constant to the monomer and n denotes the Hill coefficient (Eq. (4.44)).

12.2.3 Structural Components of Signaling Pathways

Signaling pathways constitute often highly complex networks, but it has been observed that they are frequently composed of typical building blocks. These components include Ras proteins, G protein cycles, phosphorelay systems, and MAP kinase cascades. In this chapter, we will discuss their general composition and function as well as modeling approaches.

12.2.3.1 G Proteins

G proteins are essential parts of many signaling pathways. The reason for their name is that they bind the guanine nucleotides GDP and GTP. They are heterotrimers, that is, consist of three different subunits. G proteins are associated to cell surface receptors with a heptahelical transmembrane structure, the G protein-coupled receptors (GPCR). Signal transduction cascades involving (i) such a transmembrane surface receptor, (ii) an associated G protein, and (iii) an intracellular effector that produces a second messenger play an important role in cellular communication and are well studied [9,10]. In humans, G protein-coupled receptors mediate responses to light, flavors, odors, numerous hormones, neurotransmitters, and other signals [11–13]. In unicellular eukaryotes, receptors of this type mediate signals that affect such basic processes as cell division, cell–cell fusion (mating), morphogenesis, and chemotaxis [11,14–16].

The cycle of G protein activation and inactivation is shown in Figure 12.13. When GDP is bound, the G protein α subunit (Gα) is associated with the G protein βγ heterodimer (Gβγ) and is inactive. Agonist binding to a receptor promotes guanine nucleotide exchange; Gα releases GDP, binds GTP, and dissociates from Gβγ. The dissociated subunits Gα or Gβγ, or both, are then free to activate target proteins (downstream effectors), which initiates signaling. When GTP is hydrolyzed, the subunits are able to reassociate. Gβγ antagonizes receptor action by inhibiting guanine nucleotide exchange. RGS (regulator of G protein signaling) proteins bind to Gα, stimulate GTP hydrolysis, and thereby reverse G protein activation. This general scheme can also be applied to the regulation of small monomeric Ras-like GTPases, such as Rho. In this case, the receptor, Gβγ, and RGS are replaced by GEF and GAP (see below).

Figure 12.13 Activation cycle of G protein. (a) Without activation, the heterotrimeric G protein is bound to GDP. Upon activation by the activated receptor, an exchange of GDP with GTP occurs and the G protein is divided into GTP-bound Gα and the heterodimer Gβγ. Gα-bound GTP is hydrolyzed, either slowly in reaction vh0 or fast in reaction vh1 supported by the RGS protein. GDP-bound Gα can reassociate with Gβγ (reaction vsr). (b) Time course of G protein activation. The total number of molecules is 10.000. The concentration of GDP-bound Gα is low for the whole period due to fast complex formation with the heterodimer Gβγ.

Direct targets include different types of effectors, such as adenylyl cyclase, phospholipase C, exchange factors for small GTPases, some calcium and potassium channels, plasma membrane Na+/H+ exchangers, and certain protein kinases [10,17–19]. Typically, these effectors produce second messengers or other biochemical changes that lead to stimulation of a protein kinase or a protein kinase cascade (or, as mentioned, are themselves a protein kinase). Signaling persists until GTP is hydrolyzed to GDP and the Gα and Gβγ subunits reassociate, completing the cycle of activation. The strength of the G protein-initiated signal depends on (i) the rate of nucleotide exchange, (ii) the rate of spontaneous GTP hydrolysis, (iii) the rate of RGS-supported GTP hydrolysis, and (iv) the rate of subunit reassociation. RGS proteins act as GTPase-activating proteins (GAPs) for a variety of different Gα classes. Thereby, they shorten the lifetime of the activated state of a G protein, and contribute to signal cessation. Furthermore, they may contain additional modular domains with signaling functions and contribute to diversity and complexity of the cellular signaling networks [20–23].

12.2.3.2 Small G Proteins

Small G proteins are monomeric G proteins with molecular weight of 20–40 kDa. Like heterotrimeric G proteins, their activity depends on the binding of GTP. More than 100 small G proteins have been identified. They belong to five families: Ras, Rho, Rab, Ran, and Arf. They regulate a wide variety of cell functions as biological timers that initiate and terminate specific cell functions and determine the periods of time [24].

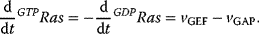

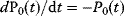

The transition from GDP-bound to GTP-bound states is catalyzed by a guanine nucleotide exchange factor (GEF), which induces exchange between the bound GDP and the cellular GTP. The reverse process is facilitated by a GTPase-activating protein (GAP), which induces hydrolysis of the bound GTP. Its dynamics can be described with the following equation with appropriate choice of the rates vGEF and vGAP:

Figure 12.14 illustrates the wiring of a Ras protein and the dependence of its activity on concentration ratio of the activating GEF and the deactivating GAP.

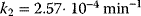

Figure 12.14 The Ras activation cycle. (a) Wiring diagram: GEF supports the transition form GDP-bound to GTP-bound states to activate Ras, while GAP induces hydrolysis of the bound GTP resulting in Ras deactivation. (b) Steady states of active Ras depending on the concentration ratio of its activator GEF and the inhibitior GAP. We compare the behavior for a model with mass action kinetics (curve a) with the behavior obtained with Michaelis–Menten kinetics for decreasing values of the KM-value (curves b–d). The smaller the KM-value, the more sigmoidal the response curve leading to an almost step-like shape in case of very low KM-values. Parameters:  ,

,  (all panels), (b)

(all panels), (b)  , (c)

, (c)  , (d)

, (d)  .

.

Mutations of the Ras protooncogenes (H-Ras, N-Ras, and K-Ras) are found in many human tumors. Most of these mutations result in the abolishment of normal GTPase activity of Ras. The Ras mutants can still bind to GAP, but cannot catalyze GTP hydrolysis. Therefore, they stay active for a long time.

12.2.3.3 Phosphorelay Systems

Most phosphorylation events in signaling pathways take place under consumption of ATP. Phosphorelay (or phosphotransfer) systems employ another mechanism: after an initial phosphorylation using ATP (or another phosphate donor), the phosphate group is transferred directly from one protein to the next without further consumption of ATP (or external donation of phosphate). Examples are the bacterial phosphoenolpyruvate:carbohydrate phosphotransferase [25–28], the two-component system of E. coli, or the Sln1 pathway involved in osmoresponse of yeast [29].

Figure 12.15 shows a scheme of a phosphorelay system from the high osmolarity glycerol (HOG) signaling pathway in yeast as well as a schematic representation of a phosphorelay system.

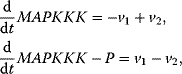

Figure 12.15 Schematic representation of a phosphorelay system. (a) Phosphorelay system belonging to the Sln1-branch of the HOG pathway in yeast. (b) General scheme of phosphorylation and dephosphorylation in a phosphorelay.

This pathway is organized as follows [30]. It involves the transmembrane protein Sln1, which is present as a dimer. Under normal conditions, the pathway is active, since Sln1 continuously autophosphorylates at a histidine residue, Sln1H-P, under consumption of ATP. Subsequently, this phosphate group is transferred to an aspartate group of Sln1 (resulting in Sln1A-P), then to a histidine residue of Ypd1 and finally to an aspartate residue of Ssk1. Ssk1 is continuously dephosphorylated by a phosphatase. Without stress, the proteins are mainly present in their phosphorylated form. The pathway is blocked by an increase in the external osmolarity and a concomitant loss of turgor pressure in the cell. The phosphorylation of Sln1 stops, the pathway runs out of transferable phosphate groups, and the concentration of Ssk1 rises. This constitutes the downstream signal. The temporal behavior of a generalized phosphorelay system (Figure 12.15) can be described with the following set of ODEs:

For the ODE system in Eq. (12.12), the following conservation relations hold

The existence of conservation relations is in agreement with the assumption that production and degradation of the proteins occurs on a larger time scale as the phosphorylation events.

The temporal behavior of a phosphorelay system upon external stimulation is shown in Figure 12.16. Before the stimulus, the concentrations of A, B, and C assume low, but nonzero, levels due to continuous flow of phosphate groups through the network. During stimulation, they increase one after the other up to a maximal level that is determined by the total concentration of each protein. After removal of stimulus, all three concentrations return quickly to their initial values.

Figure 12.16 Dynamics of the phosphorelay system. (a) Time courses after stimulation from time 100 to time 500 (a.u.) by decreasing  to zero. (b) Dependence of steady-state level of the phosphorelay output, C, on the cascade activation strength,

to zero. (b) Dependence of steady-state level of the phosphorelay output, C, on the cascade activation strength,  , and the terminal dephosphorylation,

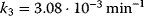

, and the terminal dephosphorylation,  . Parameter values:

. Parameter values:  ,

,  ,

,  .

.

Figure 12.16b illustrates the dependence of the sensitivity of the phosphorelay system on the value of the terminal dephosphorylation. For a low rate constant  , for example,

, for example,  , the concentration C is low (almost) independent of the value of

, the concentration C is low (almost) independent of the value of  , while for high

, while for high  , for example,

, for example,  , the concentration C is (almost always) maximal. Changing of

, the concentration C is (almost always) maximal. Changing of  leads to a change of C-levels only in the range

leads to a change of C-levels only in the range  .

.

This system is an example for a case where we can draw initial conclusions about feasible parameter values just from the network structure and the task of the module.

12.2.3.4 MAP Kinase Cascades

Mitogen-activated protein kinases (MAPKs) are a family of serine/threonine kinases that transduce signals from the cell membrane to the nucleus in response to a wide range of stimuli. Independent or coupled kinase cascades participate in many different intracellular signaling pathways that control a spectrum of cellular processes, including cell growth, differentiation, transformation, and apoptosis. MAPK cascades are widely involved in eukaryotic signal transduction, and these pathways are conserved from yeast to mammals.

A general scheme of a MAPK cascade is depicted in Figure 12.17. This pathway consists of several levels (usually three to four), where the activated kinase at each level phosphorylates the kinase at the next level down the cascade. The MAP kinase (MAPK) is at the terminal level of the cascade. It is activated by the MAPK kinase (MAPKK) by phosphorylation of two sites, conserved threonine and tyrosine residues. The MAPKK is itself phosphorylated at serine and threonine residues by the MAPKK kinase (MAPKKK). Several mechanisms are known to activate MAPKKKs by phosphorylation of a tyrosine residue. In some cases, the upstream kinase may be considered as a MAPKKK kinase (MAPKKKK). Dephosphorylation of either residue is thought to inactivate the kinases, and mutants lacking either residue are almost inactive. At each cascade level, protein phosphatases can inactivate the corresponding kinase, although it is in some cases a matter of debate whether this reaction is performed by an independent protein or by the kinase itself as autodephosphorylation. Also ubiquitin-dependent degradation of phosphorylated proteins is reported.

Figure 12.17 Schematic representation of the MAP kinase cascade. An upstream signal (often by a further kinase called MAP kinase kinase kinase kinase) causes phosphorylation of the MAPKKK. The phosphorylated MAPKKK in turn phosphorylates the protein at the next level. Dephosphorylation is assumed to occur continuously by phosphatases or autodephosphorylation.

Although they are conserved through species, elements of the MAPK cascade got different names in various studied systems. Some examples are listed in Table 12.1 (see also [31]).

Table 12.1 Names of the components of MAP kinase pathways in different organisms and different pathways.

| Organism | Budding yeast | Xensopus oocytes | Human, cell-cycle regulation | |||

| HOG pathway | Pheromone pathway | p38 pathway | JNK pathway | |||

| MAPKKK | Ssk2/Ssk22 | Ste11 | Mos | Rafs (c-, A-, and B-) | Tak1 | MEKKs |

| MAPKK | Pbs2 | Ste7 | MEK1 | MEK1/2 | MKK3/6 | MKK4/7 |

| MAPK | Hog1 | Fus3 | p42 MAPK | ERK1/2 | p38 | JNK1/2 |

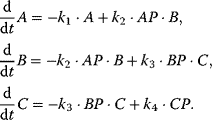

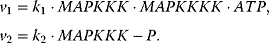

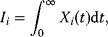

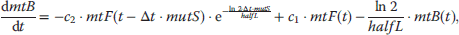

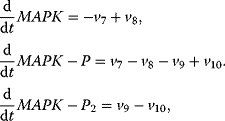

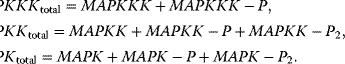

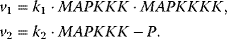

In the following, we will present typical modeling approaches and then discuss functional properties of signaling cascades. The dynamics of a MAPK cascade may be represented by the following ODE system:

The variables in the ODE system fulfill a set of moiety conservation relations, irrespective of the concrete choice of expression for the rates  . It holds

. It holds

The conservation relations reflect that we do not consider production or degradation of the involved proteins in this model. This is justified by the supposition that protein production and degradation take place on a different time scale as signal transduction.

The choice of the expressions for the rates is a matter of elaborateness of experimental knowledge and of modeling taste. We will discuss here different possibilities. Assuming only mass action results in linear and bilinear expressions such as

The kinetic constants  are first- (i even) and second-order (i odd) rate constants. In these expressions, the concentrations of the donor and acceptor of the transferred phosphate group, ATP and ADP, are not explicitly considered but included in the rate constants

are first- (i even) and second-order (i odd) rate constants. In these expressions, the concentrations of the donor and acceptor of the transferred phosphate group, ATP and ADP, are not explicitly considered but included in the rate constants  and

and  . Considering ATP and ADP explicitly results in

. Considering ATP and ADP explicitly results in

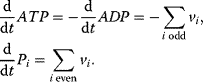

In addition, we have to care about the ATP–ADP balance and add three more differential equations

Here, we find two more conservation relations, the conservation of adenine nucleotides,  and the conservation of phosphate groups

and the conservation of phosphate groups

One may take into account that enzymes catalyze all steps [32] and therefore consider Michaelis–Menten kinetics for the individual steps [33]. Taking again the first and second reactions as examples for kinase and phosphatase steps, we get

where  is a first-order rate constant,

is a first-order rate constant,  and

and  are Michaelis constants, and

are Michaelis constants, and  denotes a maximal enzyme rate. Reported values for Michaelis constants are 15 nM [33], 46 nM and 159 nM [34], and 300 nM [32]. For maximal rates, values of about

denotes a maximal enzyme rate. Reported values for Michaelis constants are 15 nM [33], 46 nM and 159 nM [34], and 300 nM [32]. For maximal rates, values of about  [33] are used in models.

[33] are used in models.

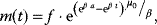

The performance of MAPK cascades, that is, their ability to amplify the signal, to enhance the concentration of the double phosphorylated MAPK notably, and the speed of activation, depends crucially on the kinetic constants of the kinases,  , and phosphatases,

, and phosphatases,  (Eq. (12.19)), and, moreover, on their ratio (see Figure 12.18). If the ratio

(Eq. (12.19)), and, moreover, on their ratio (see Figure 12.18). If the ratio  is low (phosphatases stronger than kinases), then the amplification is high, but at very low absolute concentrations of phosphorylated MAPK. High values of

is low (phosphatases stronger than kinases), then the amplification is high, but at very low absolute concentrations of phosphorylated MAPK. High values of  ensure high absolute concentrations of MAPK-P2, but with negligible amplification. High values of both

ensure high absolute concentrations of MAPK-P2, but with negligible amplification. High values of both  and

and  ensure fast activation of downstream targets.

ensure fast activation of downstream targets.

Figure 12.18 Parameter dependence of MAPK cascade performance. Shown are steady-state simulations for changing values of rate constants for kinases, k+, and phosphatases, k− (in arbitrary units). Upper row: Absolute values of the output signal MAPK-PP depending on the input signal (high: MAPKKKK = 0.1 or low: MAPKKKK = 0.01) for varying ratio of k+/k−. Second row: ratio of the output signal for high versus low input signal (MAPKKKK = 0.1 or MAPKKKK = 0.01) for varying ratio of k+/k− right panel: time course of MAPK activation for different values of k+ and a ratio k+/k− = 20.

Frequently, the proteins of MAPK cascades interact with scaffold proteins. In this case, a reversible assembly of oligomeric protein complexes that includes both enzymatic proteins and proteins without known enzymatic activity precedes the signal transduction. These nonenzymatic components can serve as scaffolds or anchors to the plasma membrane and regulate the efficiency, specificity, and localization of the signaling pathway.

12.2.3.5 The Wnt/β-Catenin Signaling Pathway

The Wnt/β-catenin signaling pathway is an important regulatory pathway for a multitude of processes in higher eukaryotic systems. It has been found due to its role in tumor growth, especially through the use of mouse models of cancer and oncogenic retroviruses. However, it is also important in normal embryonic development. It is relevant for cell fate specification, cell proliferation, and cell migration or formation of body axis. Recently, its role in stem cell differentiation and reprogramming has been discussed.

In fact, the Wnt signaling comprises not just one pathway, but at least three pathways, that is, the canonical Wnt pathway, the noncanonical planar cell polarity pathway, and the noncanonical Wnt/calcium pathway. All three pathways employ binding of the Wnt ligand to the Frizzled receptors, but have different downstream architecture and effects (Figure 12.19). Wnt denotes a family of 19 genes (in vertebrates, other figures hold for other branches of the animal kingdom).

Figure 12.19 The Wnt signaling pathway. The receptor Frizzled is a G-protein-coupled receptor and frequently occurs together with a coreceptor such as LRP. Without Wnt signaling, β-catenin is continuously produced and degraded by the destruction complex comprising APC, axin, GSK3, and CK1, resulting in low β-catenin concentrations. Upon binding of the Wnt ligand, the phosphoprotein Dsh is recruited to the receptor. The destruction complex is reorganized, partially binding to the membrane proteins. β-catenin can accumulate, enter the nucleus and activate transcription factors leading to gene expression changes.

The major function of the canonical pathway is to stimulate the accumulation of β-catenin, which is normally kept at a low concentration through balanced continuous production and degradation by the destruction complex comprising adenomatous polyposis coli (APC), axin (axin1 or axin2), glycogen synthase kinase 3 (GSK3), and casein kinase 1a (CK1). This complex targets β-catenin for ubiquitination and proteasomal digestions. The G-protein-coupled receptor Frizzled often functions together with coreceptors, such as the lipoprotein receptor-related protein LRP or receptor tyrosine kinases. When the ligand Wnt binds to the receptor and its coreceptors, they recruit cytoplasmic proteins to the membrane (level 1 response, see Section 12.2.1): the phosphoprotein Dsh is recruited to the receptor, axin binds to LPR. The destruction complex is reorganized and the GSK3 activity is inhibited. β-catenin can accumulate (level 2 response). Among other consequences, it enters the nucleus and activates transcription factors leading to gene expression changes (level 4 response).

An early experiment-based model of the dynamics of the destruction complex and its regulation upon Wnt signaling investigated the quantitative roles of APC and axin (present in low amounts) [35]. They investigated which reaction steps would have high control (see concentration control coefficients introduced in Chapter 4) over the β-catenin concentration and found that strong negative control is exerted by the assembly of the destruction complex and the binding of β-catenin to the destruction complex. Positive control is exerted by reactions leading to the disassembly of the destruction complex, the dissociation of β-catenin from the destruction complex, the axin degradation, and the β-catenin synthesis.

Another model has analyzed the robustness of β-catenin levels in response to Wnt signaling [36]. They found that while the absolute levels of β-catenin are very sensitive to many types of perturbations, the fold changes in response to Wnt are not. Here fold change means the level of β-catenin after Wnt-stimulation divided by the level before Wnt-stimulation. An incoherent feed-forward loop can cause such a behavior [37].

The following minimal model is suited to demonstrate major features of the canonical Wnt signaling pathway in wild-type and some mutant conditions [38]. The wiring of the model is shown in Figure 12.20. The ODE system reads

Figure 12.20 Wiring of the minimal model for the Wnt signaling pathway. (a) Basic model, (b) model including the incoherent feedforward loop via consideration of the repressor.

Conservation relations:

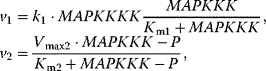

Rate equations:

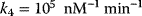

The parameter values are  ,

,  ,

, ,

, ,

,  ,

, ,

,  ,

,  ,

,  ,

, ,

,  ,

,  ,

,  ,

,  ,

,  ,

,  ,

,  ,

,  , and

, and  .

.

The dynamics and steady-state behavior of the model are shown in Figure 12.21.

Figure 12.21 Time course simulations for the minimal model of the Wnt pathway as shown in Figure 12.20 and given by set (equation set 12.23). Panel (a) shows the response of all components to a Wnt stimulus of 100 nM at time point 10 min. All other panels: Wnt stimulus varies from 0.01 to 100 nM. We see saturation for Wnt above 10 nM. Panels (a)–(c) show simulations for the case without repressor, while panels (d)–(f) include a repressor. The effect of repressor is most obvious for mRNA dynamics shown in (c) and (f) where the presence of repressor leads to overshoot behavior.

12.2.4 Analysis of Dynamic and Regulatory Features of Signaling Pathways

12.2.4.1 Detecting Feedback Loops in Dynamical Systems

The existence of positive or negative feedback cycles in complex signaling networks that are described with ODE systems can be derived from the analysis of the Jacobian matrix M (see Chapter 4, Chapter 15, Eq. (15.39)) [39,40]. With  (

( ) being differential equations describing the dynamics of component xi and

) being differential equations describing the dynamics of component xi and  being the terms of the Jacobian

being the terms of the Jacobian  , we can classify single nonzero terms of the Jacobian or sequences thereof as follows:

, we can classify single nonzero terms of the Jacobian or sequences thereof as follows:

for some i denotes direct autocatalysis,

for some i denotes direct autocatalysis, for some i denotes direct autoinhibition,

for some i denotes direct autoinhibition, for some i and j denotes a positive feedback including two components. It has been termed symbiosis for both

for some i and j denotes a positive feedback including two components. It has been termed symbiosis for both  or competition for both

or competition for both  ,

, for some i and j denotes a negative feedback including two components,

for some i and j denotes a negative feedback including two components, is a positive feedback of length

is a positive feedback of length  , and

, and is a negative feedback of length

is a negative feedback of length  .

.

Obviously, a feedback loop should not contain more than n elements  in a sequence (otherwise, it would be repetitive). Zero elements of the Jacobian in a sequence cut a feedback. An odd number of negative elements causes negative feedback, while a positive (or zero) number of negative terms leads to positive feedback.

in a sequence (otherwise, it would be repetitive). Zero elements of the Jacobian in a sequence cut a feedback. An odd number of negative elements causes negative feedback, while a positive (or zero) number of negative terms leads to positive feedback.

Signaling pathways can exhibit interesting dynamic and regulatory features, such as other regulatory pathway. Dynamic motifs are discussed in detail in Section 8.2.

Among the various regulatory features of signaling pathways, negative feedback has attracted outstanding interest. It also plays an important role in metabolic pathways, for example, in amino acid synthesis pathways (Section 12.1.3), where a negative feedback signal from the amino acid at the end to the precursors at the beginning of the pathway prevents an overproduction of this amino acid. The implementation of the feedback and the respective dynamic behavior show a wide variation. Feedback can bring about limit cycle-type oscillations in cell-cycle models. In signaling pathways, negative feedback may cause an adjustment of the response or damped oscillations [41].

12.2.4.2 Quantitative Measures for Properties of Signaling Pathways

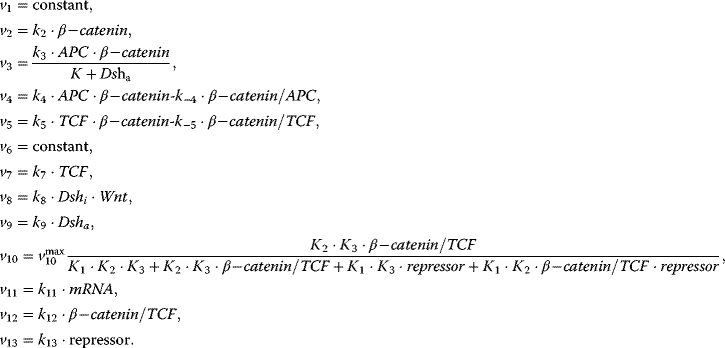

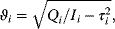

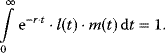

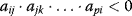

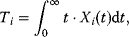

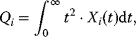

The dynamic behavior of signaling pathways can be quantitatively characterized by a number of measures [42]. Let  be the time-dependent concentration of the kinase i (or another interesting compound). The signaling time

be the time-dependent concentration of the kinase i (or another interesting compound). The signaling time  describes the average time to activate the kinase i. The signal duration

describes the average time to activate the kinase i. The signal duration  gives the average time during which the kinase i remains activated. The signal amplitude

gives the average time during which the kinase i remains activated. The signal amplitude  is a measure for the average concentration of activated kinase i. The following definitions have been introduced. The quantity

is a measure for the average concentration of activated kinase i. The following definitions have been introduced. The quantity

is the total amount of active kinase i generated during the signaling period, that is, the integrated response of  (the area covered by a plot

(the area covered by a plot  versus time). Further measures are

versus time). Further measures are

The signaling time can now be defined as

that is, as the average of time, analogous to mean value of a statistical distribution. Note that other definitions for characteristic times have been introduced in Section 6.3. The signal duration

gives a measure of how extended the signaling response is around the mean time (compatible to standard deviation). The signal amplitude is defined as

In a geometric representation, this is the height of a rectangle whose length is  and whose area equals the area under the curve

and whose area equals the area under the curve  . Note that this measure might be different from the maximal value

. Note that this measure might be different from the maximal value  that

that  assumes during the time course.

assumes during the time course.

Figure 12.22 shows a signaling pathway with successive activation of compounds and the respective time courses. The characteristic quantities are given in Table 12.2 and for  shown in the figure.

shown in the figure.

Figure 12.22 Characteristic measures for dynamic variables. (a) Wiring of an example signaling cascade with  ,

,  ,

,  ,

,  , and

, and  for

for  . (b) Time courses of

. (b) Time courses of  . The horizontal lines indicate the concentration measures for

. The horizontal lines indicate the concentration measures for  , that is, the calculated signal amplitude

, that is, the calculated signal amplitude  and

and  , and vertical lines indicate time measures for

, and vertical lines indicate time measures for  , that is, the time

, that is, the time  of

of  , the characteristic time

, the characteristic time  and the dotted vertical lines cover the signaling time

and the dotted vertical lines cover the signaling time  .

.

Table 12.2 Dynamic characteristics of the signaling cascade shown in Figure 12.22.

| Compound | Integral, Ii | Maximum,  |

Time  , ,  |

Characteristic time,  |

Signal duration,  |

Signal amplitude, Ai |

| X1 | 0.797 | 0.288 | 0.904 | 2.008 | 1.458 | 0.273 |

| X2 | 0.695 | 0.180 | 1.871 | 3.015 | 1.811 | 0.192 |

| X3 | 0.629 | 0.133 | 2.855 | 4.020 | 2.109 | 0.149 |

12.2.4.3 Crosstalk in Signaling Pathways

Signal transmission in cellular context is often not unique in the sense that an activated protein has a high specificity for another protein. Instead, there might be crosstalk, that is, proteins of one signaling pathway interact with proteins assigned to another pathway. Strictly speaking, the assignment of proteins to one pathway is often arbitrary and may result, for example, from the history of their function discovery. Frequently, protein interactions form a network with various binding, activation, and inhibition events, such as illustrated in Figure 12.10.

In order to arrive at quantitative measures for crosstalk, let us consider the simplified scheme in Figure 12.23: External signal α binds to receptor A, which activates target A via a series of interactions. In the same way, external signal β binds to receptor B, which activates target B. In addition, there are events that mediate an effect of receptor B on target A.

Figure 12.23 Crosstalk of signaling pathways.

Let us concentrate on pathway A and define all measures from its perspective. Signaling from α via RA to TA shall be called intrinsic, while signals from β to TA are extrinsic. Further, in order to quantify crosstalk, we need a quantitative measure for the effect of an external stimulus on the target. If we are interested in the level of activation, such a measure might be the integral over the time course of TA (Eq. (12.27)), its maximal value or its amplitude (Eq. (12.31)). If we are interested in the response timing, we can consider the time of the maximal value or the characteristic time (for an overview on measures see Table 12.2). Whatever measure we chose, shall be denoted by X in the following.

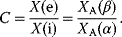

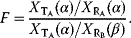

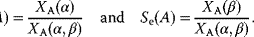

The crosstalk C is the activation of pathway A by the extrinsic stimulus β relative to the intrinsic stimulus α

The fidelity F [43] is viewed as output due to the intrinsic signal divided by the output in response to the extrinsic signal and reads in our notation:

In addition, the intrinsic sensitivity  expresses how an extrinsic signal modifies the intrinsic signal when acting in parallel, while the extrinsic sensitivity

expresses how an extrinsic signal modifies the intrinsic signal when acting in parallel, while the extrinsic sensitivity  quantifies the effect of the intrinsic signal on the extrinsic signal, respectively [44]:

quantifies the effect of the intrinsic signal on the extrinsic signal, respectively [44]:

Table 12.3 shows how different specificity values can be interpreted.

Table 12.3 Effect of crosstalk on signaling.

| Se > 1 | Se < 1 | |

| Si > 1 | Mutual signal inhibition | Dominance of intrinsic signal |

| Si < 1 | Dominance of extrinsic signal | Mutual signal amplification |

12.3 The Cell Cycle

Growth and reproduction are major characteristics of life. Crucial for these is the cell division by which one cell divides into two and all constituents of the mother cell are distributed between the two daughter cells. This requires that the genome has to be duplicated in advance, which is accomplished by the DNA polymerase, an enzyme that utilizes deoxynucleotide triphosphates (dNTPs) for the synthesis of two identical DNA double strands from one parent double strand. In this case, each single strand acts as template for one of the new double strands. Several types of DNA polymerases have been found in prokaryotic and eukaryotic cells, but all of them synthesize DNA only in 5′ → 3′ direction. In addition to DNA polymerase, several further proteins are involved in DNA replication: proteins responsible for the unwinding and opening of the mother strand (template double strand), proteins that bind the opened single-stranded DNA and prevent it from rewinding during synthesis, an enzyme called primase that is responsible for the synthesis of short RNA primers that are required by the DNA polymerase for the initialization of DNA polymerization, and a DNA ligase that is responsible for linkage of DNA fragments that are synthesized discontinuously on one of the two template strands, because of the limitation to 5′ → 3′ synthesis. Like the DNA, also other cellular organelles have to be doubled, such as the centrosome involved in the organization of the mitotic spindle.

The cell cycle is divided into two major phases: the interphase and the M phase (Figure 12.25). The interphase is often a relatively long period between two subsequent cell divisions. Cell division itself takes place during M-phase and consists of two steps: first, the nuclear division in which the duplicated genome is separated into two parts, and second, the cytoplasmic division or cytokinesis, where the cell divides into two cells. The latter not only allocates the two separated genomes to each of the newly developing cells, but also distributes the cytoplasmic organelles and substances between them. Finally, the centrosome is replicated and divided between both cells as well.

Figure 12.25 The cell cycle is divided into the interphase, which is the period between two subsequent cell divisions, and the M phase, during which one cell separates into two. Major control points of the cell cycle are indicated by arrows. More details are given in the text.

DNA replication takes place during interphase in the so-called S phase (S = synthesis) of the cell cycle (Figure 12.25). This phase is usually preceded by a gap phase, G1, and followed by another gap phase, G2. From G1 phase, cells can also leave the cell cycle and enter a rest phase, G0. The interphase normally represents 90% of the cell cycle length. During interphase, the chromosomes are dispersed as chromatin in the nucleus. Cell division occurs during M phase, which follows the G2 phase, and consists of mitosis and cytokinesis. Mitosis is divided into different stages. During the first stage – the prophase – chromosomes condense into their compact form and the two centrosomes of a cell begin recruiting microtubules for the formation of the mitotic spindle. In later stages of mitosis, this spindle is used for the equal segregation of the chromatids of each chromosome to opposite cellular poles. During the following prometaphase, the nuclear envelope dissolves and the microtubules of the mitotic spindle attach to protein structures, called kinetochores, at the centromeres of each chromosome. In the following metaphase, all chromosomes line up in the middle of the spindle and form the metaphase plate. During anaphase, the proteins holding together both sister chromatids are degraded and each chromatid of a chromosome segregates into opposite directions. Finally, during telophase, new nuclear envelopes are recreated around the separated genetic materials and form two new nuclei. The chromosomes unfold again into chromatin. The mitotic reaction is often followed by a cytokinesis where the cellular membrane pinches off between the two newly separated nuclei and two new cells are formed.

The cell cycle is strictly controlled by specific proteins. When a certain checkpoint, the restriction point, in the G1 phase is passed, this leads to a series of specific steps that end up in cell division. At this point the cell checks, whether it has achieved a sufficient size and whether the external conditions are suitable for reproduction. The control system ensures that a new phase of the cycle is only entered if the preceding phase has been finished successfully. For instance, to enter a new M phase it has to be assured that DNA replication during S phase has been correctly completed.

Passage through the eukaryotic cell cycle is strictly regulated by periodic synthesis and destruction of cyclins that bind and activate cyclin-dependent kinases (CDKs). The term “kinase” expresses that their function is phosphorylation of proteins with controlling properties. A contrary function is carried out by a “phosphatase.” It dephosphorylates a previously phosphorylated protein and thereby toggles its activity. Cyclin-dependent kinase inhibitors (CKI) also play important roles in cell-cycle control by coordinating internal and external signals and impeding proliferation at several key checkpoints.

The general scheme of the cell cycle is conserved from yeast to mammals. The levels of cyclins rise and fall during the stages of the cell cycle. The levels of CDKs appear to remain constant during cell cycle, but the individual molecules are either unbound or bound to cyclins. In budding yeast, one CDK (Cdc28) and nine different cyclins (Cln1–Cln3, Clb1–Clb6) are found that seem to be at least partially redundant. In contrast, mammals employ a variety of different cyclins and CDKs. Cyclins include a G1 cyclin (cyclin D), S phase cyclins (A and E), and mitotic cyclins (A and B). Mammals have nine different CDKs (referred to as CDK1-9) that are important in different phases of the cell cycle. The anaphase-promoting complex (APC) triggers the events leading to destruction of the cohesions, thus allowing the sister chromatids to separate and degrades the mitotic cyclins. A comprehensive map of cell cycle-related protein–protein interactions can be found in large-scale reconstructions, that is, for budding yeast [45].

12.3.1 Steps in the Cycle

Let us take a course through the mammalian cell cycle starting in G1 phase. As the level of G1 cyclins rises, they bind to their CDKs and signal the cell to prepare the chromosomes for replication. When the level of S phase promoting factor (SPF) rises, which includes cyclin A bound to CDK2, it enters the nucleus and prepares the cell to duplicate its DNA (and its centrosomes). As DNA replication continues, cyclin E is destroyed, and the level of mitotic cyclins begins to increase (in G2). The M phase-promoting factor (the complex of mitotic cyclins with the M-phase CDK) initiates (1) assembly of the mitotic spindle, (2) breakdown of the nuclear envelope, and (3) condensation of the chromosomes. These events take the cell to metaphase of mitosis. At this point, the M-phase promoting factor activates the APC, which allows the sister chromatids at the metaphase plate to separate and move to the poles (anaphase), thereby completing mitosis. APC destroys the mitotic cyclins by coupling them to ubiquitin, which targets them for destruction by proteasomes. APC turns on the synthesis of G1 cyclin for the next turn of the cycle and it degrades geminin, a protein that has kept the freshly synthesized DNA in S phase from being rereplicated before mitosis.

A number of checkpoints ensure that all processes connected with cell cycle progression, DNA doubling and separation, and cell division occur correctly. At these checkpoints, the cell cycle can be aborted or arrested. They involve checks on completion of S phase, on DNA damage, and on failure of spindle behavior. If the damage is irreparable, apoptosis is triggered. An important checkpoint in G1 has been identified in both yeast and mammalian cells. Referred to as “Start” in yeast and as “restriction point” in mammalian cells, this is the point at which the cell becomes committed to DNA replication and completing a cell cycle [46–49]. All the checkpoints require the services of complexes of proteins. Mutations in the genes encoding some of these proteins have been associated with cancer. These genes are regarded as oncogenes. Failures in checkpoints permit the cell to continue dividing despite damage to its integrity. Understanding how the proteins interact to regulate the cell cycle has become increasingly important to researchers and clinicians when it was discovered that many of the genes that encode cell cycle regulatory activities are targets for alterations that underlie the development of cancer. Several therapeutic agents, such as DNA-damaging drugs, microtubule inhibitors, antimetabolites, and topoisomerase inhibitors, take advantage of this disruption in normal cell cycle regulation to target checkpoint controls and ultimately induce growth arrest or apoptosis of neoplastic cells.

For the presentation of modeling approaches, we will focus on the yeast cell cycle since intensive experimental and computational studies have been carried out using different types of yeast as model organisms. Mathematical models of the cell cycle can be used to tackle, for example, the following relevant problems:

- The cell seems to monitor the volume ratio of nucleus and cytoplasm and to trigger cell division at a characteristic ratio. During oogenesis, this ratio is abnormally small (the cells accumulate maternal cytoplasm), while after fertilization cells divide without cell growth. How is the dependence on the ratio regulated?

- Cancer cells have a failure in cell-cycle regulation. Which proteins or protein complexes are essential for checkpoint examination?

- What causes the oscillatory behavior of the compounds involved in the cell cycle?

12.3.2 Minimal Cascade Model of a Mitotic Oscillator

One of the first genes to be identified as being an important regulator of the cell cycle in yeast was cdc2/cdc28 [50], where cdc2 refers to fission yeast and cdc28 to budding yeast. Activation of the cdc2/cdc28 kinase requires association with a regulatory subunit referred to as a cyclin.

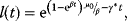

A minimal model for the mitotic oscillator involving a cyclin and the Cdc2 kinase has been presented by Goldbeter [51]. It covers the cascade of posttranslational modifications that modulate the activity of Cdc2 kinase during cell cycle. In the first cycle of the bicyclic cascade model, the cyclin promotes the activation of the Cdc2 kinase by reversible dephosphorylation. In the second cycle, the Cdc2 kinase activates a cyclin protease by reversible phosphorylation. The model was used to test the hypothesis that cell-cycle oscillations may arise from a negative feedback loop with delay, that is, that cyclin activates the Cdc2 kinase, while the Cdc2 kinase eventually triggers the degradation of the cyclin.

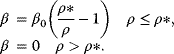

The minimal cascade model is represented in Figure 12.26. It involves only two main actors, cyclin and cyclin-dependent kinase. Cyclin is synthesized at constant rate,  , and triggers the transformation of inactive (M+) into active (M) Cdc2 kinase by enhancing the rate of a phosphatase,

, and triggers the transformation of inactive (M+) into active (M) Cdc2 kinase by enhancing the rate of a phosphatase,  . A kinase with rate

. A kinase with rate  reverts this modification. In the lower cycle, the Cdc2 kinase phosphorylates a protease (

reverts this modification. In the lower cycle, the Cdc2 kinase phosphorylates a protease ( ) shifting it from the inactive (X+) to the active (X) form. The activation of the cyclin protease is reverted by a further phosphatase with rate

) shifting it from the inactive (X+) to the active (X) form. The activation of the cyclin protease is reverted by a further phosphatase with rate  . The dynamics is governed by the following ODE system:

. The dynamics is governed by the following ODE system:

where C denotes the cyclin concentration; M and X represent the fractional concentrations of active Cdc2 kinase and active cyclin protease, respectively, while  and

and  are the fractions of inactive kinase and phosphatase, respectively.

are the fractions of inactive kinase and phosphatase, respectively.  values are Michaelis constants (Chapter 4).

values are Michaelis constants (Chapter 4).  and

and  are effective maximal rates (Chapter 4). Note that the use of Michaelis–Menten kinetics in the differential equations for the changes of M and X leads to the so-called Goldbeter–Koshland switch or ultrasensitivity [52], that is, a sharp switch in the activity of the downstream component when the concentration of the activating component reaches the Km value.

are effective maximal rates (Chapter 4). Note that the use of Michaelis–Menten kinetics in the differential equations for the changes of M and X leads to the so-called Goldbeter–Koshland switch or ultrasensitivity [52], that is, a sharp switch in the activity of the downstream component when the concentration of the activating component reaches the Km value.

Figure 12.26 Goldbeter's minimal model of the mitotic oscillator. (a) Illustration of the model comprising cyclin production and degradation, phosphorylation and dephosphorylation of Cdk1, and phosphorylation and dephosphorylation of the cyclin protease (see text). (b) Threshold-type dependence of the fractional concentration of active Cdk1 on the cyclin concentration. (c) Time courses of cyclin (C), active Cdk1 (M), and active cyclin protease (X) exhibition oscillations according to Eq. (12.35). (d) Limit cycle behavior, represented for the variables C and M. Parameter values:  , (

, ( ),

),  ,

,  ,

,  ,

,  ,

,  ,

,  , and

, and  . Initial conditions in (b) and (c) are

. Initial conditions in (b) and (c) are  . Units: µM and min−1.

. Units: µM and min−1.

This model involves only Michaelis–Menten-type kinetics, but no form of positive cooperativity. It can be used to test whether oscillations can arise solely as a result of the negative feedback provided by the Cdc2-induced cyclin degradation and of the threshold and time delay involved in the cascade. The time delay is implemented by considering posttranslational modifications (phosphorylation/dephosphorylation cycles  and

and  ). For certain parameters, they lead to a threshold in the dependence of steady-state values for M on C and for X on M (Figure 12.26b). Provided that this threshold exists, the evolution of the bicyclic cascade proceeds in a periodic manner (Figure 12.26c). Starting from low initial cyclin concentration, this value accumulates at constant rate, while M and X stay low. As soon as C crosses the activation threshold, M rises. If M crosses the threshold, X starts to increase sharply. X in turn accelerates cyclin degradation and consequently, C, M, and X drop rapidly. The resulting oscillations are of the limit cycle type. The respective limit cycle is shown in phase-plane representation in Figure 12.26d.

). For certain parameters, they lead to a threshold in the dependence of steady-state values for M on C and for X on M (Figure 12.26b). Provided that this threshold exists, the evolution of the bicyclic cascade proceeds in a periodic manner (Figure 12.26c). Starting from low initial cyclin concentration, this value accumulates at constant rate, while M and X stay low. As soon as C crosses the activation threshold, M rises. If M crosses the threshold, X starts to increase sharply. X in turn accelerates cyclin degradation and consequently, C, M, and X drop rapidly. The resulting oscillations are of the limit cycle type. The respective limit cycle is shown in phase-plane representation in Figure 12.26d.

12.3.3 Models of Budding Yeast Cell Cycle

Tyson, Novak, Chen and colleagues have developed a series of models describing the cell cycle of budding yeast in great detail [53–56]. These comprehensive models employ a set of assumptions that are summarized in the following.

The cell cycle is an alternating sequence of the transition from G1 phase to S/M phase, called “Start” (in mammalian cells it is called “restriction point”), and the transition from S/M to G1, called “Finish.” An overview is given in Figure 12.27.

Figure 12.27 Schematic representation of the yeast cell cycle (inspired by Fall et al. [60]). The outer ring represents the cellular events. Beginning with cell division, it follows the G1 phase. The cells possess a single set of chromosomes (shown as one black line). At “Start,” the cell goes into the S phase and replicates the DNA (two black lines). The sister chromatids are initially kept together by proteins. During M phase they are aligned, attached to the spindle body, and segregated to different parts of the cell. The cycle closes with formation of two new daughter cells. The inner part represents main molecular events driving the cell cycle comprising (1) protein production and degradation, (2) phosphorylation and dephosphorylation, and (3) complex formation and disintegration. For sake of clarity, CDK Cdc28 is not shown. The “Start” is initiated by activation of CDK by cyclins Cln2 and Clb5. The CDK activity is responsible for progression through S and M phase. At Finish, the proteolytic activity coordinated by APC destroys the cyclins and renders thereby the CDK inactive.

The CDK (Cdc28) forms complexes with the cyclins Cln1 to Cln3 and Clb1 to Clb6, and these complexes control the major cell-cycle events in budding yeast cells. The complexes Cln1–2/Cdc28 control budding, the complex Cln3/Cdc28 governs the execution of the checkpoint “Start,” Clb5–6/Cdc28 ensures timely DNA replication, Clb3–4/Cdc28 assists DNA replication and spindle formation, and Clb1–2/Cdc28 is necessary for completion of mitosis.

The cyclin–CDK complexes are in turn regulated by synthesis and degradation of cyclins and by the Clb-dependent kinase inhibitor (CKI) Sic1. The expression of the gene for Cln2 is controlled by the transcription factor SBF, the expression of the gene for Clb5 is controlled by the transcription factor MBF. Both transcription factors are regulated by CDKs. All cyclins are degraded by proteasomes following ubiquitination. APC is one of the complexes triggering ubiquitination of cyclins.

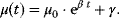

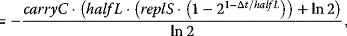

For the implementation of these processes in a mathematical model, the following points are important. Activation of cyclins and cyclin-dependent kinases occurs in principle by the negative feedback loop presented in Goldbeter's minimal model (see Section 12.3.2). Furthermore, these models are based on the assumption that the cells exhibit exponential growth, that is, the dynamics of the cell mass M is governed by  . At the instance of cell division, M is replaced by

. At the instance of cell division, M is replaced by  . In some cases, uneven division is considered. Cell growth implies adaptation of the negative feedback model to growing cells.

. In some cases, uneven division is considered. Cell growth implies adaptation of the negative feedback model to growing cells.

The transitions “Start” and “Finish” characterize the wild-type cell cycle. At “Start,” the transcription factor SBF is turned on and the levels of the cyclins Cln2 and Clb5 increase. They form complexes with Cdc28. The boost in Cln2/Cdc28 has three main consequences: it initiates bud formation, it phosphorylates the CKI Sic1 promoting its disappearance, and it inactivates Hct1, which in conjunction with APC was responsible for Clb2 degradation in G1 phase. Hence, DNA synthesis takes place and the bud emerges. Subsequently, the level of Clb2 increases and the spindle starts to form. Clb2/Cdc28 inactivates SBF and Cln2 decreases. Inactivation of MBF causes Clb5 to decrease. Clb2/Cdc28 induces progression through mitosis. Cdc20 and Hct1, which target proteins to APC for ubiquitination, regulate the metaphase–anaphase transition. Cdc20 has several tasks in the anaphase. It activates Hct1, promoting degradation of Clb2, and it activates the transcription factor of Sic1. Thus, at “Finish,” Clb2 is destroyed and Sic1 reappears.

The dynamics of some key players in cell cycle according to the model given in Chen et al. [54] is shown in Figure 12.28 for two successive cycles. At “Start,” Cln2 and Clb5 levels rise and Sic1 is degraded, while at “Finish,” Clb2 vanishes and Sic1 is newly produced.

Figure 12.28 Temporal behavior of some key players during two successive rounds of yeast cell cycle. The dotted line indicates the cell mass that halves after every cell division. The levels of Cln2, Clb2total, Clb5total, and Sic1total are simulated according to the model presented by Chen et al. [54].