20

Language in the Brain

IN 1840, A THIRTY-YEAR-OLD Frenchman by the name of Louis Victor Leborgne lost the ability to speak. Leborgne could no longer say anything other than the syllable tan. What was peculiar about Leborgne’s case was that he was, for the most part, otherwise intellectually typical. It was clear that when he spoke, he was trying to express certain ideas (he would use gestures and alter the tone and emphasis of his speech), but the only sound that ever came out was tan. Leborgne could understand language; he just couldn’t produce it. After many years of hospitalization, he became known around the hospital as Tan.

Twenty years later, patient Tan passed away, and his brain was examined by a French physician named Paul Broca, who had a particular interest in the neurology of language. Broca found that Leborgne had brain damage to a specific and isolated region in the left frontal lobe.

Broca had a hunch that there were specific areas in the brain for language. Leborgne’s brain was Broca’s first clue that this idea might be right. Over the next two years, Broca painstakingly sought out the brains of any recently deceased patients whose ability to articulate language had been impaired but who had retained their other intellectual faculties. In 1865, after performing autopsies on twelve different brains, he published his now-famous paper “Localization of Speech in the Third Left Frontal Convolution.” It turned out that all of these patients had damage to similar regions on the left side of the neocortex, a region that has come to be called Broca’s area. This has been observed countless times over the past hundred and fifty years: if Broca’s area is damaged, humans lose the ability to produce speech, a condition now called Broca’s aphasia.

Several years after Broca did his work, Carl Wernicke, a German physician, was perplexed by a different set of language difficulties. Wernicke found patients who, unlike Broca’s, could speak fine but lacked the ability to understand speech. These patients would produce whole sentences, but the sentences made no sense. For example, such a patient might say something like “You know that smoodle pinkered and that I want to get him round and take care of him like you want before.”

Wernicke, following Broca’s strategy, also found a damaged area in the brains of these patients. It was also on the left side but farther back in the posterior neocortex, a region now dubbed Wernicke’s area. Damage to Wernicke’s area causes Wernicke’s aphasia, a condition in which patients lose the ability to understand speech.

A revealing feature of both Broca’s and Wernicke’s areas is that their language functions are not selective for only certain modalities of language, but rather are selective for language in general. Patients with Broca’s aphasia become equally impaired in speaking words as they are in writing words. Patients who primarily communicate using sign language lose their ability to sign fluently when Broca’s area is damaged. Damage to Wernicke’s area produces deficits in understanding both spoken language and written language. Indeed, these same language areas are activated when a hearing-abled person listens to someone speak and when a deaf person watches someone sign. Broca’s area is not selective for verbalizing, writing, or signing; it is selective for the general ability to produce language. And Wernicke’s area is not selective for listening, reading, or watching signs; it is selective for the general ability to understand language.

Figure 20.1

Original art by Rebecca Gelernter

The human motor cortex has a unique connection directly to the brainstem area for controlling the larynx and vocal cords—this is one of the few structural differences between the brains of humans and those of other apes. The human neocortex can uniquely control the vocal cords, which is surely an adaptation for using verbal language. But this is a red herring in trying to understand the evolution of language; this unique circuitry is not the evolutionary breakthrough that enabled language. We know this because humans can learn nonverbal language with as much fluency and ease as they learn verbal language—language is not a trick that requires this wiring with the vocal cords. Humans’ unique control of the larynx either coevolved with other changes for language in general, evolved after them (to transition from a gesture-like language to a verbal language), or evolved before them (adapted for some other nonlanguage purpose). In any case, it is not human control of the larynx that enabled language.

Broca’s and Wernicke’s discoveries demonstrated that language emerges from specific regions in the brain and that it is contained in a subnetwork almost always found on the left side of the neocortex. The specific regions for language also help explain the fact that language capacity can be quite dissociated from other intellectual capacities. Many people who become linguistically impaired are otherwise intellectually typical. And people can be linguistically gifted while otherwise intellectually impaired. In 1995, two researchers, Neil Smith and Ianthi-Maria Tsimpli, published their research on a child language savant named Christopher. Christopher was extremely cognitively impaired, had terrible hand-eye coordination, would struggle to do basic tasks such as buttoning a shirt, and was incapable of figuring out how to win a game of tic-tac-toe or checkers. But Christopher was superhuman when it came to language: he could read, write, and speak over fifteen languages. Although the rest of his brain was “impaired,” his language areas were not only spared but were brilliant. The point is that language emerges not from the brain as a whole but from specific subsystems.

This suggests that language is not an inevitable consequence of having more neocortex. It is not something humans got “for free” by virtue of scaling up a chimpanzee brain. Language is a specific and independent skill that evolution wove into our brains.

So this would seem to close the case. We have found the language organ of the human brain: humans evolved two new areas of neocortex—Broca’s and Wernicke’s areas—which are wired together into a specific subnetwork specialized for language. This subnetwork gifted us language, and that is why humans have language and other apes don’t. Case closed.

Unfortunately, the story is not so simple.

Laughs or Language?

The following fact complicates things: Your brain and a chimpanzee brain are practically identical; a human brain is, almost exactly, just a scaled-up chimpanzee brain. This includes the regions known as Broca’s area and Wernicke’s area. These areas did not evolve in early humans; they emerged much earlier, in the first primates. They are part of the areas of the neocortex that emerged with the breakthrough of mentalizing. Chimpanzees, bonobos, and even macaque monkeys all have exactly these areas with practically identical connectivity. Thus, it was not the emergence of Broca’s or Wernicke’s areas that gave humans the gift of language.

Perhaps human language was an elaboration on the existing system of ape communication? This might explain why these language areas are still present in other primates. Chimpanzees, bonobos, and gorillas all have sophisticated suites of gestures and hoots that signal different things. Wings evolved from arms, and multicellular organisms evolved from single-celled organisms, so it would make sense if human language evolved from the more primitive communication systems of our ape ancestors. But this is not how language evolved in the brain.

In other primates, these language areas of the neocortex are present but have nothing to do with communication. If you damage Broca’s and Wernicke’s areas in a monkey, it has no impact on monkey communication. If you damage them in humans, we lose the ability to use language entirely.

When we compare ape gestures to human language, we are comparing apples to oranges. Their common use for communication obscures the fact that they are entirely different neurological systems without any evolutionary relationship to each other.

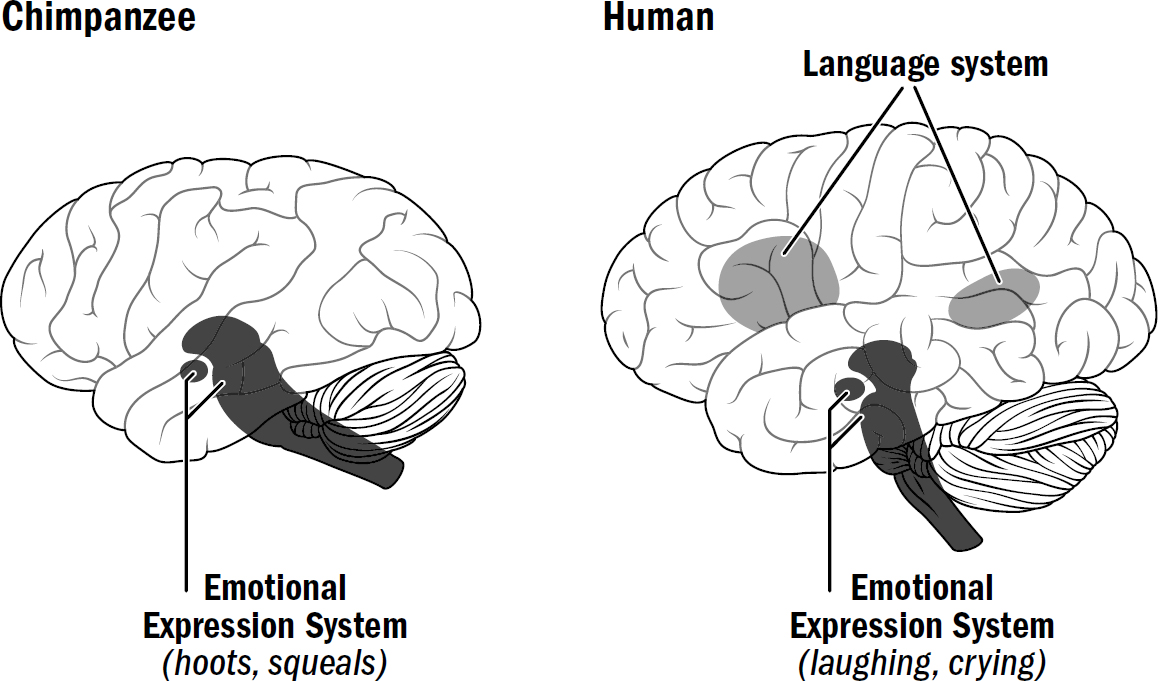

Humans have, in fact, inherited the exact same communication system of apes, but it isn’t our language—it is our emotional expressions.

In the mid-1990s, a teacher in his fifties noticed that he was struggling to speak. Over the course of three days, his symptoms worsened. By the time he made it to the doctor, the left side of his face was paralyzed, and his speech was slowed and slurred. When he was asked to smile, only one side of his face would move, leading to a lopsided smirk (figure 20.2).

When examining the man, the doctor noticed something perplexing. When the doctor told a joke or said something genuinely pleasant, the man could smile just fine. The left side of his face worked normally when he was laughing, but when he was asked to smile voluntarily, the man was unable to do it.

The human brain has parallel control of facial expressions. There is an older emotional-expression system that has a hard-coded mapping between emotional states and reflexive responses; this system is controlled by ancient structures like the amygdala. Then there is a separate system, controlled by the neocortex, that provides voluntary control of facial muscles.

Figure 20.2: A patient with a damaged connection between the motor cortex and the left side of the face but a spared connection between the amygdala and the left side of the face

Images from Trepel et al., 1996. Used with permission.

It turned out that this teacher had a lesion in his brainstem that had disrupted the connection between his neocortex and the muscles on the left side of his face but had spared the connection between his amygdala and those same muscles. This meant that he couldn’t voluntarily control the left side of his face, but his emotional-expression system could control his face just fine. While he was unable to voluntarily lift an eyebrow, he was eminently able to laugh, frown, and cry.

This is also seen in individuals with severe forms of Broca’s and Wernicke’s aphasia. Even individuals who can’t utter a single word can readily laugh and cry. Why? Because emotional expressions emerge from a system entirely separate from language.

The apples-to-apples comparison between ape and human communication is between ape vocalizations and human emotional expressions. To simplify a bit: Other primates have a single communication system, their emotional-expression system, located in older areas like the amygdala and brainstem. It maps emotional states to communicative gestures and sounds. Indeed, as Jane Goodall noted, “the production of a sound in the absence of the appropriate emotional state seems to be an almost impossible task [for chimpanzees].” This emotional-expression system is ancient, going back to early mammals, perhaps even earlier. Humans, however, have two communication systems: we have this same ancient emotional-expression system, and we have a newly evolved language system in the neocortex.

Figure 20.3

Original art by Rebecca Gelernter

Human laughs, cries, and scowls are evolutionary remnants of an ancient and more primitive system for communication, a system from which ape hoots and gestures emerge. However, when we speak words, we are doing something without any clear analog to any system of ape communication.

This explains why lesions to Broca’s and Wernicke’s areas in monkeys have absolutely no impact on communication. A monkey can still hoot and holler for the same reason a human with such damage can still laugh, cry, smile, frown, and scowl even while he can’t utter a single coherent word. The gestures of monkeys are automatic emotional expressions and don’t emerge from the neocortex; they are more like a human laugh than language.

The emotional-expression system and the language system have another difference: one is genetically hardwired, and the other is learned. The shared emotional-expression system of humans and other apes is, for the most part, genetically hardwired. As evidence, monkeys who are raised in isolation still end up producing all of their normal gesture-call behavior, and chimpanzees and bonobos share almost 90 percent of the same gestures. Similarly, human cultures and children from around the world have remarkable overlap in emotional expressions, suggesting that at least some parts of our emotional expressions are genetically hard-coded and not learned. All human beings (even those born blind and deaf) cry, smile, laugh, and frown in relatively similar ways in response to similar emotional states.

However, the newer language system in humans is incredibly sensitive to learning—if a child goes long enough without being taught language, he or she will be unable to acquire it later in life. Unlike innate emotional expressions, features of language differ greatly across cultures. And indeed, a human baby born without any neocortex will still express these emotions in the usual way but will never speak.

So here is the neurobiological conundrum of language. Language did not emerge from some newly evolved structure. Language did not emerge from humans’ unique neocortical control over the larynx and face (although this did enable more complex verbalizations). Language did not emerge from some elaboration of the communication systems of early apes. And yet, language is entirely new.

So what unlocked language?

The Language Curriculum

All birds know how to fly. Does this mean that all birds have genetically hardwired knowledge of flying? Well, no. Birds are not born knowing how to fly; all baby birds must independently learn how to fly. They start by flapping wings, trying to hover, making their first glide attempt, and eventually, after enough repetitions, they figure it out. But if flying is not genetically hard-coded, then how is it that approximately 100 percent of all baby birds independently learn such a complex skill?

A skill as sophisticated as flying is too information-dense to hard-code directly into a genome. It is more efficient to encode a generic learning system (such as a cortex) and a specific hardwired learning curriculum (instinct to want to jump, instinct to flap wings, and instinct to attempt to glide). It is the pairing of a learning system and a curriculum that enables every single baby bird to learn how to fly.

In the world of artificial intelligence, the power and importance of curriculum is well known. In the 1990s, a linguist and professor of cognitive sciences at UC San Diego, Jeffrey Elman, was one of the first to use neural networks to try to predict the next word in a sentence given the previous words. The learning strategy was simple: Keep showing the neural network word after word, sentence after sentence, have it predict the next word based on the prior words, then nudge all the weights in the network toward the right answer each time. Theoretically, it should have been able to correctly predict the next word in a novel sentence it had never seen before.

It didn’t work.

Then Elman tried something different. Instead of showing the neural network sentences of all levels of complexity at the same time, he first showed it extremely simple sentences, and only after the network performed well at these did he increase the level of complexity. In other words, he designed a curriculum. And this, it turned out, worked. After being trained with this curriculum, his neural network could correctly complete complex sentences.
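To make the idea concrete, here is a minimal sketch in Python of what training with a curriculum might look like. It is not Elman’s network: a simple word-pair counter stands in for the neural network so the example stays short and runnable, and sentence length is assumed as a crude proxy for complexity. The names `complexity`, `build_curriculum`, `BigramPredictor`, and `train_with_curriculum` are hypothetical, invented for this illustration.

```python
from collections import Counter, defaultdict


def complexity(sentence):
    """Assumed complexity proxy: the number of words in the sentence."""
    return len(sentence.split())


def build_curriculum(sentences, num_stages):
    """Split sentences into stages ordered from simplest to most complex."""
    ordered = sorted(sentences, key=complexity)
    stage_size = -(-len(ordered) // num_stages)  # ceiling division
    return [ordered[i:i + stage_size] for i in range(0, len(ordered), stage_size)]


class BigramPredictor:
    """Toy next-word predictor that counts which word follows which.

    A stand-in for a neural network, used only to keep the sketch runnable.
    """

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, sentence):
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def predict(self, prev):
        followers = self.counts.get(prev)
        return followers.most_common(1)[0][0] if followers else None

    def accuracy(self, sentences):
        pairs = [(p, n) for s in sentences for p, n in zip(s.split(), s.split()[1:])]
        if not pairs:
            return 1.0
        return sum(self.predict(p) == n for p, n in pairs) / len(pairs)


def train_with_curriculum(model, stages, threshold=0.5, max_passes=5):
    """Train stage by stage, easiest first.

    The model moves to the next stage once it reaches `threshold` accuracy
    on the current one, or after `max_passes` passes, whichever comes first.
    Returns the accuracy reached on each stage.
    """
    stage_accuracy = []
    for stage in stages:
        for _ in range(max_passes):
            for sentence in stage:
                model.train(sentence)
            if model.accuracy(stage) >= threshold:
                break
        stage_accuracy.append(model.accuracy(stage))
    return stage_accuracy


if __name__ == "__main__":
    corpus = [
        "the dog chases the cat",
        "dogs bark",
        "the cat that the dog chases sleeps",
        "cats sleep",
    ]
    stages = build_curriculum(corpus, num_stages=2)
    print(train_with_curriculum(BigramPredictor(), stages))
```

The thing to notice is that the learner itself never changes between stages; only the order in which it meets the data does.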

This idea of designing a curriculum for AI applies not just to language but to many types of learning. Remember the model-free reinforcement learning algorithm TD-Gammon that we saw in breakthrough #2? TD-Gammon enabled a computer to outperform humans in the game of backgammon. I left out a crucial part of how TD-Gammon was trained. It did not learn through the trial and error of endless games of backgammon against a human expert. If it had done this, it would never have learned, because it would never have won. TD-Gammon was trained by playing against itself. TD-Gammon always had an evenly matched player. This is a standard strategy for training reinforcement learning systems to play games. Google’s AlphaZero was also trained by playing itself. The curriculum used to train a model is as crucial as the model itself.
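Self-play can be sketched in a few lines of Python. The example below is not TD-Gammon: it swaps backgammon for a tiny stone-taking game (players alternate removing one to three stones, and whoever takes the last stone wins) and swaps the neural network and temporal difference learning for a lookup table nudged toward each game’s final outcome. Every name in it (`legal_moves`, `choose_move`, `self_play_episode`, `train_by_self_play`) and every parameter value is an assumption made for illustration.

```python
import random


def legal_moves(stones):
    """A player may take 1, 2, or 3 stones, but never more than remain."""
    return [take for take in (1, 2, 3) if take <= stones]


def choose_move(values, stones, rng, epsilon):
    """Mostly pick the move with the highest learned value; sometimes explore."""
    moves = legal_moves(stones)
    if rng.random() < epsilon:
        return rng.choice(moves)
    return max(moves, key=lambda take: values.get((stones, take), 0.5))


def self_play_episode(values, start_stones, rng, epsilon=0.2, step_size=0.1):
    """Play one game in which both sides use the same value table.

    Afterward, every move made by the winner is nudged toward 1.0 and every
    move made by the loser toward 0.0. Returns the winning side (0 or 1).
    """
    history = {0: [], 1: []}
    stones, player, winner = start_stones, 0, None
    while stones > 0:
        take = choose_move(values, stones, rng, epsilon)
        history[player].append((stones, take))
        stones -= take
        winner = player  # whoever moved last took the final stone
        player = 1 - player
    for side, moves in history.items():
        outcome = 1.0 if side == winner else 0.0
        for key in moves:
            old = values.get(key, 0.5)
            values[key] = old + step_size * (outcome - old)
    return winner


def train_by_self_play(episodes=2000, start_stones=10, seed=0):
    """Improve a single value table by having it play against itself."""
    rng = random.Random(seed)
    values = {}
    for _ in range(episodes):
        self_play_episode(values, start_stones, rng)
    return values


if __name__ == "__main__":
    learned = train_by_self_play()
    print(len(learned), "state-move pairs evaluated")
```

Because both sides draw on the same table, the opponent improves exactly as fast as the learner does, which is what keeps the match even at every stage of training.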

To teach a new skill, it is often easier to change the curriculum instead of changing the learning system. Indeed, this is the solution that evolution seems to have repeatedly settled on when enabling complex skills—monkey climbing, bird flying, and, yes, even human language all seem to work this way. They emerge from newly evolved hardwired curriculums.

Long before human babies engage in conversations using words, they engage in what are called proto-conversations. By four months of age, long before babies speak, they will take turns with their parents in back-and-forth vocalizations, facial expressions, and gestures. It has been shown that infants will match the pause duration of their mothers, thereby enabling a rhythm of turn-taking; infants will vocalize, pause, attend to their parents, and wait for their parents’ response. It seems conversation is not a natural consequence of the ability to learn language; rather, the ability to learn language is, at least in part, a consequence of a simpler genetically hard-coded instinct to engage in conversation. It seems to be this hardwired curriculum of gestural and vocal turn-taking on which language is built. This type of turn-taking evolved first in early humans; chimpanzee infants show no such behavior.

By nine months of age, still before speech, human infants begin to demonstrate a second novel behavior: joint attention to objects. When a mother looks at or points to an object, a human infant will focus on that same object and use various nonverbal mechanisms to confirm that she saw what her mother saw. These nonverbal confirmations can be as simple as the baby looking back and forth between the object and her mother while smiling, grasping it and offering it to her mother, or just pointing to it and looking back at her mother.

Scientists have gone to great lengths to confirm that this behavior is not an attempt to obtain the object or get a positive emotional response from the infant’s parents, but instead is a genuine attempt to share attention with others. For example, an infant who points to an object will continue pointing to it until her parent alternates their gaze between the same object and the infant. If the parent simply looks at the infant and speaks enthusiastically, or looks at the object but doesn’t look back at the infant to confirm that she saw what the infant saw, the infant will be unsatisfied and point again. The fact that infants frequently are satisfied by this confirmation without being given the object of their attention strongly suggests their intent was not to obtain the object but to engage in joint attention with their mothers.

Like proto-conversations, this pre-language behavior of joint attention seems to be unique to human infants; nonhuman primates do not engage in joint attention. Chimpanzees show no interest in ensuring someone else attends to the same object they do. They will, of course, follow the gaze of others around them—looking in the direction they see others look. But there is a crucial distinction between joint attention and gaze following. Lots of animals, even turtles, have been shown to follow the gaze of another of their own species. If a turtle looks in a certain direction, nearby turtles will often do the same. But this can be explained merely by a reflex to look where others look. Joint attention, however, is a more deliberate process of going back and forth to confirm that both minds are attending to the same external object.

What’s the point of children’s quirky prewired ability to engage in proto-conversations and joint attention? It is not for imitation learning; nonhuman primates engage in imitation learning just fine without proto-conversations or joint attention. It is not for building social bonds; nonhuman primates and other mammals have plenty of other mechanisms for building social bonds. It seems that joint attention and proto-conversations evolved for a single reason. What is one of the first things that parents do once they have achieved a state of joint attention with their child? They assign labels to things.

The more joint attention expressed by an infant at the age of one year, the larger the child’s vocabulary is twelve months later. Once human infants begin to learn words, they start naturally combining these words to form grammatical sentences. With the foundation of declarative labels in place through the hardwired systems of proto-conversations and joint attention, grammar allows them to combine these words into sentences, which can then be constructed to create entire stories and ideas.

Humans may have also evolved a unique hardwired instinct to ask questions to inquire about the inner simulations of others. Even Kanzi, Washoe, and the other apes that acquired impressively sophisticated language abilities never asked even the simplest questions about others. They would request food and play but would not inquire about another’s inner mental world. Even before human children can construct grammatical sentences, they will ask others questions: “Want this?” “Hungry?” All languages use the same rising intonation when asking yes/no questions. When you hear someone speak in a language you do not understand, you can still identify when you are being asked a question. This instinct to understand how to designate a question may also be a key part of our language curriculum.

So we don’t realize it, but when we happily go back and forth making incoherent babbles with babies (proto-conversations), when we pass objects back and forth and smile (joint attention), and when we pose and answer even nonsensical questions from infants, we are unknowingly executing an evolutionarily hard-coded learning program designed to give human infants the gift of language. This is why humans deprived of contact with others will develop emotional expressions, but they’ll never develop language. The language curriculum requires both a teacher and a student.

And as this instinctual learning curriculum is executed, young human brains repurpose older mentalizing areas of the neocortex for the new purpose of language. It isn’t Broca’s or Wernicke’s areas that are new, it is the underlying learning program that repurposes them for language that is new. As proof that there is nothing special about Broca’s or Wernicke’s areas: Children with the entire left hemisphere removed can still learn language just fine and will repurpose other areas of the neocortex on the right side of the brain to execute language. In fact, about 10 percent of people, for whatever reason, tend to use the right side of the brain, not the left, for language. Newer studies are even calling into question the idea that Broca’s and Wernicke’s areas are actually the loci of language; language areas may be located all over the neocortex and even in the basal ganglia.

Here is the point: There is no language organ in the human brain, just as there is no flight organ in the bird brain. Asking where language lives in the brain may be as silly as asking where playing baseball or playing guitar lives in the brain. Such complex skills are not localized to a specific area; they emerge from a complex interplay of many areas. What makes these skills possible is not a single region that executes them but a curriculum that forces a complex network of regions to work together to learn them.

So this is why your brain and a chimp brain are practically identical and yet only humans have language. What is unique in the human brain is not in the neocortex; what is unique is hidden and subtle, tucked deep in older structures like the amygdala and brainstem. It is an adjustment to hardwired instincts that makes us take turns, that makes children and parents stare back and forth, and that makes us ask questions.

This is also why apes can learn the basics of language. The ape neocortex is eminently capable of it. Apes struggle to become sophisticated at it merely because they don’t have the required instincts to learn it. It is hard to get chimps to engage in joint attention; it is hard to get them to take turns; and they have no instinct to share their thoughts or ask questions. And without these instincts, language is largely out of reach, just as a bird without the instinct to jump would never learn to fly.

So, to recap: We know that the breakthrough that makes the human brain different is that of language. It is powerful because it allows us to learn from other people’s imaginations and allows ideas to accumulate across generations. And we know that language emerges in the human brain through a hardwired curriculum to learn it that repurposes older mentalizing neocortical areas into language areas.

With this knowledge, we can now turn to the actual story of our ancestral early humans. We can ask: Why were ancestral humans endowed with this odd and specific form of communication? Or perhaps more important: Why were the many other smart animals—chimps, birds, whales—not endowed with this odd and specific form of communication? Most evolutionary tricks that are as powerful as language are independently found by multiple lineages; eyes, wings, and multicellularity all independently evolved multiple times. Indeed, simulation and perhaps even mentalizing seem to have independently evolved along other lineages (birds show signs of simulation, and other mammals outside of just primates show hints of theory of mind). And yet language, at least as far as we know, has emerged only once. Why?