7

The Problems of Pattern Recognition

FIVE HUNDRED MILLION years ago, the fish-like ancestor of every vertebrate alive today—the inch-long grandmother of every pigeon, shark, mouse, dog, and, yes, human—swam unknowingly toward danger. She swam through the translucent underwater plants of the Cambrian, gently weaving between their thick seaweed-like stalks. She was hunting for coral larvae, the protein-rich offspring of the brainless animals populating the sea. Unbeknownst to her, she too was being hunted.

An Anomalocaris—a foot-long arthropod with two spiked claws sprouting from its head—lay hidden in the sand. Anomalocaris was the apex predator of the Cambrian, and it was waiting patiently for an unlucky creature to come within lunging distance.

Our vertebrate ancestor would have noticed the unfamiliar smell and the irregular-shaped mound of sand in the distance. But there were always unfamiliar smells in the Cambrian ocean; it was a zoo of microbes, plants, fungi, and animals, each releasing their own unique portfolio of scents. And there was always a backdrop of unfamiliar shapes, an ever-moving portrait of countless objects, both living and inanimate. And so she thought nothing of it.

As she emerged from the safety of the Cambrian plants, the arthropod spotted her and lurched forward. Within milliseconds, her reflexive escape response kicked in. Grandma Fish’s eyes detected a fast-moving object in her periphery, triggering a hardwired reflexive turn and dash in the opposite direction. The activation of this escape response flooded her brain with norepinephrine, triggering a state of high arousal, making sensory responses more sensitive, pausing all restorative functions, and reallocating energy to her muscles. In the nick of time, she escaped the clasping talons and swam away.

This has unfolded billions of times, a never-ending cycle of hunting and escaping, of anticipation and fear. But this time was different—our vertebrate ancestor would remember the smell of that dangerous arthropod; she would remember the sight of its eyes peeking through the sand. She wouldn’t make the same mistake again. Sometime around five hundred million years ago, our ancestor evolved pattern recognition.

The Harder-Than-You-Would-Think Problem of Recognizing a Smell

Early bilaterians could not perceive what humans experience as smell. Despite how little effort it takes for you to distinguish the scent of a sunflower from that of a salmon, it is, in fact, a remarkably complicated intellectual feat, one inherited from the first vertebrates.

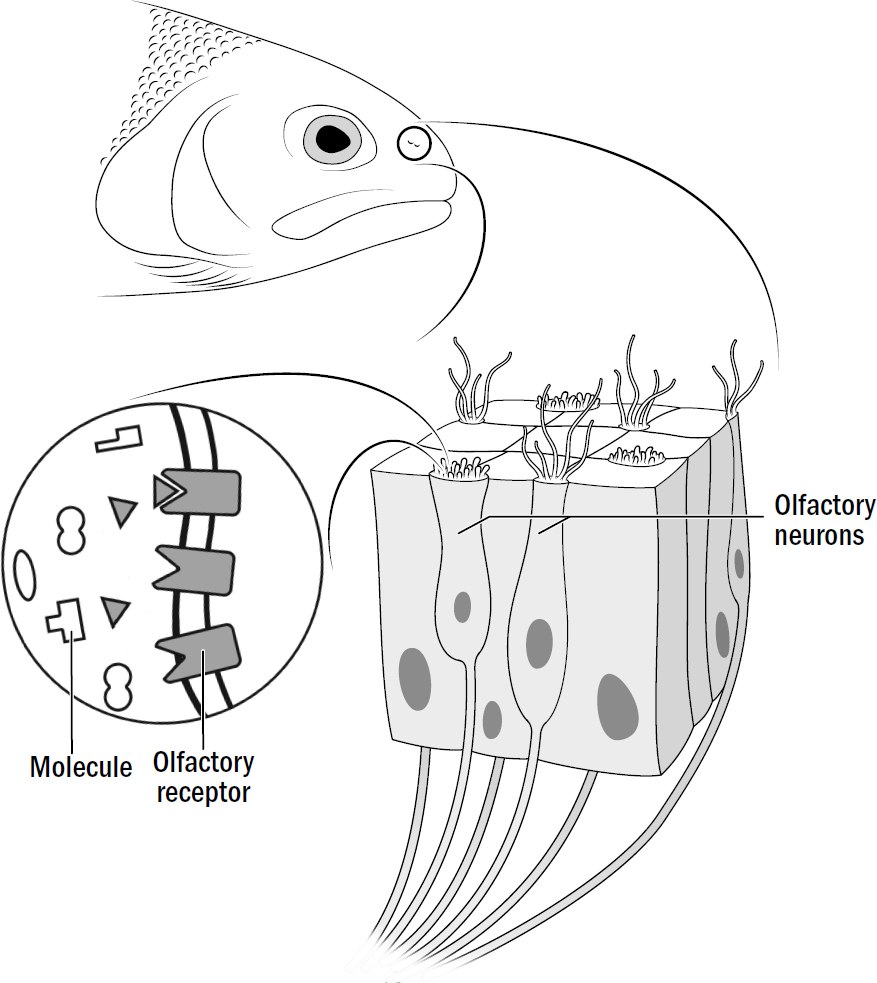

Just as you have in your nose today, within the nostrils of early vertebrates were thousands of olfactory neurons. In the lamprey fish, there are about fifty different types of olfactory neurons, each type containing a unique olfactory receptor that responds to specific types of molecules. Most smells are made up not of a single molecule but of multiple molecules. When you come home and recognize the smell of your family’s best pulled pork, your brain isn’t recognizing the pulled-pork molecule (there is no such thing). Rather, it is recognizing a particular soup of many molecules that activates a symphony of olfactory neurons. Any given smell is represented by a pattern of activated olfactory neurons. In summary, smell recognition is nothing more than pattern recognition.

Our nematode-like ancestor’s ability to recognize the world was constrained to only the sensory machinery of individual neurons. It could recognize the presence of light by the activation of a single photosensitive neuron or the presence of touch from the activation of a single mechanosensory neuron. Although useful for steering, this rendered a painfully opaque picture of the outside world. Indeed, the brilliance of steering was that it enabled the first bilaterians to find food and avoid predators without perceiving much of anything about the world.

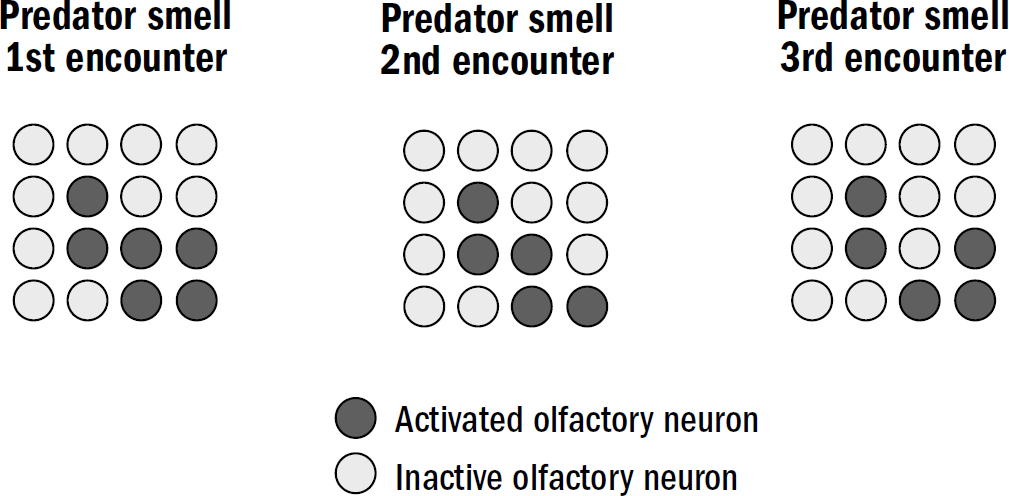

Figure 7.1: Inside the nose of a vertebrate

Original art by Rebecca Gelernter

However, most of the information about the world around you can’t be found in a single activated neuron but only in the pattern of activated neurons. You can distinguish a car from a house based on the pattern of photons hitting your retina. You can distinguish the ramblings of a person from the roar of a panther based on the pattern of sound waves hitting your inner ear. And, yes, you can distinguish the smell of a rose from the smell of chicken based on the pattern of olfactory neurons activated in your nose. For hundreds of millions of years, animals were deprived of this skill, stuck in a perceptual prison.

When you recognize that a plate is too hot or a needle too sharp, you are recognizing attributes of the world the way early bilaterians did, with the activations of individual neurons. However, when you recognize a smell, a face, or a sound, you are recognizing things in the world in a way that was beyond early bilaterians; you are using a skill that emerged later in early vertebrates.

Early vertebrates could recognize things using brain structures that decoded patterns of neurons. This dramatically expanded the scope of what animals could perceive. Within the small mosaic of only fifty types of olfactory neurons lived a universe of different patterns that could be recognized. Fifty cells can represent over one hundred trillion patterns.*
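That figure is easy to check with a little arithmetic. The sketch below assumes, as a simplification, that each of the fifty neuron types is either fully active or fully silent; real neurons fire at graded rates, which would only make the number larger.

```python
# Assumption: each of the 50 olfactory neuron types is either active or silent.
NUM_TYPES = 50

# Every additional type doubles the number of possible on/off combinations.
num_patterns = 2 ** NUM_TYPES

ONE_HUNDRED_TRILLION = 100 * 10 ** 12

print(f"{num_patterns:,} possible patterns")
print("More than one hundred trillion:", num_patterns > ONE_HUNDRED_TRILLION)
```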

| HOW EARLY BILATERIANS RECOGNIZED THINGS IN THE WORLD | HOW EARLY VERTEBRATES RECOGNIZED THINGS IN THE WORLD |
| --- | --- |
| A single neuron detects a specific thing | Brain decodes the pattern of activated neurons to recognize a specific thing |
| Small number of things can be recognized | Large number of things can be recognized |
| New things can be recognized only through evolutionary tinkering (new sensory machinery needed) | New things can be recognized without evolutionary tinkering but through learning to recognize a new pattern (no new sensory machinery needed) |

Pattern recognition is hard. Many animals alive today, even after another half billion years of evolution, never acquired this ability—the nematodes and flatworms of today show no evidence of pattern recognition.

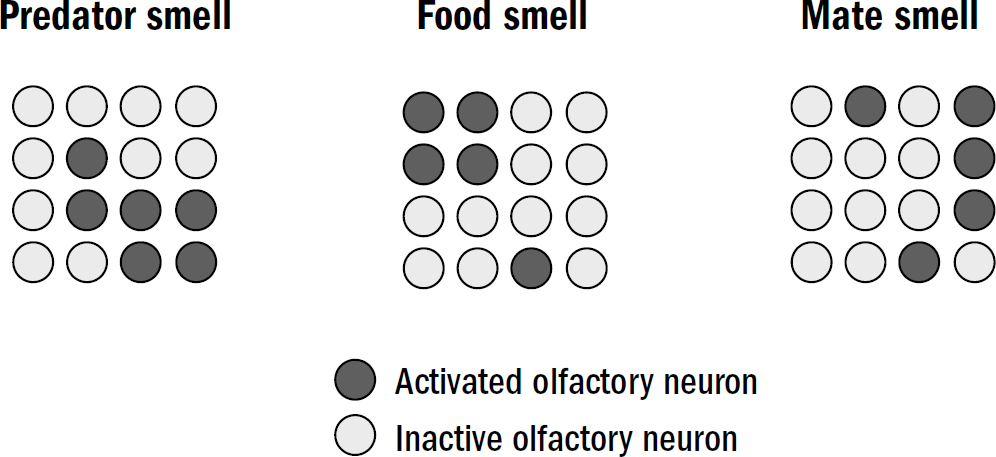

There were two computational challenges the vertebrate brain needed to solve to recognize patterns. In figure 7.2, you can see an example of three fictional smell patterns: one for a dangerous predator, one for yummy food, and one for an attractive mate. Perhaps you can see from this figure why pattern recognition won’t be easy—these patterns overlap with each other despite having different meanings. One should trigger escape and the others approach. This was the first problem of pattern recognition, that of discrimination: how to recognize overlapping patterns as distinct.

The first time a fish experiences fear in the presence of a novel predator smell, it will remember that specific smell pattern. But the next time the fish encounters that same predator smell, it won’t activate the exact same pattern of olfactory neurons. The balance of molecules will never be identical—the new arthropod’s age, sex, diet, or any number of other things might differ and slightly alter its scent. Even the background smells from the surrounding environment might be different, interfering in slightly different ways. The result of all these minor perturbations is that the next encounter will be similar but not the same. In figure 7.3 you can see three examples of the olfactory patterns that the next encounter with the predator smell might activate. This is the second challenge of pattern recognition: how to generalize a previous pattern to recognize novel patterns that are similar but not the same.

Figure 7.2: The discrimination problem

Figure by Max Bennett

Figure 7.3: The generalization problem

Figure by Max Bennett

How Computers Recognize Patterns

You can unlock your iPhone with your face. Doing this requires your phone to solve the generalization and discrimination problems. Your iPhone needs to be able to tell the difference between your face and other people’s faces, despite the fact that faces have overlapping features (discrimination). And your iPhone needs to identify your face despite changes in shading, angle, facial hair, and more (generalization). Clearly, modern AI systems successfully navigate these two challenges of pattern recognition. How?

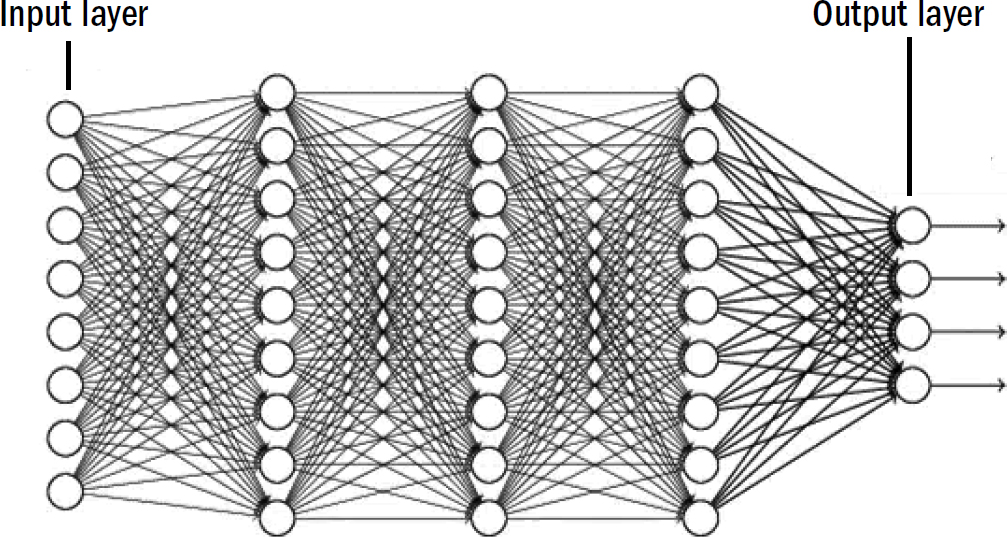

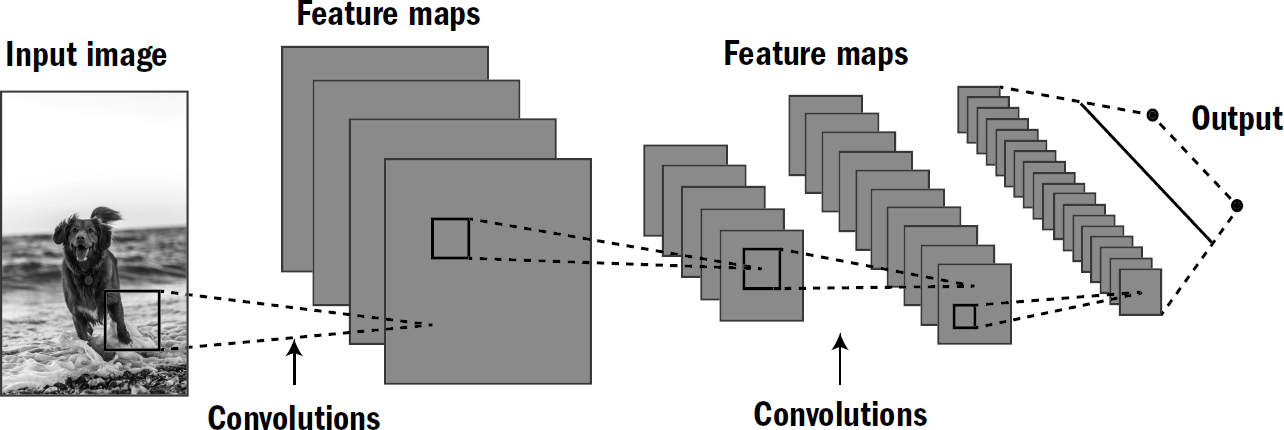

The standard approach is the following: Create a network of neurons like the one in figure 7.4, where you provide an input pattern on one side that flows through layers of neurons until it is transformed into an output on the other end of the network. By adjusting the weights of the connections between neurons, you can make the network perform a variety of operations on its input. If you can edit the weights to be just right, you can get an algorithm to take an input pattern and recognize it correctly at the end of the network. If you edit the weights one way, it can recognize faces. If you edit the weights a different way, it can recognize smells.
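To make this concrete, here is a minimal sketch of such a network in plain Python. The layer sizes, the input pattern, and every weight are invented for illustration. The only point is that the same input, flowing through the same wiring, produces a different answer when the weights change.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs: list[float], weights: list[list[float]]) -> list[float]:
    """One layer: every output neuron takes a weighted sum of all its inputs."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def forward(pattern, hidden_weights, output_weights):
    """Flow an input pattern through a hidden layer and on to the output layer."""
    return layer(layer(pattern, hidden_weights), output_weights)

# Hypothetical input pattern over four input neurons (1 = active, 0 = silent).
pattern = [1.0, 0.0, 1.0, 1.0]

# Hand-picked illustrative weights: 4 inputs -> 3 hidden neurons -> 1 output.
hidden_weights = [
    [2.0, -1.0, 0.5, 1.0],
    [-1.5, 2.0, -0.5, 0.0],
    [0.5, 0.5, 1.0, -2.0],
]
output_weights_a = [[3.0, -2.0, 1.0]]    # one setting of the final weights
output_weights_b = [[-3.0, 2.0, -1.0]]   # the same weights with signs flipped

out_a = forward(pattern, hidden_weights, output_weights_a)[0]
out_b = forward(pattern, hidden_weights, output_weights_b)[0]
print(f"weights A -> {out_a:.3f}, weights B -> {out_b:.3f}")
```

With the first set of weights the output neuron is strongly active; with the second it is nearly silent, even though the input never changed.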

The hard part is teaching the network how to learn the right weights. The state-of-the-art mechanism for doing this was popularized by Geoffrey Hinton, David Rumelhart, and Ronald Williams in the 1980s. Their method is as follows: If you were training a neural network to categorize smell patterns into egg smells or flower smells, you would show it a bunch of smell patterns and simultaneously tell the network whether each pattern is from an egg or a flower (as measured by the activation of a specific neuron at the end of the network). In other words, you tell the network the correct answer. You then compare the actual output with the desired output and nudge the weights across the entire network in the direction that makes the actual output closer to the desired output. If you do this many times (like, millions of times), the network eventually learns to accurately recognize patterns—it can identify smells of eggs and flowers. They called this learning mechanism backpropagation: they propagate the error at the end back throughout the entire network, calculate the exact error contribution of each synapse, and nudge that synapse accordingly.
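The procedure can be sketched in a few dozen lines. Everything specific here is an assumption made for illustration: the four-neuron smell patterns, the tiny network with three hidden neurons, the learning rate, and the number of passes. It is a toy version of backpropagation, not the setup from the original work.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical smell patterns over four olfactory neuron types.
# Label 1.0 means "egg", label 0.0 means "flower".
DATA = [
    ([1, 1, 0, 0], 1.0),
    ([1, 0, 1, 0], 1.0),
    ([0, 1, 0, 1], 0.0),
    ([0, 0, 1, 1], 0.0),
]

random.seed(0)
N_IN, N_HID = 4, 3
w_hid = [[random.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HID)]
w_out = [random.uniform(-0.5, 0.5) for _ in range(N_HID)]

def forward(x):
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hid]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output

def total_error():
    return sum((forward(x)[1] - target) ** 2 for x, target in DATA)

def train_step(x, target, lr=0.5):
    hidden, output = forward(x)
    # Error at the output neuron (gradient of half the squared error through the sigmoid).
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate the error backward: each hidden neuron's share depends on its outgoing weight.
    delta_hid = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j]) for j in range(N_HID)]
    # Nudge every weight against its own contribution to the error.
    for j in range(N_HID):
        w_out[j] -= lr * delta_out * hidden[j]
        for i in range(N_IN):
            w_hid[j][i] -= lr * delta_hid[j] * x[i]

error_before = total_error()
for _ in range(5000):              # show the labeled examples many times
    for x, target in DATA:
        train_step(x, target)
error_after = total_error()

print(f"error before training: {error_before:.3f}")
print(f"error after training:  {error_after:.3f}")
```

Notice that `train_step` needs the correct answer (`target`) for every example, and that it adjusts every weight in the network at once.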

The above type of learning, in which a network is trained by providing examples alongside the correct answer, is called supervised learning (a human has supervised the learning process by providing the network with the correct answers). Many supervised learning methods are more complex than this, but the principle is the same: the correct answers are provided, and networks are tweaked using backpropagation to update weights until the categorization of input patterns is sufficiently accurate. This design has proven to work so generally that it is now applied to image recognition, natural language processing, speech recognition, and self-driving cars.

But even one of the inventors of backpropagation, Geoffrey Hinton, realized that his creation, although effective, was a poor model of how the brain actually works. First, the brain does not do supervised learning—you are not given labeled data when you learn that one smell is an egg and another is a strawberry. Even before children learn the words egg and strawberry, they can clearly recognize that they are different. Second, backpropagation is biologically implausible. Backpropagation works by magically nudging millions of synapses simultaneously and in exactly the right amount to move the output of the network in the right direction. There is no conceivable way the brain could do this. So then how does the brain recognize patterns?

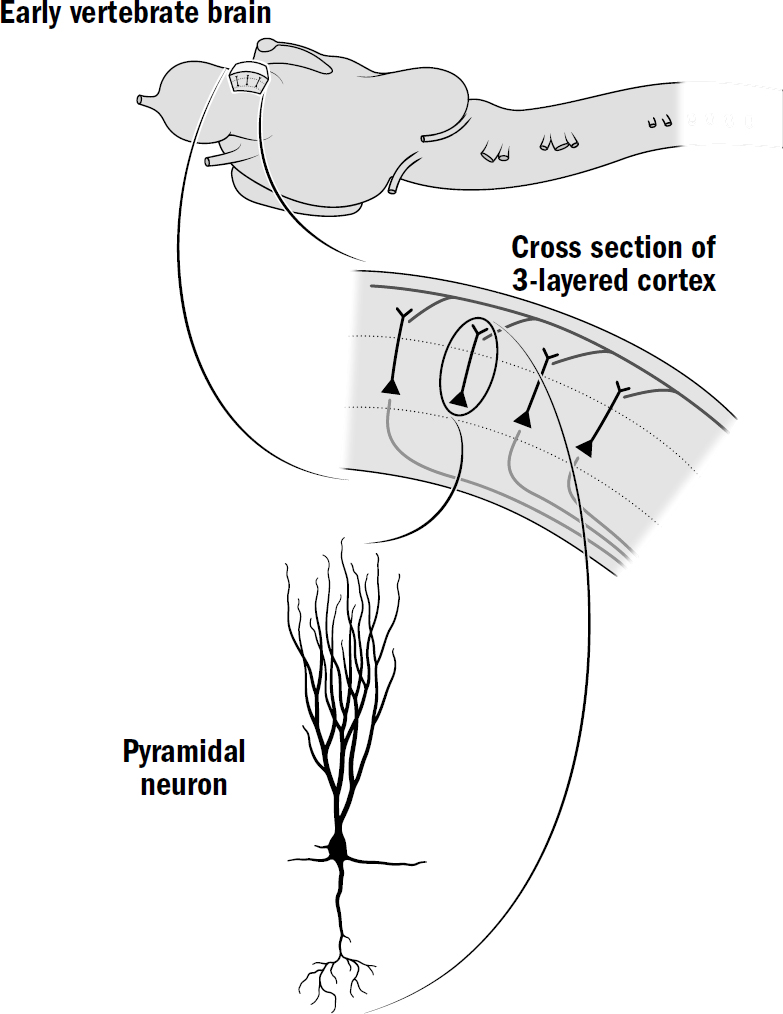

Figure 7.5: The cortex of early vertebrates

Original art by Rebecca Gelernter

The Cortex

Olfactory neurons of fish send their output to a structure at the top of the brain called the cortex. The cortex of simpler vertebrates, like the lamprey fish and reptiles, is made up of a thin sheet of three layers of neurons.

In the first cortex evolved a new morphology of neuron, the pyramidal neuron, named for its pyramid-like shape. These pyramidal neurons have hundreds of dendrites and receive inputs across thousands of synapses. These were the first neurons designed for the purpose of recognizing patterns.

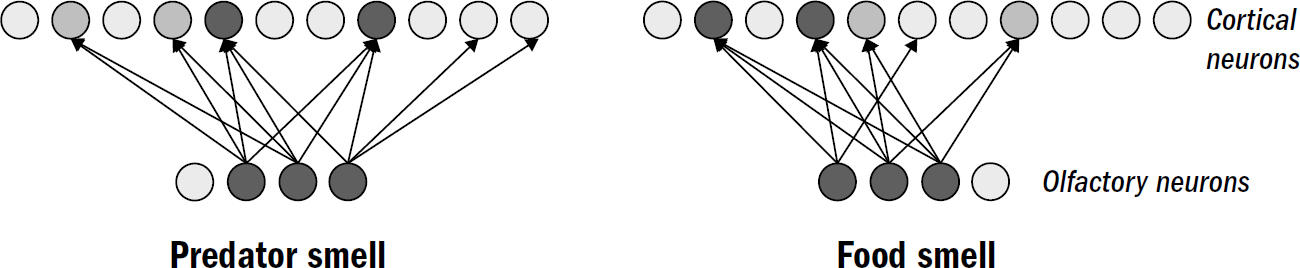

Olfactory neurons send their signals to the pyramidal neurons of the cortex. This network of olfactory input to the cortex has two interesting properties. First, there is a large dimensionality expansion—a small number of olfactory neurons connect to a much larger number of cortical neurons. Second, the connections are sparse; a given olfactory cell will connect to only a subset of these cortical cells. These two seemingly innocuous features of wiring may solve the discrimination problem.

Figure 7.6: Expansion and sparsity (also called expansion recoding) can solve the discrimination problem

Original art by Rebecca Gelernter

Using figure 7.6 you can intuit why expansion and sparsity achieve this. Even though the predator-smell and food-smell patterns are overlapping, the cortical neurons that get input from all the activated neurons will be different. As such, the pattern that gets activated in the cortex will be different despite the fact that the input is overlapping. This operation is sometimes called pattern separation, decorrelation, or orthogonalization.
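A toy version of this wiring shows the effect in numbers. The scheme below is hand-built for illustration and is not a model of real cortex: eight olfactory neurons fan out to twenty-eight cortical cells (expansion), each cortical cell listens to just two olfactory neurons (sparsity), and a cortical cell fires only when both of its inputs are active.

```python
from itertools import combinations

N_OLFACTORY = 8

# Hypothetical wiring: one cortical cell for every pair of olfactory neurons.
CORTICAL_WIRING = list(combinations(range(N_OLFACTORY), 2))

def cortical_pattern(active_inputs: set[int]) -> set[int]:
    """A cortical cell fires only when both of its two inputs are active."""
    return {
        cell
        for cell, (a, b) in enumerate(CORTICAL_WIRING)
        if a in active_inputs and b in active_inputs
    }

def overlap(p: set[int], q: set[int]) -> float:
    """Shared neurons as a fraction of all neurons active in either pattern."""
    return len(p & q) / len(p | q)

predator_smell = {0, 1, 2, 3}
food_smell = {2, 3, 4, 5}      # shares two of its four neurons with the predator smell

input_overlap = overlap(predator_smell, food_smell)
cortex_overlap = overlap(cortical_pattern(predator_smell), cortical_pattern(food_smell))

print(f"cortical cells: {len(CORTICAL_WIRING)}")
print(f"overlap at the nose:   {input_overlap:.2f}")
print(f"overlap in the cortex: {cortex_overlap:.2f}")
```

The two smells share a third of their active neurons at the nose but less than a tenth in this toy cortex, so they are far easier to tell apart after the expansion.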

Neuroscientists have also found hints of how the cortex might solve the problem of generalization. Pyramidal cells of the cortex send their axons back onto themselves, synapsing on hundreds to thousands of other nearby pyramidal cells. This means that when a smell pattern activates a pattern of pyramidal neurons, this ensemble of cells gets automatically wired together through Hebbian plasticity.* The next time a pattern shows up, even if it is incomplete, the full pattern can be reactivated in the cortex. This trick is called auto-association; neurons in the cortex automatically learn associations with themselves. This offers a solution to the generalization problem—the cortex can recognize a pattern that is similar but not the same.
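Computer scientists have a classic toy model of this idea, the Hopfield network, and a small one is sketched below. It is a simplification offered for illustration, not a claim about how real pyramidal cells work: each cell is either on (+1) or off (-1), the two stored patterns are made up, and the Hebbian rule strengthens the connection between any two cells that take the same state in a stored pattern.

```python
def train_hebbian(patterns):
    """Hebbian wiring: cells that share a state in a stored pattern get linked."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:                       # no cell connects to itself
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, steps=5):
    """Let each cell repeatedly adopt the state its recurrent inputs vote for."""
    state = list(cue)
    for _ in range(steps):
        state = [
            1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
            for row in weights
        ]
    return state

# Two hypothetical cortical patterns (+1 = active cell, -1 = silent cell).
smell_a = [1, 1, 1, 1, -1, -1, -1, -1]
smell_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([smell_a, smell_b])

# The next encounter is similar but not the same: the first cell fails to fire.
degraded_a = [-1, 1, 1, 1, -1, -1, -1, -1]
recovered = recall(weights, degraded_a)

print("cue:      ", degraded_a)
print("recovered:", recovered)
print("matches stored smell A:", recovered == smell_a)
```

The network is never told which memory the cue belongs to. The recurrent connections pull the degraded pattern back to the stored one.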

Auto-association reveals an important way in which vertebrate memory differs from computer memory. Auto-association suggests that vertebrate brains use content-addressable memory—memories are recalled by providing subsets of the original experience, which reactivate the original pattern. If I tell you the beginning of a story you’ve heard before, you can recall the rest; if I show you half a picture of your car, you can draw the rest. However, computers use register-addressable memory—memories that can be recalled only if you have the unique memory address for them. If you lose the address, you lose the memory.
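The difference can be illustrated with a loose software analogy. Both stores below are hypothetical, and a Python dictionary is only a stand-in for addressed memory. The word-matching rule for content-based recall is likewise an assumption chosen for brevity.

```python
# Address-based storage: a memory is reachable only through its exact address.
memory_by_address = {0x1A: "red car, dented door, roof rack"}

def recall_by_address(address):
    """Return the memory at this address, or None if nothing is stored there."""
    return memory_by_address.get(address)

# Content-based storage: a memory is reachable through any fragment of itself.
memories = [
    "red car, dented door, roof rack",
    "blue bicycle, wicker basket",
]

def words(text):
    return set(text.replace(",", "").split())

def recall_by_content(fragment):
    """Return the stored memory that shares the most words with the fragment."""
    cue = words(fragment)
    return max(memories, key=lambda m: len(cue & words(m)))

print(recall_by_address(0x1A))             # the right address finds the memory
print(recall_by_address(0x1B))             # a wrong address finds nothing
print(recall_by_content("dented door"))    # a fragment retrieves the whole memory
```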

Auto-associative memory does not have this challenge of losing memory addresses, but it does struggle with a different form of forgetfulness. Register-addressable memory enables computers to segregate where information is stored, ensuring that new information does not overwrite old information. In contrast, auto-associative information is stored in a shared population of neurons, which exposes it to the risk of accidentally overwriting old memories. Indeed, as we will see, this is an essential challenge with pattern recognition using networks of neurons.

Catastrophic Forgetting (or The Continual Learning Problem, Part 2)

In 1989, Neal Cohen and Michael McCloskey were trying to teach artificial neural networks to do math. Not complicated math, just addition. They were neuroscientists at Johns Hopkins, and they were both interested in how neural networks stored and maintained memories. This was before artificial neural networks had entered the mainstream, before they had proven their many practical uses—neural networks were still something to be probed for missing capabilities and unseen limitations.

Cohen and McCloskey converted numbers into patterns of neurons, then trained a neural network to do addition by transforming two input numbers (e.g., 1 and 3) into the correct output number (in this case, 4). They first taught the network to add ones (1+2, 1+3, 1+4, and so on) until it got good at it. Then they taught the same network to add twos (2+1, 2+2, 2+3, and so on) until it got good at this as well.

But then they noticed a problem. After they taught the network to add twos, it forgot how to add ones. When they propagated errors back through the network and updated the weights to teach it to add twos, the network had simply overridden the memories of how to add ones. It successfully learned the new task at the expense of the previous task.

Cohen and McCloskey referred to this property of artificial neural networks as the problem of catastrophic forgetting. This was not an esoteric finding but a ubiquitous and devastating limitation of neural networks: when you train a neural network to recognize a new pattern or perform a new task, you risk interfering with the network’s previously learned patterns.
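The effect shows up even in the smallest possible network. The sketch below is not Cohen and McCloskey's addition experiment. It is a made-up toy with a single linear neuron and two tasks that happen to share one weight, trained by ordinary gradient descent.

```python
def train(weights, examples, rounds, lr=0.1):
    """Gradient descent on squared error for a single linear neuron."""
    w = list(weights)
    for _ in range(rounds):
        for x, target in examples:
            error = sum(wi * xi for wi, xi in zip(w, x)) - target
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w

def squared_error(w, example):
    x, target = example
    return (sum(wi * xi for wi, xi in zip(w, x)) - target) ** 2

# Two hypothetical tasks, each a single (input, target) pair.
# Both rely on the first weight.
TASK_A = ([1.0, 1.0], 1.0)
TASK_B = ([1.0, 0.0], -1.0)

start = [0.0, 0.0]

after_a = train(start, [TASK_A], rounds=2000)                # learn task A
after_a_then_b = train(after_a, [TASK_B], rounds=2000)       # then learn task B
interleaved = train(start, [TASK_A, TASK_B], rounds=2000)    # or learn both together

error_a_before = squared_error(after_a, TASK_A)
error_a_after = squared_error(after_a_then_b, TASK_A)

print(f"task A error after learning A:      {error_a_before:.4f}")
print(f"task A error after then learning B: {error_a_after:.4f}")
print(f"task A error when interleaved:      {squared_error(interleaved, TASK_A):.4f}")
print(f"task B error when interleaved:      {squared_error(interleaved, TASK_B):.4f}")
```

A set of weights that solves both tasks exists, and interleaved training finds it. Sequential training does not: learning task B drags the shared weight away from where task A needed it.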

How do modern AI systems overcome this problem? Well, they don’t yet. Programmers merely avoid the problem by freezing their AI systems after they are trained. We don’t let AI systems learn things sequentially; they learn things all at once and then stop learning.

The artificial neural networks that recognize faces, drive cars, or detect cancer in radiology images do not learn continually from new experiences. As of this book going to print, even ChatGPT, the famous chatbot released by OpenAI, does not continually learn from the millions of people who speak to it. It too stopped learning the moment it was released into the world. These systems are not allowed to learn new things because of the risk that they will forget old things (or learn the wrong things). So modern AI systems are frozen in time, their parameters locked down; they are allowed to be updated only when retrained from scratch with humans meticulously monitoring their performance on all the relevant tasks.

The humanlike artificial intelligences we strive to create are, of course, not like this. Rosey from The Jetsons learned as you spoke to her—you could show her how to play a game and she could then play it without forgetting how to play other games.

While we are only just beginning to explore how to make continual learning work, animal brains have been doing so for a long time.

We saw in chapter 4 that even early bilaterians learned continually; the connections between neurons were strengthened and weakened with each new experience. But these early bilaterians never faced the problem of catastrophic forgetting because they never learned patterns in the first place. If things are recognized in the world using only individual sensory neurons, then the connection between these sensory neurons and motor neurons can be strengthened and weakened without interfering with each other. It is only when knowledge is represented in a pattern of neurons, like in artificial neural networks or in the cortex of vertebrates, that learning new things risks interfering with the memory of old things.

As soon as pattern recognition evolved, so too did a solution to the problem of catastrophic forgetting. Indeed, even fish avoid catastrophic forgetting fantastically well. Train a fish to escape from a net through a small escape hatch, leave the fish alone for an entire year, and then test it again. During this long stretch of time, its brain will have received a constant stream of patterns, learning continually to recognize new smells, sights, and sounds. And yet, when you place the fish back in the same net an entire year later, it will remember how to get out with almost the same speed and accuracy as it did the year before.

There are several theories about how vertebrate brains do this. One theory is that the cortex’s ability to perform pattern separation shields it from the problem of catastrophic forgetting; by separating incoming patterns in the cortex, patterns are inherently unlikely to interfere with each other.

Another theory is that learning in the cortex selectively occurs only during moments of surprise; only when the cortex sees a pattern that passes some threshold of novelty are the weights of synapses allowed to change. This enables learned patterns to remain stable for long periods of time, as learning occurs only selectively. There is some evidence that the wiring between the cortex and the thalamus—both structures that emerged alongside each other in early vertebrates—is always measuring the level of novelty between incoming sensory data through the thalamus and the patterns represented in the cortex. If there is a match, then no learning is allowed, hence noisy inputs don’t interfere with existing learned patterns. However, if there is a mismatch—if an incoming pattern is sufficiently new—then this triggers a process of neuromodulator release, which triggers changes in synaptic connections in the cortex, enabling it to now learn this new pattern.
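The logic of this second theory can be sketched as a simple gate. Everything in the example is hypothetical: the class name, the similarity measure, the threshold, and the patterns. It illustrates the match-or-learn idea, not the actual circuitry between cortex and thalamus.

```python
def similarity(a, b):
    """Fraction of positions where two equal-length binary patterns agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

class NoveltyGatedMemory:
    def __init__(self, match_threshold=0.75):
        self.match_threshold = match_threshold
        self.learned = []    # stored patterns, never modified once learned

    def observe(self, pattern):
        """Return True if the pattern was surprising enough to trigger learning."""
        best_match = max((similarity(pattern, p) for p in self.learned), default=0.0)
        if best_match >= self.match_threshold:
            return False                       # match: learning stays switched off
        self.learned.append(list(pattern))     # mismatch: learn the new pattern
        return True

memory = NoveltyGatedMemory()
predator = [1, 1, 0, 0, 1, 0, 1, 0]
noisy_predator = [1, 1, 0, 0, 1, 0, 1, 1]      # one neuron differs
food = [0, 0, 1, 1, 0, 1, 0, 1]

results = [memory.observe(p) for p in (predator, noisy_predator, food)]
print(results)
print("patterns stored:", len(memory.learned))
```

The noisy repeat of the predator smell is close enough to count as a match, so nothing is rewritten. Only the genuinely new food smell opens the gate.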

We do not yet understand exactly how simple vertebrate brains, like those of fish, reptiles, and amphibians, are capable of overcoming the challenges of catastrophic forgetting. But the next time you spot a fish, you will be in the presence of the answer, hidden in its small cartilaginous head.

The Invariance Problem

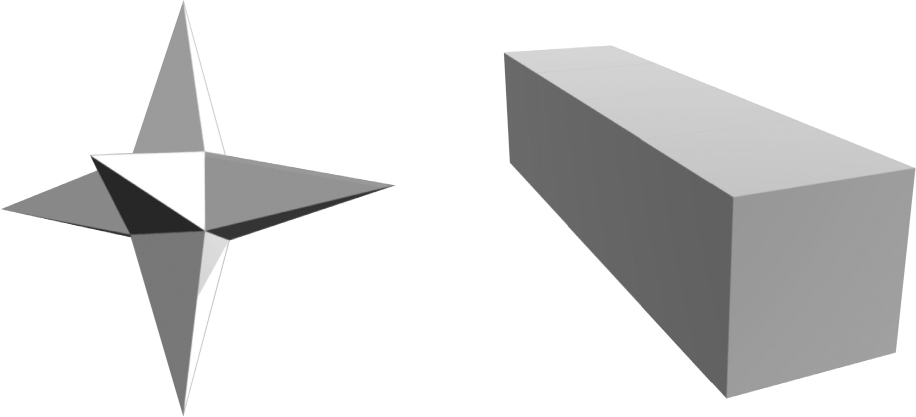

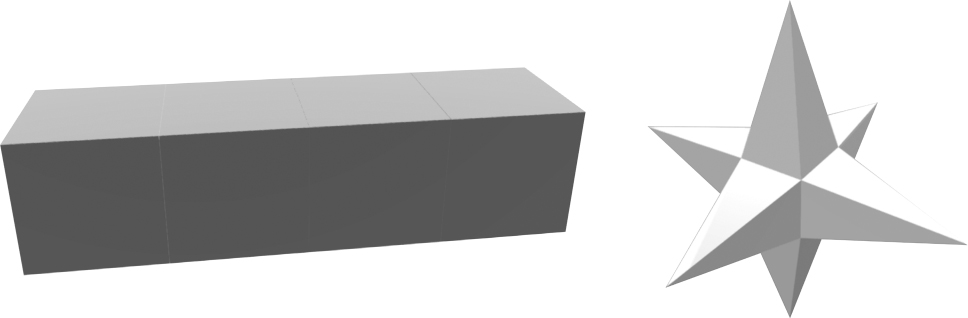

Look at the two objects below.

As you view each object, a specific pattern of neurons in the backs of your eyes lights up. The minuscule half-millimeter-thick membrane in the back of the eye—the retina—contains over one hundred million neurons of five different types. Each region of the retina receives input from a different location of the visual field, and each type of neuron is sensitive to different colors and contrasts. As you view each object, a unique pattern of neurons activates a symphony of spikes. Like the olfactory neurons that make up a smell pattern, the neurons in the retina make up a visual pattern; your ability to see exists only in your ability to recognize these visual patterns.

Figure 7.7

Free 3D objects found on SketchFab.com

The activated neurons in the retina send their signals to the thalamus, which then sends these signals to the part of the cortex that processes visual input (the visual cortex). The visual cortex decodes and memorizes the visual pattern the same way the olfactory cortex decodes and memorizes smell patterns. This is, however, where the similarity between sight and smell ends.

Look at the objects below. Can you identify which shapes are the same as the ones in the first picture?

Figure 7.8

Free 3D objects found on SketchFab.com

The fact that it is so effortlessly obvious to you that the objects in figure 7.8 are the same as those in figure 7.7 is mind-blowing. Depending on where you focus, the activated neurons in your retina could be completely non-overlapping, with not a single shared neuron, and yet you could still identify them as the same object.

The pattern of olfactory neurons activated by the smell of an egg is the same no matter the rotation, distance, or location of the egg. The same molecules diffuse through the air and activate the same olfactory neurons. But this is not the case for other senses such as vision.

The same visual object can activate different patterns depending on its rotation, distance, or location in your visual field. This creates what is called the invariance problem: how to recognize a pattern as the same despite large variances in its inputs.
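A tiny made-up retina shows how stark the problem is. In the sketch below, the grid size and the square are assumptions chosen for illustration: the same two-by-two square is drawn at two locations on an eight-by-eight grid of pixels.

```python
def draw_square(grid_size, top, left, side=2):
    """Return the set of active 'retinal' pixels for a filled square."""
    return {
        (row, col)
        for row in range(top, top + side)
        for col in range(left, left + side)
        if row < grid_size and col < grid_size
    }

GRID = 8
square_here = draw_square(GRID, top=1, left=1)
square_there = draw_square(GRID, top=5, left=4)

shared_pixels = square_here & square_there

print("active pixels, first square: ", sorted(square_here))
print("active pixels, second square:", sorted(square_there))
print("pixels in common:", len(shared_pixels))
```

It is the same object, yet the two input patterns have not a single active pixel in common.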

Nothing we have reviewed about auto-association in the cortex provides a satisfactory explanation for how the brain so effortlessly does this. The auto-associative networks we described cannot identify an object when it is viewed from a completely different angle that you have never seen before. An auto-associative network would treat these as different objects because the input neurons are completely different.

This is not only a problem with vision. When you recognize the same set of words spoken by the high-pitched voice of a child and the low-pitched voice of an adult, you are solving the invariance problem. The neurons activated in your inner ear are different because the pitch of the sound is completely different, and yet you can still tell they are the same words. Your brain is somehow recognizing a common pattern despite huge variances in the sensory input.

In 1958, decades before Cohen and McCloskey discovered the problem of catastrophic forgetting, a different team of neuroscientists, also at Johns Hopkins, were exploring a different aspect of pattern recognition.

David Hubel and Torsten Wiesel anesthetized cats, put electrodes into their cortices, and recorded the activity of neurons as they presented the cats with different visual stimuli. They presented dots, lines, and various shapes in different locations in the cats’ visual field. They wanted to know how the cortex encoded visual input.

In mammal brains (cats, rats, monkeys, humans, et cetera), the part of the cortex that first receives input from the eye is called V1 (the first visual area). Hubel and Wiesel discovered that individual neurons in V1 were surprisingly selective about what they responded to. Some neurons were activated only by vertical lines at a specific location in a cat’s visual field. Other neurons were activated only by horizontal lines at some other location, and still others by 45-degree lines at a different location. The entire surface area of V1 makes up a map of the cat’s full field of view, with individual neurons selective for lines of specific orientations at each location.
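A crude model of one such neuron takes only a few lines. The numbers are invented for illustration and are not fitted to any recording: the model neuron watches a three-by-three window of a five-by-five image, is excited by light in the middle column of its window, and is inhibited by light on either side.

```python
# Hypothetical 5x5 images (1 = light, 0 = dark).
VERTICAL_LINE = [[0, 0, 1, 0, 0] for _ in range(5)]
HORIZONTAL_LINE = [[1] * 5 if row == 2 else [0] * 5 for row in range(5)]

# Receptive field: excited by the centre column, inhibited by its flanks.
VERTICAL_KERNEL = [[-1, 2, -1]] * 3

def v1_response(image, kernel, top, left):
    """Activity of a model neuron whose 3x3 window starts at (top, left)."""
    total = sum(
        kernel[r][c] * image[top + r][left + c]
        for r in range(3)
        for c in range(3)
    )
    return max(0, total)    # activity cannot go below zero

on_target = v1_response(VERTICAL_LINE, VERTICAL_KERNEL, top=1, left=1)
wrong_orientation = v1_response(HORIZONTAL_LINE, VERTICAL_KERNEL, top=1, left=1)
wrong_location = v1_response(VERTICAL_LINE, VERTICAL_KERNEL, top=1, left=0)

print("vertical line in the window's centre:", on_target)
print("horizontal line in the same window:  ", wrong_orientation)
print("vertical line, window shifted over:  ", wrong_location)
```

The model neuron responds only when a line of the right orientation falls in the right place.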

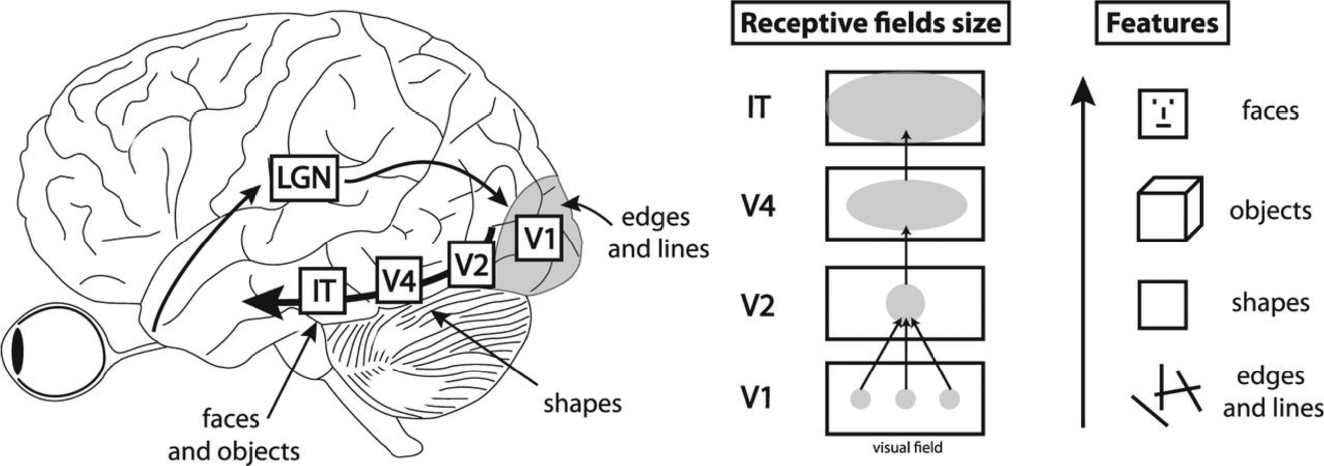

V1 decomposes the complex patterns of visual input into simpler features, like lines and edges. From here, the visual system creates a hierarchy: V1 sends its output to a nearby region of cortex called V2, which then sends information to an area called V4, which then sends information to an area called IT.

Neurons at progressively higher levels of this cortical hierarchy become sensitive to progressively more sophisticated features of visual stimuli—neurons in V1 are primarily activated by basic edges and lines, neurons in V2 and V4 are sensitive to more complex shapes and objects, and neurons in IT are sensitive to complex whole objects such as specific faces. A neuron in V1 responds only to input in a specific region of one’s visual field; in contrast, a neuron in IT can detect objects across any region of the visual field. While V1 decomposes pictures into simple features, as visual information flows up the hierarchy, it is pieced back together into whole objects.

In the late 1970s, well over twenty years after Hubel and Wiesel’s initial work, a computer scientist by the name of Kunihiko Fukushima was trying to get computers to recognize objects in pictures. Despite his best attempts, he couldn’t get standard neural networks, like those depicted earlier in the chapter, to successfully do it; even small changes in the location, rotation, or size of an object activated entirely different sets of neurons, which kept networks from recognizing different patterns as the same object—a square over here would be incorrectly perceived as different from the same square over there. He had stumbled on the invariance problem. And he knew that somehow, brains solved it.

Fukushima had spent the prior four years working in a research group that included several neurophysiologists, and so he was familiar with the work of Hubel and Wiesel. Hubel and Wiesel had discovered two things. First, visual processing in mammals was hierarchical, with lower levels having smaller receptive fields and recognizing simpler features, and higher levels having larger receptive fields and recognizing more complex objects. Second, at a given level of the hierarchy, neurons were all sensitive to similar features, just in different places. For example, one area of V1 would look for lines at one location, and another area would look for lines at another location, but they were all looking for lines.

Fukushima had a hunch that these two findings were clues as to how brains solved the invariance problem, and so Fukushima invented a new architecture of artificial neural networks, one designed to capture these two ideas discovered by Hubel and Wiesel. His architecture departed from the standard approach of taking a picture and throwing it into a fully connected neural network. His architecture first decomposed input pictures into multiple feature maps, like V1 seemed to do. Each feature map was a grid that signaled the location of a feature—such as vertical or horizontal lines—within the input picture. This process is called a convolution, hence the name applied to the type of network that Fukushima had invented: convolutional neural networks.*

After these feature maps identified certain features, their output was compressed and passed to another set of feature maps that could combine them into higher-level features across a wider area of the picture, merging lines and edges into more complex objects. All this was designed to be analogous to the visual processing of the mammalian cortex. And, amazingly, it worked.
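The two steps, building feature maps and then compressing them, can be sketched in miniature. This is an illustration under loud assumptions, not Fukushima's network: there is only one layer, the two line detectors are hand-written rather than learned, and the compression step keeps just the single strongest response across the whole picture.

```python
def convolve(image, kernel):
    """Slide one shared kernel over the image to build a feature map."""
    k = len(kernel)
    size = len(image) - k + 1
    return [
        [
            max(0, sum(kernel[r][c] * image[i + r][j + c]
                       for r in range(k) for c in range(k)))
            for j in range(size)
        ]
        for i in range(size)
    ]

def global_max_pool(feature_map):
    """Compress a feature map to 'how strongly did this feature appear anywhere?'"""
    return max(max(row) for row in feature_map)

def image_with_vertical_bar(column, size=6):
    return [[1 if c == column else 0 for c in range(size)] for _ in range(size)]

# Hand-written detectors standing in for learned feature maps.
VERTICAL = [[-1, 2, -1]] * 3
HORIZONTAL = [[-1, -1, -1], [2, 2, 2], [-1, -1, -1]]

def features(image):
    return (
        global_max_pool(convolve(image, VERTICAL)),
        global_max_pool(convolve(image, HORIZONTAL)),
    )

bar_left = image_with_vertical_bar(1)
bar_right = image_with_vertical_bar(4)

left_features = features(bar_left)
right_features = features(bar_right)

print("bar on the left: ", left_features)
print("bar on the right:", right_features)
```

The two pictures share no active pixels, yet both come out of the network with the same description: a strong vertical line and no horizontal line.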

Figure 7.10: A convolutional neural network

Figure designed by Max Bennett. The dog photo is from Oscar Sutton (purchased on Unsplash).

Most modern AI systems that use computer vision, from your self-driving car to the algorithms that detect tumors in radiology images, use Fukushima’s convolutional neural networks. AI was blind, but now can see, a gift that can be traced all the way back to probing cat neurons over fifty years ago.

The brilliance of Fukushima’s convolutional neural network is that it imposes a clever “inductive bias.” An inductive bias is an assumption made by an AI system by virtue of how it is designed. Convolutional neural networks are designed with the assumption of translational invariance, that a given feature in one location should be treated the same as that same feature but in a different location. This is an impregnable fact of our visual world: the same thing can exist in different places without the thing being different. And so, instead of trying to get an arbitrary web of neurons to learn this fact about the visual world, which would require too much time and data, Fukushima simply encoded this rule directly into the architecture of the network.
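A rough count shows how much this assumption saves. The image size and kernel size below are arbitrary choices for illustration, and bias terms are ignored. The comparison is between a fully connected layer that maps an image onto a same-sized grid of outputs and a convolutional layer that produces one feature map.

```python
IMAGE_SIDE = 28                        # hypothetical 28x28 grayscale picture
N_PIXELS = IMAGE_SIDE * IMAGE_SIDE

# Fully connected: every output unit has its own weight for every pixel.
fully_connected_weights = N_PIXELS * N_PIXELS

# Convolutional: one 3x3 kernel is reused at every location in the picture.
KERNEL_SIDE = 3
convolutional_weights = KERNEL_SIDE * KERNEL_SIDE

print(f"fully connected layer: {fully_connected_weights:,} weights to learn")
print(f"one convolutional map: {convolutional_weights} weights to learn")
```

Because the same handful of weights is reused everywhere, whatever the network learns about a feature in one place applies automatically to every other place.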

Despite being inspired by the brain, convolutional neural networks (CNNs) are, in fact, a poor approximation of how brains recognize visual patterns. First, visual processing isn’t as hierarchical as originally thought; input frequently skips levels and branches out to multiple levels simultaneously. Second, CNNs build in translational invariance, but they don’t inherently understand rotations of 3D objects, and thus don’t do a great job of recognizing objects when rotated.* Third, modern CNNs are still founded on supervision and backpropagation—with its magical simultaneous updating of many connections—while the cortex seems to recognize objects without supervision and without backpropagation.

And fourth, and perhaps most important, CNNs were inspired by the mammal visual cortex, which is much more complex than the simpler visual cortex of fish; and yet the fish brain—lacking any obvious hierarchy or the other bells and whistles of the mammalian cortex—is still eminently capable of solving the invariance problem.

In 2022, the comparative psychologist Caroline DeLong at Rochester Institute of Technology trained goldfish to tap pictures to get food. She presented the goldfish with two pictures. Whenever the fish tapped specifically a picture of a frog, she gave the fish food. Fish quickly learned to swim right up to the frog picture whenever it was presented. DeLong then changed the experiment. She presented the picture of the same frog but from new angles that the fish had never seen before. If fish were unable to recognize the same object from different angles, they would have treated this like any other photo. And yet, amazingly, the fish swam right up to the new frog picture, clearly able to immediately recognize the frog despite the new angle, just as you recognized the 3D objects a few pages ago.

How the fish brain does this is not understood. While auto-association captures some principles of how pattern recognition works in the cortex, clearly even the cortex of fish is doing something far more sophisticated. Some theorize that the vertebrate brain’s ability to solve the invariance problem derives not from the unique cortical structures in mammals, but from the complex interactions between the cortex and the thalamus, interactions that have been present since the first vertebrates. Perhaps the thalamus—a ball-shaped structure at the center of the brain—operates like a three-dimensional blackboard, with the cortex providing initial sensory input and the thalamus intelligently routing this sensory information around other areas of the cortex, together rendering full 3D objects from 2D input, thereby flexibly able to recognize rotated and translated objects.

Perhaps the best lesson from CNNs is not the success of the specific assumptions they attempt to emulate—such as translational invariance—but the success of assumptions themselves. Indeed, while CNNs may not capture exactly how the brain works, they reveal the power of a good inductive bias. In pattern recognition, it is good assumptions that make learning fast and efficient. The vertebrate cortex surely has such an inductive bias; we just don’t know what it is.

In some ways, the tiny fish brain surpasses some of our best computer-vision systems. CNNs require incredible amounts of data to understand changes in rotations and 3D objects, but a fish seems to recognize new angles of a 3D object in one shot.

In the predatory arms race of the Cambrian, evolution shifted from arming animals with new sensory neurons for detecting specific things to arming animals with general mechanisms for recognizing anything.

With this new ability of pattern recognition, vertebrate sensory organs exploded with complexity, quickly flowering into their modern form. Noses evolved to detect chemicals; inner ears evolved to detect frequencies of sound; eyes evolved to detect sights. The coevolution of the familiar sensory organs and the familiar brain of vertebrates is not a coincidence—they each facilitated the other’s growth and complexity. Each incremental improvement to the brain’s pattern recognition expanded the benefits to be gained by having more detailed sensory organs; and each incremental improvement in the detail of sensory organs expanded the benefits to be gained by more sophisticated pattern recognition.

In the brain, the result was the vertebrate cortex, which somehow recognizes patterns without supervision, somehow accurately discriminates overlapping patterns and generalizes patterns to new experiences, somehow continually learns patterns without suffering from catastrophic forgetting, and somehow recognizes patterns despite large variances in its input.

The elaboration of pattern recognition and sensory organs, in turn, also found itself in a feedback loop with reinforcement learning. It is also not a coincidence that pattern recognition and reinforcement learning emerged at the same time in evolution. The greater the brain’s ability to learn arbitrary actions in response to things in the world, the greater the benefit to be gained from recognizing more things in the world. The more unique objects and places a brain can recognize, the more unique actions it can learn to take. And so the cortex, basal ganglia, and sensory organs evolved together, all emerging from the same machinations of reinforcement learning.