It’s not an experiment if you know it’s going to work.

—JEFF BEZOS

TO GET HER innovation up and running, Jean could have prepared a business plan addressing all of the issues she knew would come—legal problems, the lack of technology capabilities, the need to introduce a radically different editorial process, and many more. In business school, we are taught that is the step that comes next. But instead, with her boss on board, Jean took a more modern approach.

“It used to be strategy-strategy-strategy-implement. Now by the time you implement, your strategy is outdated,” her boss Jon Yaged explained. They decided to adopt an agile or scrum approach (more about those later) in which they asked, “What is the one thing I need to get done today?” They would conduct a low-cost experiment, learn from it, and adapt.

Of all the value blockers the idea would face, Jon and Jean believed that getting author and reader adoption was the most urgent. If they could prove that would-be self-published authors liked the idea and that romance readers would get engaged, they would have greater fodder to convince the company to implement the innovation. The potential of the opportunity would make the legal, operational, talent, and process issues worth solving.

Unfortunately, most organizations expect the person with the idea to identify all possible areas of concern and solve them before giving permission to act. To proceed with a new idea, most innovators are told, in effect, to “prove it.” This business-planning approach is so deeply ingrained that it has become reflexive and automatic.

We even have language to describe the dilemma. Established organizations are oriented toward a prove–plan–execute model. Internal innovators instead necessarily gravitate toward an act–learn–build model: take action, learn from it, and then build based on the last learning. It’s ALB versus PPE.

The problem is that a PPE approach rarely works for truly innovative ideas because it’s impossible to prove a truly new idea by analyzing existing data. You can’t build out a financial projection if you do not yet know how users will react to your innovation. You need to create the data (how users will react). You can’t look it up.

Sometimes You Have to Disobey the System

If a new idea requires experimentation to prove it will work, and if the system demands proof before the innovator is allowed to experiment, we have only two options for shattering this catch-22. Either the system must change, or the innovator must circumvent the system. Historically, innovators choose the latter, opting to operate in stealth mode.

If you’ve ever navigated London’s subway system, or just about any modern subway system for that matter, you should thank Harry Beck for disobeying. Before 1933, the London Underground map showed a true geographical representation of the actual layout of the city. Stations in the city center were tightly crunched together on the map, and the distances between stations in outlying regions were shown as long blank spaces. The map was technically accurate and comprehensive but almost impossible to read. Commuters hated it.

Harry Beck, then a draftsman working for the London Underground Signals Office, had a different idea. In his spare time, he began drafting a mock-up version of a simplified yet functional map: horizontal, vertical, and forty-five-degree-angle lines with equally spaced dots denoting station stops and transfer points. He showed his map to management. No, they said, “too revolutionary.”

But Beck wouldn’t take no for an answer. He revised the map, got a rejection, and incorporated the feedback into the next design. He considered each attempt an experiment to learn and improve his design.

Eventually, he convinced authorities to try his map with riders. The first print run of 300,000 copies was snapped up in a few days, and a million more copies were quickly printed. Today, his iconic map inspires subway maps around the world.

The moral of this story: when your company asks for proof, sometimes you have to disobey the system in order to experiment. That pattern is quite common among internal innovators. 3M’s innovations, for example, are littered with stories of disobedience. When Arthur Fry, a 3M marketer, had his idea for the Post-it Note rejected, he made a batch of Post-its on his own and shared them with executive assistants in the company.

He first thought the product would serve as a paper bookmark. But as it turned out, the 3M executive assistants found entirely new uses for Fry’s invention. They wrote reminders on the bookmarks and stuck them around the desk space. They wrote “sign here” on them and stuck them to documents needing their bosses’ signatures.

Had Fry followed a PPE approach, his intended market—bookmarks—would have been too small to gain the interest of 3M. But by following an ALB approach, taking action on an experiment, he discovered an entirely new market. The Post-it Note quickly became the best-selling office product in the world. Even in today’s digital world, more than fifty billion Post-it Notes are sold every year.

Learn by Doing

Fortunately, that internal innovator’s dilemma (disobey my company’s rules or do battle with the bureaucracy) has begun to change. Over the past five to ten years, forward-looking organizations have come to realize that the prove-it-do-it approach presents two critical issues.

First, they are recognizing an unassailable bit of logic: when an idea is novel, data that would prove its viability do not yet exist. Sometimes you just need to act and learn. For example, when HP developed the first electronic calculator, market research promised little potential for the innovation. An entrenched competitor was already able to satisfy customer needs at a far superior price point: the slide rule. But instead of looking for proof, HP leadership decided to act. They approved the production of one thousand units, just to see how the market would react. Within a few years, HP was selling one thousand units per day.

Second, in fast-paced environments, the cost of taking time out to do analysis can be significant. Wait too long, and you may lose your window to act. At McKinsey, we were taught to seek an “80 percent solution”: conduct analysis until we were 80 percent confident we had the right answer. But former secretary of state and retired four-star general Colin Powell believes that in fast-moving, uncertain environments, waiting for 80 percent confidence is unreasonable and risky. He advocates a “40–70 rule.” As soon as you have enough data to be 40 percent confident, then use your gut and act. If you have waited to be 70 percent confident, you have taken too long.
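For readers who like to see a rule written down, here is a minimal sketch of the 40–70 rule as a decision function. The function name, the labels it returns, and the choice to treat exactly 70 percent as "too long" are illustrative assumptions, not anything Powell specified.

```python
def forty_seventy(confidence: float) -> str:
    """Classify a confidence level (0.0 to 1.0) under the 40-70 rule.

    Hypothetical helper: the thresholds come from the rule as described
    in the text; the returned labels are made up for illustration.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if confidence < 0.40:
        # Not enough data yet to trust your gut.
        return "gather more data"
    if confidence < 0.70:
        # Inside the 40-70 window: use your gut and act.
        return "act"
    # At 70 percent or more, you have already waited too long.
    return "act now (you waited too long)"


# Example: three hypothetical readings of how sure a team feels.
for level in (0.25, 0.55, 0.80):
    print(f"{level:.0%} confident -> {forty_seventy(level)}")
```

By contrast, the "80 percent solution" would keep every one of these three teams analyzing until the last one had already overshot Powell's window.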

The difference between 80 percent and 40–70 percent confidence may seem abstract, but the implications are profound. For fifty years, corporations have held on to the idea that if they analyzed all the issues, they could move forward into the future with confidence. In a static world, this makes sense. In a dynamic one, the calculus changes. Perhaps this is why a new approach is taking hold. It is known by various names (lean, agile, scrum, and more), but the essence is the same: take action on a small experiment, learn, and improve your idea.

The Emergence of Agile

Agile, lean, whatever you call it, is such an important concept that it’s worth taking a little time to understand its origins. Though many believe this idea is new, its roots stretch back several decades. Think of it as a confluence of three ideas: cycles of adaptation (instead of linear progressions), human-centered (instead of technology-centered) design, and agile development (instead of “waterfalls”).

Cycles of Adaptation

In the mid-1970s, a fighter pilot named John Boyd proposed a radical new approach to warfare. Instead of viewing battles as linear progressions, he suggested we should view them as loops in which each opponent cycles through four phases: observing the environment, orienting themselves to what is happening, deciding what to do, and acting. Then they repeat the process, starting with observing the results that their new decision produces.

This “OODA loop” (observe–orient–decide–act) has proven highly influential in shaping military strategy. It has also influenced a broad range of business domains, including business strategy, project management, operations, and manufacturing. Indeed, a direct link shows OODA as the seed that flowered into what we now call “lean.”

Toyota’s just-in-time manufacturing is a direct outgrowth of the OODA loop. Steve Blank, founder of the “Lean Startup” approach, said that while “the Customer Development Model with its iterative loops/pivots may sound like a new idea for entrepreneurs, it shares many features with a U.S. war-fighting strategy known as the ‘OODA Loop.’” And Jeff Sutherland, the creator of “scrum,” said, “Scrum is not an ideology. Scrum comes from fighter aviation and hardware manufacturing.” Reid Hoffman, the founder of LinkedIn, said, “In Silicon Valley, the OODA loop of your decision-making is effectively what differentiates your ability to succeed.”

Finally, the OODA loop has also influenced how companies do strategic planning. “Discovery-driven planning,” introduced by Ian MacMillan and Rita McGrath in the mid-1990s, suggests that companies track leading indicators for their strategic ideas to learn whether the strategies are working. Instead of building a new business outright, for example, you identify the key uncertainties, launch a small initiative to test those uncertainties, and then invest more if the results indicate your idea is a good one.

Human-Centered Design

About the same time that Toyota was applying OODA to continuous improvement, architects and urban planners were embracing a concept that had been proposed a decade earlier called “satisficing” (a blend of “satisfy” and “suffice”)—the idea that decision-making means exploring available alternatives until an acceptable threshold is reached. Instead of looking for an optimal solution, you are continually looking for a good-enough one.

This idea marks a departure from science toward design. While science seeks the truth, design seeks a solution.

Throughout the 1980s and 1990s, thanks in great part to a series of thinkers at Stanford University (most notably David Kelley, the founder of IDEO), this search for a solution evolved into what we now call design thinking, an underlying approach that has moved through several evolutions.

Designers began designing in collaboration with users: build something, let someone use it, and see if it works. They started involving users in the design process itself, in what came to be called user-centered design. They developed the approach further to explore the entire user journey, looking at what happened before and after the user interacted with what they were designing. Then came the next evolution, called human-centered design, which is where we are today.

My father, Klaus Krippendorff, is one of the leading advocates of human-centered design. He was trained at the Ulm School, a relatively new but highly influential design school in Germany to which many of the roots of design thinking can be traced. He and like-minded scholars argue that people do not interact with objects, but with the meaning they place on those objects, so an empathetic understanding of the emotional experience of users is critical. They also argue for a wide-angle perspective: looking not just at the user, but also at the network of stakeholders that might influence adoption.

Agile Development

In the mid- to late 1990s, software programmers began to abandon the old “waterfall” approach, in which you program in a sequence of work streams, one leading to the next. These programmers instead adopted a concept they called “agile,” combining cyclical, continual improvement with human-centered design.

The agile methodology, in a nutshell, advocates for continuous planning, experimentation, and integration, moving through cycles of development, evaluation, and approval, on to the next level of development, and so forth. It quickly spread from the software world to a large community of followers in a wide swath of industries.

If we pull these three concepts together—cycles of adaptation, human-centered design, and agile—we see the key foundational elements of an approach to problem-solving that is now changing how we think about strategy and innovation. It has expanded from technology and science to society and governance and, of course, to business.

An Agile Enterprise

Until recently, these three concepts have existed as separate ideas belonging to different, mostly unrelated domains (urban planning, warfare, software development, etc.). This has contributed to the myth that the agile approach works only in some domains—creative industries and small startups—and that it is contradictory to the fundamental orientation of large organizations. But forward-looking business leaders now recognize that this agile philosophy is far more versatile and applicable, and they are urging their companies to figure out ways to embrace it.

Numerous leading firms have formalized agile approaches in how they do business, including GE, Intuit, Qualcomm, Fidelity Investments, and ING.

Federal-Mogul, a 120-year-old auto-parts manufacturer that is one of our clients, has been able to effectively design agile/lean/design experiments. Using the approach suggested in this chapter, they have rethought how engineers within their client companies can participate in the design process. This new approach, now implemented globally, helps differentiate them from the competition.

A few points are worth repeating. First, whatever you call it, agile/lean/design thinking is not a novel concept; its roots go back decades.

Second, it is not just for technology or new products. You can use it to approach any organizational or strategic effort—a new philanthropic program, pricing structure, hiring approach, process improvement, marketing message, sourcing strategy, or reporting structure, to name just a few.

Third, it is not just for startups. A growing number of large companies are recognizing that this is a superior approach to designing strategy, especially in a faster-paced, more uncertain world that rewards speed and experimentation. Eric Ries, Steve Blank’s former student and one of the most influential propagators of the “Lean Startup” movement, has now successfully applied the approach to numerous large, established organizations.

Fourth, you do not need official blessing to begin applying this approach. Even if you do not (yet) have formal approval to work on developing a new idea, you can start experimenting in a lean/agile way.

All of this is good news for internal innovators, who often must work outside of their company’s official innovation structure. It’s difficult to get approval for a $400,000 beta launch. Getting a few thousand dollars and a few days of time to run a contained experiment is a whole lot easier.

In The Innovator’s Hypothesis, Michael Schrage advocates using small teams to collaboratively (and competitively) design and implement business experiments that will matter to senior leadership. I have used that approach with my clients with considerable success. To do this, gather teams of five people, have them develop five experiments, and give them $5,000 and five weeks (working two or three days a week) to conduct an experiment. By containing the experiment cost in this way, you might be able to operate without formal approval; certainly, you can get approval more easily.

Designing an Agile/Lean Experiment

Okay, you may be thinking, what next? I’m ready to do the kind of experiments you’re talking about, but what exactly do I do? It’s a question we often get at this point. People want solid, here’s-how-to-go-about-it advice. Rather than invent something from scratch, we started with what already works.

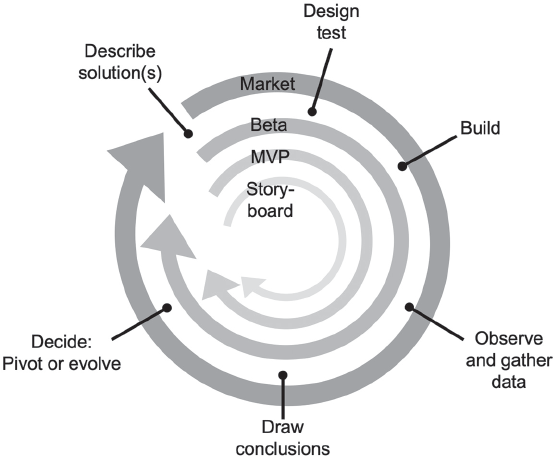

We know, for starters, that most agile/lean approaches involve four cycles of experiments:

1. Storyboard or text description: You build, at no financial cost, something you can show to prospective users to see how they react.

2. Minimum viable proposition (MVP): You build a partial solution (e.g., a website with limited functionality) and actually try to sell the solution. This will give you a clearer sense of how potential customers will react.

3. Beta version: You then invest more money to build your beta product, something with more functionality but still not marketed as complete.

4. Market version: You finalize your solution and launch something your company can stand behind as complete.

Then we lined up several agile approaches from leading experts and mapped them against the paths that successful internal innovators had described to us. We found remarkable similarities, which I synthesized into a six-step model that will help you find your solutions more quickly and at a lower cost. You will see that this model echoes John Boyd’s OODA loop, albeit in a somewhat different sequence:

1. Describe potential solutions. (Act)

2. Design a test. (Act)

3. Build. (Act)

4. Observe and gather data. (Observe)

5. Draw conclusions. (Orient)

6. Pivot or evolve. (Decide)

Think of these six steps as outlining one experiment cycle; from each cycle you will learn something new. If you are already close to a solution, maybe one cycle is all you need. But more likely, you’ll need more. If your idea exists within your area of authority, you may be able to follow the process through all four cycles—four cycles, six steps each. But we have found that it’s much more common that after the first two cycles you will need a formal business case to win approval to continue.

The key is to sequence your experiments so you start out risking very little, in terms of money and time, to learn as much as possible. When you finish one cycle, you decide whether to expand your investment and risk stepping out into the next cycle. Then, as you gain more confidence in your idea’s potential value, you increase what you are willing to invest in the experiment.
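The sequencing described above can be sketched as a simple plan: four cycles, six steps each, with a budget that grows only as you move to the next cycle. The dollar figures and the approval threshold below are hypothetical placeholders; substitute whatever limits apply in your own company.

```python
# The four experiment cycles and six steps named in the text.
CYCLES = ["storyboard", "MVP", "beta version", "market version"]
STEPS = [
    "describe potential solutions",
    "design a test",
    "build",
    "observe and gather data",
    "draw conclusions",
    "pivot or evolve",
]


def plan_experiments(budgets, approval_threshold):
    """Pair each cycle with a budget and flag cycles that need sign-off.

    `budgets` and `approval_threshold` are assumptions supplied by the
    caller; nothing here is a recommended amount.
    """
    if len(budgets) != len(CYCLES):
        raise ValueError("provide one budget per cycle")
    # Start small: a later cycle should never cost less than an earlier one.
    if any(later < earlier for earlier, later in zip(budgets, budgets[1:])):
        raise ValueError("budgets should not shrink from one cycle to the next")
    return [
        {
            "cycle": cycle,
            "steps": list(STEPS),
            "budget": budget,
            "needs_approval": budget > approval_threshold,
        }
        for cycle, budget in zip(CYCLES, budgets)
    ]


# Hypothetical budgets: the storyboard costs nothing, later cycles cost more.
for stage in plan_experiments([0, 5_000, 50_000, 400_000], approval_threshold=10_000):
    note = "needs formal approval" if stage["needs_approval"] else "within your own authority"
    print(f"{stage['cycle']}: ${stage['budget']:,} ({note})")
```

With these made-up numbers, the first two cycles stay under the threshold and the last two do not, which matches the common pattern of needing a formal business case after the second cycle.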

Let’s walk through all six steps together (figure 7.1). Remember, you may end up doing these six steps four times, through the four cycles. And you may find that the specifics of some of the steps will look different in the various cycles.

Figure 7.1 The experimentation process.

Step One: Describe Potential Solutions

You may already have a clear solution in mind, but before you commit to that vision, you’d be well served to develop several alternatives. If you invest everything in just one solution and it turns out to be flawed, you’ll have to start again from scratch. What’s worse, you will have wasted precious time and money, and you might very well have demolished the corporate support you’re going to need later. By testing multiple solutions simultaneously, you can arrive at a more optimal solution in less time.

In January 2010, Prescott Logan left his role as product manager of GE’s Drivetrain Technologies business and took the job of building the Energy Storage division as its general manager. He and his team were provided with a well-thought-through business plan that laid out the needs and solutions that the division should be built around.

In the terminology of our framework, Prescott’s team was given approval for a “market version.” They had approval and funding to execute. Their next step was to build a factory, ramp up a sales force, and launch the business. But they soon sensed that the plan contained considerable uncertainty. GE’s battery technology had the potential to transform the industry, but that potential had not been tested. Were they about to jump off a cliff?

Prescott had seen a speech by Steve Blank and wondered if Steve’s approach could help. So he sent Steve an email, out of the blue, asking for help. After some initial reluctance (his focus was entrepreneurship, not corporate innovation), Steve agreed to speak with Prescott. The prospect of exploring how lean/agile could work inside a huge organization like GE appealed to Steve, and he agreed to help.

As far as we can tell, this is the first instance of a large company formally adopting the Lean Startup methodology. Here’s what happened.

Prescott’s original business plan made a critical assumption: that intermediaries like Ericsson and Emerson Electric would underwrite the battery factory in exchange for the right to sell the batteries to their telecommunications customers. But it turned out neither Ericsson nor Emerson Electric was ready to do so until the market potential of the batteries was proven.

So, with coaching from Steve, Prescott’s team decided to step back and run some tests. They ended up testing two solutions: a battery to provide backup power to data centers, and a battery to help telecom companies in rural areas of developing countries.

Step Two: Design a Test

The success of your solution depends on several assumptions being true. GE’s version of the lean approach, which they call FastWorks, calls these assumptions “leaps of faith.” McKinsey teaches its consultants to focus on “what must be true” for a hypothesis to work. So before you interact with your customer to test the concept, it is worth sitting down with your team to brainstorm about the assumptions inherent in the solutions you are testing. Ask, “What must be true for this to work?” or, more challenging yet, “What are we assuming is true?” You will quickly uncover a number of hidden assumptions.

For example, Prescott suggests looking out for three sets of assumptions:

1. Positioning. Have you chosen a target customer that will adopt your innovation? Will they be able to get approval to buy it? Who will they need to get approval from? How long will approval take? How much are they willing to pay?

2. Product. Can you deliver on the product? Is it technically feasible? What will it cost to produce?

3. Processes. Will your internal processes support the concept? Can your accounting and customer-service processes move at the appropriate pace?

Remember the Eight P’s from the last chapter? You’ll recognize that Prescott’s checklist focuses on three of the P’s. It’s well worth the time to walk through all of the P’s, as you did earlier in the Value Blockers exercise, this time thinking not only about internal Value Blockers but about the broader market assumptions inherent in your idea. Then choose the most critical ones to test with an experiment right now. For each P, identify the key assumption (if one exists) that your innovation idea depends on, decide what metric you could use to test the validity of your assumption, and define the “success target” that would indicate that the assumption is sound. With that kind of clarity, you can strategically focus your experimentation efforts.
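One way to keep the assumption, metric, and success target together is a small record per P. The sketch below is illustrative only: the field names, the sample assumptions, and the target numbers are invented, and it assumes every metric is one where a higher reading is better.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    p: str                 # which P the assumption belongs to, e.g. "Positioning"
    statement: str         # what must be true for the idea to work
    metric: str            # what you will measure in the experiment
    success_target: float  # reading at or above which the assumption holds


def unvalidated(assumptions, observed):
    """Return assumptions whose metric is missing or below its target.

    `observed` maps metric names to measured values. A metric with no
    reading counts as unvalidated, since nothing has been learned yet.
    """
    return [
        a for a in assumptions
        if observed.get(a.metric, float("-inf")) < a.success_target
    ]


# Hypothetical test plan covering two of the P's.
plan = [
    Assumption("Positioning", "Target customers will pay for this",
               "share_of_prospects_willing_to_pay", 0.30),
    Assumption("Product", "We can build it to spec",
               "prototype_pass_rate", 0.90),
]
# Hypothetical results from one experiment cycle.
results = {"share_of_prospects_willing_to_pay": 0.45, "prototype_pass_rate": 0.80}

for a in unvalidated(plan, results):
    print(f"Revisit {a.p}: {a.statement}")
```

Writing the success target down before the experiment runs makes it harder to rationalize a disappointing result afterward.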

Step Three: Build

Next, you build something tangible that you can use to generate data to prove (or disprove) your assumptions. To keep your investment low, start small, perhaps with a storyboard or text narrative that describes the concept. You might create a PowerPoint presentation with images that communicate to a potential customer what the product could do. Or you could design a mock-up of a marketing brochure. If you are testing a physical space, you might rent a room and set up furniture in the space. If you have the capability, you might create a three-dimensional depiction of the product using 3-D printing, or build a prototype using parts bought from a store.

There’s a perfect example on your desk right now—the trackball mouse. When Apple got the idea and the technology for a computer mouse from Xerox, they began designing their version. The Xerox technology required lasers to track the mouse movements, which made the mouse far too expensive for consumer application. Apple engineers, looking for lower-cost alternatives, had the idea of using a ball that would track movement against a flat surface. They could have designed a mouse with a high-tech ball, but instead they went to a pharmacy and bought a roll-on antiperspirant to build a mock-up.

It’s remarkable how much information you can extract from would-be buyers simply by putting a conceptual piece in front of them and asking what they think. When a group of Chrysler managers were debating whether to launch a convertible version of the popular LeBaron, they proposed to CEO Lee Iacocca an expensive market-research effort involving focus groups and surveys. Iacocca suggested the team first take an existing LeBaron, cut off its roof, and drive it around to see how people reacted.

For Prescott’s first cycle (the “storyboard” in figure 7.1), his team pulled together data on the performance and specifications of their proposed battery: how quickly it would charge, how long it would last, and how much it would cost.

When you have completed the cycle for your storyboard, it is time to develop a minimum viable proposition (MVP). In the MVP cycle, you will build something that actually works, to see if you can generate sales.

The key word here is “minimum.” For instance, you might build a website that performs some of the functions but not every single one. You might put together a program that requires you to perform manually things that will be automated in a future version. You might find a partner who for now will do some of the work that you may eventually do in-house. At GE, Prescott’s team eventually built some batteries for telecom companies in rural, developing countries—but built only what they needed to run their test.

Steve Blank, who introduced the MVP concept (he uses the term “minimum viable product”), defines it as something that delivers the minimum level of performance your user will need in order to make a purchase. It’s critical, he emphasizes, because it accelerates the speed with which you learn.

If the MVP proves successful, in a later cycle you will create a beta version with more features. You will test and improve the beta, and then formally launch the market version.

Keeping the costs down during each cycle is particularly critical for internal innovators because you want to operate within certain thresholds where you need less authorization. Several internal innovators I interviewed told me they could fund projects unilaterally if expenses stayed below $10,000; once over $10,000, they needed their manager’s approval.

Luckily, the cost of conducting experiments is dropping dramatically, thanks to new technology and the increasing ease with which you can gather data. Rietveld Architects LLP, for example, is well known throughout the United States and Europe for designing creative, energy-efficient spaces, both residential and commercial. Their typical process involves building numerous models, each increasing in detail and scale, to help clients visualize their designs. Like most architectural firms, they built these models by hand; it typically took two full-time employees two months. But a few years ago, they bought a 3-D printer, and today they can produce the same model with just a few hours of one employee’s time.

Similarly, Manu Prakash, a professor of bioengineering at Stanford University, designed a microscope that anyone can build with everyday materials for less than a dollar. Using his template, schools in poor countries can print, cut, and fold, and thereby make microscopes available to students.

One of our clients headed an IT group that wanted to change the interface their internal clients used to make requests. Whereas in the past they would have stepped into a multimonth process—defining requirements, building, testing, piloting, and launching—they decided to adopt a more agile approach. They built a simple website that fed information to IT workers, who completed the missing tasks manually. With this, they were able to start measuring things like how many internal clients wanted to use such a system, what jobs they would use it for, and how likely they were to recommend the system to others, all in a matter of days rather than months and at a fraction of the cost.

Gone are the days in which you needed significant budget approvals to launch your test. You can tap affordable, short-term talent through portals like HourlyNerd.com and Upwork; get designers to create storyboards and sample videos at minimal cost through services like Fiverr.com or 99designs.com; produce samples for pennies using 3-D printing; and run small-batch manufacturing at a fraction of what it cost just five years ago from platforms like Alibaba.com.

Step Four: Observe and Gather Data

The next step is to get out of the office and put what you have built (your storyboard, MVP, or beta) in front of your key stakeholders: users, internal stakeholders, external partners, people who influence the buying process, or anyone else whose input you need to complete the test you designed in step two.

A manufacturer of custom picture frames, for example, had the idea of creating a kiosk for framing shops in which customers could see how their artwork would look in different types of frames. Two stakeholders were critical: users (people who buy picture frames) and the owners of the framing shops. Even though they had the kiosk and the software already built, they recognized that simply installing kiosks in stores would be unnecessarily costly. Instead, they created a mock-up, showed it to frame-shop owners at a conference they were organizing, and gathered feedback. They asked questions like “What would this kiosk have to do for you to adopt it?” or “What are your concerns about putting this kiosk in your store?” A similar low-cost experiment with users would give them a much better idea of what the adoption rate might be and what price they might be able to charge.

The goal is to learn as quickly and inexpensively as possible.

Talk to prospective customers with your storyboard or MVP in hand. Ask them to describe the need they would want to address with your solution. Ask them to outline when and where and why this need arises for them. Have them walk you through how and where they search for potential solutions. Ask them on what basis they compare their solution options and how they ultimately choose to purchase one, or (as is often the case) why they choose to do nothing.

Is this kind of interviewing new to you? Here’s a technique you might find helpful. Pretend you are writing a play, and this will be a key scene. The scene opens with your prospective buyer talking to a colleague about a concern they both recognize. They brainstorm a couple of solutions, and eventually your name comes up. The buyer asks you to come in and chat. Now imagine, as lifelike as possible, how that conversation would go. What would you want to learn, and what might happen next?

In later cycles, as you develop your solution further, you will probably want to come up with other “discover” stories. But for now, pick one that seems, based on your interviews, the most common. Really get into that story and understand the full context of what’s happening with your customer: the psychology, the feelings, the circumstances.

It’s also very valuable to learn how your customers react after they purchase your concept. Think of this as describing the story of them using your product or service.

Several models and experts suggest that the two most important parts of this story are the beginning and the end—the state they are in when they buy, and the state they are in when they have met their need. In between these two, you simply want to note any steps that you are fairly certain will happen. Knowing where your users start from and where you want them to end up will likely give you some great ideas for improving your proposed solution.

At this point, you do not need to be too detailed because you may still have several uncertainties about what your product or service actually is. What you are doing here is drafting out the key points in the story that move the client from a decision to purchase to a successful conclusion in which they have solved their problem.

Step Five: Draw Conclusions

When your team comes back from observation and data gathering, lay out everything you have learned. Look at everything as equally valuable; keep an open mind. This way you reduce the risk of information bias, which can occur when you latch onto one insight and overweight its conclusions.

Have a review meeting with your team. Look at the data. Draw conclusions for each area that you are testing. Synthesize these into an overarching conclusion that will take you to the final step in the cycle: whether to pivot or evolve.

Consider what happened with Procter & Gamble’s Febreze product. It was built on cutting-edge technology that could eliminate odors from fabrics. But its launch—using P&G’s traditional approach—was a failure, selling far fewer units than projected. To their credit, a P&G team took a new direction, to better understand the situation. They hired a researcher from Harvard Business School, got out of the office, and spoke to would-be users.

They learned that the problem lay in their positioning. Describing Febreze as a way to remove bad odors missed the mark because the users who lived with these odors had gotten so used to them they were no longer bothered. For example, they visited a woman who lived with nine cats. Though the researchers found the cat stench overpowering, the cats’ owner hardly noticed it at all. Adopting an innovation begins when someone recognizes the pain or need your innovation promises to resolve. Would-be Febreze users simply didn’t recognize a need.

Their second insight came from a woman in Phoenix who said, “It’s nice, you know? Spraying feels like a little mini-celebration when I’m done with a room.”

This led the team to adjust its messaging, filling ads with images of women (their core customer) spraying a room after they clean it, and smiling in celebration. With this small adjustment to the messaging (in the 8P framework, this is promotion), with no expensive changes to product or processes or business model, Febreze became one of P&G’s most successful new-product launches.

Step Six: Pivot or Evolve

The final step is to decide whether to pivot (change direction) or evolve (continue on your current path, increasing your investment while making improvements).

Business history is full of success stories in which a team made a surprising decision at this juncture.

Consider the Facebook “Like” button, which evolved out of a successful experiment. According to the most recent count available (from 2010), more than seven million people click it every twenty minutes. Yet at first the project was considered cursed because it had failed multiple reviews by Mark Zuckerberg over a nearly two-year period. Then three Facebook employees—Jonathan Pines, Jared Morgenstern, and Soleio Cuervo—took up the challenge.

One of the company’s biggest concerns was that allowing people to “like” pages might diminish their interest in leaving comments, a key indicator of user engagement. So the team designed an experiment. With the help of a Facebook data scientist named Itamar Rosenn, they set up the experiment, performed a few tests, and tracked data. They found that the presence of a “Like” button actually increased the number of comments. The team won approval of the “Like” button and relatively quickly made it available to all Facebook users.

Two further examples: Nintendo’s famous Mario Brothers game was born out of a pivot. A development team at Nintendo was distraught when they lost the license rights to use the Popeye character in the game they had been developing specifically for him. So instead they created a new character they named “Mario.” The Mario Brothers franchise, arguably today one of Nintendo’s most important sources of competitive advantage, was born.

Wrigley, too, was born out of a pivot. A young man named William Wrigley moved to Chicago in the 1890s to sell soap and baking soda. As a marketing gimmick, he came up with the idea of giving away chewing gum with every purchase. When the chewing gum proved more popular than the soap and baking soda, he pivoted and started selling the gum. Today, the company that carries his last name generates more than $5 billion in annual revenue.


To begin taking action on your idea, follow six steps:

1. Describe potential solutions, making sure to pick more than one.

2. Design a test by identifying the key assumptions that your innovation idea depends on, deciding what metric you could use to test the validity of your assumption, and defining the “success target” that would indicate that the assumption is sound.

3. Build a storyboard, minimum viable proposition (MVP), or beta.

4. Observe and gather data by getting out of the office and putting your storyboard, MVP, or beta in front of your key stakeholders to see how they react.

5. Draw conclusions for each area that you are testing by looking at and synthesizing the data.

6. Decide whether to pivot (change direction) or evolve (continue on your current path).
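For readers who track their experiments in a spreadsheet or script, steps 2, 5, and 6 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `AssumptionTest`, the function `pivot_or_evolve`, the sample assumptions, and every number below are hypothetical, and the rule "evolve only if every assumption met its success target" is a deliberate simplification of what is really a team judgment.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AssumptionTest:
    """One key assumption, the metric that tests it, and its success target."""
    assumption: str
    metric: str
    success_target: float            # minimum value that supports the assumption
    observed: Optional[float] = None  # filled in after gathering data (step 4)

    def is_supported(self) -> bool:
        """Step 5: draw a conclusion for this one area."""
        if self.observed is None:
            raise ValueError(f"No data gathered yet for: {self.metric}")
        return self.observed >= self.success_target


def pivot_or_evolve(tests: List[AssumptionTest]) -> str:
    """Step 6 (simplified, hypothetical rule): evolve only if all targets were met."""
    return "evolve" if all(t.is_supported() for t in tests) else "pivot"


# Hypothetical example; the assumptions and numbers are invented for illustration.
tests = [
    AssumptionTest("Authors will submit manuscripts",
                   "share of invited authors who submit", 0.30, observed=0.42),
    AssumptionTest("Readers will rate what they read",
                   "share of visitors who leave a rating", 0.20, observed=0.12),
]
print(pivot_or_evolve(tests))  # prints "pivot": the second target was missed
```

Writing the success target down before you gather data is the point of the exercise: it keeps the team from deciding after the fact that whatever happened counts as success.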

The traditional approach to innovation involves a well-established process: write a business plan, get approval, build a solution, and then launch it.

This is changing. Even within large organizations, we see this formal process giving way to an approach characterized by a series of experiments. “Agile/lean” is no longer the sole territory of high-tech entrepreneurs in blue jeans.

If you have an idea you want to pursue, this is very good news. It means that you can begin right now, by conducting small, inexpensive experiments for which you don’t need formal approval. You will find that new technology makes it easier than ever to run small experiments. You’ll also find a growing recognition that breaking your project down into smaller increments (the essence of agile) makes sense and is well received by colleagues.

Learning from your experiments is easier too. You can access data more easily, quickly, and inexpensively; for instance, you can measure website hits and average ratings in minutes rather than having to wait months to get prototypes into retail stores. Google Consumer Surveys, and research offerings built on Google’s survey platform, allow you to conduct research for $2,000 that five years ago cost $50,000.

In sum, there is no reason to bet on untested beliefs. As Jeff Sutherland, author of Scrum: The Art of Doing Twice the Work in Half the Time, urges, “Don’t guess. Plan, Do, Decide, Act. Plan what you’re going to do. Do it. Decide whether it did what you wanted. Act on that and change how you’re doing things.”

Jean and her team decided their first step would be to build a basic website that simulated what the final site would be like and put it in front of a group of people who represented the audience they thought they would attract. If that group liked it, then they would build a more robust site. This would be the lowest-cost approach they could think of to assess whether authors and readers would adopt the idea if launched.

Many people were surprised at the outcome. Jean Feiwel was not.