Security management is the process by which security controls are implemented and security managers are subject to control. Some of the elements of this architecture—the management of passwords and accounts, authorization controls, legal issues, privacy, and so forth—are discussed in their own chapters. The following additional elements also form part of the structure:

• Acceptable use enforcement

• Administrative security

• Accountability controls

• Activity Monitoring and Audit

One of the best things that a company can do is to have an acceptable use policy (AUP) that dictates what employees can do with the computers they use and the networks and data they have access to. Many early AUPs only addressed Internet access; they either told subscribers of an ISP what was deemed acceptable or listed company policies created to reduce bandwidth demands. Now, however, AUPs attempt to cover the entire panorama of computer use, from what subjects employees are allowed to read about on the Internet, to what’s okay to say in an internal e-mail, to whether a personal music CD can be inserted in the CD-ROM drive of the office desktop.

A problem with many of these AUPs is that they do not have compliance enforcement written into them or do not evenly and fairly apply their own rules. One thing is certain: if an AUP is not enforced, it’s not worth having. Before proposing potential enforcement rules, let’s look at some typical enforcement statements.

Early enforcement policies for AUPs primarily consisted of withholding or canceling service. If, for example, a subscriber infringed a copyright or trade secret, harassed or intimidated another user, knowingly released a virus, or unlawfully accessed someone else’s information, the ISP simply dropped that customer and refused to provide them service. This type of policy is still in use by many ISPs today. Example enforcement policies of this type are those of Focal Communications, MOREnet, and Plain Communications.

The following examples of AUP enforcement statements are taken from public web site statements. Similar statements exist in private AUPs. The examples are provided in hopes they may provide some impetus to write strong enforcement statements and sound AUPs.

• From www.focal.com/policy/abuse.html:

“Focal may in its discretion, without liability, and without notice terminate or suspend service based on it(s) determination that a violation of the Policy has occurred.”

• From www.more.net/about/policies/aup.html:

“Reported and perceived violations of the Acceptable Use Policy will be reviewed by the MOREnet Executive Director. Violations that are not promptly remedied by the member institution or project participant may result in action including the termination of MOREnet service or the forfeiture of MOREnet membership.”

• From www.plain.co.nz/policy/enforcement.html:

“Upon the first verifiable violation of one of these policies, and a single formal letter of complaint, Plain will issue a written warning to the customer.

“Upon the second verifiable violation of one of these policies, and formal letters of complaints from three different sources, Plain will suspend the customer’s service until the customer submits to Plain, in writing, an agreement to cease-and-desist.

“Upon the third verifiable violation of one of these policies, and one more letter of complaint, Plain will terminate the customer’s service.”

As organizations learn more about the types of abuse that regularly occur, and the need for strong enforcement, many ISPs and others are taking an even stronger stance. They are defining the monitoring steps that they take, imposing penalties on employees, and stating full cooperation with appropriate authorities. Examples of such enforcement statements can be found at Empowering Media, Café.com, Efficient Networks, the University of Miami School of Law, Ricochet Networks, and the University of California, Davis:

• From www.hostasite.com:

“Violators of the policy are responsible, without limitations, for the cost of labor to clean up and correct any damage done to the operation of the network and business operations supported by the network, and to respond to complaints incurred by Empowering Media. Such labor is categorized as emergency security breach recovery and is currently charged at $195 USD per hour required.”

• From www.cafe.com:

“If we suspect violations of any of the above, we will investigate and we may institute legal action, immediately deactivate Service to any account without prior notice to you, and cooperate with law enforcement authorities in bringing legal proceedings against violators.”

• From www.speedstream.com/legal_use.html:

“In order for Efficient Networks to comply with applicable laws, including without limitation the Electronic Communications Privacy Act 18 U.S.C. 2701 et seq., to comply with appropriate government requests, or to protect Efficient Networks, Efficient Networks may access and disclose any information, including without limitation, the personal identifying information of Efficient Networks visitors passing through its network, and any other information it considers necessary or appropriate without notice to you. Efficient Networks will cooperate with law enforcement authorities in investigating suspected violation of the Rules and any other illegal activity. Efficient Networks reserves the right to report to law enforcement authorities any suspected illegal activity of which it becomes aware.

“In the case of any violation of these Rules, Efficient Networks reserves the right to pursue all remedies available by law and in equity for such violations. These Rules apply to all visits to the Efficient Networks Web site, both now and in the future.”

• From www.law.miami.edu/legal/usepolicy.html:

“In addition, offenders may be referred to their supervisor, the Dean, or other appropriate disciplinary authority for further action. If the individual is a student, the matter may be referred to the Honor Council.

“Any offense that violates local, state, or federal laws may result in the immediate loss of all University computing privileges and may be referred to appropriate University disciplinary authorities and/or law enforcement authorities.”

• From www.ricochet.com/DOCS/P_Acceptableusepolicy.pdf:

“RNI may involve, and shall cooperate with, law enforcement authorities if criminal activity is suspected, and each User consents to RNI’s disclosure of information about such User to any law enforcement agency or other governmental entity or to comply with any court order. In addition, Users who violate this AUP may be subject to civil or criminal liability. RNI SHALL NOT BE LIABLE FOR ANY LOSSES OR DAMAGES SUFFERED BY ANY USER OR THIRD PARTY RESULTING DIRECTLY OR INDIRECTLY FROM ANY ACT TAKEN BY RNI PURSUANT TO THIS AUP.”

• From www.ucdavis.edu/text_only/aup_txt.html:

“Any offense which violates local, state or federal laws may result in the immediate loss of all University computing privileges and will be referred to appropriate University offices and/or law enforcement authorities.”

It may seem that the first logical step to take when developing enforcement policy is to decide what the proper response to noncompliance should be. However, writing enforcement language that states what your company will actually do is more important. Putting strong enforcement statements into an AUP because you think they will deter abuse is foolhardy. Enforcement statements should not be written in the hope that their language alone will prevent abuse; they should be written to define the punishment for noncompliance. More harm than good can be done if a strong enforcement policy is not applied when violations occur.

NOTE For additional information on AUPs, see www.cert.org/security-improvement/practices/p034.html.

In addition to writing statements that accurately reflect the actions that will be taken, the following items should be considered when writing AUP enforcement text:

• Consult with your legal representation. Laws may require your cooperation with law enforcement, the reporting of certain violations, treatment of those accused, punishment meted out, and disclosure of private information. How enforcement policy is stated may also have legal bearing. It is best to have legal advice from those with legal background and knowledge in this area.

• Ensure management agreement on the consequences listed. Without management agreement, you may find yourself with a tough enforcement policy that no one is willing to actually use. Think of things that may cause problems; for example, discontinuing services such as network access may not be a valid in-house rule if network access is required for the employee to do their job. Discontinuing Internet access, or restricting access to specific sites and/or specific network servers, may be a more enforceable policy.

• Invite participation by all stakeholders. Just as the policy itself should be developed in total with everyone’s input, so should enforcement be discussed with them. Although it’s true that laws and management policy may dictate what must be stated in certain parts of your enforcement document, you’ll get more voluntary participation if the people to whom a policy applies have some say in its development.

• Develop the policy and its enforcement rules as part of an overall security policy. Other parts of the security policy may have enforcement clauses, too, and you will want to coordinate them.

• Develop enforcement rules for each variation of the AUP. There is no single AUP; instead, there should be an AUP for different IT products and/or roles. The most common AUP will be a broad policy that covers workstations, as well as access to the network and Internet by most employees. You will also want to have a separate AUP that addresses the practices of IT administrative staff. For example, IT pros have a higher level of access to systems than others and might not be held to the same restrictions of use as most employees. So a separate AUP should dictate what constitutes acceptable use of systems by them. Likewise, a more severe enforcement clause will lay out punishment for noncompliance. IT pros can do damage without having to illegally hack systems, so the consequences for their irresponsible actions should be appropriate.

• Where possible, enforce the policy by using filtering technology. Use a product that blocks site access, records both access attempts and successful accesses, and reports violations.

• Consider a stepped enforcement rule. On the first violation, perhaps dependent on the type of violation, a lesser punishment such as increased monitoring and more restricted access may be appropriate. After a second violation, something stronger may be in order. At some point, dismissal may be warranted.

• Determine when and if you will bring in law enforcement. Laws are laws, and you are obligated to follow them, but there may be gray areas. For example, if an attack is stopped before it is successful and you learn it was carried out by the son of the Vice President, do you smile and shrug? Call in the FBI? What if the attack was successful? Would it matter what the nature of the attack was? Before you chastise me for not recommending a zero-tolerance strategy, do you have employees arrested for stealing a few paperclips? You should always obtain legal guidance in this area.

• Designate who in the organization will be responsible for enforcement. This individual should have the authority to enforce the policy. The Chief Information Officer (CIO) may be the appropriate choice in some organizations.

• Let everyone know the rules. Employees should be informed of the policy and have the opportunity to discuss it and understand its meaning and the consequences of noncompliance. The policy should be reviewed with them when they join the company and at least once per year thereafter. Have them sign off that this was done and provide a contact person for them so they can ask questions at a later time if they want to. If possible, place the policy online and remind employees of their required cooperation. Some organizations state the existence of and provide a link to the policy in the system logon banner.

• Review the policy periodically. Laws, people, processes, and times change. Your policy may, too. Keep the policy and its enforcement section up-to-date.

• Be prepared to mete out the punishments outlined in your policy. The worst possible thing you can do is have a harsh enforcement policy and then do nothing to carry it out.
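
The stepped enforcement rule suggested above can be sketched in a few lines of Python. This is only an illustration: the three steps, the `EnforcementLedger` name, and the idea of counting verified violations per user are assumptions made for the example, not a recommended penalty schedule.

```python
from dataclasses import dataclass, field

# Hypothetical escalation ladder; the actions are placeholders, not recommendations.
STEPS = [
    "written warning and increased monitoring",
    "restricted access pending review",
    "referral for dismissal",
]


@dataclass
class EnforcementLedger:
    """Tracks verified violations per user and returns the next action."""
    counts: dict = field(default_factory=dict)

    def record_violation(self, user: str) -> str:
        self.counts[user] = self.counts.get(user, 0) + 1
        # Violations beyond the last step stay at the final step.
        step = min(self.counts[user], len(STEPS)) - 1
        return STEPS[step]
```

Because the ladder is data rather than logic, management and legal counsel can review and change the steps without touching the code that applies them.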

AUP enforcement is not just a matter of writing strong words and meting out punishment. Enforcement also means detecting the abuse and proactively stopping infractions from occurring. Products such as Websense (www.websense.com) and SurfControl (www.surfcontrol.com) use filtering technologies to block access to sites or even to block keywords that an organization has deemed unacceptable. Filtering products may also filter e-mail for regulated topics. Other products may take a more passive approach, such as simply recording every page visited and allowing reports to track user activity on the Internet.

In either case, all Internet access must pass through a control point such as a firewall or proxy server. The filtering product is integrated with these control points and configured to meet the demands of the company. Access attempts and successes are logged, and if the product is set to block, access to prohibited sites is blocked.
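
A minimal sketch of the decision made at such a control point follows. It is not how any particular product works; the category database, the host names, and the `check_request` function are hypothetical. It shows the two modes described above: every attempt is logged, and access is refused only when blocking is switched on.

```python
from urllib.parse import urlsplit

# Hypothetical category database; real products ship far larger, vendor-maintained lists.
CATEGORY_DB = {"casino.example": "gambling", "shop.example": "shopping"}
BLOCKED_CATEGORIES = {"gambling"}

access_log = []  # every attempt is recorded, whether allowed or blocked


def check_request(user: str, url: str, blocking: bool = True) -> bool:
    """Return True if the request may proceed; always log the attempt."""
    host = urlsplit(url).hostname or ""
    category = CATEGORY_DB.get(host, "uncategorized")
    violation = category in BLOCKED_CATEGORIES
    allowed = not (violation and blocking)
    access_log.append({"user": user, "host": host, "category": category,
                       "violation": violation, "allowed": allowed})
    return allowed
```

With `blocking=False` the same function behaves like the passive products: the request goes through, but the log entry still marks it as a violation for later reporting.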

NOTE A 90-page document on the effectiveness of these types of filtering products can be found at http://www.aba.gov.au/internet/research/filtering/filtereffectiveness.pdf.

The Websense product is backed by a constantly updated master database of more than four million sites organized into more than 80 categories. This database makes it possible, for example, to block access to gambling, MP3, political, shopping, and adult content sites. An additional Websense product can manage employee use of media-rich network protocols, instant messaging, streaming media, and so forth, allowing access when bandwidth permits and blocking access when the organization needs that capability for business-related activity.

When considering controls that determine the availability and integrity of computing systems, data, and networks, consider the potential opportunities an authorized administrator has as compared to the ordinary user. Systems administrators, operators who perform backup, database administrators, maintenance technicians, and even help desk support personnel all have elevated privileges within your network. To ensure the security of your systems, you must also consider the controls that can prevent administrative abuse of privilege. Remember, strong controls over the day-to-day transactions and data uses of your organization cannot in themselves ensure integrity and availability. If the controls over the use of administrative authority are not equally strong, the other controls are weakened.

In addition to directly controlling administrative privilege, several management practices will help secure networks from abuse and insecure practices.

Two principles of security will help you avoid abuse of power: limiting authority and separation of duties.

You can limit authority by assigning each IT employee only the authority needed to do their job. Within the structure of your IT infrastructure are different systems, and each can be naturally segmented into different authority categories. Examples of such segmentation are network infrastructure, appliances, servers, desktops, and laptops.

Another way to distribute authority is between service administration and data administration. Service administration is that which controls the logical infrastructure of the network, such as domain controllers and other central administration servers. These administrators manage the specialized servers on which these controls run, segment users into groups, assign privileges, and so on. Data administrators, on the other hand, manage the file, database, web content, and other servers. Even within these structures, authority can be further broken down—that is, roles can be devised and privileges limited. Backup operators of file servers should not be the same individuals that have privileges to back up the database server. Database administrators may be restricted to certain servers, as may file and print server administrators.

In large enterprises, these roles can be subdivided ad infinitum: some help desk operators may have the authority to reset accounts and passwords, while others are restricted to helping run applications. The idea, of course, is to recognize that all administrators with elevated privileges must be trusted, but some should be trusted more than others. The fewer the individuals who have all-inclusive or wide-ranging privileges, the fewer who can abuse those privileges.
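
One way to picture limited authority is as a table that maps each role to the few privileges it needs. The sketch below assumes hypothetical role and privilege names; it checks a single request and also lists the people whose combined privileges are unusually broad, since those are the accounts most worth reviewing.

```python
# Hypothetical roles and privilege names, for illustration only.
ROLE_PRIVILEGES = {
    "helpdesk_accounts": {"reset_password", "unlock_account"},
    "helpdesk_apps": {"view_app_status"},
    "file_backup_operator": {"backup_file_server"},
    "db_backup_operator": {"backup_database_server"},
}


def is_authorized(roles: set, privilege: str) -> bool:
    """Grant a privilege only if one of the caller's roles includes it."""
    return any(privilege in ROLE_PRIVILEGES.get(r, set()) for r in roles)


def broadly_privileged(assignments: dict, threshold: int) -> list:
    """List people whose combined privileges reach the threshold."""
    result = []
    for person, roles in assignments.items():
        privs = set().union(*(ROLE_PRIVILEGES.get(r, set()) for r in roles))
        if len(privs) >= threshold:
            result.append(person)
    return sorted(result)
```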

Another control is separation of duties. In short, if a critical function can be broken into two or more parts, divide the duties among IT roles. If this is done, abuses of trust would require collaboration and, therefore, will be less likely to occur. The classic example of this separation is the following rule: developers develop software, and administrators install and manage it on systems. This means that developers do not have administrative privileges on production systems. If a developer were to develop malicious code, she would not have the ability to launch it, on her own, in the production network. She would have to coerce, trick, or be in collusion with an administrator. She might also attempt to hide the code in customized, in-house software; however, other controls, including software review and the fact that others work on the software, mean that there is a good chance of discovery, or at least enough of a chance to deter many attempts.

Even on the administration side, many roles can be split in this way. Take, for example, the privilege of software backup. Should backup operators also have the right to restore software? In many organizations these roles are split so that a backup operator cannot accidentally or maliciously restore old versions of data, thus damaging the integrity of databases and causing havoc.
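
Separation of duties can be checked mechanically once role assignments are recorded. The following sketch assumes a hypothetical list of conflicting role pairs, taken from the two examples above (developer versus production administrator, backup versus restore), and reports anyone who holds both halves of a pair.

```python
# Hypothetical pairs of roles that should not be held by the same person.
CONFLICTING_ROLES = [
    ("developer", "production_admin"),
    ("backup_operator", "restore_operator"),
]


def find_conflicts(assignments: dict) -> list:
    """Return (person, role_a, role_b) for each conflicting pair one person holds."""
    conflicts = []
    for person in sorted(assignments):
        roles = assignments[person]
        for a, b in CONFLICTING_ROLES:
            if a in roles and b in roles:
                conflicts.append((person, a, b))
    return conflicts
```

A check like this is only as good as the assignment data fed to it, so it complements, rather than replaces, periodic review of who actually holds which privileges.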

The following management practices can contribute to administrative security:

• Controls on remote access, and access to consoles and administrative ports Controls that can enhance administrative security are the controls placed on out-of-band access to devices such as serial ports and modems, and physical control of access to sensitive devices and servers. Limiting which administrators can physically access these systems, or who can log on at the console, can be an important control. Limiting remote access is another effective move. Just because an employee has administrative status doesn’t mean their authority can’t be limited.

• Vetting administrators IT admins have enormous power over the assets of organizations. Every IT employee with these privileges should be thoroughly vetted before employment, including reference checks and background checks. This should not hamper employment. Clerks who handle money are often put through more extreme checks.

• Using automated methods of software distribution Using an automated method of OS and software installation not only ensures standard setup and security configuration, thus preventing accidental compromise, it also is a good practice for inhibiting the abuse of power. When systems are automatically installed and configured, there are fewer opportunities for the installation of back door programs and other malicious code or configuration to occur.

• Using standard administrative procedures and scripts The use of scripts can mean efficiency, but the use of a rogue script can mean damage to systems. By standardizing scripts, there is less chance of abuse. Scripts can also be digitally signed, which can ensure that only authorized scripts are run.
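
The idea of running only authorized scripts can be illustrated with a simplified stand-in. The sketch below does not implement digital signatures; it uses an allowlist of SHA-256 digests from Python's standard `hashlib` module, which gives a similar "run only what was approved" effect for the example. The function names are hypothetical, and a real deployment would more likely rely on the platform's own code-signing facilities.

```python
import hashlib


def digest(script_text: str) -> str:
    """SHA-256 digest of a script's contents, as a hex string."""
    return hashlib.sha256(script_text.encode("utf-8")).hexdigest()


def build_allowlist(approved_scripts: list) -> set:
    """Digests of the scripts that have been reviewed and approved."""
    return {digest(s) for s in approved_scripts}


def may_run(script_text: str, allowlist: set) -> bool:
    """Permit a script only if its exact contents were approved."""
    return digest(script_text) in allowlist
```

Any change to an approved script, even a single added line, produces a different digest and is refused until the script is reviewed again. The allowlist itself must be protected from modification, which is the problem that signatures solve more robustly.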

Accountability controls are those that ensure activity on the network and on systems can be attributed to an actual individual. These controls are things such as

• Authentication controls Passwords, accounts, biometrics, smart cards, and other such devices and algorithms that sufficiently guard the authentication practice

• Authorization controls Settings and devices that restrict access to specific users and groups

When used properly, accounts, passwords, and authorization controls can hold people accountable for their actions on your network. Proper use means the assignment of at least one account for each employee authorized to use systems. If two or more people share an account, how can you know which one was responsible for stealing company secrets? A strong password policy and employee education also enforce this rule. When passwords are difficult to guess and employees understand they should not be shared, proper accountability is more likely. Authorization controls ensure that access to resources and privileges is restricted to the proper person. For example, if only members of the Schema Admins group can modify the Active Directory Schema in a Windows 2000 domain, and the Schema is modified, then either a member of that group did so or there has been a breach in security. Chapter 6 explains more about authentication and authorization practices and algorithms.
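
A quick audit for shared accounts might look like the sketch below. It assumes you can export a list of (employee, account) pairs from your directory or from logon records; the `audit_accounts` name and the `shared_ok` parameter for any approved exceptions are hypothetical.

```python
from collections import defaultdict


def audit_accounts(assignments: list, shared_ok: frozenset = frozenset()) -> dict:
    """Return accounts used by more than one employee.

    `assignments` is a list of (employee, account) pairs. Accounts listed in
    `shared_ok` are approved exceptions and are not reported.
    """
    users_by_account = defaultdict(set)
    for employee, account in assignments:
        users_by_account[account].add(employee)
    return {acct: sorted(users)
            for acct, users in users_by_account.items()
            if len(users) > 1 and acct not in shared_ok}
```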

There are exceptions to the one employee, one account rule:

• In some limited situations, a system is set up for a single, read-only activity that many employees need to access. Rather than provide every one of these individuals with an account and password, a single account is used and restricted to this access. This type of system might be a warehouse location kiosk, a visitor information kiosk, or the like.

• All administrative employees should have at least two accounts—one account to be used when they access their e-mail, look up information on the Internet, and do other mundane things; and one that they can use to fulfill their administrative duties.

• For some highly privileged activities, a single account might be assigned the privilege, while two trusted employees each create half of the password. Neither can thus perform the activity on their own; it requires both of them to do so. In addition, since both may be held accountable, each will watch the other perform the duty. This technique is often used to protect the original Administrator account on a Windows server. Other administrative accounts are created and used for normal administration. This account can be assigned a long and complex password and then not be used unless necessary to recover a server where the administrative account’s passwords are forgotten or lost when all employees leave the company or some other emergency occurs. Another such account might be an administrative account on the root certification authority. When it is necessary to use this account, such as to renew this server’s certificate, two IT employees must be present to log on. This lessens the chance that the keys will be compromised.
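
The split-password technique in the last item can be sketched as follows. This is an illustration only: the function names are hypothetical, and in practice each custodian would choose or generate a half privately and type it directly at the logon prompt rather than pass it through a program.

```python
import secrets


def make_half(length: int = 16) -> str:
    """Generate one custodian's half of the password."""
    return secrets.token_urlsafe(length)


def combine(first_half: str, second_half: str) -> str:
    """The full password exists only when both custodians enter their halves."""
    if not first_half or not second_half:
        raise ValueError("both halves are required")
    return first_half + second_half
```

Neither custodian ever sees the other's half, so neither can log on alone, which is what makes both accountable for each use of the account.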

Monitoring and auditing activity on systems is important for two reasons. First, monitoring activity tells the systems administrator which systems are operating the way they should, where systems are failing, where performance is an issue, and what type of load exists at any one time. These details allow proper maintenance and discovery of performance bottlenecks, and they point to areas where further investigation is necessary. The wise administrator uses every possible tool to determine general network and system health, and then acts accordingly. Second, and of interest to security, is the exposure of suspicious activity, audit trails of normal and abnormal use, and forensic evidence that is useful in diagnosing attacks and potentially catching and prosecuting attackers. Suspicious activity may consist of obvious symptoms such as known attack codes or signatures, or may be patterns that, to the experienced, mean possible attempts or successful intrusions.

In order to benefit from the information available in logs and from other monitoring techniques, you must understand the type of information available and how to obtain it. You must also understand what to do with it. Three types of information are useful:

• Activity logs

• System and network monitoring activity

• Vulnerability scans

Each operating system, device, and application may provide extensive logging activity. There are, however, decisions to be made about how much activity to record. The range of information that is logged by default varies, as does what is available to log, and there is no clear-cut answer on what should be logged. The answer depends on the activity and on the reason for logging.

NOTE When examining log files, it’s important to understand what gets logged and what does not. This will vary by the type of log, the type of event, the operating system and product, whether or not there are additional things you can select, and the type of data. In addition, if you are looking for “who” participated in the event or “what” machine they were using, this may or may not be a part of the log. Windows event logs prior to Windows Server 2003, for example, did not include the IP address of the computer, just the hostname. Web server logs, whatever the product, may not identify the exact client either: much web activity goes through a proxy server, so you may find that you know the network source but not the exact system a request came from.

In general, the following questions must be answered:

• What is logged by default? This includes not just the typical security information, such as successful and unsuccessful logons or access to files, but also the actions of services and applications that run on the system.

• Where is the information logged? There may be several locations.

• Do logs grow indefinitely with the information added, or is log file size set? If the latter, what happens when the log file is full?

• What types of additional information can be logged? How is it turned on?

• When is specific logging activity desired? Are there specific items that are appropriate choices for some environments but not others? For some servers but not others? For servers but not desktop systems?

• Which logs should be archived and how long should archives be kept?

• How are logs protected from accidental or malicious change or tampering?

Not every operating system or application logs the same types of information. To know what to configure, where to find logs, and what information within the logs is useful requires knowledge of the specific system. However, looking at an example of the logs on one system is useful because it gives meaning to the types of questions that need to be asked. Windows and Unix logs are different, but for both I want to be able to identify who, what, when, where, and why. The following example discusses Windows logs.

NOTE Performing a security evaluation or audit on one operating system can be daunting. Imagine the situation when one operating system hosts another. Today’s mainframe systems often do just that, hosting Unix or Linux. For insight into auditing such a system, see the paper “Auditing Unix System services in OS/390” at http://www-1.ibm.com/servers/eserver/zseries/zos/racf/pdf/toronto_03_2001_auditing_unix_system_services.pdf.

Windows audit logging for NT, XP, and Windows 2000 is turned entirely off by default. (Log activity is collected for some services, such as IIS, and system and application events are logged.)

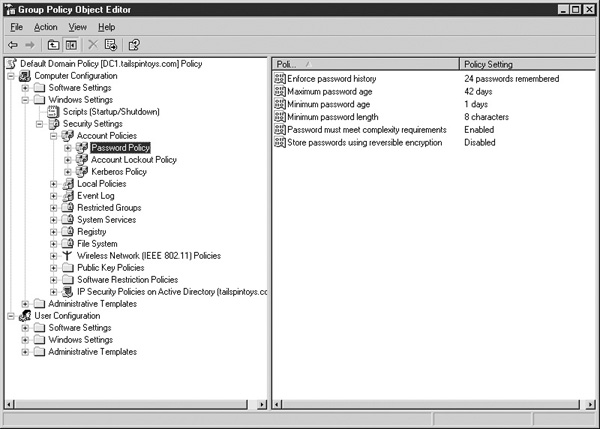

Windows Server 2003 has some audit logging turned on by default, and those settings are shown in Figure 8-1. An administrator can turn on security logging for all or some of the categories and can set additional security logging by directly specifying object access in the registry, in a directory, and in the file system. It’s even possible to set auditing requirements for all servers and desktops in a Windows 2000 or Windows Server 2003 domain using Group Policy (a native configuration, security, application installation, and script repository utility).

FIGURE 8-1 Windows’ security audit policy (Windows Server 2003 default)

Even when using Windows Server 2003, it’s important to understand what to set because the Windows Server 2003 default policy logs the bare minimum activity. Needless to say, turning on all logging categories is not appropriate either. For example, in Windows security auditing, the category Audit Process Tracking would be inappropriate for most production systems because it records every bit of activity for every process—way too much information for normal drive configurations and audit log review. However, in a development environment, or when vetting custom software to determine that it only does what it says it does, turning on Audit Process Tracking may provide just the amount of information necessary for developers troubleshooting code or analysts inspecting it.

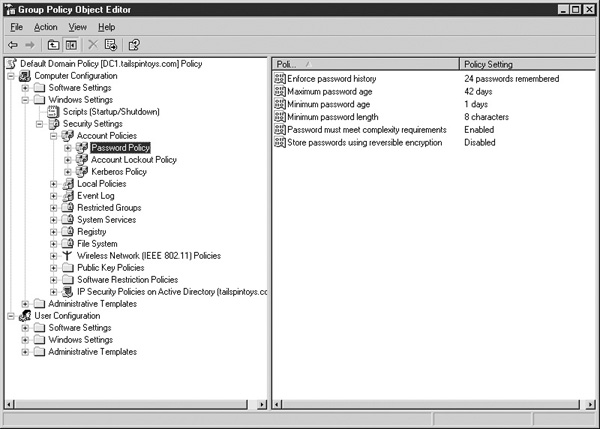

Audit events are logged to a special local security event log on every Windows NT (and above) computer that is configured to audit security events. Event logs are located in the %windir%\system32\config folder. In addition to security events, many other events that may provide security or activity tracking information are logged to the application log and the system log or, on a Windows 2000 and above domain controller, to the DNS Server log, Directory Service log, or File Replication Service log. These logs are shown in Figure 8-2. In addition, many processes offer additional logging capabilities. For example, if the DHCP service is installed, it, too, can be configured to log additional information such as when it leases an address, whether it is authorized in the domain, and whether another DHCP server is found on the network. These events are not logged in the security event log; instead, DHCP events are logged to %windir%\system32\dhcp.

FIGURE 8-2 Windows Server 2003 domain event logs

Many services and applications typically can have additional logging activity turned on, and that activity is logged either to the Windows event logs, to system or application logs, or to special logs that the service or application creates. IIS follows this pattern, as do Microsoft server applications such as Exchange, SQL Server, and ISA Server. The wise systems administrator, and auditor, will determine what is running on systems in the Windows network and what logging capabilities are available for each of them. While much log information relates only to system or application operation, it may become part of a forensics investigation if it is necessary or warranted to reconstruct activity. A journal should be kept that includes what information is being logged on each system and where it is recorded.
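
The journal mentioned above does not need to be elaborate. As a rough sketch, each entry could record the system, the component doing the logging, where the log is written, and what it contains. The two log locations below come from the text; the host name `dc01`, the field names, and the retention periods are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogSource:
    system: str          # host or device name
    component: str       # OS, service, or application producing the log
    location: str        # where the log is written
    contents: str        # what is recorded
    retention_days: int  # how long archives are kept (illustrative values)


journal = [
    LogSource("dc01", "Security event log", r"%windir%\system32\config",
              "audit events", 365),
    LogSource("dc01", "DHCP service", r"%windir%\system32\dhcp",
              "lease activity", 90),
]


def sources_for(entries: list, system: str) -> list:
    """All journal entries recorded for one system."""
    return [s for s in entries if s.system == system]
```

Keeping the journal as structured data makes it easy to answer, during an investigation, which logs exist for a given server and where to collect them.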

Many of the special application logs are basic text files, but the special “event logs” are not. These files have their own format and access to them can be managed. While any application can be programmed to record events to these log files, events cannot be modified or deleted within the logs.

NOTE Some time ago, a utility was developed to delete an event from the security log, but when it was used, log files were corrupted. This provided evidence that an attack had occurred, at least.

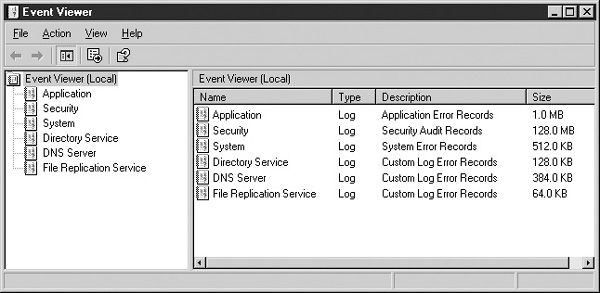

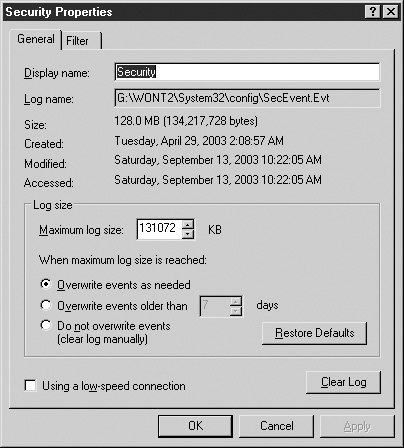

Event logs do not automatically archive themselves, must be given a size, and may be configured, as shown in Figure 8-3, to overwrite old events, halt logging until manually cleared, or in Security Options, stop the system when the log file is full. Best practices advise creating a large log file and allowing events to be overwritten, but monitoring the fullness of files and archiving frequently so that no records are lost.
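
The trade-off between overwriting old events and halting when the log is full is easier to see in a toy model. The `BoundedLog` class below is a hypothetical illustration, not a model of the Windows event log format: it holds a fixed number of records and either discards the oldest record or refuses new ones once full.

```python
from collections import deque


class BoundedLog:
    """Toy fixed-size log with two full-log behaviors."""

    def __init__(self, max_records: int, overwrite: bool = True):
        self.max_records = max_records
        self.overwrite = overwrite
        self.records = deque()
        self.dropped = 0  # new events refused because the log was full

    def write(self, event: str) -> bool:
        if len(self.records) >= self.max_records:
            if not self.overwrite:
                self.dropped += 1      # halt logging until cleared
                return False
            self.records.popleft()     # overwrite the oldest event
        self.records.append(event)
        return True

    def archive_and_clear(self) -> list:
        """Return all records for archiving and empty the log."""
        archived = list(self.records)
        self.records.clear()
        return archived
```

Either policy loses information once the log fills: overwriting loses the oldest events, halting loses the newest. That is why the advice above pairs a large log with frequent archiving.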

FIGURE 8-3 Log file configuration

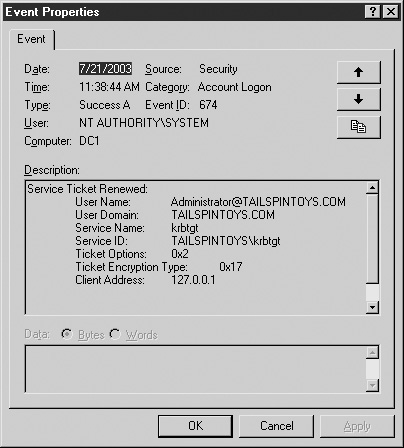

Auditable events produce one or more records in the security log. Each record includes event-dependent information. While all events include an event ID, a brief description of the event and the event date, time, source, category, user, type, and computer, other information is dependent on the event type. Figure 8-4 shows the successful Administrator account local logon to the domain controller. Note the inclusion of the Client IP address, represented in Figure 8-4 as the local address.

Early security and systems administration advice emphasized that logs must be reviewed daily and assumed that there was time to do so. We now know that, except in unusual circumstances, that does not happen. Today’s best practice advises that the following actions be taken:

• Post log data to an external server.

• Consolidate logs to a central source.

• Apply filters or queries to produce meaningful results.

Posting log data to an external server helps to protect the log data. If a server is compromised, attackers may alter its local logs, but they cannot alter the copies already posted elsewhere, so they cannot fully cover their tracks. Consolidating logs to a central source makes the data easier to manage, because queries only need to be run on one batch of data. The Unix utility syslog, when utilized, enables posting and consolidation of log data to a central syslog server. A version of syslog is available for Windows.

The downside, of course, is that network problems may prevent events from being posted, and a successful attack on the syslog server can destroy or call into question the validity of security events for an entire network.
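Posting events to a central syslog server can be sketched with the `SysLogHandler` class from Python's standard `logging.handlers` module. This is a minimal sketch under stated assumptions: the function name, the logger name, and the host `loghost.example.com` in the usage comment are hypothetical, and the handler sends over UDP, so it is subject to exactly the delivery problems described above.

```python
import logging
import logging.handlers


def make_forwarding_logger(host, port=514, name="security-forwarder"):
    """Return a logger that sends each record to a syslog server over UDP."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)

    # Drop handlers left over from an earlier call so records are not duplicated.
    for old in list(logger.handlers):
        logger.removeHandler(old)
        old.close()

    handler = logging.handlers.SysLogHandler(
        address=(host, port),
        facility=logging.handlers.SysLogHandler.LOG_AUTH,  # security/authorization facility
    )
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Usage (hypothetical host name):
#   log = make_forwarding_logger("loghost.example.com")
#   log.warning("logon failure for user alice")
```

Because UDP delivery is not acknowledged, the sender gets no indication when an event fails to arrive, which is one reason to keep local logs as well as the forwarded copies.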

Four other techniques for log consolidation are listed here:

• Collect copies of security event logs on a regular basis and archive them in a SQL database such as Microsoft SQL Server. Then develop SQL queries to produce reports, or use a product such as Microsoft’s LogParser to query this database, or any log type, directly.

• Invest in a third-party security management tool that collects and analyzes specific types of log data. Examples of such tools are Microsoft’s MOM (Windows) and NetIQ.

• Develop or adopt a Security Information Management (SIM) system, an integrated collection of software that attempts to pull all log data from all sources (security logs, web server logs, IDS logs, and so forth). Examples are GuardedNet’s neuSECURE, netForensics, and Intellitactics’ Network Security Manager (NSM).

• Use or add the log management capabilities of systems management tools such as IBM’s Tivoli or Enterasys Dragon.
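The first technique in this list, archiving events in a SQL database and querying them, can be sketched as follows. This is an illustration only: SQLite stands in for a server database such as Microsoft SQL Server, the table layout and function names are assumptions, and the sample rows (including the event IDs used for successful and failed logons) are invented for the example rather than taken from a real log.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS security_events (
    logged_at TEXT NOT NULL,     -- ISO 8601 timestamp
    computer  TEXT NOT NULL,
    event_id  INTEGER NOT NULL,
    username  TEXT NOT NULL
)
"""


def archive_events(conn, events):
    """Append (logged_at, computer, event_id, username) tuples to the archive."""
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO security_events VALUES (?, ?, ?, ?)", events)
    conn.commit()


def failed_logons_by_user(conn, failure_event_id, minimum=3):
    """Return (username, count) for users with at least `minimum` failures."""
    rows = conn.execute(
        """
        SELECT username, COUNT(*) AS failures
        FROM security_events
        WHERE event_id = ?
        GROUP BY username
        HAVING COUNT(*) >= ?
        ORDER BY failures DESC, username
        """,
        (failure_event_id, minimum),
    )
    return rows.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    archive_events(conn, [
        ("2004-03-01T08:00:01", "DC1", 529, "alice"),   # sample failed logons
        ("2004-03-01T08:00:04", "DC1", 529, "alice"),
        ("2004-03-01T08:00:09", "DC1", 529, "alice"),
        ("2004-03-01T08:05:00", "DC1", 528, "bob"),     # sample successful logon
        ("2004-03-01T09:10:00", "WEB1", 529, "carol"),
    ])
    print(failed_logons_by_user(conn, failure_event_id=529))
```

A query of this kind turns thousands of raw records into a short list of accounts worth investigating, which is the point of applying filters to consolidated data.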

In addition to log data, system activity and activity on the network can alert the knowledgeable administrator to potential problems. Systems and networks should already be monitored so that failures of critical systems and performance bottlenecks can be investigated and resolved. The same monitoring data can indicate that all is well, or that an attack is underway. Is that system unreachable because of a hard disk crash, or because of a denial of service attack? Why is there a sudden surge in packets today on a network that is already too busy?

Some SIM tools seek also to provide a picture of network activity, and many management tools report on system activity. In addition, IDS systems, as described in Chapter 14, and protocol analyzers can provide access to the content of frames on the network.
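Deciding whether a surge in traffic is unusual comes down to comparing current activity with a known baseline. The sketch below flags a packet count that exceeds the historical mean by more than a chosen number of standard deviations. The function name, the three-sigma default, and the sample counts are assumptions made for illustration; commercial tools use more elaborate models.

```python
from statistics import mean, pstdev


def is_surge(history, current, sigmas=3.0):
    """Return True when `current` exceeds the baseline by more than `sigmas` deviations.

    `history` holds earlier packet counts for comparable intervals.
    If every historical sample is identical, any increase counts as a surge.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical samples")
    baseline = mean(history)
    spread = pstdev(history)
    return current > baseline + sigmas * spread


if __name__ == "__main__":
    packets_per_minute = [100, 110, 90, 105, 95]   # invented baseline samples
    print(is_surge(packets_per_minute, 115))       # within normal variation
    print(is_surge(packets_per_minute, 500))       # far above the baseline
```

A flagged interval does not by itself distinguish an attack from a legitimate burst of activity; it only tells the administrator where to look.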

No security toolkit is complete without its contingent of vulnerability scanners. These tools provide an audit of currently available systems against well-known configuration weaknesses, system vulnerabilities, and patch levels. They can be comprehensive, such as Retina Network Security Scanner; they can be operating system–specific, such as the Microsoft Baseline Security Analyzer or the Center for Internet Security’s scanners for Windows, Cisco, Solaris, and other systems; or they can focus on a single vulnerability or service, such as eEye Digital Security’s Retina SQL Sapphire Worm tool. They may be highly automated, requiring only a simple start command, or they may require sophisticated knowledge or the completion of a long list of activities.

Before using a vulnerability scanner, or commissioning such a scan, care should be taken to understand what the potential results will show. Even simple, single-vulnerability scanners may stop short of identifying vulnerabilities. They may, instead, simply indicate that the specific vulnerable service is running on a machine. More complex scans can produce reports that are hundreds of pages long. What do all the entries mean? Some of them may be false positives, some may require advanced technical knowledge to understand or mitigate, and still others may be vulnerabilities that you can do nothing about. For example, running a web server does make you more vulnerable to attack than if you don’t run one, but if the web server is critical to the functioning of your organization, then it’s a risk you agree to take.
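Sorting a long scan report into the categories described above can be sketched as a small triage routine. The `Finding` record and the bucket names are hypothetical and do not correspond to the output format of any particular scanner; the sketch assumes someone has already marked which findings are confirmed and which risks the organization has agreed to take.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical scanner result (fields are illustrative)."""
    host: str
    check: str
    confirmed: bool       # False when the scanner only saw the service running
    accepted_risk: bool   # True when the organization has agreed to carry the risk


def triage(findings):
    """Group findings into 'accepted', 'verify', and 'mitigate' buckets."""
    buckets = defaultdict(list)
    for finding in findings:
        if finding.accepted_risk:
            buckets["accepted"].append(finding)
        elif not finding.confirmed:
            buckets["verify"].append(finding)    # possible false positive
        else:
            buckets["mitigate"].append(finding)
    return dict(buckets)


if __name__ == "__main__":
    report = [
        Finding("web1", "web server exposed to Internet", True, True),
        Finding("db1", "SQL service listening", False, False),
        Finding("db2", "missing SQL patch", True, False),
    ]
    for bucket, items in triage(report).items():
        print(bucket, [f.host for f in items])
```

Separating accepted risks and unverified results from confirmed problems keeps a report that is hundreds of pages long from hiding the few entries that need immediate action.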

While vulnerability scanning products vary, it’s important to note that a basic vulnerability assessment and mitigation does not require fancy tools or expensive consultants. Free and low-cost tools are available, and many free sources of vulnerability lists exist. Operating system–specific lists are available on the Internet from operating system vendors.

The use of the freely downloadable “Security Self-Assessment Guide for Information Technology Systems” from the National Institute of Standards and Technology (NIST) is specified for all government offices. While some of the specifics of this guide may only be applicable to government offices, much of the advice is useful for any organization, and the document provides a questionnaire format that, like an auditor’s worksheets, may assist even the information security neophyte in performing an assessment. Items in the questionnaire cover such issues as risk management, security controls, IT life cycle, system security plan, personnel security, physical and environmental protection, input and output controls, contingency planning, hardware and software maintenance, data integrity, documentation, security awareness training, incident response capability, identification and authentication, logical access controls, and audit trails.

The security management architecture of your network is important because it reinforces, controls, and makes whole the rest of your security framework. If security management is not properly controlled, it undermines all of the data and transaction controls placed elsewhere in the system. Security management controls span acceptable use enforcement, administrative security, accountability controls, logging, and audit, a range of activities that has an impact on the entire network infrastructure.