The first hurdle an attacker must cross to attack your systems is to connect to them. You can limit and prevent these connections in many ways, but you must almost always allow some communication in order for the organization’s work to successfully proceed. One of the most successful strategies in limiting access to systems is to insist on proof of identity—to communicate only with known individuals and to limit each user’s ability to operate on your systems. These twin controls, authentication and authorization, respectively, must be managed to ensure identified and authorized individuals can do their jobs, while blocking access to those who would attack your systems.

Authentication is the process by which people prove they are who they say they are. In the real world, we do this quite frequently by using our driver’s license, passport, or even a mutual acquaintance to prove identity. It’s interesting that the driver’s license, a non-digital expression of identity, actually fulfills the information security goal of providing at least two-factor authentication: the card is something you have, and the photo on it lets the person checking it confirm something you are. Two-factor authentication is an authentication system that is based on at least two of the following:

• Something you have

• Something you are

• Something you know

This was not always the case. For many years, the driver’s license did not include a photo. It was a much weaker form of identification, because it required only something you had (the license), and it was often abused. Computer security similarly needs stronger controls than the simple password (something you know), but it is difficult to move the vast majority of systems to something stronger, and there is little agreement on what that “something stronger” should be.

It is important to understand the variety of available authentication processes and their strengths and weaknesses. Then, when you are faced with a choice, or the compelling need to improve your systems, you can make intelligent choices that will improve the security of your networks. In your investigations, pay special attention to how these systems are implemented and the authentication factors that are used. You will find that the security of your network will not benefit from using the authentication method with the most “promise” for security unless both the way it is coded and the way you implement it are just as secure.

Here is an introduction to the types of authentication systems available today:

• Systems that use username and password combinations, including Kerberos

• Systems that use certificates or tokens

• Biometrics

Ancient military practices required identification at the borders of encampments by name and password or phrase. “Halt! Who goes there?” may have been the challenge, and the response a predetermined statement. An unknown visitor with the correct answer was accepted into the camp, while the incorrect answer was met with imprisonment and possibly death. This is the same strategy used by many network authentication algorithms that rely on usernames and passwords (though death is not the usual penalty for forgetting your password). The system issues a challenge, and the user’s credentials are used to produce a response. If the response can be validated, the user is authenticated and allowed to access the system; otherwise, the user is prevented from accessing the system.

Some password-based systems are much simpler than this, and some, such as Kerberos, are more complex, but all rely on a simple fallacy: they trust that anyone who knows a particular user’s password is that user. When users share passwords or write them down where others can read them, or when weak controls protect the password database, a false sense of security results. Anyone who obtains the password of a valid user account can become that user on your system.

Your evaluation of password-based systems, therefore, must not just evaluate the algorithm for its security, but also the controls available to protect the passwords themselves. You also need to remember that initial authentication to the system is only one small part of an authentication system—many systems also authenticate users when they attempt to access resources on network devices.

Many password authentication systems exist. Three types are in heavy use today: local storage and comparison, central storage and comparison, and challenge and response. Other types, seen less frequently, are Kerberos and One-Time Password systems.

NOTE Kerberos is the default authentication system for Windows 2000 and Windows Server 2003 domains. As the number of these systems grows, Kerberos will become one of the more heavily used forms of password authentication.

Early computer systems did not require passwords. Whoever physically possessed the system could use it. As systems developed, a requirement to restrict access to the privileged few was recognized, and a system of user identification was developed. User passwords were entered in simple machine-resident databases by administrators and were provided to users.

Often, passwords were in the database in plain text. If you were able to open and read the file, you could determine what anyone’s password was. The security of the database relied on controlling access to the file, and on the good will of administrators and users. Administrators were in charge of changing passwords, communicating changes to the users, and recovering passwords for users who couldn’t remember them. Later, the ability for users to change their own passwords was added, as was the ability to force users to do so periodically. Since the password database contained all information in plain text, the algorithm for authentication was simple—the password was entered at the console and was simply compared to the one in the file.

This simple authentication process was, and still is, used extensively by applications that implement their own authentication. Such applications create and manage their own stored-password files and do no encryption, so their security relies entirely on protecting the password file. Because passwords can be intercepted by rogue software, these systems are not well protected. More information on authentication as used in applications can be found in Chapters 23 and 27.

Securing Passwords with Encryption and Securing the Password File

In time, a growing recognition of the accessibility of the password file resulted in attempts to hide it or strengthen its defense. While the Unix /etc/passwd file is world readable (meaning that this text file can be opened and read by all users), the password field in the file is encrypted (more precisely, hashed) and is thus unreadable. Blanking the password field (possible after booting from a CD), however, allows a user to log on with no password. In most modern Unix systems, the password hashes are moved from /etc/passwd into a shadow password file (/etc/shadow) that only the system and root can read, which eliminates some attacks and makes others more difficult.
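To make the field layout concrete, here is a minimal Python sketch that parses /etc/passwd-style lines and classifies the password field. It assumes the common seven-field layout and the Linux convention that an `x` in the password field means the hash lives in /etc/shadow; the sample entries are hypothetical.

```python
# Sketch: classify the password field of /etc/passwd-style entries.
# Assumes the layout name:password:UID:GID:GECOS:home:shell and the
# common Linux convention that "x" means "see /etc/shadow".

def parse_passwd_line(line: str) -> dict:
    """Split one entry into named fields."""
    name, password, uid, gid, gecos, home, shell = line.rstrip("\n").split(":")
    return {"name": name, "password": password, "uid": int(uid), "gid": int(gid),
            "gecos": gecos, "home": home, "shell": shell}

def password_status(entry: dict) -> str:
    """Describe where (or whether) the account's password hash is kept."""
    field = entry["password"]
    if field == "":
        return "blank: logon needs no password"
    if field == "x":
        return "shadowed: hash kept in /etc/shadow"
    return "exposed: hash stored in the world-readable file"

# Hypothetical sample entries
sample = [
    "alice:x:1000:1000:Alice:/home/alice:/bin/sh",
    "bob::1001:1001:Bob:/home/bob:/bin/sh",
    "carol:abJnggxhB/yWI:1002:1002:Carol:/home/carol:/bin/sh",
]

for line in sample:
    entry = parse_passwd_line(line)
    print(f"{entry['name']}: {password_status(entry)}")
```

The "bob" entry shows why a blanked field is dangerous: nothing in the file itself asks for a password.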

Early versions of Windows used easily crackable password list (.pwl) files. Similarly, while Windows NT password files are not text files, a number of attacks exist against them: some delete the Security Account Manager (SAM) database so that a new SAM, with a blank Administrator password, is created on reboot, and others brute-force the passwords. Later versions of Windows NT added the syskey utility, which adds a layer of protection to the database in the form of additional encryption. However, pwdump2, a freeware utility, can be used to extract the password hashes from syskey-protected files.

Numerous freely available products can crack Windows and Unix passwords. Two of the most famous are LC4 (formerly known as L0phtCrack) and John the Ripper. These products typically combine several attacks: a dictionary attack (using the same algorithm as the operating system to hash words in a dictionary and then comparing the results to the password hashes in the password file), heuristics (trying the things people commonly do, such as creating passwords with numbers at the end and capital letters at the beginning), and brute force (checking every possible character combination).
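The dictionary-plus-heuristics approach can be sketched in a few lines of Python. This is an illustration only, not how LC4 or John the Ripper are implemented: SHA-256 stands in for whatever hash the target system uses, the word list and "stolen" hash are hypothetical, and the brute-force stage is omitted.

```python
import hashlib
from typing import Iterable, Iterator, Optional

def toy_hash(password: str) -> str:
    # Stand-in for the target system's hash; real tools use that system's own algorithm.
    return hashlib.sha256(password.encode("utf-8")).hexdigest()

def candidates(words: Iterable[str]) -> Iterator[str]:
    """Yield each dictionary word plus a few common variations."""
    for word in words:
        cap = word.capitalize()
        yield word
        yield cap                      # capital letter at the beginning
        for digit in range(10):
            yield f"{word}{digit}"     # number at the end
            yield f"{cap}{digit}"      # both habits at once

def crack(target_hash: str, words: Iterable[str]) -> Optional[str]:
    """Hash every candidate with the same algorithm and compare to the stolen hash."""
    for guess in candidates(words):
        if toy_hash(guess) == target_hash:
            return guess
    return None

wordlist = ["password", "dragon", "monkey"]   # hypothetical dictionary
stolen = toy_hash("Dragon7")                  # hypothetical hash from a password file
print(crack(stolen, wordlist))                # Dragon7
```

Note that the attacker never reverses the hash; it only needs the same algorithm and enough guesses.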

You can find evidence of successful attacks using these products. For example, the hacker known as “Analyzer,” who was convicted of hacking the Pentagon, is said to have used John the Ripper. In some studies, researchers claim they can, with powerful-enough equipment and access to the password database, crack any password created from normal characters, punctuation marks, and numbers, in under eight days. A legitimate use of these cracking programs is to audit a company’s passwords to see if they are in compliance with policy.

Another blow to Windows systems that are protected by passwords is the availability of a bootable Linux floppy that can replace the Administrator account’s password on a standalone server. If attackers have physical access to the computer, they can take it over, though this is also true of other operating systems using different attacks.

Protection for account database files on many operating systems was originally very weak, and may still be less than it could be. Administrators can improve security by implementing stronger authorization controls (file permissions) on the database files.

In any case, ample tools are available to eventually compromise passwords if the machine is in the physical possession of the attacker, or if the attacker can obtain physical possession of the password database. Every system should be physically protected, but where a centralized database for accounts exists, extra precautions should be taken. In addition, user training and account controls can strengthen passwords and make the attacker’s job harder—perhaps hard enough that the attacker will move on to easier pickings.

Windows 2000 and Windows Server 2003 domains store password data in the Active Directory, a self-replicating database. This database is protected with object permissions and can be further secured by network controls over data transfer.

TIP Try this experiment for yourself. Do a Google search on “etc/passwd,” “Windows SAM,” or “network authentication”. You may be surprised to find system administration notes, including documentation on the authentication practices of organizations, or acceptable use policies (which reveal deadlines for implementing some form of secure authentication, therefore allowing an attacker to deduce what is in use now), and other notes providing ample inside information useful to attackers. Search your own domain for the leakage of such information about your organization, and at least restrict its access to internal use.

Many applications now use the credentials of the logged-on user instead of maintaining their own password databases. Others have adopted the practices implemented in the operating systems and use encryption to protect stored passwords.

When passwords are encrypted, authentication processes change. Instead of doing a simple comparison, the system must first take the user-entered, plaintext password and encrypt it using the same algorithm used for its storage in the password file. Next, the newly encrypted password is compared to the stored encrypted password. If they match, the user is authenticated. This is how many operating systems and applications work today.
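A minimal Python sketch of this hash-then-compare process follows. It goes a step beyond the text by using a random per-password salt and PBKDF2 (`hashlib.pbkdf2_hmac`), a deliberately slow hash, instead of a single fast hash; the iteration count is an assumption chosen for illustration.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for this sketch

def store_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); only these go into the password file."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def check_password(entered: str, salt: bytes, stored_digest: bytes) -> bool:
    """Hash the entered password the same way, then compare the two results."""
    candidate = hashlib.pbkdf2_hmac("sha256", entered.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_digest)  # constant-time comparison

salt, digest = store_password("correct horse")
print(check_password("correct horse", salt, digest))  # True
print(check_password("wrong guess", salt, digest))    # False
```

Because each password gets its own salt, two users with the same password end up with different stored digests, so a single precomputed dictionary cannot be reused across accounts.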

How does this change when applications are located on servers that client workstations must interface with? What happens when centralized account databases reside on remote hosts? Sometimes the password entered by the user is encrypted, passed over the network in this state, and then compared by the remote server to its stored encrypted password. This is the ideal situation. Unfortunately, some network applications transmit passwords in clear text: telnet, FTP, rlogin, and many others do so by default. Even systems with secure local, or even centralized, network logon systems may use these and other applications that transmit passwords in clear text. If attackers can capture this data in flight, they can use it to log on as that user. In addition to these network applications, early remote authentication algorithms (used to log on via dial-up connections), such as Password Authentication Protocol (PAP), also transmit clear text passwords from client to server.

One solution to the problem of securing authentication credentials across the network is to use the challenge and response authentication algorithm. Numerous flavors of this process are available and are built into modern operating systems. Two examples are the Windows LAN Manager challenge and response authentication process and RSA’s SecurID system.

Windows LAN Manager Challenge and Response

The Windows LAN Manager (LM) challenge and response authentication system is used by legacy Windows operating systems for network authentication. Windows 2000 and above, when joined in a Windows 2000 or Windows Server 2003 domain, will use Kerberos.

Windows network authentication can occur either at the initial logon by a user or during resource access. There are three versions of the LM challenge and response: LM, NTLM, and NTLMv2. Each version is different—NTLM improves on LM, and NTLMv2 on NTLM. However, the basic process is the same, and LM and NTLM use the same steps. The difference is in the way the password is protected when stored, the keyspace size, and the additional processing required. The keyspace is the number of possible keys that can be created within the given constraints.

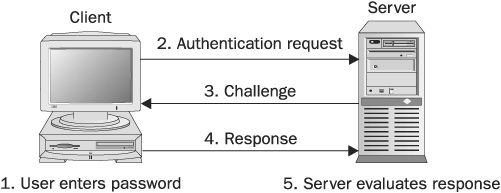

These are the steps followed by LM and NTLM:

1. The user enters a password.

2. The client issues an authentication request.

3. The server issues a challenge in the form of a random number.

4. The client uses a cryptographic hash of the password entered in step 1 (hashed with the same algorithm used in the storage of passwords) to encrypt the challenge and return it to the server. This is the response.

5. The server uses its stored copy of the password hash to decrypt the response. The server compares the original challenge to the received copy. If there is a match, the user is authenticated.

Figure 6-1 illustrates these steps.

FIGURE 6-1 The challenge and response algorithm is a five-step process
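The five steps can be sketched in Python as follows. This is not the LM or NTLM wire algorithm: SHA-256 stands in for the stored LM/NT hash, HMAC stands in for the DES-based encryption of the challenge, and the `Server` class and account data are hypothetical. Because an HMAC cannot be reversed, the sketch checks the response by recomputing it from the stored hash and comparing, which has the same effect as the decrypt-and-compare described in step 5.

```python
import hashlib
import hmac
import os

def password_hash(password: str) -> bytes:
    # Stand-in for the stored password hash (real NT hashes are MD4 over UTF-16LE).
    return hashlib.sha256(password.encode("utf-16-le")).digest()

def make_response(pw_hash: bytes, challenge: bytes) -> bytes:
    # Step 4 stand-in: combine the challenge with a key derived from the password.
    return hmac.new(pw_hash, challenge, hashlib.sha256).digest()

class Server:
    def __init__(self, accounts: dict):
        self.accounts = accounts   # username -> stored password hash
        self.pending = {}          # username -> outstanding challenge

    def issue_challenge(self, user: str) -> bytes:
        challenge = os.urandom(8)  # step 3: a random number
        self.pending[user] = challenge
        return challenge

    def verify(self, user: str, response: bytes) -> bool:
        # Step 5: each challenge is used once, then forgotten.
        challenge = self.pending.pop(user, None)
        if challenge is None or user not in self.accounts:
            return False
        expected = make_response(self.accounts[user], challenge)
        return hmac.compare_digest(expected, response)

server = Server({"alice": password_hash("S3cret!")})
challenge = server.issue_challenge("alice")                    # steps 2-3
response = make_response(password_hash("S3cret!"), challenge)  # steps 1 and 4, on the client
print(server.verify("alice", response))                        # True
```

The password itself never crosses the network; only the random challenge and the derived response do.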

The first version of the system, known as LAN Manager (LM), and still used by Windows 9x systems, is considered extremely weak for the following reasons:

• The LM password has a length limit of 14 characters and can only contain uppercase letters, special characters, and numbers. It is based on the OEM character set, which makes a smaller keyspace, reducing the number of possible keys that can exist in the system.

• The password is hashed by converting all characters to uppercase, padding passwords of less than 14 characters, dividing the result into two 7-character halves, and then using each half as a key to encrypt a constant using DES. Thus, any password longer than 7 characters is really no stronger than a 7-character password, as each half can be attacked separately, and the results can be recombined to make the password. Ordinarily, the longer a password is, the stronger it is, but in this case the effective length of the password will never be more than 7 characters.
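The preparation step and its effect on the keyspace can be illustrated in Python. The sketch stops before the DES step and assumes ASCII input (real LM uses the OEM code page); the figure of 69 usable characters is an assumption for the arithmetic, not a value given in the text.

```python
def lm_halves(password: str) -> tuple[bytes, bytes]:
    """Uppercase, pad to 14 bytes with nulls, and split into two 7-byte halves.

    Each half would then serve as a DES key to encrypt a constant (not shown).
    """
    if len(password) > 14:
        raise ValueError("LM cannot represent passwords longer than 14 characters")
    raw = password.upper().encode("ascii").ljust(14, b"\x00")
    return raw[:7], raw[7:]

print(lm_halves("password123"))  # (b'PASSWOR', b'D123\x00\x00\x00')

ALPHABET = 69                 # assumed size of the LM character set
whole = ALPHABET ** 14        # guesses if all 14 characters had to be attacked together
halves = 2 * ALPHABET ** 7    # guesses when each 7-character half is attacked separately
print(f"{whole:.2e} vs {halves:.2e}; splitting makes the search {whole // halves:,}x smaller")
```

Attacking each half on its own turns one enormous search into two small ones, which is why the effective length never exceeds 7 characters.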

The NTLM network authentication system, developed for Windows NT, is a more secure system. NTLM passwords are based on the Unicode character set and can be 128 characters in length, though the standard GUI only accepts 14 characters. The password is hashed using MD4 into a 16-byte message digest. (A message digest is the result of a cryptographic hash of some data, in this case the password.) The cryptographic hash is a one-way function (OWF) that can encrypt a variable-length password and produce a fixed-length hash. An OWF cannot be decrypted.

NTLM’s weaknesses are as follows:

• It is subject to replay attacks. If the response can be captured, it might be used by an attacker to authenticate to the server.

• The LM hash is also used in the response. Both the LM hash and the NTLM hash are used to produce two encrypted versions of the response, and both are sent to the server.

• The LM hash is still stored in the server’s account database or that of the domain controller. Since the LM hash is weaker and may be more readily cracked, the result can be used to deduce the NTLM hash, as well. Accounts can still be easily compromised.

NTLMv2, available since Windows NT service pack 4 (1998), improves upon the NTLM algorithm by requiring that the server and client computer clocks be synchronized within 30 minutes of each other. Controls introduced with the new algorithm allow administrators to configure systems such that the LM hash is not used to compute a response to the challenge. In addition, if both client and server support it, enhanced session security is negotiated, including message integrity (a guarantee that the message that arrives is the same as was sent or an error is logged) and message confidentiality (encryption). Session security protects against a replay attack, which is where an attacker captures the client’s response and uses this in an attempt to authenticate—in essence the attack program “replays” the authentication. NTLMv2’s weakness is that the LM password hashes and challenges are still present in the account database.

Windows NT, Windows 2000, and Windows Server 2003 can be configured to eliminate both the storage and the use of LM hashes. A client add-on is available for Windows 9x; once it is installed, NTLMv2 can be configured. With these adjustments, the network authentication process in a Windows network can be made more secure.

CHAP and MS-CHAP

The Challenge Handshake Authentication Protocol (CHAP, described in RFC 1994) and the Microsoft version, MS-CHAP (RFC 2433), are used for remote authentication. These protocols use a password hash to encrypt a challenge string. The remote access server uses the encrypted password hash from its account database to encrypt the challenge string and compare the two results. If they are the same, the user is authenticated.
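For CHAP specifically, RFC 1994 defines the response as an MD5 hash over the one-octet Identifier, the shared secret, and the challenge. The Python sketch below follows that definition; the secret and identifier values are hypothetical. Because the server must recompute the hash from the secret itself, it needs the secret in recoverable form, which is the storage issue discussed next.

```python
import hashlib
import hmac
import os

def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """RFC 1994 response value: MD5(Identifier || secret || Challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()

# Authenticator (remote access server): picks an identifier and a random challenge.
identifier = 7
challenge = os.urandom(16)
stored_secret = b"hypothetical-secret"   # must be recoverable to recompute the hash

# Peer (dial-up client): answers using the secret the user supplied.
response = chap_response(identifier, b"hypothetical-secret", challenge)

# Authenticator: recompute and compare.
ok = hmac.compare_digest(response, chap_response(identifier, stored_secret, challenge))
print("authenticated" if ok else "rejected")  # authenticated
```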

CHAP requires that the user’s password be stored as reversibly encrypted text. This is considered less secure than one-way encryption, since decryption is possible. MS-CHAP does not require that the password be stored as reversibly encrypted text; instead, the MD4 hash of the password is stored. MD4 is a one-way algorithm: once hashed, the password cannot be decrypted.

In addition to more secure storage of credentials, the MS-CHAPv2 (RFC 2759) requires mutual authentication—the user must authenticate to the server, and the server must also prove its identity. To do so, the server encrypts a challenge sent by the client. Since the server uses the client’s password to do so, and only a server that holds the account database in which the client has a password could do so, the client is also assured that it is talking to a valid remote access server. This is a stronger algorithm.
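The idea of mutual authentication can be sketched in Python. This is not the MS-CHAPv2 algorithm from RFC 2759, which combines the NT hash, SHA-1, and DES in specific ways; it only shows the shape of the exchange, with HMAC-SHA-256 as a stand-in and hypothetical keys. Each side answers the other's challenge, and a server that lacks the account's password hash cannot produce an answer the client will accept.

```python
import hashlib
import hmac
import os

def proof(key: bytes, challenge: bytes, role: bytes) -> bytes:
    """Keyed answer to a challenge; the role label keeps client and server answers distinct."""
    return hmac.new(key, role + b"|" + challenge, hashlib.sha256).digest()

def mutual_auth(client_key: bytes, server_key: bytes) -> tuple[bool, bool]:
    """Return (server accepts client, client accepts server)."""
    server_challenge = os.urandom(16)
    client_challenge = os.urandom(16)
    # The user authenticates to the server by answering the server's challenge.
    client_answer = proof(client_key, server_challenge, b"client")
    server_accepts = hmac.compare_digest(
        client_answer, proof(server_key, server_challenge, b"client"))
    # The server proves its identity by answering the client's challenge.
    server_answer = proof(server_key, client_challenge, b"server")
    client_accepts = hmac.compare_digest(
        server_answer, proof(client_key, client_challenge, b"server"))
    return server_accepts, client_accepts

user_key = hashlib.sha256("S3cret!".encode("utf-16-le")).digest()  # hypothetical password hash
rogue_key = os.urandom(32)  # an impostor server that lacks the account database

print(mutual_auth(user_key, user_key))   # (True, True)
print(mutual_auth(user_key, rogue_key))  # (False, False)
```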

Kerberos is a network authentication system based on the use of tickets. In the Kerberos standard (RFC 1510), passwords are key to the system, but in some systems certificates may be used instead. Kerberos is a complex protocol developed at the Massachusetts Institute of Technology to provide authentication in a hostile network. Its developers, unlike those of some other network authentication systems, assumed that malicious individuals, as well as curious users, would have access to the network. For this reason, Kerberos has designed into it various facilities that attempt to deal with common attacks on authentication systems. The Kerberos authentication process follows these steps:

1. A user enters their password.

2. Data about the client and possibly an authenticator is sent to the server. The authenticator is the result of using the password (which may be hashed or otherwise manipulated) to encrypt a timestamp (the clock time on the client computer). This authenticator and a plaintext copy of the timestamp accompany a request for logon, which is sent to the Kerberos authentication server (AS). This is known as pre-authentication and may not be part of all Kerberos implementations—this is the KRB_AS_REQ message.

NOTE Typically both the AS and the Ticket Granting Service (TGS) are part of the same server, as is the Key Distribution Center (KDC). The KDC is a centralized database of user account information, including passwords. Each Kerberos realm maintains at least one KDC (a realm being a logical collection of servers and clients comparable to a Windows domain).

3. The KDC checks the timestamp from the workstation against its own time. The difference must be no more than the authorized time skew (which is five minutes, by default). If the time difference is greater, the request is rejected.

4. The KDC, since it maintains a copy of the user’s password, can use the password to encrypt the plaintext copy of the timestamp and compare the result to the authenticator. If the results match, the user is authenticated, and a ticket-granting ticket (TGT) is returned to the client—this is the KRB_AS_REP message.

5. The client sends the TGT to the KDC with a request for the use of a specific resource, and it includes a fresh authenticator. The request might be for resources local to the client computer or for network resources. This is the KRB_TGS_REQ message, and it is handled by the Ticket Granting Service or TGS.

6. The KDC validates the authenticator and examines the TGT. Since it originally signed the TGT by encrypting a portion of the TGT using its own credentials, it can verify that the TGT is one of its own. Since a valid authenticator is present, the TGT is also less likely to be a replay. (A captured request would most likely have an invalid timestamp by the time it is used—one that differs by more than the skew time from the KDC’s clock.)

7. If all is well, the KDC issues a service ticket for the requested resource—this is the KRB_TGS_REP message. Part of the ticket is encrypted using the credentials of the service (perhaps using the password for the computer account on which the service lies), and part of the ticket is encrypted with the credentials of the client.

8. The client can decrypt its part of the ticket and thus knows what resource it may use. The client sends the ticket to the resource computer along with a fresh authenticator. (During initial logon, the resource computer is the client computer, and the service ticket is used locally.)

9. The resource computer (the client) validates the timestamp by checking whether the time is within the valid period, and then decrypts its portion of the ticket. This tells the computer which resource is requested and provides proof that the client has been authenticated. (Only the KDC would have a copy of the computer’s password, and the KDC would not issue a ticket unless the client was authenticated.) The resource computer (the client) then uses an authorization process to determine whether the user is allowed to access the resource.

Figure 6-2 illustrates these steps for Kerberos authentication.

FIGURE 6-2 The Kerberos authentication system uses tickets and a multistep process
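The pre-authentication check (steps 2 through 4) and the TGT check in step 6 can be sketched in Python. This is a teaching model under stated assumptions, not Kerberos: HMAC tags stand in for encryption (so this TGT is signed but, unlike a real one, not hidden from the client), `key_from_password` is a hypothetical key derivation, and the ten-hour ticket lifetime is an assumption. The five-minute skew comes from the text.

```python
import hashlib
import hmac
import time

MAX_SKEW = 5 * 60              # default allowed clock skew, in seconds
TGT_LIFETIME = 10 * 60 * 60    # assumed TGT lifetime for this sketch

def key_from_password(password: str) -> bytes:
    # Hypothetical derivation; real Kerberos uses enctype-specific string-to-key functions.
    return hashlib.sha256(password.encode("utf-8")).digest()

def seal(key: bytes, data: bytes) -> bytes:
    # Stand-in for "encrypt with this key": a tag only a key holder can produce.
    return hmac.new(key, data, hashlib.sha256).digest()

def make_authenticator(password: str, now: float) -> tuple[bytes, bytes]:
    """Step 2: the plaintext timestamp plus the timestamp sealed with the user's key."""
    stamp = str(int(now)).encode()
    return stamp, seal(key_from_password(password), stamp)

class KDC:
    def __init__(self, users: dict, kdc_key: bytes):
        self.users = users         # principal -> long-term key (the KDC knows them all)
        self.kdc_key = kdc_key     # the KDC's own secret, used to protect TGTs

    def as_exchange(self, user: str, stamp: bytes, authenticator: bytes, now: float):
        """Steps 3-4: check the skew, check the authenticator, then issue a TGT."""
        if abs(now - int(stamp)) > MAX_SKEW:
            return None            # step 3: timestamp too far from the KDC's clock
        key = self.users.get(user)
        if key is None or not hmac.compare_digest(seal(key, stamp), authenticator):
            return None            # step 4: authenticator does not match
        body = f"{user}|{int(now) + TGT_LIFETIME}".encode()
        return body, seal(self.kdc_key, body)

    def tgt_is_valid(self, tgt: tuple, now: float) -> bool:
        """Step 6: confirm this KDC issued the TGT and that it has not expired."""
        body, tag = tgt
        if not hmac.compare_digest(seal(self.kdc_key, body), tag):
            return False
        expires = int(body.decode().rsplit("|", 1)[1])
        return now <= expires

kdc = KDC({"alice": key_from_password("S3cret!")}, kdc_key=b"hypothetical-kdc-secret")
now = time.time()
stamp, auth = make_authenticator("S3cret!", now)
tgt = kdc.as_exchange("alice", stamp, auth, now)
print(tgt is not None and kdc.tgt_is_valid(tgt, now))   # True
print(kdc.as_exchange("alice", stamp, auth, now + 3600))  # None: a replay an hour later
```

The replayed request fails on the clock check alone, which is the defense against captured requests described in step 6.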

Did you catch the important distinction at the end of the process? The ability of the user to access and use the resource depends on two things: first, whether they can successfully authenticate to the KDC and obtain a service ticket, and secondly, whether they are authorized to access the resource. Kerberos is an authentication protocol only.

In addition to the authenticator and the use of computer passwords to encrypt ticket data, other Kerberos controls can be used. Tickets can be reused, but they are given an expiration time. A ticket can possibly be renewed before it expires, but the number of renewals can also be controlled.
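Expiration and a renewal limit can be modeled with a small Python sketch. The `Ticket` record and its fields are hypothetical. Following the text, it caps renewals with a counter; real Kerberos tickets instead express the cap as a renew-till time. In this sketch, as in standard Kerberos, a ticket can be renewed only before it expires.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Ticket:
    # Hypothetical ticket record; times are seconds on a shared clock.
    issued_at: float
    lifetime: float
    renewals_left: int

    def expired(self, now: float) -> bool:
        return now >= self.issued_at + self.lifetime

def renew(ticket: Ticket, now: float) -> Optional[Ticket]:
    """Replace a still-valid ticket with a fresh one, if any renewals remain."""
    if ticket.expired(now) or ticket.renewals_left <= 0:
        return None        # expired or out of renewals: a new ticket must be requested
    return Ticket(issued_at=now, lifetime=ticket.lifetime,
                  renewals_left=ticket.renewals_left - 1)

t = Ticket(issued_at=0, lifetime=3600, renewals_left=2)
t = renew(t, 3000)         # allowed; one renewal left
t = renew(t, 6000)         # allowed; none left
print(renew(t, 7000))      # None: renewal limit reached
```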

In most implementations, however, Kerberos relies on passwords, so all the normal precautions about password-based authentication systems apply. If the user’s password can be obtained, it makes no difference how strong the authentication system is: the account is compromised. However, there are no known successful attacks against Kerberos data available on the network. Attacks must be mounted against the password database, or passwords must be gained in an out-of-band attack (social engineering, accidental discovery, and so on).

The issue with passwords is twofold. First, they are, in most cases, created by people. People need to be taught how to construct strong passwords, and most people are not. These strong passwords must also be remembered and not written down, which means, in most cases, that long passwords cannot be required. Second, passwords do get known by people other than the individual they belong to. People do write passwords down and often leave them where others can find them. People also share passwords; after all, someone needs access to their system! (The best answer to this last problem, of course, is that every authorized user must have their own account and password that is valid on the systems they must access, and all users must be trained to refuse requests for their password.)

Passwords are subject to a number of different attacks. They can be captured and cracked, or possibly used in a replay attack. For example, it is possible to capture the response string of a Windows LM authentication, or obtain the hash from the account database and use this to log on. (For this attack, code must be written to request authentication, and then to return the response using the captured hash rather than a hash of the entered password. This technique is known as “passing the hash.”)
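Why a stolen hash is as good as a password in such schemes is easiest to see in code. In this Python sketch, SHA-256 and HMAC stand in for the real LM/NTLM computations and the account data is hypothetical; the point is that the response is computed from the hash alone, so whoever holds the hash can answer any challenge without ever learning the password.

```python
import hashlib
import hmac
import os

def stored_hash(password: str) -> bytes:
    # Stand-in for the hash kept in the account database.
    return hashlib.sha256(password.encode("utf-16-le")).digest()

def respond(pw_hash: bytes, challenge: bytes) -> bytes:
    # Stand-in for the response computation; its only secret input is the hash.
    return hmac.new(pw_hash, challenge, hashlib.sha256).digest()

account_db = {"alice": stored_hash("S3cret!")}      # hypothetical account database

challenge = os.urandom(8)                           # issued by the server
expected = respond(account_db["alice"], challenge)  # what the server will check against

stolen = account_db["alice"]                        # attacker copies the hash, not the password
forged = respond(stolen, challenge)
print(hmac.compare_digest(forged, expected))        # True
```

This is why the account database deserves the same protection as the passwords themselves.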

One solution to this type of attack would be to use a system that requires the password to be different every time it is used. Outside of computers, this has been accomplished with the one-time pad. When two people need to send encrypted messages and each has a copy of the one-time pad, each can use the day’s password, or some other agreed method, to determine which password to use. The advantage of such a system, of course, is that even if a key is cracked or deduced, it is only good for the current message. The next message uses a different key.

How, then, can this be accomplished in a computer system? Two current methods that use one-time passwords are the S/Key system, and RSA’s SecurID system.

RSA SecurID System

RSA SecurID uses hardware- or software-based authenticators. Authenticators are either hardware tokens (such as a key fob, card, or pinpad) or software (available for PDAs, such as Pocket PC and Palm devices, for phones, such as those from Ericsson and Nokia, and for Windows workstations). The authenticators generate a simple one-time authentication code that changes every 60 seconds. The user combines their personal identification number (PIN) and this code to create the password. RSA ACE/Server can validate this password, since its clock is synchronized with the token and it knows the user’s PIN. Since the authentication code changes every 60 seconds, the password will change each time it’s used.

This system is a two-factor system since it combines the use of something you know, the PIN, and something you have, the authenticator.
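RSA's SecurID algorithm is proprietary, so the Python sketch below substitutes the open TOTP scheme (RFC 6238, built on the HOTP truncation of RFC 4226) to show the same idea: a token and a server that share a secret and a clock derive the same short code for each 60-second interval, and the PIN supplies the second factor. The secret, the PIN, and the one-interval drift allowance are assumptions for illustration.

```python
import hashlib
import hmac
import struct
import time

def totp(secret: bytes, at: float, step: int = 60, digits: int = 6) -> str:
    """Time-based code: HMAC-SHA1 over the interval counter, then dynamic truncation."""
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    value = int.from_bytes(mac[offset:offset + 4], "big") & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)

def verify_passcode(passcode: str, pin: str, secret: bytes, now: float, step: int = 60) -> bool:
    """Server side: the passcode is the PIN followed by the current code."""
    if not hmac.compare_digest(passcode[:len(pin)], pin):
        return False                           # something you know
    code = passcode[len(pin):]
    # Something you have: accept the current interval or one either side of it.
    return any(hmac.compare_digest(code, totp(secret, now + drift * step, step))
               for drift in (-1, 0, 1))

secret = b"12345678901234567890"   # hypothetical token seed shared with the server
now = time.time()
passcode = "4321" + totp(secret, now)
print(verify_passcode(passcode, "4321", secret, now))  # True
```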

S/Key

S/Key, a system first developed by Bellcore and described in RFC 2289, uses a passphrase to generate one-time passwords. The original passphrase, and the number representing how many passwords will be generated from it, is entered into a server. The server generates a new password each time an authentication request is made. Client software that acts as a one-time generator is used on a workstation to generate the same password when the user enters the passphrase. Since both systems know the passphrase, and both systems are set to the same number of times the passphrase can be used, both systems can generate the same password independently.

The algorithm incorporates a series of hashes of the passphrase and a challenge. The first time it is used, the number of hashes equals the number of times the passphrase may be used. Each successive use reduces the number of hashes by one. Eventually, the number of times the passphrase may be used is exhausted, and either a new passphrase must be set, or the old one must be reset.

When the client system issues an authentication request, the server issues a challenge. The server challenge is a hash algorithm identifier (which will be MD4, MD5, or SHA1), a sequence number, and a seed (which is a clear text character string of 1 to 16 characters). Thus, a server challenge might look like this: otp-md5 567 mydoghasfleas. The one-time generator processes the challenge together with the passphrase entered by the user to produce a one-time password that is 64 bits in length. This password must be entered into the system; in some cases this is done automatically, in others it can be cut and pasted, and in still other implementations the user must type it in. The password is used to encrypt the challenge to create the response. The response is then returned to the server, and the server validates it.

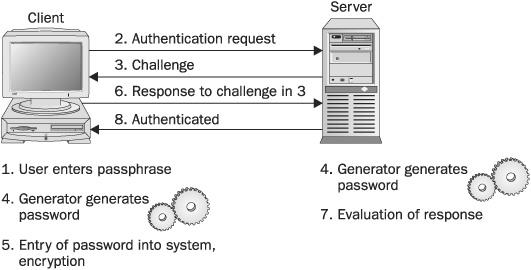

The steps for this process are as follows:

1. The user enters a passphrase.

2. The client issues an authentication request.

3. The server issues a challenge.

4. The generator on the client and the generator on the server generate the same one-time password.

5. The generated password is displayed to the user for entry or is entered directly by the system. The password is used to create the response.

6. The response is sent to the server.

7. The server creates its own encryption of the challenge using its own generated password, which is the same as the client’s. The response is evaluated.

8. If there is a match, the user is authenticated.

Figure 6-3 illustrates these steps.

FIGURE 6-3 The S/Key one-time password process is a modified challenge and response authentication system
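The hash-chain idea behind RFC 2289 can be sketched in Python. In that scheme the server stores only the last one-time password it accepted and checks a new one by hashing it once more and comparing, so a captured password does not reveal the next one. The sketch assumes MD5 folded to 64 bits by XORing the two halves of the digest and has not been checked against the RFC's test vectors; the passphrase and sequence numbers are hypothetical, and the six-word encoding used for display is omitted.

```python
import hashlib

def fold_md5(data: bytes) -> bytes:
    """MD5, folded from 128 to 64 bits by XORing the two halves."""
    d = hashlib.md5(data).digest()
    return bytes(a ^ b for a, b in zip(d[:8], d[8:]))

def one_time_password(passphrase: str, seed: str, count: int) -> bytes:
    """Hash seed+passphrase once, then apply hash-and-fold `count` more times."""
    value = fold_md5(seed.lower().encode() + passphrase.encode())
    for _ in range(count):
        value = fold_md5(value)
    return value

class SKeyServer:
    def __init__(self, seed: str, count: int, last_otp: bytes):
        # Stored state: the seed, the current sequence number, and the last accepted OTP.
        self.seed, self.count, self.last = seed, count, last_otp

    def challenge(self) -> str:
        return f"otp-md5 {self.count - 1} {self.seed}"

    def verify(self, response: bytes) -> bool:
        if fold_md5(response) != self.last:
            return False
        self.last, self.count = response, self.count - 1   # move one step down the chain
        return True

# Setup: the server is initialized with password number 100 for this passphrase and seed.
server = SKeyServer("mydoghasfleas", 100, one_time_password("correct horse", "mydoghasfleas", 100))
print(server.challenge())                                         # otp-md5 99 mydoghasfleas
answer = one_time_password("correct horse", "mydoghasfleas", 99)  # computed on the client
print(server.verify(answer))                                      # True
print(server.verify(answer))                                      # False: a replay fails
```

When the sequence number runs down, the chain is exhausted and the passphrase must be reset, as described earlier.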

S/Key, like other one-time password systems, does provide a defense against passive eavesdropping and replay attacks. There is, however, no privacy of transmitted data, nor any protection from session hijacking. A secure channel, such as IP Security (IPSec) or Secure Shell (SSH), can provide additional protection. Other weaknesses of such a system lie in its possible implementations. Since the passphrase must eventually be reset, the implementation should provide for this to be done in a secure manner. If it does not, an attacker may be able to capture the passphrase and thus prepare an attack on the system. S/Key’s implementation in some systems leaves the traditional logon in place. If users can choose between entering a complicated passphrase followed by a long, generated password and using the traditional logon, they may opt for the traditional logon, thus weakening the authentication process.

A certificate is a collection of information that binds an identity (user, computer, service, or device) to the public key of a public/private key pair. The typical certificate includes information about the identity and specifies the purposes for which the certificate may be used, a serial number, and a location where more information about the authority that issued the certificate may be found. The certificate is digitally signed by the issuing authority, the certificate authority (CA). The infrastructure used to support certificates in an organization is called the Public Key Infrastructure (PKI). More information on PKI can be found in Chapter 7.

The certificate, in addition to being stored by the identity it belongs to, may itself be broadly available. It may be exchanged in e-mail, distributed as part of some application’s initialization, or stored in a central database of some sort where those who need a copy can retrieve one. Each certificate’s public key has its associated private key, which is kept secret, usually only stored locally by the identity. (Some implementations provide private key archiving, but often it is the security of the private key that provides the guarantee of identity.)

An important concept to understand is that unlike symmetric key algorithms, where a single key is used to both decrypt and encrypt, public/private key algorithms use two keys: one key is used to encrypt, the other to decrypt. If the public key encrypts, only the related private key can decrypt. If the private key encrypts, only the related public key can decrypt.
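The following minimal sketch makes the two-key relationship concrete using textbook RSA with deliberately tiny primes. The values 61, 53, and 17 are illustrative assumptions. Real keys use far larger primes and padding. Whichever key you apply first, the other key undoes the result.

```python
# Toy textbook RSA with tiny primes: insecure, for illustration only.
P, Q = 61, 53
N = P * Q                # modulus shared by both keys (3233)
PHI = (P - 1) * (Q - 1)  # 3120
E = 17                   # public exponent
D = pow(E, -1, PHI)      # private exponent: the inverse of E mod PHI (Python 3.8+)


def with_public_key(m: int) -> int:
    """Transform a number 0 <= m < N using the public key (E, N)."""
    return pow(m, E, N)


def with_private_key(m: int) -> int:
    """Transform a number 0 <= m < N using the private key (D, N)."""
    return pow(m, D, N)


message = 65
# Public key "encrypts": only the private key recovers the message.
print(with_private_key(with_public_key(message)))  # 65
# Private key "encrypts" (signs): the public key recovers it.
print(with_public_key(with_private_key(message)))  # 65
```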

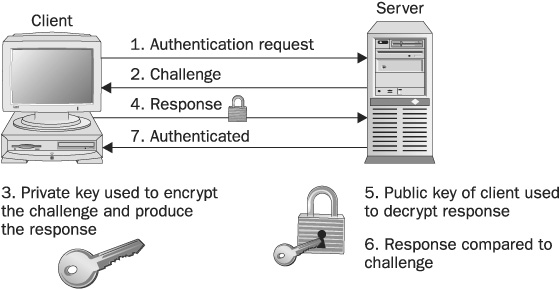

When certificates are used for authentication, the private key is used to encrypt or digitally sign some request or challenge. The server, or a central authentication server, can then decrypt the request with the related public key, which is available from the certificate. If the result matches what is expected, proof of identity is obtained. The reasoning is as follows. The public key successfully decrypts the response, and only the identity that owns the matching private key could have produced that response. Therefore the message must have come from that identity. The authentication steps are as follows:

1. The client issues an authentication request.

2. A challenge is issued by the server.

3. The client uses its private key to encrypt the challenge.

4. The response is returned to the server.

5. Since the server has a copy of the certificate, it can use the public key to decrypt the response.

6. The result is compared to the challenge.

7. If there is a match, the client is authenticated.
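The seven steps can be sketched as a toy exchange. This is a minimal sketch that uses a tiny textbook RSA key as a stand-in for a real certificate key pair. The `Server` and `Client` classes are hypothetical. Real protocols also pad and hash the challenge rather than operating on raw numbers.

```python
import secrets

# Toy textbook RSA key pair (tiny primes): an illustrative assumption only.
N, E, D = 3233, 17, 2753


class Server:
    """Hypothetical verifier holding the public key from the client's certificate."""

    def __init__(self, client_public_key: tuple):
        self.public_key = client_public_key
        self.challenge = None

    def issue_challenge(self) -> int:
        # Step 2: a fresh random challenge, smaller than the modulus.
        _, n = self.public_key
        self.challenge = secrets.randbelow(n - 2) + 2
        return self.challenge

    def verify(self, response: int) -> bool:
        # Steps 5-7: apply the public key and compare with the challenge.
        e, n = self.public_key
        ok = self.challenge is not None and pow(response, e, n) == self.challenge
        self.challenge = None  # each challenge is usable once, so replays fail
        return ok


class Client:
    """Hypothetical client that never reveals its private key."""

    def __init__(self, private_key: tuple):
        self._private_key = private_key

    def respond(self, challenge: int) -> int:
        # Step 3: transform the challenge with the private key.
        d, n = self._private_key
        return pow(challenge, d, n)


# Step 1 (the authentication request) is implied by starting the exchange.
server = Server((E, N))
client = Client((D, N))
response = client.respond(server.issue_challenge())  # step 4: response returned
print(server.verify(response))  # True
```

Discarding the challenge after one use is a design choice in this sketch. It means a captured response cannot simply be replayed later.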

Figure 6-4 illustrates this concept.

FIGURE 6-4 Certificate authentication uses public and private keys

It is useful here to understand that the client generates the original set of keys and sends only the public key to the CA. The CA generates the certificate and signs it with its own private key. It then returns a copy of the certificate to the user and stores a copy in its database. In some systems, another database also receives a copy of the certificate. For example, when Windows 2000 Certificate Services is implemented in a Windows 2000 domain, the certificate is bound to the Active Directory identity of the client, and a copy of the certificate is available in Active Directory. The digital signature on the certificate is what allows other systems to evaluate the certificate’s authenticity. A system that obtains a copy of the CA’s certificate can verify the signature on the client certificate. It can then be assured that the certificate was issued by that CA and has not been altered.
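The CA’s role can be sketched the same way. The CA signs a hash of the certificate’s fields with its private key. Any system that holds the CA’s public key can then check that signature. Several parts of this sketch are hypothetical simplifications of real X.509 certificates: the dictionary certificate format, the `ca_issue` and `verify_certificate` helpers, and the tiny CA key.

```python
import hashlib

# Hypothetical toy CA key pair (textbook RSA, tiny primes): illustration only.
CA_N, CA_E, CA_D = 3233, 17, 2753


def digest(fields: dict, n: int) -> int:
    # Hash a canonical encoding of the fields and reduce it below the modulus.
    encoded = repr(sorted(fields.items())).encode()
    return int.from_bytes(hashlib.sha256(encoded).digest(), "big") % n


def ca_issue(subject: str, subject_public_key: tuple) -> dict:
    """The CA signs the subject's identity and public key with its private key."""
    fields = {"subject": subject, "public_key": subject_public_key}
    signature = pow(digest(fields, CA_N), CA_D, CA_N)
    return {"fields": fields, "signature": signature}


def verify_certificate(cert: dict, ca_public_key: tuple) -> bool:
    """Anyone holding the CA's certificate (its public key) can check the signature."""
    e, n = ca_public_key
    return pow(cert["signature"], e, n) == digest(cert["fields"], n)


# The subject's public key (5, 8633) is a made-up value.
cert = ca_issue("alice@example.com", (5, 8633))
print(verify_certificate(cert, (CA_E, CA_N)))  # True
```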

Two systems that use certificates for authentication are SSL/TLS and smart cards.

Secure Sockets Layer (SSL) is a certificate-based system developed by Netscape that is used to provide authentication of secure web servers and clients and to share encryption keys between servers and clients. It can be used in e-commerce or wherever machine authentication or secure communications are required. Transport Layer Security (TLS) is the Internet standard version (RFC 2246) of the proprietary SSL. While both TLS and SSL perform the same function, they are not compatible—a server that uses SSL cannot establish a secure session with a client that only uses TLS. Applications must be made SSL- or TLS-aware before one or the other system can be used.

NOTE While the most common implementation of SSL provides for secure communication and server authentication, client authentication may also be implemented. Clients must have their own certificate for this purpose, and the web server must be configured to require client authentication.
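Requiring client certificates is a server-side setting. The sketch below uses Python’s standard `ssl` module to build a TLS server context that rejects any client that does not present a certificate from a trusted CA. The helper name and the file paths passed to it are placeholders.

```python
import ssl
from typing import Optional


def make_server_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS server context that requires client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Reject the handshake unless the client presents a valid certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate and private key (placeholder paths).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # CA certificate(s) trusted to have issued the client certificates.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

In practice you would pass real file paths and wrap accepted sockets with `ctx.wrap_socket(sock, server_side=True)`.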

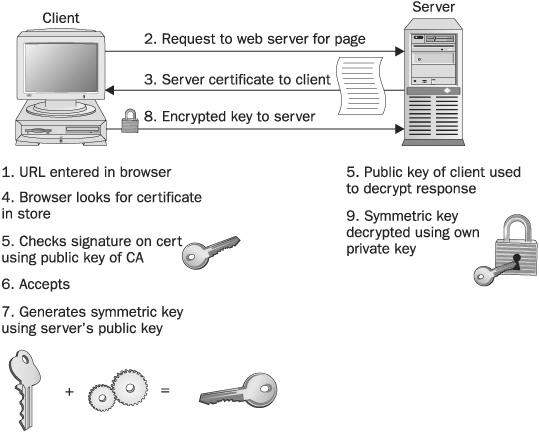

In the most commonly implemented use of SSL, an organization obtains a server SSL certificate from a public CA, such as VeriSign, and installs the certificate on its web server. The organization could instead produce its own certificate from an in-house implementation of certificate services. The advantage of a public CA certificate is that a copy of the CA’s certificate is already included in Internet browsers, so the client can verify the identity of the server. The authentication process (illustrated in Figure 6-5) works like this:

FIGURE 6-5 SSL can be used for server authentication and to provide secure communications between a web server and a client

1. The user enters the URL for the server in the browser.

2. The client request for the web page is sent to the server.

3. The server receives the request and sends its server certificate to the client.

4. The client’s browser checks its certificate store for a certificate from the CA that issued the server certificate.

5. If the CA certificate is found, the browser validates the certificate by checking the signature on the server’s certificate using the public key provided on the CA’s certificate.

6. If this test is successful, the browser accepts the server certificate as valid.

7. A symmetric encryption key is generated and encrypted by the client, using the server’s public key.

8. The encrypted key is returned to the server.

9. The server decrypts the key with the server’s own private key. The two computers now share an encryption key that can be used to secure communications between the two of them.
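On the client side, steps 4 through 6 correspond to what a TLS library’s default verification does. The following minimal sketch uses Python’s `ssl` module. `make_client_context` and `fetch_server_certificate` are hypothetical helper names. Modern TLS versions usually agree on the session key with ephemeral Diffie-Hellman rather than the client-encrypted key transport described in steps 7 through 9.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # Loads the system's trusted CA certificates (the "certificate store"),
    # requires a valid server certificate, and checks the host name.
    return ssl.create_default_context()


def fetch_server_certificate(host: str, port: int = 443) -> dict:
    """Connect, let TLS validate the server certificate, and return its details."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        # An untrusted, expired, or mismatched certificate raises
        # ssl.SSLCertVerificationError during the handshake.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Calling `fetch_server_certificate` with a host name requires network access. It returns a dictionary that includes the certificate’s subject, issuer, and `notAfter` fields.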

There are many potential problems with this system:

• Unless the web server is properly configured to require the use of SSL, the server is not authenticated to the client and normal, unprotected communication can occur. The security relies on the user entering https:// instead of http:// in the URL.

• If the client does not have a copy of the CA’s certificate, the server will offer to provide one. While this ensures that encrypted communication between the client and the server will occur, it does not provide server authentication. The security of the communication relies on the user refusing to connect with a server that cannot be identified by a third party.

• The process for getting a CA certificate into the browser’s store is not well controlled. In the past, inclusion may have depended on paying a fee or on whom you knew. Microsoft now requires that certificates included in its browser store come from CAs that pass an audit.

• Protection of the private key is paramount. While the default implementations only require that the key be in a protected area of the system, it is possible to implement hardware-based systems that require the private key to be stored only on a hardware device.

• As with any PKI-based system, the decision to provide a certificate to an organization for use on its web server is based on policies written by people, and the decision itself is made by people. Mistakes can be made. An SSL certificate that identifies a server as belonging to a company might be issued to someone who does not represent that company. And when a certificate has expired, or another problem is discovered and a warning is issued, many users simply ignore the warning and continue.
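Detecting the expired-certificate case from the last bullet is simple arithmetic on the certificate’s `notAfter` field. This sketch assumes the date string format returned by Python’s `SSLSocket.getpeercert()`. `is_expired` is a hypothetical helper.

```python
import ssl
import time
from typing import Optional


def is_expired(not_after: str, now: Optional[float] = None) -> bool:
    """Return True if a certificate's notAfter date is in the past.

    not_after uses the format found in getpeercert()["notAfter"],
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch
    current = time.time() if now is None else now
    return current > expires_at


print(is_expired("Jan  5 09:34:43 2018 GMT"))  # True: that date has passed
```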

The protection of the private key is paramount in certificate-based authentication systems. If an attacker can obtain the private key, they can spoof the identity of the client and authenticate. Implementations of these systems do a good job of protecting the private key, but, ultimately, if the key is stored on the computer, there is potential for compromise.

A better system would require that the private key be protected and kept separate from the computer. Smart cards can be used for this purpose. There are many types of smart cards. The ones used for authentication look like a credit card but contain a computer chip that stores the private key and a copy of the certificate and also provides processing. Care should be taken to select the appropriate smart card for the application that will use it. Other hardware tokens, such as those made by Rainbow Technologies and Datakey, can be USB-based and serve similar purposes. Smart cards require special smart card readers to provide communication between the smart cards and the computer system.

In a typical smart card implementation, the following steps are used to authenticate the client:

1. The user inserts the smart card into the reader.

2. The computer-resident application responds by prompting the user for their unique PIN. (The length of the PIN varies according to the type of smart card.)

3. The user enters their PIN.

4. If the PIN is correct, the computer application can communicate with the smart card. The private key is used to encrypt some data. This data may be a challenge, or in the case of the Microsoft Windows 2000 implementation of smart cards, it may be the timestamp of the client computer. The encryption occurs on the smart card.

5. The encrypted data is transferred to the computer and possibly to a server on the network.

6. The public key (taken from the user’s certificate, which can be made available to the verifier) is used to decrypt the data. Only the possessor of the smart card has the private key, and a valid PIN must be entered to start the process. Therefore, successfully decrypting the data means the user is authenticated.
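The same flow can be modeled in code to show where each step happens. This is a hypothetical sketch, not a smart card API. The `SmartCard` class stands in for the chip, and the tiny textbook RSA key (17, 2753, 3233) is an illustrative assumption.

```python
import hmac
import secrets


class SmartCard:
    """Hypothetical card model: the private key never leaves this object."""

    def __init__(self, pin: str, private_key: tuple):
        self._pin = pin.encode()
        self._private_key = private_key  # (d, n) of a toy textbook RSA key
        self._unlocked = False

    def verify_pin(self, pin: str) -> bool:
        # Steps 2-4: the card only works after the correct PIN is entered.
        self._unlocked = hmac.compare_digest(pin.encode(), self._pin)
        return self._unlocked

    def sign(self, data: int) -> int:
        # Step 4: the private-key operation happens on the card itself.
        if not self._unlocked:
            raise PermissionError("PIN has not been verified")
        d, n = self._private_key
        return pow(data, d, n)


def verify_card_output(output: int, data: int, public_key: tuple) -> bool:
    # Step 6: the verifier uses the public key from the user's certificate.
    e, n = public_key
    return pow(output, e, n) == data


card = SmartCard("4711", private_key=(2753, 3233))
challenge = secrets.randbelow(3231) + 2
card.verify_pin("4711")
print(verify_card_output(card.sign(challenge), challenge, (17, 3233)))  # True
```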

NOTE In the Windows 2000 implementation of smart cards, the encrypted timestamp, along with a plaintext copy of the timestamp, is sent to the KDC. In the Windows implementation of Kerberos, the KDC can use the client’s public key to decrypt the timestamp and compare it to the plaintext version. If these match, the user is authenticated and a TGT is provided to the client. Kerberos processing of the session ticket request continues in the normal manner. (More information about Kerberos processing can be found in the “Kerberos” section earlier in this chapter.)

The use of smart cards to store the private key and certificate solves the problem of protecting the keys. However, user training must be provided so that users do not tape a written copy of their PIN to their smart card, or otherwise make it known. As in more traditional authentication systems, it is the person who possesses the smart card and PIN who will be identified as the user.

Smart cards are also extremely resistant to brute force and dictionary attacks, since a small number of incorrect PIN entries will render the smart card useless for authentication. Additional security can be gained by requiring the presence of the smart card to maintain the session. The system can be locked when the smart card is removed. Users leaving for any amount of time can simply remove their card, and the system is locked against any individual who might be able to physically access the computer. Users can be encouraged to remove their cards if the smart card also serves as their employee ID badge and they are required to carry their ID at all times. This also ensures that the smart card will not be left overnight in the user’s desk.
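The lockout behavior behind this resistance can be sketched as a retry counter. `PinGuard` is hypothetical, and the three-attempt limit is an assumption. Actual limits vary by card.

```python
import hmac


class PinGuard:
    """Hypothetical model of a smart card's PIN retry counter."""

    def __init__(self, pin: str, max_attempts: int = 3):
        self._pin = pin.encode()
        self.max_attempts = max_attempts
        self.remaining = max_attempts

    @property
    def blocked(self) -> bool:
        return self.remaining == 0

    def try_pin(self, pin: str) -> bool:
        if self.blocked:
            return False  # a blocked card refuses even the correct PIN
        if hmac.compare_digest(pin.encode(), self._pin):
            self.remaining = self.max_attempts  # success resets the counter
            return True
        self.remaining -= 1
        return False
```

Consider a four-digit PIN with three attempts. A thief guessing at random succeeds with probability 3/10,000, or 0.03 percent, before the card blocks itself.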

Problems with smart cards are usually expressed in terms of management issues. Issuing smart cards, training users, justifying the costs, dealing with lost cards, and the like are all problems. In addition, the implementation should be checked to ensure that systems can be configured to require the use of a smart card. Some implementations allow the alternative use of a password, which weakens the system because an attack only needs to be mounted against the password—the additional security the smart card provides is eliminated by this ability to go around it. To determine whether a proposed system has this weakness, examine the documentation for this option, and look also for areas where the smart card cannot be used, such as for administrative commands or secondary logons. Secondary logons are logons such as the su command in Unix and the runas process in Windows 2000.

The Extensible Authentication Protocol (EAP) was developed to allow pluggable modules to be incorporated in an overall authentication process. This means authentication interfaces and basic processes can all remain the same, while changes can be made to the acceptable credentials and the precise way that they are manipulated. Once EAP is implemented in a system, new algorithms for authentication can be added as they are developed, without requiring huge changes in the operating system. EAP is currently implemented in several remote access systems, including Microsoft’s implementation of Remote Authentication Dial-In User Service (RADIUS).

Authentication modules used with EAP are called EAP types. Several EAP types exist, with the name indicating the type of authentication used:

• EAP/TLS Uses the TLS authentication protocol and provides the ability to use smart cards for remote authentication.

• EAP/MD5-CHAP Allows the use of passwords by organizations that require increased security for remote or wireless 802.1X authentication but that do not have the PKI needed to support certificates.
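The pluggable design can be sketched as a registry of authentication methods. A fixed framework dispatches to those methods by EAP type. This is a hypothetical sketch, not a RADIUS or 802.1X implementation. The MD5 method follows the CHAP response calculation from RFC 1994: MD5 over the identifier, the shared secret, and the challenge. The EAP MD5-Challenge type, listed above as EAP/MD5-CHAP, computes its response the same way.

```python
import hashlib
import hmac
from typing import Callable, Dict

# Registry of pluggable methods, keyed by EAP type name.
EAP_METHODS: Dict[str, Callable[..., bool]] = {}


def eap_method(name: str):
    """Decorator that plugs a new authentication method into the framework."""
    def register(func):
        EAP_METHODS[name] = func
        return func
    return register


def chap_md5_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    # RFC 1994 CHAP calculation: MD5(identifier || secret || challenge).
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


@eap_method("MD5-Challenge")
def verify_md5_challenge(identifier: int, challenge: bytes,
                         response: bytes, stored_secret: bytes) -> bool:
    expected = chap_md5_response(identifier, stored_secret, challenge)
    return hmac.compare_digest(expected, response)


def authenticate(eap_type: str, **credentials) -> bool:
    # The framework never changes; new methods are added by registering them.
    method = EAP_METHODS.get(eap_type)
    if method is None:
        return False  # no module installed for this type: reject
    return method(**credentials)
```

Adding a new method requires only another decorated function. The `authenticate` entry point stays the same, which is the property the paragraph describes.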

Biometric methods of authentication rely on the “something you are” factor: the credential is physically part of you. Biometric systems include the use of facial recognition and identification, retinal scans, iris scans, fingerprints, hand geometry, voice recognition, lip movement, and keystroke analysis. Biometric devices are commonly used today to provide authentication for access to computer systems and buildings, and even to permit pulling a trigger on a gun. The comparison algorithm differs from system to system, but the approach is the same. A body part is examined, and a number of unique points are mapped for comparison with stored mappings in a database. If the mappings match, the individual is authenticated.

The process hinges on two things: first, that the body part examined can be said to be unique, and second, that the system can be tuned to require enough information to establish a unique identity and not result in a false rejection, while not requiring so little information as to provide false positives. All of the biometrics currently in use have been established because they represent characteristics that are unique to individuals. The relative accuracy of each system is judged by the number of false rejections and false positives that it generates.
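The tuning trade-off can be shown by computing both error rates at two different match thresholds. The similarity scores below are made-up sample data, and `error_rates` is a hypothetical helper. Real systems measure these rates over large test populations.

```python
from typing import List, Tuple


def error_rates(genuine: List[float], impostor: List[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false rejection rate, false acceptance rate).

    A sample is accepted when its similarity score is >= threshold.
    """
    false_rejects = sum(1 for score in genuine if score < threshold)
    false_accepts = sum(1 for score in impostor if score >= threshold)
    return false_rejects / len(genuine), false_accepts / len(impostor)


# Made-up scores: genuine = the enrolled user, impostor = other people.
genuine = [0.91, 0.85, 0.78, 0.95, 0.70]
impostor = [0.20, 0.45, 0.72, 0.30, 0.55]
print(error_rates(genuine, impostor, 0.75))  # (0.2, 0.0): strict, rejects the user once
print(error_rates(genuine, impostor, 0.50))  # (0.0, 0.4): lenient, accepts two impostors
```

Raising the threshold trades false positives for false rejections, and lowering it does the reverse.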

In addition to false negatives and false positives, biometrics live under a shadow popularized by the entertainment industry: malicious attackers who cut body parts from the real person and use them to authenticate to systems. In one plot, the terrorist does just this. He cuts off the finger of his FBI escort and uses it to enter a secure site and murder other agents. In the movie The 6th Day, the hero likewise cuts off the finger of the enemy and uses it to enter the enemy stronghold. Biometric manufacturers claim that this cannot be done, because a severed finger would too quickly lose enough physical coherence to be used in this way. Some also manufacture fingerprint readers that require the presence of a pulse, or some other physical characteristic. However, whether severed body parts can actually be used does not matter; what matters is that attackers believe they can. Believing that a severed finger can admit them to the diamond stores of mines in Africa, workers have actually cut fingers from those privileged to enter.

NOTE Biometric systems are beginning to be used in airports, in criminal justice systems, and in casinos in an attempt to recognize known terrorists, criminals, and individuals thought to be cheaters. This use of biometrics, however, is for identification, not authentication. The systems are attempting to recognize, from among the many who pass by their cameras, individuals who have been previously identified and whose photograph or image is stored in a database. This is much like using fingerprints retrieved from a crime scene to identify individuals from a database of fingerprints. Authentication, on the other hand, uses some account information (indicating who we claim to be) and only has to attempt to match something else we provide (a password, PIN, or biometric) against the information stored for that account. The use of biometric systems, such as those using facial features, for identification instead of authentication is fraught with problems. Many of these systems report high rates of false positives and false negatives.
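The distinction in this note is the difference between a one-to-one comparison and a one-to-many search. In this hypothetical sketch, a template is a short list of numbers and `similarity` is a stand-in matcher (one divided by one plus the Euclidean distance). Real biometric matchers are far more complex. Identification compares the sample against every stored template, so each comparison is another opportunity for a false positive.

```python
import math
from typing import Dict, List, Optional

Templates = Dict[str, List[float]]


def similarity(a: List[float], b: List[float]) -> float:
    # Stand-in matcher: 1.0 for identical templates, falling toward 0.
    return 1.0 / (1.0 + math.dist(a, b))


def authenticate(templates: Templates, claimed_id: str,
                 sample: List[float], threshold: float = 0.8) -> bool:
    """One-to-one: compare the sample only with the claimed account's template."""
    template = templates.get(claimed_id)
    return template is not None and similarity(template, sample) >= threshold


def identify(templates: Templates, sample: List[float],
             threshold: float = 0.8) -> Optional[str]:
    """One-to-many: search every stored template for the best match."""
    best_id, best_score = None, threshold
    for person, template in templates.items():
        score = similarity(template, sample)
        if score >= best_score:
            best_id, best_score = person, score
    return best_id
```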

Other attacks on fingerprint systems have also been demonstrated; one is the gummy finger attack. In May of 2002, Tsutomu Matsumoto, of the Graduate School of Environment and Information Sciences at Yokohama National University, obtained an imprint of an audience member’s finger and prepared a fake finger with the impression. He used about $10 of commonly available items to produce something with the texture of gummy worm candy. He then used the “gummy finger” to defeat ten different commercial fingerprint readers. This attack requires access to the individual’s finger. Matsumoto also demonstrated a similar attack that used latent fingerprints lifted from various surfaces, and this attack was also successful. These attacks not only defeat systems most people believe to be undefeatable, but after the attack you can eat the evidence!

We have been discussing authentication as if it were only used for user logon. Nothing could be further from the truth. Here are some additional uses for authentication:

• Computer authenticating to a central server upon boot In Windows 2000 and Windows Server 2003, client computers joined to the domain log on at boot and receive security policy. Wireless networks may also require computer credentials before the computer is allowed access to the network.

• Computer establishing a secure channel for network communication Examples of this are SSH and IPSec. More information on these two systems is included in the following sections.

• Computer requesting access to resources This may also trigger a request for authentication. More information can be found in the “Authorization” section later in this chapter.

SSH Communications Security produces Secure Shell for Servers, which is available for most versions of Unix, and a separate product for Windows systems. An open-source implementation of SSH is also available. SSH provides a secure channel for use in remote administration. Traditional Unix remote access tools, such as telnet, rlogin, and rsh, do not protect authentication, nor do they provide confidentiality, but SSH does. A large number of authentication systems can be used with the product, including passwords, RSA’s SecurID, Symark’s PowerPassword, S/Key, and Kerberos.

IP Security, commonly referred to as IPSec, is designed to provide a secure communication channel between two devices. Computers, routers, firewalls, and the like can establish IPSec sessions with other network devices. IPSec can provide confidentiality, data authentication, data integrity, and protection from replay. Multiple RFCs describe the standard.

Many implementations of IPSec exist, and it is widely deployed in virtual private networks (VPNs). It can also be used to secure communication on LANs or WANs between two computers. Since it operates between the network and transport layers in the network stack, applications do not have to be aware of IPSec. IPSec can also be used to simply block specific protocols, or communication from specific computers or IP address block ranges. When used between two devices, mutual authentication from device to device is required. Multiple encryption and authentication algorithms can be supported, as the protocol was designed to be flexible. For example, in Windows 2000, Windows XP Professional and Windows Server 2003, IPSec can use shared keys, Kerberos, or certificates for authentication; MD5 or SHA1 for integrity; and DES or Triple DES for encryption.

The counterpart to authentication is authorization. Authentication establishes who the user is; authorization specifies what that user can do. Typically thought of as a way of establishing access to resources, such as files and printers, authorization also addresses the suite of privileges that a user may have on the system or on the network. In its ultimate use, authorization even specifies whether the user can access the system at all. There are a variety of types of authorization systems, including user rights, role-based authorization, access control lists, and rule-based authorization.

NOTE Authorization is most often described in terms of users accessing resources such as files or exercising privileges such as shutting down the system. However, authorization is also specific to particular areas of the system. For example, many operating systems are divided into user space and kernel space, and the ability of an executable to run in one space or the other is strictly controlled. To run within the kernel, the executable must be privileged, and this right is usually restricted to native operating system components. An exception to this rule is the right of third-party device drivers to operate in the kernel mode of Windows NT 4.0 and above.

Privileges, or user rights, are different from permissions. User rights provide the authorization to do things that affect the entire system. The ability to create groups, assign users to groups, log on to a system, and many more user rights can be assigned. Other user rights are implicit: they are granted to default groups (groups that are created by the operating system instead of by administrators) and cannot be removed.

In the typical implementation of a Unix system, implicit privileges are granted to the root account. This account (root, superuser, system admin) is authorized to do anything on the system. Users, on the other hand, have limited rights, including the ability to log on, access certain files, and run applications they are authorized to execute.

On some Unix systems, system administrators can grant certain users the right to run specific commands as root without giving them the root password. A freely available application that does this is Sudo (short for “superuser do”).
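The idea behind Sudo can be sketched as a lookup table: for each user, a list of commands that may run with root privileges. This is a hypothetical model. It does not reproduce Sudo’s actual configuration file format or matching rules, and the user names and command paths are illustrative.

```python
import shlex
from typing import Dict, Set

# Hypothetical delegation table: user -> programs they may run as root.
ALLOWED_AS_ROOT: Dict[str, Set[str]] = {
    "joe": {"/sbin/shutdown", "/usr/bin/passwd"},
}


def may_run_as_root(user: str, command_line: str) -> bool:
    parts = shlex.split(command_line)
    if not parts:
        return False
    # Only the program is checked here; arguments are not restricted.
    return parts[0] in ALLOWED_AS_ROOT.get(user, set())


print(may_run_as_root("joe", "/sbin/shutdown -h now"))  # True
print(may_run_as_root("joe", "/bin/sh"))                # False
```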

Each job within a company has a role to play. Each employee requires privileges (the right to do something) and permissions (granting access to resources and specifying what they can do with them) if they are to do their job. Early designers of computer systems recognized that the needs of possible users of systems would vary, and that not all users should be given the right to administer the system.

Two early roles for computer systems were those of user and administrator. Early systems defined roles for these types of users to play and granted them access based on their membership in one of these two groups. Administrators (superusers, root, admins, and the like) were granted special privileges and allowed access to a larger array of computer resources than were ordinary users. Administrators, for example, could add users, assign passwords, access system files and programs, and reboot the machine. Ordinary users could log on and perhaps read data, modify it, and execute programs. This grouping was later extended to include the role of auditor. An auditor can read system information and information about the activities of others on the system, but cannot modify system data or perform other administrator role functions. As systems grew, the roles of users were made more granular. Users might be classified by their security clearance, for example, and allowed access to specified data or allowed to run certain applications. Other distinctions might be made based on the user’s role in a database or other application system.
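The user, administrator, and auditor roles described above map naturally onto a role-to-permission table. The permission names in this sketch are hypothetical labels for the activities the paragraph lists.

```python
from typing import Dict, Iterable, Set

# Hypothetical permission labels for the activities described above.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "user": {"logon", "read_data", "modify_data", "execute_programs"},
    "auditor": {"logon", "read_system_info", "read_activity_logs"},
    "administrator": {"logon", "read_data", "modify_data", "execute_programs",
                      "add_users", "assign_passwords", "access_system_files",
                      "reboot"},
}


def is_authorized(user_roles: Iterable[str], action: str) -> bool:
    """A user may act if any of their roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_authorized({"auditor"}, "read_activity_logs"))  # True
print(is_authorized({"auditor"}, "modify_data"))         # False: auditors only read
```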

In the simplest examples of these role-based systems, users are added to groups that have specific rights and privileges. Other role-based systems use more complex systems of access control, including some that can only be implemented if the operating system is designed to manage them. In the Bell-LaPadula security model, for example, data resources are divided into layers or zones. Each zone represents a data classification. Data may not be moved from zone to zone without special authorization, and a user must be given access to a zone in order to use its data. Under this model, the user may not write to a zone lower in the hierarchy (from secret to confidential, for example). Nor may the user read data at a higher level than they have access to; for example, a user granted access to the public zone may not read data in the confidential or secret zones.
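The two Bell-LaPadula rules can be written as comparisons between ordered classification levels. This is a minimal sketch. It models only the three levels named in the text and ignores the special authorization needed to move data between zones.

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 0
    CONFIDENTIAL = 1
    SECRET = 2


def can_read(subject: Level, obj: Level) -> bool:
    # No read up: a subject may read only at or below its own level.
    return subject >= obj


def can_write(subject: Level, obj: Level) -> bool:
    # No write down: a subject may write only at or above its own level.
    return subject <= obj


print(can_write(Level.SECRET, Level.CONFIDENTIAL))  # False: would leak secret data downward
print(can_read(Level.PUBLIC, Level.CONFIDENTIAL))   # False: reading above clearance
```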

The Unix role-based access control (RBAC) facility can be used to delegate administrative privileges to ordinary users. It works by defining role accounts, or accounts that can be used to perform certain administrative tasks. Role accounts are not accessible to normal logons—they can only be accessed with the su command.

Attendance at some social events is limited to invitees only. To ensure that only invited guests are welcomed to the party, a list of authorized individuals may be provided to those who permit the guests in. If you arrive, the name you provide is checked against this list, and entry is granted or denied. Authentication, in the form of a photo identification check, may or may not play a part here, but this is a good, simple example of the use of an access control list (ACL).

Information systems may also use access control lists to determine whether the requested service or resource is authorized. Access to files on a server is often controlled by information that is maintained on each file. Likewise, access control lists can control which types of communication may pass through a network device.

Both Windows (NT and above) and Unix systems use file permissions to manage access to files. The implementation varies, but it works well for both systems. It is only when you require interoperability that problems arise in ensuring that proper authorization is maintained across platforms.

A brief introduction to these systems is given in the following sections. More information on Windows and Unix object permissions is presented in Chapter 7.

Windows File-Access Permissions The Windows NTFS file system maintains an ACL for each file and folder. The ACL is composed of a list of access control entries (ACEs). Each ACE includes a security identifier (SID) and the permission(s) granted to that SID. Permissions may be either allow or deny, and SIDs may represent user accounts, computer accounts, or groups. ACEs may be assigned by administrators, owners of the file, or users who have been granted the permission to change permissions.

Part of the logon process is the determination of the privileges and group memberships for the specific user or computer. A list is composed that includes the user’s SID, the SIDs of the groups of which the user is a member, and the privileges the user has. When a connection to a computer is made, an access token is created for the user and attached to any running processes they may start on that system.

Permissions in Windows systems are very granular. The permissions listed in Table 6-1 actually represent sets of permissions, but the permissions can be individually assigned as well.

TABLE 6-1 Windows File Permissions

When an attempt to access a resource is made, the security subsystem compares the list of ACEs on the resource to the list of SIDs and privileges in the access token. If an ACE matches both a SID in the token and the access right requested, authorization is granted unless that ACE is a deny. Permissions are cumulative. For example, if read permission is granted to a user and write permission is also granted to that user, the user has both read and write permission. However, a matching deny results in denial, even if an allow permission is also present. If no ACE matches, access is implicitly denied.
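The evaluation rules in this paragraph can be sketched as follows: cumulative allows, a deny that wins, and implicit denial when nothing matches. The `Ace` class and the short SID strings are hypothetical simplifications. Real SIDs are longer numeric identifiers, and real access checks also consider privileges and ownership.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set


@dataclass(frozen=True)
class Ace:
    sid: str
    rights: FrozenSet[str]
    deny: bool = False


def access_check(acl: Iterable[Ace], token_sids: Set[str], requested: Set[str]) -> bool:
    granted: Set[str] = set()
    denied: Set[str] = set()
    for ace in acl:
        if ace.sid in token_sids:  # the ACE applies to this user or one of their groups
            (denied if ace.deny else granted).update(ace.rights)
    if requested & denied:
        return False  # a matching deny overrides any allow
    # Allows are cumulative; anything not granted is implicitly denied.
    return requested <= granted


acl = [
    Ace("S-joe", frozenset({"read"})),
    Ace("S-accountants", frozenset({"write"})),
    Ace("S-contractors", frozenset({"write"}), deny=True),
]
print(access_check(acl, {"S-joe", "S-accountants"}, {"read", "write"}))  # True
print(access_check(acl, {"S-joe", "S-accountants", "S-contractors"}, {"write"}))  # False
```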

NOTE In Windows 2000, Windows XP Professional, and Windows Server 2003, there are exceptions to the “deny overrides allow” rule because of how permissions are processed. ACEs are evaluated in order, and permissions set explicitly on an object are processed before inherited ones. If an allow permission that grants the requested access is processed before a deny permission, the deny will not matter. In Windows NT, ACEs were always sorted so that deny permissions were processed first, but the inheritance model of file permissions changed in the newer operating systems.
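The ordering effect in this note can be sketched by walking the ACEs in canonical order and stopping at the first decision. The canonical order is explicit deny, explicit allow, inherited deny, inherited allow. This is a simplified, hypothetical model of the Windows access check, not its actual implementation. The SID strings are illustrative.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set


@dataclass(frozen=True)
class Ace:
    sid: str
    rights: FrozenSet[str]
    deny: bool = False
    inherited: bool = False


def ordered_access_check(acl: Iterable[Ace], token_sids: Set[str],
                         requested: Set[str]) -> bool:
    # Canonical order: explicit deny, explicit allow, inherited deny, inherited allow.
    ordered = sorted(acl, key=lambda ace: (ace.inherited, not ace.deny))
    needed = set(requested)
    if not needed:
        return True
    for ace in ordered:
        if ace.sid not in token_sids:
            continue
        if ace.deny:
            if ace.rights & needed:
                return False  # denies a right that has not yet been granted
        else:
            needed -= ace.rights
            if not needed:
                return True  # everything requested is granted; later ACEs are not examined
    return False  # some requested right was never granted: implicit deny


acl = [
    Ace("S-staff", frozenset({"write"}), deny=True, inherited=True),  # from the parent folder
    Ace("S-joe", frozenset({"write"})),                               # set on the file itself
]
print(ordered_access_check(acl, {"S-joe", "S-staff"}, {"write"}))  # True: explicit allow wins
```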

It should be noted that file permissions and other object-based permissions in Windows can also be supplemented by permissions on shared folders. That is, if a folder is directly accessible from the network because of the Server Message Block (SMB) protocol, permissions can be set on the folder to control access. These permissions are evaluated along with the underlying permissions set directly on the folder using the NTFS permission set. In the case where there is a conflict between the two sets of permissions, the most restrictive permission wins. For example, if the share permission gives Read and Write permission to the Accountants group, of which Joe is a member, but the underlying folder permission denies Joe access, then Joe will be denied access to the folder.
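When a folder is reached over the network, a right must survive both permission sets. The following minimal sketch assumes each set has already been reduced to the rights the user holds in it. The helper and the right names are hypothetical.

```python
from typing import Set


def effective_network_access(share_allow: Set[str], ntfs_allow: Set[str],
                             ntfs_deny: Set[str]) -> Set[str]:
    """Rights a user has on a shared folder when connecting over the network."""
    ntfs = set(ntfs_allow) - set(ntfs_deny)  # an NTFS deny removes the right
    return set(share_allow) & ntfs           # a right must be allowed by both sets


# Joe: Read and Write through the Accountants share permission, but NTFS denies him.
print(effective_network_access({"read", "write"}, {"read", "write"}, {"read", "write"}))  # set()
```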

Unix File-Access Permissions Traditional Unix file systems do not use ACLs. Instead, files are protected by limiting access by user account and group. If you want to grant read access to a single individual in addition to the owner, for example, you cannot do so. If you want to grant read access to one group and write access to another, you cannot. This lack of granularity is countered in some Unix systems (such as Solaris) by providing ACLs, but before we look at that system, we’ll examine the traditional file protection system.

Information about a file, with the exception of the filename, is included in the inode. The file inode contains information about the file, including the user ID of the file’s owner, the group to which the file belongs, and the file mode. The file mode is the set of read/write/execute permissions.

File permissions are assigned in order to control access, and they consist of three levels of access: owner, group and all others. Owner privileges include the right to determine who can access the file and read it, write to it, or if it is an executable, execute it. There is little granularity to these permissions. Directories can also have permissions assigned to Owner, Group, and all others. Table 6-2 lists and explains the permissions.

TABLE 6-2 Traditional Unix File Permissions
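The traditional check can be sketched with the permission-bit constants from Python’s `stat` module. The `unix_access` helper is hypothetical, and it ignores root, which bypasses these checks. Only one class applies to any given user. An owner is judged by the owner bits alone, and a group member by the group bits alone, even when the “other” bits would be more generous.

```python
import stat
from typing import Set

_BITS = {
    "owner": {"r": stat.S_IRUSR, "w": stat.S_IWUSR, "x": stat.S_IXUSR},
    "group": {"r": stat.S_IRGRP, "w": stat.S_IWGRP, "x": stat.S_IXGRP},
    "other": {"r": stat.S_IROTH, "w": stat.S_IWOTH, "x": stat.S_IXOTH},
}


def unix_access(mode: int, file_uid: int, file_gid: int,
                uid: int, gids: Set[int], want: str) -> bool:
    """Return True if the user may perform every access in want ("r", "w", "x")."""
    if uid == file_uid:
        bits = _BITS["owner"]
    elif file_gid in gids:
        bits = _BITS["group"]
    else:
        bits = _BITS["other"]
    return all(mode & bits[c] for c in want)


# rw-r----- owned by uid 1000, group 100
print(unix_access(0o640, 1000, 100, uid=2000, gids={100}, want="r"))  # True
print(unix_access(0o640, 1000, 100, uid=2000, gids={100}, want="w"))  # False
```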

ACLs are offered in addition to the traditional Unix file protection scheme. ACL entries can be defined on a file and set through commands. These commands specify the type of entry (for example, a user, group, or mask entry), the user ID (UID) or group ID (GID), and the perms (permissions). The mask entry specifies the maximum permissions allowed for users (not including the owner) and groups. Even if write or execute permission has been explicitly granted, an ACL mask set to read means read is the only permission that can take effect.
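The mask works as a bitwise AND on an entry’s permissions. This sketch uses the conventional read=4, write=2, execute=1 values. The entry-type labels are hypothetical names for the owner, named user, owning group, named group, and other entries.

```python
READ, WRITE, EXECUTE = 4, 2, 1

# Entries that the mask limits; the owner and "other" entries are not masked.
MASKED_ENTRIES = {"named_user", "owning_group", "named_group"}


def effective_perms(entry_type: str, perms: int, mask: int) -> int:
    return perms & mask if entry_type in MASKED_ENTRIES else perms


def rwx(perms: int) -> str:
    return "".join(flag if perms & bit else "-"
                   for flag, bit in (("r", READ), ("w", WRITE), ("x", EXECUTE)))


print(rwx(effective_perms("named_user", READ | WRITE | EXECUTE, READ)))  # r--
print(rwx(effective_perms("owner", READ | WRITE | EXECUTE, READ)))       # rwx
```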

ACLs are used by network devices to control access to networks and to control the type of access granted. Specifically, routers and firewalls may have lists of access controls that specify which ports on which computers can be accessed by incoming communications, or which types of traffic can be accepted by the device and routed to an alternative network. Additional information on ACLs used by network devices can be found in Chapters 10 and 11.
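Network device ACLs are commonly evaluated from top to bottom. The first matching entry decides, and traffic that matches no entry is dropped. The `Rule` format below is a hypothetical simplification that uses only the source network and destination port. It does not follow any vendor’s syntax.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class Rule:
    action: str               # "permit" or "deny"
    source: str               # source network in CIDR notation
    dest_port: Optional[int]  # None matches any destination port


def filter_packet(rules: List[Rule], src_ip: str, dest_port: int) -> str:
    for rule in rules:
        if (ip_address(src_ip) in ip_network(rule.source)
                and rule.dest_port in (None, dest_port)):
            return rule.action  # the first matching rule decides
    return "deny"               # nothing matched: implicit deny


rules = [
    Rule("deny", "10.0.5.0/24", None),   # block one subnet entirely
    Rule("permit", "10.0.0.0/8", 443),   # allow HTTPS from the rest of 10/8
]
print(filter_packet(rules, "10.0.5.7", 443))  # deny
print(filter_packet(rules, "10.1.2.3", 443))  # permit
print(filter_packet(rules, "10.1.2.3", 22))   # deny (implicit)
```

Rule order matters. If the two rules above were swapped, hosts in 10.0.5.0/24 would be permitted on port 443.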

Rule-based authorization requires the development of rules that stipulate what a specific user can do on a system. These rules might provide information such as “User Joe can access resource Z but cannot access resource D.” More complex rules specify combinations, such as “User Nancy can read file P only if she is sitting at the console in the data center.” In a small system, rule-based authorization may not be too difficult to maintain, but in larger systems and networks it is excruciatingly tedious and difficult to administer.
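Rule-based authorization can be sketched as a list of rules, each with an optional condition on the context of the request. The `AuthRule` and `Request` types and the location string are hypothetical. The rules encode the Joe and Nancy examples from the paragraph.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Request:
    user: str
    resource: str
    action: str
    location: str


def _always(request: Request) -> bool:
    return True


@dataclass
class AuthRule:
    user: str
    resource: str
    action: str
    condition: Callable[[Request], bool] = _always
    allow: bool = True


def authorize(rules: List[AuthRule], request: Request) -> bool:
    for rule in rules:
        if ((rule.user, rule.resource, rule.action)
                == (request.user, request.resource, request.action)
                and rule.condition(request)):
            return rule.allow
    return False  # no rule matched: deny


RULES = [
    AuthRule("joe", "Z", "access"),
    AuthRule("joe", "D", "access", allow=False),
    AuthRule("nancy", "P", "read",
             condition=lambda req: req.location == "datacenter-console"),
]
print(authorize(RULES, Request("nancy", "P", "read", "datacenter-console")))  # True
print(authorize(RULES, Request("nancy", "P", "read", "office")))              # False
```

Each combination of user, resource, action, and condition needs its own rule. This is why the approach becomes tedious in large networks.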

Authentication is the process of proving you are who you say you are. You can take that quite literally. If someone possesses your user credentials, it may be possible for that person to say they are you, and to prove it. You should always evaluate an authentication system based on how easy it would be to go around its controls. While many modern systems are based on hardware, such as tokens and smart cards, and on processes that can be assumed to be more secure, such as one-time passwords, most systems still rely on passwords for authentication. User training and account controls are a critical part of securing authentication.

Authorization, on the other hand, determines what an authenticated user can do on the system or network. A number of controls exist that can help define these rights of access explicitly.