Complementary Experimental Tools

Valuable Experimental Methods That Complement Mainstream Research Biophysics Techniques

Anything found to be true of E. coli must also be true of elephants

Jacques Monod, 1954 (from Friedmann, 2004)

General Idea: There are several important accessory experimental methods that complement techniques of biophysics, many of which are invaluable to the efficient functioning of biophysical methods. They include controllable chemical techniques for gluing biological matter to substrates, the use of “model” organisms, genetic engineering tools, crystal preparation for structural biology studies, and a range of bulk sample methods, including some of relevance to biomedicine.

The key importance for a student of physics in regard to learning aspects of biophysical tools and techniques is to understand the physics involved. However, the devil is often in the detail, and the details of many biophysical methods include the application of techniques that are not directly biophysical as such, but which are still invaluable, and sometimes essential, to the optimal functioning of the biophysical tool. In this chapter we discuss the key details of these important, complementary approaches. We also include discussion of the applications of biophysics in biomedical techniques. There are several textbooks dedicated to expert-level medical physics technologies; however, what we do here is highlight the important biophysical features of these to give the reader a basic all-round knowledge of how biophysics tools are applied to clinically relevant questions.

Bioconjugation is an important emerging field of research in its own right. New methods for chemical derivatization of all the major classes of biomolecules have been developed, many with a significant level of specificity. As we have seen from the earlier chapters in this book that outline experimental biophysics tools, bioconjugation has several applications to biophysical techniques, especially those requiring molecular level precision, for example, labeling biomolecules with a specific fluorophore tag or EM marker, conjugating a molecule to a bead for optical and magnetic tweezers experiments, and chemically modifying surfaces in order to purify a mixture of molecules.

Biotin is a natural molecule of the B-group of vitamins, relatively small with a molecular weight roughly twice that of a typical amino acid residue (see Chapter 2). It binds with high affinity to two structurally similar proteins called “avidin” (found in egg white of animals) and “streptavidin” (found in bacteria of the genus Streptomyces; these bacteria have proved highly beneficial to humans since they produce >100,000 different types of natural antibiotics, several of which are used in clinical practice). Chemical binding affinity in general can be characterized in terms of a dissociation constant, Kd. This is defined as the product of the concentrations of the two separate components in solution that bind together divided by the concentration of the bound complex itself and thus has the same units as concentration (e.g., molarity, or M). The biotin–avidin or biotin–streptavidin interaction has a Kd of 10⁻¹⁴ to 10⁻¹⁵ M. Thus, the concentration of “free” biotin in solution in the presence of avidin or streptavidin is exceptionally low, equivalent to just a single molecule inside a volume of a very large cell of ~100 μm diameter.
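The single-molecule estimate above can be checked with a short calculation. The sketch below is illustrative only: it assumes that the free biotin concentration is of the same order as Kd (true when free binder and bound complex are present at comparable concentrations) and treats the cell as a sphere. The function names are hypothetical and introduced here for illustration.

```python
import math

AVOGADRO = 6.022e23  # molecules per mole


def dissociation_constant(free_a, free_b, bound_ab):
    """Kd = [A][B] / [AB], with all concentrations in molar (M)."""
    return free_a * free_b / bound_ab


def molecules_in_sphere(concentration_molar, diameter_m):
    """Mean number of molecules at a given molarity inside a sphere."""
    radius_m = diameter_m / 2.0
    volume_m3 = (4.0 / 3.0) * math.pi * radius_m ** 3
    volume_litres = volume_m3 * 1000.0  # 1 m^3 = 1000 L
    return concentration_molar * AVOGADRO * volume_litres


# Assumption: free biotin concentration is of order Kd.
for kd in (1e-14, 1e-15):
    n = molecules_in_sphere(kd, 100e-6)
    print(f"Kd = {kd:.0e} M -> about {n:.1f} molecules in a 100 um sphere")
```

Under these assumptions the result is of order one molecule per cell volume, consistent with the statement in the text.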

KEY POINT 7.1

“Affinity” describes the strength of a single interaction between two molecules. However, if multiple interactions are involved, for example, due not only to a strong covalent interaction but also to multiple noncovalent interactions, then this accumulated binding strength is referred to as the “avidity.”

Thirty percent of the amino acid sequence of avidin is identical to streptavidin; however, their secondary, tertiary, and quaternary structures are almost the same: each molecule containing four biotin binding sites. Avidin has a higher intrinsic chemical affinity to biotin than streptavidin, though this situation is often reversed when avidin/streptavidin are bound to a conjugate. However, a modified version of avidin called “NeutrAvidin” has a variety of chemical groups removed from the structure including outer carbohydrate groups, which reduces nonspecific noncovalent binding to a range of biomolecules compared to both avidin and streptavidin. As a result, this is often the biotin binder of choice in many applications.

These strong interactions are very commonly used by biochemists in conjugation chemistry. Biotin and streptavidin/avidin pairs can be chemically bound to a biomolecule using accessible reactive groups on the biomolecules, for example, the use of carboxyl, amine, or sulfhydryl groups in protein labeling (see in the following text). Separately, streptavidin/avidin can also be chemically labeled with, for example, a fluorescent tag and used to probe for the “biotinylated” sites on the protein following incubation with the sample.

7.2.2 CARBOXYL, AMINE, AND SULFHYDRYL CONJUGATION

Carboxyl (—COOH), amine (—NH₂), and sulfhydryl (—SH) groups are present in many biomolecules and can all form covalent bonds to bridge to another chemical group through loss of a hydrogen atom. For example, conjugation to a protein can be achieved via certain amino acids that contain reactive amine groups—these are called “primary” (free) amine groups that are present in the side “substituent” group of amino acids and do not partake in peptide bond formation. For example, the amino acid lysine contains one such primary amine (see Chapter 2), which under normal cellular pH levels is bound to a proton to form the ammonium ion —NH₃⁺. Primary amines can undergo several types of chemical conjugation reactions, for example, acylation, isocyanate formation, and reduction.
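The statement that the lysine primary amine is protonated at cellular pH follows from the Henderson–Hasselbalch relation. The sketch below is a minimal illustration; the side-chain pKa of 10.5 is an assumed typical textbook value (it is not given in this chapter and shifts with the local protein environment), and the function name is hypothetical.

```python
def fraction_protonated(ph, pka):
    """Fraction of a titratable amine present in its protonated (ammonium) form.

    From Henderson-Hasselbalch: [base]/[acid] = 10**(pH - pKa).
    """
    return 1.0 / (1.0 + 10.0 ** (ph - pka))


LYSINE_SIDE_CHAIN_PKA = 10.5  # assumed typical value, environment dependent

for ph in (5.0, 7.4, 9.0, 10.5):
    f = fraction_protonated(ph, LYSINE_SIDE_CHAIN_PKA)
    print(f"pH {ph:4.1f}: {100.0 * f:.2f}% protonated")
```

With this assumed pKa, more than 99.9% of lysine amines are protonated at pH 7.4, which is one reason amine-targeted conjugation reactions are often run at mildly alkaline pH, where a larger fraction of the amine is in its reactive unprotonated form.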

Similarly, some amino acids (e.g., aspartic acid and glutamic acid) contain one or more reactive carboxyl groups that do not participate in peptide bond formation. These can be coupled to primary amine groups using a cross-linker chemical such as carbodiimide (EDC or CDI). The stability of the cross-link is often increased using an additional coupler called “sulfo-N-hydroxysuccinimide (sulfo-NHS).”

Chemically reactive sulfhydryl groups can also be used for conjugation to proteins. For example, the amino acid cysteine contains a free sulfhydryl group. A common cross-linker chemical is maleimide, with others including alkylation reagents and pyridyl disulfide. Normally, however, cysteine residues would be buried deep in the inaccessible hydrophobic core of a protein, often present in the form of two nearby cysteine residues bound together via their respective sulfur atoms to form a disulfide bridge —S—S— (the resulting cysteine dimer is called cystine), which stabilizes the folded protein structure. Chemically interfering with the sulfhydryl groups of native cysteine residues can therefore change the structure and function of the protein.

However, there are many proteins that contain no native cysteine residues. This is possibly due to the function of these proteins requiring significant dynamic molecular conformational changes that may be inhibited by the presence of —S—S— bonds in the structure. For these, it is possible to introduce one or more foreign cysteine residues by modification of the DNA encoding the protein using genetic engineering at specific DNA sequence locations. This technique is an example of site-directed mutagenesis (SDM), here specifically site-directed cysteine mutagenesis, discussed later in this chapter. Nonnative cysteines introduced in this way are free to be used for chemical conjugation reactions while minimizing impairment to the protein’s original biological function (though note that, in practice, significant optimization is often still involved in finding the best candidate locations in a protein sequence for a nonnative cysteine residue so as not to affect its biological function).

Binding to cysteine residues is also the most common method used in attaching spin labels for ESR (see Chapter 5), especially through the cross-linker chemical methanethiosulfonate that contains an —NO group with a strong ESR signal response.

An antibody, or immunoglobulin (Ig), is a complex protein with bound sugar groups produced by cells of the immune system in animals to bind to specific harmful infecting agents in the body, such as bacteria and viruses. The basic structure of the most common class of antibody is Y-shaped (Figure 7.1), with a high molecular weight of ~150 kDa. The types (or isotypes) of antibodies of this class are mostly found in mammals and are IgD (found in milk/saliva), IgE (commonly produced in allergic responses), and IgG, which are produced in several immune responses and are the most widely used in biophysical techniques. Larger variants consisting of multiple Y-shaped subunits include IgA (a Y-subunit dimer) and IgM (a Y-subunit pentamer). Other antibodies include IgW (found in sharks and skates, structurally similar to IgD) and IgY (found in birds and reptiles).

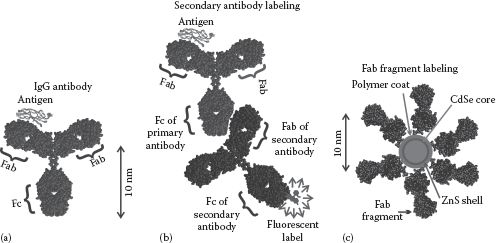

FIGURE 7.1 Antibody labeling. Use of (a) immunoglobulin IgG antibody directly and (b) IgG as a primary and a secondary IgG antibody, which is labeled with a biophysical tag that binds to the Fc region. (c) Fab fragments can also be used directly.

The stalk of the Y structure is called the Fc region whose sequence and structure are reasonably constant across a given species of animal. The tips of the Y comprise two Fab regions whose sequence and structure are highly variable and act as a unique binding site for a specific region of a target biomolecule (known as an antigen), with the specific binding site of the antigen called the “epitope.” This makes antibodies particularly useful for specific biomolecule conjugation. Antibodies can also be classed as monoclonal (derived from identical immune cells and therefore binding to a single epitope of a given antigen) or polyclonal (derived from multiple immune cells against one antigen, therefore containing a mixture of antibodies that will potentially target different epitopes of the same antigen).

The antibody–antigen interaction is primarily due to strong van der Waals forces arising from the tight-fitting surface interfaces between the Fab binding pocket and the antigen. Typical affinities are not as high as those of strong covalent interactions, with Kd values of ~10⁻⁷ M being at the high end of the affinity range.
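To give a feel for what these Kd values mean in practice, the following sketch compares the equilibrium occupancy of a binding site for an antibody-like Kd of 10⁻⁷ M with that for the biotin–avidin Kd quoted earlier in this chapter. It assumes simple 1:1 binding with the ligand in large excess, so that the free ligand concentration is approximately the total added; the 10 nM ligand concentration and the function name are hypothetical choices for illustration.

```python
def fraction_bound(ligand_molar, kd_molar):
    """Equilibrium fraction of target sites occupied for 1:1 binding.

    Assumes free ligand concentration ~ total ligand concentration
    (ligand in large excess over target).
    """
    return ligand_molar / (ligand_molar + kd_molar)


LIGAND = 10e-9  # 10 nM, a hypothetical labeling concentration

for name, kd in (("antibody-antigen", 1e-7), ("biotin-avidin", 1e-14)):
    print(f"{name}: Kd = {kd:.0e} M, fraction bound = {fraction_bound(LIGAND, kd):.6f}")
```

Half of the sites are occupied when the ligand concentration equals Kd, so under these assumptions a weaker binder needs a correspondingly higher probe concentration to achieve the same degree of labeling.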

Fluorophores or EM gold labels, for example, can be attached to the Fc region of IgG molecules and to isolated Fab regions that have been truncated from the native IgG structure, to enable specific labeling of biological structures. Secondary labeling can also be employed (see Chapter 3); here a primary antibody binds to its antigen (e.g., a protein on the cell membrane surface of a specific cell type) while a secondary antibody, whose Fc region has a bound label, specifically binds to the Fc region of the primary antibody. The advantage of this method is primarily one of cost, since a secondary antibody will bind the Fc region of all primary antibodies from the same species and so circumvents the need to generate multiple different labeled primary antibodies.

Antibodies are also used significantly in single-molecule manipulation experiments. For example, single-molecule magnetic and optical tweezers experiments on DNA often utilize a label called “digoxigenin (DIG).” DIG is a steroid found exclusively in the flowers and leaves of plants of the Digitalis genus. It is highly toxic to animals and, perhaps as a result of evolution, has highly immunogenic properties (meaning it has a high ability to provoke an immune response, thus provoking production of several specific antibodies to bind to DIG). Antibodies with specificity against DIG (called generally “anti-DIG”) have very high affinity. DIG is often added to one end of a DNA molecule, while a trapped bead that has been coated in anti-DIG molecules can then bind to it to enable single-molecule manipulation of the DNA.

DIG is an example of a class of chemical called “haptens.” These are the most common secondary labeling molecule for immuno-hybridization chemistry, due to their highly immunogenic properties (e.g., biotin is a hapten). DIG is also commonly used in fluorescence in situ hybridization (FISH) assays. In FISH, DIG is normally covalently bound to a specific nucleotide triphosphate probe, and the fluorescently labeled IgG secondary antibody anti-DIG is subsequently used to probe for its location on the chromosome, thus allowing specific DNA sequences, and genes, to be identified following fluorescence microscopy.

Click chemistry is the general term that describes chemical synthesis by joining small-molecule units together both quickly and reliably, in a way that is ideally modular and high yield. It is not a single specific chemical reaction. However, one of the most popular examples of click chemistry is the azide–alkyne Huisgen cycloaddition. This chemical reaction uses copper as a catalyst and results in a highly selective and strong covalent bond formed between azide (triple bonded N—N atoms) and alkyne (triple bonded C—C atoms) chemical groups to form stable 1,2,3-triazoles. This method of chemical conjugation is rapidly becoming popular in part due to its specific use in conjunction with increased development of oligonucleotide labeling.

7.2.5 NUCLEIC ACID OLIGO INSERTS

Short ~10 base pair sequences of nucleotide bases known as oligonucleotides (or just oligos) can be used to label specific sites on a DNA molecule. A DNA sequence can be cut at specific locations by enzymes called “restriction endonucleases,” which enables short sequences of DNA complementary to a specific oligo sequence to be inserted at that location. Incubation with the oligo will then result in binding to the complementary sequence. This is useful since oligos can be modified to be bound to a variety of chemical groups, including biotin, azide, and alkynes, to facilitate conjugation to another biomolecule or structure. Also, oligos can be derivatized with a fluorescent dye label either directly or via, for example, a bound biotin molecule, to enable fluorescence imaging visualization of specific DNA sequence locations.
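The logic of oligo insertion can be sketched at the level of sequence strings. The example below is a toy illustration, not a cloning protocol: it locates a restriction site, splices a target sequence in at the cut position on one strand, and computes the oligo that would hybridize to that target. It uses the EcoRI recognition sequence GAATTC (cut after the first G) as an example enzyme; the DNA and target sequences are made up, and sticky-end compatibility and ligation are deliberately ignored.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq):
    """Sequence of the complementary strand, read 5' to 3'."""
    return seq.translate(COMPLEMENT)[::-1]


def find_sites(dna, site):
    """0-based start indices of every occurrence of a recognition site."""
    positions = []
    start = dna.find(site)
    while start != -1:
        positions.append(start)
        start = dna.find(site, start + 1)
    return positions


ECORI_SITE = "GAATTC"  # EcoRI recognition sequence
ECORI_CUT_OFFSET = 1   # EcoRI cuts after the first G on this strand

dna = "ATGCGAATTCTTAGC"   # hypothetical sequence with one EcoRI site
target = "ACGTTGCAAC"     # hypothetical 10-base insert
oligo = reverse_complement(target)  # labeled oligo that binds the insert

site_start = find_sites(dna, ECORI_SITE)[0]
cut = site_start + ECORI_CUT_OFFSET
modified = dna[:cut] + target + dna[cut:]  # simplification: top strand only

print("cut position:", cut)
print("modified DNA:", modified)
print("oligo (5'->3'):", oligo)
print("oligo binds at index:", modified.find(reverse_complement(oligo)))
```

In a real experiment the oligo would carry a biotin, azide, alkyne, or dye modification; here it is represented only by its base sequence.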

Aptamers are short sequences of either nucleotides or amino acids that bind to a specific region of a target biomolecule. These peptides and RNA- or DNA-based oligonucleotides have a molecular weight that is relatively low at ~8–25 kDa compared to antibodies that are an order of magnitude greater. Most aptamers are unnatural in being chemically synthesized structures, though some natural aptamers do exist, for example, a class of RNA structures known as riboswitches (a riboswitch is an interesting component of some mRNA molecules that can alter the activity of proteins that are involved in manufacturing the mRNA and so regulate their own activity).

Aptamers fold into specific 3D shapes to fit tightly to specific structural motifs for a range of different biomolecules with a very low unbinding rate measured as an equivalent dissociation constant in the pico- to nanomolar range. They operate solely via a structural recognition process, that is, no chemical bonding is involved. This is a similar process to that of an antigen–antibody reaction, and thus aptamers are also referred to as chemical antibodies.

Due to their relatively small size, aptamers offer some advantages over protein-based antibodies. For example, they can penetrate tissues faster. Also, aptamers in general do not evoke a significant immune response in the human body (they are described as nonimmunogenic). They are also relatively stable to heat, in that their tertiary and secondary structures can be denatured at temperatures as high as 95°C, but will then reversibly fold back into their original 3D conformation once the temperature is lowered to ~50°C or less, compared to antibodies that would irreversibly denature. This enables faster chemical reaction rates during incubation stages, for example, when labeling aptamers with fluorophore dye tags.

Aptamers can recognize a wide range of targets including small biomolecules such as ATP, ions, proteins, and sugars, but will also bind specifically to larger length scale biological matter, such as cells and viruses. The standard method of aptamer manufacture is known as systematic evolution of ligands by exponential enrichment (SELEX). It involves repeated binding, selection, and then amplification of aptamers from an initial library of as many as ~10¹⁸ random sequences that, perhaps surprisingly, can home in on an ideal aptamer sequence in a relatively cost-effective manner.
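Two pieces of arithmetic help to show why repeated selection and amplification can search such a large library. The sketch below first computes the length of random nucleotide region whose full sequence space is of order 10^18, and then runs a deliberately crude enrichment model. The starting binder fraction and the per-round enrichment factor are hypothetical numbers chosen for illustration, not measured values, and the function names are introduced here.

```python
import math


def random_region_length(library_size, alphabet=4):
    """Length N such that alphabet**N equals library_size."""
    return math.log(library_size) / math.log(alphabet)


def enrich(fraction, factor):
    """One selection round in which binders are retained `factor` times
    more efficiently than non-binders (toy model)."""
    return factor * fraction / (factor * fraction + (1.0 - fraction))


def rounds_to_reach(start_fraction, factor, target_fraction):
    """Number of selection rounds before binders exceed target_fraction."""
    rounds = 0
    fraction = start_fraction
    while fraction < target_fraction:
        fraction = enrich(fraction, factor)
        rounds += 1
    return rounds


print(f"4**N = 1e18 for N = {random_region_length(1e18):.1f} nucleotides")

# Hypothetical: 1 binder per 1e12 sequences, 100-fold enrichment per round.
print("rounds needed:", rounds_to_reach(1e-12, 100.0, 0.9))
```

In this toy model the odds of picking a binder are multiplied by the enrichment factor in every round, so even a very rare sequence comes to dominate the pool after a modest number of rounds.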

Aptamers have significant potential for use as drugs, for example, to block the activity of a range of biomolecules. Also, they have been used in biophysical applications as markers of a range of biomolecules. For example, although protein metabolites can be labeled using fluorescent proteins, this is not true for nonprotein biomolecules. However, aptamers can enable such biomolecules to be labeled, for example, if chemically tagged with a fluorophore they can report on the spatial localization of ATP accurately in live cells using fluorescence microscopy techniques, which is difficult to quantify using other methods.

KEY BIOLOGICAL APPLICATIONS: BIOCONJUGATION TECHNIQUES

Attaching biophysical probes; Molecular separation; Molecular manipulation.

Technical advances in light microscopy have now made it possible to monitor whole, functional organisms (see Chapter 3). Biophysics has in this sense gone full circle from its historical origins, which lay essentially in the physiological dissection of relatively large masses of biological tissue. A key difference now, however, is one of enormously enhanced spatial and temporal resolution. Also, researchers now benefit greatly from a significant knowledge of underlying molecular biochemistry and genetics. Much progress has been made in biophysics through the experimental use of carefully selected model organisms that have ideal properties for light microscopy in particular; namely, they are thin and reasonably optically transparent. However, model organisms are also invaluable in offering the researcher a tractable biological system that is already well understood at a level of biochemistry and genetics.

7.3.1 MODEL BACTERIA AND BACTERIOPHAGES

There are a few select bacterial species that have emerged as model organisms. Escherichia coli (E. coli) is the best known. E. coli is a model Gram-negative organism (see Chapter 2) whose genome (i.e., total collection of genes in each cell) comprises only ~4000 genes. There are several genetic variants of E. coli; the spontaneous mutation rate in E. coli is ~10⁻⁹ per base pair per cell generation, and some of these mutations may generate a selective advantage for that individual cell and so be propagated to subsequent generations through natural selection (see Chapter 2). However, almost all of the variants in use in modern microbiology research derive from only four key cell sources, called K-12, B, C, and W. Of these, K-12 is the most widely used; it was originally isolated from the feces of a patient recovering from diphtheria in Stanford University Hospital in 1922.
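The quoted mutation rate can be turned into an expected number of new mutations per cell per generation. The sketch below assumes an E. coli genome size of about 4.6 million base pairs, a commonly quoted figure that is not stated in this chapter, and treats mutations as independent rare events (Poisson statistics). The culture size is a hypothetical example.

```python
import math

MUTATION_RATE = 1e-9     # per base pair per cell generation (from the text)
GENOME_SIZE_BP = 4.6e6   # assumed approximate E. coli genome size


def expected_mutations(rate_per_bp, genome_bp):
    """Mean number of new mutations per genome per generation."""
    return rate_per_bp * genome_bp


def prob_at_least_one(rate_per_bp, genome_bp):
    """Poisson probability that a cell acquires one or more mutations."""
    return -math.expm1(-rate_per_bp * genome_bp)


mean = expected_mutations(MUTATION_RATE, GENOME_SIZE_BP)
p = prob_at_least_one(MUTATION_RATE, GENOME_SIZE_BP)
cells = 1e9  # hypothetical number of cells in a culture

print(f"mean mutations per cell per generation: {mean:.4f}")
print(f"probability of at least one mutation:   {p:.5f}")
print(f"new mutations per generation in {cells:.0e} cells: {mean * cells:.1e}")
```

Under these assumptions only about one cell in two hundred acquires a new mutation in a given generation, but a dense culture still generates millions of new mutations per generation on which selection can act.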

Gram-positive bacteria lack a second outer cell membrane that Gram-negative bacteria possess. As a result, many exhibit different forms of biophysical and biochemical interactions with the outside world, necessitating a model Gram-positive bacterium for their study. The most popular model Gram-positive bacterium is currently Bacillus subtilis, which is a soil-dwelling bacterium. It undergoes an asymmetrical spore-forming process as part of its normal cell cycle, and this has been used as a mimic for biochemically triggered cell shape changes such as those that occur in higher organisms during the development of complex tissues.

There are many viruses known to infect bacteria, known as bacteriophages. Although, by the definition used in this book, viruses are not living as such, they are excellent model systems for studying genes. This is because they do not possess many genes (typically only a few tens of native genes), but rather hijack the genetic machinery of their host cell; if this host cell itself is a model organism such as E. coli, then this can offer significant insights into methods of gene operation/regulation and repair, for example. The most common model bacterium-infecting virus is called “bacteriophage lambda” (or just lambda phage), which infects E. coli. This has been used for many genetics investigations, and in fact since its DNA genetic code of ~49,000 nucleotide base pairs is so well characterized, methods for its reliable purification have been developed, and so there exists a readily available source of this DNA (called λ DNA), which is used in many in vitro investigations, including single-molecule experiments of optical and magnetic tweezers (see Chapter 6). Another model bacterium-infecting virus is bacteriophage Mu (also called Mu phage), which has generated significant insight into relatively large transposable sections of DNA called “transposons” that undergo a natural splicing out from their original location in the genetic code and are relocated en masse to a different location.
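One reason λ DNA suits optical and magnetic tweezers experiments is its length when stretched out. The sketch below estimates the contour length from the ~49,000 base pairs quoted above using the standard B-form DNA rise of 0.34 nm per base pair. The persistence length of 50 nm used for comparison is an assumed typical value for double-stranded DNA under physiological salt conditions, and the function name is hypothetical.

```python
RISE_PER_BP_NM = 0.34         # axial rise per base pair for B-form DNA
PERSISTENCE_LENGTH_NM = 50.0  # assumed typical value for dsDNA


def contour_length_um(n_base_pairs, rise_nm=RISE_PER_BP_NM):
    """End-to-end length of fully extended (not overstretched) dsDNA."""
    return n_base_pairs * rise_nm / 1000.0


LAMBDA_BP = 49_000  # approximate size quoted in the text

length_um = contour_length_um(LAMBDA_BP)
segments = length_um * 1000.0 / PERSISTENCE_LENGTH_NM

print(f"lambda DNA contour length: {length_um:.1f} um")
print(f"about {segments:.0f} persistence lengths long")
```

A contour length of roughly 17 μm is many times larger than the optical resolution limit, so the end-to-end extension of a single tethered molecule can be followed directly by light microscopy.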

KEY POINT 7.2

“Microbiology” is the study of living organisms whose length scale is ~10⁻⁶ m, which includes mainly bacteria but also viruses that infect bacteria as well as eukaryotic cells such as yeast. These cells are normally classed as being “unicellular,” though in fact for much of their lifetime, they exist in colonies with either cells of their own type or with different species. However, since microbiology research can perform experiments on single cells in a highly controlled way without the added complication of a multicellular heterogeneous tissue environment, this has significantly increased our knowledge of biochemistry, genetics, cell biology, and even developmental biology in the life sciences in general.

7.3.2 MODEL UNICELLULAR EUKARYOTES OR “SIMPLE” MULTICELLULAR EUKARYOTES

Unlike prokaryotes, eukaryotes possess a distinct nucleus, as well as other subcellular organelles. This added compartmentalization of biological function can complicate experimental investigations (though note that even prokaryotes have distinct areas of local architecture in their cells so should not be perceived as a simple “living test tube”). Model eukaryotes for the study of cellular effects possess relatively few genes and also are ideally easy to cultivate in the laboratory with a reasonably short cell division time allowing cell cultures to be prepared quickly. In this regard, three organisms have emerged as model organisms. One is the single-celled eukaryotic protozoan parasite of the Trypanosoma genus that causes African sleeping sickness, specifically a species called Trypanosoma brucei, which has emerged as a model cell to study the synthesis of lipids. A more widely used eukaryote model cell organism is yeast, especially the species called Saccharomyces cerevisiae, also known as budding yeast or baker’s yeast. This has been used in multiple light microscopy investigations, for example, involving placing a fluorescent tag on specific proteins in the cell to perform superresolution microscopy (see Chapter 4). The third very popular model unicellular eukaryote is Chlamydomonas reinhardtii (C. reinhardtii). This is a green alga and has been used extensively to study photosynthesis and cell motility.

Dictyostelium discoideum is a more complex multicellular eukaryote, also known as slime mould. It has been used as a model organism in studies involving cell-to-cell communication and cell differentiation (i.e., how eukaryote cells in multicellular organisms commit to being different specific cell types). It has also been used to investigate the effects of programmed cell death, or apoptosis (see the following text).

More complex eukaryotic cells are those that would normally reside in tissues, and many biomedical investigations benefit from model human cells to perform investigations into human disease. The main problem with using more complex cells from animals is that they normally undergo the natural process of programmed cell death, called apoptosis, as part of their cell cycle. This means that it is impossible to study such cells over multiple generations and also technically challenging to grow a cell culture sample. To overcome this, immortalized cells are used, which have been modified to overcome apoptosis.

An immortal cell derived from a multicellular organism is one that under normal circumstances would not proliferate indefinitely but, due to being genetically modified, is no longer limited by the Hayflick limit. This is a limit to future cell division set either by DNA damage or by shortening of cellular structures called “telomeres,” which are repeating DNA sequences that cap the end of chromosomes (see Chapter 2). Telomeres normally get shorter with each subsequent cell division such that at a critical telomere length cell death is triggered by the complex biochemical and cellular process of apoptosis. However, immortal cells can continue undergoing cell division and be grown in culture under in vitro conditions for prolonged periods. This makes them invaluable for studying a variety of cell processes in complex animal cells, especially human cells.

Cancer cells are natural examples of immortal cells, but immortal cells can also be prepared using biochemical methods. Common immortalized cell lines include the Chinese hamster ovary, human embryonic kidney, Jurkat (T lymphocyte, a cell type used in the immune response), and 3T3 (mouse fibroblasts from connective tissue) cells. However, the oldest and most commonly utilized human cell strain is the HeLa cell. These are epithelial cervical cells that were originally cultured from a cancerous cervical tumor of a patient named Henrietta Lacks in 1951. She ultimately died as a result of this cancer, but left a substantial scientific research legacy in these cells. Although there are potential limitations to their use, in that they may have undergone several mutations from the original normal cell source, they are still invaluable to biomedical research utilizing biophysical techniques, especially those that use fluorescence microscopy.

Traditionally, plants have received less historical interest as the focus of biophysical investigations compared to animal studies, due in part to the lower relevance to human biomedicine. However, global issues relating to food and energy (see Chapter 9) have focused recent research efforts in this direction in particular. Many biophysical techniques have been applied to monitoring the development of complex plant tissues, especially involving advanced light microscopy techniques such as light sheet microscopy (see Chapter 4), which has been used to study the development of plant roots from the level of a few cells up to complex multicellular tissue.

The most popular model plant organism is Arabidopsis thaliana, also known commonly as mouse ear cress. It is a relatively small plant with a short generation time and thus easy to cultivate and has been characterized extensively genetically and biochemically. It was the first plant to have its full genome sequenced.

Two key model animal organisms for biophysics techniques are those that are optimized for in vivo light microscopy investigations: the zebrafish Danio rerio and the nematode roundworm Caenorhabditis elegans. The C. elegans worm is ~1 mm in length and ~80 μm in diameter and lives naturally in soil. It is the simplest eukaryotic multicellular organism known, possessing only ~1000 cells in its adult form. It also breeds relatively easily and fast, taking ~3 days to reach maturation, which allows experiments to be performed reasonably quickly; it is genetically very well characterized and has many tissue systems that have generic similarities to those of other more complex organisms, including a complex network of nerves, blood vessels and heart, and a gut. D. rerio is more complex in having ~10⁶ cells in total in the adult form, with a length of a few cm and a thickness of several hundred microns, and takes more like ~3 months to reach maturation.

These characteristics set more technical challenges on the use of D. rerio compared to C. elegans; however, it has the significant advantage of possessing a spinal cord, which C. elegans lacks, making it the model organism of choice for investigating specifically vertebrate features, though C. elegans has been used in particular for studies of the nervous system. These investigations were first pioneered by the Nobel Laureate Sydney Brenner in the 1960s, but later involved the use of advanced biophysics optical imaging and stimulation methods using an invaluable technique of optogenetics, which can use light to control the expression of genes (see later in this chapter). At the time of writing, C. elegans is the only organism for which the connectome (the wiring diagram of all nerve cells in an organism) has been determined.

The relative optical transparency of these organisms allows standard bright-field light microscopy to be performed, a caveat being that adult zebrafish grow pigmented stripes on their skin, hence their name, which can impair the passage of visible light photons. However, mutated variants of zebrafish have now been produced in which the adult is colorless.

Among invertebrate organisms, that is, those lacking a backbone, Drosophila melanogaster (the common fruit fly) is the best studied. Fruit flies are relatively easy to cultivate in the laboratory and breed rapidly with relatively short life cycles. They also possess relatively few chromosomes and so have formed the basis of several genetics studies, with light microscopy techniques used to identify positions of specifically labeled genes on isolated chromosomes.

For studying more complex biological processes in animals, rodents, in particular mice, have been an invaluable model organism. Mice have been used in several biophysical investigations involving deep tissue imaging in particular. Biological questions involving practical human biomedicine issues, for example, the development of new drugs and/or investigating specific effects of human disease that affects multiple cell types and/or multiply connected cells in tissues, ultimately involve larger animals of greater similarity to humans, culminating in the use of primates. The use of primates in scientific research is clearly a challenging issue for many, though such investigations require significant oversight before being granted approval from ethical review committees that are independent from the researchers performing the investigations.

KEY BIOLOGICAL APPLICATIONS: MODEL ORGANISMS

Multiple biophysical investigations requiring tractable, well-characterized organism systems to study a range of biological processes.

KEY POINT 7.3

A “model organism,” in terms of the requirements for biologists, is selected on its being genetically and phenotypically/behaviorally very well characterized from previous experimental studies and also possesses biological features that at some level are “generic” in allowing us to gain insight into a biological process common to many organisms (especially true for biological processes in humans, since these give us potential biomedical insight). For the biophysicist, these organisms must also satisfy an essential condition of being experimentally very tractable. For animal tissue research, this includes the use of thin, optically transparent organisms for light microscopy. One must always bear in mind that some of the results from model organisms may differ in important ways from other specific organisms that possess equivalent biological processes under study.

The ability to sequence and then controllably modify the DNA genetic code of cells has complemented experimental biophysical techniques enormously. These genetic technologies enable controlled expression of specific proteins for purification and subsequent in vitro experimentation as well as enable the study of the function of specific genes by modifying them through controlled mutation or deleting them entirely, such that the biological function might be characterized using a range of biophysical tools discussed in the previous experimental chapters of this book. One of the most beneficial aspects of this modern molecular biology technology has been the ability to engineer specific biophysical labels at the level of the genetic code, through incorporation either of label binding sites or of fluorescent protein sequences directly.

Molecular cloning describes a suite of tools using a combination of genetic engineering, cell and molecular biology, and biochemistry, to generate modified DNA, to enable it to be replicated within a host organism (“cloning” simply refers to generating a population of cells all containing the same DNA genetic code). The modified DNA may be derived from the same or different species as the host organism.

In essence, for cloning of genomic DNA (i.e., DNA obtained from a cell’s nucleus), the source DNA, which is to be modified and ultimately cloned, is first isolated and purified from its originator species. Any tissue/cell source can in principle be used for this provided the DNA is mostly intact. This DNA is purified (using a phenol extraction), and the number of purified DNA molecules present is amplified using polymerase chain reaction (PCR) (see Chapter 2). To ensure efficient PCR, primers need to be added to the reaction (short sequences of 10–20 nucleotides that are complementary to the ends of the target DNA sequence and act as binding sites for initiating DNA replication by the enzyme DNA polymerase). PCR can also be used on RNA sample sources, but using a modified technique, the reverse transcription polymerase chain reaction, which first converts RNA back into complementary DNA (cDNA) that is then amplified using conventional PCR. A similar process can also be used on synthetic DNA, that is, artificial DNA sequences not from a native cell or tissue source.
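The amplification step can be illustrated with a short numerical sketch. It assumes an idealized reaction in which the number of target copies is multiplied by (1 + efficiency) in every thermal cycle, with no reagent depletion or plateau; the function name pcr_copies and the efficiency parameter are illustrative choices and are not taken from any specific protocol.

```python
def pcr_copies(initial_copies, cycles, efficiency=1.0):
    """Idealized number of target DNA copies after a number of PCR cycles.

    efficiency = 1.0 means perfect doubling in every cycle (an assumption);
    real reactions fall below this and eventually reach a plateau.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must lie between 0 and 1")
    return initial_copies * (1.0 + efficiency) ** cycles


if __name__ == "__main__":
    for n_cycles in (10, 20, 30):
        ideal = pcr_copies(1, n_cycles)
        reduced = pcr_copies(1, n_cycles, efficiency=0.8)
        print(f"{n_cycles} cycles: {ideal:.3g} copies (ideal), "
              f"{reduced:.3g} copies (80% efficiency)")
```

Even under this simple model, the exponential dependence on cycle number shows why a few tens of cycles are sufficient to turn a handful of template molecules into a workable quantity of DNA.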

The amplified, purified DNA is then cut into fragments by restriction endonuclease enzymes, which cleave the DNA at specific sequence locations. At this stage, additional small segments of DNA from other sources may be added that are designed to bind to specific cut ends of the DNA fragments. These modified fragments are then combined with vector DNA. In molecular biology, a vector is a DNA molecule that is used to carry modified (often foreign) DNA into a host cell, where it can ultimately be replicated and/or the genes it carries expressed. Vectors are generally variants of either bacterial plasmids or viruses (see Chapter 2). Such a vector that contains the modified DNA is known as recombinant DNA. Vectors in general are designed to have multiple specific sequence restriction sites that, once cut, match the corresponding fragment ends (called “sticky ends”) of the DNA generated by the cutting action of the restriction endonucleases. Another enzyme called “DNA ligase” catalyzes the joining of the sticky ends into the vector DNA at the appropriate restriction site in the vector, in a process called ligation. It is possible for other ligation products to form at this stage in addition to the desired recombinant DNA, but these can be selected against at a later stage after the recombinant DNA has been inserted in the host cell.
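The sequence-specific cutting described above can be sketched in a few lines of code. The example below is a simplified illustration only: it treats a single (top) strand of linear DNA and uses, as an assumed example, the well-known enzyme EcoRI, which recognizes GAATTC and cuts after the first G to leave an AATT overhang. The function name digest and its parameters are hypothetical.

```python
def digest(sequence, site="GAATTC", cut_offset=1):
    """Cut a linear, top-strand DNA sequence at every occurrence of `site`.

    cut_offset is the number of bases into the site at which the top strand
    is cleaved (1 for EcoRI, i.e., G^AATTC). Only the top strand is modeled,
    so the complementary-strand overhang is implied rather than computed.
    """
    sequence = sequence.upper()
    fragments = []
    start = 0
    position = sequence.find(site)
    while position != -1:
        cut = position + cut_offset
        fragments.append(sequence[start:cut])
        start = cut
        position = sequence.find(site, position + 1)
    fragments.append(sequence[start:])
    return fragments


if __name__ == "__main__":
    insert = "AAGAATTCTTGAATTCGG"
    for fragment in digest(insert):
        print(fragment)
```

Each internal fragment begins with AATT, the single-stranded sticky end that can base pair with a vector cut by the same enzyme before ligation.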

KEY POINT 7.4

The major types of vectors are viruses and plasmids, of which the latter is the most common. Also, hybrid vectors exist such as a “cosmid” constructed from a lambda phage and a plasmid, and artificial chromosomes that are relatively large modified chromosome segments of DNA inserted into a plasmid. All vectors possess an origin of replication, multiple restriction sites (also known as multiple cloning sites), and one or more selectable marker genes.

Insertion of the recombinant DNA into the target host cell is done through a process called either “transformation” for bacterial cells, “transfection” for eukaryotic cells, or, if a virus is used as a vector, “transduction” (the term “transformation” in the context of animal cells actually refers to changing to a cancerous state, so is avoided here). The recombinant DNA needs to pass through the cell membrane barrier, and this can be achieved using both natural and artificial means. For natural transformation to occur, the cell must be in a specific physiological state, termed competent, which requires the expression of typically tens of different proteins in bacteria to allow the cell to take up and incorporate external DNA from solution (e.g., filamentous pili structures of the outer membrane, as well as protein complexes in the cell membrane to pump DNA from the outside to the inside). This natural phenomenon in bacteria occurs in a process called “horizontal gene transfer,” which results in genetic diversity through transfer of plasmid DNA between different cells, and is, for example, a mechanism for propagating antibiotic resistance in a cell population. It may also have evolved as a mechanism to assist in the repair of damaged DNA, that is, to enable the internalization of nondamaged DNA that can then be used as a template from which to repair native damaged DNA.

Artificial methods can improve the rate of transformation. These can include treating cells first with enzymes to strip away outer cell walls, adding divalent metal ions such as magnesium or calcium to increase binding of DNA (which has a net negative charge in solution due to the presence of the backbone of negatively charged phosphate groups), or increasing cell membrane fluidity. These also include methods that involve a combination of cold and heat shocking cells to increase internalization of recombinant DNA by undetermined mechanisms, as well as using ultrasound (sonication) to increase the collision frequency of recombinant DNA with host cells. The most effective method, however, is electroporation. This involves placing the aqueous suspension of host cells and recombinant DNA into an electrostatic field of strength 10–20 kV cm−1 for a few milliseconds, which increases the cell membrane permeability dramatically by creating transient holes in the membrane through which plasmid DNA may enter.
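To get a feel for why fields of this magnitude are needed, the following sketch estimates the voltage induced across the membrane using the steady-state Schwan relation for a spherical cell, ΔV = 1.5 E r at the poles facing the electrodes. The spherical geometry and the cell radius of 0.5 μm (roughly bacterial in scale) are assumptions made for illustration, as is the function name.

```python
def induced_membrane_voltage(field_kv_per_cm, cell_radius_um):
    """Peak induced transmembrane voltage in volts for a spherical cell,
    from the steady-state Schwan relation dV = 1.5 * E * r.

    The spherical-cell geometry is an idealization; rod-shaped bacteria
    and the finite membrane charging time are ignored.
    """
    field_v_per_m = field_kv_per_cm * 1e5   # 1 kV/cm = 1e5 V/m
    radius_m = cell_radius_um * 1e-6        # 1 um = 1e-6 m
    return 1.5 * field_v_per_m * radius_m


if __name__ == "__main__":
    assumed_radius_um = 0.5  # assumed, order-of-magnitude bacterial radius
    for field in (10.0, 20.0):
        dv = induced_membrane_voltage(field, assumed_radius_um)
        print(f"E = {field} kV/cm gives dV of about {dv:.2f} V")
```

Because the induced voltage scales with cell radius, small cells such as bacteria require proportionally stronger fields than larger eukaryotic cells to reach a comparable transmembrane voltage.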

Transfection can be accomplished using an extensive range of techniques, some of which are similar to those used for transformation, for example, the use of electroporation. Other more involved methods have been optimized specifically for host animal cell transfection however. These include biochemical-based methods such as packaging recombinant DNA into modified liposomes that then empty their contents into a cell upon impact on, and merging with, the cell membrane. A related method is protoplast fusion that involves chemically or enzymatically stripping away the cell wall from a bacterial cell to enable it to fuse in suspension with a host animal cell. This delivers the vector that may be inside the bacterial cell, but with the disadvantage of delivery of the entire bacterial cell contents, which may potentially be detrimental to the host cell.

But there are also several biophysical techniques for transfection. These include sonoporation (using ultrasound to generate transient pores in cell membranes), cell squeezing (gently massaging cells through narrow flow channels to increase the membrane permeability), impalefection (introducing DNA bound to a surface of a nanofiber by stabbing the cell), gene guns (similar to impalefection but using DNA bound to nanoparticles that are fired into the host cell), and magnet-assisted transfection or magnetofection (similar to the gene gun approach, though here DNA is bound to a magnetic nanoparticle with an external B-field used to force the particles into the host cells).

The biophysical transfection tool with the most finesse involves optical transfection, also known as photoporation. Here, a laser beam is controllably focused onto the cell membrane generating localized heating sufficient to form a pore in the cell membrane and allow recombinant DNA outside the cell to enter by diffusion. Single-photon absorption processes in the lipid bilayer can be used here, centered on short wavelength visible light lasers; however, better spatial precision is enabled by using a high-power near-infrared (IR) femtosecond pulsed laser that relies on two-photon absorption in the cell membrane, resulting in smaller pores and less potential cell damage.

Transfection using viruses (i.e., viral transduction) is valuable because viruses can transfer genes into a wide variety of human cells in particular with very high transfer rates. However, this method can also be used for other cell types, including bacteria. Here, the recombinant DNA is packaged into an empty virus capsid protein coat (see Chapter 2). The virus then performs its normal roles of attaching to the host cell and then injecting the DNA into the cell very efficiently, compared to the other transfection/transformation methods.

The process of inserting recombinant DNA into a host cell normally has low efficiency, with only a small proportion of host cells successfully taking up the external DNA. This presents a technical challenge in knowing which cells have done so, since these are the ones that need to be selectively cultivated from a population. This selection is achieved by engineering one or more selectable markers into the vector. A selectable marker is usually a gene conferring resistance against a specific antibiotic that would otherwise be lethal to the cell. For example, in bacteria, there are several resistance genes available that are effective against broad-spectrum antibiotics such as ampicillin, chloramphenicol, and kanamycin. Those host cells that have successfully taken up a plasmid vector during transformation will survive culturing conditions that include the appropriate antibiotic, whereas those that have not taken up the plasmid vector will die. Using host animal cells, such as human cells, involves a similar strategy to engineer a stable transfection such that the recombinant DNA is incorporated ultimately into the genomic DNA, using a marker gene that is encoded into the genomic DNA conferring resistance against the antibiotic Geneticin. Unstable or transient transfection does not utilize marker genes on the host cell genome but instead retains the recombinant DNA as plasmids. These ultimately become diluted after multiple cell generations and so the recombinant DNA is lost.
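The dilution of plasmids during transient transfection can be illustrated with a minimal model. It assumes, purely for illustration, that the plasmids do not replicate in the host and are shared equally between daughter cells at each division, so that the mean copy number per cell halves every generation. The function names and the starting copy number are hypothetical.

```python
def mean_copies_per_cell(initial_copies, generations):
    """Mean plasmid copies per cell after a number of cell divisions,
    assuming no plasmid replication and equal partitioning (assumptions)."""
    return initial_copies / 2 ** generations


def generations_until_lost(initial_copies):
    """Smallest number of generations after which the mean copy number
    per cell falls below one."""
    generations = 0
    while mean_copies_per_cell(initial_copies, generations) >= 1:
        generations += 1
    return generations


if __name__ == "__main__":
    start = 1000  # assumed initial plasmid copies per transfected cell
    print("generations until fewer than one copy per cell:",
          generations_until_lost(start))
```

In this picture the number of generations for which expression persists grows only logarithmically with the initial plasmid load, which is why stable transfection is preferred when long-term expression is needed.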

7.4.2 SITE-DIRECTED MUTAGENESIS

Site-directed mutagenesis (SDM) is a molecular biology tool that uses the techniques of molecular cloning described earlier to make controlled, spatially localized mutations to a DNA sequence, at the level of just a few, or sometimes one, nucleotide base pairs. The types of mutations include a single base change (point mutation), deletion or insertion, as well as multiple base pair changes. The basic method of SDM uses a short DNA primer sequence that contains the desired mutations and is otherwise complementary to the template DNA around the mutation site, and can therefore displace the native DNA by hybridizing with the template through the formation of stable Watson–Crick base pairs. This recombinant DNA is then cloned using the same procedure as described in Section 7.4.
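The idea of a primer that carries the mutation but otherwise matches the template can be made concrete with a short sketch. This is a toy illustration, not a primer design tool: it ignores melting temperature, GC content, and secondary structure, and the function names, flank length, and template sequence are all hypothetical.

```python
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def reverse_complement(sequence):
    """Reverse complement of a DNA sequence (Watson-Crick pairing)."""
    return "".join(COMPLEMENT[base] for base in reversed(sequence.upper()))


def mutagenic_primer(template, position, new_base, flank=10):
    """Primer-length stretch of the coding strand with one base substituted.

    position is a 0-based index into the template; flank is the number of
    unchanged bases kept on each side of the mutation (an arbitrary choice).
    """
    template = template.upper()
    new_base = new_base.upper()
    if new_base not in COMPLEMENT:
        raise ValueError("new_base must be one of A, C, G, T")
    if template[position] == new_base:
        raise ValueError("new base is identical to the template base")
    mutated = template[:position] + new_base + template[position + 1:]
    start = max(0, position - flank)
    end = min(len(template), position + flank + 1)
    return mutated[start:end]


def mismatches(primer, template_window):
    """Number of positions at which primer and template window differ."""
    return sum(a != b for a, b in zip(primer, template_window))


if __name__ == "__main__":
    template = "ATGGCTAGCAAAGGAGAAGAACTTTTCACT"  # made-up sequence
    forward = mutagenic_primer(template, 15, "C", flank=8)
    print("forward primer:", forward)
    print("reverse primer:", reverse_complement(forward))
```

A single mismatch flanked by several matched bases on each side still allows stable hybridization, which is the property that SDM relies on.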

SDM has been used in particular to generate specific cysteine point mutations. These have been applied for bioconjugation of proteins as already discussed in the chapter and also for a technique called cysteine scanning (or cys-scanning) mutagenesis. In cys-scanning mutagenesis, multiple point mutations are made to generate several foreign cysteine sites, typically in pairs. The purpose here is that if a pair of such nonnative cysteine amino acids is biochemically detected as forming a disulphide bond in the resultant protein, then this indicates that the native residue sites that were mutated must be within ~0.2 nm distance. In other words, it enables 3D mapping of the location of different key residues in a protein. This was used, for example, in determining key residues used in the rotation of the F1Fo-ATP synthase that generates the universal cellular fuel of ATP (see Chapter 2).

A similar SDM technique is that of alanine scanning. Here, the DNA sequence is point mutated to replace specific amino acid residues in a protein with the amino acid alanine. Alanine consists of just a methyl (—CH3) substituent group and so exhibits relatively little steric hindrance effects, as well as minimal chemical reactivity. Substituting individual native amino acid residues with alanine, and then performing a function test on that protein, can generate insight into the importance of specific amino acid side groups on the protein’s biological function.
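An alanine scan amounts to generating one variant of the protein for each non-alanine position. The sketch below enumerates these variants at the level of the amino acid sequence, using single-letter codes; the function name and the short peptide are made up for illustration, and in practice each variant would be produced by mutating the corresponding codon in the DNA.

```python
def alanine_scan(protein_sequence):
    """Return (label, variant) pairs for every single-residue alanine
    substitution; positions that are already alanine are skipped.

    Labels follow the common convention <original residue><position>A,
    with positions counted from 1.
    """
    sequence = protein_sequence.upper()
    variants = []
    for index, residue in enumerate(sequence):
        if residue == "A":
            continue
        label = f"{residue}{index + 1}A"
        variant = sequence[:index] + "A" + sequence[index + 1:]
        variants.append((label, variant))
    return variants


if __name__ == "__main__":
    for label, variant in alanine_scan("MKTAYIAK"):  # made-up peptide
        print(label, variant)
```

Each variant would then be subjected to the same function test, so that a loss of function can be attributed to the side group removed at that position.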

7.4.3 CONTROLLING GENE EXPRESSION

There are several molecular biology tools that allow control of the level of protein expression from a gene. The ultimate control is to delete the entire gene from the genome of a specific population of cells under investigation. These deletion mutants, also known as gene knockouts, are often invaluable in determining the biological function of a given gene, since the mutated cells can be subjected to a range of functionality tests and compared against the native cell (referred to as the wild type).

A more finely tuned, reversible method to modify gene expression is to use RNA silencing. RNA silencing is a natural and ubiquitous phenomenon in all eukaryote cells in which the expression of one or more genes is downregulated (which in molecular biology speak means “lowered”) or turned off entirely by the action of a small RNA molecule whose sequence is complementary to a region of an mRNA molecule (which would ultimately be translated to a specific peptide or protein). RNA silencing can be adapted by generating synthetic small RNA sequences to specifically and controllably regulate gene expression. Most known RNA silencing effects operate through such RNA interference, using either microRNA or similar small interfering RNA molecules, which operate via subtly different mechanisms but which both ultimately result in the degradation of a targeted mRNA molecule.
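The requirement that the small RNA be complementary to a region of the target mRNA can be expressed as a simple sequence operation. The sketch below computes the antisense (reverse complement) RNA for a chosen mRNA segment; it illustrates base pairing only and says nothing about the design rules used for real small interfering RNA. The function names and sequences are hypothetical.

```python
RNA_COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}


def antisense_rna(mrna_segment):
    """RNA strand that base pairs with the given mRNA segment.

    Both sequences are written 5' to 3', so the complement is reversed.
    """
    sequence = mrna_segment.upper()
    return "".join(RNA_COMPLEMENT[base] for base in reversed(sequence))


def is_complementary(small_rna, mrna_segment):
    """True if small_rna pairs perfectly with mrna_segment."""
    return small_rna.upper() == antisense_rna(mrna_segment)


if __name__ == "__main__":
    target = "AUGGCUAGCAAAGGAGAAGAA"  # made-up mRNA region
    guide = antisense_rna(target)
    print("target mRNA region:", target)
    print("antisense RNA:     ", guide)
    print("perfect pairing:   ", is_complementary(guide, target))
```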

Gene expression in prokaryotes can also be silenced using a recently developed technique that utilizes clustered regularly interspaced short palindromic repeats (CRISPR, pronounced “crisper,” Jinek et al., 2012). CRISPR-associated genes naturally express proteins whose biological role is to catalyze the fragmentation of external foreign DNA and insert the fragments into these repeating CRISPR sequences on the host cell genome. When these small CRISPR DNA inserts are transcribed into RNA, they silence expression of the external DNA; this is a remarkable bacterial immune response against invading pathogens such as viruses. However, CRISPR-based tools have also been shown to work in several species that are used as model organisms, including C. elegans and zebrafish, and can also be effective in human cells as a gene silencing tool. CRISPR has enormous potential for revolutionizing the process of gene editing.

Transcription activator-like effector nucleases (TALENs) can also be used to suppress expression from specific genes. TALENs are enzymes that can be encoded onto a plasmid vector in a host cell. These can bind to a specific sequence of DNA and catalyze cutting of the DNA at that point. The cell has complex enzyme systems to repair such a cut DNA molecule; however, the repaired DNA is often not a perfect replica of the original, which can result in a nonfunctional protein being expressed from this repaired DNA. Thus, although gene expression remains, no functional protein results.

RNA silencing can also be used to upregulate (i.e., “increase”) gene expression, for example, by silencing a gene that expresses a transcription factor (see Chapter 2) that would normally repress the expression of another gene. Another method to increase gene expression is concatemerization of genes, that is, generating multiple sequential copies under control of the same promoter (see Chapter 2).

Expression of genes in plasmids, especially those in bacteria, can be controlled through inducer chemicals. These chemicals affect the ability of a transcription factor to bind to a specific promoter of an operon. The operon is a cluster of genes on the same section of the chromosome that are all under control of the same promoter, all of which get transcribed and translated in the same continuous gene expression burst (see Chapter 2). The short nucleotide base pair sequence of the promoter on the DNA acts as an initial binding site for RNA polymerase and determines where transcription of an mRNA sequence from the DNA begins. Insight into the operation of this system was made originally using studies of the bacterial lac operon, and this system is also used today to control gene expression of recombinant DNA in plasmids.

Although some transcription factors act to recruit the RNA polymerase, and so result in upregulation, most act as repressors by binding at or near the promoter in a way that inhibits binding of RNA polymerase, as is the case in the lac operon. The lac operon consists of three genes that express enzymes involved in the internalization into the cell and metabolism of the disaccharide lactose into the monosaccharides glucose and galactose. Decreases in the cell’s concentration of lactose result in increased binding of the LacI repressor protein to the operator region next to the promoter. LacI is generated from the lacI gene, which is by default normally switched “on” (note that the names of genes are conventionally written in italics starting with a lowercase letter, while the corresponding protein, which is ultimately generated from that gene following transcription and translation, is written in non-italics using the same word but with the first letter in uppercase). This binding prevents operon gene expression. This system is also regulated in the opposite direction by a protein called CAP, whose binding in the promoter region decreases as the cellular glucose concentration increases. Thus, there is negative feedback between gene expression and the products of gene expression.

The nonnative chemical isopropyl-β-D-thio-galactoside (IPTG) binds to LacI and in doing so reduces the LacI affinity to the operator region, thus causing the operon genes to be expressed. This effect is used in genetic studies involving controllable gene expression in bacteria. Here, a gene under investigation that is to be expressed is fused downstream of the lac promoter region of the lac operon and inserted into a plasmid vector using the molecular cloning methods described earlier in this chapter. These plasmids are also replicated during normal cell growth and division and so get passed on to subsequent cell generations.

If IPTG is added to the growth media, it will be taken up by the cells, and the repressing effects of LacI will be inactivated; thus, the protein of interest will start to be made by the cells, often at levels far above normal wild type levels, as it is difficult to prevent a large number of plasmids from being present in each cell. Since IPTG does not have an infinite binding affinity to LacI, there is still some degree of suppression of protein production; equally, the LacI repressor is not permanently bound to the operator region, and so even in the absence of IPTG, a small amount of protein is often produced (this effect is commonly described as being due to a leaky plasmid).

In theory, it is possible to tailor the IPTG concentration to a desired cellular concentration of expressed protein. In practice, though, the response curve for changes in IPTG concentration is steeply sigmoidal, and so the effect is largely all or nothing. However, another operon system used for genetics research in E. coli and other bacteria is the arabinose operon, which uses the monosaccharide arabinose as the equivalent inducer; here the steepness of the sigmoidal response is less than that of the IPTG-induced lac operon system, which makes it feasible to control the protein output by varying the external concentration of arabinose.
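The practical consequence of a steep versus a shallow sigmoidal response can be illustrated with a Hill function, which is one common way to parameterize such curves. The use of the Hill form here is an assumption, as are the specific Hill coefficients; neither is a measured property of the lac or arabinose systems. For a Hill response, the factor by which the inducer concentration must change to take the output from 10% to 90% of maximum is 81^(1/n), where n is the Hill coefficient.

```python
def hill_response(inducer, half_max, hill_coefficient):
    """Fractional expression (0 to 1) for a Hill-type induction curve.

    half_max is the inducer concentration giving 50% of maximal output;
    a larger hill_coefficient gives a steeper, more switch-like curve.
    """
    x = (inducer / half_max) ** hill_coefficient
    return x / (1.0 + x)


def fold_range_10_to_90(hill_coefficient):
    """Fold change in inducer needed to go from 10% to 90% of maximum."""
    return 81.0 ** (1.0 / hill_coefficient)


if __name__ == "__main__":
    # Illustrative Hill coefficients only, not fitted values.
    for label, n in (("steep (switch-like)", 4.0), ("shallow (tunable)", 1.0)):
        print(f"{label}: n = {n}, 10% to 90% requires a "
              f"{fold_range_10_to_90(n):.0f}-fold change in inducer")
```

A shallow curve spreads the useful range of inducer concentrations over nearly two orders of magnitude, whereas a steep curve compresses it into a narrow window that is difficult to hit reproducibly, consistent with the all-or-nothing behavior described above.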

A valuable technique for degrading the activity of specific expressed proteins from genes in prokaryotes is degron-targeted proteolysis. Prokaryotes have a native system for reducing the concentration level of specific proteins in live cells, which involves their controlled degradation by proteolysis. In the native cell, proteins are first marked for degradation by tagging them with a short amino acid degradation sequence, or degron. In E. coli, an adaptor protein called SspB facilitates binding of protein substrates tagged with the SsrA peptide to a protease called “ClpXP” (pronounced “Clip X P”). ClpXP is an enzyme that specifically leads to proteolytic degradation of proteins that possess the degron tag.

This system can be utilized synthetically by using molecular cloning techniques to engineer a foreign ssrA tag onto a specific protein which one wishes to target for degradation. This modification is then transformed into a modified E. coli cell strain in which the native gene sspB that encodes for the protein SspB has been deleted. Then, a plasmid that contains the sspB gene is transformed into this strain such that expression of this gene is under control of an inducible promoter. For example, this gene might then be switched “on” by the addition of extracellular arabinose to an arabinose-inducible promoter, in which case the SspB protein is manufactured, which then results in proteolysis of the SsrA-tagged protein.

This is a particularly powerful approach in the case of studying essential proteins. An essential protein is required for the cell to function, and so deleting the protein would normally be lethal and no cell population could be grown. However, by using this degron-tagging strategy, a cell population can first be grown in the absence of SspB expression, and these cells are then observed following controlled degradation of the essential protein after arabinose (or equivalent) induction.

KEY POINT 7.5

Proteolysis is the process of breaking down proteins into shorter peptides. Although this can be achieved using heat and the application of nonbiological chemical reagents such as acids and bases, the majority of proteolysis occurs by the chemical catalysis due to enzymes called proteases, which target specific amino acid sequences for their point of cleavage of a specific protein.

7.4.4 DNA-ENCODED REPORTER TAGS

As outlined previously, several options exist for fluorescent tags to be encoded into the DNA genetic code of an organism, either directly, in the case of fluorescent proteins, or indirectly, in the case of SNAP/CLIP-tags. Similarly, different segment halves of a fluorescent protein can be separately encoded next to the gene that expresses proteins that are thought to interact, in the BiFC technique, which generates a functional fluorescent protein molecule when two such proteins are within a few nm distance (see Chapter 3).

Most genetically encoded tags are engineered to be at one of the ends of the protein under investigation, to minimize structural disruption of the protein molecule. Normally, a linker sequence is used of ~10 amino acids to increase the flexibility with the protein tag and reduce steric hindrance effects. A common linker sequence involves repeats of the amino acid sequence “EAAAK” bounded by alanine residues, which is known to form stable but flexible helical structures (whose structure resembles a conventional mechanical spring). The choice of whether to use the C- or N-terminus of a protein is often based on the need for binding at or near to either terminus as part of the protein’s biological function, that is, a terminus is selected for tagging so as to minimize any disruption to the normal binding activities of the protein molecule. Often there may be binding sequences at both termini, in which case the binding ability can still be retained in the tagged sequence by copying the end DNA sequence of the tagged terminus onto the very end of the tag itself.
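The construction of a tagged protein sequence with such a linker can be sketched as simple string assembly at the amino acid level. The sketch below builds a linker of EAAAK repeats bounded by alanine residues, as described above, and places it between a protein and a tag at either terminus. The function names are hypothetical and the sequences in the usage example are short placeholders rather than real protein or fluorescent protein sequences.

```python
def build_linker(n_repeats=2):
    """Linker of n EAAAK repeats bounded by alanine residues.

    Two repeats give 12 residues, close to the ~10 residue length
    mentioned in the text.
    """
    if n_repeats < 1:
        raise ValueError("at least one EAAAK repeat is required")
    return "A" + "EAAAK" * n_repeats + "A"


def fuse_tag(protein, tag, terminus="C", n_repeats=2):
    """Amino acid sequence of a protein fused to a tag through the linker.

    terminus selects which end of the protein carries the tag ("C" or "N").
    """
    linker = build_linker(n_repeats)
    if terminus == "C":
        return protein + linker + tag
    if terminus == "N":
        return tag + linker + protein
    raise ValueError("terminus must be 'C' or 'N'")


if __name__ == "__main__":
    protein = "MKTAYIAK"  # placeholder protein sequence
    tag = "MSKGEELF"      # placeholder tag sequence
    print(fuse_tag(protein, tag, terminus="C"))
    print(fuse_tag(protein, tag, terminus="N"))
```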

Ideally, the native gene for a protein under investigation is entirely deleted and replaced at the same location in the DNA sequence by the tagged gene. However, sometimes this results in too significant an impairment of the biological function of the tagged protein, due to a combination of the tag’s size and interference of native binding surfaces of the protein. A compromise in this circumstance is to retain the native untagged gene on the cell’s genome but then create an additional tagged copy of the gene on a separate plasmid, resulting in a merodiploid strain (a cell strain that contains a partial second copy of its genome). The disadvantage with such techniques is that there is a mixed population of tagged and untagged protein in the cell, whose relative proportion is often difficult to quantify accurately using biochemical methods such as western blots (see Chapter 6).

A useful tool for researchers utilizing fluorescent proteins in live cells is the ASKA library (Kitagawa et al., 2005), which stands for “A complete Set of E. coli K-12 ORF Archive.” It is a collection (or “library”) of genes fused to genetically encoded fluorescent protein tags. Here, each open reading frame (or “ORF”), that is, the region of DNA between adjacent start and stop codons that contains one or more genes (see Chapter 2), in the model bacterium E. coli, has been fused with the DNA sequence for the yellow variant of GFP, YFP. The library is stored in the form of DNA plasmid vectors under IPTG inducer control of the lac operon.

In principle, each protein product from all coding bacterial genes is available to study using fluorescence microscopy. The principal weakness with the ASKA library is that the resultant protein fusions are all expressed at cellular levels that are far more concentrated than those found for the native nonfusion protein due to the nature of the IPTG expression system employed, which may result in nonphysiological behavior. However, plasmid construct sequences can be spliced out from the ASKA library and used for developing genomically tagged variants.

Optogenetics (see Pastrana, 2010; Yizhar et al., 2011) specifically describes a set of techniques that utilize light-sensitive proteins that are synthetically genetically coded into nerve cells. These foreign proteins are introduced into nerve cells using the transfection delivery methods of molecular cloning described earlier in this chapter. These optogenetics techniques enable investigation into the behavior of nerves and nerve tissue by controlling the ion flux into and out of a nerve cell by using localized exposure to specific wavelengths of visible light. Optogenetics can thus be used with several advanced light microscopy techniques, especially those of relevance to deep tissue imaging such as multiphoton excitation methods (see Chapter 4). These light-sensitive proteins include a range of opsin proteins (referred to as luminopsins) that are prevalent in the cell membranes of single-celled organisms as channel protein complexes. These can pump protons, or a variety of other ions, across the membrane using the energy from the absorption of photons of visible light, as well as other membrane proteins that act as ion and voltage sensors (Figure 7.2).

FIGURE 7.2 Optogenetics techniques. Schematic of different classes of light-sensitive opsin proteins, or luminopsins, made naturally by various single-celled organisms, which can be introduced into the nerve cells of animals using molecular cloning techniques. These luminopsins include proton pumps called archaerhodopsins, bacteriorhodopsins, and proteorhodopsins that pump protons across the cell membrane out of the cell due to absorption of typically blue light (activation wavelength λ1 ~ 390–540 nm), chloride negative ion (anion) pumps called halorhodopsins that pump chloride ions into the cell (green/yellow activation wavelength λ2 ~ 540–590 nm), and nonspecific positive ion (cation) pumps called channelrhodopsins that pump cations into the cell (red activation wavelength λ3 > 590 nm).

For example, bacteriorhodopsin, proteorhodopsin, and archaerhodopsin are all proton pumps integrated in the cell membranes of either bacteria or archaea. Upon absorption of blue-green light (the activation wavelengths λ span the range ~390–540 nm), they will pump protons from the cytoplasm to the outside of the cell. Their biological role is to establish a proton motive force across the cell membrane, which is then used to energize the production of ATP (see Chapter 2).

Similarly halorhodopsin is a chloride ion pump found in a type of archaea known as halobacteria that thrive in very salty conditions, whose biological role is to maintain the osmotic balance of a cell by pumping chloride into their cytoplasm from the outside, energized by absorption of yellow/green light (typically 540 nm < λ < 590 nm). Channelrhodopsin (ChR), which is found in the single-celled model alga C. reinhardtii, acts as a pump for a range of nonspecific positive ions including protons, Na+ and K+ as well as the divalent Ca2+ ion. However, here longer wavelength red light (λ > 590 nm) fuels a pumping action from the outside of the cell to the cytoplasm inside.

In addition, light-sensitive protein sensors are used, for example, chloride and calcium ion sensors, as well as membrane voltage sensor protein complexes. Finally, another class of light-sensitive membrane integrated proteins are used, the most commonly used being the optoXR type. These undergo conformational changes upon the absorption of light, which triggers intracellular chemical signaling reactions.

The light-sensitive pumps used in optogenetics have a typical “on time” constant of a few ms, though this is dependent on the local excitation intensity of the laser illumination. The importance of this is that it is comparable to the electrical conduction time from one end of a single nerve cell to the other and so in principle allows individual action potential pulses to be probed. The nervous conduction speed varies with nerve cell type but is roughly in the range 1–100 m s−1, and so a signal propagation time in a long nerve cell that is a few mm in length can be as slow as a few ms.
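The comparison of timescales above follows from dividing the cell length by the conduction speed. The short sketch below carries out this arithmetic for the two ends of the quoted speed range; the cell length of 3 mm is an assumed example value standing in for “a few mm,” and the function name is illustrative.

```python
def propagation_time_ms(length_mm, speed_m_per_s):
    """Time in milliseconds for a signal to travel length_mm at the
    given conduction speed (simple distance / speed estimate)."""
    if speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    return (length_mm * 1e-3) / speed_m_per_s * 1e3


if __name__ == "__main__":
    length_mm = 3.0  # assumed example length for a long nerve cell
    for speed in (1.0, 100.0):
        t = propagation_time_ms(length_mm, speed)
        print(f"speed {speed} m/s: propagation time of about {t:.2f} ms")
```

At the slow end of the range, the propagation time is of the same order as the few-ms on time of the pumps, which is the regime in which individual action potentials can in principle be resolved.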

The “off time” constant, that is, a measure of the time taken to switch from “on” to “off” following removal of the light stimulation, varies usually from a few ms up to several hundred ms. Some ChR complexes have a bistable modulation capability, in that they can be activated with one wavelength of light and deactivated with another. For example, ChR2-step function opsins (SFOs) are activated by blue light of peak λ = 470 nm and deactivated with orange/red light of peak λ = 590 nm, while a different version of this bistable ChR called VChR1-SFOs has the opposite dependence with wavelength such that it is activated by yellow light of peak λ = 560 nm, but deactivated with a violet light of peak λ = 390 nm. The off times for these bistable complexes are typically a few seconds to tens of seconds. Light-sensitive biochemical modulation complexes such as the optoXRs have off times of typically a few seconds to tens of seconds.
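One simple way to interpret an off time constant is as the characteristic time of a first-order (single exponential) decay of the active population after the light is removed. That functional form is an assumption made here for illustration; real photocycles can involve several intermediate states. The function names and the example time constants are hypothetical values chosen from within the ranges quoted above.

```python
import math


def fraction_still_on(time_s, off_time_constant_s):
    """Fraction of complexes still in the on state a given time after
    light removal, assuming single-exponential decay (an assumption)."""
    return math.exp(-time_s / off_time_constant_s)


def time_to_fall_to(fraction, off_time_constant_s):
    """Time in seconds for the on-state fraction to fall to `fraction`."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must lie in (0, 1]")
    return -off_time_constant_s * math.log(fraction)


if __name__ == "__main__":
    # Example constants: 10 ms (fast pump) and 10 s (bistable complex).
    for label, tau in (("fast pump", 0.010), ("bistable complex", 10.0)):
        t_half = time_to_fall_to(0.5, tau)
        print(f"{label}: off time constant {tau} s, "
              f"half of the complexes are off after about {t_half:.3g} s")
```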

Genetic mutation of all light-sensitive protein complexes can generate much longer off times of several minutes if required. This can result in a far more stable on state. The rapid on times of these complexes enable fast activation to be performed either to stimulate nervous signal conduction in a single nerve cell or to inhibit it. Expanding the off time scale using genetic mutants of these light-sensitive proteins enables experiments using a far wider measurement sample window. Note also that since different classes of light-sensitive proteins operate over different regions of the visible light spectrum, this offers the possibility for combining multiple different light-sensitive proteins in the same cell. Multicolor activation/deactivation of optogenetics constructs in this way results in a valuable neuroengineering toolbox.

Optogenetics is very useful when used in conjunction with the advanced optical techniques discussed previously (Chapter 4), in enabling control of the sensory state of single nerve cells. The real potency of this method is that it spans multiple length scales of the nervous sensory system of animal biology. For example, it can be applied to individual nerve cells cultured from samples of live nerve tissue (i.e., ex vivo), to probe the effects of sensory communication between individual nerve cells. With advanced fluorescence microscopy methods, these can be combined with detection of single-molecule chemical transmitters at the synapse junctions between nerve cells, to explore the molecular scale mechanisms of sensory nervous conduction and regulation. Larger length scale experiments can also be performed using intact living animals to explore the ways that neural processing between multiple nerve cells occurs. For example, light stimulation of optogenetically engineered parts of the nerve tissue in C. elegans can result in control of the swimming behavior of the whole organism. Similar approaches have been applied to monitor neural processing in fruit flies, and experiments on live rodents and primates using optical fiber activation of optogenetics constructs in the brain have also been performed to monitor the effect on whole organism movement and other aspects of animal behavior relating to complex neural processing. In other words, optogenetics enables insight into the operation of nerves from the length scale of single molecules through to cells and tissues up to the level of whole organisms. Such techniques also have direct biomedical relevance in offering insights into various neurological diseases and psychiatric disorders.

KEY BIOLOGICAL APPLICATIONS: MOLECULAR CLONING

Controlled gene expression investigations; Protein purification; Genetics studies.

Enormous advances have been made in the life sciences due to structural information of biomolecules, which is precise within the diameter of single constituent atoms (see Chapter 5). The most successful biophysical technique to achieve this, as measured by the number of different files of atomic spatial coordinates of biomolecule structures uploaded to the primary international data repository, the Protein Data Bank (www.pdb.org, see Chapter 2), has been x-ray crystallography. We explored aspects of the physics of this technique previously in Chapter 5. At present, a technical hurdle in x-ray crystallography is the preparation of crystals that are large enough to generate a strong signal in the diffraction pattern while being of sufficient quality to achieve this diffraction to a high measurable spatial resolution. There are therefore important aspects to the practical methods for generating biomolecular crystals, which we discuss here.

7.5.1 BIOMOLECULE PURIFICATION

The first step in making biomolecule crystals is to purify the biomolecule in question. Crystal manufacture ultimately requires a supersaturated solution of the biomolecule (meaning a solution whose effective concentration is above the saturation concentration, the level at which any further increase in concentration results in biomolecules precipitating out of solution). This implies generating high concentrations equivalent in practice to several mg mL−1.

Although crystals can be formed from a range of biomolecule types, including sugars and nucleic acids, the majority of biomolecule crystal structures that have been determined relate to proteins, or proteins interacting with another biomolecule type. The high purity and concentration required are ideally achieved by molecular cloning of the gene coding for the protein in a plasmid to overexpress the protein. However, often a suitable recombinant DNA expression system is technically too difficult to achieve, requiring less ideal purification of the protein from its native cell/tissue source. This requires a careful selection of the best model organism system to use to maximize the yield of protein purified. Often bacterial or yeast systems are used since they are easy to grow in liquid cultures; however, the quantities of protein required often necessitate the growth of several hundred liters of cells in culture.

The methods used for extraction of biomolecules from the native source are classical biochemical purification techniques, for example, tissue homogenization, followed by a series of fractionation precipitation stages. Fractionation precipitation involves altering the solubility of the biomolecule, most usually a protein, by changing the pH and ionic strength of the buffer solution. The ionic strength is often adjusted by addition of ammonium sulfate at high concentrations of ~2.0 M, such that above certain threshold levels of ammonium sulfate, a given protein at a certain pH will precipitate out of a solution, and so this procedure is also referred to as ammonium sulfate precipitation.

At low concentrations of ammonium sulfate, the solubility of a protein actually increases with increasing ammonium sulfate, a process called “salting in” involving an increase in the number of electrostatic bonds formed between surface electrostatic amino acid groups and water molecules mediated through ionic salt bridges. At high levels of ammonium sulfate, the electrostatic amino acid surface residues will all eventually be fully occupied with salt bridges, and any extra added ammonium sulfate results in attraction of water molecules away from the protein, thus reducing its solubility, a process known as “salting out.” Different proteins salt in and out at different threshold concentrations of ammonium sulfate; thus, a mixture of different proteins can be separated by centrifuging the sample to generate a pellet of the precipitated protein(s) and then subjecting either the pellet or the supernatant to further biochemical processing. For example, gel filtration chromatography can be used to further separate any remaining mixtures of biomolecules on the basis of size, shape, and charge, etc., in addition to methods of dialysis (see Chapter 6). Ammonium sulfate can also be used in the final stage of this procedure to generate a high concentration of the purified protein, for example, by salting out the protein and then resuspending and fully dissolving it in the final desired pH buffer for crystallization. Other precipitants aside from ammonium sulfate can be used depending on the pH buffer and protein, including formate, ammonium phosphate, the alcohol 2-propanol, and the polymer polyethylene glycol (PEG) with molecular weights in the range 400–8000 Da.

Biomolecule crystallization, most typically involving proteins, is a special case of a thermodynamic phase separation in a nonideal mixture. Protein molecules separate from water in solution to form a distinct, ordered crystalline phase. The nonideal properties can be modeled as a virial expansion (see Equation 4.25) in which the parameter B is the second virial coefficient and is negative, indicative of net attractive forces between the protein molecules.

Once a highly purified biomolecule solution has been prepared, then in principle the attractive forces between the biomolecules can result in crystal formation if a supersaturated solution is generated. It is valuable to depict the dependence of protein solubility on precipitant concentration as a 2D phase diagram (Figure 7.3). The undersaturation zone indicates solubilized protein, whereas regions to the upper right of the saturation curve indicate the supersaturation zone in which there is more protein present than can be dissolved in the water available.

The crystallization process involves a local decrease in entropy S due to an increase in the order of the constituent molecules of the crystals, which is offset by a greater increase in local disorder of all of the surrounding water molecules due to breakage of solvation bonds with the molecules that undergo crystallization. Dissolving a crystal breaks strong molecular bonds and so absorbs enthalpy H as heat (i.e., an endothermic process), and conversely crystal formation is an exothermic process. Thus, we can say that

(7.1) $\Delta G_{\text{crystallization}} = \Delta H_{\text{crystallization}} - T\,\Delta S_{\text{crystallization}}$

FIGURE 7.3 Generating protein crystals. Schematic of 2D phase diagram showing the dependence of protein concentration on precipitant concentration. Water vapor loss from a concentrated solution (point I) results in supersaturated concentrations, which can result in crystal nucleation (point II). Crystals can grow until the point III is reached, and further vapor loss can result in more crystal nucleation (point IV).

The change in enthalpy for the local system composed of all molecules in a given crystal is negative for the transition of disordered solution to ordered crystal (i.e., crystallization), as is the change in entropy in this local system. Therefore, the likelihood that the crystallization process occurs spontaneously, which requires ΔGcrystallization < 0, increases at lower temperatures T. This is the same basic argument for a change of state from liquid to solid.
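The temperature argument can be illustrated numerically using the standard relation ΔG = ΔH − TΔS. The sketch below assumes that both ΔH and ΔS are negative for the local ordering transition, as for freezing. The numerical values are hypothetical and chosen only to place the crossover near room temperature; they are not measured values for any protein.

```python
def delta_g(delta_h: float, delta_s: float, temperature: float) -> float:
    """Gibbs free energy change (J/mol) from delta_h (J/mol), delta_s (J/mol/K), T (K)."""
    return delta_h - temperature * delta_s


def crossover_temperature(delta_h: float, delta_s: float) -> float:
    """Temperature (K) at which delta_g is zero."""
    return delta_h / delta_s


# Hypothetical values: an exothermic, entropy-lowering transition.
DELTA_H = -60.0e3   # J/mol
DELTA_S = -200.0    # J/mol/K

t_star = crossover_temperature(DELTA_H, DELTA_S)
print(f"Crossover temperature: {t_star:.0f} K")
for temperature in (280.0, 300.0, 320.0):
    dg = delta_g(DELTA_H, DELTA_S, temperature)
    state = "spontaneous" if dg < 0 else "not spontaneous"
    print(f"T = {temperature:.0f} K: delta_G = {dg:+.0f} J/mol ({state})")
```

With these assumed values, ΔG is negative below the crossover temperature and positive above it, which is the sense in which lower temperatures favor crystallization.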

Optimal conditions of precipitant concentration can be determined in advance to find a crystallization window that maximizes the likely number of crystals formed. For example, static light scattering experiments (see Chapter 4) can be performed on protein solutions containing different concentrations of precipitant. Using the Zimm model as embodied by Equation 4.30 allows the second virial coefficient to be estimated. Estimated preliminary values of B can then be plotted against the empirical crystallization success rate (e.g., number of small crystals observed forming in a given period of time) to determine an empirical crystallization window by extrapolating back to the associated ideal range in precipitant concentration. Efforts for longer time scale crystal growth experiments can then be focused on this range.
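One way to picture the estimation of B is as a straight-line fit. The sketch below assumes the commonly used dilute, low-angle limit of the Zimm model, Kc/R = 1/M + 2Bc, which may differ in notation from Equation 4.30. The data are synthetic, generated from hypothetical values of M and B, and all function names are illustrative.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def second_virial_from_debye_plot(conc, kc_over_r):
    """Return (B, M) assuming Kc/R = 1/M + 2*B*c (dilute, low-angle limit)."""
    slope, intercept = fit_line(conc, kc_over_r)
    return slope / 2.0, 1.0 / intercept


# Synthetic, noise-free data from hypothetical parameters (not measurements).
TRUE_M = 14300.0   # g/mol
TRUE_B = -3.0e-4   # mol mL g^-2; negative means net attraction
concentrations = [0.002, 0.004, 0.006, 0.008, 0.010]  # g/mL
kc_over_r = [1.0 / TRUE_M + 2.0 * TRUE_B * c for c in concentrations]

b_est, m_est = second_virial_from_debye_plot(concentrations, kc_over_r)
print(f"Estimated B = {b_est:.2e} mol mL g^-2, M = {m_est:.0f} g/mol")
```

Repeating such a fit at each precipitant concentration would give the set of B values that is then compared against the observed crystallization success rate.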

Indeed, the trick for obtaining homogeneous crystals as opposed to amorphous precipitated protein is to span the metastable zone between supersaturation and undersaturation by making gradual changes to the effective precipitant concentration. For example, crystals can be simply formed using a solvent evaporation method, which results in very gradual increases in precipitant concentration due to the evaporation of solvent (usually water) from the solution. Other popular methods include slow cooling of the saturated solution, convection heat flow in the sample, and sublimation methods under vacuum. The most common techniques however are vapor diffusion methods.

Two popular types of vapor diffusion techniques used are sitting drop and hanging drop methods. In both methods, a solution of precipitant and concentrated but undersaturated protein is present in a droplet inside a closed microwell chamber. The chamber also contains a larger reservoir consisting of a precipitant at higher concentration than the droplet but no protein, and the two methods only essentially differ in the orientation of the protein droplet relative to the reservoir (in the hanging drop method the droplet is directly above the reservoir, in the sitting drop method it is shifted to the side). Water evaporated from the droplet is absorbed into the reservoir, resulting in a gradual increase in the protein concentration of the droplet, ultimately to supersaturation levels.
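The end point of vapor diffusion can be estimated with a simple idealized calculation. The sketch below assumes that only water is exchanged, that the reservoir is large enough for its precipitant concentration to stay constant, and that equilibrium is reached when the droplet precipitant concentration equals that of the reservoir. The function name and the example concentrations are hypothetical.

```python
def equilibrated_droplet(protein_mg_per_ml: float,
                         precipitant_drop: float,
                         precipitant_reservoir: float):
    """Return (final protein concentration, final/initial droplet volume).

    Idealized model: the droplet loses water until its precipitant
    concentration matches the reservoir; all solutes stay in the droplet.
    """
    if not 0.0 < precipitant_drop <= precipitant_reservoir:
        raise ValueError("reservoir precipitant must be >= droplet precipitant > 0")
    concentration_factor = precipitant_reservoir / precipitant_drop
    return protein_mg_per_ml * concentration_factor, 1.0 / concentration_factor


# Hypothetical example: droplet at 1.0 M precipitant, reservoir at 2.0 M.
final_protein, volume_fraction = equilibrated_droplet(10.0, 1.0, 2.0)
print(f"Final protein concentration: {final_protein:.1f} mg/mL")
print(f"Droplet volume falls to {volume_fraction:.0%} of its initial value")
```

In this idealization the protein concentration rises by the same factor as the precipitant concentration, so the initial droplet composition sets how far into the supersaturation zone the droplet can travel.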

The physical principles of these crystallization methods are all similar; in terms of the phase diagram, a typical initial position in the crystallization process is indicated by point I. Then, due to water evaporation from the solution, the position on the phase diagram will translate gradually to point II in the supersaturation zone just above the metastable zone. If the temperature and pH conditions are optimal, then a crystal may nucleate at this point. Further evaporation causes crystal growth and translation on the phase diagram to point III on the saturation curve. At this point, any further water evaporation then potentially results in translation back up the phase transition boundary curve to the supersaturation point IV, which again may result in further crystal nucleation and additional crystal growth. Nucleation may also be seeded by particulate contaminants in the solution, which ultimately results in multiple nucleation sites with each resultant crystal being smaller than were a single nucleation site present. Thus, solution and sample vessel cleanliness are also essential in generating large crystals. Similarly, mechanical vibration and air disturbances can result in detrimental multiple nucleation sites. But the rule of thumb with crystal formation is that any changes to physical and chemical conditions in seeding and growing crystals should be made slowly. Although some proteins can crystallize after only a few minutes, most research grade protein crystals require several months to grow, sometimes over a year.

Nucleation can be modeled as two processes: primary nucleation and secondary nucleation. Primary nucleation is the initial formation such that no other crystals influence the process (because either they are not present or they are too far away). The rate B1 of primary nucleation can be modeled empirically as

(7.2) $B_1 = \dfrac{dN}{dt} = k_n \left(C - C_{\text{sat}}\right)^{n}$

where

B1 is the number of crystal nuclei formed per unit volume per unit time

N is the number of crystal nuclei per unit volume

kn is a rate constant (an on-rate)

C is the solute concentration

Csat is the solute concentration at saturation

n is an empirically determined exponent typically in the range 3–4, though it can be as high as ~10
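The practical consequence of the exponent n is easiest to see numerically. The sketch below assumes the power-law form B1 = kn (C − Csat)^n implied by the definitions above, and additionally assumes that the rate is zero at or below saturation. The rate constant and concentrations are hypothetical, in arbitrary units.

```python
def primary_nucleation_rate(conc: float, conc_sat: float, k_n: float, n: float) -> float:
    """Return B1 = k_n * (C - C_sat)**n, taken as zero at or below saturation."""
    excess = conc - conc_sat
    if excess <= 0.0:
        return 0.0  # assumption: no primary nucleation without supersaturation
    return k_n * excess ** n


K_N = 1.0     # hypothetical rate constant, arbitrary units
C_SAT = 5.0   # hypothetical saturation concentration, arbitrary units

for n in (3, 4, 10):
    low = primary_nucleation_rate(6.0, C_SAT, K_N, n)    # excess of 1 unit
    high = primary_nucleation_rate(7.0, C_SAT, K_N, n)   # excess of 2 units
    print(f"n = {n:2d}: doubling the excess concentration raises B1 {high / low:.0f}-fold")
```

Because the rate rises so steeply with the excess concentration, small overshoots into the supersaturation zone can produce many nuclei at once, consistent with the advice to change conditions slowly.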

The secondary nucleation process is more complex and is dependent on the presence of other nearby crystal nuclei whose separation is small enough to influence the kinetics of further crystal growth. Effects such as fluid shear are important here, as are collisions between preexisting crystals. This process can be modeled empirically as

(7.3) $B_2 = \dfrac{dN}{dt} = k_1 M_T^{\,j} \left(C - C_{\text{sat}}\right)^{b}$

where

k1 is the secondary nucleation rate constant

MT is the density of the crystal suspension

j and b are empirical exponents of ~1 (as high as ~1.5) and ~2 (as high as ~5), respectively
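As a rough illustration of how secondary nucleation scales, the sketch below assumes a power law in both the suspension density and the excess concentration, k1 MT^j (C − Csat)^b, using the typical exponents quoted above as defaults. The function name, the rate constant, and the input values are hypothetical and in arbitrary units.

```python
def secondary_nucleation_rate(conc: float, conc_sat: float, suspension_density: float,
                              k_1: float, j: float = 1.0, b: float = 2.0) -> float:
    """Return k_1 * M_T**j * (C - C_sat)**b, taken as zero without crystals or excess solute."""
    excess = conc - conc_sat
    if excess <= 0.0 or suspension_density <= 0.0:
        return 0.0  # assumption: needs both existing crystals and supersaturation
    return k_1 * suspension_density ** j * excess ** b


K_1 = 1.0     # hypothetical rate constant, arbitrary units
C_SAT = 5.0   # hypothetical saturation concentration, arbitrary units

for density in (1.0, 3.0):
    rate = secondary_nucleation_rate(7.0, C_SAT, density, K_1)
    print(f"M_T = {density:.1f}: secondary nucleation rate = {rate:.1f}")
```

With j of about 1 the rate grows roughly in proportion to the amount of crystal already present, which is why secondary nucleation becomes increasingly important as a crystal suspension develops.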

Analytical modeling of the nucleation process can be done by considering the typical free energy change per molecule ΔGn associated with nucleation. This is given by the sum of the bulk solution (ΔGb) and crystal surface (ΔGs) terms:

(7.4) $\Delta G_n = \Delta G_b + \Delta G_s = -\dfrac{4\pi r^{3}}{3\Omega}\,\Delta\mu + 4\pi r^{2}\alpha$

where

α is the interfacial free energy per unit area of a crystal of effective radius r

Ω is the volume per molecule