18.5 Advanced Strategy: Repeated Games and Punishments

So far we’ve focused on situations in which you interact with the other players only once. When you’re never going to see someone again, you have every reason to be as aggressive as possible. But if you interact with them repeatedly, then the decisions you make today will color your future interactions. As we’ll see, this sets the stage for even richer strategies. We’ll discover that the threat of punishment can actually lead to more cooperation.

Collusion and the Prisoner’s Dilemma

Let’s return to the Prisoner’s Dilemma and explore whether it’s possible to induce other players to cooperate. We’ll focus on an extreme example of cooperation, called collusion, which refers to agreements by rivals to stop competing with each other. Instead of each offering lower prices in an attempt to gain market share, when firms collude, they all agree to charge a higher price in the hope that they’ll all earn larger profits. We’ll analyze collusion because it presents an interesting example of the Prisoner’s Dilemma.

When rivals collude to raise prices, they increase their profits.

In 2009, executives at several major book publishers organized a series of private lunches in various New York restaurants. Their goal was to figure out how to remain profitable in the emerging e-book marketplace. Spurred on by Apple, which wanted to counter Amazon’s dominant role in selling e-books, they came up with a plan: Rather than each competing to offer the lowest prices, they would all agree to charge the same high price for their e-books.

That is, they agreed to collude, with each effectively promising to raise the price of its bestsellers from $9.99 to $12.99. Colluding like this to fix prices is illegal because it hurts consumers. But the publishers were focused on a more practical question: Would this agreement work?

Collusion is a Prisoner’s Dilemma.

To evaluate the likely outcome, put yourself in the shoes of David Shanks, CEO of Penguin Books. Should he go along with the plan to raise his prices to $12.99?

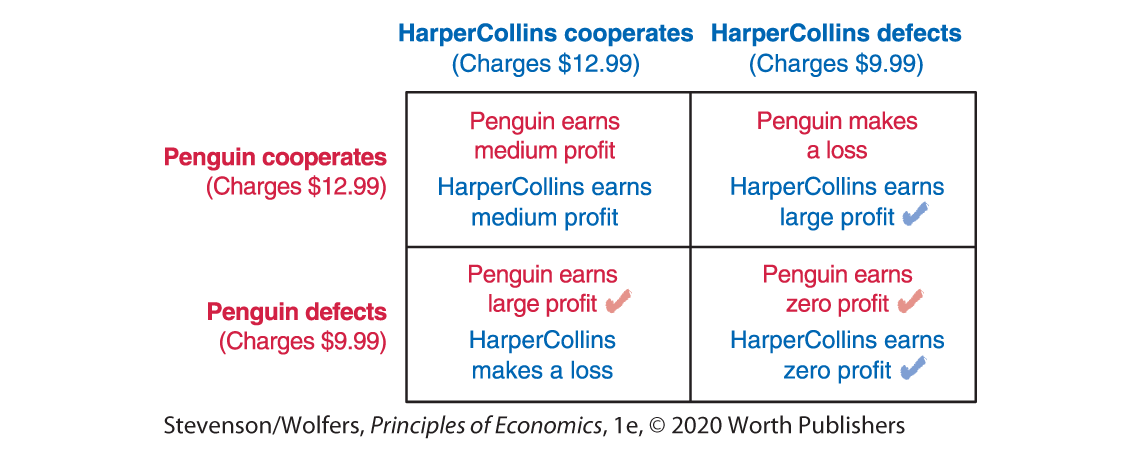

Game theory is particularly useful in the sort of high-stakes strategic interactions that often arise in oligopolies. Following our four-step recipe, begin by considering all the possible outcomes. Penguin can cooperate with this plan to raise prices, or defect and keep charging $9.99. The other publishers face similar alternatives. To simplify things, the payoff table in Figure 8 sets this up as a game involving only two publishers, Penguin and HarperCollins, though in reality five publishers were involved. But this simpler game is sufficient to highlight the key forces at work.

Figure 8 | Will Rivals Collude?

Initially each publisher was charging $9.99 per book, and each earned zero economic profit. Their hope was that if they all cooperated with the plan to raise their prices to $12.99, they could all be better off, each earning a medium profit.

But collusion is an example of a Prisoner’s Dilemma, which means that we should expect the publishers to fail to cooperate. To see this, move to step two and consider the “what ifs.” If your rivals charge $12.99, then (step three!) your best response is to defect and charge only $9.99, because you’ll gain enough market share to earn a large profit. If your rivals defect, you don’t want to be the only company selling at $12.99, because then you’ll lose so much market share that you’ll make a loss. Either way, your best response is to defect. Now, in step four, put yourself in the shoes of your rivals. You’ll discover that HarperCollins faces the same incentives, so it will defect no matter what Penguin does; if Penguin goes along with the plan to collude anyway, it will make a loss. In the Nash equilibrium, each firm defects from the agreement to charge collusively high prices.
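The four-step recipe can be sketched in a few lines of Python. This is a minimal sketch, not the source of Figure 8: the numbers 3, 2, 0, and -1 are hypothetical stand-ins for “large profit,” “medium profit,” “zero,” and “loss,” and the helper names (`payoff`, `best_responses`, `nash_equilibria`) are illustrative. The code records each player’s best response to every “what if” (the check marks) and keeps the cells where both players are best-responding.

```python
# Hypothetical payoffs standing in for the qualitative labels in Figure 8.
LARGE, MEDIUM, ZERO, LOSS = 3, 2, 0, -1

# "cooperate" = raise the price to $12.99; "defect" = keep charging $9.99.
ACTIONS = ("cooperate", "defect")

# PAYOFFS[(penguin, harpercollins)] = (Penguin's payoff, HarperCollins's payoff)
PAYOFFS = {
    ("cooperate", "cooperate"): (MEDIUM, MEDIUM),
    ("cooperate", "defect"): (LOSS, LARGE),
    ("defect", "cooperate"): (LARGE, LOSS),
    ("defect", "defect"): (ZERO, ZERO),
}


def payoff(payoffs, player, own, rival):
    """Payoff to `player` (0 = Penguin, 1 = HarperCollins) from `own` against `rival`."""
    cell = (own, rival) if player == 0 else (rival, own)
    return payoffs[cell][player]


def best_responses(payoffs, player):
    """Steps two and three: for each 'what if' about the rival, the best action(s)."""
    result = {}
    for rival in ACTIONS:
        best = max(payoff(payoffs, player, a, rival) for a in ACTIONS)
        result[rival] = {a for a in ACTIONS if payoff(payoffs, player, a, rival) == best}
    return result


def nash_equilibria(payoffs):
    """Step four: keep the cells where both players are playing a best response."""
    br_penguin = best_responses(payoffs, 0)
    br_harper = best_responses(payoffs, 1)
    return [
        (p, h)
        for p in ACTIONS
        for h in ACTIONS
        if p in br_penguin[h] and h in br_harper[p]
    ]


print(best_responses(PAYOFFS, 0))  # → {'cooperate': {'defect'}, 'defect': {'defect'}}
print(nash_equilibria(PAYOFFS))    # → [('defect', 'defect')]
```

Any other numbers with the same ranking (large > medium > zero > loss) give the same answer, because each best response only compares large with medium and zero with loss.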

Based on this analysis, you might predict that collusion won’t work. After all, Penguin will defect from the collusive agreement, and if it doesn’t, its rivals will. This is an example where the failure to cooperate in the Prisoner’s Dilemma is good for society, because it prevents collusion from occurring.

It’s a comforting thought. But in reality, all of the major publishers ended up colluding and charging $12.99 for their e-books. Why?

It only takes a few clicks to change the price on an e-book.

Finitely Repeated Games

We’ve treated Penguin’s choices as if it were playing a one-shot game, which means that this strategic interaction occurs only once. In a one-shot game you don’t need to worry about how today’s decision might change how others will treat you in the future. But in reality, Penguin effectively plays the game shown in Figure 8 every day. That is, Penguin faces the same rivals and the same payoffs in successive periods, a situation we call a repeated game.

Solve finitely repeated games by looking forward and reasoning backward.

In a finitely repeated game, the players interact a fixed number of times. For instance, if Penguin and HarperCollins thought they would interact exactly three times, that would be an example of a finitely repeated game. Even though that’s not the situation they actually faced, we can do a quick analysis on that premise to see how it would play out over three periods.

Remember that in games that play out over time you should look forward and reason backward. So let’s look forward to the last interaction. In the final period, both players know they’ll never interact again, and so they effectively face a one-shot Prisoner’s Dilemma. In a one-shot Prisoner’s Dilemma, both will choose to defect. Now let’s reason back to the second-to-last period. Both players understand that no matter what they do, their rival will defect in the next period. Given this, the second-to-last round is also effectively a one-shot game, and so again, both players will defect. And so as you continue to roll back your analysis to earlier periods, you’ll conclude that neither publisher will cooperate in any period.

That is, the Prisoner’s Dilemma continues to yield uncooperative outcomes when it’s repeated a fixed or finite number of times. This is because when there’s a known final period, both players know that they’ll cheat in that final period. The incentive to defect in the final period then rolls back to the next-to-final period, and so on all the way back to the first period, undermining the incentive to cooperate in every stage.
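Looking forward and reasoning backward can be written as a loop that starts in the final period and works toward the first. This is a minimal sketch under the text’s own reasoning that, once play in later periods is settled, each period is effectively a one-shot Prisoner’s Dilemma; the payoff numbers and the helper names (`pure_nash_equilibria`, `backward_induction`) are hypothetical.

```python
# Hypothetical stage-game payoffs (large = 3, medium = 2, zero = 0, loss = -1).
LARGE, MEDIUM, ZERO, LOSS = 3, 2, 0, -1
ACTIONS = ("cooperate", "defect")
STAGE = {
    ("cooperate", "cooperate"): (MEDIUM, MEDIUM),
    ("cooperate", "defect"): (LOSS, LARGE),
    ("defect", "cooperate"): (LARGE, LOSS),
    ("defect", "defect"): (ZERO, ZERO),
}


def pure_nash_equilibria(game):
    """Cells where neither player can gain by switching actions on their own."""
    def is_equilibrium(a, b):
        u_a, u_b = game[(a, b)]
        return (all(game[(x, b)][0] <= u_a for x in ACTIONS)
                and all(game[(a, y)][1] <= u_b for y in ACTIONS))
    return [(a, b) for a in ACTIONS for b in ACTIONS if is_equilibrium(a, b)]


def backward_induction(num_periods):
    """Look forward to the last period, then reason backward to the first."""
    plan = {}
    future = (0, 0)  # total payoffs still to come after the current period
    for period in range(num_periods, 0, -1):
        # Play in later periods is already pinned down and does not depend on what
        # happens today, so `future` adds the same amount to every cell: today's
        # game is effectively one-shot.
        today = {cell: (p + future[0], q + future[1]) for cell, (p, q) in STAGE.items()}
        (equilibrium,) = pure_nash_equilibria(today)  # exactly one in this game
        plan[period] = equilibrium
        future = today[equilibrium]
    return dict(sorted(plan.items()))


print(backward_induction(3))
# → {1: ('defect', 'defect'), 2: ('defect', 'defect'), 3: ('defect', 'defect')}
```

Changing `3` to any other fixed number of periods gives the same result: defection in every period.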

Indefinitely Repeated Games

The CEO of Penguin realizes that he isn’t going to interact with his rivals a fixed number of times. There’s no known end date, and so the logic of a finitely repeated game is of little help to him. And, with no known end date, there’s no reason to expect that cooperation will necessarily unravel.

In fact, he faces an indefinitely repeated game, which arises when you expect to keep interacting with the other players for quite some time—perhaps until one of you goes out of business—but you don’t know precisely for how long. The result is that the game is repeated an indefinite number of times. This is important because, if you don’t know when your interactions will end, there’s no last period in which you or your rival will definitely be tempted to defect. And this undermines the logic that leads you to want to defect in earlier periods. Perhaps this points to a reason to be more optimistic that you can sustain cooperation.
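One common way to model an indefinite horizon, which is an assumption rather than something the text specifies, is to say that after each round the interaction continues with some probability `p_continue`. The simulation below is a sketch with a hypothetical `p_continue = 0.9`. It illustrates why no period ever looks like the last one: games that have already lasted several rounds have, on average, just as many rounds still ahead of them as a game that is just starting.

```python
import random


def game_length(p_continue, rng):
    """Number of rounds played when, after each round, play continues with
    probability p_continue. No round is known in advance to be the last."""
    rounds = 1
    while rng.random() < p_continue:
        rounds += 1
    return rounds


rng = random.Random(0)  # fixed seed so the run is repeatable
p = 0.9
lengths = [game_length(p, rng) for _ in range(100_000)]

# Average length overall, and average number of rounds still to come for games
# that have already reached round 5 (counting round 5 itself).
mean_total = sum(lengths) / len(lengths)
long_games = [n - 4 for n in lengths if n >= 5]
mean_remaining = sum(long_games) / len(long_games)

print(round(mean_total, 1), round(mean_remaining, 1))  # both close to 1 / (1 - p) = 10
```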

A strategy is a list of instructions.

In this sort of repeated interaction, you’ll need a strategic plan that describes or lists how you’ll respond to any possible situation. You can think of it as a list of instructions that you could leave with your lawyer, telling her exactly how to respond in any situation that may arise. In a game that plays out over time the list of instructions might be quite complex, describing how to respond to different contingencies. Importantly, your strategic plan may depend on past choices made by other players. This means that your strategic plan might include a threat to your rivals that if they defect from a collusive agreement, you’ll follow up with a punishment. If that threat is credible, it might be sufficient to elicit cooperation in an indefinitely repeated Prisoner’s Dilemma.

The Grim Trigger strategy punishes your rival for not cooperating.

One difficulty with analyzing indefinitely repeated games is that there are infinitely many possible strategic plans to consider. Rather than evaluating them all, we’re going to focus on a specific plan that’s particularly likely to elicit cooperation.

The Grim Trigger strategy is relatively simple and involves only two instructions:

- If the other players have cooperated in all previous rounds, then you’ll cooperate.

- If any player has defected in any previous round, you’ll defect.

Effectively this strategy says: I’m willing to cooperate, but if you fail to play along, I’ll punish you by defecting forever. You can see how this might provide a strong incentive for your rivals to cooperate!
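Because a strategy is a list of instructions that can depend on past play, it maps naturally onto a function that takes the history of the game so far and returns an action. The sketch below encodes the two Grim Trigger instructions that way. The rival `defects_in_round_3` and the helper `play` are hypothetical, added only to show the trigger firing.

```python
from typing import Callable, List, Tuple

Action = str  # "cooperate" or "defect"
History = List[Tuple[Action, Action]]  # (own action, rival's action) in each past round
Strategy = Callable[[History], Action]


def grim_trigger(history: History) -> Action:
    """Cooperate if every player has cooperated in all previous rounds; otherwise defect."""
    if all(own == "cooperate" and rival == "cooperate" for own, rival in history):
        return "cooperate"
    return "defect"


def defects_in_round_3(history: History) -> Action:
    """Hypothetical rival: cooperates in every round except round 3."""
    return "defect" if len(history) == 2 else "cooperate"


def play(strategy_a: Strategy, strategy_b: Strategy, rounds: int) -> History:
    """Play repeated rounds; each strategy sees the history from its own point of view."""
    seen_by_a: History = []
    seen_by_b: History = []
    for _ in range(rounds):
        a, b = strategy_a(seen_by_a), strategy_b(seen_by_b)
        seen_by_a.append((a, b))
        seen_by_b.append((b, a))
    return seen_by_a  # (a's action, b's action) in each round


print([a for a, _ in play(grim_trigger, grim_trigger, 6)])
# → ['cooperate', 'cooperate', 'cooperate', 'cooperate', 'cooperate', 'cooperate']
print([a for a, _ in play(grim_trigger, defects_in_round_3, 6)])
# → ['cooperate', 'cooperate', 'cooperate', 'defect', 'defect', 'defect']
```

Against another Grim Trigger player, nobody ever defects. A single defection by the rival in round 3 switches Grim Trigger to defecting in every later round, even though the rival goes back to cooperating.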

Cooperation can be an equilibrium.

If you and your rivals play the Grim Trigger strategy, what will the equilibrium be? We can analyze this using our four-step recipe.

Start with step one: Consider all of the different possibilities. We’ll start by considering what happens if neither player has defected yet. You have two choices: Continue to follow your strategic plan, which means cooperating given that no one has defected, or deviate from your strategic plan by unilaterally defecting. Likewise, your rival has the same two choices. We can see these alternatives in Figure 9:

Figure 9 | Payoff Table if Both Firms Have Cooperated in the Past

Notice something different about the payoff table: The two choices are whether to continue following your strategic plan or whether to deviate. And the payoffs consider both what will happen today, and also how this changes the future.

Okay, step two: consider the “what ifs,” and step three: play your best response.

What if your rival follows their strategic plan and continues cooperating?

- You can cooperate: You’ll earn a medium profit today. You also need to consider the future. If you both cooperate today, you’ll also be in a position to keep cooperating tomorrow, which ensures you can continue to earn a medium profit in the future.

- Or you can defect: You’ll earn a large profit today. But the downside is that your rival will never cooperate with you again, and so you’ll earn zero profits in all future periods.

How do these compare? As long as you value those future profits enough, you’re better off cooperating, so we put a red check mark in the upper-left corner.
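The phrase “as long as you value those future profits enough” can be made precise with a discount factor δ between 0 and 1, so that a profit one period from now is worth δ times as much as the same profit today. The discount factor is an assumption added here (the text does not use one), as are the payoff numbers. Cooperating forever is worth M + δM + δ²M + ... = M/(1 - δ), while defecting is worth the large profit L today followed by zero forever. So cooperating pays when δ ≥ (L - M)/(L - 0). A minimal sketch:

```python
# Hypothetical per-period profits, ranked as in the text.
LARGE, MEDIUM, ZERO = 3.0, 2.0, 0.0


def value_if_you_cooperate(delta):
    """Medium profit now and in every future period: M / (1 - delta)."""
    return MEDIUM / (1 - delta)


def value_if_you_defect(delta):
    """Large profit now, then zero forever once the rival's Grim Trigger fires."""
    return LARGE + delta * ZERO / (1 - delta)


def cooperating_is_best(delta):
    return value_if_you_cooperate(delta) >= value_if_you_defect(delta)


# Solving M / (1 - delta) = L + delta * Z / (1 - delta) gives delta = (L - M) / (L - Z).
threshold = (LARGE - MEDIUM) / (LARGE - ZERO)

print(round(threshold, 3))                                  # → 0.333
print(cooperating_is_best(0.2), cooperating_is_best(0.5))  # → False True
```

With these numbers, any δ above one-third makes cooperating the better choice. A bigger short-run gain from defecting (a larger gap between the large and medium profits) raises that bar.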

What if your rival deviates from their strategic plan and defects?

You can continue to cooperate and make a loss today (and zero in the future), or defect and make zero today (and zero in the future). Comparing these, your best response is also to defect. So put a red check in the lower-right box.

Time for step four: Put yourself in your rival’s shoes. Your rival needs to consider two “what ifs.”

If you follow your strategic plan and cooperate, your rival has to decide whether to:

- Continue following its strategic plan of cooperating with you: This earns your rival a medium profit today and also keeps alive the possibility of cooperating and earning a medium profit in the future.

- Deviate from its strategic plan by defecting today: This earns your rival a large profit today, but destroys the possibility of future cooperation, leading to zero profits in all future periods.

If your rival values the future benefits enough, it’ll choose to cooperate, so we put a blue check mark in the top-left box.

What if you deviate from your strategic plan and defect?

Your rival can continue to cooperate and make a loss today (and zero in the future), or defect and make zero today (and zero in the future). Comparing these, its best response is also to defect, and so we put a blue check mark in the bottom-right corner.

Now, look for a Nash equilibrium, and you’ll discover two check marks in the top-left corner. For both you and your rival, continuing to follow the Grim Trigger strategy, and so continuing to cooperate, is a Nash equilibrium! (It’s not the only equilibrium, though: the bottom-right corner also has two check marks, so both players defecting is an equilibrium too.) Even though the whole point of the Prisoner’s Dilemma is to illustrate that cooperation often won’t be an equilibrium in the one-shot game, or indeed in a finitely repeated game, it can be an equilibrium now that the game is indefinitely repeated.
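Figure 9 can be rebuilt with hypothetical numbers and an assumed discount factor δ (again, not part of the text), with each cell holding today’s profit plus the discounted value of all future profits. Checking each cell for two best responses reproduces the conclusion above: with a patient enough δ, both players following Grim Trigger is a Nash equilibrium, alongside the equilibrium in which both defect; with a small δ, only mutual defection survives. The function names are illustrative.

```python
# Hypothetical per-period profits ranked as in the text; delta is an assumed
# discount factor (the text only says "value the future enough").
LARGE, MEDIUM, ZERO, LOSS = 3.0, 2.0, 0.0, -1.0
ACTIONS = ("follow", "deviate")  # follow Grim Trigger (cooperate) or deviate (defect)


def figure_9_payoffs(delta):
    """(your payoff, rival's payoff): today's profit plus all discounted future profits."""

    def every_period(x):  # x today and in every future period
        return x / (1 - delta)

    def from_tomorrow(x):  # x in every future period, starting tomorrow
        return delta * x / (1 - delta)

    return {
        ("follow", "follow"): (every_period(MEDIUM), every_period(MEDIUM)),
        ("follow", "deviate"): (LOSS + from_tomorrow(ZERO), LARGE + from_tomorrow(ZERO)),
        ("deviate", "follow"): (LARGE + from_tomorrow(ZERO), LOSS + from_tomorrow(ZERO)),
        ("deviate", "deviate"): (every_period(ZERO), every_period(ZERO)),
    }


def nash_equilibria(table):
    """Cells with two check marks: each choice is a best response to the other."""
    def is_equilibrium(you, rival):
        u_you, u_rival = table[(you, rival)]
        return (all(table[(x, rival)][0] <= u_you for x in ACTIONS)
                and all(table[(you, y)][1] <= u_rival for y in ACTIONS))
    return [(a, b) for a in ACTIONS for b in ACTIONS if is_equilibrium(a, b)]


print(nash_equilibria(figure_9_payoffs(0.5)))  # → [('follow', 'follow'), ('deviate', 'deviate')]
print(nash_equilibria(figure_9_payoffs(0.2)))  # → [('deviate', 'deviate')]
```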

This is a big deal! It should restore your faith that cooperation is possible—as long as people continue to interact for an unknown period of time. It won’t always occur, but it can.

And indeed, this is exactly what happened: The publishers stuck to their joint plan to collude, continuing to charge $12.99 for e-books. Eventually, they faced a different problem: Collusion like this is illegal, and when it was discovered, each company ended up paying the government millions in fines.

Punishment drives cooperation.

The logic of the Prisoner’s Dilemma—at least the one-shot version—is that defecting rather than cooperating is an attractive short-run option because you’ll immediately earn a large profit. But in the indefinitely repeated version, defecting is not a good long-run choice because it triggers a large punishment: Your rival could refuse to cooperate with you again in the future. And it’s the loss of these future profits that makes cooperating a better choice.

Threats of punishment only work if they are credible.

Of course, your threat to punish your rival for not cooperating will only work if your rival believes you’ll follow through with it (that is, if it is a credible threat). For a threat to be credible, it must be in your best interest to take the actions you threatened to take. If your rival is playing a Grim Trigger strategy, then carrying out your threat to defect forever after a defection is your best choice. It’s credible because once either player has defected, your rival’s Grim Trigger strategy means it will defect forever, and so your best response is also to defect. The Grim Trigger strategy ensures that any defection destroys any possibility of future cooperation, so from then on you can play as if each period were a one-shot game, in which your best response is to defect.
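Credibility can be checked directly. Once the Grim Trigger has fired, the rival defects in every remaining period no matter what you do, so you can compare the discounted value of carrying out the threat with plans that let the rival off the hook. The sketch below uses hypothetical payoffs and an assumed discount factor, and the plan names are illustrative. Every period spent cooperating with a permanently defecting rival costs a loss, so following through on the punishment always does best.

```python
# Hypothetical per-period profits, ranked as in the text.
LOSS, ZERO = -1.0, 0.0


def punishment_value(my_action_at, delta, horizon=500):
    """Discounted payoff once Grim Trigger has fired and the rival defects in every
    period: LOSS whenever I cooperate, ZERO whenever I defect. my_action_at(t) is my
    action t periods into the punishment phase. The infinite sum is cut off at
    `horizon` periods, a close approximation for the delta values used here."""
    return sum(
        delta ** t * (LOSS if my_action_at(t) == "cooperate" else ZERO)
        for t in range(horizon)
    )


def carry_out_threat(t):
    return "defect"


def forgive_forever(t):
    return "cooperate"


def forgive_for_three_periods(t):
    return "cooperate" if t < 3 else "defect"


for delta in (0.2, 0.5, 0.9):
    values = {plan.__name__: punishment_value(plan, delta)
              for plan in (carry_out_threat, forgive_forever, forgive_for_three_periods)}
    print(delta, max(values, key=values.get))  # best plan is carry_out_threat every time
```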

There’s also some good news worth emphasizing: If the punishment is strong enough to deter cheating, it need never actually be inflicted. We might simply both cooperate forever!

Repeated play helps solve the Prisoner’s Dilemma.

Where does this leave us? The key insight of the Prisoner’s Dilemma is that it can be difficult to sustain cooperation in strategic interactions. But it’s not necessarily a deal-breaker. If you’re repeating your interactions—and repeating them an indefinite number of times—then you have another tool to help you solve it: The threat of future punishments. And this threat can be sufficient to sustain cooperation.