4

THE AMAZING QUANTUM

“But, but, but . . . if anyone says he can think about quantum theory without getting giddy, it merely shows that he hasn’t understood the first thing about it!”

NIELS BOHR

THE QUANTUM REVOLUTION

We have seen how the shift from the nineteenth century to the twentieth involved a fundamental transformation in physics. At the same time, there was a significant change in the technological basis of industry and society. In the nineteenth century, the prime mover had been steam. The steam engine transformed both working life and, through the railways, transport. In the twentieth century, it was electricity and then electronics that took over in the transformative role.

Electrical lighting, then electric motors, began to make a huge difference to everyday life. As the use of electricity became commonplace, it increasingly took over communications too. First the telegraph and then radio shrank the world. It is not entirely surprising that when Albert Einstein first had his ideas on special relativity, dependent on an understanding of what simultaneity meant across different locations, he was working in the Swiss Patent Office in Bern, regularly handling patents for methods of using electricity to synchronize remote clocks.

The electronic age

With these more sophisticated uses of electricity came the need to produce more complex circuits. Crude devices that had been used to examine the behavior of an electrical phenomenon called “cathode rays” were transformed into versatile electrical devices known as vacuum tubes. These electrical components could force currents to flow in only one direction, could act as switches for the flow of electricity, or could amplify a small variation in an electrical signal so that, for example, a low-energy radio signal could be transformed into a sound loud enough to fill a room. With a better understanding of the electron’s role in electrical currents, equipment making use of vacuum tubes became known as electronics.

At this stage, quantum theory was in its infancy. The first triode vacuum tubes, providing the switching and amplifying capabilities, became available around the same time as Bohr’s quantum atom paper was published. Although electrons were beginning to be seen as quantum particles, it wouldn’t be until the full-scale quantum revolution of Werner Heisenberg, Erwin Schrödinger, Max Born, and Paul Dirac that it was realized that the behavior of these quantum particles could be controlled more effectively using devices that were explicitly designed to make use of quantum behavior.

There was every need for such a device, because vacuum tubes were problematic. It’s notable that when, in the 1940s, science-fiction writer James Blish conceived a probe that could enter the atmosphere of Jupiter, he noted that such probes couldn’t have electronics on board, as the atmospheric pressure would cause the fragile glass tubes to implode. Even in a more earthbound location, vacuum tubes were large, generated a lot of heat, and required high-voltage electricity, which itself needed heavy equipment to produce, meaning that electronic devices were not portable. Enter the transistor.

Although an early solid-state triode had been proposed back in 1926 by Julius Lilienfeld (whose patents caused problems for the team building transistors), it could not be made to work, in part because of a lack of understanding of the quantum nature of semiconductors—substances that sit between a conductor and an insulator, such as silicon and germanium—which would prove essential for the development of solid-state electronics.

Quantum proliferation

The reason a team from Bell Labs succeeded in getting the transistor operational in 1947 was that they were among the first electronics experts to have a firm grasp of quantum physics. It was this quantum expertise that became the driving force behind electronics, branching out into integrated circuits, lasers, LEDs (light-emitting diodes), and more. Explicit quantum devices such as these are now estimated to account for around thirty-five percent of GDP in developed countries. Although this is a broad-brush figure, it seems a reasonable estimate given the importance of electronics in modern society. And this figure does not include the many occupations where the quantum revolution has transformed jobs. There were science writers, for example, before electronics—but jobs like this are now entirely dependent on computers, the internet, smartphones, and more.

Quantum physics is fascinating and gives a unique insight into the lowest-level workings of reality we can access, even if it emphasizes that this level tells us only about what we can measure rather than the true “reality” beneath. However, unlike much theoretical physics, quantum theory has also had a transforming impact on our everyday lives.

BIOGRAPHIES

HEIKE KAMERLINGH ONNES (1853–1926)

Born in Groningen, Netherlands, in 1853, Heike Kamerlingh Onnes was a master of the supercold. After attending university at Groningen and Heidelberg, he became professor of experimental physics at the University of Leiden in 1882, staying until 1923. There, he worked on low-temperature physics. His first big success was in 1908, when he managed to liquefy helium, getting the element down to a temperature of -456.97°F (-271.65°C), just 1.5 degrees above absolute zero (-459.67°F/-273.15°C), the lowest temperature that had ever been achieved. In 1911, working on the effects of low temperature on conductivity, he discovered that mercury went through a change of state at -452.11°F (-268.95°C), where its electrical resistance entirely disappeared—he had discovered the phenomenon of superconductivity. Kamerlingh Onnes was considered old-fashioned and overbearing (despite having many assistants, only his name tends to appear on his papers), but there is no doubt of his achievements. At the time, superconductivity was considered a useless oddity—when Kamerlingh Onnes won the Nobel Prize in 1913, the citation did not mention it—but it has come to be a significant application of quantum physics. He died in Leiden in 1926.

WILLIAM SHOCKLEY (1910–1989)

Born in London, England, in 1910 to US parents, physicist William Shockley was brought up in Palo Alto, California, and studied at Caltech and MIT. He went straight from his doctorate to Bell Labs, where he stayed until 1956, when he left to set up his own company, Shockley Semiconductor Laboratory, the first semiconductor company in what became Silicon Valley. After wartime work on radar, Shockley was asked to head up a team investigating solid-state physics with the hope of moving away from the delicate vacuum tubes used in early electronics. Shockley worked closely with John Bardeen and Walter Brattain, developing a solid-state equivalent of the triode, which they named the transistor. That this was a team effort was reflected in the three sharing the Nobel Prize in Physics in 1956. However, Shockley devised several of the theoretical advances that made transistors feasible, and invented two key types of transistor. Shockley was difficult to work with, alienating the other Bell team members and many of the staff at Shockley Semiconductor Laboratory. Eight of his staff split off to form their own, more successful company in 1957. In 1963, Shockley moved to Stanford University, where he worked until retirement. He died at Stanford in 1989.

THEODORE MAIMAN (1927–2007)

US engineer and physicist Theodore Maiman, born in Los Angeles in 1927, won the race to produce the first working laser. He had the ideal background: after a first degree in engineering physics from the University of Colorado, Maiman went on to gain a Master’s in Electrical Engineering and a PhD in Physics at Stanford. This combination of practical engineering and solid physics enabled him to overcome significant technical hurdles in producing a laser. Maiman, who had joined Hughes Corporation in 1956, had experience working with rubies in masers, the microwave equivalent of a laser. It was thought at the time, due to an erroneous report, that rubies wouldn’t work in lasers, but Maiman was determined to give them a try. Inspired by the flashtube of an early electronic flash, he got his laser working on May 16, 1960. He was horrified when the press described his technology, intended to enhance communications, as a “science-fiction death ray.” Maiman went on to head up a company specializing in lasers and another company that developed large-screen laser video displays. Despite being the first to produce a working laser, Maiman never received a Nobel Prize. He died in Vancouver in 2007.

BRIAN JOSEPHSON (1940–)

Born in Cardiff, Wales, in 1940, Brian Josephson has spent his career at the University of Cambridge, England, apart from a short period as an assistant professor at the University of Illinois. In 1962, just two years after gaining his BA in Natural Sciences, Josephson wrote a paper called “Possible New Effects in Superconductive Tunnelling,” on what became known as the “Josephson effect”—a tunneling mechanism in superconducting metal junctions. He won the 1973 Nobel Prize in Physics for this work. The effect joined a handful of known examples of quantum effects that could be directly used in a working device, proving particularly useful in components known as SQUIDs (superconducting quantum interference devices). Josephson was only thirty-three when he received the Nobel Prize. Toward the end of the 1970s, he became uncomfortable with the way science ignored areas of apparent experience such as telepathy and the paranormal. While still in the physics department, he set up his Mind–Matter Unification Project. It is arguable that Josephson has not contributed much to physics since his early work, but there is no doubt of his essential involvement in the development of quantum technology. He retired from his professorship in 2007, but continues his research at Cambridge.

TIMELINE

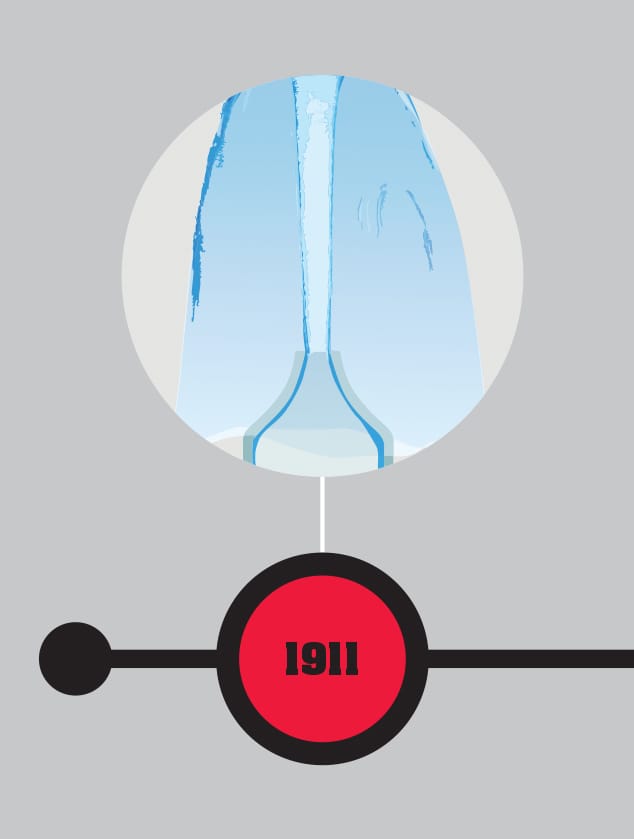

SUPERCONDUCTIVITY

Just three years after becoming the first to achieve such low temperatures, Heike Kamerlingh Onnes discovers superconductivity in mercury at a little over four degrees above absolute zero (-459.67°F/-273.15°C). At this temperature (-452.11°F/-268.95°C), the electrical resistance of the metal suddenly and unexpectedly drops to zero.

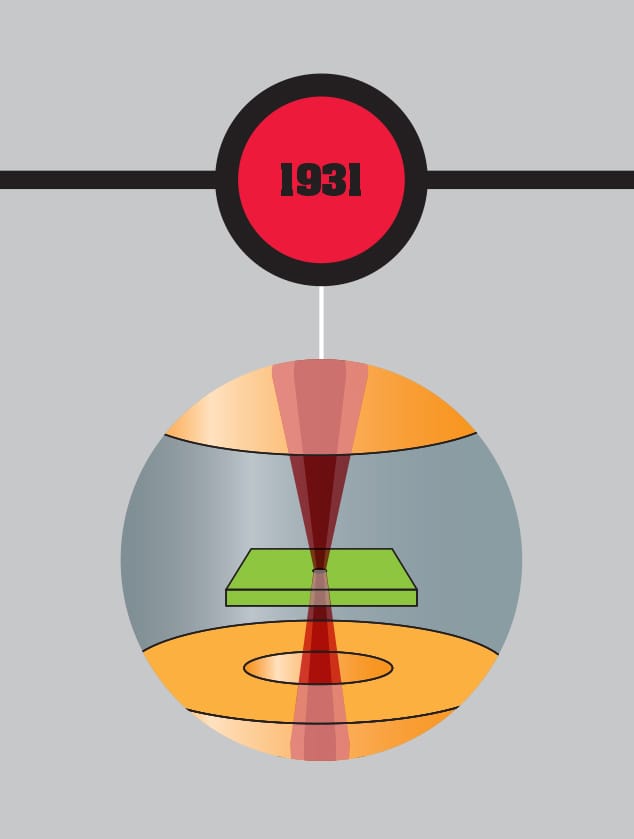

ELECTRON MICROSCOPE

The first electron microscope is constructed by Ernst Ruska and Max Knoll, beginning the move away from the dominance of optical instruments. Dependent on the discovery that electrons could behave as waves with a much smaller wavelength than light, the device could resolve far smaller objects than a conventional microscope was able to.

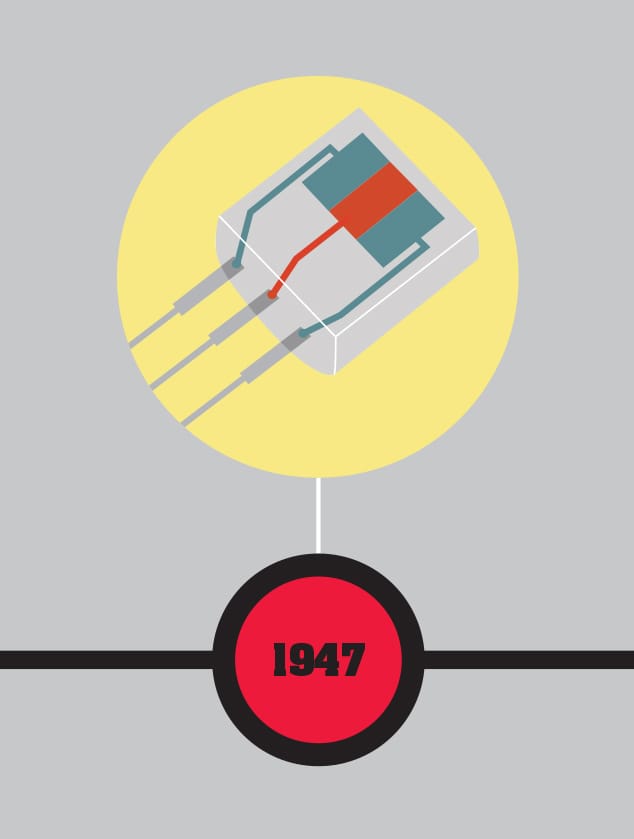

ELECTRONIC DEVICES

John Bardeen, Walter Brattain, and William Shockley demonstrate the transistor, the first step in solid-state electronics that would have been impossible to design without a knowledge of quantum physics. The transistor rapidly replaced vacuum-tube electronics and paved the way for all our modern electronic devices.

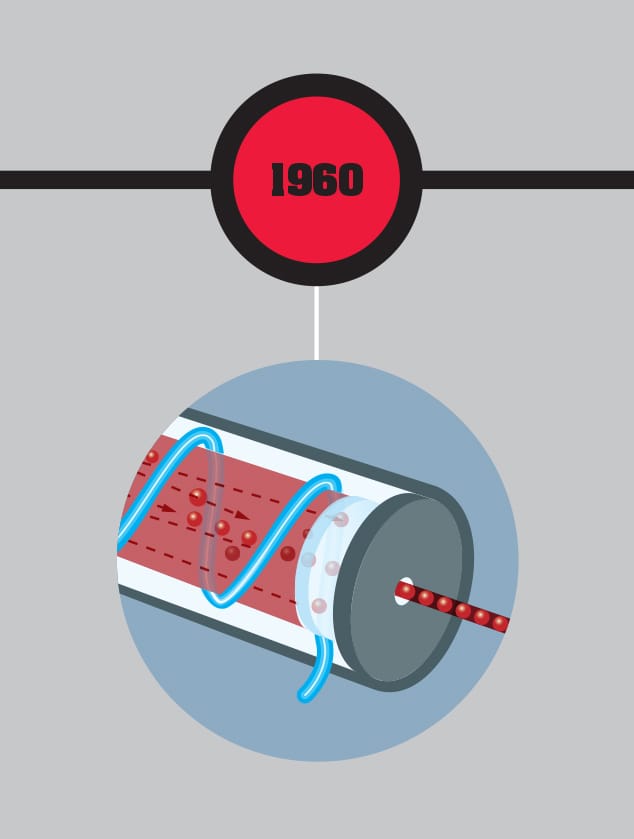

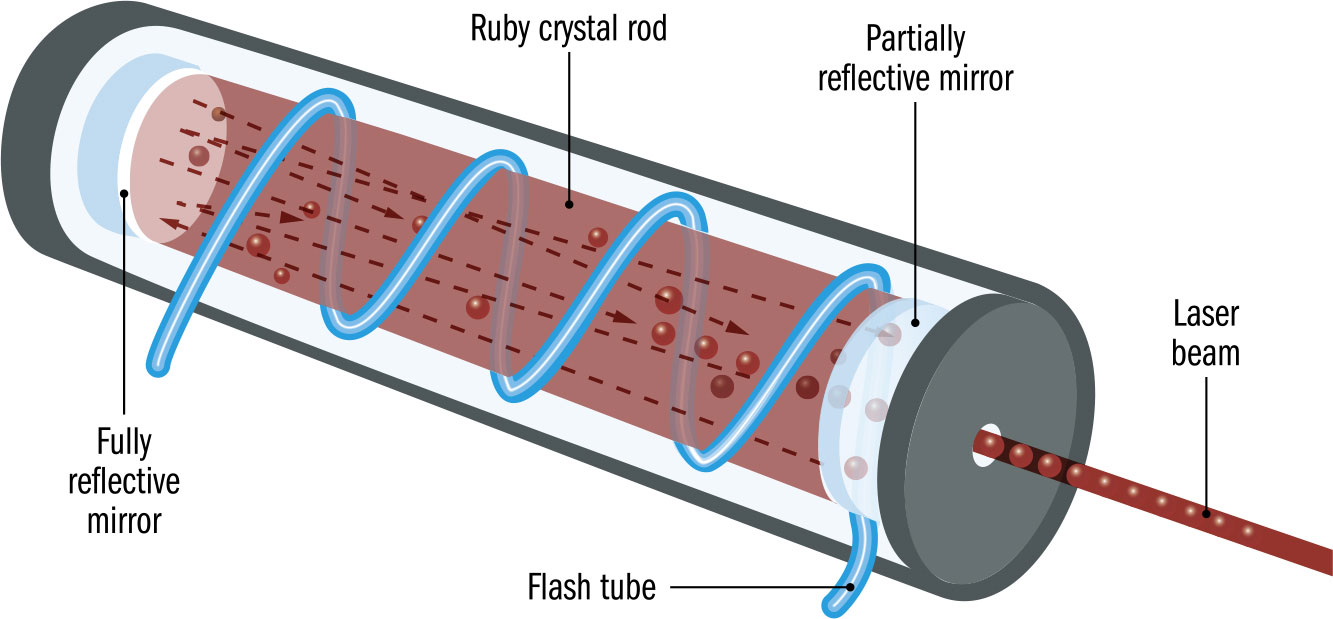

LASER LIGHT

Despite being told by experts that his design would not work, Theodore Maiman produces the first working laser at Hughes Corporation, using an artificial ruby. Based on a theory by Albert Einstein from more than forty years earlier, Maiman’s laser produces “coherent” light, generating photons of very similar energy with their phases in step.

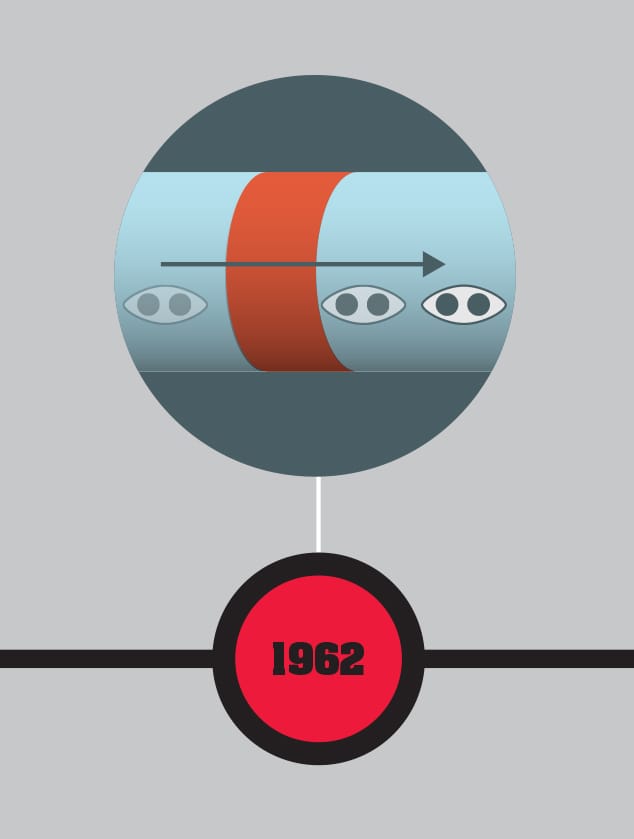

JOSEPHSON EFFECT

Twenty-two-year-old Brian Josephson discovers the “Josephson effect,” leading to the development of Josephson junctions, single-electron transistors, and SQUIDs (superconducting quantum interference devices). Based on quantum tunneling in superconducting junctions, SQUIDs are ultrasensitive magnetic-field detectors with the potential to detect anything from variations in the Earth’s field to unexploded bombs.

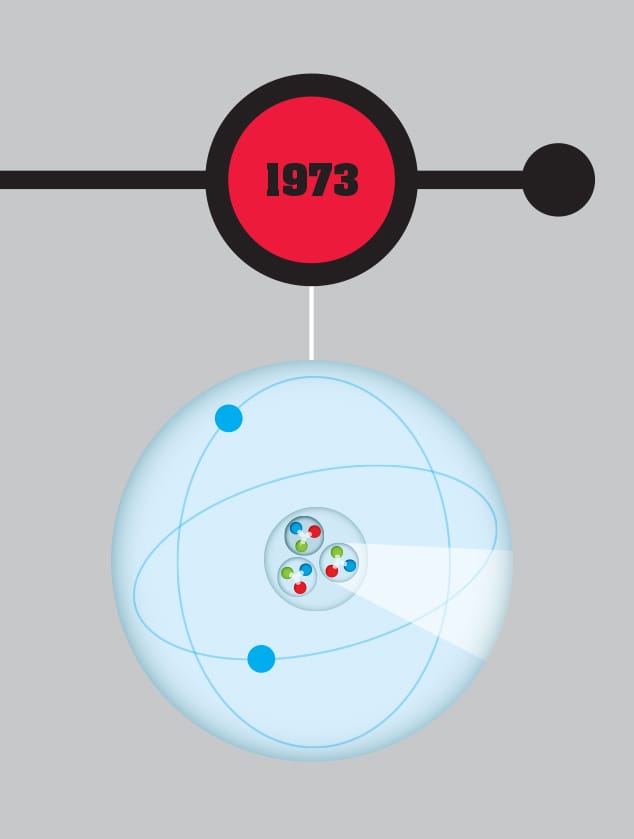

NUCLEAR FORCE

Quantum chromodynamics, an equivalent of quantum electrodynamics (QED) for the strong nuclear force between quarks, is developed. Because, unlike electrical charge, there are three different types of charge in the strong nuclear force (given the names “red,” “blue,” and “green”), the interaction of quarks and gluons (their equivalent of photons) is significantly more complex than in QED.

THE LASER

THE MAIN CONCEPT | Many of the experimental developments in quantum physics have been dependent on one quantum device: the laser. In the mid-1950s, the theory of the maser, standing for “microwave amplification by stimulated emission of radiation,” was developed by Soviet physicists Alexander Prokhorov and Nikolai Basov, with a working maser produced by the US physicist Charles Townes at around the same time. This used a quantum interaction between photons and atoms in a material to amplify a microwave beam. Although masers could be used in telecommunications and atomic clocks, they were limited in power, and better alternatives could perform the same functions. It was clear that a version working with visible light would have much wider application. On May 16, 1960, US engineer and physicist Theodore Maiman constructed the first working laser, based on an artificial ruby and the tube from a camera flashgun. The key to the laser’s effectiveness was that the stimulated emission process gave it a single, sharp frequency, with the phases of all the photons in step—it was “coherent” light. This meant that a laser beam spread out far less than ordinary light, making it ideal for telecommunications and for specialist cutting. Since 1960, a wide range of laser technologies has been developed, making the technology pervasive.
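
The idea of photons with "phases in step" can be made concrete with a toy calculation. This is a minimal sketch under our own simplifying assumption that the light can be treated as N classical waves of equal amplitude and identical frequency; it is not a model of a real laser. Adding the waves as complex numbers shows why coherence matters: N waves in step give an intensity of N squared, while N waves with random phases give only around N.

```python
import cmath
import math
import random


def total_intensity(phases):
    """Relative intensity |sum of exp(i*phase)|^2 for unit-amplitude waves."""
    field = sum(cmath.exp(1j * p) for p in phases)
    return abs(field) ** 2


N = 1000  # number of waves in this toy model (an arbitrary choice)

# Coherent case: every wave has the same phase, so the amplitudes add directly.
in_step = [0.0] * N

# Incoherent case: random phases, as from an ordinary light source.
rng = random.Random(42)
scattered = [rng.uniform(0.0, 2 * math.pi) for _ in range(N)]

print(total_intensity(in_step))    # 1000000.0, i.e. N squared
print(total_intensity(scattered))  # depends on the seed; typically of order N
```

The names here (`total_intensity`, `in_step`, `scattered`) are illustrative only.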

DRILL DOWN | Most early lasers, such as Maiman’s, were based on ruby. Within a few years, these were joined by gas-based lasers, using less corrosive materials than the original experimental alkali metal gases. However, the real breakthrough in the modern ubiquity of the laser was the semiconductor device. Almost any domestic laser, whether in a CD, DVD, or Blu-ray player, laser printer, or laser pointer, is likely to be a semiconductor laser. These are tiny devices, small enough to fit through the eye of a needle, that operate on a similar principle to the light-emitting diode (LED), pumping electrons into a semiconductor, where they drop in energy to give off photons.

MATTER | Despite Albert Einstein’s opposition to the probabilistic aspects of quantum theory, the laser is another of his contributions to the quantum world. In 1917, building on Niels Bohr’s idea of the quantum atom, Einstein suggested that an atom could absorb a photon of light and then, when a second photon hit it, release that energy as a new photon in “stimulated emission,” the new photon matching the one that triggered it.

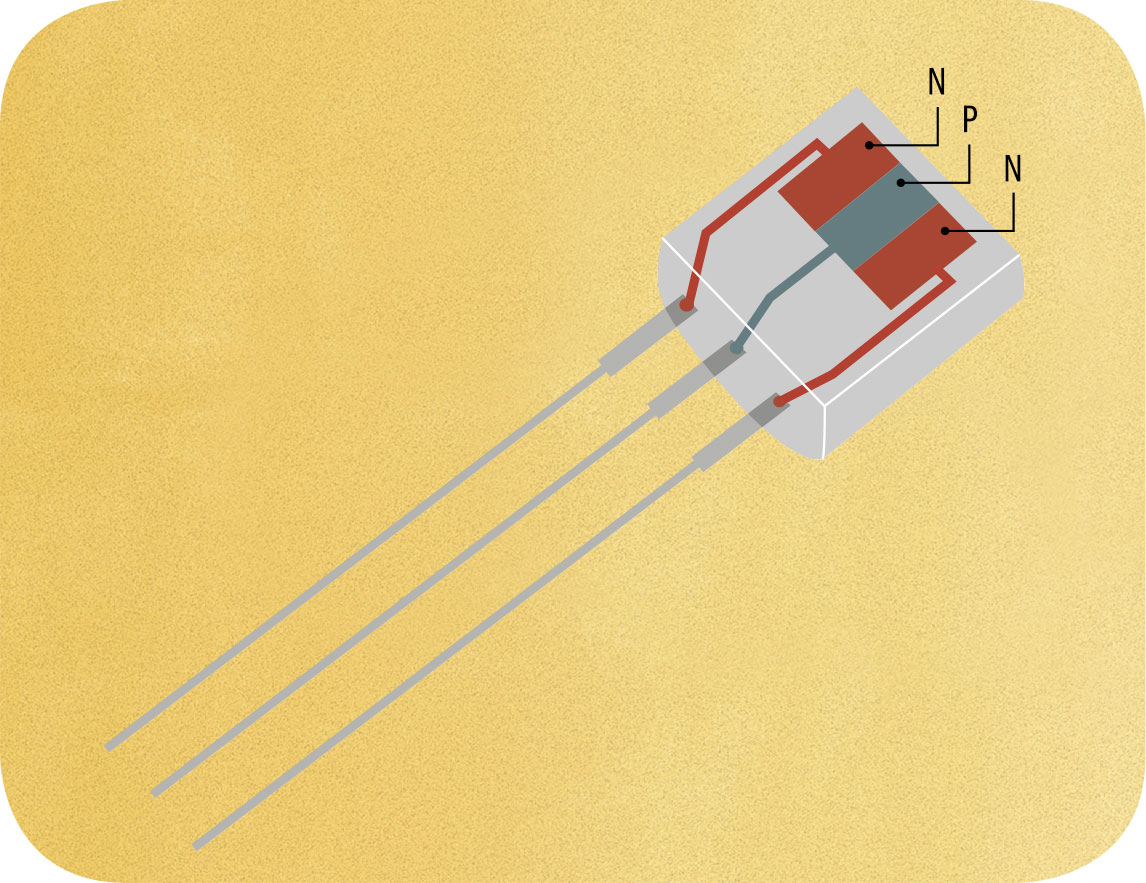

THE TRANSISTOR

THE MAIN CONCEPT | In the early development of electronics, a particularly useful device called a triode was produced. This enabled a small electrical signal either to be amplified or to be used to switch another electrical current on and off. The amplification aspect was particularly useful in audio and broadcasting, while the switching ability made it possible to construct the logic gates that make an electronic computer possible. However, triode valves (vacuum tubes) were too large, fragile, and energy-consuming to be used in the large numbers required by most electronic devices—and impractical for anything portable. The transistor was devised as a solid-state replacement for the triode: small, robust, and low in energy consumption. Its design depended fundamentally on an understanding of quantum physics. The simplest form of transistor was a sandwich of three slices of a semiconductor such as silicon or germanium. These were produced in two forms, “n-type” (typically “doped” by adding a small amount of phosphorus) and “p-type” (typically doped with boron). The n-type semiconductor has extra electrons, whereas the p-type has fewer than usual. The sandwich would be set up in either n-p-n or p-n-p format, with the central slice controlling the electrical current flowing between the other two. The transistor, particularly when built into integrated circuits, transformed electronics.
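
The switching ability that makes logic gates possible can be sketched in a few lines. The sketch assumes each transistor behaves as an ideal on/off switch, a deliberate simplification since real transistors are analog devices. Two such switches in series give a NAND gate, and every other logic gate can then be assembled from NAND gates alone. All function names are hypothetical, chosen for illustration.

```python
from itertools import product


def transistor_conducts(gate_on: bool) -> bool:
    """Hypothetical idealized transistor: a perfect switch that conducts when its control input is on."""
    return gate_on


def nand(a: bool, b: bool) -> bool:
    # Two switches in series: the output is pulled low (False) only when both conduct.
    pulled_low = transistor_conducts(a) and transistor_conducts(b)
    return not pulled_low


# Every other gate can be built from NAND gates alone.
def not_gate(a: bool) -> bool:
    return nand(a, a)


def and_gate(a: bool, b: bool) -> bool:
    return not_gate(nand(a, b))


def or_gate(a: bool, b: bool) -> bool:
    return nand(not_gate(a), not_gate(b))


def xor_gate(a: bool, b: bool) -> bool:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Print a truth table for the derived gates.
for a, b in product([False, True], repeat=2):
    print(int(a), int(b), "AND", int(and_gate(a, b)), "OR", int(or_gate(a, b)), "XOR", int(xor_gate(a, b)))
```

Building everything from one gate type mirrors how integrated circuits reuse a small set of transistor arrangements, though real chips use a wider variety of gate designs.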

DRILL DOWN | A modern computer processor can contain billions of transistors. Cramming these in requires “integrated circuits,” where all the components are formed as layers on the surface of a silicon chip. Here, the transistors are typically MOSFETs (“metal-oxide-semiconductor field-effect transistors”). The field-effect transistor was devised before the first working transistors, but was initially impractical to construct. Instead of using a central “slice” of semiconductor, it has an external electrode called a gate, which sits over a gap between two pieces of semiconductor and uses an electrical field to control the current. Other forms of transistor used in flash memory make use of tunneling effects to store charge.

MATTER | The first computer using transistors was built at the University of Manchester, England, in 1953, just six years after John Bardeen, William Shockley, and Walter Brattain made the original transistor. Up until then, electronic computers had been huge and impractical for many purposes—for example, the 1946 ENIAC had 20,000 vacuum tubes and needed 150 kilowatts of electricity to run.

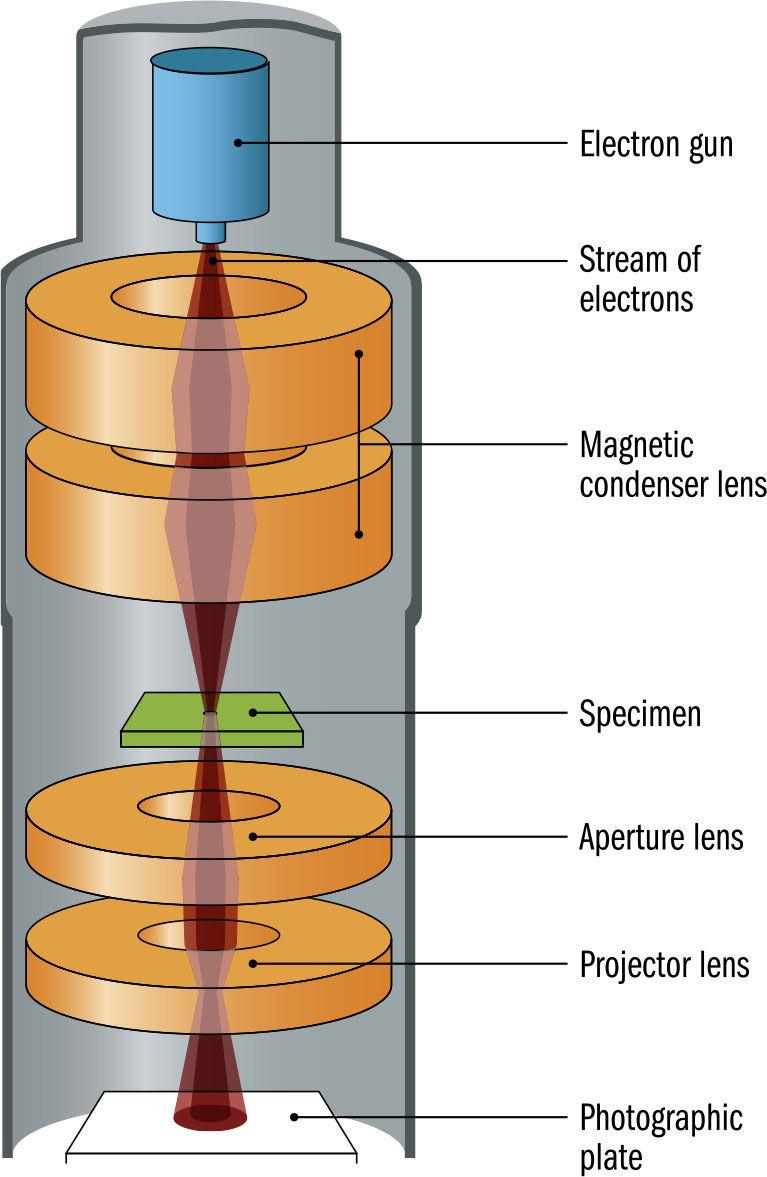

THE ELECTRON MICROSCOPE

THE MAIN CONCEPT | In 1927, just three years after French physicist Louis de Broglie suggested that electrons had wave-like behavior, this was experimentally demonstrated. By 1931, the concept found a practical application in microscopes that use electrons. Conventional microscopes use light and lenses to examine small objects. But the resolution of a microscope—the minimum scale at which it can focus—is limited by the wavelength of light. It is impossible to pick up detail that is much smaller than a single wavelength. A light-based microscope can resolve down to around 200 nanometers—0.2 millionths of a meter—providing approximately ×2,000 magnification. But the best electron microscopes can resolve down to around 50 picometers—0.05 billionths of a meter—providing magnification of up to ×10,000,000. As early as 1933, German physicist Ernst Ruska developed a prototype electron microscope with better resolution than was possible with light. The approach is a little like an upside-down optical microscope. Instead of light passing up through the sample to the lens, this type of electron microscope sends a focused beam of electrons through a thin sample, then focuses the result on a phosphorescent viewing screen or photographic plate below. Soon after, an alternative electron microscope was developed that uses the impact of electrons on the surface of a sample.
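
The resolution figures above follow from two standard formulas: the diffraction limit of a lens, d = λ/(2NA), where NA is the numerical aperture, and de Broglie's electron wavelength, λ = h/p. The sketch below assumes green light at 550 nm, an oil-immersion objective with NA = 1.4, and a 100 kV electron beam; these are typical values we have chosen, not figures from the text. The electron wavelength comes out far smaller than the best resolution actually achieved, because real electron microscopes are limited mainly by imperfections in their magnetic lenses.

```python
import math

H = 6.62607015e-34       # Planck constant, J s
M_E = 9.1093837015e-31   # electron rest mass, kg
Q_E = 1.602176634e-19    # elementary charge, C
C = 299_792_458.0        # speed of light, m/s


def electron_wavelength(volts: float) -> float:
    """de Broglie wavelength h/p (m) of an electron accelerated through `volts`,
    including the relativistic correction to its momentum."""
    kinetic = Q_E * volts
    momentum = math.sqrt(2 * M_E * kinetic * (1 + kinetic / (2 * M_E * C**2)))
    return H / momentum


def abbe_limit(wavelength: float, numerical_aperture: float) -> float:
    """Diffraction-limited resolution d = wavelength / (2 * NA)."""
    return wavelength / (2 * numerical_aperture)


# Assumed values: green light (550 nm) and a good oil-immersion objective (NA = 1.4).
print(f"light microscope limit: {abbe_limit(550e-9, 1.4) * 1e9:.0f} nm")  # 196 nm
# Assumed value: a 100 kV accelerating voltage.
print(f"electron wavelength at 100 kV: {electron_wavelength(100e3) * 1e12:.2f} pm")  # 3.70 pm
```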

DRILL DOWN | The earliest design of electron microscope is the “transmission electron microscope.” However, by 1937, an alternative “scanning electron microscope” was developed, passing an electron beam over the sample and detecting the electrons or electromagnetic radiation generated from the surface by this bombardment. Both are still used. Scanning electron microscopes have lower resolution than transmission electron microscopes, but don’t penetrate the surface of samples and so can deal with thicker or three-dimensional samples. There is, however, a limit to the kind of samples scanning electron microscopes can examine. Such samples need to be hard and dry to withstand a high vacuum and, if not electrically conducting, need a thin conductive coating added.

MATTER | The scanning tunneling microscope is sometimes confused with an electron microscope, but is a different electron-based quantum device, developed in 1981. A scanning tunneling microscope uses a tiny conducting tip that rides over the surface of the sample, measuring the electrical current tunneling between the tip and the surface. This microscope can also manipulate matter down to the level of individual atoms.
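
The scanning tunneling microscope is so sensitive to height because the tunneling current falls off exponentially with the gap between tip and surface. A common textbook simplification treats the gap as a rectangular barrier, giving a current roughly proportional to exp(-2κd), with κ = √(2mφ)/ħ. The barrier height φ = 4 eV used below is our assumption, chosen because metal surfaces typically have work functions of a few electron volts.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # joules per electron volt


def decay_constant(barrier_ev: float) -> float:
    """kappa = sqrt(2 m phi) / hbar (1/m) for a rectangular barrier of height phi."""
    return math.sqrt(2 * M_E * barrier_ev * EV) / HBAR


def current_ratio(gap_increase_m: float, barrier_ev: float = 4.0) -> float:
    """Factor by which the tunneling current changes, assuming I proportional to exp(-2 kappa d)."""
    return math.exp(-2 * decay_constant(barrier_ev) * gap_increase_m)


print(f"kappa: {decay_constant(4.0) * 1e-10:.2f} per angstrom")        # 1.02
print(f"current after a 0.1 nm retreat: x{current_ratio(1e-10):.3f}")  # x0.129
```

Under these assumptions, pulling the tip back by a tenth of a nanometer, less than an atom's width, cuts the current by a factor of nearly eight.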

SUPERCONDUCTORS

THE MAIN CONCEPT | One quantum peculiarity caused a major shock when discovered. Dutch physicist Heike Kamerlingh Onnes, working at the University of Leiden, was an expert on low temperatures. In 1911, he was studying the conductivity of metals near absolute zero (-459.67°F/-273.15°C). As he lowered the temperature of mercury, it went through a sudden change at -452.11°F (-268.95°C), losing all electrical resistance. Usually the electrons flowing through an electrical conductor interact with the atoms, resulting in resistance. But once superconducting, electrons carried on as if there were nothing to stop them. To test the effect, Kamerlingh Onnes started an electrical current in a superconducting wire. He could keep the experiment going for only a few hours, but in that timescale, the flow continued unchecked. A more sophisticated experiment run in the 1950s continued for eighteen months with no detectable change in current. Kamerlingh Onnes immediately thought of the benefit for electricity distribution, where loss of energy to heat through electrical resistance is a significant problem. However, the need to keep superconductors at extremely low temperatures has limited their use to generating powerful magnetic fields in specialist equipment. Basic superconductivity (there are several types) was later explained as a quantum effect in which electrons act together in pairs, bound through their interaction with the lattice of atoms in the superconductor.
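
A long-running persistent-current experiment is powerful because any resistance at all would make the current decay. In a closed loop with inductance L and resistance R, the current falls as I(t) = I₀ exp(-Rt/L), so seeing no detectable change over a long time sets an upper limit on R. The sketch below uses a hypothetical loop inductance and measurement resolution, chosen only for illustration; they are not the values from the 1950s experiment.

```python
import math


def current_after(i0: float, resistance: float, inductance: float, seconds: float) -> float:
    """Current in a closed loop with inductance L and resistance R: I(t) = I0 * exp(-R t / L)."""
    return i0 * math.exp(-resistance * seconds / inductance)


def resistance_upper_bound(inductance: float, seconds: float, max_fractional_drop: float) -> float:
    """Largest R for which the current falls by no more than max_fractional_drop in `seconds`."""
    return -inductance * math.log(1 - max_fractional_drop) / seconds


EIGHTEEN_MONTHS = 1.5 * 365.25 * 24 * 3600  # seconds
LOOP_INDUCTANCE = 1e-6   # hypothetical, henries
RESOLUTION = 1e-5        # hypothetical smallest detectable fractional change

bound = resistance_upper_bound(LOOP_INDUCTANCE, EIGHTEEN_MONTHS, RESOLUTION)
print(f"resistance below about {bound:.1e} ohms")  # about 2.1e-19 ohms
```

With zero resistance, `current_after` returns the starting current unchanged, however long the experiment runs.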

DRILL DOWN | The main drawback of superconductors is the need to keep them at extremely low temperatures. For decades, attempts have been made to find a material that is superconducting at higher temperatures—ideally requiring no special cooling. By the late 1980s, superconductors based on special ceramic materials that combined, for example, mercury, barium, calcium, copper, and oxygen, were working in the range of -297.4°F (-183°C) to -234.4°F (-148°C). This is a significant improvement on the early superconductors, because such cooling can be produced with liquid nitrogen rather than the far more expensive and trickier-to-handle liquid helium. Experimenters continue to search for the elusive room-temperature superconducting material.
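
The advantage of the ceramic superconductors is clearest on the kelvin scale. This sketch converts the temperatures quoted in this section and checks each against the boiling point of liquid nitrogen, about -195.8°C (77 K) at atmospheric pressure. That reference value is a standard figure we have added; the helper names are illustrative.

```python
def celsius_to_kelvin(c: float) -> float:
    return c + 273.15


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


NITROGEN_BOILING_C = -195.8  # liquid nitrogen boils at about 77 K at 1 atm


def nitrogen_cooling_enough(critical_c: float) -> bool:
    """True if a liquid nitrogen bath is colder than the critical temperature."""
    return NITROGEN_BOILING_C < critical_c


examples = [("mercury", -268.95), ("ceramic, lower figure", -183.0), ("ceramic, upper figure", -148.0)]
for name, c in examples:
    print(f"{name}: {celsius_to_kelvin(c):.2f} K, {celsius_to_fahrenheit(c):.2f} F, "
          f"liquid nitrogen enough: {nitrogen_cooling_enough(c)}")
```

Mercury's transition at about 4.2 K is far below what nitrogen can reach, while both ceramic figures sit comfortably above 77 K.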

MATTER | As well as reducing electrical resistance to zero, a superconductor produces the so-called Meissner effect, named for German physicist Walther Meissner. In the Meissner effect, any magnetic field is expelled from a material as it becomes superconducting. This leads to a dramatic demonstration of superconductivity, as a magnet will levitate above a superconductor because its magnetic field is unable to penetrate the material.

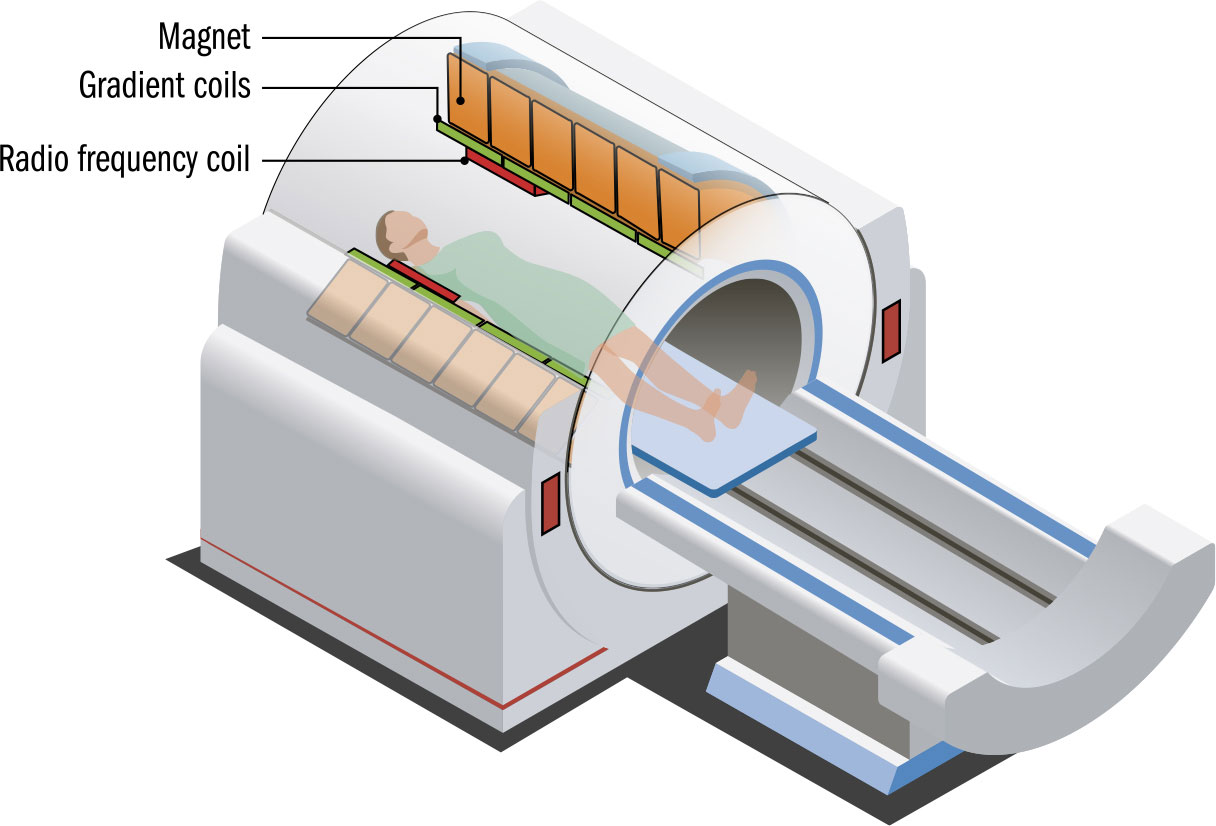

THE MRI SCANNER

THE MAIN CONCEPT | The most sophisticated piece of quantum technology many of us encounter is the MRI scanner, incorporating multiple quantum devices. The initials “MRI” stand for “magnetic resonance imaging”—the name was changed from the more accurate NMR, “nuclear magnetic resonance,” as the word “nuclear” was disconcerting. Rather than use hazardous X-rays, MRI scanners turn atoms into radio transmitters. The scanner works on the hydrogen in the human body’s water molecules. As the body passes through the scanner, a strong magnetic field lines up the quantum spins of the protons in those hydrogen atoms. Pulses of radio waves then flip these spins, and when each pulse is switched off, the spins flip back, producing radio-frequency photons. This electromagnetic radiation is picked up by receiver coils, building up a picture from the signals from these vast numbers of tiny transmitters. Such manipulation of spin is a quantum effect, but another is required to produce the magnetic field that makes it possible. Scanners use extremely powerful magnets to provide the strong, steady field required. The magnets are cooled to very low temperatures, typically around -452°F (-269°C), using liquid helium. At this temperature, the magnets become superconductors, enabling unusually strong fields to be produced. Superconducting magnets have found use in a range of specialist applications that require intense magnetic fields.
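
The frequency of the radio signal from the hydrogen nuclei is set by the strength of the scanner's steady magnetic field, through the Larmor relation f = (γ/2π)B. For protons, γ/2π is about 42.58 MHz per tesla; this standard value, and the field strengths of 1.5 and 3 tesla used below as typical clinical examples, are our additions rather than figures from the text.

```python
PROTON_GAMMA_MHZ_PER_T = 42.577   # proton gyromagnetic ratio / (2 pi), MHz per tesla
PLANCK = 6.62607015e-34           # J s
EV = 1.602176634e-19              # joules per electron volt


def larmor_frequency_mhz(field_tesla: float) -> float:
    """Frequency (MHz) at which protons absorb and emit in a field B: f = (gamma / 2 pi) * B."""
    return PROTON_GAMMA_MHZ_PER_T * field_tesla


def photon_energy_ev(frequency_mhz: float) -> float:
    """Energy E = h f of one photon at this frequency, in electron volts."""
    return PLANCK * frequency_mhz * 1e6 / EV


for field in (1.5, 3.0):  # assumed field strengths
    f = larmor_frequency_mhz(field)
    print(f"{field} T: {f:.1f} MHz, photon energy {photon_energy_ev(f):.2e} eV")
```

Each photon carries millions of times less energy than a photon of visible light, which is why the signal must be gathered from vast numbers of nuclei.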

DRILL DOWN | Superconducting magnets are used whenever extremely strong magnetic fields are required. The most impressive use is in the largest machine in the world, the Large Hadron Collider (LHC) at the CERN laboratory near Geneva, Switzerland. In the LHC, around 10,000 superconducting magnets are used to keep the beams of protons that circulate around the accelerator on track. Elsewhere, superconducting magnets are being used in experimental Maglev trains, which float above the track and are accelerated along it by magnetic interaction. The first planned commercial superconducting Maglev train, the Chuo Shinkansen, linking Tokyo, Nagoya, and Osaka in Japan, is expected to reach speeds of around 500 kilometers per hour (310 miles per hour).
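
Why the LHC needs superconducting magnets can be seen from the field required to bend a fast proton around a circle: B = p/(qr), which for a singly charged particle becomes B in tesla = p/(0.2998 r), with p in GeV/c and r in meters. The sketch assumes the LHC design figures of a 7 TeV proton energy and a bending radius of about 2,804 meters; these are figures we have supplied, not ones given in the text.

```python
def bending_field_tesla(momentum_gev_per_c: float, radius_m: float) -> float:
    """Field needed to hold a singly charged particle on a circle of radius r.

    B = p / (q r); with p in GeV/c and r in meters, B [T] = p / (0.299792458 * r).
    """
    return momentum_gev_per_c / (0.299792458 * radius_m)


# Assumed figures: 7 TeV design proton energy (p is almost exactly E/c for such
# fast protons) and a dipole bending radius of about 2,804 m.
print(f"{bending_field_tesla(7000.0, 2804.0):.2f} T")  # 8.33 T
```

A field of more than 8 tesla is several times what an iron-cored electromagnet can deliver, since iron saturates at roughly 2 tesla.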

MATTER | MRI scanners are infamously noisy. This is because smaller electromagnets called gradient coils are turned on and off to make changes to the magnetic field in localized areas of the patient to build an image. The coils making up the electromagnets expand and contract so forcefully that they produce a thudding as loud as 120 decibels—comparable to a jet engine.

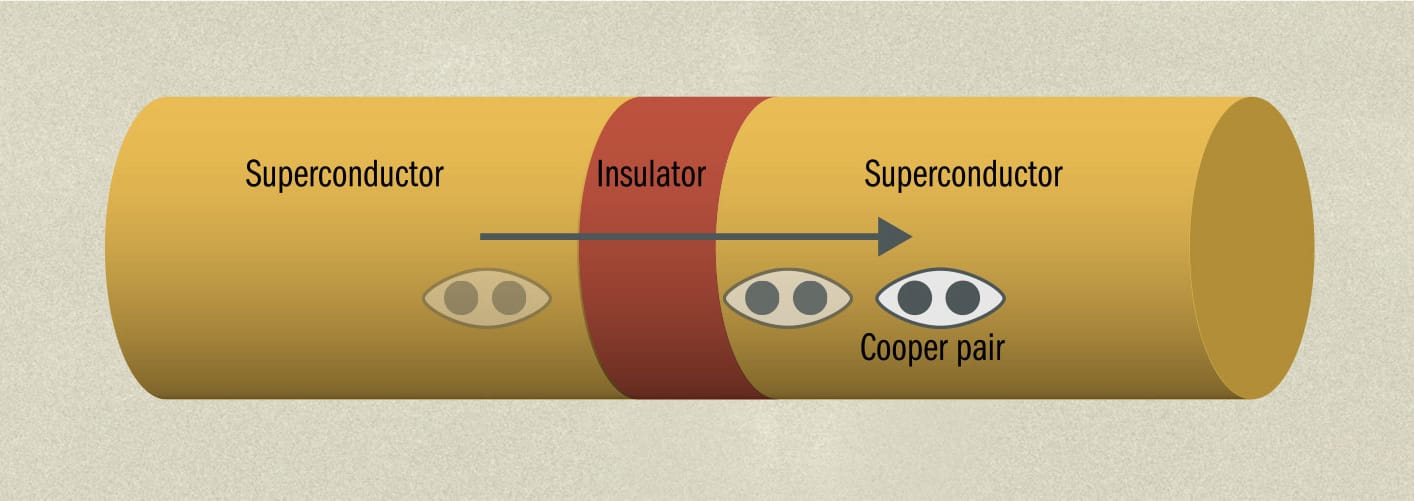

THE JOSEPHSON JUNCTION

THE MAIN CONCEPT | In 1962, Welsh graduate student Brian Josephson devised a remarkable superconducting quantum device that won him the Nobel Prize eleven years later—the Josephson junction. It consists of two small segments of superconductor with a barrier between them, which could be an insulator or a conventional electrical conductor. Part of the complex quantum effect behind superconductivity involves pairs of electrons acting as if they are a single entity as a result of an interaction with the lattice of the superconductor. These “Cooper pairs,” named for US physicist Leon Cooper, who discovered them, play a key role in the Josephson junction. Just as a single quantum particle can tunnel through a barrier, Josephson predicted that Cooper pairs would also do so. And he showed that when a steady voltage was put across a Josephson junction, an alternating current would flow with a frequency fixed precisely by that voltage, so the junction could provide extremely sensitive voltage measurements. Josephson’s paper describing the junction enabled a number of potential applications to be developed by other physicists, although Josephson himself was only really interested in the underlying physics. For example, Josephson junctions have found their way into experimental quantum computers, and in astronomy are used to produce very wide-spectrum equivalents of the charge-coupled devices used in digital cameras.
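
The link between voltage and frequency in a Josephson junction is usually written f = 2eV/h, where 2e is the charge of a Cooper pair and h is Planck's constant; the ratio 2e/h is called the Josephson constant. The sketch uses the exact SI values of e and h. The second helper describes the reverse use, in which a junction driven by microwaves at frequency f develops voltage steps of nhf/(2e); this is the basis of modern voltage standards, a detail we have added. Function names are illustrative.

```python
E_CHARGE = 1.602176634e-19   # elementary charge, C (exact in SI)
PLANCK = 6.62607015e-34      # Planck constant, J s (exact in SI)
K_J = 2 * E_CHARGE / PLANCK  # Josephson constant, Hz per volt


def josephson_frequency(volts: float) -> float:
    """Frequency (Hz) of the alternating supercurrent under a steady voltage: f = 2eV/h."""
    return K_J * volts


def josephson_voltage(frequency_hz: float, step: int = 1) -> float:
    """Voltage V = n * f / K_J of the n-th step when a junction is driven at frequency f."""
    return step * frequency_hz / K_J


print(f"K_J = {K_J / 1e9:.1f} GHz per volt")                        # 483597.8
print(f"1 microvolt -> {josephson_frequency(1e-6) / 1e6:.1f} MHz")  # 483.6
```

Because frequencies can be measured extremely precisely, even a microvolt corresponds to an easily counted signal of hundreds of megahertz.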

DRILL DOWN | The SQUID, or “superconducting quantum interference device,” is the application of a Josephson junction with the widest potential use. SQUIDs employ Josephson junctions to detect tiny changes in the nearby magnetic field, producing changes in the voltage across the junctions. SQUIDs are being tried out in everything from quantum computers and variants of the MRI scanner to unexploded bomb detectors. In bomb detection, a SQUID-based detector is used to map out tiny variations in the Earth’s magnetic field caused by hidden objects. The sensitivity of the SQUID means it can map out objects with unrivaled clarity. It’s also better than any alternative at a distance and works through undergrowth or water.
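
A SQUID's sensitivity comes from interference: the largest current a superconducting loop containing two junctions can carry oscillates each time the magnetic flux through the loop changes by one flux quantum, h/2e, about 2 × 10⁻¹⁵ webers. This minimal sketch assumes the textbook idealization of a symmetric loop with negligible inductance, for which the critical current is 2I₀|cos(πΦ/Φ₀)|. The Earth's field of about 50 microtesla and the 1 mm × 1 mm pickup loop are illustrative assumptions.

```python
import math

PHI_0 = 6.62607015e-34 / (2 * 1.602176634e-19)  # magnetic flux quantum h/2e, webers


def squid_critical_current(flux_wb: float, junction_critical_current: float) -> float:
    """Idealized symmetric DC SQUID, loop inductance neglected:
    Ic(flux) = 2 * I0 * |cos(pi * flux / PHI_0)|."""
    return 2 * junction_critical_current * abs(math.cos(math.pi * flux_wb / PHI_0))


# Flux from the Earth's field (~50 microtesla, assumed) through a
# hypothetical 1 mm x 1 mm pickup loop.
earth_flux = 50e-6 * 1e-6
print(f"PHI_0 = {PHI_0:.4e} Wb")
print(f"flux quanta in loop: {earth_flux / PHI_0:.0f}")  # roughly 24,000
```

Since each flux quantum produces a full cycle of the response, the device can register changes amounting to a tiny fraction of the Earth's field.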

MATTER | Josephson’s paper on the Josephson junction is extremely detailed, displaying an intensity of character in the physicist—just twenty-two at the time. One of his lecturers, Philip Anderson, remarked that teaching Josephson “was a disconcerting experience for a lecturer . . . because everything had to be right or he would come up and explain it to me after class.”

QUANTUM DOTS

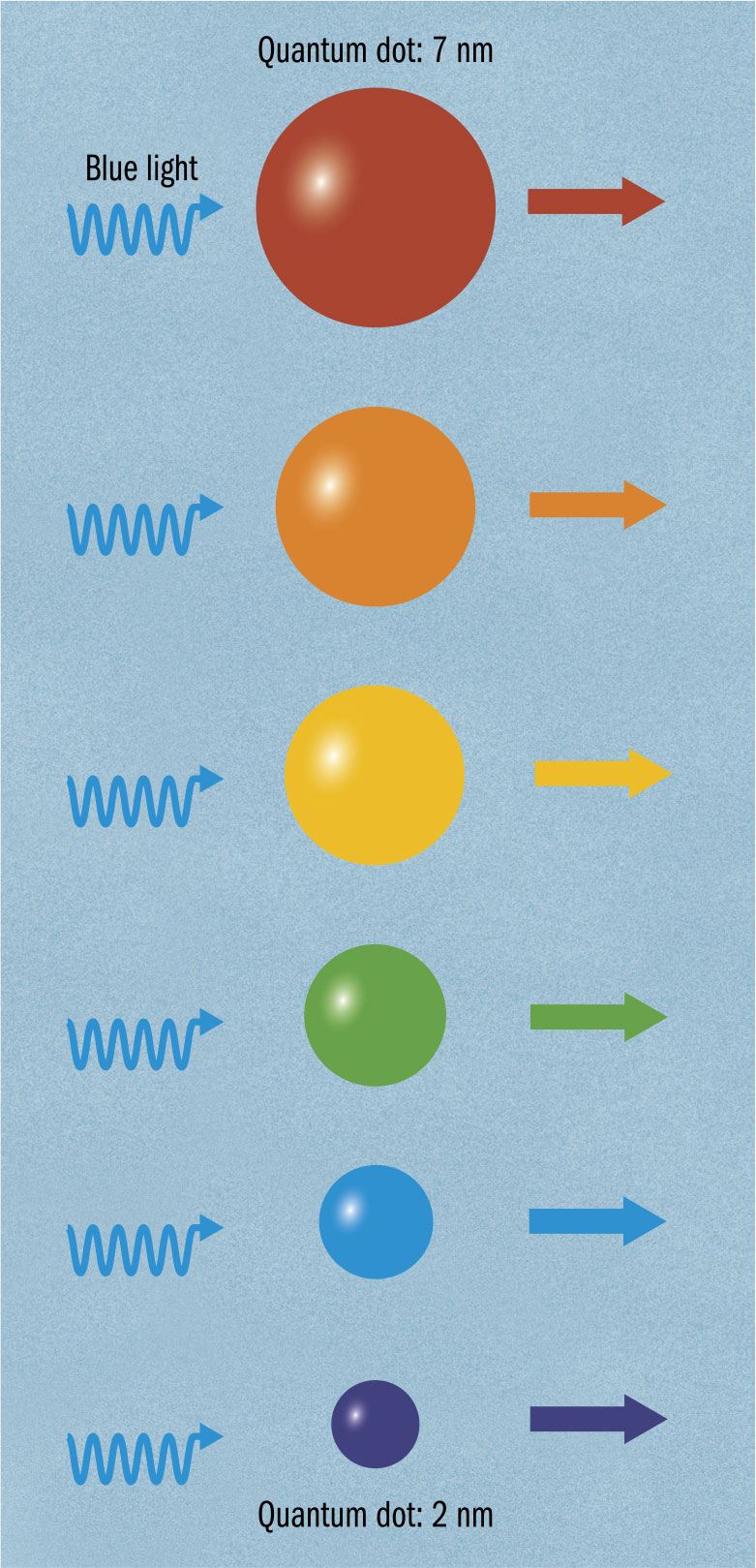

THE MAIN CONCEPT | For many applications of quantum technology, it can be useful to pin down a quantum particle. Depending on the particle, different types of trap have been employed to keep a particle in place. For example, photons have been held in mirrored cavities, and charged particles can be held using electromagnetic forces to repel their charge from all directions. This is fine for a laboratory experiment, but too bulky to be incorporated into a commercial electronic device. A quantum dot is an extremely small semiconductor device capable of trapping an electron. As a result, it can act as an artificial atom. When an electron takes a quantum leap from a higher to a lower shell in an atom, the energy difference is given off as a photon—the atom has produced light. A quantum dot can behave similarly. The electron held in the dot is given extra energy, then drops back, emitting a photon. When a crystal composed of many quantum dots has an electrical current put across it, it produces light with a color that depends on the dots’ size and shape, generating striking colors. Quantum dots can also be used as single-electron transistors. Here, the quantum dot acts like a miniature flash memory, capable of holding data without power.
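
Why the color of a quantum dot depends on its size can be sketched with the simplest quantum trap, a "particle in a box," whose energy levels are Eₙ = n²h²/(8mL²) for a box of width L. This is a heavily simplified one-dimensional model: real dots are three-dimensional, and the photon they emit also includes the energy gap of the host semiconductor. The effective electron mass of 0.1 times the free-electron mass is a hypothetical value for illustration.

```python
PLANCK = 6.62607015e-34      # J s
M_E = 9.1093837015e-31       # free electron mass, kg
EV = 1.602176634e-19         # joules per electron volt


def box_level_ev(n: int, width_m: float, effective_mass_ratio: float) -> float:
    """Level n of a 1D infinite square well: E_n = n^2 h^2 / (8 m L^2), in eV."""
    mass = effective_mass_ratio * M_E
    return n**2 * PLANCK**2 / (8 * mass * width_m**2) / EV


def confinement_gap_ev(width_m: float, effective_mass_ratio: float = 0.1) -> float:
    """Energy released when a trapped electron drops from level 2 to level 1."""
    return box_level_ev(2, width_m, effective_mass_ratio) - box_level_ev(1, width_m, effective_mass_ratio)


for width_nm in (3, 5, 8):
    print(f"{width_nm} nm dot: {confinement_gap_ev(width_nm * 1e-9):.3f} eV")
```

The energy rises as 1/L², so in this model shrinking a dot from 5 nm to 3 nm nearly triples the energy step, pushing its light toward the blue.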

DRILL DOWN | Flash memory, used in memory sticks and solid-state drives (SSDs), stores data without the need for a constant electrical current. In flash memory, each bit of storage is an isolated conductor surrounded by insulator. This conductor is either charged up or discharged to represent a 1 or a 0—because of the insulation, interaction with it can be performed only by quantum tunneling. In a quantum-dot single-electron transistor, that conductor (known as an island) is home to a single electron, rather than the multiple-electron charge in flash memory. This makes the quantum dot a good candidate for a qubit, the fundamental unit of storage in a quantum computer.

MATTER | Perhaps the most dramatic example of an electromagnetic trap for a quantum particle was demonstrated in 1980 by Hans Dehmelt of the University of Washington. He isolated a single barium ion (a charged atom). When illuminated by the right color of laser light, that ion was visible to the naked eye as a pinprick of brilliance floating in space.

QUANTUM OPTICS

THE MAIN CONCEPT | In a sense, all optical devices are quantum—they handle photons, and quantum electrodynamics (QED) describes the way that they work. So, for example, reflection off a mirror or the focusing action of a lens are quantum phenomena. However, just as electronics has been transformed by our understanding of quantum physics, so the apparently impossible can be undertaken optically using technology constructed to make explicit use of quantum phenomena—the field of quantum optics, also called photonics. One option is to use metamaterials. These are specially constructed lattices, or patterns of holes in metal sheets, that have the remarkable property of a negative refractive index. When light passes through a metamaterial, it bends the opposite way to when passing into or out of glass. Optical lenses hit a resolution limit around the wavelength of light, but this negative refractive index enables a metamaterial device to bring optical focus down to a scale previously available only to electron microscopes. Another photonic technology is a photonic crystal. Unlike metamaterials, photonic crystals exist in nature—for example producing the iridescence in an opal, a butterfly’s wing, or a peacock’s tail. But artificial photonic crystals could provide the equivalent of semiconductors in electronics, enabling the production of optical computers where photons, rather than electrons, do the work.
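
The reversed bending in a metamaterial follows directly from Snell's law, n₁ sin θ₁ = n₂ sin θ₂, once the second refractive index is allowed to be negative. In this sketch, angles are measured from the normal, and a negative result means the ray emerges on the same side of the normal as it arrived. The values n = 1.5 for glass and n = -1 for an idealized metamaterial are assumptions chosen for illustration.

```python
import math


def refraction_angle_deg(incidence_deg: float, n1: float, n2: float) -> float:
    """Snell's law n1 sin(theta1) = n2 sin(theta2), angles from the normal in degrees.

    A negative n2 gives a negative angle: the ray emerges on the same side
    of the normal as the incoming ray, instead of crossing over.
    """
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1:
        raise ValueError("no refracted ray (total internal reflection)")
    return math.degrees(math.asin(s))


print(f"air to glass (n = 1.5): {refraction_angle_deg(30, 1.0, 1.5):.1f} degrees")         # 19.5
print(f"air to metamaterial (n = -1): {refraction_angle_deg(30, 1.0, -1.0):.1f} degrees")  # -30.0
```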

DRILL DOWN | The most remarkable application of quantum optics is more reminiscent of Harry Potter or Star Trek than traditional physics. Some metamaterials can make an object invisible by bending light around it. This has been done on a small scale with microwaves, but is harder to produce with visible light, as the materials used absorb too much of the light to work effectively. However, there are alternative mechanisms that either optically amplify the restricted output of the metamaterial or use a photonic crystal to control the way that the light is diffracted—so we may still have a form of invisibility cloaking from quantum technology.

MATTER | Quantum optical devices are now ubiquitous in the form of LEDs (light-emitting diodes). These devices, which generate light by a quantum effect in which electrons drop into “holes” in a semiconductor, giving off photons, have been around since the 1950s. However, the more recent addition of blue LEDs to red and green ones, making white light possible, has transformed low-energy lighting.

SUPERFLUIDS

THE MAIN CONCEPT | Around the same time that Heike Kamerlingh Onnes discovered superconductivity, he also noticed an oddity in the behavior of the liquid helium he was using to cool his superconducting mercury. At around -455.8°F (-271°C), the helium suddenly underwent a dramatic increase in its ability to conduct heat. What was happening was a mystery, and little more was made of it until 1938, when Pyotr Kapitsa, working in Russia, and John Allen and Don Misener in the UK, established that the helium was undergoing a transition as remarkable as that of a superconductor when it cools. At this critical temperature, liquid helium suddenly lost all viscosity, becoming what would later be known as a superfluid. Viscosity is a measure of the “gloopiness” of a substance: the more viscous it is, the more it resists flowing. Just as superconductors lose all electrical resistance at their critical temperature, so superfluid helium loses all resistance to flow when cooled sufficiently. Once a superfluid is set in motion, it will never stop flowing unless it warms back above the critical temperature. Superfluidity is a rare example of a quantum phenomenon that is directly visible to the human eye: stir superfluid helium and you can see it keep rotating, while superfluid in a vessel will escape through any opening, however tiny.

DRILL DOWN | Helium in the form of a superfluid creeps up the walls of a container as a thin film, and if the container is not sealed, it will continue over the rim and flow out. Suitably shaped vessels can also produce a self-powering superfluid fountain. This behavior provided the first practical use of a superfluid, in the Infrared Astronomical Satellite, launched in 1983. The mirror of an infrared telescope needs to be kept at a constant low temperature to avoid distortion of the image. The satellite carried a container of superfluid helium, shaped so that a tiny amount pumped itself out at a time, in the process maintaining the satellite’s low temperature.

MATTER | The helium atoms in a superfluid form a special type of medium known as a Bose–Einstein condensate, where the atoms share a single quantum wave function. This applies only to the most common helium variant, helium-4. Surprisingly, however, helium-3 can also become a superfluid, at just a few thousandths of a degree above absolute zero (-459.67°F, -273.15°C), when its atoms pair up, rather like the Cooper pairs that enable superconductivity.

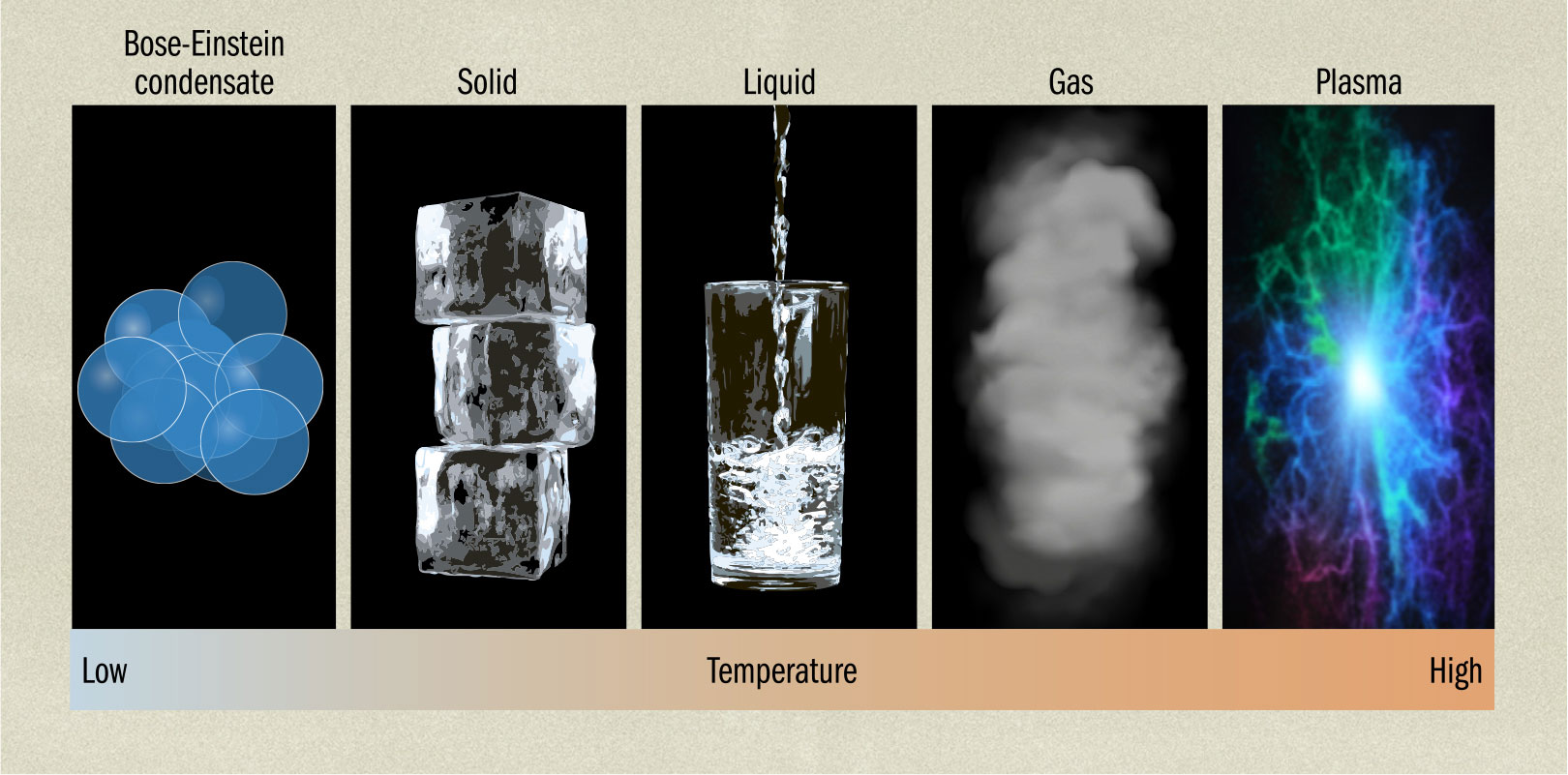

BOSE–EINSTEIN CONDENSATES

THE MAIN CONCEPT | Most of us were taught at school that matter has three states: solid, liquid, and gas. With the development of Crookes tubes and other early electronic devices, a fourth state of matter was discovered, which would later be called a plasma. This is like a gas, but instead of being composed of neutral atoms, it is made up of ions (electrically charged atoms that have either gained or lost electrons) and free electrons. Quantum physics has introduced a fifth state, the Bose–Einstein condensate. A Bose–Einstein condensate is a gas of bosons (particles such as photons, or atoms that behave as bosons) cooled to within a tiny fraction of a degree of absolute zero. At such low temperatures, most of the particles are in the lowest energy state, and they begin to behave as if they are a single collective particle, sharing a wave function. The result is a material where large collections of particles undergo processes normally associated with single particles. So, for instance, the quantum double-slit experiment can be run using condensates. This is also why a superfluid behaves as a single entity. As yet, Bose–Einstein condensates have not found a practical use, although it has been suggested that one could be used in a detector for stealth aircraft, monitoring tiny changes in gravitational pull.

DRILL DOWN | The basic particles making up matter and light come in two forms: bosons and fermions. Bosons, which include photons, can have many identical particles crammed together, all in the same state. Fermions, such as electrons or protons, obey the Pauli exclusion principle, which allows only one identical particle at the same location in the same state. Fermions have half-integer spin, such as 1/2, whereas bosons have whole-number spin. This means that compound particles such as atoms can be either bosons or fermions, depending on their makeup: an atom built from an even number of spin-1/2 particles (protons, neutrons, and electrons) has whole-number spin and is a boson, while one built from an odd number is a fermion. So, for example, helium-4, with two protons, two neutrons, and two electrons, is a boson, but helium-3, which has only one neutron, is a fermion. This is why helium-3 atoms need to pair up to form a superfluid.
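The counting rule for atoms can be written out as a short Python sketch. It assumes only that protons, neutrons, and electrons each have spin 1/2, so that adding an even number of them gives a whole-number total and an odd number gives a half-integer. The function name `classify` is hypothetical, chosen for illustration.

```python
from fractions import Fraction

def classify(protons: int, neutrons: int, electrons: int) -> str:
    """Say whether a composite particle behaves as a boson or a fermion.

    Each proton, neutron, and electron has spin 1/2. Every possible total
    spin differs from the maximum (all spins lined up) by a whole number,
    so checking the maximum is enough to decide integer vs half-integer.
    """
    n_fermions = protons + neutrons + electrons
    max_spin = Fraction(n_fermions, 2)  # e.g. 6 halves -> 3, 5 halves -> 5/2
    return "boson" if max_spin.denominator == 1 else "fermion"

print(classify(2, 2, 2))  # helium-4: boson
print(classify(2, 1, 2))  # helium-3: fermion
print(classify(4, 2, 4))  # a pair of helium-3 atoms: boson
```

The last line shows why pairing helps: two fermions together always contain an even number of spin-1/2 constituents.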

MATTER | The most dramatic demonstration using a Bose–Einstein condensate took place at Harvard, where Danish physicist Lene Hau used one to capture light. A laser was shone into a condensate, creating a pathway through the otherwise opaque material for a second laser beam. When the first laser was switched off, the light from the second was trapped in a mix of matter and light called a dark state.

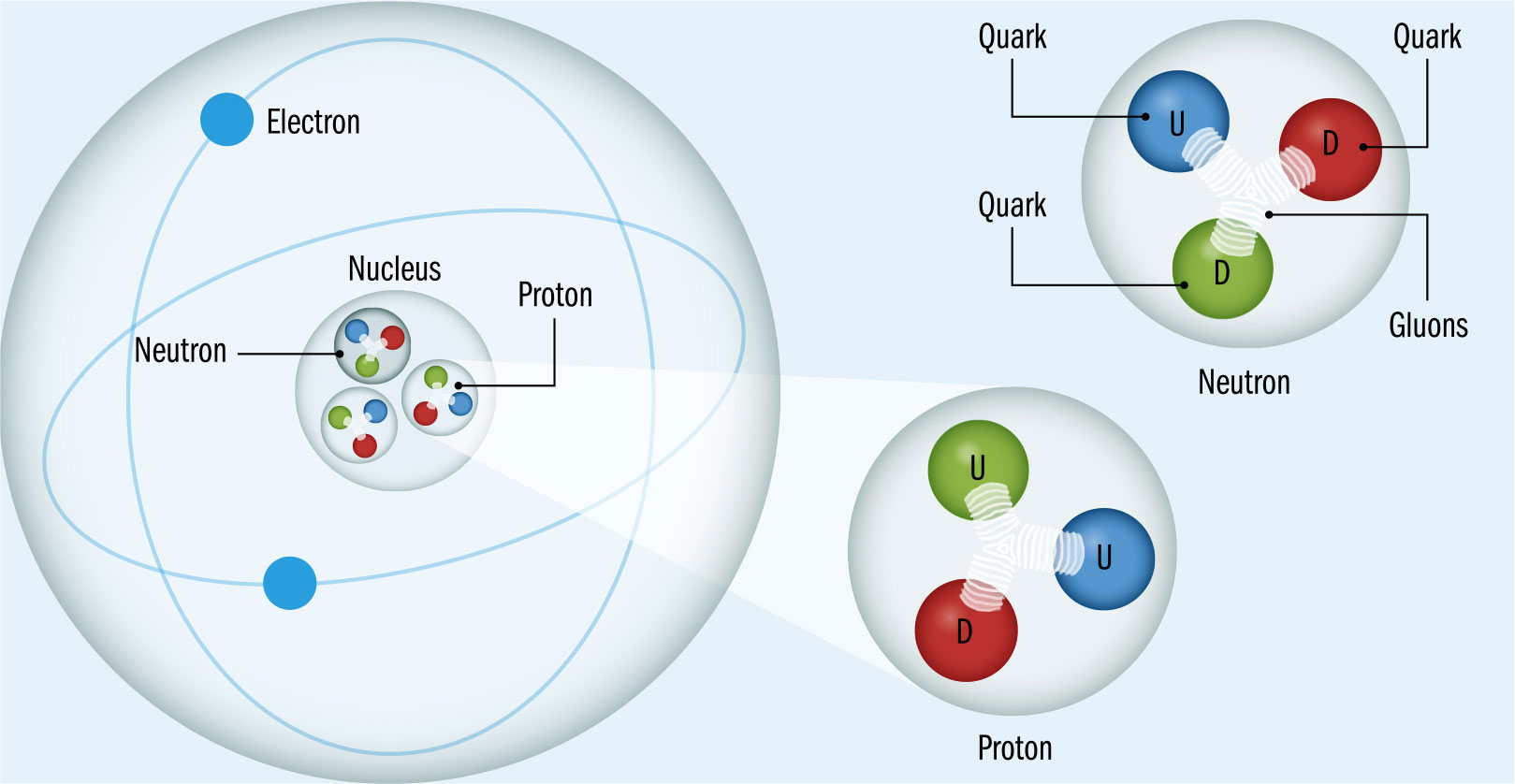

QUANTUM CHROMODYNAMICS

THE MAIN CONCEPT | With quantum electrodynamics (QED), Richard Feynman and the other developers of the theory found a way to describe interactions between matter and light, explaining electromagnetism and the way that photons carry the electromagnetic force. However, there was no equivalent for the strong nuclear force, which is responsible for the attraction between the quarks that make up protons and neutrons, and for holding the atomic nucleus together. Unlike electrons, quarks carry two kinds of charge: the familiar electrical charge and a “color” charge, which comes in three types, “red,” “blue,” and “green” (there is no actual color involved here; it’s just a name). In the 1970s, a theory equivalent to QED was developed to describe strong nuclear force interactions, called quantum chromodynamics, or QCD. Where the photon carries the electromagnetic force, bosons known as gluons carry the strong nuclear force. Unlike photons, which have no electrical charge, gluons themselves carry color charge, so they can interact with each other. This makes QCD messier than QED, with more complex Feynman diagrams, and means that the forces it describes work very differently. The force between quarks gets stronger as the particles are separated, so the quarks always stay inside particles such as protons. This is why we never see “naked” quarks.

DRILL DOWN | The “color” scheme used in quantum chromodynamics is arbitrary, in the sense that the particles aren’t really colored, but it was chosen for a reason. Just as red, blue, and green light combine to make white, quarks always combine so that their colors produce “white.” Where three quarks combine to make a proton or neutron, there must be one each of red, blue, and green. Similarly, particles called mesons are made up of a quark and an antiquark, which must carry a color and its matching anticolor, such as red and anti-red, so that the colors cancel back to pristine white.
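A common way to picture this rule, which is a simplification rather than the full mathematics of QCD, is to draw each color as an arrow on a color wheel, 120 degrees apart, with each anticolor pointing in the opposite direction. A combination is “white” when the arrows add up to nothing. In the Python sketch below the arrows are complex numbers; the names `COLOR` and `is_white` are hypothetical.

```python
import cmath
import math

# Simplified color-wheel picture (an illustrative assumption, not full QCD):
# each color is a unit arrow, the three spaced 120 degrees apart.
COLOR = {
    "red": cmath.rect(1, 0),
    "green": cmath.rect(1, 2 * math.pi / 3),
    "blue": cmath.rect(1, 4 * math.pi / 3),
}
# Each anticolor is the arrow pointing the opposite way.
for name in list(COLOR):
    COLOR["anti-" + name] = -COLOR[name]

def is_white(charges: list[str]) -> bool:
    """True if the color arrows cancel out (allowing for rounding error)."""
    total = sum(COLOR[c] for c in charges)
    return abs(total) < 1e-9

print(is_white(["red", "green", "blue"]))  # True: proton or neutron
print(is_white(["red", "anti-red"]))       # True: meson
print(is_white(["red", "green"]))          # False: not a white combination
```

In this picture the anti-red arrow points the same way as green and blue added together, which is why one wheel handles both three-quark particles and mesons.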

MATTER | It might seem that the devisers of QCD chose the wrong colors, because most of us are taught that the primary colors are red, yellow, and blue. However, those aren’t the true primaries: for light, the primaries are red, green, and blue. Pigments work by absorbing colors, so their primaries are the opposites of these, magenta, yellow, and cyan, which are simplified for children to red, yellow, and blue.

QUANTUM BIOLOGY

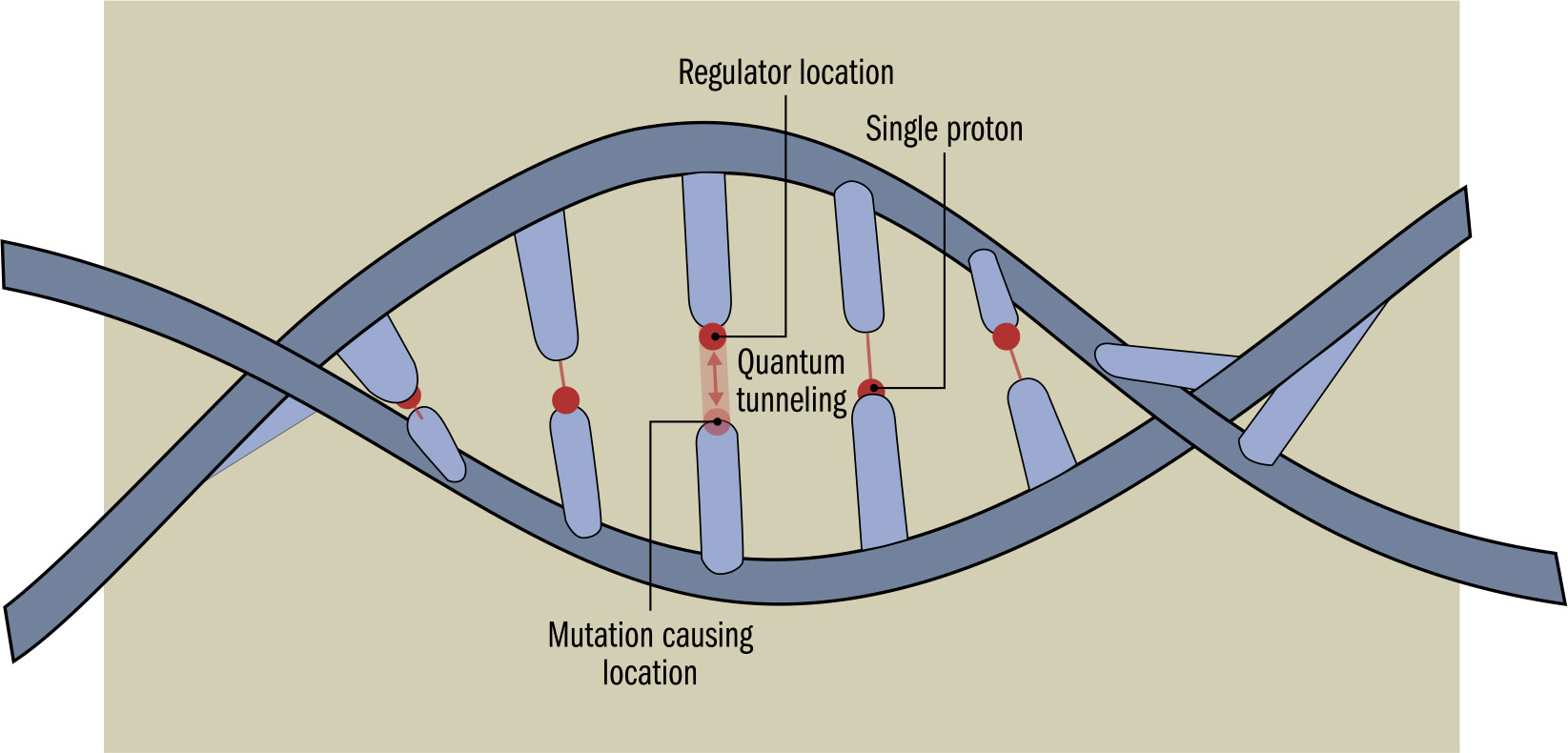

THE MAIN CONCEPT | For a long time, it was thought that the warm, wet conditions in biological organisms made it impossible for explicitly quantum biological mechanisms to exist. More recently, however, it has been discovered that a number of biological processes depend on quantum physics. One of the earliest discoveries dates back to the 1970s and involves enzymes. We’re familiar with enzymes helping biological washing powders digest stains, but primarily enzymes are biological catalysts that make processes inside organisms, such as the digestion of food, go faster. This catalysis often involves making it easier for protons or electrons to get across an energy barrier, enabling a chemical reaction to take place. Some of these particles would have enough energy to get over the barrier anyway, but enzymes make it easier for others to use quantum tunneling to get through it. The result is reactions that are significantly speeded up, in some cases thousands of times faster. The quantum contribution is large enough that, without it, many living organisms, including humans, would not be able to function. It is expected that a wide range of other quantum processes will be verified, including energy transport in photosynthesis and proton tunneling across DNA base pairs, which may cause mutations.
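To see why it is light particles such as electrons and protons that tunnel, here is a rough estimate in Python using the standard approximation for a particle meeting a rectangular barrier: the chance of getting through is about exp(-2κL), where L is the barrier width, κ = √(2m(V - E))/ħ, m is the particle’s mass, and V - E is how far the particle’s energy falls short of the top of the barrier. The barrier values below (0.5 eV short, 0.05 nm wide) are illustrative assumptions, not measurements from any real enzyme, and the approximation is only reliable for fairly thick barriers. The function name `tunneling_probability` is hypothetical.

```python
import math

HBAR = 1.054571817e-34          # reduced Planck constant, J*s
EV = 1.602176634e-19            # one electronvolt in joules
M_ELECTRON = 9.1093837015e-31   # electron mass, kg
M_PROTON = 1.67262192369e-27    # proton mass, kg

def tunneling_probability(mass_kg: float, shortfall_ev: float, width_m: float) -> float:
    """Rough chance of tunneling through a rectangular barrier.

    Uses the thick-barrier approximation T ~ exp(-2 * kappa * L), where
    kappa = sqrt(2 * m * (V - E)) / hbar and V - E is the energy shortfall.
    """
    kappa = math.sqrt(2 * mass_kg * shortfall_ev * EV) / HBAR
    return math.exp(-2 * kappa * width_m)

# Illustrative barrier: particle 0.5 eV short of the top, 0.05 nm wide.
for name, mass in [("electron", M_ELECTRON), ("proton", M_PROTON)]:
    print(f"{name}: {tunneling_probability(mass, 0.5, 0.05e-9):.1e}")
```

The proton, about 1,836 times heavier than the electron, comes out several orders of magnitude less likely to get through the same barrier, and because the width L sits inside the exponential, even a slight narrowing of the barrier makes a large difference.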

DRILL DOWN | Deoxyribonucleic acid (DNA) is the complex molecule that provides the “blueprint” for life. A primary driver of evolution is errors introduced when DNA is duplicated, and these errors may have a quantum cause. DNA is shaped like a spiral staircase. The “treads” of the staircase, called base pairs, are held together by hydrogen bonds, and when DNA is duplicated, they split down the middle. A proton in one of these bonds is capable of tunneling across to the opposite side of the pair, changing the chemical makeup of the two halves. If this happens just before the pair splits, the altered halves can be matched with the wrong partners when they are copied, modifying the genetic code. As a result, the data stored in the DNA is altered, producing a new variant, or mutation.

MATTER | It’s thought that quantum processes could act as a natural quantum computer in plant photosynthesis. The energy captured from sunlight has to be passed on to a different part of the plant’s cell, and there are a number of possible routes it could take. Somehow, the best route is selected, perhaps via a wave-like, probabilistic quantum mechanism that tries all possible routes.

QUANTUM GRAVITY

THE MAIN CONCEPT | Between them, quantum theory and relativity support the bulk of modern physics. However, there is a problem: the two are incompatible. Albert Einstein’s general theory of relativity is “classical”; in it, gravity varies continuously rather than coming in quanta. Quantum theory, meanwhile, treats space-time as a fixed background that does not warp. It might seem that there isn’t much need for a unification. Quantum theory is extremely effective at describing small objects, while general relativity comes into play for large-scale ones, which are the only ones where gravity, by far the weakest of the forces, is significant. However, there are some situations where the two are forced together. For example, the big bang theory covers the entire universe, but begins with everything compressed into a near-dimensionless point, where quantum physics should reign supreme. Similarly, although the concept of a black hole emerged from the general theory of relativity (in fact, from the very first solution of its equations, found soon after the theory was published), the heart of a black hole seems to be a near-dimensionless point too, requiring quantum physics to explain its behavior. Some believe a unification is possible through concepts such as string theory or loop quantum gravity; others think we will need to totally rewrite at least one of these highly successful theories.

DRILL DOWN | A number of theories have been put forward to provide a unified approach that delivers quantum gravity. Perhaps the best known is string theory (or, more properly, the overarching M-theory of which it forms a part). In simple terms, it sounds very attractive: all particles become vibrating variants of the same fundamental object, a string. However, the theory requires more spatial dimensions than we observe, and has so far failed to make any testable predictions. An alternative, loop quantum gravity, envisages a quantum framework for space-time itself, but it is as yet less well developed than string theory and does not fulfill all the requirements for combining quantum physics and general relativity.

MATTER | If gravity were quantized, we would expect it to have a carrier particle, like the other forces described by quantum theory, such as electromagnetism and the strong nuclear force. For electromagnetism, the carrier is the photon; for gravity, the hypothetical carrier is the graviton. But gravity is so weak that detecting a graviton is not feasible with any currently envisaged technology.