Chapter 14. Custom PCs and Common Devices

This chapter covers the following A+ 220-1001 exam objectives:

• 3.8 – Given a scenario, select and configure appropriate components for a custom PC configuration to meet customer specifications or needs.

• 3.9 – Given a scenario, install and configure common devices.

Let’s customize! This chapter is all about purpose: the reasons people use computers and how they use them. In short, custom computers. We’ll also briefly discuss the basics of first-time computer usage. Let’s go!

3.8 – Given a scenario, select and configure appropriate components for a custom PC configuration to meet customer specifications or needs.

ExamAlert

Objective 3.8 concentrates on the following concepts: Graphics/CAD/CAM design workstation, audio/video editing workstation, virtualization workstation, gaming PC, network attached storage device, standard thick client, and thin client.

There are several custom configurations that you might encounter in the IT field. You should be able to describe what each type of computer is and the hardware that is required for these custom computers to function properly.

Graphic/CAD/CAM Design Workstation

Graphic workstations are computers that illustrators, graphic designers, artists, and photographers (among other professionals) work at. Often, these graphic workstations will be Mac desktops, but they can be PCs as well; it depends on the preference of the user. Professionals will use software tools such as Adobe Illustrator, Photoshop, and Fireworks, as well as CorelDRAW, GIMP, and so on.

Computer-aided design (CAD) and computer-aided manufacturing (CAM) workstations are common in electrical engineering, architecture, drafting, and many other engineering arenas. They run software such as AutoCAD.

Both types of software are GPU- and CPU-intensive, and the images require a lot of space on the screen. 3-D design and rendering of drawings and illustrations can be very taxing on a computer. So, hardware-wise, these workstations need a high-end video card, perhaps a workstation-class video card, which is much more expensive. They also require as much RAM as possible. If a program has a recommended RAM requirement of 4 GB, you should consider quadrupling that amount to 16 GB; plus, the faster the RAM, the better. Just make sure your motherboard (and CPU) can support it. Next, a solid-state drive can be very helpful when opening large files, saving them, and especially rendering them into final deliverable files. That means a SATA Rev. 3.0 SSD is a good minimum, but an NVMe drive would be better, either M.2 or PCI Express slot-based, or possibly something more advanced than that. Finally, going beyond the computer itself, a large display is often required, one with inputs that match the outputs of the video card. For example, the professional might choose a 27” LED-LCD with an excellent contrast ratio and black levels, and the ability to connect with DisplayPort, DVI, or HDMI (whichever the professional favors).
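The quadrupling rule of thumb can be written as a tiny calculation. This is a minimal sketch: the function name, the `factor` parameter, and the idea of capping the result at the motherboard's maximum supported RAM are illustrative assumptions, not a vendor formula.

```python
def suggested_workstation_ram(recommended_gb: int, board_max_gb: int, factor: int = 4) -> int:
    """Rule of thumb from the text: multiply the program's recommended RAM
    by four, but never exceed what the motherboard (and CPU) can support."""
    if recommended_gb <= 0 or board_max_gb <= 0:
        raise ValueError("RAM sizes must be positive")
    return min(recommended_gb * factor, board_max_gb)


print(suggested_workstation_ram(4, 64))   # 16
print(suggested_workstation_ram(16, 32))  # 32 (capped by the motherboard)
```

The cap matters in practice: a 16 GB recommendation would suggest 64 GB, which some boards cannot hold.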

ExamAlert

Don’t forget, graphic/CAD/CAM design workstations need powerful high-end video cards, solid-state drives (SATA and/or M.2), and as much RAM as possible.

Audio/Video Editing Workstation

Multimedia editing, processing, and rendering require a fast computer with high-capacity storage and big displays (usually more than one). Examples of audio/video workstations include:

• Video recording/editing PCs: These run software such as Adobe Premiere Pro or Apple Final Cut.

• Music recording PCs: These run software such as Logic Pro X or Pro Tools.

Note

Identify the software programs listed above and understand exactly what they are used for.

Adobe Premiere Pro: https://www.adobe.com/products/premiere.html

Apple Final Cut: https://www.apple.com/final-cut-pro/

Apple Logic Pro: https://www.apple.com/logic-pro/

Avid Pro Tools: https://www.avid.com/en/pro-tools

This just scratches the surface, but you get the idea. These computers need to be designed to easily manipulate video files and music files. So, from a hardware standpoint, they need a specialized video or audio card, the fastest drive available with a lot of storage space (definitely an SSD, and perhaps NVMe-based or SATA Express), and multiple monitors (to view all of the editing windows). Keep in mind that the video cards and specialized storage drives are going to be expensive devices; be sure to employ all antistatic measures before working with them.

Some video editing workstations might require a secondary video card or external device for the capturing of video or for other video usage. Likewise, many audio editing workstations will require a secondary audio device, and will often rely on external audio processors and other audio equipment to “shape” the sound before it enters the computer via USB or otherwise.

ExamAlert

Remember that audio/video workstations need specialized A/V cards; large, fast hard drives; and multiple monitors.

Note

Most specialized, custom computers should have powerful multi-core CPUs.

Virtualization Workstation

A virtualization workstation is a computer that runs one or more virtual operating systems (also known as virtual machines or VMs). Did you ever wish that you had another two or three computers lying around so that you could test multiple versions of Windows, Linux, and possibly a Windows Server OS all at the same time? Well, with virtualization software, you can do this by creating a virtual machine for each OS. But if you run those at the same time on your main computer, you are probably going to bring that PC to a standstill. However, if you build a workstation specializing in virtualization, you can run whatever operating systems you need on it. The virtualization workstation uses what is known as a hypervisor, which allows multiple virtual operating systems (guests) to run at the same time on a single computer. A hypervisor is also known as a virtual machine manager (VMM). There are two different kinds:

• Type 1: Native: This means that the hypervisor runs directly on the host computer’s hardware. Because of this, it is also known as bare metal. Examples of this include VMware vSphere and Microsoft Hyper-V (for Windows Server).

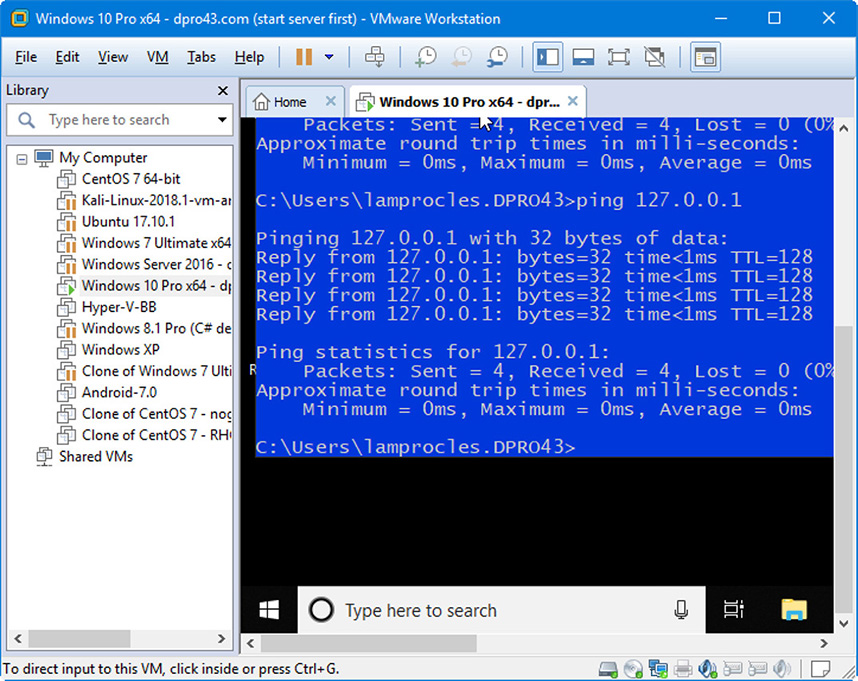

• Type 2: Hosted: This means that the hypervisor runs within (or “on top of”) the operating system. Guest operating systems run within the hypervisor. Compared to Type 1, guests are one level removed from the hardware and therefore run less efficiently. Examples of this include VirtualBox, VMware Workstation, and Hyper-V for Windows 10. Figure 14.1 shows an example of VMware Workstation running. You will note that it has a variety of virtual machines inside, such as Windows 10 Pro (running), Windows Server, and Ubuntu Linux.

Figure 14.1 VMware Workstation

Generally, Type 1 is a much faster and more efficient solution than Type 2. Because of this, Type 1 hypervisors are the kind used for virtual servers by web-hosting companies and by companies that offer cloud-computing solutions. It makes sense, too. If you have ever run a powerful operating system within a Type 2 hypervisor, you know that a ton of resources are used, and those resources are taken from the host operating system. It is not nearly as efficient as running the guest OS within a Type 1 environment. However, keep in mind that the hardware/software requirements for a Type 1 hypervisor are more stringent and costly.

For virtualization programs to function, the appropriate virtualization extensions need to be turned on in the UEFI/BIOS. Intel CPUs that support x86 virtualization use the VT-x virtualization extension. Intel chipsets use the VT-d and VT-c extensions for input-output memory management and network virtualization, respectively. AMD CPUs that support x86 virtualization use the AMD-V extension. AMD chipsets use the AMD-Vi extension. After virtualization has been enabled in the UEFI/BIOS, some programs, such as Microsoft Hyper-V, need to be turned on in Windows. To do this, go to Control Panel > All Control Panel Items > Programs and Features, click the Turn Windows features on or off link, and then select the Hyper-V checkbox. Windows will not allow this if virtualization has not been enabled in the UEFI/BIOS, or in the uncommon case that the CPU doesn’t support virtualization.
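On a Linux host, one quick way to see which of these CPU extensions a processor advertises is to look for the vmx (Intel VT-x) or svm (AMD-V) flag in /proc/cpuinfo. The sketch below assumes that file format, and the function name is hypothetical. Because some kernels can still list the flag when the feature is switched off in the UEFI/BIOS, treat the result as a capability check rather than proof that virtualization is enabled.

```python
from pathlib import Path
from typing import Optional


def virtualization_extension(cpuinfo_text: str) -> Optional[str]:
    """Return "VT-x", "AMD-V", or None from /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # x86 kernels list CPU features on lines like "flags\t\t: fpu vme ... vmx ..."
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            flags.update(values.split())
    if "vmx" in flags:
        return "VT-x"   # Intel's CPU virtualization extension
    if "svm" in flags:
        return "AMD-V"  # AMD's CPU virtualization extension
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # exists only on Linux
    if cpuinfo.exists():
        print(virtualization_extension(cpuinfo.read_text()) or "no extension reported")
    else:
        print("/proc/cpuinfo not found; this check is Linux-only")
```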

Any computer designed to run a hypervisor often has a powerful CPU (or multiple CPUs) with multiple cores and as much RAM as can fit in the system. This means a powerful, compatible motherboard as well. In essence, the guts of the system, its core components, need to be robust. Keep in mind that the motherboard UEFI/BIOS and the CPU should have virtualization support.

ExamAlert

Remember that virtualization systems depend on the CPU and RAM heavily. These systems require maximum RAM and CPU cores.

Gaming PC

Now we get to the core of it: Custom computing is taken to extremes when it comes to gaming. Gaming PCs require almost all the resources mentioned previously: a powerful, multicore CPU; lots of fast RAM; one or more SSDs (SATA Express or PCI Express); advanced cooling methods (liquid cooling if you want to be serious); high-definition sound card; plus a fast network adapter and strong Internet connection.

But without a doubt, the most important component is the video card. Typical end-user video cards cannot handle today’s PC games. So, a high-end video card with a specialized GPU is the key. Also, a big monitor that supports high resolutions and refresh rates couldn’t hurt. (And let’s not forget about mad skills.)

All of this establishes a computer that is expensive and requires care and maintenance to keep it running in perfect form. For the person who is not satisfied with gaming consoles, this is the path to take.

ExamAlert

A gaming PC requires a high-end video card with a specialized GPU, a high-definition sound card, an SSD, and high-end cooling.

Games are some of the most demanding applications available. If even just one of the mentioned elements is missing from a gaming system, it could easily ruin the experience. You might think you could do without an SSD, and it’s true that SSDs mainly provide faster load times for games and levels and don’t do much after that. However, when you look at the mammoth size of some of today’s games, that’s enough reason to install one. As of the writing of this book (2019), many gamers rely on M.2 drives.

Don’t forget, the video card is the #1 component of this equation. Gamers are always looking to push the envelope for video performance by increasing the number of frames per second (frames/s or fps) that the video card sends to the monitor. One of the ways to improve the video subsystem is to employ multiple video cards. It’s possible to take video to the next level by incorporating NVIDIA’s Scalable Link Interface (SLI) or AMD’s CrossFire (CF). A computer that uses one of these technologies has two (or more) identical video cards that work together for greater performance and higher resolution. The compatible cards are bridged together to essentially work as one unit. It is important to have a compatible motherboard and ample cooling when attempting this type of configuration. Currently, this is done with two or more PCI Express video cards (x16/version 3 or higher) and is most commonly found in gaming rigs, but you might find it in other PCs as well (such as video editing or CAD/CAM workstations). Because some motherboards come with only one PCIe x16 slot for video, a gaming system needs a more advanced motherboard: one with at least two PCIe x16 slots to accomplish SLI or CrossFire. It is costly being a gamer!
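Frame rate is often easier to reason about as a time budget: at a given fps, the video card has 1000 ÷ fps milliseconds to produce each frame. The short sketch below just does that arithmetic; the function name is illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds the video card has to render one frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for fps in (30, 60, 144):
    print(f"{fps:>3} fps -> {frame_time_ms(fps):.2f} ms per frame")
# Prints 33.33, 16.67, and 6.94 ms respectively.
```

Doubling the frame rate halves the time available per frame, which is why higher fps targets push gamers toward more powerful (or multiple) video cards.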

Network Attached Storage Device

Network attached storage (NAS) refers to one or more hard drives installed in a device, known as a NAS box or NAS server, that connects directly to the network. The device can then be accessed via browsing or as a mapped network drive from any computer on the network. For example, a typical two-bay NAS box can hold two SATA drives that are either combined into one large volume or set up in a RAID 1 mirrored configuration for fault tolerance. Remember, RAID 1 mirroring means that two drives are used in unison and all data is written to both drives, giving you a mirror, or extra copy, of the data if one drive fails. Larger NAS boxes can have four drives or more, and can incorporate RAID 5 (striping with parity), RAID 6 (striping with double parity), or RAID 10 (stripe of mirrors).
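When planning a NAS, it helps to estimate how much of the raw drive space each of the layouts mentioned above leaves usable. The sketch below assumes identical drives and ignores file-system overhead; the level names, including "span" for simply combining the drives into one large volume, are labels chosen for this example.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Approximate usable capacity of an array of identical drives.

    Ignores file-system and metadata overhead.
    """
    minimum = {"span": 1, "raid1": 2, "raid5": 3, "raid6": 4, "raid10": 4}
    if level not in minimum:
        raise ValueError(f"unknown level: {level}")
    if drives < minimum[level]:
        raise ValueError(f"{level} needs at least {minimum[level]} drives")
    if level == "span":
        return drives * drive_tb          # all space usable, no redundancy
    if level == "raid1":
        return drive_tb                   # every drive holds the same data
    if level == "raid5":
        return (drives - 1) * drive_tb    # one drive's worth of parity
    if level == "raid6":
        return (drives - 2) * drive_tb    # two drives' worth of parity
    if drives % 2:
        raise ValueError("raid10 needs an even number of drives")
    return drives // 2 * drive_tb         # half the drives hold mirror copies


print(usable_capacity_tb("raid1", 2, 4))  # 4: a two-bay mirror of 4 TB drives
print(usable_capacity_tb("raid5", 4, 4))  # 12
```

The trade-off is visible in the numbers: the more drive failures a layout can survive, the less of the raw capacity is available for data.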

You could also build your own custom PC that acts as a NAS box. This would require two or more identical drives, preferably with trays on the front of the case for easy accessibility. Then, run FreeNAS or other similar software to take just about any system with the right hard drives and turn it into a network attached storage box. With the right hardware you can end up with a faster NAS box than a lot of the devices on the market today.

These devices connect to the network by way of an RJ-45 port (often a gigabit NIC running at 1000 Mbps, or even 10 Gbps). Of course, there are much more advanced versions of NAS boxes that would be used by larger companies. These often have hot-swappable drives that can be removed and replaced while the device is on. Usually, the drives are mounted in a plastic enclosure or tray that slides into the NAS device. But watch out: Not every hard drive in a plastic hard drive enclosure is hot-swappable. The cheaper versions are usually not; they can be swapped in and out, but not while the device is on. These devices might work in conjunction with cloud storage and, in some cases, act as cloud storage themselves. It all depends on their design and their desired usage.

The ultimate goal of the NAS box is to allow file sharing to multiple users in one or more locations. To do this, the administrator of the NAS box first sets up the RAID array (if the device is to use one). Then the admin formats the drives to a particular file system; for instance, ext4 or BTRFS (for typical Linux-based NAS devices) or perhaps ZFS (for PC-based free NAS solutions). Next, the admin sets up services; for example, SMB for Windows and Mac connectivity. To connect to the NAS box from a system over the network, the path would be \\nasbox (for Windows) or smb://nasbox (for Mac). Replace nasbox with whatever name the device uses. The admin might also choose to make use of AFP or NFS for connectivity. See Chapter 5, “Ports, Protocols, and Network Devices,” for more information about these protocols. All of this is configured in the included software, which is loaded into a separate volume from the data to be stored by the users. Generally, an admin makes configurations in the NAS box with an Internet browser, using either the IP address or name of the device to connect.
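The Windows and Mac path forms differ only in syntax, as this small sketch shows. The helper and its optional `share` argument are hypothetical; in practice, an SMB server usually exposes one or more named shares beneath the device name.

```python
def nas_path(host: str, client_os: str, share: str = "") -> str:
    """Build the path a client would use to reach an SMB share on a NAS."""
    if client_os == "windows":
        path = f"\\\\{host}"                 # UNC form, e.g. \\nasbox
        return f"{path}\\{share}" if share else path
    if client_os == "mac":
        path = f"smb://{host}"               # URL form, e.g. smb://nasbox
        return f"{path}/{share}" if share else path
    raise ValueError(f"unsupported client OS: {client_os}")


print(nas_path("nasbox", "windows"))          # \\nasbox
print(nas_path("nasbox", "mac", "media"))     # smb://nasbox/media
```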

Some of these NAS devices go beyond simple file sharing. For example, they might also incorporate media streaming of videos, an FTP server, and a web server. Some provide many other services, but beware, any of these can be taxing on the CPU, and can lead to potential security vulnerabilities. Use only what you need!

Note

The following link leads to a video/article demonstrating a NAS device installation: https://dprocomputer.com/blog/?p=2006

ExamAlert

Network attached storage (NAS) devices usually require a RAID array, gigabit NIC, file sharing, and the capability for media sharing.

Thin Client

A thin client (also known as a slim, lean, or cloud client) is a computer that has few resources compared to a typical PC. Usually, it depends heavily on a server. It is often a small device integrated directly into the display, or it could be a stand-alone device using an ultra-small form factor (about the size of a cable modem or gaming console). Some thin clients are also known as diskless workstations because they have no hard drive or optical drive. They do have a CPU, RAM, and ports for the display, keyboard, mouse, and network; they can connect wirelessly as well. They are also known simply as computer terminals, which might provide only a basic GUI and possibly a web browser. There is a bit of a gray area when it comes to thin clients due to the different models and types over the years, but the following gives a somewhat mainstream scenario.

Other examples of thin clients include point-of-sale (POS) systems such as the self-checkout systems used at stores or touchscreen menus used at restaurants. They serve a single purpose and require minimum hardware resources and minimum OS requirements.

When a typical thin client is turned on, it loads the OS and applications from an image stored (embedded) on flash memory or from a server. The OS and apps are loaded into RAM; when the thin client is turned off, all memory is cleared.

ExamAlert

Viruses have a hard time sticking around a thin client because the RAM is completely cleared every time it is turned off.

So, the thin client is dependent on the server for a lot of resources. Thin clients can connect to an in-house server that runs specially configured software or they can connect to a cloud infrastructure to obtain their applications (and possibly their entire operating system).

Note

Back in the day, this was how a mainframe system worked; however, back then, the terminal did virtually no processing, had no CPU, and was therefore referred to as a “dumb” terminal. This is an example of centralized computing, where the server does the bulk of the processing. Today, mainframes are still in use, but the terminal (thin client) incorporates a CPU.

The whole idea behind thin clients is to transfer a lot of the responsibilities and resources to the server. With thin-client computing, an organization purchases more powerful and expensive servers but possibly saves money overall by spending less on each thin client (for example, Lenovo thin clients) while benefitting from a secure design. The typical thin client might have one of several operating systems embedded into the flash memory, depending on the model purchased. This method of centralizing resources, data, and user profiles is considered to be a more organized and secure solution than the typical PC-based, client/server network, but it isn’t nearly as common.

ExamAlert

A thin client runs basic, single-purpose applications, meets the minimum manufacturer’s requirements for the selected operating system, and requires network connectivity to reach a server or host system where some, or even the majority, of processing takes place.

Standard Thick Client

A standard thick client is effectively a PC. It is much more common in the workplace than the thin client. Unlike a thin client, a thick client performs the bulk of data processing operations by itself and uses a drive to store the OS, files, user profile, and so on. Whereas thin clients are part of a somewhat centralized computing model, a typical local area network of thick-client PCs is an example of distributed computing, where the processing load is dispersed more evenly among all the computers. There are still servers, of course, but the thick client has more power and capabilities compared to the thin client. Distributed computing is by far the more common method today. When using a thick client, it’s important to verify that it meets the recommended requirements for the selected OS.

An example of a standard thick client is a desktop computer that runs Windows 10 and Microsoft Office, offers web browsing, and allows the user to easily install software. This standard thick client should meet (or exceed) the minimum requirements for Windows 10, including a 1 GHz 64-bit CPU, 2 GB of RAM, and 20 GB of free hard drive space.

Note

Personally, I always recommend exceeding the minimum requirements as much as possible; and an SSD can’t hurt either.
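One way to compare a proposed thick client against the Windows 10 minimums quoted above is a simple checklist. This sketch encodes only those figures (1 GHz 64-bit CPU, 2 GB of RAM, 20 GB of free space); Microsoft revises its requirements over time, so check the current published values before relying on them. The class and function names are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PcSpec:
    cpu_ghz: float
    cpu_64bit: bool
    ram_gb: float
    free_disk_gb: float


# 64-bit Windows 10 minimums as quoted in this section.
WIN10_MINIMUM = PcSpec(cpu_ghz=1.0, cpu_64bit=True, ram_gb=2, free_disk_gb=20)


def shortfalls(pc: PcSpec, minimum: PcSpec = WIN10_MINIMUM) -> List[str]:
    """List every way a PC falls short of the minimum; an empty list means it qualifies."""
    problems = []
    if minimum.cpu_64bit and not pc.cpu_64bit:
        problems.append("CPU is not 64-bit")
    if pc.cpu_ghz < minimum.cpu_ghz:
        problems.append(f"CPU {pc.cpu_ghz} GHz < {minimum.cpu_ghz} GHz")
    if pc.ram_gb < minimum.ram_gb:
        problems.append(f"RAM {pc.ram_gb} GB < {minimum.ram_gb} GB")
    if pc.free_disk_gb < minimum.free_disk_gb:
        problems.append(f"free disk {pc.free_disk_gb} GB < {minimum.free_disk_gb} GB")
    return problems


print(shortfalls(PcSpec(cpu_ghz=3.2, cpu_64bit=True, ram_gb=16, free_disk_gb=400)))  # []
print(shortfalls(PcSpec(cpu_ghz=1.6, cpu_64bit=True, ram_gb=1, free_disk_gb=32)))
```

An empty list only means the machine clears the floor; in keeping with the note above, a comfortable margin over every figure is the better target.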

ExamAlert

A standard thick client runs desktop applications such as Microsoft Office and meets the manufacturer’s recommended requirements for the selected operating system. The majority of processing takes place on the thick client itself.

Cram Quiz

Answer these questions. The answers follow the last question. If you cannot answer these questions correctly, consider reading this section again until you can.

1. Which of the following is the best type of custom computer for use with Pro Tools?

A. Graphic/CAD/CAM design workstation

B. Audio/video editing workstation

C. Gaming PC

D. Virtualization workstation

2. Which of the following would include a gigabit NIC and a RAID array?

A. Gaming PC

B. Audio/video editing workstation

C. Thin client

D. NAS

3. Your organization needs to run Windows in a virtual environment. The OS is expected to require a huge amount of resources for a powerful application it will run. What should you install Windows to?

A. Type 2 hypervisor

B. Gaming PC

C. Type 1 hypervisor

D. Thin client

Cram Quiz Answers

1. B. The audio/video editing workstation is the type of custom computer that would use Pro Tools, Logic Pro X, and other music and video editing programs.

2. D. A NAS device (network attached storage) will allow users to access files and stream media; it normally has a gigabit NIC and a RAID array. The rest of the answers will most likely include a gigabit network connection, but not a RAID array.

3. C. If the virtual operating system needs a lot of resources, the best bet is a “bare metal” Type 1 hypervisor. Type 2 hypervisors run on top of an operating system and therefore are not as efficient with resources. Gaming PCs have lots of resources but are not meant to run virtual environments. Thin clients have the least amount of resources.

3.9 – Given a scenario, install and configure common devices.

ExamAlert

Objective 3.9 focuses on the following: desktops (thin client, thick client, account setup/settings); and laptop/common mobile devices (touchpad configuration, touchscreen configuration, application installations/configurations, synchronization settings, account setup/settings, wireless settings).

This section is about doing basic configurations of desktops and mobile devices. A lot of what is covered in this section is fairly basic and may have been covered elsewhere in the book. Also, a good deal of it is probably well known to many readers. So, I’ve decided to keep it brief and explain things with a “train the trainer” mindset. As a technician, you will often be called upon to explain to users how to use a computer, connect peripherals, and configure basic settings. Allow me to share some of my training knowledge with you to make the process more efficient and pleasant for everyone involved.

Desktop Devices and Settings

The setups of thin and thick clients can differ from a hardware and connectivity standpoint. The main difference is that the thick client has a hard drive (and additional hardware). But generally, they use the same types of ports and peripherals, such as keyboards, mice, monitors, and wired and wireless network connections.

Thin and thick clients might even run the same operating system. The real difference is how the operating system is “installed” and how it runs. For example, a typical thick client has its operating system installed locally, to a hard drive that is internal to the computer. But a thin client doesn’t use a hard drive. Instead, it gets its OS either from a server (over the network or from the cloud), or it uses an embedded OS image stored in flash memory. In the more common case of a server, the concept is that the thin client requires limited resources, but this means the server must have a greater amount of resources to support the thin client’s needs: OS, apps, and data. Quite often this means virtualization and the use of backend products from Citrix, Microsoft, AWS, and others.

Regardless of whether we use thin or thick clients, the basic settings and account setup are going to be very similar. Once everything has been connected and the operating systems have been set up, the user needs to perform an initial login. The user will usually be required to enter a username and password, and possibly use other authentication methods such as biometric data. If the computer is a member of a domain (for example, a Windows 10 client that is a member of a Windows Server 2016 domain), then the user will have to select the domain to log on to. This is normally listed directly below the username and password.

For initial logins, the user will often be prompted to go through account setup. Typical setup questions include “What country are you from?”, “What language would you like to use?”, and “What time zone are you located in?” Then, there may be additional configuration questions about video, the Internet connection the user would like to use, and so on. All of this information is stored in a user profile, so that the user doesn’t have to reconfigure everything after each login. This setup is virtually the same irrespective of the OS you use: Windows, macOS, or Linux.

One of the things I like to tell users is to select a strong password and commit it to memory right away before first logging in. It’s also a good idea to write a setup guide—a short document that explains step-by-step how the user can first start using their computer. This type of documentation might be required if your organization is compliant with certain regulations or standards.

Laptop Configurations and Settings

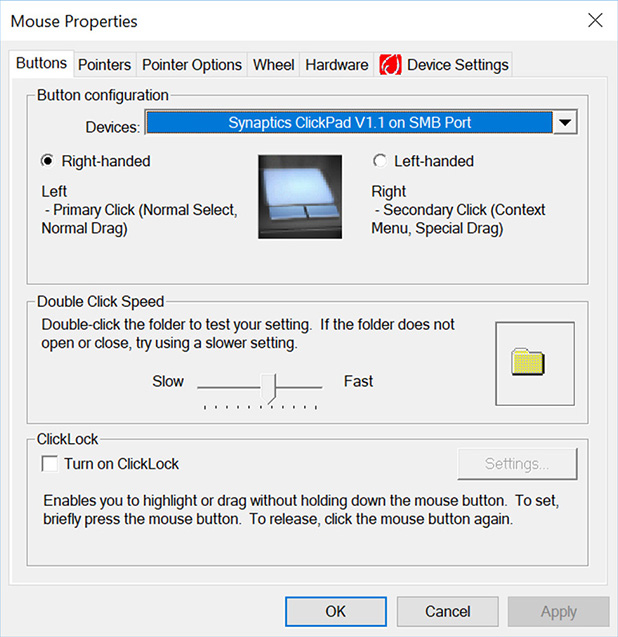

Laptops and Chromebooks have some added features, such as the touchpad and touchscreen. These are configured for the average user by default, but in some cases, a device might automatically attempt to calibrate itself based on the user during the initial login, potentially asking the user to tap, drag, and perform other motions. If not, these devices can be configured in the Control Panel (in Windows) or in Settings > Device (in Chrome OS). For example, Figure 14.2 displays the Mouse Properties window in Windows 10. It shows a Synaptics touchpad device being used by a laptop. Configuration of a touchpad is similar to the configuration of a mouse (which is why Windows usually places it in Mouse Properties), but you might have additional configurations for touch sensitivity and the types of two-finger or multi-finger gestures. Often, a touchpad can be disabled from one of the tabs in the Mouse Properties window as well.

Figure 14.2 Typical Touchpad Mouse Properties in Windows

When working with users, teach them about the primary click and the secondary click (also known as the alternate click). For the right-handed user, the primary click is the left click; for the left-handed user, the primary click is the right click. As shown in Figure 14.2, a touchpad’s buttons are usually at the bottom, as opposed to a mouse, which normally has them at the top. Teach users that they can usually tap and drag on today’s touchpads in the same way that they would on a touchscreen (which almost everyone knows how to do).

Speaking of touchscreens, some allow the touch sensitivity to be increased or decreased, but many (as of the writing of this book) have a fixed pressure sensitivity that cannot be changed or, if it can be changed, is buried deep within the OS. However, the touchscreen orientation can be set to landscape or portrait mode and locked into place, and some touchscreens can be configured to work better with a stylus instead of a finger. Once again, teach users how to tap, drag, pinch, expand, and perform two-finger scrolls and other multi-finger gestures.

A user should also be trained on how to install applications (if he/she has the ability to do so!), synchronize data, perform initial account setup, and connect to wireless networks. We discuss most of these things elsewhere in the book. The basic steps are virtually the same whether a user account is Apple-, Microsoft-, or Google-based. But there are a few things to remember:

1. When a user first logs on to a computer, that user will need to know the username (which could be an e-mail address or common name) and the password. Complex and lengthy passwords can be difficult for some users to remember and often result in help desk and tech support calls. Train the user to memorize a password when it is first selected by going over it again and again in his or her mind.

2. To access the Internet, a user might be required to connect to a wireless network. Make it easy for the user to remember the wireless network name (SSID) and the password (pre-shared key or PSK). If the password is delivered to the user in some way, make sure that it is deleted or made unavailable after 24 hours. If any passwords are written down, make sure that they are shredded after memorization.

3. Apps can be downloaded from a variety of locations: the Play Store, App Store, the Microsoft Store, and so on—it all depends on what OS is being used. Train the user to be very careful when selecting an app, and consider locking mechanisms that disallow users from installing apps at all; that is, if they aren’t part of the OS design by default.

4. Know that many operating systems and their associated apps will, by default, save newly created files to the cloud. Train users in how this works and explain that this type of file save happens automatically (auto-save). However, a user can opt to save documents locally (if storage is available). Teach them to find the “Save As” icon or use F12 on the keyboard, or Ctrl+S.

5. Most companies and their OSes set up new accounts to synchronize to the cloud automatically. That means that settings and data can be transferred from one device to another. Teach users how this works and, for older users, how this is a departure from the way user profiles had been approached for decades. If the account can follow the user, then the settings and data can follow as well, because they are all stored in the cloud. But a user can go further by synchronizing across different systems and programs: for example, Microsoft Outlook with Gmail, Thunderbird with Gmail, or going from Apple to Google. This is where it can get a bit more difficult for the user and the tech, because tech organizations like to keep everything in house. Just remember, there’s always a way to connect systems, synchronize across platforms, and use one provider’s account with another provider’s OS and apps.
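If the PSK from step 2 is delivered through some internal system, the 24-hour rule amounts to a timestamp comparison. This is a hypothetical helper, not part of any product; it assumes timezone-aware timestamps recorded when the key was sent.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Availability window from step 2: a delivered password should be gone after 24 hours.
DELIVERY_WINDOW = timedelta(hours=24)


def psk_still_retrievable(delivered_at: datetime, now: Optional[datetime] = None) -> bool:
    """True while a delivered PSK is inside its 24-hour availability window.

    Both datetimes must be timezone-aware so the subtraction is valid.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - delivered_at < DELIVERY_WINDOW


sent = datetime(2019, 6, 1, 9, 0, tzinfo=timezone.utc)
print(psk_still_retrievable(sent, sent + timedelta(hours=23)))  # True
print(psk_still_retrievable(sent, sent + timedelta(hours=25)))  # False
```

Whatever system delivers the key would call a check like this before showing it, and purge the stored copy once it returns False.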

Cram Quiz

Answer these questions. The answers follow the last question. If you cannot answer these questions correctly, consider reading this section again until you can.

1. You have set up a user to work at a thin client. They will be accessing the OS image and data from a Windows Server 2016 as well as data from the Google Cloud. Which of the following does this configuration not require? (Select the two best answers.)

A. Network connection

B. CPU

C. M.2

D. Virtualization

E. SSD

2. A customer has a brand new Chromebook and needs help configuring it. Which of the following should you help the user with? (Select the three best answers.)

A. Touchpad

B. Initial account setup

C. Registry

D. Touchscreen

E. App Store applications

F. Task Manager

Cram Quiz Answers

1. C and E. This configuration will not need a storage drive, be it M.2 or another type of SSD. Thin clients are meant to use an OS that is embedded in flash memory (or other similar memory) or, more often, grab an image from a server, often as a virtual machine. To do so, the thin client will need a network connection (wired or wireless), and every computer needs a CPU.

2. A, B, and D. You should show the user how to configure the touchpad and touchscreen, and guide the user through the initial account setup. Chrome OS is a fairly simple system compared to Windows and other operating systems. To configure devices, simply go to the “Home” or app launcher button, then Settings, then Devices. The registry is a Windows configuration database; even if this were a Windows computer, the typical user has no place in the registry. The App Store is Apple’s application download site; Google uses the Play Store. The Task Manager is another Windows utility. Consider writing a short user guide in Word document format if you have multiple users accessing the same type of system for the first time. Write it once, and train many!