Chapter 19

Cisco DNA Software-Defined Access

This chapter examines one of Cisco’s newest and most exciting innovations in the area of enterprise networking—Software-Defined Access. SD-Access brings an entirely new way of building—even of thinking about and designing—the enterprise network. SD-Access embodies many of the key aspects of Cisco Digital Network Architecture, including such key attributes as automation, assurance, and integrated security capabilities.

However, to really appreciate what the SD-Access solution provides, we’ll first examine some of the key issues that confront enterprise network deployments today. The rest of the chapter presents the following:

Software-Defined Access as a fabric for the enterprise

Capabilities and benefits of Software-Defined Access

An SD-Access case study

The Challenges of Enterprise Networks Today

As capable as the enterprise network has become over the last 20-plus years, it faces some significant challenges and headwinds in terms of daily operation as well as present and future growth.

The enterprise network is the backbone of many organizations worldwide. Take away the network, and many companies, schools, hospitals, and other types of businesses would be unable to function. At the same time, the network is called upon to accommodate a vast and growing array of diverse user communities, device types, and demanding business applications.

The enterprise network continues to evolve to address these needs. However, at the same time, it also needs to meet an ever-growing set of additional—and critical—requirements. These include (but are not limited to) the need for:

Greater simplicity: Networks have grown to be very complicated to design, implement, and operate. As more functions are mapped onto the network, it must accommodate an ever-growing set of needs, and has become more and more complex as a result. Each function provided by the network makes sense in and of itself, but the aggregate of all the various functions, and their interaction, has become, for many organizations, cumbersome to implement and unwieldy to manage. Combined with the manually intensive methods typically used for network deployments, this complexity makes it difficult for the enterprise network to keep pace with the rate of change the organization desires, or demands.

Increased flexibility: Many enterprise network designs are relatively rigid and inflexible. The placement of Layer 2 and Layer 3 boundaries can, in some cases, make it difficult or impossible to accommodate necessary application requirements. For example, an application may need, or desire, a common IP subnet to be “stretched” across multiple wiring closets in a site. In a traditional routed access design model (in which IP routing is provisioned down to the access layer), this requirement is not possible to accommodate. In a multilayer design model, this need could be accommodated, but the use of a wide-spanning Layer 2 domain in this model (depending on how it is implemented) may expose the enterprise to the possibility of broadcast storms or other similar anomalies that could cripple the network. Organizations need their networks to be flexible to meet application demands such as these, when they arise. Today’s network designs often fall short. Although such protocols and applications can be altered over time to reduce or eliminate such requirements, many organizations face the challenge of accommodating such legacy applications and capabilities within their networks. Where such applications can be altered or updated, they should be—where they cannot be, a network-based solution may be advantageous.

Integrated security: Organizations depend on their networks. The simple fact is that today, the proper and ongoing operation of the network is critical to the functioning of any organization, and is foundational for the business applications used by that organization. Yet, many networks lack the inherent security and segmentation systems that may be desired. Being able to separate corporate users from guests, Internet of Things (IoT) devices from internal systems, or different user communities from each other over a common network has proved to be a daunting task for many enterprise networks in the past. Capabilities such as Multiprotocol Label Switching Virtual Private Networks (MPLS VPNs) exist, but are complex to understand, implement, and operationalize for many enterprise deployments.

Integrated mobility: The deployment and use of mobile, wireless devices is ubiquitous in today’s world—and the enterprise network is no exception. However, many organizations implement their wireless network separate from and “over the top” of their existing wired network, leading to a situation where the sets of available network services (such as security capabilities, segmentation, quality of service [QoS], etc.) are different for wired and wireless users. As a consequence, wired and wireless network deployments typically do not offer a simple, ubiquitous experience for both wired and wireless users, and typically cannot offer a common set of services with common deployment models and scale. As organizations move forward and embrace wireless mobile devices even further in their networks, there is a strong desire to provide a shared set of common services, deployed the same way, holistically for both wired and wireless users within the enterprise network.

Greater visibility: Enterprise networks are carrying more traffic, and more various types of traffic, across their network backbones than ever before. Being able to properly understand these traffic flows, optimize them, and plan for future network and application growth and change are key attributes for any organization. The foundation for such an understanding is greater visibility into what types of traffic and applications are flowing over the network, and how these applications are performing versus the key goals of the organization. Today, many enterprises lack the visibility they want into the flow and operation of various traffic types within their networks.

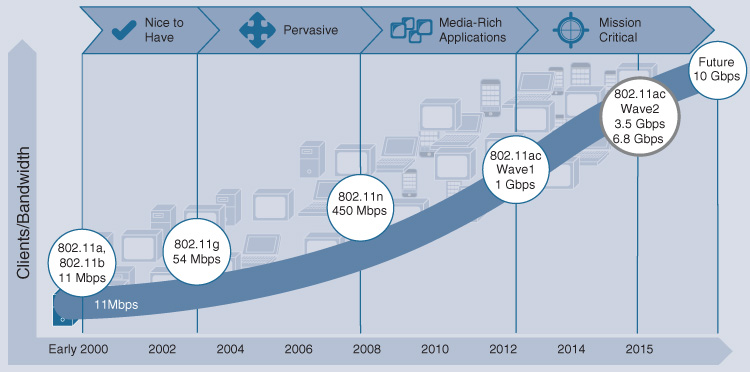

Increased performance: Finally, the ever-greater “need for speed” is a common fact of life in networking—and for the devices and applications they support. In the wired network, this includes the movement beyond 10-Gigabit Ethernet to embrace the new 25-Gbps and 100-Gbps Ethernet standards for backbone links, as well as multi-Gigabit Ethernet links to the desktop. In the wireless network, this includes the movement toward 802.11ac Wave 2 deployments, and in future the migration to the new 802.11ax standard as this emerges—all of which require more performance from the wired network supporting the wireless devices. By driving the performance per access point (AP) toward 10 Gbps, these developments necessitate a movement toward a distributed traffic-forwarding model, as traditional centralized forwarding wireless designs struggle to keep pace.

Cisco saw these trends, and others, beginning to emerge in the enterprise network space several years ago. In response, Cisco created Software-Defined Access (SD-Access). The following sections explore

A high-level review of SD-Access capabilities

What SD-Access is—details of architecture and components

How SD-Access operates: network designs, deployments, and protocols

The benefits that SD-Access provides, and the functionality it delivers

So, let’s get started and see what SD-Access has in store!

Software-Defined Access: A High-Level Overview

In 2017, Cisco introduced Software-Defined Access as a new solution that provides an automated, intent-driven, policy-based infrastructure with integrated network security and vastly simplified design, operation, and use.

SD-Access, at its core, is based on some of the key technologies outlined previously in this book:

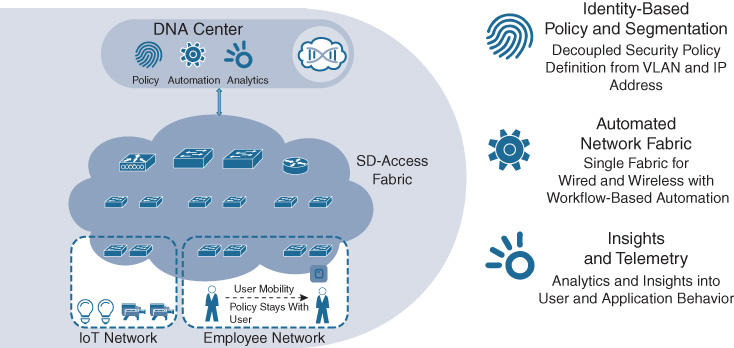

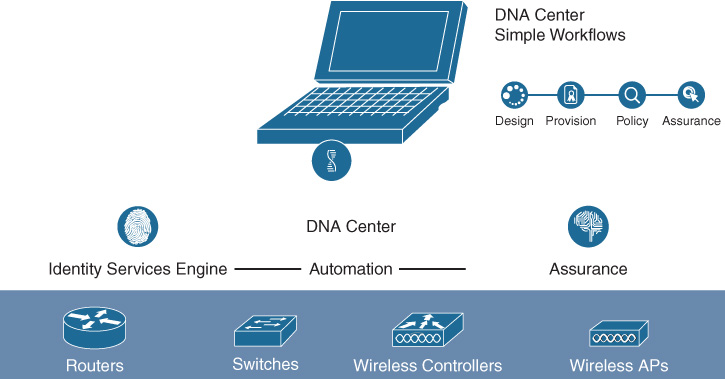

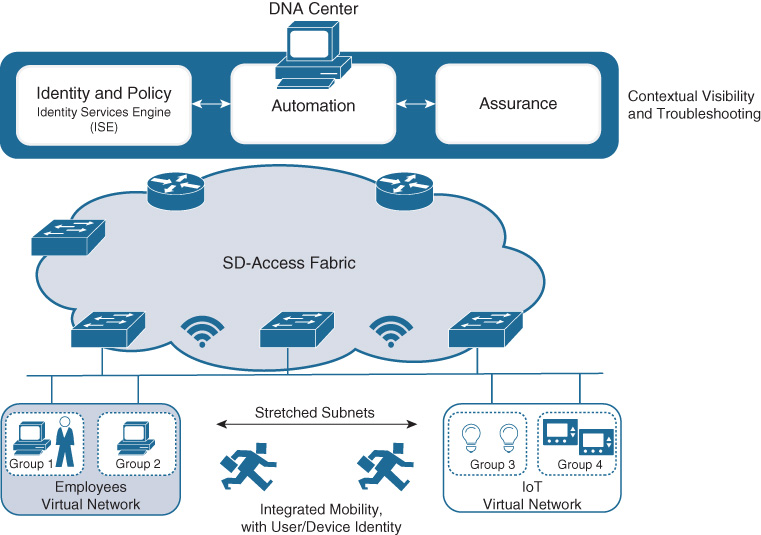

Cisco DNA Center: Cisco DNA Center is the key network automation and assurance engine for SD-Access deployments. When deployed in conjunction with Cisco Identity Services Engine (ISE) as the nexus for policy and security, Cisco DNA Center provides a single, central point for the design, implementation, and ongoing maintenance and operation of an SD-Access network.

Cisco DNA flexible infrastructure: The flexible infrastructure of Cisco DNA was covered in depth in Chapter 7, “Hardware Innovations” and Chapter 8, “Software Innovations.” By levering these flexible, foundational hardware and software elements within a Cisco network, SD-Access is able to offer a set of solutions that allows an evolutionary approach to network design and implementation—easing the transition to the software-defined, intent-driven networking world, and providing outstanding investment protection.

Next-generation network control-plane, data-plane, and policy-plane capabilities: SD-Access builds on top of existing capabilities and standards, such as those outlined in Chapter 9, “Protocol Innovations,” including Location/ID Separation Protocol (LISP), Virtual Extensible Local Area Network (VXLAN), and Scalable Group Tags (SGT). By leveraging these capabilities, expanding upon them, and linking them with Cisco DNA Center to provide a powerful automation and assurance solution, SD-Access enables the transition to a simpler, more powerful, and more flexible network infrastructure for organizations of all sizes.

SD-Access: A Fabric for the Enterprise

The SD-Access solution allows for the creation of a fabric network deployment for enterprise networks. So, let’s begin with examining two important areas:

What is a fabric?

What benefits can a fabric network deployment help to deliver?

What Is a Fabric?

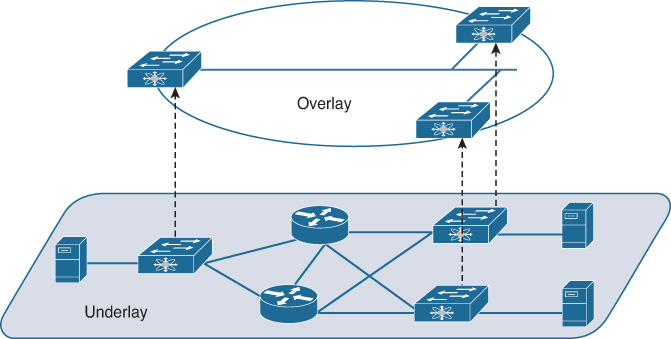

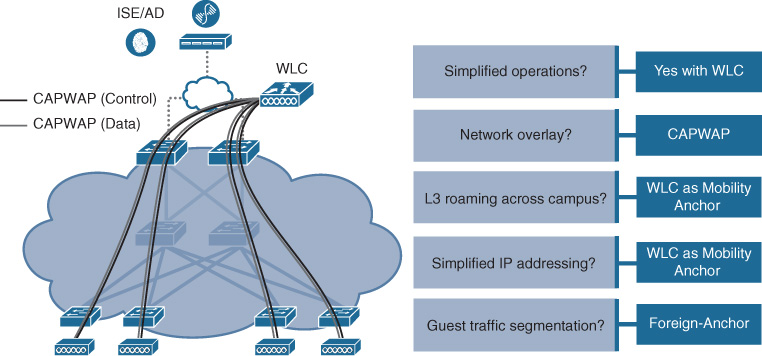

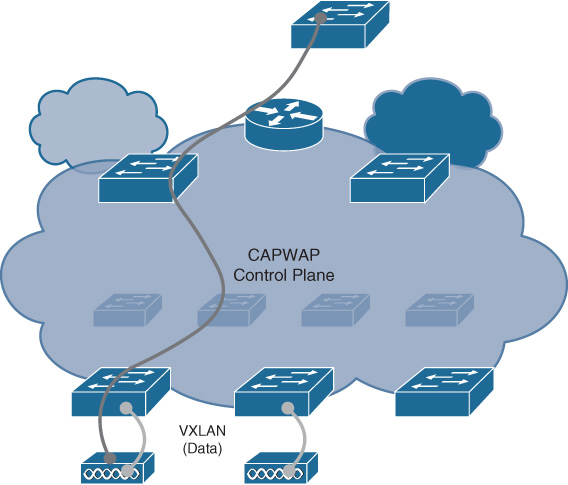

Effectively, a fabric network deployment implements an overlay network. Overlays by themselves are not new—overlay technologies of various flavors (such as CAPWAP, GRE, MPLS VPNs, and others) have been used for many years. Fundamentally, overlays leverage some type of packet encapsulation at the data plane level, along with a control plane to provision and manage the overlay. Overlays are so named because they “overlay,” or ride on top of, the underlying network (the underlay), leveraging their chosen data plane encapsulation for this purpose. Chapter 9 introduced overlays and fabrics in more detail, so this will serve as a refresher.

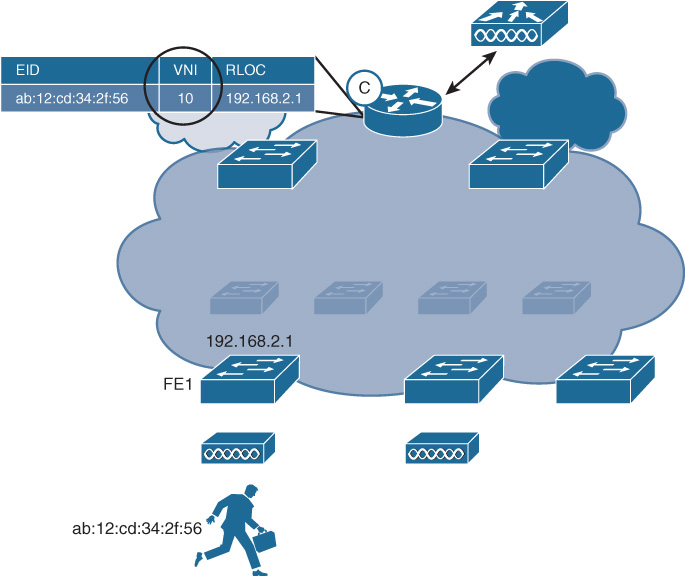

Figure 19-1 outlines the operation of an overlay network.

Overlay networks provide a logical, virtual topology for the deployment, operating on top of the physical underlay network. The two distinct “layers” of the network—logical and physical—can and often do implement different methods for reachability, traffic forwarding, segmentation, and other services within their respective domains.

A network fabric is the combination of the overlay network type chosen and the underlay network that supports it. But before we examine the type of network fabric created and used by SD-Access, we need to answer an obvious question: why use a fabric network at all for enterprise deployments?

Why Use a Fabric?

Perhaps the clearest way to outline why a fabric network is important within the enterprise is to quickly recap the main issues facing enterprise networks, namely:

The need for greater simplicity

The need for increased flexibility

The need for enhanced security

The requirement for ubiquitous mobility

The need for greater visibility

The need for simplified and more rapid deployment

And the ever-growing need for more performance

These various demands place network managers into an awkward position. As stewards of one of the most critical elements of an organization—the enterprise network—one of their primary tasks is to keep the network operational at all times: 24/7/365. How best to accomplish this is broken down into a few simple, high-level steps:

Step 1. Design the network using a solid approach.

Step 2. Implement that design using reliable, proven hardware and software, leveraging a best-practices deployment methodology.

Step 3. Implement a robust set of change-management controls, and make changes only when you must.

Essentially, this means “build the network right in the first place—then stand back and don’t touch it unless you need to.”

Although this approach is functional, it ignores the additional realities imposed upon network managers by other pressures in their organizations. The constant demand to integrate more functions into the enterprise network—voice, video, IoT, mission-critical applications, mergers and acquisitions, and many more—drives the need for constant churn in the network design and deployment. More virtual LANs (VLANs). More subnets. More access control lists (ACLs) and traffic filtering rules. And the list goes on.

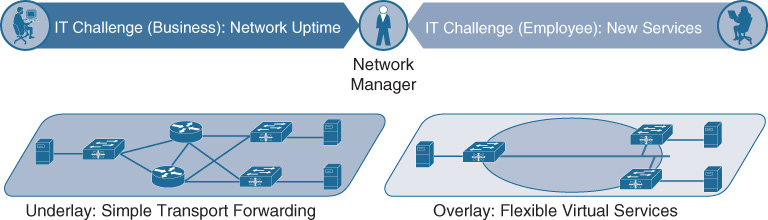

In effect, many network managers end up getting pulled in two contradictory directions, as illustrated in Figure 19-2.

Essentially, many networks undergo this tension between these two goals: keep the network stable, predictable, and always on, and yet at the same time drive constant churn in available network services, functions, and deployments. In a network that consists of only one layer—essentially, that just consists of an underlay, as most networks do today—all of these changes have to be accommodated in the same physical network design and topology.

This places the network at potential risk with every design change or new service implementation, and explains why many organizations find it so slow to roll out new network services, such as (but not limited to) critical capabilities such as network-integrated security and segmentation. It’s all a balance of risk versus reward.

Networks have been built like this for many years. And yet, the ever-increasing pace of change and the need for new enhanced network services are making this approach increasingly untenable. Many network managers have heard these complaints from their organizations: “The network is too slow to change.” “The network is too inflexible.” “We need to be able to move faster and remove bottlenecks.”

So, how can a fabric deployment, using Software-Defined Access, help enterprises to address these concerns?

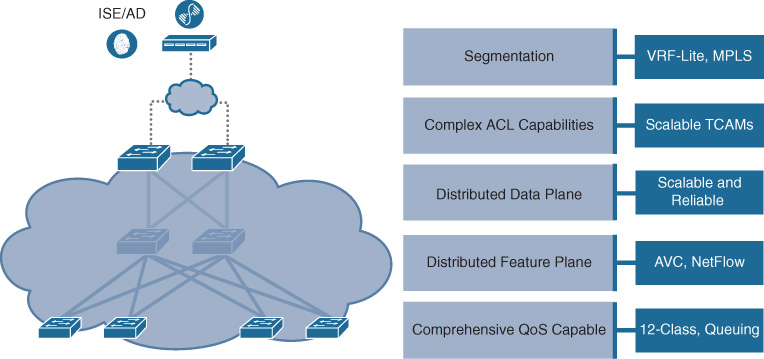

One of the primary benefits of a fabric-based approach is that it separates the “forwarding plane” of the network from the “services plane.” This is illustrated in Figure 19-3.

In this approach, the underlay provides the basic transport for the network. Into the underlay are mapped all of the network devices—switches and routers. The connectivity between these devices is provided using a fully routed network design, which provides the maximum stability and performance (using Equal Cost Multipath [ECMP] routing) and minimizes reconvergence times (via appropriate tuning of routing protocols). The underlay network is configured once and rarely if ever altered unless physical devices or links are added or removed, or unless software updates need to be applied. It is not necessary to make any changes to the underlay to add virtualized services for users. In a fabric-based design, the underlay provides the simple, stable, solid foundation that assists in providing the maximum uptime that organizations need from their network implementations.

The overlay, on the other hand, is where all of the users, devices, and things (collectively known as endpoints) within a fabric-based network are mapped into. The overlay supports virtualized services (such as segmentation) for endpoints, and supports constant change as new services and user communities are added and deleted. Changes to one area of the overlay (for example, adding a new virtual network, or a new group of users or devices) do not affect other portions of the overlay—thus helping to provide the flexibility that organizations require, without placing the network overall at risk.

In addition, because the overlay provides services for both wired and wireless users, and does so in a common way, it assists in creating a fully mobile workplace. With a fabric implementation, the same network services are provided for both wired and wireless endpoints, with the same capabilities and performance. Wired and wireless users alike can enjoy all of the virtualization, security, and segmentation services that a fabric deployment offers.

And speaking of security, an inherent property of a fabric deployment is network segmentation. As outlined in Chapter 9, next-generation encapsulation technologies such as VXLAN provide support for both virtual networks (VNs) and SGTs, allowing for both macro- and micro-segmentation. As you will see, these two levels of segmentation can be combined within SD-Access to provide an unprecedented level of network-integrated segmentation capabilities, which is very useful to augment network security.

Finally, a fabric deployment allows for simplification—one of the most important areas of focus for any organization. To find out why, let’s explore this area a bit further.

Networks tend to be very complex, in many cases due to the difficulty of rolling out network-wide policies such as security (ACLs), QoS, and the like. Many organizations use a combination of security ACLs and VLANs to implement network security policies—mapping users, devices, and things into VLANs (statically or dynamically), and then using ACLs on network devices or firewalls to implement the desired security policies. However, in the process of doing so, many such organizations find that their ACLs end up reflecting their entire IP subnetting structure—i.e., which users in which subnets are allowed to communicate to which devices or services in other subnets ends up being directly “written into” the ACLs the organization deploys.

If you recall, this was examined in some detail back in Chapter 9. The basic fact is that the IP header contains no explicit user/device identity information, so IP addresses and subnets are used as a proxy for this. However, this is how many organizations end up with hundreds, or even thousands, of VLANs. This is how those same organizations end up with ACLs that are hundreds or thousands of lines long—so long and complex, in fact, that they become very cumbersome to deploy, as well as difficult or impossible to maintain. This slows down network implementations, and possibly ends up compromising security due to unforeseen or undetected security holes—all driven by the complexity of this method of security operation.

A fabric solution offers a different, and better, approach. All endpoints connecting to a fabric-based network such as SD-Access are authenticated (either statically or dynamically). Following this authentication, they are assigned to a Scalable Group linked with their role, as well as being mapped into an associated virtual network. These two markings—SGT and VN—are then carried end to end in the fabric overlay packet header (using VXLAN), and all policies created within the fabric (i.e., which users have access to which resources) are based on this encoded group information—not on their IP address or subnet. The abstraction offered by the use of VNs and SGTs for grouping users and devices within an SD-Access deployment is key to the increased simplicity that SD-Access offers versus traditional approaches.

All packets carried within an SD-Access fabric contain user/device IP address information, but this is not used for applying policy in an SD-Access fabric. Polices within SD-Access are group-based in nature (and thus known as group-based policies, or GBPs). IP addresses in SD-Access are used for determining reachability (where has a host connected from or roamed to) as tracked by the fabric’s LISP control plane. Endpoints are subject to security and other policies based on their flexible groupings, using SGTs and VNs as their policy tags—tags that are carried end to end within the SD-Access fabric using the VXLAN-based overlay.

Now you are beginning to see the importance of the protocols—LISP, VXLAN, and SGTs—that were covered in Chapter 9. If you skipped over that section, it is highly recommended to go back and review it now. These protocols are the key to creating network fabrics, and to enabling the next generation of networking that SD-Access represents. They serve as the strong foundation for a fabric-enabled network—one that enables the simplicity and flexibility that today’s organizations require—while also providing integrated support for security and mobility, two key aspects of any modern network deployment.

Capabilities Offered by SD-Access

Now that you understand the power that the separation of IP addressing and policy offered by Software-Defined Access provides, let’s delve a bit deeper and see what advanced, next-generation, network-level capabilities SD-Access offers for organizations.

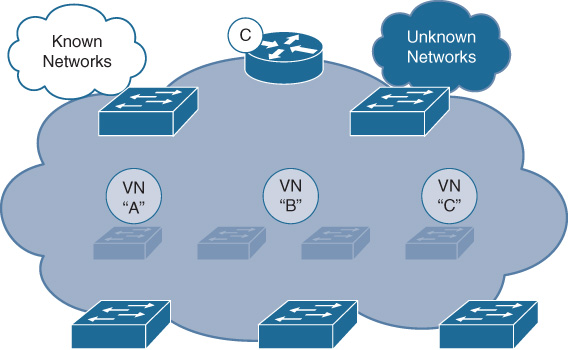

Virtual Networks

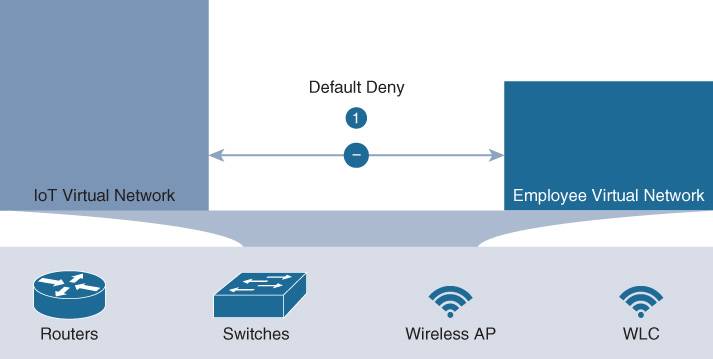

First and foremost, SD-Access offers macro-segmentation using VNs. Equivalent to virtual routing and forwarding instances (VRF) in a traditional segmentation environment, VNs provide separate routed “compartments” within the fabric network infrastructure. Users, devices, and things are mapped into the same, or different VNs, based on their identity. Between VNs, a default-deny policy is implemented (i.e., endpoints in one VN have no access to endpoints in another VN by default). In effect, VNs provide a first level of segmentation that, by default, ensures no communications between users and devices located in different VNs. This is illustrated in Figure 19-4.

In this example, IoT devices are placed into one VN, and employees into another. Because the two VNs are separate and distinct routing spaces, no communication from one to the other is possible, unless explicitly permitted by the network administrator (typically via routing any such inter-VN traffic via a firewall, for example).

VNs provide a “macro” level of segmentation because they separate whole blocks of users and devices. There are many situations where this is desirable—examples include hospitals, airports, stadiums, banks…in fact, any organization that hosts multiple different types of users and things, and which needs these various communities sharing the common network infrastructure to be securely (and simply) separated from each other, while still having access to a common set of network services.

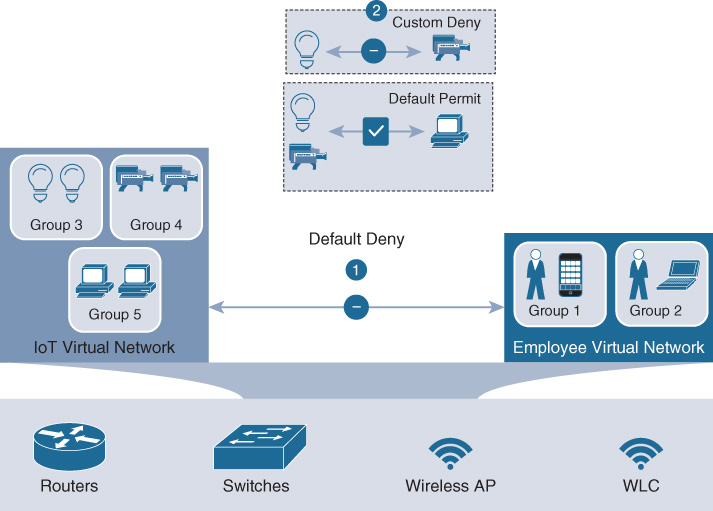

However, as useful as VNs are, they become even more powerful when augmented with micro-segmentation, which allows for group-based access controls even within a VN. Let’s explore this next.

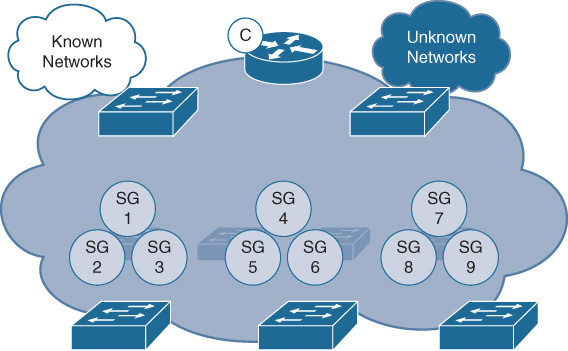

Scalable Groups

The use of Scalable Groups within SD-Access provides the capability for intra-VN traffic filtering and control. Scalable Groups have two aspects: a Scalable Group Tag (SGT), which serves as the group identity for an endpoint, and which is carried end to end in the SD-Access fabric within the VXLAN fabric data plane encapsulation, and a Scalable Group ACL (SGACL, sometimes also previously known as Security Group ACL), which controls what other hosts and resources are accessible to that endpoint, based on the endpoint’s identity and role.

Group-based polices are far easier to define and understand than traditional IP-based ACLs because they are decoupled from the actual IP address structure in use. In this way, group-based ACLs are much more tightly aligned with the way that humans think about network security policies—“I want to give this group of users access to this, this, and this, and deny them from access to that and that”—and, as such, group-based policies are far easier to define, use, and understand than traditional access controls based on IP addresses and subnets.

Group-based policies are defined using either a whitelist model or a blacklist model. In a whitelist model, all traffic between groups is denied unless it is explicitly permitted (i.e., whitelisted) by a network administrator. In a blacklist model, the opposite is true: all traffic between groups is permitted unless such communication is explicitly blocked (i.e., blacklisted) by a network administrator.

In Software-Defined Access, a whitelist model is used by default for the macro-segmentation of traffic between VNs, and a blacklist model is used by default for the micro-segmentation of traffic between groups in the same VN. In effect, the use of groups within SD-Access provides a second level of segmentation, allowing the flexibility to control traffic flows at a group level even within a given VN.

The use of these two levels of segmentation provided by SD-Access—VNs and SGTs—is illustrated in Figure 19-5.

By providing two levels of segmentation, macro and micro, SD-Access provides the most secure network deployment possible for enterprises, and yet at the same time the simplest such approach for such organizations to understand, design, implement, and support. By including segmentation as an integral part of an SD-Access fabric deployment, SD-Access makes segmentation consumable—and opens up the power of integrated network security to all organizations, large and small.

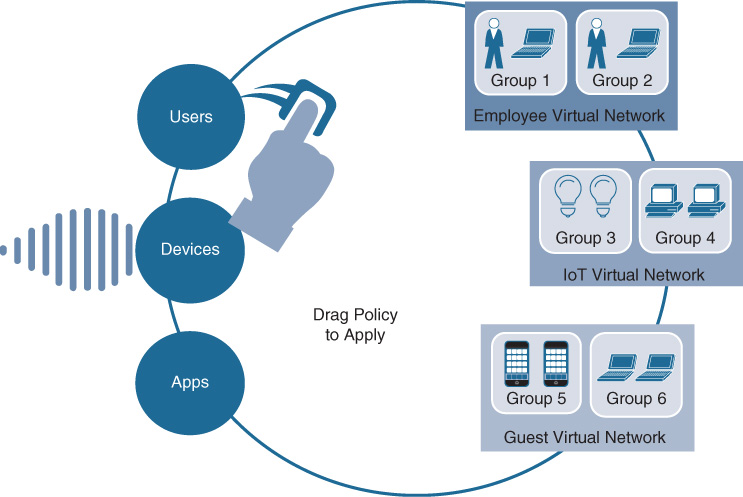

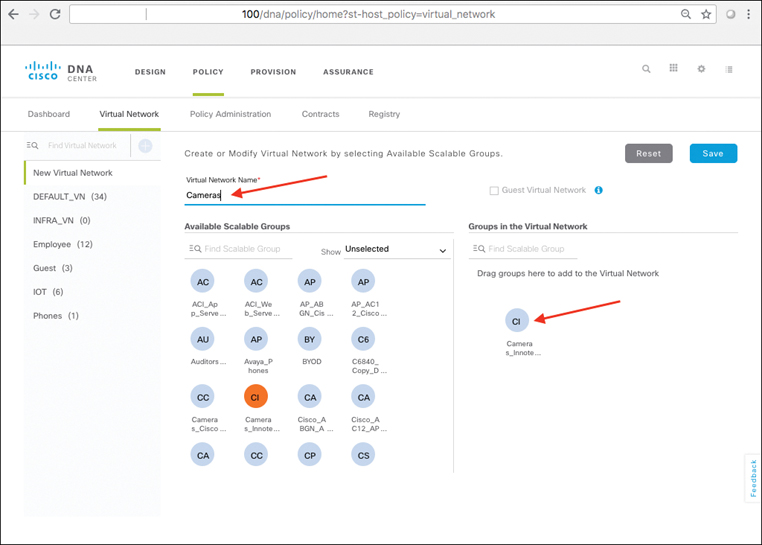

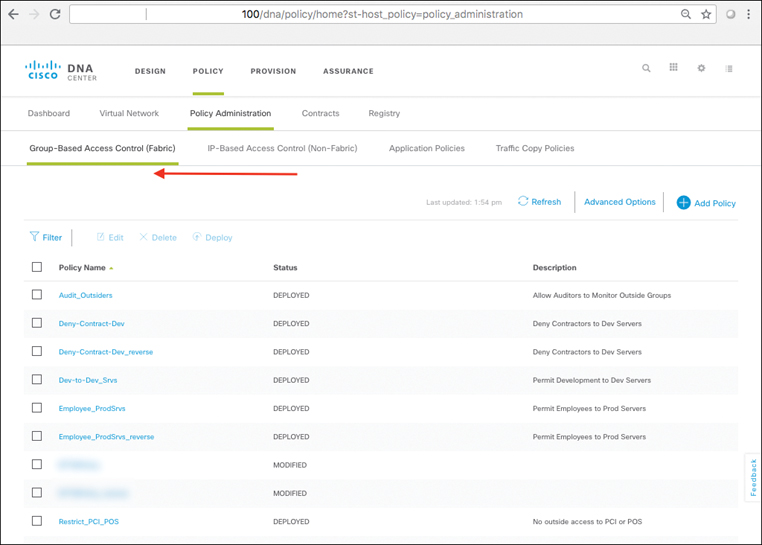

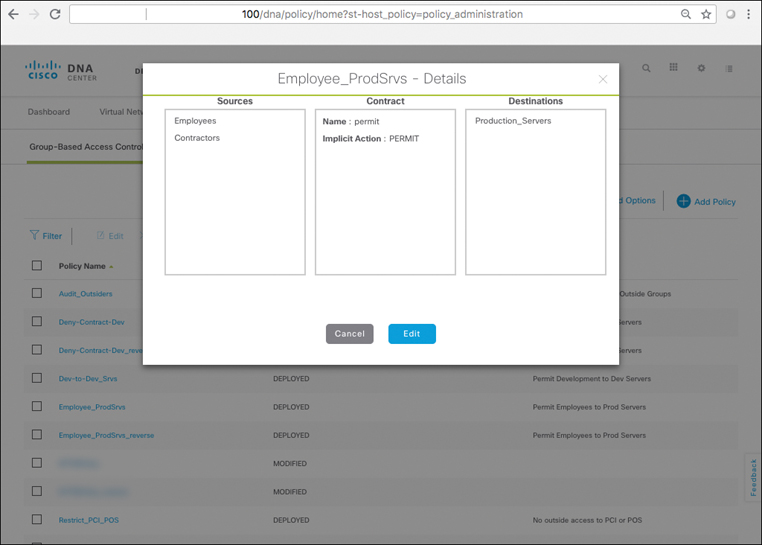

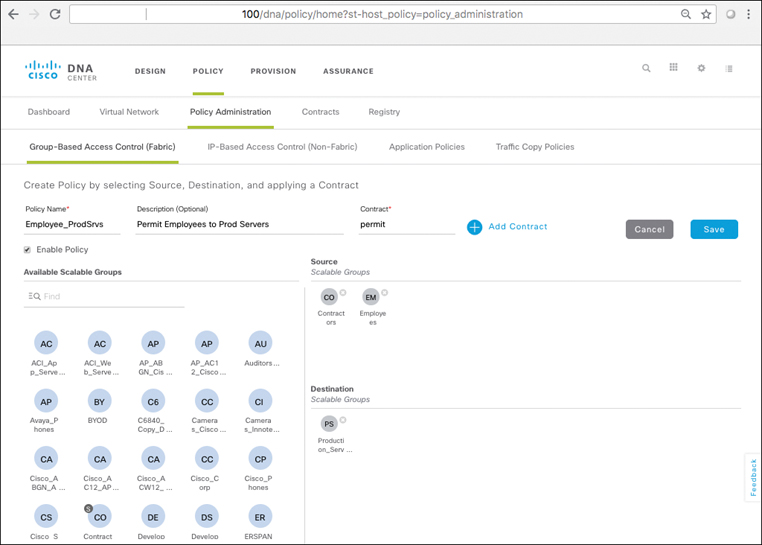

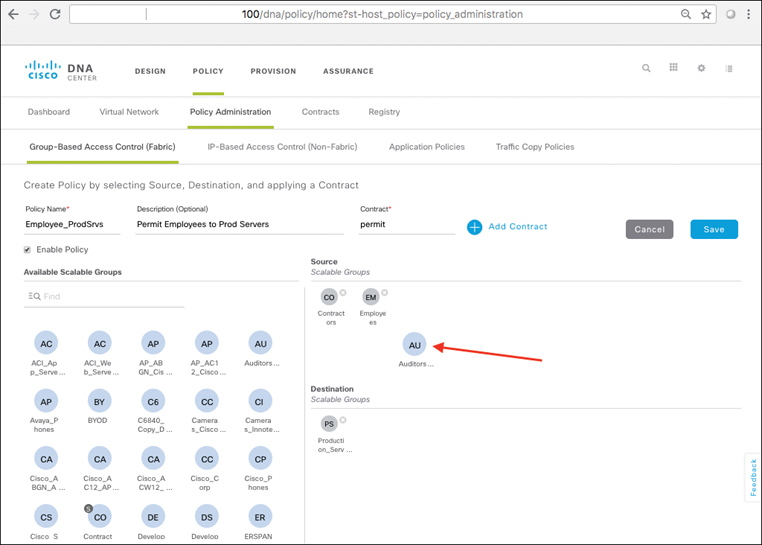

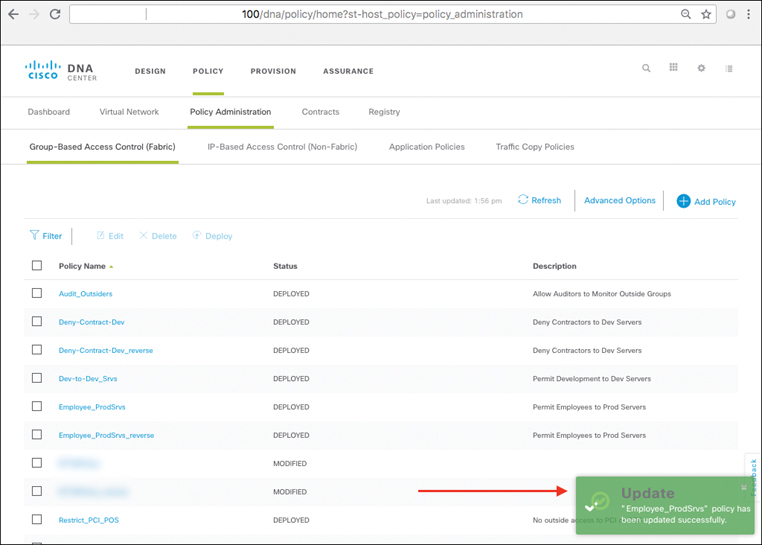

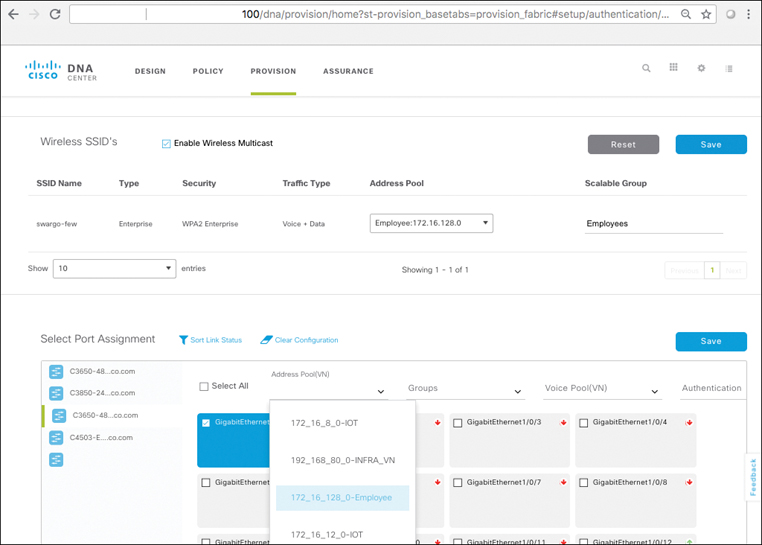

Using Cisco DNA Center, SD-Access also makes the creation and deployment of VNs and group-based policies extremely simple. Policies in SD-Access are tied to user identities, not to subnets and IP addresses. After policies are defined, they seamlessly follow a user or device as it roams around the integrated wired/wireless SD-Access network. Policies are assigned simply by dragging and dropping groups in the Cisco DNA Center user interface, and are completely automated for deployment and use, as illustrated in Figure 19-6.

Before SD-Access, policies were VLAN and IP address based, requiring the network manager to create and maintain complex IP-based ACLs to define and enforce access policy, and then deal with any policy errors or violations manually. With SD-Access, there is no VLAN or IP address/subnet dependency for segmentation and access control (as these are defined at the VN/SGT grouping levels). The network manager in SD-Access is instead able to define one consistent policy, associated with the identity of the user or device, and have that identity (and the associated policy) follow the user or device as it roams within the fabric-based network infrastructure.

As you will see, policies within SD-Access are based on contracts that define who, or what, the user or device has access to in the network. Such contracts are easily updated via Cisco DNA Center to reflect new policy rules and updates as these change over time—with the resulting network-level policies then pushed out to the network elements involved in an automated fashion. This massively simplifies not only the initial definition of network policies, but also their ongoing maintenance over time.

In summary, simplified, multilevel network segmentation based on virtual networks and group-based policy is an inherent property of what SD-Access offers, and is an extremely important and powerful part of the solution.

Let’s continue on and explore what else SD-Access has in store.

Stretched Subnets

Most organizations at one time or another have faced the need to provide a single IP subnet that spans across—i.e., is “stretched between”—multiple wiring closets within a campus deployment. The reasons for such a need vary. They might involve applications that need to reside within a single common subnet, or older devices that cannot easily employ subnetting (due to the higher-level protocols they employ). Whatever the reason, such deployment requests pose a quandary for the typical network manager. Providing this capability in a traditional network deployment means extending a VLAN between multiple wiring closets—connecting them all together into a single, widely spanned Layer 2 domain. Although this is functional to meet the need, acceding to such requests places their network at overall risk.

Because all modern networks employ redundant interconnections between network devices, such wide-spanning Layer 2 domains create loops—many loops—in a typical enterprise network design. The Spanning Tree Protocol “breaks” any such loops by blocking ports and VLANs on redundant uplinks and downlinks within the network, thus avoiding the endless propagation of Layer 2 frames (which are not modified on forwarding) over such redundant paths.

However, this wastes much of the bandwidth (up to 50 percent) within the network due to all of the blocking ports in operation, and is complex to maintain because now there is a Layer 2 topology (maintained via Spanning Tree) and a Layer 3 topology (maintained via routing protocols and first-hop redundancy protocols) to manage, and these must be kept congruent to avoid various challenges to traffic forwarding and network operation. And even when all of this is done, and done properly, the network is still at risk because any misbehaving network device or flapping link could potentially destabilize the entire network—leading in the worst case to a broadcast storm should Spanning Tree fail to contain the issue.

Essentially, the use of wide-spanning Layer 2 VLANs places the entire network domain over which they span into a single, common failure domain—in the sense that a single failure within that domain has the potential to take all of the domain down, with potentially huge consequences for the organization involved.

Due to these severe limitations, many network managers either ban the use of wide-spanning VLANs entirely in their network topologies or, if forced to use them due to the necessity to deploy the applications involved, employ them gingerly and with a keen awareness of the ongoing operational risk they pose.

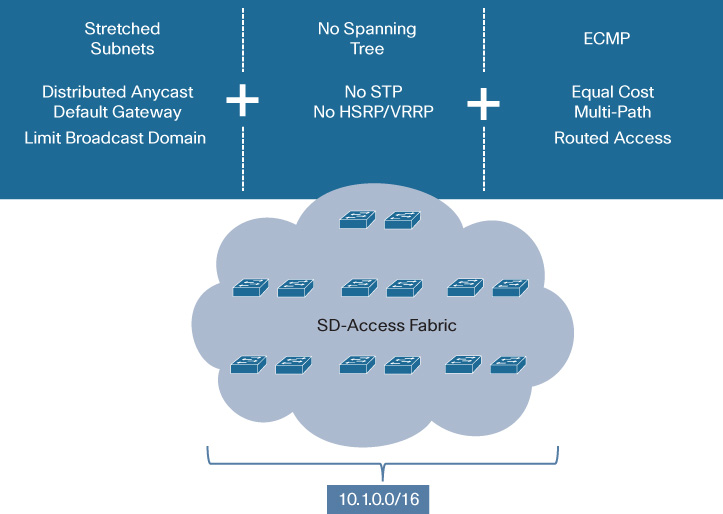

One of the major benefits provided by SD-Access, in addition to integrated identity-based policy and segmentation, is the ability to “stretch” subnets between wiring closets in a campus or branch deployment in a simple manner, and without having to pay the “Spanning Tree tax” associated with the traditional wide-spanning VLAN approach.

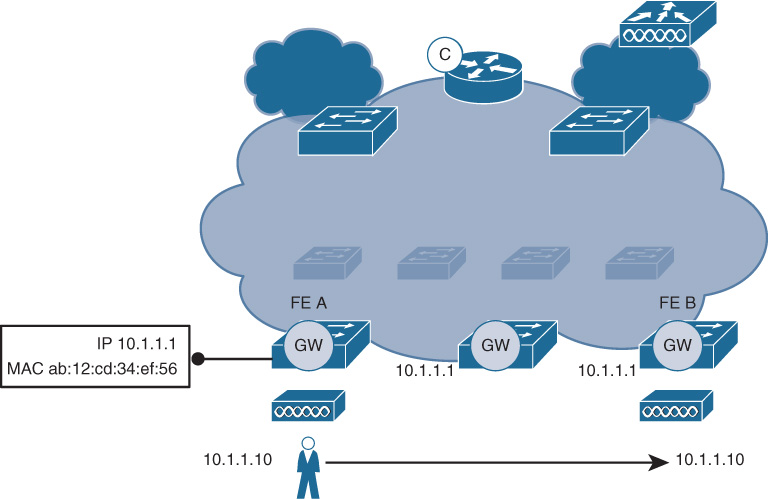

Figure 19-7 illustrates the use of stretched subnets within SD-Access.

With SD-Access, a single subnet (10.1.0.0/16, as shown in Figure 19-7) is stretched across all of the wiring closets within the fabric deployment. By doing so, any endpoint attached within any of these wiring closets can (if properly authenticated) be mapped into this single, wide-spanning IP subnet, which appears identical to the endpoint (same default gateway IP and MAC address), regardless of where the endpoint is attached—without having to span Layer 2 across all of these wiring closets to provide this capability.

This is accomplished by the use of the Distributed Anycast Default Gateway function within SD-Access. No matter where a device attaches to the subnet shown, its default gateway for that subnet is always local, being hosted on the first-hop switch (hence, distributed). Moreover, it always employs the same virtual MAC address for this default gateway (thus, anycast). The combination of these two attributes makes the SD-Access fabric “look the same” no matter where a device attaches—a critical consideration for roaming devices, and a major factor in driving simplification of the network deployment.

In addition, it is vital to note that SD-Access provides this stretched subnet capability without actually extending the Layer 2 domain between wiring closets. Spanning Tree still exists southbound (i.e., toward user ports) from the wiring closet switches in an SD-Access deployment—but critically, Spanning Tree and the Layer 2 domain are not extended “northbound” across the SD-Access fabric. SD-Access makes it possible to have the same IP subnet appear across multiple wiring closets, without having to create Layer 2 loops as a traditional wide-spanning VLAN approach does.

In this way, SD-Access provides the benefits of a stretched IP subnet for applications and devices that may need this capability, but eliminates the risk otherwise associated with doing so. Broadcast domains are limited in extent to a single wiring closet, and no cross-network Layer 2 loops are created.

SD-Access also provides several additional key attributes with the approach it employs for stretched subnets.

First, because SD-Access instantiates the default gateway for each fabric subnet always at the first-hop network switch, traditional first-hop gateway redundancy protocols such as Hot Standby Router Protocol (HSRP) or Virtual Router Redundancy Protocol (VRRP) are not required. This eliminates a major level of complexity for redundant network designs, especially because maintaining congruity between the Layer 2 and Layer 3 first-hop infrastructures—a significant task in traditional networks—is not needed. SD-Access stretched subnets are very simple to design and maintain. In fact, all subnets (known as IP host pools) that are deployed within an SD-Access fabric site are stretched to all wiring closets within that fabric site by default.

Secondly, since SD-Access is deployed on top of a fully routed underlay network, ECMP routing is provided across the fabric for the encapsulated overlay traffic. This ensures that all links between switches in the fabric network are fully utilized—no ports are ever placed into blocking mode—offering vastly improved traffic forwarding compared to traditional Layer 2/Layer 3 designs.

To ensure that traffic is load-balanced over multiple paths optimally, the inner (encapsulated) endpoint packet’s IP five-tuple information (source IP, destination IP, source port, destination port, and protocol) is hashed into the outer encapsulating (VXLAN) packets’ source port. This ensures that all links are utilized equally within the fabric backbone by providing this level of entropy for ECMP link load sharing, while also ensuring that any individual flow transits over only one set of links, thus avoiding out-of-order packet delivery.

The use of a routed underlay also ensures rapid recovery in the event of network link or node failures, because such recovery takes place at the rapid pace associated with a routing protocol, not the relatively more torpid pace associated with Layer 2 reconvergence.

The use of a routed underlay for SD-Access results in a more stable, predictable, and optimized forwarding platform for use by the overlay network, and the ability to then deploy stretched subnets in the SD-Access overlay network provides a flexible deployment model without the trade-offs (i.e., inability to deploy a stretched-subnet design) that such a fully routed network deployment incurs in a traditional (non-fabric) network system.

Finally, the use of stretched subnets with SD-Access allows organizations to massively simplify their IP address planning and provisioning. Because subnets within the SD-Access fabric site are by default stretched to all wiring closets spanned by that fabric site, a much smaller number of much larger IP address pools can be provisioned and used for the site involved. This not only greatly simplifies an organization’s IP address planning, it leads to much more efficient use of the IP address pool space than the typical larger-number-of-smaller-subnets approach used by many enterprise network deployments. This simplicity and efficient use of IP address space in turn allows organizations to be speedier to adapt to the support of new devices and services that need to be mapped into the network infrastructure.

Now that we’ve examined some of the key network-level benefits of an SD-Access deployment, let’s continue and explore the components that combine to create an SD-Access solution.

SD-Access High-Level Architecture and Attributes

This section reviews the high-level architecture of SD-Access, outlines some of the major components that make up the solution, and examines the various attributes associated with these components.

SD-Access as a solution is composed of several primary building blocks:

Cisco DNA Center: This serves as the key component for implementation of the automation and assurance capabilities of SD-Access. Consisting physically of one or more appliances, Cisco DNA Center provides the platform from which an SD-Access solution is designed, provisioned, monitored, and maintained. Cisco DNA Center provides not only the design and automation capabilities to roll out an SD-Access fabric network deployment, it also provides the analytics platform functionality necessary to absorb telemetry from the SD-Access network, and provide actionable insights based on this network data.

Identity Services Engine: ISE provides a key security platform for integration of user/device identity into the SD-Access network. ISE allows for the policy and segmentation capabilities of SD-Access to be based around endpoint and group identity, allowing the implementation of network-based policies that are decoupled from IP addressing—a key attribute of an SD-Access deployment.

Network infrastructure (wired and wireless): The SD-Access solution encompasses both wired and wireless network elements, and provides the ability to create a seamless network fabric from these components. A review of which network elements make up an SD-Access fabric is outlined later in this chapter. A unique and powerful capability of SD-Access is the capacity to reuse many existing network infrastructure elements—switches, routers, access points, and Wireless LAN Controller (WLCs)—and repurpose them for use in a powerful new fabric-based deployment model.

By delivering a solution that embodies the best aspects of software flexibility and rapid development, with hardware-based performance and scale, SD-Access provides “networking at the speed of software.”

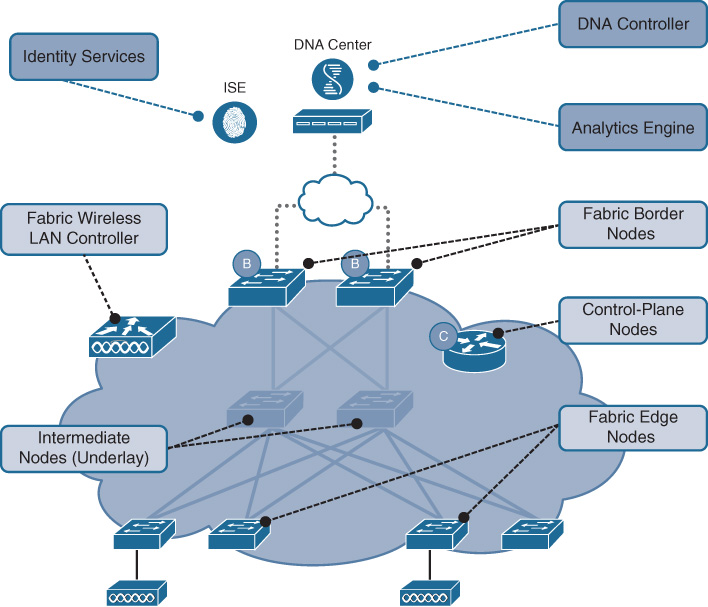

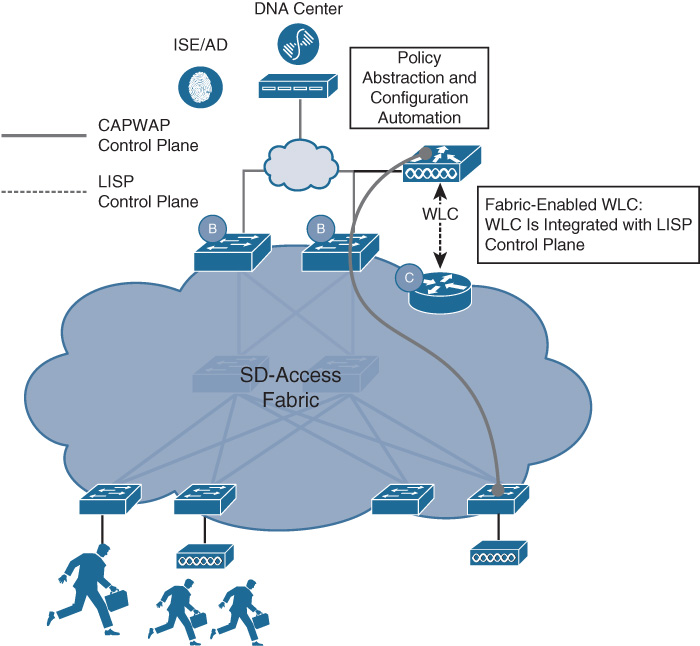

The high-level design of an SD-Access solution is shown in Figure 19-8, along with callouts concerning some of the key attributes of SD-Access.

Now, let’s dive into the details and examine how SD-Access is built, review the components that are part of an SD-Access solution, and detail the benefits SD-Access delivers.

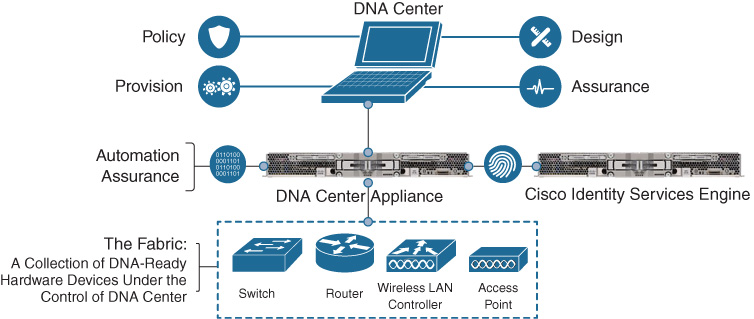

SD-Access Building Blocks

As mentioned, the SD-Access solution leverages several key building blocks: namely, Cisco DNA Center, ISE, and the network infrastructure elements which form the SD-Access fabric. Figure 19-9 illustrates these key building blocks of SD-Access:

Let’s begin by examining Cisco DNA Center, with a focus on how this supports an SD-Access deployment.

Cisco DNA Center in SD-Access

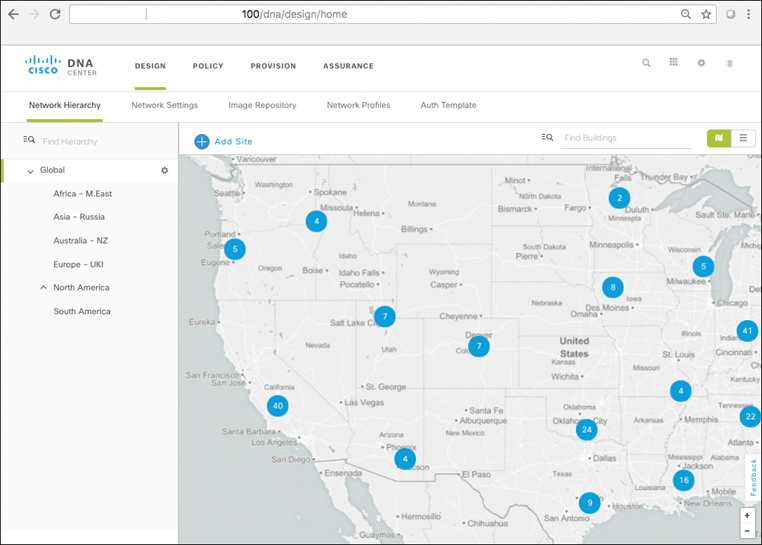

Cisco DNA Center’s support of the SD-Access solution set is focused on a four-step workflow model. These four major workflows consist of Design, Policy, Provision, and Assurance. The major focus of each of these four workflows is as follows:

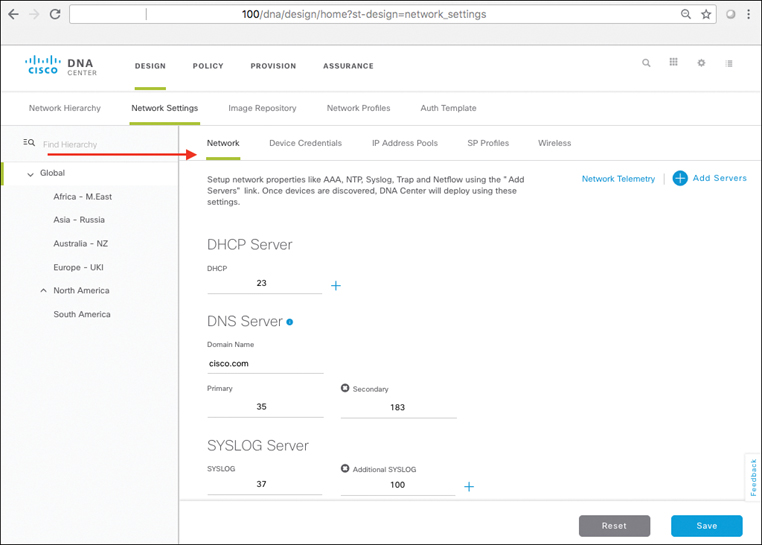

Design: This workflow allows for the design of the overall system. This is where common attributes such as major network capabilities (DHCP and DNS services, IP host pools for user/device IP addressing, etc) are created and enumerated, as well as where network locations, floors, and maps are laid out and populated.

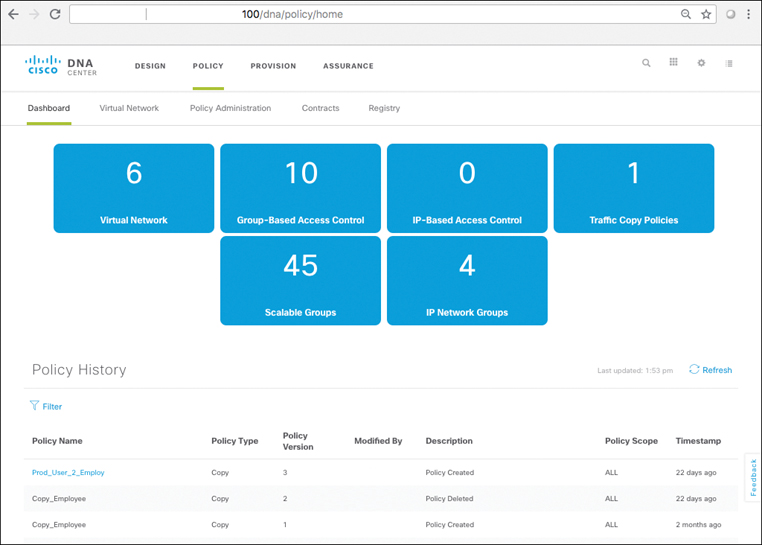

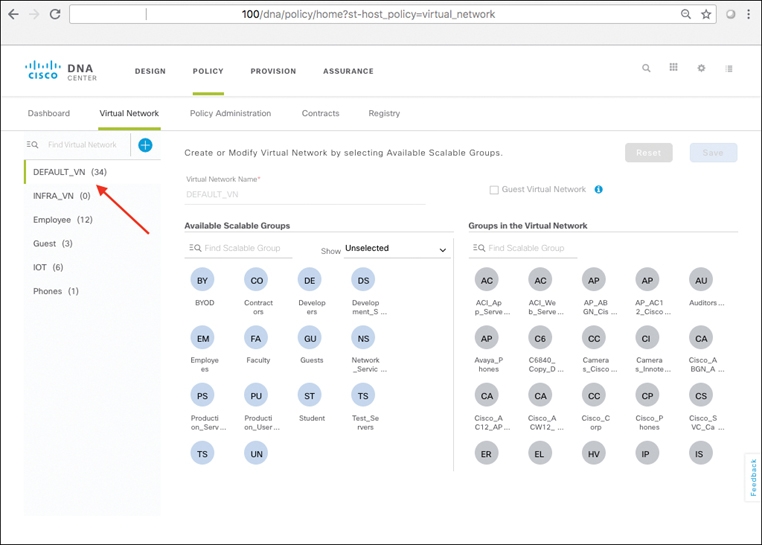

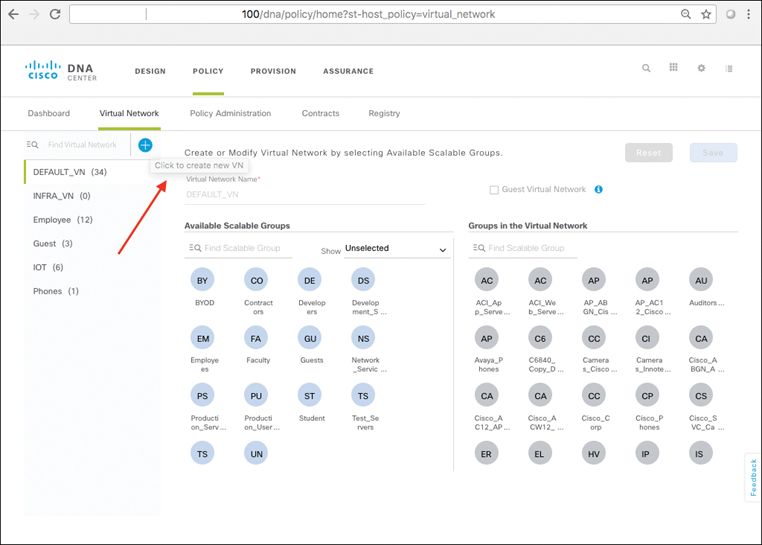

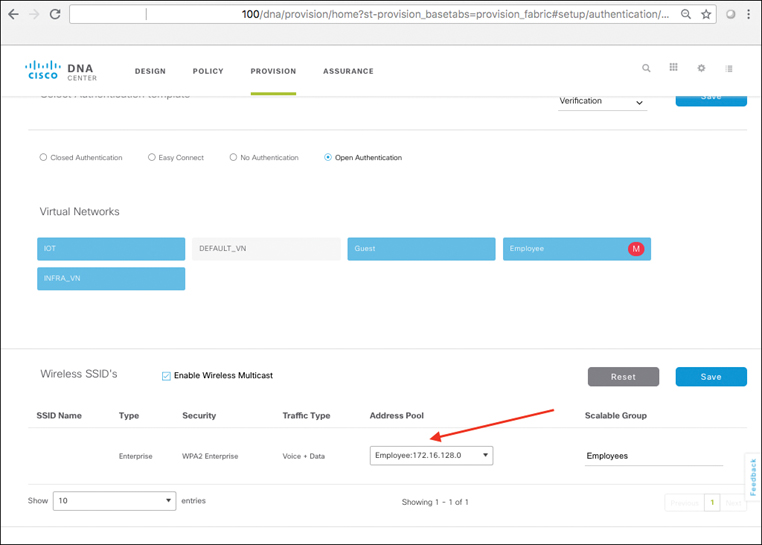

Policy: This workflow allows the creation of fabric-wide policies, such as the creation of Virtual Networks, Scalable Groups, and contracts between groups defining access rights and attributes, in line with defined organizational policies and goals.

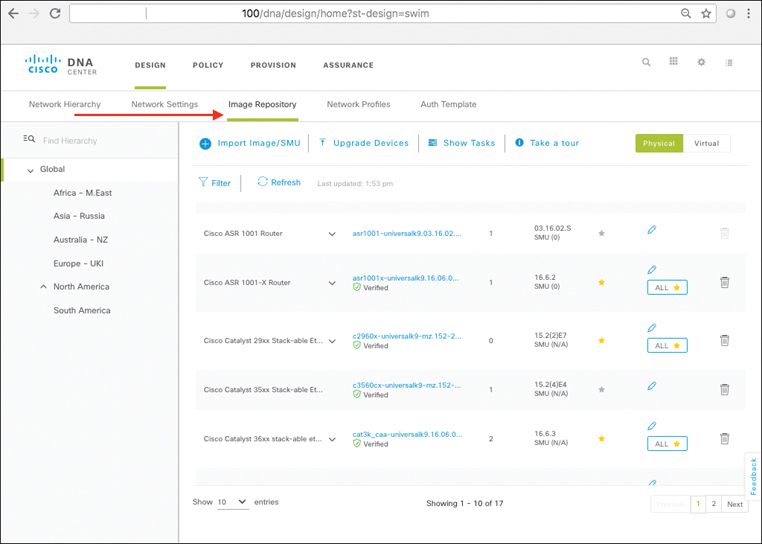

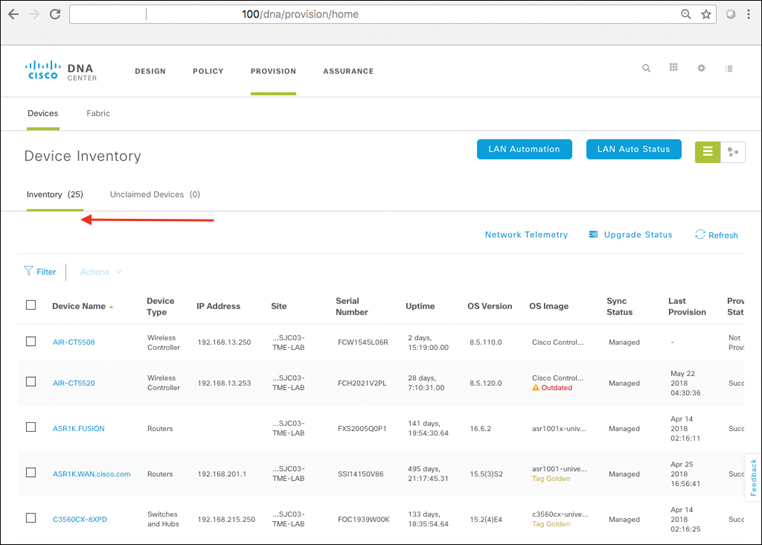

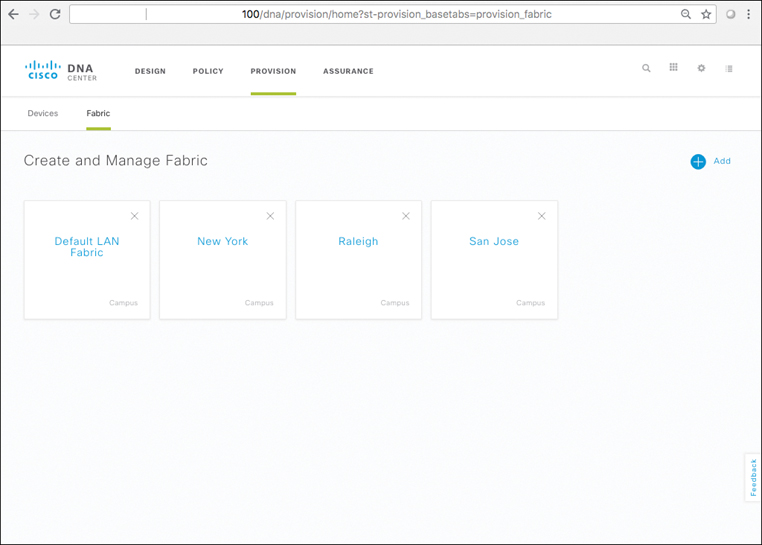

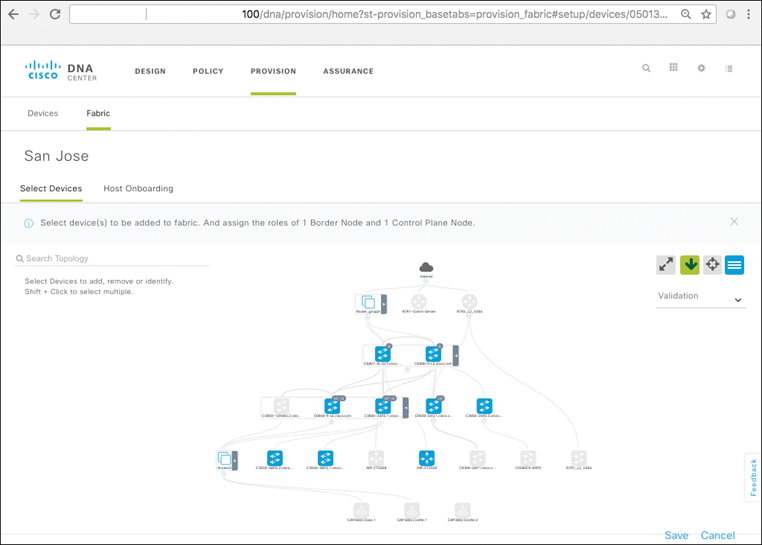

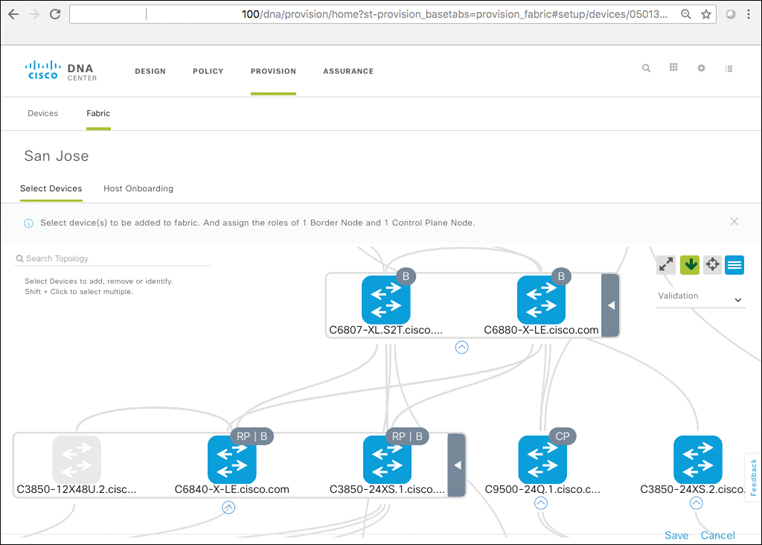

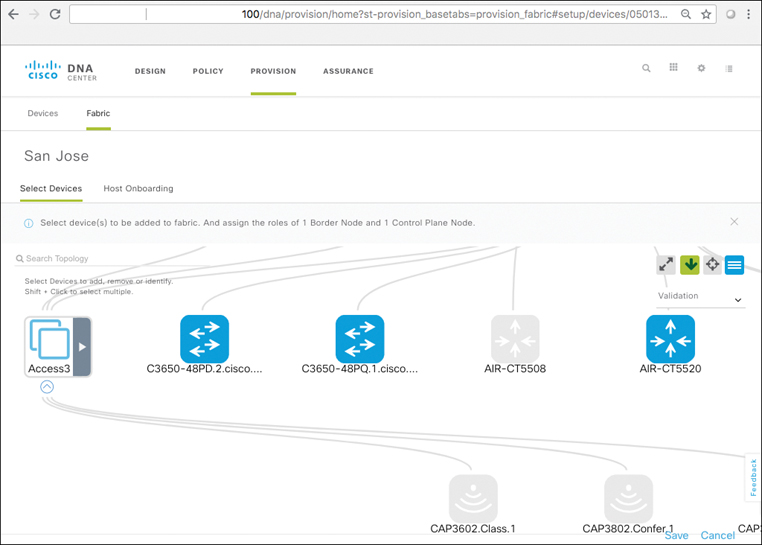

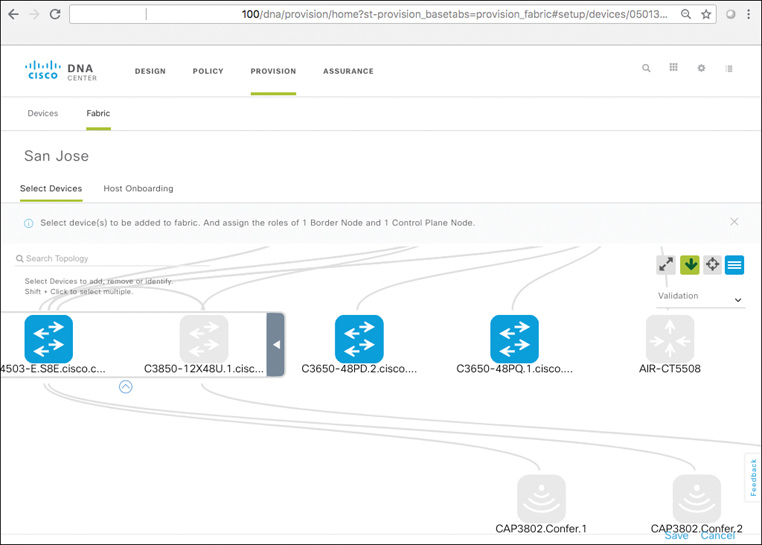

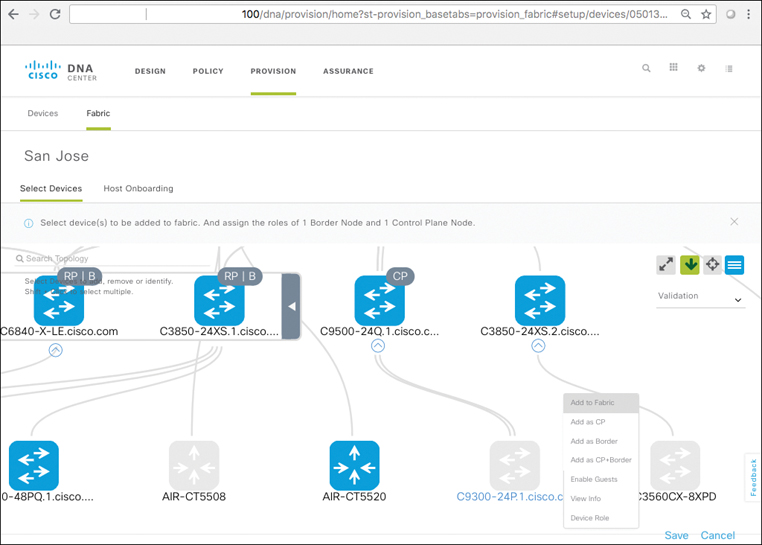

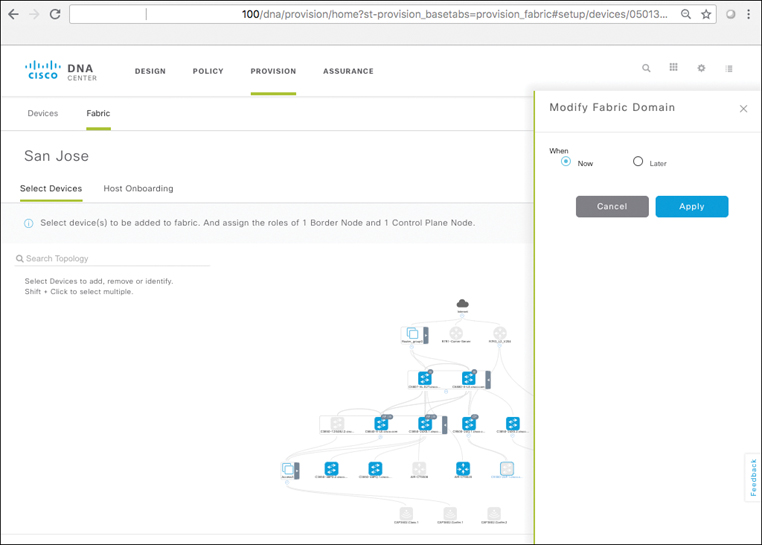

Provision: Design and policy come together in this workflow, where the actual network fabrics are created, devices are assigned to various roles within those fabrics, and device configurations are created and pushed out to the network elements that make up the SD-Access overlay/underlay infrastructure.

Assurance: The final workflow allows for the definition and gathering of telemetry from the underlying network, with the goal of analyzing the vast amounts of data which can be gathered from the network and refining this into actionable insights.

These four workflows in Cisco DNA Center are outlined in Figure 19-10.

As the central point for defining, deploying, and monitoring the SD-Access network, Cisco DNA Center plays a key role in any SD-Access implementation. A single Cisco DNA Center instance can be used to deploy multiple SD-Access fabrics.

Many of the key attributes and capabilities of Cisco DNA Center were outlined previously as we examined the Automation and Assurance functionality of Cisco DNA. Any SD-Access deployment leverages Cisco DNA Center as the core element for the definition, management, and monitoring of the SD-Access fabric.

Now, let’s examine a few of the key elements that Cisco DNA Center provisions in an SD-Access fabric deployment.

SD-Access Fabric Capabilities

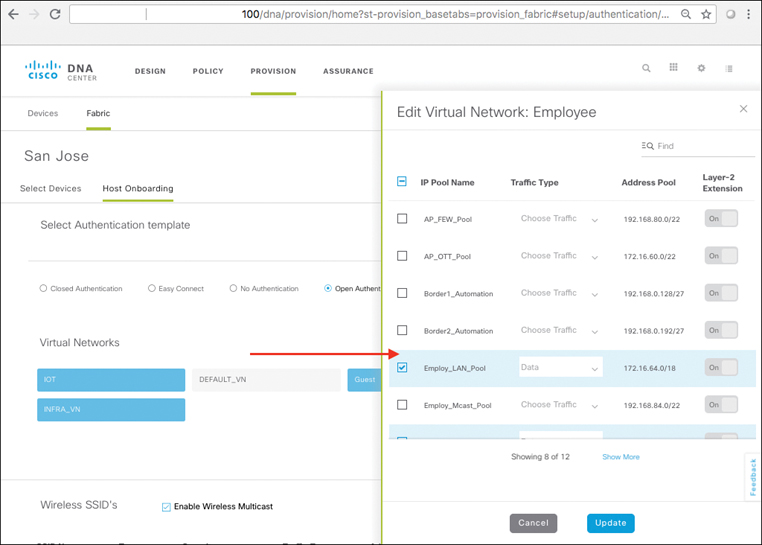

Three of these key elements which are defined in Cisco DNA Center and then rolled out into the SD-Access fabric for deployment are IP Host Pools, Virtual Networks, and Scalable Groups. Let’s double-click on each of these areas to gain a better understanding of the role they play in an SD-Access implementation.

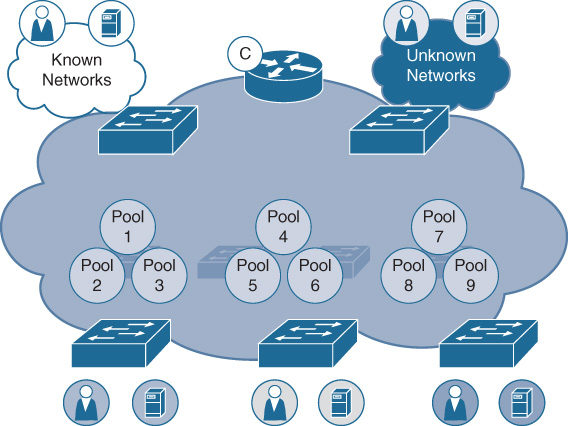

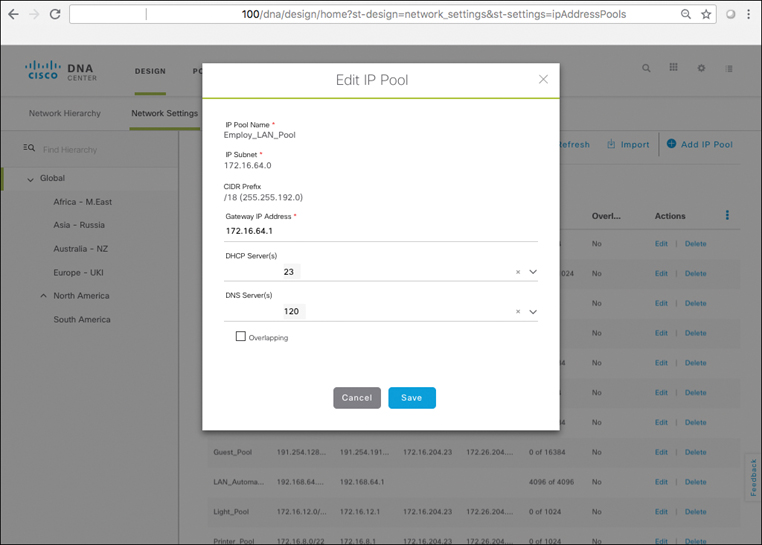

IP Host Pools

IP host pools are created within Cisco DNA Center, and are the IP subnets which are deployed for use by users, devices, and things attached to the SD-Access fabric. Host pools, once defined within a fabric deployment, are bound to a given Virtual Network, and are rolled out by Cisco DNA Center to all of the Fabric Edge switches in the fabric site involved. The subnets defined in the Host Pools are rolled out onto all the network edge switches within that fabric site (same virtual IP and virtual MAC at all locations at that site), thus driving network simplification and standardization.

Each IP host pool is associated with a distributed anycast default gateway (as discussed previously) for the subnet involved, meaning that each edge switch in the fabric serves as the local default gateway for any endpoints (wired or wireless) attached to that switch. The given subnet is by default “stretched” across all of the edge switches within the given fabric site deployment, making this host pool available to endpoints no matter where they attach to the given fabric at that site.

Figure 19-11 illustrates the deployment and use of IP host pools within an SD-Access fabric deployment.

Endpoints attaching to the SD-Access fabric are mapped into the appropriate IP host pools, either statically, or dynamically based on user/device authentication, and are tracked by the fabric control plane, as outlined in the following text concerning fabric device roles. To provide simplicity and allow for easy host mobility, the fabric edge nodes that the endpoints attach to implement the distributed anycast default gateway capability, as outlined previously, providing a very easy-to-use deployment model.

Virtual Networks

Virtual networks are created within Cisco DNA Center and offer a secure, compartmentalized form of macro-segmentation for access control. VNs are mapped to VRFs, which provide complete address space separation between VNs, and are carried across the fabric network as virtual network IDs (VNIs, also sometimes referred to as VNIDs) mapped into the VXLAN data plane header.

An illustration of VNs within an SD-Access deployment is shown in Figure 19-12.

Every SD-Access fabric deployment contains a “default” VN, into which devices and users are mapped by default (i.e., if no other policies or mappings are applied). Additional VNs are created and used as desired by the network administrator to define and enforce the network segmentation polices that the administrator may wish to use within the SD-Access fabric. An additional VN that exists by default within an SD-Access deployment is the INFRA_VN (Infrastructure VN), into which network infrastructure devices such as access points and extended node switches are mapped.

The scale for implementation of additional VNs depends of the scalability associated with network elements within the fabric, including the border and edge node types involved. New platforms are introduced periodically, each with its own scaling parameters, and as well the scale associated with devices may also vary by software release. Please refer to Cisco.com, as well as resources such as the Software-Defined Access Design Guide outlined in the “Further Reading” section at the end of this chapter (https://www.cisco.com/c/dam/en/us/td/docs/solutions/CVD/Campus/CVD-Software-Defined-Access-Design-Guide-2018AUG.pdf) for the latest information associated with VN scale across various device types within an SD-Access fabric deployment.

Scalable Groups

Scalable Groups (SG) are created within Cisco DNA Center as well as ISE, and offer a secure form of micro-segmentation for access control. SGs are carried across the SD-Access fabric as SGTs mapped into the VXLAN data plane header. Scalable Group ACLs (SGACLs) are used for egress traffic filtering and access control with an SD-Access deployment, enforced at the fabric edge and/or fabric border positions in the fabric.

For additional details on the use the SGTs and SGACLs, please refer to Chapter 9, where this is covered in greater depth.

The use of SGTs within an SD-Access deployment is illustrated in Figure 19-13.

The combination of VNs and SGTs within the SD-Access solution, along with the automated deployment of these capabilities by Cisco DNA Center, provide an extremely flexible and powerful segmentation solution for use by enterprise networks of all sizes.

The ability to easily define, roll out, and support an enterprise-wide segmentation solution has long been a desirable goal for many organizations. However, it has often proved to be out of reach for many in the past due to the complexities (MPLS VPNs, VRF-lite, etc.) associated with previous segmentation solutions.

SD-Access and Cisco DNA Center now bring this powerful capability for two levels of segmentation—macro-segmentation using VNs, and micro-segmentation using SGTs—to many enterprise networks worldwide, making this important set of security and access control capabilities far more consumable than they were previously.

Now that you’ve been introduced to some of the higher-level constructs that Cisco DNA Center assists in provisioning in an SD-Access fabric deployment—IP host pools, virtual networks, and Scalable Groups—let’s delve into the roles that various network infrastructure devices support within the fabric.

SD-Access Device Roles

Various network infrastructure devices perform different roles within an SD-Access deployment, and work together to make up the network fabric that SD-Access implements.

The various roles for network elements with an SD-Access solution are outlined in Figure 19-14.

These various device roles and capabilities are summarized briefly as follows:

Cisco DNA Center: Serves as the overall element for designing, provisioning, and monitoring the SD-Access deployment, as well as for providing network monitoring and assurance capabilities.

Identity Services Engine: Serves as the primary repository for identity and policy within the SD-access deployment, providing dynamic authentication and authorization for endpoints attaching to the fabric. ISE also interfaces with external identity sources such as Active Directory.

Control plane nodes: One or more network elements that implement the LISP Map Server/Map Resolver (MS/MR) functionality. Basically, the control plane nodes within the SD-Access fabric “keep track” of where all the users, devices, and things attached to the fabric are located, for both wired and wireless endpoints. The control plane nodes are key elements within the SD-Access fabric architecture, as they serve as the “single source of truth” as to where all devices attached to the SD-Access fabric are located as they connect, authenticate, and roam.

Border nodes: One or more network elements that connect the SD-Access fabric to the “rest of the world” outside of the fabric deployment. One of the major tasks of the border node or nodes is to provide reachability in and out of the fabric environment, and to translate between fabric constructs such as virtual networks and Scalable Group Tags and the corresponding constructs outside of the fabric environment.

Edge nodes: Network elements (typically, many) that attach end devices such as PCs, phones, cameras, and many other types of systems into the SD-Access fabric deployment. Edge nodes serve as the “first-hop” devices for attachment of endpoints into the fabric, and are responsible for device connectivity and policy enforcement. Edge nodes in the LISP parlance are sometimes known as Routing Locators (RLOCs), and the endpoints themselves are denoted by Endpoint IDs (EIDs), typically IP addresses or MAC addresses.

Fabric Wireless LAN Controller (WLC): Performs all the tasks that the WLC traditionally is responsible for—controlling access points attached to the network and managing the radio frequency (RF) space used by these APs, as well as authenticating wireless clients and managing wireless roaming—but also interfaces with the fabric’s control plane node(s) to provide a constantly up-to-date map of which RLOCs (i.e., access-layer fabric edge switches) any wireless EIDs are located behind as they attach and roam within the wireless network.

Intermediate nodes: Any network infrastructure devices that are part of the fabric that lies “in between” two other SD-Access fabric nodes. In most deployments, intermediate nodes consist of distribution or core switches. In many ways, the intermediate nodes have the simplest task of all, as they are provisioned purely in the network underlay and do not participate in the VXLAN-based overlay network directly. As such, they are forwarding packets solely based on the outer IP address in the VXLAN header’s UDP encapsulation, and are often selected for their speed, robustness, and ability to be automated by Cisco DNA Center for this role.

It is important to note that any SD-Access fabric site must consist of a minimum of three logical components: at least one fabric control plane, one or more edges, and one or more borders. Taken together, these constructs comprise an SD-Access fabric, along with Cisco DNA Center for automation and assurance and ISE for authentication, authorization, and accounting (AAA) and policy capabilities.

Now, let’s explore a few of these device roles in greater detail to examine what they entail and the functions they perform. As we examine each role, a sample overview of which devices might typically be employed in these roles is provided.

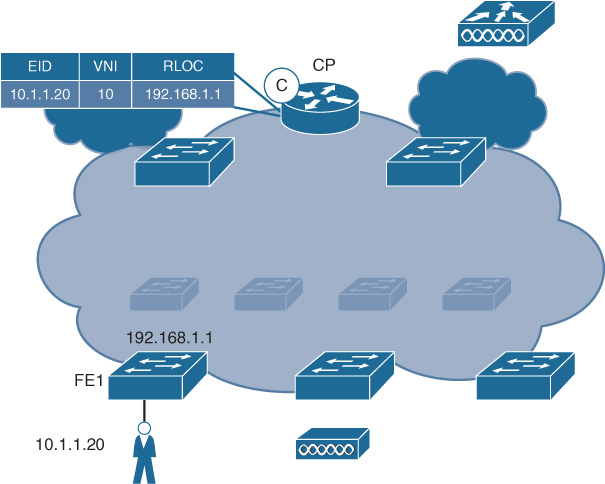

SD-Access Control Plane Nodes, a Closer Look

The role of the control plane node in SD-Access is a crucial one. The control plane node implements the LISP MS/MR functionality, and as such is tasked with operating as the single source of truth about where all users, devices, and things are located (i.e., which RLOC they are located behind) as these endpoints attach to the fabric, and as they roam.

The SD-Access control plane node tracks key information about each EID and provides it on demand to any network element that requires this information (typically, to forward packets to that device’s attached RLOC, via the VXLAN overlay network).

Figure 9-15 illustrates the fabric control plane node in SD-Access.

The fabric control plane node provides several important functions in an SD-Access network:

Acts as a host database, tracking the relationship of EIDs to edge node (RLOC) bindings. The fabric control plane node supports multiple different types of EIDs, such as IPv4 /32s and MAC addresses.

Receives prefix registrations from edge nodes for wired clients, and from fabric-mode WLCs for wireless clients.

Resolves lookup requests from edge and border nodes to locate endpoints within the fabric (i.e., which RLOC is a given endpoint attached to and reachable via).

Updates both edge and border nodes with wireless client mobility and RLOC information as clients roam within the fabric network system, as required.

Effectively, the control plane node in SD-Access acts as a constantly updated database for EID reachability. More than one control plane node can be (and would in most cases be recommended to be) deployed in an SD-Access fabric build.

If more than one control plane node is employed, they do not require or use any complex protocol to synchronize with each other. Rather, each control plane node is simply updated by all of the network elements separately—i.e., if two control plane nodes exist in a given fabric, an edge node, border node, or WLC attached to that fabric is simply configured to communicate with both control plane nodes, and updates both of them when any new device attaches to the fabric, or roams within it. In this way, the control plane node implementation is kept simple, without the complexity that would otherwise be introduced by state sync between nodes.

It is important to note that the control plane node is updated directly by an edge node (for example, an access switch) for wired clients attached to that switch, while for wireless clients the WLC (which manages the wireless domain) is responsible for interfacing with, and updating, the control plane node for wireless clients. The integration of wireless into an SD-Access fabric environment is examined in more detail later in this chapter.

A key attribute that it is critical to note about LISP as a protocol is that it is based on the concept of “conversational learning”—that is to say, a given LISP-speaking device (source RLOC) does not actually learn the location of a given EID (i.e., which destination RLOC that EID currently resides behind) until it has traffic to deliver to that EID. This capability for conversational learning is absolutely key to the LISP architecture, and is a major reason why LISP was selected as the reachability protocol of choice for SD-Access.

The task of a traditional routing protocol, such as Border Gateway Protocol (BGP), is essentially to flood all reachability information everywhere (i.e., to every node in the routing domain). Although this is workable when the number of reachability information elements (i.e., routes) is small—say, a few thousand—such an approach rapidly becomes impractical in a larger deployment.

Imagine, for example, a large campus such as a university that hosts on a daily basis (say) 50,000 to 75,000 users, each of whom may be carrying two or three network devices on average. Using an approach with a traditional routing protocol that attempted to flood the information about 150,000 to 200,000+ endpoints to all network devices would present a huge scaling challenge. Device ternary content-addressable memories (TCAMs) and routing tables would need to be enormous, and very expensive, and tracking all the changes as devices roamed about using the flooding-oriented control plane approach of a traditional routing protocol would rapidly overwhelm the CPU resource available even in large routing platforms—let alone the relatively much smaller CPU and memory resources available in (say) an access switch at the edge of the network.

Rather than use this approach, the LISP control plane node, operating in conjunction with LISP-speaking devices such as edge and border nodes, provides a conversational learning approach where the edge and border nodes query the control plane node for the destination RLOC to use when presented with traffic for a new destination (i.e., one that they have not recently forwarded traffic for). The edges and borders then cache this data for future use, reducing the load on the control plane node.

This use of conversational learning is a major benefit of LISP, as it allows for massive scalability while constraining the need for device resources, and is one of the major motivations for the use of LISP as the control plane protocol for use in SD-Access.

For further details on the operation of LISP, please refer to Chapter 9, which provides a description of the LISP protocol itself, as well as how roaming is handled within LISP.

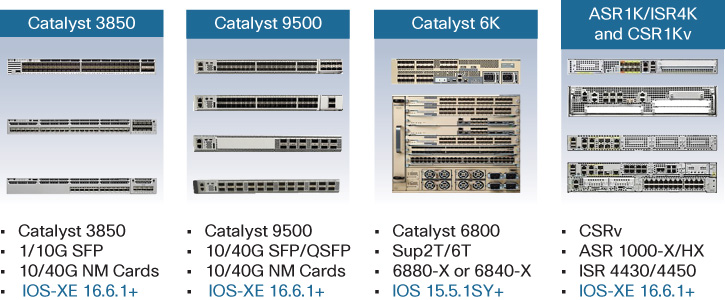

SD-Access Control Plane Nodes: Supported Devices

Multiple devices can fulfill the task of operating as a fabric control plane node. The selection of the appropriate device for this critical task within the fabric is largely based on scale—in terms of both the number of EIDs supported and the responsiveness and performance of the control plane node itself (based on CPU sizing, memory, and other control plane performance factors).

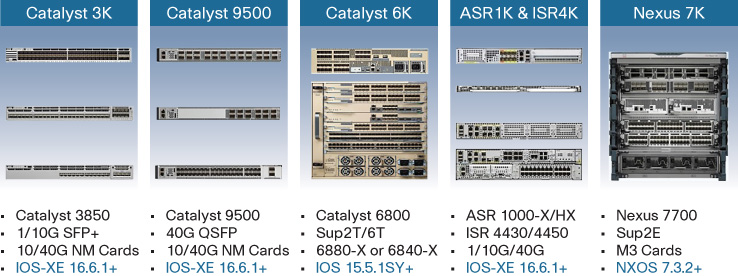

Figure 19-16 outlines some of the platforms that, as of this writing, are able to operate as control plane nodes within the SD-Access fabric.

For many deployments, the fabric control plane node function is based on a switch platform. This is often very convenient, as such switches often exist in the deployment build in any case.

The control plane function is either co-located on a single device with the fabric border function (outlined in the following section) or implemented on a dedicated device for the control plane node. Typically, dedicating a device or devices to the control plane role, rather than having them serve dual functions, results in greater scalability as well as improved fault tolerance (because a single device failure then only impacts one fabric role/function, not both simultaneously). Nevertheless, many fabrics may choose to implement a co-located fabric control plane/border node set of functions on a common device, for the convenience and cost savings this offers.

Typically, the Catalyst 3850 platform (fiber based) is selected as a control plane node only for the smallest fabric deployments, or for pilot systems where an SD-Access fabric is first being tested. The amount of memory and CPU horsepower available in the Catalyst 3850 significantly restricts the scalability it offers for this role, however.

Many fabric deployments choose to leverage a Catalyst 9500 switch as a fabric control plane node. The multicore Intel CPU used in these platforms provides a significant level of performance and scalability for such fabric control plane use, and makes the Catalyst 9500 an ideal choice for many fabric control plane deployments. For branch deployments that require a fabric control plane, the ISR 4000 platforms are also leveraged for this task, with their CPU and memory footprint making them well suited to this task for a typical branch.

The very largest fabric deployments may choose to leverage an ASR 1000 as a fabric control plane node. This offers the greatest scalability for those SD-Access fabric installations that require it. It is worth noting that some control plane node types offer more limited functionality than others.

Please refer to Cisco.com, as well as resources such as the Software-Defined Access Design Guide outlined in the “Further Reading” section at the end of this chapter, for the exact details of the scalability and functionality for each of these control plane options, which vary between platform types. The control plane scale associated with these platforms may also vary between software releases, so referring to the latest online information for these scaling parameters is recommended.

Because new platforms are always being created, and older ones retired, please also be sure to refer to Cisco.com for the latest information on supported devices for the SD-Access fabric control plane role.

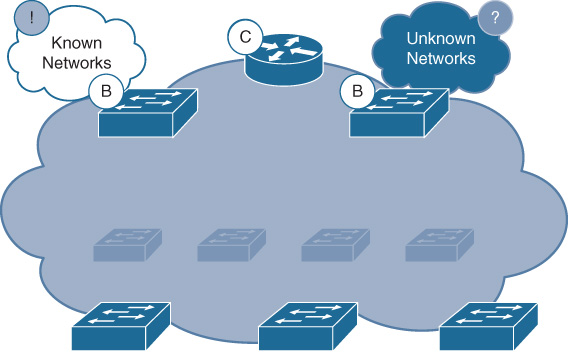

SD-Access Fabric Border Nodes, a Closer Look

Now, let’s examine further the role of a fabric border node in SD-Access fabric.

As previously mentioned, the task of a border node is twofold: to connect the SD-Access fabric to the outside world, and to translate between SD-Access fabric constructs (VNs, SGTs) and any corresponding constructs in the outside network.

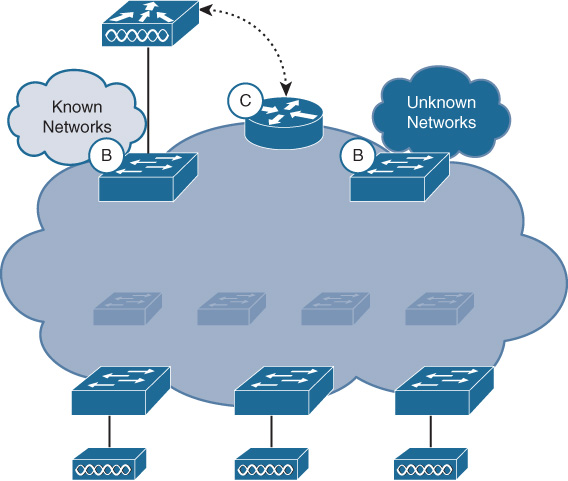

Figure 19-17 depicts fabric border nodes (marked with a B) in an SD-Access deployment.

An important item to note is that SD-Access defines two basic types of border node: a fabric border and a default border. Let’s examine each one of these in turn, after which we’ll look at the devices supported.

SD-Access Fabric Border Nodes

Fabric border nodes (i.e., ones that are not default border nodes) connect the SD-Access fabric deployment to external networks that host a defined set of subnets (in Figure 19-17, this is the border attached to the cloud marked Known Networks). Examples of these are fabric border nodes attaching to a data center, wherein lie a set of defined server subnets, or a fabric border node attaching to a WAN, which hosts a defined set of subnets leading to, and hosted at, branch locations. For this reason, fabric borders are also sometimes referred to as internal borders because they border onto defined areas typically constrained within the enterprise network.

The fabric border node advertises the subnets (IP host pools) located inside the fabric to such external-to-the-fabric destinations, and imports prefixes from these destinations to provide fabric reachability to them. In LISP nomenclature, the fabric border node performs the role of an Ingress/Egress Tunnel Router (xTR).

The tasks performed by a fabric border node include

Connecting to any “known” IP subnets attached to the outside network(s)

Exporting all internal IP host pools to the outside (as aggregates), using traditional IP routing protocols

Importing and registering known IP subnets from outside, into the fabric control plane

Handling any required mappings for the user/device contexts (VN/SGT) from one domain to another

An SD-Access deployment implements one or more fabric border nodes for a given fabric deployment, as needed. Each fabric border node registers the IP subnets that are located beyond it into the LISP mapping database as IP prefixes (with the exception of the default border type, as noted in the next section), allowing the LISP control plane to in turn refer any other nodes needing reachability to those prefixes to the appropriate fabric border node for forwarding. More than one fabric border may be defined and used for redundancy, if required.

Once the traffic arrives at the fabric border node from a fabric node (such as an edge switch), the VXLAN encapsulation for the incoming packet is removed, and the inner (user) packet is then forwarded on the appropriate external interface, and within the appropriate external context (for example, if multiple VRFs are in use in the outside network connected to the fabric border, the packet is forwarded in the correct one as per the border’s routing policy configuration).

When traffic arrives from the external network, the fabric border reverses this process, looking up the destination for the traffic from the fabric control plane node, then encapsulating the data into VXLAN and forwarding this traffic to the destination RLOC (typically, an edge switch).

SD-Access Fabric Default Border Nodes

As mentioned, fabric border nodes lead to “known” destinations outside of the fabric. However, there is often the need to reach out to destinations beyond the fabric for which it is impractical, or even impossible, to enumerate all possible subnets/prefixes (such as the Internet, for example). Borders that lead to such “unknown” destinations are known as fabric default borders.

The fabric default border node advertises the subnets (IP host pools) located inside the fabric to such external destinations. However, the fabric default border does not import any prefixes from the external domain. Rather, the fabric default border node operates similarly to a default route in a traditional network deployment, in that it serves as a forwarding point for all traffic whose destination inside or outside the fabric cannot otherwise be determined. When the LISP mapping system supporting a fabric domain has a “miss” on the lookup for a given external destination, this indicates to the system doing the lookup to forward traffic to the fabric default border node (if so configured). In LISP nomenclature, the fabric default border node performs the role of a proxy xTR (PxTR).

The tasks performed by a fabric default border node include

Connecting to “unknown” IP subnets (i.e., Internet)

Exporting all internal IP host pools to the outside (as aggregates), using traditional IP routing protocols

Handling any required mappings for the user/device contexts (VN/SGT) from one domain to another

Note that a fabric default border node does not import unknown routes. Instead, it is the “default exit” point if no other entries are present for a given destination in the fabric control plane.

An SD-Access deployment may implement one or more fabric border nodes and/or default border nodes for a given fabric site, as needed (the exact number depending on the deployment needs involved, as well as the fabric border platforms and software releases in use—refer to resources such as the Software-Defined Access Design Guide outlined in the “Further Reading” section at the end of this chapter for more details).

The actual operation of a fabric default border node is largely identical to that of a non-default border node for such actions as packet encapsulation, decapsulation, and policy enforcement, with the exception that outside prefixes are not populated by the default border into the fabric control plane. It is worth noting that it is also possible to provision a border as an “anywhere border,” thus allowing it to perform the functions of an internal border as well as a default border.

SD-Access Fabric Border Nodes: Supported Devices

Multiple devices can fulfill the task of operating as a fabric border node or default border node. As with the fabric control plane node, the selection of the appropriate device for this critical task within the fabric is largely based on scale—in terms of both the number of EIDs supported and the performance and sizing of the border node itself. By its nature, a border node must communicate with all devices and users within the fabric, and so is typically sized in line with the fabric it supports.

However, in addition, because the fabric border node is, by virtue of its role and placement within the network, inline with the data path in and out of the fabric, sizing of the fabric border node must also take into account the appropriate device performance, including traffic volumes, link speeds, and copper/optical interface types.

Figure 19-18 outlines some of the platforms that, as of this writing, operate as border nodes within the fabric.

A wide range of fabric border exists, as shown, with a wide range of performance and scalability available. Again, the appropriate choice of fabric border node depends on the scale and functionality requirements involved.

As mentioned previously, a fabric border is implemented either as a dedicated border node or co-located with a fabric control plane function. While some deployments choose the co-located option, others opt for dedicated borders and control planes to provide greater scalability and to lessen the impact of any single device failure within the fabric.

Again, the Catalyst 3850 platforms (fiber based) are typically selected as a border node only for the smallest fabric deployments, or for pilot systems where an SD-Access fabric is first being tested. The amount of memory and CPU horsepower available in the Catalyst 3850, as well as the hardware forwarding performance it offers, may restrict the scalability it offers for this role, however.

Many fabric deployments choose to leverage a Catalyst 9500 switch as a fabric border node. The higher overall performance offered by this platform, both in terms of hardware speeds and feeds and in terms of the multicore Intel CPU used, provides a significant level of performance and scalability for such fabric border use, and makes the Catalyst 9500 an ideal choice for many fabric border deployments.

For branch deployments, the ISR 4000 platforms are also leveraged for this fabric border task, with their CPU and memory footprint making them well suited to this task for a typical branch. The very largest fabric deployments may choose to leverage an ASR 1000 as a fabric border node. This offers the greatest scalability for those SD-Access fabric installations that require this.

When selecting a border platform, please note that not all fabric border functions and capabilities are necessarily available across all of the possible border platforms available.

Please refer to Cisco.com, as well as resources such as the Software-Defined Access Design Guide outlined in the “Further Reading” section at the end of this chapter, for the exact details of the scalability and functionality for each of these fabric border options. The border node scale associated with these platforms varies between platform types, and may also vary between software releases, so referring to the latest online information for these scaling parameters is recommended.

Because new platforms are always being created, and older ones retired, please also be sure to refer to Cisco.com for the latest information on supported devices for the SD-Access fabric border role.

SD-Access Fabric Edge Nodes

Fabric edge nodes serve to attach endpoint devices to the SD-Access fabric. When endpoints attach to the fabric, the edge node serves to authenticate them, either using static authentication (i.e., static mapping of a port to a corresponding VN/SGT assignment) or dynamically using 802.1X and actual user/device identity for assignment to the correct VN/SGT combination, based on that user’s or device’s assigned role.

For traffic ingressing the edge node from attached devices, the fabric edge node looks up the proper location for the destination (RLOC, switch or router) using the fabric control plane node, then encapsulates the ingress packet into VXLAN, inserting the appropriate VN and SGT to allow for proper traffic forwarding and policy enforcement, and subsequently forwards the traffic toward the correct destination RLOC. In LISP nomenclature, the fabric edge node operates as an xTR.

For appropriate QoS handling by the fabric edges as well as intermediate nodes and border nodes, the inner (user) packet DSCP value is copied into the outer IP/UDP-based VXLAN header, and may be used by any QoS policies in use within the fabric network.

Figure 19-19 illustrates the use of fabric edge nodes in SD-Access.

A summary of some of the important functions provided by fabric edge nodes includes

Identifying and authenticating attached wired endpoints

Registering endpoint ID information with fabric control plane nodes

Providing anycast Layer 3 gateway services for attached endpoints

Onboarding users and devices into the SD-Access fabric

Forming VXLAN tunnels with attached APs

As noted, the fabric edge node provides authentication services (leveraging the AAA server provided in the network, such as ISE) for wired endpoints (wireless endpoints are authenticated using the fabric-enabled WLC). The fabric edge also forms VXLAN tunnels with fabric-enabled access points. The details of wireless operation with SD-Access are covered later in this chapter.

A critical service offered by the fabric edge node is the distributed anycast Layer 3 gateway functionality. This effectively offers the same IP address and virtual MAC address for the default gateway for any IP host pool located on any fabric edge, from anywhere in the fabric site. This capability is key for enabling mobility for endpoints within the SD-Access fabric, and is illustrated in Figure 19-20.

The following are important items of note for the distributed anycast default gateway functionality provided by SD-Access:

It is similar principle to what is provided by HSRP/VRRP in a traditional network deployment (i.e., virtual IP gateway).

The same Switch Virtual Interface (SVI) is present on every fabric edge for a given host pool, with the same virtual IP and virtual MAC address.

If a host moves from one fabric edge to another, it does not need to change its Layer 3 default gateway information (because the same virtual IP/MAC address for the device’s default gateway is valid throughput the fabric site).

This anycast gateway capability is also critical for enabling the overall simplicity that an SD-Access-based deployment offers because it supports the “stretched subnet” capability inherent in SD-Access, as outlined previously in this chapter. This in turn allows enterprises, as noted previously, to vastly simplify their IP addressing planning and deployment.

Organizations deploying SD-Access now reap many of the benefits long enjoyed with wireless overlays for IP addressing—smaller numbers of larger subnets, and more efficient use of IP address space—for both their wired and wireless endpoints attached to their fabric edge switches.

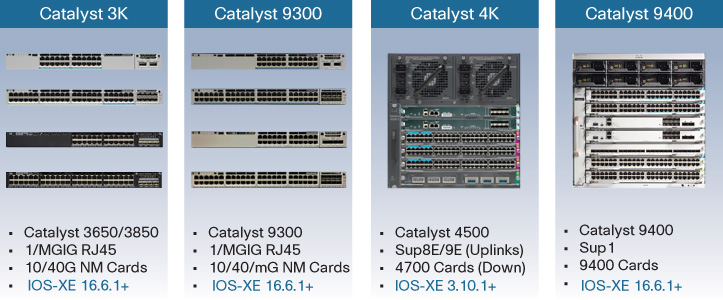

SD-Access Edge Nodes: Supported Devices

Multiple devices can fulfill the task of operating as a fabric edge node. The selection of the appropriate fabric edge node is typically driven by the port densities, uplink speeds, port types (10/100/1000, mGig, PoE or non-PoE, etc.) and edge functionality required.

Figure 19-21 provides an overview of some of the platforms that may be employed in the fabric edge role.

A very important aspect to note with the fabric edge node support in SD-Access is the inclusion of both the Catalyst 3850 and 3650 platforms. This capability to support the fabric edge capability on these platforms is a direct result of the outstanding capability provided by the flexible, programmable Unified Access Data Plane (UADP) chipset they employ.

Every Catalyst 3850 and 3650 ever produced, since the introduction of the platform, can (with the appropriate software load and licensing) be provisioned into an SD-Access fabric and operate as a fabric edge node. As one of the leading access switch platforms in the industry, deployed by many thousands of Cisco customers worldwide, this opens up the ability to deploy VXLAN-based SD-Access fabrics to many networks that would otherwise have to wait for a multiyear hardware refresh cycle before the migration to a next-generation network design could be considered.

The ability to leverage these widely deployed enterprise switches as fabric edge nodes in SD-Access is a direct result of Cisco’s inclusion of programmable ASIC hardware into these platforms. This provides an outstanding level of investment protection for Cisco customers using these platforms, and highlights the importance of the flexible, programmable UADP ASIC that was examined in detail in Chapter 7.

In addition, the Catalyst 4500 using Supervisor 8/9 (for uplinks to the rest of the fabric network) and 4700-series linecards (for downlinks to attached devices) can be employed as an SD-Access fabric edge switch, thus providing outstanding investment protection for those sites that prefer a modular access switch architecture.

Please refer to Cisco.com, as well as resources such as the “Software-Defined Access Design Guide” outlined in the “Further Reading” section at the end of this chapter, for the exact details of the scalability and functionality for each of these fabric edge options as shown. The fabric edge scale associated with these platforms varies between platform types, and may also vary between software releases, so referring to the latest online information for these scaling parameters is recommended.

Because new platforms are always being created, and older ones retired, please also be sure to refer to Cisco.com for the latest information on supported devices for the SD-Access fabric edge role.

SD-Access Extended Nodes

In some types of deployments, it might be desirable to connect certain types of network devices below the fabric edge. These may be devices that are specific types of Layer 2 switches, such as switches that provide form factors other than those supported by the fabric edge node, or that are designed to work in environments too hostile or demanding for a standard fabric edge node to tolerate.

In an SD-Access deployment, such devices are known as extended nodes. These plug into a fabric edge node port and are designated by Cisco DNA Center as extended nodes. Operating at Layer 2, these devices serve to aggregate endpoints attached to them into the upstream fabric edge node, at which point the traffic from these extended nodes is mapped from their respective Layer 2 VLANs into any associated VNs and SGTs, and forwarded within the SD-Access fabric. For this purpose, an extended node has an 802.1Q trunk provisioned from the fabric edge node to the attached extended node.

Because extended nodes lack all the capabilities of a fabric edge node, they depend on the fabric edge for policy enforcement, traffic encapsulation and decapsulation, and endpoint registration into the fabric control plane.

Supported extended nodes for an SD-Access fabric deployment as of this writing include the Catalyst 3560-CX compact switches, as well as selected Cisco Industrial Ethernet switches. Only these specific types of switches are supported as extended nodes, as they are provisioned and supported by Cisco DNA Center as an automated part of the SD-Access solution overall.

The ability to “extend” the edge of the SD-Access fabric using these switches allows SD-Access to support a broader range of deployment options, which traditional Cisco edge switches might not otherwise be able to handle alone. These include such diverse deployment types as hotel rooms, cruise ships, casinos, factory floors, and manufacturing sites, among others.

Please refer to Cisco.com, as well as resources such as the Software-Defined Access Design Guide outlined in the “Further Reading” section at the end of this chapter, for the exact details of the scalability and functionality for each of these fabric extended node options. The extended node scale associated with these platforms varies between platform types, and may also vary between software releases, so referring to the latest online information for these functionality and scaling parameters is recommended.

Because new platforms are always being created, and older ones retired, please also be sure to refer to Cisco.com for the latest information on supported devices for the SD-Access fabric extended node role.

Now that we’ve examined the options available for the SD-Access fabric wired infrastructure, let’s move on to examine wireless integration in an SD-Access fabric deployment.

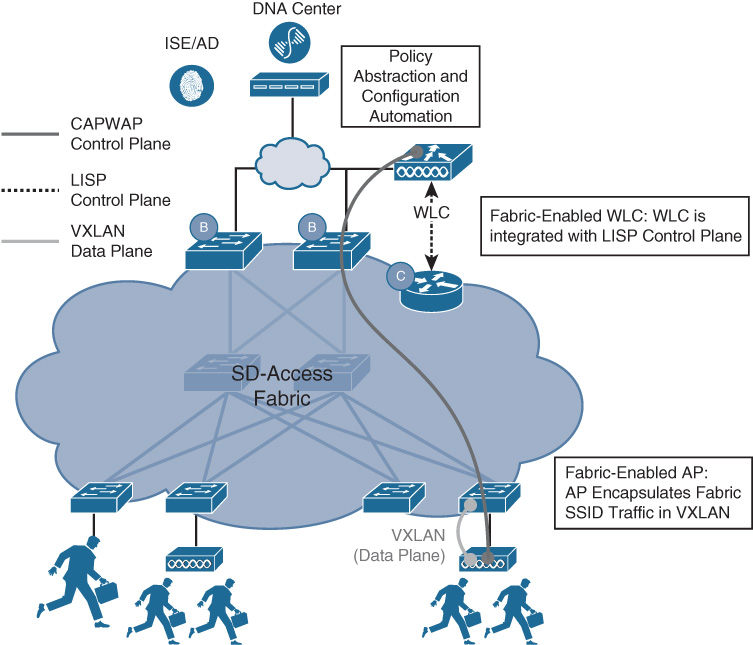

SD-Access Wireless Integration

Wireless mobility is a fact of life—and a necessity—for almost any modern organization. Almost all devices used in an enterprise network offer an option for 802.11 wireless connectivity—and some devices, such as smartphones and tablets, are wireless-only, offering no options for wired attachment. Today’s highly mobile workforce demands secure, speedy, reliable, and easy-to-use access to wireless connectivity.