V

Algebraic logic (II)

Homogeneous locally finite polyadic Boolean algebras of infinite degree

Table of contents

§2.Functional polyadic algebras,

§10.Functional representation,

§13.Factorization and commutativity,

§14.Constants from endomorpbisms,

The purpose of this paper is to do for the lower functional calculus what the first paper of the series did for the monadic special case. To a very great extent, however, the two papers are independent of each other. The situation is similar to that in the theory of differential equations. Once the basic but relatively elementary concept of differentiation is known, it can be used to give a mathematical formulation of the problems of classical Newtonian mechanics, usually in the form of ordinary differential equations. It can also be used to give a mathematical formulation to some of the more sophisticated problems of modern physics, usually in the form of partial differential equations. Similarly, once the algebraic version of the logical operation of quantification is defined, it can be applied to the algebraic study of either classical or modern logic. The analogy is quite close. Traditional Aristotelean logic can be viewed as the theory of propositional functions of a single variable; the lower functional calculus, on the other hand, treats propositional functions of any number of variables.

The analogy between physics and logic can be used to illuminate not only the role of this paper in the series, but, for that matter, the purpose of algebraic logic as a whole. It is fashionable nowadays to give a mathematical exposition of quantum mechanics in terms of certain algebraic systems. The concepts occurring in such an exposition, the terms used to describe them, and the problems selected for special emphasis are all suggested by the facts of physics. The algebra does not pretend to solve physical problems or to give new information about the state of the universe; its primary aim is to study a mathematical subject by mathematical methods. The mathematical machinery set up for this purpose is (unlike its extramathematical source) aesthetically attractive to and intellectually fathomable by the professional mathematician. It can happen that the mathematics will eventually come to be applicable to its own parent and capable of contributing to its growth, but the mathematics is of value even if that does not happen. It is of value not only in an intrinsic mathematical sense, but also because it can be used as a map with which the professional mathematician can venture into the territory of the professional physicist. If in the preceding description of the role of algebraic treatments of quantum mechanics words such as “physics” and “physicist” are replaced by “logic” and “logician”, the result is a fair description of the intended role of algebraic logic.

Not all the problems that interest a logician have their counterpart in algebraic logic. The reason for this is best seen in another analogy: roughly speaking, ordinary logic is to permutation groups as algebraic logic is to abstract (axiomatically defined) groups. Since the problems of transitivity and primitivity cannot even be expressed in the language of abstract group theory, the generality and elegance of that subject are obtained at the cost of some terminological and conceptual sacrifice. For some purposes a more illuminating ratio is this: ordinary logic is to free groups as algebraic logic is to abstract groups. This ratio is very suggestive. The logician’s customary exposition of the propositional calculus is, in fact, a description of the free Boolean algebra on a countable set of generators; similarly, the customary treatments of the functional calculi can be viewed as studies in the theory of free polyadic algebras. In group theory both the algebraic and the combinatorial methods have an important place; the reason for the existence of algebraic logic is the belief that the same is true in logic.

It is not claimed that the algebraic point of view simplifies all definitions and shortens all proofs; in many cases just the opposite is true. The pertinent analogy here is the relation between the classical theory of Hermitian matrices and the approach to the same subject via Hubert space. The definition of the conjugate transpose of a complex matrix can be given with perfect rigor on the first page of an elementary book. The corresponding definition in a book on Hubert space must wait its turn; many properties of inner products and linear functionals have to be established before the adjoint of an operator can make sense in an invariant, algebraic setting. An analogous situation in logic occurs, for instance, in connection with the concept of an individual constant. This simple concept is usually (and quite properly) dismissed with a few words in ordinary treatments of the subject; in the algebraic treatment (cf. § 12) it becomes a part of the most profound development of the theory.

The basic concepts of algebraic logic can be traced back to the work of Tarski and his collaborators; cf., for instance, [4], [6], and [7]. (Unfortunately the proofs of Tarski’s results on cylindric algebras have not yet been published, and it is, consequently, not easy to assess the exact extent of the overlap between the theories of cylindric algebras and polyadic algebras. It is probable that the basic difficulties are the same; the concepts and the techniques are likely to be different.) It will also be obvious to the reader familiar with some of the recent literature of logic that this paper was strongly influenced by Henkin’s thesis [3] and by some of the techniques introduced by Easiowa and Sikorski [5]. It is a pleasure, finally, to express my indebtedness to A. F. Bausch and to Alfred Tarski. I had many stimulating conversations about functional calculi with Bausch and about cylindric algebras with Tarski; I am grateful for their friendly advice and encouragement.

The principal results of the paper are the functional representation (Theorem 10.9), the adjunction of variables (Theorem 11.9), the adjunction of witnessing constants (Theorem 19.6), and the representation of simple algebras (Theorem 17.3). The reader who wants to get a quick snapshot of the subject, avoiding most of the technicalities, can proceed along the following course: glance at § 1 for the notation, read § 2, omit § 3 and § 4, read the definition of local finiteness in § 5, omit § 6 and § 7, read § 8 and § 9, read the remarks and the statements of the theorems (but not the proofs) in § 10, read the definition of dilations in § 11 and the definition of constants in § 12, omit § 13 and § 14, and read the definitions and the theorems in § 15, § 16, and § 17.

For convenience of reference this section contains a summary of those definitions and theorems from the theory of monadic algebras that will be used in the sequel. The theorems in this summary all belong to the elementary part of the subject; their proofs are easy consequences of the definitions and of the standard theory of Boolean algebras. The details and the heuristic motivation are given in [2].

We begin by establishing the notation that will be used for Boolean algebras. The symbols ![]() and

and ![]() are used to indicate the supremum and the infimum, respectively, of sets in a Boolean algebra. Other Boolean symbols, which will be used without any further explanation below, are as follows: p∨q is the supremum of p and q, p

are used to indicate the supremum and the infimum, respectively, of sets in a Boolean algebra. Other Boolean symbols, which will be used without any further explanation below, are as follows: p∨q is the supremum of p and q, p![]() q is the infimum of p and q, p′ is the complement of p, 0 is the zero element of the algebra, 1 is the unit element of the algebra, p – q( = p

q is the infimum of p and q, p′ is the complement of p, 0 is the zero element of the algebra, 1 is the unit element of the algebra, p – q( = p![]() q′) is the difference between p and g, p + q( = (p − q)∨(q – p)) is the Boolean sum (or symmetric difference) of p and q. The symbols

q′) is the difference between p and g, p + q( = (p − q)∨(q – p)) is the Boolean sum (or symmetric difference) of p and q. The symbols ![]() and

and ![]() will denote the natural order in a Boolean algebra, so that, for instance,

will denote the natural order in a Boolean algebra, so that, for instance, ![]() means that p

means that p![]() q = p. The identity mapping of a Boolean algebra onto itself will be denoted by e. It is understood that, by definition, a Boolean algebra contains at least two distinct elements. The symbol O will be used to denote the two-element Boolean algebra {0,1}, so that O is a Boolean subalgebra of every Boolean algebra.

q = p. The identity mapping of a Boolean algebra onto itself will be denoted by e. It is understood that, by definition, a Boolean algebra contains at least two distinct elements. The symbol O will be used to denote the two-element Boolean algebra {0,1}, so that O is a Boolean subalgebra of every Boolean algebra.

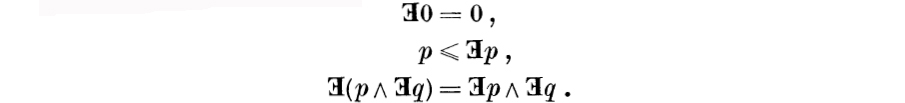

A quantifier (or, properly speaking, an existential quantifier) is a normalized, increasing, and quasi-multiplicative mapping ![]() of a Boolean algebra into itself; in other words, it is a mapping

of a Boolean algebra into itself; in other words, it is a mapping ![]() such that if p and q are in the algebra, then

such that if p and q are in the algebra, then

The discrete quantifier is the identity mapping e; the simple quantifier is the one for which ![]() whenever p ≠ 0. A hemimorphism is a normalized and additive mapping f from one Boolean algebra into another; in other words, it is a mapping f such that f0 = 0 and such that if p and q are in its domain, then f(p∨q) = fp∨fq. Clearly every Boolean homo-morphism (and, in particular, every Boolean endomorphism) is a hemimorphism.

whenever p ≠ 0. A hemimorphism is a normalized and additive mapping f from one Boolean algebra into another; in other words, it is a mapping f such that f0 = 0 and such that if p and q are in its domain, then f(p∨q) = fp∨fq. Clearly every Boolean homo-morphism (and, in particular, every Boolean endomorphism) is a hemimorphism.

The following simple facts are basic in the theory of quantifiers.

(1.1) A quantifier is idempotent i. e., ![]() ).

).

(1.2) A quantifier is monotone (i. e., if ![]() , then

, then ![]() ).

).

(1.3) The range of a quantifier is a Boolean algebra.

(1.4) A quantifier is additive (i. e., ![]() ).

).

(1.5) If ![]() is a quantifier and if p and q are elements of its domain, then

is a quantifier and if p and q are elements of its domain, then ![]() .

.

We observe that in view of (1.4) a quantifier is a hemimorphism; from this and from the elementary fact that a hemimorphism is monotone we can recapture (1.2). The reason for stating the facts in the order used above is that in the proof of (1.4) it is convenient to make use of (1.2).

A monadic algebra is a Boolean algebra A together with a quantifier ![]() on A. A subset B of a monadic algebra A is a monadic subalgebra of A (or a monadic ideal in A) if and only if B is a Boolean subalgebra of A (or a Boolean ideal in A) such that

on A. A subset B of a monadic algebra A is a monadic subalgebra of A (or a monadic ideal in A) if and only if B is a Boolean subalgebra of A (or a Boolean ideal in A) such that ![]() belongs to B whenever p belongs to B. A mapping f between monadic algebras is a monadic homomorphism if it is a Boolean homomorphism such that

belongs to B whenever p belongs to B. A mapping f between monadic algebras is a monadic homomorphism if it is a Boolean homomorphism such that ![]() for every p in its domain. (The adjective “monadic” will be used with “subalgebra”, “ideal”, “homomorphism”, etc., whenever it is advisable to emphasize the distinction from other kinds of subalgebras, ideals, homomorphisms, etc. – e. g., from the plain Boolean kind. Usually, however, the adjective will be omitted and the context will unambigously indicate what is meant. A similar remark applies to the polyadic concepts that will be introduced later.) A monadic algebra is simple if {0} is the only proper ideal in it. A monadic ideal is maximal if it is not a proper subset of any proper ideal. A monadic algebra is semisimple if the intersection of all maximal ideals in it is {0}.

for every p in its domain. (The adjective “monadic” will be used with “subalgebra”, “ideal”, “homomorphism”, etc., whenever it is advisable to emphasize the distinction from other kinds of subalgebras, ideals, homomorphisms, etc. – e. g., from the plain Boolean kind. Usually, however, the adjective will be omitted and the context will unambigously indicate what is meant. A similar remark applies to the polyadic concepts that will be introduced later.) A monadic algebra is simple if {0} is the only proper ideal in it. A monadic ideal is maximal if it is not a proper subset of any proper ideal. A monadic algebra is semisimple if the intersection of all maximal ideals in it is {0}.

(1.6) A monadic algebra is simple if and only if its quantifier is simple.

(1.7) Every monadic algebra is semisimple.

If X is a non-empty set and if B is a Boolean algebra, the set of all functions from X into B is a Boolean algebra with respect to the pointwise operations. A B-valued functional monadic algebra with domain X is a Boolean subalgebra A of the algebra of all functions from X into B such that, for each p in A, the range of p has a supremum in B, and such that the (constant) function, whose value at every point of X is that supremum, belongs to A. If that constant function is denoted by ![]() , then

, then ![]() is a quantifier on A, so that a functional monadic algebra is a monadic algebra.

is a quantifier on A, so that a functional monadic algebra is a monadic algebra.

(1.8) An O-valued functional monadic algebra is simple.

A constant of a monadic algebra A (with quantifier ![]() ) is a Boolean endomorphism c on A such that

) is a Boolean endomorphism c on A such that ![]() and

and ![]() .

.

(1.9) A constant is idempotent (i. e., cc = c).

(1.10) If c is a constant of a monadic algebra A with quantifier ![]() , then

, then ![]() for every p in A.

for every p in A.

§ 2. Functional polyadic algebras

If “proposition” is taken to mean an element of a Boolean algebra B, then it is natural to interpret “propositional function” as a function from some set into B. A propositional function of several variables becomes in this scheme a function from a Cartesian product of several sets into B. In order to keep the notation from becoming cumbersome, we shall restrict our attention to Cartesian powers, i. e., to sets of the form XI, where I is an arbitrary index set. (Cf. also the discussion at the end of § 4.) An element of XI is, by definition, a function from I into X; the value of such a function x at an index i will be denoted by a xi.

The set of all functions from a non-empty set into a Boolean algebra is a Boolean algebra with respect to the pointwise operations. According to the usual conventions the set XI is empty if and only if X is empty but I is not. In order to ensure the non-emptiness of XI we shall always assume that the set X is not empty. Since the elements of the set X constitute the universe of discourse with which the propositions and propositional functions of the theory are concerned, this assumption is a reasonable one.

The main thing that distinguishes the abstractly given Boolean algebra B from the Boolean algebra of all B- valued functions on XI is that the concrete representation of the latter automatically endows it with a lot of new structure. The additional structure comes from the presence of variables. Substituting some variables for others, or holding some variables fixed while forming a supremum over the others, we obtain new propositional functions out of old ones.

To make these indications more specific, we consider the set of all transformations on I, i. e., the set of all transformations whose domain is the set I and whose range is included in I. Since the transformations we consider are not necessarily one-to-one and not necessarily onto, the set of all such transformations does not form a group. Since, however, the product (i. e., composite) of two transformations on I is again a transformation on I, and since transformation multiplication is associative, the set of all transformations on I does form a semigroup. Since the identity mapping from I onto itself (which we shall always denote by δ) is a transformation on I, the semigroup contains a (necessarily unique) unit.

The idea of “substituting some variables for others” is to apply a transformation on I to the indices of the arguments of a function from XI into B. The desideratum is to associate with each transformation τ on I, in a natural manner, a transformation S(τ) that sends propositional functions (i. e., functions from XI into B) into propositional functions. The definition of S(τ) is most easily given in terms of an auxiliary transformation τ* on XI. The transformation τ* is defined by

![]()

for all i in I and for all x in XI. The functional transformation S(τ) associated with a transformation τ on I is defined by

![]()

for all x in XI and for all functions p from XI into B. (The symbol S(τ)p(x) is an abbreviation for (S(τ)p)(x). Such abbreviations will be used frequently without any further explanation.) The precise version of “substituting some variables for others” is the application of a transformation such as S(τ).

The formation of partial suprema is a slightly more delicate matter. If J is a subset of I (not necessarily proper), then there is associated with J in a natural manner a transformation ![]() that sends some (but not necessarily all) propositional functions into others. The definition of

that sends some (but not necessarily all) propositional functions into others. The definition of ![]() is most easily given in terms of an auxiliary binary relation J* in XI. The relation J* is, by definition, such that

is most easily given in terms of an auxiliary binary relation J* in XI. The relation J* is, by definition, such that

![]()

for all x and y in XI. (The symbols ![]() and ∉ are used to indicate the relations of belonging and non-belonging, respectively.) The functional transformation

and ∉ are used to indicate the relations of belonging and non-belonging, respectively.) The functional transformation ![]() is defined by

is defined by

![]()

provided that the indicated supremum exists for every x in XI. The idea is to form the supremum of the values of the function p over the points obtained from x by holding fixed the variables outside J and varying the variables in J arbitrarily.

Since ![]() need not exist for every function p from XI into B, we cannot continue to insist on studying the Boolean algebra of all functions from XI into B. We must and we shall be content with considering a Boolean subalgebra of that algebra, say A. By requiring that

need not exist for every function p from XI into B, we cannot continue to insist on studying the Boolean algebra of all functions from XI into B. We must and we shall be content with considering a Boolean subalgebra of that algebra, say A. By requiring that ![]() exist and belong to A for every subset J of I and for every element p of A we can circumvent the difficulty caused by the possible non-existence of suprema. Now, however, another difficulty arises. If τ is a transformation on I, then S(τ)p is a function from XI into B whenever p is such, but if we assume that p

exist and belong to A for every subset J of I and for every element p of A we can circumvent the difficulty caused by the possible non-existence of suprema. Now, however, another difficulty arises. If τ is a transformation on I, then S(τ)p is a function from XI into B whenever p is such, but if we assume that p ![]() A, that does not guarantee that S(τ)p

A, that does not guarantee that S(τ)p ![]() A. This difficulty can be circumvented also, simply by requiring that A be closed under the operation S(τ) for every transformation τ on I.

A. This difficulty can be circumvented also, simply by requiring that A be closed under the operation S(τ) for every transformation τ on I.

We can summarize the preceding considerations as follows. Suppose that B is a Boolean algebra and that X and I are sets, with X non-empty. The elements of I will play for us the role of what are usually called variables (although they do not, in any sense of the word, vary), and the set X will play the role of the domain of the variables (although, in general, the variables do not belong to the domain). The elements of the Boolean algebra B will play the role of the propositions that can occur as the values of the propositional functions to be considered. A functional polyadic (Boolean) algebra is a Boolean subalgebra A of the algebra of all functions from XI into B, such that S(τ)p ![]() A whenever p

A whenever p ![]() A and τ is a transformation on I, and such that

A and τ is a transformation on I, and such that ![]() exists and belongs to A whenever p

exists and belongs to A whenever p ![]() A and J is a subset of I. Whenever it becomes desirable to indicate the constituents used in the definition, A will be called a B-valued functional polyadic algebra with domain X and variables I, or, more simply, a B-valued I-algebra over X. Functional polyadic algebras are the concrete objects in terms of which propositional functions can be studied; our main purpose is to investigate the abstract algebraic objects of which functional polyadic algebras are the typical representations.

A and J is a subset of I. Whenever it becomes desirable to indicate the constituents used in the definition, A will be called a B-valued functional polyadic algebra with domain X and variables I, or, more simply, a B-valued I-algebra over X. Functional polyadic algebras are the concrete objects in terms of which propositional functions can be studied; our main purpose is to investigate the abstract algebraic objects of which functional polyadic algebras are the typical representations.

In order to find out what are the algebraic properties of the operators S(τ) and ![]() on a functional polyadic algebra, we shall make use of a concept in terms of which we can treat both kinds of operators at the same time. The concept (to be called substitution) is not an intuitive one; the only reason for introducing it here is that it is efficient for the purpose at hand. By a substitution in a set I we shall mean a function σ whose domain (doma) and range (rancr) are subsets of I. The fact that the domain of a substitution is not necessarily equal to I will be important for us. In what follows we shall reserve the use of the word transformation for a substitution τ such that domτ = I; this is in agreement with the use of that word in the preceding section.

on a functional polyadic algebra, we shall make use of a concept in terms of which we can treat both kinds of operators at the same time. The concept (to be called substitution) is not an intuitive one; the only reason for introducing it here is that it is efficient for the purpose at hand. By a substitution in a set I we shall mean a function σ whose domain (doma) and range (rancr) are subsets of I. The fact that the domain of a substitution is not necessarily equal to I will be important for us. In what follows we shall reserve the use of the word transformation for a substitution τ such that domτ = I; this is in agreement with the use of that word in the preceding section.

If σ and τ are substitutions, their product στ is defined to be the substitution whose domain is the set of all those elements i of I for which i ![]() domτ and i

domτ and i ![]() domσ and whose value for each i in its domain is defined by (στ)i = σ(τi). It is easy to verify that substitution multiplication is associative, so that, with respect to multiplication as the law of composition, the set of all substitutions in I is a semigroup. This semigroup has a unit, namely the identity transformation δ.

domσ and whose value for each i in its domain is defined by (στ)i = σ(τi). It is easy to verify that substitution multiplication is associative, so that, with respect to multiplication as the law of composition, the set of all substitutions in I is a semigroup. This semigroup has a unit, namely the identity transformation δ.

There is another and more familiar semigroup associated with the set I, namely the set of all subsets of I, with respect to the formation of unions as the law of composition. This semigroup also has a unit, namely the empty set Ø. There is a close relation between (all) subsets of I and (some) substitutions in I. In order to study this relation we make correspond to each subset J the identity mapping, to be denoted by χ(J), on I–J. More explicitly, χ(J) is the substitution such that domχ(J) = I–J and χ(J)i = i for all i in I–J.

(3.1) LEMMA. The correspondence χ is a one-to-one correspondence from the set of subsets of I into the set of substitutions in I such that χ(Ø) = δ and χ(J∪K) = χ(J) χ (K).

Proof. Since domχ(J)χ(K) = (I–J)∩(I–K) = I–(J∪K), it follows that χ(J)χ(K) = χ(J∪K). The proof is completed by the observation that χ(J) = δ if and only if J = Ø.

We note that, in algebraic terms, the result of Lemma 3.1 is simply that the correspondence χ is a semigroup monomorphism.

There is a possible source of minor confusion in connection with the equation χ(Ø) = δ. The empty set Ø is also a substitution, namely the unique substitution with empty domain and empty range. It is notationally convenient to make a distinction between the empty set as a subset of I and the empty set as a substitution in I; we shall denote the latter by θ. It is clear that the substitution θ belongs to the range of the correspondence χ; in fact, θ = χ(I).

Transformations on I and subsets of I are more or less natural objects, whereas substitutions are apparently artificial constructs. In fact, however, substitutions arise quite naturally from transformations and subsets, via the correspondence χ.

(3.2) LEMMA. Every substitution a can be written in the form τχ(J), where τ is a transformation on I and J is a subset of I. The set J in this representation is uniquely determined as I – domσ; the transformation τ must agree with σ outside J and is arbitrary in J.

Proof. If σ = τχ(J), then domσ = domχ(J) = I–J, and, if i![]() I–J, then τi = τχ(J)i = σi. This proves the uniqueness assertions. To prove the rest, let τ be an arbitrary transformation that agrees with σ in domσ; an immediate verification shows that if J = I — domσ, then σ = τχ(J).

I–J, then τi = τχ(J)i = σi. This proves the uniqueness assertions. To prove the rest, let τ be an arbitrary transformation that agrees with σ in domσ; an immediate verification shows that if J = I — domσ, then σ = τχ(J).

What is the connection between the representation established in Lemma 3.2 and substitution multiplication? The next two results provide the answer to this question.

(3.3) LEMMA. If τ is a transformation and J⊂I, then χ(J)τ = τχ(τ−1J).

Proof. An element i of I belongs to domχ(J)τ if and only if τi ![]() domχ(J) = I– J, i. e., if and only if i

domχ(J) = I– J, i. e., if and only if i ![]() τ−1(I–J) = I – τ−1J. An element i of I belongs to domτχ(τ−1J) if and only if i

τ−1(I–J) = I – τ−1J. An element i of I belongs to domτχ(τ−1J) if and only if i ![]() domχ(τ−1J) = I–τ−1J. In other words, χ(J)τ and τχ(τ−1J) have the same domain; if i belongs to this common domain, then χ(J)τi = τi and τχ(τ−1J)i = τi.

domχ(τ−1J) = I–τ−1J. In other words, χ(J)τ and τχ(τ−1J) have the same domain; if i belongs to this common domain, then χ(J)τi = τi and τχ(τ−1J)i = τi.

(3.4) LEMMA. If σ1 = τ1χ(J1) and σ2 = τ1χ(J1), where τ1 and τ1 are transformations, then ![]() .

.

Proof. Observe that σ1σ2 = τ1χ(J1)τ2χ(J2), and apply Lemma 3.3 to the product χ(J1)τ2.

We proceed now to introduce, without any immediately apparent motivation, a concept that will presently turn out to be useful. For substitutions σ and τ we shall say that σ follows τ, and we shall write σ τ, if τ never maps two distinct elements onto the same element of I – domσ. (The notation is not intended to suggest, and it is not in fact true, that the relation thereby defined is a partial order.) The definition can be rephrased as follows: σ

τ, if τ never maps two distinct elements onto the same element of I – domσ. (The notation is not intended to suggest, and it is not in fact true, that the relation thereby defined is a partial order.) The definition can be rephrased as follows: σ τ if and only if the conditions i ≠ j and τi = τj = k imply that k

τ if and only if the conditions i ≠ j and τi = τj = k imply that k ![]() domσ. Equivalently: σ

domσ. Equivalently: σ τ if and only if τ is one-to-one on the set τ−1(I – domσ). While none of these conditions has much intuitive appeal, the point in considering them is that in addition to being equivalent to each other they also turn out to be equivalent to an algebraically elegant and intuitively natural condition (cf. Theorem 3.8).

τ if and only if τ is one-to-one on the set τ−1(I – domσ). While none of these conditions has much intuitive appeal, the point in considering them is that in addition to being equivalent to each other they also turn out to be equivalent to an algebraically elegant and intuitively natural condition (cf. Theorem 3.8).

To prepare the ground for later work, and also as an aid in understanding this somewhat peculiar concept, we explicitly mention some useful special cases.

(3.5) LEMMA. If σ is a transformation, then σ τ for all τ. If τ is one-to-one, and, in particular, if τ = χ(J) for some J, then σ

τ for all τ. If τ is one-to-one, and, in particular, if τ = χ(J) for some J, then σ τ for all σ.

τ for all σ.

Proof. The first assertion follows from the fact that if σ is a transformation, then I – domσ is empty. The second assertion is obvious.

(3.6) LEMMA. A necessary and sufficient condition that χ(J) τ is that τ be one-to-one on τ−1J.

τ is that τ be one-to-one on τ−1J.

Proof. The condition means that the restriction of the function τ to the set τ−1J is one-to-one, and this, in turn, means exactly that τ never maps two distinct elements onto the same element of J. Since J = I – dom χ(J), the proof is complete.

Now we consider again a Cartesian power XI, where X is a non-empty set; we shall discuss the way in which the action of the substitutions discussed above is reflected in the set XI. This is most easily done in terms of an auxiliary binary relation σ* in XI associated with each substitution σ in I. The relation σ* (called the dual of σ) is, by definition, such that

(3.7) xσ*y if and only if xσi = yi whenever i ![]() domσ

domσ

for all x and y in XI.

The duals of transformations and the duals of substitutions of the form χ(J) are particularly simple. If τ is a transformation, then xτ*y means that xτi = yi for all i. Hence, in this case, y is uniquely determined by x, so that the relation τ* is a function; the value of the function τ* at a point x of X is given by (τ*x)i = xτi. (We have thus recaptured (2.1) as a special case of (3.7).) Note in particular that δ* is the identity mapping on XI. If σ = χ(J), then xσ*y means that xi = yi,·whenever i ∉J. (We have thus recaptured (2.3) as a special case of (3.7).) In this case σ* is obviously an equivalence relation; it is exactly the relation that we denoted by J* in the preceding section. Since χ(Ø) = δ, the fact that δ* is the relation of equality in XI is the relation version of the characterization of δ* stated just above in the language of mappings. We note that, at the opposite extreme, θ* = I* is the trivial equivalence relation that places all the points of XI into the same equivalence class. Another worth while observation is that if J ⊂ K, then J* ⊂ K*. The inclusion sign here has its usual meaning if it is recalled that a relation is a set of ordered pairs; explicitly J* ⊂ K* means that xJ*y implies that xK*y whenever x and y are in XI.

The terminology and the notation are designed to indicate that the mapping σ→σ* behaves like duality mappings behave in many other parts of mathematics. Experience with such mappings makes it reasonable to conjecture that (στ)* = τ*σ* (in the sense of relation product). Unfortunately, however, this equation does not always hold. It is at this point that our concept of one substitution “following” another becomes useful; that apparently ad hoc condition is, in all non-trivial cases, necessary and sufficient for the validity of the desired equation.

(3.8) THEOREM. If σ τ, then (στ)* = τ*σ*. If, conversely, X consists of more than one point, and (στ)* = τ*σ*, then σ

τ, then (στ)* = τ*σ*. If, conversely, X consists of more than one point, and (στ)* = τ*σ*, then σ τ.

τ.

Remark. The relation product τ*σ* is defined so that x(τ*σ*)z holds if and only if there exists a point y with yτ*z and xσ*y. This order of events is in accordance with the standard functional notation. Indeed, if both τ* and σ* are single - valued, then τ*σ* is single - valued and x(τ*σ*) z means that z = τ*σ*. If, in this case, y = σ*x, then z = τ*y. These considerations have at least a mnemonic value even in the general case; the proper notational set-up for any relation product can be instantaneously rederived by pretending that the factors are functions.

If X consists of just one point, then the same is true of XI. In this case σ* is the identity mapping of XI onto itself for every σ, and, consequently, (στ)* = τ*σ* is universally valid.

Proof. Assume first that x(τ*σ*)z and let y be a witness to this connection; assume, in other words, that yτ*z and xσ*y. Suppose now that i ![]() domστ, so that i

domστ, so that i![]() domτ and τi

domτ and τi![]() domσ. Since yτ*z and i

domσ. Since yτ*z and i ![]() domτ, it follows that yτi = zi. Since xσ*y and τi

domτ, it follows that yτi = zi. Since xσ*y and τi![]() domσ, it follows that xσ(τi) = yτi Conclusion: x(στ)i = zi whenever i

domσ, it follows that xσ(τi) = yτi Conclusion: x(στ)i = zi whenever i ![]() domστ, and therefore x(στ)*z. We have proved so far that τ*σ* ⊂ (στ)*; note that the assumption σ

domστ, and therefore x(στ)*z. We have proved so far that τ*σ* ⊂ (στ)*; note that the assumption σ τ was not used yet.

τ was not used yet.

Assume next that x(στ)*z; this means that x(στ)i = zi whenever i ![]() domτ and τi

domτ and τi ![]() domσ. To prove that x(τ*σ*)z, a witness y to this connection will be constructed in three stages, (i) If j

domσ. To prove that x(τ*σ*)z, a witness y to this connection will be constructed in three stages, (i) If j ![]() domσ, put yj = x xσi. (ii) If j

domσ, put yj = x xσi. (ii) If j ![]() ranτ – domσ, then j

ranτ – domσ, then j ![]() I – domσ and therefore (here is where the assumption σ

I – domσ and therefore (here is where the assumption σ τ comes in) there is at most one i such that j = τi; since also j

τ comes in) there is at most one i such that j = τi; since also j ![]() ran τ, one such i (and therefore exactly one such i) does exist. In this case we may without ambiguity put yj = zi. (iii) If j

ran τ, one such i (and therefore exactly one such i) does exist. In this case we may without ambiguity put yj = zi. (iii) If j ![]() I – – (ranτ∪domσ), define yj arbitrarily; say, put yj = xj. This three-stage construction defines an element yj of XI. The fact that xσ*y follows from (i) and from the definition of σ*. To prove that yτ*z, consider an element i in domτ. If τi

I – – (ranτ∪domσ), define yj arbitrarily; say, put yj = xj. This three-stage construction defines an element yj of XI. The fact that xσ*y follows from (i) and from the definition of σ*. To prove that yτ*z, consider an element i in domτ. If τi ![]() domσ, then x(στ)i = zi (because of the assumption x(στ)*z), and yτi = x(στ)i (by (i)). If, on the other hand, τi

domσ, then x(στ)i = zi (because of the assumption x(στ)*z), and yτi = x(στ)i (by (i)). If, on the other hand, τi ![]() I –domσ, then τi

I –domσ, then τi ![]() ranτ – domσ and therefore yτi = zi (by (ii)). We have proved thus that x(τ*σ*)z, and hence that (στ)* ⊂ τ*σ*; this completes the proof of the first assertion of the theorem.

ranτ – domσ and therefore yτi = zi (by (ii)). We have proved thus that x(τ*σ*)z, and hence that (στ)* ⊂ τ*σ*; this completes the proof of the first assertion of the theorem.

To prove the second assertion, we assume that X consists of more than one point and that σ and τ are such that σ  τ is false. The latter assumption means that there exist two distinct elements j and k in domτ such that τj = τk

τ is false. The latter assumption means that there exist two distinct elements j and k in domτ such that τj = τk ![]() I – domσ. Consider now an arbitrary point x in Xr and use it to define a point z as follows. If i

I – domσ. Consider now an arbitrary point x in Xr and use it to define a point z as follows. If i ![]() domστ, put zi = xστi; define zj and zk arbitrarily, subject only to the proviso that zj≠zk; for all other indices i, define zi completely arbitrarily. It follows from this definition that x(στ)*z. On the other hand, it is false that x(τ*σ*)z; in fact, yτ*z is false for all y. The reason is that τj = τk implies that yτj = yτk for all y; since zj≠zk, this makes it impossible that yτi should be equal to zi for all i in domτ. The proof of the theorem is complete.

domστ, put zi = xστi; define zj and zk arbitrarily, subject only to the proviso that zj≠zk; for all other indices i, define zi completely arbitrarily. It follows from this definition that x(στ)*z. On the other hand, it is false that x(τ*σ*)z; in fact, yτ*z is false for all y. The reason is that τj = τk implies that yτj = yτk for all y; since zj≠zk, this makes it impossible that yτi should be equal to zi for all i in domτ. The proof of the theorem is complete.

Throughout this section (and, in fact, in most of the paper) we shall continue to use the notation established above; in particular the symbols B, X, and I will always retain their meanings.

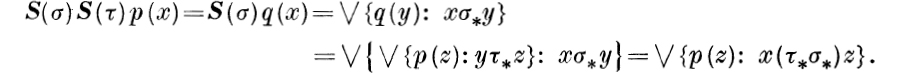

If p is an arbitrary function from XI into B, if x ![]() XI, and if σ is a substitution in I, then {p(y): xσ*y} is a subset of B, and, as such, it may or may not have a supremum in B. If p and σ are such that the supremum exists for all x, and if the value of the supremum for each x in XI is denoted by q(x), then, of course, q is a function from XI into B. In this situation we shall write q = S(σ)p, or, equivalently, we shall say that S(σ)p exists and has the value q. Explicitly

XI, and if σ is a substitution in I, then {p(y): xσ*y} is a subset of B, and, as such, it may or may not have a supremum in B. If p and σ are such that the supremum exists for all x, and if the value of the supremum for each x in XI is denoted by q(x), then, of course, q is a function from XI into B. In this situation we shall write q = S(σ)p, or, equivalently, we shall say that S(σ)p exists and has the value q. Explicitly

![]()

whenever the indicated supremum exists.

The point in considering substitutions at all is that they enable us to treat simultaneously the two kinds of operators that enter into the definition of a functional polyadic algebra. Indeed, if τ is a transformation on I, then the set {p(y): xτ*y} consists of the single element p(τ*x); this shows that the use of the symbol S in (4.1) is consistent with its earlier use in (2.2). If J is a subset of I, and if σ = χ(J), then, as we have already observed, the relation σ* that occurs in (4.1) is identical with the relation J* in (2.4), so that ![]() .

.

(4.2) LEMMA. If p is a function from XI into B and if σ and τ are substitutions in I with σ  τ, then S(στ)p = S(σ)S(τ)p, in the following sense: if both S(τ)p and S(σ)S(τ)p exist, then S(στ)p exists and the equality holds, and conversely, if τ is a transformation on I such that S(στ)p exists, then S(σ)S(τ)p exists and the equality holds again.

τ, then S(στ)p = S(σ)S(τ)p, in the following sense: if both S(τ)p and S(σ)S(τ)p exist, then S(στ)p exists and the equality holds, and conversely, if τ is a transformation on I such that S(στ)p exists, then S(σ)S(τ)p exists and the equality holds again.

Proof. Assume first that both S(τ)p and S(σ)S(τ)p exist. If q = S(τ)p, then ![]() for all y in XI. It follows that

for all y in XI. It follows that

The proof in this case is completed by an application of Theorem 3.8 and of the definition of S(στ)p. Assume next that τ is a transformation such that S(στ)p exists. Since the definition of the product of two relations implies that {p(z): x(τ*σ*)z} = {p(τ*y): xσ*y}, it follows that

![]()

and the proof is completed by an application of the definition of S(σ)S(τ)p.

One consequence of the preceding result is that a functional polyadic algebra admits more operations than its definition demands.

(4.3) LEMMA. A necessary and sufficient condition that a Boolean algebra A of functions from XI into B be a functional polyadic algebra is that S(σ)p exist and belong to A whenever p ![]() A and a is a substitution in I.

A and a is a substitution in I.

Proof. The sufficiency of the condition is obvious: if the condition is satisfied for every substitution, then it is satisfied, in particular, for every transformation and for every substitution of the form χ(J) where J is a subset of I. To prove necessity, we recall that, by Lemma 3.2, every substitution can be written in the form τχ(J), where τ is a transformation on I and J is a subset of I, and that, by Lemma 3.5, τ  χ(J). The desired result now follows from Lemma 4.2.

χ(J). The desired result now follows from Lemma 4.2.

If A is a functional polyadic algebra (more explicitly, a B-valued I-algebra over X), then, for each substitution σ in I, S(σ) is an operator on A, i. e., a mapping of A into itself. In the remainder of this section we shall derive the basic properties of the operators S(σ).

(4.4) LEMMA. If τ is a transformation on I, then S(τ) is a Boolean endomorphism of the algebra of all functions from XI into B (and hence of any functional I-algebra); the endomorphism S(δ) is the identity mapping e.

Proof. The assertion is an immediate consequence of (2.2) and of the fact that the Boolean operations in an algebra of functions are defined pointwise. (Recall also that δ* is the identity mapping on XI.)

(4.5) LEMMA. If J ⊂ I and if x and y are elements of XI such that xJ*y, then ![]() for any function p from XI into B, in the sense that if either term of the equation exists, then the other one exists and the two are equal.

for any function p from XI into B, in the sense that if either term of the equation exists, then the other one exists and the two are equal.

Proof. Since J* is an equivalence relation in XI the assumption xJ*y implies that either one of the conditions xJ*z and yJ*z is necessary and sufficient for the other. It follows that the sets {p(z): xJ*z} and {p(z): yJ*z} are the same and hence that if either one has a supremum, the other one has the same supremum.

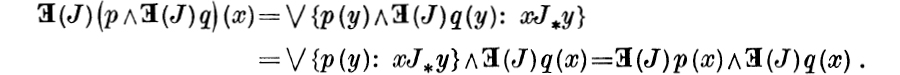

(4.6) LEMMA. If J ⊂ I, then ![]() is a quantifier on A.

is a quantifier on A.

Proof. It is clear that ![]() is normalized, i.e., that

is normalized, i.e., that ![]() . Since the equivalence relation J* is reflexive, i. e., xJ*x for all x, it follows that

. Since the equivalence relation J* is reflexive, i. e., xJ*x for all x, it follows that

![]()

so that ![]() is increasing. The fact that

is increasing. The fact that ![]() is quasi-multiplicative is a consequence, via Lemma 4.5, of the following computation:

is quasi-multiplicative is a consequence, via Lemma 4.5, of the following computation:

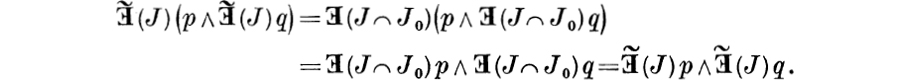

It follows from Lemmas 3.5 and 4.2 that if σ and τ are transformations on I, then S(στ) = S(σ)S(τ). Since χ(Ø) = δ, the fact that S(δ) = e can also be expressed by saying that ![]() is the discrete quantifier on A. From Lemma 3.1 we infer (using Lemmas 3.5 and 4.2 as before) that

is the discrete quantifier on A. From Lemma 3.1 we infer (using Lemmas 3.5 and 4.2 as before) that ![]() and hence, in particular, that

and hence, in particular, that ![]() whenever J and K are subsets of I. All these facts are rather near the surface; the next two results, though easy, are less obvious.

whenever J and K are subsets of I. All these facts are rather near the surface; the next two results, though easy, are less obvious.

(4.7) LEMMA. If σ and τ are transformations on I, if J ⊂ I, and if σ = τ outside J, then S(σ)![]() = S(τ)

= S(τ) ![]() (J)p whenever p is a function from XI into B such that

(J)p whenever p is a function from XI into B such that ![]() exists.

exists.

Proof. The last assumption means that σi = τi whenever i ![]() I –J and hence that σχ(J) = τχ(J). The conclusion follows from Lemmas 3.5 and 4.2.

I –J and hence that σχ(J) = τχ(J). The conclusion follows from Lemmas 3.5 and 4.2.

(4.8) LEMMA. If p is a function from XI into B, if τ is a transformation on I and J is a subset of I such that τ is one-to-one on τ−1J, and if ![]() exists, then

exists, then ![]() exists and

exists and ![]() .

.

Proof. By Lemma 3.3, χ(J)τ = τχ(τ−1J). By Lemma 3.6, the assumption on τ means that x(J)  τ. By Lemma 3.5, τ

τ. By Lemma 3.5, τ χ(τ−1J). By Lemma 4.2, S(τ)

χ(τ−1J). By Lemma 4.2, S(τ) ![]() (τ−1J)p = S(τχ(τ−1J))p, so that S(χ(J)τ)p exists; the conclusion follows from another application of the same lemma.

(τ−1J)p = S(τχ(τ−1J))p, so that S(χ(J)τ)p exists; the conclusion follows from another application of the same lemma.

The superficially complicated conclusions of Lemmas 4.7 and 4.8 are merely a condensed summary of the usual intuitively obvious relations between quantification and substitution. A couple of examples will make them clearer. Suppose that i and j are distinct elements of I and let τ be the transformation that maps i onto j and maps everything else (including j) onto itself. Since τ agrees with δ outside i, it follows from Lemma 4.7 that ![]() . (If J is a singleton, J = {i}, we write

. (If J is a singleton, J = {i}, we write ![]() instead of

instead of ![]() .) This equation corresponds to the familiar fact that once a variable has been quantified, the replacement of that variable by another one has no further effect. To get another example, note, for the same τ, that τ−1i is empty. It follows from Lemma 4.8 that

.) This equation corresponds to the familiar fact that once a variable has been quantified, the replacement of that variable by another one has no further effect. To get another example, note, for the same τ, that τ−1i is empty. It follows from Lemma 4.8 that ![]() . This equation corresponds to the familiar fact that once a variable has been replaced by another one, a quantification on the replaced variable has no further effect.

. This equation corresponds to the familiar fact that once a variable has been replaced by another one, a quantification on the replaced variable has no further effect.

We are now ready to define the central concept of this paper. Abstracting from the functional case, we shall say that a polyadic (Boolean) algebra is a quadruple (A, I, S, ![]() ), where A is a Boolean algebra, I is a set, S is a mapping from transformations on I to Boolean endomorphisms of A, and

), where A is a Boolean algebra, I is a set, S is a mapping from transformations on I to Boolean endomorphisms of A, and ![]() is a mapping from subsets of I to quantifiers on A such that

is a mapping from subsets of I to quantifiers on A such that

(P1) S(δ) is the identity mapping on A (i. e., S(δ) = e),

(P2) S (στ) = S (σ) S (τ) whenever σ and τ are transformations on I,

(P3) ![]() is the discrete quantifier on A (i. e.,

is the discrete quantifier on A (i. e., ![]() ),

),

(P4) ![]() whenever J and K are subsets of I,

whenever J and K are subsets of I,

(P5) if σ and τ are transformations on I, if J ⊂ I, and if σ = τ outside J, then ![]() ,

,

(P6) if τ is a transformation on I, if J ⊂ I, and if τ is one-to-one on τ−1J, then ![]() (J)S(τ) = S(τ)

(J)S(τ) = S(τ) ![]() (τ−lJ).

(τ−lJ).

From this definition and from our preceding results it follows immediately that a functional polyadic algebra is a polyadic algebra.

Most of the time we shall use the same symbols (S and ![]() ) for the endomorphism and quantifier mappings of every polyadic algebra; only rarely will we find it necessary to use a more detailed notation in order to avoid confusion. We shall also commit the common simplifying solecism of identifying the Boolean algebra A with the polyadic algebra (A, I, S,

) for the endomorphism and quantifier mappings of every polyadic algebra; only rarely will we find it necessary to use a more detailed notation in order to avoid confusion. We shall also commit the common simplifying solecism of identifying the Boolean algebra A with the polyadic algebra (A, I, S, ![]() ). We shall, accordingly, use expressions such as “the polyadic algebra A”, or “the polyadic algebra A with variables I”, or simply “the I-algebra A”. The cardinal number of I will be called the degree of the algebra and an algebra of degree n will be called an n-adic algebra.

). We shall, accordingly, use expressions such as “the polyadic algebra A”, or “the polyadic algebra A with variables I”, or simply “the I-algebra A”. The cardinal number of I will be called the degree of the algebra and an algebra of degree n will be called an n-adic algebra.

If I is empty, then there is only one transformation, namely δ, and there is only one subset, namely Ø; in this extreme case θ = δ. Consequently, if A is an arbitrary Boolean algebra, if I is the empty set Ø, and if both S(δ) and ![]() are defined to be e, then A becomes a polyadic algebra of degree 0. We see thus that the classical theory Boolean algebras is subsumed under the 0-adic case of the theory of polyadic algebras.

are defined to be e, then A becomes a polyadic algebra of degree 0. We see thus that the classical theory Boolean algebras is subsumed under the 0-adic case of the theory of polyadic algebras.

If I consists of a single element, then there is only one transformation, namely δ, and there are two distinct subsets, namely Ø and I. Consequently if A is an arbitrary monadic algebra with quantifier ![]() , if I is a singleton, and if we write, by definition,

, if I is a singleton, and if we write, by definition, ![]() and

and ![]() , then A becomes a polyadic algebra of degree 1. We see thus that the theory of monadic algebras is subsumed under the 1-adic case of the theory of polyadic algebras.

, then A becomes a polyadic algebra of degree 1. We see thus that the theory of monadic algebras is subsumed under the 1-adic case of the theory of polyadic algebras.

In a certain sense the definition of polyadic algebras above (and, correspondingly, the definition of functional polyadic algebras in § 2) is not sufficiently general. It often happens (e. g., in axiomatizations of Euclidean geometry) that the propositional functions to be considerep are functions of several different kinds of variables (e. g., points, lines, and planes). The appropriate way of allowing for this phenomenon from the point of view of functional polyadic algebras is to consider not one domain and its Cartesian powers but several possibly distinct sets and their Cartesian product. More explicitly, suppose that to each element i of I there corresponds a non-empty set Xi and let X1 be the Cartesian product of this family of sets. The sets Xi may overlap or coincide among themselves quite arbitrarily. Functions from XI to B form a Boolean algebra as before, and the theory of the operators ![]() carries over to the generalized situation with no significant change. If, however, τ is a transformation on I, then the definition of τ*x is not always meaningful (since Xτi and Xi may have no points in common). The simplest way out of the difficulty is to restrict attention to such transformations τ (“special” transformations) for which Χτi = Χi for all i in I. The set of special transformations is a subsemigroup (containing the unit) of the set of all transformations on I. If we consider only the transformations in this subsemigroup (and, correspondingly, consider only those substitutions σ on I for which Χσi· = Χi for all i in domσ), then the results of § 2 and § 3 go through without any difficulties. The definition of a functional polyadic algebra will, naturally, become slightly different; in place of closure under S(τ) for all transformations τ, the modified definition requires only closure under S(τ) when τ is a special transformation.

carries over to the generalized situation with no significant change. If, however, τ is a transformation on I, then the definition of τ*x is not always meaningful (since Xτi and Xi may have no points in common). The simplest way out of the difficulty is to restrict attention to such transformations τ (“special” transformations) for which Χτi = Χi for all i in I. The set of special transformations is a subsemigroup (containing the unit) of the set of all transformations on I. If we consider only the transformations in this subsemigroup (and, correspondingly, consider only those substitutions σ on I for which Χσi· = Χi for all i in domσ), then the results of § 2 and § 3 go through without any difficulties. The definition of a functional polyadic algebra will, naturally, become slightly different; in place of closure under S(τ) for all transformations τ, the modified definition requires only closure under S(τ) when τ is a special transformation.

The considerations of the preceding paragraph suggest a modification in the definition of abstract polyadic algebras also. The conditions referring to ![]() alone remain unmodified, but the conditions referring to S are required to hold only for a suitable subsemigroup of the semigroup of all transformations on I. The resulting concept is a generalization of the one defined above; the special case is obtained from the generalization by the consideration of the improper subsemigroup of all transformations. Polyadic algebras in the special sense will be called homogeneous algebras; they are the only ones with which we shall be concerned in this paper. The simplest and at the same time the most interesting polyadic algebras are homogeneous; most of the techniques that apply to homogeneous algebras apply with only minor modifications to the general case; and, finally, there are some known techniques (cf. [8]) for generalizing results about homogeneous polyadic algebras to arbitrary polyadic algebras. For these reasons, the details of the study of non-homogeneous polyadic algebras may safely be postponed to a later occasion.

alone remain unmodified, but the conditions referring to S are required to hold only for a suitable subsemigroup of the semigroup of all transformations on I. The resulting concept is a generalization of the one defined above; the special case is obtained from the generalization by the consideration of the improper subsemigroup of all transformations. Polyadic algebras in the special sense will be called homogeneous algebras; they are the only ones with which we shall be concerned in this paper. The simplest and at the same time the most interesting polyadic algebras are homogeneous; most of the techniques that apply to homogeneous algebras apply with only minor modifications to the general case; and, finally, there are some known techniques (cf. [8]) for generalizing results about homogeneous polyadic algebras to arbitrary polyadic algebras. For these reasons, the details of the study of non-homogeneous polyadic algebras may safely be postponed to a later occasion.

In order to see the direction in which the theory of abstract polyadic algebras should develop, we take another look at propositional functions, i. e., at functions from a Cartesian power XI into a Boolean algebra B. While, for logical purposes, it is frequently essential that the set of variables be infinite, each particular propositional function usually depends on a finite number of variables only. The precise definition of a function depending on a certain set of variables is best approached indirectly. A function p from XI into B is independent of a subset J of I if p(x) = p(y) whenever xJ*y. In other words, p is independent of J if and only if the replacement of an argument x of p by a point whose coordinates differ from those of x only when their index is in J leaves the value of p unchanged. Boughly speaking, p is independent of J when coordinates in J can be changed arbitrarily without changing p.

It turns out to be easy to express the concept of independence in algebraic terms.

(5.1) LEMMA. If p is an element of a functional I-algebra and if J ⊂ I, then a necessary and sufficient condition that p be independent of J is that ![]() = p.

= p.

Proof. If p is independent of J, then

![]()

If, conversely, ![]() = p, then, by Lemma 4.5, xJ*y implies that

= p, then, by Lemma 4.5, xJ*y implies that

![]()

In view of Lemma 5.1 it is natural to define independence in an arbitrary polyadic algebra as follows: an element p of an I-algebra A is independent of a subset J of I if and only if ![]() . We note that the concept of independence is the algebraic substitute for familiar logical concepts usually described in terms of “free” and “bound” variables.

. We note that the concept of independence is the algebraic substitute for familiar logical concepts usually described in terms of “free” and “bound” variables.

Dependence could now be defined in terms of independence in the obvious way. It is grammatically more convenient, however, to use a different term. We shall say that a subset J of I is a support of p (equivalent expression: J supports p) if and only if p is independent of I –J. The element p of A will be called finite - dimensional, or simply finite, if it has a finite support, or, equivalently, if there exists a cofinite subset J of I such that p is independent of J. (A cofinite subset of I is one whose complement is finite.) Roughly speaking, p is finite if and only if it is independent of almost all (i. e., all but a finite number) of the variables. The algebra A is locally finite - dimensional, or simply locally finite, if every one of its elements is finite. Throughout the rest of this paper we shall deal with locally finite polyadic algebras only. Unless in a special context we explicitly say the opposite, we shall automatically (and often tacitly) assume that every polyadic algebra we refer to is locally finite. The main reason for this procedure is that almost nothing is known as yet about polyadic algebras that are not locally finite. The “infinite logics” that give rise to such algebras might repay study; for the time being, however, we concentrate our attention on the algebraic systems whose logical counterparts are of already proven value.

The first thing we can do for locally finite algebras is to prove that, just as the functional algebras that suggested them, they admit more operations than their definition demands; the additional operations, moreover, have the same desirable multiplicative properties that they have in functional algebras.

(5.2) THEOREM. If A is a locally finite I-algebra, then there exists a unique mapping ![]() from substitutions in I to operators on A such that (i)

from substitutions in I to operators on A such that (i) ![]() whenever τ is a transformation on I, (ii)

whenever τ is a transformation on I, (ii) ![]() whenever J is a subset of I, and (iii)

whenever J is a subset of I, and (iii) ![]() whenever σ and τ are substitutions in I such that σ

whenever σ and τ are substitutions in I such that σ  τ.

τ.

Remark. A kind of converse of Theorem 5.5 is true and easy to prove (even in the absence of local finiteness.) Suppose in fact that A is a Boolean algebra and that ![]() is a mapping from substitutions in a certain set I to operators on A satisfying (iii) above and satisfying also the following three conditions, (a)

is a mapping from substitutions in a certain set I to operators on A satisfying (iii) above and satisfying also the following three conditions, (a) ![]() is the identity mapping on A. (b) If τ is a transformation on I, then

is the identity mapping on A. (b) If τ is a transformation on I, then ![]() is a Boolean endomorphism of A. (c) If J ⊂ I, then

is a Boolean endomorphism of A. (c) If J ⊂ I, then ![]() is a quantifier on A. It follows that if S and

is a quantifier on A. It follows that if S and ![]() are defined by

are defined by ![]() (whenever τ is a transformation on I) and

(whenever τ is a transformation on I) and ![]() (whenever J is a subset of I), then A becomes an J-algebra. Indeed (P1) and (P3) follow from (a), and (P2), (P4), (P5), and (P6) follow from (b) and (c) via (iii) and Lemma 3.5. In other words, the conditions (a), (b), (c), and (iii) yield an alternative definition of polyadic algebras.

(whenever J is a subset of I), then A becomes an J-algebra. Indeed (P1) and (P3) follow from (a), and (P2), (P4), (P5), and (P6) follow from (b) and (c) via (iii) and Lemma 3.5. In other words, the conditions (a), (b), (c), and (iii) yield an alternative definition of polyadic algebras.

Once Theorem 5.5 is proved, there is no more necessity for maintaining a careful distinction between S and its extension ![]() ; in the future (after the proof of Theorem 5.5 is over) we shall use the same symbol S(τ) whether τ is a transformation on I or an arbitrary substitution in I.

; in the future (after the proof of Theorem 5.5 is over) we shall use the same symbol S(τ) whether τ is a transformation on I or an arbitrary substitution in I.

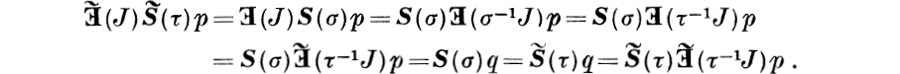

Proof. If σ is a substitution in I, then, by Lemma 3.2, σ can be written in the form τχ(J), where τ is a transformation on I and J is a subset of I. Using (i), (ii), (iii), and Lemma 3.5, we conclude that ![]()

![]() . This proves the uniqueness assertion, and shows, incidentally, that

. This proves the uniqueness assertion, and shows, incidentally, that ![]() is always a hemimorphism from A into itself.

is always a hemimorphism from A into itself.

To prove the existence assertion, we first note that if τ1χ(J1) = τ1χ(J1), where τ1 and τ2 are transformations, then Lemma 3.2 and (P5) imply that ![]() . This proves that whenever σ = τχ(J) is a substitution, where τ is a transformation and J ⊂ I, then the equation

. This proves that whenever σ = τχ(J) is a substitution, where τ is a transformation and J ⊂ I, then the equation ![]() unambigously defines a hemimorphism

unambigously defines a hemimorphism ![]() on A. The assertions (i) and (ii) are now obvious; it remains only to prove that

on A. The assertions (i) and (ii) are now obvious; it remains only to prove that ![]() satisfies (iii).

satisfies (iii).

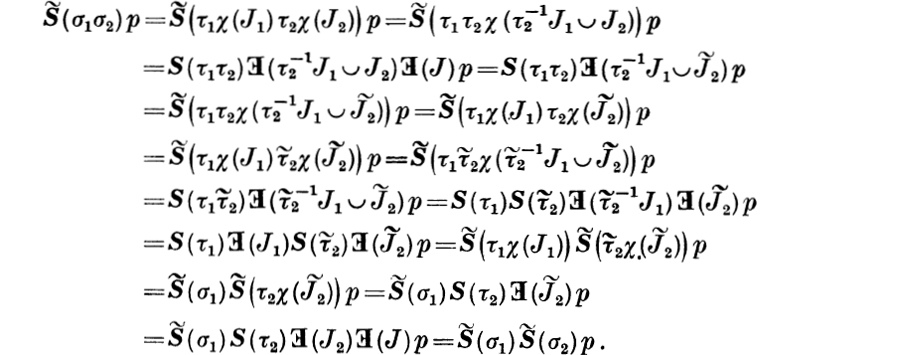

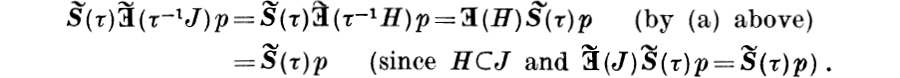

Suppose therefore that τ1 and τ2 are transformations and J1 and J2 are sets such that if σ1 = τ1χ(J1) and σ2 = τ2χ(J2), then σ1  σ2. It is to be proved that if p

σ2. It is to be proved that if p ![]() A, then

A, then ![]() . Let J be a cofinite set such that

. Let J be a cofinite set such that ![]() = p and write

= p and write ![]() . Since

. Since ![]() is finite,

is finite, ![]() is cofinite and has a cardinal number greater than or equal to that of

is cofinite and has a cardinal number greater than or equal to that of ![]() . It follows that there exists a transformation

. It follows that there exists a transformation ![]() that agrees with τ2 outside

that agrees with τ2 outside ![]() and that maps

and that maps ![]() into

into ![]() in a one-to-one manner. Since

in a one-to-one manner. Since ![]() maps

maps ![]() and

and ![]() into disjoint sets, and since

into disjoint sets, and since ![]() is one-to-one on

is one-to-one on ![]() , the only time that

, the only time that ![]() can send two distinct elements i and j onto the same element k is when i and j belong to

can send two distinct elements i and j onto the same element k is when i and j belong to ![]() and τ2i = τ2j = k. Since

and τ2i = τ2j = k. Since ![]() , it follows that both i and j belong to domσ2 and σ2i = σ2j = k. The assumption σ1

, it follows that both i and j belong to domσ2 and σ2i = σ2j = k. The assumption σ1  σ2 implies therefore that k

σ2 implies therefore that k ![]() domσ1 = domχ(J1). This proves that

domσ1 = domχ(J1). This proves that ![]() ; equivalently, by Lemma 3.6,

; equivalently, by Lemma 3.6, ![]() is one-to-one on

is one-to-one on ![]() . The proof of Theorem 5.5 can now be completed by the following computation:

. The proof of Theorem 5.5 can now be completed by the following computation:

§ 6. Supports and independence

It is intuitively clear that if a propositional function depends on a finite number of variables only, then most of the action of a substitution on such a propositional function is wasted; all that matters is the behavior of the substitution on the finite set of variables in question. In order to make these considerations precise, we now make a digression into the appropriate part of the theory of substitutions.

We shall say that a subset J of a set I is a support of a substitution σ in I (equivalent expression: J supports σ) if σ agrees with the identity transformation outside the set J, or, more explicitly, if I – J ⊂ domσ and σi = i whenever i ![]() I –J.

I –J.

(6.1) LEMMA. The set of all supports of a substitution σ in I is a Boolean filter (dual ideal) in the Boolean algebra of all subsets of I.

Proof. It follows immediately from the definition that J supports σ if and only if

![]()

This implies that I always supports σ, and hence that the set of supports of σ is never empty. It implies also that if J supports σ and J ⊂ K, then K supports σ, and that if both J and K support σ, then so does J∩K.

Remark. The proof shows more than the lemma asserts. The filter of supports of σ is, in fact, the principal filter generated by J0, and, consequently, J0 is the minimal support of σ. Despite this fact the language of supports is useful; it is often more convenient to use “a support” of σ than to insist on using “the support”.

The preceding result characterizes all supports of a fixed substitution; the following result goes in the other direction.

(6.2) LEMMA. The set of all substitutions with support J is a subsemigroup, containing the unit, in the semigroup of all substitutions.

Proof. Since δ agrees with δ outside J, it is trivial that J supports δ. If J supports both σ and τ, and if i ![]() I –J, then i

I –J, then i ![]() domσ ∩ domτ and σi = τi = i; it follows that i

domσ ∩ domτ and σi = τi = i; it follows that i ![]() domστ and (οτ)ι = σ(τi) = σi = i.

domστ and (οτ)ι = σ(τi) = σi = i.

(6.3) LEMMA. If J supports σ and K supports τ, then J ∪ K supports στ.

Proof. By Lemma 6.1, J ∪ K supports both σ and τ; by Lemma 6.2, J ∪ K supports στ.

A substitution σ is finite if it leaves fixed almost all the elements of I. More precisely, σ is finite if domσ includes a cofinite subset of I on which σ agrees with the identity transformation δ. In the language of supports, σ is finite if and only if it has a finite support.

(6.4) LEMMA. The set of all f mite substitutions is a subsemigroup, containing the unit, in the semigroup of all substitutions.

Proof. Since there exists a finite set J (e. g., Ø) such that δ agrees with δ outside J, it is trivial that δ is finite. The fact that the set of all finite substitutions is closed under multiplication is an immediate consequence of Lemma 6.3.

(6.5) LEMMA. A necessary and sufficient condition that χ(J) be a finite substitution is that J be a finite set.

Proof. If J is finite, then, since χ(J) = δ outside J, it follows that χ(J) is finite. If, conversely, χ(J) = δ outside some finite set K, then it follows from the definition of χ(J) that J ⊂ K and hence that J is finite.

(6.6) LEMMA. Every finite substitution σ can be written in the form τχ(J), where τ is a finite transformation on I and J is a finite subset of I.

Proof. By Lemma 3.2, σ = τχ(J) with J = I –domσ and σ = τ outside J. The finiteness of σ implies that J is finite. The finiteness of σ and J, together with the fact that σ = τ outside J, implies that τ = σ outside a finite set, i. e., that τ is finite.

We proceed now to investigate the concepts of independence and support in an I-algebra A and the relation between these concepts and the algebraic structure of A. It is convenient to be able to phrase the results in the language of either independence or supports; because, however, of the intimate relation between these two concepts, it is generally sufficient to give the proof for only one of the two cases.

The first five of the results that follow are in a curious situation. They are valid for algebraic systems that are somewhat more general than polyadic algebras, and, in the next section, their generalized versions are needed. In order not to complicate the presentation, we shall state and prove them here for polyadic algebras only, and, in the next section, we shall point out the minor modifications that suffice to reach the generalization. Subsequently we shall refer to the generalized results by the same number as is borne by the special case; to avoid any possible confusion, systematic cross-references are provided at the appropriate places.

(6.7) LEMMA. If p ![]() A, then the set of all sets J such that p is independent of J is a Boolean ideal, and the set of all supports of p is a Boolean filter, in the Boolean algebra of all subsets of I.

A, then the set of all sets J such that p is independent of J is a Boolean ideal, and the set of all supports of p is a Boolean filter, in the Boolean algebra of all subsets of I.

Proof (cf. 7.1)). Since ![]() , it is trivial that p is independent of Ø. If

, it is trivial that p is independent of Ø. If ![]() = p and K ⊂ J, then

= p and K ⊂ J, then

![]()

If ![]() , then

, then

![]()

(6.8) LEMMA. If J ⊂ I, then the set of all elements p in A such that p is independent of J is a Boolean subalgebra of A, and the set of all elements p in A such that J supports p is a Boolean subalgebra of A.

Proof (cf. (7.2)). The set of all those elements p for which ![]() is simply the range of the quantifier

is simply the range of the quantifier ![]() . The desired result follows from the fact (1.3) that the range of a quantifier is always a Boolean algebra.

. The desired result follows from the fact (1.3) that the range of a quantifier is always a Boolean algebra.

(6.9) LEMMA. If p is independent of J, then ![]() for every K; if J supports p, then

for every K; if J supports p, then ![]() for every K.

for every K.

Proof (cf. (7.3)). For independence: ![]()

![]() (by Lemma 6.7). For supports: apply the result for independence to I –J.

(by Lemma 6.7). For supports: apply the result for independence to I –J.

(6.10) LEMMA. If p is independent of J (if J supports p), then ![]() is independent of J∪K (J – K supports

is independent of J∪K (J – K supports ![]() ).

).

Proof (cf. (7.4)). ![]() .

.

(6.11) LEMMA. If p is independent of J and if σ and τ are transformations that agree outside J, then S(σ)p = S(τ)p.

Proof (cf. (7.5)). S(σ)p = S(σ)![]() = S(τ)

= S(τ)![]() (by (P5)) = S(τ)p.

(by (P5)) = S(τ)p.

For the proof of the main non-trivial relation between independence and transformations (Lemma 6.14) the following two technical lemmas are needed.

(6.12) LEMMA. If τ is a transformation, if J ⊂ I, and if K = I– τ(I –J), then τ−1K ⊂ J.

Proof. This purely set - theoretic lemma is based on the fact that J ⊂ τ−1τJ. Applying this inclusion to I –J in place of J and then forming the complement of both terms, we obtain

![]()

Since τ−1Κ = τ−1 (I – τ(I –J)) = I – τ−1τ(I–J), the proof is complete.

(6.13) LEMMA. If τ is a transformation, if J ⊂ I, and if K = I – τ(I –J), then χ(K)  τχ(J).

τχ(J).

Proof. If τχ(J)i = j, then i ![]() I —J and therefore j

I —J and therefore j ![]() τ(I –J), so that j

τ(I –J), so that j ![]() I – K. In other words, the entire range of τχ(J) is included in I –K. Under these circumstances the substitution τχ(J) obviously never maps two distinct elements onto the same element of I – domχ(K) = K, because, in fact, it never maps anything at all into K.

I – K. In other words, the entire range of τχ(J) is included in I –K. Under these circumstances the substitution τχ(J) obviously never maps two distinct elements onto the same element of I – domχ(K) = K, because, in fact, it never maps anything at all into K.

(6.14) LEMMA. If τ is a transformation, if p is independent of J, and if K = I – τ(I –J), then S(τ)p is independent of K. If J supports p, then τJ supports S(τ)p.

Remark. The consideration of simple examples, and, in particular, of δ in the role of τ, shows that the result is in general best possible.

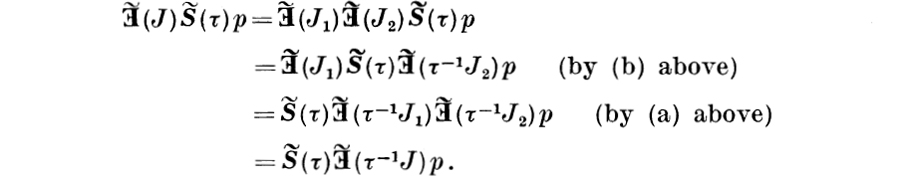

Proof. ![]() (by the assumed independence) = S(χ(K))S(τχ(J))p (by Theorem 5.5) = S(τχ(τ−1K))χ(J))p (by Lemma 6.13 and Theorem 5.5) = S(τχ (J))p (by Lemma 3.3) = S(τχ(J))p (by Lemma 6.12) =

(by the assumed independence) = S(χ(K))S(τχ(J))p (by Theorem 5.5) = S(τχ(τ−1K))χ(J))p (by Lemma 6.13 and Theorem 5.5) = S(τχ (J))p (by Lemma 3.3) = S(τχ(J))p (by Lemma 6.12) = ![]() (by Theorem 5.5) = S(τ)p (by the assumed independence).

(by Theorem 5.5) = S(τ)p (by the assumed independence).

It is to be noted that up to now the results of this section (together with their proofs) are valid even in the absence of local finiteness. In the following two results the assumption of local finiteness is essential.

(6.15) LEMMA. A necessary and sufficient condition that p be independent of J (that J support p) is that ![]() (Κ)p = p whenever K is a finite sub-set of J (of I –J).

(Κ)p = p whenever K is a finite sub-set of J (of I –J).

Proof. The necessity of the condition is trivial from Lemma 6.7. To prove sufficiency, apply the definition of local finiteness to find a co-finite set J0 such that p is independent of J0 and note that ![]()

![]() (by Lemma 6.9) = p (by assumption, since J –J0 is finite).

(by Lemma 6.9) = p (by assumption, since J –J0 is finite).

(6.16) LEMMA. If p ![]() A, then (i) to every subset K of I there corresponds a finite subset K0 of I such that

A, then (i) to every subset K of I there corresponds a finite subset K0 of I such that ![]() , (ii) to every transformation τ on I there corresponds a finite transformation r0 on I such that S(τ)p = S(τ0)p, and (iii) to every substitution σ in I there corresponds a finite substitution σ0 in I such that S(σ)p = S(σ0)p.

, (ii) to every transformation τ on I there corresponds a finite transformation r0 on I such that S(τ)p = S(τ0)p, and (iii) to every substitution σ in I there corresponds a finite substitution σ0 in I such that S(σ)p = S(σ0)p.

Proof. By the definition of local finiteness, p has a finite support J. The conclusion (i) folllows from Lemma 6.9. To prove (ii), write τ0ί = τi when i ![]() J and τ0i = i otherwise. Since J is finite, τ0 is finite; (ii) follows from Lemma 6.11. The conclusion (iii) follows from (i) and (ii), together with Lemma 3.2, Theorem 5.5, and Lemma 6.4.

J and τ0i = i otherwise. Since J is finite, τ0 is finite; (ii) follows from Lemma 6.11. The conclusion (iii) follows from (i) and (ii), together with Lemma 3.2, Theorem 5.5, and Lemma 6.4.

We began the preceding section with a vaguely formulated conjecture to the effect that in the study of (locally finite) poly adic algebras there is no loss of generality in restricting attention to finite substitutions only. Lemma 6.16 may be viewed as a precise formulation (and proof) of that vague conjecture. In this section we shall prove a much stronger result of the same type; for convenience in formulating that strengthened result we now introduce an auxiliary concept. The concept of a quasi-polyadic algebra is more general than that of a (locally finite) polyadic algebra; the difference between the two is that in the definition of quasi - polyadic algebras the mappings S and ![]() are assumed to be defined only for finite transformations on I and finite subsets of I. To avoid all possible misunderstanding, we formulate the pertinent definition explicitly as follows. A quasi - polyadic algebra is a quadruple (A, I, S,

are assumed to be defined only for finite transformations on I and finite subsets of I. To avoid all possible misunderstanding, we formulate the pertinent definition explicitly as follows. A quasi - polyadic algebra is a quadruple (A, I, S, ![]() ), where A is a Boolean algebra, I is a set, S is a mapping from finite transformations on I into Boolean endomorphisms of A, and

), where A is a Boolean algebra, I is a set, S is a mapping from finite transformations on I into Boolean endomorphisms of A, and ![]() is a mapping from finite subsets of I to quantifiers on A, such that

is a mapping from finite subsets of I to quantifiers on A, such that

(Q1) S (δ) is the identity mapping on A,

(Q2) S(στ) = S(σ)S(τ) whenever σ and τ are finite transformations on I,

(Q3) ![]() is the discrete quantifier on A,

is the discrete quantifier on A,

(Q4) ![]() whenever J and K are finite subsets of I,

whenever J and K are finite subsets of I,

(Q5) if σ and τ are finite transformations on I, if J is a finite subset of I, and if σ = τ outside J, then ![]() ,

,

(Q6) if τ is a finite transformation on I, if J is a finite subset of I, and if τ is one-to-one on τ−1J, then ![]() ,

,

(Q7) if p ![]() A, then there exists a cofimite subset J of I such that

A, then there exists a cofimite subset J of I such that ![]() whenever K is a finite subset of J.

whenever K is a finite subset of J.