Linear Functionals and the Dual Space

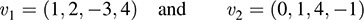

11.1 Introduction

In this chapter, we study linear mappings from a vector space V into its field K of scalars. (Unless otherwise stated or implied, we view K as a vector space over itself.) Naturally all the theorems and results for arbitrary linear mappings on V hold for this special case. However, we treat these mappings separately because of their fundamental importance and because the special relationship of V to K gives rise to new notions and results that do not apply in the general case.

11.2 Linear Functionals and the Dual Space

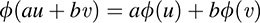

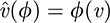

Let V be a vector space over a field K. A mapping ϕ: V → K is termed a linear functional (or linear form) if, for every u, v ∈ V and every a, b ∈ K,

$$\phi(au + bv) = a\,\phi(u) + b\,\phi(v)$$

In other words, a linear functional on V is a linear mapping from V into K.

EXAMPLE 11.1 (a) Let πi: Kⁿ → K be the ith projection mapping; that is, πi(a1, a2, …, an) = ai. Then πi is linear and so it is a linear functional on Kⁿ.

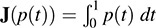

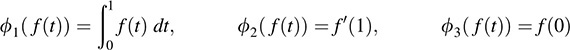

(b) Let V be the vector space of polynomials in t over R. Let J: V → R be the integral operator defined by

$$J(p(t)) = \int_0^1 p(t)\,dt$$

Recall that J is linear; and hence, it is a linear functional on V.

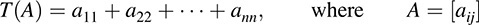

(c) Let V be the vector space of n-square matrices over K. Let T: V → K be the trace mapping

$$T(A) = a_{11} + a_{22} + \cdots + a_{nn}, \qquad \text{where } A = [a_{ij}]$$

That is, T assigns to a matrix A the sum of its diagonal elements. This map is linear (Problem 11.24), and so it is a linear functional on V.

By Theorem 5.10, the set of linear functionals on a vector space V over a field K is also a vector space over K, with addition and scalar multiplication defined by

$$(\phi + \sigma)(v) = \phi(v) + \sigma(v) \qquad\text{and}\qquad (k\phi)(v) = k\,\phi(v)$$

where ϕ and σ are linear functionals on V and k ∈ K. This space is called the dual space of V and is denoted by V*.

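EXAMPLE 11.2 Let V = Kⁿ, the vector space of n-tuples, which we write as column vectors. Then the dual space V* can be identified with the space of row vectors. In particular, any linear functional ϕ = [a1, …, an] in V* has the representation

$$\phi(x_1, x_2, \ldots, x_n) = [a_1, a_2, \ldots, a_n]\,[x_1, x_2, \ldots, x_n]^T = a_1x_1 + a_2x_2 + \cdots + a_nx_n$$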

Historically, the formal expression on the right was termed a linear form.
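As a small illustration of Example 11.2 and of the operations that make V* a vector space, the Python sketch below stores a linear functional on Kⁿ as its row of coefficients (integers stand in for scalars of K). The helper names `evaluate`, `add`, and `scale` are illustrative choices, not notation from the text.

```python
def evaluate(phi, x):
    """phi(x) for phi = [a1, ..., an] and x = (x1, ..., xn): a1*x1 + ... + an*xn."""
    return sum(a * xi for a, xi in zip(phi, x))


def add(phi, sigma):
    """Coefficient row of phi + sigma, since (phi + sigma)(v) = phi(v) + sigma(v)."""
    return tuple(a + b for a, b in zip(phi, sigma))


def scale(k, phi):
    """Coefficient row of k*phi, since (k*phi)(v) = k*phi(v)."""
    return tuple(k * a for a in phi)


phi = (2, -3, 1)     # phi(x, y, z) = 2x - 3y + z
sigma = (4, -2, 3)   # sigma(x, y, z) = 4x - 2y + 3z
combo = add(scale(2, phi), scale(-5, sigma))
print(combo)  # (-16, 4, -13)

# The pointwise definition and the coefficient-row arithmetic agree.
v = (1, 5, -2)
assert evaluate(combo, v) == 2 * evaluate(phi, v) - 5 * evaluate(sigma, v)
```

Working with coefficient rows is exactly the identification of V* with row vectors described above.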

11.3 Dual Basis

Suppose V is a vector space of dimension n over K. By Theorem 5.11, the dimension of the dual space V* is also n (because K is of dimension 1 over itself). In fact, each basis of V determines a basis of V* as follows (see Problem 11.3 for the proof).

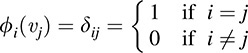

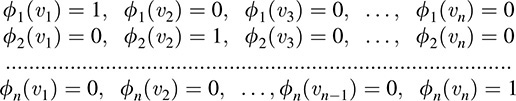

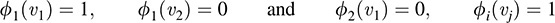

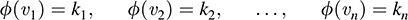

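THEOREM 11.1: Suppose {v1, …, vn} is a basis of V over K. Let ϕ1, …, ϕn ∈ V* be the linear functionals defined by

$$\phi_i(v_j) = \delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}$$

Then {ϕ1, …, ϕn} is a basis of V*.

The above basis {ϕi} is termed the basis dual to {vi} or the dual basis. The above formula, which uses the Kronecker delta δij, is a short way of writing

$$\begin{aligned} &\phi_1(v_1) = 1,\ \phi_1(v_2) = 0,\ \ldots,\ \phi_1(v_n) = 0 \\ &\phi_2(v_1) = 0,\ \phi_2(v_2) = 1,\ \ldots,\ \phi_2(v_n) = 0 \\ &\qquad\vdots \\ &\phi_n(v_1) = 0,\ \phi_n(v_2) = 0,\ \ldots,\ \phi_n(v_n) = 1 \end{aligned}$$

By Theorem 5.2, these linear mappings ϕi are unique and well defined.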

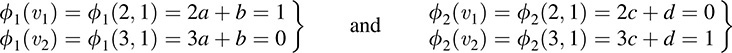

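EXAMPLE 11.3 Consider the basis {v1 = (2, 1), v2 = (3, 1)} of R². Find the dual basis {ϕ1, ϕ2}.

We seek linear functionals ϕ1(x, y) = ax + by and ϕ2(x, y) = cx + dy such that

$$\phi_1(v_1) = 1, \qquad \phi_1(v_2) = 0, \qquad \phi_2(v_1) = 0, \qquad \phi_2(v_2) = 1$$

These four conditions lead to the following two systems of linear equations:

$$\begin{cases} 2a + b = 1 \\ 3a + b = 0 \end{cases} \qquad\text{and}\qquad \begin{cases} 2c + d = 0 \\ 3c + d = 1 \end{cases}$$

The solutions yield a = −1, b = 3 and c = 1, d = −2. Hence, ϕ1(x, y) = −x + 3y and ϕ2(x, y) = x − 2y form the dual basis.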
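The calculation in Example 11.3 generalizes: if B is the matrix whose columns are v1, …, vn, then the rows of B⁻¹ are the coefficient rows of ϕ1, …, ϕn, because the (i, j) entry of B⁻¹B is ϕi(vj) = δij. The Python sketch below assumes V = Kⁿ with K the rationals (exact arithmetic via `fractions.Fraction`); `invert`, `dual_basis`, and `apply` are hypothetical helper names.

```python
from fractions import Fraction


def invert(matrix):
    """Gauss-Jordan inverse over the rationals (assumes a square, invertible matrix)."""
    n = len(matrix)
    # Augment [M | I] with exact Fraction entries.
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def dual_basis(basis):
    """Coefficient rows of phi_1, ..., phi_n dual to the given basis of K^n.

    The basis vectors become the columns of B; row i of B^{-1} is phi_i,
    since (B^{-1} B)[i][j] = phi_i(v_j) = delta_ij.
    """
    n = len(basis)
    columns_matrix = [[basis[j][i] for j in range(n)] for i in range(n)]
    return invert(columns_matrix)


def apply(phi, v):
    """Evaluate the functional with coefficient row phi at the vector v."""
    return sum(a * x for a, x in zip(phi, v))


# Example 11.3: v1 = (2, 1), v2 = (3, 1).
phis = dual_basis([(2, 1), (3, 1)])
# phis == [[-1, 3], [1, -2]] (as Fractions), i.e. phi1 = -x + 3y and phi2 = x - 2y
```

The same function reproduces the answer to Problem 11.1. If the input vectors are not a basis, the pivot search raises `StopIteration`, which matches the fact that only a basis has a dual basis.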

The next two theorems (proved in Problems 11.4 and 11.5, respectively) give relationships between bases and their duals.

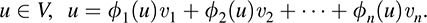

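THEOREM 11.2: Let {v1, …, vn} be a basis of V and let {ϕ1, …, ϕn} be the dual basis in V*. Then

(i) For any vector u ∈ V,

$$u = \phi_1(u)\,v_1 + \phi_2(u)\,v_2 + \cdots + \phi_n(u)\,v_n$$

(ii) For any linear functional σ ∈ V*,

$$\sigma = \sigma(v_1)\,\phi_1 + \sigma(v_2)\,\phi_2 + \cdots + \sigma(v_n)\,\phi_n$$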
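Theorem 11.2 says the dual basis supplies coordinates in both directions: ϕi(u) is the ith coordinate of u, and σ(vi) is the ith coordinate of σ. The sketch below checks both formulas for the basis and dual basis of Example 11.3, with functionals on R² stored as coefficient rows; the test vector u and functional σ are arbitrary choices for illustration.

```python
# Basis of R^2 from Example 11.3 and its dual basis (coefficient rows).
basis = [(2, 1), (3, 1)]
dual = [(-1, 3), (1, -2)]


def apply(phi, v):
    """Evaluate the linear functional with coefficient row phi at v."""
    return sum(a * x for a, x in zip(phi, v))


def expand_vector(u):
    """Theorem 11.2(i): rebuild u as phi_1(u) v_1 + ... + phi_n(u) v_n."""
    coords = [apply(phi, u) for phi in dual]
    n = len(u)
    return tuple(sum(c * v[k] for c, v in zip(coords, basis)) for k in range(n))


def expand_functional(sigma):
    """Theorem 11.2(ii): rebuild sigma as sigma(v_1) phi_1 + ... + sigma(v_n) phi_n."""
    weights = [apply(sigma, v) for v in basis]
    n = len(sigma)
    return tuple(sum(w * phi[k] for w, phi in zip(weights, dual)) for k in range(n))


u = (7, -4)
sigma = (5, 2)  # sigma(x, y) = 5x + 2y
print(expand_vector(u))          # (7, -4)
print(expand_functional(sigma))  # (5, 2)
```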

THEOREM 11.3: Let {v1, …, vn} and {w1, …, wn} be bases of V and let {ϕ1, …, ϕn} and {σ1, …, σn} be the bases of V* dual to {vi} and {wi}, respectively. Suppose P is the change-of-basis matrix from {vi} to {wi}. Then (P⁻¹)ᵀ is the change-of-basis matrix from {ϕi} to {σi}.
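The sketch below checks Theorem 11.3 on a 2 × 2 case. It follows the indexing used in the proof (Problem 11.5): row i of P holds the coordinates of wi relative to {vj}, and row i of Q holds the coordinates of σi relative to {ϕj}. (Transposing both matrices to a column convention leaves the identity Q = (P⁻¹)ᵀ unchanged.) The basis {vi} is that of Example 11.3, P is an arbitrary invertible matrix chosen for illustration, and `inv2` and `dual_basis2` are hypothetical helpers.

```python
from fractions import Fraction


def inv2(m):
    """Inverse of a 2x2 matrix over the rationals (assumes nonzero determinant)."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def apply(phi, v):
    return sum(x * y for x, y in zip(phi, v))


def dual_basis2(basis):
    """Dual basis of a basis of R^2: rows of the inverse of the column matrix."""
    return inv2(transpose(basis))


v = [(2, 1), (3, 1)]   # basis {v_i} (Example 11.3)
P = [[1, 2], [1, 3]]   # row i holds the v-coordinates of w_i
w = [tuple(sum(P[i][j] * v[j][k] for j in range(2)) for k in range(2)) for i in range(2)]

sigma = dual_basis2(w)  # dual basis {sigma_i} of {w_i}
# By Theorem 11.2(ii), sigma_i = sum_j sigma_i(v_j) phi_j, so b_ij = sigma_i(v_j).
Q = [[apply(sigma[i], v[j]) for j in range(2)] for i in range(2)]

print(Q == transpose(inv2(P)))  # True
```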

11.4 Second Dual Space

We repeat: Every vector space V has a dual space V*, which consists of all the linear functionals on V. Thus, V* has a dual space V**, called the second dual of V, which consists of all the linear functionals on V*.

We now show that each v ∈ V determines a specific element v̂ ∈ V**. First, for any ϕ ∈ V*, we define

$$\hat{v}(\phi) = \phi(v)$$

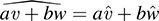

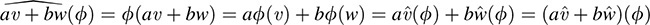

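It remains to be shown that this map v̂: V* → K is linear. For any scalars a, b ∈ K and any linear functionals ϕ, σ ∈ V*, we have

$$\hat{v}(a\phi + b\sigma) = (a\phi + b\sigma)(v) = a\,\phi(v) + b\,\sigma(v) = a\,\hat{v}(\phi) + b\,\hat{v}(\sigma)$$

That is, v̂ is linear and so v̂ ∈ V**. The following theorem (proved in Problem 11.7) holds.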
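Because v̂ is simply "evaluate at v", it is easy to model with functions as values. In the Python sketch below, functionals on R³ are ordinary callables, the hypothetical helper `hat(v)` returns the map ϕ ↦ ϕ(v), and the last lines check the linearity identity v̂(aϕ + bσ) = a v̂(ϕ) + b v̂(σ) for one illustrative choice of v, ϕ, σ, a, b.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Functional = Callable[[Vector], float]


def functional(coeffs: Vector) -> Functional:
    """Linear functional on R^n given by a row of coefficients."""
    return lambda x: sum(a * xi for a, xi in zip(coeffs, x))


def hat(v: Vector) -> Callable[[Functional], float]:
    """The natural map v -> v_hat, where v_hat(phi) = phi(v) (evaluation at v)."""
    return lambda phi: phi(v)


def combine(a: float, phi: Functional, b: float, sigma: Functional) -> Functional:
    """The functional a*phi + b*sigma, defined pointwise as in V*."""
    return lambda x: a * phi(x) + b * sigma(x)


v = (1, 2, 3)
phi = functional((2, -3, 1))
sigma = functional((4, -2, 3))
lhs = hat(v)(combine(5, phi, -2, sigma))
rhs = 5 * hat(v)(phi) - 2 * hat(v)(sigma)
print(lhs, rhs)  # -23 -23
```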

THEOREM 11.4: If V has finite dimension, then the mapping v ↦ v̂ is an isomorphism of V onto V**.

The above mapping v ↦ v̂ is called the natural mapping of V into V**. We emphasize that this mapping is never onto V** if V is not finite-dimensional. However, it is always linear, and moreover, it is always one-to-one.

Now suppose V does have finite dimension. By Theorem 11.4, the natural mapping determines an isomorphism between V and V**. Unless otherwise stated, we will identify V with V** by this mapping. Accordingly, we will view V as the space of linear functionals on V* and write V = V**. We remark that if {ϕi} is the basis of V* dual to a basis {vi} of V, then {vi} is the basis of V** = V that is dual to {ϕi}.

11.5 Annihilators

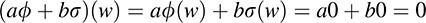

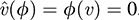

Let W be a subset (not necessarily a subspace) of a vector space V. A linear functional ϕ ∈ V* is called an annihilator of W if ϕ(w) = 0 for every w ∈ W, that is, if ϕ(W) = {0}. We show that the set of all such mappings, denoted by W⁰ and called the annihilator of W, is a subspace of V*. Clearly, 0 ∈ W⁰. Now suppose ϕ, σ ∈ W⁰. Then, for any scalars a, b ∈ K and for any w ∈ W,

$$(a\phi + b\sigma)(w) = a\,\phi(w) + b\,\sigma(w) = a \cdot 0 + b \cdot 0 = 0$$

Thus, aϕ + bσ ∈ W⁰, and so W⁰ is a subspace of V*.

In the case that W is a subspace of V, we have the following relationship between W and its annihilator W⁰ (see Problem 11.11 for the proof).

THEOREM 11.5: Suppose V has finite dimension and W is a subspace of V. Then

(i) dim W + dim W⁰ = dim V, (ii) W⁰⁰ = W.

Here W⁰⁰ = {v ∈ V : ϕ(v) = 0 for every ϕ ∈ W⁰} or, equivalently, W⁰⁰ = (W⁰)⁰, where W⁰⁰ is viewed as a subspace of V under the identification of V and V**.

11.6 Transpose of a Linear Mapping

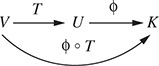

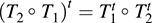

Let T: V → U be an arbitrary linear mapping from a vector space V into a vector space U. Now for any linear functional ϕ ∈ U*, the composition ϕ ∘ T is a linear mapping from V into K:

$$V \xrightarrow{\;T\;} U \xrightarrow{\;\phi\;} K$$

That is, ϕ ∘ T ∈ V*. Thus, the correspondence

$$\phi \mapsto \phi \circ T$$

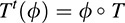

is a mapping from U* into V*; we denote it by Tᵗ and call it the transpose of T. In other words, Tᵗ: U* → V* is defined by

$$T^t(\phi) = \phi \circ T$$

Thus, (Tᵗ(ϕ))(v) = ϕ(T(v)) for every v ∈ V.

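THEOREM 11.6: The transpose mapping Tᵗ defined above is linear.

Proof. For any scalars a, b ∈ K and any linear functionals ϕ, σ ∈ U*,

$$T^t(a\phi + b\sigma) = (a\phi + b\sigma) \circ T = a(\phi \circ T) + b(\sigma \circ T) = a\,T^t(\phi) + b\,T^t(\sigma)$$

That is, Tᵗ is linear, as claimed.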

We emphasize that if T is a linear mapping from V into U, then Tᵗ is a linear mapping from U* into V*. The name “transpose” for the mapping Tᵗ no doubt derives from the following theorem (proved in Problem 11.16).

THEOREM 11.7: Let T: V → U be linear, and let A be the matrix representation of T relative to bases {vi} of V and {ui} of U. Then the transpose matrix Aᵀ is the matrix representation of Tᵗ: U* → V* relative to the bases dual to {ui} and {vi}.
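For V = Rⁿ and U = Rᵐ with their usual bases, the dual bases consist of the coordinate projections (Problem 11.26), so the coordinates of a functional are simply its coefficients. Theorem 11.7 then says that if T(x) = Ax, the coefficient row of Tᵗ(ϕ) = ϕ ∘ T is obtained by applying Aᵀ to the coefficients of ϕ. A minimal sketch under those assumptions, with hypothetical helper names and the operator of Problem 11.13(c) as the example:

```python
def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def mat_vec(matrix, x):
    """Matrix times column vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in matrix]


def transpose_map(A, phi):
    """Coefficient row of T^t(phi) = phi o T, where T(x) = A x in the usual bases.

    In the dual bases, T^t is represented by A^T, so the coordinates of
    T^t(phi) are A^T applied to the coordinates of phi.
    """
    return mat_vec(transpose(A), phi)


# Problem 11.13(c): T(x, y) = (2x - 3y, 5x + 2y), phi(x, y) = x - 2y.
A = [[2, -3], [5, 2]]
phi = [1, -2]
print(transpose_map(A, phi))  # [-8, -7], i.e. -8x - 7y
```

Because `transpose_map` only needs A to be m × n, the same code handles maps T: R³ → R² such as those in Problem 11.33.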

SOLVED PROBLEMS

Dual Spaces and Dual Bases

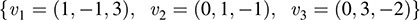

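11.1. Find the basis {ϕ1, ϕ2, ϕ3} that is dual to the following basis of R³:

{v1 = (1, −1, 3), v2 = (0, 1, −1), v3 = (0, 3, −2)}

The linear functionals may be expressed in the form

$$\phi_1(x, y, z) = a_1x + a_2y + a_3z, \qquad \phi_2(x, y, z) = b_1x + b_2y + b_3z, \qquad \phi_3(x, y, z) = c_1x + c_2y + c_3z$$

By definition of the dual basis, ϕi(vj) = 0 for i ≠ j, but ϕi(vj) = 1 for i = j.

We find ϕ1 by setting ϕ1(v1) = 1, ϕ1(v2) = 0, ϕ1(v3) = 0. This yields

$$\phi_1(1, -1, 3) = a_1 - a_2 + 3a_3 = 1, \qquad \phi_1(0, 1, -1) = a_2 - a_3 = 0, \qquad \phi_1(0, 3, -2) = 3a_2 - 2a_3 = 0$$

Solving the system of equations yields a1 = 1, a2 = 0, a3 = 0. Thus, ϕ1(x, y, z) = x.

We find ϕ2 by setting ϕ2(v1) = 0, ϕ2(v2) = 1, ϕ2(v3) = 0. This yields

$$\phi_2(1, -1, 3) = b_1 - b_2 + 3b_3 = 0, \qquad \phi_2(0, 1, -1) = b_2 - b_3 = 1, \qquad \phi_2(0, 3, -2) = 3b_2 - 2b_3 = 0$$

Solving the system of equations yields b1 = 7, b2 = −2, b3 = −3. Thus, ϕ2(x, y, z) = 7x − 2y − 3z.

We find ϕ3 by setting ϕ3(v1) = 0, ϕ3(v2) = 0, ϕ3(v3) = 1. This yields

$$\phi_3(1, -1, 3) = c_1 - c_2 + 3c_3 = 0, \qquad \phi_3(0, 1, -1) = c_2 - c_3 = 0, \qquad \phi_3(0, 3, -2) = 3c_2 - 2c_3 = 1$$

Solving the system of equations yields c1 = −2, c2 = 1, c3 = 1. Thus, ϕ3(x, y, z) = −2x + y + z.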
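A quick way to confirm the answer is to tabulate ϕi(vj) and check that the table is the identity matrix, as the definition of the dual basis requires. A small Python check, with functionals stored as coefficient rows:

```python
basis = [(1, -1, 3), (0, 1, -1), (0, 3, -2)]  # v1, v2, v3
dual = [(1, 0, 0), (7, -2, -3), (-2, 1, 1)]   # phi1, phi2, phi3 found above


def apply(phi, v):
    """Evaluate the functional with coefficient row phi at v."""
    return sum(a * x for a, x in zip(phi, v))


# Entry (i, j) is phi_i(v_j); the dual-basis condition says this is delta_ij.
table = [[apply(phi, v) for v in basis] for phi in dual]
print(table)  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```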

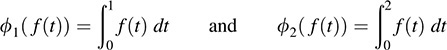

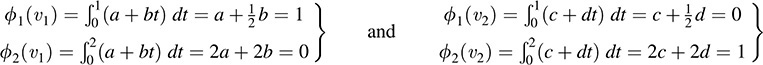

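11.2. Let V = {a + bt : a, b ∈ R}, the vector space of real polynomials of degree ≤ 1. Find the basis {v1, v2} of V that is dual to the basis {ϕ1, ϕ2} of V* defined by

$$\phi_1(f(t)) = \int_0^1 f(t)\,dt \qquad\text{and}\qquad \phi_2(f(t)) = \int_0^2 f(t)\,dt$$

Let v1 = a + bt and v2 = c + dt. By definition of the dual basis,

$$\phi_1(v_1) = 1, \quad \phi_2(v_1) = 0 \qquad\text{and}\qquad \phi_1(v_2) = 0, \quad \phi_2(v_2) = 1$$

Thus,

$$\begin{cases} \phi_1(v_1) = \int_0^1 (a + bt)\,dt = a + \tfrac{1}{2}b = 1 \\ \phi_2(v_1) = \int_0^2 (a + bt)\,dt = 2a + 2b = 0 \end{cases} \qquad\text{and}\qquad \begin{cases} \phi_1(v_2) = \int_0^1 (c + dt)\,dt = c + \tfrac{1}{2}d = 0 \\ \phi_2(v_2) = \int_0^2 (c + dt)\,dt = 2c + 2d = 1 \end{cases}$$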

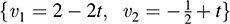

Solving each system yields a = 2, b = −2 and c = −1/2, d = 1. Thus, {v1 = 2 − 2t, v2 = −1/2 + t} is the basis of V that is dual to {ϕ1, ϕ2}.
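The same δij check works here once the integrals are evaluated exactly. For f(t) = a + bt, the integral from 0 to c equals ac + bc²/2. The sketch below (hypothetical helper `integral`, polynomials stored as coefficient pairs (a, b), exact arithmetic via `fractions.Fraction`) confirms that ϕi(vj) = δij for the basis just found.

```python
from fractions import Fraction


def integral(poly, upper):
    """Exact integral from 0 to `upper` of a + b*t, where poly = (a, b)."""
    a, b = poly
    return a * upper + b * Fraction(upper ** 2, 2)


def phi1(f):
    return integral(f, 1)  # integral over [0, 1]


def phi2(f):
    return integral(f, 2)  # integral over [0, 2]


v1 = (Fraction(2), Fraction(-2))     # v1 = 2 - 2t
v2 = (Fraction(-1, 2), Fraction(1))  # v2 = -1/2 + t

table = [[phi(v) for v in (v1, v2)] for phi in (phi1, phi2)]
print(table == [[1, 0], [0, 1]])  # True
```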

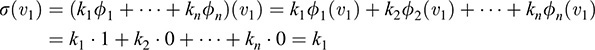

11.3. Prove Theorem 11.1: Suppose {v1, …, vn} is a basis of V over K. Let ϕ1, …, ϕn ∈ V* be defined by ϕi(vj) = 0 for i ≠ j, but ϕi(vj) = 1 for i = j. Then {ϕ1, …, ϕn} is a basis of V*.

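We first show that {ϕ1, …, ϕn} spans V*. Let ϕ be an arbitrary element of V*, and suppose

$$\phi(v_1) = k_1, \quad \phi(v_2) = k_2, \quad \ldots, \quad \phi(v_n) = k_n$$

Set σ = k1ϕ1 + ⋯ + knϕn. Then

$$\sigma(v_1) = (k_1\phi_1 + \cdots + k_n\phi_n)(v_1) = k_1\phi_1(v_1) + k_2\phi_2(v_1) + \cdots + k_n\phi_n(v_1) = k_1 \cdot 1 + k_2 \cdot 0 + \cdots + k_n \cdot 0 = k_1$$

Similarly, for i = 2, …, n,

$$\sigma(v_i) = (k_1\phi_1 + \cdots + k_n\phi_n)(v_i) = k_1\phi_1(v_i) + \cdots + k_i\phi_i(v_i) + \cdots + k_n\phi_n(v_i) = k_i$$

Thus, ϕ(vi) = σ(vi) for i = 1, …, n. Because ϕ and σ agree on the basis vectors, ϕ = σ = k1ϕ1 + ⋯ + knϕn. Accordingly, {ϕ1, …, ϕn} spans V*.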

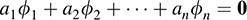

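It remains to be shown that {ϕ1, …, ϕn} is linearly independent. Suppose

$$a_1\phi_1 + a_2\phi_2 + \cdots + a_n\phi_n = 0$$

Applying both sides to v1, we obtain

$$0 = 0(v_1) = (a_1\phi_1 + \cdots + a_n\phi_n)(v_1) = a_1\phi_1(v_1) + a_2\phi_2(v_1) + \cdots + a_n\phi_n(v_1) = a_1 \cdot 1 + a_2 \cdot 0 + \cdots + a_n \cdot 0 = a_1$$

Similarly, for i = 2, …, n,

$$0 = 0(v_i) = (a_1\phi_1 + \cdots + a_n\phi_n)(v_i) = a_1\phi_1(v_i) + \cdots + a_i\phi_i(v_i) + \cdots + a_n\phi_n(v_i) = a_i$$

That is, a1 = 0, …, an = 0. Hence, {ϕ1, …, ϕn} is linearly independent, and so it is a basis of V*.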

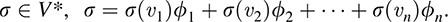

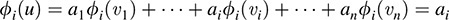

11.4. Prove Theorem 11.2: Let {v1, …, vn} be a basis of V and let {ϕ1, …, ϕn} be the dual basis in V*. For any u ∈ V and any σ ∈ V*, (i) u = Σi ϕi(u)vi, (ii) σ = Σi σ(vi)ϕi.

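Suppose

$$u = a_1v_1 + a_2v_2 + \cdots + a_nv_n \tag{1}$$

Then

$$\phi_1(u) = a_1\phi_1(v_1) + a_2\phi_1(v_2) + \cdots + a_n\phi_1(v_n) = a_1 \cdot 1 + a_2 \cdot 0 + \cdots + a_n \cdot 0 = a_1$$

Similarly, for i = 2, …, n,

$$\phi_i(u) = a_1\phi_i(v_1) + \cdots + a_i\phi_i(v_i) + \cdots + a_n\phi_i(v_n) = a_i$$

That is, ϕ1(u) = a1, ϕ2(u) = a2, …, ϕn(u) = an. Substituting these results into (1), we obtain (i).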

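Next we prove (ii). Applying the linear functional σ to both sides of (i),

$$\sigma(u) = \phi_1(u)\sigma(v_1) + \cdots + \phi_n(u)\sigma(v_n) = \sigma(v_1)\phi_1(u) + \cdots + \sigma(v_n)\phi_n(u) = \big(\sigma(v_1)\phi_1 + \sigma(v_2)\phi_2 + \cdots + \sigma(v_n)\phi_n\big)(u)$$

Because the above holds for every u ∈ V, σ = σ(v1)ϕ1 + σ(v2)ϕ2 + ⋯ + σ(vn)ϕn, as claimed.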

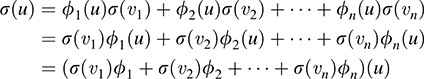

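11.5. Prove Theorem 11.3: Let {vi} and {wi} be bases of V and let {ϕi} and {σi} be the respective dual bases in V*. Let P be the change-of-basis matrix from {vi} to {wi}. Then (P⁻¹)ᵀ is the change-of-basis matrix from {ϕi} to {σi}.

Suppose, for i = 1, …, n,

$$w_i = a_{i1}v_1 + a_{i2}v_2 + \cdots + a_{in}v_n \qquad\text{and}\qquad \sigma_i = b_{i1}\phi_1 + b_{i2}\phi_2 + \cdots + b_{in}\phi_n$$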

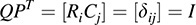

Then P = [aij] and Q = [bij]. We seek to prove that Q = (P⁻¹)ᵀ.

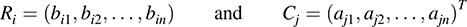

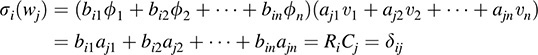

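Let Ri denote the ith row of Q and let Cj denote the jth column of Pᵀ. Then

$$R_i = (b_{i1}, b_{i2}, \ldots, b_{in}) \qquad\text{and}\qquad C_j = (a_{j1}, a_{j2}, \ldots, a_{jn})^T$$

By definition of the dual basis,

$$\sigma_i(w_j) = (b_{i1}\phi_1 + \cdots + b_{in}\phi_n)(a_{j1}v_1 + \cdots + a_{jn}v_n) = b_{i1}a_{j1} + b_{i2}a_{j2} + \cdots + b_{in}a_{jn} = R_iC_j = \delta_{ij}$$

where δij is the Kronecker delta. Thus,

$$QP^T = [R_iC_j] = [\delta_{ij}] = I$$

Therefore, Q = (Pᵀ)⁻¹ = (P⁻¹)ᵀ, as claimed.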

11.6. Suppose v ∈ V, v ≠ 0, and dim V = n. Show that there exists ϕ ∈ V* such that ϕ(v) ≠ 0.

We extend {v} to a basis {v, v2, …, vn} of V. By Theorem 5.2, there exists a unique linear mapping ϕ: V → K such that ϕ(v) = 1 and ϕ(vi) = 0 for i = 2, …, n. Hence, ϕ has the desired property.

11.7. Prove Theorem 11.4: Suppose dim V = n. Then the natural mapping v ↦ v̂ is an isomorphism of V onto V**.

We first prove that the map v ↦ v̂ is linear, that is, for any vectors v, w ∈ V and any scalars a, b ∈ K,

$$\widehat{av + bw} = a\hat{v} + b\hat{w}$$

For any linear functional ϕ ∈ V*,

$$\widehat{av + bw}(\phi) = \phi(av + bw) = a\,\phi(v) + b\,\phi(w) = a\,\hat{v}(\phi) + b\,\hat{w}(\phi) = (a\hat{v} + b\hat{w})(\phi)$$

Because this holds for every ϕ ∈ V*, the two sides of the identity above agree as elements of V**. Thus, the map v ↦ v̂ is linear.

Now suppose v ∈ V, v ≠ 0. Then, by Problem 11.6, there exists ϕ ∈ V* for which ϕ(v) ≠ 0. Hence, v̂(ϕ) = ϕ(v) ≠ 0, and thus v̂ ≠ 0. Because v ≠ 0 implies v̂ ≠ 0, the map v ↦ v̂ is nonsingular and hence an isomorphism (Theorem 5.64).

Now dim V = dim V* = dim V**, because V has finite dimension. Accordingly, the mapping v ↦ v̂ is an isomorphism of V onto V**.

Annihilators

11.8. Show that if ϕ ∈ V* annihilates a subset S of V, then ϕ annihilates the linear span span(S) of S. Hence, S⁰ = [span(S)]⁰.

Suppose v ∈ span(S). Then there exist w1, …, wr ∈ S for which v = a1w1 + a2w2 + ⋯ + arwr. Therefore,

$$\phi(v) = a_1\phi(w_1) + a_2\phi(w_2) + \cdots + a_r\phi(w_r) = a_1 \cdot 0 + a_2 \cdot 0 + \cdots + a_r \cdot 0 = 0$$

Because v was an arbitrary element of span(S), ϕ annihilates span(S), as claimed.

11.9. Find a basis of the annihilator W⁰ of the subspace W of R⁴ spanned by

v1 = (1, 2, −3, 4) and v2 = (0, 1, 4, −1)

By Problem 11.8, it suffices to find a basis of the set of linear functionals ϕ such that ϕ(v1) = 0 and ϕ(v2) = 0, where ϕ(x1, x2, x3, x4) = ax1 + bx2 + cx3 + dx4. Thus,

$$\phi(1, 2, -3, 4) = a + 2b - 3c + 4d = 0 \qquad\text{and}\qquad \phi(0, 1, 4, -1) = b + 4c - d = 0$$

The system of two equations in the unknowns a, b, c, d is in echelon form with free variables c and d.

(1) Set c = 1, d = 0 to obtain the solution a = 11, b = −4, c = 1, d = 0.

(2) Set c = 0, d = 1 to obtain the solution a = −6, b = 1, c = 0, d = 1.

The linear functionals ϕ1(xi) = 11x1 − 4x2 + x3 and ϕ2(xi) = −6x1 + x2 + x4 form a basis of W⁰.
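Problem 11.8 reduces the search for W⁰ to a homogeneous linear system in the coefficients of ϕ, one equation per spanning vector. The sketch below (an illustrative helper, `annihilator_basis`, not from the text) row-reduces that system over the rationals and reads off one basis functional per free variable, as steps (1) and (2) do by hand. The number of pivots is dim W and the number of free variables is dim W⁰, so the two add up to n, in line with Theorem 11.5(i).

```python
from fractions import Fraction


def annihilator_basis(spanning):
    """Coefficient rows of a basis of W^0, where W = span(spanning) in K^n.

    phi(x) = c . x lies in W^0 iff c . w = 0 for every spanning vector w
    (Problem 11.8), so we find the null space of the matrix whose rows are
    the spanning vectors, using reduced row echelon form.
    """
    rows = [[Fraction(x) for x in w] for w in spanning]
    n = len(rows[0])
    pivots = []
    r = 0
    for col in range(n):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot here, so this column is a free variable
        rows[r], rows[pivot] = rows[pivot], rows[r]
        p = rows[r][col]
        rows[r] = [x / p for x in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
        if r == len(rows):
            break
    free = [c for c in range(n) if c not in pivots]
    basis = []
    for fcol in free:
        # Set this free variable to 1 and the others to 0, then solve for the pivots.
        c = [Fraction(0)] * n
        c[fcol] = Fraction(1)
        for i, pcol in enumerate(pivots):
            c[pcol] = -rows[i][fcol]
        basis.append(c)
    return basis


# Problem 11.9: W spanned by v1 = (1, 2, -3, 4) and v2 = (0, 1, 4, -1).
basis = annihilator_basis([(1, 2, -3, 4), (0, 1, 4, -1)])
# basis == [[11, -4, 1, 0], [-6, 1, 0, 1]] (as Fractions)
```

Dependent spanning vectors are handled too: they simply produce zero rows and no extra pivots.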

11.10. Show that (a) For any subset S of V, S ⊆ S⁰⁰. (b) If S1 ⊆ S2, then S2⁰ ⊆ S1⁰.

(a) Let v ∈ S. Then for every linear functional ϕ ∈ S⁰, v̂(ϕ) = ϕ(v) = 0. Hence, v̂ ∈ (S⁰)⁰. Therefore, under the identification of V and V**, v ∈ S⁰⁰. Accordingly, S ⊆ S⁰⁰.

(b) Let ϕ ∈ S2⁰. Then ϕ(v) = 0 for every v ∈ S2. But S1 ⊆ S2; hence, ϕ annihilates every element of S1 (i.e., ϕ ∈ S1⁰). Therefore, S2⁰ ⊆ S1⁰.

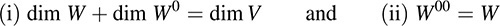

11.11. Prove Theorem 11.5: Suppose V has finite dimension and W is a subspace of V. Then

(i) dim W + dim W⁰ = dim V, (ii) W⁰⁰ = W.

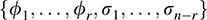

(i) Suppose dim V = n and dim W = r ≤ n. We want to show that dim W⁰ = n − r. We choose a basis {w1, …, wr} of W and extend it to a basis of V, say {w1, …, wr, v1, …, vn−r}. Consider the dual basis

$$\{\phi_1, \ldots, \phi_r, \sigma_1, \ldots, \sigma_{n-r}\}$$

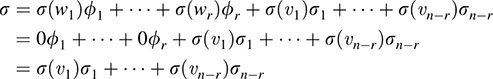

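By definition of the dual basis, each of the above σ's annihilates each wi; hence, σ1, …, σn−r ∈ W⁰. We claim that {σj} is a basis of W⁰. Now {σj} is part of a basis of V*, and so it is linearly independent. We next show that {σj} spans W⁰. Let σ ∈ W⁰. By Theorem 11.2,

$$\begin{aligned} \sigma &= \sigma(w_1)\phi_1 + \cdots + \sigma(w_r)\phi_r + \sigma(v_1)\sigma_1 + \cdots + \sigma(v_{n-r})\sigma_{n-r} \\ &= 0\phi_1 + \cdots + 0\phi_r + \sigma(v_1)\sigma_1 + \cdots + \sigma(v_{n-r})\sigma_{n-r} \\ &= \sigma(v_1)\sigma_1 + \cdots + \sigma(v_{n-r})\sigma_{n-r} \end{aligned}$$

Consequently, {σ1, …, σn−r} spans W⁰ and so it is a basis of W⁰. Accordingly, as required,

$$\dim W^0 = n - r = \dim V - \dim W$$

(ii) Suppose dim V = n and dim W = r. Then dim V* = n and, by (i), dim W⁰ = n − r. Thus, by (i) applied to the subspace W⁰ of V*, dim W⁰⁰ = n − (n − r) = r; therefore, dim W = dim W⁰⁰. By Problem 11.10, W ⊆ W⁰⁰. Accordingly, W = W⁰⁰.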

11.12. Let U and W be subspaces of V. Prove that (U + W)⁰ = U⁰ ∩ W⁰.

Let ϕ ∈ (U + W)⁰. Then ϕ annihilates U + W, and so, in particular, ϕ annihilates U and W. That is, ϕ ∈ U⁰ and ϕ ∈ W⁰; hence, ϕ ∈ U⁰ ∩ W⁰. Thus, (U + W)⁰ ⊆ U⁰ ∩ W⁰.

On the other hand, suppose σ ∈ U⁰ ∩ W⁰. Then σ annihilates U and also W. If v ∈ U + W, then v = u + w, where u ∈ U and w ∈ W. Hence, σ(v) = σ(u) + σ(w) = 0 + 0 = 0. Thus, σ annihilates U + W; that is, σ ∈ (U + W)⁰. Accordingly, U⁰ ∩ W⁰ ⊆ (U + W)⁰.

The two inclusion relations together give us the desired equality.

Remark: Observe that no dimension argument is employed in the proof; hence, the result holds for spaces of finite or infinite dimension.

Transpose of a Linear Mapping

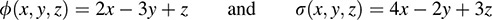

11.13. Let ϕ be the linear functional on R² defined by ϕ(x, y) = x − 2y. For each of the following linear operators T on R², find (Tᵗ(ϕ))(x, y):

(a) T(x, y) = (x, 0), (b) T(x, y) = (y, x + y), (c) T(x, y) = (2x – 3y, 5x + 2y)

By definition, Tᵗ(ϕ) = ϕ ∘ T; that is, (Tᵗ(ϕ))(v) = ϕ(T(v)) for every v. Hence,

(a) (Tᵗ(ϕ))(x, y) = ϕ(T(x, y)) = ϕ(x, 0) = x

(b) (Tᵗ(ϕ))(x, y) = ϕ(T(x, y)) = ϕ(y, x + y) = y − 2(x + y) = −2x − y

(c) (Tᵗ(ϕ))(x, y) = ϕ(T(x, y)) = ϕ(2x − 3y, 5x + 2y) = (2x − 3y) − 2(5x + 2y) = −8x − 7y

11.14. Let T: V → U be linear and let Tᵗ: U* → V* be its transpose. Show that the kernel of Tᵗ is the annihilator of the image of T, that is, Ker Tᵗ = (Im T)⁰.

Suppose ϕ ∈ Ker Tᵗ; that is, Tᵗ(ϕ) = ϕ ∘ T = 0. If u ∈ Im T, then u = T(v) for some v ∈ V; hence,

$$\phi(u) = \phi(T(v)) = (\phi \circ T)(v) = 0(v) = 0$$

We have that ϕ(u) = 0 for every u ∈ Im T; hence, ϕ ∈ (Im T)⁰. Thus, Ker Tᵗ ⊆ (Im T)⁰.

On the other hand, suppose σ ∈ (Im T)⁰; that is, σ(Im T) = {0}. Then, for every v ∈ V,

$$(T^t(\sigma))(v) = (\sigma \circ T)(v) = \sigma(T(v)) = 0 = 0(v)$$

We have (Tᵗ(σ))(v) = 0(v) for every v ∈ V; hence, Tᵗ(σ) = 0. Thus, σ ∈ Ker Tᵗ, and so (Im T)⁰ ⊆ Ker Tᵗ.

The two inclusion relations together give us the required equality.

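11.15. Suppose V and U have finite dimension and T: V → U is linear. Prove rank(T) = rank(Tᵗ).

Suppose dim V = n and dim U = m, and suppose rank(T) = r. By Theorem 11.5,

$$\dim(\operatorname{Im} T)^0 = \dim U - \dim(\operatorname{Im} T) = m - \operatorname{rank}(T) = m - r$$

By Problem 11.14, Ker Tᵗ = (Im T)⁰. Hence, nullity(Tᵗ) = m − r. It then follows that, as claimed,

$$\operatorname{rank}(T^t) = \dim U^* - \operatorname{nullity}(T^t) = m - (m - r) = r = \operatorname{rank}(T)$$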
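By Theorem 11.7, Tᵗ is represented by Aᵀ whenever T is represented by A, so Problem 11.15 is the coordinate-free form of the familiar fact that a matrix and its transpose have the same rank. A small numerical check, using a hypothetical helper `rank` that does Gaussian elimination over the rationals, on an example matrix chosen for illustration:

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix via Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for col in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(rows)):
            f = rows[i][col] / rows[r][col]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


# A 3x4 matrix of rank 2 (its third row is the sum of the first two).
A = [[1, 2, -3, 4], [0, 1, 4, -1], [1, 3, 1, 3]]
print(rank(A), rank(transpose(A)))  # 2 2
```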

11.16. Prove Theorem 11.7: Let T: V → U be linear and let A be the matrix representation of T in the bases {vj} of V and {ui} of U. Then the transpose matrix Aᵀ is the matrix representation of Tᵗ: U* → V* in the bases dual to {ui} and {vj}.

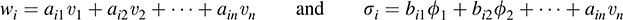

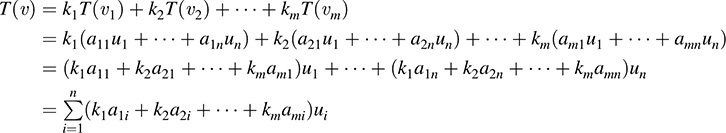

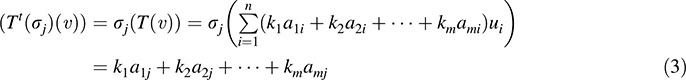

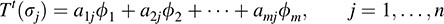

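Suppose, for j = 1, …, m,

$$T(v_j) = a_{j1}u_1 + a_{j2}u_2 + \cdots + a_{jn}u_n \tag{1}$$

We want to prove that, for i = 1, …, n,

$$T^t(\sigma_i) = a_{1i}\phi_1 + a_{2i}\phi_2 + \cdots + a_{mi}\phi_m \tag{2}$$

where {σi} and {ϕj} are the bases dual to {ui} and {vj}, respectively.

Let v ∈ V and suppose v = k1v1 + k2v2 + ⋯ + kmvm. Then, by (1),

$$\begin{aligned} T(v) &= k_1T(v_1) + k_2T(v_2) + \cdots + k_mT(v_m) \\ &= k_1(a_{11}u_1 + \cdots + a_{1n}u_n) + \cdots + k_m(a_{m1}u_1 + \cdots + a_{mn}u_n) \\ &= \sum_{l=1}^{n} (k_1a_{1l} + k_2a_{2l} + \cdots + k_ma_{ml})\,u_l \end{aligned}$$

Hence, for i = 1, …, n,

$$(T^t(\sigma_i))(v) = \sigma_i(T(v)) = \sigma_i\Big(\sum_{l=1}^{n} (k_1a_{1l} + \cdots + k_ma_{ml})\,u_l\Big) = k_1a_{1i} + k_2a_{2i} + \cdots + k_ma_{mi} \tag{3}$$

On the other hand, for i = 1, …, n,

$$(a_{1i}\phi_1 + a_{2i}\phi_2 + \cdots + a_{mi}\phi_m)(v) = (a_{1i}\phi_1 + \cdots + a_{mi}\phi_m)(k_1v_1 + \cdots + k_mv_m) = k_1a_{1i} + k_2a_{2i} + \cdots + k_ma_{mi} \tag{4}$$

Because v ∈ V was arbitrary, (3) and (4) imply that

$$T^t(\sigma_i) = a_{1i}\phi_1 + a_{2i}\phi_2 + \cdots + a_{mi}\phi_m, \qquad i = 1, \ldots, n$$

which is (2). Thus, the theorem is proved.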

SUPPLEMENTARY PROBLEMS

Dual Spaces and Dual Bases

11.17. Find (a) ϕ + σ, (b) 3ϕ, (c) 2ϕ − 5σ, where ϕ: R³ → R and σ: R³ → R are defined by

$$\phi(x, y, z) = 2x - 3y + z \qquad\text{and}\qquad \sigma(x, y, z) = 4x - 2y + 3z$$

11.18. Find the dual basis of each of the following bases of R³: (a) {(1, 0, 0), (0, 1, 0), (0, 0, 1)}, (b) {(1, −2, 3), (1, −1, 1), (2, −4, 7)}.

11.19. Let V be the vector space of polynomials over R of degree ≤ 2. Let ϕ1, ϕ2, ϕ3 be the linear functionals on V defined by

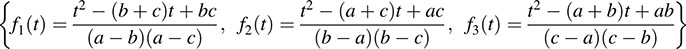

Here f(t) = a + bt + ct² ∈ V and f′(t) denotes the derivative of f(t). Find the basis {f1(t), f2(t), f3(t)} of V that is dual to {ϕ1, ϕ2, ϕ3}.

11.20. Suppose u, v ∈ V and that ϕ(u) = 0 implies ϕ(v) = 0 for all ϕ ∈ V*. Show that v = ku for some scalar k.

11.21. Suppose ϕ, σ ∈ V* and that ϕ(v) = 0 implies σ(v) = 0 for all v ∈ V. Show that σ = kϕ for some scalar k.

11.22. Let V be the vector space of polynomials over K. For a ∈ K, define ϕa: V → K by ϕa(f(t)) = f(a). Show that (a) ϕa is linear; (b) if a ≠ b, then ϕa ≠ ϕb.

11.23. Let V be the vector space of polynomials of degree ≤ 2. Let a, b, c ∈ K be distinct scalars. Let ϕa, ϕb, ϕc be the linear functionals defined by ϕa(f(t)) = f(a), ϕb(f(t)) = f(b), ϕc(f(t)) = f(c). Show that {ϕa, ϕb, ϕc} is linearly independent, and find the basis {f1(t), f2(t), f3(t)} of V that is its dual.

11.24. Let V be the vector space of square matrices of order n. Let T : V → K be the trace mapping; that is, T(A) = a11 + a22 + … + ann, where A = (aij). Show that T is linear.

11.25. Let W be a subspace of V. For any linear functional ϕ on W, show that there is a linear functional σ on V such that σ(w) = ϕ(w) for any w ∈ W; that is, ϕ is the restriction of σ to W.

11.26. Let {e1, …, en} be the usual basis of Kⁿ. Show that the dual basis is {π1, …, πn}, where πi is the ith projection mapping; that is, πi(a1, …, an) = ai.

11.27. Let V be a vector space over R. Let ϕ1, ϕ2 ∈ V* and suppose σ: V → R, defined by σ(v) = ϕ1(v)ϕ2(v), also belongs to V*. Show that either ϕ1 = 0 or ϕ2 = 0.

Annihilators

11.28. Let W be the subspace of R⁴ spanned by (1, 2, −3, 4), (1, 3, −2, 6), (1, 4, −1, 8). Find a basis of the annihilator of W.

11.29. Let W be the subspace of R³ spanned by (1, 1, 0) and (0, 1, 1). Find a basis of the annihilator of W.

11.30. Show that, for any subset S of V, span(S) = S⁰⁰, where span(S) is the linear span of S.

11.31. Let U and W be subspaces of a vector space V of finite dimension. Prove that (U ∩ W)⁰ = U⁰ + W⁰.

11.32. Suppose V = U ⊕ W. Prove that V* = U⁰ ⊕ W⁰.

Transpose of a Linear Mapping

11.33. Let ϕ be the linear functional on R² defined by ϕ(x, y) = 3x − 2y. For each of the following linear mappings T: R³ → R², find (Tᵗ(ϕ))(x, y, z):

(a) T(x, y, z) = (x + y, y + z), (b) T(x, y, z) = (x + y + z, 2x – y)

11.34. Suppose T1: U → V and T2: V → W are linear. Prove that (T2 ∘ T1)ᵗ = T1ᵗ ∘ T2ᵗ.

11.35. Suppose T: V → U is linear and V has finite dimension. Prove that Im Tᵗ = (Ker T)⁰.

11.36. Suppose T: V → U is linear and u ∈ U. Prove that u ∈ Im T or there exists ϕ ∈ U* such that Tᵗ(ϕ) = 0 and ϕ(u) = 1.

11.37. Let V be of finite dimension. Show that the mapping T ↦ Tᵗ is an isomorphism from Hom(V, V) onto Hom(V*, V*). (Here T is any linear operator on V.)

Miscellaneous Problems

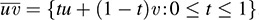

11.38. Let V be a vector space over R. The line segment uv joining points u, v ∈ V is defined by

$$\overline{uv} = \{tu + (1 - t)v : 0 \le t \le 1\}$$

A subset S of V is convex if u, v ∈ S implies that the segment uv is contained in S. Let ϕ ∈ V*. Define

$$W^+ = \{v \in V : \phi(v) > 0\}, \qquad W = \{v \in V : \phi(v) = 0\}, \qquad W^- = \{v \in V : \phi(v) < 0\}$$

Prove that W⁺, W, and W⁻ are convex.

11.39. Let V be a vector space of finite dimension. A hyperplane H of V may be defined as the kernel of a nonzero linear functional ϕ on V. Show that every subspace of V is the intersection of a finite number of hyperplanes.

ANSWERS TO SUPPLEMENTARY PROBLEMS

11.17. (a) 6x − 5y + 4z, (b) 6x − 9y + 3z, (c) −16x + 4y − 13z

11.18. (a) ϕ1 = x, ϕ2 = y, ϕ3 = z; (b) ϕ1 = −3x − 5y − 2z, ϕ2 = 2x + y, ϕ3 = x + 2y + z

11.19.

11.22. (b) Let f(t) = t. Then ϕa(f(t)) = a ≠ b = ϕb(f(t)); therefore, ϕa ≠ ϕb.

11.23.

11.28. {ϕ1(x, y, z, t) = 5x − y + z, ϕ2(x, y, z, t) = 2y − t}

11.29. {ϕ(x, y, z) = x − y + z}

11.33. (a) (Tᵗ(ϕ))(x, y, z) = 3x + y − 2z, (b) (Tᵗ(ϕ))(x, y, z) = −x + 5y + 3z