CHAPTER 11

Architecture Frameworks and Secure Network Architectures

In this chapter, you will

• Explore use cases and purpose for frameworks

• Examine the best practices for system architectures

• Explain the use of secure configuration guides

• Given a scenario, implement secure network architecture concepts

Architectures play an important role in the establishment of a secure enterprise. Implementing security controls on computer systems may seem to be independent of architecture, but it is the architecture that determines which security controls are implemented and how they are configured. Architectures are intended to be in place for a fairly long term and are difficult to change, so carefully choosing and implementing the correct architecture for an organization’s computer systems up front makes them easier to maintain and more effective over time.

Certification Objective This chapter covers CompTIA Security+ exam objectives 3.1, Explain use cases and purpose for frameworks, best practices and secure configuration guides, and 3.2, Given a scenario, implement secure network architecture concepts.

Objective 3.2 is a good candidate for performance-based questions, which means you should expect questions in which you must apply your knowledge of the topic to a scenario. The best answer to a question will depend upon specific details in the scenario preceding the question, not just the question. The questions may also involve tasks other than just picking the best answer from a list. Instead, you may be instructed to order things on a diagram, put options in rank order, match two columns of items, or perform a similar task.

Industry-Standard Frameworks and Reference Architectures

Industry-standard frameworks and reference architectures are conceptual blueprints that define the structure and operation of the IT systems in the enterprise. Just as an architectural diagram provides a blueprint for constructing a building, the enterprise architecture provides the blueprint and roadmap for aligning IT and security with the enterprise’s business strategy. A framework is more generic than an architecture. An enterprise can use both: a framework describes the desired objectives and methodology, while an architecture specifies the components, technologies, and protocols used to achieve those design objectives.

There are numerous sources of frameworks and reference architectures, and they can be grouped in a variety of ways. The Security+ exam groups them in the following groups: regulatory, non-regulatory, national vs. international, and industry-specific frameworks.

Regulatory

Industries under governmental regulation frequently have an approved set of architectures defined by regulatory bodies. For example, the electric industry has the NERC (North American Electric Reliability Corporation) Critical Infrastructure Protection (CIP) standards. This is a set of 14 individual standards that, when taken together, drive a reference framework/architecture for the bulk electric system in North America. Most industries in the United States are regulated in one manner or another. When it comes to cybersecurity, more and more regulations are beginning to apply, from privacy, to breach notification, to due diligence and due care provisions.

Non-regulatory

Some reference architectures are neither industry specific nor regulatory, but rather are technology focused and considered non-regulatory, such as the National Institute of Standards and Technology (NIST) Cloud Computing Security Reference Architecture (Special Publication 500-299) and the NIST Framework for Improving Critical Infrastructure Cybersecurity (commonly known as the Cybersecurity Framework, or CSF). The latter is a consensus-created, overarching framework that assists enterprises with their cybersecurity programs.

To give you a sense of how a non-regulatory framework is structured, consider the structure of the CSF. The CSF has three main elements: the Framework Core, the Framework Implementation Tiers, and the Framework Profiles. The Framework Core is built around five Functions: Identify, Protect, Detect, Respond, and Recover. The Framework Core then has Categories and Subcategories for each of these Functions, with Informative References to standards, guidelines, and practices matching the Subcategories. The Framework Implementation Tiers are a way of representing an organization’s level of achievement, from Partial (Tier 1), to Risk Informed (Tier 2), to Repeatable (Tier 3), to Adaptive (Tier 4). These Tiers are similar to maturity model levels. The Framework Profiles section describes the current state of alignment for the elements and the desired state of alignment, which supports a form of gap analysis. The NIST CSF is being mandated for government agencies, but is completely voluntary in the private sector. This framework has been well received, partly because of its comprehensive nature and partly because of its consensus approach, which created a usable document. NIST has been careful to promote the CSF not as a government-driven requirement but as an optional, non-regulatory framework.
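The gap analysis that Framework Profiles support can be pictured as a comparison between two sets of outcomes. The following is a minimal sketch, not an official NIST tool: the function name `profile_gaps` and the sample profiles are hypothetical, and the Subcategory identifiers are used only as illustrative labels that should be checked against the published Framework Core.

```python
# Map the identifier prefix of an outcome to its CSF Function.
FUNCTION_PREFIXES = {
    "ID": "Identify",
    "PR": "Protect",
    "DE": "Detect",
    "RS": "Respond",
    "RC": "Recover",
}


def profile_gaps(current, target):
    """Group the outcomes in the target profile that the current profile lacks."""
    gaps = {name: [] for name in FUNCTION_PREFIXES.values()}
    for outcome in sorted(target - current):
        prefix = outcome.split(".", 1)[0]
        gaps[FUNCTION_PREFIXES[prefix]].append(outcome)
    # Report only the Functions that actually have a gap.
    return {name: items for name, items in gaps.items() if items}


# Hypothetical profiles for an imaginary organization.
current_profile = {"ID.AM-1", "PR.AC-1"}
target_profile = {"ID.AM-1", "PR.AC-1", "DE.CM-1", "RS.RP-1"}

gaps = profile_gaps(current_profile, target_profile)
```

Here the organization has gaps only in the Detect and Respond Functions, which is where it would focus its improvement plan.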

National vs. International

The U.S. federal government has its own cloud-based reference architecture for systems that use the cloud. Called the Federal Risk and Authorization Management Program (FedRAMP), this process is a government-wide program that provides a standardized approach to security assessment, authorization, and continuous monitoring for systems using cloud products and services.

One of the more interesting international frameworks has been the harmonization between the United States and European Union with respect to data privacy (U.S.) or data protection (EU) issues. The EU rules and regulations covering privacy issues and data protection were so radically different from those in the U.S. that the two sides had to forge a special framework, originally called the U.S.-EU Safe Harbor Framework, to harmonize the concepts and enable U.S. and EU corporations to effectively do business with each other. Changes in EU law, coupled with EU court determinations that the U.S.-EU Safe Harbor Framework is not a valid mechanism to comply with EU data protection requirements when transferring personal data from the European Union to the United States, forced a complete refreshing of the methodology. The new privacy-sharing methodology is called the EU-U.S. Privacy Shield Framework and became effective in the summer of 2016.

Industry-Specific Frameworks

There are several examples of industry-specific frameworks. Industry-specific frameworks have been developed by entities within a particular industry—sometimes to address regulatory needs, other times because of industry-specific concerns or risks. Although some of these may not seem to be complete frameworks, they provide instructive guidance on how systems should be architected. Some of these frameworks are regulatory based, like the NERC CIP standards previously referenced. Another industry-specific framework is the HITRUST Common Security Framework (CSF) for use in the medical industry and enterprises that must address HIPAA/HITECH rules and regulations.

Benchmarks/Secure Configuration Guides

Benchmarks and secure configuration guides offer guidance for setting up and operating computer systems to a secure level that is understood and documented. As each organization may differ, the standard for a benchmark is a consensus-based set of knowledge designed to deliver a reasonable set of security across as wide a base as possible. There are numerous sources for these guides, but three main sources exist for a large number of these systems. You can get benchmark guides from manufacturers of the software, from the government, and from an independent organization called the Center for Internet Security (CIS). Not all systems have benchmarks, nor do all sources cover all systems, but searching for and following the correct configuration and setup directives can go a long way in establishing security.

The vendor/manufacturer guidance source is easy: go to the website of the vendor of your product. The government sources are a bit more scattered, but two solid sources stand out. The first is the NIST Computer Security Resource Center’s National Vulnerability Database (NVD) National Checklist Program (NCP) Repository, https://nvd.nist.gov/ncp/repository. The second is the U.S. Department of Defense’s Defense Information Systems Agency (DISA) Security Technical Implementation Guides (STIGs). These are detailed step-by-step implementation guides, a list of which is available at https://iase.disa.mil/stigs/Pages/index.aspx.
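Whatever its source, a benchmark ultimately reduces to a list of expected settings that a system can be checked against. The sketch below illustrates that idea only; the setting names and values are hypothetical and are not taken from any actual CIS benchmark or STIG.

```python
MISSING = "<missing>"


def audit(actual, benchmark):
    """Return (setting, expected, observed) for every deviation from the benchmark."""
    findings = []
    for setting, expected in benchmark.items():
        observed = actual.get(setting, MISSING)
        if observed != expected:
            findings.append((setting, expected, observed))
    return findings


# Hypothetical benchmark and server configuration, for illustration only.
BENCHMARK = {
    "password_min_length": 14,
    "ssh_root_login": "no",
    "telnet_enabled": False,
}
server = {"password_min_length": 8, "ssh_root_login": "no"}

findings = audit(server, BENCHMARK)
```

A setting that is absent from the system is reported as a finding rather than silently passed, because an undocumented default is not the same as a verified secure value.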

Platform/Vendor-Specific Guides

Setting up secure services is important to enterprises, and some of the best guidance comes from the manufacturer in the form of platform/vendor-specific guides. These guides include installation and configuration guidance, and in some cases operational guidance as well.

Web Server

Many different web servers are used in enterprises, but the market leaders are Microsoft, Apache, and nginx. By definition, web servers offer a connection between users (clients) and web pages (data being provided), and as such they are prone to attacks. Setting up any external-facing application properly is key to preventing unnecessary risk. Fortunately, for web servers, several authoritative and prescriptive sources of information are available to help administrators properly secure the application. In the case of Microsoft’s IIS and SharePoint Server, the company provides solid guidance on the proper configuration of the servers. The Apache Software Foundation provides some information for its web server products as well.

Another good source of information is the Center for Internet Security, as part of its benchmarking guides. The CIS guides provide authoritative, prescriptive guidance developed as part of a consensus effort between consultants, professionals, and others. This guidance has been subject to significant peer review and has withstood the test of time. CIS guides are available for multiple versions of Apache, Microsoft, and other vendors’ products.

Operating System

The operating system (OS) is the interface between the applications that we use to perform tasks and the actual physical computer hardware. As such, the OS is a key component for the secure operation of a system. Comprehensive, prescriptive configuration guides for all major operating systems are available from their respective manufacturers, from the Center for Internet Security, and from the DoD DISA STIGs program.

Application Server

Application servers are the part of the enterprise that handles the specific tasks we associate with IT systems. Whether it is an e-mail server, a database server, a messaging platform, or any other server, application servers are where the work happens. Proper configuration of an application server depends to a great degree on the server specifics. Standard application servers, such as e-mail and database servers, have guidance from the manufacturer, CIS, and STIGs. The less standard servers (ones with significant customizations, such as a custom set of applications written in-house for your inventory control operations, or order processing, or any other custom middleware) also require proper configuration, but the true vendor in these cases is the in-house builders of the software. Ensuring proper security settings and testing of these servers should be part of the build program so that they can be integrated into the normal security audit process to ensure continued proper configuration.

Network Infrastructure Devices

Network infrastructure devices are the switches, routers, concentrators, firewalls, and other specialty devices that make the network function smoothly. Properly configuring these devices can be challenging but is very important because failures at this level can adversely affect the security of traffic being processed by them. The criticality of these devices makes them targets, and if a firewall fails, in many cases there are no indications until an investigation finds that it failed to do its job. Ensuring these devices are properly configured and maintained is not a job to gloss over; it requires professional attention by properly trained personnel, backed by routine configuration audits to ensure the devices stay properly configured. With respect to most of these devices, the greatest risk lies in the user configuration of the device via rulesets, which are specific to each user and cannot be mandated by a manufacturer’s installation guide. Proper configuration and verification are site specific and, many times, individual device specific. Without a solid set of policies and procedures to ensure this work is properly performed, these devices, while they may work, will not perform in a secure manner.

General Purpose Guides

The best general purpose guide is the CIS Controls, a common set of 20 security controls. This project began as a consensus project out of the U.S. Department of Defense and has, over nearly 20 years, evolved into the de facto standard for selecting an effective set of security controls. The framework is now maintained by the Center for Internet Security and can be found at https://www.cisecurity.org/controls/.

EXAM TIP Determining the correct configuration information is done by carefully parsing the scenario in the question. It is common for IT systems to be composed of multiple major components (web server, database server, and application server), so read the question carefully to see what is being requested. The scenario specifics matter, as they will point to the best answer.

Defense-in-Depth/Layered Security

Secure system design relies upon many elements, a key one being defense-in-depth, or layered security. Defense-in-depth is a security principle by which multiple, differing security elements are employed to increase the level of security. Should an attacker be able to bypass one security measure, one of the overlapping controls can still catch and block the intrusion. For instance, in networking, a series of defenses, including access control lists, firewalls, intrusion detection systems, and network segregation, can be employed in an overlapping fashion to achieve protection.
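The overlapping nature of these defenses can be sketched as a chain of independent checks, any one of which can stop traffic that slipped past the others. This is an illustrative model only: the `Packet` fields, the three check functions, and the rules inside them are hypothetical stand-ins for real access control lists, firewalls, and intrusion detection systems.

```python
from typing import NamedTuple, Optional


class Packet(NamedTuple):
    src: str
    dst_port: int
    payload: str


def acl_allows(packet: Packet) -> bool:
    # Hypothetical ACL: drop traffic from one known-bad address block.
    return not packet.src.startswith("203.0.113.")


def firewall_allows(packet: Packet) -> bool:
    # Hypothetical firewall rule: only web ports are exposed.
    return packet.dst_port in {80, 443}


def ids_allows(packet: Packet) -> bool:
    # Hypothetical signature: a crude SQL injection marker.
    return "DROP TABLE" not in packet.payload.upper()


LAYERS = [("acl", acl_allows), ("firewall", firewall_allows), ("ids", ids_allows)]


def first_blocking_layer(packet: Packet) -> Optional[str]:
    """Return the name of the first layer that blocks the packet, or None."""
    for name, allows in LAYERS:
        if not allows(packet):
            return name
    return None


# This request passes the ACL and the firewall but is caught by the IDS.
attack = Packet("198.51.100.7", 443, "id=1; drop table users")
blocked_by = first_blocking_layer(attack)
```

Because each layer inspects a different property of the traffic, an attacker who satisfies one layer has not automatically satisfied the next.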

Vendor Diversity

Having multiple suppliers creates vendor diversity, which reduces the risk from any single supplier. Having multiple operating systems, such as both Linux and Windows, reduces the total risk should something happen to one of them. Having only a monoculture raises risks when something specific to that environment fails. Having two vendors supply parts means that should one vendor run into any problem, you have a second vendor to turn to. Having two connections to the Internet provides redundancy, and having them operated by separate vendors adds diversity and lowers the risks even more.

Having multiple vendors in a layered defense also adds security through the removal of a single failure mode scenario. Two firewalls, from different vendors, will be more robust should one firewall have a flaw. The odds of the other vendor having the same exploitable flaw are relatively low, making your overall security higher, even during a vendor failure.

Control Diversity

Security controls are the mechanisms by which security functions are achieved. It is important to have control diversity, both administrative and technical, providing layered security to ensure the controls are effective in producing the desired results. One area frequently overlooked is the value of policies and procedures to guide workers’ actions. If these policies and procedures are aligned with reducing risk, they act as controls. If there are technical controls backing up those policies, then policy violations may still not create a complete vulnerability, as the technical control can stop a problem from occurring. Total reliance on technical controls without policy provides insufficient security because users who lack policy guidance may utilize a system in ways not foreseen by the implementers of the technical controls, resulting in another risk.

Administrative

Administrative controls are those that operate on the management aspects of an organization. They include controls such as policies, regulations, and laws. Management activities such as planning and risk assessment are common examples of administrative controls. Having multiple independent, overlapping administrative controls can act as a form of layered security.

Technical

Technical controls are those that operate through a technological intervention in the system. Examples include elements such as user authentication (passwords), logical access controls, antivirus/anti-malware software, firewalls, intrusion detection and prevention systems, and so forth. Having multiple independent, overlapping technical controls, such as firewalls and access control lists to limit entry, is an example of layered security through technical controls.

EXAM TIP Know the difference between administrative and technical controls. You may be given a list of controls and asked to choose which is an administrative control (or a technical control).

User Training

Users represent an essential link in the security defenses of an enterprise. Users also represent a major point of vulnerability, as most significant attacks have a user component. Whether it is phishing, spear phishing, clickbaiting, or some other form of manipulating a user, history has shown it will succeed a portion of the time, and the attacker only needs one person to let them in. The best defense is to implement a strong user training program that instructs users to recognize safe and unsafe computing behaviors. The best form of user training has proven to be user-specific training, training that is related to the tasks that individuals use computers to accomplish. That means you need separate training for executives and management.

Another line of defense is to monitor users and mandate retraining when you notice that their training is no longer effective (for example, they begin clicking unverified links in e-mails). And for users who continually have problems following training/retraining instructions, you need to add additional layers of protection to their machines so that if they are compromised, the damage is limited.

EXAM TIP Users require security training that is specific to how they utilize computers in the enterprise, and they require periodic retraining.

Zones/Topologies

The first aspect of security is a layered defense. Just as a castle has a moat, an outside wall, an inside wall, and even a keep, so, too, does a modern secure network have different layers of protection. Different zones/topologies are designed to provide layers of defense, with the outermost layers providing basic protection and the innermost layers providing the highest level of protection. The outermost zone is the Internet, a free area, beyond any specific controls. Between the inner, secure corporate network and the Internet is an area where machines are considered at risk. A constant issue is that accessibility tends to be inversely related to level of protection, so it is more difficult to provide complete protection and unfettered access at the same time. Trade-offs between access and security are handled through zones, with successive zones guarded by firewalls enforcing ever-increasingly strict security policies.

DMZ

The zone that is between the untrusted Internet and the trusted internal network is called the DMZ, after its military counterpart, the demilitarized zone, where neither side has any specific controls. Within the inner, secure network, separate branches are frequently carved out to provide specific functional areas.

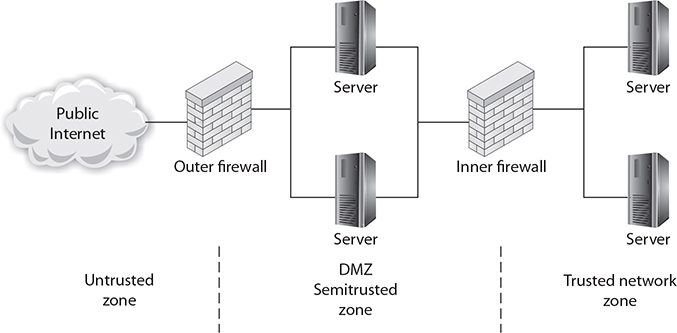

A DMZ in a computer network is used in the same way; it acts as a buffer zone between the Internet, where no controls exist, and the inner, secure network, where an organization has security policies in place. To demarcate the zones and enforce separation, a firewall is used on each side of the DMZ. The area between these firewalls is accessible from either the inner, secure network or the Internet. Figure 11-1 illustrates these zones as created by firewall placement. The firewalls are specifically designed to prevent direct access across the DMZ from the Internet to the inner, secure network.

Figure 11-1 The DMZ and zones of trust

Pay special attention to the security settings of network devices placed in the DMZ, and treat them as exposed to unauthorized use at all times. Machines whose functionality is locked down to preserve security are commonly called hardened operating systems in the industry. This lock-down approach needs to be applied to the machines in the DMZ, and although it means that their functionality is limited, such precautions ensure that the machines will work properly in a less-secure environment.

Many types of servers belong in the DMZ, including web servers that are serving content to Internet users, as well as remote-access servers and external e-mail servers. In general, any server directly accessed from the outside, untrusted Internet zone needs to be in the DMZ. Other servers should not be placed in the DMZ. Domain name servers for your inner, trusted network and database servers that house corporate databases should not be accessible from the outside. Application servers, file servers, print servers—all of the standard servers used in the trusted network—should be behind both firewalls, along with the routers and switches used to connect these machines.

The idea behind the use of the DMZ topology is to force an outside user to make at least one hop in the DMZ before accessing information inside the trusted network. If the outside user makes a request for a resource from the trusted network, such as a data element from a database via a web page, then this request needs to follow this scenario:

1. A user from the untrusted network (the Internet) requests data via a web page from a web server in the DMZ.

2. The web server in the DMZ requests the data from the application server, which can be in the DMZ or in the inner, trusted network.

3. The application server requests the data from the database server in the trusted network.

4. The database server returns the data to the requesting application server.

5. The application server returns the data to the requesting web server.

6. The web server returns the data to the requesting user from the untrusted network.

This separation accomplishes two specific, independent tasks. First, the user is separated from the request for data on a secure network. By having intermediaries do the requesting, this layered approach allows significant security levels to be enforced. Users do not have direct access or control over their requests, and this filtering process can put controls in place. Second, scalability is more easily realized. The multiple-server solution can be made to be very scalable, literally to millions of users, without slowing down any particular layer.
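The six-step request path above can be modeled as a series of hops, each of which must be permitted by the firewalls between zones. The sketch below is a simplified illustration: the zone names, the `ALLOWED_FLOWS` policy, and the placement of the application server in the trusted network are assumptions, and return traffic is assumed to be handled by stateful firewalls.

```python
# Zone-to-zone flows that the two firewalls permit (hypothetical policy).
# Note that ("internet", "internal") is deliberately absent.
ALLOWED_FLOWS = {
    ("internet", "dmz"),
    ("dmz", "dmz"),
    ("dmz", "internal"),
    ("internal", "internal"),
}

# Where each participant lives (the application server could also be in the DMZ).
ZONE_OF = {
    "user": "internet",
    "web": "dmz",
    "app": "internal",
    "db": "internal",
}


def path_permitted(hops):
    """Return True if every consecutive hop crosses zones in an allowed direction."""
    for src, dst in zip(hops, hops[1:]):
        if (ZONE_OF[src], ZONE_OF[dst]) not in ALLOWED_FLOWS:
            return False
    return True


layered_path = path_permitted(["user", "web", "app", "db"])
direct_path = path_permitted(["user", "db"])
```

The layered path succeeds because every hop is an allowed flow, while the direct path fails at the first hop, which is exactly the behavior the DMZ is meant to enforce.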

EXAM TIP DMZs act as a buffer zone between unprotected areas of a network (the Internet) and protected areas (sensitive company data stores), allowing for the monitoring and regulation of traffic between these two zones.

Extranet

An extranet is an extension of a selected portion of a company’s intranet to external partners. This allows a business to share information with customers, suppliers, partners, and other trusted groups while using a common set of Internet protocols to facilitate operations. Extranets can use public networks to extend their reach beyond a company’s own internal network, and some form of security, typically VPN, is used to secure this channel. The use of the term extranet implies both privacy and security. Privacy is required for many communications, and security is needed to prevent unauthorized use and events from occurring. Both of these functions can be achieved through the use of technologies described in this chapter and other chapters in this book. Proper firewall management, remote access, encryption, authentication, and secure tunnels across public networks are all methods used to ensure privacy and security for extranets.

EXAM TIP An extranet is a semiprivate network that uses common network technologies (such as HTTP, FTP, and so on) to share information and provide resources to business partners. Extranets can be accessed by more than one company, because they share information between organizations.

Intranet

An intranet describes a network that has the same functionality as the Internet for users but lies completely inside the trusted area of a network and is under the security control of the system and network administrators. Typically referred to as campus or corporate networks, intranets are used every day in companies around the world. An intranet allows a developer and a user the full set of protocols—HTTP, FTP, instant messaging, and so on—that is offered on the Internet, but with the added advantage of trust from the network security. Content on intranet web servers is not available over the Internet to untrusted users. This layer of security offers a significant amount of control and regulation, allowing users to fulfill business functionality while ensuring security.

Two methods can be used to make information available to outside users. First, duplicating information onto machines in the DMZ can make it available to other users; proper security checks and controls should be made prior to duplicating the material to ensure that security policies concerning specific data availability are being followed. Second, extranets (discussed in the previous section) can be used to publish material to trusted partners.

EXAM TIP An intranet is a private, internal network that uses common network technologies (such as HTTP, FTP, and so on) to share information and provide resources to organizational users.

Should users inside the intranet require access to information from the Internet, a proxy server can be used to mask the requestor’s location. This helps secure the intranet from outside mapping of its actual topology. All Internet requests go to the proxy server. If a request passes filtering requirements, the proxy server, assuming it is also a cache server, looks in its local cache of previously downloaded web pages. If it finds the page in its cache, it returns the page to the requestor without needing to send the request to the Internet. If the page is not in the cache, the proxy server, acting as a client on behalf of the user, uses one of its own IP addresses to request the page from the Internet. When the page is returned, the proxy server relates it to the original request and forwards it on to the user. This masks the user’s IP address from the Internet. Proxy servers can perform several functions for a firm; for example, they can monitor traffic requests, eliminating improper requests such as inappropriate content for work. They can also act as a cache server, cutting down on outside network requests for the same object. Finally, proxy servers protect the identity of internal IP addresses using NAT, although this function can also be accomplished by a router or firewall that performs NAT.
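The filter, cache, and forward sequence described above can be sketched in a few lines. This is a toy model rather than a real proxy: the `CachingProxy` class, the `fake_origin` function that stands in for the Internet, and the blocked-site rule are all hypothetical, and no actual network traffic is generated.

```python
class CachingProxy:
    def __init__(self, fetch, is_allowed, public_ip):
        self._fetch = fetch            # callable(url, source_ip) -> page
        self._is_allowed = is_allowed  # callable(url) -> bool
        self._public_ip = public_ip
        self._cache = {}

    def request(self, client_ip, url):
        """Serve a page to an internal client, or None if the request is filtered."""
        if not self._is_allowed(url):
            return None
        if url not in self._cache:
            # Cache miss: fetch using the proxy's own address.
            # client_ip is deliberately never sent to the origin.
            self._cache[url] = self._fetch(url, self._public_ip)
        return self._cache[url]


# A stand-in for the Internet that records which source address it saw.
seen_by_origin = []


def fake_origin(url, source_ip):
    seen_by_origin.append(source_ip)
    return f"<html>{url}</html>"


proxy = CachingProxy(
    fetch=fake_origin,
    is_allowed=lambda url: "blocked.example" not in url,
    public_ip="198.51.100.1",
)

first = proxy.request("10.0.0.5", "http://example.com/")
second = proxy.request("10.0.0.9", "http://example.com/")   # served from cache
denied = proxy.request("10.0.0.5", "http://blocked.example/")
```

Two internal clients asked for the same page, yet the origin saw a single request, and it came from the proxy’s address rather than from either client.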

Wireless

Wireless networking is the transmission of packetized data by means of a physical topology that does not use direct physical links. This definition can be narrowed to apply to networks that use radio waves to carry the signals over either public or private bands, instead of using standard network cabling.

The topology of a wireless network is either hub and spoke or mesh. In the hub and spoke model, the wireless access point is the hub and is connected to the wired network. Wireless clients then connect to this access point via wireless, forming the spokes. In most enterprises, multiple wireless access points are deployed, forming an overlapping set of radio signals allowing clients to connect to the stronger signals. With tuning and proper antenna alignment and placement of the access points, administrators can achieve the desired areas of coverage and minimize interference.

The other topology supported by wireless is a mesh topology. In a mesh topology, the wireless units talk directly to each other, without a central access point. This is a form of ad hoc networking (discussed in more detail later in the chapter). A new breed of wireless access points has emerged on the market that combines both of these characteristics. These wireless access points talk to each other in a mesh network method, and once they have established a background network where at least one station is connected to the wired network, wireless clients can connect to any of the access points as if they were normal access points. But instead of the signal going from wireless client to access point to wired network, the signal is carried across the wireless network from access point to access point until it reaches the master device that is wired to the outside network.

Guest

A guest zone is a network segment that is isolated from systems that guests should never have access to. Administrators commonly configure multiple logical wireless networks, including a guest network, on the same hardware, providing separate access to separate resources based on login credentials.

Honeynets

As introduced in Chapter 7, a honeynet is a network designed to look like a corporate network, but is made attractive to attackers. A honeynet is a collection of honeypots, servers that are designed to act like real network servers but possess only fake data. A honeynet looks like the corporate network, but because it is known to be a false copy, all of the traffic is assumed to be illegitimate. This makes it easy to characterize the attacker’s traffic and also to understand where attacks are coming from.
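Because every connection to a honeynet is presumed hostile, characterizing attackers can be as simple as counting who showed up. The following minimal sketch assumes a hypothetical log format of (source address, destination port) pairs; real honeynet tooling records far more detail.

```python
from collections import Counter


def attacker_sources(honeynet_log):
    """Rank source addresses by how many connections they made to the honeynet."""
    return Counter(src for src, _port in honeynet_log).most_common()


# Hypothetical log entries: (source address, destination port).
log = [
    ("203.0.113.5", 22),
    ("203.0.113.5", 3389),
    ("198.51.100.9", 22),
]

ranked = attacker_sources(log)
```

No filtering step is needed to separate legitimate traffic from attacks, which is precisely the advantage the honeynet provides.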

NAT

If you’re thinking that a 32-bit address space that’s chopped up and subnetted isn’t enough to handle all the systems in the world, you’re right. While IPv4 address blocks are assigned to organizations such as companies and universities, there usually aren’t enough addresses to give every system on the planet a unique, Internet-routable IP address. To compensate for this lack of available IP address space, organizations use Network Address Translation (NAT), which translates private (nonroutable) IP addresses into public (routable) IP addresses.

Certain IP address blocks are reserved for “private use,” and not every system in an organization needs a direct, Internet-routable IP address. Actually, for security reasons, it’s much better if most of an organization’s systems are hidden from direct Internet access. Most organizations build their internal networks using the private IP address ranges (such as 10.1.1.X) to prevent outsiders from directly accessing those internal networks. However, in many cases those systems still need to be able to reach the Internet. This is accomplished by using a NAT device (typically a firewall or router) that translates the many internal IP addresses into one of a small number of public IP addresses.
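The blocks reserved for private use are 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16 (defined in RFC 1918). As a small illustration, Python’s standard `ipaddress` module can test whether an address falls in one of them; the helper name `needs_nat` is hypothetical.

```python
import ipaddress

# The three private-use IPv4 blocks from RFC 1918.
PRIVATE_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def needs_nat(address):
    """Return True if the address is private and must be translated to reach the Internet."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in PRIVATE_BLOCKS)


internal_host = needs_nat("10.1.1.25")
public_host = needs_nat("63.69.110.110")
```

An address for which `needs_nat` returns True cannot be routed across the Internet, so a host using it depends on a NAT device for outside connectivity.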

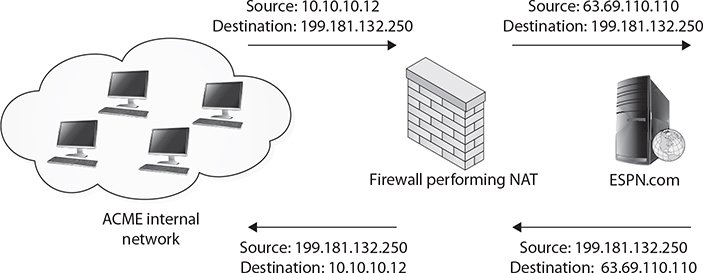

For example, consider a fictitious company, ACME.com. ACME has several thousand internal systems using private IP addresses in the 10.X.X.X range. To allow those IP addresses to communicate with the outside world, ACME leases an Internet connection and a few public IP addresses, and deploys a NAT-capable device. ACME administrators configure all their internal hosts to use the NAT device as their default gateway. When internal hosts need to send packets outside the company, they send them to the NAT device. The NAT device removes the internal source IP address from the outbound packets, replaces it with the NAT device’s public, routable address, and sends the packets on their way. When response packets are received from outside sources, the device performs NAT in reverse, stripping off the external, public IP address from the destination address field and replacing it with the correct internal, private IP address before sending the packets into the private ACME.com network. Figure 11-2 illustrates this NAT process.

Figure 11-2 Logical depiction of NAT

In Figure 11-2, we see an example of NAT being performed. An internal workstation (10.10.10.12) wants to visit a website at 199.181.132.250. When the packet reaches the NAT device, the device translates the 10.10.10.12 source address to the globally routable 63.69.110.110 address, the IP address of the device’s externally visible interface. When the website responds, it responds to the device’s address just as if the NAT device had originally requested the information. The NAT device must then remember which internal workstation requested the information and route the packet to the appropriate destination.

While the underlying concept of NAT remains the same, there are actually several different approaches to implementing NAT. For example:

• Static NAT Maps an internal, private address to an external, public address. The same public address is always used for that private address. This technique is often used when hosting something you wish the public to be able to get to, such as a web server, behind a firewall.

• Dynamic NAT Maps an internal, private IP address to a public IP address selected from a pool of registered (public) IP addresses. This technique is often used when translating addresses for end-user workstations and the NAT device must keep track of internal/external address mappings.

• Port Address Translation (PAT) Allows many different internal, private addresses to share a single external IP address. Devices performing PAT replace the source IP address with the NAT IP address and replace the source port field with a port from an available connection pool. PAT devices keep a translation table to track which internal hosts are using which ports so that subsequent packets can be stamped with the same port number. When response packets are received, the PAT device reverses the process and forwards the packet to the correct internal host. PAT is a very popular NAT technique and in use at many organizations.
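The translation table that a PAT device keeps can be sketched in a few lines. This is a simplified illustration, not how any particular product implements it: the class name `PatDevice`, the starting port 40000, and the sample endpoints are assumptions, and a real device would also key on protocol and destination, expire idle entries, and handle pool exhaustion.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class PatDevice:
    public_ip: str
    next_port: int = 40000  # hypothetical start of the available port pool
    outbound: Dict[Endpoint, int] = field(default_factory=dict)  # internal endpoint -> public port
    inbound: Dict[int, Endpoint] = field(default_factory=dict)   # public port -> internal endpoint

    def translate_outbound(self, src: Endpoint) -> Endpoint:
        """Replace an internal source endpoint with the shared public IP and a pool port."""
        if src not in self.outbound:
            port = self.next_port
            self.next_port += 1
            self.outbound[src] = port
            self.inbound[port] = src
        return (self.public_ip, self.outbound[src])

    def translate_inbound(self, dst_port: int) -> Optional[Endpoint]:
        """Reverse the mapping for a response; None means no internal host owns the port."""
        return self.inbound.get(dst_port)


pat = PatDevice(public_ip="63.69.110.110")
a = pat.translate_outbound(("10.10.10.12", 51515))
b = pat.translate_outbound(("10.10.10.13", 51515))
print(a, b)                         # two hosts share one public IP on different ports
print(pat.translate_inbound(a[1]))  # the response is mapped back to 10.10.10.12
```

Because both internal hosts happen to use the same source port, the public port is the only thing that lets the device tell their responses apart, which is why the table is essential.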

Ad Hoc

An ad hoc network is one where the systems on the network direct packets to and from their source and target locations without using a central router or switch. Windows supports ad hoc networking, although it is best to keep the number of systems relatively small. A common source of ad hoc networks is in the wireless space. From Zigbee devices that form ad hoc networks to Wi-Fi Direct, a wireless ad hoc network is one where the devices talk to each other without an access point or a central switch to manage traffic.

Ad hoc networks have several advantages. Without the need for access points, ad hoc networks provide an easy and cheap means of direct client-to-client communication. Ad hoc wireless networks can be easy to configure and provide a simple way to communicate with nearby devices when running cable is not an option.

Ad hoc networks have disadvantages as well. In enterprise environments, managing an ad hoc network is difficult because there isn’t a central device through which all traffic flows. This means there isn’t a single place to visit for traffic stats, security implementations, and so forth. This also makes monitoring ad hoc networks more difficult.

EXAM TIP This section has introduced a lot of zones and topologies, all of which are testable. You need to understand how to construct a network with each type because the exam may provide block diagrams and ask you to fill in the blanks. Know the differences among the zones/topologies and how each is configured.

Segregation/Segmentation/Isolation

As networks have become more complex, with multiple layers of tiers and interconnections, a problem can arise in connectivity. One of the limitations of the Spanning Tree Protocol (STP) is its inability to manage Layer 2 traffic efficiently across highly complex networks. STP was created to prevent loops in Layer 2 networks and has been improved to the current version of Rapid Spanning Tree Protocol (RSTP). RSTP creates a spanning tree within the network of Layer 2 switches, disabling links that are not part of the spanning tree. RSTP, IEEE 802.1w, provides a more rapid convergence to a new spanning tree solution after topology changes are detected. The problem with the spanning tree algorithms is that the network traffic is interrupted while the system recalculates and reconfigures. These disruptions can cause problems in network efficiencies and have led to a push for flat network designs, which avoid packet-looping issues through an architecture that does not have tiers.

One name associated with flat network topologies is network fabric, a term meant to describe a flat, depthless network. Network fabrics are becoming increasingly popular in data centers, and other areas of high traffic density, as they can offer increased throughput and lower levels of network jitter and other disruptions. While this is good for efficiency of network operations, this “everyone can talk to everyone” idea is problematic with respect to security.

Modern networks, with their increasingly complex connections, result in systems where navigating between nodes can become complex. Just as a DMZ-based architecture allows for differing levels of trust, the isolation of specific pieces of the network using security rules can provide differing trust environments. There are several terms used to describe the resulting architecture, including network segmentation, segregation, isolation, and enclaves. “Enclaves” is the most commonly used term to describe sections of a network that are logically isolated by segmentation at the networking protocol level. The concept of segregating a network into enclaves can create areas of trust where special protections can be employed and traffic from outside the enclave is limited or properly screened before admission.

Enclaves are not diametrically opposed to the concept of a flat network structure; they are just carved-out areas, like gated neighborhoods, where one needs special credentials to enter. A variety of security mechanisms can be employed to create a secure enclave. Layer 3 addressing (subnetting) can be employed, making direct addressability an issue. Firewalls, routers, and application-level proxies can be employed to screen packets before entry or exit from the enclave. Even the people side of the system can be restricted by dedicating one or more systems administrators to manage the systems.

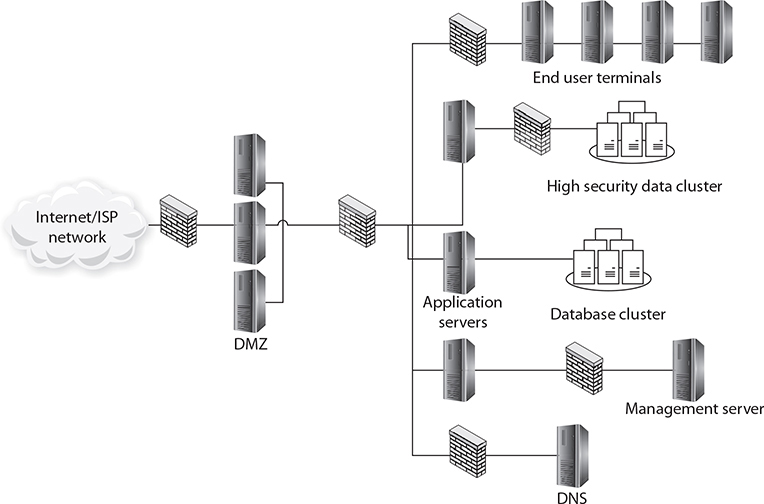

Enclaves are an important tool in modern secure network design. Figure 11-3 shows a network design with a standard two-firewall implementation of a DMZ. On the internal side of the network, multiple firewalls can be seen, carving off individual security enclaves, zones where the same security rules apply. Common enclaves include those for high-security databases, low-security users (call centers), public-facing kiosks, and the management interfaces to servers and network devices. Having each of these in its own zone provides for more security control. On the management layer, using a nonroutable IP address scheme for all of the interfaces prevents them from being directly accessed from the Internet.

Figure 11-3 Secure enclaves

Physical

Physical segregation is where you have separate physical equipment to handle different classes of traffic, including separate switches, separate routers, separate cables. This is the most secure method of separating traffic, but also the most expensive. Organizations commonly have separate physical paths in the outermost sections of the network where connections to the Internet are made. This is mostly for redundancy, but it also acts to separate the traffic.

Contractual obligations sometimes require physical segregation of equipment, such as in the Payment Card Industry Data Security Standards (PCI DSS). Under PCI DSS, if an organization wishes to have a set of assets be considered out of scope with respect to the security audit for card number processing systems, then that set of assets must be physically segregated from the processing systems. Enclaves are an example of physical separation.

Logical (VLAN)

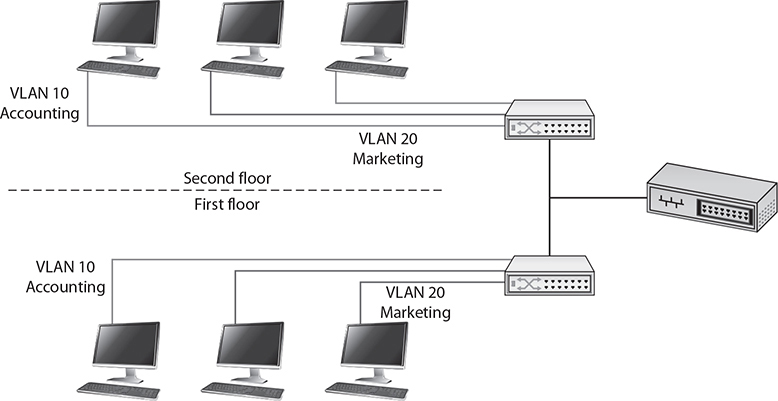

A LAN is a set of devices with similar functionality and similar communication needs, typically co-located and operated off a single switch. This is the lowest level of a network hierarchy and defines the domain for certain protocols at the data link layer for communication. A virtual LAN (VLAN) is a logical implementation of a LAN and allows computers connected to different physical networks to act and communicate as if they were on the same physical network. A VLAN has many of the same characteristic attributes of a LAN and behaves much like a physical LAN but is implemented using switches and software. This very powerful technique allows significant network flexibility, scalability, and performance and allows administrators to perform network reconfigurations without having to physically relocate or recable systems.

Trunking is the process of spanning a single VLAN across multiple switches. A trunk-based connection between switches allows packets from a single VLAN to travel between switches, as shown in Figure 11-4. Two trunks are shown in the figure: VLAN 10 is implemented with one trunk and VLAN 20 is implemented with the other. Hosts on different VLANs cannot communicate using trunks and thus are switched across the switch network. Trunks enable network administrators to set up VLANs across multiple switches with minimal effort. With a combination of trunks and VLANs, network administrators can subnet a network by user functionality without regard to host location on the network or the need to recable machines.

Figure 11-4 VLANs and trunks

VLANs are used to divide a single network into multiple subnets based on functionality. This permits the accounting and marketing departments, for example, to share a switch because of proximity yet still have separate traffic domains. The physical placement of equipment and cables is logically and programmatically separated so that adjacent ports on a switch can reference separate subnets. This prevents unauthorized use of physically close devices through separate subnets that are on the same equipment. VLANs also allow a network administrator to define a VLAN that has no users and map all of the unused ports to this VLAN (some managed switches allow administrators to simply disable unused ports as well). Then, if an unauthorized user should gain access to the equipment, that user will be unable to use unused ports, as those ports will be securely defined to nothing. Both a purpose and a security strength of VLANs is that systems on separate VLANs cannot directly communicate with each other.
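The port-to-VLAN assignment described above can be modeled as a simple lookup. This is a sketch under stated assumptions: the VLAN IDs, the port numbers, and the "parking" VLAN 999 are hypothetical, and the model treats the parking VLAN as carrying no traffic at all, which on real equipment usually also means disabling those ports.

```python
from typing import Dict

UNUSED_VLAN = 999  # hypothetical parking VLAN with no users

# Port number -> VLAN ID on one hypothetical switch shared by two departments.
port_vlan: Dict[int, int] = {1: 10, 2: 10, 3: 20, 4: 20}


def vlan_for(port: int) -> int:
    """Ports without an explicit assignment fall into the unused VLAN."""
    return port_vlan.get(port, UNUSED_VLAN)


def can_switch_directly(port_a: int, port_b: int) -> bool:
    """Frames are switched directly only between ports in the same in-use VLAN."""
    vlan_a, vlan_b = vlan_for(port_a), vlan_for(port_b)
    return vlan_a == vlan_b and vlan_a != UNUSED_VLAN


print(can_switch_directly(1, 2))  # same VLAN
print(can_switch_directly(1, 3))  # adjacent ports, different VLANs
print(can_switch_directly(1, 7))  # port 7 is unassigned, so it is parked
```

Traffic between ports 1 and 3 would have to pass through a router or firewall, which is where security rules between the two departments can be enforced.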

EXAM TIP Physical vs. logical segregation: Physical segregation requires creating two or more physical networks, each with its own servers, switches, and routers. Logical segregation uses one physical network with firewalls and/or routers separating and facilitating communication between the logical networks.

Virtualization

Virtualization offers server isolation logically while still enabling physical hosting. Virtual machines allow you to run multiple servers on a single piece of hardware, enabling the use of more powerful machines in the enterprise at higher rates of utilization. By definition, a virtual machine provides a certain level of isolation from the underlying hardware, operating through a hypervisor layer. If a single piece of hardware has multiple virtual machines running, they are isolated from each other by the hypervisor layer as well. Virtualization is covered in depth in Chapter 15.

Air Gaps

An air gap is the term used to describe a configuration in which no data path exists between two networks; they are not connected in any way, and only a physical gap of air lies between them. Neither physically nor logically is there a direct path between them. It is a conceptual term that refers to isolating a secure network or computer from all other networks (particularly the Internet) and computers by ensuring that it can’t establish external communication, the goal of which is to prevent any possibility of unauthorized access. Air gaps are considered by some to be a security measure, but this topology has several weaknesses. First, sooner or later, some form of data transfer is needed between air-gapped systems. When this happens, administrators transfer files via USB-connected external media, which effectively breaches the air gap.

Air gaps as a security measure fail because people can move files and information between the systems with external devices. Second, because of the false sense of security imparted by the air gap, these transfers are not subject to serious security checks. About the only thing that air gaps can prevent is automated connections, such as reverse shells and other connections used to contact servers outside the network from within.

EXAM TIP Air gaps have to be architected into a system to exist in reality, and then strictly enforced with near-draconian policies. The bottom line is that they are hard to control, and when they break down, the failures can be significant.

Tunneling/VPN

Tunneling/virtual private networking (VPN) technologies allow two networks to connect securely across an unsecure stretch of network. These technologies are achieved with protocols discussed in multiple chapters throughout this book, such as IPsec, L2TP, SSL/TLS, and SSH. At this level, understand that these technologies enable two sites, such as a remote worker’s home network and the corporate network, to communicate across unsecure networks, including the Internet, at a much lower risk profile. The two main uses for tunneling/VPN technologies are site-to-site communications and remote access to a network.

Site-to-Site

Site-to-site communication links are network connections that connect two or more networks across an intermediary network layer. In almost all cases, this intermediary network is the Internet or some other public network. To secure the traffic that is going from site to site, encryption in the form of either a VPN or a tunnel can be employed. In essence, this makes all of the packets between the endpoints in the two networks unreadable to nodes between the two sites.

Remote Access

Remote access is when a user requires access to a network and its resources, but is not able to make a physical connection. Remote access via a tunnel or VPN has the same effect as directly connecting the remote system to the network—it’s as if the remote user just plugged a network cable directly into her machine. So, if you do not trust a machine to be directly connected to your network, you should not use a VPN or tunnel, for if you do, that is what you are logically doing.

EXAM TIP Tunneling/VPN technology is a means of extending a network either to include remote users or to connect two sites. Once the connection is made, it is like the connected machines are locally on the network.

Security Device/Technology Placement

The placement of each security device is related to the purpose of the device and the environment that it requires. (See Chapter 6 for deeper coverage of many of the security devices discussed in this section.) Technology placement has similar restrictions; these devices must be in the flow of the network traffic that they use to function. If an enterprise has two Internet connections, with half of the servers going through one connection and the other half going through the other, then at least two of each technology deployed between the Internet and the enterprise are needed, one for each connection. As we will see with different devices, the placement needs are fairly specific and essential for the devices to function properly.

Sensors

Sensors are devices that capture data and act upon it. There are multiple kinds of sensors and various placement scenarios. Each type of sensor is different, and no single type of sensor can sense everything. Sensors can be divided into two types based on where they are placed: network or host. Network-based sensors can provide coverage across multiple machines, but are limited by traffic engineering to the systems whose packets pass the sensor. They may have issues with encrypted traffic, for if the packet is encrypted and they cannot read it, they are unable to act upon it. Lastly, network-based sensors have limited knowledge of what the hosts they see are doing, so the sensor analysis is limited in its ability to make precise decisions on the content. Host-based sensors provide more specific and accurate information in relation to what the host machine is seeing and doing, but are limited to just that host. A good example of the differences in sensor placement and capabilities is seen in the host-based intrusion detection and network-based intrusion detection systems.

Sensors have several different actions they can take: they can report on what they observe, they can use multiple readings to match a pattern and create an event, and they can act based on prescribed rules. Not all sensors can take all actions, and the application of specific sensors is part of a monitoring and control deployment strategy. This deployment strategy must consider network traffic engineering, the scope of action, and other limitations.

Collectors

Collectors are sensors, or concentrators that combine multiple sensors, that collect data for processing by other systems. Collectors are subject to the same placement rules and limitations as sensors.

Correlation Engines

Correlation engines take sets of data and match the patterns against known patterns. They are a crucial part of a wide range of tools, such as antivirus or intrusion detection devices, to provide a means of matching a collected pattern of data against a set of patterns associated with known issues. Should incoming data match one of the stored profiles, the engine can alert or take other actions. Correlation engines are limited by the strength of the match when you factor in time and other variants that create challenges in a busy traffic environment. The placement of correlation engines is subject to the same issues as all other network placements; the traffic you desire to study must pass the sensor feeding the engine. If the traffic is routed around the sensor, the engine will fail.

Filters

Packet filters process packets at a network interface based on source and destination addresses, ports, or protocols, and either allow passage or block them based on a set of rules. Packet filtering is often part of a firewall program for protecting a local network from unwanted traffic. The filters are local to the traffic being passed, so they must be placed inline with a system’s connection to the network and Internet, or else they will not be able to see traffic to act upon it.
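The rule evaluation described above can be illustrated with a small first-match filter. This is only a sketch: the `Rule` and `Packet` types, the first-match ordering, the default-deny fallback, and the sample networks (taken from the address blocks reserved for documentation) are assumptions made for the example rather than the behavior of any specific firewall product.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    protocol: str
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                      # "allow" or "block"
    src_net: str = "0.0.0.0/0"       # default matches any source
    dst_net: str = "0.0.0.0/0"       # default matches any destination
    protocol: Optional[str] = None   # None matches any protocol
    dst_port: Optional[int] = None   # None matches any port

    def matches(self, pkt: Packet) -> bool:
        return (
            ipaddress.ip_address(pkt.src) in ipaddress.ip_network(self.src_net)
            and ipaddress.ip_address(pkt.dst) in ipaddress.ip_network(self.dst_net)
            and self.protocol in (None, pkt.protocol)
            and self.dst_port in (None, pkt.dst_port)
        )


def filter_packet(rules: List[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; block if nothing matches."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "block"  # assumed default-deny policy


RULES = [
    Rule("block", src_net="203.0.113.0/24"),                             # unwanted source network
    Rule("allow", dst_net="198.51.100.0/24", protocol="tcp", dst_port=443),  # HTTPS to the servers
]

print(filter_packet(RULES, Packet("192.0.2.7", "198.51.100.10", "tcp", 443)))
print(filter_packet(RULES, Packet("203.0.113.5", "198.51.100.10", "tcp", 443)))
print(filter_packet(RULES, Packet("192.0.2.7", "198.51.100.10", "tcp", 22)))
```

The filter can only act on packets handed to `filter_packet`, which mirrors the placement requirement: traffic that bypasses the filter is never evaluated.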

Another example of a filter is a spam filter. Spam filters work like all other filters; they act as a sorter, sending good e-mail to your inbox and spam to the trash. There are many cases where you wish to divide a sample into conforming items, which are permitted, and nonconforming items, which are blocked.

Proxies

Proxies are servers that act as a go-between between clients and other systems; in essence, they are designed to act on the clients’ behalf. See Chapter 6 for in-depth coverage of proxies. This means that the proxies must be in the normal path of network traffic for the system being proxied. As networks become segregated, the proxy placement must be such that it is in the natural flow of the routed traffic for it to intervene on the clients’ behalf.

Firewalls

Firewalls at their base level are policy enforcement engines that determine whether traffic can pass or not based on a set of rules. Regardless of the type of firewall, the placement is easy: firewalls must be inline with the traffic they are regulating. If there are two paths for data to get to a server farm, then either one firewall must have both paths go through it or two firewalls are necessary. Firewalls are commonly placed between network segments, enabling them to examine traffic that enters or leaves a segment. This gives them the ability to isolate a segment while avoiding the cost or overhead of doing this segregation on each and every system. Chapter 6 covers firewalls in detail.

VPN Concentrators

A VPN concentrator takes multiple individual VPN connections and terminates them into a single network point. This single endpoint is what should define where the VPN concentrator is located in the network. The VPN side of the concentrator is typically outward facing, exposed to the Internet. The internal side of the device should terminate in a network segment where you would allow all of the VPN users to connect their machines directly. If you have multiple different types of VPN users with different security profiles and different connection needs, then you might have multiple concentrators with different endpoints that correspond to appropriate locations inside the network.

SSL Accelerators

An SSL accelerator is used to provide SSL/TLS encryption/decryption at scale, removing the load from web servers. Because of this, it needs to be placed between the appropriate web servers and the clients they serve, typically Internet facing.

Load Balancers

Load balancers take incoming traffic from one network location and distribute it across multiple network resources. A load balancer must reside in the traffic path between the requestors of a service and the servers that are providing the service. The role of the load balancer is to manage the workloads on multiple systems by distributing the traffic to and from them. To do this, it must be located within the traffic pathway. For reasons of efficiency, load balancers are typically located close to the systems that they are managing the traffic for.
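To make the distribution idea concrete, here is a minimal sketch of one simple scheduling policy, round robin. The text does not name a specific algorithm, so the choice of round robin, the function names, and the server names are all assumptions for illustration.

```python
import itertools
from collections import Counter
from typing import Iterable, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in a repeating cycle, one per incoming request."""
    return itertools.cycle(servers)


def distribute(requests: Iterable[str], servers: List[str]) -> Counter:
    """Assign each request to the next server and count requests per server."""
    picker = round_robin(servers)
    return Counter(next(picker) for _ in requests)


servers = ["web1", "web2", "web3"]           # hypothetical back-end servers
requests = [f"req-{n}" for n in range(9)]    # nine incoming requests
load = distribute(requests, servers)
print(dict(load))
```

Production load balancers typically add health checks and weighting so that a failed or slow server stops receiving its share of the traffic.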

DDoS Mitigator

DDoS mitigators by nature must exist outside the area that they are protecting. They act as an umbrella, shielding away the unwanted DDoS packets. As with all of the devices in this section, the DDoS mitigator must reside in the network path of the traffic it is shielding the inner part of the networks from. Because the purpose of the DDoS mitigator is to stop unwanted DDoS traffic, it should be positioned at the very edge of the network, before other devices.

Aggregation Switches

An aggregation switch is a switch that provides connectivity for several other switches. Think of it as a one-to-many type of device. It’s the one switch that many other switches connect to. It is placed upstream from the multitude of devices and takes the place of a router or a much larger switch. Assume you have ten users on each of three floors. You can place a 16-port switch on each floor, and then consume three router ports. Now make that ten floors of ten users, and you are consuming ten ports on your router for the ten floors. An aggregate switch will reduce this to one connection, while providing faster switching between users than the router would. These traffic management devices are located based on network layout topologies to limit unnecessary router usage.

Taps and Port Mirror

Most enterprise switches have the ability to copy the activity of one or more ports through a Switch Port Analyzer (SPAN) port, also known as a port mirror. This traffic can then be sent to a device for analysis. Port mirrors can have issues when traffic levels get heavy, as the aggregate SPAN traffic can exceed the throughput of the device. For example, a 16-port switch, with each port running at 100 Mbps, can have aggregate traffic levels of 1.6 Gbps if all circuits are maxed, which gives you a good idea of why this technology can have issues in high-traffic environments.
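The arithmetic behind the oversubscription problem is easy to check: 16 ports at 100 Mbps each add up to 1,600 Mbps, or 1.6 Gbps. The sketch below works through it; the function names and the 1,000 Mbps capacity assumed for the mirror port are hypothetical values chosen for illustration.

```python
def span_load_mbps(port_count: int, port_speed_mbps: int, utilization: float = 1.0) -> float:
    """Aggregate traffic (in Mbps) that a port mirror would have to copy."""
    return port_count * port_speed_mbps * utilization


def is_oversubscribed(mirrored_mbps: float, span_port_mbps: float) -> bool:
    """True when the mirrored traffic exceeds what the SPAN port can carry."""
    return mirrored_mbps > span_port_mbps


worst_case = span_load_mbps(16, 100)          # every port fully loaded
print(worst_case / 1000, "Gbps")
print(is_oversubscribed(worst_case, 1000))    # assumed 1,000 Mbps mirror port
print(is_oversubscribed(span_load_mbps(16, 100, 0.5), 1000))  # half load fits
```

When the mirror port is oversubscribed, the excess packets are simply not delivered to the analysis device, so the monitoring record has gaps exactly when the network is busiest.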

A Test Access Point (TAP) is a passive signal-copying mechanism installed between two points on the network. The TAP can copy all packets it receives, rebuilding a copy of all messages. TAPs provide the one distinct advantage of not being overwhelmed by traffic levels, at least not in the process of data collection. The primary disadvantage is that a TAP is a separate piece of hardware and adds to network costs.

EXAM TIP To work properly, every security device covered in this section must be placed inline with the traffic flow that it is intended to interact with. If there are network paths around the device, it will not perform as designed. Understanding the network architecture is important when placing devices.

SDN

Software-defined networking (SDN) is a relatively new method of managing the network control layer separate from the data layer, and under the control of computer software. This enables network engineers to reconfigure the network by making changes via a software program, without the need for re-cabling. SDN allows for network function deployment via software, so you could program a firewall between two segments by telling the SDN controllers to make the change. They then feed the appropriate information into switches and routers to have the traffic pattern switch, adding the firewall into the system. SDN is relatively new and just beginning to make inroads into local networks, but the power it presents to network engineers is compelling, enabling them to reconfigure networks at the speed of a program executing change files.

Chapter Review

The first half of this chapter presented use cases and purpose for frameworks, best practices, and secure configuration guides. You first became acquainted with industry-standard frameworks and reference architectures, including regulatory, non-regulatory, national, international, and industry-specific frameworks. You then learned about benchmarks and secure configuration guides, starting with platform/vendor-specific guides for web servers, operating systems, application servers, and network infrastructure devices, and then general purpose guides. Next, the chapter presented defense-in-depth/layered security, describing the roles of vendor diversity, control diversity (both technical and administrative), and user training in support of adding depth to your security posture.

The latter half of the chapter presented secure network architecture concepts, beginning with zones and topologies. The topics in this section included the following architectural elements: DMZ, extranet, intranet, wireless, guest, honeynets, NAT, and ad hoc network designs. The concepts of segregation/segmentation/isolation, both physical and logical, were covered. Also covered were virtualization and air gaps. The use of tunneling or VPN to create site-to-site connections or remote access was presented. You also learned about security device and technology placement, including the placement of sensors, collectors, correlation engines, filters, proxies, firewalls, VPN concentrators, SSL accelerators, load balancers, DDoS mitigators, aggregation switches, TAPs, and port mirrors. The chapter wrapped up with a look at software-defined networking, a relatively new network architecture concept.

Questions

To help you prepare further for the CompTIA Security+ exam, and to test your level of preparedness, answer the following questions and then check your answers against the correct answers at the end of the chapter.

1. From the Internet going into the network, which of the following is the first device a packet would encounter (assuming all devices are present)?

A. Firewall

B. Load balancer

C. DMZ

D. DDoS mitigator

2. Tunneling is used to achieve which of the following?

A. Eliminate an air gap

B. Connect users to a honeynet

C. Remote access from users outside the building

D. Intranet connections to the DMZ

3. Connections to third-party content associated with your business, such as a travel agency website for corporate travel, are an example of which of the following?

A. Intranet

B. Extranet

C. Guest network

D. Proxy service

4. Which of the following is not a standard practice to support defense-in-depth?

A. Vendor diversity

B. User diversity

C. Control diversity

D. Redundancy

5. Industry-standard frameworks are useful for which of the following purposes?

A. Aligning with an audit-based standard

B. Aligning IT and security with the enterprise’s business strategy

C. Providing high-level organization over processes

D. Creating diagrams to document system architectures

6. Which of the following represents the greatest risk if improperly configured?

A. Operating system on a server

B. Web server

C. Application server

D. Network infrastructure device

7. What is the primary purpose of a DMZ?

A. Prevent direct access to secure servers from the Internet

B. Provide a place for corporate servers to reside so they can access the Internet

C. Create a safe computing environment next to the Internet

D. Slow down traffic coming and going to the network

8. If you wish to monitor 100 percent of the transmissions from your customer service representatives to the Internet and other internal services, which is the best tool?

A. SPAN port

B. TAP

C. Mirror port

D. Aggregator switches

9. For traffic coming from the Internet into the network, which of the following is the correct order in which devices should receive the traffic?

A. – Firewall – DMZ – SSL accelerator – load balancer – web server

B. – Firewall – DMZ – firewall – load balancer – SSL accelerator – web server

C. – DMZ – firewall – SSL accelerator – load balancer – web server

D. – Firewall – DMZ – firewall – SSL accelerator – load balancer – web server

10. Which type of network enables networking without intervening network devices?

A. Ad hoc

B. Honeynet

C. NAT

D. Intranet

11. Air gaps offer protection from which of the following?

A. Malware

B. Ransomware

C. Reverse shells

D. Pornography or other not safe for work (NSFW) materials

12. For traffic coming from the Internet into the network, which of the following is the correct order in which devices should receive the traffic?

A. – Firewall – DMZ – proxy server – client

B. – Firewall – DMZ – firewall – DDoS mitigator – web server

C. – DMZ – Firewall – load balancer – web server

D. – Firewall – DMZ – firewall – load balancer – database server

13. Your boss asks you to set up a new server. Where can you get the best source of information on configuration for secure operations?

A. User group website for the server

B. Secure configuration guide from the Center for Internet Security

C. Ask the senior admin for his notes from the last install

D. A copy of the configuration files of another server in your enterprise

14. To have the widest effect on security, which of the following controls should be addressed and maintained on a regular basis?

A. User training

B. Vendor diversity

C. Administrative controls

D. Technical controls

15. VLANs provide which of the following?

A. Physical segregation

B. Physical isolation

C. Logical segmentation

D. All of the above

Answers

1. D. When present, the DDoS mitigator is first in the chain of devices to screen incoming traffic. It blocks the DDoS traffic that would otherwise strain the rest of the network.

2. C. Remote access is one of the primary uses of tunneling and VPNs.

3. B. External network connections to sections of a third-party network as a part of your business’s network is an extranet.

4. B. Although diversity among users can have many benefits, defense-in-depth isn’t one of them. All of the other choices are valid components of a defense-in-depth program.

5. B. Industry frameworks provide a method to align IT and security with the enterprise’s business strategy.

6. D. When improperly configured, network infrastructure devices can allow unauthorized access to traffic traversing all devices they carry traffic to and from.

7. A. The primary purpose of a DMZ is to provide separation between the untrusted zone of the Internet and the trusted zone of enterprise systems. It does so by preventing direct access to secure servers from the Internet.

8. B. A Test Access Point (TAP) is required to monitor 100 percent of the transmissions from your customer service representatives to the Internet and other internal services.

9. D. Firewall – DMZ – Firewall – SSL accelerator – load balancer – web server. A is missing the second firewall, B has the load balancer and SSL accelerator in the wrong order, and C is missing the first firewall.

10. A. An ad hoc network is constructed without central networking equipment and supports direct machine-to-machine communications.

11. C. Reverse shells or other items that call out of a network are stopped by the air gap. All of the other problems can be introduced through a physical connection, such as a USB device.

12. D. Firewall – DMZ – firewall – load balancer – database server. A has a missing second firewall, B has the DDoS mitigator in the wrong position, and C is missing the first firewall.

13. B. The Center for Internet Security (CIS) maintains a collection of peer-reviewed, consensus-driven guidance for secure system configuration. All of the other options represent choices with potential errors from unvetted or previous bad choices.

14. A. User training has the widest applicability, because users touch all systems, while the other controls only touch some of them. Users also require maintenance in the form of retraining as they lose their focus on security over time.

15. C. VLANs are logical segmentation devices. They have no effect on the physical separation of traffic.