Robert Hooke (1635–1703)

Although microscopes had been available since about the late 1500s, English scientist Robert Hooke’s use of the compound microscope (a microscope with more than one lens) represents a particularly notable milestone, and his instrument can be considered an important optical and mechanical forerunner of the modern microscope. For an optical microscope with two lenses, the overall magnification is the product of the powers of the ocular (eyepiece lens), usually about 10×, and the objective lens, which is closer to the specimen.
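A trivial but concrete Python check of the product rule; the 40× objective is a hypothetical value chosen only for illustration.

```python
def total_magnification(ocular_power: float, objective_power: float) -> float:
    """Overall magnification of a two-lens compound microscope.

    Following the text, it is the product of the ocular (eyepiece)
    power and the objective power.
    """
    return ocular_power * objective_power

# A typical 10x eyepiece paired with a hypothetical 40x objective.
print(total_magnification(10, 40))  # 400
```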

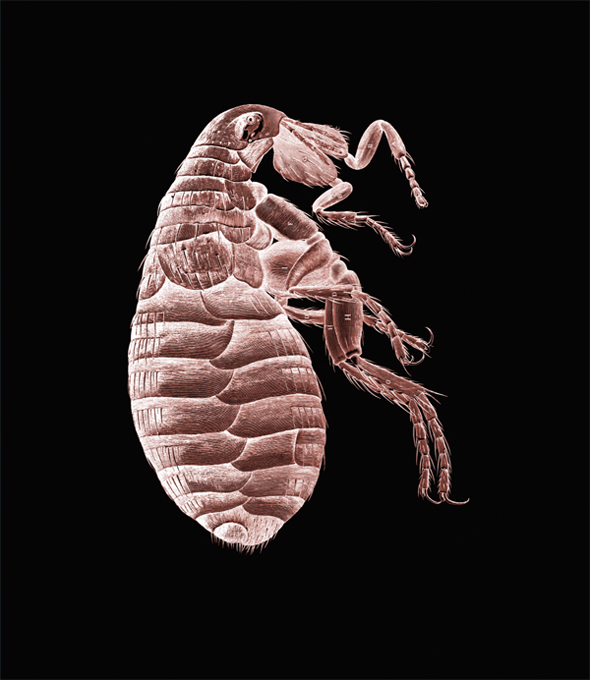

Hooke’s book Micrographia featured breathtaking microscopic observations and biological speculation on specimens that ranged from plants to fleas. The book also discussed planets, the wave theory of light, and the origin of fossils, while stimulating both public and scientific interest in the power of the microscope.

Hooke was the first to discover biological cells, and he coined the word cell to describe the basic units of all living things. He chose the word because the plant cells he observed reminded him of “cellula,” the small rooms in which monks lived. About this magnificent work, the historian of science Richard Westfall writes, “Robert Hooke’s Micrographia remains one of the masterpieces of seventeenth century science, [presenting] a bouquet of observations with courses from the mineral, animal and vegetable kingdoms.”

Hooke was the first person to use a microscope to study fossils, and he observed that the structures of petrified wood and fossil seashells bore a striking similarity to actual wood and the shells of living mollusks. In Micrographia, he compared petrified wood to rotten wood, and concluded that wood could be turned to stone by a gradual process. He also believed that many fossils represented extinct creatures, writing, “There have been many other Species of Creatures in former Ages, of which we can find none at present; and that ’tis not unlikely also but that there may be divers new kinds now, which have not been from the beginning.” More recent advances in microscopes are described in the entry “Seeing the Single Atom.”

SEE ALSO Telescope (1608), Kepler’s “Six-Cornered Snowflake” (1611), Brownian Motion (1827), Seeing the Single Atom (1955).

Flea, from Robert Hooke’s Micrographia, published in 1665.

Guillaume Amontons (1663–1705), Leonardo da Vinci (1452–1519), Charles-Augustin de Coulomb (1736–1806)

Friction is a force that resists the sliding of objects with respect to each other. Although it is responsible for the wearing of parts and the wasting of energy in engines, friction is beneficial in our everyday lives. Imagine a world without friction. How would one walk, drive a car, attach objects with nails and screws, or drill cavities in teeth?

In 1699, French physicist Guillaume Amontons showed that the frictional force between two objects is directly proportional to the applied load (i.e., the force perpendicular to the surfaces in contact), with a constant of proportionality (a frictional coefficient) that is independent of the size of the contact area. These relationships had first been suggested by Leonardo da Vinci and were rediscovered by Amontons. It may seem counterintuitive that the amount of friction is nearly independent of the apparent area of contact. However, if a brick is pushed along the floor, the resisting frictional force is the same whether the brick is sliding on its larger or smaller face.
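In symbols, Amontons’ law says F = μN, where N is the load and μ the frictional coefficient; the contact area does not appear at all. A minimal Python sketch of the brick example, with a hypothetical weight and coefficient:

```python
def friction_force(mu: float, normal_load: float, contact_area: float) -> float:
    """Amontons' law: friction is proportional to the load, F = mu * N.

    contact_area is accepted only to make the point that it never enters
    the formula: the same mu and load give the same force on either face.
    """
    return mu * normal_load

# Hypothetical brick weighing 20 N with a friction coefficient of 0.5.
big_face = friction_force(0.5, 20.0, contact_area=0.02)     # m^2
small_face = friction_force(0.5, 20.0, contact_area=0.005)  # m^2
print(big_face, small_face)  # 10.0 10.0
```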

Several studies were conducted in the early years of the twenty-first century to determine the extent to which Amontons’ Law actually applies to materials at length scales from nanometers to millimeters. One such area is MEMS (micro-electromechanical systems), which includes tiny devices such as those now used in inkjet printers and as accelerometers in car airbag systems. MEMS make use of microfabrication technology to integrate mechanical elements, sensors, and electronics on a silicon substrate. Amontons’ Law, which is often useful when studying traditional machines and moving parts, may not be applicable to machines the size of a pinhead.

In 1779, French physicist Charles-Augustin de Coulomb began his research into friction and found that for two surfaces in relative motion, the kinetic friction is almost independent of the relative speed of the surfaces. For an object at rest, the maximum static frictional force is usually greater than the kinetic frictional force that resists the same object once it is moving.
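The distinction between static and kinetic friction can be expressed as a simple threshold rule. In this Python sketch the coefficients are hypothetical, with the static coefficient larger than the kinetic one to match the observation above, and kinetic friction is taken to be independent of speed, as Coulomb found:

```python
def block_state(applied: float, normal_load: float,
                mu_s: float, mu_k: float) -> tuple[str, float]:
    """Return ("stuck", friction) or ("sliding", friction) for a pushed block.

    Static friction matches the applied force up to mu_s * N. Beyond that
    threshold the block slides, and kinetic friction mu_k * N resists it.
    """
    max_static = mu_s * normal_load
    if applied <= max_static:
        return "stuck", applied
    return "sliding", mu_k * normal_load

# Hypothetical block: 100 N load, mu_s = 0.5, mu_k = 0.25.
print(block_state(40.0, 100.0, 0.5, 0.25))  # ('stuck', 40.0)
print(block_state(60.0, 100.0, 0.5, 0.25))  # ('sliding', 25.0)
```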

SEE ALSO Acceleration of Falling Objects (1638), Tautochrone Ramp (1673), Ice Slipperiness (1850), Stokes’ Law of Viscosity (1851).

Devices such as wheels and ball bearings replace sliding friction with rolling friction, which is generally much weaker, thus creating less resistance to motion.

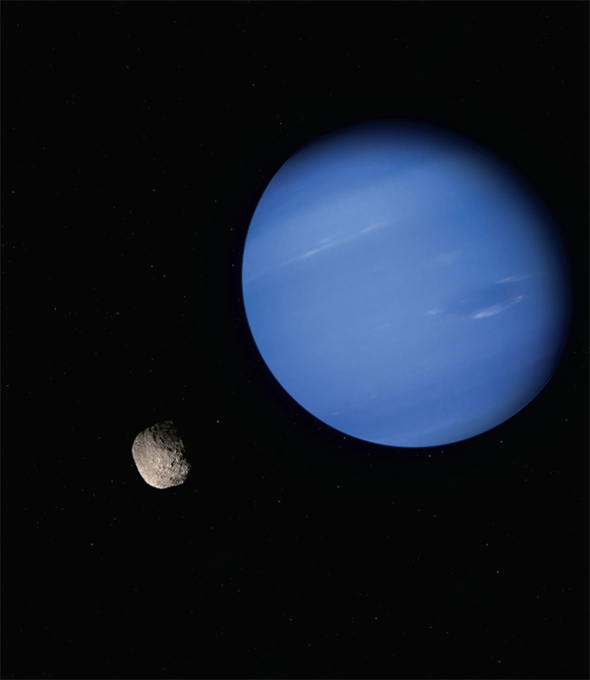

Giovanni Domenico Cassini (1625–1712)

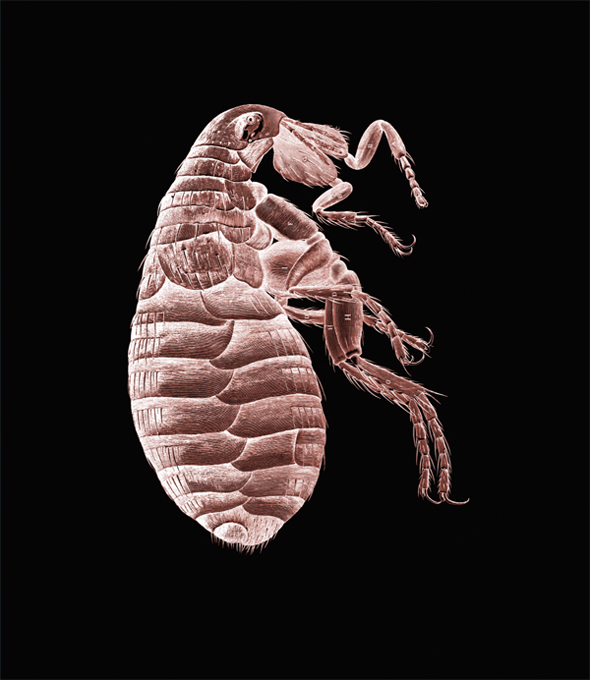

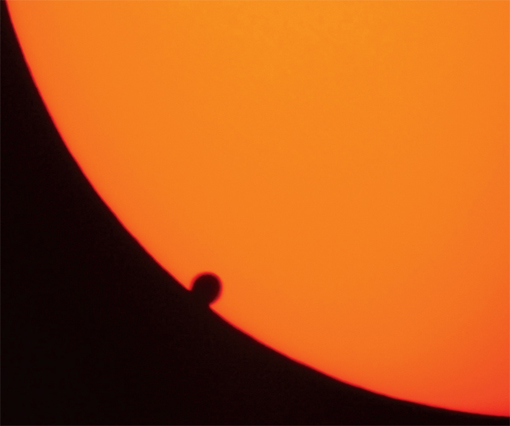

Before astronomer Giovanni Cassini’s 1672 experiment to determine the size of the Solar System, there were some rather outlandish theories floating about. Aristarchus of Samos in 280 B.C. had said that the Sun was a mere 20 times farther from the Earth than the Moon. Some scientists around Cassini’s time suggested that stars were only a few million miles away. While in Paris, Cassini sent astronomer Jean Richer to the city of Cayenne on the northeast coast of South America. Cassini and Richer made simultaneous measurements of the angular position of Mars against the distant stars. Using simple geometrical methods (see the entry “Stellar Parallax”) and knowing the distance between Paris and Cayenne, Cassini determined the distance between the Earth and Mars. Once this distance was obtained, he employed Kepler’s Third Law to compute the distance between Mars and the Sun (see “Kepler’s Laws of Planetary Motion”). Using both pieces of information, Cassini determined that the distance between the Earth and the Sun was about 87 million miles (140 million kilometers), which is only seven percent less than the actual average distance. Author Kendall Haven writes, “Cassini’s discoveries of distance meant that the universe was millions of times bigger than anyone had dreamed.” Measuring the Sun directly would have been difficult, and Cassini could not have attempted it without risking his eyesight.
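The chain of reasoning above (triangulate Mars, then scale the Solar System with Kepler’s Third Law) can be sketched in a few lines of Python. This is a simplified model under stated assumptions: circular, coplanar orbits, Mars at opposition, and a small-angle parallax. The baseline and angle below are hypothetical numbers, not Cassini’s and Richer’s actual measurements.

```python
import math

def parallax_distance(baseline_km: float, parallax_rad: float) -> float:
    """Small-angle triangulation: distance ~ baseline / parallax angle (radians).

    Assumes the effective baseline is perpendicular to the line of sight.
    """
    return baseline_km / parallax_rad

def au_from_mars_opposition(earth_mars_km: float, mars_period_yr: float) -> float:
    """Convert one Earth-Mars distance into the Earth-Sun distance.

    Kepler's Third Law (T^2 proportional to a^3, with Earth at 1 AU and 1 yr)
    gives Mars's orbital radius in AU. Assuming circular, coplanar orbits and
    Mars at opposition, the Earth-Mars gap is (a_mars - 1) AU.
    """
    a_mars_au = mars_period_yr ** (2.0 / 3.0)
    return earth_mars_km / (a_mars_au - 1.0)

# Hypothetical inputs, not Cassini's data: a 7,000 km effective baseline
# and a 20-arcsecond parallax; Mars's period is about 1.88 years.
d_mars = parallax_distance(7_000.0, math.radians(20.0 / 3600.0))
au_km = au_from_mars_opposition(d_mars, 1.88)
print(f"Earth-Mars ~ {d_mars:.3g} km; Earth-Sun ~ {au_km:.3g} km")
```

With these invented inputs the result comes out near 140 million kilometers, the same order as Cassini’s figure; the point is the two-step method, not the specific numbers.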

Cassini became famous for many other discoveries. For example, he discovered four moons of Saturn, as well as the major gap in the rings of Saturn, which today is called the Cassini Division in his honor. Interestingly, he was among the earliest scientists to correctly suspect that light traveled at a finite speed, but he did not publish his evidence for this theory because, according to Kendall Haven, “He was a deeply religious man and believed that light was of God. Light therefore had to be perfect and infinite, and not limited by a finite speed of travel.”

Since the time of Cassini, our concept of the Solar System has grown, with the discovery, for example, of Uranus (1781), Neptune (1846), Pluto (1930), and Eris (2005).

SEE ALSO Eratosthenes Measures the Earth (240 B.C.), Sun-Centered Universe (1543), Mysterium Cosmographicum (1596), Kepler’s Laws of Planetary Motion (1609), Discovery of Saturn’s Rings (1610), Bode’s Law of Planetary Distances (1766), Stellar Parallax (1838), Michelson-Morley Experiment (1887), Dyson Sphere (1960).

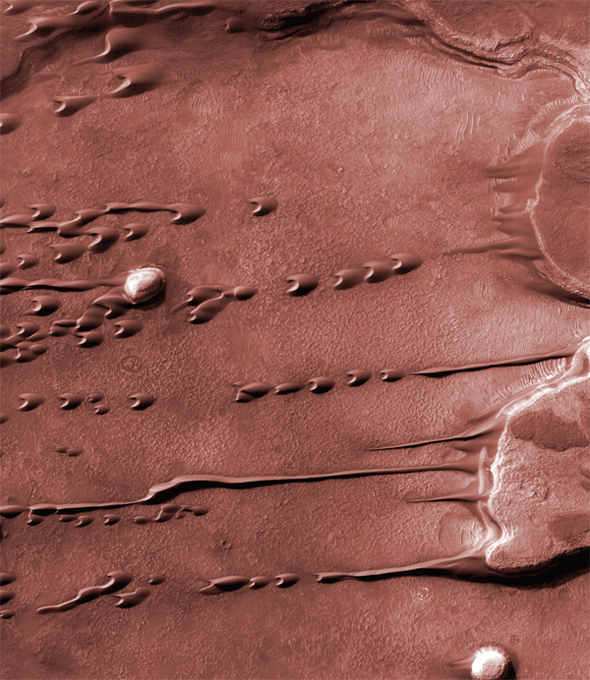

Cassini calculated the distance from Earth to Mars, and then the distance from Earth to the Sun. Shown here is a size comparison between Mars and Earth; Mars has approximately half the radius of Earth.

Isaac Newton (1642–1727)

“Our modern understanding of light and color begins with Isaac Newton,” writes educator Michael Douma, “and a series of experiments that he publishes in 1672. Newton is the first to understand the rainbow—he refracts white light with a prism, resolving it into its component colors: red, orange, yellow, green, blue and violet.”

When Newton was experimenting with light and colors in the late 1660s, many contemporaries thought that colors were a mixture of light and darkness, and that prisms colored light. Despite the prevailing view, he became convinced that white light was not the single entity that Aristotle believed it to be but rather a mixture of many different rays corresponding to different colors. The English physicist Robert Hooke criticized Newton’s work on the characteristics of light, which filled Newton with a rage that seemed out of proportion to the comments Hooke had made. As a result, Newton withheld publication of his monumental book Opticks until after Hooke’s death in 1703, so that Newton could have the last word on the subject of light and could avoid all arguments with Hooke. In 1704, Newton’s Opticks was finally published. In this work, Newton further discusses his investigations of colors and the diffraction of light.

Newton used triangular glass prisms in his experiments. Light entering one side of the prism is refracted by the glass and separated into various colors. Prisms work because light changes speed when it moves from air into the glass of the prism, and the size of that change, and therefore the amount of bending, depends on the wavelength of the light. Once the colors were separated, Newton used a second prism to refract them back together to form white light again. This experiment demonstrated that the prism was not simply adding colors to the light, as many believed. Newton also passed only the red color from one prism through a second prism and found the redness unchanged. This was further evidence that the prism did not create colors, but merely separated colors present in the original light beam.
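To see why different colors leave the glass at different angles, here is a small Python sketch combining Snell’s law with Cauchy’s empirical dispersion formula, n = A + B/λ². Cauchy’s formula is a technique swapped in for illustration (it postdates Newton), and the coefficients are approximate values for a common crown glass, assumed here rather than taken from the text.

```python
import math

# Approximate Cauchy coefficients for a common crown glass (BK7).
# Treat these as illustrative values, not a precise material specification.
CAUCHY_A = 1.5046
CAUCHY_B_UM2 = 0.00420  # micrometres squared

def refractive_index(wavelength_um: float) -> float:
    """Cauchy's empirical dispersion formula: n = A + B / wavelength^2."""
    return CAUCHY_A + CAUCHY_B_UM2 / wavelength_um ** 2

def refraction_angle_deg(incidence_deg: float, n: float) -> float:
    """Snell's law for light passing from air (n ~ 1) into glass of index n."""
    s = math.sin(math.radians(incidence_deg)) / n
    return math.degrees(math.asin(s))

# Red and violet light striking the glass at 45 degrees from the normal.
for name, wavelength in [("red", 0.70), ("violet", 0.40)]:
    n = refractive_index(wavelength)
    angle = refraction_angle_deg(45.0, n)
    print(f"{name:6s} n = {n:.4f}, refracted at {angle:.2f} deg from the normal")
```

Violet light sees a slightly higher index, so it is bent closer to the normal than red; across the two faces of a prism, that small difference spreads white light into a spectrum.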

SEE ALSO Explaining the Rainbow (1304), Snell’s Law of Refraction (1621), Brewster’s Optics (1815), Electromagnetic Spectrum (1864), Metamaterials (1967).

Newton used prisms to show that white light was not the single entity that Aristotle believed it to be, but rather was a mixture of many different rays corresponding to different colors.

Christiaan Huygens (1629–1695)

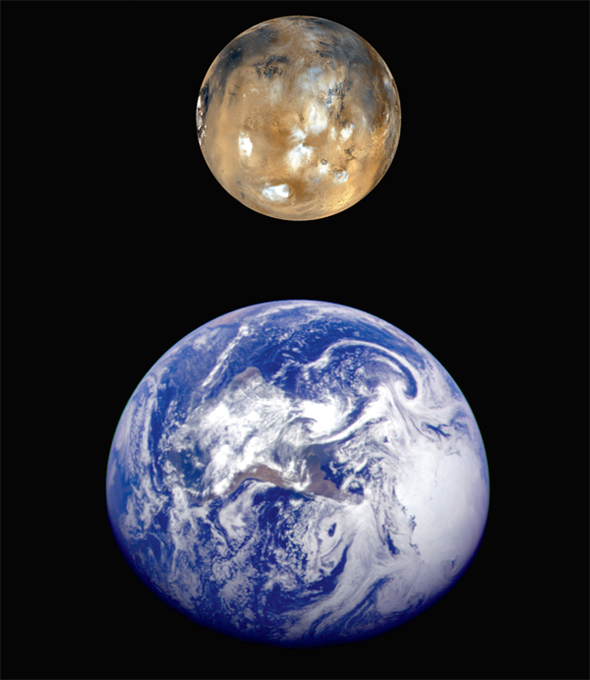

Years ago, I wrote a tall tale of seven skateboarders who find a seemingly magical mountain road. Wherever on the road the skateboarders start their downhill coasting, they always reach the bottom in precisely the same amount of time. How could this be? In the 1600s, mathematicians and physicists sought a curve that specified the shape of a special kind of ramp or road. On this special ramp, objects must slide down to the very bottom in the same amount of time, regardless of the starting position. The objects are accelerated by gravity, and the ramp is considered to have no friction.

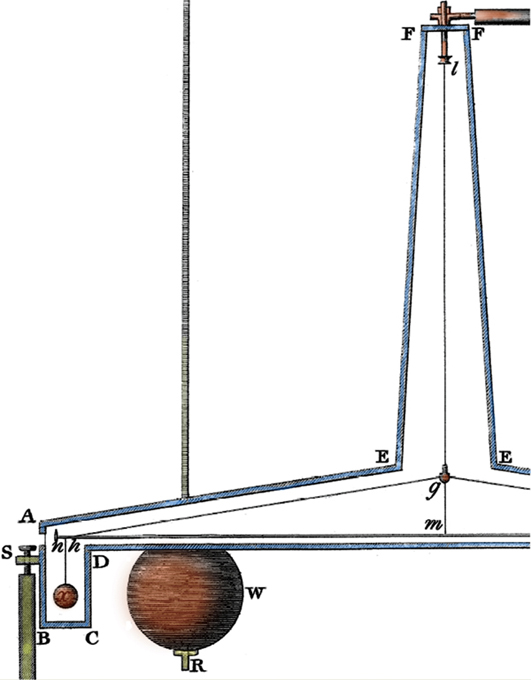

Dutch mathematician, astronomer, and physicist Christiaan Huygens discovered a solution in 1673 and published it in his Horologium Oscillatorium (The Pendulum Clock). Technically speaking, the tautochrone is a cycloid, that is, a curve defined by the path of a point on the edge of a circle as the circle rolls along a straight line. The same cycloid is also the brachistochrone, the curve along which a frictionless object slides from one point to another in the least time.
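Huygens established the equal-time property geometrically. As a numerical check rather than a proof, the Python sketch below releases a frictionless bead from rest at several points on a cycloid whose generating circle has radius r, and integrates its motion with a classical fourth-order Runge-Kutta step. The values r = 1 m and g = 9.81 m/s², and the time step, are assumptions for the example; the known descent time for this curve is π√(r/g).

```python
import math

def descent_time(theta0: float, r: float = 1.0, g: float = 9.81, dt: float = 1e-4) -> float:
    """Seconds for a frictionless bead, released at rest, to reach the bottom.

    The ramp is the cycloid x = r(theta - sin theta), y = r(1 - cos theta),
    with y measured downward, so the lowest point is at theta = pi.
    Energy conservation, with ds/dtheta = 2r sin(theta/2), gives the
    angular acceleration computed in accel() below.
    """
    if not 0.0 < theta0 < math.pi:
        raise ValueError("theta0 must lie strictly between 0 and pi")

    def accel(theta: float, omega: float) -> float:
        half = theta / 2.0
        return math.cos(half) * (g - r * omega ** 2) / (2.0 * r * math.sin(half))

    theta, omega, t = theta0, 0.0, 0.0
    while True:
        # One classical fourth-order Runge-Kutta step for (theta, omega).
        k1t, k1w = omega, accel(theta, omega)
        k2t = omega + 0.5 * dt * k1w
        k2w = accel(theta + 0.5 * dt * k1t, k2t)
        k3t = omega + 0.5 * dt * k2w
        k3w = accel(theta + 0.5 * dt * k2t, k3t)
        k4t = omega + dt * k3w
        k4w = accel(theta + dt * k3t, k4t)
        new_theta = theta + dt * (k1t + 2 * k2t + 2 * k3t + k4t) / 6.0
        new_omega = omega + dt * (k1w + 2 * k2w + 2 * k3w + k4w) / 6.0
        if new_theta >= math.pi:
            # Interpolate linearly to estimate when the bead passed the bottom.
            return t + dt * (math.pi - theta) / (new_theta - theta)
        theta, omega, t = new_theta, new_omega, t + dt

expected = math.pi * math.sqrt(1.0 / 9.81)  # pi * sqrt(r / g)
for start in (0.5, 1.5, 2.5):
    print(f"start at theta = {start}: {descent_time(start):.4f} s (expected {expected:.4f} s)")
```

Each starting point should print essentially the same time, about one second for these parameters, even though the beads start at very different heights.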

Huygens attempted to use his discovery to design a more accurate pendulum clock. The clock made use of inverted cycloid arcs near the pendulum’s pivot; as the string wrapped against these arcs, the pendulum bob was forced to follow the tautochrone curve, no matter where the pendulum started swinging. (Alas, the friction caused by the bending of the string along the arcs introduced more error than it corrected.)

The special property of the tautochrone is mentioned in Moby Dick in a discussion on a try-pot, a bowl used for rendering blubber to produce oil: “[The try-pot] is also a place for profound mathematical meditation. It was in the left-hand try-pot of the Pequod, with the soapstone diligently circling round me, that I was first indirectly struck by the remarkable fact, that in geometry all bodies gliding along a cycloid, my soapstone, for example, will descend from any point in precisely the same time.”

Christiaan Huygens, painted by Caspar Netscher (1639–1684).

SEE ALSO Acceleration of Falling Objects (1638), Clothoid Loop (1901).

Under the influence of gravity, these billiard balls roll along the tautochrone ramp starting from different positions, yet the balls will arrive at the candle at the same time. The balls are placed on the ramp, one at a time.

Newton’s Laws of Motion and Gravitation

Isaac Newton (1642–1727)

“God created everything by number, weight, and measure,” wrote Isaac Newton, the English mathematician, physicist, and astronomer who invented calculus, proved that white light was a mixture of colors, explained the rainbow, built the first reflecting telescope, discovered the binomial theorem, introduced polar coordinates, and showed that the force causing objects to fall is the same kind of force that drives planetary motions and produces tides.

Newton’s Laws of Motion concern relations between forces acting on objects and the motion of these objects. His Law of Universal Gravitation states that objects attract one another with a force that varies as the product of the masses of the objects and inversely as the square of the distance between the objects. Newton’s First Law of Motion (Law of Inertia) states that bodies do not alter their motions unless forces are applied to them. A body at rest stays at rest. A moving body continues to travel with the same speed and direction unless acted upon by a net force. According to Newton’s Second Law of Motion, when a net force acts upon an object, the rate at which the momentum (mass × velocity) changes is proportional to the force applied. According to Newton’s Third Law of Motion, whenever one body exerts a force on a second body, the second body exerts a force on the first body that is equal in magnitude and opposite in direction. For example, the downward force of a spoon on the table is equal to the upward force of the table on the spoon.
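As a worked illustration of the law of gravitation together with the Second and Third Laws, the Python sketch below computes the pull between the Earth and the Moon. The masses and distance are rounded textbook values supplied here as assumptions, and the Second Law is used in its constant-mass form, a = F/m.

```python
G = 6.674e-11  # gravitational constant, N m^2 / kg^2

def gravitational_force(m1: float, m2: float, r: float) -> float:
    """Newton's Law of Universal Gravitation: F = G * m1 * m2 / r^2."""
    return G * m1 * m2 / r ** 2

# Approximate Earth and Moon masses (kg) and mean separation (m).
M_EARTH, M_MOON, R_EARTH_MOON = 5.972e24, 7.342e22, 3.844e8

force = gravitational_force(M_EARTH, M_MOON, R_EARTH_MOON)
# Third Law: the Earth pulls the Moon exactly as hard as the Moon pulls the Earth.
# Second Law (constant mass): a = F / m, so the lighter Moon accelerates far more.
a_earth = force / M_EARTH
a_moon = force / M_MOON
print(f"F = {force:.3e} N, a_earth = {a_earth:.2e} m/s^2, a_moon = {a_moon:.2e} m/s^2")
```

The force comes out at roughly 2 × 10²⁰ newtons; doubling the distance would cut it to one quarter, as the inverse-square law requires.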

Throughout his life, Newton is believed to have had bouts of manic depression. He had always hated his mother and stepfather, and as a teenager threatened to burn them alive in their house. Newton was also author of treatises on biblical subjects, including biblical prophecies. Few are aware that he devoted more time to the study of the Bible, theology, and alchemy than to science—and wrote more on religion than he did on natural science. Regardless, the English mathematician and physicist may well be the most influential scientist of all time.

SEE ALSO Kepler’s Laws of Planetary Motion (1609), Acceleration of Falling Objects (1638), Conservation of Momentum (1644), Newton’s Prism (1672), Newton as Inspiration (1687), Clothoid Loop (1901), General Theory of Relativity (1915), Newton’s Cradle (1967).

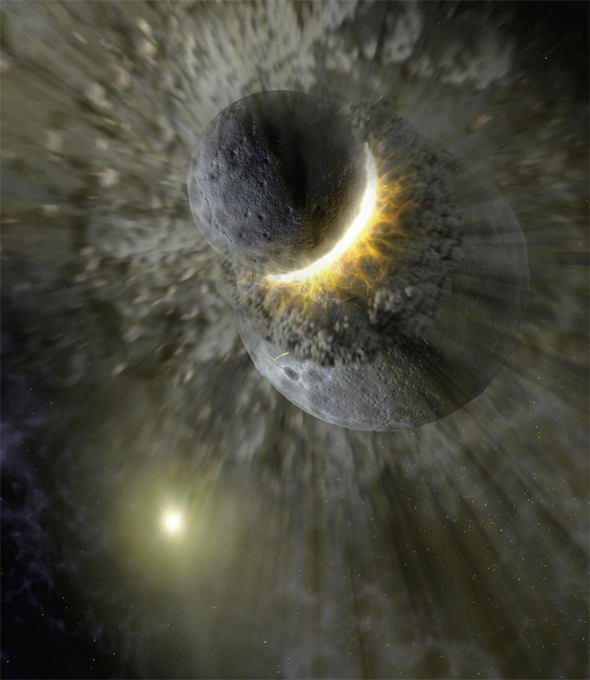

Gravity affects the motions of bodies in outer space. Shown here is an artistic depiction of a massive collision of objects, perhaps as large as Pluto, that created the dust ring around the nearby star Vega.

Isaac Newton (1642–1727)

The chemist William H. Cropper writes, “Newton was the greatest creative genius that physics has ever seen. None of the other candidates for the superlative (Einstein, Maxwell, Boltzmann, Gibbs, and Feynman) has matched Newton’s combined achievements as theoretician, experimentalist, and mathematician…. If you were to become a time traveler and meet Newton on a trip back to the seventeenth century, you might find him something like the performer who first exasperates everyone in sight and then goes on stage and sings like an angel….”

Perhaps more than any other scientist, Newton inspired the scientists who followed him with the idea that the universe could be understood in terms of mathematics. Journalist James Gleick writes, “Isaac Newton was born into a world of darkness, obscurity, and magic… veered at least once to the brink of madness… and yet discovered more of the essential core of human knowledge than anyone before or after. He was chief architect of the modern world…. He made knowledge a thing of substance: quantitative and exact. He established principles, and they are called his laws.”

Authors Richard Koch and Chris Smith note, “Some time between the 13th and 15th centuries, Europe pulled well ahead of the rest of the world in science and technology, a lead consolidated in the following 200 years. Then in 1687, Isaac Newton—foreshadowed by Copernicus, Kepler, and others—had his glorious insight that the universe is governed by a few physical, mechanical, and mathematical laws. This instilled tremendous confidence that everything made sense, everything fitted together, and everything could be improved by science.”

Inspired by Newton, astrophysicist Stephen Hawking writes, “I do not agree with the view that the universe is a mystery…. This view does not do justice to the scientific revolution that was started almost four hundred years ago by Galileo and carried on by Newton…. We now have mathematical laws that govern everything we normally experience.”

SEE ALSO Newton’s Laws of Motion and Gravitation (1687), Einstein as Inspiration (1921), Stephen Hawking on Star Trek (1993).

Photograph of Newton’s birthplace—Woolsthorpe Manor, England—along with an ancient apple tree. Newton performed many famous experiments on light and optics here. According to legend, Newton saw a falling apple here, which partly inspired his law of gravitation.

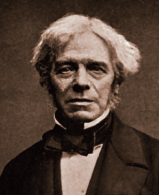

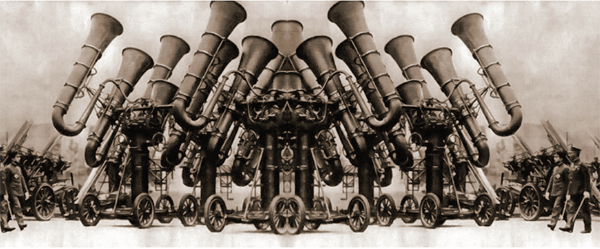

John Shore (c. 1662–1752), Hermann von Helmholtz (1821–1894), Jules Antoine Lissajous (1822–1880), Rudolph Koenig (1832–1901)

Tuning forks—those Y-shaped metal devices that create a pure tone of constant frequency when struck—have played important roles in physics, medicine, art, and even literature. My favorite appearance in a novel occurs in The Great Gatsby, where Gatsby “knew that when he kissed this girl… his mind would never romp again like the mind of God. So he waited, listening for a moment longer to the tuning-fork that had been struck upon a star. Then he kissed her. At his lips’ touch she blossomed for him like a flower….”

The tuning fork was invented in 1711 by British musician John Shore. Its pure sinusoidal acoustic waveform makes it convenient for tuning musical instruments. The two prongs vibrate toward and away from one another, while the handle vibrates up and down. The handle motion is small, which means that the tuning fork can be held without significantly damping the sound. However, the handle can be used to amplify the sound by placing it in contact with a resonator, such as a hollow box. Simple formulas exist for computing the tuning-fork frequency based on parameters such as the density of the fork’s material, the radius and length of the prongs, and the Young’s modulus of the material, which is a measure of its stiffness.
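The text does not say which formula it has in mind. One common approximation, assumed here, treats each prong as a uniform cantilever beam clamped at the handle (Euler–Bernoulli beam theory). For a cylindrical prong of radius a and length L, that model gives f ≈ (1.875²/(2πL²))·√(E·a²/(4ρ)). The steel properties and prong dimensions below are illustrative guesses, not measurements of a real fork.

```python
import math

# First-mode root of the clamped-free (cantilever) beam equation.
BETA1_L = 1.875104

def prong_frequency(length_m: float, radius_m: float,
                    youngs_pa: float, density: float) -> float:
    """Fundamental frequency of one cylindrical prong modelled as a cantilever.

    f = (beta1 L)^2 / (2 pi L^2) * sqrt(E I / (rho A)); for a circular
    cross-section, the ratio I / A equals radius^2 / 4.
    """
    inertia_over_area = radius_m ** 2 / 4.0
    stiffness_term = math.sqrt(youngs_pa * inertia_over_area / density)
    return BETA1_L ** 2 / (2.0 * math.pi * length_m ** 2) * stiffness_term

# Hypothetical steel prong: 10 cm long, 3 mm radius, E ~ 200 GPa, rho ~ 7850 kg/m^3.
f = prong_frequency(0.10, 0.003, 200e9, 7850.0)
print(f"estimated fundamental: {f:.0f} Hz")
```

With these made-up dimensions the model lands in the low hundreds of hertz, not far from concert pitch. The frequency grows with prong radius and stiffness, but falls with the square of the prong length.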

In the 1850s, the mathematician Jules Lissajous studied waves produced by a tuning fork in contact with water by observing the ripples. He also obtained intricate Lissajous figures by successively reflecting light from one mirror attached to a vibrating tuning fork onto another mirror attached to a perpendicular vibrating tuning fork, then onto a wall. Around 1860, physicists Hermann von Helmholtz and Rudolph Koenig devised an electromagnetically driven tuning fork. In modern times, the tuning fork has been used by police departments to calibrate radar instruments for traffic speed control.
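An idealized model of Lissajous’s mirror setup treats each fork as deflecting the light spot sinusoidally, one horizontally and the other vertically. The Python sketch below traces that curve; the fork frequencies and phase are hypothetical, and real forks and mirrors add damping that this model ignores. For whole-number frequencies, the pattern closes after 1/gcd(f_x, f_y) seconds.

```python
import math

def lissajous_points(f_x: int, f_y: int, phase: float, samples: int = 1000):
    """Points traced by two perpendicular sinusoidal vibrations.

    x = sin(2 pi f_x t + phase), y = sin(2 pi f_y t), sampled over one full
    repeat of the figure, which lasts 1 / gcd(f_x, f_y) seconds.
    """
    period = 1.0 / math.gcd(f_x, f_y)
    points = []
    for i in range(samples + 1):
        t = period * i / samples
        points.append((math.sin(2 * math.pi * f_x * t + phase),
                       math.sin(2 * math.pi * f_y * t)))
    return points, period

# Two hypothetical forks at 440 Hz and 660 Hz (a 2:3 frequency ratio).
points, period = lissajous_points(440, 660, phase=0.5)
print(f"figure repeats every {period * 1000:.3f} ms; first point {points[0]}")
```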

In medicine, tuning forks can be used to assess a patient’s hearing and sense of vibration on the skin, as well as to help identify bone fractures, which sometimes diminish the sound produced by a vibrating tuning fork when it is applied to the body near the injury and monitored with a stethoscope.

SEE ALSO Stethoscope (1816), Doppler Effect (1842), War Tubas (1880).

Tuning forks have played important roles in physics, music, medicine, and art.

Isaac Newton (1642–1727)

Shoot an arrow straight up into the air, and it eventually comes down. Pull back the bow even farther, and the arrow takes longer to fall. The launch velocity at which the arrow would never return to the Earth is the escape velocity, $v_e$, and it can be computed with a simple formula: $v_e = \sqrt{2GM/r}$, where G is the gravitational constant, and r is the distance of the bow and arrow from the center of the Earth, which has a mass of M. If we neglect air resistance and other forces and launch the arrow with some vertical component (along a radial line from the center of the Earth), then $v_e$ = 6.96 miles per second (11.2 kilometers/second). This is surely one fast hypothetical arrow, which would have to be released at 34 times the speed of sound!

Notice that the mass of the projectile (e.g., whether it be an arrow or an elephant) does not affect its escape velocity, although it does affect the energy required to make the object escape. The formula for $v_e$ assumes a uniform spherical planet and a projectile mass that is much less than the planet’s mass. Also, $v_e$ relative to the Earth’s surface is affected by the rotation of the Earth. For example, an arrow launched eastward from the Earth’s equator needs a speed of only about 6.6 miles/second (10.7 kilometers/second) relative to the Earth.
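The formula and the rotation correction can be checked numerically. This Python sketch assumes rounded values for G, the Earth’s mass, its mean radius, and an equatorial rotation speed of about 465 m/s, and, like the text, it ignores air resistance.

```python
import math

G = 6.674e-11       # gravitational constant, N m^2 / kg^2
M_EARTH = 5.972e24  # kg
R_EARTH = 6.371e6   # mean radius, m

def escape_velocity(mass_kg: float, radius_m: float) -> float:
    """v_e = sqrt(2 G M / r); the projectile's mass does not appear."""
    return math.sqrt(2.0 * G * mass_kg / radius_m)

def escape_energy(projectile_kg: float, v_e: float) -> float:
    """Launch kinetic energy (1/2) m v_e^2, which does grow with the mass."""
    return 0.5 * projectile_kg * v_e ** 2

v_e = escape_velocity(M_EARTH, R_EARTH)
print(f"v_e ~ {v_e / 1000:.1f} km/s")  # about 11.2 km/s

# For a launch aimed due east at the equator, the Earth's rotation already
# supplies roughly 465 m/s (approximate), so less ground-relative speed is needed.
print(f"eastward at the equator: ~{(v_e - 465.0) / 1000:.1f} km/s")  # about 10.7 km/s
```

An elephant and an arrow need the same speed, but the elephant needs vastly more launch energy, exactly as the paragraph above says.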

Note that the $v_e$ formula applies to a “one-time” vertical component of velocity for the projectile. An actual rocket ship does not have to achieve this speed because it may continue to fire its engines as it travels.

This entry is dated to 1728, the publication date for Isaac Newton’s A Treatise of the System of the World, in which he contemplates firing a cannonball at different high speeds and considers ball trajectories with respect to the Earth. The escape velocity formula may be derived in many ways, including from Newton’s Law of Universal Gravitation (1687), which states that objects attract one another with a force that varies as the product of the masses of the objects and inversely as the square of the distance between the objects.
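One of those routes, sketched here under the usual idealizations (no air resistance, a uniform spherical Earth), uses energy conservation. The inverse-square law gives a gravitational potential energy of −GMm/r, and a projectile of mass m just barely escapes when its total energy is zero:

```latex
\frac{1}{2} m v_e^2 - \frac{G M m}{r} = 0
\quad\Longrightarrow\quad
v_e = \sqrt{\frac{2 G M}{r}}
```

The projectile mass m cancels from both terms, which is why an arrow and an elephant share the same escape velocity.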

SEE ALSO Tautochrone Ramp (1673), Newton’s Laws of Motion and Gravitation (1687), Black Holes (1783), Terminal Velocity (1960).

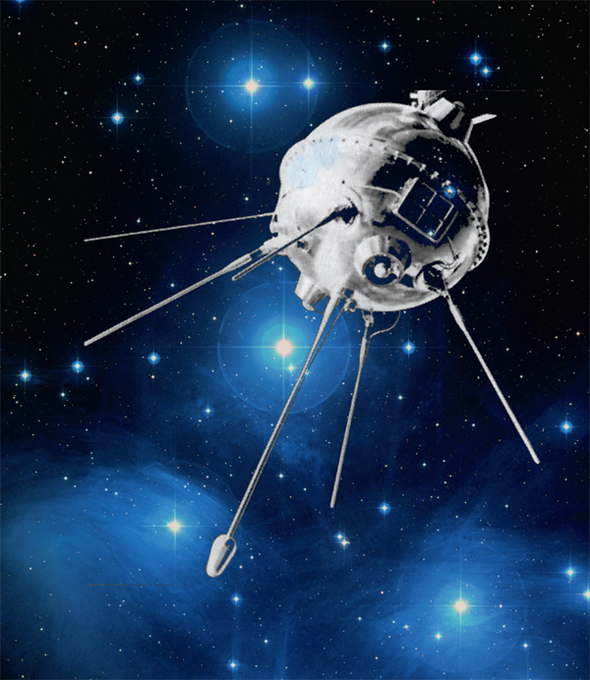

Luna 1 was the first man-made object to reach the escape velocity of the Earth. Launched in 1959 by the Soviet Union, it was also the first spacecraft to fly past the Moon.

Bernoulli’s Law of Fluid Dynamics

Daniel Bernoulli (1700–1782)

Imagine water flowing steadily through a pipe that carries the liquid from the roof of a building to the grass below. The pressure of the liquid will change along the pipe. Mathematician and physicist Daniel Bernoulli discovered the law that relates pressure, flow speed, and height for a fluid flowing in a pipe. Today, we write Bernoulli’s Law as $v^2/2 + gz + p/\rho = C$. Here, v is the fluid velocity, g the acceleration due to gravity, z the elevation (height) of a point in the fluid, p the pressure, ρ the fluid density, and C is a constant. Scientists prior to Bernoulli had understood that a moving body exchanges its kinetic energy for potential energy when the body gains height. Bernoulli realized that, in a similar way, changes in the kinetic energy of a moving fluid result in a change in pressure.
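As a worked example of the equation above, the Python sketch below solves it for the pressure at a second point in the roof-to-grass pipe. The pipe is assumed to have a constant diameter (so the speed is the same at both ends), the drop is a hypothetical 10 m, and the pressure at the top is taken to be roughly atmospheric; all of these are illustrative assumptions.

```python
RHO_WATER = 1000.0  # kg/m^3, approximate
G = 9.81            # m/s^2

def downstream_pressure(p1: float, v1: float, z1: float, v2: float, z2: float,
                        rho: float = RHO_WATER, g: float = G) -> float:
    """Solve v^2/2 + g z + p/rho = C for p2, given conditions at point 1."""
    return p1 + rho * (0.5 * (v1 ** 2 - v2 ** 2) + g * (z1 - z2))

# Hypothetical: constant-diameter pipe (v1 == v2), a 10 m drop, and about
# 101,325 Pa at the upper point.
p_ground = downstream_pressure(p1=101_325.0, v1=2.0, z1=10.0, v2=2.0, z2=0.0)
print(f"{p_ground:.0f} Pa")  # the 10 m drop adds rho * g * 10, about 98 kPa
```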

The formula assumes a steady (non-turbulent) fluid flow in a closed pipe. The fluid must be incompressible; because most liquids are only slightly compressible, Bernoulli’s Law is often a useful approximation for them. Additionally, the fluid should not be viscous, which means that the fluid should not have internal friction. Although no real fluid meets all these criteria, Bernoulli’s relationship is generally very accurate for free-flowing regions of fluids that are away from the walls of pipes or containers, and it is especially useful for gases and light liquids.

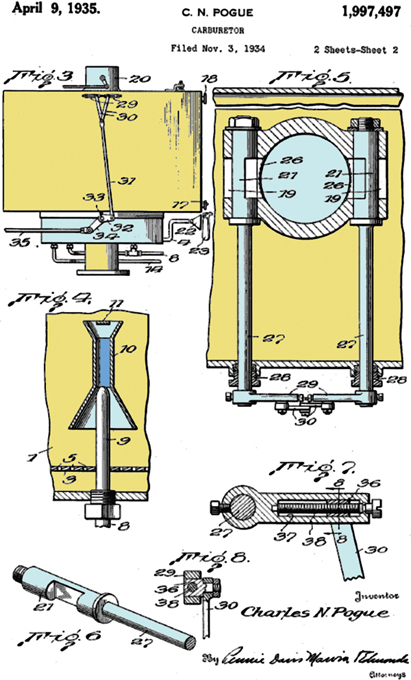

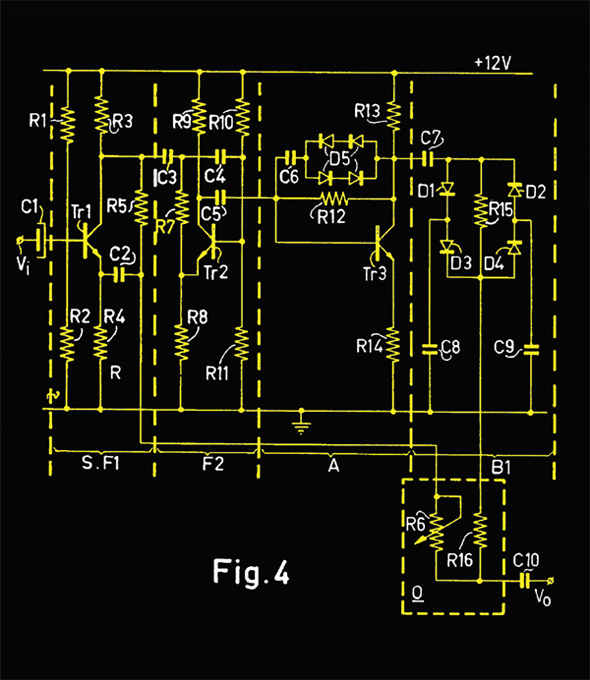

Bernoulli’s Law is often stated in terms of a subset of the parameters in the above equation: a decrease in pressure occurs simultaneously with an increase in velocity. The law is used when designing a venturi throat, a constricted region in the air passage of a carburetor that causes a reduction in pressure, which in turn causes fuel vapor to be drawn out of the carburetor bowl. The fluid increases speed in the smaller-diameter region, reducing its pressure and producing a partial vacuum via Bernoulli’s Law.
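The venturi effect combines Bernoulli’s Law with conservation of mass: an incompressible flow must speed up where the passage narrows. A minimal Python sketch, assuming a horizontal passage, incompressible air, and hypothetical speeds and areas:

```python
RHO_AIR = 1.2  # kg/m^3, roughly air at room temperature

def venturi_pressure_drop(v_inlet: float, area_inlet: float, area_throat: float,
                          rho: float = RHO_AIR) -> float:
    """Pressure drop (p_inlet - p_throat) in a horizontal venturi.

    Continuity (incompressible flow): v_throat = v_inlet * A_inlet / A_throat.
    Bernoulli at equal heights:       dp = rho / 2 * (v_throat^2 - v_inlet^2).
    """
    v_throat = v_inlet * area_inlet / area_throat
    return 0.5 * rho * (v_throat ** 2 - v_inlet ** 2)

# Hypothetical: 10 m/s air entering a throat with half the inlet's area.
print(venturi_pressure_drop(10.0, 2.0e-3, 1.0e-3))  # about 180 Pa
```

Halving the area doubles the speed, and because pressure depends on the square of the speed, the drop grows quickly as the throat narrows.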

Bernoulli’s formula has numerous practical applications in aerodynamics, where it is considered when studying flow over airfoils, such as wings, propeller blades, and rudders.

SEE ALSO Siphon (250 B.C.), Poiseuille’s Law of Fluid Flow (1840), Stokes’ Law of Viscosity (1851), Kármán Vortex Street (1911).

Many engine carburetors have contained a venturi with a narrow throat region that speeds the air and reduces the pressure to draw fuel via Bernoulli’s Law. The venturi throat is labeled 10 in this 1935 carburetor patent.

Pieter van Musschenbroek (1692–1761), Ewald Georg von Kleist (1700–1748), Jean-Antoine Nollet (1700–1770), Benjamin Franklin (1706–1790)

“The Leyden jar was electricity in a bottle, an ingenious way to store a static electric charge and release it at will,” writes author Tom McNichol. “Enterprising experimenters drew rapt crowds all over Europe… killing birds and small animals with a burst of stored electric charge…. In 1746, Jean-Antoine Nollet, a French clergyman and physicist, discharged a Leyden jar in the presence of King Louis XV, sending a current of static electricity rushing through a chain of 180 Royal Guards who were holding hands.” Nollet also connected a row of several hundred robed Carthusian monks, giving them the shock of their lives.

The Leyden jar is a device that stores static electricity between an electrode on the outside of a jar and another electrode on the inside. An early version was invented in 1744 by Prussian researcher Ewald Georg von Kleist. A year later, Dutch scientist Pieter van Musschenbroek independently invented a similar device while in Leiden (also spelled Leyden). The Leyden jar was important in many early experiments in electricity. Today, a Leyden jar is thought of as an early version of the capacitor, an electronic component that consists of two conductors separated by a dielectric (insulator). When a potential difference (voltage) exists across the conductors, an electric field is created in the dielectric, which stores energy. For a given voltage, the narrower the separation between the conductors, the larger the charge that may be stored.

A typical design consists of a glass jar with conducting metal foils lining part of the outside and inside of the jar. A metal rod penetrates the cap of the jar and is connected to the inner metal lining by a chain. The rod is charged with static electricity by some convenient means—for example, by touching it with a silk-rubbed glass rod. If a person touches the metal rod, that person will receive a shock. Several jars may be connected in parallel to increase the amount of possible stored charge.
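Treating a Leyden jar as a flat parallel-plate capacitor (an assumption that ignores the jar’s curvature) makes these points quantitative: capacitance rises as the glass gets thinner, stored charge is Q = CV, and jars connected in parallel add their capacitances. The foil area, glass thickness, relative permittivity of glass, and charging voltage below are all hypothetical.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m

def parallel_plate_capacitance(area_m2: float, gap_m: float,
                               rel_permittivity: float) -> float:
    """C = eps0 * eps_r * A / d: a thinner dielectric gives a larger capacitance."""
    return EPSILON_0 * rel_permittivity * area_m2 / gap_m

def stored(c_farads: float, volts: float) -> tuple[float, float]:
    """Charge Q = C V and energy U = C V^2 / 2 for a capacitor at voltage V."""
    return c_farads * volts, 0.5 * c_farads * volts ** 2

# Hypothetical jar: 0.05 m^2 of foil, a 2 mm glass wall, glass eps_r ~ 5 (assumed).
jar = parallel_plate_capacitance(0.05, 2e-3, 5.0)
# Jars wired in parallel simply add their capacitances.
three_jars = 3 * jar
q, u = stored(three_jars, 10_000.0)
print(f"one jar ~ {jar * 1e9:.2f} nF; three in parallel at 10 kV hold "
      f"{q * 1e6:.0f} uC and {u:.2f} J")
```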

SEE ALSO Von Guericke’s Electrostatic Generator (1660), Ben Franklin’s Kite (1752), Lichtenberg Figures (1777), Battery (1800), Tesla Coil (1891), Jacob’s Ladder (1931).

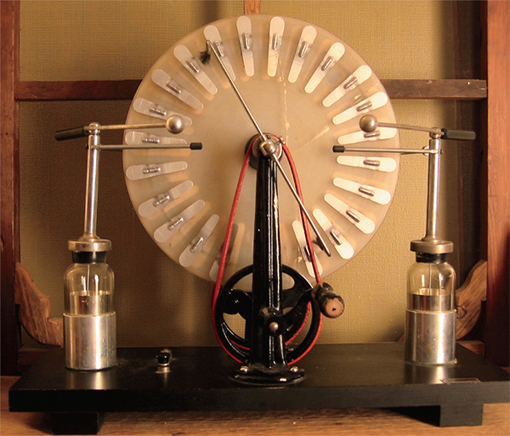

British inventor James Wimshurst (1832–1903) invented the Wimshurst Machine, an electrostatic device for generating high voltages. A spark jumps across the gap formed by two metal spheres. Note the two Leyden jars for charge storage.

Benjamin Franklin (1706–1790)

Benjamin Franklin was an inventor, statesman, printer, philosopher, and scientist. Although he had many talents, historian Brooke Hindle writes, “The bulk of Franklin’s scientific activities related to lightning and other electrical matters. His connection of lightning with electricity, through the famous experiment with a kite in a thunderstorm, was a significant advance of scientific knowledge. It found wide application in constructions of lightning rods to protect buildings in both the United States and Europe.” Although perhaps not on par with many other physics milestones in this book, “Franklin’s Kite” has often been a symbol of the quest for scientific truth and has inspired generations of school children.

In 1750, in order to verify that lightning is electricity, Franklin suggested an experiment that involved flying a kite in a storm that seemed likely to become a lightning storm. Although some historians have disputed the specifics of the story, according to Franklin, his experiments were conducted on June 15, 1752, in Philadelphia and successfully extracted electrical energy from a cloud. In some versions of the story, he held a silk ribbon tied to a key at the end of the kite string to insulate himself from the electrical current that traveled down the string to the key and into the Leyden jar (a device that stores electricity between two electrodes). Other researchers did not take such precautions and were electrocuted when performing similar experiments. Franklin wrote, “When rain has wet the kite twine so that it can conduct the electric fire freely, you will find it streams out plentifully from the key at the approach of your knuckle, and with this key a… Leiden jar may be charged….”

Historian Joyce Chaplin notes that the kite experiment was not the first to identify lightning with electricity, but the kite experiment verified this finding. Franklin was “trying to gauge whether the clouds were electrified and, if so, whether with a positive or a negative charge. He wanted to determine the presence of… electricity within nature, [and] it reduces his efforts considerably to describe them as resulting only in… the lightning rod.”

SEE ALSO St. Elmo’s Fire (78), Leyden Jar (1744), Lichtenberg Figures (1777), Tesla Coil (1891), Jacob’s Ladder (1931).

“Benjamin Franklin Drawing Electricity from the Sky” (c. 1816), by Anglo-American painter Benjamin West (1738–1820). A bright electrical current appears to drop from the key to the jar in his hand.

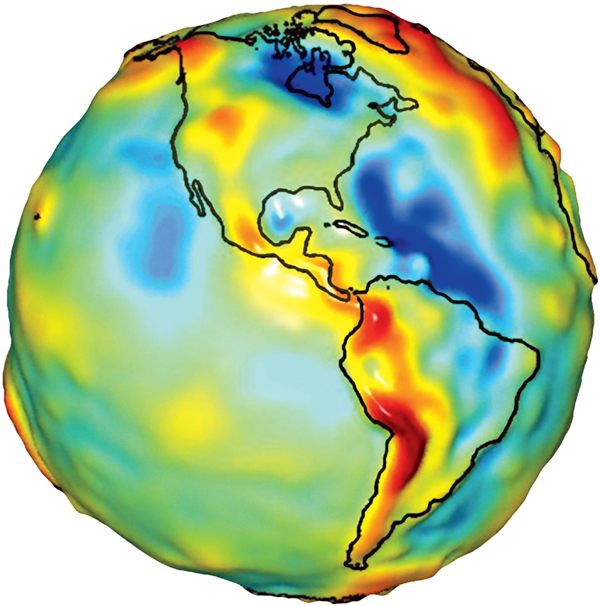

Torbern Olof Bergman (1735–1784), James Cook (1728–1779)

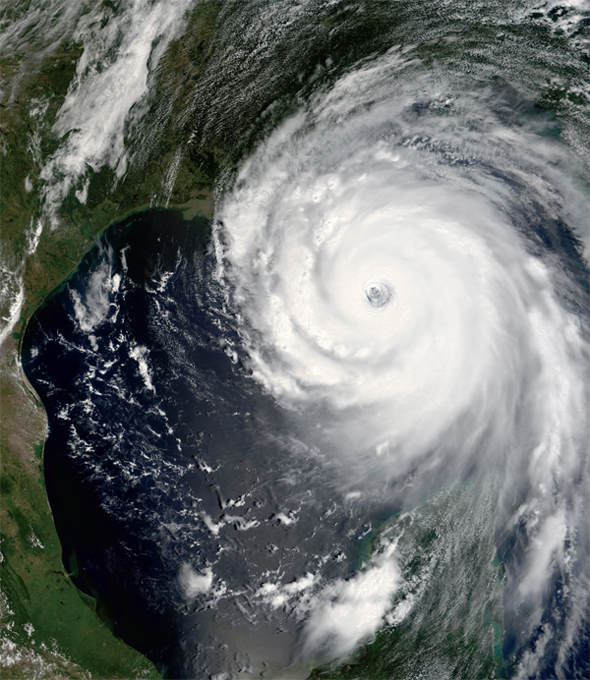

Albert Einstein once suggested that the most incomprehensible thing about the world is that it is comprehensible. Indeed, we appear to live in a cosmos that can be described or approximated by compact mathematical expressions and physical laws. Even the strangest of astrophysical phenomena are often explained by scientists and scientific laws, although it can take many years to provide a coherent explanation.

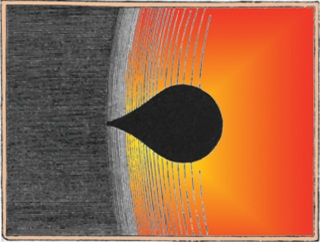

The mysterious black drop effect (BDE) refers to the apparent shape assumed by Venus as it transits across the Sun when observed from the Earth. In particular, Venus appears to assume the shape of a black teardrop when visually “touching” the inside edge of the Sun. The tapered, stretched part of the teardrop resembles a fat umbilical cord or dark bridge, which made it impossible for early physicists to determine Venus’ precise transit time across the Sun.

The first detailed description of the BDE came in 1761, when Swedish scientist Torbern Bergman described it in terms of a “ligature” that joined the silhouette of Venus to the dark edge of the Sun. Many scientists provided similar reports in the years that followed. For example, British explorer James Cook made observations of the BDE during the 1769 transit of Venus.

British explorer James Cook observed the Black Drop Effect during the 1769 transit of Venus, as depicted in this sketch by the Australian astronomer Henry Chamberlain Russell (1836–1907).

Today, physicists continue to ponder the precise reason for the BDE. Astronomers Jay M. Pasachoff, Glenn Schneider, and Leon Golub suggest it is a “combination of instrumental effects and effects to some degree in the atmospheres of Earth, Venus, and Sun.” During the 2004 transit of Venus, some observers saw the BDE while others did not. Journalist David Shiga writes, “So the ‘black-drop effect’ remains as enigmatic in the 21st century as in the 19th. Debate is likely to continue over what constitutes a ‘true’ black drop…. And it remains to be seen whether the conditions for the appearance of black drop will be nailed down as observers compare notes… in time for the next transit….”

SEE ALSO Discovery of Saturn’s Rings (1610), Measuring the Solar System (1672), Discovery of Neptune (1846), Green Flash (1882).

Venus transits the Sun in 2004, exhibiting the black-drop effect.

Bode’s Law of Planetary Distances

Johann Elert Bode (1747–1826), Johann Daniel Titius (1729–1796)

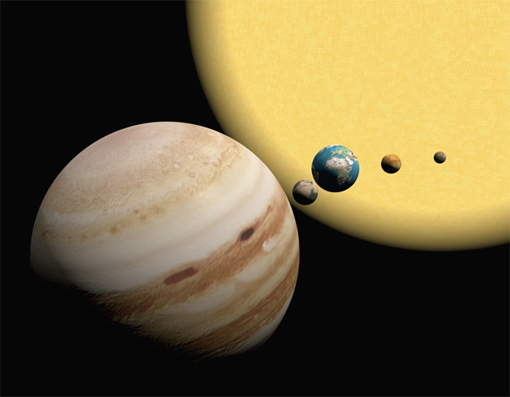

Bode’s Law, also known as the Titius-Bode Law, is particularly fascinating because it seems like pseudo-scientific numerology and has intrigued both physicists and laypeople for centuries. The law expresses a relationship that describes the mean distances of the planets from the Sun. Consider the simple sequence 0, 3, 6, 12, 24, … in which each number after 3 is twice the previous number. Next, add 4 to each number and divide by 10 to form the sequence 0.4, 0.7, 1.0, 1.6, 2.8, 5.2, 10.0, 19.6, 38.8, 77.2, … Remarkably, Bode’s Law provides a sequence that lists the mean distances D of many planets from the Sun, expressed in astronomical units (AU). An AU is the mean distance between the Earth and Sun, which is approximately 92,960,000 miles (149,600,000 kilometers). For example, Mercury is approximately 0.4 AU from the Sun, and Pluto is about 39 AU from the Sun.
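The construction above can be written as a single formula. Here k is an index introduced only for convenience, and Mercury is treated as a special case because the leading 0 does not follow the doubling pattern:

```latex
D_{\text{Mercury}} = \frac{0 + 4}{10} = 0.4, \qquad
D_k = \frac{3 \cdot 2^{k} + 4}{10} = 0.4 + 0.3 \cdot 2^{k}, \quad k = 0, 1, 2, \dots
```

So k = 0 gives 0.7 AU (Venus), k = 1 gives 1.0 AU (Earth), and k = 4 gives 5.2 AU (Jupiter).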

This law was discovered by the German astronomer Johann Titius of Wittenberg in 1766 and published by Johann Bode six years later, though the relationship between the planetary orbits had been approximated by Scottish mathematician David Gregory in the early eighteenth century. At the time, the law gave a remarkably good estimate for the mean distances (in AU) of the planets that were then known: Mercury (0.39), Venus (0.72), Earth (1.0), Mars (1.52), Jupiter (5.2), and Saturn (9.55). Uranus, discovered in 1781, has a mean orbital distance of 19.2 AU, which also agrees with the law.
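To see how well the rule fits the numbers above, the short Python sketch below regenerates the sequence and prints the percentage difference for each planet listed. The measured distances are the ones quoted in this entry; the function name `bode_distances` is ours, chosen for illustration.

```python
# Titius-Bode predictions compared with the mean distances quoted above (in AU).

def bode_distances(count):
    """Return the first `count` Titius-Bode distances in AU: 0.4, 0.7, 1.0, 1.6, ..."""
    n_values = [0] + [3 * 2 ** k for k in range(count - 1)]
    return [(n + 4) / 10 for n in n_values]

# (index in the Bode sequence, measured mean distance in AU) as listed in the text.
# Index 4 (2.8 AU) is skipped because no planet in this list sits there.
measured = {
    "Mercury": (0, 0.39),
    "Venus": (1, 0.72),
    "Earth": (2, 1.0),
    "Mars": (3, 1.52),
    "Jupiter": (5, 5.2),
    "Saturn": (6, 9.55),
    "Uranus": (7, 19.2),
}

predicted = bode_distances(8)
for planet, (index, actual) in measured.items():
    error = 100 * (predicted[index] - actual) / actual
    print(f"{planet:8s} Bode {predicted[index]:5.2f} AU   actual {actual:5.2f} AU   ({error:+.1f}%)")
```

Every planet in the list lands within about 6% of its predicted slot, which is what made the rule so striking to eighteenth-century astronomers.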

Today scientists have major reservations about Bode’s Law, which is clearly not as universally applicable as other laws in this book. In fact, the relationship may be purely empirical and coincidental.

A phenomenon of “orbital resonances,” caused by orbiting bodies that gravitationally interact with other orbiting bodies, can create regions around the Sun that are free of long-term stable orbits and thus, to some degree, can account for the spacing of planets. Orbital resonances can occur when two orbiting bodies have periods of revolution that are related in a simple integer ratio, so that the bodies exert a regular gravitational influence on each other.
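The "simple integer ratio" idea can be checked with a few lines of Python. This sketch assumes Kepler's third law for bodies orbiting the Sun (the square of the period in years equals the cube of the distance in AU) and uses the Jupiter and Saturn distances quoted above; the helper names are hypothetical. A ratio close to a simple fraction only hints at a resonance; whether bodies actually lock into one depends on their detailed gravitational interaction.

```python
from fractions import Fraction

def orbital_period_years(a_au):
    """Kepler's third law for bodies orbiting the Sun: T^2 = a^3 (T in years, a in AU)."""
    return a_au ** 1.5

def nearest_simple_ratio(x, max_denominator=5):
    """Closest fraction p/q to x with q <= max_denominator."""
    return Fraction(x).limit_denominator(max_denominator)

# Saturn (9.55 AU) versus Jupiter (5.2 AU), distances as quoted in this entry.
ratio = orbital_period_years(9.55) / orbital_period_years(5.2)
print(f"Saturn/Jupiter period ratio = {ratio:.3f}, close to {nearest_simple_ratio(ratio)}")
```

The ratio comes out just under 2.5, so Saturn completes roughly two orbits for every five of Jupiter's.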

SEE ALSO Mysterium Cosmographicum (1596), Measuring the Solar System (1672), Discovery of Neptune (1846).

According to Bode’s Law, the mean distance of Jupiter to the Sun is 5.2 AU, and the actual measured value is 5.203 AU.

Georg Christoph Lichtenberg (1742–1799)

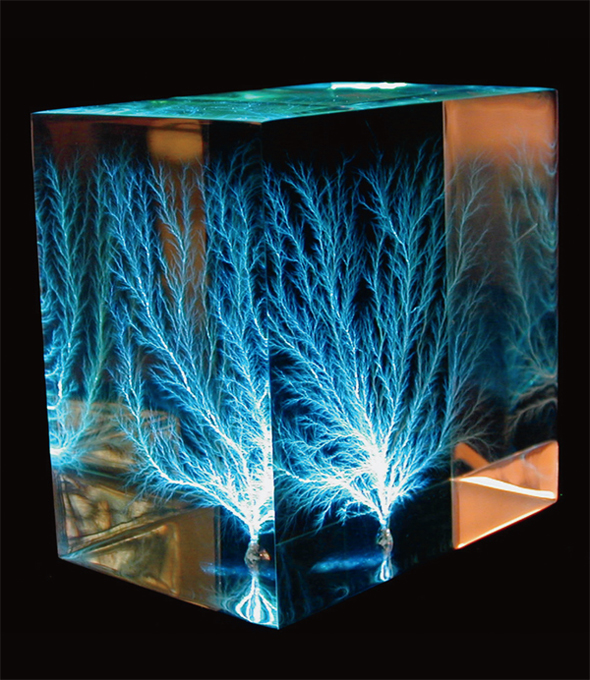

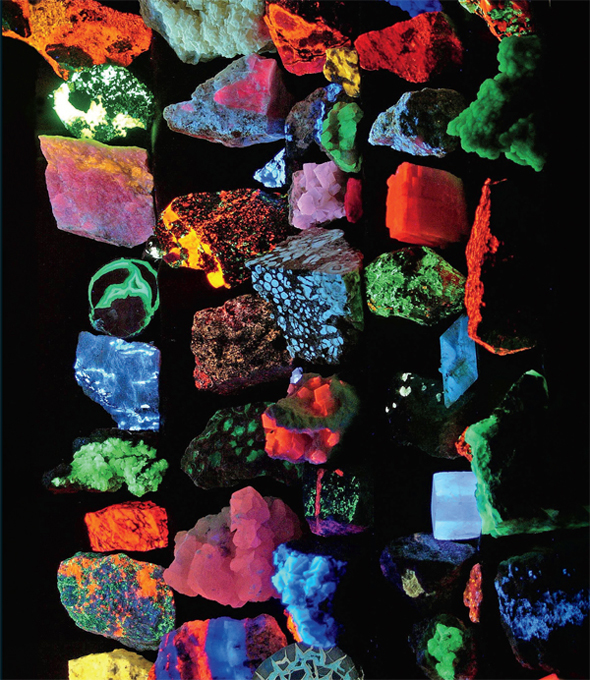

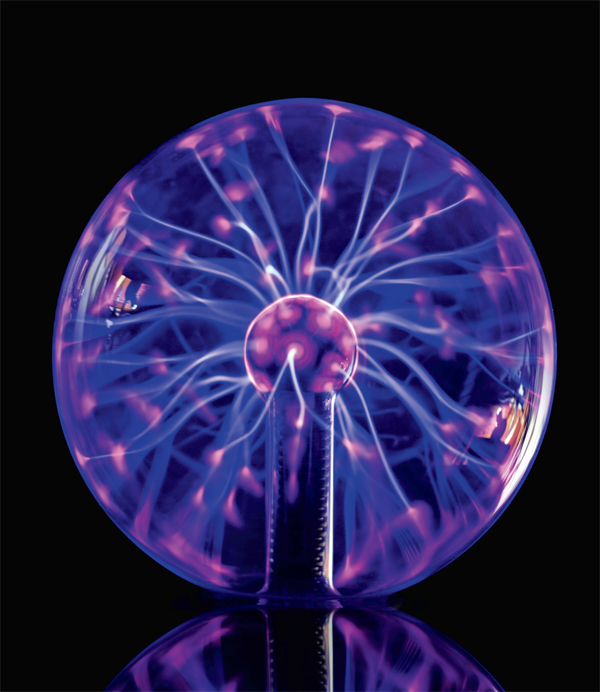

Among the most beautiful representations of natural phenomena are three-dimensional Lichtenberg figures, reminiscent of fossilized lightning trapped within a block of clear acrylic. These branching trails of electric discharges are named after German physicist Georg Lichtenberg, who originally studied similar electrical traces on surfaces. In the 1700s, Lichtenberg discharged electricity onto the surface of an insulator. Then, by sprinkling certain charged powders onto the surface, he was able to reveal curious tendrilous patterns.

Today, three-dimensional patterns can be created in acrylic, which is an insulator, or dielectric, meaning that it can hold a charge but that current cannot normally pass through it. First, the acrylic is exposed to a beam of high-speed electrons from an electron accelerator. The electrons penetrate the acrylic and are stored within it. Because the acrylic is an insulator, the electrons are now trapped (think of a nest of wild hornets trying to break out of an acrylic prison). However, when the electrical stress exceeds the dielectric strength of the acrylic, some portions suddenly become conductive. The escape of electrons can be triggered by piercing the acrylic with a metal point. The resulting discharge tears apart some of the chemical bonds that hold the acrylic molecules together. Within a fraction of a second, electrically conductive channels form as the charge escapes, melting pathways through the material. Electrical engineer Bert Hickman speculates that these microcracks propagate faster than the speed of sound within the acrylic.

Lichtenberg figures are fractals, exhibiting branching self-similar structures at multiple magnifications. In fact, the fernlike discharge pattern may actually extend all the way down to the molecular level. Researchers have developed mathematical and physical models for the process that creates the dendritic patterns, which is of interest to physicists because such models may capture essential features of pattern formation in seemingly diverse physical phenomena. Such patterns may have medical applications as well. For example, researchers at Texas A&M University believe these feathery patterns may serve as templates for growing vascular tissue in artificial organs.
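The researchers' own models are not described here, but one classic lattice model that produces similar branching, fractal clusters is diffusion-limited aggregation (DLA), in which randomly wandering particles stick to a growing cluster. The Python sketch below is a minimal DLA on a square grid, offered only as an illustration of how simple random rules can yield dendritic patterns. It is not a physical simulation of a discharge in acrylic, and the launch and escape radii are arbitrary choices.

```python
import math
import random

NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def grow_dla(n_particles=200, seed=0):
    """Grow a 2-D on-lattice diffusion-limited aggregation (DLA) cluster.

    Random walkers are released on a circle just outside the cluster and stick
    to the cluster as soon as they touch an occupied neighbour; walkers that
    wander too far are discarded and a new one is launched.
    """
    rng = random.Random(seed)
    cluster = {(0, 0)}   # seed particle at the origin
    max_radius = 0       # distance of the farthest occupied site, rounded up
    while len(cluster) < n_particles:
        launch_radius = max_radius + 5
        kill_radius = 2 * launch_radius
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x = round(launch_radius * math.cos(angle))
        y = round(launch_radius * math.sin(angle))
        while True:
            dx, dy = rng.choice(NEIGHBOURS)
            x, y = x + dx, y + dy
            r2 = x * x + y * y
            if r2 > kill_radius * kill_radius:
                break  # walker escaped; launch a fresh one
            if any((x + nx, y + ny) in cluster for nx, ny in NEIGHBOURS):
                cluster.add((x, y))
                max_radius = max(max_radius, math.isqrt(r2) + 1)
                break
    return cluster

def render(cluster):
    """Draw the cluster as text, one character per lattice site."""
    xs = [x for x, _ in cluster]
    ys = [y for _, y in cluster]
    return "\n".join(
        "".join("#" if (x, y) in cluster else " " for x in range(min(xs), max(xs) + 1))
        for y in range(max(ys), min(ys) - 1, -1)
    )
```

Calling `print(render(grow_dla(300, seed=1)))` draws a small branching cluster as text. Larger particle counts look more fernlike but take noticeably longer, because each walker must wander before it sticks.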

SEE ALSO Ben Franklin’s Kite (1752), Tesla Coil (1891), Jacob’s Ladder (1931), Sonic Booms (1947).

Bert Hickman’s Lichtenberg figure in acrylic, created by electron-beam irradiation, followed by manual discharge. The specimen’s internal potential prior to discharging was estimated to be around 2 million volts.

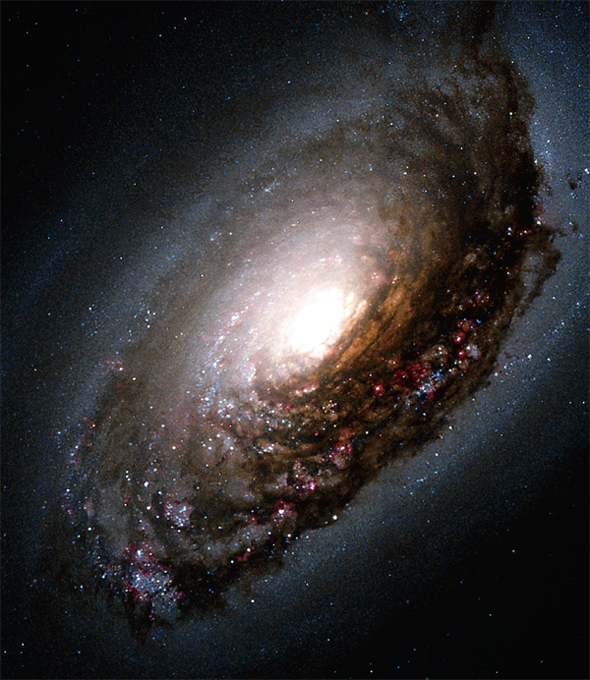

Edward Pigott (1753–1825), Johann Elert Bode (1747–1826), Charles Messier (1730–1817)

The Black Eye Galaxy resides in the constellation Coma Berenices and is about 24 million light years away from the Earth. Author and naturalist Stephen James O’Meara writes poetically of this famous galaxy with its “smooth silken arms [that] wrap gracefully around a porcelain core…. The galaxy resembles a closed human eye with a ‘shiner.’ The dark dust cloud looks as thick and dirty as tilled soil [but] a jar of its material would be difficult to distinguish from a perfect vacuum.”

The galaxy was discovered in 1779 by English astronomer Edward Pigott and independently discovered just twelve days later by German astronomer Johann Elert Bode and about a year later by French astronomer Charles Messier. As mentioned in the entry “Explaining the Rainbow,” such nearly simultaneous discoveries are common in the history of science and mathematics. For example, British naturalists Charles Darwin and Alfred Wallace developed the theory of evolution by natural selection independently. Likewise, Isaac Newton and German mathematician Gottfried Wilhelm Leibniz developed calculus independently at about the same time. Simultaneity in science has led some philosophers to suggest that scientific discoveries are inevitable because they emerge from the common intellectual waters of a particular place and time.

Interestingly, recent discoveries indicate that the interstellar gas in the outer regions of the Black Eye Galaxy rotates in the opposite direction from the gas and stars in the inner regions. This differential rotation may arise from the Black Eye Galaxy having collided with another galaxy and having absorbed it over a billion years ago.

Author David Darling writes that the inner zone of the galaxy is about 3,000 light-years in radius and “rubs along the inner edge of an outer disk, which rotates in the opposite direction at about 300 km/s and extends out to at least 40,000 light-years. This rubbing may explain the vigorous burst of star formation that is currently taking place in the galaxy and is visible as blue knots embedded in the huge dust lane.”

SEE ALSO Black Holes (1783), Nebular Hypothesis (1796), Dark Matter (1933), Fermi Paradox (1950), Quasars (1963).

The interstellar gas in the outer regions of the Black Eye Galaxy rotates in the opposite direction of the gas and stars in the inner regions. This differential rotation may arise from the galaxy having collided with another galaxy and having absorbed this galaxy over a billion years ago.

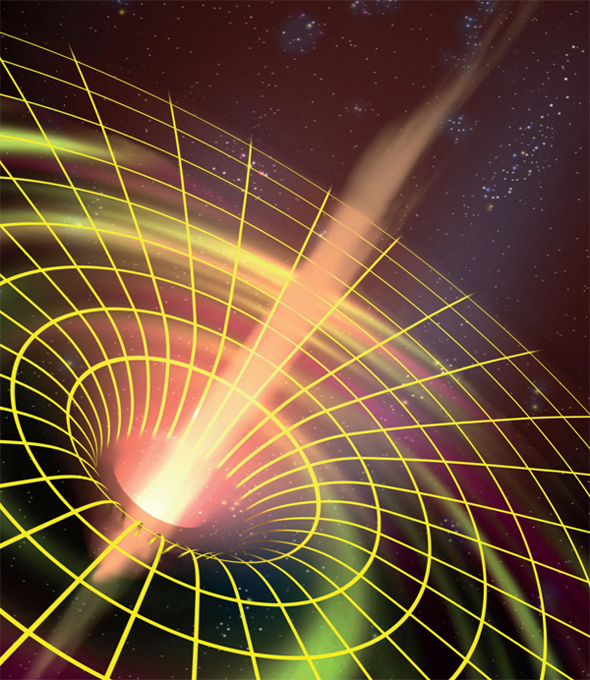

John Michell (1724–1793), Karl Schwarzschild (1873–1916), John Archibald Wheeler (1911–2008), Stephen William Hawking (1942–2018)

Astronomers may not believe in Hell, but most believe in ravenous, black regions of space in front of which one would be advised to place a sign, “Abandon hope, all ye who enter here.” This was Italian poet Dante Alighieri’s warning when describing the entrance to the Inferno in his Divine Comedy, and, as astrophysicist Stephen Hawking has suggested, this would be the appropriate message for travelers approaching a black hole.

These cosmological hells truly exist in the centers of many galaxies. Such galactic black holes are collapsed objects having millions or even billions of times the mass of our Sun crammed into a space no larger than our Solar System. According to classical black hole theory, the gravitational field around such objects is so great that nothing—not even light—can escape from their tenacious grip. Anyone who falls into a black hole will plunge into a tiny central region of extremely high density and extremely small volume … and the end of time. When quantum theory is considered, black holes are thought to emit a form of radiation called Hawking radiation (see “Notes and Further Reading” and the entry “Stephen Hawking on Star Trek”).

Black holes can exist in many sizes. As some historical background, just a few weeks after Albert Einstein published his general relativity theory in 1915, German astronomer Karl Schwarzschild performed exact calculations of what is now called the Schwarzschild radius, or event horizon. This radius defines a sphere surrounding a body of a particular mass. In classical black-hole theory, within the sphere of a black hole, gravity is so strong that no light, matter, or signal can escape. For a mass equal to the mass of our Sun, the Schwarzschild radius is a few kilometers in length. A black hole with an event horizon the size of a walnut would have a mass equal to the mass of the Earth. The actual concept of an object so massive that light could not escape was first suggested in 1783 by the geologist John Michell. The term “black hole” was coined in 1967 by theoretical physicist John Wheeler.
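The Schwarzschild radius has a simple closed form, r_s = 2GM/c², where G is the gravitational constant, M the mass, and c the speed of light. The sketch below evaluates it with rounded textbook values of the constants (an assumption of this example) to check the two sizes quoted in this paragraph.

```python
G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8     # speed of light, m/s

SUN_MASS = 1.989e30    # kg
EARTH_MASS = 5.972e24  # kg

def schwarzschild_radius(mass_kg):
    """Event-horizon radius of a non-rotating, uncharged black hole: r_s = 2GM/c^2 (meters)."""
    return 2.0 * G * mass_kg / C ** 2

print(f"Sun:   r_s = {schwarzschild_radius(SUN_MASS) / 1000:.2f} km")
print(f"Earth: r_s = {schwarzschild_radius(EARTH_MASS) * 1000:.1f} mm "
      f"(horizon diameter about {2 * schwarzschild_radius(EARTH_MASS) * 100:.1f} cm)")
```

For the Sun this gives about 2.95 km, and for the Earth a radius of roughly 9 mm, so a horizon a little under 2 cm across.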

Black holes and Hawking radiation are the stimulus for numerous impressionistic pieces by Slovenian artist Teja Krašek.

SEE ALSO Escape Velocity (1728), General Theory of Relativity (1915), White Dwarfs and Chandrasekhar Limit (1931), Neutron Stars (1933), Quasars (1963), Stephen Hawking on Star Trek (1993), Universe Fades (100 Trillion).

Artistic depiction of the warpage of space in the vicinity of a black hole.

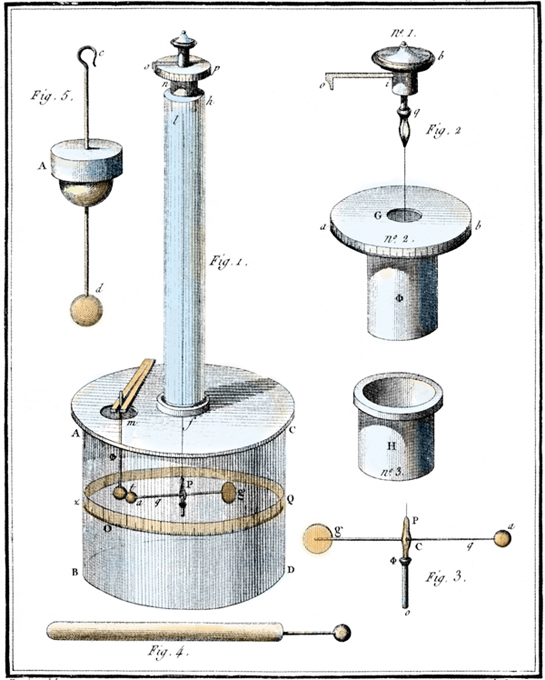

Coulomb’s Law of Electrostatics

Charles-Augustin Coulomb (1736–1806)

“We call that fire of the black thunder-cloud electricity,” wrote essayist Thomas Carlyle in the 1800s, “but what is it? What made it?” Early steps to understand electric charge were taken by French physicist Charles-Augustin Coulomb, who made major contributions to the fields of electricity, magnetism, and mechanics. His Law of Electrostatics states that the force of attraction or repulsion between two electric charges is proportional to the product of the magnitudes of the charges and inversely proportional to the square of their separation distance r. If the charges have the same sign, the force is repulsive. If the charges have opposite signs, the force is attractive.
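In symbols, the law reads F = k q₁q₂ / r², with k ≈ 8.99 × 10⁹ N·m²/C². The Python sketch below uses the sign of the product q₁q₂ to encode the two cases in the paragraph: a positive result means repulsion and a negative one attraction. The proton-electron example at roughly one Bohr radius is our own illustrative choice.

```python
K = 8.9875e9   # Coulomb constant 1/(4*pi*epsilon_0), N m^2 C^-2
E = 1.602e-19  # elementary charge, C

def coulomb_force(q1, q2, r):
    """Signed force (N) between two stationary point charges q1, q2 (C) a distance r (m) apart.

    Positive means repulsion (like signs); negative means attraction (opposite signs).
    """
    return K * q1 * q2 / r ** 2

# A proton and an electron about one Bohr radius (5.29e-11 m) apart.
f = coulomb_force(E, -E, 5.29e-11)
print(f"{f:.2e} N")  # negative sign: attractive
```

Doubling the separation cuts the force to a quarter, which is the inverse-square behavior Coulomb set out to confirm.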

Today, experiments have demonstrated that Coulomb’s Law is valid over a remarkable range of separation distances, from as small as 10⁻¹⁶ meters (a tenth of the diameter of an atomic nucleus) to as large as 10⁶ meters (1 meter is about 3.28 feet). Coulomb’s Law is accurate only when the charged particles are stationary, because movement produces magnetic fields that alter the forces on the charges.

Although other researchers before Coulomb had suggested the 1/r² law, we refer to this relationship as Coulomb’s Law in honor of Coulomb’s independent results, obtained through his torsion-balance measurements. In other words, Coulomb provided convincing quantitative results for what was, up to 1785, just a good guess.

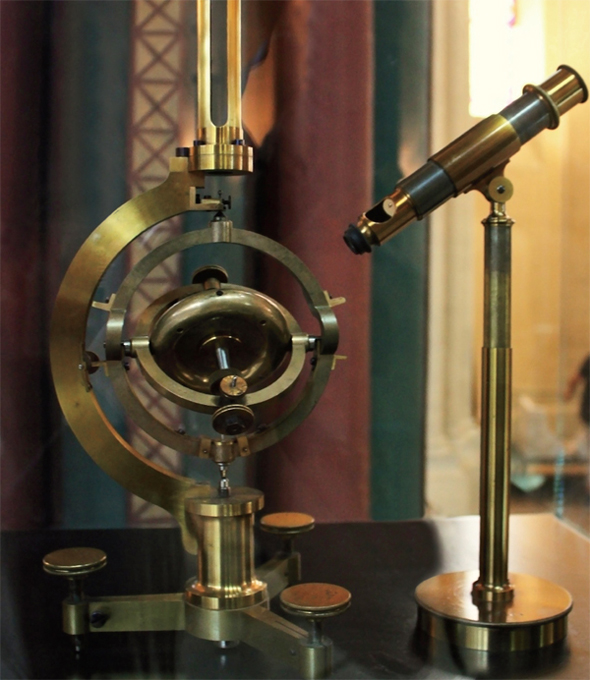

One version of Coulomb’s torsion balance contains a metal and a non-metal ball attached to an insulating rod. The rod is suspended at its middle by a nonconducting filament or fiber. To measure the electrostatic force, the metal ball is charged. A third ball with similar charge is placed near the charged ball of the balance, causing the ball on the balance to be repelled. This repulsion causes the fiber to twist. If we measure how much force is required to twist the fiber by the same angle of rotation, we can estimate the magnitude of the force caused by the charged sphere. In other words, the fiber acts as a very sensitive spring that supplies a force proportional to the angle of twist.
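The last sentence describes a torsion spring: the fiber's restoring torque is proportional to the twist angle, torque = κθ, where κ (the torsion constant) is a property of the fiber. A minimal sketch, assuming the force acts perpendicular to the rod at a known distance from the fiber; the numerical values are hypothetical, not Coulomb's.

```python
import math

def force_from_twist(torsion_constant, twist_deg, arm_length):
    """Force (N) on the ball implied by the fiber's restoring torque.

    torsion_constant: kappa in N*m per radian (a property of the fiber)
    twist_deg: equilibrium twist angle in degrees
    arm_length: distance (m) from the fiber to the ball
    Torque balance: F * arm_length = kappa * theta, so F = kappa * theta / arm_length.
    """
    theta = math.radians(twist_deg)
    return torsion_constant * theta / arm_length

# Hypothetical apparatus: kappa = 1e-6 N*m/rad, ball 0.1 m from the fiber.
for angle in (10, 20, 40):
    print(f"{angle:2d} deg -> {force_from_twist(1e-6, angle, 0.1):.3e} N")
```

Because the force scales linearly with the angle, comparing twist angles is enough to compare forces, even without knowing κ precisely.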

SEE ALSO Leyden Jar (1744), Maxwell’s Equations (1861), Eötvös’ Gravitational Gradiometry (1890), Electron (1897), Millikan Oil Drop Experiment (1913).

Charles-Augustin de Coulomb’s torsion balance, from his Mémoires sur l’électricité et le magnétisme (1785–1789).

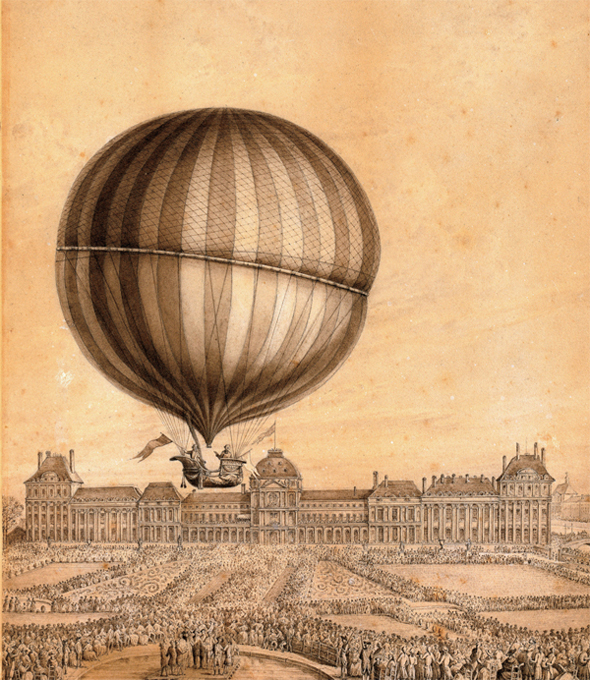

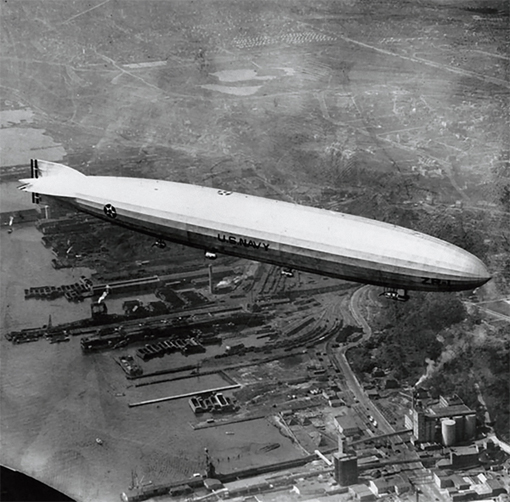

Jacques Alexandre César Charles (1746-1823), Joseph Louis Gay-Lussac (1778-1850)

“It is our business to puncture gas bags and discover the seeds of truth,” wrote essayist Virginia Woolf. On the other hand, the French balloonist Jacques Charles knew how to make “gas bags” soar to find truths. The gas law named in his honor states that the volume occupied by a fixed amount of gas varies directly with the absolute temperature (i.e., the temperature in kelvins). The law can be expressed as V = kT, where V is the volume at constant pressure, T is the absolute temperature, and k is a constant. Physicist Joseph Gay-Lussac first published the law in 1802, in a paper in which he referenced unpublished work from around 1787 by Jacques Charles.
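A worked example of V = kT: because k is fixed for a given gas sample at constant pressure, V₁/T₁ = V₂/T₂. The sketch below (helper names are ours) heats 1 liter of gas from 0 °C to 100 °C. The temperatures must be converted to kelvins first, since using Celsius values directly would give a meaningless ratio.

```python
def celsius_to_kelvin(t_c):
    """Convert a Celsius temperature to kelvins."""
    return t_c + 273.15

def charles_volume(v1, t1_kelvin, t2_kelvin):
    """Volume after a temperature change at constant pressure, from V1/T1 = V2/T2."""
    if t1_kelvin <= 0 or t2_kelvin <= 0:
        raise ValueError("temperatures must be absolute (kelvin) and positive")
    return v1 * t2_kelvin / t1_kelvin

v = charles_volume(1.0, celsius_to_kelvin(0), celsius_to_kelvin(100))
print(f"{v:.3f} L")
```

The gas expands by about 37%, and cooling it back to 0 °C returns it to its original volume.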

As the temperature of the gas increases, the gas molecules move more quickly and hit the walls of their container with more force, thus increasing the volume of gas, assuming that the container is able to expand. For a more specific example, consider warming the air within a balloon. As the temperature increases, the gas molecules inside the balloon move faster and strike its interior surface more often and with greater force. Because the balloon can stretch, the surface expands in response to this increased bombardment. The volume of gas increases, and its density decreases. Cooling the gas inside a balloon has the opposite effect: the internal pressure drops and the balloon shrinks.

Charles was most famous to his contemporaries for his various exploits and inventions pertaining to the science of ballooning and other practical sciences. His first balloon, an unmanned hydrogen balloon, was launched in 1783, and an adoring audience of thousands watched as it drifted by. The balloon ascended to a height of nearly 3,000 feet (914 meters) and finally landed in a field outside of Paris, where it was destroyed by terrified peasants. In fact, the locals believed that the balloon was some kind of evil spirit or beast from which they heard sighs and groans, accompanied by a noxious odor.

SEE ALSO Boyle’s Gas Law (1662), Henry’s Gas Law (1803), Avogadro’s Gas Law (1811), Kinetic Theory (1859).

The first flight of Jacques Charles and co-pilot Nicolas-Louis Robert in 1783; the two are seen waving flags to spectators. Versailles Palace is in the background. The engraving was likely created by Antoine François Sergent-Marceau, c. 1783.

Immanuel Kant (1724–1804), Pierre-Simon Laplace (1749–1827)

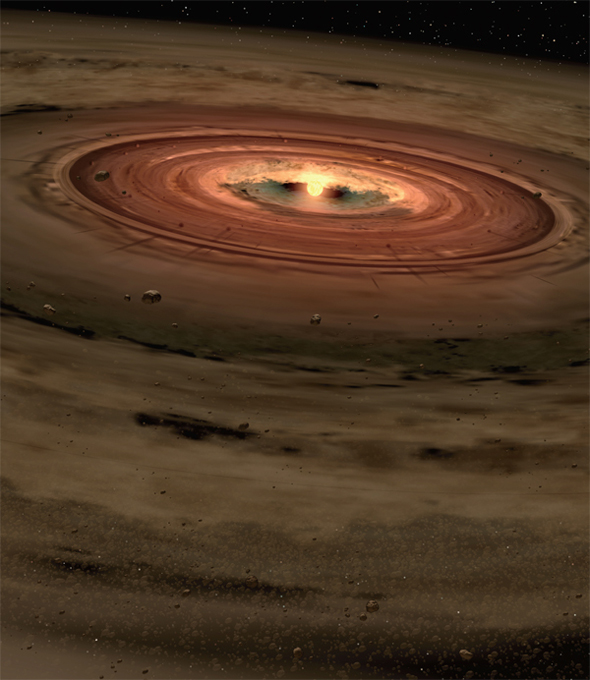

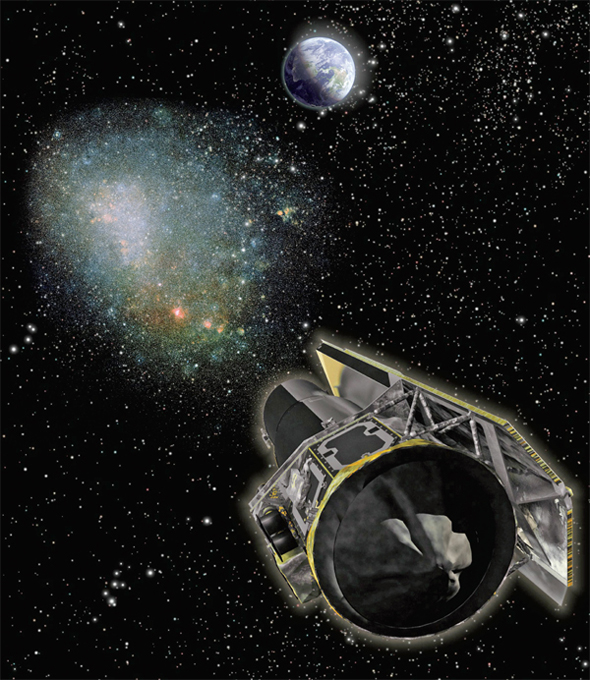

For centuries, scientists have hypothesized that the Sun and planets were born from a rotating disk of cosmic gas and dust. The flat disk constrained the planets that formed from it to orbits lying nearly in the same plane. This nebular theory was developed in 1755 by the philosopher Immanuel Kant and refined in 1796 by mathematician Pierre-Simon Laplace.

In short, stars and their disks form from the gravitational collapse of large volumes of sparse interstellar gas called solar nebulae. Sometimes a shock wave from a nearby supernova, or exploding star, may trigger the collapse. The gas in such a collapsing cloud typically swirls more in one direction than the other, giving the cloud a net rotation, and the rotating material flattens into a protoplanetary disk (proplyd).
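The net rotation matters because a contracting cloud conserves angular momentum, L = Iω, and its moment of inertia I scales with the square of its radius. As the cloud shrinks, it must spin faster, which helps explain why the material ends up in a rapidly rotating, flattened disk. This sketch assumes the cloud keeps the same overall shape as it contracts, so that I ∝ r²; the contraction factor is hypothetical.

```python
def spin_up(omega_initial, r_initial, r_final):
    """Angular speed after contraction, conserving L = I * omega with I proportional to r^2."""
    return omega_initial * (r_initial / r_final) ** 2

# Hypothetical: a cloud contracts to 1/100 of its initial radius.
factor = spin_up(1.0, 100.0, 1.0)
print(factor)  # 10000.0
```

A hundredfold contraction therefore multiplies the rotation rate ten-thousandfold, so even a barely rotating cloud ends up spinning rapidly.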

Using the Hubble Space Telescope, astronomers have detected several proplyds in the Orion Nebula, a giant stellar nursery about 1,600 light-years away. The Orion proplyds are larger than our Solar System and contain sufficient gas and dust to provide the raw material for future planetary systems.

The violence of the early Solar System was tremendous as huge chunks of matter bombarded one another. In the inner Solar System, the Sun’s heat drove away the lighter elements and materials, leaving behind the heavier, rocky material from which Mercury, Venus, Earth, and Mars formed. In the colder outer part of the system, the gas and dust of the solar nebula survived for some time and were accumulated by Jupiter, Saturn, Uranus, and Neptune.

Interestingly, Isaac Newton marveled at the fact that most of the objects that orbit the Sun lie within a few degrees of the ecliptic plane. He reasoned that natural processes could not create such behavior. This, he argued, was evidence of design by a benevolent and artistic creator. At one point, he thought of the Universe as “God’s Sensorium,” in which the objects in the Universe (their motions and their transformations) were the thoughts of God.

SEE ALSO Measuring the Solar System (1672), Black Eye Galaxy (1779), Hubble Telescope (1990).

Protoplanetary disk. This artistic depiction features a small young star encircled by a disk of gas and dust, the raw materials from which rocky planets such as Earth may form.

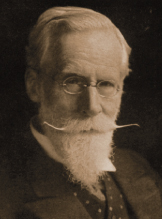

Henry Cavendish (1731–1810)

Henry Cavendish was perhaps the greatest of all eighteenth-century scientists, and one of the greatest scientists who ever lived. Yet his extreme shyness, a trait that kept the vast extent of his scientific writings secret until after his death, caused some of his important discoveries to be associated with the names of later researchers. The huge number of manuscripts uncovered after Cavendish’s death shows that he conducted extensive research in virtually every branch of the physical sciences of his day.

The brilliant British chemist was so shy around women that he communicated with his housekeeper using only written notes. He ordered all his female servants to keep out of sight; if they were unable to comply, he fired them. Once, when he encountered a female servant, he was so mortified that he built a second staircase for the servants’ use so that he could avoid them.

In one of his most impressive experiments, Cavendish, at nearly 70 years of age, “weighed” the world! To accomplish this feat, he didn’t transform into the Titan Atlas of Greek mythology, but rather determined the density of the Earth using a highly sensitive balance. In particular, he used a torsion balance consisting of two lead balls on either end of a suspended beam. These mobile balls were attracted by a pair of larger stationary lead balls. To shield the apparatus from air currents, he enclosed the device in a glass case and observed the motion of the balls from a distance, by means of a telescope. Cavendish calculated the force of attraction between the balls from the balance’s deflection and oscillation period, and then computed the Earth’s density from the force. He found that the Earth was 5.4 times as dense as water, a value that is only 1.3% lower than the accepted value today. Cavendish was the first scientist able to detect minute gravitational forces between small objects. (The attractions were 1/500,000,000 times as great as the weight of the bodies.) By helping to quantify Newton’s Law of Universal Gravitation, he made perhaps the most important addition to gravitational science since Newton.
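In modern terms, a torsion balance yields the gravitational constant G, and G together with the measured surface gravity yields the Earth's mean density. The sketch below encodes both steps. The first function uses the standard torsion-pendulum formula G = 2π²Lr²θ/(MT²), which assumes small deflections and ignores the pull of the more distant large ball; the second uses ρ = 3g/(4πGR). The constants are rounded modern values, not Cavendish's data, and Cavendish himself expressed his result as a density relative to water rather than as a value of G.

```python
import math

G_MODERN = 6.674e-11    # gravitational constant, m^3 kg^-1 s^-2
SURFACE_G = 9.81        # surface gravity, m/s^2
EARTH_RADIUS = 6.371e6  # mean radius of the Earth, m

def g_from_torsion_balance(beam_length, separation, deflection_rad, big_mass, period):
    """Modern torsion-balance formula G = 2*pi^2*L*r^2*theta / (M*T^2).

    beam_length: full length L of the beam carrying the small balls (m)
    separation: center-to-center distance r between each small and large ball (m)
    deflection_rad: equilibrium twist theta produced by the large balls (radians)
    big_mass: mass M of each large ball (kg)
    period: free oscillation period T of the beam (s)
    """
    return (2 * math.pi ** 2 * beam_length * separation ** 2 * deflection_rad
            / (big_mass * period ** 2))

def earth_density(G):
    """Mean density from g = G*M/R^2 and M = (4/3)*pi*R^3*rho, i.e. rho = 3g / (4*pi*G*R)."""
    return 3 * SURFACE_G / (4 * math.pi * G * EARTH_RADIUS)

rho = earth_density(G_MODERN)
print(f"mean density = {rho:.0f} kg/m^3, or {rho / 1000:.2f} times water")
```

With today's value of G, the density comes out at about 5.5 times that of water, close to Cavendish's 5.4.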

SEE ALSO Newton’s Laws of Motion and Gravitation (1687), Eötvös’ Gravitational Gradiometry (1890), General Theory of Relativity (1915).

Close-up of a portion of the drawing of a torsion balance from Cavendish’s 1798 paper “Experiments to Determine the Density of the Earth.”

Luigi Galvani (1737–1798), Alessandro Volta (1745–1827), Gaston Planté (1834–1889)

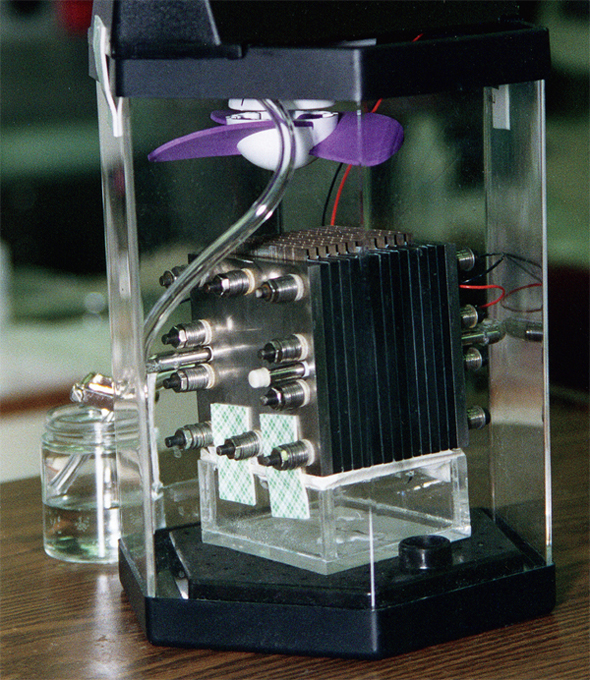

Batteries have played an invaluable role in the history of physics, chemistry, and industry. As batteries evolved in power and sophistication, they facilitated important advances in electrical applications, from the emergence of telegraph communication systems to their use in vehicles, cameras, computers, and phones.

Around 1780, physiologist Luigi Galvani experimented with frogs’ legs that he could cause to jerk when in contact with metal. Science journalist Michael Guillen writes, “During his sensational public lectures, Galvani showed people how dozens of frogs’ legs twitched uncontrollably when hung on copper hooks from an iron wire, like so much wet laundry strung out on a clothesline. Orthodox science cringed at his theories, but the spectacle of that chorus line of flexing frog legs guaranteed Galvani sell-out crowds in auditoriums the world over.” Galvani ascribed the leg movement to “animal electricity.” However, his friend, the Italian physicist Alessandro Volta, believed that the phenomenon had more to do with the different metals Galvani employed, which were joined by a moist connecting substance. In 1800, Volta invented what has traditionally been considered the first electric battery when he stacked several pairs of alternating copper and zinc discs separated by cloth soaked in salt water. When the top and bottom of this voltaic pile were connected by a wire, an electric current began to flow. To determine that current was flowing, Volta could touch its two terminals to his tongue and experience a tingling sensation.
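Stacking pairs in series is what made the pile useful: each copper-zinc pair contributes roughly the same voltage, so the voltages add. A minimal sketch of that idea, assuming identical pairs, a fixed internal resistance per pair, and a simple resistive load (all values hypothetical and for illustration only):

```python
def pile_current(n_pairs, emf_per_pair, r_internal_per_pair, r_external):
    """Total EMF (V) and current (A) for n identical cells in series: I = n*E / (n*r + R)."""
    total_emf = n_pairs * emf_per_pair
    total_resistance = n_pairs * r_internal_per_pair + r_external
    return total_emf, total_emf / total_resistance

# Hypothetical values for illustration only.
for n in (1, 10, 30):
    emf, current = pile_current(n, emf_per_pair=0.8, r_internal_per_pair=2.0, r_external=50.0)
    print(f"{n:2d} pairs: {emf:5.1f} V, {current * 1000:6.1f} mA")
```

Because every added pair also adds internal resistance, the current grows more slowly than the voltage once the pile's own resistance becomes comparable to the load.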

“A battery is essentially a can full of chemicals that produce electrons,” write authors Marshall Brain and Charles Bryant. If a wire is connected between the negative and positive terminals, the electrons produced by chemical reactions flow from one terminal to the other.

In 1859, physicist Gaston Planté invented the rechargeable battery. By forcing a current through it “backwards,” he could recharge his lead-acid battery. In the 1880s, scientists invented commercially successful dry cell batteries, which made use of pastes instead of liquid electrolytes (substances containing free ions that make the substances electrically conductive).

SEE ALSO Baghdad Battery (250 B.C.), Von Guericke’s Electrostatic Generator (1660), Leyden Jar (1744), Fuel Cell (1839), Solar Cells (1954), Buckyballs (1985).

As batteries evolved, they facilitated important advances in electrical applications, ranging from the emergence of telegraph communication systems to their use in vehicles, cameras, computers, and phones.

Christiaan Huygens (1629–1695), Isaac Newton (1642–1727), Thomas Young (1773–1829)

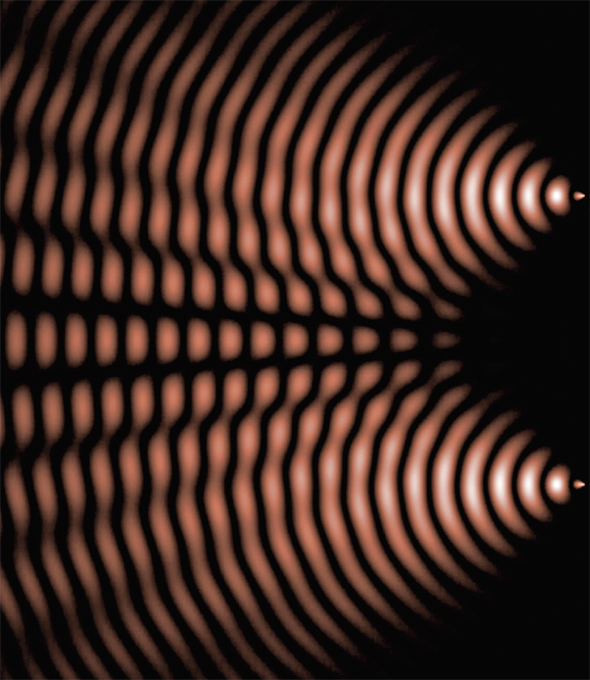

“What is light?” is a question that has intrigued scientists for centuries. In 1675, the famous English scientist Isaac Newton proposed that light was a stream of tiny particles. His rival, the Dutch physicist Christiaan Huygens, suggested that light consisted of waves, but Newton’s theories often dominated, partly due to Newton’s prestige.

Around 1800, the English researcher Thomas Young—also famous for his work on deciphering the Rosetta Stone—began a series of experiments that provided support for Huygens’ wave theory. In a modern version of Young’s experiment, a laser equally illuminates two parallel slits in an opaque surface. The pattern that the light makes as it passes through the two slits is observed on a distant screen. Young used geometrical arguments to show that the superposition of light waves from the two slits explains the observed series of equally spaced bands (fringes) of light and dark regions, representing constructive and destructive interference, respectively. You can think of these patterns of light as being similar to the tossing of two stones into a lake and watching the waves running into one another and sometimes canceling each other out or building up to form even larger waves.
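The fringe spacing follows from the geometry Young used: bright fringes appear where the paths from the two slits differ by a whole number of wavelengths, which, for small angles, puts neighboring bright fringes a distance Δy = λL/d apart (λ the wavelength, L the slit-to-screen distance, d the slit separation). The sketch below evaluates this for a hypothetical red-laser set-up and also gives the idealized two-slit intensity, ignoring the envelope produced by each slit's finite width.

```python
import math

def fringe_spacing(wavelength, slit_separation, screen_distance):
    """Distance between neighboring bright fringes (small-angle approximation): lambda*L/d."""
    return wavelength * screen_distance / slit_separation

def two_slit_intensity(y, wavelength, slit_separation, screen_distance):
    """Relative intensity at height y on the screen (1 at bright fringes, 0 at dark ones)."""
    phase = math.pi * slit_separation * y / (wavelength * screen_distance)
    return math.cos(phase) ** 2

# Hypothetical set-up: red laser (633 nm), slits 0.25 mm apart, screen 1 m away.
dy = fringe_spacing(633e-9, 0.25e-3, 1.0)
print(f"fringe spacing = {dy * 1000:.2f} mm")
```

For these values the bright bands are about 2.5 mm apart; moving the slits closer together spreads the pattern out.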

If we carry out the same experiment with a beam of electrons instead of light, the resulting interference pattern is similar. This observation is intriguing, because if the electrons behaved only as particles, one might expect to simply see two bright spots corresponding to the two slits.

Today, we know that the behavior of light and subatomic particles can be even more mysterious. When single electrons are sent through the slits one at a time, an interference pattern is produced that is similar to that produced by waves passing through both slits at once. This behavior applies to all subatomic particles, not just photons (light particles) and electrons, and suggests that light and other subatomic particles have a mysterious combination of particle and wavelike behavior, which is just one aspect of the quantum mechanics revolution in physics.
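The one-at-a-time behavior can be mimicked statistically. In quantum mechanics the intensity pattern gives the probability of detecting a particle at each point, so each individual hit lands at a random spot, and the fringes appear only after many hits accumulate. This Python sketch draws hit positions from the idealized two-slit intensity by rejection sampling; it reproduces the statistics of the pattern, not the underlying physics, and all parameters are arbitrary.

```python
import math
import random

def detect_particles(count, fringe_spacing=1.0, half_width=2.0, seed=0):
    """Simulate particles arriving one at a time at a screen spanning [-half_width, half_width].

    Each hit position is accepted with probability cos^2(pi*y/fringe_spacing),
    the idealized two-slit intensity, so single hits look random but many hits form fringes.
    """
    rng = random.Random(seed)
    hits = []
    while len(hits) < count:
        y = rng.uniform(-half_width, half_width)
        if rng.random() < math.cos(math.pi * y / fringe_spacing) ** 2:
            hits.append(y)
    return hits

def histogram(hits, bins=20, half_width=2.0):
    """Count hits in equal-width bins across the screen."""
    width = 2 * half_width / bins
    counts = [0] * bins
    for y in hits:
        counts[min(int((y + half_width) / width), bins - 1)] += 1
    return counts

for n in (10, 100, 5000):
    print(n, histogram(detect_particles(n, seed=n)))
```

With 10 hits the counts look like noise; by a few thousand hits the alternating high and low bins of the interference pattern are unmistakable.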

SEE ALSO Maxwell’s Equations (1861), Electromagnetic Spectrum (1864), Electron (1897), Photoelectric Effect (1905), Bragg’s Law of Crystal Diffraction (1912), De Broglie Relation (1924), Schrödinger’s Wave Equation (1926), Complementarity Principle (1927).

Simulation of the interference between two point sources. Young showed that the superposition of light waves from two slits explains the observed series of bands of light and dark regions, representing constructive and destructive interference, respectively.

William Henry (1775-1836)

Interesting physics is to be found even in the cracking of one’s knuckles. Henry’s Law, named after British chemist William Henry, states that the amount of a gas that is dissolved in a liquid is directly proportional to the pressure of the gas above the solution. It is assumed that the system under study has reached a state of equilibrium and that the gas does not chemically react with the liquid. A common formula used today for Henry’s Law is P = kC, where P is the partial pressure of the particular gas above the solution, C is the concentration of the dissolved gas, and k is the Henry’s Law constant.
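Rearranged as C = P/k, Henry's Law gives the dissolved concentration directly. The sketch below applies it to carbon dioxide. The constant of about 29 L·atm/mol for CO2 in water near 25 °C is an approximate literature value that we assume here, and the sealed-can pressure is hypothetical.

```python
# Henry's Law in the form used above: P = k * C, so C = P / k.
K_CO2 = 29.4  # approximate Henry's constant for CO2 in water near 25 C, L*atm/mol (assumed)

def dissolved_concentration(partial_pressure_atm, k=K_CO2):
    """Equilibrium concentration (mol/L) of a dissolved gas at the given partial pressure (atm)."""
    return partial_pressure_atm / k

sealed = dissolved_concentration(2.5)      # hypothetical CO2 pressure inside a sealed can
opened = dissolved_concentration(0.0004)   # roughly the CO2 partial pressure in open air
print(f"sealed: {sealed:.3f} mol/L, open-air equilibrium: {opened:.1e} mol/L")
```

The gap between the two values is the CO2 that eventually fizzes out of an opened soda.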

We can visualize one aspect of Henry’s Law by considering a scenario in which the partial pressure of a gas above a liquid increases by a factor of two. As a result, on average, twice as many gas molecules will collide with the liquid surface in a given time interval, and, thus, twice as many may enter the solution. Different gases have different solubilities, and these differences are reflected in the value of each gas’s Henry’s Law constant.

Henry’s Law has been used by researchers to better understand the noise associated with “cracking” of finger knuckles. Gases that are dissolved in the synovial fluid in joints rapidly come out of solution as the joint is stretched and pressure is decreased. This cavitation, which refers to the sudden formation and collapse of low-pressure bubbles in liquids by means of mechanical forces, produces a characteristic noise.

In scuba diving, the pressure of the air breathed is roughly the same as the pressure of the surrounding water. The deeper one dives, the higher the air pressure, and the more air dissolves in the blood. When a diver ascends rapidly, the dissolved air may come out of solution too quickly in the blood, and bubbles in the blood may cause a painful and dangerous disorder known as decompression sickness (“the bends”).
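Henry's Law explains why depth matters: the air a diver breathes is delivered at roughly the surrounding water pressure, and that pressure rises by about one atmosphere for every 10 meters of seawater. The sketch below, assuming a typical seawater density of 1025 kg/m³, estimates the absolute pressure at several depths. Because each gas's partial pressure scales with the total pressure, the equilibrium amount of dissolved nitrogen scales the same way, though the body takes time to reach that equilibrium.

```python
SEAWATER_DENSITY = 1025.0  # kg/m^3, typical value (assumed)
G = 9.81                   # m/s^2
ATM = 101325.0             # Pa per atmosphere

def absolute_pressure_atm(depth_m):
    """Surface air pressure plus the weight of the water column above the diver, in atm."""
    return (ATM + SEAWATER_DENSITY * G * depth_m) / ATM

for depth in (0, 10, 20, 30):
    p = absolute_pressure_atm(depth)
    print(f"{depth:2d} m: {p:.2f} atm, equilibrium dissolved nitrogen about {p:.1f}x the surface amount")
```

At 30 meters the blood can eventually hold roughly four times as much dissolved nitrogen as at the surface, which is why a rapid ascent is dangerous.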

SEE ALSO Boyle’s Gas Law (1662), Charles’ Gas Law (1787), Avogadro’s Gas Law (1811), Kinetic Theory (1859), Sonoluminescence (1934), Drinking Bird (1945).

Cola in a glass. When a soda can is opened, the reduced pressure causes dissolved gas to come out of solution according to Henry’s Law. Carbon dioxide flows from the soda into the bubbles.

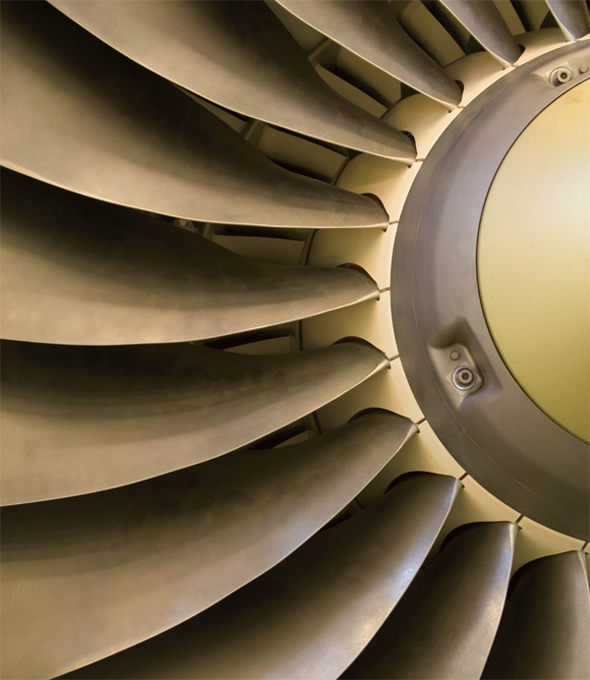

Jean Baptiste Joseph Fourier (1768-1830)

“The single most recurring theme of mathematical physics is Fourier analysis,” writes physicist Sadri Hassani. “It shows up, for example, in classical mechanics… in electromagnetic theory and the frequency analysis of waves, in noise considerations and thermal physics, and in quantum theory”—virtually any field in which a frequency analysis is important. Fourier series can help scientists characterize and better understand the chemical composition of stars and quantify signal transmission in electronic circuits.

Before French mathematician Joseph Fourier discovered his famous mathematical series, he accompanied Napoleon on his 1798 expedition to Egypt, where Fourier spent several years studying Egyptian artifacts. Fourier’s research on the mathematical theory of heat began around 1804 when he was back in France, and by 1807 he had completed his important memoir On the Propagation of Heat in Solid Bodies. One aspect of his fundamental work concerned heat diffusion in different shapes. For these problems, researchers are usually given the temperatures at points on the surface of the object, as well as at its edges, at time t = 0. Fourier introduced a series with sine and cosine terms in order to find solutions to these kinds of problems. More generally, he found that any differentiable function can be represented to arbitrary accuracy by a sum of sine and cosine functions, no matter how bizarre the function may look when graphed.
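Fourier's idea can be tried numerically. The Python sketch below estimates the coefficients of the series f(x) ≈ a₀/2 + Σ (aₙ cos nx + bₙ sin nx) on the interval [−π, π] with a simple midpoint-rule sum, then adds up the first 100 terms. It is tested on f(x) = x², whose exact series is π²/3 + Σ 4(−1)ⁿ cos(nx)/n²; the function names and sample counts are our own choices.

```python
import math

def fourier_coefficients(f, n_terms, samples=2048):
    """Approximate a_n and b_n (n = 0..n_terms) of f on [-pi, pi] with the midpoint rule."""
    h = 2 * math.pi / samples
    xs = [-math.pi + (k + 0.5) * h for k in range(samples)]
    ys = [f(x) for x in xs]
    a = [h / math.pi * sum(y * math.cos(n * x) for x, y in zip(xs, ys)) for n in range(n_terms + 1)]
    b = [h / math.pi * sum(y * math.sin(n * x) for x, y in zip(xs, ys)) for n in range(n_terms + 1)]
    return a, b

def fourier_partial_sum(a, b, x):
    """Evaluate a_0/2 + sum over n >= 1 of (a_n cos nx + b_n sin nx)."""
    total = a[0] / 2
    for n in range(1, len(a)):
        total += a[n] * math.cos(n * x) + b[n] * math.sin(n * x)
    return total

a, b = fourier_coefficients(lambda x: x * x, n_terms=100)
for x in (0.5, 1.0, 2.0):
    print(x, x * x, round(fourier_partial_sum(a, b, x), 4))
```

Adding more terms shrinks the error further; for x² the remaining error after N terms is at most about 4/N.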

Biographers Jerome Ravetz and I. Grattan-Guinness note, “Fourier’s achievement can be understood by [considering] the powerful mathematical tools he invented for the solutions of the equations, which yielded a long series of descendants and raised problems in mathematical analysis that motivated much of the leading work in that field for the rest of the century and beyond.” British physicist Sir James Jeans (1877-1946) remarked, “Fourier’s theorem tells us that every curve, no matter what its nature may be, or in what way it was originally obtained, can be exactly reproduced by superposing a sufficient number of simple harmonic curves—in brief, every curve can be built up by piling up waves.”

SEE ALSO Fourier’s Law of Heat Conduction (1822), Greenhouse Effect (1824), Soliton (1834).

Portion of a jet engine. Fourier analysis methods are used to quantify and understand undesirable vibrations in numerous kinds of systems with moving parts.

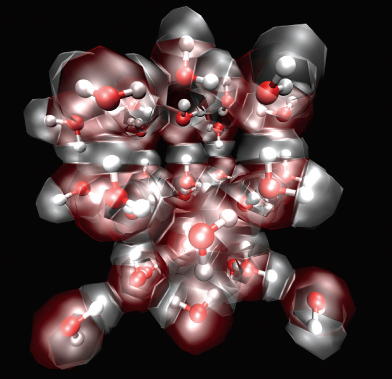

John Dalton (1766-1844)

John Dalton attained his professional success in spite of several hardships: He grew up in a family with little money; he was a poor speaker; he was severely color blind; and he was also considered to be a fairly crude or simple experimentalist. Any one of these challenges might have presented an insurmountable barrier to a budding chemist of his time, but Dalton persevered and made exceptional contributions to the development of atomic theory, which states that all matter is composed of atoms of differing weights that combine in simple ratios to form chemical compounds. During Dalton’s time, atomic theory also suggested that these atoms were indestructible and that, for a particular element, all atoms were alike and had the same atomic weight.

He also formulated the Law of Multiple Proportions, which states that whenever two elements can combine to form different compounds, the masses of one element that combine with a fixed mass of the other are in a ratio of small integers, such as 1:2. These simple ratios provided evidence that atoms were the building blocks of compounds.
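A modern illustration (not Dalton's own data) uses the two oxides of carbon, carbon monoxide (CO) and carbon dioxide (CO₂). For a fixed 1 g of carbon, the masses of oxygen in the two compounds come out in the ratio 1:2, exactly the kind of small-integer ratio the law describes. The sketch assumes rounded standard atomic masses.

```python
from fractions import Fraction

# Rounded standard atomic masses, g/mol (assumed values for illustration).
MASS = {"C": 12.011, "O": 15.999}

def oxygen_per_gram_carbon(n_oxygen, n_carbon=1):
    """Grams of oxygen combining with 1 g of carbon in a compound with n_carbon C and n_oxygen O atoms."""
    return n_oxygen * MASS["O"] / (n_carbon * MASS["C"])

co = oxygen_per_gram_carbon(1)   # carbon monoxide, CO
co2 = oxygen_per_gram_carbon(2)  # carbon dioxide, CO2
ratio = Fraction(co2 / co).limit_denominator(10)
print(f"CO: {co:.3f} g O per g C, CO2: {co2:.3f} g O per g C, ratio {ratio}")
```

The individual masses (about 1.33 g and 2.66 g of oxygen per gram of carbon) are not simple numbers; only their ratio is.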

Dalton encountered resistance to atomic theory. For example, the British chemist Sir Henry Enfield Roscoe (1833-1915) mocked Dalton in 1887, saying, “Atoms are round bits of wood invented by Mr. Dalton.” Perhaps Roscoe was referring to the wood models that some scientists used in order to represent atoms of different sizes. Nonetheless, by 1850, the atomic theory of matter was accepted among a significant number of chemists, and most opposition disappeared.

The idea that matter was composed of tiny, indivisible particles was considered by the philosopher Democritus in Greece in the fifth century B.C., but the idea was not generally accepted until after Dalton’s 1808 publication of A New System of Chemical Philosophy. Today, we understand that atoms are divisible into smaller particles, such as protons, neutrons, and electrons. Quarks are even smaller particles that combine to form other subatomic particles such as protons and neutrons.

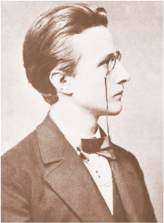

Engraving of John Dalton, by William Henry Worthington (c. 1795–c. 1839).

SEE ALSO Kinetic Theory (1859), Electron (1897), Atomic Nucleus (1911), Seeing the Single Atom (1955), Neutrinos (1956), Quarks (1964).

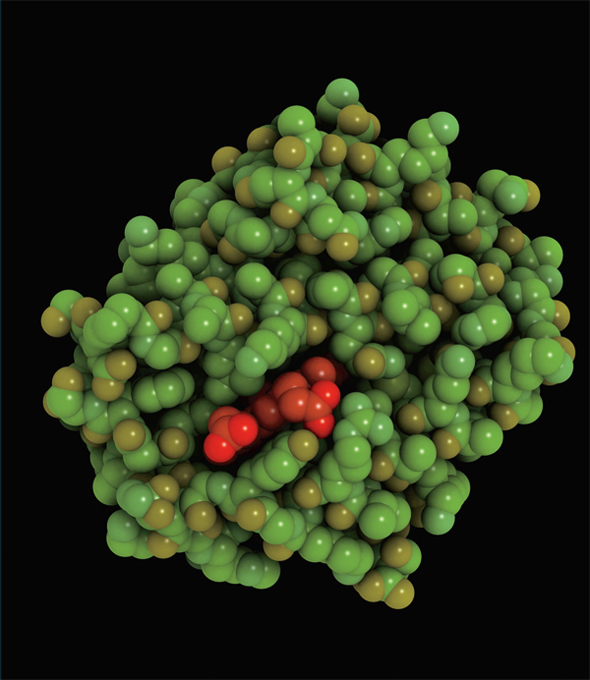

According to atomic theory, all matter is composed of atoms. Pictured here is a hemoglobin molecule with atoms represented as spheres. This protein is found in red blood cells.

Amedeo Avogadro (1776-1856)

Avogadro’s Law, named after Italian physicist Amedeo Avogadro who proposed it in 1811, states that equal volumes of gases at the same temperature and pressure contain the same number of molecules, regardless of the molecular makeup of the gas. The law assumes that the gas particles are acting in an “ideal” manner, which is a valid assumption for most gases at pressures at or below a few atmospheres, near room temperature.

A variant of the law, also attributed to Avogadro, states that the volume of a gas is directly proportional to the number of molecules of the gas. This is represented by the formula V = a × N, where a is a constant, V is the volume of the gas, and N is the number of gas molecules. Other contemporary scientists believed that such a proportionality should be true, but Avogadro’s Law went further than competing theories because Avogadro essentially defined a molecule as the smallest characteristic particle of a substance—a particle that could be composed of several atoms. For example, he proposed that a water molecule consisted of two hydrogen atoms and one oxygen atom.
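The proportionality V = a × N can be made concrete by assuming ideal-gas behavior, which the text already names as the law’s premise. In the ideal-gas law the constant works out to a = k_B T / P, where k_B is Boltzmann’s constant; that identification is an addition here, not part of Avogadro’s original statement. The sketch below (function name `gas_volume_m3` is hypothetical) shows that nothing about the kind of gas enters the calculation, only temperature and pressure.

```python
BOLTZMANN_K = 1.380649e-23  # Boltzmann constant, J/K

def gas_volume_m3(n_molecules: float, temperature_k: float, pressure_pa: float) -> float:
    """Ideal-gas volume V = a * N, with the constant a = k_B * T / P.

    No property of the gas species appears: only T and P set a.
    """
    a = BOLTZMANN_K * temperature_k / pressure_pa
    return a * n_molecules

# One mole's worth of molecules at 0 degrees C and 1 atm (101,325 Pa)
v = gas_volume_m3(6.0221367e23, 273.15, 101_325.0)
print(f"{v * 1000:.2f} liters")  # about 22.41 liters, for any ideal gas
```

Doubling N doubles V at fixed temperature and pressure, which is the variant of the law stated above.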

Avogadro’s number, 6.0221367 × 10²³, is the number of atoms found in one mole of an element. Today we define Avogadro’s number as the number of carbon-12 atoms in 12 grams of unbound carbon-12. A mole is the amount of an element that contains precisely the same number of grams as the value of the atomic weight of the substance. For example, nickel has an atomic weight of 58.6934, so there are 58.6934 grams in a mole of nickel.
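The mole definition turns into a two-step calculation: divide a sample’s mass by the atomic weight to get moles, then multiply by Avogadro’s number to get atoms. This sketch uses the value of Avogadro’s number quoted above and the nickel example from the text; the function name `atoms_in_sample` is hypothetical.

```python
AVOGADRO = 6.0221367e23  # value quoted in the text

def atoms_in_sample(mass_g: float, atomic_weight: float) -> float:
    """Number of atoms in mass_g grams of an element of the given atomic weight."""
    moles = mass_g / atomic_weight  # one mole weighs atomic_weight grams
    return moles * AVOGADRO

NICKEL = 58.6934
print(f"{atoms_in_sample(NICKEL, NICKEL):.4e}")  # one mole: Avogadro's number of atoms
print(f"{atoms_in_sample(1.0, NICKEL):.3e}")     # a single gram of nickel: ~1.026e+22 atoms
```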

Because atoms and molecules are so small, the magnitude of Avogadro’s number is difficult to visualize. If an alien were to descend from the sky to deposit an Avogadro’s number of unpopped popcorn kernels on the Earth, the alien could cover the United States of America with the kernels to a depth of over nine miles.

SEE ALSO Charles’ Gas Law (1787), Atomic Theory (1808), Kinetic Theory (1859).

Place 24 numbered golden balls (numbered 1 through 24) in a bowl. If you randomly drew them out one at a time, the probability of removing them in numerical order is about 1 chance in Avogadro’s number—a very small chance!
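The golden-ball claim is easy to check: 24 distinct balls can be drawn in 24! (24 factorial) different orders, and only one of those orders is 1, 2, …, 24. The short sketch below compares 24! with the value of Avogadro’s number quoted in the Avogadro entry.

```python
import math

AVOGADRO = 6.0221367e23
orderings = math.factorial(24)  # number of distinct orders for drawing 24 balls

print(orderings)              # 620448401733239439360000
print(orderings / AVOGADRO)   # about 1.03, so 1/24! is roughly 1 in Avogadro's number
```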

Joseph von Fraunhofer (1787–1826)

A spectrum often shows the variation in the intensity of an object’s radiation at different wavelengths. Bright lines in atomic spectra occur when electrons fall from higher energy levels to lower energy levels. The color of the lines depends on the energy difference between the energy levels, and the particular values for the energy levels are identical for atoms of the same type. Dark absorption lines in spectra can occur when an atom absorbs light and the electron jumps to a higher energy level.
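To see how an energy difference fixes the color of a line, the sketch below uses hydrogen in the simple Bohr model, where level n has energy −13.6 eV / n², and converts the energy gap to a wavelength with λ = hc / ΔE. The Bohr model and the constants are additions for illustration, not from the text, and the function names are hypothetical. The 3 → 2 transition gives the red H-alpha line near 656 nm, which corresponds to the dark solar line Fraunhofer labeled C.

```python
HC_EV_NM = 1239.84198   # Planck's constant times the speed of light, in eV*nm
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy (Bohr model), eV

def hydrogen_level(n: int) -> float:
    """Energy of hydrogen level n in the simple Bohr model, in eV."""
    return -RYDBERG_EV / n**2

def line_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of a photon emitted when an electron falls from n_upper to
    n_lower; the same wavelength is absorbed for the upward jump."""
    delta_e = hydrogen_level(n_upper) - hydrogen_level(n_lower)
    return HC_EV_NM / delta_e

print(f"H-alpha (3 -> 2): {line_wavelength_nm(3, 2):.1f} nm")  # red
print(f"H-beta  (4 -> 2): {line_wavelength_nm(4, 2):.1f} nm")  # blue-green
```

A larger energy gap (4 → 2) yields a shorter, bluer wavelength, and because the levels are the same for every hydrogen atom, the lines always fall at the same places.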

By examining absorption or emission spectra, we can determine which chemical elements produced the spectra. In the 1800s, various scientists noticed that the spectrum of the Sun’s electromagnetic radiation was not a smooth curve from one color to the next; rather, it contained numerous dark lines, suggesting that light was being absorbed at certain wavelengths. These dark lines are called Fraunhofer lines after the Bavarian physicist Joseph von Fraunhofer, who recorded them.

Some readers may find it easy to imagine how the Sun can produce a radiation spectrum but not how it can also produce dark lines. How can the Sun absorb its own light?

You can think of stars as fiery gas balls that contain many different atoms emitting light in a range of colors. Light from the surface of a star—the photosphere—has a continuous spectrum of colors, but as the light travels through the outer atmosphere of a star, some of the colors (i.e., light at different wavelengths) are absorbed. This absorption is what produces the dark lines. The missing colors, or dark absorption lines, tell us exactly which chemical elements are present in a star’s outer atmosphere.

Scientists have catalogued numerous missing wavelengths in the spectrum of the Sun. By comparing the dark lines with spectral lines produced by chemical elements on the Earth, astronomers have found over seventy elements in the Sun. Decades after Fraunhofer’s work, scientists Robert Bunsen and Gustav Kirchhoff studied emission spectra of heated elements and discovered cesium in 1860.
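The comparison step can be pictured as a lookup: each observed dark line is matched against laboratory wavelengths within some tolerance. The sketch below is a toy version under clear assumptions: the reference table holds only a few well-known lines (air wavelengths in nm, including the sodium D pair and hydrogen’s H-alpha and H-beta), the 0.1 nm tolerance is arbitrary, and the "observed" list is hypothetical. Real identification uses thousands of lines and accounts for line strengths and Doppler shifts.

```python
# Laboratory air wavelengths (nm) of a few strong lines; a tiny illustrative subset.
REFERENCE_LINES = {
    "hydrogen": [656.28, 486.13],
    "sodium": [588.99, 589.59],
    "calcium (ionized)": [393.37, 396.85],
}

def identify_elements(dark_lines_nm, tolerance_nm=0.1):
    """Return the set of elements whose reference lines match an observed dark line."""
    found = set()
    for observed in dark_lines_nm:
        for element, lines in REFERENCE_LINES.items():
            if any(abs(observed - ref) <= tolerance_nm for ref in lines):
                found.add(element)
    return found

# Hypothetical measured dark-line positions; 500.0 nm matches nothing in the table.
print(sorted(identify_elements([589.0, 656.3, 500.0])))
```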

SEE ALSO Newton’s Prism (1672), Electromagnetic Spectrum (1864), Mass Spectrometer (1898), Bremsstrahlung (1909), Stellar Nucleosynthesis (1946).

Visible solar spectrum with Fraunhofer lines. The y-axis represents the wavelength of light, starting from 380 nm at top and ending at 710 nm at bottom.

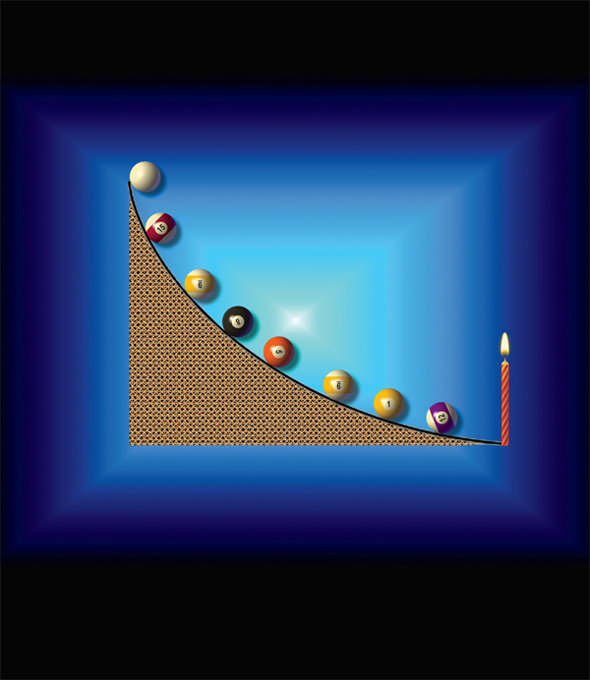

Pierre-Simon, Marquis de Laplace (1749-1827)

In 1814, French mathematician Pierre-Simon Laplace described an entity, later called Laplace’s Demon, that was capable of calculating and determining all future events, provided that the demon was given the positions, masses, and velocities of every atom in the universe and the various known formulae of motion. “It follows from Laplace’s thinking,” writes scientist Mario Markus, “that if we were to include the particles in our brains, free will would become an illusion…. Indeed, Laplace’s God simply turns the pages of a book that is already written.”

During Laplace’s time, the idea made a certain sense. After all, if one could predict the position of billiard balls bouncing around a table, why not entities composed of atoms? In fact, Laplace had no need of God at all in his universe.

Laplace wrote, “We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, [it would embrace in] a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.”

Later developments, such as Heisenberg’s Uncertainty Principle (HUP) and Chaos Theory, appear to make Laplace’s demon an impossibility. According to chaos theory, even minuscule inaccuracies in measurement at some initial time may lead to vast differences between a predicted outcome and an actual outcome. This means that Laplace’s demon would have to know the position and motion of every particle to infinite precision, thus making the demon more complex than the universe itself. Even if this demon existed outside the universe, the HUP tells us that infinitely precise measurements of the type required are impossible.
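Sensitive dependence on initial conditions can be demonstrated with the logistic map, x → r·x·(1 − x), a standard toy model of chaos that is not mentioned in the text and is chosen here only for illustration. The sketch runs two perfectly deterministic “universes” whose starting values differ by one part in ten billion, standing in for a tiny measurement error, and tracks how far apart they drift. The function name `logistic_orbit` and the starting value 0.2 are arbitrary choices.

```python
def logistic_orbit(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate the logistic map x -> r*x*(1-x); r = 4 gives chaotic behavior."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

a = logistic_orbit(0.2, 60)
b = logistic_orbit(0.2 + 1e-10, 60)  # the same rule, with a minuscule initial error
gaps = [abs(x - y) for x, y in zip(a, b)]

first_big = next(i for i, g in enumerate(gaps) if g > 0.1)
print(f"initial gap {gaps[0]:.1e}; gap exceeds 0.1 after {first_big} steps")
```

Each step roughly doubles the error on average, so after a few dozen steps the two predictions have nothing in common, even though the rule itself contains no randomness.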

Pierre-Simon Laplace (posthumous portrait by Madame Feytaud [1842]).

In a universe with Laplace’s Demon, would free will be an illusion?

SEE ALSO Maxwell’s Demon (1867), Heisenberg Uncertainty Principle (1927), Chaos Theory (1963).

Artistic rendition of Laplace’s Demon observing the positions, masses, and velocities of every particle (represented here as bright specks) at a particular time.

Sir David Brewster (1781-1868)

Light has fascinated scientists for centuries, but who would think that creepy cuttlefish might have something to teach us about the nature of light? A lightwave consists of an electric field and a magnetic field that oscillate perpendicular to each other and to the direction of travel. It is possible, however, to restrict the vibrations of the electric field to a particular plane by plane-polarizing the light beam. For example, one approach for obtaining plane-polarized light is via the reflection of light from a surface between two media, such as air and glass. The component of the electric field parallel to the surface is most strongly reflected. At one particular angle of incidence on the surface, called Brewster’s angle after Scottish physicist David Brewster, the reflected beam consists entirely of light whose electric vector is parallel to the surface.
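Brewster’s angle satisfies tan θ_B = n₂/n₁, where n₁ and n₂ are the refractive indices of the two media. The sketch below, which assumes the standard Fresnel reflection equations (not given in the text) and a typical glass index of 1.5, checks that at this angle the reflection coefficient for the electric-field component in the plane of incidence (r_p) vanishes, while the component parallel to the surface (r_s) is still reflected. Function names are hypothetical.

```python
import math

def brewster_angle_deg(n1: float, n2: float) -> float:
    """Brewster's angle for light passing from index n1 into index n2."""
    return math.degrees(math.atan2(n2, n1))

def fresnel_r(n1: float, n2: float, theta_i_deg: float) -> tuple[float, float]:
    """Fresnel amplitude reflection coefficients (r_s, r_p).

    r_s: electric field parallel to the surface; r_p: field in the plane of
    incidence. Assumes no total internal reflection (e.g., n1 <= n2).
    """
    ti = math.radians(theta_i_deg)
    tt = math.asin(n1 * math.sin(ti) / n2)  # refraction angle from Snell's law
    ci, ct = math.cos(ti), math.cos(tt)
    r_s = (n1 * ci - n2 * ct) / (n1 * ci + n2 * ct)
    r_p = (n2 * ci - n1 * ct) / (n2 * ci + n1 * ct)
    return r_s, r_p

theta_b = brewster_angle_deg(1.0, 1.5)  # air to glass
r_s, r_p = fresnel_r(1.0, 1.5, theta_b)
print(f"Brewster's angle ~ {theta_b:.2f} deg; r_s = {r_s:.3f}, r_p = {r_p:.1e}")
```

At about 56.3° for air and glass, only the field component parallel to the surface survives in the reflected beam, matching the description above.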

Polarization by light scattering in our atmosphere sometimes produces a glare in the skies. Photographers can reduce this glare with polarizing filters, which keep the partially polarized skylight from producing an image of a washed-out sky. Many animals, such as bees and cuttlefish, are quite capable of perceiving the polarization of light, and bees use polarization for navigation because the linear polarization of scattered sunlight in the sky is perpendicular to the direction of the Sun.

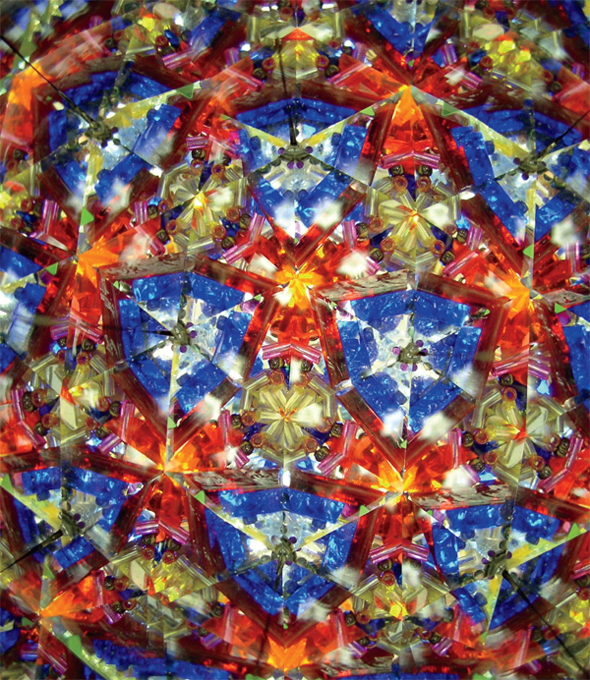

Brewster’s experiments with light polarization led him to his 1816 invention of the kaleidoscope, which has often fascinated physics students and teachers who attempt to create ray diagrams in order to understand the kaleidoscope’s multiple reflections. Cozy Baker, founder of the Brewster Kaleidoscope Society, writes, “His kaleidoscope created unprecedented clamor…. A universal mania for the instrument seized all classes, from the lowest to the highest, from the most ignorant to the most learned, and every person not only felt, but expressed the feeling that a new pleasure had been added to their existence.” American inventor Edwin H. Land wrote, “The kaleidoscope was the television of the 1850s….”

Using skin patterns that involve polarized light, cuttlefish can produce intricate “designs” as a means of communication. Such patterns are invisible to human eyes.

SEE ALSO Snell’s Law of Refraction (1621), Newton’s Prism (1672), Fiber Optics (1841), Electromagnetic Spectrum (1864), Laser (1960), Unilluminable Rooms (1969).

Brewster’s experiments with light polarization led him to his 1816 invention of the kaleidoscope.

René-Théophile-Hyacinthe Laennec (1781–1826)

Social historian Roy Porter writes, “By giving access to body noises—the sound of breathing, the blood gurgling around the heart—the stethoscope changed approaches to internal disease and hence doctor-patient relations. At last, the living body was no longer a closed book: pathology could now be done on the living.”

In 1816, French physician René Laennec invented the stethoscope, which consisted of a wooden tube with a trumpet-like end that made contact with the chest. The air-filled cavity transmitted sounds from the patient’s body to the physician’s ear. In the 1940s, stethoscopes with two-sided chest-pieces became standard. One side of the chest-piece is a diaphragm (e.g. a plastic disc that covers the opening), which vibrates when detecting body sounds and produces acoustic pressure waves that travel through the air cavity of the stethoscope. The other side contains a bell-shaped endpiece (e.g. a hollow cup) that is better at transmitting low-frequency sounds. The diaphragm side actually tunes out the low frequencies associated with heart sounds and is used to listen to the respiratory system. When using the bell side, the physician can vary the pressure of the bell on the skin and “tune” the skin vibration frequency in order to best reveal the heartbeat. Many other refinements occurred over the years that involved improved amplification, noise reduction, and other characteristics that were optimized by application of simple physical principles (see “Notes and Further Reading”).

In Laennec’s day, a physician often placed his ear directly on the patient’s chest or back. However, Laennec complained that this technique “is always inconvenient, both to the physician and the patient; in the case of females, it is not only indelicate but often impracticable.” Later, an extra-long stethoscope was used to treat the very poor when physicians wanted to be farther away from their flea-ridden patients. Aside from inventing the device, Laennec carefully recorded how specific physical diseases (e.g. pneumonia, tuberculosis, and bronchitis) corresponded to the sounds heard. Ironically, Laennec himself died at age 45 of tuberculosis, which his nephew diagnosed using a stethoscope.

SEE ALSO Tuning Fork (1711), Poiseuille’s Law of Fluid Flow (1840), Doppler Effect (1842), War Tubas (1880).

Modern stethoscope. Various acoustic experiments have been conducted to determine the effect of chest-piece size and material on sound collection.

Fourier’s Law of Heat Conduction

Jean Baptiste Joseph Fourier (1768-1830)

“Heat cannot be separated from fire, or beauty from the Eternal,” wrote Dante Alighieri. The nature of heat had also fascinated the French mathematician Joseph Fourier, well known for his formulas on the conduction of heat in solid materials. His Law of Heat Conduction states that the rate of heat flow between two points in a material is proportional to the difference in the temperatures of the points and inversely proportional to the distance between the two points.

If we place one end of an all-metal knife into a hot cup of cocoa, the temperature of the other end of the knife begins to rise. This heat transfer is caused by molecules at the hot end exchanging their kinetic and vibrational energies with adjacent regions of the knife through random motions. The rate of flow of energy, which might be thought of as a “heat current,” between two locations A and B along the knife is proportional to the difference in temperatures at A and B, and inversely proportional to the distance between them. This means that the heat current is doubled if the temperature difference is doubled or if the length of the knife is halved.

If we let U be the conductance of the material—that is, the measure of the ability of a material to conduct heat—we may incorporate this variable into Fourier’s Law. Among the best thermal conductors, in order of thermal conductivity values, are diamond, carbon nanotubes, silver, copper, and gold. With the use of simple instruments, the high thermal conductivity of diamonds is sometimes employed to help experts distinguish real diamonds from fakes. Diamonds of any size are cool to the touch because of their high thermal conductivity, which may help explain why the word “ice” is often used when referring to diamonds.
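In its usual one-dimensional form, Fourier’s Law gives the heat current as H = k × A × (T_hot − T_cold) / L, where A is the cross-sectional area and L the length; this sketch assumes that the text’s U plays the role of the thermal conductivity, conventionally written k. The copper value (roughly 400 W per meter-kelvin) is approximate, and the rod dimensions and temperatures are hypothetical, chosen to mimic the cocoa-and-knife example. The checks at the end reproduce the doubling rules stated above.

```python
def heat_current_w(k: float, area_m2: float, t_hot: float, t_cold: float,
                   length_m: float) -> float:
    """One-dimensional Fourier's law: H = k * A * (T_hot - T_cold) / L, in watts."""
    return k * area_m2 * (t_hot - t_cold) / length_m

COPPER_K = 400.0  # W/(m*K), approximate room-temperature conductivity of copper

# Hypothetical copper rod: 1 cm^2 cross-section, 20 cm long, 80 C cocoa vs 20 C room
base = heat_current_w(COPPER_K, 1e-4, 80.0, 20.0, 0.2)
double_dt = heat_current_w(COPPER_K, 1e-4, 140.0, 20.0, 0.2)  # temperature gap doubled
half_len = heat_current_w(COPPER_K, 1e-4, 80.0, 20.0, 0.1)    # length halved

print(f"{base:.1f} W, {double_dt:.1f} W, {half_len:.1f} W")
```

Swapping in a poor conductor such as wood, with a conductivity hundreds of times smaller, shrinks the heat current by the same factor, which is why a wooden spoon handle stays cool.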

Even though Fourier conducted foundational work on heat transfer, he was never good at regulating his own heat. He was always so cold, even in the summer, that he wore several large overcoats. During his last months, Fourier often spent his time in a box to support his weak body.

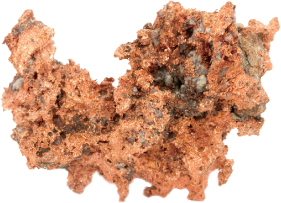

Raw copper ore. Copper is both an excellent thermal and electrical conductor.

SEE ALSO Fourier Analysis (1807), Carnot Engine (1824), Joule’s Law of Electric Heating (1840), Thermos (1892).