Albert Einstein (1879–1955)

Of all of Albert Einstein’s masterful achievements, including the Special Theory of Relativity and the General Theory of Relativity, the one for which he won the Nobel Prize was his explanation of the workings of the photoelectric effect (PE), in which certain frequencies of light shined on a copper plate cause the plate to eject electrons. In particular, he suggested that packets of light (now called photons) could explain the PE. For example, it had been noted that high-frequency light, such as blue or ultraviolet light, could cause electrons to be ejected, but low-frequency red light could not. Surprisingly, even intense red light did not lead to electron ejection. In fact, the energy of individual emitted electrons increases with the frequency (and, hence, the color) of the light.

How could the frequency of light be the key to the PE? Rather than light exerting its effect as a classical wave, Einstein suggested that the energy of light came in the form of packets, or quanta, and that this energy was equal to the light frequency multiplied by a constant (later called Planck’s constant). If the photon was below a threshold frequency, it just did not have the energy to kick out an electron. As a very rough metaphor for low-energy red quanta, imagine the impossibility of chipping a fragment from a bowling ball by tossing peas at it. It just won’t work, even if you toss lots of peas! Einstein’s explanation for the energy of the photons seemed to account for many observations, such as the fact that, for a given metal, there exists a certain minimum frequency of incident radiation below which no photoelectrons can be emitted. Today, numerous devices, such as solar cells, rely on conversion of light to electric current in order to generate power.
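The threshold behavior described above can be sketched in a few lines of Python. This is only an illustration, not a model of any particular experiment: it uses Einstein's relation that the maximum electron energy equals the photon energy minus the energy needed to free the electron (the metal's "work function," a term the text does not use), and the copper value of 4.7 electron volts is an assumed, approximate figure.

```python
# Sketch of Einstein's photoelectric relation: KE_max = h*f - work_function.
PLANCK_H = 6.62607015e-34         # Planck's constant in J*s (exact SI value)
JOULES_PER_EV = 1.602176634e-19   # one electron volt in joules (exact SI value)
SPEED_OF_LIGHT = 2.99792458e8     # m/s (exact SI value)

# ASSUMPTION: illustrative work function for copper, in eV. Measured values
# vary with surface condition; this number is not taken from the text.
COPPER_WORK_FUNCTION_EV = 4.7


def photon_energy_ev(wavelength_nm):
    """Energy of one photon, E = h*f with f = c / wavelength, in eV."""
    frequency_hz = SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return PLANCK_H * frequency_hz / JOULES_PER_EV


def max_electron_energy_ev(wavelength_nm, work_function_ev=COPPER_WORK_FUNCTION_EV):
    """Maximum kinetic energy of an ejected electron, or None below threshold."""
    surplus = photon_energy_ev(wavelength_nm) - work_function_ev
    return surplus if surplus > 0 else None


if __name__ == "__main__":
    for label, wavelength_nm in [("red", 700.0), ("ultraviolet", 200.0)]:
        print(label, photon_energy_ev(wavelength_nm), max_electron_energy_ev(wavelength_nm))
```

Note that light intensity never appears in the calculation: a brighter red beam delivers more photons, but each one still carries too little energy, which is the point of the peas-and-bowling-ball metaphor.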

In 1969, American physicists suggested that one could actually account for the PE without the concept of photons; thus, the PE did not provide definitive proof of photon existence. However, studies of the statistical properties of the photons in the 1970s provided experimental verification of the manifestly quantum (nonclassical) nature of the electromagnetic field.

SEE ALSO Atomic Theory (1808), Wave Nature of Light (1801), Electron (1897), Special Theory of Relativity (1905), General Theory of Relativity (1915), Compton Effect (1923), Solar Cells (1954), Quantum Electrodynamics (1948).

Photograph through night-vision device. U.S. Army paratroopers train using infrared lasers and night-vision optics in Camp Ramadi, Iraq. Night-vision goggles make use of the ejection of photoelectrons due to the photoelectric effect to amplify the presence of individual photons.

Robert Adams Paterson (1829–1904)

A golfer’s worst nightmare may be the sand trap, but the golfer’s best friends are the dimples on the golf ball that help send it sailing down the fairway. In 1848, Rev. Dr. Robert Adams Paterson invented the gutta-percha golf ball using the dried sap of a sapodilla tree. Golfers began to notice that small scrapes or nicks in the ball actually increased the golf ball’s flight distance, and soon ball makers used hammers to add defects. By 1905, virtually all golf balls were manufactured with dimples.

Today we know that dimpled balls fly farther than smooth ones due to a combination of effects. First, the dimples delay separation of the boundary layer of air that surrounds the ball as it travels. Because the clinging air stays attached longer, it produces a narrower low-pressure wake (disturbed air) that trails behind the ball, reducing drag as compared to a smooth ball. Second, when the golf club strikes the ball, it usually creates a backspin that generates lift via the Magnus effect, in which an increase in the velocity of the air flowing over the ball generates a lower-pressure area at the top of the spinning ball relative to the bottom. The presence of dimples enhances the Magnus effect.

Today, most golf balls have between 250 and 500 dimples that can reduce the amount of drag by as much as half. Research has shown that polygonal shapes with sharp edges, such as hexagons, reduce drag more than smooth dimples. Various ongoing studies employ supercomputers to model air flow in the quest for the perfect dimple design and arrangement.

In the 1970s, Polara golf balls had an asymmetric arrangement of dimples that helped counteract the side spin that causes hooks and slices. However, the United States Golf Association banned the ball from tournament play, ruling it would “reduce the skill required to play golf.” The association also added a symmetry rule, requiring that a ball perform essentially the same regardless of where on the surface it is struck. Polara sued, received $1.4 million, and removed the ball from the market.

SEE ALSO Cannon (1132), Baseball Curveball (1870), Kármán Vortex Street (1911).

Today, most golf balls have between 250 and 500 dimples that can reduce the amount of drag “felt” by the golf ball by as much as half.

Walther Nernst (1864–1941)

The humorist Mark Twain once told the crazy tale of weather so cold that a sailor’s shadow froze to the deck! How cold could the environment really become?

From a classical physics perspective, the Third Law of Thermodynamics states that as a system approaches absolute zero temperature (0 K, −459.67 °F, or −273.15 °C), all processes cease and the entropy of the system approaches a minimum value. Developed by German chemist Walther Nernst around 1905, the law can be stated as follows: As the temperature of a system approaches absolute 0, the entropy, or disorder S, approaches a constant S0. Classically speaking, the entropy of a pure and perfectly crystalline substance would be 0 if the temperature could actually be reduced to absolute zero.

Using a classical analysis, all motion stops at absolute zero. However, quantum mechanical zero-point motion allows systems in their lowest possible energy state (i.e. ground state) to have a probability of being found over extended regions of space. Thus, two atoms bonded together are not separated by some unvarying distance but can be thought of as undergoing rapid vibration with respect to each other, even at absolute zero. Instead of stating that the atom is motionless, we say that it is in a state from which no further energy can be removed; the energy remaining is called zero-point energy.

The term zero-point motion is used by physicists to describe the fact that atoms in a solid, even a super-cold solid, do not remain at exact geometric lattice points; rather, a probability distribution exists for both their positions and momenta. Amazingly, scientists have been able to achieve the temperature of 100 picokelvins (0.0000000001 degrees above absolute zero) by cooling a piece of rhodium metal.

It is impossible to cool a body to absolute zero by any finite process. According to physicist James Trefil, “No matter how clever we get, the third law tells us that we can never cross the final barrier separating us from absolute zero.”

SEE ALSO Heisenberg Uncertainty Principle (1927), Second Law of Thermodynamics (1850), Conservation of Energy (1843), Casimir Effect (1948).

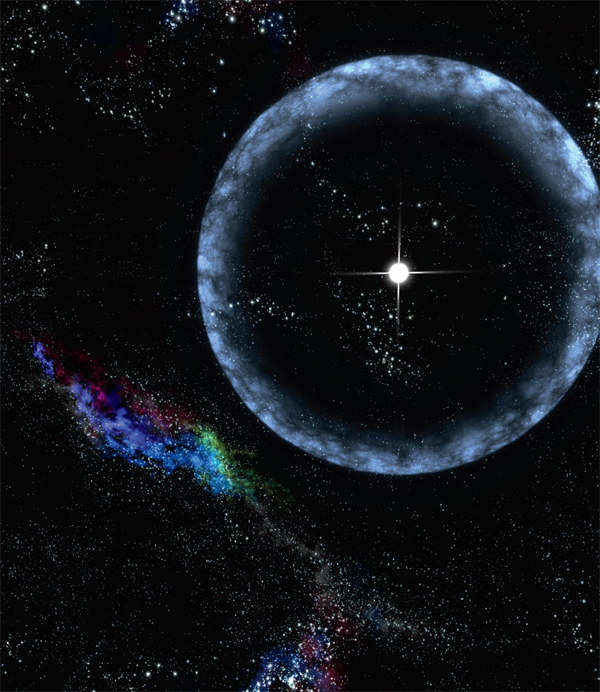

The rapid expansion of gas blowing from an aging central star in the Boomerang Nebula has cooled molecules in the nebular gas to about one degree above absolute zero, making it the coldest region observed in the distant Universe.

Lee De Forest (1873–1961)

In his Nobel Prize lecture on December 8, 2000, American engineer Jack Kilby noted, “The invention of the vacuum tube launched the electronics industry…. These devices controlled the flow of electrons in a vacuum [and] were initially used to amplify signals for audio and other devices. This allowed broadcast radio to reach the masses in the 1920s. Vacuum tubes steadily spread into other devices, and the first tube was used as a switch in calculating machines in 1939.”

In 1883, the inventor Thomas Edison noticed that electrical current could “jump” from a hot filament to a metal plate in an experimental Incandescent Light Bulb. This primitive version of a vacuum tube eventually led to American inventor Lee De Forest’s 1906 creation of the triode, which not only forces current in a single direction but can be used as an amplifier for signals such as audio and radio signals. He had placed a metal grid in his tube, and by using a small current to change the voltage on the grid, he controlled the flow of the second, larger current through the tube. Bell Labs was able to make use of this feature in its “coast to coast” phone system, and the tubes were soon used in other devices, such as radios. Vacuum tubes can also convert AC to DC current and generate oscillating radio-frequency power for radar systems. De Forest’s tubes were not evacuated, but the introduction of a strong vacuum was shown to help create a useful amplifying device.

Transistors were invented in 1947, and in the following decade assumed most of the amplifying applications of tubes at much lower cost and with greater reliability. Early computers used tubes. For example, ENIAC, the first electronic, reprogrammable, digital computer that could be used to solve a large range of computing problems, was unveiled in 1946 and contained over 17,000 vacuum tubes. Since tube failures were more likely to occur during warm-up periods, the machine was rarely turned off, reducing tube failures to one tube every two days.

SEE ALSO Incandescent Light Bulb (1878), Transistor (1947).

RCA 808 power vacuum tube. The invention of the vacuum tube launched the electronics industry and allowed broadcast radio to reach the public in the 1920s.

Johannes (Hans) Wilhelm (Gengar) Geiger (1882–1945), Walther Müller (1905–1979)

During the Cold War in the 1950s, American contractors offered deluxe backyard fallout shelters equipped with bunk beds, a telephone, and a Geiger counter for detecting radiation. Science-fiction movies of the era featured huge radiation-created monsters—and ominously clicking Geiger counters.

Since the early 1900s, scientists have sought technologies for detecting Radioactivity, which is caused by particles emitted from unstable Atomic Nuclei. One of the most important detection devices was the Geiger counter, first developed by Hans Geiger in 1908 and subsequently improved by Geiger and Walther Müller in 1928. The Geiger counter consists of a central wire within a sealed metal cylinder with a mica or glass window at one end. The wire and metal tube are connected to a high-voltage power source outside the cylinder. When radiation passes through the window, it creates a trail of ion pairs (charged particles) in the gas within the tube. The positive ions of the pairs are attracted to the negatively charged cylinder walls (cathode). The negatively charged electrons are attracted to the central wire, or anode, and a slight detectable voltage drop occurs across the anode and cathode. Most detectors convert these pulses of current to audible clicks. Unfortunately, however, Geiger counters do not provide information about the kind of radiation and energy of the particles detected.

Over the years, improvements in radiation detectors have been made, including ionization counters and proportional counters that can be used to identify the kind of radiation impinging on the device. With the presence of boron trifluoride gas in the cylinder, the Geiger-Müller counter can also be modified so that it is sensitive to radiation that does not ionize the gas directly, such as neutrons. The reaction of neutrons and boron nuclei generates alpha particles (positively charged helium nuclei), which are detected like other positively charged particles.

Geiger counters are inexpensive, portable, and sturdy. They are frequently used in geophysics, nuclear physics, and medical therapies, as well as in settings in which potential radiation leaks can occur.

SEE ALSO Radioactivity (1896), Wilson Cloud Chamber (1911), Atomic Nucleus (1911), Schrödinger’s Cat (1935).

Geiger counters may produce clicking noises to indicate radiation and also contain a dial to show how much radiation is present. Geologists sometimes locate radioactive minerals by using Geiger counters.

Wilhelm Conrad Röntgen (1845–1923), Nikola Tesla (1856–1943), Arnold Johannes Wilhelm Sommerfeld (1868–1951)

Bremsstrahlung, or “braking radiation,” refers to X-ray or other electromagnetic radiation that is produced when a charged particle, such as an electron, suddenly slows its velocity in response to the strong electric fields of Atomic Nuclei. Bremsstrahlung is observed in many areas of physics, ranging from materials science to astrophysics.

Consider the example of X-rays emitted from a metal target that is bombarded by high-energy electrons (HEEs) in an X-ray tube. When the HEEs collide with the target, electrons from the target are knocked out of the inner energy levels of the target atoms. Other electrons may fall into these vacancies, and X-ray photons are then emitted with wavelengths characteristic of the energy differences between the various levels within the target atoms. This radiation is called the characteristic X-rays.

Another type of X-ray emitted from this metal target is bremsstrahlung, as the electrons are suddenly slowed upon impact with the target. In fact, any accelerating or decelerating charge emits bremsstrahlung radiation. Since the rate of deceleration may be very large, the emitted radiation may have short wavelengths in the X-ray spectrum. Unlike the characteristic X-rays, bremsstrahlung radiation has a continuous range of wavelengths because the decelerations can occur in many ways—from near head-on impacts with nuclei to multiple deflections by positively charged nuclei.

Even though physicist Wilhelm Röntgen discovered X-rays in 1895 and Nikola Tesla began observing them even earlier, the separate study of the characteristic line spectrum and the superimposed continuous bremsstrahlung spectrum did not start until years later. The physicist Arnold Sommerfeld coined the term bremsstrahlung in 1909.

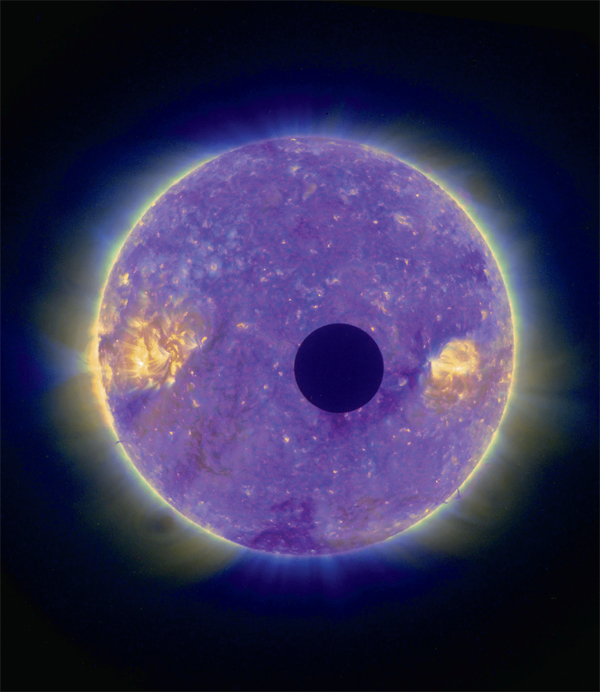

Bremsstrahlung is everywhere in the universe. Cosmic rays lose some energy in the Earth’s atmosphere after colliding with atomic nuclei, slowing, and producing bremsstrahlung. Solar X-rays result from the deceleration of fast electrons in the Sun as they pass through the Sun’s atmosphere. Additionally, when beta decay (a kind of radioactive decay that emits electrons or positrons, referred to as beta particles) occurs, the beta particles can be deflected by one of their own nuclei and emit internal bremsstrahlung.

SEE ALSO Fraunhofer Lines (1814), X-rays (1895), Cosmic Rays (1910), Atomic Nucleus (1911), Compton Effect (1923), Cherenkov Radiation (1934).

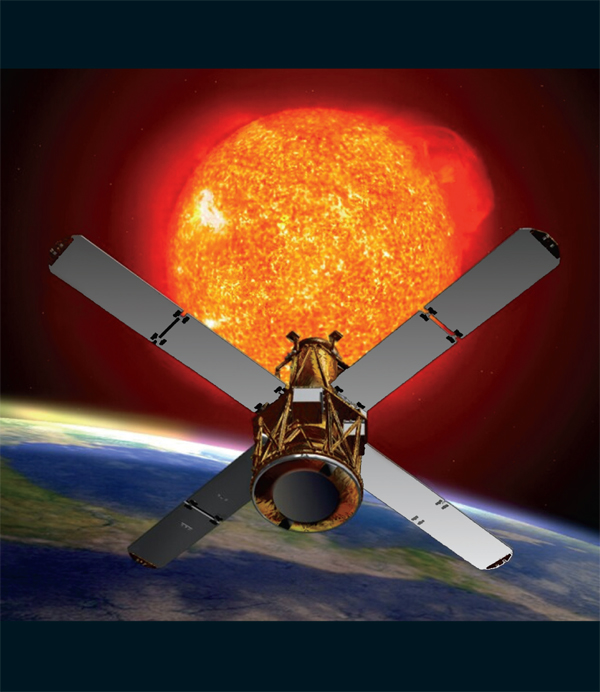

Large solar flares produce an X-ray and gamma-ray continuum of radiation partly due to the bremsstrahlung effect. Shown here is the NASA RHESSI spacecraft, launched in 2002, as it watches the Sun for X-rays and gamma rays.

Theodor Wulf (1868–1946), Victor Francis Hess (1883–1964)

“The history of cosmic ray research is a story of scientific adventure,” write the scientists at the Pierre Auger Cosmic Ray Observatory. “For nearly a century, cosmic ray researchers have climbed mountains, ridden hot air balloons, and traveled to the far corners of the earth in the quest to understand these fast-moving particles from space.”

Nearly 90 percent of the energetic cosmic-ray particles bombarding the Earth are protons, with the remainder being helium nuclei (alpha particles), electrons, and a small amount of heavier nuclei. The variety of particle energies suggests that cosmic rays have sources ranging from the Sun’s solar flares to galactic cosmic rays that stream to the Earth from beyond the solar system. When cosmic ray particles enter the atmosphere of the Earth, they collide with oxygen and nitrogen molecules to produce a “shower” of numerous lighter particles.

Cosmic rays were discovered in 1910 by German physicist and Jesuit priest Theodor Wulf, who used an electrometer (a device for detecting energetic charged particles) to monitor radiation near the bottom and top of the Eiffel Tower. If radiation sources were on the ground, he would have detected less radiation as he moved away from the Earth. To his surprise, he found that the level of radiation at the top was larger than what would be expected if it were from terrestrial radioactivity. In 1912, Austrian-American physicist Victor Hess carried detectors in a balloon to an altitude of 17,300 feet (5,300 meters) and found that the radiation increased to a level four times that at the ground.

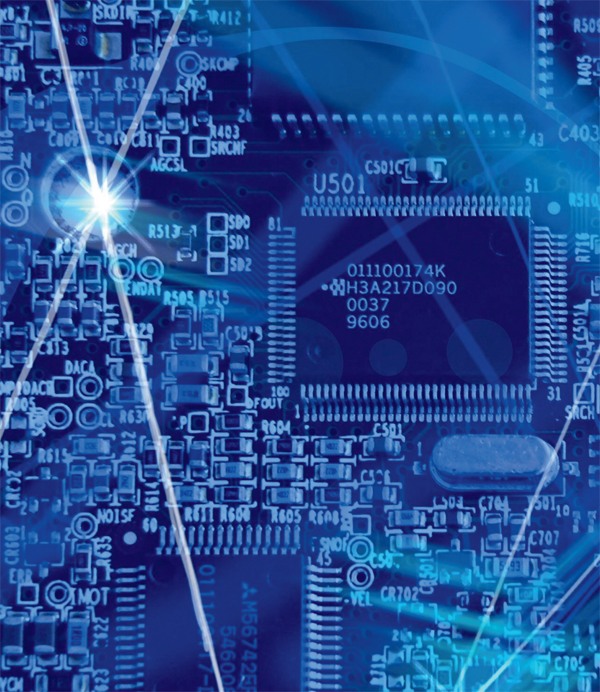

Cosmic rays have been creatively referred to as “killer rays from outer space” because they may contribute to more than 100,000 deaths from cancer each year. They also have sufficient energy to damage electronic Integrated Circuits and may alter data stored in the memory of a computer. The highest-energy cosmic-ray events are quite curious, because their arrival directions do not always suggest a particular source; however, supernovae (exploding stars) and stellar winds from massive stars are probably accelerators of cosmic-ray particles.

SEE ALSO Bremsstrahlung (1909), Integrated Circuit (1958), Gamma Ray Bursts (1967), Tachyons (1967).

Cosmic rays may have deleterious effects on components of electronic integrated circuits. For example, studies in the 1990s suggested that roughly one cosmic-ray-induced error occurred for 256 megabytes of RAM (computer memory) every month.
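To get a feel for the figure quoted above, the following sketch scales it to other memory sizes. It rests on a loud assumption that is not in the text: that the error count grows in simple proportion to the amount of memory and to elapsed time. Modern memory chips differ from those of the 1990s, so the output is an illustration of the arithmetic and not a prediction.

```python
def expected_errors(memory_megabytes, months, errors_per_256mb_month=1.0):
    """Estimated cosmic-ray-induced errors.

    ASSUMPTION: errors scale linearly with memory size and with time,
    starting from the 1990s figure of about one error per 256 MB per month.
    """
    return errors_per_256mb_month * (memory_megabytes / 256.0) * months


if __name__ == "__main__":
    # 16 GB expressed in megabytes, observed for one month.
    print(expected_errors(16 * 1024, months=1))
```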

Heike Kamerlingh Onnes (1853–1926), John Bardeen (1908–1991), Karl Alexander Müller (b. 1927), Leon N. Cooper (b. 1930), John Robert Schrieffer (b. 1931), Johannes Georg Bednorz (b. 1950)

“At very low temperatures,” writes science-journalist Joanne Baker, “some metals and alloys conduct electricity without any resistance. The current in these superconductors can flow for billions of years without losing any energy. As electrons become coupled and all move together, avoiding the collisions that cause electrical resistance, they approach a state of perpetual motion.”

In fact, many metals exist for which the resistivity is zero when they are cooled below a critical temperature. This phenomenon, called superconductivity, was discovered in 1911 by Dutch physicist Heike Onnes, who observed that when he cooled a sample of mercury to 4.2 degrees above absolute zero (−452.1 °F), its electrical resistance plunged to zero. In principle, this means that an electrical current can flow around a loop of superconducting wire forever, with no external power source. In 1957, American physicists John Bardeen, Leon Cooper, and Robert Schrieffer determined how electrons could form pairs and appear to ignore the metal around them: Consider a metal window screen as a metaphor for the arrangement of positively charged atomic nuclei in a metal lattice. Next, imagine a negatively charged electron zipping between the atoms, creating a distortion by pulling on them. This distortion attracts a second electron to follow the first; they travel together in a pair, and encounter less resistance overall.

In 1986, Georg Bednorz and Alex Müller discovered a material that operated at the higher temperature of roughly −396 °F (35 kelvins), and in 1987 a different material was found to superconduct at −297 °F (90 kelvins). If a superconductor is discovered that operates at room temperature, it could be used to save vast amounts of energy and to create a high-performance electrical power transmission system. Superconductors also expel all applied magnetic fields, which allows engineers to build magnetically levitated trains. Superconductivity is also used to create powerful electromagnets in MRI (magnetic-resonance imaging) scanners in hospitals.

SEE ALSO Discovery of Helium (1868), Third Law of Thermodynamics (1905), Superfluids (1937), Nuclear Magnetic Resonance (1938).

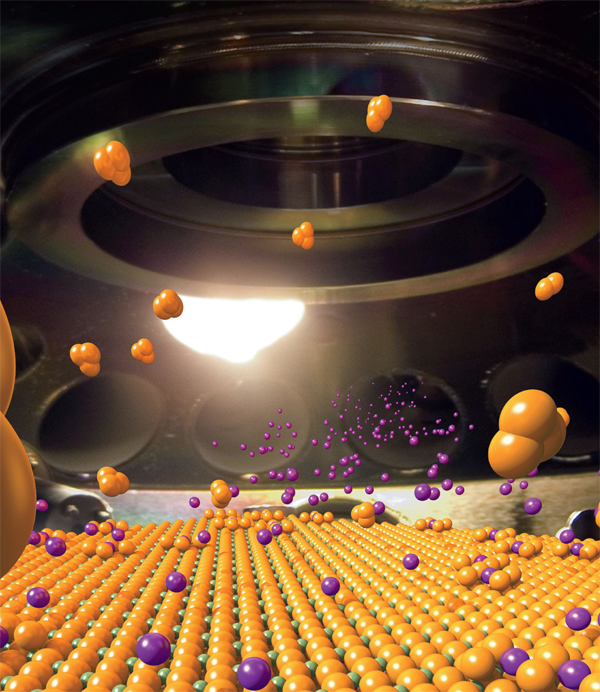

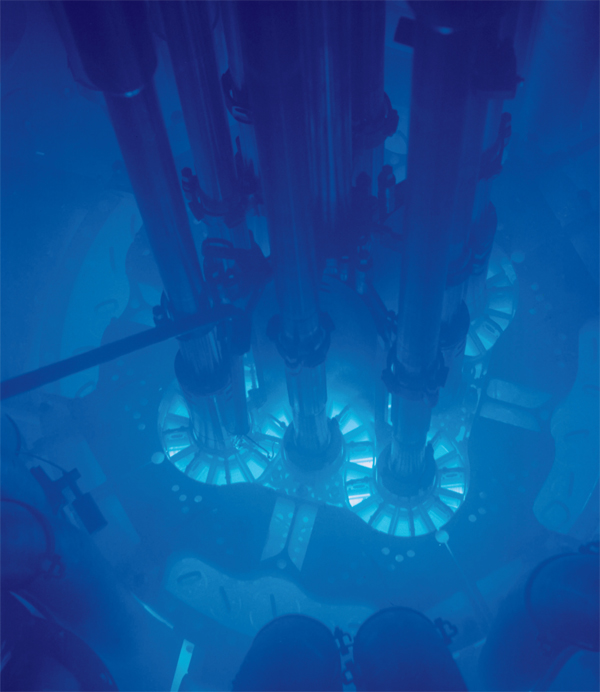

In 2008, physicists at the U.S. Department of Energy’s Brookhaven National Laboratory discovered interface high-temperature superconductivity in bilayer films of two cuprate materials, with potential for creating higher-efficiency electronic devices. In this artistic rendition, these thin films are built layer by layer.

Ernest Rutherford (1871–1937)

Today, we know that the atomic nucleus, which consists of protons and neutrons, is the very dense region at the center of an atom. However, in the first decade of the 1900s, scientists were unaware of the nucleus and thought of the atom as a diffuse web of positively charged material, in which negatively charged electrons were embedded like cherries in a cake. This model was utterly destroyed when Ernest Rutherford and his colleagues discovered the nucleus after they fired a beam of alpha particles at a thin sheet of gold foil. Most of the alpha particles (which we know today as helium nuclei) went through the foil, but a few bounced straight back. Rutherford said later that this was “quite the most incredible event that has ever happened to me…. It was almost as incredible as if you fired a 15-inch shell at a piece of tissue paper and it came back and hit you.”

The cherry-cake model of the atom, which was a metaphor for a somewhat uniform spread of density across the gold foil, could never account for this behavior. Scientists would have observed the alpha particles slow down, perhaps, like a bullet being fired through water. They did not expect the atom to have a “hard center” like a pit in a peach. In 1911, Rutherford announced a model with which we are familiar today: an atom consisting of a positively charged nucleus encircled by electrons. Given the frequency of collisions with the nucleus, Rutherford could approximate the size of the nucleus with respect to the size of the atom. Author John Gribbin writes that the nucleus has “one hundred-thousandth of the diameter of the whole atom, equivalent to the size of a pinhead compared with the dome of St. Paul’s cathedral in London…. And since everything on Earth is made of atoms, that means that your own body, and the chair you sit on, are each made up of a million billion times more empty space than ‘solid matter.’”
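The step from “one hundred-thousandth of the diameter” to “a million billion times more empty space” is a matter of cubing, since volume scales with the cube of diameter. A small sketch of the arithmetic, treating both the atom and the nucleus as simple spheres (an idealization, not a claim about real atoms):

```python
def volume_ratio(diameter_ratio):
    """Ratio of two sphere volumes given the ratio of their diameters."""
    return diameter_ratio ** 3


# The atom is taken to be 100,000 times wider than its nucleus.
ATOM_TO_NUCLEUS_DIAMETER = 100_000
MILLION_BILLION = 1_000_000 * 1_000_000_000

if __name__ == "__main__":
    ratio = volume_ratio(ATOM_TO_NUCLEUS_DIAMETER)
    print(ratio, ratio == MILLION_BILLION)
```

Integers are used so that the comparison is exact.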

SEE ALSO Atomic Theory (1808), Electron (1897), E = mc2 (1905), Bohr Atom (1913), Cyclotron (1929), Neutron (1932), Nuclear Magnetic Resonance (1938), Energy from the Nucleus (1942), Stellar Nucleosynthesis (1946).

Artistic rendition of a classical model of the atom with its central nucleus. Only some of the nucleons (protons and neutrons) and electrons are seen in this view. In an actual atom, the diameter of the nucleus is very much smaller than the diameter of the entire atom. Modern depictions of the surrounding electrons often depict them as clouds that represent probability densities.

Henri Bénard (1874–1939), Theodore von Kármán (1881–1963)

One of the most visually beautiful phenomena in physics can also be among the most dangerous. Kármán vortex streets are a repeating set of swirling vortices caused by the unsteady separation of fluid flow over “bluff bodies”—that is, blunt bodies such as cylindrical shapes, airfoils at large angles of attack, or the shapes of some reentry vehicles. Author Iris Chang describes the phenomena researched by Hungarian physicist Theodore von Kármán, which included his “1911 discovery through mathematical analysis of the existence of a source of aerodynamical drag that occurs when the airstream breaks away from the airfoil and spirals off the sides in two parallel streams of vortices. A phenomena, now known as the Kármán Vortex Street, was used for decades to explain the oscillations of submarines, radio towers, power lines….”

Various methods can be used to decrease unwanted vibrations from essentially cylindrical objects such as industrial chimneys, car antennas, and submarine periscopes. One such method employs helical (screw-like) projections that reduce the alternate shedding of vortices. The vortices can cause towers to collapse and are also undesirable because they are a significant source of wind resistance in cars and airplanes.

These vortices are sometimes observed in rivers downstream from columns supporting a bridge or in the small, circular motions of leaves on the ground when a car travels down the street. Some of their most beautiful manifestations occur in cloud configurations that form in the wake of giant obstacles on the Earth, such as tall volcanic islands. Similar vortices may help scientists study weather on other planets.

Von Kármán referred to his theory as “the theory whose name I am honored to bear,” because, according to author István Hargittai, “he considered the discovery to be more important than the discoverer. When about twenty years later, a French scientist Henri Bénard claimed priority for the discovery of vortex streets, von Kármán did not protest. Rather, with characteristic humor he suggested that the term Kármán Vortex Streets be used in London and Boulevard d’Henri Bénard in Paris.”

SEE ALSO Bernoulli’s Law of Fluid Dynamics (1738), Baseball Curveball (1870), Golf Ball Dimples (1905), Fastest Tornado Speed (1999).

Kármán Vortex Street in a Landsat 7 image of clouds off the Chilean coast near the Juan Fernández Islands. A study of these patterns is useful for understanding flows involved in phenomena ranging from aircraft wing behavior to the Earth’s weather.

Charles Thomson Rees Wilson (1869–1959), Alexander Langsdorf (1912–1996), Donald Arthur Glaser (b. 1926)

At his Nobel Banquet in 1927, physicist Charles Wilson described his affection for cloudy Scottish hill-tops: “Morning after morning, I saw the sun rise above a sea of clouds and the shadow of the hills on the clouds below surrounded by gorgeous colored rings. The beauty of what I saw made me fall in love with clouds….” Who would have guessed that Wilson’s passion for mists would have been used to reveal the first form of Antimatter (the positron)—along with other particles—and change the world of particle physics forever?

Wilson perfected his first cloud chamber in 1911. First, the chamber was saturated with water vapor. Next, the pressure was lowered by the movement of a diaphragm, which expanded the air inside. This also cooled the air, creating conditions favorable to condensation. When an ionizing particle passed through the chamber, water vapor condensed on the resulting ion (charged particle), and a trail of the particle became visible in the vapor cloud. For example, when an alpha particle (a helium nucleus, which has a positive charge) moved through the cloud chamber, it tore off electrons from atoms in the gas, temporarily leaving behind charged atoms. Water vapor tended to accumulate on such ions, leaving behind a thin band of fog reminiscent of smoke-writing airplanes. If a uniform magnetic field was applied to the cloud chamber, positively and negatively charged particles curved in opposite directions. The radius of curvature could then be used to determine the momentum of the particle.
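The link between track curvature and momentum can be written as p = |q|Br, where q is the particle's charge, B is the magnetic field strength, and r is the radius of the curved track. The sketch below applies that relation under the assumption that the particle moves at right angles to a uniform field; the function names and example numbers are illustrative only.

```python
ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs (exact SI value)
SPEED_OF_LIGHT = 2.99792458e8         # m/s (exact SI value)


def momentum_si(charge_in_e, field_tesla, radius_m):
    """Momentum in kg*m/s from p = |q| * B * r.

    ASSUMPTION: the track lies in a plane perpendicular to a uniform field.
    """
    return abs(charge_in_e) * ELEMENTARY_CHARGE * field_tesla * radius_m


def momentum_mev_per_c(charge_in_e, field_tesla, radius_m):
    """The same momentum in MeV/c, the unit particle physicists usually quote."""
    p = momentum_si(charge_in_e, field_tesla, radius_m)
    return p * SPEED_OF_LIGHT / ELEMENTARY_CHARGE / 1e6


if __name__ == "__main__":
    # A singly charged particle curving with a 0.5 m radius in a 1 tesla field.
    print(momentum_mev_per_c(charge_in_e=1, field_tesla=1.0, radius_m=0.5))
```

The sign of the charge does not change the momentum; it only decides which way the track bends, which is how positively and negatively charged particles can be told apart.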

The cloud chamber was just the start. In 1936, physicist Alexander Langsdorf developed the diffusion cloud chamber, which employed colder temperatures and was sensitized for radiation detection for longer periods of time than traditional cloud chambers. In 1952, physicist Donald Glaser invented the bubble chamber. This chamber employed liquids, which are better able to reveal the tracks of more energetic particles than the gases in traditional cloud chambers. More recent spark chambers employ a grid of electric wires to monitor charged particles by the detection of sparks.

SEE ALSO Geiger Counter (1908), Antimatter (1932), Neutron (1932).

In 1963, this Brookhaven National Laboratory bubble chamber was the largest particle detector of its type in the world. The most famous discovery made at this detector was of the omega-minus particle.

Cepheid Variables Measure the Universe

Henrietta Swan Leavitt (1868–1921)

The poet John Keats once wrote “Bright star, were I as steadfast as thou art,” not realizing that some stars brighten and dim with periods ranging from days to weeks. Cepheid variable stars have periods (the time for one cycle of dimness and brightness) that increase with the stars’ luminosity. Once a star’s luminosity is known from its period, a simple distance formula that compares this luminosity with the star’s apparent brightness can be used to estimate interstellar and intergalactic distances. The American astronomer Henrietta Leavitt discovered the relationship between period and luminosity in Cepheid variables, thus making her perhaps the first to discover how to calculate the distance from the Earth to galaxies beyond the Milky Way. In 1902, Leavitt became a permanent staff member of the Harvard College Observatory and spent her time studying photographic plates of variable stars in the Magellanic Clouds. In 1904, using a time-consuming process called superposition, she discovered hundreds of variables in the Magellanic Clouds. These discoveries led Professor Charles Young of Princeton University to write, “What a variable-star ‘fiend’ Miss Leavitt is; one can’t keep up with the roll of the new discoveries.”
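A rough sketch of how a period becomes a distance follows. It uses the standard distance-modulus formula, m − M = 5 log10(d / 10 parsecs), where m is the apparent magnitude and M the absolute magnitude. The period-luminosity coefficients in the code are illustrative assumptions of roughly the size found in modern visual-band calibrations; they are not from the text, and the sketch ignores dimming by interstellar dust.

```python
import math

# ASSUMPTION: illustrative period-luminosity coefficients (visual band).
# Real calibrations differ by band and by study; treat these as placeholders.
PL_SLOPE = -2.43
PL_ZERO_POINT = -4.05   # absolute magnitude assumed for a 10-day period


def absolute_magnitude(period_days):
    """Absolute magnitude from the period via a linear relation in log10(period)."""
    return PL_SLOPE * (math.log10(period_days) - 1.0) + PL_ZERO_POINT


def distance_parsecs(apparent_magnitude, absolute_mag):
    """Distance from the distance modulus: d = 10 ** ((m - M + 5) / 5) parsecs."""
    return 10 ** ((apparent_magnitude - absolute_mag + 5.0) / 5.0)


if __name__ == "__main__":
    # Hypothetical Cepheid: 30-day period, observed at apparent magnitude 25.
    m_abs = absolute_magnitude(30.0)
    print(m_abs, distance_parsecs(25.0, m_abs))
```

Longer periods give more negative (brighter) absolute magnitudes, so a long-period Cepheid that looks faint must be very far away.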

Henrietta Leavitt.

Leavitt’s greatest discovery occurred when she determined the actual periods of 25 Cepheid variables, and in 1912 she wrote of the famous period-luminosity relation: “A straight line can be readily drawn among each of the two series of points corresponding to maxima and minima, thus showing that there is a simple relation between the brightness of the variables and their periods.” Leavitt also realized that “since the variables are probably nearly the same distance from the earth, their periods are apparently associated with their actual emission of light, as determined by their mass, density, and surface brightness.” Sadly, she died young of cancer before her work was complete. In 1925, completely unaware of her death, Professor Mittag-Leffler of the Swedish Academy of Sciences sent her a letter, expressing his intent to nominate her for the Nobel Prize in Physics. However, since Nobel Prizes are never awarded posthumously, Leavitt was not eligible for the honor.

SEE ALSO Eratosthenes Measures the Earth (240 B.C.), Measuring the Solar System (1672), Stellar Parallax (1838), Hubble Telescope (1990).

Hubble Space Telescope image of spiral galaxy NGC 1309. Scientists can accurately determine the distance between the Earth and the galaxy (100 million light-years, or 30 megaparsecs) by examining the light output of the galaxy’s Cepheid variables.

Bragg’s Law of Crystal Diffraction

William Henry Bragg (1862–1942), William Lawrence Bragg (1890–1971)

“I was captured for life by chemistry and by crystals,” wrote X-ray crystallographer Dorothy Crowfoot Hodgkin, whose research depended on Bragg’s Law. Discovered by the English physicists Sir W. H. Bragg and his son Sir W. L. Bragg in 1912, Bragg’s Law explains the results of experiments involving the diffraction of electromagnetic waves from crystal surfaces. Bragg’s Law provides a powerful tool for studying crystal structure. For example, when X-rays are aimed at a crystal surface, they interact with atoms in the crystal, causing the atoms to re-radiate waves that may interfere with one another. The interference is constructive (reinforcing) for integer values of n according to Bragg’s Law: nλ = 2d sin(θ). Here, λ is the wavelength of the incident electromagnetic waves (e.g. X-rays); d is the spacing between the planes in the atomic lattice of the crystal; and θ is the angle between the incident ray and the scattering planes.

For example, X-rays travel down through crystal layers, reflect, and travel back over the same distance before leaving the surface. The distance traveled depends on the separation of the layers and the angle at which the X-ray entered the material. For maximum intensity of reflected waves, the waves must stay in phase to produce the constructive interferences. Two waves stay in phase, after both are reflected, when n is a whole number. For example, when n = 1, we have a “first order” reflection. For n = 2, we have a “second order” reflection. If only two rows were involved in the diffraction, as the value of θ changes, the transition from constructive to destructive interference is gradual. However, if interference from many rows occurs, then the constructive interference peaks become sharp, with mostly destructive interference occurring in between the peaks.

Bragg’s Law can be used for calculating the spacing between atomic planes of crystals and for measuring the radiation’s wavelength. The observations of X-ray wave interference in crystals, commonly known as X-ray diffraction, provided direct evidence for the periodic atomic structure of crystals that was postulated for several centuries.
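
As a rough illustration of how nλ = 2d sin(θ) is used in practice, the following Python sketch solves the law in both directions: for the angles at which reflections appear, and for the plane spacing given a measured angle. The wavelength (copper K-alpha X-rays) and the spacing (roughly that of rock salt) are assumed inputs chosen for illustration, and the function names are hypothetical.

```python
import math

def bragg_spacing(wavelength_m, theta_deg, order=1):
    """Solve n*lambda = 2*d*sin(theta) for the plane spacing d (meters)."""
    return order * wavelength_m / (2.0 * math.sin(math.radians(theta_deg)))

def bragg_angles(wavelength_m, spacing_m):
    """Return (n, theta in degrees) for every order n that satisfies Bragg's Law."""
    results = []
    n = 1
    while True:
        sin_theta = n * wavelength_m / (2.0 * spacing_m)
        if sin_theta > 1.0:  # no real angle exists for this order
            break
        results.append((n, math.degrees(math.asin(sin_theta))))
        n += 1
    return results

# Assumed illustrative inputs (not taken from the text):
WAVELENGTH = 1.5406e-10  # m, copper K-alpha X-rays
SPACING = 2.82e-10       # m, roughly the plane spacing in rock salt

angles = bragg_angles(WAVELENGTH, SPACING)
for n, theta in angles:
    print(f"order n={n}: theta = {theta:.2f} degrees")

# Going the other way: recover the spacing from the first-order angle.
print(f"recovered d = {bragg_spacing(WAVELENGTH, angles[0][1]):.3e} m")
```

With these inputs only a few orders are possible, because sin(θ) cannot exceed 1. This is why longer wavelengths or smaller spacings yield fewer reflections.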

Copper sulfate. In 1912, physicist Max von Laue used X-rays to record a diffraction pattern from a copper sulfate crystal, which revealed many well-defined spots. Prior to X-ray experiments, the spacing between atomic lattice planes in crystals was not accurately known.

SEE ALSO Wave Nature of Light (1801), X-rays (1895), Hologram (1947), Seeing the Single Atom (1955), Quasicrystals (1982).

Bragg’s Law eventually led to studies involving the X-ray scattering from crystal structures of large molecules such as DNA.

Niels Henrik David Bohr (1885–1962)

“Somebody once said about the Greek language that Greek flies in the writings of Homer,” writes physicist Amit Goswami. “The quantum idea started flying with the work of Danish physicist Niels Bohr published in the year 1913.” Bohr knew that negatively charged electrons are easily removed from atoms and that the positively charged nucleus occupied the central portion of the atom. In the Bohr model of the atom, the nucleus was considered to be like our central Sun, with electrons orbiting like planets.

Such a simple model was bound to have problems. For example, an electron orbiting a nucleus would be expected to emit electromagnetic radiation. As the electron lost energy, it should decay and fall into the nucleus. In order to avoid atomic collapse as well as to explain various aspects of the emission spectra of the hydrogen atom, Bohr postulated that the electrons could not be in orbits with an arbitrary distance from the nucleus. Rather, they were restricted to particular allowed orbits or shells. Just like climbing or descending a ladder, the electron could jump to a higher rung, or shell, when the electron received an energy boost, or it could fall to a lower shell, if one existed. This hopping between shells takes place only when a photon of the particular energy is absorbed or emitted from the atom. Today we know that the model has many shortcomings and does not work for larger atoms, and it violates the Heisenberg Uncertainty Principle because the model employs electrons with a definite mass and velocity in orbits with definite radii.
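
The ladder picture can be made concrete with a small Python sketch. It assumes the standard hydrogen result that the allowed energies are −13.6 eV divided by n², a formula not stated in the text above, and computes the wavelength of the photon emitted when the electron drops between rungs. The constant values and function names are illustrative.

```python
RYDBERG_ENERGY_EV = 13.6057  # assumed: hydrogen ground-state binding energy, eV
HC_EV_NM = 1239.842          # Planck's constant times the speed of light, eV*nm

def level_energy_ev(n):
    """Energy of the n-th allowed orbit of hydrogen in the Bohr model (eV)."""
    return -RYDBERG_ENERGY_EV / n**2

def photon_wavelength_nm(n_upper, n_lower):
    """Wavelength of the photon emitted when the electron falls n_upper -> n_lower."""
    delta_e = level_energy_ev(n_upper) - level_energy_ev(n_lower)  # positive, in eV
    return HC_EV_NM / delta_e

# Drops onto the second rung give visible lines of the hydrogen spectrum.
for n in (3, 4, 5):
    print(f"{n} -> 2: {photon_wavelength_nm(n, 2):.1f} nm")
```

The 3 → 2 drop lands near 656 nm, the familiar red line of hydrogen, which is the kind of spectral regularity Bohr set out to explain.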

Physicist James Trefil writes, “Today, instead of thinking of electrons as microscopic planets circling a nucleus, we now see them as probability waves sloshing around the orbits like water in some kind of doughnut-shaped tidal pool governed by Schrödinger’s Equation …. Nevertheless, the basic picture of the modern quantum mechanical atoms was painted back in 1913, when Niels Bohr had his great insight.” Matrix mechanics—the first complete definition of quantum mechanics—later replaced the Bohr Model and better described the observed transitions in energy states of atoms.

SEE ALSO Electron (1897), Atomic Nucleus (1911), Pauli Exclusion Principle (1925), Schrödinger’s Wave Equation (1926), Heisenberg Uncertainty Principle (1927).

These amphitheater seats in Ohrid, Macedonia, are a metaphor for Bohr’s electron orbits. According to Bohr, electrons could not be in orbits with an arbitrary distance from the nucleus; rather, electrons were restricted to particular allowed shells associated with discrete energy levels.

Robert A. Millikan (1868–1953)

In his 1923 Nobel Prize address, American physicist Robert Millikan assured the world that he had detected individual electrons. “He who has seen that experiment,” Millikan said, referring to his oil-drop research, “has literally seen the electron.” In the early 1900s, in order to measure the electric charge on a single electron, Millikan sprayed a fine mist of oil droplets into a chamber that had a metal plate at top and bottom with a voltage applied between them. Because some of the oil droplets acquired electrons through friction with the sprayer nozzle, they were attracted to the positive plate. The mass of a single charged droplet can be calculated by observing how fast it falls. In fact, if Millikan adjusted the voltage between the metal plates, the charged droplet could be held stationary between the plates. The amount of voltage needed to suspend a droplet, along with knowledge of the droplet’s mass, was used to determine the overall electric charge on the droplet. By performing this experiment many times, Millikan determined that the charged droplets did not acquire a continuous range of possible values for the charge but rather exhibited charges that were whole-number multiples of a lowest value, which he declared was the charge on an individual electron (about 1.592 × 10⁻¹⁹ coulomb). Millikan’s experiment required extreme effort, and his value was slightly less than the actual value 1.602 × 10⁻¹⁹ accepted today because he had used an incorrect value for the viscosity of air in his measurements.
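
The balancing step can be sketched in a few lines of Python. A droplet hangs motionless when the upward electric force qV/d equals its weight mg, so q = mgd/V. The droplet mass, plate separation, and voltage below are hypothetical numbers chosen for illustration, not Millikan's data.

```python
G = 9.81                              # m/s^2, gravitational acceleration
ELEMENTARY_CHARGE = 1.602176634e-19   # C, modern accepted value

def droplet_charge(mass_kg, plate_gap_m, balance_voltage_v):
    """Charge on a droplet held stationary: q * (V / d) = m * g."""
    return mass_kg * G * plate_gap_m / balance_voltage_v

# Hypothetical measurement (assumed values for illustration only):
mass = 1.53e-14    # kg, inferred from how fast the droplet falls
gap = 0.016        # m, separation of the two metal plates
voltage = 5000.0   # V, voltage that holds the droplet stationary

q = droplet_charge(mass, gap, voltage)
multiple = q / ELEMENTARY_CHARGE
print(f"q = {q:.3e} C, or about {multiple:.2f} elementary charges")
print(f"nearest whole number of electrons: {round(multiple)}")
```

Repeating this for many droplets and finding that the ratio always sits near a whole number is the essence of the argument that charge is quantized.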

Authors Paul Tipler and Ralph Llewellyn write, “Millikan’s measurement of the charge on the electron is one of the few truly crucial experiments in physics and … one whose simple directness serves as a standard against which to compare others…. Note that, while we have been able to measure the value of the quantized electric charge, there is no hint in any of the above as to why it has this value, nor do we know the answer to that question now.”

SEE ALSO Coulomb’s Law of Electrostatics (1785), Electron (1897).

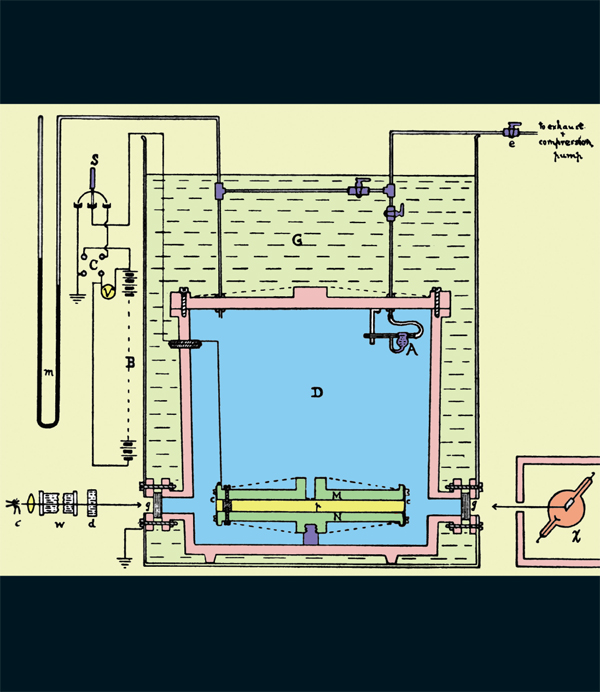

Figure from Millikan’s 1913 paper on his experiment. The atomizer (A) introduced oil droplets into chamber D. A voltage was maintained between parallel plates M and N. Droplets between M and N were observed.

Albert Einstein (1879–1955)

Albert Einstein once wrote that “all attempts to obtain a deeper knowledge of the foundations of physics seem doomed to me unless the basic concepts are in accordance with general relativity from the beginning.” In 1915, ten years after Einstein proclaimed his Special Theory of Relativity (which suggested that distance and time are not absolute and that one’s measurements of the ticking rate of a clock depend on one’s motion with respect to the clock), Einstein gave us an early form of his General Theory of Relativity (GTR), which explained gravity from a new perspective. In particular, Einstein suggested that gravity is not really a force like other forces, but results from the curvature of space-time caused by masses in space-time. Although we now know that GTR does a better job at describing motions in high gravitational fields than Newtonian mechanics (such as the orbit of Mercury about the Sun), Newtonian mechanics still is useful in describing the world of ordinary experience.

To better understand GTR, consider that wherever a mass exists in space, it warps space. Imagine a bowling ball sinking into a rubber sheet. This is a convenient way to visualize what stars do to the fabric of the universe. If you were to place a marble into the depression formed by the stretched rubber sheet, and give the marble a sideways push, it would orbit the bowling ball for a while, like a planet orbiting the Sun. The warping of the rubber sheet by the bowling ball is a metaphor for a star warping space.

GTR can be used to understand how gravity warps and slows time. In a number of circumstances, GTR also appears to permit Time Travel.

Einstein additionally suggested that gravitational effects move at the speed of light. Thus, if the Sun were suddenly plucked from the Solar System, the Earth would not leave its orbit about the Sun until about eight minutes later, the time required for light to travel from the Sun to the Earth. Many physicists today believe that gravitation must be quantized and take the form of particles called gravitons, just as light takes the form of photons, which are tiny quantum packets of electromagnetism.
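
The eight-minute figure is easy to check with a short Python sketch. It assumes the mean Earth-Sun distance of one astronomical unit; the variable names are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0        # m/s (exact by definition)
ASTRONOMICAL_UNIT = 1.495978707e11    # m, mean Earth-Sun distance

def travel_time_seconds(distance_m, speed_m_per_s=SPEED_OF_LIGHT):
    """Time for a signal moving at the given speed to cover the distance."""
    return distance_m / speed_m_per_s

delay_s = travel_time_seconds(ASTRONOMICAL_UNIT)
delay_min = delay_s / 60.0
print(f"Sun-to-Earth delay: {delay_s:.0f} s, or {delay_min:.1f} minutes")
```

The result is a little over eight minutes, whether the signal is sunlight or, as Einstein suggested, a change in the Sun's gravitational influence.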

SEE ALSO Newton’s Laws of Motion and Gravitation (1687), Black Holes (1783), Time Travel (1949), Eötvös’ Gravitational Gradiometry (1890), Special Theory of Relativity (1905), Randall-Sundrum Branes (1999).

Einstein suggested that gravity results from the curvature of space-time caused by masses in space-time. Gravity distorts both time and space.

Theodor Franz Eduard Kaluza (1885–1954), John Henry Schwarz (b. 1941), Michael Boris Green (b. 1946)

“The mathematics involved in string theory …,” writes mathematician Michael Atiyah, “in subtlety and sophistication … vastly exceeds previous uses of mathematics in physical theories. String theory has led to a whole host of amazing results in mathematics in areas that seem far removed from physics. To many this indicates that string theory must be on the right track….” Physicist Edward Witten writes, “String theory is twenty-first century physics that fell accidentally into the twentieth century.”

Various modern theories of “hyperspace” suggest that dimensions exist beyond the commonly accepted dimensions of space and time. For example, the Kaluza-Klein theory of 1919 made use of higher spatial dimensions in an attempt to explain electromagnetism and gravitation. Among the most recent formulations of these kinds of concepts is superstring theory, which predicts a universe of ten or eleven dimensions—three dimensions of space, one dimension of time, and six or seven more spatial dimensions. In many theories of hyperspace, the laws of nature become simpler and more elegant when expressed with these several extra spatial dimensions.

In string theory, some of the most basic particles, like quarks and electrons, can be modeled by inconceivably tiny, essentially one-dimensional entities called strings. Although strings may seem to be mathematical abstractions, remember that atoms were once regarded as “unreal” mathematical abstractions that eventually became observables. However, strings are so tiny that there is no current way to directly observe them.

In some string theories, the loops of string move about in ordinary 3-space, but they also vibrate in higher spatial dimensions. As a simple metaphor, think of a vibrating guitar string whose “notes” correspond to different particles such as quarks and electrons or the hypothetical graviton, which may convey the force of gravity.

String theorists claim that a variety of higher spatial dimensions are “compactified,” or tightly curled up (in structures known as Calabi-Yau spaces), so that the extra dimensions are essentially invisible. In 1984, Michael Green and John H. Schwarz made additional breakthroughs in string theory.

SEE ALSO Standard Model (1961), Theory of Everything (1984), Randall-Sundrum Branes (1999), Large Hadron Collider (2009).

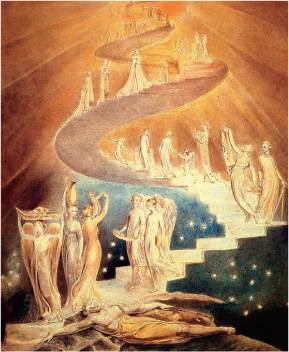

In string theory, the vibrational pattern of the string determines what kind of particle the string is. For a metaphor, consider a violin. Pluck the A string and an electron is formed. Pluck the E string and you create a quark.

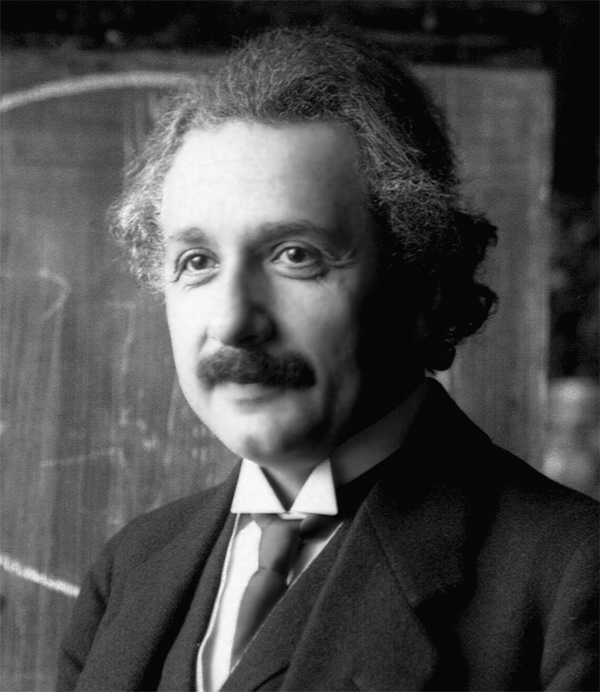

Albert Einstein (1879–1955)

Nobel-prize winner Albert Einstein is recognized as one of the greatest physicists of all time and the most important scientist of the twentieth century. He proposed the Special and General Theories of Relativity, which revolutionized our understanding of space and time. He also made major contributions to the science of quantum mechanics, statistical mechanics, and cosmology.

“Physics has come to dwell at such a deep remove from everyday experiences,” writes Thomas Levenson, author of Einstein in Berlin, “that it’s hard to say whether most of us would be able to recognize an Einstein-like accomplishment should it occur [today]. When Einstein first came to New York in 1921, thousands lined the street for a motorcade…. Try to imagine any theoretician today getting such a response. It’s impossible. The emotional connections between the physicist’s conception of reality and the popular imagination has weakened greatly since Einstein.”

According to many scholars I consulted, there will never be another individual on par with Einstein. Levenson suggested, “It seems unlikely that [science] will produce another Einstein in the sense of a broadly recognized emblem of genius. The sheer complexity of models being explored [today] confines almost all practitioners to parts of the problem.” Unlike today’s scientists, Einstein required little or no collaboration. Einstein’s paper on special relativity contained no references to others or to prior work.

Bran Ferren, cochairman and chief creative officer of the Applied Minds technology company, affirms that “the idea of Einstein is perhaps more important than Einstein himself.” Not only was Einstein the greatest physicist of the modern world, he was an “inspirational role model whose life and work ignited the lives of countless other great thinkers. The total of their contributions to society, and the contributions of the thinkers whom they will in turn inspire, will greatly exceed those of Einstein himself.”

Einstein created an unstoppable “intellectual chain reaction,” an avalanche of pulsing, chattering neurons and memes that will ring for an eternity.

SEE ALSO Newton as Inspiration (1687), Special Theory of Relativity (1905), Photoelectric Effect (1905), General Theory of Relativity (1915), Brownian Motion (1827), Stephen Hawking on Star Trek (1993).

Photo of Albert Einstein, while attending a lecture in Vienna in 1921 at the age of 42.

Otto Stern (1888–1969), Walter Gerlach (1889–1979)

“What Stern and Gerlach found,” writes author Louisa Gilder, “no one predicted. The Stern-Gerlach experiment was a sensation among physicists when it was published in 1922, and it was such an extreme result that many who had doubted the quantum ideas were converted.”

Imagine carefully tossing little spinning red bar magnets between the north and south poles of a large magnet suspended in front of a wall. The fields of the big magnet deflect the little magnets, which collide with the wall and create a scattering of dents. In 1922, Otto Stern and Walter Gerlach conducted an experiment using neutral silver atoms, which contain a lone electron in the atom’s outer orbital. Imagine that this electron is spinning and creating a small magnetic moment represented by the red bar magnets in our thought experiment. In fact, the spin of an unpaired electron causes the atom to have north and south poles, like a tiny compass needle. The silver atoms pass through a non-uniform magnetic field as they travel to a detector. If the magnetic moment could assume all orientations, we would expect a smear of impact locations at the detector. After all, the external magnetic field would produce a force at one end of the tiny “magnets” that might be slightly greater than at the other end, and over time, randomly oriented electrons would experience a range of forces. However, Stern and Gerlach found just two impact regions (above and below the original beam of silver atoms), suggesting that the electron spin was quantized and could assume just two orientations.

Note that the “spin” of a particle may have little to do with the classical idea of a rotating sphere having an angular momentum; rather, particle spin is a somewhat mysterious quantum mechanical phenomenon. For electrons as well as for protons and neutrons, there are two possible values for spin. However, the associated magnetic moment is much smaller for the heavier protons and neutrons, because the strength of a magnetic dipole is inversely proportional to the mass.

SEE ALSO De Magnete (1600), Gauss and the Magnetic Monopole (1835), Electron (1897), Pauli Exclusion Principle (1925), EPR Paradox (1935).

A memorial plaque honoring Stern and Gerlach, mounted near the entrance to the building in Frankfurt, Germany, where their experiment took place.

Georges Claude (1870–1960)

Discussions of neon signs are not complete without reminiscing on what I call the “physics of nostalgia.” French chemist and engineer Georges Claude developed neon tubing and patented its commercial application in outdoor advertising signs. In 1923, Claude introduced the signs to the United States. “Soon, neon signs were proliferating along America’s highways in the 1920s and ’30s … piercing the darkness,” writes author William Kaszynski. “The neon sign was a godsend for any traveler with a near-empty gas tank or anxiously searching for a place to sleep.”

Neon lights are glass tubes that contain neon or other gases at low pressure. A power supply is connected to the sign through wires that pass through the walls of the tube. When the voltage supply is turned on, electrons are attracted to the positive electrode, occasionally colliding with the neon atoms. Sometimes, the electrons will knock an electron off a neon atom, creating another free electron and a positive neon ion, or Ne+. The mixture of free electrons, Ne+, and neutral neon atoms forms a conducting Plasma in which free electrons are attracted to the Ne+ ions. Sometimes, the Ne+ captures the electron in a high energy level, and as the electron drops from a high energy level to a lower one, light is emitted at specific wavelengths, or colors, for example, red-orange for neon gas. Other gases within the tube can yield other colors.

Author Holly Hughes writes in 500 Places to See Before They Disappear, “The beauty of the neon sign was that you could twist those glass tubes into any shape you wanted. And as Americans took to the highways in the 1950s and 1960s, advertisers took advantage of that, spangling the nighttime streetscape with colorful whimsies touting everything from bowling alleys to ice-cream stands to Tiki bars. Preservationists are working to save neon signs for future generations, either on-site or in museums. After all, what would America be without a few giant neon donuts around?”

SEE ALSO Triboluminescence (1620), Stokes’ Fluorescence (1852), Plasma (1879), Black Light (1903).

Retro fifties-style American car wash neon sign.

Arthur Holly Compton (1892–1962)

Imagine screaming at a distant wall, and your voice echoing back. You wouldn’t expect your voice to sound an octave lower upon its return. The sound waves bounce back at the same frequency. However, as documented in 1923, physicist Arthur Compton showed that when X-rays were scattered off electrons, the scattered X-rays had a lower frequency and lower energy. This is not predicted using traditional wave models of electromagnetic radiation. In fact, the scattered X-rays were behaving as if the X-rays were billiard balls, with part of the energy transferred to the electron (modeled as a billiard ball). In other words, some of the initial momentum of an X-ray particle was gained by the electron. With billiard balls, the energy of the scattered balls after collision depends on the angle at which they leave each other, an angular dependence that Compton found when X-rays collided with electrons. The Compton Effect provided additional evidence for quantum theory, which implies that light has both wave and particle properties. Albert Einstein had provided earlier evidence for quantum theory when he showed that packets of light (now called photons) could explain the Photoelectric Effect in which certain frequencies of light shined on a copper plate cause the plate to eject electrons.

In a purely wave model of the X-rays and electrons, one might expect that the electrons would be triggered to oscillate with the frequency of the incident wave and hence reradiate the same frequency. Compton, who modeled the X-rays as photon particles, had assigned the photon a momentum of hf/c from two well-known physics relationships: E = hf and E = mc². The energy of the scattered X-rays was consistent with these assumptions. Here, E is energy, f is frequency, c the speed of light, m the mass, and h is Planck’s constant. In Compton’s particular experiments, the forces with which electrons were bound to atoms could be neglected, and the electrons could be regarded as being essentially unbound and quite free to scatter in another direction.
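
The angular dependence can be illustrated with a short Python sketch. It assumes the standard Compton result that the wavelength grows by (h/mc)(1 − cos θ) for a photon scattered through angle θ by a free electron at rest, a formula not written out in the text above. The incident wavelength and function names are illustrative choices.

```python
import math

PLANCK = 6.62607015e-34            # J*s, Planck's constant h
ELECTRON_MASS = 9.1093837015e-31   # kg
SPEED_OF_LIGHT = 299_792_458.0     # m/s

def photon_momentum(wavelength_m):
    """Photon momentum p = hf/c, which equals h / wavelength."""
    return PLANCK / wavelength_m

def compton_shift(theta_deg):
    """Increase in wavelength for scattering through theta (assumed standard formula)."""
    return PLANCK / (ELECTRON_MASS * SPEED_OF_LIGHT) * (1.0 - math.cos(math.radians(theta_deg)))

def scattered_wavelength(wavelength_m, theta_deg):
    """Wavelength of the X-ray after scattering from a free electron at rest."""
    return wavelength_m + compton_shift(theta_deg)

incident = 7.1e-11  # m, an assumed X-ray wavelength for illustration
for angle in (0, 45, 90, 135, 180):
    out = scattered_wavelength(incident, angle)
    lost = 1.0 - photon_momentum(out) / photon_momentum(incident)
    print(f"theta = {angle:3d} deg: wavelength {out:.4e} m, momentum magnitude down {lost:.2%}")
```

A longer wavelength means a lower frequency and a lower energy, and the shift is largest for X-rays that bounce straight back, just as a billiard ball loses the most energy in a head-on collision.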

SEE ALSO Photoelectric Effect (1905), Bremsstrahlung (1909), X-rays (1895), Electromagnetic Pulse (1962).

Arthur Compton (left) with graduate student Luis Alvarez at the University of Chicago in 1933. Both men won Nobel Prizes in physics.

Louis-Victor-Pierre-Raymond, 7th duc de Broglie (1892–1987), Clinton Joseph Davisson (1881–1958), Lester Halbert Germer (1896–1971)

Numerous studies of the subatomic world have demonstrated that particles like electrons or photons (packets of light) are not like objects with which we interact in our everyday lives. These entities appear to possess characteristics of both waves and particles, depending on the experiment or phenomena being observed. Welcome to the strange realm of quantum mechanics.

In 1924, French physicist Louis-Victor de Broglie suggested that particles of matter could also be considered as waves and would possess properties commonly associated with waves, including a wavelength (the distance between successive crests of a wave). In fact, all bodies have a wavelength. In 1927, American physicists Clinton Davisson and Lester Germer demonstrated the wave nature of electrons by showing that they could be made to diffract and interfere as if they were light.

De Broglie’s famous relationship showed that the wavelength of a matter wave is inversely proportional to the particle’s momentum (generally speaking, mass times velocity), and, in particular, λ = h/p. Here, λ is the wavelength, p is the momentum, and h is Planck’s constant. According to author Joanne Baker, using this equation, it is possible to show that “Bigger objects, like ball bearings and badgers, have minuscule wavelengths, too small to see, so we cannot spot them behaving like waves. A tennis ball flying across a court has a wavelength of 10⁻³⁴ meters, much smaller than a proton’s width (10⁻¹⁵ m).” Because wavelength shrinks as momentum grows, an ant has a longer wavelength than a human moving at the same speed.
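
The relationship λ = h/p is simple enough to try directly. The Python sketch below applies it to a few objects; the masses and speeds are assumed, illustrative values (apart from the 200 m/sec buckyball speed reported for the 1999 experiment), and speeds are low enough that momentum is just mass times velocity.

```python
PLANCK = 6.62607015e-34              # J*s, Planck's constant h
ATOMIC_MASS_UNIT = 1.66053906660e-27 # kg

def de_broglie_wavelength(mass_kg, speed_m_per_s):
    """lambda = h / p, with p = m * v for speeds well below that of light."""
    return PLANCK / (mass_kg * speed_m_per_s)

# (name, mass in kg, speed in m/s); masses and most speeds are assumptions
examples = [
    ("tennis ball", 0.057, 50.0),
    ("electron", 9.1093837015e-31, 1.0e6),
    ("buckyball (C60)", 720.0 * ATOMIC_MASS_UNIT, 200.0),
]

wavelengths = {name: de_broglie_wavelength(m, v) for name, m, v in examples}
for name, lam in wavelengths.items():
    print(f"{name:16s} wavelength = {lam:.2e} m")
```

The tennis ball comes out near 10⁻³⁴ m, in line with Baker's figure, while the electron's wavelength is comparable to the spacing of atoms in a crystal, which is why crystals can diffract electrons.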

Since the original Davisson-Germer experiment for electrons, the de Broglie hypothesis has been confirmed for other particles like neutrons and protons and, in 1999, even for entire molecules such as buckyballs, soccer-ball-shaped molecules made of carbon atoms.

De Broglie had advanced his idea in his PhD thesis, but the idea was so radical that his thesis examiners were, at first, not sure if they should approve the thesis. He later won the Nobel Prize for this work.

SEE ALSO Wave Nature of Light (1801), Electron (1897), Schrödinger’s Wave Equation (1926), Quantum Tunneling (1928), Buckyballs (1985).

In 1999, University of Vienna researchers demonstrated the wavelike behavior of buckminsterfullerene molecules formed of 60 carbon atoms (shown here). A beam of molecules (with velocities of around 200 m/sec, or 656 ft/sec) was sent through a grating, yielding an interference pattern characteristic of waves.

Wolfgang Ernst Pauli (1900–1958)

Imagine people who are beginning to fill the seats in a baseball stadium, starting at the rows nearest the playing field. This is a metaphor for electrons filling the orbitals of an atom—and in both baseball and atomic physics, there are rules that govern how many entities such as electrons or people can fill the allotted areas. After all, it would be quite uncomfortable if multiple people attempted to squeeze into a small seat.

Pauli’s Exclusion Principle (PEP) explains why matter is rigid and why two objects cannot occupy the same space. It’s why we don’t fall through the floor and why Neutron Stars resist collapsing under their own incredible mass.

More specifically, PEP states that no pair of identical fermions (such as electrons, protons, or neutrons) can simultaneously occupy the same quantum state, which includes the spin of a fermion. For example, electrons occupying the same atomic orbital must have opposite spins. Once an orbital is occupied by a pair of electrons of opposite spin, no more electrons may enter the orbital until one leaves the orbital.

PEP is well-tested, and one of the most important principles in physics. According to author Michela Massimi, “From spectroscopy to atomic physics, from quantum field theory to high-energy physics, there is hardly another scientific principle that has more far-reaching implications than Pauli’s exclusion principle.” As a result of PEP, one can determine or understand electronic configurations underlying the classification of chemical elements in the Periodic Table as well as atomic spectra. Science-journalist Andrew Watson writes, “Pauli introduced this principle early in 1925, before the advent of modern quantum theory or the introduction of the idea of electron spin. His motivation was simple: there had to be something to prevent all the electrons in an atom collapsing down to a single lowest state…. So, Pauli’s exclusion principle keeps electrons—and other fermions—from invading each other’s space.”

SEE ALSO Coulomb’s Law of Electrostatics (1785), Electron (1897), Bohr Atom (1913), Stern-Gerlach Experiment (1922), White Dwarfs and Chandrasekhar Limit (1931), Neutron Stars (1933).

Artwork titled “Pauli’s Exclusion Principle, or Why Dogs Don’t Suddenly Fall Through Solids.” PEP helps explain why matter is rigid, why we do not fall through solid floors, and why neutron stars resist collapsing under their humongous masses.

Erwin Rudolf Josef Alexander Schrödinger (1887–1961)

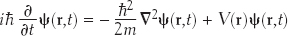

“Schrödinger’s Wave Equation enabled scientists to make detailed predictions about how matter behaves, while being able to visualize the atomic systems under study,” writes physicist Arthur I. Miller. Schrödinger apparently developed his formulation while vacationing at a Swiss ski resort with his mistress who seemed to catalyze his intellectual and “erotic outburst,” as he called it. The Schrödinger Wave Equation describes ultimate reality in terms of wave functions and probabilities. Given the equation, we can calculate the wave function of a particle:

iħ ∂ψ(r, t)/∂t = −(ħ²/2m) ∇²ψ(r, t) + V(r) ψ(r, t)

Here, we need not worry about the details of this formula, except perhaps to note that ψ(r, t) is the wave function, which is the probability amplitude for a particle to have a given position r at any given time t. ∇² is used to describe how ψ(r, t) changes in space. V(r) is the potential energy of the particle at each position r. Just as an ordinary wave equation describes the progression of a ripple across a pond, Schrödinger’s Wave Equation describes how a probability wave associated with a particle (e.g. an electron) moves through space. The peak of the wave corresponds to where the particle is most likely to be. The equation was also useful in understanding energy levels of electrons in atoms and became one of the foundations of quantum mechanics, the physics of the atomic world. Although it may seem odd to describe a particle as a wave, in the quantum realm such strange dualities are necessary. For example, light can act as either a wave or a particle (a photon), and particles such as electrons and protons can act as waves. As another analogy, think of electrons in an atom as waves on a drumhead, with the vibration modes of the wave equation associated with different energy levels of atoms.

Note that the matrix mechanics developed by Werner Heisenberg, Max Born, and Pascual Jordan in 1925 interpreted certain properties of particles in terms of matrices. This formulation is equivalent to the Schrödinger wave formulation.

SEE ALSO Wave Nature of Light (1801), Electron (1897), De Broglie Relation (1924), Heisenberg Uncertainty Principle (1927), Quantum Tunneling (1928), Dirac Equation (1928), Schrödinger’s Cat (1935).

Erwin Schrödinger on a 1000 Austrian schilling banknote (1983).

Heisenberg Uncertainty Principle

Werner Heisenberg (1901–1976)

“Uncertainty is the only certainty there is,” wrote mathematician John Allen Paulos, “and knowing how to live with insecurity is the only security.” The Heisenberg Uncertainty Principle states that the position and the velocity of a particle cannot both be known with high precision at the same time. Specifically, the more precise the measurement of position, the more imprecise the measurement of momentum, and vice versa. The uncertainty principle becomes significant at the small size scales of atoms and subatomic particles.

Until this law was discovered, most scientists believed that the precision of any measurement was limited only by the accuracy of the instruments being used. German physicist Werner Heisenberg hypothetically suggested that even if we could construct an infinitely precise measuring instrument, we still could not accurately determine both the position and momentum (mass × velocity) of a particle. The principle is not concerned with the degree to which the measurement of the position of a particle may disturb the momentum of a particle. We could measure a particle’s position to a high precision, but as a consequence, we could know little about the momentum.
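
A short Python sketch shows why the principle matters only at small scales. It assumes the usual quantitative form of the principle, in which the product of the position and momentum uncertainties is at least ħ/2 (a formula not written out in the text above), and compares an electron with a tennis ball. The masses and position uncertainties are illustrative assumptions.

```python
HBAR = 1.054571817e-34   # J*s, reduced Planck constant (h divided by 2*pi)

def min_momentum_uncertainty(position_uncertainty_m):
    """Smallest momentum uncertainty allowed by delta_x * delta_p >= hbar / 2."""
    return HBAR / (2.0 * position_uncertainty_m)

def min_velocity_uncertainty(mass_kg, position_uncertainty_m):
    """Corresponding smallest velocity uncertainty, using p = m * v."""
    return min_momentum_uncertainty(position_uncertainty_m) / mass_kg

# Assumed illustrative cases:
electron_dv = min_velocity_uncertainty(9.1093837015e-31, 1.0e-10)  # pinned to atomic size
ball_dv = min_velocity_uncertainty(0.057, 1.0e-6)                  # pinned to a micrometer

print(f"electron within 1e-10 m: velocity uncertain by at least {electron_dv:.2e} m/s")
print(f"tennis ball within 1e-6 m: velocity uncertain by at least {ball_dv:.2e} m/s")
```

For the electron the unavoidable spread in velocity is hundreds of kilometers per second, whereas for the tennis ball it is far too small to ever notice.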

According to Heisenberg’s Uncertainty Principle, particles likely exist only as a collection of probabilities, and their paths cannot be predicted even by an infinitely precise measurement.

For those scientists who accept the Copenhagen interpretation of quantum mechanics, the Heisenberg Uncertainty Principle means that the physical Universe literally does not exist in a deterministic form but is rather a collection of probabilities. Similarly, the path of an elementary particle such as a photon cannot be predicted, even in theory, by an infinitely precise measurement.

In 1935, Heisenberg was a logical choice to replace his former mentor Arnold Sommerfeld at the University of Munich. Alas, the Nazis required that “German physics” must replace “Jewish physics,” which included quantum theory and relativity. As a result, Heisenberg’s appointment to Munich was blocked even though he was not Jewish.

During World War II, Heisenberg led the unsuccessful German nuclear weapons program. Today, historians of science still debate as to whether the program failed because of lack of resources, lack of the right scientists on his team, Heisenberg’s lack of a desire to give such a powerful weapon to the Nazis, or other factors.

SEE ALSO Laplace’s Demon (1814), Third Law of Thermodynamics (1905), Bohr Atom (1913), Schrödinger’s Wave Equation (1926), Complementarity Principle (1927), Quantum Tunneling (1928), Bose-Einstein Condensate (1995).

German postage stamp, 2001, featuring Werner Heisenberg.

Niels Henrik David Bohr (1885–1962)

Danish physicist Niels Bohr developed a concept that he referred to as complementarity in the late 1920s, while trying to make sense of the mysteries of quantum mechanics, which suggested, for example, that light sometimes behaved like a wave and at other times like a particle. For Bohr, writes author Louisa Gilder, “complementarity was an almost religious belief that the paradox of the quantum world must be accepted as fundamental, not to be ‘solved’ or trivialized by attempts to find out ‘what’s really going on down there.’ Bohr used the word in an unusual way: ‘the complementarity’ of waves and particles, for example (or of position and momentum), meant that when one existed fully, its complement did not exist at all.” Bohr himself in a 1927 lecture in Como, Italy, said that waves and particles are “abstractions, their properties being definable and observable only through their interactions with other systems.”

Sometimes, the physics and philosophy of complementarity seemed to overlap with theories in art. According to science writer K. C. Cole, Bohr “was known for his fascination with cubism—especially ‘that an object could be several things, could change, could be seen as a face, a limb, a fruit bowl,’ as a friend of his later explained. Bohr went on to develop his philosophy of complementarity, which showed how an electron could change, could be seen as a wave [or] a particle. Like cubism, complementarity allowed contradictory views to coexist in the same natural frame.”

Bohr thought that it was inappropriate to view the subatomic world from our everyday perspective. “In our description of nature,” Bohr wrote, “the purpose is not to disclose the real essence of phenomena but only to track down, as far as it is possible, relations between the manifold aspects of experience.”

In 1963, physicist John Wheeler expressed the importance of this principle: “Bohr’s principle of complementarity is the most revolutionary scientific concept of this century and the heart of his fifty-year search for the full significance of the quantum idea.”

SEE ALSO Wave Nature of Light (1801), Heisenberg Uncertainty Principle (1927), EPR Paradox (1935), Schrödinger’s Cat (1935), Bell’s Theorem (1964).

The physics and philosophy of complementarity often seemed to overlap with theories in art. Bohr was fascinated with Cubism, which sometimes allowed “contradictory” views to coexist, as in this artwork by Czech painter Eugene Ivanov.

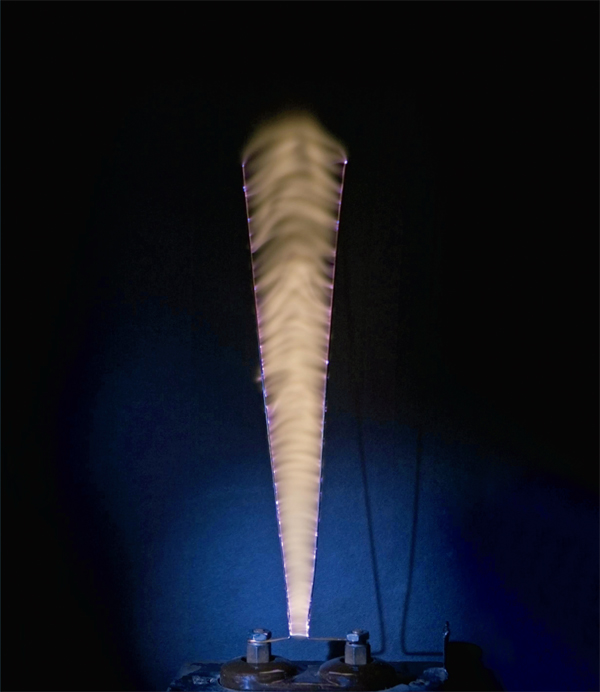

A number of physics papers have been devoted to the supersonic booms created by whipcracking, and these papers have been accompanied by various recent, fascinating debates as to the precise mechanism involved. Physicists have known since the early 1900s that when a bullwhip handle is rapidly and properly moved, the tip of the whip can exceed the speed of sound. In 1927, physicist Z. Carrière used high-speed shadow photography to demonstrate that a sonic boom is associated with the crack of the whip. Ignoring frictional effects, the traditional explanation is that as a moving section of the whip travels along the whip and becomes localized in increasingly smaller portions, the moving section must travel increasingly fast due to the conservation of energy. The kinetic energy of motion of a point with mass $m$ is given by $E = \tfrac{1}{2}mv^2$, and if $E$ stays roughly constant and $m$ shrinks, velocity $v$ must increase. Eventually, a portion of the whip near the end travels faster than the speed of sound (approximately 768 mph [1,236 kilometers per hour] at 68 °F [20 °C] in dry air) and creates a boom (see Sonic Boom), just like a jet plane exceeding the speed of sound in air. Whips were likely the first man-made devices to break the sound barrier.
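
The energy argument in the traditional explanation can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes the kinetic energy stays exactly constant, and the energy and mass values are hypothetical round numbers, not measurements of a real whip.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in dry air at 20 °C


def speed_from_kinetic_energy(energy_j: float, mass_kg: float) -> float:
    """Solve E = (1/2) m v^2 for v, in metres per second."""
    return math.sqrt(2.0 * energy_j / mass_kg)


# Hypothetical values chosen only for illustration, not measured whip data.
ENERGY_J = 10.0
MOVING_MASSES_KG = (0.1, 0.01, 0.001, 0.0001)

for mass_kg in MOVING_MASSES_KG:
    v = speed_from_kinetic_energy(ENERGY_J, mass_kg)
    label = "supersonic" if v > SPEED_OF_SOUND_M_S else "subsonic"
    print(f"moving mass {mass_kg:g} kg -> {v:.1f} m/s ({label})")
```

With these assumed numbers, each tenfold drop in moving mass raises the speed by a factor of about 3.2 (the square root of 10), and only the smallest mass ends up above the speed of sound.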

In 2003, applied mathematicians Alain Goriely and Tyler McMillen modeled the impulse that creates a whip crack as a loop traveling along a tapered elastic rod. They write of the complexity of mechanisms, “The crack itself is a sonic boom created when a section of the whip at its tip travels faster than the speed of sound. The rapid acceleration of the tip of the whip is created when a wave travels to the end of the rod, and the energy consisting of the kinetic energy of the moving loop, the elastic energy stored in the loop, and the angular momentum of the rod is concentrated into a small section of the rod, which is then transferred into acceleration of the end of the rod.” The tapering of the whip also increases the maximal speed.

SEE ALSO Atlatl (30,000 B.C.), Cherenkov Radiation (1934), Sonic Booms (1947).

A sonic boom is associated with the crack of a whip. Whips were likely the first man-made devices to break the sound barrier.

Paul Adrien Maurice Dirac (1902–1984)

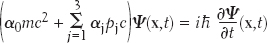

As discussed in the entry on Antimatter, the equations of physics can sometimes give birth to ideas or consequences that the discoverer of the equation did not expect. The power of these kinds of equations can seem magical, according to physicist Frank Wilczek in his essay on the Dirac Equation. In 1927, Paul Dirac attempted to find a version of Schrödinger’s Wave Equation that would be consistent with the principles of special relativity. One way that the Dirac Equation can be written is

$$\left(\alpha_0 mc^2 + \sum_{j=1}^{3} \alpha_j p_j c\right)\Psi(x,t) = i\hbar\,\frac{\partial \Psi}{\partial t}(x,t)$$

Published in 1928, the equation describes electrons and other elementary particles in a way that is consistent with both quantum mechanics and the Special Theory of Relativity. The equation predicts the existence of antiparticles and in some sense “foretold” their experimental discovery. This feature made the discovery of the positron, the antiparticle of the electron, a fine example of the usefulness of mathematics in modern theoretical physics. In this equation, $m$ is the rest mass of the electron, $\hbar$ is the reduced Planck’s constant ($1.054 \times 10^{-34}$ J·s), $c$ is the speed of light, $p$ is the momentum operator, $x$ and $t$ are the space and time coordinates, and $\Psi(x,t)$ is a wave function. $\alpha$ is a linear operator that acts on the wave function.

Physicist Freeman Dyson has lauded this formula, which represents a significant stage in humanity’s grasp of reality. He writes, “Sometimes the understanding of a whole field of science is suddenly advanced by the discovery of a single basic equation. Thus it happened that the Schrödinger equation in 1926 and the Dirac equation in 1927 brought a miraculous order into the previously mysterious processes of atomic physics. Bewildering complexities of chemistry and physics were reduced to two lines of algebraic symbols.”

SEE ALSO Electron (1897), Special Theory of Relativity (1905), Schrödinger’s Wave Equation (1926), Antimatter (1932).

The Dirac equation is the only equation to appear in Westminster Abbey, London, where it is engraved on Dirac’s commemorative plaque. Shown here is an artist’s representation of the Westminster plaque, which depicts a simplified version of the formula.

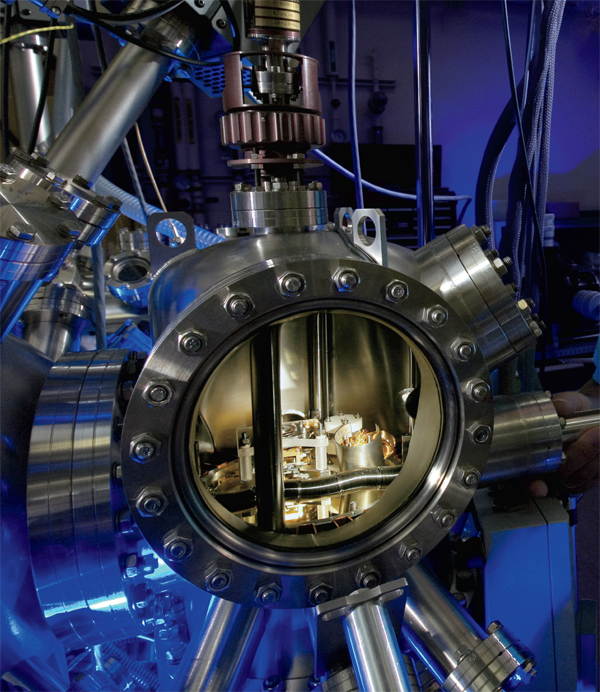

George Gamow (1904–1968), Ronald W. Gurney (1898–1953), Edward Uhler Condon (1902–1974)

Imagine tossing a coin at a wall between two bedrooms. The coin bounces back because it does not have sufficient energy to penetrate the wall. However, according to quantum mechanics, the coin is actually represented by a fuzzy probability wave function that spills through the wall. This means that the coin has a very small chance of actually tunneling through the wall and ending up in the other bedroom. Particles can tunnel through such barriers due to Heisenberg’s Uncertainty Principle applied to energies. According to the principle, it is not possible to say that a particle has precisely one amount of energy at precisely one instant in time. Rather, the energy of a particle can exhibit extreme fluctuations on short time scales and can have sufficient energy to traverse a barrier.

Some Transistors use tunneling to move electrons from one part of the device to another. The decay of some nuclei via particle emission employs tunneling. Alpha particles (helium nuclei) eventually tunnel out of uranium nuclei. According to the work published independently by George Gamow and the team of Ronald Gurney and Edward Condon in 1928, the alpha particle would never escape without the tunneling process.

Tunneling is also important in sustaining fusion reactions in the Sun. Without tunneling, stars wouldn’t shine. Scanning tunneling microscopes employ tunneling phenomena to help scientists visualize microscopic surfaces, using a sharp microscope tip and a tunneling current between the tip and the specimen. Finally, the theory of tunneling has been applied to early models of the universe and to understand enzyme mechanisms that enhance reaction rates.

Although tunneling happens all the time at subatomic size scales, it would be quite unlikely (though possible) for you to be able to leak out through your bedroom wall into the adjacent kitchen. Even if you were to throw yourself into the wall every second, you would have to wait longer than the age of the universe to have a good chance of tunneling through.
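
The gulf between subatomic and everyday tunneling can be made concrete with a rough estimate. The sketch below is not taken from the work of Gamow, Gurney, or Condon; it assumes the standard textbook approximation for a wide rectangular barrier, T ≈ exp(−2κL) with κ = √(2m(V − E))/ħ, and every barrier height, width, and mass in it is a hypothetical value chosen for illustration. It returns the base-10 logarithm of the probability, because the everyday case is far too small to store as an ordinary floating-point number.

```python
import math

HBAR_J_S = 1.054571817e-34        # reduced Planck constant, J*s
ELECTRON_MASS_KG = 9.1093837e-31  # electron rest mass, kg
EV_IN_JOULES = 1.602176634e-19    # one electron volt, J


def log10_tunneling_probability(mass_kg: float, energy_gap_j: float,
                                width_m: float) -> float:
    """Return log10 of T ~ exp(-2*kappa*L) for a wide rectangular barrier.

    energy_gap_j is V - E, the amount by which the barrier height exceeds
    the particle's energy; kappa = sqrt(2*m*(V - E)) / hbar.
    """
    kappa = math.sqrt(2.0 * mass_kg * energy_gap_j) / HBAR_J_S
    return -2.0 * kappa * width_m / math.log(10.0)


# Hypothetical electron: barrier 1 eV above its energy, 1 nanometre wide.
electron = log10_tunneling_probability(ELECTRON_MASS_KG, 1.0 * EV_IN_JOULES, 1e-9)

# Hypothetical 5-gram coin: barrier 1 joule above its energy, 10 cm wide.
coin = log10_tunneling_probability(0.005, 1.0, 0.1)

print(f"electron: T is about 10^({electron:.2f})")
print(f"coin:     T is about 10^({coin:.3g})")
```

Under these assumptions the electron gets through a few times in every hundred thousand attempts, while the exponent for the coin is itself a number with more than thirty digits.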

SEE ALSO Radioactivity (1896), Schrödinger’s Wave Equation (1926), Heisenberg Uncertainty Principle (1927), Transistor (1947), Seeing the Single Atom (1955).

Scanning tunneling microscope used at Sandia National Laboratory.

Hubble’s Law of Cosmic Expansion

Edwin Powell Hubble (1889–1953)

“Arguably the most important cosmological discovery ever made,” writes cosmologist John P. Huchra, “is that our Universe is expanding. It stands, along with the Copernican Principle—that there is no preferred place in the Universe, and Olbers’ Paradox—that the sky is dark at night, as one of the cornerstones of modern cosmology. It forced cosmologists to [consider] dynamic models of the Universe, and also implies the existence of a timescale or age for the Universe. It was made possible … primarily by Edwin Hubble’s estimates of distances to nearby galaxies.”

In 1929, American astronomer Edwin Hubble discovered that the greater the distance a galaxy is from an observer on the Earth, the faster it recedes. The distances between galaxies, or galactic clusters, are continuously increasing and, therefore, the Universe is expanding.

For many galaxies, the velocity (i.e., the movement of a galaxy away from an observer on the Earth) can be estimated from the red shift of a galaxy, which is an observed increase in the wavelength of electromagnetic radiation received by a detector on the Earth compared to that emitted by the source. Such red shifts occur because galaxies are moving away from our own galaxy at high speeds due to the expansion of space itself. The change in the wavelength of light that results from the relative motion of the light source and the receiver is an example of the Doppler Effect. Other methods also exist for determining the velocity for faraway galaxies. (Objects that are dominated by local gravitational interactions, like stars within a single galaxy, do not exhibit this apparent movement away from one another.)
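
The chain from measured wavelength to velocity to distance can be sketched numerically. The example below is a simplified illustration rather than the procedure Hubble used: it assumes the low-red-shift approximation v ≈ cz, a round value of 70 km/s per megaparsec for the constant of proportionality (a figure not given in the text), and a made-up observed wavelength for the hydrogen-alpha spectral line.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
HUBBLE_CONSTANT_KM_S_MPC = 70.0  # assumed round value, not from the text


def redshift(observed_nm: float, emitted_nm: float) -> float:
    """Fractional increase in wavelength, z = (observed - emitted) / emitted."""
    return (observed_nm - emitted_nm) / emitted_nm


def recession_velocity_km_s(z: float) -> float:
    """Low-red-shift approximation v ~ c*z (only reasonable for z much less than 1)."""
    return SPEED_OF_LIGHT_KM_S * z


def hubble_distance_mpc(velocity_km_s: float,
                        h0: float = HUBBLE_CONSTANT_KM_S_MPC) -> float:
    """Invert v = H0 * d to estimate distance in megaparsecs."""
    return velocity_km_s / h0


# Hydrogen-alpha is emitted near 656.3 nm; the observed value is hypothetical.
z = redshift(observed_nm=662.9, emitted_nm=656.3)
v = recession_velocity_km_s(z)
d = hubble_distance_mpc(v)
print(f"z = {z:.4f}, v = {v:.0f} km/s, d = {d:.1f} Mpc")
```

Doubling the measured red shift in this sketch doubles both the inferred velocity and the inferred distance, which is the proportionality at the heart of Hubble's Law.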

Although an observer on the Earth finds that all distant galactic clusters are flying away from the Earth, our location in space is not special. An observer in another galaxy would also see the galactic clusters flying away from the observer’s position because all of space is expanding. This is one of the main lines of evidence for the Big Bang from which the early Universe evolved and the subsequent expansion of space.

SEE ALSO Big Bang (13.7 Billion B.C.), Olbers’ Paradox (1823), Doppler Effect (1842), Cosmic Microwave Background (1965), Cosmic Inflation (1980), Dark Energy (1998), Cosmological Big Rip (36 Billion).

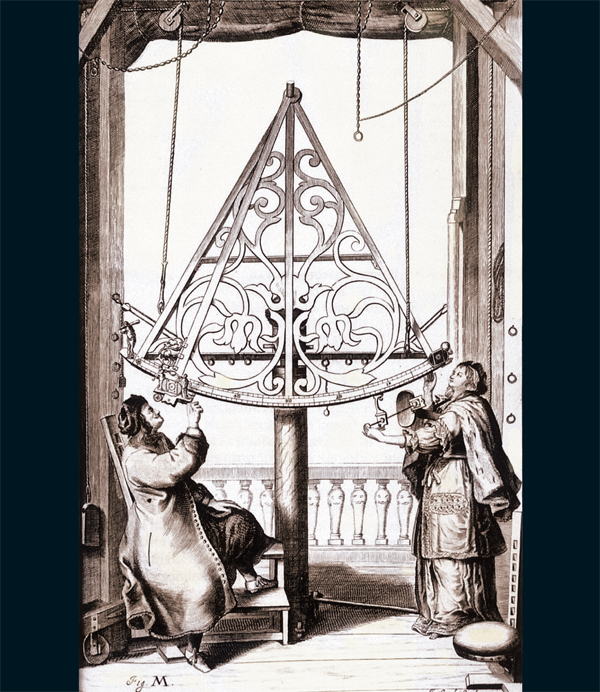

For millennia, humans have looked to the skies and wondered about their place in the cosmos. Pictured here are Polish astronomer Johannes Hevelius and his wife Elisabeth making observations (1673). Elisabeth is considered to be among the first female astronomers.

Ernest Orlando Lawrence (1901–1958)

“The first particle accelerators were linear,” writes author Nathan Dennison, “but Ernest Lawrence bucked this trend and used many small electrical pulses to accelerate particles in circles. Starting off as a sketch on a scrap of paper, his first design cost just US $25 to make. Lawrence continued to develop his [cyclotron] using parts including a kitchen chair … until finally he was awarded the Nobel Prize in 1939.”

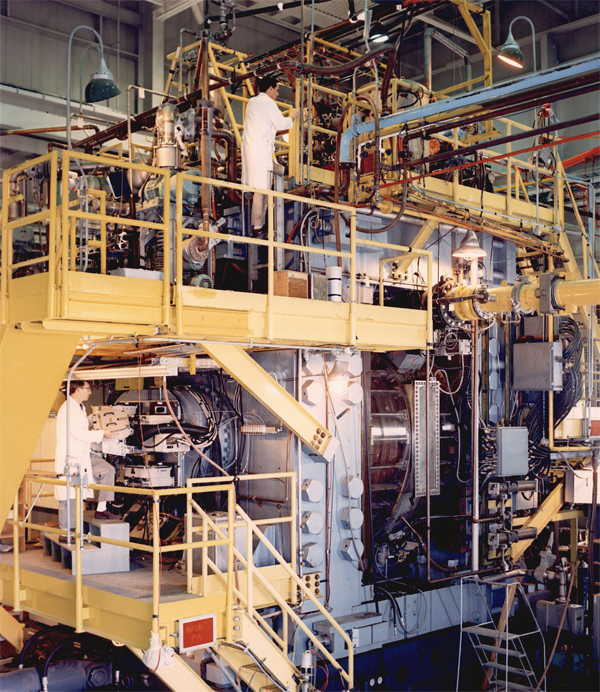

Lawrence’s cyclotron accelerated charged atomic or subatomic particles by using a constant magnetic field and a changing electrical field to create a spiraling path of particles that started at the center of the spiral. After spiraling many times in an evacuated chamber, the high-energy particles could finally smash into atoms, and the results could be studied using a detector. One advantage of the cyclotron over prior methods was that it could achieve high energies in a relatively compact size.
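
Why a compact machine can reach high energies becomes clearer with the standard non-relativistic cyclotron relations, which are textbook formulas rather than anything quoted here: a particle of charge q and mass m in a magnetic field B circles at frequency f = qB/(2πm) regardless of its speed, and at orbit radius r its kinetic energy is (qBr)²/(2m). The sketch below applies them to a proton; the field strength and radius are hypothetical values, not the specifications of any of Lawrence's machines.

```python
import math

ELEMENTARY_CHARGE_C = 1.602176634e-19  # proton charge, coulombs
PROTON_MASS_KG = 1.67262192e-27        # proton rest mass, kg
JOULES_PER_MEV = 1.602176634e-13


def cyclotron_frequency_hz(charge_c: float, field_t: float, mass_kg: float) -> float:
    """Non-relativistic orbital frequency f = q*B / (2*pi*m)."""
    return charge_c * field_t / (2.0 * math.pi * mass_kg)


def kinetic_energy_mev(charge_c: float, field_t: float,
                       radius_m: float, mass_kg: float) -> float:
    """Non-relativistic kinetic energy (q*B*r)^2 / (2*m), converted to MeV."""
    momentum = charge_c * field_t * radius_m
    return momentum ** 2 / (2.0 * mass_kg) / JOULES_PER_MEV


# Hypothetical machine: 1 tesla field, 5 cm final orbit radius.
FIELD_T = 1.0
RADIUS_M = 0.05

f_hz = cyclotron_frequency_hz(ELEMENTARY_CHARGE_C, FIELD_T, PROTON_MASS_KG)
e_mev = kinetic_energy_mev(ELEMENTARY_CHARGE_C, FIELD_T, RADIUS_M, PROTON_MASS_KG)
print(f"orbital frequency: {f_hz / 1e6:.2f} MHz")
print(f"energy at r = {RADIUS_M} m: {e_mev:.3f} MeV")
```

Because the frequency does not depend on the particle's speed in this approximation, a fixed-frequency electric field can keep giving the particle a push on every turn as its spiral widens.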

Lawrence’s first cyclotron was only a few inches in diameter, but by 1939, at the University of California, Berkeley, he was planning the largest and most expensive instrument thus far created to study the atom. The nature of the atomic nucleus was still mysterious, and this cyclotron—“requiring enough steel to build a good-sized freighter and electric current sufficient to light the city of Berkeley”—would allow the probing of this inner realm after the high-energy particles collided with nuclei and caused nuclear reactions. Cyclotrons were used to produce radioactive materials and for the production of tracers used in medicine. The Berkeley cyclotron created technetium, the first known artificially generated element.

The cyclotron was also important in that it launched the modern era of high-energy physics and the use of huge, costly tools that required large staffs to operate. Note that a cyclotron uses a constant magnetic field and a constant-frequency applied electric field; however, both of these fields are varied in the synchrotron, a circular accelerator developed later. In particular, synchrotron radiation was seen for the first time at the General Electric Company in 1947.

SEE ALSO Radioactivity (1896), Atomic Nucleus (1911), Neutrinos (1956), Large Hadron Collider (2009).

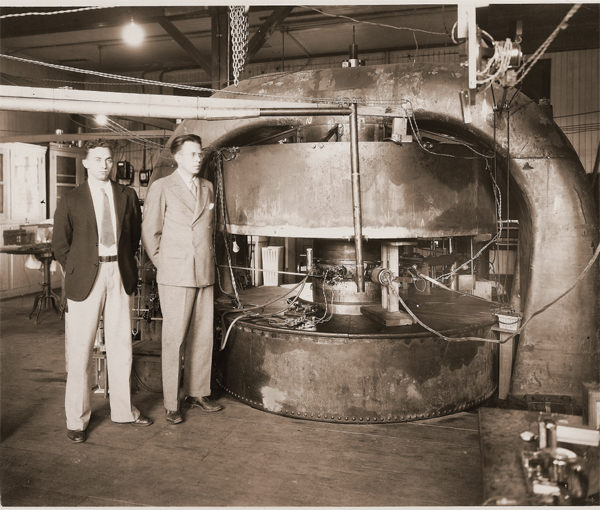

Physicists Milton Stanley Livingston (left) and Ernest O. Lawrence (right) in front of a 27-inch cyclotron at the old Radiation Laboratory at the University of California, Berkeley (1934).

White Dwarfs and Chandrasekhar Limit

Subrahmanyan Chandrasekhar (1910–1995)

Singer Johnny Cash explained in his song “Farmer’s Almanac” that God gave us the darkness so we could see the stars. However, among the most difficult luminous stars to find are the strange graveyard states of stars called white dwarfs. A majority of stars, like our Sun, end their lives as dense white dwarf stars. A teaspoon of white dwarf material would weigh several tons on the Earth.