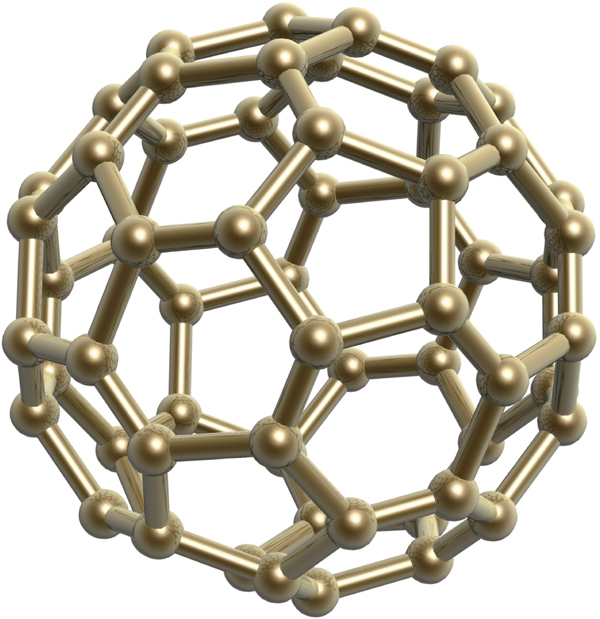

Kenneth Snelson (b. 1927), Richard Buckminster “Bucky” Fuller (1895–1983)

The ancient Greek philosopher Heraclitus of Ephesus once wrote that the world is a “harmony of tensions.” One of the most intriguing embodiments of this philosophy is the tensegrity system, which inventor Buckminster Fuller described as “islands of compressions in an ocean of tension.”

Imagine a structure composed only of rods and of cables. The cables connect the ends of rods to other ends of rods. The rigid rods never touch one another. The structure is stable against the force of gravity. How can such a flimsy-looking assemblage persist?

The structural integrity of such structures is maintained by a balance of tension forces (e.g., pulling forces exerted by a wire) and compression forces (e.g., forces that tend to compress the rods). As an example of such forces, when we push the two ends of a dangling spring together, we compress it. When we pull apart the two ends, we create more tension in the spring.

In tensegrity systems, compression-bearing rigid struts tend to stretch (or tense) the tension-bearing cables, which in turn compress the struts. An increase in tension in one of the cables can result in increased tensions throughout the structure, balanced by an increase in compression in the struts. Overall, the forces acting in all directions in a tensegrity structure sum to a zero net force. If this were not the case, the structure might fly away (like an arrow shot from a bow) or collapse.
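To make the zero-net-force condition concrete, the sketch below checks a single hypothetical node in a planar structure. One strut pushes the node along the +x direction while two cables pull back at +120 and -120 degrees from it. The geometry, the force magnitudes, and the helper name `net_force` are illustrative assumptions and do not come from any particular tensegrity design.

```python
import math

def net_force(forces):
    """Sum 2D force vectors given as (magnitude_newtons, angle_degrees) pairs."""
    fx = sum(m * math.cos(math.radians(a)) for m, a in forces)
    fy = sum(m * math.sin(math.radians(a)) for m, a in forces)
    return fx, fy

# Hypothetical node: the strut pushes with 100 N along 0 degrees,
# and each of two cables pulls with 100 N at +120 and -120 degrees.
balanced_node = [(100.0, 0.0), (100.0, 120.0), (100.0, -120.0)]
fx, fy = net_force(balanced_node)
print(f"balanced node: net force = ({fx:.6f}, {fy:.6f}) N")

# Tightening both cables without a matching rise in strut compression
# leaves a leftover force, so the node would have to move.
tightened_node = [(100.0, 0.0), (120.0, 120.0), (120.0, -120.0)]
print("tightened node:", net_force(tightened_node))
```

In this toy case the cable tension must equal the strut compression for the node to stay put, which mirrors the way extra cable tension has to be matched by extra compression in the struts.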

In 1948, artist Kenneth Snelson produced his kite-like “X-Piece” tensegrity structure. Later, Buckminster Fuller coined the term tensegrity for these kinds of structures. Fuller recognized that the strength and efficiency of his huge geodesic domes were based on a similar kind of structural stabilization that distributes and balances mechanical stresses in space.

To some extent, we are tensegrity systems in which bones are under compression and balanced by the tension-bearing tendons. The cytoskeleton of a microscopic animal cell also resembles a tensegrity system. Tensegrity structures actually mimic some behaviors observed in living cells.

SEE ALSO Truss (2500 B.C.), Arch (1850 B.C.), I-Beams (1844), Leaning Tower of Lire (1955).

A diagram from U.S. Patent 3,695,617, issued in 1972 to G. Mogilner and R. Johnson, for “Tensegrity Structure Puzzle.” Rigid columns are shown in dark green. One objective is to try to remove the inner sphere by sliding the columns.

Hendrik Brugt Gerhard Casimir (1909–2000), Evgeny Mikhailovich Lifshitz (1915–1985)

The Casimir effect often refers to a weird attractive force that appears between two uncharged parallel plates in a vacuum. One possible way to understand the Casimir effect is to imagine the nature of a vacuum in space according to quantum field theory. “Far from being empty,” write physicists Stephen Reucroft and John Swain, “modern physics assumes that a vacuum is full of fluctuating electromagnetic waves that can never be completely eliminated, like an ocean with waves that are always present and can never be stopped. These waves come in all possible wavelengths, and their presence implies that empty space contains a certain amount of energy” called zero-point energy.

If the two parallel plates are brought very close together (e.g., a few nanometers apart), longer waves will not fit between them, and the total amount of vacuum energy between the plates will be smaller than that outside the plates, thus causing the plates to attract each other. One may imagine the plates as prohibiting all of the fluctuations that do not “fit” into the space between the plates. This attraction was first predicted in 1948 by physicist Hendrik Casimir.
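For ideal, perfectly conducting parallel plates at zero temperature, the standard textbook result for the attractive pressure is P = π²ħc / (240 d⁴), where d is the plate separation. The sketch below simply evaluates that idealized formula. Real plates deviate from it, and the function name `casimir_pressure` is only an illustrative choice.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light in vacuum, m/s

def casimir_pressure(separation_m):
    """Attractive pressure (Pa) between ideal parallel plates separation_m apart."""
    return math.pi ** 2 * HBAR * C / (240.0 * separation_m ** 4)

for d_nm in (10, 100, 1000):
    p = casimir_pressure(d_nm * 1e-9)
    print(f"d = {d_nm:5d} nm  ->  P ≈ {p:.3g} Pa")
```

Because the pressure scales as 1/d⁴, halving the gap multiplies the attraction by sixteen, which is why the effect matters mainly for micro- and nanoscale devices.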

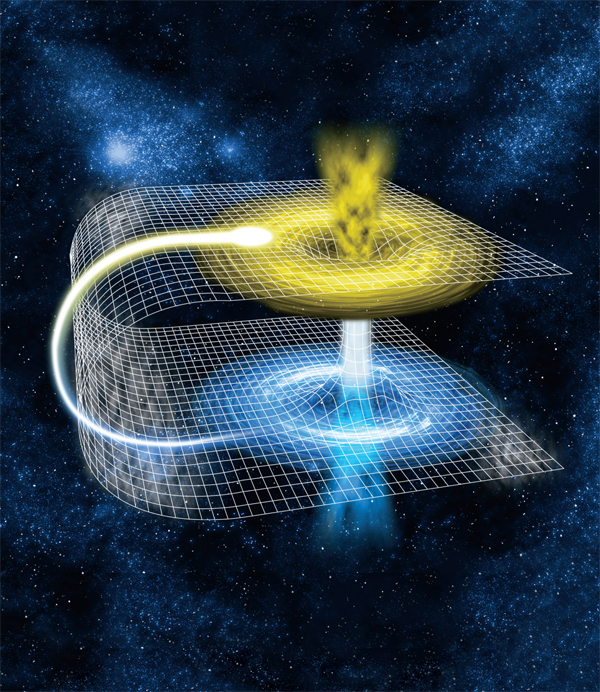

Theoretical applications of the Casimir effect have been proposed, ranging from using its “negative energy density” for propping open traversable wormholes between different regions of space and time to its use for developing levitating devices—after physicist Evgeny Lifshitz theorized that the Casimir effect can give rise to repulsive forces. Researchers working on micromechanical or nanomechanical robotic devices may need to take the Casimir effect into account as they design tiny machines.

In quantum theory, the vacuum is actually a sea of ghostly virtual particles springing in and out of existence. From this viewpoint, one can understand the Casimir effect by realizing that fewer virtual photons exist between the plates because some wavelengths are forbidden. The excess pressure of photons outside the plates squeezes the plates together. Note that Casimir forces can also be interpreted using other approaches without reference to zero-point energy.

SEE ALSO Third Law of Thermodynamics (1905), Wormhole Time Machine (1988), Quantum Resurrection (100 Trillion).

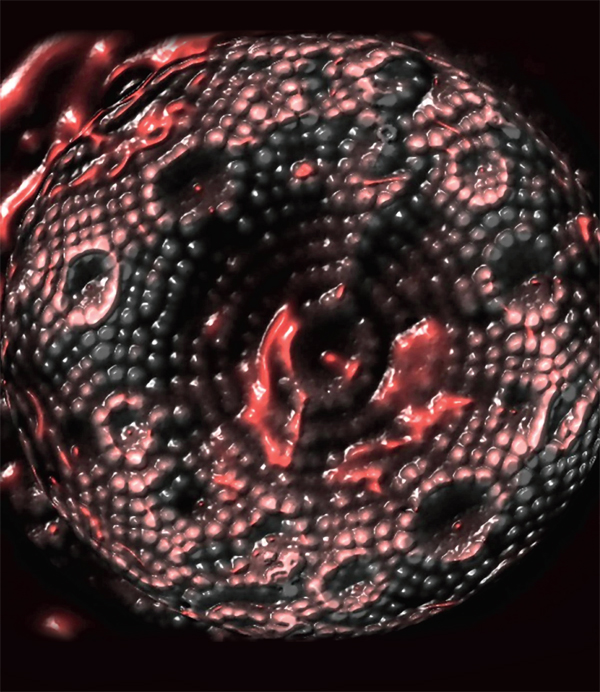

The sphere shown in this scanning electron microscope image is slightly over one tenth of a millimeter in diameter and moves toward a smooth plate (not shown) due to the Casimir Effect. Research on the Casimir Effect helps scientists better predict the functioning of micro-mechanical machine parts. (Photo courtesy of Umar Mohideen.)

Albert Einstein (1879–1955), Kurt Gödel (1906–1978), Kip Stephen Thorne (b. 1940)

What is time? Is time travel possible? For centuries, these questions have intrigued philosophers and scientists. Today, we know for certain that time travel is possible. For example, scientists have demonstrated that objects traveling at high speeds age more slowly than a stationary object sitting in a laboratory frame of reference. If you could travel on a near light-speed rocket into outer space and return, you could travel thousands of years into the Earth’s future. Scientists have verified this time slowing or “dilation” effect in a number of ways. For example, in the 1970s, scientists used atomic clocks on airplanes to show that these clocks had a slight slowing of time with respect to clocks on the Earth. Time is also significantly slowed near regions of very large masses.
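The size of the velocity effect can be sketched with the Lorentz factor of special relativity, γ = 1/√(1 − v²/c²): a clock moving at constant speed v records the Earth time divided by γ. The sketch below assumes a constant cruise speed and ignores acceleration phases and gravity. The function names are illustrative.

```python
import math

def lorentz_factor(beta):
    """Return gamma for a speed v = beta * c, with 0 <= beta < 1."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta ** 2)

def traveler_years(earth_years, beta):
    """Years that pass on board while earth_years pass on Earth."""
    return earth_years / lorentz_factor(beta)

for beta in (0.6, 0.99, 0.999999):
    gamma = lorentz_factor(beta)
    print(f"v = {beta} c: gamma = {gamma:.1f}, "
          f"1000 Earth years = {traveler_years(1000.0, beta):.2f} ship years")
```

At 60 percent of light speed the factor is only 1.25, so the dramatic "thousands of years into the future" scenario needs speeds extremely close to c.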

Although seemingly more difficult, numerous ways exist in which time machines for travel to the past can theoretically be built that do not seem to violate any known laws of physics. Most of these methods rely on high gravities or on wormholes (hypothetical “shortcuts” through space and time). To Isaac Newton, time was like a river flowing straight. Nothing could deflect the river. Einstein showed that the river could curve, although it could never circle back on itself, which would be a metaphor for backwards time travel. In 1949, mathematician Kurt Gödel went even further and showed that the river could circle back on itself. In particular, he found a disturbing solution to Einstein’s equations that allows backward time travel in a universe that rotated. For the first time in history, backward time travel had been given a mathematical foundation!

Throughout history, physicists have found that if phenomena are not expressly forbidden, they are often eventually found to occur. Today, designs for time travel machines are proliferating in top science labs and include such wild concepts as Thorne Wormhole Time Machines, Gott loops that involve cosmic strings, Gott shells, Tipler and van Stockum cylinders, and Kerr Rings. In the next few hundred years, perhaps our heirs will explore space and time to degrees we cannot currently fathom.

SEE ALSO Tachyons (1967), Wormhole Time Machine (1988), Special Theory of Relativity (1905), General Theory of Relativity (1915), Chronology Protection Conjecture (1992).

If time is like space, might the past, in some sense, still exist “back there” as surely as your home still exists even after you have left it? If you could travel back in time, which genius of the past would you visit?

Willard Frank Libby (1908–1980)

“If you were interested in finding out the age of things, the University of Chicago in the 1940s was the place to be,” writes author Bill Bryson. “Willard Libby was in the process of inventing radiocarbon dating, allowing scientists to get an accurate reading of the age of bones and other organic remains, something they had never been able to do before….”

Radiocarbon dating involves measuring the abundance of the radioactive isotope carbon-14 (¹⁴C) in a carbon-containing sample. The method relies on the fact that ¹⁴C is created in the atmosphere when cosmic rays strike nitrogen atoms. The ¹⁴C is then incorporated into plants, which animals subsequently eat. While an animal is alive, the abundance of ¹⁴C in its body roughly matches the atmospheric abundance. ¹⁴C continually decays at a known exponential rate, converting to nitrogen-14, and once the animal dies and no longer replenishes its ¹⁴C supply from the environment, the animal’s remains slowly lose ¹⁴C. By detecting the amount of ¹⁴C in a sample, scientists can estimate its age if the sample is not older than 60,000 years. Older samples generally contain too little ¹⁴C to measure accurately. ¹⁴C has a half-life of about 5,730 years, which means that every 5,730 years the amount of ¹⁴C in a sample drops by half. Because the amount of atmospheric ¹⁴C undergoes slight variations through time, small calibrations are made to improve the accuracy of the dating. Also, atmospheric ¹⁴C increased during the 1950s due to atomic bomb tests. Accelerator mass spectrometry can be used to detect ¹⁴C abundances in milligram samples.
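The half-life arithmetic can be sketched in a few lines. The example below assumes pure exponential decay with the 5,730-year half-life quoted above and skips the calibration for changing atmospheric carbon-14 that real laboratories apply. The function names are illustrative.

```python
import math

HALF_LIFE_YEARS = 5730.0  # approximate half-life of carbon-14, as quoted in the text

def remaining_fraction(age_years):
    """Fraction of the original carbon-14 left after age_years."""
    return 0.5 ** (age_years / HALF_LIFE_YEARS)

def estimated_age(fraction_remaining):
    """Uncalibrated age in years for a sample with the given carbon-14 fraction."""
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in (0, 1]")
    return -HALF_LIFE_YEARS * math.log2(fraction_remaining)

print(estimated_age(0.25))        # two half-lives
print(remaining_fraction(60000))  # what is left near the method's practical limit
```

After 60,000 years less than a tenth of a percent of the original carbon-14 survives, which is consistent with the remark that older samples are hard to measure.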

Before radiocarbon dating, it was very difficult to obtain reliable dates before the First Dynasty in Egypt, around 3000 B.C. This was quite frustrating for archeologists who were feverish to know, for example, when Cro-Magnon people painted the caves of Lascaux in France or when the last Ice Age finally ended.

SEE ALSO Olmec Compass (1000 B.C.), Hourglass (1338), Radioactivity (1896), Mass Spectrometer (1898), Atomic Clocks (1955).

Because carbon is very common, numerous kinds of materials are potentially useable for radiocarbon investigations, including ancient skeletons found during archeological digs, charcoal, leather, wood, pollen, antlers, and much more.

Enrico Fermi (1901–1954), Frank Drake (b. 1930)

During our Renaissance, rediscovered ancient texts and new knowledge flooded medieval Europe with the light of intellectual transformation, wonder, creativity, exploration, and experimentation. Imagine the consequences of making contact with an alien race. Another, far more profound Renaissance would be fueled by the wealth of alien scientific, technical, and sociological information. Given that our universe is both ancient and vast—there are an estimated 250 billion stars in our Milky Way galaxy alone—the physicist Enrico Fermi asked in 1950, “Why have we not yet been contacted by an extraterrestrial civilization?” Of course, many answers are possible. Advanced alien life could exist, but we are unaware of their presence. Alternatively, intelligent aliens may be so rare in the universe that we may never make contact with them. The Fermi Paradox, as it is known today, has given rise to scholarly works attempting to address the question in fields ranging from physics and astronomy to biology.

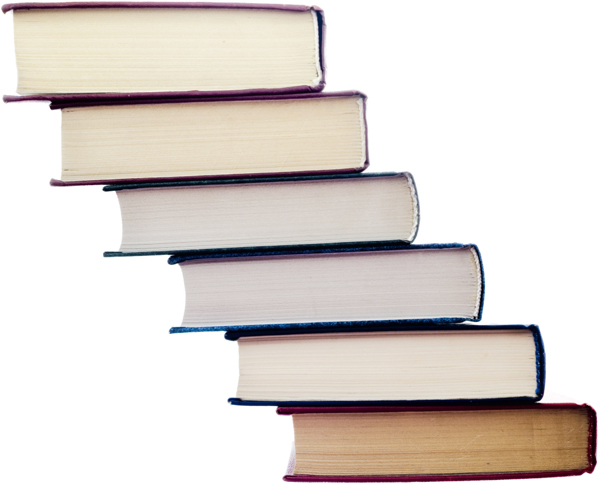

In 1960, astronomer Frank Drake suggested a formula to estimate the number of extraterrestrial civilizations in our galaxy with whom we might come into contact:

$$N = R^{*} \times f_p \times n_e \times f_l \times f_i \times f_c \times L$$

Here, $N$ is the number of alien civilizations in the Milky Way with which communication might be possible; for example, alien technologies may produce detectable radio waves. $R^{*}$ is the average rate of star formation per year in our galaxy. $f_p$ is the fraction of those stars that have planets (hundreds of extrasolar planets have been detected). $n_e$ is the average number of “Earth-like” planets that can potentially support life per star that has planets. $f_l$ is the fraction of these $n_e$ planets that actually yield life forms. $f_i$ is the fraction of those life-bearing planets that actually produce intelligent life. The variable $f_c$ represents the fraction of civilizations that develop a technology that releases detectable signs of their existence into outer space. $L$ is the length of time such civilizations release signals into space that we can detect. Because many of the parameters are very difficult to determine, the equation serves more to focus attention on the intricacies of the paradox than to resolve it.
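The Drake equation is conventionally written as the simple product of these seven factors, so it is easy to experiment with. The sketch below does that multiplication. Every input value in it is a purely hypothetical guess chosen for round numbers, not an estimate taken from the text or from any survey.

```python
def drake_n(r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years):
    """Number of detectable civilizations: the product of the seven Drake factors."""
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime_years

# Hypothetical inputs, for illustration only.
guess = dict(
    r_star=1.0,             # stars formed per year
    f_p=0.5,                # fraction of stars with planets
    n_e=2.0,                # habitable planets per planet-bearing star
    f_l=0.5,                # fraction of those that develop life
    f_i=0.1,                # fraction of those that develop intelligence
    f_c=0.1,                # fraction of those that emit detectable signals
    lifetime_years=10_000,  # years a civilization keeps signaling
)
print(f"N ≈ {drake_n(**guess):.1f}")
```

Changing any single guess by a factor of ten changes N by the same factor, which illustrates why the equation frames the question rather than answering it.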

SEE ALSO Tsiolkovsky Rocket Equation (1903), Time Travel (1949), Dyson Sphere (1960), Anthropic Principle (1961), Living in a Simulation (1967), Chronology Protection Conjecture (1992), Cosmic Isolation (100 Billion).

Given that our universe is both ancient and vast, the physicist Enrico Fermi asked in 1950, “Why have we not yet been contacted by an extraterrestrial civilization?”

Alexandre-Edmond Becquerel (1820–1891), Calvin Souther Fuller (1902–1994)

In 1973, British chemist George Porter said, “I have no doubt that we will be successful in harnessing the sun’s energy…. If sunbeams were weapons of war, we would have had solar energy centuries ago.” Indeed, the quest to efficiently create energy from sunshine has had a long history. Back in 1839, nineteen-year-old French physicist Edmond Becquerel discovered the photovoltaic effect in which certain materials produce small amounts of electric current when exposed to light. However, the most important breakthrough in solar power technology did not take place until 1954 when three scientists from Bell Laboratories (Daryl Chapin, Calvin Fuller, and Gerald Pearson) invented the first practical silicon solar cell for converting sunlight into electrical power. Its efficiency was only around 6% in direct sunlight, but today efficiencies of advanced solar cells can exceed 40%.

You may have seen solar panels on the roofs of buildings or used to power warning signs on highways. Such panels contain solar cells, commonly composed of two layers of silicon. The cell also has an antireflective coating to increase the absorption of sunlight. To ensure that the solar cell creates a useful electrical current, small amounts of phosphorus are added to the top silicon layer, and boron is added to the bottom layer. These additions cause the top layer to contain more electrons and the bottom layer to have fewer electrons. When the two layers are joined, electrons in the top layer move into the bottom layer very close to the junction between layers, thus creating an electric field at the junction. When photons of sunlight hit the cell, they knock loose electrons in both layers. The electric field pushes electrons that have reached the junction toward the top layer. This “push” or “force” can be used to move electrons out of the cell into attached metal conductor strips in order to generate electricity. To power a home, this direct-current electricity is converted to alternating current by a device called an inverter.

Solar panels used to power the equipment of a vineyard.

SEE ALSO Archimedes’ Burning Mirrors (212 B.C.), Battery (1800), Fuel Cell (1839), Photoelectric Effect (1905), Energy from the Nucleus (1942), Tokamak (1956), Dyson Sphere (1960).

Solar panels on the roof of a home.

Martin Gardner (1914–2010)

One day while walking through a library, you notice a stack of books leaning over the edge of a table. You wonder if it would be possible to stagger a stack of many books so that the top book would be far out into the room—say five feet—with the bottom book still resting on the table? Or would such a stack fall under its own weight? For simplicity, the books are assumed to be identical, and you are only allowed to have one book at each level of the stack; in other words, each book rests on at most one other book.

The problem has puzzled physicists since at least the early 1800s, and in 1955 was referred to as the Leaning Tower of Lire in the American Journal of Physics. The problem received extra attention in 1964 when Martin Gardner discussed it in Scientific American.

The stack of n books will not fall if the stack has a center of mass that is still above the table. In other words, the center of mass of all books above any book B must lie on a vertical axis that “cuts” through B. Amazingly, there is no limit to how far you can make the stack jut out beyond the table’s edge. Martin Gardner referred to this arbitrarily large overhang as the infinite-offset paradox. For an overhang of just 3 book lengths, you’d need a walloping 227 books! For 10 book lengths, you’d need 272,400,600 books. And for 50 book lengths you’d need more than 1.5 × 10⁴⁴ books. A formula for the amount of overhang attainable with n books, in book lengths, can be used: 0.5 × (1 + 1/2 + 1/3 + … + 1/n). This harmonic series diverges very slowly; thus, a modest increase in book overhang requires many more books. Additional fascinating work has since been conducted on this problem after removing the constraint of having only one book at each level of the stack.
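The overhang formula is easy to check numerically. The sketch below sums the harmonic series directly and counts how many books are needed to reach a given overhang. The function names are illustrative, and the brute-force loop is only practical for small targets.

```python
def overhang(n_books):
    """Maximum overhang, in book lengths, for n_books stacked one per level."""
    return 0.5 * sum(1.0 / k for k in range(1, n_books + 1))

def books_needed(target_overhang):
    """Smallest number of books whose overhang reaches target_overhang."""
    harmonic_sum, n = 0.0, 0
    while 0.5 * harmonic_sum < target_overhang:
        n += 1
        harmonic_sum += 1.0 / n
    return n

for target in (1, 2, 3):
    print(f"overhang of {target} book length(s): {books_needed(target)} books")
```

The loop returns 4, 31, and 227 books for overhangs of 1, 2, and 3 book lengths, the last matching the figure quoted above. The larger counts in the text are far beyond what a simple loop can reach in reasonable time.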

SEE ALSO Arch (1850 B.C.), Truss (2500 B.C.), Tensegrity (1948).

Would it be possible to stagger a stack of many books so that the top book would be many feet into the room, with the bottom book still resting on the table? Or would such a stack fall under its own weight?

Max Knoll (1897–1969), Ernst August Friedrich Ruska (1906–1988), Erwin Wilhelm Müller (1911–1977), Albert Victor Crewe (1927–2009)

“Dr. Crewe’s research opened a new window into the Lilliputian world of the fundamental building blocks of nature,” writes journalist John Markoff, “giving [us] a powerful new tool to understand the architecture of everything from living tissue to metal alloys.”

The world had never “seen” an atom using an electron microscope before University of Chicago Professor Albert Crewe used his first successful version of the scanning transmission electron microscope, or STEM. Although the concept of an elementary particle had been proposed in the fifth century B.C. by the Greek philosopher Democritus, atoms were far too small to be visualized using optical microscopes. In 1970, Crewe published his landmark paper titled “Visibility of Single Atoms” in the journal Science, which presented photographic evidence of atoms of uranium and thorium.

“After attending a conference in England and forgetting to buy a book at the airport for the flight home, he pulled out a pad of paper on the plane and sketched two ways to improve existing microscopes,” writes Markoff. Later, Crewe designed an improved source of electrons (a field emission gun) for scanning the specimen.

Electron microscopes employ a beam of electrons to illuminate a specimen. Using the transmission electron microscope, invented around 1933 by Max Knoll and Ernst Ruska, electrons pass through a thin sample followed by a magnetic lens produced by a current-carrying coil. A scanning electron microscope employs electric and magnetic lenses before the sample, allowing electrons to be focused onto a small spot that is then scanned across the surface. The STEM is a hybrid of both approaches.

In 1955, physicist Erwin Müller used a field ion microscope to visualize atoms. The device used a large electric field, applied to a sharp metal tip in a gas. The gas atoms arriving at the tip are ionized and detected. Physicist Peter Nellist writes, “Because this process is more likely to occur at certain places on the surface of the tip, such as at steps in the atomic structure, the resulting image represents the underlying atomic structure of the sample.”

SEE ALSO Von Guericke’s Electrostatic Generator (1660), Micrographia (1665), Atomic Theory (1808), Bragg’s Law of Crystal Diffraction (1912), Quantum Tunneling (1928), Nuclear Magnetic Resonance (1938).

A field ion microscope (FIM) image of a very sharp tungsten needle. The small roundish features are individual atoms. Some of the elongated features are caused by atoms moving during the imaging process (approximately 1 second).

Louis Essen (1908–1997)

Clocks have become more accurate through the centuries. Early mechanical clocks, such as the fourteenth-century Dover Castle clock, varied by several minutes each day. When pendulum clocks came into general use in the 1600s, clocks became accurate enough to record minutes as well as hours. In the 1900s, vibrating quartz crystals were accurate to fractions of a second per day. In the 1980s, cesium atom clocks lost less than a second in 3,000 years, and, in 2009, an atomic clock known as NIST-F1—a cesium fountain atomic clock—was accurate to a second in 60 million years!

Atomic clocks are accurate because they involve the counting of periodic events involving two different energy states of an atom. Identical atoms of the same isotope (atoms having the same number of nucleons) are the same everywhere; thus, clocks can be built and run independently to measure the same time intervals between events. One common type of atomic clock is the cesium clock, in which a microwave frequency is found that causes the atoms to make a transition from one energy state to another. The cesium atoms begin to fluoresce at a natural resonance frequency of the cesium atom (9,192,631,770 Hz, or cycles per second), which is the frequency used to define the second. Measurements from many cesium clocks throughout the world are combined and averaged to define an international time scale.
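Counting cycles is all a cesium clock has to do to keep time, and accuracy claims of the "one second in so many years" kind translate directly into a fractional error. The sketch below shows both conversions. The function names are illustrative, and a year is taken as 365.25 days.

```python
CESIUM_HZ = 9_192_631_770  # cycles per second; this value defines the second

def seconds_from_cycles(cycles):
    """Elapsed time, in seconds, for a counted number of cesium cycles."""
    return cycles / CESIUM_HZ

def fractional_error(seconds_lost, over_years):
    """Fractional timing error for a clock that drifts seconds_lost in over_years."""
    seconds_per_year = 365.25 * 24 * 3600
    return seconds_lost / (over_years * seconds_per_year)

print(seconds_from_cycles(9_192_631_770))   # one second by definition
print(f"{fractional_error(1, 3000):.2e}")   # a clock losing 1 s in 3,000 years
print(f"{fractional_error(1, 60e6):.2e}")   # a clock losing 1 s in 60 million years
```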

One important use of atomic clocks is exemplified by the GPS (global positioning system). This satellite-based system enables users to determine their positions on the ground. To ensure accuracy, the satellites must send out accurately timed radio pulses, which receiving devices need to determine their positions.
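The need for precise timing follows from simple arithmetic: a receiver infers its distance to a satellite from the signal travel time multiplied by the speed of light, so any timing error becomes a distance error. The sketch below shows only that conversion. It is a simplification that ignores atmospheric delays and the geometry of combining several satellites, and the function name is illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def range_error_m(clock_error_s):
    """Distance error (meters) caused by a timing error of clock_error_s seconds."""
    return C * clock_error_s

for label, err in (("1 nanosecond", 1e-9), ("1 microsecond", 1e-6)):
    print(f"{label}: about {range_error_m(err):.1f} m")
```

A timing error of a single microsecond already corresponds to roughly 300 meters, which is why satellite clocks must be so stable.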

English physicist Louis Essen created the first accurate atomic clock in 1955, based on energy transitions of the cesium atom. Clocks based on other atoms and methods are continually being researched in labs worldwide in order to increase accuracy and decrease cost.

SEE ALSO Hourglass (1338), Anniversary Clock (1841), Stokes’ Fluorescence (1852), Time Travel (1949), Radiocarbon Dating (1949).

In 2004, scientists at the National Institute of Standards and Technology (NIST) demonstrated a tiny atomic clock, the inner workings of which were about the size of a grain of rice. The clock included a laser and a cell containing a vapor of cesium atoms.

Hugh Everett III (1930–1982), Max Tegmark (b. 1967)

A number of prominent physicists now suggest that universes exist that are parallel to ours and that might be visualized as layers in a cake, bubbles in a milkshake, or buds on an infinitely branching tree. In some theories of parallel universes, we might actually detect these universes by gravity leaks from one universe to an adjacent universe. For example, light from distant stars may be distorted by the gravity of invisible objects residing in parallel universes only millimeters away. The entire idea of multiple universes is not as far-fetched as it may sound. According to a poll of 72 leading physicists conducted by the American researcher David Raub and published in 1998, 58% of physicists (including Stephen Hawking) believe in some form of multiple universes theory.

Many flavors of parallel-universe theory exist. For example, Hugh Everett III’s 1956 doctoral thesis “The Theory of the Universal Wavefunction” outlines a theory in which the universe continually “branches” into countless parallel worlds. This theory is called the many-worlds interpretation of quantum mechanics and posits that whenever the universe (“world”) is confronted by a choice of paths at the quantum level, it actually follows the various possibilities. If the theory is true, then all kinds of strange worlds may “exist” in some sense. In a number of worlds, Hitler won World War II. Sometimes, the term “multiverse” is used to suggest the idea that the universe that we can readily observe is only part of the reality that comprises the multiverse, the set of possible universes.

If our universe is infinite, then identical copies of our visible universe may exist, with an exact copy of our Earth and of you. According to physicist Max Tegmark, on average, the nearest of these identical copies of our visible universe is about 10 to the 10¹⁰⁰ meters away. Not only are there infinite copies of you, there are infinite copies of variants of you. Chaotic Cosmic Inflation theory also suggests the creation of different universes, with perhaps countless copies of you existing but altered in fantastically beautiful and ugly ways.

SEE ALSO Wave Nature of Light (1801), Schrödinger’s Cat (1935), Anthropic Principle (1961), Living in a Simulation (1967), Cosmic Inflation (1980), Quantum Computers (1981), Quantum Immortality (1987), Chronology Protection Conjecture (1992).

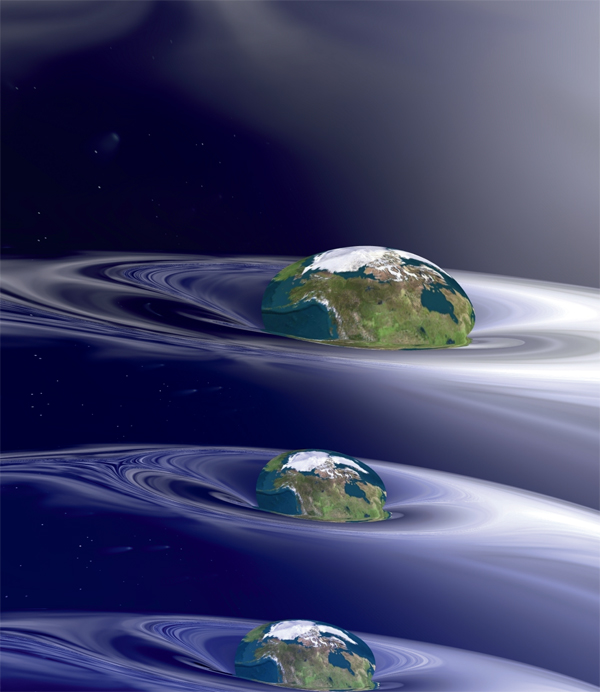

Some interpretations of quantum mechanics posit that whenever the universe is confronted by a choice of paths at the quantum level, it actually follows the various possibilities. Multiverse implies that our observable universe is part of a reality that includes other universes.

Wolfgang Ernst Pauli (1900–1958), Frederick Reines (1918–1998), Clyde Lorrain Cowan, Jr. (1919–1974)

In 1993, physicist Leon Lederman wrote, “Neutrinos are my favorite particles. A neutrino has almost no properties: no mass (or very little), no electric charge … and, adding insult to injury, no strong force acts on it. The euphemism used to describe a neutrino is ‘elusive.’ It is barely a fact, and it can pass through millions of miles of solid lead with only a tiny chance of being involved in a measurable collision.”

In 1930, physicist Wolfgang Pauli predicted the essential properties of the neutrino (no charge, very little mass)—to explain the loss of energy during certain forms of radioactive decay. He suggested that the missing energy might be carried away by ghostlike particles that escaped detection. Neutrinos were first detected in 1956 by physicists Frederick Reines and Clyde Cowan in their experiments at a nuclear reactor in South Carolina.

Each second, over 100 billion neutrinos from the Sun pass through every square inch (6.5 square centimeters) of our bodies, and virtually none of them interact with us. According to the Standard Model of particle physics, neutrinos do not have a mass; however, in 1998, the subterranean Super Kamiokande neutrino detector in Japan was used to determine that they actually have a minuscule mass. The detector used a large volume of water surrounded by detectors for Cherenkov Radiation emitted from neutrino collisions. Because neutrinos interact so weakly with matter, neutrino detectors must be huge to increase the chances of detection. The detectors also reside beneath the Earth’s surface to shield them from other forms of background radiation such as Cosmic Rays.

Today, we know that there are three known types, or flavors, of neutrinos and that neutrinos are able to oscillate between the three flavors while they travel through space. For years, scientists wondered why they detected so few of the expected neutrinos from the Sun’s energy-producing fusion reactions. However, the solar neutrino flux only appears to be low because the other neutrino flavors are not easily observed by some neutrino detectors.

SEE ALSO Radioactivity (1896), Cherenkov Radiation (1934), Standard Model (1961), Quarks (1964).

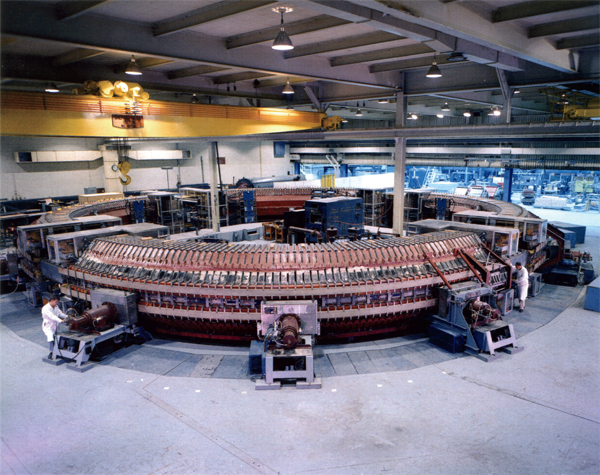

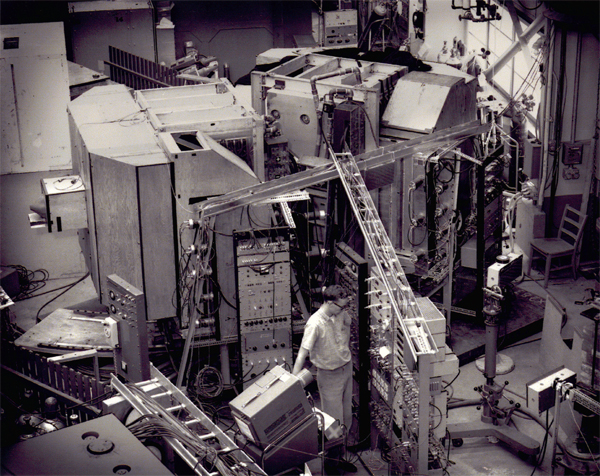

The Fermi National Accelerator Laboratory, near Chicago, uses protons from an accelerator to produce an intense beam of neutrinos that allows physicists to observe neutrino oscillations at a distant detector. Shown here are “horns” that help focus the particles that decay and produce neutrinos.

Igor Yevgenyevich Tamm (1895–1971), Lev Andreevich Artsimovich (1909–1973), Andrei Dmitrievich Sakharov (1921–1989)

Fusion reactions in the Sun bathe the Earth in light and energy. Can we learn to safely generate energy by fusion here on Earth to provide more direct power for human needs? In the Sun, four hydrogen nuclei (four protons) are fused into a helium nucleus. The resulting helium nucleus is less massive than the hydrogen nuclei that combine to make it, and the missing mass is converted to energy according to Einstein’s E = mc². The huge pressures and temperatures needed for the Sun’s fusion are aided by its crushing gravitation.
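The missing mass can be turned into an energy figure with a short calculation. The sketch below uses rounded, standard tabulated atomic masses for hydrogen-1 and helium-4 and the energy equivalent of one atomic mass unit. None of these numbers appear in the text. Using atomic rather than bare nuclear masses is a common bookkeeping shortcut that folds in the electrons, and the result includes the small share of energy carried off by neutrinos.

```python
U_TO_MEV = 931.494   # energy equivalent of one atomic mass unit, in MeV
M_H1 = 1.00782503    # atomic mass of hydrogen-1, in u (rounded tabulated value)
M_HE4 = 4.00260325   # atomic mass of helium-4, in u (rounded tabulated value)

def mass_defect_u():
    """Mass lost, in atomic mass units, when four hydrogen-1 atoms form one helium-4 atom."""
    return 4 * M_H1 - M_HE4

def fusion_energy_mev():
    """Energy released per helium-4 produced, from E = m * c**2."""
    return mass_defect_u() * U_TO_MEV

fraction = mass_defect_u() / (4 * M_H1)
print(f"energy released ≈ {fusion_energy_mev():.1f} MeV per helium nucleus")
print(f"fraction of mass converted ≈ {fraction:.2%}")
```

Only about 0.7 percent of the starting mass is converted, yet the energy per reaction is millions of times larger than that of a typical chemical reaction.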

Scientists wish to create nuclear fusion reactions on Earth by generating sufficiently high temperatures and densities so that gases consisting of hydrogen isotopes (deuterium and tritium) become a plasma of free-floating nuclei and electrons—and then the resultant nuclei may fuse to produce helium and neutrons with the release of energy. Unfortunately, no material container can withstand the extremely high temperatures needed for fusion. One possible solution is a device called a tokamak, which employs a complex system of magnetic fields to confine and squeeze the plasmas within a hollow, doughnut-shaped container. This hot plasma may be created by magnetic compression, microwaves, electricity, and neutral particle beams from accelerators. The plasma then circulates around the tokamak without touching its walls. Today, the world’s largest tokamak is ITER, under construction in France.

Researchers continue to perfect their tokamaks with the goal of creating a system that generates more energy than is required for the system’s operation. If such a tokamak can be built, it would have many benefits. First, small amounts of fuel required are easy to obtain. Second, fusion does not have the high-level radioactive waste problems of current fission reactors in which the nucleus of an atom, such as uranium, splits into smaller parts, with a large release of energy.

The tokamak was invented in the 1950s by Soviet physicists Igor Tamm and Andrei Sakharov, and perfected by Lev Artsimovich. Today, scientists also study the possible use of inertial confinement of the hot plasma using laser beams.

SEE ALSO Plasma (1879), E = mc² (1905), Energy from the Nucleus (1942), Stellar Nucleosynthesis (1946), Solar Cells (1954), Dyson Sphere (1960).

A photo of the National Spherical Torus Experiment (NSTX), an innovative magnetic fusion device, based on a spherical tokamak concept. NSTX was constructed by the Princeton Plasma Physics Laboratory (PPPL) in collaboration with the Oak Ridge National Laboratory, Columbia University, and the University of Washington at Seattle.

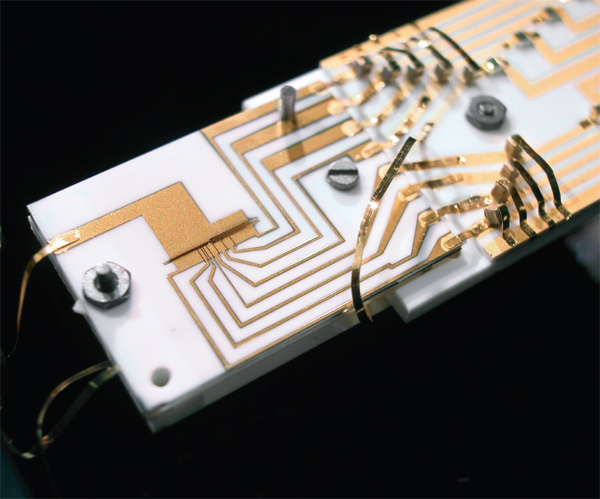

Jack St. Clair Kilby (1923–2005), Robert Norton Noyce (1927–1990)

“It seems that the integrated circuit was destined to be invented,” writes technology-historian Mary Bellis. “Two separate inventors, unaware of each other’s activities, invented almost identical integrated circuits, or ICs, at nearly the same time.”

In electronics, an IC, or microchip, is a miniaturized electronic circuit that relies upon semiconductor devices and is used today in countless examples of electronic equipment, ranging from coffeemakers to fighter jets. The conductivity of a semiconductor material can be controlled by introduction of an electric field. With the invention of the monolithic IC (formed from a single crystal), the traditionally separate transistors, resistors, capacitors, and all wires could now be placed on a single crystal (or chip) made of semiconductor material. Compared with the manual assembly of discrete circuits of individual components, such as resistors and transistors, an IC can be made more efficiently using the process of photolithography, which involves selectively transferring geometric shapes on a mask to the surface of a material such as a silicon wafer. The speed of operations is also higher in ICs because the components are small and tightly packed.

Physicist Jack Kilby invented the IC in 1958. Working independently, physicist Robert Noyce invented the IC six months later. Noyce used silicon for the semiconductor material, and Kilby used germanium. Today, a postage-stamp-sized chip can contain over a billion transistors. The advances in capability and density—and decrease in price—led technologist Gordon Moore to say, “If the auto industry advanced as rapidly as the semiconductor industry, a Rolls Royce would get a half a million miles per gallon, and it would be cheaper to throw it away than to park it.”

Kilby invented the IC as a new employee at Texas Instruments during the company’s late-July vacation time when the halls of his employer were deserted. By September, Kilby had built a working model, and on February 6, 1959, Texas Instruments filed a patent.

SEE ALSO Kirchhoff’s Circuit Laws (1845), Transistor (1947), Cosmic Rays (1910), Quantum Computers (1981).

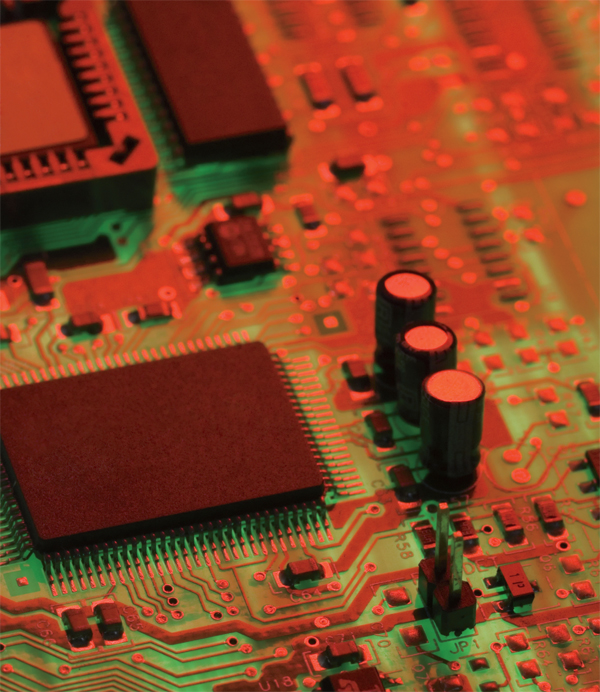

The exterior packaging of a microchip (e.g., large rectangular shape at left) houses the integrated circuit inside, which contains the tiny components such as transistor devices. The housing protects the much smaller integrated circuit and provides a means of connecting the chip to a circuit board.

John Frederick William Herschel (1792–1871), William Alison Anders (b. 1933)

Due to the particular gravitational forces between the Moon and the Earth, the Moon takes just as long to rotate around its own axis as it does to revolve around the Earth; thus, the same side of the Moon always faces the Earth. The “dark side of the moon” is the phrase commonly used for the far side of the Moon that can never be seen from the Earth. In 1870, the famous astronomer Sir John Herschel wrote that the Moon’s far side might contain an ocean of ordinary water. Later, flying saucer buffs speculated that the far side could be harboring a hidden base for extraterrestrials. What secrets did it actually hold?

Finally, in 1959, we had our first glimpse of the far side when it was photographed by the Soviet Luna 3 probe. The first atlas of the far side of the Moon was published by the USSR Academy of Sciences in 1960. Physicists have suggested that the far side might be used for a large radio telescope that is shielded from terrestrial radio interference.

The far side is actually not always dark, and both the side that faces us and the far side receive similar amounts of sunlight. Curiously, the near and far sides have vastly different appearances. In particular, the side toward us contains many large “maria” (relatively smooth areas that looked like seas to ancient astronomers). In contrast, the far side has a blasted appearance with more craters. One reason for this disparity arises from the increased volcanic activity around three billion years ago on the near side, which created the relatively smooth basaltic lavas of the maria. The far side crust may be thicker and thus was able to contain the interior molten material. Scientists still debate the possible causes.

In 1968, humans finally gazed directly on the far side of the moon during America’s Apollo 8 mission. Astronaut William Anders, who traveled to the moon, described the view: “The backside looks like a sand pile my kids have played in for some time. It’s all beat up, no definition, just a lot of bumps and holes.”

SEE ALSO Telescope (1608), Discovery of Saturn’s Rings (1610).

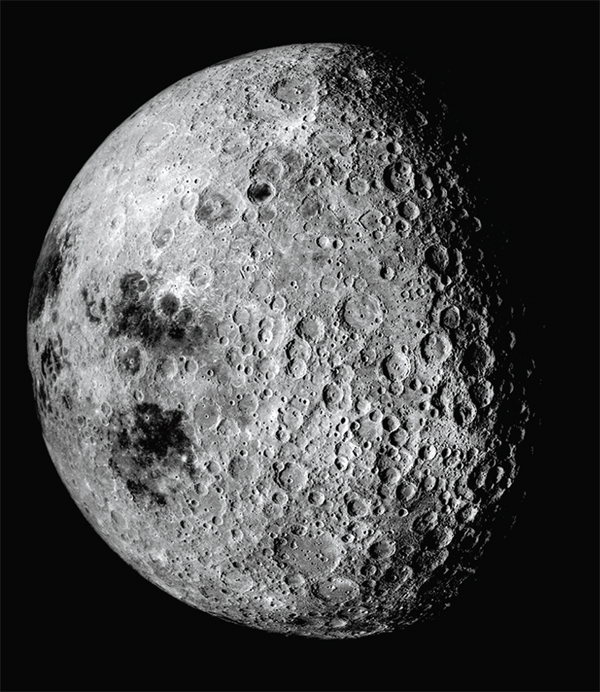

The far side of the moon, with its strangely rough and battered surface, looks very different than the surface facing the Earth. This view was photographed in 1972 by Apollo 16 astronauts while in lunar orbit.

William Olaf Stapledon (1886–1950), Freeman John Dyson (b. 1923)

In 1937, British philosopher and author Olaf Stapledon described an immense artificial structure in his novel Star Maker: “As the eons advanced … many a star without natural planets came to be surrounded by concentric rings of artificial worlds. In some cases the inner rings contained scores, the outer rings thousands of globes adapted to life at some particular distance from the Sun.”

Stimulated by Star Maker, in 1960, physicist Freeman Dyson published a technical paper in the prestigious journal Science on a hypothetical spherical shell that might encompass a star and capture a large percentage of its energy. As technological civilizations advanced, such structures would be desired in order to meet their vast energy needs. Dyson actually had in mind a swarm of artificial objects orbiting a star, but science-fiction authors, physicists, teachers, and students have ever since wondered about the possible properties of a rigid shell with a star at its center, with aliens potentially inhabiting the inner surface of the sphere.

In one embodiment, the Dyson Sphere would have a radius equal to the distance of the Earth to the Sun and would thus have a surface area 550 million times the Earth’s surface area. Interestingly, the central star would have no net gravitational attraction on the shell, which may dangerously drift relative to the star unless adjustments could be made to the shell’s position. Similarly, any creatures or objects on the inner surface of the sphere would not be gravitationally attracted to the sphere. In a related concept, creatures could still reside on a planet, and the shell could be used to capture energy from the star. Dyson had originally estimated that sufficient planetary and other material existed in the solar system to create such a sphere with a 3-meter thick shell. Dyson also speculated that Earthlings might be able to detect a far-away Dyson Sphere because it would absorb starlight and re-radiate energy in readily definable ways. Researchers have already attempted to find possible evidence of such constructs by searching for their infrared signals.
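The surface-area comparison above can be checked with a few lines of arithmetic. The sketch below assumes round textbook values for the Earth-Sun distance and the Earth's mean radius (neither number appears in the text), and treats both bodies as perfect spheres.

```python
import math

# Assumed round values, not taken from the text:
AU_M = 1.496e11           # mean Earth-Sun distance in meters
EARTH_RADIUS_M = 6.371e6  # mean radius of the Earth in meters


def sphere_area(radius_m: float) -> float:
    """Surface area of a sphere, 4 * pi * r^2, in square meters."""
    return 4.0 * math.pi * radius_m ** 2


# Ratio of the shell's area to the Earth's surface area.
# The 4*pi factors cancel, so this is just (AU / Earth radius) squared.
area_ratio = sphere_area(AU_M) / sphere_area(EARTH_RADIUS_M)

print(f"Shell area / Earth area: about {area_ratio / 1e6:.0f} million")
```

Under these assumptions the ratio lands in the neighborhood of the 550 million quoted above.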

SEE ALSO Measuring the Solar System (1672), Fermi Paradox (1950), Solar Cells (1954), Tokamak (1956).

Artistic depiction of a Dyson sphere that might encompass a star and capture a large percentage of its energy. The lightning effects depicted here represent the capture of energy at the inner surface of the sphere.

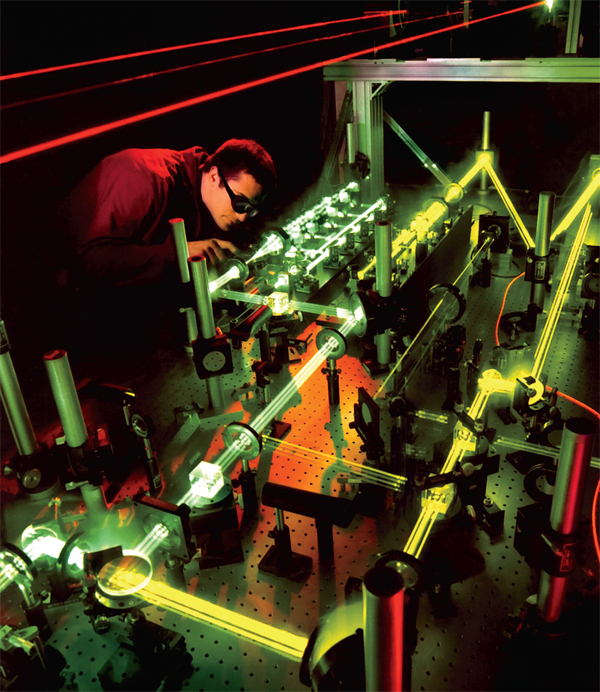

Charles Hard Townes (b. 1915), Theodore Harold “Ted” Maiman (1927–2007)

“Laser technology has become important in a wide range of practical applications,” writes laser-expert Jeff Hecht, “ranging from medicine and consumer electronics to telecommunications and military technology. Lasers are also vital tools on the cutting edge of research—18 recipients of the Nobel Prize received the award for laser-related research, including the laser itself, holography, laser cooling, and Bose-Einstein Condensates.”

The word laser stands for light amplification by stimulated emission of radiation, and lasers make use of a subatomic process known as stimulated emission, first considered by Albert Einstein in 1917. In stimulated emission, a photon (a particle of light) of the appropriate energy causes an electron to drop to a lower energy level, which results in the creation of another photon. This second photon is said to be coherent with the first and has the same phase, frequency, polarization, and direction of travel as the first photon. If the photons are reflected so that they repeatedly traverse the same atoms, an amplification can take place and an intense radiation beam is emitted. Lasers can be created so that they emit electromagnetic radiations of various kinds, and thus there are X-ray lasers, ultraviolet lasers, infrared lasers, etc. The resultant beam may be highly collimated—NASA scientists have bounced laser beams generated on the Earth from reflectors left on the moon by astronauts. At the Moon’s surface, this beam is a mile or two wide (about 2.5 kilometers), which is actually a rather small spread when compared to ordinary light from a flashlight!
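To get a feel for how small that spread is, here is a rough sketch of the geometry. It assumes a mean Earth-Moon distance of about 384,400 kilometers and, purely for illustration, a flashlight beam that fans out over about 10 degrees; neither value comes from the text.

```python
import math

# Assumed values, not taken from the text:
EARTH_MOON_KM = 384_400        # mean Earth-Moon distance
FLASHLIGHT_SPREAD_DEG = 10.0   # hypothetical full spread of a flashlight beam


def divergence_rad(spot_width_km: float, distance_km: float) -> float:
    """Full beam spread angle, using the small-angle approximation."""
    return spot_width_km / distance_km


def spot_width_km(spread_rad: float, distance_km: float) -> float:
    """Width of the illuminated spot at a given distance (small-angle form)."""
    return spread_rad * distance_km


laser_spread = divergence_rad(2.5, EARTH_MOON_KM)
flashlight_spot = spot_width_km(math.radians(FLASHLIGHT_SPREAD_DEG), EARTH_MOON_KM)

print(f"Laser spread: {laser_spread:.1e} radians")
print(f"Flashlight spot at the Moon: about {flashlight_spot:,.0f} km wide")
```

With these assumed numbers, the laser's spread is a few millionths of a radian, while the hypothetical flashlight beam would be tens of thousands of kilometers wide by the time it reached the Moon.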

In 1953, physicist Charles Townes and students produced the first microwave laser (a maser), but it was not capable of continuous radiation emission. Theodore Maiman created the first practical working laser in 1960, using pulsed operation, and today the largest applications of lasers include DVD and CD players, fiber-optic communications, bar-code readers, and laser printers. Other uses include bloodless surgery and target-marking for weapons use. Research continues in the use of lasers capable of destroying tanks or airplanes.

SEE ALSO Brewster’s Optics (1815), Hologram (1947), Bose-Einstein Condensate (1995).

An optical engineer studies the interaction of several lasers that will be used aboard a laser weapons system being developed to defend against ballistic missile attacks. The U.S. Directed Energy Directorate conducts research into beam-control technologies.

Joseph William Kittinger II (b. 1928)

Perhaps many of you have heard the gruesome legend of the killer penny. If you were to drop a penny from the Empire State Building in New York City, the penny would gain speed and could kill someone walking on the street below, penetrating the brain.

Fortunately for pedestrians below, the physics of terminal velocity saves them from this ghastly demise. The penny falls about 500 feet (152 meters) before reaching its maximum velocity: about 50 miles an hour (80 kilometers/hour). A bullet travels at ten times this speed. The penny is not likely to kill anyone, thus debunking the legend. Updrafts also tend to slow the penny. The penny is not shaped like a bullet, and the odds are that the tumbling coin would barely break the skin.

As an object moves through a medium (such as air or water), it encounters a resistive force that slows it down. For objects in free-fall through air, the force depends on the square of the speed, the area of the object, and the density of air. The faster the object falls, the greater the opposing force becomes. As the penny accelerates downward, eventually the resistive force grows to such an extent that the penny finally falls at a constant speed, known as the terminal velocity. This occurs when the viscous drag on the object is equal to the force of gravity.

Skydivers reach a terminal velocity of about 120 miles per hour (190 kilometers/hour) if they spread their feet and arms. If they assume a heads-down diving position, they reach a velocity of about 150 miles per hour (240 kilometers/hour).

The highest terminal velocity ever to have been reached by a human in free fall was achieved in 1960 by U.S. military officer Joseph Kittinger II, who is estimated to have reached 614 miles per hour (988 kilometers/hour) due to the high altitude (and hence lower air density) of his jump from a balloon. His jump started at 102,800 feet (31,300 meters), and he opened his parachute at 18,000 feet (5,500 meters).
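The balance between drag and gravity described above can be sketched numerically. The example assumes the standard quadratic drag model, in which the drag force is one-half times air density times speed squared times area times a drag coefficient. The skydiver's mass, frontal area, and drag coefficient are illustrative guesses, not values from the text.

```python
import math

G = 9.81             # gravitational acceleration, m/s^2
MPS_TO_MPH = 2.23694  # meters per second to miles per hour


def terminal_velocity(mass_kg, area_m2, drag_coeff, air_density=1.225):
    """Speed at which quadratic drag equals weight.

    Setting m*g = 0.5 * rho * v^2 * A * Cd and solving for v gives
    v = sqrt(2*m*g / (rho * A * Cd)).
    """
    return math.sqrt(2.0 * mass_kg * G / (air_density * area_m2 * drag_coeff))


# Hypothetical skydiver: 80 kg, 0.5 m^2 frontal area, drag coefficient 0.9.
sea_level = terminal_velocity(80.0, 0.5, 0.9)

# Same body in air one-tenth as dense (an illustrative stand-in for high altitude).
thin_air = terminal_velocity(80.0, 0.5, 0.9, air_density=0.1225)

print(f"Sea-level air: {sea_level * MPS_TO_MPH:.0f} mph")
print(f"Thin air:      {thin_air * MPS_TO_MPH:.0f} mph")
```

With these guesses the sea-level figure comes out close to the spread-eagle speed quoted above, and cutting the air density raises the terminal velocity, which is the effect that helped Kittinger reach such a high speed. The model ignores how density changes continuously during a real fall.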

SEE ALSO Acceleration of Falling Objects (1638), Escape Velocity (1728), Bernoulli’s Law of Fluid Dynamics (1738), Baseball Curveball (1870), Super Ball (1965).

Skydivers reach a terminal velocity of about 120 miles per hour (190 kilometers/hour) if they spread their feet and arms.

Robert Henry Dicke (1916–1997), Brandon Carter (b. 1942)

“As our knowledge of the cosmos has increased,” writes physicist James Trefil, “… it has become apparent that, had the universe been put together just a little differently, we could not be here to contemplate it. It is as though the universe has been made for us—a Garden of Eden of the grandest possible design.”

While this statement is subject to continued debate, the anthropic principle fascinates both scientists and laypeople and was first elucidated in detail in a publication by astrophysicist Robert Dicke in 1961 and later developed by physicist Brandon Carter and others. The controversial principle revolves around the observation that at least some physical parameters appear to be tuned to permit life forms to evolve. For example, we owe our very lives to the element carbon, which was first manufactured in stars before the Earth formed. The nuclear reactions that facilitate the production of carbon have the appearance, at least to some researchers, of being “just right” to facilitate carbon production.

If all of the stars in the universe were heavier than three solar masses, they would live for only about 500 million years, and multicellular life would not have time to evolve. If the rate of the universe’s expansion one second after the Big Bang had been smaller by even one part in a hundred thousand million million, the universe would have recollapsed before reaching its present size. On the other hand, the universe might have expanded so rapidly that protons and electrons never united to make hydrogen atoms. An extremely small change in the strength of gravity or of the nuclear weak force could prevent advanced life forms from evolving.

An infinite number of random (non-designed) universes could exist, ours being just one that permits carbon-based life. Some researchers have speculated that child universes are constantly budding off from parent universes and that the child universe inherits a set of physical laws similar to the parent, a process reminiscent of evolution of biological characteristics of life on Earth. Universes with many stars may be long-lived and have the opportunity to have many children with many stars; thus, perhaps our star-filled universe is not quite so unusual after all.

SEE ALSO Parallel Universes (1956), Fermi Paradox (1950), Living in a Simulation (1967).

If values for certain fundamental constants of physics were a little different, intelligent carbon-based life may have had great difficulty evolving. To some religious individuals, this gives the impression that the universe was fine-tuned to permit our existence.

Murray Gell-Mann (b. 1929), Sheldon Lee Glashow (b. 1932), George Zweig (b. 1937)

“Physicists had learned, by the 1930s, to build all matter out of just three kinds of particle: electrons, neutrons, and protons,” author Stephen Battersby writes. “But a procession of unwanted extras had begun to appear—neutrinos, the positron and antiproton, pions and muons, and kaons, lambdas and sigmas—so that by the middle of the 1960s, a hundred supposedly fundamental particles have been detected. It was a mess.”

Through a combination of theory and experiment, a mathematical model called the Standard Model explains most of particle physics observed so far by physicists. According to the model, elementary particles are grouped into two classes: bosons (e.g., particles that often transmit forces) and fermions. Fermions include various kinds of Quarks (three quarks make up each proton and each Neutron) and leptons (such as the Electron and Neutrino, the latter of which was discovered in 1956). Neutrinos are very difficult to detect because they have a minute (but not zero) mass and pass through ordinary matter almost undisturbed. Today, we know about many of these subatomic particles by smashing apart atoms in particle accelerators and observing the resulting fragments.

As suggested, the Standard Model explains forces as resulting from matter particles exchanging boson force-mediating particles that include photons and gluons. The Higgs particle is the only fundamental particle predicted by the Standard Model that has yet to be observed—and it explains why other elementary particles have masses. The force of gravity is thought to be generated by the exchange of massless gravitons, but these have not yet been experimentally detected. In fact, the Standard Model is incomplete, because it does not include the force of gravity. Some physicists are trying to add gravity to the Standard Model to produce a grand unified theory, or GUT.

In 1964, physicists Murray Gell-Mann and George Zweig proposed the concept of quarks, just a few years after Gell-Mann’s 1961 formulation of a particle classification system known as the Eightfold Way. In 1960, physicist Sheldon Glashow’s unification theories provided an early step toward the Standard Model.

SEE ALSO String Theory (1919), Neutron (1932), Neutrinos (1956), Quarks (1964), God Particle (1964), Supersymmetry (1971), Theory of Everything (1984), Large Hadron Collider (2009).

The Cosmotron. This was the first accelerator in the world to send particles with energies in the billion electron volt, or GeV, region. The Cosmotron synchrotron reached its full design energy of 3.3 GeV in 1953 and was used for studying subatomic particles.

In William R. Forstchen’s best-selling novel One Second After, a high-altitude nuclear bomb explodes, unleashing a catastrophic electromagnetic pulse (EMP) that instantly disables electrical devices such as those in airplanes, heart pacemakers, modern cars, and cell phones, and the U.S. descends into “literal and metaphorical darkness.” Food becomes scarce, society turns violent, and towns burn—all following a scenario that is completely plausible.

EMP usually refers to the burst of electromagnetic radiation that results from a nuclear explosion and that disables many forms of electronic devices. In 1962, the U.S. conducted a nuclear test 250 miles (400 kilometers) above the Pacific Ocean. The test, called Starfish Prime, caused electrical damage in Hawaii, about 898 miles (1,445 kilometers) away. Streetlights went out. Burglar alarms went off. The microwave link of a telephone company was damaged. It is estimated today that if a single nuclear bomb were exploded 250 miles above Kansas, the entire continental U.S. would be affected, due to the greater strength of the Earth’s magnetic field over the U.S. Even the water supply would be affected, given that it often relies on electrical pumps.

After nuclear detonation, the EMP starts with a short, intense burst of gamma rays (high-energy electromagnetic radiation). The gamma rays interact with the atoms in air molecules, and electrons are released through a process called the Compton Effect. The electrons ionize the atmosphere and generate a powerful electrical field. The strength and effect of the EMP depend highly on the altitude at which the bomb detonates and the local strength of the Earth’s magnetic field.

Note that it is also possible to produce less-powerful EMPs without nuclear weapons, for example, through explosively pumped flux compression generators, which are essentially normal electrical generators driven by an explosion using conventional fuel.

Electronic equipment can be protected from an EMP by placing it within a Faraday Cage, which is a metallic shield that can divert the electromagnetic energy directly to the ground.

SEE ALSO Compton Effect (1923), Little Boy Atomic Bomb (1945), Gamma-Ray Bursts (1967), HAARP (2007).

A side view of an E-4 advanced airborne command post (AABNCP) on the electromagnetic pulse (EMP) simulator for testing (Kirtland Air Force Base, New Mexico). The plane is designed to survive an EMP with systems intact.

Jacques Salomon Hadamard (1865–1963), Jules Henri Poincaré (1854–1912), Edward Norton Lorenz (1917–2008)

In Babylonian mythology, Tiamat was the goddess that personified the sea and was the frightening representation of primordial chaos. Chaos came to symbolize the unknown and the uncontrollable. Today, chaos theory is an exciting, growing field that involves the study of wide-ranging phenomena exhibiting a sensitive dependence on initial conditions. Although chaotic behavior often seems “random” and unpredictable, it often obeys strict mathematical rules derived from equations that can be formulated and studied. One important research tool to aid in the study of chaos is computer graphics. From chaotic toys with randomly blinking lights to wisps and eddies of cigarette smoke, chaotic behavior is generally irregular and disorderly; other examples include weather patterns, some neurological and cardiac activity, the stock market, and certain electrical networks of computers. Chaos theory has also often been applied to a wide range of visual art.

In science, certain famous and clear examples of chaotic physical systems exist, such as thermal convection in fluids, panel flutter in supersonic aircraft, oscillating chemical reactions, fluid dynamics, population growth, particles impacting on a periodically vibrating wall, various pendula and rotor motions, nonlinear electrical circuits, and buckled beams.

The early roots of chaos theory started around 1900 when mathematicians such as Jacques Hadamard and Henri Poincaré studied the complicated trajectories of moving bodies. In the early 1960s, Edward Lorenz, a research meteorologist at the Massachusetts Institute of Technology, used a system of equations to model convection in the atmosphere. Despite the simplicity of his formulas, he quickly found one of the hallmarks of chaos: extremely minute changes of the initial conditions led to unpredictable and different outcomes. In his 1963 paper, Lorenz explained that a butterfly flapping its wings in one part of the world could later affect the weather thousands of miles away. Today, we call this sensitivity the Butterfly Effect.
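The sensitivity Lorenz found can be reproduced in a short sketch. The code below uses the three-variable system from his 1963 paper with its classic parameter values (10, 28, and 8/3); the text does not give the equations, so these, along with the starting point, step size, and the simple fourth-order Runge-Kutta integrator, are supplied here as assumptions. Two runs start a billionth of a unit apart, and the code tracks how far apart they drift.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz system (classic parameter values assumed)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step of size dt."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def max_separation(delta=1e-9, dt=0.01, steps=5000):
    """Largest distance reached between two runs that start delta apart in x."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + delta, 1.0, 1.0)
    largest = 0.0
    for _ in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        dist = sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        largest = max(largest, dist)
    return largest


print(f"Initial gap: 1e-09, largest gap seen: {max_separation():.2f}")
```

The two trajectories stay indistinguishable at first, then separate until the gap is comparable to the size of the whole pattern they trace out. Identical starting points (a `delta` of zero) never separate, which shows the system is deterministic rather than random.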

According to Babylonian mythology, Tiamat gave birth to dragons and serpents.

SEE ALSO Laplace’s Demon (1814), Self-Organized Criticality (1987), Fastest Tornado Speed (1999).

Chaos theory involves the study of wide-ranging phenomena exhibiting a sensitive dependence on initial conditions. Shown here is a portion of Daniel White’s Mandelbulb, a 3-dimensional analog of the Mandelbrot set, which represents the complicated behavior of a simple mathematical system.

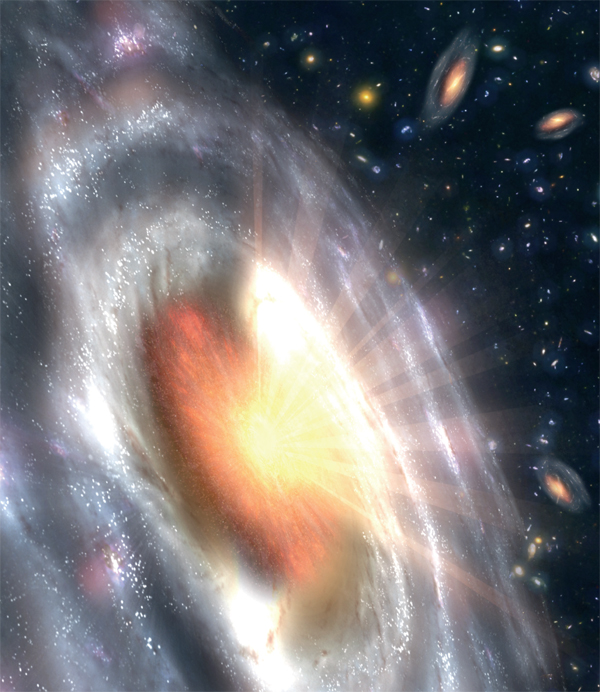

Maarten Schmidt (b. 1929)

“Quasars are among the most baffling objects in the universe because of their small size and prodigious energy output,” write the scientists at hubblesite.org. “Quasars are not much bigger than Earth’s solar system but pour out 100 to 1,000 times as much light as an entire galaxy containing a hundred billion stars.”

Although a mystery for decades, today the majority of scientists believe that quasars are very energetic and distant galaxies with an extremely massive central Black Hole that spews energy as nearby galactic material spirals into the black hole. The first quasars were discovered with radio telescopes (instruments that receive radio waves from space) without any corresponding visible object. In the early 1960s, visually faint objects were finally associated with these strange sources that were termed quasi-stellar radio sources, or quasars for short. The spectrum of these objects, which shows the variation in the intensity of the object’s radiation at different wavelengths, was initially puzzling. However, in 1963, Dutch-born American astronomer Maarten Schmidt made the exciting discovery that the spectral lines were simply coming from hydrogen, but that they were shifted far to the red end of the spectrum. This redshift, due to the expansion of the universe, implied that these quasars were part of galaxies that were extremely far away and ancient (see entries on Hubble’s Law and Doppler Effect).

More than 200,000 quasars are known today, and most do not have detectable radio emissions. Although quasars appear dim because they are between about 780 million and 28 billion light-years away, they are actually the most luminous and energetic objects known in the universe. It is estimated that quasars can swallow 10 stars per year, or 600 Earths per minute, and then “turn off” when the surrounding gas and dust has been consumed. At this point, the galaxy hosting the quasar becomes an ordinary galaxy. Quasars may have been more common in the early universe because they had not yet had a chance to consume the surrounding material.

SEE ALSO Telescope (1608), Black Holes (1783), Doppler Effect (1842), Hubble’s Law of Cosmic Expansion (1929), Gamma-Ray Bursts (1967).

A quasar, or growing black hole spewing energy, can be seen at the center of a galaxy in this artist’s concept. Astronomers using NASA’s Spitzer and Chandra space telescopes discovered similar quasars within a number of distant galaxies. X-ray emissions are illustrated by the white rays.

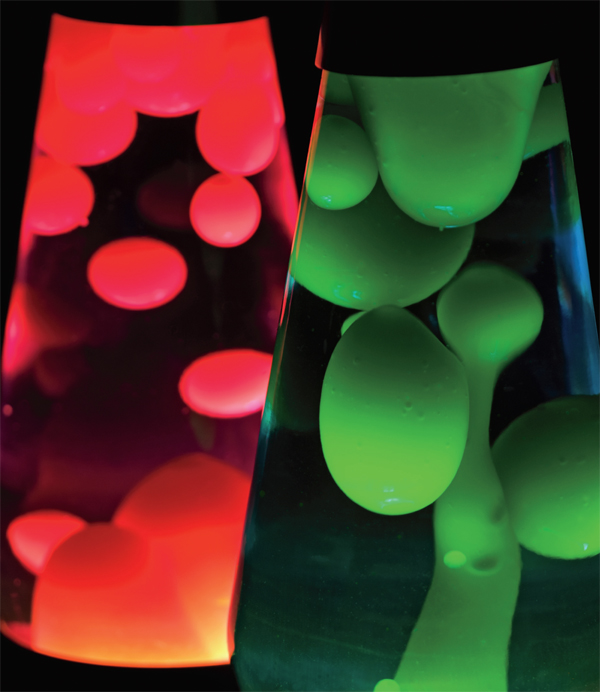

Edward Craven Walker (1918–2000)

The Lava Lamp (U.S. Patent 3,387,396) is an ornamental illuminated vessel with floating globules, and it is included in this book because of its ubiquity and for the simple yet important principles it embodies. Many educators have used the Lava Lamp for classroom demonstrations, experiments, and discussions on topics that include thermal radiation, convection, and conduction.

The Lava Lamp was invented in 1963 by Englishman Edward Craven Walker. Author Ben Ikenson writes, “A World War II veteran, Walker adopted the lingo and lifestyle of the flower children. Part Thomas Edison, part Austin Powers, he was a nudist in the psychedelic days of the UK—and possessed some pretty savvy marketing skills to boot. ‘If you buy my lamp, you won’t need to buy drugs,’ he was known to say.”

To make a Lava Lamp, one must find two liquids that are immiscible, meaning that, like oil and water, they will not blend or mix. In one embodiment, the lamp consists of a 40-watt Incandescent Light Bulb at the bottom, which heats a tall tapered glass bottle containing water and globules made of a mixture of wax and carbon tetrachloride. The wax is slightly denser than the water at room temperature. As the base of the lamp is heated, the wax expands more than the water and becomes fluid. As the wax’s specific gravity (density relative to water) decreases, the blobs rise to the top, and then the wax globules cool and sink. A metal coil at the base of the lamp serves to spread the heat and also to break the Surface Tension of the globules so that they may recombine when at the bottom.

The complex and unpredictable motions of the wax blobs within Lava Lamps have been used as a source of random numbers, and such a random-number generator is mentioned in U.S. Patent 5,732,138, issued in 1998.

Sadly, in 2004, a Lava Lamp killed Phillip Quinn when he attempted to heat it on his kitchen stove. The lamp exploded, and a piece of glass pierced his heart.

SEE ALSO Archimedes’ Principle of Buoyancy (250 B.C.), Stokes’ Law of Viscosity (1851), Surface Tension (1866), Incandescent Light Bulb (1878), Black Light (1903), Silly Putty (1943), Drinking Bird (1945).

Lava lamps demonstrate simple yet important physics principles, and many educators have used the Lava Lamp for classroom demonstrations, experiments, and discussions.

Robert Brout (b. 1928), Peter Ware Higgs (b. 1929), François Englert (b. 1932)

“While walking in the Scottish Highlands in 1964,” writes author Joanne Baker, “physicist Peter Higgs thought of a way to give particles their mass. He called this his ‘one big idea.’ Particles seemed more massive because they are slowed while swimming through a force field, now known as the Higgs field. It is carried by the Higgs boson, referred to as the ‘God particle’ by Nobel Laureate Leon Lederman.”

Elementary particles are grouped into two classes: bosons (particles that transmit forces) and fermions (particles such as Quarks, Electrons, and Neutrinos that make up matter). The Higgs boson is a particle in the Standard Model that has not yet been observed, and scientists hope that the Large Hadron Collider—a high-energy particle accelerator in Europe—may provide experimental evidence relating to the particle’s existence.

To help us visualize the Higgs field, imagine a lake of viscous honey that adheres to the otherwise massless fundamental particles that travel through the field. The field converts them into particles with mass. In the very early universe, theories suggest that all of the fundamental forces (i.e. strong, electromagnetic, weak, and gravitational) were united in one superforce, but as the universe cooled different forces emerged. Physicists have been able to combine the weak and electromagnetic forces into a unified “electroweak” force, and perhaps all of the forces may one day be unified. Moreover, physicists Peter Higgs, Robert Brout, and François Englert suggested that all particles had no mass soon after the Big Bang. As the Universe cooled, the Higgs boson and its associated field emerged. Some particles, such as massless photons of light, can travel through the sticky Higgs field without picking up mass. Others get bogged down like ants in molasses and become heavy.

The Higgs boson may be more than 100 times as massive as the proton. A large particle collider is required to find this boson because the higher the energy of collision, the more massive the particles in the debris.

SEE ALSO Standard Model (1961), Theory of Everything (1984), Large Hadron Collider (2009).

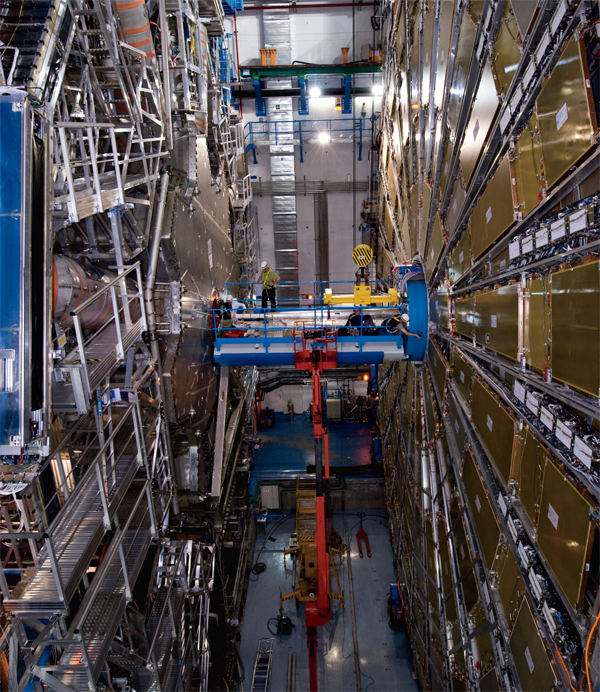

The Compact Muon Solenoid (CMS) is a particle detector located underground in a large cavern excavated at the site of the Large Hadron Collider. This detector will assist in the search for the Higgs boson and in gaining insight into the nature of Dark Matter.

Murray Gell-Mann (b. 1929), George Zweig (b. 1937)

Welcome to the particle zoo. In the 1960s, theorists realized that patterns in the relationships between various elementary particles, such as protons and neutrons, could be understood if these particles were not actually elementary but rather were composed of smaller particles called quarks.

Six types, or flavors, of quarks exist and are referred to as up, down, charm, strange, top and bottom. Only the up and down quarks are stable, and they are the most common in the universe. The other heavier quarks are produced in high-energy collisions. (Note that another class of particles called leptons, which include Electrons, are not composed of quarks.)

Quarks were independently proposed by physicists Murray Gell-Mann and George Zweig in 1964, and, by 1995, particle-accelerator experiments had yielded evidence for all six quarks. Quarks have fractional electric charge; for example, the up quark has a charge of +2/3, and the down quark has a charge of −1/3. Neutrons (which have no charge) are formed from two down quarks and one up quark, and the proton (which is positively charged) is composed of two up quarks and one down quark. The quarks are tightly bound together by a powerful short-range force called the color force, which is mediated by force-carrying particles called gluons. The theory that describes these strong interactions is called quantum chromodynamics. Gell-Mann coined the word quark for these particles after one of his perusals of the silly line in Finnegans Wake, “Three quarks for Muster Mark.”
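The charge bookkeeping for the proton and neutron is easy to verify. This small sketch uses exact fractions and the two charges given above; the dictionary and function names are just illustrative.

```python
from fractions import Fraction

# Electric charges in units of the proton's charge, as given in the text.
CHARGE = {"up": Fraction(2, 3), "down": Fraction(-1, 3)}


def total_charge(quarks):
    """Add up the charges of a collection of quark flavors."""
    return sum(CHARGE[q] for q in quarks)


proton = total_charge(("up", "up", "down"))     # 2/3 + 2/3 - 1/3
neutron = total_charge(("down", "down", "up"))  # -1/3 - 1/3 + 2/3

print(f"Proton charge:  {proton}")
print(f"Neutron charge: {neutron}")
```

Using `Fraction` rather than floating-point numbers keeps the thirds exact, so the sums come out to whole numbers with no rounding error.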

Right after the Big Bang, the universe was filled with a quark-gluon Plasma, because the temperature was too high for hadrons (i.e. particles like protons and neutrons) to form. Authors Judy Jones and William Wilson write, “Quarks pack a mean intellectual wallop. They imply that nature is three-sided…. Specks of infinity on the one hand, building blocks of the universe on the other, quarks represent science at its most ambitious—also its coyest.”

SEE ALSO Big Bang (13.7 Billion B.C.), Plasma (1879), Electron (1897), Neutron (1932), Quantum Electrodynamics (1948), Standard Model (1961).

Scientists used the photograph (left) of particle trails in a Brookhaven National Laboratory bubble chamber as evidence for the existence of a charmed baryon (a three-quark particle). A neutrino enters the picture from below (dashed line in right figure) and collides with a proton to produce additional particles that leave behind trails.

James Watson Cronin (b. 1931), Val Logsdon Fitch (b. 1923)

You, me, the birds, and the bees are alive today due to CP violation and various laws of physics—and their apparent effect on the ratio of matter to Antimatter during the Big Bang from which our universe evolved. As a result of CP violation, asymmetries are created with respect to certain transformations in the subatomic realm.

Many important ideas in physics manifest themselves as symmetries, for example, in a physical experiment in which some characteristic is conserved, or remains constant. The C portion of CP symmetry suggests that the laws of physics should be the same if a particle were interchanged with its antiparticle, for example by changing the sign of the electric charge and other quantum aspects. (Technically, the C stands for charge conjugation symmetry.) The P, or parity, symmetry refers to a reversal of space coordinates, for example, swapping left and right, or, more accurately, changing all three space dimensions x, y, z to −x, −y, and −z. For instance, parity conservation would mean that the mirror images of a reaction occur at the same rate (e.g. the atomic nucleus emits decay products up as often as down).

In 1964, physicists James Cronin and Val Fitch discovered that certain particles, called neutral kaons, did not obey CP conservation, under which equal numbers of antiparticles and particles are formed. In short, they showed that nuclear reactions mediated by the weak force (which governs the radioactive decay of elements) violated the CP symmetry combination. In this case, neutral kaons can transform into their antiparticles (in which each quark is replaced with the other’s antiquark) and vice versa, but with different probabilities.

During the Big Bang, CP violation and other as-yet unknown physical interactions at high energies played a role in the observed dominance of matter over antimatter in the universe. Without these kinds of interactions, nearly equal numbers of protons and antiprotons might have been created and annihilated each other, with no net creation of matter.

SEE ALSO Big Bang (13.7 Billion B.C.), Radioactivity (1896), Antimatter (1932), Quarks (1964), Theory of Everything (1984).

In the early 1960s, a beam from the Alternating Gradient Synchrotron at Brookhaven National Laboratory and the detectors shown here were used to prove the violation of combined charge conjugation (C) and parity (P) symmetry, work that won the Nobel Prize in physics for James Cronin and Val Fitch.

John Stewart Bell (1928–1990)

In the entry EPR Paradox, we discussed quantum entanglement, which refers to an intimate connection between quantum particles, such as between two electrons or two photons. Neither particle has a definite spin before measurement. Once the pair of particles is entangled, a certain kind of change to one of them is reflected instantly in the other, even if, for example, one member of the pair is on the Earth while the other has traveled to the Moon. This entanglement is so counterintuitive that Albert Einstein thought it showed a flaw in quantum theory. One possibility considered was that such phenomena relied on some unknown “local hidden variables” outside of traditional quantum mechanical theory—and a particle was, in reality, still only influenced directly by its immediate surroundings. In short, Einstein did not accept that distant events could have an instantaneous or faster-than-light effect on local ones.

However, in 1964, physicist John Bell showed that no physical theory of local hidden variables can ever reproduce all of the predictions of quantum mechanics. In fact, the nonlocality of our physical world appears to follow from both Bell’s Theorem and experimental results obtained since the early 1980s. In essence, Bell asks us first to suppose that the Earth particle and the Moon particle, in our example, have determinate values. Could such particles reproduce the results predicted by quantum mechanics for various ways scientists on the Earth and Moon might measure their particles? Bell proved mathematically that a statistical distribution of results would be produced that disagreed with that predicted by quantum mechanics. Thus, the particles cannot carry determinate values. This contradicts Einstein’s conclusions and implies that the assumption that the universe is “local” is wrong.
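
The disagreement between the two kinds of statistics can be made concrete with a small numerical sketch. It does not use Bell's original inequality but the closely related CHSH form (Clauser, Horne, Shimony, and Holt, 1969), and it assumes the standard quantum prediction for a spin-singlet pair, a correlation of −cos(a − b) between analyzer angles a and b. The function names and the particular angles are illustrative choices, not taken from the text.

```python
import math
from itertools import product

def quantum_correlation(a, b):
    """Assumed singlet-state prediction: correlation of the two spin
    results when the analyzers are set at angles a and b (radians)."""
    return -math.cos(a - b)

def chsh(E, a, a2, b, b2):
    """CHSH combination of four correlations (one per pair of settings)."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

# Illustrative analyzer settings for the Earth (a, a2) and Moon (b, b2).
a, a2 = 0.0, math.pi / 2
b, b2 = math.pi / 4, 3 * math.pi / 4

S_quantum = chsh(quantum_correlation, a, a2, b, b2)

# "Determinate values": each particle carries a fixed answer (+1 or -1)
# for every setting. Enumerate all 16 possible sets of answers.
S_local_max = max(
    abs(A1 * B1 - A1 * B2 + A2 * B1 + A2 * B2)
    for A1, A2, B1, B2 in product((-1, 1), repeat=4)
)

print(f"quantum |S| = {abs(S_quantum):.3f}")   # 2*sqrt(2), about 2.828
print(f"local hidden-variable bound = {S_local_max}")  # 2
```

Because any mixture of predetermined answers is an average over those 16 cases, it can never exceed 2, whereas the quantum prediction for these settings reaches 2√2.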

Philosophers, physicists, and mystics have made extensive use of Bell’s Theorem. Fritjof Capra writes, “Bell’s theorem dealt a shattering blow to Einstein’s position by showing that the conception of reality as consisting of separate parts, joined by local connections, is incompatible with quantum theory…. Bell’s theorem demonstrates that the universe is fundamentally interconnected, interdependent, and inseparable.”

SEE ALSO Complementarity Principle (1927), EPR Paradox (1935), Schrödinger’s Cat (1935), Quantum Computers (1981).

Philosophers, physicists, and mystics have made extensive use of Bell’s Theorem, which seemed to show Einstein was wrong and that the cosmos is fundamentally “interconnected, interdependent, and inseparable.”

“Bang, thump, bonk!” exclaimed the December 3, 1965 issue of Life magazine. “Willy-nilly the ball caroms down the hall as if it had a life of its own. This is Super Ball, surely the bouncingest spheroid ever, which has lept like a berserk grasshopper to the top of whatever charts psychologists may keep on U.S. fads.”

In 1965, California chemist Norman Stingley, along with the Wham-O Manufacturing Company, developed the amazing Super Ball made from the elastic compound called Zectron. If dropped from shoulder height, it could bounce nearly 90% of that height and could continue bouncing for a minute on a hard surface (a tennis ball’s bouncing lasts only ten seconds). In the language of physics, the coefficient of restitution, e, defined as the ratio of the velocity after collision to the velocity before collision, ranges from 0.8 to 0.9.

Super Balls were released to the public in early summer of 1965, and more than six million were bouncing around America by fall. United States National Security Advisor McGeorge Bundy had five dozen shipped to the White House to amuse the staffers.

The secret of the Super Ball, often referred to as a bouncy ball, is polybutadiene, a rubber-like compound composed of long elastic chains of carbon atoms. When polybutadiene is heated at high pressure in the presence of sulfur, a chemical process called vulcanization converts these long chains to more durable material. Because the tiny sulfur bridges limit how much the Super Ball flexes, much of the bounce energy is returned to its motion. Other chemicals, such as di-ortho-tolylguanidine (DOTG), were added to increase the cross-linking of chains.

What would happen if one were to drop a Super Ball from the top of the Empire State Building? After the ball has dropped about 328 feet (around 100 meters, or 25–30 stories), it reaches a Terminal Velocity of about 70 miles per hour (113 kilometers/hour), for a ball of a radius of one inch (2.5 centimeters). Assuming e = 0.85, the rebound velocity will be about 60 miles per hour (97 kilometers/hour), corresponding to 80 feet (24 meters, or 7 stories).
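
The arithmetic behind this estimate can be sketched as follows. The rebound speed is simply e times the impact speed. The rebound height, however, depends on how air resistance is modeled, and the text does not say which model was used; the sketch below assumes quadratic (speed-squared) drag, which is only one plausible choice. All function names are illustrative.

```python
import math

MPH_TO_MS = 0.44704   # meters per second in one mile per hour
G = 9.81              # gravitational acceleration, m/s^2

def rebound_speed(impact_speed, e):
    """Speed just after the bounce, from the coefficient of restitution."""
    return e * impact_speed

def rise_height_no_drag(v0):
    """Height reached with no air resistance (an upper bound)."""
    return v0 ** 2 / (2 * G)

def rise_height_quadratic_drag(v0, v_terminal):
    """Height reached when drag grows as speed squared (assumed model).
    Follows from integrating v dv/dy = -g * (1 + v^2 / v_terminal^2)."""
    return (v_terminal ** 2 / (2 * G)) * math.log(1 + (v0 / v_terminal) ** 2)

v_t = 70 * MPH_TO_MS                 # terminal velocity quoted in the text
v_up = rebound_speed(v_t, 0.85)      # e = 0.85, as in the text
v_up_mph = v_up / MPH_TO_MS

h_free = rise_height_no_drag(v_up)
h_drag = rise_height_quadratic_drag(v_up, v_t)

print(f"rebound speed: {v_up_mph:.1f} mph")
print(f"rise without drag: {h_free:.0f} m")
print(f"rise with quadratic drag: {h_drag:.0f} m")
```

Under these assumptions the rebound speed is 59.5 miles per hour, and the drag-limited rise comes out at roughly 27 meters, within a few meters of the quoted figure and well below the 36 meters a drag-free ball would reach.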

SEE ALSO Baseball Curveball (1870), Golf Ball Dimples (1905), Terminal Velocity (1960).

If dropped from shoulder height, a Super Ball could bounce nearly 90 percent of that height and continue bouncing for a minute on a hard surface. Its coefficient of restitution ranges from 0.8 to 0.9.

Arno Allan Penzias (b. 1933), Robert Woodrow Wilson (b. 1936)

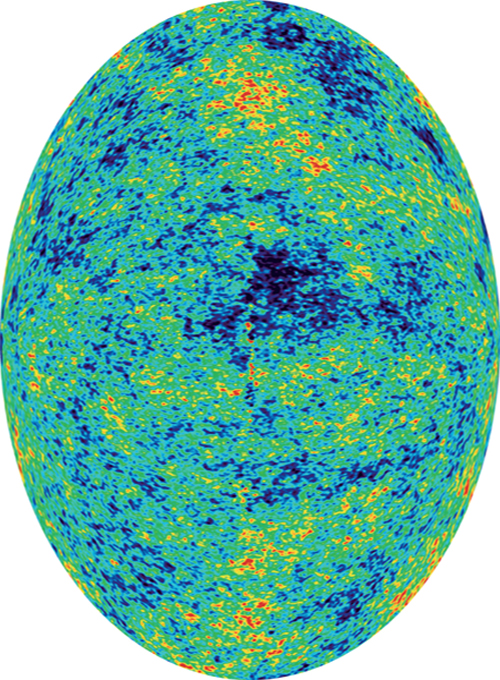

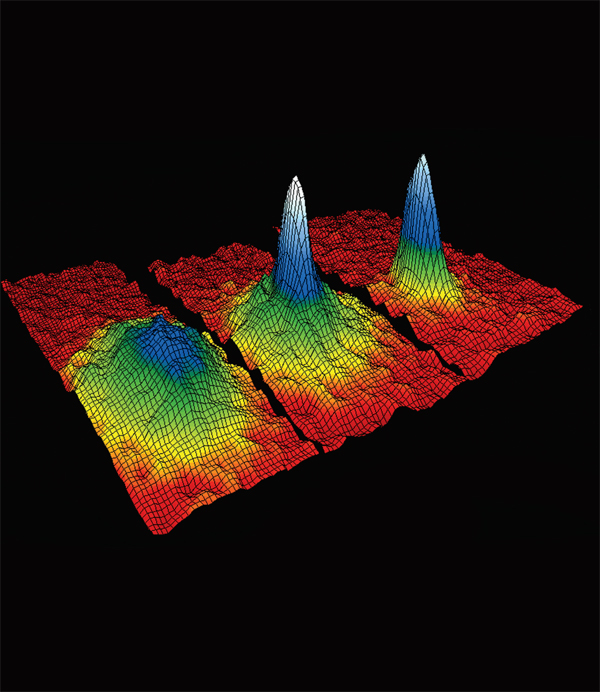

The cosmic microwave background (CMB) is electromagnetic radiation filling the universe, a remnant of the dazzling “explosion” from which our universe evolved during the Big Bang 13.7 billion years ago. As the universe cooled and expanded, there was an increase in wavelengths of high-energy photons (such as in the gamma-ray and X-ray portion of the Electromagnetic Spectrum) and a shifting to lower-energy microwaves.

Around 1948, cosmologist George Gamow and colleagues suggested that this microwave background radiation might be detectable, and in 1965 physicists Arno Penzias and Robert Wilson of the Bell Telephone Laboratories in New Jersey measured a mysterious excess microwave noise that was associated with a thermal radiation field with a temperature of about −454 °F (3 K). After checking for various possible causes of this background “noise,” including pigeon droppings in their large outdoor detector, they determined that they were really observing the most ancient radiation in the universe and providing evidence for the Big Bang model. Note that because photons take time to reach the Earth from distant parts of the universe, whenever we look outward in space, we are also looking back in time.

More precise measurements were made by the COBE (Cosmic Background Explorer) satellite, launched in 1989, which determined a temperature of −454.75 °F (2.735 K). COBE also allowed researchers to measure small fluctuations in the intensity of the background radiation, which corresponded to the beginning of structures, such as galaxies, in the universe.
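
A short sketch shows why a thermal field at this temperature appears as microwaves. It converts a Kelvin temperature to Fahrenheit and then applies Wien's displacement law, which is not mentioned in the text and is brought in here on the assumption that the radiation behaves as an ideal blackbody. The function names are illustrative.

```python
WIEN_B = 2.897771955e-3   # Wien displacement constant, meter-kelvins

def kelvin_to_fahrenheit(t_kelvin):
    """Convert an absolute temperature to degrees Fahrenheit."""
    return t_kelvin * 9 / 5 - 459.67

def wien_peak_wavelength_m(t_kelvin):
    """Wavelength (meters) at which an ideal blackbody spectrum peaks."""
    return WIEN_B / t_kelvin

t_cobe = 2.735   # COBE temperature in kelvins, as quoted in the text
t_cobe_f = kelvin_to_fahrenheit(t_cobe)
peak_mm = wien_peak_wavelength_m(t_cobe) * 1000

print(f"{t_cobe} K = {t_cobe_f:.2f} deg F")
print(f"blackbody peak near {peak_mm:.2f} mm")
```

The peak falls at about one millimeter, in the microwave band, which is consistent with the radiation having been stretched from much shorter wavelengths as the universe expanded.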

Luck matters for scientific discoveries. Author Bill Bryson writes, “Although Penzias and Wilson had not been looking for the cosmic background radiation, didn’t know what it was when they had found it, and hadn’t described or interpreted its character in any paper, they received the 1978 Nobel Prize in physics.” Connect an antenna to an analog TV; make sure it’s not tuned to a TV broadcast and “about 1 percent of the dancing static you see is accounted for by this ancient remnant of the Big Bang. The next time you complain that there is nothing on, remember you can always watch the birth of the universe.”

SEE ALSO Big Bang (13.7 Billion B.C.), Telescope (1608), Electromagnetic Spectrum (1864), X-rays (1895), Hubble’s Law of Cosmic Expansion (1929), Gamma-Ray Bursts (1967), Cosmic Inflation (1980).

The horn reflector antenna at Bell Telephone Laboratories in Holmdel, New Jersey, was built in 1959 for pioneering work related to communication satellites. Penzias and Wilson discovered the cosmic microwave background using this instrument.

Paul Ulrich Villard (1860–1934)

Gamma-ray bursts (GRBs) are sudden, intense bursts of gamma rays, which are an extremely energetic form of light. “If you could see gamma rays with your eyes,” write authors Peter Ward and Donald Brownlee, “you would see the sky light up about once a night, but these distant events go unnoticed by our natural senses.” However, if a GRB were ever to flash closer to the Earth, then “one minute you exist, and the next you are either dead or dying from radiation poisoning.” In fact, researchers have suggested that the mass extinction of life 440 million years ago in the late Ordovician period was caused by a GRB.

Until recently, GRBs were one of the biggest enigmas in high-energy astronomy. They were discovered accidentally in 1967 by U.S. military satellites that scanned for Soviet nuclear tests in violation of the atmospheric nuclear test-ban treaty. The initial burst, which typically lasts a few seconds, is usually followed by a longer-lived afterglow at longer wavelengths. Today, physicists believe that most GRBs come from a narrow beam of intense radiation released during a supernova explosion, as a rotating high-mass star collapses to form a black hole. So far, all observed GRBs appear to originate outside our Milky Way galaxy.

Scientists are not certain as to the precise mechanism that could cause the release of as much energy in a few seconds as the Sun produces in its entire lifetime. Scientists at NASA suggest that when a star collapses, an explosion sends a blast wave that moves through the star at close to the speed of light. The gamma rays are created when the blast wave collides with material still inside the star.
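
To get a feel for the scale of that comparison, the following back-of-the-envelope sketch estimates the Sun's lifetime energy output. The numbers are assumptions rather than figures from the text: a constant luminosity equal to today's nominal value, a lifetime of about ten billion years, and an illustrative burst duration of ten seconds.

```python
SOLAR_LUMINOSITY_W = 3.828e26      # nominal present-day solar luminosity, watts
SECONDS_PER_YEAR = 3.156e7         # approximate
ASSUMED_SOLAR_LIFETIME_YR = 1.0e10  # assumed lifetime of about 10 billion years
ASSUMED_BURST_SECONDS = 10.0       # illustrative "few seconds" burst duration

def solar_lifetime_energy_j(luminosity_w, lifetime_yr):
    """Total energy radiated at constant luminosity over the lifetime."""
    return luminosity_w * lifetime_yr * SECONDS_PER_YEAR

energy_j = solar_lifetime_energy_j(SOLAR_LUMINOSITY_W, ASSUMED_SOLAR_LIFETIME_YR)

# Average power if that much energy were released during one burst.
burst_power_w = energy_j / ASSUMED_BURST_SECONDS
power_ratio = burst_power_w / SOLAR_LUMINOSITY_W

print(f"Sun's lifetime output: about {energy_j:.1e} J")
print(f"burst power vs. Sun:   about {power_ratio:.1e} times brighter")
```

Under these assumptions the total is on the order of 10^44 joules, so a burst releasing it in seconds would briefly outshine the Sun by a factor of tens of millions of billions.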

In 1900, chemist Paul Villard discovered gamma rays while studying the Radioactivity of radium. In 2009, astronomers detected a GRB from an exploding megastar that existed a mere 630 million years after the Big Bang kicked the universe into operation some 13.7 billion years ago, making this GRB the most distant object ever seen and an inhabitant of a relatively unexplored epoch of our universe.

SEE ALSO Big Bang (13.7 Billion B.C.), Electromagnetic Spectrum (1864), Radioactivity (1896), Cosmic Rays (1910), Quasars (1963).

Hubble Space Telescope image of Wolf-Rayet star WR-124 and its surrounding nebula. Stars of this kind, which are large and rapidly lose mass via strong stellar winds, may be generators of long-duration GRBs.

Konrad Zuse (1910–1995), Edward Fredkin (b. 1934), Stephen Wolfram (b. 1959), Max Tegmark (b. 1967)

As we learn more about the universe and are able to simulate complex worlds using computers, even serious scientists begin to question the nature of reality. Could we be living in a computer simulation?

In our own small pocket of the universe, we have already developed computers with the ability to simulate lifelike behaviors using software and mathematical rules. One day, we may create thinking beings that live in simulated spaces as complex and vibrant as a rain forest. Perhaps we’ll be able to simulate reality itself, and it is possible that more advanced beings are already doing this elsewhere in the universe.

What if the number of these simulations is larger than the number of universes? Astronomer Martin Rees suggests that if the simulations outnumber the universes, “as they would if one universe contained many computers making many simulations,” then it is likely that we are artificial life. Rees writes, “Once you accept the idea of the multiverse …, it’s a logical consequence that in some of those universes there will be the potential to simulate parts of themselves, and you may get a sort of infinite regress, so we don’t know where reality stops …, and we don’t know what our place is in this grand ensemble of universes and simulated universes.”

Astronomer Paul Davies has also noted, “Eventually, entire virtual worlds will be created inside computers, their conscious inhabitants unaware that they are the simulated products of somebody else’s technology. For every original world, there will be a stupendous number of available virtual worlds—some of which would even include machines simulating virtual worlds of their own, and so on ad infinitum.”

Other researchers, such as Konrad Zuse, Ed Fredkin, Stephen Wolfram, and Max Tegmark, have suggested that the physical universe may be running on a cellular automaton or discrete computing machinery—or be a purely mathematical construct. The hypothesis that the universe is a digital computer was pioneered by German engineer Zuse in 1967.
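
For readers unfamiliar with the term, a cellular automaton is a row (or grid) of cells, each of which updates in discrete time steps according to a fixed rule that looks only at its immediate neighbors. The sketch below implements one of the elementary one-dimensional automata catalogued by Wolfram, Rule 30, purely as an illustration of the idea. It is not a model proposed by any of these researchers for the actual universe, and the wrap-around edges and grid size are arbitrary choices.

```python
def step(cells, rule=30):
    """Advance a row of 0/1 cells by one time step.
    Each new cell depends only on its left neighbor, itself, and its
    right neighbor; the edges wrap around (an arbitrary choice here)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right   # neighborhood as 0..7
        new_cells.append((rule >> index) & 1)          # look up that bit of the rule
    return new_cells

def run(width=31, steps=15, rule=30):
    """Start from a single live cell and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

for row in run():
    print("".join("#" if c else "." for c in row))
```

Even this eight-entry lookup table produces an intricate, irregular pattern from a single starting cell, which is part of why simple discrete rules have been taken seriously as candidates for underlying machinery.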

SEE ALSO Fermi Paradox (1950), Parallel Universes (1956), Anthropic Principle (1961).

As computers become more powerful, perhaps someday we will be able to simulate entire worlds and reality itself, and it is possible that more advanced beings are already doing this elsewhere in the universe.

Gerald Feinberg (1933–1992)

Tachyons are hypothetical subatomic particles that travel faster than the speed of light (FTL). “Although most physicists today place the probability of the existence of tachyons only slightly higher than the existence of unicorns,” writes physicist Nick Herbert, “research into the properties of these hypothetical FTL particles has not been entirely fruitless.” Because such particles might travel backward in time, author Paul Nahin humorously writes, “If tachyons are one day discovered, the day before the momentous occasion a notice from the discoverers should appear in newspapers announcing ‘Tachyons have been discovered tomorrow’.”