Review and evaluate test execution documents such as test results, defect reporting and tracking records, test completion metrics, trouble reports, input/output specifications. (Evaluate)

BODY OF KNOWLEDGE VI.D

B

oth the IEEE Standard for Software and System Test Documentation

(IEEE 2008a) and the ISO/IEC/IEEE Software and Systems Engineering—Software Testing—Part 3: Test Documentation

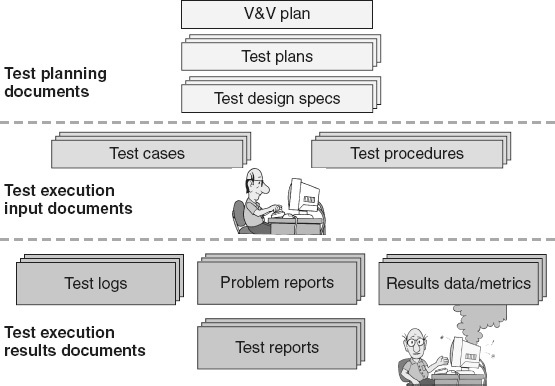

standard (ISO/IEC/IEEE 2013) describe a set of basic project-level software test documents. These standards recommend the form and content for each of the individual test documents, not the required set of test documents. There are a variety of different kinds of test documents, as illustrated in Figure 23.1

:

- Test planning documents include:
  - Verification and validation (V&V) plans discussed in Chapter 20
  - Test plans and test design specifications discussed in Chapter 21
- Test execution documentation includes test cases and test procedures. Planned test cases and procedures are defined and written during test design activities. Additional test cases and test procedures may also be defined and written during exploratory testing done at test execution time. Test cases and procedures are utilized to run the tests during test execution activities.
- Test execution and results documentation are done throughout the test execution process to capture the results of the testing activities. This documentation is produced to capture the test execution process so that people can understand and benefit from what has occurred. The results of test execution activities are documented in:
  - Test logs
  - Problem reports
  - Test reports
  - Test data and metrics

Figure 23.1 Types of testing documentation.

Test execution and results documentation are necessary because they record the testing process and help software testers and management know what needs to be accomplished, the status of the current testing process, what has and has not been tested, and how the testing was done. This test documentation also helps maintainers and other practitioners understand the testing performed by others, and supports the repeatability of tests during debugging and regression testing. All test documentation should be an outgrowth of the normal testing process, not an end in itself. Testers should think carefully about the purpose of the test documentation and whether it makes the final testing outcome better. If not, the tester must determine if that documentation is value-added and necessary. Experience is required to strike a proper balance between too much and too little test documentation.

Test Execution

Test execution is the process of actually executing tests and observing, analyzing, and recording the results of those tests. Test execution activities include the testers:

- Setting up and validating the testing environment.
- Executing the tests by running predefined manual or automated test cases or procedures, or by performing exploratory testing.
- Documenting these test execution activities in the test log. If they are performing exploratory testing, the testers also document the test cases and/or procedures as they are executing them, so that they are repeatable.
- Analyzing the actual test results by comparing them to the expected results. The objective of this analysis is to identify failures.
- Looking around to identify any other adverse side effects. The expected results typically only describe what should happen rather than what could happen. The test might, for example, enter “xyz” information and the expected result is the display of a certain screen. It will not say, “The printer should not start spewing paper on the floor,” but if the printer does start spewing paper, the tester should notice and report the anomaly.
- Attempting to repeat the steps needed to recreate the failures or other anomalous results.
- Capturing problem/adverse side effect information that will help the authors to identify the underlying defects (for example, screen captures, data values, database snapshots, memory dumps), if failures or other anomalous results are identified. The testers report the identified failures or other anomalies in formal change requests (problem reports) if the items under test are baselined items. If the items under test are not baselined, failures and anomalies are reported directly to the item’s author for resolution.
- Participating in change control processes to “champion” their problem reports and/or provide additional information as needed, if the problem was found in a baselined configuration item.
- Working with the author, as necessary, to isolate defects if the author cannot reproduce the failures or other anomalous results. For example, the author may not be able to reproduce the problem because the author does not have the same tools, hardware, simulators, databases, or other resources/configurations in the development environment as the testers are using in the test environment.

- Reporting ongoing test status, which includes:
  - Activities completed.
  - Effort expended.
  - Test cases executed, passed, failed, and blocked. A blocked test case is one that was planned for execution but could not be executed because of a problem in the software.
- Testing corrected defects and changes, performing regression analysis, and executing regression testing based on that analysis, as developers provide updated software. This may also include updating the status of the associated problem reports or change requests, as appropriate.
- Tracking and controlling the ongoing test execution effort using test status reports, data, metrics, and project review meetings. Test metrics are also used to evaluate the test coverage, the completeness status of testing and the quality of the software products.
- Writing a test report to summarize test results at the end of each major testing cycle.

Test Execution Documentation—Test Case

Test cases, also called test scripts, are the fundamental building blocks of testing and are the smallest test unit to be directly executed during testing. Test cases are typically used to test individual paths in a source code module, interfaces, or individual functions or sub-functions of the software. Test cases define:

- The items to be tested (source code path, interface, function or sub-function)
- Preconditions defining what must be true before the test case can be executed including how the test environment should be set up
- What inputs are used to drive the test execution, including both test data and actions
- What the expected output is (including evaluation criteria that define successful execution and potential failures to watch for)
- The steps to execute the test

Each test case should include a description and may be assigned a priority. Test cases should always be traceable back to the requirements being tested. Depending on the level of testing, test cases may also trace back to design elements and/or code components. The IEEE Standard for Software and System Test Documentation (IEEE 2008a) states that each test case should have a unique identifier and include special procedural requirements and inter-case dependencies as part of its test case outline.
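As one way to picture these elements together, a test case record might be sketched in Python as follows. The class name `DocumentedTestCase`, its field names, the identifier `TC-017`, and the simulator precondition are illustrative assumptions, not a format prescribed by IEEE 2008a. The requirement ID R00124 and the XYZ controller are borrowed from the problem report example later in this chapter.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DocumentedTestCase:
    """Hypothetical record of the test case elements described above."""
    identifier: str                      # unique identifier
    description: str
    items_under_test: list[str]          # code path, interface, or function
    preconditions: list[str]             # includes test environment setup
    inputs: dict[str, str]               # test data and actions
    expected_output: str                 # includes evaluation criteria
    steps: list[str]
    traces_to: list[str] = field(default_factory=list)   # requirement IDs
    depends_on: list[str] = field(default_factory=list)  # inter-case dependencies
    priority: Optional[int] = None

    def is_traceable(self) -> bool:
        """True when the case traces back to at least one requirement."""
        return bool(self.traces_to)


timeout_case = DocumentedTestCase(
    identifier="TC-017",  # illustrative identifier
    description="Incomplete packet from the XYZ controller times out",
    items_under_test=["XYZ controller interface"],
    preconditions=["XYZ controller simulator attached"],  # assumed setup
    inputs={"packet": "incomplete message packet"},
    expected_output="Communication error reported after two seconds",
    steps=["Send the incomplete packet", "Wait two seconds", "Check the error report"],
    traces_to=["R00124"],
)
print(timeout_case.is_traceable())  # True
```

A check such as `is_traceable` could be run across a whole test suite to flag test cases that do not yet trace back to any requirement.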

Test cases can be defined in a document, in a spreadsheet, in automated test code, or by using automated testing tools. The level of rigor and detail needed in a documented test case will vary based on the risk and/or integrity level of the item being tested, as well as the needs of the project and testing staff. For example:

- Automated test cases may need less documentation than those run manually, or the automated script becomes the documentation
- Unit level test cases may need less documentation than system test cases
- Test cases for a system used to search for library books may need to be less rigorous than those for safety critical airplane navigation systems

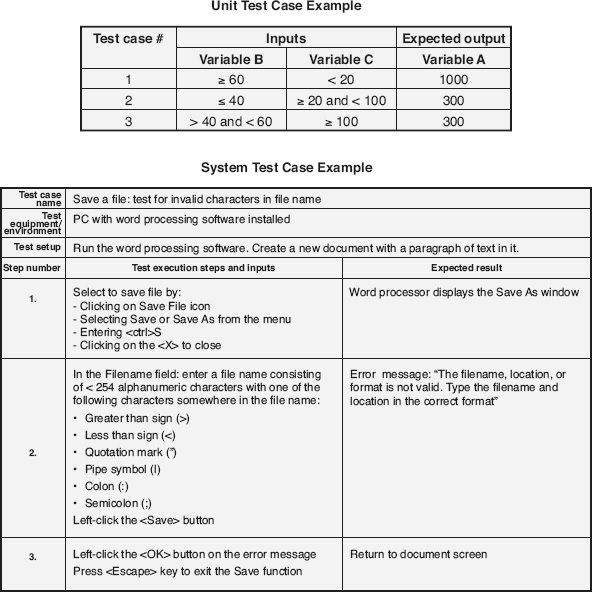

Figure 23.2 illustrates two examples of test case documentation: one simple example for unit test cases and one more rigorously documented example for a system test case.

The input specification part of the test case defines the requirements for the inputs into the test case. This input specification should be as generic as possible to:

- Allow the tester to sample across the possible input values for more coverage
- Minimize the number of total test cases and their associated maintenance effort over time as changes occur

Figure 23.2 Test case examples.

For example, if the input is a range of values, instead of specifying a specific number such as 7 or 73, the input specification might read “input an integer from one through one hundred.” In this case, the actual number chosen as input for each execution of the test case is recorded as part of the test log information to allow reproducibility of that specific test execution (and the reproducibility of any problem or anomaly identified). In other cases, when specific values are required as inputs into the test case, those specific inputs must be specified. For example, when performing boundary testing on that same range of values, those boundary values of 0, 1, 100 and 101 must be specified in the test cases.
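The two styles of input specification can be contrasted in a short Python sketch. The function names, the test case label `TC-RANGE-01`, and the dictionary used as a log record are assumptions made for illustration only.

```python
import random

LOW, HIGH = 1, 100  # "an integer from one through one hundred"


def boundary_values(low: int, high: int) -> list[int]:
    """Specific inputs for boundary testing: just outside and on each edge."""
    return [low - 1, low, high, high + 1]


def sample_input(low: int, high: int, rng: random.Random) -> int:
    """Pick one valid value from the generic input specification."""
    return rng.randint(low, high)  # randint includes both endpoints


rng = random.Random()
chosen = sample_input(LOW, HIGH, rng)

# The value actually used is recorded so that the execution can be reproduced.
log_entry = {"test_case": "TC-RANGE-01", "input": chosen}  # hypothetical record

print(boundary_values(LOW, HIGH))  # [0, 1, 100, 101]
```

Recording `chosen` at execution time is what lets a later run repeat the same input, even though the test case itself names only the range.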

The expected output part of the test case defines the expected results from the execution of the software with the specified inputs. This might include specific outputs (for example, reports to the printer, flashing lights, error messages, or specific numeric values). Alternatively, if inputs are selected from a range or set of possible inputs, this might include criteria for determining those outputs based on the selected input, for example:

- For numeric value outputs, the evaluation criteria might include a look-up table or an equation for determining the expected output values based on the selected inputs
- For a report output to the printer, the evaluation criteria might include the specification for judging whether the entire report was printed correctly with the correct format and that all information on the report is correct
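For numeric outputs, an equation-based evaluation criterion can be sketched as below. The sketch assumes, purely for illustration, that the software under test is required to return the square of an integer input; the function names are likewise hypothetical.

```python
def expected_output(selected_input: int) -> int:
    """Evaluation criterion written as an equation (assumed rule: square)."""
    return selected_input ** 2


def evaluate(selected_input: int, actual_output: int) -> str:
    """Compare the actual result with the expected result for that input."""
    if actual_output == expected_output(selected_input):
        return "pass"
    return "fail"


print(evaluate(7, 49))     # pass
print(evaluate(73, 5000))  # fail, because 73 squared is 5329
```

A look-up table would play the same role as `expected_output` when no simple equation relates the inputs to the outputs.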

The environmental needs (setup instructions) part of the test case defines any special test bed requirements for running the test case. For example, if it is a throughput-type requirement, there may be a need to attach a simulator that emulates multiple transaction inputs. Another example might be a requirement to run the test case while the temperature is over 140 degrees Fahrenheit for reliability testing.

Test Execution Documentation—Test Procedure

A test procedure, also called a test script or test scenario, is a collection of test cases intended to be executed as a set, in the specified order, for a particular purpose or objective. Test procedures are used, for instance, to evaluate a sequence or set of features by chaining together test cases to test a complete user scenario or usage thread within the software. For example, the “pay at the pump with a valid credit card” test procedure might chain together the following test cases:

- Scan a readable card test case with a valid credit card as input
- Validate a valid credit card with credit card clearinghouse test case with valid credit card and merchant information as input
- Select a valid gas grade test case with a valid gas grade as input
- Pump gas test case with selected gallons of gas being pumped as input
- Complete transaction with credit card clearinghouse test case with cost of gas pumped amount as input
- Prompt for receipt test case with selection of “yes” or “no” as input
- Print receipt test case with information on this transaction as input (this test case would only be included if “yes” was selected in previous test case)
- Complete transaction and store transaction information test case with information on this transaction as input

Alternative procedures might chain many of these same test cases together with other test cases and/or substitute other test cases into the chain. For example, the “pay at the pump with a valid debit card” test procedure would add an “accept valid PIN” test case after the first test case and use the “validate a valid debit card with credit card clearinghouse” test case in place of the “validate a valid credit card with credit card clearinghouse” test case in the above test procedure.
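The chaining and substitution described here can be sketched with ordered lists of test case names. The representation (plain strings in a Python list) and the function names are assumptions for illustration; a real test tool would reference test case identifiers and carry the inputs for each case.

```python
CREDIT_PROCEDURE = [
    "scan a readable card",
    "validate a valid credit card with credit card clearinghouse",
    "select a valid gas grade",
    "pump gas",
    "complete transaction with credit card clearinghouse",
    "prompt for receipt",
    "print receipt",
    "complete transaction and store transaction information",
]


def derive_debit_procedure(credit_steps: list[str]) -> list[str]:
    """Build the debit card procedure from the credit card procedure."""
    steps = list(credit_steps)  # copy, so the original procedure is unchanged
    steps.insert(1, "accept valid PIN")  # added after the first test case
    index = steps.index("validate a valid credit card with credit card clearinghouse")
    steps[index] = "validate a valid debit card with credit card clearinghouse"
    return steps


def cases_to_execute(steps: list[str], wants_receipt: bool) -> list[str]:
    """Drop the print receipt case when "no" was selected at the prompt."""
    return [s for s in steps if s != "print receipt" or wants_receipt]


debit_procedure = derive_debit_procedure(CREDIT_PROCEDURE)
for name in cases_to_execute(debit_procedure, wants_receipt=False):
    print(name)
```

Building the alternative procedure from the existing one, rather than copying every step by hand, reflects the reuse of test cases that the text describes.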

The documentation of a test procedure defines the purpose of the procedure, any special requirements, including environmental needs or tester skills, and the detailed instructional steps for setting up and executing that procedure. Each test procedure should have a unique identifier so that it can be easily referenced. The IEEE Standard for Software and System Test Documentation (IEEE 2008a) says the procedural steps should include:

- Methods and formats for logging the test execution results
- The setup actions
- The steps necessary to start, execute, and stop the test procedure (basically the chaining together of the test cases and their inputs)
- Instructions for taking test measures
- Actions needed to restore the test environment at the completion of the test procedure
- If a test procedure might need to be suspended, that test procedure also includes steps for shutting down and restarting the procedure
- The test procedure may also include contingency plans for dealing with risk associated with that procedure

Test procedures, like test cases, can be defined in documents, in a spreadsheet, in automated test code, or using automated testing tools. Like test cases, the level of detail needed in a documented test procedure will also vary based on the needs of the project and testing staff.

Test Execution Results Documentation—Test Log

A test log, also called a test execution log, is a chronological record that documents the execution of one or more test cases and/or test procedures in a testing session. The test log can be a very useful source of information later when the authors are having trouble reproducing a reported problem, or the tester is trying to replicate test results. For example, sometimes it is not the execution of the last test case or procedure that caused the failure, but the sequence of multiple test cases or procedures. The log can be helpful in repeating those sequences exactly. It may also be important to understand the time of day or day of the week that the tests were executed, the test environment configuration, or what other applications were running in the background at the time the failed test was executed.

The test log information can be logged manually by the testers into a document or spreadsheet, directly into the test cases/procedures, or into a testing tool. If test execution is automated, some or all of the test log information may be captured as part of the outputs recorded and reported by that automation. The test log should include generic information about each test execution session, including:

- A description of the session
- The specific configuration of the test environment
- The version and revision of the software being tested
- The tester(s) and observers (if any)

As the individual tests are executed during the session, specific chronological information about the execution of each test case and/or procedure is also logged, including:

- Time and date of execution
- Specific input(s) selected
- Any failures or other anomalies observed
- Associated problem report identifier, if a problem report was opened
- Other observations or comments worthy of note
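The session-level and per-execution information listed above could be captured in a structure like the following sketch. The class names `SessionLog` and `LogEntry`, the field names, and the `sequence_up_to` helper are hypothetical; they show one possible shape for a log, not a required format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class LogEntry:
    """One executed test case or procedure (hypothetical layout)."""
    executed_at: datetime
    test_id: str
    inputs: dict
    anomalies: list[str] = field(default_factory=list)
    problem_report_id: Optional[str] = None
    comments: str = ""


@dataclass
class SessionLog:
    """Generic session information plus chronological entries."""
    description: str
    environment: str
    software_version: str
    testers: list[str]
    entries: list[LogEntry] = field(default_factory=list)

    def record(self, test_id, inputs, anomalies=None,
               problem_report_id=None, comments="") -> LogEntry:
        """Append an entry stamped with the current UTC time."""
        entry = LogEntry(datetime.now(timezone.utc), test_id, inputs,
                         list(anomalies or []), problem_report_id, comments)
        self.entries.append(entry)
        return entry

    def sequence_up_to(self, test_id: str) -> list[str]:
        """Test IDs run up to and including the first execution of test_id."""
        ids = [e.test_id for e in self.entries]
        return ids[: ids.index(test_id) + 1]


log = SessionLog("Range field session", "test bed A", "2.1 rev 4", ["tester 1"])
log.record("TC-001", {"value": 42})
log.record("TC-002", {"value": 101}, anomalies=["no error message shown"],
           problem_report_id="PR-0007")
print(log.sequence_up_to("TC-002"))  # ['TC-001', 'TC-002']
```

A helper like `sequence_up_to` supports the situation described earlier, where a failure depends on the sequence of tests executed before it rather than on the last test alone.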

Test Execution Results Documentation—Problem Report

A problem report, also called incident report, anomaly report, bug report, defect report, error report, failure report, issue, trouble report, and so on, documents a failure or other anomaly observed during the execution of the tests. Problem reports may be part of the formal configuration status accounting information, if the item under test is baselined, or they may be reported informally, if the item is not baselined. A problem report should include information about the identified failure or anomaly with enough detail so that the appropriate change control board can analyze its impact and make informed decisions, and/or so that the author can investigate, replicate, and resolve the problem, if necessary. The information recorded in a problem-report-type change request is discussed in Chapter 26.

Problem report descriptions should:

- Be specific enough to allow for the identification of the underlying defect(s). For example:
  - This: Incomplete message packets input from the XYZ controller component do not time out after two seconds and report a communication error as stated in requirements R00124.
  - Not this: The software hangs up.
- Be neutral in nature and be stated in terms of observed facts, not opinions. Descriptions should treat the work product and its authors with respect. For example:
  - This: When the user enters invalid data into one of the fields in form ABC, the user receives the error message, “Error 397: Invalid Entry.” This error message does not identify the specific fields that were incorrectly entered or provide enough guidance to the user to allow the identification and correction of mistakes.
  - Not this: The error messages in this software are horrible.
- Be written in a clear and easily understood manner. For example:
  - This: Page 12, paragraph 2 of the user manual states “that the user should respond quickly …” This statement is ambiguous because the use of the term “quickly” does not indicate a specific, measurable response time.
  - Not this: The use of vague terminology and nonspecific references in adverbial phrasing makes the interpretation of page 12, paragraph 2 of the user manual questionable at best and may lead to misinterpretations by the individuals responsible for translating its realization into actionable responses.
- Describe a single failure or anomaly and not combine multiple issues into a single report. However, the tester should use their judgment here. If similar failures or anomalies appear to have the same root cause it may be appropriate to document them in the same problem report. Duplicates of the same failure or anomaly should also be recorded in the same problem report. However, if they are reported in separate reports (for example, if they come from different testers or users), a mechanism should exist for linking duplicate reports together.
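One simple mechanism for linking duplicate reports is a cross-reference stored on both reports, as in the sketch below. The `ProblemReport` fields, the identifiers, and the `link_duplicate` function are hypothetical and are not taken from any particular problem reporting tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProblemReport:
    """Minimal problem report with duplicate links (hypothetical fields)."""
    identifier: str
    description: str
    reported_by: str
    duplicate_of: Optional[str] = None
    duplicates: list[str] = field(default_factory=list)


def link_duplicate(reports: dict[str, ProblemReport],
                   duplicate_id: str, original_id: str) -> None:
    """Cross-reference two reports that describe the same failure."""
    if duplicate_id == original_id:
        raise ValueError("a report cannot be a duplicate of itself")
    reports[duplicate_id].duplicate_of = original_id
    reports[original_id].duplicates.append(duplicate_id)


reports = {
    "PR-0007": ProblemReport(
        "PR-0007",
        "Incomplete message packets from the XYZ controller do not time out "
        "after two seconds (requirement R00124).",
        "tester 1",
    ),
    "PR-0012": ProblemReport(
        "PR-0012",
        "No communication error is reported when the XYZ controller sends "
        "a partial packet.",
        "tester 2",
    ),
}
link_duplicate(reports, "PR-0012", "PR-0007")
print(reports["PR-0007"].duplicates)  # ['PR-0012']
```

Keeping the link in both directions lets anyone who opens either report find the other, whichever tester or user filed it.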

The contents and tracking of problem reports are further discussed in Chapter 26. While problem reports can be documented on paper forms, most organizations use some kind of automated problem reporting tool, or database, to document these reports and track them to resolution. A project’s V&V plans should specify which problem reporting and tracking mechanisms are used by that project.

Test Execution Results Documentation—Test Results Data

Test results data provide the objective evidence of whether or not a specific test case or test procedure passed or failed test execution. Test results data are compared with the expected results, as defined in the test cases, and evaluated to determine the outcome of the test execution.

Test Execution Results Documentation—Test Status Report

Test status reports provide ongoing information about the status of the testing part of the software project over time. Status reports are used to provide management visibility into the testing process including variances between plan and actual results, the status of risks (including new or changed risks) and issues that may require corrective action. Test status reports may be written reports or may be given verbally (for example, verbal status reports are given as part of the daily meeting of an agile team).
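The counts that typically feed a status report (test cases executed, passed, failed, and blocked) can be tallied from recorded outcomes, as in this sketch. The outcome labels, the function name, and the `not_run` figure are assumptions for illustration. Following the earlier definition, a blocked test case is counted as planned but not executed.

```python
from collections import Counter


def summarize_status(outcomes: dict[str, str], planned: int) -> dict[str, int]:
    """Tally test case outcomes against the number of planned test cases."""
    counts = Counter(outcomes.values())
    executed = counts["passed"] + counts["failed"]
    blocked = counts["blocked"]
    return {
        "planned": planned,
        "executed": executed,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "blocked": blocked,
        "not_run": planned - executed - blocked,  # planned but not yet attempted
    }


outcomes = {
    "TC-001": "passed",
    "TC-002": "failed",
    "TC-003": "blocked",
    "TC-004": "passed",
}
print(summarize_status(outcomes, planned=6))
```

Comparing `executed` with `planned` at each reporting interval gives one simple view of the variance between plan and actual results.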

Test Execution Results Documentation—Test Metrics

Test management metrics, along with product and process quality metrics, are fundamental to the tracking and controlling of the testing activities of the project. They also provide management with information to make better, informed decisions. Specific test measurement reports for selected test metrics may be stand-alone reports (Chapter 19 included an example of a system test sufficiency dashboard) or may be included in test status reports or test completion reports.

Test management metrics report the test activities’ progress against the test plans. Test management metrics provide visibility into trends in current progress, indicate accuracy in test planning estimation, flag needed control actions, can be used to forecast future progress, and provide data to indicate the completeness of the testing effort. Examples of test management metrics include:

- Test activity schedules planned versus actual
- Test effort planned versus actual
- Test costs budget versus actual
- Test resource utilization planned versus actual
- Requirements (and test case) volatility
- Testing staff turnover

Product quality metrics collected during testing can provide insight into the quality of the products being tested and the effectiveness of the testing activities. Product quality metrics also provide data to indicate the completeness of the testing effort, and the product’s readiness to transition to the next software life cycle activity, or be released. Examples of product quality metrics include:

- Defect arrival rates
- Cumulative defects by status
- Defect density

Process quality metrics can help evaluate the effectiveness and efficiency of the testing activities, and identify opportunities for future process improvement. Examples of test process quality metrics include:

- Escapes
- Defect detection efficiency
- Test cycle times
- Test productivity
- Test coverage
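A few of these metrics can be computed with simple arithmetic. This section does not give formulas, so the sketch below uses commonly cited definitions as assumptions: defect density as defects per thousand lines of code, and defect detection efficiency as the percentage of all known defects that testing found before they escaped. The function names and sample figures are illustrative only.

```python
def defect_density(defects: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (assumed size measure)."""
    if size_kloc <= 0:
        raise ValueError("size must be positive")
    return defects / size_kloc


def defect_detection_efficiency(found_in_testing: int, escapes: int) -> float:
    """Percentage of known defects found by testing rather than escaping it."""
    total = found_in_testing + escapes
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_in_testing / total


def variance(planned: float, actual: float) -> float:
    """Planned versus actual difference; positive means over plan."""
    return actual - planned


print(defect_density(30, 12.0))             # 2.5 defects per KLOC
print(defect_detection_efficiency(90, 10))  # 90.0 percent
print(variance(planned=40, actual=46))      # 6 units over plan
```

An organization's measurement plan may define these metrics differently (for example, by using function points as the size measure), so the definitions should be confirmed before comparing values across projects.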

Test Execution Results Documentation—Test Completion Report

A test completion report, also called a test report or test summary report, summarizes the results and conclusions from a major cycle of testing, or a designated set of testing activities, after the completion of that testing. For example, there may be an integration test completion report for each subsystem, a system test completion report, an acceptance test completion report, or other test completion reports, as appropriate. At a minimum, every test plan should have an associated test report. A project’s V&V plans should specify which test reports will be written for that project.

A test report includes a summary of the testing cycle or activities and their results, including any variances between what was planned and what was actually tested. The summary also includes an evaluation, by the testers, of the quality of the work products that were tested, and their readiness (and associated risks) to transition to the next stage of development or to be released into operations. The report also includes a detailed, comprehensive assessment of:

- The testing process against the criteria specified in the test plan, including a list of any source code modules, components, interfaces, features, and/or functions that were or were not adequately tested
- A list of identified problems:
  - If resolved—a description of their resolution
  - If unresolved—their associated risks, potential impacts, and work-arounds
- An evaluation of each item tested based on its pass/fail criteria and test results, including an assessment of its reliability (likelihood of future failure)

Finally, the test report includes a summary of test activities, and information including test measures, testing budget, effort and staffing actuals, number of test cases and procedures executed, and other information that would be beneficial when evaluating lessons learned, and planning and estimating future testing efforts.