A

ccording to ISO/IEC/IEEE Systems and Software Engineering—Vocabulary

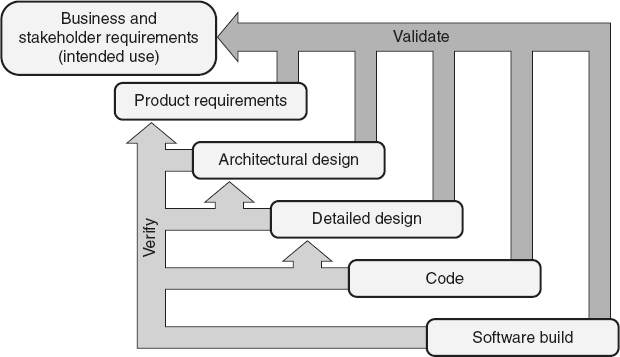

(ISO/IEC/IEEE 2010), verification

is “the process of evaluating a system or component to determine whether the products of a given development phase satisfy the conditions imposed at the start of that phase.” Verification is concerned with the process of evaluating the software to make certain that it meets its specified product requirements and adheres to the appropriate standards, practices, and conventions. Verification is implemented by looking one phase back. For example, code is verified against the detailed design and the detailed design against the architectural design. The Capability Maturity Model Integration (CMMI) for Development

(SEI 2010) defines a verification process area, which states that the purpose of verification “is to make certain that selected work products meet their specified requirements.” In other words, “Is the software being built right?”

Validation is the “confirmation, through the provision of objective evidence, that the requirements for a specific intended use or application have been fulfilled” (ISO/IEC/IEEE 2010). Validation is concerned with the process of evaluating the software to make certain that it meets its intended use and matches the stakeholders’ needs. The CMMI for Development (SEI 2010) defines a validation process area, which states that the purpose of validation “is to demonstrate that a product or product component fulfills its intended use when placed in its intended environment.” In other words, “Is the right software being built?” These definitions of verification and validation are illustrated in Figure 20.1.

Figure 20.1 Verification and validation.

Verification and validation (V&V) processes are complementary and interrelated. The real strength of V&V processes is their combined employment of a variety of different techniques to make certain that the software, its intermediate components, and its interfaces to other components of the system are high-quality, comply with the specified requirements, and will be fit for use when delivered. V&V activities provide objective evaluations of work products and processes throughout the software life cycle, and are an integral part of software development, acquisition, operations, and maintenance. “The purpose of V&V is to help the organization build quality into the system during the life cycle” (IEEE 2016).

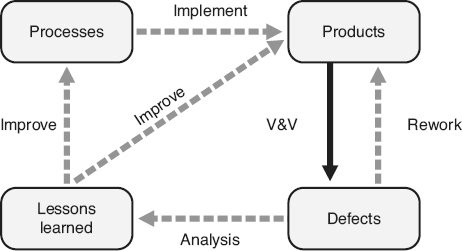

Not only do V&V activities provide objective evidence that quality has been built into the software, they also identify areas where the software lacks quality, that is, where defects exist. As illustrated in Figure 20.2, every organization has processes (formal and / or informal) that it implements to produce its software products. These software products can include both the final products that are used in operations (for example, executable software, user manuals) and interim products that are used within the organization (for example, requirements, designs, source / object code, test cases). V&V methods are used to identify defects, or potential defects, in all of those products. If potential defects are identified, they must be analyzed to determine if actual defects exist. Identified defects are then eliminated through rework, which has an immediate effect on the quality of the product. V&V activities provide the mechanisms for defect discovery throughout the software life cycle. Feedback from V&V activities performed in parallel with development activities provides a cost-effective mechanism for correcting defects in a timely manner and implementing corrective actions to prevent future recurrence of similar defects. This feedback minimizes the overall cost, schedule, and product quality impacts of software defects on the project.

The defects found with V&V methods should be analyzed to determine their root cause. The lessons learned from this analysis can be used to improve the processes used to create future products and therefore prevent future defects, thus having an impact on the quality of future products produced.

The data recorded during the V&V activities can also be used for making better management decisions about issues such as:

- Whether the current quality level of the software is adequate
- What risks are involved in releasing the software in its current state
- Whether additional V&V activities are cost-effective
- What the staff, resource, schedule, and budget estimates for future V&V efforts should be

Figure 20.2 V&V techniques identify defects.

Use software verification and validation methods (e.g., static analysis, structural analysis, mathematical proof, simulation, and automation) and determine which tasks should be iterated as a result of modifications. (Apply)

BODY OF KNOWLEDGE VI.A.1

Static Analysis

Static analysis is a method used to perform V&V by evaluating a software work product to assess its quality and identify defects without executing that work product. Reviews, analysis, and mathematical proofs are all forms of static analysis.

Static analysis techniques include various forms of product and process reviews to evaluate the completeness, correctness, consistency, and accuracy of the software products and the processes that created them. These reviews include:

- Entry / exit criteria reviews, quality gates, and phase gate reviews, discussed in Chapter 16, are used to evaluate whether the products of a process or major activity (phase) are of high enough quality to transition to the next activity
- Peer reviews including inspections, discussed in Chapter 22, can be used for engineering analysis and as defect-detection mechanisms
- Other forms of technical reviews and pair programming, discussed in Chapter 22, can also be used to assess and evaluate software products and processes and find defects

Static analysis can be performed using tools (for example, compilers, requirement / design / code analyzers, spell checkers, grammar checkers, and other analysis tools).

Individuals or teams can also perform analysis activities through in-depth assessments of the requirements or of other software products. For example, Chapter 11 included a discussion of various models that can be useful in analyzing the software requirements for completeness, consistency, and correctness. These same models can be used in the analysis of the software’s architecture and component designs. Other forms of analysis might include feasibility analysis, testability analysis, traceability analysis, hazard analysis, criticality analysis, security analysis, or risk analysis used to help identify and mitigate issues. Requirements allocation and traceability analysis is used to verify that the software (or system) requirements were implemented completely and accurately.

Mathematical proofs, also called proofs of correctness, are “a formal technique used to prove mathematically that a computer program satisfies its specified requirements” (ISO/IEC/IEEE 2010). Mathematical proofs use mathematical logic (Boolean algebra) to deduce that the logic of the design or code is correct when compared to its predecessor work products.

Dynamic Analysis

Dynamic analysis methods are used to perform V&V on software components and / or products by executing them and comparing the actual results to expected results. Testing and simulations, discussed in Chapters 21 and 23, are forms of dynamic analysis.
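As a minimal sketch of the idea of executing a component and comparing actual results to expected results, consider the following. The function `clamp`, the `TEST_CASES` table, and the helper `run_cases` are hypothetical names chosen for illustration; they do not come from any standard or from a particular test framework.

```python
def clamp(value, low, high):
    """Hypothetical unit under test: restrict value to the range [low, high]."""
    return max(low, min(value, high))


# Each test case pairs the inputs with the expected result.
TEST_CASES = [
    ((5, 0, 10), 5),     # value already inside the range
    ((-3, 0, 10), 0),    # value below the lower bound
    ((42, 0, 10), 10),   # value above the upper bound
]


def run_cases(func, cases):
    """Execute func for every case and return the cases whose actual result differs."""
    failures = []
    for args, expected in cases:
        actual = func(*args)
        if actual != expected:
            failures.append({"args": args, "expected": expected, "actual": actual})
    return failures


if __name__ == "__main__":
    for failure in run_cases(clamp, TEST_CASES):
        print("MISMATCH:", failure)
```

An empty result only means that no mismatch was observed for these particular cases; it does not show that the component is free of defects.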

Piloting is another type of dynamic analysis technique. The piloting of a software release, also called beta testing or first office verification, rolls out the new software to just a few operational sites for evaluation before propagating it to a large number of sites in order to minimize the risk of full-scale implementation. For other software deliverables that are difficult to test, such as training and help desk support, piloting can be a useful V&V technique. For example, a set of typical software issues or problem areas could be compiled during system test execution and used to create a set of “typical” problem scenarios used to pilot the help desk. The testers could call the help desk, describe one of the scenarios, and evaluate whether the help desk personnel can adequately handle the call.

Product Audits

Product audits, discussed in Chapter 8, can be performed throughout the life cycle to evaluate the product against the requirements and against required standards (for example, coding standards, naming conventions, and required document formats). Product audits usually use static analysis techniques, but they can also use dynamic analysis techniques, for example, when the auditor selects a sample of test cases to execute as part of the audit. Physical and functional configuration audits, discussed in Chapter 27, are also used as a final check for defects before the product is transitioned into operations.

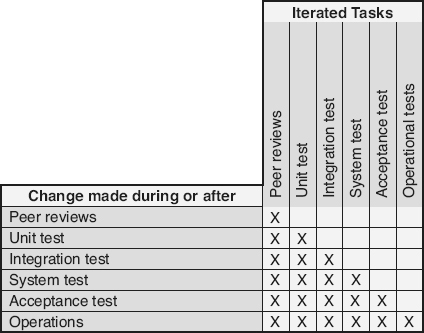

V&V Task Iteration

Whenever a change is made to a software work product that has already undergone one or more V&V activities, an evaluation should be done to determine which of the already-executed V&V activities will need to be re-executed (iterated). This evaluation should initially be done as part of the impact analysis performed when evaluating the change (see Chapter 26 for a discussion of impact analysis). Additional evaluation may be needed as the change is implemented, if the depth and breadth of that change end up being different than expected.

The first part of the V&V task iteration decision is based on what V&V activities have already occurred. As Figure 20.3 illustrates, if changes are made after the completion of just the peer reviews, then the only consideration is whether or not to repeat the peer reviews. If the change is made later in the life cycle, any completed (or partially completed) V&V activities are candidates for iteration.

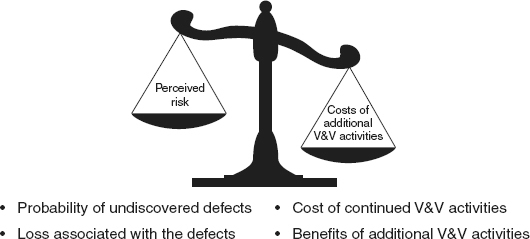

The second part of the V&V task iteration decision is based on risk and the concept of V&V sufficiency. As illustrated in Figure 20.4, V&V sufficiency analysis weighs the risk that the software still has undiscovered defects, and the potential loss associated with those defects, against the cost of performing additional V&V activities and the benefits of those activities:

- Just because V&V tasks were iterated does not mean that any new or additional defects will be found.
- Just because the software was changed does not mean new defects were introduced.
- Even if defects were introduced, it does not mean that those defects will cause future failures. For example, most software practitioners have experienced latent defects that have taken years to discover after they were originally introduced into a software product because the software was never before used in the exact way required to uncover the defect.
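One way to make the sufficiency trade-off concrete is sketched below. It assumes, purely for illustration, that the benefit of repeating a V&V activity can be approximated as the chance that undiscovered defects remain, times the chance the activity would find them, times the loss if they escape. The class `IterationCandidate`, its fields, and all of the numbers are hypothetical estimates, not values prescribed by any standard.

```python
from dataclasses import dataclass


@dataclass
class IterationCandidate:
    name: str
    cost: float                   # estimated cost of re-executing the activity
    defect_probability: float     # estimated chance that undiscovered defects remain
    detection_probability: float  # estimated chance the activity would find them
    potential_loss: float         # estimated loss if those defects escape

    def expected_benefit(self) -> float:
        """Assumed model: loss avoided, weighted by both probabilities."""
        return self.defect_probability * self.detection_probability * self.potential_loss


def worth_iterating(candidate: IterationCandidate) -> bool:
    """Iterate only when the expected benefit exceeds the cost of the activity."""
    return candidate.expected_benefit() > candidate.cost


# Hypothetical estimates for two already-completed activities.
peer_review = IterationCandidate("peer review", 2_000, 0.3, 0.5, 50_000)
system_test = IterationCandidate("full system test rerun", 40_000, 0.1, 0.8, 100_000)

if __name__ == "__main__":
    for candidate in (peer_review, system_test):
        print(candidate.name, worth_iterating(candidate))
```

With these assumed estimates, repeating the peer review looks worthwhile while a full system test rerun does not. The result is only as good as the estimates, which is why the project's own metrics should feed them.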

Figure 20.3 Verification and validation task iteration considerations.

Figure 20.4 Verification and validation sufficiency.

V&V task iteration risk analysis should consider the quality and integrity needs of the product or product component being evaluated. For example, software work products that have the following characteristics are typically candidates for more V&V task iteration than those that do not:

- Mission-critical
- Safety-related
- Security-related
- Highly coupled
- Defect-prone in the past
- Most used functionality

Metrics can be used so that trade-off decisions can be made based on facts and information rather than “gut feelings.” For example, metrics information can be used to tailor the V&V plans based on data collected on previous products or projects, on the estimated defect counts for various work products, and on an analysis of how applying the various techniques can yield the best results given the constraints at hand. As the project progresses, metrics information can also be used to refine the V&V plans using actual results to date.

Use various evaluation methods on documentation, source code, etc., to determine whether user needs and project objectives have been satisfied. (Analyze)

BODY OF KNOWLEDGE VI.A.2

Evaluation Methods

Common V&V techniques used for requirements and design include the following (requirements validation is discussed in more detail in Chapter 11):

- Analysis (for example, interface analysis, criticality analysis, hazard analysis, testability analysis, feasibility analysis, security analysis, and risk analysis) and pair programming, peer reviews including inspections, and other technical reviews to:
  - Validate that the business, stakeholder, and product requirements, the architectural design, and the component designs meet the intended use and stakeholders’ needs
  - Validate that the requirements as a set are complete, internally and externally consistent, and modifiable
  - Validate that each specified requirement is clear, unambiguous, concise, finite, measurable, feasible, testable, traceable, and value-added
  - Verify that the design completely and consistently implements the specified requirements, and that it is feasible and testable
  - Verify 100% traceability from business requirements to stakeholder requirements to product requirements to architectural design to component design
  - Validate and verify that criticality, safety, security, and risks are identified, analyzed, and mitigated in the requirements and design, as appropriate
  - Verify that the specified requirements and designs comply with standards, policies, regulations, laws, and business rules
- Mathematical proofs of correctness to verify the correctness of the requirements and design based on their predecessor specifications
- Functional configuration audits to verify that the completed requirements and designs conform to their requirements specification(s) in terms of completeness, performance, and functional characteristics
- Prototyping to evaluate correctness and feasibility
- Traceability matrices to verify that the requirements have been built into the software work products and that one or more test cases exist to verify and / or validate each requirement and its implementation
- Design of integration, system, and acceptance test cases and test procedures, and the execution of test cases and test procedures to validate and verify the correct and complete implementation of the requirements and design
- Static analysis tools including spelling and grammar checkers and tools used to search for words and phrases that are potentially problematic, for example:
  - Non-finite words or phrases (for example, all, always, sometimes, including but not limited to, and etc.)
  - Words ending with “ize” or “ly” (for example, optimize, minimize, quickly, user-friendly)
  - Other ambiguous or non-measurable words (for example, fast, quick, responsive, large)
  - Indications of incompleteness (for example, “to be done,” “to be determined,” or “TBD”)
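The search for potentially problematic words and phrases described in the list above can be sketched in a few lines. This is an illustrative sketch only: the `PATTERNS` table and the function `flag_requirement` are hypothetical names, and the word lists simply reuse the examples given above rather than any organization's real checklist.

```python
import re

# Illustrative patterns built from the example words listed above.
PATTERNS = {
    "non-finite": r"\b(all|always|sometimes|including but not limited to|etc)\b",
    "ize/ly ending": r"\b\w+(ize|ly)\b",
    "non-measurable": r"\b(fast|quick|responsive|large)\b",
    "incomplete": r"\b(to be done|to be determined|TBD)\b",
}


def flag_requirement(text):
    """Return (category, matched text) pairs for potentially problematic wording."""
    findings = []
    for category, pattern in PATTERNS.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((category, match.group(0)))
    return findings


if __name__ == "__main__":
    sample = "The system shall respond quickly to all queries (timeout TBD)."
    for category, word in flag_requirement(sample):
        print(f"{category}: {word}")
```

A pattern this simple will also flag harmless words (for example, "only" or "apply"), so each hit is a candidate for human review rather than a confirmed defect.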

Common V&V techniques used to evaluate source code include:

- Analysis (for example, data flow analysis, control flow analysis, and criticality, hazard, security, safety, and risk analysis) and pair programming, peer reviews, and other types of technical reviews to:
  - Validate that the source code as written meets the intended use and stakeholders’ needs
  - Verify that the source code completely and consistently implements its component design and allocated requirements
  - Verify 100% traceability from component design to source code
  - Verify that criticality, safety, security, and risks are mitigated in the source code as specified
  - Verify that the specified source code complies with standards, policies, regulations, laws, and business rules
- Mathematical proofs of correctness to verify the correctness of each source code module based on its component design
- Traceability matrices to verify that the requirements and component designs have been built into the source code and that one or more unit / integration test cases exist to verify each source code module
- Design and execution of unit test cases and test procedures to verify the correct and complete implementation of the code and its associated component design
- Functional configuration audits to verify that the completed source code modules conform to their requirements specification(s) in terms of completeness, performance, and functional characteristics
- Physical configuration audits to verify that the completed source code modules conform to the technical documentation that defines them
- Static analysis tools (for example, compilers, more-sophisticated code analyzers, and security analyzers) to identify defects, security holes, and other anomalies

Common V&V techniques used to evaluate software builds include:

- Integration, system, alpha, beta, and acceptance test execution to validate and verify the correct and complete implementation of the software products including user documentation
- Build reproducibility analysis to verify the reproducibility of the build
- Functional configuration audits to verify that the completed software products including user documentation conform to their requirements specification(s) in terms of completeness, performance, and functional characteristics
- Physical configuration audits to verify that the completed software products including user documentation conform to the technical documentation that defines them
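Build reproducibility analysis, listed above, can be approached in several ways. One simple approach, sketched here under the assumption that two builds of the same baseline have been written to separate output directories, is to compare a cryptographic digest of every file. The function names `digest_tree` and `compare_builds` are hypothetical.

```python
import hashlib
from pathlib import Path


def digest_tree(root):
    """Map each file path (relative to root) to the SHA-256 digest of its contents."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_builds(first_dir, second_dir):
    """Return the relative paths that are missing from one build or differ in content."""
    first, second = digest_tree(first_dir), digest_tree(second_dir)
    return sorted(
        name for name in first.keys() | second.keys()
        if first.get(name) != second.get(name)
    )
```

An empty list suggests the build is reproducible for this pair of runs. Differences caused by embedded timestamps or similar build metadata would also be reported and would need to be analyzed separately.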

Common V&V techniques used to evaluate test cases and test procedures include:

- Analysis, pair programming, peer reviews, and other technical reviews to:
  - Validate that the test cases and test procedures as written reflect the intended use and stakeholders’ needs
  - Verify that the test cases and test procedures completely and consistently verify the source code, design, and / or requirements
  - Verify 100% traceability from requirements to test cases and test procedures
  - Verify that the specified test cases and test procedures comply with standards, policies, regulations, laws, and business rules
- Traceability matrices to verify test coverage and completeness of the sets of test cases and test procedures
- Manual execution of test cases and test procedures before they are automated to identify defects and other anomalies
- Review and testing of the test automation, after the tests are automated and before the automation is used to test the software, to identify defects and other anomalies
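The traceability checks mentioned above lend themselves to a simple automated query. The sketch below assumes the traceability matrix is available as a mapping from requirement identifiers to the test cases that trace to them; the identifiers, the dictionary layout, and the function names are all hypothetical.

```python
def untested_requirements(requirement_ids, trace_matrix):
    """Return the requirements that have no test case tracing to them."""
    return sorted(req for req in requirement_ids if not trace_matrix.get(req))


def unknown_requirements(requirement_ids, trace_matrix):
    """Return matrix entries that reference requirements not in the baseline."""
    known = set(requirement_ids)
    return sorted(req for req in trace_matrix if req not in known)


# Hypothetical baseline and matrix for illustration.
REQUIREMENTS = ["R1", "R2", "R3"]
TRACE_MATRIX = {
    "R1": ["TC-01", "TC-02"],
    "R2": [],          # listed, but no test case yet
    "R9": ["TC-07"],   # traces to a requirement that is not in the baseline
}

if __name__ == "__main__":
    print("No test coverage:", untested_requirements(REQUIREMENTS, TRACE_MATRIX))
    print("Not in baseline:", unknown_requirements(REQUIREMENTS, TRACE_MATRIX))
```

A check like this shows only that a link exists. Whether the linked test cases adequately verify the requirement still has to be judged through review.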

Common V&V methods used to evaluate documentation (for example, training materials, user documentation) include:

- Analysis and pair programming, peer reviews, and other types of reviews to:
  - Validate that the documentation as written meets the intended use and stakeholders’ needs
  - Verify that the documentation completely and consistently implements its allocated requirements
  - Verify 100% traceability from requirements to documentation
  - Verify that criticality, safety, security, and risks are mitigated in the documentation as specified and / or needed
  - Verify that the specified documentation complies with standards, policies, regulations, laws, and business rules
- Design and implementation of test cases and test procedures to verify the user-deliverable documentation (for example, user manuals and installation instructions)
- Static analysis tools (for example, spell checkers and grammar checkers) to identify defects and other anomalies

Common V&V methods used to evaluate the replication and packaging process include comparing the replicated product to the original, and delivering a complete software shipment in-house, evaluating its contents, and installing and testing / operating the included software. Piloting is a common V&V method for evaluating training and help desk services.

Risk-Based V&V

Risk-based V&V prioritizes software items (work products, product components, or features / functions) based on their highest risk exposure. The risk exposure is calculated based on probability and impact. In risk-based V&V, probability is the estimated likelihood that yet undiscovered, important defects will exist in the software item after the completion of a V&V activity. Impact is the estimated cost of the result or consequence if one or more undiscovered defects escape detection during the V&V activity. The higher the risk exposure of an item is, the higher the level of rigor and intensity needed when performing and documenting V&V activities for that item.
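As a minimal sketch of this prioritization, the example below assumes the common convention that risk exposure is the product of probability and impact; the text above says only that exposure is calculated from the two. The class `SoftwareItem`, the item names, and every estimate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SoftwareItem:
    name: str
    probability: float  # estimated likelihood that important defects remain (0.0 to 1.0)
    impact: float       # estimated cost if those defects escape detection

    @property
    def risk_exposure(self) -> float:
        # Assumed formula: exposure = probability x impact.
        return self.probability * self.impact


def prioritize(items):
    """Order items from highest to lowest risk exposure."""
    return sorted(items, key=lambda item: item.risk_exposure, reverse=True)


# Hypothetical estimates for three software items.
ITEMS = [
    SoftwareItem("report formatting", probability=0.5, impact=20_000),
    SoftwareItem("payment processing", probability=0.2, impact=500_000),
    SoftwareItem("login", probability=0.1, impact=300_000),
]

if __name__ == "__main__":
    for item in prioritize(ITEMS):
        print(f"{item.name}: {item.risk_exposure:,.0f}")
```

Note that the item with the highest defect probability (report formatting) ends up last here, because its estimated impact is small. Items at the top of the list would receive the most rigorous V&V.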

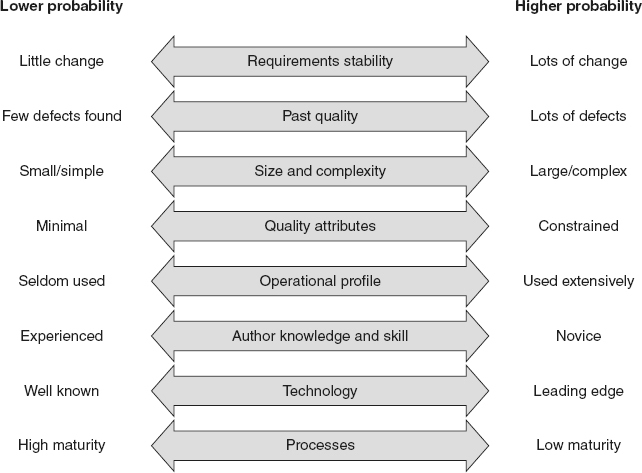

Multiple factors or probability indicators may contribute to a software item having a higher or lower risk probability. These probability indicators may vary from project to project, and from environment to environment. Therefore, each organization or project should determine and maintain a list of probability indicators to consider when assigning risk probabilities to its software items. Figure 20.5 illustrates examples of probability indicators, including:

- The more churn there has been in the requirements allocated to the software item, the more likely it is that there are defects in that item
- Software items that have had a history of defects in the past are more likely to have additional defects
- Larger and / or more-complex software items are more likely to have defects than smaller and / or simpler software items
- The more constraints on the quality attribute (for example, reliability, performance, safety, security, maintainability), the more likely it is that there are related defects in the software item
- The more an item in the software is used, the more likely it is that users will encounter defects in that part of the software
- Novice software developers with less knowledge and skill tend to make more mistakes than experienced developers, resulting in more defects in their work products
- If the developers are very familiar with the programming language, tool set, and business domain, they are less likely to make mistakes than if they are working with new or leading-edge technology
- Higher-maturity processes are more likely to help prevent defects from getting into the work products

Figure 20.5 Probability indicators – examples.

Multiple and varying factors or impact indicators may also contribute to a software item having a higher or lower risk impact. Each organization or project should determine and maintain a list of impact indicators to consider when assigning risk impacts to its work products. Examples of impact indicators include:

- Schedule and effort impacts
- Development and testing costs
- Internal and external failure costs
- Corrective action costs
- High maintenance costs
- Stakeholder dissatisfaction or negative publicity resulting in lost market opportunities and / or sales
- Litigation, warranty costs, or penalties
- Noncompliance with laws, regulations, or policies

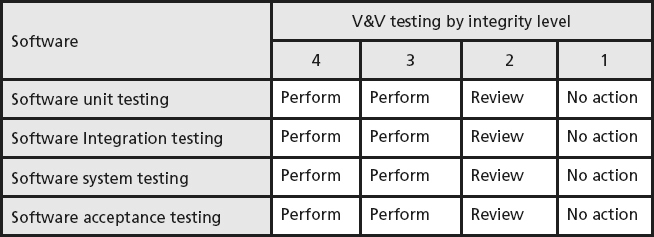

The IEEE Standard for System, Software and Hardware Verification and Validation (IEEE 2016) uses the assignment of an integrity level, varying from high to low, to determine the required set of V&V activities and their associated level of rigor and intensity for each software item (instead of risk exposure, as discussed above). Other standards, including industry-specific standards, use similar integrity level grading schemas. The “integrity level determination establishes the importance of the system to the user and acquirer based on complexity, criticality, risk, safety level, security level, desired performance, reliability or other system-unique characteristics” (IEEE 2016). This IEEE standard defines a four-level integrity schema as an example, as illustrated in Table 20.1. According to this table:

Table 20.1 Software testing requirements by integrity level – example (based on [IEEE 2016]).

- For level 3 and level 4 (high integrity level) products, all of the V&V activities are required to be performed
- For level 2 (lower integrity level) products, a review should be conducted to determine the need to perform each V&V activity
- For level 1 (lowest integrity level) products, no V&V actions are necessary
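The three rules above amount to a small lookup from integrity level to the required V&V treatment, sketched below. The function name `vv_treatment` and the wording of the returned strings are illustrative paraphrases of the bullets, not text taken from the IEEE standard.

```python
def vv_treatment(integrity_level: int) -> str:
    """Map an integrity level (1 to 4) in the example schema to its V&V treatment."""
    if integrity_level in (3, 4):
        return "perform all V&V activities"
    if integrity_level == 2:
        return "review the need for each V&V activity"
    if integrity_level == 1:
        return "no V&V actions necessary"
    raise ValueError(f"integrity level must be 1 to 4, got {integrity_level}")


if __name__ == "__main__":
    for level in (4, 3, 2, 1):
        print(level, "->", vv_treatment(level))
```

Rejecting values outside 1 to 4 is a design choice of this sketch. A project that defines a different integrity schema in its V&V plans would replace the mapping accordingly.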

However, this IEEE standard leaves the determination of the actual integrity levels up to the needs of each project/program, and states that the V&V plans should include (IEEE 2016):

- The characteristics used to determine the selected integrity schema
- The resulting item integrity levels
- Plans defining the minimum level of V&V activities based on each item’s integrity level

V&V Plans

Each project / program defines its V&V strategies and tactics as part of its V&V plans. The IEEE Standard for System, Software and Hardware Verification and Validation (IEEE 2016) provides an outline, and guidance, for performing V&V planning. Like other planning documents, the V&V plans should include information about the project’s organizational structure, schedule, budget, roles and responsibilities, resources, tools, techniques, and methods related to the V&V activities. The V&V plans also define the integrity level schema mentioned above.

The V&V plans define the risk-based, or integrity-based, V&V processes that are planned for each major phase / activity of the software life cycle to evaluate the work products produced or changed during those phases / activities, including:

- Acquisition
- Supply
- Project planning
- Configuration management
- Business, stakeholder, and product requirements
- Design including architectural and component design
- Implementation
- Test (unit, integration, system, alpha, beta)
- Acceptance
- Installation
- Operations
- Maintenance
- Retirement (disposal)

The V&V plans should include plans for ongoing status reporting on identified problems and V&V activities, for V&V summary reports, and for any special or other V&V reports required for the project. The V&V plans should also define V&V administrative procedures including:

- Processes for reporting identified problems and tracking them to resolution
- Policies for V&V task iteration
- Policies for obtaining deviations or waivers
- Processes for controlling V&V-related configuration items
- V&V standards, practices, and conventions that are being used on a project, including those adopted or tailored from the organizational-level standards and any that are project-specific