4

Words, Words, Words

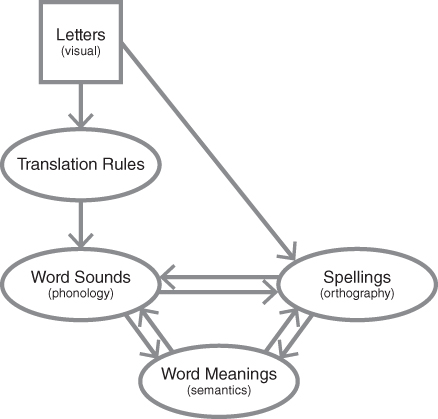

We've been building a simple model of reading since Chapter 1 and our goal has remained the same: How do you use text to access word meanings (Figure 4.1)?

Figure 4.1. Letters, sound, spelling, and meaning.

© Daniel Willingham

But we've said next to nothing about what those word meanings in your memory actually look like, nor where they come from. We can't put that off any longer. After all, we're focusing on the aspects of cognition that lead to successful or unsuccessful reading. In that light, you would expect that vocabulary would be important—if I encounter a bunch of unknown words as I read, that can't be good for comprehension.

Researchers usually call this the breadth of your vocabulary and, as we'll see, it matters. But we'll also see that it's only one aspect of the contribution that word representations make to reading. Another factor is vocabulary depth, which may matter even more. Depth implies that your knowledge of a given word is not a simple on‐off matter wherein you know a word or you don't; word knowledge may be shallow, deep, or somewhere in between.

Let's start by digging into what it means to know a word.

The Baffling Complexity of Word Knowledge

As a starter, please offer a brief definition of these words:

At first blush, the question, “What does it mean to know a word?,” doesn't seem that daunting. We'd guess that we have something like a mental dictionary where word meanings are stored, probably not that different than the paper or online dictionaries we're used to consulting. If I look up the word “spill,” for example, I find these three senses of the verb:1

But the theory that mental dictionaries resemble paper dictionaries won't withstand much scrutiny because it's not hard to generate sentences that the paper dictionary says ought to work, yet our mental dictionary says don't. For example, if I wrote, “Would you mind spilling water on my plants while I'm on vacation?”, that seems wrong, even though it is consistent with the second definition. And this sentence seems consistent with the third: “This sandwich would be better if I had spilled the peanut butter to the edge of the bread.” This sort of lexical near‐miss is familiar to teachers. Students try to enliven their writing with unusual words and, armed with a thesaurus but without detailed knowledge of definitions, they submit papers studded with rare words used in not‐quite‐correct ways.

Admittedly, these examples don't seem to rule out the possibility that the mental dictionary is like the paper variety. They just seem to imply that the mind's dictionary uses more precise definitions than Merriam‐Webster does. With enough time, dictionary writers could sharpen the definition to match what's in the mind and so exclude odd usages like the peanut butter example. But that assessment is too optimistic. The real problem is that words, in isolation, are ambiguous. They derive their meaning from context. Dictionaries can't specify all contexts, and so seek to offer context‐free definitions. That's why they will inevitably be incomplete.

Let's start with an easy example, the word “heavy.” “Heavy” (according to Merriam‐Webster online) means “having great weight.”2 But “great weight” depends on the noun to which “heavy” is applied. Fifty pounds is heavy if I'm describing a watermelon, but it's not heavy if I'm describing an adult human. (Reminder: I'm putting quotation marks around a word as it would appear on the page; if it's boldface and italicized, that means it's the meaning of the word in your mind.) When I write, “that's a heavy watermelon,” what I've actually told you is that the watermelon is heavy compared to most watermelons.

This is what I mean when I say that words take on meaning from their context: heavy becomes more meaningful when I know the word applies to watermelon. And if the word heavy means less on its own than we expected, watermelon must mean more. Understanding “heavy watermelon” requires that your mental definition of watermelon cannot consist simply of something like sweet fruit with a juicy red flesh. It must also include a watermelon's typical weight.

The verb enjoy is also ambiguous. You do different things when you enjoy a movie, or enjoy watermelon, or enjoy a friendship. The best we can do for a definition of enjoy without any context might be to take pleasure in something. But when I hear that you're enjoying a watermelon, I know more than that you're taking pleasure in it. I know you're eating it. (I suppose you could enjoy a watermelon just by looking at it or smelling it. If you tell me you're enjoying it by listening to it, we need to have a longer conversation.) To understand the word enjoy I must understand the function of what is being enjoyed: watermelons are for eating, movies for diversion, friendships for companionship. So again, a word (enjoy) turns out to be ambiguous, and so in order to make sense of it, another word (watermelon) must have more information stored in its definition (edibility) than we might have guessed.

Last example. Suppose you read this question: “Does photosynthesis contribute to the growth of a watermelon?” You can answer, of course, but it's odd that you're able to do so. Unless you happen to have studied watermelons closely at some point in school, you likely never read in a book or learned from a teacher that watermelon plants use photosynthesis. No, you successfully answer this question because you know watermelons are the fruit of a plant, and plants use photosynthesis. Again, it seems a word definition (watermelon) that ought to have been simple needs to have more information (grows on plants) crammed into it.

The meaning of watermelon is getting crowded, but this problem is still more extreme than I've implied. All the examples so far have shown that some words must have properties stored with them so that other, more obviously ambiguous words can have meaning. But in another sense, all words are ambiguous because all words have many different associations or features of meaning that might be important (or not). And which feature matters depends on context. Let's look at another example:

These three examples show that even a straightforward verb you might read, like “spill,” is still ambiguous in a sense. Spill has lots of features: spills can make a mess; when you spill something you have less of it; and liquid spills penetrate clothing and can, depending on the liquid, injure someone. You don't know which feature is relevant upon reading “Trisha spilled her coffee,” so the simple word spill is, outside of a particular context, somewhat ambiguous.

We've reached some sort of a tipping point. Anything I know about spilling may become relevant to how I interpret the word; it all depends on the surrounding context. We can't squeeze everything we know about watermelons and spills into their mental definitions. We need some other way to make what we know about watermelons available to shape the way we interpret the word when we encounter it.

How Words Are Organized in the Mind

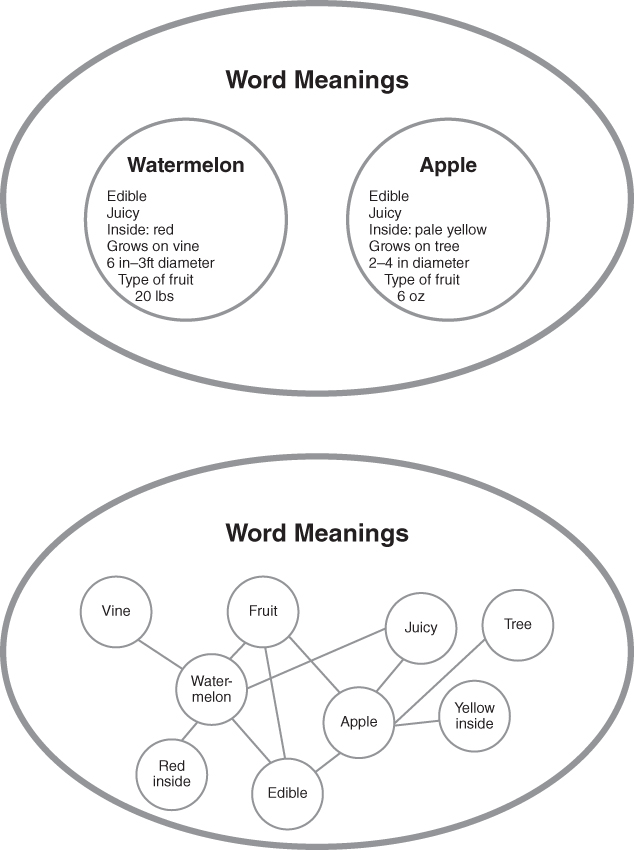

Instead of packing more and more into our hypothetical mental dictionaries, we'll do the opposite. We'll minimize what's in any one entry, but we'll add lots of connections among the entries. This scheme is typically represented by showing mental concepts as nodes in a network, and adding connections or links among the nodes (Figure 4.2).

Figure 4.2. Alternative representations for word definitions. Top, how we might imagine watermelon and apple are represented in the mind. Bottom, a more workable type of representation. Note that in each case we're looking at a more detailed view of the “word meanings” component of the model from Figure 4.1.

© Daniel Willingham

We've seen something like this network before in Chapter 2, when we discussed word identification. As before, each node in the network can have activity—think of it like energy. This time, let's add the idea that the nodes don't just turn on or off, but can be brighter or fainter, like a light bulb turned up or down with a dimmer switch. When you're conscious of a concept (thinking about watermelon, for example), that's synonymous with the node being fully active, the light bulb being as bright as it can be. The lines between nodes represent connections; they carry energy from one node to another. Concepts will be linked if they are attributes (watermelon has the attribute of redness) or category membership (watermelon is a type of fruit) or close semantic relationship (watermelon is similar in meaning to cantaloupe).

When the watermelon node is active, the energy is passed via the links to other concepts connected to watermelon. Characteristics of watermelon and the function of watermelon and the fact that watermelons grow on plants—all of these can be related to the concept watermelon without having to stuff them all into the mental dictionary entry for watermelon.3

Here's why that's a big advantage. When you read the word “watermelon,” the mental representation of the concept watermelon should have a certain promiscuity—you never know which feature of watermelon is going to be important, so all of them need to be available to color your interpretation of “watermelon.” But they won't all be important at the same time. We need to capture the fact that your mind homes in on just the right knowledge about watermelons for the context, while ignoring all the information related to watermelons that doesn't matter for the context.

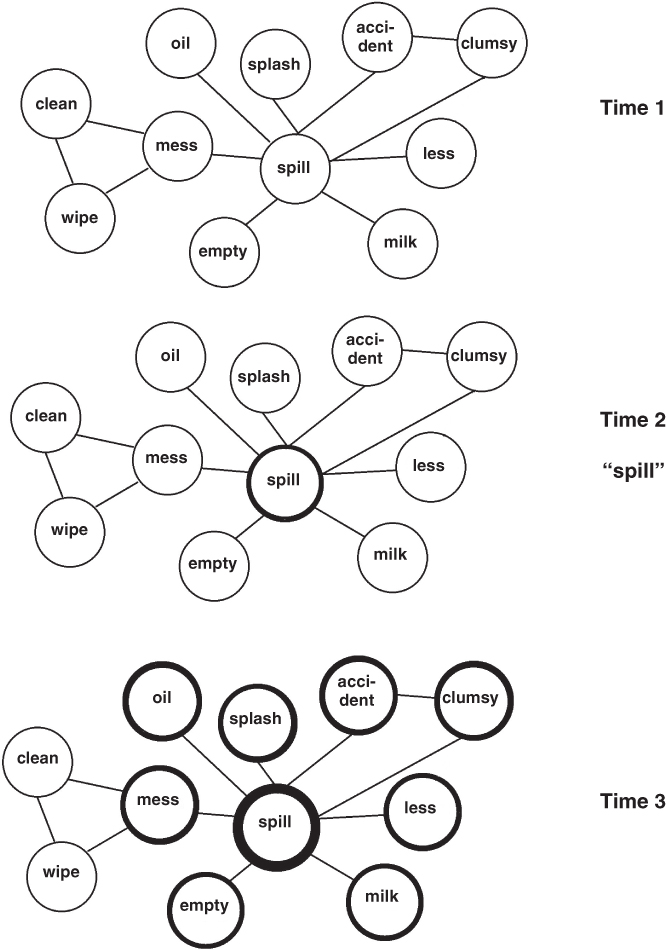

That's easy to implement in the network model. Let's look at an example using the word “spill.” You read the word “spill” which activates that concept in your mind, and that activity is transmitted along the links to all the concepts connected to spill (Figure 4.3).

Figure 4.3. A meaning network for the concept spill. Such a network represents the associations between active and related concepts.

© Daniel Willingham

Time 1 in Figure 4.3 shows concepts in memory, connected. The solid lines show associations between concepts: spill is related to oil, to milk, to mess, and so on. When you read a word, “spill,” the mental concept, spill, becomes active, which I've depicted by the thicker line around the spill concept at Time 2. Time 3 shows the state of the network perhaps two tenths of a second later. Spill has gained still more activity, and that activity is spreading through the network—related concepts are more active.4
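The three snapshots in Figure 4.3 can be mimicked with a few lines of code. What follows is only a toy sketch, not a claim about how the mind computes: the activity values and the share of activity a link passes along (`fraction`) are assumptions chosen for illustration, and only the links to oil, milk, and mess come from the figure description.

```python
# Toy spreading-activation sketch. All numbers are illustrative assumptions.

def spread(activation, links, source, fraction=0.3):
    """Return a new activation map in which `source` has passed a
    fraction of its activity to every concept it is linked to."""
    updated = dict(activation)
    for neighbor in links.get(source, []):
        updated[neighbor] = updated.get(neighbor, 0.0) + fraction * activation[source]
    return updated

# Associations named in the text: spill is related to oil, milk, and mess.
links = {"spill": ["oil", "milk", "mess"]}

# Time 1: concepts sit in memory, connected but inactive.
time1 = {"spill": 0.0, "oil": 0.0, "milk": 0.0, "mess": 0.0}

# Time 2: reading "spill" starts to activate the concept.
time2 = dict(time1, spill=0.6)

# Time 3: spill has gained more activity and passes some of it along.
time3 = spread(dict(time2, spill=1.0), links, "spill")

for concept, level in time3.items():
    print(f"{concept}: {level:.1f}")
```

In this sketch the related concepts end up partly active while spill itself is fully active, which is the pattern the figure depicts.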

The relationship between node activity and awareness is critical to how this model of meaning works. Lots of activity is associated with awareness—if a node is very active, you're thinking about that concept; the light bulb is very bright. But links don't convey all the activity of a node, only some of it. And that partial activation is reflected in the activity of the connected node. It's partially active—the light bulb is dimly lit. That in turn means that you're not thinking about the concept, that it's out of awareness. So when you read “spill,” all the concepts linked to spill are somewhat active, but not enough that you're aware of them. That's the crucial idea—that just reading the word “spill” makes all the ideas associated with spill active, but not so active that all these varied ideas come crashing into consciousness. They are just a little more ready for cognitive action—it's easier to think about the concept or read the word representing it.

Here's a classic experiment showing that claim is true. I sit you before a computer screen and I show you two buttons. Then I give you the following instructions:

On each trial you'll see two words, one above the other. Sometimes one or both won't be a real word, it will be a made‐up word, like “splone.” Your job is simple. If both words are real words, press the “yes” button on your left. If either is a made‐up word, press the “no” button on your right.

What I don't tell you is that when both words are real, sometimes they are related, e.g., “birthday” and “party” but sometimes they are not, e.g., “nurse” and “shell.” As shown in the graph, people are faster to press the “yes” button when the words are related (Figure 4.4).5

Figure 4.4. Words activate related words. This graph shows the time it took people to verify that each of two letter strings formed a word. Verification time was faster when the words were related, compared to when they were not.

© Anne Carlyle Lindsay based on data from Meyer & Schvaneveldt (1971)

So when you read the first word (e.g., “bread”) on the screen, the concept bread becomes active and related concepts—flour, bake, oven, butter, jam—become somewhat active. When you read the second word (“butter”), the concept (butter) is already somewhat active, and so the word is easier to read. Why is it easier to read? Remember that the links between a word's meaning, sound, and spelling are tight. So when the meaning becomes marginally active, so too does the sound and spelling of that word. Note that this experiment shows that the process by which associated words become active during reading is very rapid—it happens between the time that you've read the first word and when you start to read the second word.

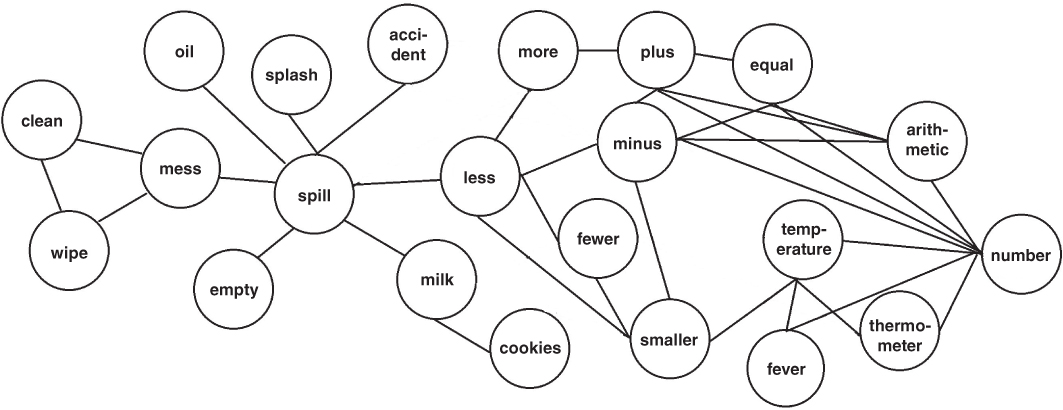

Okay, so when you read a word—say, “doctor”—all the word meanings associated with doctor (e.g., nurse, hospital, illness) become somewhat active, somewhat more ready for cognitive use. Now let's consider what that implies for understanding the meaning of what you read. You read “Trisha spilled her coffee” and all the concepts related to spill get this half‐activation we've been talking about (Figure 4.5). Then you read “Dan jumped up to get her more.”

Figure 4.5. Expanded network of word meanings for spill.

© Daniel Willingham

When you read the word “more” in the second sentence, the concept more will become active, and you'll be aware of it. Related concepts will gain a little bit of activity, but not enough that you're aware of them. But note that the concept less was already somewhat active because you read “spill,” and less is associated with spill. Now the activation of the concept more will also activate the concept less, and that is enough to push the activation of less above some threshold. It enters awareness. So you're thinking of spill and more (because you read those words) and you're also thinking of less, even though you didn't read it. The other associations of spill—that a spill makes a mess, that clumsy people spill things—had the potential to be important, but nothing else in the context made those features rise to awareness. In addition, once one meaning rises to the surface, others are suppressed; in skilled readers, that process takes less than a second.6
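The way two partial activations add up to cross a threshold can be made concrete with a small sketch. The threshold, the share of activity passed along each link, and the exact link list are assumptions made for illustration. The text says only that less is associated with both spill and more, and that mess and clumsiness are associated with spill.

```python
# Toy sketch of converging activation. THRESHOLD and FRACTION are assumed values.

THRESHOLD = 0.5  # assumed level above which a concept enters awareness
FRACTION = 0.3   # assumed share of activity a link passes along

links = {
    "spill": ["less", "mess", "clumsy"],
    "more": ["less"],
}

def read_words(words, links, fraction=FRACTION):
    """Fully activate each word that is read and pass partial
    activation to the concepts linked to it."""
    activation = {}
    for word in words:
        activation[word] = 1.0  # a word you read is fully active
        for neighbor in links.get(word, []):
            boosted = activation.get(neighbor, 0.0) + fraction
            activation[neighbor] = min(1.0, boosted)
    return activation

def in_awareness(activation, threshold=THRESHOLD):
    """List the concepts whose activity exceeds the awareness threshold."""
    return sorted(c for c, level in activation.items() if level > threshold)

after_first = in_awareness(read_words(["spill"], links))
after_both = in_awareness(read_words(["spill", "more"], links))
print(after_first)
print(after_both)
```

After “spill” alone, only spill is above threshold. After “spill” and “more,” the concept less joins them because it received activation from both sources, while mess and clumsy stay below threshold.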

You can see how this model would allow for ideas to enter awareness even if the writer doesn't refer to them explicitly. If you read a bunch of words, each of which is connected to concept X, then all of those words pass activation to X, and X will pass the activation threshold of awareness. Remember the passage from the Introduction, where the dying “Abbadabba” Berman says, “Right . . . Three three. Left twice. Two seven. Right twice. Three three.” I asked how a reader knows that he's talking about a safe when the safe goes unmentioned. Now we have a model of memory that explains how that might work. Your knowledge of safes includes the ideas that the combinations use numbers between zero and 99, that the combination usually uses three numbers, that the dial is spun to the right, then left, then right in that order, and so on. Hearing those features listed activates your memory representation for safe.

Naturally, the network of concepts in your mind would be enormous—I've just shown a tiny fraction of the network for illustrative purposes. The breadth of the network and the particular connections in it are a product of an individual's experiences. In the United States, most people associate the concept bird with the concepts builds a nest, eats bugs, and sings melodious songs. Someone who grows up in an environment where most birds have different qualities will have a different network in memory. Someone who grows up in circumstances with less exposure to words, and to knowledge about the world, will have a network with fewer nodes, and with fewer connections among them.

We can also imagine that we'd need to add some features to the stripped‐down architecture I've described. For example, we'd want links that don't simply pass activation, but that pass varying proportions of activation, to reflect the fact that some concepts are more closely linked than others. Salt should perhaps be linked to steak but surely the link from salt to pepper should be stronger. Come to that, we should enable links to be inhibitory too, so that if one node is active, it squelches the other. For example, if we added pour to the network, that concept and spill should mutually inhibit one another, because if something is poured, it isn't spilled, and vice versa.
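Both refinements, links of varying strength and links that inhibit, amount to putting a signed weight on each link. The sketch below is one hypothetical way to write that down; the particular weights are invented for illustration, and clamping activity to the range 0 to 1 is an assumption of the sketch.

```python
# Toy sketch of weighted and inhibitory links. All weights are assumed values.

# (source, target): weight. Positive weights excite, negative weights inhibit.
WEIGHTS = {
    ("salt", "pepper"): 0.6,   # closely linked concepts
    ("salt", "steak"): 0.2,    # linked, but more weakly
    ("spill", "pour"): -0.5,   # mutually inhibitory pair
    ("pour", "spill"): -0.5,
}

def step(activation, weights):
    """One update: each node sends weight * its own activity to each target.
    The result is clamped to the range 0..1."""
    incoming = {}
    for (source, target), weight in weights.items():
        sent = weight * activation.get(source, 0.0)
        incoming[target] = incoming.get(target, 0.0) + sent
    nodes = set(activation) | set(incoming)
    return {
        node: max(0.0, min(1.0, activation.get(node, 0.0) + incoming.get(node, 0.0)))
        for node in nodes
    }

after_salt = step({"salt": 1.0}, WEIGHTS)
after_pour = step({"pour": 1.0, "spill": 0.3}, WEIGHTS)
print(after_salt["pepper"], after_salt["steak"])
print(after_pour["spill"])
```

With these assumed weights, an active salt node leaves pepper more active than steak, and an active pour node pushes a partly active spill node back down to zero.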

We could list other niceties, but we've come far enough for the basic point to be clear. Word meanings are exquisitely sensitive to context; any concept connected to the word can influence how you interpret the word. We've made some progress in thinking through how words get their meaning from other words . . . but that points to another problem.

Coping with Missing Meanings

What happens if your network is missing a connection? A child may know that spills make a mess, but likely doesn't know that oil spills are environmental disasters. Or what if the child doesn't know what a “thermometer” is? Obviously, one possibility is that he simply doesn't understand the sentence. If someone remarks, “The Presidential candidate has an odd habit of catachresis,” the rest of the sentence does not help me puzzle out the meaning of the rare word.

Other times the context provides significant help. Researcher Walter Kintsch offered this example: “Connors used Kevlar sails because he expected little wind.”7 Before reading this example, all I knew about Kevlar was that it had something to do with body armor. I didn't know it's a fabric (which I now assume it is), and I sure didn't know that it is used for sails. These facts are easy to infer from the context, and it's easy to infer that such sails have some property that makes them suitable for calm winds although I can't tell what that property is. So what's the problem if I didn't know about Kevlar sails before reading Kintsch's example sentence?

With reading that sentence? No problem. In fact, that's one of the great pleasures of reading. You learn new things! If you had to have all of the background knowledge (and vocabulary) assumed by a writer, then it would be nearly impossible to learn anything new by reading; the writer would have to be psychic, anticipating exactly what you don't know, and explaining it using only concepts you do know. So some reading is a little like problem solving, because it requires you to stop and think.

The real issue is the amount of problem solving you must do, because problem solving is mentally demanding and time consuming. It's not just that you have to think about what “Kevlar” might mean, it's also that doing so will interrupt the flow of the text in which you find it. You may lose the thread of the argument or story. So when there's too much problem solving to be done, reading is slower, harder, and less fun.8

The solution would seem obvious, especially in a school context. If a teacher assigns a text and she suspects her students lack some necessary background knowledge, she can provide it: definitions of words, historical context, functions of objects. It's certainly better to provide this information than not, but if you think about the inference mechanism described above, you can see that it's not a substitute; the relevant information is not going to be automatically activated because it's not well learned. Students will have to stop and think, “where did she say Rabat is, again?” And of course there is a limit to how much new information a student can absorb right before reading a text. Most of us have had the experience of reading a chapter that provides so many definitions of technical terms (or abbreviates them with acronyms) that by the middle we can no longer remember what DLPFC stands for.

Just how much unknown stuff can a text have in it before a reader will declare “mental overload!” and call it quits? This quantity surely varies depending on the reader's attitude toward reading and motivation to understand that particular text. Still, studies have measured readers' tolerance of unfamiliar vocabulary, and have estimated that readers need to know about 98% of the words for comfortable comprehension.9–11 That may sound high, but bear in mind that the paragraph you're reading now has about 75 unique words. So 98% familiarity means that this and every paragraph like it would have one or two words that are unfamiliar to you.
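The arithmetic behind “one or two words” is easy to check. The sketch below uses only the two figures given in the text, 75 unique words and 98% familiarity; the function name is made up for the example.

```python
# Worked arithmetic: how many unfamiliar words does 98% coverage leave?

def unfamiliar_words(unique_words, known_share):
    """Expected number of unique words a reader does not know."""
    return unique_words * (1 - known_share)

expected = unfamiliar_words(unique_words=75, known_share=0.98)
print(round(expected, 1))  # 1.5
```

Two percent of 75 is 1.5, so a paragraph of this size would contain one or two unknown words on average.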

We have a pretty low tolerance for reading unknown words. And writers use a lot of words, many more than speakers do. If I'm talking about my cheap friend, I might use the word cheap three times within a few sentences. But writers like to mix things up, so my friend will be “frugal,” “stingy,” “thrifty,” and “tight.” Texts that students typically encounter in school have about 85,000 different words.12 Somehow we need to ensure that children have a broad enough vocabulary so that they are not constantly colliding with unknown words.

But What Does a Word Really Mean?

Most of us have had this experience at one time or another. You consult a dictionary to get a word definition, perhaps “condescending.” The dictionary defines it as “patronizing,” which is no help because you don't know the meaning of that word, either. So you look up “patronizing,” and find that it is defined as “condescending.”

That's an example of a circular definition, and it's kind of funny, especially when it happens to someone else. But wait a minute. How much better is the network I introduced in the last section? Words seem defined by their features (watermelon is the red‐on‐the‐inside, juicy, sweet fruit), but how are the features defined? By other words. So doesn't the model amount to a bunch of circular definitions, even if the circles may be bigger than the condescending‐patronizing loop?13

The way out of this problem is to consider the possibility that some representations are grounded. That means that some mental concepts derive meaning not from other mental concepts, but more directly from experience. For example, perhaps the definition of red is not rooted in language. Indeed, if you look up “red” in a dictionary, the definition is pretty unsatisfying. Perhaps the mental definition of red should be rooted in the visual system; when you read the word “red,” its referent is a memory of what it's like to actually see red.

In the last twenty years, much evidence has accumulated that some representations are grounded—they are defined, at least in part, by our senses or by how we move. For example, when you read the word “kick,” the part of your brain that controls leg movements shows activity, even though you're not moving your leg. And the part of your brain that controls mouth movements is active when you read the word “lick,” and that which controls finger movements is active when you read “pick.”14 Part of the mental definition of kick, lick, and pick is what it feels like to execute these movements.

Researchers have also gathered purely behavioral evidence (as opposed to neural evidence) for grounded representations of words. In a classic experiment, subjects were asked to keep their hand on a “home” button. A sentence appeared on a computer screen and they were to push one of two other buttons to indicate whether or not the sentence was sensible. (An example of a nonsensical sentence might be “Boil the air.”) The buttons were arranged vertically, with the home button in the middle so a subject had to move his or her hand away from the body to press one of the buttons, and toward the body to press the other. For half of the subjects, the button that was closer to them signified “yes, this sentence is sensible,” and for the others, the button that was farther away signified “yes.”

Some of the sensible sentences described an action that would require a movement toward or away from the body, and people were slower to verify “this is a sensible sentence” if the movement they had to execute to push the button conflicted with the type of movement described in the sentence. For example, if the sentence was “Andy closed the drawer,” people who had to move their hand toward their body to push the “yes” button responded more slowly than people who had to move their hand away from their body to push the “yes” button. The opposite was true when the sentence described movement toward the body, e.g., “Andy opened the drawer.”15 Part of how you represent a concept like opening a drawer includes the movement that would accomplish it.

No one thinks that all mental representations are reducible to sensations or movement plans. We can think about quite abstract concepts, e.g., democracy, or genius. Exactly how these are represented in the mind has not been worked out. Researchers have proposed different approaches, including but not limited to the obvious idea that abstract concepts might be defined by other concepts, but all must ultimately be built out of grounded concepts.16

Whatever their ultimate representation, a rich vocabulary matters, both in its breadth and its depth. Let's consider why.

Why Breadth and Depth Matter

Breadth is the easy part of vocabulary to understand. I read “bassoon” and I either know the word or I don't. There's lots of evidence that children with broader vocabularies comprehend more of what they read. Of course, that's just a correlation. It may not be true that vocabulary helps reading comprehension, but rather that people with some kind of smarts or experience have big vocabularies and also happen to be good at comprehending what they read. If we want to be confident that a broad vocabulary directly contributes to reading comprehension, we have to rule out other possible factors. So researchers apply statistical controls to remove the effect of factors like IQ, or decoding ability. When they do so, they still observe a correlation between vocabulary breadth and reading comprehension.17 Even more persuasive, there is good evidence that teaching children new vocabulary boosts reading comprehension.18 Again, this finding is pretty intuitive.

Vocabulary depth is a little harder to wrap one's mind around, but we've already encountered a couple of different ways that your knowledge of a word can be relatively shallow or relatively deep. One type of depth might be the density of connections between a concept and other concepts. For example, you may know that a platypus is an animal, and that it has a bill. If that's all you know, do you know the word “platypus”? Well, sure, but your knowledge of the word is pretty shallow. Deeper knowledge of the word would have it connected to concepts like Australia, and mammal, and egg‐laying, and venomous and New South Wales (the Australian state that features the platypus on its emblem), and so on.

Why would this sort of depth matter? Recall the example sentences that highlighted different features of “spill.” The concept platypus also has different features that can be highlighted by different contexts. So if a person who knows only that a platypus is an animal and has a bill were to read “Don't pick up a platypus, but if you must, watch out for its rear legs,” he would in one sense understand. But he wouldn't have as rich an understanding as someone who knows about the venom, and who knows that platypuses deliver the venom via spurs on their hind limbs.

Research shows that depth of vocabulary matters to reading comprehension. Children identified as having difficulty in reading comprehension (but who can decode well) do not have the depth of word knowledge that typical readers do. When asked to provide a word definition, they provide fewer attributes. When asked to produce examples of categories (“name as many flowers as you can”) they produce fewer. They have a harder time describing the meaning of figurative language, like the expression “a pat on the back.” They are slower and more error‐prone in judging if two words are synonyms, although they have no problem making a rhyming judgment.19,20

A second aspect of depth concerns the speed with which you can access word information. It would be helpful if the activation spreads not just to the right words, but does so quickly. Likewise, you'd like the connections between the sound of a word (phonology), the spelling of that word (orthography), and the word's meaning to be strong, and allow for quick access. Research supports this supposition. Children's knowledge of words—measured as their accuracy in judging whether word pairs are synonyms, and their accuracy in judging category membership (“rain is a type of weather”)—predicts their reading comprehension. But the speed with which they make these judgments predicts reading comprehension over and above the accuracy of the judgments. So yes, the richness of word representations helps reading, but so too does the speed with which you can access them. And it's not just that speed of the connections matters because reading tests prize speed. Accessing word meaning quickly helps the reader make meaning because there is other inferential work that must be done to fully understand a text. If the word‐level information can be accessed rapidly, that other work can proceed apace and is more likely to succeed.21

The Process of Learning New Words

Learning new words doesn't seem that complicated. For example, within the last year I learned about ropa vieja, a Cuban dish of shredded beef. I was in a restaurant, saw someone eating it at a neighboring table, and asked the waiter about it. He told me, “That is ropa vieja.” Easy. It seems like the important factors for word learning would be whether I'm exposed to new words, the frequency of that exposure, and maybe the likelihood that I pay attention to new words.

Because we're talking about adults, we are skirting some terribly complex problems that apply to vocabulary acquisition in infants. When someone points out ropa vieja and says, “That is ropa vieja,” I know what “that” and “is” mean. I know that “ropa vieja” refers to what's on the plate, and not the plate itself, or the act of pointing at food, or what's on the plate when we talk about it in public, although a different word would be used in someone's home. Infants, presumably, know none of this.

The problem is made easier because the infant mind comes prewired with certain assumptions.22 For example, words are assumed to refer to objects (rabbit) not object parts (the rabbit's hind leg), and words are assumed to have consistent referents—it's not called rabbit on Monday, Wednesday, and Friday, and something else on other days. Those assumptions reduce the possible referents of a word, but don't finish the job. When a parent points to a rabbit running across the lawn and exclaims, “A rabbit!”, this might be referring to brownness, cuteness, furriness, the lawn, a fast‐moving object, any animal, any unusual animal, and so on.

Even for adults, words can be more ambiguous than you think, especially when the referent is not present, as a plate of shredded beef is. For example, I recently heard someone referred to as a bastion of honor and it made me realize that my knowledge of the word bastion was shallow. I couldn't define it outside of the formulation bastion of X. I recognized this must be a metaphoric use of “bastion,” but I didn't know the literal meaning of the word. So I looked it up and found that it was defined as “a stronghold.” Then I realized I actually had the metaphoric meaning wrong. Because a bastion is a stronghold, bastion of honor implies someone who would never yield on matters of honor, whereas I had thought it meant shining example of honor.

Why did I have it wrong? Because I hadn't seen “bastion of X” in enough contexts to allow me to make the more fine‐grained distinction between a stronghold of a quality and someone who exemplifies a quality. In other words, I'm not that different from children who try to pretty up their essays with fancy vocabulary words; I see a word in one context and think I understand it, but the meaning I've attributed is not quite right, even if that meaning is consistent with the context I saw it in. I may read “We enjoyed our ice water in the golden crepuscule of the day” and think “crepuscule” means hottest part or nicest part, rather than twilight. The mistake would be resolved by seeing the word in more contexts, for example, “a nasty, winter crepuscule.” We would guess from this account that good readers would be better at figuring out unknown words from context: they are more likely to understand everything else they are reading, and so can use that context successfully to work out an unknown word. Research supports that supposition.23,24

This process of seeing words multiple times in different contexts to figure out what they mean is what researchers call statistical learning. It is easiest to appreciate in the more extreme version that infants must do. If the child hears the word rabbit she can't know in any one instance if it refers to the rabbit, or brownness, or things that run fast. The child keeps a running log (unconscious, naturally) of what was happening when different words were used and eventually is able to sort out that rabbits were always present when someone said rabbit, brown things were always present when someone said brown, and so on. That sounds like an enormous bookkeeping job, and it is. Thus, word learning is a gradual process. Some researchers have even suggested that the explosive growth in vocabulary most children show around the age of two has actually been building for much longer: it's only after months of exposure to instances of words that enough information about them has gradually accumulated and begins to bear fruit.

Here's an experiment showing that learning works in this gradual way, even for adults. On each trial subjects see a couple of unfamiliar objects, like odd kitchen gadgets that most people wouldn't be able to name (e.g., a nutmeg grater, a strawberry huller). The experimenter says, “One of these is a blicket and the other is a timplomper.” Because there are two objects and two labels, the subject can't know which goes with which. The experimenter keeps showing objects and the labels don't change, but the pairings do; that is, you later see the strawberry huller and a garlic peeler, and the experimenter says, “One of these is a blicket and the other is a wug.”25 The experimenter is offering adults the same chance to learn that children have; the words are ambiguous each time you hear them, but if you can keep track of what you hear and what you see at the time you hear it, you can sort out which word goes with which object. Adults are able to learn the labels in this small‐scale simulation of the problem that infants face.
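For readers who like to see the bookkeeping spelled out, here is a minimal sketch in Python of how keeping track of what you hear and what you see could sort out the labels. It is an illustration only, not the procedure or model used in the study: it assumes the learner does nothing more than count how often each label is heard while each object is in view, the third trial is invented to round out the example, and the function names `count_cooccurrences` and `best_guess` are hypothetical.

```python
from collections import Counter, defaultdict


def count_cooccurrences(trials):
    """Tally how often each label is heard while each object is in view."""
    counts = defaultdict(Counter)
    for objects, labels in trials:
        for label in labels:
            for obj in objects:
                counts[label][obj] += 1
    return counts


def best_guess(counts):
    """For each label, pick the object it has co-occurred with most often."""
    return {label: seen.most_common(1)[0][0] for label, seen in counts.items()}


# Hypothetical trials: (objects in view, labels spoken).
# Any single trial is ambiguous: two objects, two labels, no pairing given.
trials = [
    (["nutmeg grater", "strawberry huller"], ["blicket", "timplomper"]),
    (["strawberry huller", "garlic peeler"], ["blicket", "wug"]),
    (["nutmeg grater", "garlic peeler"], ["timplomper", "wug"]),  # invented trial
]

guesses = best_guess(count_cooccurrences(trials))
for label, obj in guesses.items():
    print(f"{label} -> {obj}")
```

With these three made‐up trials, the tallies single out the strawberry huller for blicket, the nutmeg grater for timplomper, and the garlic peeler for wug, even though no single trial settles any pairing.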

Even more interesting, we know that they learn them gradually, not all at once. In another experiment, researchers gave adults a similar sort of word‐learning task, and then tested how much subjects had learned. In this test, the experimenter said a label and then asked the subject to pick the correct object from among all the gadgets they had seen during training. The researchers noted which ones each subject got right and wrong. Then the researchers constructed a new training regimen. Half of the object‐label pairs were new, and half were old, meaning they were ones that subjects had seen in the previous training, but had gotten wrong when tested. The idea is that if you missed an old item and if learning is all‐or‐none, well, that word is just not in your memory. In the second phase of training, you're asked to learn it, but there should be no advantage (compared to the new object‐label pairs) because you didn't learn it in the first phase. But that's not what happened. People were faster to learn the old pairs than the new ones, consistent with the idea that learning is gradual. The first phase of the experiment yielded knowledge of the new word that was unreliable and incomplete, but it did yield something.26
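The logic of that comparison can be laid out as a toy calculation. The sketch below is not the analysis from the study; it assumes, purely for illustration, that each exposure adds a fixed amount to the strength of an object‐label pairing and that a pairing is answered correctly once its strength reaches a threshold. The function `exposures_to_learn` and all of the numbers are hypothetical.

```python
import math


def exposures_to_learn(starting_strength, threshold=1.0, gain_per_exposure=0.25):
    """Number of further exposures needed before the pairing reaches threshold."""
    remaining = max(0.0, threshold - starting_strength)
    return math.ceil(remaining / gain_per_exposure)


# A brand-new pair starts with nothing in memory.
new_pair = exposures_to_learn(starting_strength=0.0)

# An old pair that was missed on the test, under two accounts of learning:
# all-or-none: a miss means nothing was stored, so it starts from zero too.
old_pair_all_or_none = exposures_to_learn(starting_strength=0.0)
# gradual: a miss can still leave partial knowledge below the threshold.
old_pair_gradual = exposures_to_learn(starting_strength=0.5)

print(new_pair, old_pair_all_or_none, old_pair_gradual)
```

Under the all‐or‐none assumption the old pair needs exactly as many exposures as the new one; only the gradual assumption gives the old pair a head start, which is the pattern the subjects showed.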

Strong vocabulary is not all that's needed to be a strong reader. In fact, it's not all that's needed to comprehend what you read. In the next chapter, we'll consider the processes behind understanding text.

References

- 1. “Spill.” Merriam‐Webster. Retrieved from www.merriam‐webster.com/dictionary/spill/.

- 2. “Heavy.” Merriam‐Webster. Retrieved from www.merriam‐webster.com/dictionary/heavy/.

- 3. Collins, A. M., & Loftus, E. F. (1975). A spreading‐activation theory of semantic processing. Psychological Review, 82(6), 407–428. http://doi.org/10.1016/B978‐1‐4832‐1446‐7.50015‐7.

- 4. Hare, M., Jones, M., Thomson, C., Kelly, S., & McRae, K. (2009). Activating event knowledge. Cognition, 111(2), 151–167. http://doi.org/10.1016/j.cognition.2009.01.009.

- 5. Meyer, D. E., & Schvaneveldt, R. W. (1971). Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology, 90(2), 227–234.

- 6. Gernsbacher, M. A. & Faust, M. (1995). Skilled suppression. In F. N. Dempster & C. N. Brainerd (Eds.), Interference and inhibition in cognition (pp. 295–327). San Diego: Academic Press.

- 7. Kintsch, W. (2012). Psychological models of reading comprehension and their implications for assessment. In J. Sabatini, E. Albro, & T. O'Reilly (Eds.), Measuring up: Advances in how we assess reading ability (pp. 21–37). Plymouth, UK: Rowman & Littlefield.

- 8. Foertsch, J., & Gernsbacher, M. A. (1994). In search of complete comprehension: Getting “minimalists” to work. Discourse Processes, 18(3), 271–296. http://doi.org/10.1080/01638539409544896.

- 9. Carver, R. (1994). Percentage of unknown vocabulary words in text as a function of the relative difficulty of the text: Implications for instruction. Journal of Literacy Research, 26(4), 413–437. http://doi.org/10.1080/10862969409547861.

- 10. Schmitt, N., Jiang, X., & Grabe, W. (2011). The percentage of words known in a text and reading comprehension. The Modern Language Journal, 95(1), 26–43. http://doi.org/10.1111/j.1540‐4781.2011.01146.x.

- 11. Hsueh‐Chao, M. H., & Nation, P. (2000). Unknown vocabulary density and reading comprehension. Reading in a Foreign Language, 13(1), 403–430.

- 12. Nagy, W. E., & Anderson, R. C. (1984). How many words are there in printed school English? Reading Research Quarterly, 19, 304–330.

- 13. Harnad, S. (1990). The symbol grounding problem. Physica D: Nonlinear Phenomena, 42(1‐3), 335–346. http://doi.org/10.1016/0167‐2789(90)90087‐6.

- 14. Hauk, O., Johnsrude, I., & Pulvermüller, F. (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron, 41(2), 301–307.

- 15. Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558–565. http://doi.org/10.1109/TAMD.2011.2140890.

- 16. Barsalou, L. W. (2016). On staying grounded and avoiding Quixotic dead ends. Psychonomic Bulletin & Review, 23(4), 1122–1142. http://doi.org/10.3758/s13423‐016‐1028‐3.

- 17. Ouellette, G. P. (2006). What's meaning got to do with it: The role of vocabulary in word reading and reading comprehension. Journal of Educational Psychology, 98(3), 554–566. http://doi.org/10.1037/0022‐0663.98.3.554.

- 18. Elleman, A. M., Lindo, E. J., Morphy, P., & Compton, D. L. (2009). The impact of vocabulary instruction on passage‐level comprehension of school‐age children: A meta‐analysis. Journal of Research on Educational Effectiveness, 2(1), 1–44. http://doi.org/10.1080/19345740802539200.

- 19. Nation, K. (2008). EPS Prize Lecture. Learning to read words. Quarterly Journal of Experimental Psychology, 61(8), 1121–1133. http://doi.org/10.1080/17470210802034603.

- 20. Nation, K., & Snowling, M. J. (1998). Semantic processing and the development of word‐recognition skills: Evidence from children with reading comprehension difficulties. Journal of Memory and Language, 39(1), 85–101. http://doi.org/10.1006/jmla.1998.2564.

- 21. Oakhill, J., Cain, K., McCarthy, D., & Nightingale, Z. (2013). Making the link between vocabulary knowledge and comprehension skill. In M. A. Britt, S. R. Goldman, & J.‐F. Rouet (Eds.), Reading: From words to multiple texts (pp. 101–114). New York: Routledge.

- 22. Astuti, R., Solomon, G. E. A., & Carey, S. (2004). Constraints on conceptual development: I. Introduction. Monographs of the Society for Research in Child Development, 69(3), 1–24. http://doi.org/10.1111/j.0037‐976X.2004.00297.x.

- 23. Cain, K., Oakhill, J. V., & Elbro, C. (2003). The ability to learn new word meanings from context by school‐age children with and without language comprehension difficulties. Journal of Child Language, 30(3), 681–694. http://doi.org/10.1017/S0305000903005713.

- 24. Cain, K., Oakhill, J., & Lemmon, K. (2004). Individual differences in the inference of word meanings from context: The influence of reading comprehension, vocabulary knowledge, and memory capacity. Journal of Educational Psychology, 96(4), 671–681. http://doi.org/10.1037/0022‐0663.96.4.671.

- 25. Yu, C., & Smith, L. (2007). Rapid word learning under uncertainty via cross‐situational statistics. Psychological Science, 18(5), 414–420. http://doi.org/10.1111/j.1467‐9280.2007.01915.x.

- 26. Yurovsky, D., Fricker, D. C., Yu, C., & Smith, L. B. (2014). The role of partial knowledge in statistical word learning. Psychonomic Bulletin & Review, 21(1), 1–22. http://doi.org/10.3758/s13423‐013‐0443‐y.

- 27. Miller, G. A., & Gildea, P. M. (1987). How children learn words. Scientific American, 257(3), 94–99.

- 28. Beck, I. L., Perfetti, C. A., & McKeown, M. G. (1982). Effects of long‐term vocabulary instruction on lexical access and reading comprehension. Journal of Educational Psychology, 74(4), 506–521. http://doi.org/10.1037/0022‐0663.74.4.506.

- 29. McKeown, M., Beck, I., Omanson, R., & Perfetti, C. (1983). The effects of long‐term vocabulary instruction on reading comprehension: A replication. Journal of Literacy Research, 15(1), 3–18. http://doi.org/10.1080/10862968309547474.

- 30. Fukkink, R. G., & de Glopper, K. (1998). Effects of instruction in deriving word meaning from context: A meta‐analysis. Review of Educational Research, 68(4), 450–469. http://doi.org/10.3102/00346543068004450.

- 31. Bowers, P. N., Kirby, J. R., & Deacon, S. H. (2010). The effects of morphological instruction on literacy skills: A systematic review of the literature. Review of Educational Research, 80(2), 144–179. http://doi.org/10.3102/0034654309359353.

- 32. Goodwin, A. P., & Ahn, S. (2010). A meta‐analysis of morphological interventions: Effects on literacy achievement of children with literacy difficulties. Annals of Dyslexia, 60(2), 183–208. http://doi.org/10.1007/s11881‐010‐0041‐x.

- 33. Goulden, R., Nation, P., & Read, J. (1990). How large can a receptive vocabulary be? Applied Linguistics, 11(4), 341–363. http://doi.org/10.1093/applin/11.4.341.

- 34. Nagy, W. E., & Herman, P. A. (1985). Incidental vs. instructional approaches to increasing reading vocabulary. Educational Perspectives, 23(1), 16–21.

- 35. Hayes, D. P., & Ahrens, M. G. (1988). Vocabulary simplification for children: A special case of “motherese”? Journal of Child Language, 15(2), 395. http://doi.org/10.1017/S0305000900012411.

- 36. Nation, I. S. P. (2006). How large a vocabulary is needed for reading and listening? The Canadian Modern Language Review / La Revue Canadienne Des Langues Vivantes, 63(1), 59–81. http://doi.org/10.1353/cml.2006.0049.

- 37. Schwanenflugel, P. J. (2010). Effects of a conversation intervention on the expressive vocabulary development of prekindergarten children. Language, Speech, and Hearing Services in Schools, 41(3), 303–313. http://doi.org/10.1044/0161‐1461(2009/08‐0100).