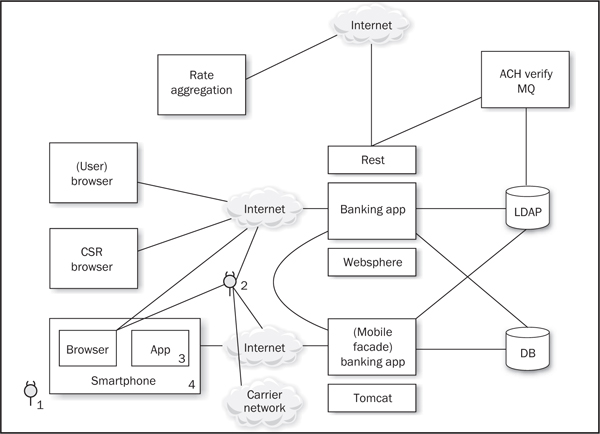

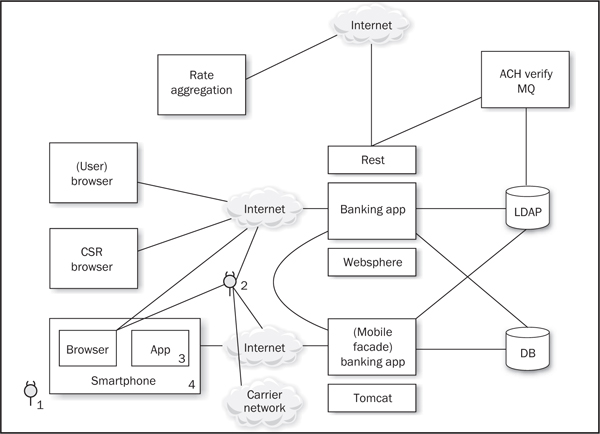

Figure 8-1 An example Web Application Threat Model that has added a mobile client

So far in this book, we’ve talked about mobile security from many different perspectives: mobile network operator, device manufacturer, corporate IT, and end-user. This chapter takes the perspective of another very important player in the mobile ecosystem: the application developer.

Mobile application developers are perhaps the most important stakeholder in the mobile experience. After all, they control the interface through which end-users interact with the mobile device and network; it’s the apps, man.

From simple single-player games to complex, multifunctional social networking apps, application developers channel the security of the end-user experience into almost all aspects of mobile. The security of the applications they create is constrained mainly by the built-in security features of the mobile platform and by the possibility of device theft. Mobile platforms now include built-in cryptographic controls that are practical to use because power and battery-life limitations are much less of a concern than they once were. This chapter explores the different dimensions of mobile developer security, including:

• Mobile app Threat Modeling

• Secure mobile development guidance

Our overall goal with this chapter is to educate mobile app developers on the best choices to make when designing secure mobile applications. Secondarily, application security professionals and end-users themselves may benefit from the discussions in this chapter as they will better understand the decisions that developers make. Read on, and be more confident in the next download you make from the app store!

Threat Modeling is a pencil-and-paper exercise of identifying security risks. Threat Modeling helps developers identify the most critical risks to an application, which allows the developer to focus the investment of development effort on features and/or controls to mitigate those risks. Security professionals view Threat Modeling as instrumental to secure software development because without it, security becomes endless and aimless bug squashing without a risk-based understanding of priority and impact.

A number of Threat Modeling methodologies are in use today. They share common approaches and features but differ somewhat in terminology. We’ve listed links to information about some of the more popular ones here:

• Microsoft Threat Modeling The first to receive book-length treatment, in 2004, and still one of the most popular approaches (msdn.microsoft.com/en-us/library/ff648644.aspx)

• Trike Aligned more with traditional risk management philosophy (octotrike.org)

• OCTAVE Operationally Critical Threat, Asset, and Vulnerability Evaluation (cert.org/octave/)

• Cigital Threat Modeling Cigital’s Threat Modeling anchors the analysis around software architecture (cigital.com/justice-league-blog/category/threat-modeling-2/)

• P.A.S.T.A Process for Attack Simulation and Threat Analysis (owasp.org/images/a/aa/AppSecEU2012_PASTA.pdf)

Nearly all of these methodologies follow a similar approach: diagram the application, understand where information assets flow, derive and document risks to the assets and security controls, and then rank the risks based on probability and impact. The highest-scored risks are then scheduled for remediation and verification testing during the remainder of the development process. Given that Threat Modeling is such a critical component of application security, what does it tell us about the security challenges of a mobile application?

Let’s take an example we see often in our consulting work: adding a mobile client onto an existing web application. This scenario is very common for organizations with an existing web presence that are seeking to capitalize on the mobile phenomenon. It provides lessons that can be applied to designing mobile Threat Models for any mobile application. Figure 8-1 shows our example application, which supports end users and Customer Support Representatives (CSRs) through a browser interface, and has other connections to RESTful services in its middle tier. The new portions of the system, being constructed to support mobile, are depicted in the lower left and middle of the diagram.


The new mobile functionality aims to provide access for the same users. The mobile application supports only a subset of the web application’s functions; for example, rate comparison and cross-account transfer are omitted. However, the mobile application delivers the functions it does provide in a crisp, simple, and responsive format.

The main question for this “adapted to mobile” app is: how do existing threats change when a mobile application is added? When we model threats, we start by describing a threat’s capabilities, level of access, and skills. Let’s apply a few Threat Modeling techniques to identify new threats to consider. Building on the risk model we started in Chapter 1, we’ll

• Enumerate the threats

• Outline what assets mobile devices possess

• Discuss how the mobile tech stacks create opportunities for threats

Script kiddies and hackers who endanger our web apps are also threats to the mobile app. These threats can still observe or interrupt device-to-service interactions. In addition to the tried-and-true web application hacking tools, network-based threats have additional resources with which to attack mobile device users. First, mobile users are just that: mobile. Many leave Bluetooth and WiFi radios enabled as they go about their day. Thus, a user’s device may leap at the chance to connect to a malicious base station as he or she heads to work, walks through a mall, or stops for a coffee. Threats can also attack mobile devices that have 802.11 and Bluetooth disabled, for example through the cellular radio. Widely available automated hacker tools decrease the difficulty of these attacks.

Attackers can inject code into a mobile browser, creating a man-in-the-browser (MiTB) threat, just as they can with web apps. This threat is joined by the malicious app threat, which has direct access to the underlying OS and inter-process communication. Example after example shows the relative ease with which both malware and Trojan apps make it into public or corporate app stores for download by the unsuspecting.

Highly skilled security researchers and organized crime professionals can build on the interposition techniques that less-capable script kiddies use by taking their attack to a mobile device’s network- and radio-based attack surfaces. Carriers now sell consumer-grade base stations (femtocells). This is particularly scary because mobile carriers’ security models rely on keeping network integrity intact, and application developers assume network integrity too. These highly skilled threats can connect to a user’s femtocell and observe all of the victim’s traffic (as you saw in more detail in Chapter 2), including the contents of any app traffic that is not protected by properly validated SSL/TLS. Yet, despite the prevalence of these femtocells, we rarely see the application-level security controls necessary to thwart this class of threat. That’s largely because the attack is rarely seen in the wild: security researchers use these attacks as a ticket to security conferences, not to attack end users. Organized crime is unlikely to use malicious femtocells unless it can (1) remotely exploit a large number of cells in highly populated areas or (2) find a particularly high-value target worth a geographically specific attack.

Up to this point, the threats we’ve covered are the same ones we’ve come to expect with web applications. Mobile applications must address all of the security issues faced by web applications plus those introduced by the mobile device. Mobile devices add three other classes of threat that endanger their security:

• The phone’s user, as he or she may

• Download your app to reverse-engineer or debug it

• Jailbreak the device, subverting controls on which you depend

• Thieves, with access to the device’s UI and physical interfaces (USB and so on)

• Other device “owners,” whose capabilities vary with ownership role

Wait, the device’s owner is a threat to their own device? But this is my personal device. Let’s explore these threats further.

When thinking about users as threats, application developers must consider what risks a user’s jailbroken phone might impose on their application or the mobile services it interacts with. Application developers must also consider how reversing the application’s binary might pose a risk to their app. For instance, does the application binary contain a single symmetric key shared by all users?

When teams add new functionality to a system, or when they start a new development effort entirely, they commonly create user stories or detailed use cases and requirements. The first (and easiest) way to identify new threats to the system is to mine user stories and use cases for their users. Then ask these questions:

• What evil or insidious behaviors could a user engage in?

• What obnoxious or stupid behaviors could a user cause trouble with?

Device users possess the credentials to their device (including any UI, “app store,” or other username/password tuples) and likely have access to carrier credentials or tokens. Their access includes physical access to the device and use of its applications and browsers. This threat can install applications, sync the device, and explore its contents from a computer. Of course, this threat has access to the device’s SDK and simulators, just as any developer does. This threat, depicted in Figure 8-1, is labeled 1.

Unlike one’s home PC or laptop, a mobile device involves other parties that hold an ownership stake in addition to the device’s end user. Other stakeholders are the entities involved in underlying application behavior or transactions, including

• The app store account owner, who may or may not be the current device user

• The application publisher, which provides the user experience and access to mobile services

• The mobile carrier, which could be AT&T, Verizon, Sprint, among others

• The device manufacturer, which could be Samsung, HTC, Google, Apple, and so on

• The app store curator, which could be Apple, Google, Amazon (for Kindle), or another entity (your company, the Department of Defense, and so on)

• The company’s IT department that administers the device

Stakeholders crowd into mobile devices competing for influence and control far more than on traditional operating system platforms. These stakeholders operate applications and underlying software, rely on credentials, and interact with mobile services, sometimes invisibly, all while the mobile device owner uses the device.

Each of these stakeholders possesses different capabilities. Their access to attack surfaces extends beyond the mobile app and network into the device and application lifecycle. For example, mobile carriers and handset manufacturers place applications on the device. They may modify or customize the device’s operating system. They may even place code beneath the OS in firmware. App store curators control a large portion of the application lifecycle: from assurance and acceptance, to packaging and deployment, to update and removal.

Where stakeholder goals differ, the opportunity arises for one “owner” to take an action that another considers a violation of their security or privacy (like collecting and storing personal information). A stakeholder may merely act in its own interest (and with the best intentions for the user) but still be perceived as a threat to others. Although nation states have rattled sabers accusing each other’s manufacturers, carriers, and infrastructure operators of being up to no good, we’ll ignore that element of the threat landscape for now.

The key to predicting and defending against a threat’s unwanted intentions lies in understanding how each views and values the mobile device’s assets.

Each mobile device stakeholder seeks to protect the value of its respective assets. For instance, end-users may value their privacy while the application publisher and carrier want to collect and use personal and usage information. Likewise carriers, app store curators, and application publishers all have different notions of how long device identifiers should live, whether they’re permanent or can be rotated, and whether they should be kept secret. Disagreement about the secrecy of device identifiers frequently created security vulnerabilities in the first years of mobile app development (this issue drove Apple to introduce an application-specific unique identifier API).
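When an app needs an identifier of its own, one option is to avoid permanent, device-wide identifiers altogether and generate a random identifier scoped to the app installation. This follows the same idea as the application-specific identifier API mentioned above. The sketch below is plain Java and rests on several assumptions. The `InstallationId` class is hypothetical. Persisting the value (for example, in app-private storage) is left out.

```java
import java.util.UUID;

/**
 * Hypothetical helper: an app-scoped, rotatable installation identifier.
 * A random (version 4) UUID reveals nothing about the hardware or the user,
 * unlike a permanent device identifier shared by every app on the device.
 */
final class InstallationId {
    private String current;

    /** Reuses a previously persisted ID if one exists; otherwise generates a new one. */
    InstallationId(String persisted) {
        this.current = (persisted == null || persisted.isEmpty())
                ? UUID.randomUUID().toString()
                : persisted;
    }

    String get() {
        return current;
    }

    /** Replaces the identifier, e.g. on sign-out or when the user asks for a reset. */
    String rotate() {
        current = UUID.randomUUID().toString();
        return current;
    }
}
```

Because the value is random and owned by the app, rotating it is cheap. It also cannot be tied back to the hardware serial number or to another app's identifier.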

When evaluating mobile assets (such as identifiers, file-based data stores, credentials, user data, and so forth), carefully consider how other stakeholders may misuse or outright exploit their access to the classes of assets available to them. The typical data classifications (public, sensitive, secret, and highly confidential) won’t be as helpful for mobile data as they are for classifying and protecting server-side data. Instead, label data according to its owner’s intents:

• Offline access Data the app must make available offline. Once labeled as offline, this data can be annotated with the typical data sensitivity categories that govern entitlements. For mobile devices, app designers must decide which controls replace web-based controls for offline access.

• Personal data Data such as contacts, pictures, call data, voicemails, and similar information. Compared to web apps, cell phones provide threats with increased access to personal data because mobile apps often request (and are granted) permission to access this information—by the user! Additionally, mobile operating systems provide easy APIs for accessing this personal data, as compared to web-based applications.

• Sensor-based data Mobile devices are bristling with sensors that bridge the physical and digital worlds, and API access and permissions make their output another class of personal data. This data includes location data (through GPS and tower telemetry) as well as camera and microphone data. Although web browsers may grant access to some of this hardware, they usually do not do so without user interaction or an exploit.

• Identity data Often overlooked, a mobile device contains a wealth of information serving as proxy for its user. App publishers’ reluctance to force users to authenticate using small virtual keyboards with the same frequency as web-based apps often means that a stolen (or compromised) device proxies for its end-users’ identities. Identity data includes

• Persisted credentials

• Bearer tokens (such as in apps supporting OAuth)

• Usernames

• Device-, user-, or application-specific UUIDs

Why is a username so interesting? In a web application, the username would be useless for impersonating a user without the password (or other credentials). However, many web-based systems use mobile devices as the mechanism for “out-of-band” password reset, and a user often has a mobile application for the very same website that uses the device for password reset. This means a threat with access to the device can survey the device for the username (for a bank account, for instance), initiate a password reset from the mobile browser, and complete the workflow using the reset message delivered to that same device.

When Threat Modeling a mobile application, make a list of the assets, classify them as we’ve done here, and then iterate through the stakeholders and brainstorm how each stakeholder might use assets in a manner that would be considered a security or privacy breach by another. The security controls designed using this “360 degree” view of assets and stakeholders are more comprehensive and robust.
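The "iterate through the stakeholders" step is mechanical enough to script, so no stakeholder/asset pair is overlooked. The sketch below is a hypothetical Java worksheet generator and not part of any named methodology. It tags each asset with one or more of the owner-intent labels described above: offline access, personal, sensor-based, and identity. It then emits one brainstorming prompt per stakeholder/asset pair. The asset and stakeholder names are placeholders.

```java
import java.util.ArrayList;
import java.util.EnumSet;
import java.util.List;
import java.util.Set;

/** Owner-intent labels from the classification above. */
enum DataLabel { OFFLINE_ACCESS, PERSONAL, SENSOR_BASED, IDENTITY }

/** A device-side asset tagged with one or more labels. */
final class Asset {
    final String name;
    final Set<DataLabel> labels;

    Asset(String name, DataLabel first, DataLabel... rest) {
        this.name = name;
        this.labels = EnumSet.of(first, rest);
    }
}

final class ThreatWorksheet {
    /** Emits one prompt per (stakeholder, asset) pair: the "360 degree" sweep. */
    static List<String> prompts(List<String> stakeholders, List<Asset> assets) {
        List<String> out = new ArrayList<>();
        for (String stakeholder : stakeholders) {
            for (Asset asset : assets) {
                out.add("How could the " + stakeholder + " use '" + asset.name + "' "
                        + asset.labels + " in a way another stakeholder would consider a breach?");
            }
        }
        return out;
    }
}
```

The output is only a checklist. The brainstorming itself, and deciding which answers amount to real breaches, remain human work.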

We’ve addressed many of the salient differentiators of mobile; however, Threat Modeling does not end here. What do you need to do next? The above-referenced Threat Modeling methodologies provide great starting points, but here is a thumbnail sketch of some key steps to finalizing and leveraging your shiny new mobile Threat Model:

• Derive the attack surface and potential attacks

• Prioritize attacks by likelihood and impact of successful execution

• Implement mitigations to reduce the risk of prioritized successful attacks

• Use the list of attacks to drive downstream activities in the Secure Software Development Lifecycle (SSDLC).
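The prioritization step is often reduced to a likelihood-times-impact score. The sketch below assumes a 1-to-5 scale for each factor. Methodologies differ in their scales and weightings, so treat the scoring as illustrative. The sketch sorts attacks from highest to lowest risk so the top entries can be scheduled for mitigation. The class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** A candidate attack from the Threat Model, scored on assumed 1-5 scales. */
final class Attack {
    final String name;
    final int likelihood; // 1 (rare) .. 5 (almost certain)
    final int impact;     // 1 (negligible) .. 5 (severe)

    Attack(String name, int likelihood, int impact) {
        if (likelihood < 1 || likelihood > 5 || impact < 1 || impact > 5) {
            throw new IllegalArgumentException("scores must be in 1..5");
        }
        this.name = name;
        this.likelihood = likelihood;
        this.impact = impact;
    }

    int risk() {
        return likelihood * impact;
    }
}

final class RiskRanking {
    /** Returns a new list ordered from highest to lowest risk; ties keep input order. */
    static List<Attack> prioritize(List<Attack> attacks) {
        List<Attack> ranked = new ArrayList<>(attacks);
        ranked.sort(Comparator.comparingInt(Attack::risk).reversed());
        return ranked;
    }
}
```

With these scores, a hard-coded key extracted from the app binary (4 × 4 = 16) outranks a rogue-femtocell interception (1 × 5 = 5). This matches the earlier observation that such attacks are rarely seen in the wild.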

So far in this chapter, we’ve discussed Threat Modeling mobile applications at a high level to get you acquainted with one of the most important first steps in securing them. Threat Modeling starts you on the journey toward securing your mobile application by showing who and what the application must defend against. You also need proactive development guidance to implement the mitigations for potential attacks identified by Threat Modeling. This section provides you with secure mobile development guidance, with only brief departures into code examples (in-depth code-level coverage would probably require its own book). Our main goal is to help iOS and Android application developers understand how to avoid the many problems and pitfalls we’ve discussed throughout this book.

In fact, you might view this information as simply a different perspective on the many countermeasures we’ve already talked about throughout this book. There is some overlap, but we’ve tried to focus the narrative here from the mobile developer’s point of view and make the guidance proactive rather than reactive.

Before we jump into enumerating specific guidelines for secure mobile development, it’s important to remind our readers of some sage advice (and we paraphrase): an ounce of preparation is worth a metric ton of post-release code fixes. Before you write your first line of code, here are some recognized practices to consider.

The first important consideration is the one we just discussed at length: Threat Modeling. No set of generic secure development guidance is ever going to cover all the possible variations in scenarios and alternative design/coding approaches. Remember that the Threat Model shows your mobile application has two main components that must be written securely: the mobile client and the mobile services on the Web that support it. Our proactive guidance addresses threats and attacks for both the mobile client and mobile services.

One of the early trends in mobile was the extreme popularity of applications written for the native mobile platforms, such as iOS and Android. These native applications took full advantage of the platform’s features and had a user interface that was consistent with the platform. This was driven largely by Apple’s initial walled-garden approach to their App Store and was followed closely by Google Play, Amazon’s Marketplace for its Kindle platform, and others. Some developers jumped on the native API bandwagon to get their applications in these app stores and to match the look and feel of market-leading smartphones and tablets. Other developers built “mobile web” applications that used the same technologies as their web applications, but were optimized for a smaller form factor and also allowed the applications to work on the different mobile platforms. Today, we have cross-platform mobile development frameworks that blend the differences between these two types of mobile applications: “native look and feel” and “write once, run anywhere.” The security guidance for any application depends on what type of application you have. The security guidance in this section is heavily weighted toward native mobile applications because this application type represents the bulk of the differences between web applications and mobile applications.

More practically, given the mobile app store craze, we’re probably tilting at windmills trying to encourage developers to develop on mobile web versus native mobile. Or are we? We’ve encountered more than a few development shops that are tired of having their development effort effectively doubled or even quadrupled when it comes to mobile just so they can play to the trendy OSes: iOS and Android for sure, plus Windows Phone and BlackBerry for ambitious teams. Cross-platform development frameworks are not a panacea either, though, because they introduce yet another layer of software that can have its own set of vulnerabilities (either within the framework or in how the framework must be used). Evolving technologies like HTML5 are probably the best bet for cross-platform development, as they offer an open standards–based, multiplatform development framework that is almost certainly going to be supported natively by mobile OSes.

The debate will rage on, of course, and these development frameworks will evolve and mature. We encourage you to think about the path you choose, and the total cost of security to your organization as well as the application.

Another “preparatory” consideration is what, if anything, the application can do to ensure the integrity of its runtime environment. Our mobile client Threat Model shows that a threat can tamper with the runtime environment, including the application code itself. How can you validate and ensure that the runtime environment is functioning correctly? These considerations lie mostly outside of the application developer’s control, but they must be considered when “designing in” security. The security design for the application must leverage what the application can trust from the runtime environment, and we believe that such assurances are best provided outside of the application code.

This is where Mobile Device Management (MDM) comes in. The native MDM framework or third-party MDM software can provide greater assurances that the surrounding device and OS are not compromised, permitting your application to more confidently use memory, the file system, network communications, inter-process communications, and so on, without undue risk from eavesdropping or hijacking. Of course, no solution is perfect, and we emphasize that MDM provides “greater assurances” but not absolute certainty. Nevertheless, we recommend MDM for corporate IT shops (that may have sufficient control over end-user devices to deploy it successfully) as MDM products and technologies have improved to the point where the risk mitigation is worthwhile.

Of course, for developers distributing consumer applications to the public, where no such control over the end-user device exists, relying on MDM is not practical. Instead, consider application integrity protection, including technologies like anti-debugging and code obfuscation. Because most mobile app assessment approaches involve disassembly of the app to some degree, by making this harder, you provide a key obstacle to would-be attackers.

Ultimately, there is no airtight solution to ensure and/or check the integrity of the application or its execution environment. Once the device is jailbroken or rooted, all bets are off. The environment can lie all day about what’s happening, and the application will be none the wiser since it gets all its input from the runtime. Protections like MDM, anti-debugging, and code obfuscation can provide at least rudimentary assurances if they are external to the application and they can be strengthened independently.

There’s also Mobile Application Management (MAM), which covers provisioning and managing of apps but stops short of managing the entire device as MDM does. Some MAM solutions include private app stores, which can provide closed-loop provisioning, patching, uninstallation, monitoring, and remote data wipe. These are attractive features for developers, but remember that simply having a channel through which to push patches does not guarantee timely or high-quality patches; that remains the developer’s responsibility.

We won’t go into further detail here on MDM, MAM, or application integrity protection, since Chapter 7 covered those topics in greater detail. Check it out!

No developer security checklist would be complete without discussing security patches. It’s widely recognized today that one of the most effective technology risk mitigation mechanisms is patching. If anything is certain, it’s that your application will be found to have bugs after its release into the wild. Without a practical strategy to update it in the field, you are at the mercy of any hacker who stumbles onto it out there on the Internet.

Fortunately, the mobile ecosystem has evolved an effective channel for maintaining your application and pushing security patches: the app store. Use this channel early and often. In fact, changing the anti-debugging and code obfuscation mechanisms on every update helps deter reverse engineering of your application.

We aren’t naturally inclined to “top 10”–type lists because they can shortcut more careful thinking, but we’re also aware that developers are busy creatures who want things in bite-sized doses. So we’ve presented our guidance in a framework that maps to our experiences, helping mobile developers step through mobile app security design sequentially, from concept to coding, with key security checkpoints along the way. This framework was developed from years of working with mobile developers both as consultants and colleagues at organizations large and small. Our framework looks like this:

Let’s look at the details for the specific guidance in each of these categories. We won’t cover every one of the points just mentioned; instead, we’ve selected the most important “security rules of the road” for developers writing new mobile apps.

Mobile services are built using the same technology as your current web applications. All of the security practices you use for your web applications, such as proper session management, validating user input, proper output encoding, and so on, are required for mobile services. There are some additional concerns and a few twists for the mobile portions of the application, however.
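Output encoding is one of the practices that carries over unchanged, for example to content rendered in a mobile web view. The hypothetical encoder below is a minimal sketch that covers only untrusted text placed inside an HTML element body. Attributes, JavaScript, and URLs each need a different encoder. In production code, a vetted library such as the OWASP Java Encoder is a better choice than hand-rolled code.

```java
/** Minimal HTML entity encoder for untrusted text placed in an HTML element body. */
final class HtmlEncoder {
    static String forHtml(String input) {
        StringBuilder sb = new StringBuilder(input.length());
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':  sb.append("&amp;");  break;
                case '<':  sb.append("&lt;");   break;
                case '>':  sb.append("&gt;");   break;
                case '"':  sb.append("&quot;"); break;
                case '\'': sb.append("&#x27;"); break;
                default:   sb.append(c);        // everything else passes through unchanged
            }
        }
        return sb.toString();
    }
}
```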

Secure Mobile Services with Web Application Security Mobile web applications and/or mobile services should follow security guidance for traditional web applications and web services. Mobile services that support native mobile applications are very similar to the service interfaces you choose to support your Rich Internet Application clients. Whether you are writing a mobile web application or native application using RESTful services with JSON objects or XML RPC, security guidelines such as those from OWASP or the internal standards within your company must be rigorously applied. Chapter 6 has some further details on specific attacks and countermeasures here.

Create a Walled Garden for Mobile Access When an existing application is extended for mobile access, the “legacy” parts of the application must ensure that mobile devices access the new mobile interface and services. The legacy front end must parse the user-agent string and redirect traffic consistently to the mobile interfaces/services; otherwise, the legacy server-side content may be more aggressively cached by mobile browsers (by design, to compensate for low-bandwidth, high-latency over-the-air connections).
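The routing decision itself can be small. The sketch below is written as plain Java so it could sit behind whatever web framework the legacy front end uses. The marker keywords and the `/m` path prefix are hypothetical. Real deployments usually rely on a maintained device-detection library, because User-Agent strings are diverse and easily spoofed. Spoofing is tolerable here because the goal is consistent routing, not access control.

```java
import java.util.List;
import java.util.Locale;

final class MobileRouter {
    // Hypothetical, deliberately incomplete list of mobile User-Agent markers.
    private static final List<String> MOBILE_MARKERS =
            List.of("iphone", "ipad", "android", "windows phone", "blackberry", "mobile");

    static boolean isMobile(String userAgent) {
        if (userAgent == null) {
            return false;
        }
        String ua = userAgent.toLowerCase(Locale.ROOT);
        return MOBILE_MARKERS.stream().anyMatch(ua::contains);
    }

    /**
     * Returns the path a request should be served from: mobile clients are
     * consistently sent to the mobile interface (hypothetical "/m" prefix),
     * everyone else stays on the legacy path.
     */
    static String route(String userAgent, String path) {
        boolean alreadyMobile = path.equals("/m") || path.startsWith("/m/");
        if (isMobile(userAgent) && !alreadyMobile) {
            return "/m" + (path.startsWith("/") ? path : "/" + path);
        }
        return path;
    }
}
```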

Reduce Session Timeout for Mobile Devices Mobile devices are at greater risk of man-in-the-middle (MitM) attacks because they have several radio interfaces. Mobile devices are also at greater risk of being stolen. Therefore, session timeouts for mobile devices should be shorter than those for standard PC sessions.
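A minimal sketch of a client-dependent idle timeout, enforced on the server on each request. The 5- and 30-minute values are placeholders, not recommendations. Choose real values from your own Threat Model. The `SessionPolicy` class is hypothetical.

```java
import java.time.Duration;
import java.time.Instant;

final class SessionPolicy {
    // Hypothetical values; derive real ones from your Threat Model.
    static final Duration DESKTOP_IDLE_TIMEOUT = Duration.ofMinutes(30);
    static final Duration MOBILE_IDLE_TIMEOUT = Duration.ofMinutes(5);

    static Duration idleTimeoutFor(boolean mobileClient) {
        return mobileClient ? MOBILE_IDLE_TIMEOUT : DESKTOP_IDLE_TIMEOUT;
    }

    /** Server-side check: has the session been idle longer than its client's limit? */
    static boolean isExpired(Instant lastActivity, Instant now, boolean mobileClient) {
        return Duration.between(lastActivity, now).compareTo(idleTimeoutFor(mobileClient)) > 0;
    }
}
```

Deciding whether a session is "mobile" is safest when it is based on how the client authenticated, such as through the dedicated mobile service interface. Relying on the User-Agent header alone is weaker, because an attacker can simply claim to be a desktop browser.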

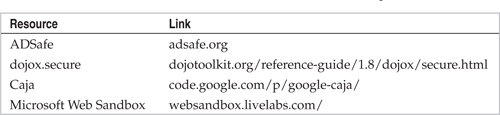

Use a Secure JavaScript Subset A secure JavaScript subset is exactly that—it’s JavaScript with the dangerous functions and other language constructs removed, such as eval(), the use of square brackets, and the this keyword. The secure subset also includes language restrictions to facilitate static code analysis of JavaScript. For example, the with statement is removed. You can choose from several secure JavaScript subsets:

Mask or Tokenize Sensitive Data The more aggressive data caching and increased security risk of sensitive data on mobile devices mean that mobile services must take much more care not to send such data to the mobile device. Two good techniques to build into the mobile services are data masking and tokenization. Both mechanisms involve sending an alternate representation of the sensitive data to the mobile device. The masked data or token is often smaller than the original value, so there’s an additional benefit of reducing the amount of bandwidth consumed by the application.
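The two techniques can be sketched in a few lines of server-side Java. Masking keeps only the last four characters, as is commonly done for account numbers. Tokenization sends the device a random value and keeps the real one on the server. The class names are hypothetical, and the in-memory map stands in for a real, persistent token vault. A random token is not necessarily shorter than the value it replaces. The bandwidth benefit comes mainly when a token stands in for a larger record.

```java
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Masking: the device sees only the last four characters. */
final class DataMasking {
    static String mask(String value) {
        int visible = Math.min(4, value.length());
        return "*".repeat(value.length() - visible) + value.substring(value.length() - visible);
    }
}

/**
 * Tokenization: the device only ever holds a random token; the mapping back to
 * the real value stays on the server (an in-memory map here, for illustration only).
 */
final class TokenVault {
    private static final SecureRandom RANDOM = new SecureRandom();
    private final Map<String, String> tokenToValue = new ConcurrentHashMap<>();

    String tokenize(String value) {
        byte[] bytes = new byte[16]; // 128 random bits: unguessable, carries no data
        RANDOM.nextBytes(bytes);
        String token = Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
        tokenToValue.put(token, value);
        return token;
    }

    /** Server-side lookup; returns null for unknown tokens. */
    String detokenize(String token) {
        return tokenToValue.get(token);
    }
}
```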

As a first rule of thumb for storing secrets on mobile devices: don’t do it!

If you’re not convinced of the high risks to sensitive data on mobile devices by this point in the book, then you never will be. We won’t belabor the point further, other than to say: do a Threat Model, and follow where it leads. Resist the urge to hard code cryptographic keys or store them on mobile devices in properties files and data files.

If you’ve made the decision to store sensitive data on the device, you have several options, in order from stronger to weaker:

• Security hardware

• Secure platform storage

• Mobile databases

• File system

Let’s look at each of these separately, but first we need to look at some types of sensitive data that are already on the mobile device.

Mobile Device Sensitive Data Our high-level Threat Model expands the meaning of sensitive data when we look at the mobile client. An application that produces or makes use of these types of data may need to provide additional protection. For example, if an application tracks when and where the user accesses a specific application, that combination of data could be considered sensitive.

• Personal data Data such as contacts, pictures, call data, voicemails, and similar information.

• Sensor-based data Mobile devices are bristling with sensors that bridge the physical and digital worlds bringing you delightful experiences. This includes location data from the GPS as well as camera and microphone data.

• Identity data Identity data includes

• Persisted credentials

• Bearer tokens (such as in apps supporting OAuth)

• Usernames

• Device-, user-, or application-specific UUIDs

Security Hardware Mobile applications that process payment information use dedicated, tamper-resistant security hardware like a Secure Element (SE) microprocessor. SEs are accessed using existing smartcard standards, such as ISO 7816 (contact) and ISO 14443 (contactless). Implemented properly, an SE is difficult to attack. These are not trivial scenarios for developers to code. Chapter 9 outlines a simple example of an SE used to validate a PIN for a virtual wallet, using application protocol data unit (APDU) commands that include the PIN in the data field, which is sent to a particular applet on the SE. The applet executing within the Java Card runtime environment on the SE processes the APDU command in the Applet.process(APDU) method. If the PIN is successfully verified, then the applet should return a status word with the value of 0x9000 as part of the response APDU. Otherwise, the applet should increment its PIN try counter (again stored in the SE). If the PIN try counter exceeds a certain threshold, such as 5 or 10 attempts, then the applet should lock itself in order to prevent brute-force attempts. Any future attempts to access the applet should always fail.
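Java Card code needs the Java Card SDK and a card or simulator. The sketch below therefore only simulates, in plain Java, the decision logic described above: return 0x9000 on success, count failed attempts, and lock permanently once the limit is reached. It is not Java Card code. A real applet would run inside `process(APDU)`, typically using the framework's `OwnerPIN` class. The class name and the try limit are assumptions. The sketch counts down the tries remaining rather than counting failures up, which is an equivalent formulation. The failure status words follow the ISO 7816-4 conventions 0x63Cx (verification failed, x tries left) and 0x6983 (authentication method blocked).

```java
import java.security.MessageDigest;

/** Plain-Java SIMULATION of an SE applet's PIN-check logic (not Java Card code). */
final class SimulatedPinApplet {
    static final int SW_SUCCESS = 0x9000;
    static final int SW_BLOCKED = 0x6983;        // ISO 7816-4: authentication method blocked
    static final int SW_WRONG_PIN_BASE = 0x63C0; // ISO 7816-4: 0x63Cx, x = tries remaining

    private final byte[] pin;
    private final int maxTries;
    private int triesRemaining;

    SimulatedPinApplet(byte[] pin, int maxTries) {
        if (maxTries < 1 || maxTries > 15) {
            throw new IllegalArgumentException("maxTries must fit in one nibble (1..15)");
        }
        this.pin = pin.clone();
        this.maxTries = maxTries;
        this.triesRemaining = maxTries;
    }

    /** Checks the PIN bytes from a command's data field and returns a status word. */
    int verify(byte[] candidate) {
        if (triesRemaining == 0) {
            return SW_BLOCKED; // locked: every future attempt fails
        }
        // Spend a try BEFORE comparing, mirroring the smart card practice that keeps
        // an interrupted check from yielding a free guess.
        triesRemaining--;
        // MessageDigest.isEqual does not stop at the first mismatching byte.
        if (MessageDigest.isEqual(pin, candidate)) {
            triesRemaining = maxTries; // success restores the full try budget
            return SW_SUCCESS;
        }
        return SW_WRONG_PIN_BASE | triesRemaining;
    }
}
```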

Unfortunately, general-purpose applications do not have access to the SE, and you’ll need to use the other techniques in this section to secure sensitive data. See Chapter 9 for more information on the SE.

Secure Platform Storage On iOS, Apple provides the keychain to securely handle passwords and other short but sensitive bits of data, such as keys and login tokens. The keychain is a SQLite database stored on the file system, and it is protected by an OS service that determines which keychain items each process or app can access. Keychain access APIs control access via access groups that allow keychain items to be shared among apps from the same developer by checking a prefix allocated to them through the iOS Developer Program, enforced through code signing and provisioning profiles. See the link at the end of this chapter to Apple’s iOS security whitepaper for more info on using keychain.

Specialized tools exist that can read data from iOS 4 and 5 keychains, given physical access to the device. Consider using the following solution involving password-based encryption.

Android does not provide a secure storage facility like iOS’s keychain. The default internal storage API makes saved data private to your application. The Android KeyStore is designed to store cryptographic keys, but it has no inherent protection mechanism such as a password. Instead, an Android application needs to provide its own mechanism to protect sensitive information if file system permissions are not sufficient. The application could use Password-Based Key Derivation Function 2 (PBKDF2) to derive an AES key from a password that the user enters when the application starts. The derived key is then used to encrypt and decrypt the sensitive data before it is stored on the file system. Android provides the javax.crypto.spec.PBEKeySpec and javax.crypto.SecretKeyFactory classes to facilitate the generation of the password-based encryption key.
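
A minimal sketch of this approach, using the same PBEKeySpec and SecretKeyFactory classes, is shown below. It derives the key with PBKDF2 and then encrypts with AES-GCM, which also detects a wrong password or tampered data. The class name, the 100,000-iteration count, and the salt || IV || ciphertext storage layout are assumptions to adapt. The PBKDF2WithHmacSHA256 algorithm name works on current JDKs; older Android releases may only offer PBKDF2WithHmacSHA1.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Arrays;
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.PBEKeySpec;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical helper: password-based encryption of small secrets before they are stored. */
final class PasswordVault {
    private static final int SALT_BYTES = 16;
    private static final int IV_BYTES = 12;          // standard GCM nonce size
    private static final int KEY_BITS = 256;
    private static final int TAG_BITS = 128;
    private static final int ITERATIONS = 100_000;   // assumption: tune for target devices
    private static final SecureRandom RNG = new SecureRandom();

    /** Derives an AES key from the user's password with PBKDF2. */
    static SecretKey deriveKey(char[] password, byte[] salt) throws GeneralSecurityException {
        PBEKeySpec spec = new PBEKeySpec(password, salt, ITERATIONS, KEY_BITS);
        try {
            SecretKeyFactory factory = SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256");
            return new SecretKeySpec(factory.generateSecret(spec).getEncoded(), "AES");
        } finally {
            spec.clearPassword();                    // wipe the spec's internal copy
        }
    }

    /** Returns salt || IV || ciphertext-with-tag, ready to be written to storage. */
    static byte[] encrypt(char[] password, byte[] plaintext) throws GeneralSecurityException {
        byte[] salt = new byte[SALT_BYTES];
        byte[] iv = new byte[IV_BYTES];
        RNG.nextBytes(salt);                         // fresh salt and IV for every write
        RNG.nextBytes(iv);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, deriveKey(password, salt), new GCMParameterSpec(TAG_BITS, iv));
        byte[] ciphertext = cipher.doFinal(plaintext);
        return ByteBuffer.allocate(SALT_BYTES + IV_BYTES + ciphertext.length)
                .put(salt).put(iv).put(ciphertext).array();
    }

    /** Reverses encrypt(); throws AEADBadTagException for a wrong password or tampered data. */
    static byte[] decrypt(char[] password, byte[] stored) throws GeneralSecurityException {
        int header = SALT_BYTES + IV_BYTES;
        if (stored.length < header + TAG_BITS / 8) {
            throw new GeneralSecurityException("Stored data too short");
        }
        byte[] salt = Arrays.copyOfRange(stored, 0, SALT_BYTES);
        byte[] iv = Arrays.copyOfRange(stored, SALT_BYTES, header);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, deriveKey(password, salt), new GCMParameterSpec(TAG_BITS, iv));
        return cipher.doFinal(stored, header, stored.length - header);
    }
}
```

Storing the salt alongside the ciphertext is safe; only the password needs to stay secret, which is why it is requested from the user rather than saved.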

Mobile Databases We put databases a bit above the file system on the strength scale because you can encrypt the database with a single secret that compactly unifies protection of all app data (as opposed to having to delegate protection to the OS and/or having it scattered all over the environment in keychains, in files, and so on). There are a few third-party extensions to SQLite that provide database encryption, including SEE, SQLCipher, and CEROD.

Of course, databases are not without vulnerabilities either. Plain ol’ storing of unprotected sensitive data in the database is probably about as common as it is on file systems or any other repository. Also watch out for “indirect” sensitive data storage. We once reviewed a mobile app that stored images in its SQLite database, which initially appeared to be a harmless feature of the user interface; upon closer inspection, however, we determined that the type and pattern of images stored in the database revealed clues as to the user’s identity and behavioral patterns (purchasing, location, and so on).

Also, the use of client-side relational databases obviously introduces the possibility of SQL Injection attacks. SQLite databases are commonly used on mobile clients because the Android API natively supports them. SQL Injection attacks originating via Android intents or other input, such as network traffic, can easily become a problem. Fortunately, the security guidance for preventing SQL Injection on the server also works on the mobile device: use parameterized queries, not string concatenation, to construct your dynamic SQL queries.
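
On Android, parameterized queries take the form of a selection string containing ? placeholders plus a separate selectionArgs array, as accepted by SQLiteDatabase.query(table, columns, selection, selectionArgs, groupBy, having, orderBy) and rawQuery(sql, selectionArgs). The hypothetical helper below (plain Java, so it runs outside Android) builds such a pair from a map of filters. Because column names cannot be bound as parameters, it checks them against an allowlist; values, including attacker-controlled ones, only ever travel as bind arguments.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical helper: builds a WHERE clause with ? placeholders and separate bind arguments. */
final class SafeSelection {
    final String selection;        // e.g. "owner = ? AND status = ?", or null for "all rows"
    final String[] selectionArgs;  // bound by SQLite, never spliced into the SQL text

    private SafeSelection(String selection, String[] selectionArgs) {
        this.selection = selection;
        this.selectionArgs = selectionArgs;
    }

    static SafeSelection of(Map<String, String> filters, Set<String> allowedColumns) {
        StringBuilder sql = new StringBuilder();
        List<String> args = new ArrayList<>();
        for (Map.Entry<String, String> filter : filters.entrySet()) {
            // Identifiers cannot be parameterized, so only known column names are accepted.
            if (!allowedColumns.contains(filter.getKey())) {
                throw new IllegalArgumentException("Unknown column: " + filter.getKey());
            }
            if (sql.length() > 0) {
                sql.append(" AND ");
            }
            sql.append(filter.getKey()).append(" = ?");
            args.add(filter.getValue());
        }
        return new SafeSelection(sql.length() == 0 ? null : sql.toString(), args.toArray(new String[0]));
    }
}
```

On a device, the result would be passed straight through, for example db.query("accounts", null, s.selection, s.selectionArgs, null, null, null), instead of concatenating "owner = '" + input + "'" into the SQL string.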

File System Protections Apple’s iOS provides a few security protections around the file system, including default encryption of files created on the data partition (thus they are protected by the device passcode if one is set), centrally erasable metadata, and cryptographic linking to a specific device (that is, files moved from one device to another are inaccessible without the key). Most of these features are enabled by default in iOS 5 and above, so no specific coding is required to gain their benefits. More information is available in Apple’s iOS security whitepaper.

On Android, files stored in internal storage are, by default, private to a specific application, unless the application chooses to shoot itself in the foot by changing the default Linux file permissions. In particular, avoid creating files with the MODE_WORLD_READABLE or MODE_WORLD_WRITEABLE modes (for example, as a shortcut for sharing files with other apps via IPC), because those modes let other apps read or modify your app’s files.
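
On Android itself, the idiomatic call is simply Context.openFileOutput(name, Context.MODE_PRIVATE). To show what “private” means at the Linux permission level, here is a plain-JDK sketch, assuming a POSIX file system, that creates a file readable and writable only by its owner (the equivalent of chmod 600). The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

/** Hypothetical helper: writes data to a new file that only the owning user can access. */
final class PrivateFiles {
    static Path writePrivate(Path file, byte[] data) throws IOException {
        Set<PosixFilePermission> ownerOnly = PosixFilePermissions.fromString("rw-------");
        // Permissions are applied atomically at creation; createFile fails if the file already
        // exists, so we never reuse a file that someone else created with looser permissions.
        Files.createFile(file, PosixFilePermissions.asFileAttribute(ownerOnly));
        Files.write(file, data);
        return file;
    }
}
```

The process umask can only remove permission bits, never add them, so the file ends up no more permissive than rw-------.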

In contrast to internal storage on Android, files stored in external storage are publicly accessible to all applications. This is so important, we’ll reiterate it!

Files stored in external storage on Android (for example, SD cards) are not secured and are accessible to all applications!

Android 3.0 and later provide full file-system encryption, so all user data can be encrypted in the kernel using the dm-crypt implementation. For more details on file-system encryption, see source.android.com/tech/encryption/android_crypto_implementation.html.

Authentication and authorization are more complicated for a mobile application than for a traditional web application. Several mobile applications may want to share the same identity (Single Sign-On), but two applications from the same company may want different identities because one application is for customers and the other is for employees. And then there could be one application that needs multiple identities (a mashup).

Authorization and Authentication Protocols The protocols for solving authentication and authorization requirements are the same ones for traditional web applications and Rich Internet Applications, but many application developers haven’t had to use them. The trick is knowing which protocol to use based on the problem you’re trying to solve. Chapter 6 has already covered the details about authentication and authorization of the mobile client with mobile services. The section “Common Authentication and Authorization Frameworks” in that chapter covers several methods for using OAuth and SAML.

Always Generate Your Own Identifiers In the category of “Let no good deed go unpunished,” to improve the security and management of mobile devices, some applications want to associate a user with a mobile device; that’s a good practice to determine if a device is authorized to access some resource. However, what happens if the application developer decides that the identifier to use is your physical device ID (like the IMEI for your Android device), the MAC-address, or perhaps your Mobile Directory Number (the phone number, or MDN)? Using identifiers that are immutable with respect to the application will lead to problems if the device is stolen or after it is reassigned. Also, such reused identifiers rarely possess adequate secrecy, entropy, or other security-enhancing properties in the real world. For example, how many people could look up the typical user’s mobile phone number from the user’s public Facebook page or a Google search? Using such an identifier is probably not a good idea, especially for something important. An application needing a unique identifier should always create its own unique ID and store it with the application configuration data, using one of the secure storage methods discussed earlier if appropriate.
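
A minimal sketch of such an app-generated identifier follows. UUID.randomUUID() produces a version 4 UUID from a cryptographically strong random number generator, so the value has far more entropy than a phone number or MAC address and reveals nothing about the user. The Map parameter is a stand-in, assumed for this sketch, for whatever (secure) configuration storage the app uses, and the key name is hypothetical.

```java
import java.util.Map;
import java.util.UUID;

/** Hypothetical helper: an app-generated installation ID instead of IMEI, MAC address, or MDN. */
final class InstallationId {
    private static final String KEY = "installation_id";   // hypothetical storage key

    /** Returns the stored ID, creating and saving a new random one on first use. */
    static String getOrCreate(Map<String, String> store) {
        // UUID.randomUUID() builds a version 4 UUID from a cryptographically strong RNG.
        return store.computeIfAbsent(KEY, k -> UUID.randomUUID().toString());
    }
}
```

Because the ID belongs to the installation rather than the hardware, a stolen or reassigned device can be deauthorized on the server without affecting anything else tied to the physical device.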

Implement a Timeout for Cached Credentials Native applications that cache user credentials or bearer tokens for mobile services should invalidate them once the application has been inactive for a set period. That timeout period should be measured in minutes.
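
One way to implement this is to keep the token in memory together with the time it was last used, and to forget it once the idle period has elapsed. The sketch below assumes a hypothetical class name and an injectable monotonic clock (on Android, SystemClock::elapsedRealtime is a natural choice; on a JDK, () -> System.nanoTime() / 1_000_000 works). The timeout value itself is the app’s choice.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.function.LongSupplier;

/** Hypothetical holder for a bearer token that is dropped after a period of inactivity. */
final class CachedCredential {
    private final long idleTimeoutMillis;
    private final LongSupplier nowMillis;   // monotonic clock, injectable for testing
    private String token;
    private long lastUsedMillis;

    CachedCredential(Duration idleTimeout, LongSupplier nowMillis) {
        this.idleTimeoutMillis = idleTimeout.toMillis();
        this.nowMillis = nowMillis;
    }

    synchronized void store(String bearerToken) {
        token = bearerToken;
        lastUsedMillis = nowMillis.getAsLong();
    }

    /** Returns the token if the app has been active recently; otherwise forgets it. */
    synchronized Optional<String> get() {
        if (token == null) {
            return Optional.empty();
        }
        long now = nowMillis.getAsLong();
        if (now - lastUsedMillis >= idleTimeoutMillis) {
            token = null;                   // expired: the user must re-authenticate
            return Optional.empty();
        }
        lastUsedMillis = now;               // each use counts as activity
        return Optional.of(token);
    }

    synchronized void clear() {
        token = null;
    }
}
```

A monotonic clock is used instead of wall-clock time so that a user changing the device’s date cannot extend the lifetime of a cached token.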

Mobile applications can take advantage of the tightly coupled relationship between the client and the mobile services to improve security over the loosely coupled browser interface for a traditional web application. By taking advantage of this tight coupling, the resulting mobile application can be more secure than its web application counterpart.

Use Only SSL/TLS Mobile applications have lower bandwidth requirements than traditional web applications, so they are good candidates for using only SSL/TLS for communication. Using only a secure protocol prevents SSL stripping attacks (see thoughtcrime.org/software/sslstrip/).
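
A simple way to make “only SSL/TLS” hold in code is to route every endpoint through a check that refuses any URL that is not https, so a plain http link (whether mistyped, injected, or rewritten by an attacker) never turns into a cleartext request. The class and method names below are hypothetical.

```java
import java.net.URI;

/** Hypothetical guard: refuses any endpoint that would be fetched without TLS. */
final class TransportPolicy {
    static URI requireHttps(String url) {
        URI uri = URI.create(url);   // throws IllegalArgumentException if malformed
        if (!"https".equalsIgnoreCase(uri.getScheme())) {
            throw new IllegalArgumentException("Refusing non-TLS URL: " + url);
        }
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("URL has no host: " + url);
        }
        return uri;
    }
}
```

The checked URI can then be opened as an HttpsURLConnection via toURL().openConnection(); leaving that connection’s default SSLSocketFactory and HostnameVerifier in place keeps the platform’s certificate validation switched on.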

Validate Server Certificates A mobile client must implement the client side of an SSL/TLS connection to the mobile services. Do not disable certificate verification and validation by defining a custom TrustManager that accepts any certificate, or a custom HostnameVerifier that disables hostname validation.

Use Certificate Pinning for Validating Certificates Use Certificate Pinning to mitigate the risk of compromised public certificate authority (CA) private keys (such as the notorious Comodo and DigiNotar breaches). Certificate Pinning bypasses the normal CA validation chain and, instead, uses a unique certificate that you provide (because your mobile app should only be connecting to your services, it shouldn’t need to worry about public CA certs). On the server, you create your own signing certificate and use it to create the certificates for your mobile services. The signing certificate is kept offline on the server-side, and the signed certificates are distributed with the mobile application.

Android 4.2 provides support for Certificate Pinning, but for versions prior to that, you’ll have to write your own implementation.
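
For apps that need their own implementation, the approach described above (trusting only your own signing certificate) is typically done by initializing a TrustManagerFactory with a KeyStore that contains just that certificate. The sketch below shows a different, widely used variant instead: keep the platform’s normal chain validation and additionally require that some certificate in the server’s chain carries a public key whose SHA-256 hash (over the DER-encoded SubjectPublicKeyInfo, base64-encoded) is on a pinned list. The class name is hypothetical. This pin format is, to our understanding, the one used by Android’s network security configuration (Android 7.0 and later) and OkHttp’s CertificatePinner, but verify that against their documentation.

```java
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.PublicKey;
import java.security.cert.CertificateException;
import java.security.cert.X509Certificate;
import java.util.Base64;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;
import javax.net.ssl.TrustManager;
import javax.net.ssl.TrustManagerFactory;
import javax.net.ssl.X509TrustManager;

/** Hypothetical trust manager: normal CA validation plus a public-key pin check. */
final class PinningTrustManager implements X509TrustManager {
    private final X509TrustManager platformDefault;
    private final Set<String> pins;   // base64(SHA-256(SubjectPublicKeyInfo)) of keys you control

    PinningTrustManager(Set<String> pins) throws GeneralSecurityException {
        TrustManagerFactory tmf = TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
        tmf.init((KeyStore) null);    // null selects the platform's default trust store
        X509TrustManager found = null;
        for (TrustManager tm : tmf.getTrustManagers()) {
            if (tm instanceof X509TrustManager) {
                found = (X509TrustManager) tm;
                break;
            }
        }
        if (found == null) {
            throw new GeneralSecurityException("No default X509TrustManager");
        }
        this.platformDefault = found;
        this.pins = Collections.unmodifiableSet(new HashSet<>(pins));
    }

    /** Computes the pin value for a public key. */
    static String spkiPin(PublicKey key) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(key.getEncoded());
            return Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    @Override
    public void checkServerTrusted(X509Certificate[] chain, String authType) throws CertificateException {
        platformDefault.checkServerTrusted(chain, authType);   // keep chain, expiry, and CA checks
        for (X509Certificate cert : chain) {
            if (pins.contains(spkiPin(cert.getPublicKey()))) {
                return;
            }
        }
        throw new CertificateException("No pinned public key in server chain");
    }

    @Override
    public void checkClientTrusted(X509Certificate[] chain, String authType) throws CertificateException {
        platformDefault.checkClientTrusted(chain, authType);
    }

    @Override
    public X509Certificate[] getAcceptedIssuers() {
        return platformDefault.getAcceptedIssuers();
    }
}
```

To use it, initialize an SSLContext with new TrustManager[] { new PinningTrustManager(pins) } and pass its socket factory to HttpsURLConnection.setSSLSocketFactory(); hostname checking is still done separately by the connection’s default HostnameVerifier. Shipping at least one backup pin avoids locking users out when a server key is rotated.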

Many native mobile apps use WebView on Android and UIWebView on iOS to display web content within the app. A few potential problems can arise from careless use of WebView.

WebView Cache The WebView cache may contain sensitive web form and authentication data from web pages visited through native mobile clients that implement it. For example, if the user logs into a banking website via the native client and chooses to save his or her credentials, then the credentials will be stored in this cache. A malicious user could use this data to hijack someone else’s account tied to this bank.

Additionally, the WebView cookies database contains the cookie names, values, and domains associated with visited websites. A malicious user could also use this information to hijack active sessions associated with issuing bank websites and merchant websites, since this database contains session identifiers.

To prevent this from happening, on the server-side, we recommend setting autocomplete="off" on all sensitive form inputs, such as inputs for government-issued identification numbers, credit card numbers, and addresses. Sending a Cache-Control: no-store HTTP header from the server will also help. On the client-side, the WebView object can be configured to never save authentication data and form data. You can also use the clearCache() method to delete cached files stored locally on the device.

On Android, you need to delete files from the cache directory explicitly (in our testing, clearCache() didn’t clear all of the requests and responses cached by WebView). You should also disable saving of authentication information by calling WebSettings.setSavePassword(false), and consider calling WebSettings.setCacheMode(WebSettings.LOAD_NO_CACHE) so that the WebView does not load pages from its cache.
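
Explicitly emptying a cache directory amounts to a recursive delete that removes children before their parents. The sketch below, with a hypothetical class name, deletes everything inside a directory but keeps the directory itself. On Android, the argument would typically be the app’s cache directory, for example context.getCacheDir().toPath(); java.nio.file is only available on Android 8.0 (API 26) and later, so older releases would need the same walk written with java.io.File.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

/** Hypothetical helper: empties a cache directory, deepest entries first. */
final class CacheCleaner {
    static void clearContents(Path dir) throws IOException {
        if (!Files.isDirectory(dir)) {
            return;
        }
        try (Stream<Path> walk = Files.walk(dir)) {       // does not follow symbolic links
            walk.sorted(Comparator.reverseOrder())         // a child sorts after its parent, so reverse puts it first
                .filter(p -> !p.equals(dir))               // keep the cache directory itself
                .forEach(p -> {
                    try {
                        Files.delete(p);
                    } catch (IOException e) {
                        throw new UncheckedIOException(e);
                    }
                });
        } catch (UncheckedIOException e) {
            throw e.getCause();                           // surface the original IOException
        }
    }
}
```

Calling this when the user logs out, or when the app moves to the background, limits how long cached pages and form data stay on the device.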

To address WebView cookie caching, on the server-side, set up a reasonable session timeout to mitigate the risk of session hijacking. Cookies should never be configured to persist for long periods of time. Additionally, never store personal or sensitive data in a cookie. On the client-side, periodically clear cookies via the CookieManager or the NSHTTPCookieStorage classes. You could disable the use of cookies altogether within the WebView object, but that would break common web functionality and isn’t really practical.

On iOS, use the NSURLCache class’s removeAllCachedResponses method to remove all cached responses. You can also use NSURLCache to set an empty cache or to remove the cached response for a particular request (removeCachedResponseForRequest:). Search for “uiwebview cache” for more information.

WebView and JavaScript Bridges We discussed several published issues with JavaScript bridges and WebView in Chapter 6. This material is a reiteration of that advice.

On Android, protect against the reflection-based attacks by targeting your app to API Level 17 or above, where only methods annotated with @JavascriptInterface are exposed to JavaScript. Because API Level 17 is relatively new and not widely supported on devices, we recommend the following in the meantime:

• Only use addJavascriptInterface if the application truly loads only trusted content into the WebView; in particular, avoid loading anything acquired over the network or via an IPC mechanism into a WebView that exposes a JavaScript interface.

• Develop a custom JavaScript bridge using the shouldOverrideUrlLoading method of WebViewClient. Developers still need to think carefully, however, about what type of functionality is exposed via this bridge.

• Reconsider why a bridge between JavaScript and Java is a necessity for this Android application and remove the bridge if feasible.

Also, any app that checks the newly loaded URL for a custom URI scheme and responds accordingly should be careful about what functionality is exposed via this custom URI scheme, and use input validation and output encoding to prevent common injection attacks.
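
Validating a custom-scheme URL is easiest when the app accepts exactly one strict shape per action and rejects everything else. The sketch below assumes a hypothetical link of the form examplebank://show-account?id=<digits>; the scheme, action, parameter name, and ID format are all illustrative. On Android, the string could come from shouldOverrideUrlLoading or from an activity’s intent data, and an iOS handler can apply the same checks in Objective-C.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.regex.Pattern;

/** Strict parser for a hypothetical link: examplebank://show-account?id=<digits>. */
final class DeepLinkValidator {
    private static final Pattern ACCOUNT_ID = Pattern.compile("[0-9]{1,12}");

    /** Returns the validated account ID, or throws IllegalArgumentException. */
    static String accountIdFrom(String rawUri) {
        URI uri;
        try {
            uri = new URI(rawUri);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Malformed URI", e);
        }
        boolean hasPath = uri.getRawPath() != null && !uri.getRawPath().isEmpty();
        if (!"examplebank".equalsIgnoreCase(uri.getScheme())
                || !"show-account".equalsIgnoreCase(uri.getHost())
                || hasPath || uri.getPort() != -1
                || uri.getRawUserInfo() != null || uri.getRawFragment() != null) {
            throw new IllegalArgumentException("Unexpected link target");
        }
        Map<String, String> params = parseQuery(uri.getRawQuery());
        if (!params.keySet().equals(Set.of("id"))) {   // exactly the parameters we expect
            throw new IllegalArgumentException("Unexpected parameters: " + params.keySet());
        }
        String id = params.get("id");
        if (!ACCOUNT_ID.matcher(id).matches()) {       // allowlist, not blocklist
            throw new IllegalArgumentException("Invalid account id");
        }
        return id;
    }

    private static Map<String, String> parseQuery(String rawQuery) {
        Map<String, String> params = new HashMap<>();
        if (rawQuery == null || rawQuery.isEmpty()) {
            return params;
        }
        for (String pair : rawQuery.split("&")) {
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Malformed parameter");
            }
            // URLDecoder throws IllegalArgumentException on bad %-escapes.
            String key = URLDecoder.decode(pair.substring(0, eq), StandardCharsets.UTF_8);
            String value = URLDecoder.decode(pair.substring(eq + 1), StandardCharsets.UTF_8);
            if (params.put(key, value) != null) {
                throw new IllegalArgumentException("Duplicate parameter: " + key);
            }
        }
        return params;
    }
}
```

Rejecting unknown actions, extra or duplicate parameters, and anything outside the expected character set keeps the handler from becoming a general-purpose entry point into the app. The returned value should still be output-encoded wherever it is later rendered.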

On iOS, the same countermeasures apply: when a UIWebViewDelegate handles a custom URI scheme defined for a local WebView component, apply strict input validation and output encoding to user input. Again, be very wary of code that performs reflection using tainted input.

Sensitive data leakage is one of the biggest risks on mobile because all data is inherently at greater risk while on a mobile device. Unfortunately, many mechanisms are built into mobile platforms that squirrel data away in various nooks, as we noted in Chapter 1 and elsewhere in this book. Here’s a list of the common problem areas and how to avoid them.

Clipboard Modern versions of Android and iOS support copying and pasting of information across programs. Clearly, this presents a risk if the information is sensitive. Access to the clipboard is fairly unrestricted, so you should take explicit precautions to avoid information leakage. On Android, you can call setLongClickable(false) on an EditText or TextView to prevent someone from being able to copy from fields in your application. On iOS, you can subclass UITextView and override canPerformAction:withSender: to disable copy/paste operations.

Logs As we noted in Chapter 1, the mobile ecosystem engages in pervasive logging of data—cellular usage, battery life, screen activity, you name it—all to provide for exhaustive analysis and (ostensibly) to improve the mobile user experience. The dark side of all this logging is that your app’s data can easily get caught up in this rather broad net, leaving it vulnerable to prying eyes. Here are some places to watch out for:

• System and debug logs, such as the Android system logs or the device driver dmesg buffer. When debugging mode is on, any Android application with the READ_LOGS permission can view the system log. On iOS, disable NSLog statements in release builds.

• X:Y coordinate buffers can record user entry of sensitive app data like PINs or passwords.

Check your app and make sure it is not logging sensitive information to these repositories, or to others not mentioned here. Unfortunately, we don’t know of any comprehensive listing of all the facilities used for logging on the major mobile OSes. We recommend conducting an analysis of your app and noting key outputs (events, files, APIs) and investigating what data might flow through them. We’ve conducted a few such “mobile app data leakage” analyses and have been quite surprised with what turns up.
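
A simple safeguard is to route all app logging through one wrapper that drops debug output in release builds and masks sensitive values when something must be logged. The sketch below is hypothetical: on Android, the release flag would usually be !BuildConfig.DEBUG and the sink a call to android.util.Log.d, but both are passed in here so the class runs anywhere.

```java
import java.util.function.Consumer;

/** Hypothetical logging wrapper that keeps debug output (and the data in it) out of release builds. */
final class SafeLog {
    private final boolean releaseBuild;
    private final Consumer<String> sink;   // e.g. msg -> android.util.Log.d(TAG, msg) on Android

    SafeLog(boolean releaseBuild, Consumer<String> sink) {
        this.releaseBuild = releaseBuild;
        this.sink = sink;
    }

    /** Dropped entirely in release builds, so nothing reaches the system log. */
    void debug(String tag, String message) {
        if (!releaseBuild) {
            sink.accept(tag + ": " + message);
        }
    }

    /** Keeps only the last four characters; the fixed-width prefix also hides the value's length. */
    static String mask(String value) {
        if (value == null || value.length() <= 4) {
            return "****";
        }
        return "****" + value.substring(value.length() - 4);
    }
}
```

Centralizing logging this way also gives the “mobile app data leakage” analysis described above a single place to audit.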

Chapter 3 contains a more in-depth analysis of iOS security concerns. This section summarizes the information in that chapter from the developer’s perspective.

Traditional C Application Secure Coding Guidelines A native iOS application is written in Objective-C. Objective-C is based on the C programming language, so it inherits all of the benefits and security problems of C, such as the ability to write code that is vulnerable to buffer overflows and memory corruption issues. There are a number of great secure-coding guidelines for Objective-C, but the best place to start is with Apple’s Secure Coding Guide (developer.apple.com/library/mac/documentation/security/conceptual/SecureCodingGuide/SecureCodingGuide.pdf).

Keyboard Cache iOS caches keystrokes to provide autocorrect and form-completion features, and the app has no access to the cache’s contents. To keep sensitive input out of this cache, disable autocorrect for every UITextField that accepts sensitive information by setting its autocorrectionType property to UITextAutocorrectionTypeNo. Apple MDM customers can add an enterprise policy to clear the keyboard dictionary at regular intervals, and end-users can manually do this by going to Settings | General | Reset | Reset Keyboard Dictionary.

Enable Full ASLR with PIE We’ve noted many security features provided by mobile platforms in this book, including Address Space Layout Randomization (ASLR). Most of the time, these features are enabled by default. But sometimes, the developer must explicitly code for them to achieve maximum protection.

For apps that will run on iOS 4.3 and later, build the app as a position-independent executable (PIE); when compiling on the command line, use the -fPIE option.

Custom URI Scheme Guidelines If your application uses a Custom URI scheme to launch itself from the browser or another application, follow these guidelines:

1. Use the application:openURL:sourceApplication:annotation: method instead of the deprecated application:handleOpenURL: (developer.apple.com/library/ios/#documentation/uikit/reference/UIApplicationDelegate_Protocol/Reference/Reference.html).

2. Validate the sourceApplication parameter to restrict access to the custom URI to a specific set of applications by validating the sender’s bundle identifier.

3. Syntactically validate the URL parameter, and even after validation, assume it contains malicious input.

Protect the Stack If you are using GCC to compile your iOS application, enable Stack Smashing Protection (SSP) using -fstack-protector-all. SSP detects buffer overflow attacks and other stack corruption. The Apple LLVM compiler automatically enables SSP.

Enable Automatic Reference Counting Automatic Reference Counting (ARC) provides automatic memory management for Objective-C objects and blocks. Having ARC means that the application developer doesn’t explicitly code retains and releases, thus reducing the chance of security vulnerabilities caused by releasing memory more than once, use of memory after it’s been freed, and other C memory-allocation problems. Converting an existing application to use ARC requires more than enabling ARC in your project. You may have to change some of the source code in your application and some of the libraries that your application uses.

Disable Caching of Application Screenshots iOS captures the currently running application screen when it is suspended (such as when the user presses the Home button, presses the Sleep/Wake button, or the system launches another app) in order to provide screen transition animations. If your app happens to be displaying sensitive data when this occurs, it could be stored in the screen cache. Preventing this requires some understanding of how multitasking on iOS 4 and later works. To summarize, when your code returns from the applicationDidEnterBackground: method, your app moves to the suspended state shortly afterward. If any views in your interface contain sensitive information, you should hide or modify those views before the applicationDidEnterBackground: method returns. For example, specify a splash screen to display on entering the background. Search on “App States and Multitasking” in the iOS Developer Library at developer.apple.com for more information.

Chapter 4 contains a more in-depth analysis of Android security concerns. This section summarizes the information in that chapter from the developer’s perspective.

Traditional C++/Java Application Secure Coding Guidelines A native Android application can be written in either C++ or Java. Google recommends writing applications in Java rather than C++, and we agree with that recommendation from a security point of view. Regardless of language choice, it’s important to follow secure-coding guidelines for the language you choose: C/C++ or Java. Fortunately, there is a wealth of great books and articles about secure coding in these languages.

Ensure ASLR Is Enabled As with iOS, modern versions of Android support ASLR. Enabling full ASLR requires that native code (C and C++) be compiled with -fPIE and linked with -pie to produce position-independent executables. The linker also needs the read-only relocation and immediate binding flags set (-Wl,-z,relro -Wl,-z,now). In the Android NDK 8 and later, these options are the default. Developers using earlier versions of the NDK can update their build scripts to enable ASLR.

Secure Intent Usage Guidelines Android intents are an asynchronous signaling system for communication between application components and the OS. From a security point of view, intents provide an excellent vector for attack. Here are some recommendations to mitigate intent abuse:

• Public components should not trust data received from intents.

• Perform input validation on all data received from intents.

• Use explicit intents whenever possible.

• Explicitly set android:exported for all components with intent filters.

• Create a custom signature-protection-level permission to control access to implicit intents.

• Use a permission to limit receivers of broadcast intents.

• Do not include sensitive data in broadcast intents.
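
To make “perform input validation on all data received from intents” concrete, the sketch below checks the extras of a hypothetical transfer request before the component acts on them: exactly the expected keys, the expected types, and values within an allowed format and range. The Map stands in for the extras an Android component would read from getIntent().getExtras(); the key names, ID format, and amount limit are all assumptions for illustration.

```java
import java.util.Map;
import java.util.Set;
import java.util.regex.Pattern;

/** Hypothetical validator for the extras of a "transfer" intent. */
final class TransferRequestValidator {
    private static final Set<String> EXPECTED_KEYS = Set.of("account_id", "amount_cents");
    private static final Pattern ACCOUNT_ID = Pattern.compile("[0-9]{1,12}");
    private static final long MAX_AMOUNT_CENTS = 1_000_000L;   // hypothetical business limit

    /** Throws IllegalArgumentException unless every extra is present, well-typed, and in range. */
    static void validate(Map<String, ?> extras) {
        if (!EXPECTED_KEYS.equals(extras.keySet())) {
            throw new IllegalArgumentException("Unexpected or missing extras: " + extras.keySet());
        }
        Object account = extras.get("account_id");
        if (!(account instanceof String) || !ACCOUNT_ID.matcher((String) account).matches()) {
            throw new IllegalArgumentException("Invalid account_id");
        }
        Object amount = extras.get("amount_cents");
        if (!(amount instanceof Long)) {                       // check the type, not just the value
            throw new IllegalArgumentException("amount_cents must be a long");
        }
        long cents = (Long) amount;
        if (cents <= 0 || cents > MAX_AMOUNT_CENTS) {
            throw new IllegalArgumentException("amount_cents out of range");
        }
    }
}
```

Validation of this kind complements, rather than replaces, restricting who can send the intent in the first place with explicit intents and signature-level permissions.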

Secure NFC Guidelines The Near Field Communication (NFC) capabilities in mobile devices require specific handling when used by an application:

• Do not trust data received from NFC tags; perform input validation on all data received from NFC tags.

• Write-protect a tag before it is used to prevent it from being overwritten.
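
As an example of not trusting tag data, the sketch below parses the payload of an NDEF Text record defensively. Per the NFC Forum Text record layout, the first status byte holds the encoding flag (bit 7: UTF-16 if set, UTF-8 otherwise), a reserved bit 6, and the language-code length in bits 5 to 0, followed by the language code and the text. On Android, the payload would come from NdefRecord.getPayload() for a record whose TNF is TNF_WELL_KNOWN and whose type is RTD_TEXT. The class name and the 256-byte limit are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.Charset;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;

/** Hypothetical defensive parser for the payload of an NDEF Text record from an untrusted tag. */
final class NdefTextParser {
    private static final int MAX_TEXT_BYTES = 256;   // hypothetical app-specific limit

    static String parseText(byte[] payload) {
        if (payload == null || payload.length == 0) {
            throw new IllegalArgumentException("Empty payload");
        }
        int status = payload[0] & 0xFF;
        if ((status & 0x40) != 0) {                  // bit 6 is reserved and must be zero
            throw new IllegalArgumentException("Reserved bit set");
        }
        int langLength = status & 0x3F;              // bits 5..0: language code length
        if (1 + langLength > payload.length) {       // never trust a length field from the tag
            throw new IllegalArgumentException("Language code length exceeds payload");
        }
        int textOffset = 1 + langLength;
        int textLength = payload.length - textOffset;
        if (textLength > MAX_TEXT_BYTES) {
            throw new IllegalArgumentException("Text too long");
        }
        // Bit 7 selects UTF-16 or UTF-8; reject malformed bytes instead of silently substituting.
        Charset charset = (status & 0x80) != 0 ? StandardCharsets.UTF_16 : StandardCharsets.UTF_8;
        try {
            return charset.newDecoder()
                    .onMalformedInput(CodingErrorAction.REPORT)
                    .onUnmappableCharacter(CodingErrorAction.REPORT)
                    .decode(ByteBuffer.wrap(payload, textOffset, textLength))
                    .toString();
        } catch (CharacterCodingException e) {
            throw new IllegalArgumentException("Malformed text encoding", e);
        }
    }
}
```

The decoded text is still untrusted input; apply the same validation and output encoding to it as to any other external data.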

Last, but not least, and this hopefully goes without saying—every setting we just described (plus any custom ones you add!) should have a corresponding test case to ensure that it’s properly implemented in the app’s final release. Consider multiple testing approaches, including dynamic and static, to ensure proper coverage.

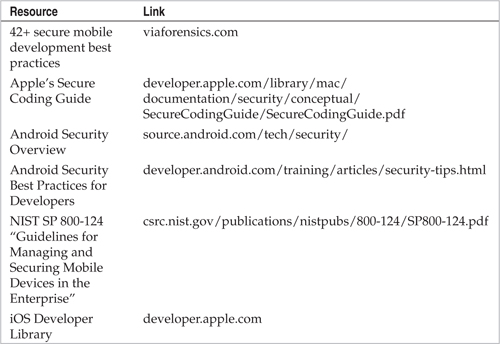

We recognize that no single resource could hope to comprehensively cover a field as dynamic as mobile development security, so we’ve listed some of our favorite online resources here for your further reading:

One of the most important players in the mobile ecosystem is the mobile application developer. In this chapter, we looked at security in the mobile development lifecycle from various perspectives and outlined ways to design and build more secure apps.

In the first section, we briefly looked at mobile Threat Modeling and how developers can benefit from understanding security vulnerabilities during the application’s design phase, early in the development process. Some of the key takeaways from this section included the following:

• Understand the application’s assets. Assets answer the question: what are the most critical data and capabilities that the application must protect?

• Derive threats based on the assets and use cases/scenarios. Threats define who can attack the assets.

• Enumerate attack surfaces and potential attacks to answer how threats can attack those assets.

• Prioritize the resulting potential vulnerabilities by risk.

• Design the security controls required to protect the assets.

• Use the potential vulnerabilities to drive downstream behavior in the development process.

We also examined proactive security guidance for mobile app developers. Much of this guidance is driven by the Threat Model and the types of data and scenarios within which the mobile app is used. We also offered some tactical do’s and don’ts, as well as platform-specific guidance for iOS and Android.

We hope this chapter has been useful to those interested in developing more secure mobile apps!