Chapter 17

How Good Is My Design?

After looking at different ways of producing and describing design ideas in the preceding chapters of Part III, this chapter now considers some of the ways that we can reflect upon and assess the quality of a particular design.

So, why do we need to do this? At the very beginning of this book, in explaining why software development is an example of an ill-structured problem (ISP) and describing what this means, we identified two characteristics of ISPs that are particularly relevant to this chapter.

There is no ultimate test for a solution to an ISP, by which the designer or design team can determine that they have produced a design model that fully meets the customer's needs.

There is no stopping rule that enables the designer(s) to know that the current version of the design model is as good as it is going to get and that there is little point in refining it further.

These two characteristics are reflected in much of the material covered in the third part of this book. Whether considering plan-driven development, agile development, use of patterns or just plain opportunistic design activities, we have no means of knowing when our design activities should stop. And while we might cope with these characteristics by considering our goal to be one of satisficing, by aiming to produce a design that is ‘good enough’, there are no readily available measures that can tell us whether or not that has been achieved either.

This chapter therefore takes a brief look at three (related) issues. Firstly, we consider the question of what concepts and measures we might use to assess design quality. Secondly, we look at a technique that can be used to help make such an assessment. Thirdly, we briefly describe what we might then do if it is obvious that changes are needed.

It is also worth putting the material of this chapter into perspective with regard to the material covered earlier in Chapter 5. There the aspect of concern was more one of assessing what we might term the ‘human and social’ aspects of software design—that is, examining the ways that people design things, and the manner in which end-users deploy the products. This chapter is more concerned with the design models that form the outcomes of designing, and the quality measures that can be employed to assess their form and structure. Hence it has a more ‘technology-centric’ focus.

17.1 Quality assessment

The concept of quality is of course something of a subjective one. If a group of friends have enjoyed eating a curry together, they might still come out with quite different opinions of its quality. One who only occasionally eats Asian food might consider it to have been very good; another who greatly likes Asian food might think it was ‘good but a bit bland’; while a third who doesn't really like spicy dishes may feel it wasn't to their taste. And of course, assuming that they are not actually eating this in Asia, yet another, who has extensive knowledge of Asian cookery, might consider it to have been rather below the standard to be expected in Asia. And at the same time, as all of the group will have eaten everything that was put before them, the curry can be considered to have satisficed their needs.

So concepts of quality can have a strong contextual aspect, and also be difficult to quantify (as in the example of the curry).

For software design too, the issue of quality can be difficult to quantify. One approach is to employ some quantitative measures (such as those used for object-oriented design that were described in Chapter 10) and then to interpret these within the context and needs of a particular software development project.

So, if we are to do that, our first question is: what characteristics reflect ‘good’ quality in a design model? In Chapter 4 we identified some design principles that are generally considered to characterise a good design.

Fitness for purpose. This comes as close as we can hope to providing the ultimate test for an ISP, but of course, is not something that we can easily quantify or indeed, assess systematically.

Separation of concerns. This can be considered as providing an assessment of the ‘modular’ organisation of a design.

Minimum coupling. For any application, the different elements need to interact in order to perform the necessary tasks, and coupling determines how they interact (statically and dynamically).

Maximum cohesion. Like coupling, the idea of cohesion reflects our expectation that the compartmentalisation of the system elements provides elements that are relatively self-contained.

Information hiding. Relates to knowledge used by the application and how this is shared between the elements, such that each element can only access the knowledge that is relevant to its role.

While these are important software design principles, they very much embody ideas about how a design model should be organised, strongly influenced by the idea that a design is likely to evolve and to be adapted.

A rather different perspective, and one that is equally important, is to think about the role and functioning of an application. The group of quality factors that we associate with this are often referred to as the ilities (because most of them end with ‘ility’), and we briefly consider some of these below.


17.1.1 The ‘ilities’

There are many ilities, with the emphasis placed upon particular factors being dependent upon the purpose of the application being developed. Here, the discussion is confined to a group that can be considered to be fairly widely applicable: reliability; efficiency; maintainability; and usability. Other, rather more specialised ones include: testability; portability; and reusability.

Reliability. This factor is essentially concerned with the dynamic characteristics of the eventual application, and so involves making predictions about behavioural issues. From a design perspective, assessing this involves determining whether the eventual application will be:

complete, in the sense of being able to react correctly to all combinations of events and system states;

consistent, in that its behaviour will be as expected and repeatable, regardless of how busy the application might be;

robust when faced with component failure or similar conflicts (this is usually referred to as graceful degradation). For the example of the CCC, this might require that it copes with a situation where the customer is unable to unlock the selected car, despite having the correct code.

This factor is a key one for safety-critical systems, which may use replicated hardware and software to minimise the risk of component failure, but it is also important for those applications that have an element of user interaction.


Efficiency. This can be measured through an application's use of resources, particularly where these need to be shared. Depending upon context, the relevant resources may include processor time, storage, network use etc., and this multi-variate aspect does make it difficult to assess.

There are also trade-offs involved between these. As an example, economising on memory use might result in an increase in processor load. Where resources are constrained it can be important to try to make some assessment of the likely effects of particular design choices, although fine-tuning may be better left to the implementation phase.


Maintainability. The lifetime of a software artifact, whether it be a component, an application or a platform, will probably be quite long. Planning for possible change is therefore something that should influence design decisions.

This factor strongly reflects the characteristic of separation of concerns. The problem is to determine what the likely evolutionary pathways may be. It may be important to be clear about the assumptions that an application makes about its context and use, and to query these in the light of different possible scenarios of evolution.


Usability. There are many things that can influence the usability of an artifact (Norman 2002). However, for software, the user-interaction, or HCI (Human Computer Interaction) elements tend to predominate and may well influence other design decisions too. The set of measures provided by the cognitive dimensions framework is widely considered as being a useful way of thinking about usability (Green & Petre 1996), and the concept of interaction design has helped focus attention on the nature of software use (Sharp et al. 2019).

The cognitive dimensions framework can be considered as providing a set of qualitative measures that can be used to assess a design (not just from the HCI aspect either). We now look at ways in which we might use quantitative measures, and the forms that they might take.

17.1.2 Design metrics

Unfortunately, neither the general design characteristics nor the ‘ilities’ can be measured directly, which is where the use of quantitative software metrics may assist by providing some surrogate measures. (Of course, we can use qualitative metrics too, particularly for assessing such factors as usability, but deriving values for these is apt to be quite a time-consuming task.)

We encountered some examples of metrics in Chapter 10 and this may be a useful place to examine their role and form a bit more fully. Measurement science differentiates between an attribute, which we associate with some element (in this case, of design), and a metric, which will have a value in the form of a specific number and an associated unit of measurement. And for a given metric, as we saw in Chapter 10, we need to have some form of counting rules that are applied in order to derive its value. Indeed, Fenton & Bieman (2014) have observed that “measurement is concerned with capturing information about attributes of entities”. So, our next need is to determine how ideas about quality can use metrics and measurements.

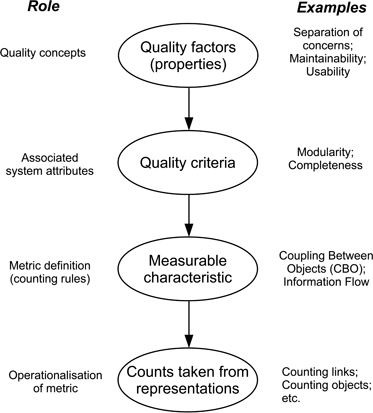

Figure 17.1 shows a simple view of the mapping that links quality concepts through to actual counts.

Figure 17.1: Linking quality concept to measurements

Unfortunately, none of the mappings between the elements of this are particularly easy to define. The desirable design characteristics are quite abstract, as are the attributes. Finding measures for an invisible and complex medium such as software can be quite challenging, and so each of the things that we can actually measure usually comes down to counting tokens. For code metrics, the tokens are usually syntactic elements; for design metrics, they tend to be diagrammatical elements. So what we can count is not always what we would like to be able to count! And of course, we are limited to counts of static relationships, although we know that software has quite important dynamic properties.

So, counting the number of objects, components, sub-programs and the coupling links between these is probably the best we can do when looking at a design model. (We saw this in Chapter 10 when reviewing the Chidamber and Kemerer metrics.) Having relatively ‘formal’ models that are documented using (say) UML notations may help, but quite a lot can be done just by looking at sketches too. And there are other things that are related to the design model that can also be measured, such as the number of times the design of a particular sub-system of an application has been modified.
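As a rough illustration of counting the elements and coupling links in a design model, the sketch below derives simple fan-out and fan-in counts for each element. The element names, the list of links and the counting rule (each distinct directed link counts once, self-references are ignored) are all assumptions made for this example; they are not taken from any particular metric suite.

```python
from collections import defaultdict

# Hypothetical design model: each pair (a, b) means "element a uses element b".
# The element names are invented purely for illustration.
DESIGN_LINKS = [
    ("Booking", "Customer"),
    ("Booking", "Car"),
    ("Booking", "Payment"),
    ("Payment", "Customer"),
    ("Car", "Location"),
]


def coupling_counts(links):
    """Return {element: (fan_out, fan_in)} for a list of directed links.

    Counting rule (an assumption): each distinct directed link counts once,
    and links from an element to itself are ignored.
    """
    fan_out = defaultdict(set)
    fan_in = defaultdict(set)
    for source, target in links:
        if source == target:
            continue
        fan_out[source].add(target)
        fan_in[target].add(source)
    elements = set(fan_out) | set(fan_in)
    return {e: (len(fan_out[e]), len(fan_in[e])) for e in sorted(elements)}


if __name__ == "__main__":
    for element, (out_links, in_links) in coupling_counts(DESIGN_LINKS).items():
        print(f"{element:10s} fan-out={out_links} fan-in={in_links}")
```

The same counts could equally be made by hand from a sketch or a UML class diagram; what matters is that the counting rule is stated, so that values from different versions of the design can be compared.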

Before discussing what we might do with such knowledge, it is worth noting that there are two types of metric that are both commonly used in software engineering (Hughes 2000).

Actionable metrics are those that relate to things we can control. We can react to the values obtained from using such a metric by making changes to our design. If we are counting the arguments of methods as part of a metric, we can make changes if we find a particular method seems to have too many arguments for its purpose. At a more abstract level, we can make changes if we find that a particular class has particularly high counts for coupling measures (when compared with the other classes in the design model).

Informational metrics tell us about things that can be measured and that are important to us, but that we cannot influence directly. One example of this is ‘module churn’, which occurs where the number of revisions to the design (or code) of each module is counted over a period of time, and we observe that a particular element may have been modified many times. This churn is a consequence of design activities, but usually we wouldn't want to use it to control them!

Many design metrics fall into the category of actionable metrics, since after all, they usually relate to an application that has yet to be built.
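The distinction between the two types of metric can be sketched as follows. The first pair of functions counts method arguments, which is actionable because we can redesign a method that is flagged; the last function counts revisions per module, which is informational. The `Booking` class, the revision log and the limit of four arguments are hypothetical values chosen only for illustration.

```python
import inspect
from collections import Counter


def argument_count(func):
    """Count the declared parameters of a function, ignoring 'self' and 'cls'."""
    params = inspect.signature(func).parameters
    return sum(1 for name in params if name not in ("self", "cls"))


def methods_over_limit(cls, limit):
    """Actionable: return {method name: count} for methods with more than `limit` arguments."""
    flagged = {}
    for name, member in inspect.getmembers(cls, inspect.isfunction):
        count = argument_count(member)
        if count > limit:
            flagged[name] = count
    return flagged


def module_churn(revision_log):
    """Informational: count recorded revisions per module from (module, date) pairs."""
    return Counter(module for module, _date in revision_log)


# Hypothetical class and revision log, invented for this example.
class Booking:
    def reserve(self, customer, car, start, end, location, discount_code):
        pass

    def cancel(self, booking_id):
        pass


REVISIONS = [
    ("booking", "2024-01-10"),
    ("payment", "2024-01-12"),
    ("booking", "2024-02-01"),
    ("booking", "2024-02-03"),
]

if __name__ == "__main__":
    print(methods_over_limit(Booking, limit=4))
    print(module_churn(REVISIONS).most_common())
```

A flagged method invites a design change, such as grouping related arguments into a single element. A high churn count only tells us where to look; it does not in itself say what should be changed.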

And as a last point about metrics, we should note that complexity is not an attribute in its own right. It is incorrect to refer to the ‘complexity of object D’, because there are many measures that might be applied to that object. Rather, complexity can be viewed as a threshold value for a particular measure—so that we consider anything above that value to be complex. As an example, we might argue that an object with more than (say) 12 external methods will potentially be ‘complex’ to use, because the developers may need to keep checking which methods are the ones they need.

And even then there are further pitfalls with the concept of complexity. Such a threshold value is not absolute; it may differ between applications and designs. And sometimes, a particular object will have a value that is above our chosen threshold value because of its role. The need to interpret the values of any metrics, and to decide whether or not they are appropriate, then leads us on to our next topic.
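Treating complexity as a threshold on a particular measure, rather than as an attribute in its own right, can be sketched in a few lines. The default of 12 external methods follows the example used above; the counting rule (a method is ‘external’ if its name does not begin with an underscore), the `exempt` parameter and the two example classes are assumptions made for this sketch.

```python
import inspect


def external_method_count(cls):
    """Count the methods of cls whose names do not start with an underscore."""
    return sum(
        1
        for name, _member in inspect.getmembers(cls, inspect.isfunction)
        if not name.startswith("_")
    )


def is_complex(cls, threshold=12, exempt=()):
    """Return True if cls exceeds the agreed threshold and is not exempt by role.

    Both `threshold` and `exempt` are project-specific choices, not absolutes.
    """
    if cls.__name__ in exempt:
        return False
    return external_method_count(cls) > threshold


# Hypothetical classes: Facade is built with 15 trivial external methods.
Facade = type("Facade", (), {f"op{i}": (lambda self: None) for i in range(15)})


class Small:
    def run(self):
        pass

    def _helper(self):
        pass


if __name__ == "__main__":
    print(is_complex(Facade))                      # above the default threshold
    print(is_complex(Facade, exempt=("Facade",)))  # excused because of its role
    print(is_complex(Small))
```

Passing a different `threshold`, or naming a class in `exempt`, changes the verdict without changing the class, which is why such values need to be interpreted in context.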

17.2 Reviews and walkthroughs

A technique that has proved itself useful in assessing design structure and likely behaviour is the design review or walkthrough, also sometimes termed an inspection although that term is more commonly used when assessing code rather than designs. The use of reviews dates from very early in the evolution of software engineering (Fagan 1976), and a set of basic rules for conducting design reviews has been assembled from experience (Yourdon & Constantine 1979, Weinberg & Freedman 1987, Parnas & Weiss 1987).

There are actually two forms of review that are in common use. The technical review is concerned with assessing the quality of a design, and is the form of interest to us in this section. The management review addresses such issues as project deadlines and schedule. Indeed, one of the challenges of review-driven agile approaches such as Scrum is keeping these issues distinct (and of course, they are not independent, which also needs to be recognised).

Technical reviews can include the use of forms of ‘mental execution’ of the design model with the aid of use cases and scenarios and so can help with assessing dynamic attributes as well as static ones. The sources cited above provide some valuable guidelines on how such reviews need to be conducted so as to meet their aims and not become diverted to issues that are more properly the domain of the management review. (One reason why these guidelines remain relevant is that they are really ‘social’ processes, and hence their effectiveness is uninfluenced by such factors as architectural style.) And it is important that the review does not become an assessment of the design team, rather than of the design itself.

Even with the input of metric values, a design review cannot provide any well-quantified measures of ‘quality’. However, the review is where such concepts as complexity thresholds may be agreed (since there are no absolutes). With the aid of these, the review can help to identify weaknesses in a design, or aspects that might potentially form weaknesses as details are elaborated or when evolutionary changes occur in the future. It therefore provides a means of assessing potential technical debt. Of course we can rarely avoid having some technical debts; what matters is how much we have and the form it takes. In particular, if carefully planned and organised (and recorded), a review brings together those people who have both the domain knowledge and the technical knowledge to be able to make realistic projections from the available information about the design model.

Parnas & Weiss (1987) have suggested that there are eight requirements that a ‘good’ design should meet.

Well structured: being consistent with principles such as information-hiding and separation of concerns.

Simple: to the extent of being ‘as simple as possible, but no simpler’.

Efficient: providing functions that can be computed using the available resources.

Adequate: sufficient to meet the stated requirements.

Flexible: being able to accommodate likely changes in the requirements, however these are likely to arise.

Practical: with module interfaces providing the required functionality, neither more nor less.

Implementable: with current (and chosen) software and hardware technologies.

Standardised: using well-defined and familiar notation for any documentation.

The last point is particularly relevant given that as we have observed, designers often employ informal notations. However, the key issue is that any notations used should at least be readily explained and interpreted. A set of hand-drawn sequence diagrams may be sufficient to explore scenarios without the need to follow exact UML syntax.

A systematic review looking at the use of metrics by industry during agile software development by Kupiainen, Mäntylä & Itkonen (2015) made some observations of how such reviews could use metrics. In particular the review reinforced the view that the targets of measurement should be the product and the process, but not the people. They also observed the following.

Documentation produced by a project should not be the object of measurement, and the focus of attention should be the product and its features.

Using metrics can be a positive motivator for a team, and can change the way that people behave by determining the set of issues that they pay attention to.

For agile development, teams used metrics in a similar way to how they were employed with plan-driven projects, using them to support sprint and project planning, sprint and progress tracking, understanding and improving quality, and fixing problems with software processes.

An important ground-rule for a design review is that it should concentrate on reviewing the design and not attempt to fix any issues that are identified. In the next section we therefore look at what we might do next, following a review.

17.3 Refactoring of designs

The idea of refactoring was introduced in Section 14.7, and as explained in Section 15.4, the need to perform refactoring is often motivated by the presence of code smells (Fowler 1999). The discussion in both of these sections was focused largely upon code, rather than design, as this is often where the relevant structural weaknesses are identified. However, many of the code smells originate in design weaknesses and there is scope to identify their likely presence during a design review. Of course, this isn't always the case, and the idea of code duplication is an obvious example of a problem that is less likely to be identified during design.

Refactoring can occur before that though, based upon the design model, and is one way of addressing the issues identified in a design review. Clearly, some issues such as those associated with duplication are unlikely to be flushed out by a review. However, the need for redistribution of functionality across design elements (objects, components) and reorganisation of communication models are possible outcomes, along with the possible creation of new design elements.

What this points to is the need to document and record the outcomes of a review with care. One possible option here is to use video-recording, with the agreement of all participants, so that issues do not get lost and any whiteboard-based discussions are captured. And of course, after a post-review refactoring of the design (if such changes are necessary), it may be useful to repeat the review, or part of it, using the same review team.

17.4 Empirical knowledge about quality assessment

Empirical studies that are relevant to this chapter are chiefly those looking at the use of metrics in assessing quality as well as those examining the effectiveness of code reviews and ways of improving their efficiency. (Design reviews do not appear to have been very extensively studied, perhaps because of the difficulty of finding enough experienced participants.) Earlier we mentioned the systematic review by Kupiainen et al. (2015) and its findings. Here we identify a small number of further studies that use different approaches for the purpose of evaluation.

Software metrics can be used inappropriately as well as being a useful tool, and before adopting any metrics it may well be worth reading the book by Fenton & Bieman (2014) and the invited review paper by Kitchenham (2010). Another interesting study is that by Jabangwe, Borstler, Smite & Wohlin (2015) which looks at the link between object-oriented metrics and quality attributes. Reinforcing our observations about OO metrics in Chapter 10, this review did note that measures for complexity, cohesion, coupling and size corresponded better with reliability and maintainability than the inheritance measures.

The study reported in Bosu, Greiler & Bird (2015) is based on interviews with staff at Microsoft and provides some useful recommendations for improving the practice of reviews. The effect of using light-weight tool-based review practices is reviewed in McIntosh, Kamei, Adams & Hassan (2016) using three large open-source repositories in a case study design. Again, the findings of this study reinforce the value of well-conducted reviews.

Key take-home points about assessing design quality

Assessing design quality poses some challenges, not least because our interpretation of quality may be influenced by such factors as domain, technologies etc. However, there are some useful general points worth making here.

Quality measures. A design will have many different quality attributes, usually related to the ilities, and with the emphasis placed on each attribute being largely dependent upon the nature of the application. Metrics that directly measure features related to these attributes are generally impractical, and it may be necessary for a team to decide on their own counting rules when making measurements related to a design model.

Design reviews. The use of formal or informal design reviews provides an opportunity to make project-specific interpretation of any metric values obtained, as well as to assess the general structure of a design model. It is important that the outcomes from a review are carefully recorded.

Design refactoring. This can be performed as part of the outcome from a review, but there is little guidance available about how to perform refactoring at the design level.