This chapter provides more technical information about digital video recording in production and postproduction. For the basics of video formats, cameras, and editing, see Chapters 1, 2, 3, and 14.

FORMING THE VIDEO IMAGE

THE DIGITAL VIDEO CAMERA’S RESPONSE TO LIGHT

You’ll find simplified instructions for setting the exposure of a video camera on p. 107. Let’s look more closely at how the camera responds to light so you’ll have a better understanding of exposure and how to achieve the look you want from your images.

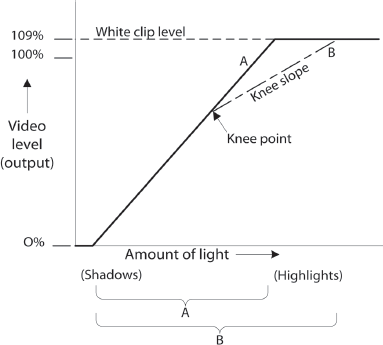

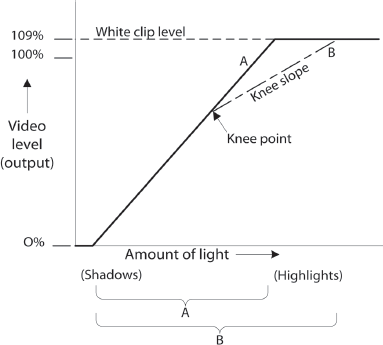

The camera’s sensor converts an image made of light into an electrical signal (see p. 5). Generally speaking, the more light that strikes the sensor, the higher the level of the signal. To look more closely at the relationship between light and the resulting video level, we can draw a simplified graph like the one in Fig. 5-1. The amount of light striking the sensor increases as we move from left to right.1 The vertical axis shows the resulting video signal level.

This relationship between light input and video signal output is called a sensor’s transfer characteristic. It resembles a characteristic curve for film (see Fig. 7-3, with the exception that it is a straight line: both CCD and CMOS sensors produce signals directly proportional to the light falling on them.

Look at the line marked “A.” Note that below a certain amount of light (the far left side of the graph), the system doesn’t respond at all. These are dark shadow areas; the light here is so dim that the photons (light energy) that strike the sensor from these areas simply disappear into the noise of the sensor. Then, as the amount of light increases, there is a corresponding increase in the video level. Above a certain amount of exposure, the system again stops responding. This is the white clip level. You can keep adding light, but the video level won’t get any higher. Video signals have a fixed upper limit, which is restricted by legacy broadcast standards, despite the fact that sensors today can deliver significantly more stops of highlight detail than in the past.

When the exposure for any part of the scene is too low, that area in the image will be undifferentiated black shadows. And anything higher than the white clip will be flat and washed-out white. For objects in the scene to be rendered with some detail, they need to be exposed between the two.

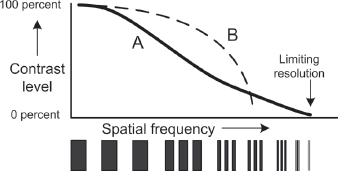

Fig. 5-1. The video sensor’s response to light. The horizontal axis represents increasing exposure (light) from the scene. The vertical axis is the level of the resulting video signal that the camera produces. Line A shows that as the light increases, so does the video level—until the white clip is reached, at which point increases in light produce no further increase in video level (the line becomes horizontal). With some cameras, a knee point can be introduced that creates a less steep knee slope to the right of that point (Line B). Note how this extends the camera’s ability to capture bright highlights compared to A. This graph is deliberately simplified. (Steven Ascher)

The limits of the video signal help explain why the world looks very different to the naked eye than it does through a video camera. Your eye is more sensitive to low light levels than most video cameras are—with a little time to adjust, you can see detail outdoors at night or in other situations that may be too dark for a camera. Also, your eye can accommodate an enormous range of brightness. For example, you can stand inside a house and, in a single glance, see detail in the relatively dark interior and the relatively bright exterior. By some estimates, if you include that our pupils open and close for changing light, our eyes can see a range of about twenty-four f-stops.

Digital video and film are both much more limited in the range of usable detail they can capture from bright to dark (called the exposure range or dynamic range). When shooting, you may have to choose between showing good detail in the dark areas or showing detail in the bright areas, but not both at the same time (see Fig. 7-16). Kodak estimates that many of its color negative film stocks can capture about a ten-stop range of brightness (a contrast ratio of about 1,000:1 between the brightest and darkest value), although color negative’s S-shaped characteristic curve can accommodate several additional stops of information at either end of the curve.2 Historically, analog video cameras were able to handle a much more limited range, sometimes as low as about five stops (40:1), but new high-end digital cameras capture an exposure range of around ten stops, and the latest digital cinematography cameras like the ARRI Alexa and Sony F65 claim fourteen stops. RED says its Epic camera can capture eighteen stops when using its high dynamic range function.

The image in Fig. 7-17 was shot with film; the middle shot shows a “compromise” exposure that balances the bright exterior and dark interior. With typical video cameras it is often harder to find a compromise that can capture both; instead you may need to expose for one or the other (more on this below).

To truly evaluate exposure range we need to look at the film or video system as a whole, which includes the camera, the recording format, and the monitor or projection system—all of which play a part. For example, a digital camera’s sensor may be capable of capturing a greater range than can be recorded on tape or in a digital file, and what is recorded may have a greater range than can be displayed by a particular monitor.

Measuring Digital Video Levels

We’ve seen that the digital video camera records a range of video levels from darkest black to brightest white. This range is determined in part by what is considered “legal” by broadcast standards. We can think of the darkest legal black as 0 percent digital video level and the brightest legal white as 100 percent (sometimes called full white or peak white).

The actual range a digital video camera is capable of capturing always goes beyond what is broadcast legal. On most cameras today, the point at which bright whites are clipped off is 109 percent. The range above 100 is called super white or illegal white and can be useful for recording bright values as long as they’re brought down before the finished video is broadcast. If you’re not broadcasting—say, you’re creating a short for YouTube or doing a film-out—you don’t need to bring the super white levels down at all.

Professional video cameras have a viewfinder display called a zebra indicator (or just “zebra” or “zebras”) that superimposes a striped pattern on the picture wherever the video signal exceeds a preset level (see Fig. 3-3). A zebra set to 100 percent will show you where video levels are close to or above maximum and may be clipped. Some people like to set the zebra lower (at 85 to 90 percent) to give some warning before highlights reach 100 percent. If you use the zebra on a camera that’s not your own, always check what level it’s set for.3

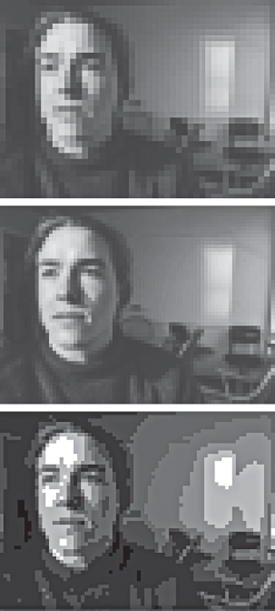

Fig. 5-2. Histograms. The normally exposed shot (top) produces a histogram with pixels distributed from dark tones to light. In this case, the distribution is a “mountain in the middle,” with the most pixels in the midtones, and relatively few in the darkest shadows or brightest highlights. The underexposed shot (bottom left) creates a histogram with the pixels piled up against the left side, showing that blacks are being crushed and shadow detail is lost. The overexposed shot (bottom right) shows pixels concentrated in the bright tones on the right, with details lost due to clipped highlights. (Steven Ascher)

Many newer digital cameras and all DSLRs use a histogram to display the distribution of brightness levels in the image (see Fig. 5-2). A histogram is a dynamically changing bar graph that displays video levels from 0 to 100 percent along the horizontal axis and a pixel count along the vertical axis. In a dark scene, the histogram will show a cluster of tall bars toward the left, which represents a high number of dark pixels in the image. A bright highlight will cause a tall bar to appear on the right side. By opening and closing the iris, the distribution of pixels will shift right or left. For typical scenes, some people use the “mountain in the middle” approach, which keeps the majority of the pixels safely in the middle and away from the sides where they may be clipped.

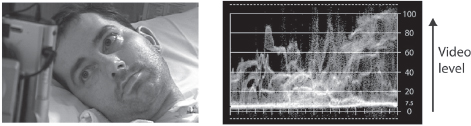

Fig. 5-3. A waveform monitor displays video levels in the image. In this case, you can see that the brightest parts of the bed sheet on the right side of the frame are exceeding 100 percent video level. (Steven Ascher)

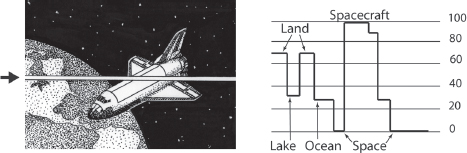

A waveform monitor gives a more complete picture of video levels (see Fig. 5-3). On waveform monitors used for digital video formats, 0 percent represents absolute black and, at the top of the scale, 100 percent represents peak legal white level. (For analog video, waveform monitors were marked in IRE—from Institute of Radio Engineers—units of measurement. Absolute black was 0 IRE units and peak white was 100 IRE units. The units of percentage used for today’s digital video signal directly parallel the old system of IRE units.)

Waveform monitors are commonly used in postproduction to ensure that video levels are legal. But a waveform monitor is also a valuable tool on any shoot. With a waveform monitor, unlike a histogram, if there is a shiny nose or forehead creating highlights above 100 percent signal level, you’ll notice it easily—and know what’s causing it (when a subject in a close-up moves from left to right, you can see the signal levels shift left to right on the waveform monitor). It’s like having a light meter that can read every point in a scene simultaneously. Some picture monitors and camera viewfinders can display a waveform monitor on screen.

Fig. 5-4. By looking at the waveform display of a single horizontal line of pixels, you can more clearly see how luminance values in the image are represented in the waveform monitor. (Robert Brun)

USING A LIGHT METER. Some cinematographers like to use a handheld light meter when shooting digital, much as they would when shooting film. For any particular camera it will take some experimentation to find the best ISO setting for the meter (with digital video cameras that have ISO settings, you can’t assume those will match the meter’s ISO). Point the camera at a standard 18 percent gray card (see p. 307) and make sure the card fills most of the frame. Lock the shutter speed and the gain or ISO, and set the camera’s auto-iris so it sets the f-stop automatically (shutter priority in DSLRs). Note the f-stop of the lens. Now, with the light meter, take a reflected reading of the gray card (or an incident reading in the same light) and adjust the meter’s ISO until it has the same f-stop. If you have a waveform monitor, and are using a manual iris, set the iris so the gray card reads about 50 percent video level.

The fact is, in a digital video camera, no aspect of the digital video signal goes unprocessed (with such adjustments as gamma, black stretch, and so on) so there isn’t an easily measured sensitivity, as there is for film or digital cinema cameras capturing RAW files directly from the sensor. For this reason, experienced video camera operators rarely use light meters to set exposure. They may, however, use light meters to speed up lighting, particularly if they know the light levels best suited to a particular scene and how to adjust key-to-fill ratios with a light meter alone.

Setting Exposure—Revisited

See Setting the Exposure on p. 107 before reading this. Setting exposure by eye—that is, by the way the picture looks in the viewfinder or monitor—is the primary way many videographers operate. But to be able to trust what you’re seeing, you need a good monitor, properly set up, and there shouldn’t be too much ambient light falling on the screen (see Appendix A).

By using the camera’s zebra indicator, a histogram, or a waveform monitor (described above) you can get more precise information to help you set the level.

The goal is to adjust the iris so the picture is as pleasing as possible, with good detail in the most important parts of the frame. If you close the iris too much, the picture will look too dark and blacks will be crushed and show no detail. If you open the iris too much, the highlights will be compressed and the brightest parts of the scene will be blown out (see Fig. 5-2). As noted above, there is a white clip circuit that prevents the signal from going above about 109 percent on many cameras.4 Say you were shooting a landscape, and exposing the sky around 100 percent. If a bright cloud, which would otherwise read at 140 percent, came by, the white clip will cut off (clip) the brightness level of the cloud somewhere between 100 and 109 percent, making it nearly indistinguishable from the sky. In Fig. 5-8A, the edge of the white chair is being clipped.

In most video productions, you have an opportunity to try to correct for picture exposure in postproduction when doing color correction. Given the choice, it’s better to underexpose slightly than overexpose when shooting since it’s easier in post to brighten and get more detail out of underexposed video than to try to reclaim images that were overexposed on the shoot. Once details are blown out, you can’t recover them.

One method for setting exposure is to use the zebra indicator to protect against overexposing. If you have the zebra set at 100 percent, you’ll know that any areas where the zebra stripes appear are being clipped or are right on the edge. You might open the iris until the zebra stripes appear in the brightest areas and then close it slightly until the stripes just disappear. In this way, you are basing the exposure on the highlights (ensuring that they’re not overexposed) and letting the exposure of other parts of the frame fall where it may. If you’re shooting a close-up of a face, as a general rule no part of the face should read above 100 percent (or even close) or else the skin in those areas will appear washed out in a harsh, unflattering way.

When using a camera with a histogram, you can do a similar thing by opening the iris until the pixels pile over to the right side of the display, then close it until they are better centered or at least so there isn’t a large spike of pixels at the right-hand edge (see Fig. 5-2).

Even so, sometimes to get proper exposure on an important part of the scene you must allow highlights elsewhere in the frame to be clipped. If you’re exposing properly for a face, the window in the background may be “hot.” The zebra stripes warn you where you’re losing detail. In this situation you may be able to “cheat” the facial tones a little darker, or you may need to add light (a lot of it) or shade the window (see Fig. 7-17). Or, if seeing into shadow areas is important, you may want to ensure they’re not too dark (because they may look noisy) and let other parts of the frame overexpose somewhat.

It’s an old cliché of “video lighting” that it’s necessary to expose flesh tones consistently from shot to shot, for example at 65–70 percent on a waveform monitor. This approach is outmoded (if not racist). First, skin tone varies a lot from person to person, from pale white to dark black. If you use auto-iris on close-ups of people’s faces, it will tend to expose everyone the same. However, “average” white skin—which is a stop brighter than the 18 percent gray card that auto-iris circuits aim for—may end up looking too dark, and black skin may end up too light. (See Understanding the Reflected Reading, p. 292.) But, even more important, exposure should serve the needs of dramatic or graphic expression. The reality is that people move through scenes, in and out of lighting, and the exposure of skin tones changes as they do. In a nighttime scene, for instance, having faces exposed barely above shadow level may be exactly the look you want (See Fig. 5-10). For sit-down interviews with light-skinned people, a video level of 50–55 percent on a waveform monitor is usually a safe bet. Momentary use of auto-iris is always a good way to spot-check what the camera thinks the best average exposure should be, but don’t neglect to use your eyes and creative common sense too.

As a rule of thumb when using a standard video gamma alone (see below for more on gamma), changing the exposure of a scene by a stop will cause the digital video level of a midtone to rise or fall by about 20 percent. If a digital video signal is defined by a range of 0 to 100 percent, does this imply that the latitude of broadcast video is five stops? It would seem so, but through the use of special gammas, digital video cameras can actually pack many more stops of scene detail into the fixed container that is the video signal. With today’s digital video cameras, you have around ten stops of dynamic range. Use ’em.

For inspiration regarding the creative limits to which digital video exposure—particularly HD—can be pushed these days, watch the newest dramatic series on network or cable television for the latest trends in lighting. You may be in for some surprises.

UNDERSTANDING AND CONTROLLING CONTRAST

As we’ve seen, the world is naturally a very contrasty place—often too contrasty to be captured fully in a single video exposure. For moviemakers, contrast is a key concern, and it comes into play in two main areas:

Fig. 5-5. Thinking about contrast. (A) This image was captured with enough latitude or dynamic range to bring out details in the shadow areas (under the roadway) and in the highlights like the water. (B) This image has compressed shadow areas (crushed blacks), which can happen when you set the exposure for the highlights and your camera has insufficient dynamic range to reach into the shadows. (C) This shot has increased overall contrast; shadows are crushed and the highlights are compressed (note that details in the water are blown out). Notice also the greater separation of midtones (the two types of paving stones in the sidewalk look more similar in B and more different in C). Though increasing the contrast may result in loss of detail in dark and/or light areas, it can also make images look bolder or sharper. (D) If we display image C without a bright white or dark black, it will seem murky and flat despite its high original contrast. Thus the overall feeling of contrast depends both on how the image is captured and how it is displayed. (Steven Ascher)

Contrast is important because it’s both about information (are the details visible?) and emotion (high-contrast images have a very different feel and mood than low-contrast images). Contrast can be thought of as the separation of tones (lights and darks) in an image. Tonal values range from the dark shadow areas to the bright highlights, with the midtones in the middle. The greater the contrast, the greater the separation between the tones.

Low-contrast images—images without good tonal separation—are called flat or soft (soft is also used to mean “not sharp” and often low-contrast images look unsharp as well, even if they’re in focus). Low-contrast images are sometimes described as “mellow.” High-contrast images are called contrasty or hard. An image with good contrast range is sometimes called snappy.

Contrast is determined partly by the scene itself and partly by how the camera records the image and how you compose your shots. For example, if you compose your shots so that everything in the frame is within a narrow tonal scale, the image can sometimes look murky or flat. When shooting a dark night shot, say, it can help to have something bright in the picture (a streetlight, a streak of moonlight) to provide the eye with a range of brightness that, in this case, can actually make the darks look darker (see Fig. 5-10).

Let’s look at some of the factors that affect contrast and how you can work with them.

WHAT IS GAMMA?

Gamma in Film and Analog Video

In photography and motion picture film, gamma () is a number that expresses the contrast of a recorded image as compared to the actual scene. A photographic image that perfectly matches its original scene in contrast is said to have 1:1 contrast or a “unity” gamma equal to 1.

A film negative’s gamma is the slope of the straight line section of its characteristic curve (see Fig. 7-3). The steeper the characteristic curve, the greater the increase in density with each successive unit of exposure, and the greater the resulting image contrast. Actually, a negative and the print made from that negative each has its own separate gamma value, which when multiplied together yield the gamma of the final image. The average gamma for motion picture color negatives is 0.55 (which is why they’re so flat looking), while the gamma for print film is far higher, closer to four.6 When these two gamma values are multiplied (for example, 0.55 × 3.8 = 2), the result is an image projected on the screen with a contrast twice that of nature. We perceive this enhanced contrast as looking normal, however, because viewing conditions in a dark theater are anything but normal, and in the dark our visual system requires additional contrast for the sensation of normal contrast.

In analog video, the term “gamma” has a different meaning. This has caused endless confusion among those who shoot both film and video, which continues in today’s digital era.

TV was designed to be watched in living rooms, not in dark theaters, and therefore there was no need to create any unnatural contrast in the final image. Video images are meant to reproduce a 1:1 contrast ratio compared to the real world. But the cathode ray tubes (CRTs; see Fig. 5-6) used for decades in TVs were incapable of linear image reproduction (in which output brightness is a straight line that’s directly proportional to input signal level). Instead, a gamma correction was needed so that shadow detail wouldn’t appear too dark and bright areas wouldn’t wash out (see Fig. 5-7).7

In analog video, “gamma” is shorthand for this gamma correction needed to offset the distortions of an analog CRT display. When a gamma-corrected signal from an analog video camera is displayed on a CRT, the resulting image has a gamma of 1 and looks normal.

Fig. 5-6. For decades analog CRTs were the only kind of TV. Now no one makes them, though many are still in use. CRT monitors are recognizable because they’re big and boxy (definitely not flat panel). (Sony Electronics, Inc.)

Digital Video Gamma

CRTs are a thing of the past. The video images you shoot will be viewed on plasma, LCD, LCOS, OLED, or laser displays or projectors that are not affected by the nonlinearity of CRT vacuum tubes. So why do digital video cameras still need gamma correction?

In theory we could create a digital camcorder and TV each with a gamma of 1. In fact, as shown in Fig. 5-1, digital video sensors natively produce a straight line response, and digital TVs and displays are capable of reproducing the image in a linear way, with output directly proportional to input. The problem is that this equipment would be incompatible with millions of existing televisions and cameras. So, new cameras and displays are stuck with gamma correction—let’s return to the shorthand “gamma”—as a legacy of the analog era. However, in today’s professional digital video cameras, gamma curves can be used as a creative tool to capture a greater range of scene brightness than was possible in analog.

Fig. 5-7. Gamma correction. CRT monitors have a response curve that bows downward (darkening shadows and midtones), so cameras were designed to compensate by applying a gamma correction that bows upward. When we combine the camera’s gamma-corrected video signal with the monitor’s gamma, we get a straight line (linear) response, which appears to the TV viewer as normal contrast.

Altering the gamma has a noticeable effect on which parts of the scene show detail and on the overall mood and feel of the picture. A high gamma setting can create an image that looks crisp and harsh by compressing the highlights (crushing the detail in the bright areas), stretching the blacks, and rendering colors that appear more saturated and intense (see Fig. 5-8A). A low gamma setting can create a picture that looks flat and muted, allowing you to see more gradations and detail in the bright areas that would otherwise overexpose, while compressing shadow detail and desaturating colors (see Fig. 5-8D).

Some people choose to use various gamma settings on location, while others prefer to alter the look of the image in post under more controlled conditions. If contrast can be fully adjusted in post, why bother with gamma correction in the camera at all? When you adjust gamma in a camera—or any picture parameter, such as color or sharpness—what is being adjusted is the full video signal in the camera’s DSP (digital signal processing) section prior to any compression. If you adjust the image after it’s been compressed and recorded with typical camera codecs, quality can suffer (which is why some people go to the trouble of using external recorders with little or no compression).

Gamma or contrast adjustments in post can achieve only so much. Whether you record compressed or uncompressed, if you didn’t capture adequate highlight detail in the first place by using an appropriate gamma (see the next section) you’re out of luck. There is no way in post to invent missing highlight detail.

GAMMA OPTIONS WHEN SHOOTING

Standard Gamma

All digital video cameras out of the box offer a default or “factory setting” gamma meant to make that camera look good. In some cameras this is called standard gamma. In professional cameras, a camera’s standard gamma will be the internationally standardized gamma for that video format. In high definition, the international standard for gamma is the ITU-R 709 video standard (also known as Rec. 709, CCIR 709, or just 709). Standard definition’s international standard is ITU-R 601. The 709 and 601 standards apply to both gamma (contrast) and the range of legal colors (the color gamut), and they look quite similar to each other in these respects.

Fig. 5-8. Picture profiles. Many cameras offer a variety of preset or user-adjustable settings for gamma and other types of image control. (A) Standard gamma. (B) By adding a knee point (here at 82 percent) highlight detail can be captured without affecting other tonalities—note increased detail in the bright edge of the chair. (C) Some cameras offer a profile that emulates the look of a film print stock, with darker shadows for added contrast (note crushed blacks and loss of detail in dark areas like the woman’s hair). You might use this setting if you like the look and aren’t planning to do color correction in post. However, if you shoot with a standard gamma like A, it’s very easy to achieve the look of C in post, and you don’t risk throwing away shadow detail that can’t be reclaimed later. (D) Some cameras can produce an extended dynamic range image that contains greater detail in highlights and shadows but looks too dark and flat for direct viewing. Different cameras may accomplish this kind of look using a cine gamma, or log or RAW capture. The flat image can be corrected to normal contrast in post, while retaining more detail in bright and dark areas than if it had been shot with standard gamma. All the images here are for illustration; your particular camera or settings may produce different results. (Steven Ascher)

ITU-R 709 and ITU-R 601 are designed to reproduce well without much correction in postproduction. They produce an overall bright, intense feel with relatively rich, saturated colors. For sports and news, this traditional video look makes for a vibrant image. At the same time, these are relatively high gammas that also result in a limited exposure range—extreme highlight detail is lost. These standardized gammas used alone don’t allow you to capture all the dynamic range your camera is capable of, or that a high-quality professional monitor or projector can display.

The curve marked “Standard” in Figure 5-9 is not precisely Rec. 709, but it shares a basic shape. Notice that it rises quickly in the shadows, providing good separation of tones (good detail) in the dark parts of the scene. However, it rises at such a steep slope that it reaches 109 percent quite quickly compared to the other curves; thus it captures a more limited range of brightness in the scene.

Standard gammas like Rec. 709, which are based on the characteristics of conventional TV displays, not only limit dynamic range, they also fall short of the wider color gamut found in today’s digital cinema projectors. To address this fact, some digital video cameras offer a gamma that incorporates DCI P3 (also called SMPTE 431-2), a new color standard established by Hollywood’s Digital Cinema Initiatives for commercial digital video projectors. As a camera gamma, DCI P3 combines the dynamic range of Rec. 709 with an expanded color gamut modeled after 35mm print film. The advantage of using DCI P3 gamma is that what you see in the field will closely resemble what you see on the big screen. Note that LCD monitors must be DCI P3 compliant to accurately view color when using this gamma.

Standard Gamma with Knee Correction

As we’ve just seen, using a standardized gamma produces a snappy, fairly contrasty image at the expense of highlight detail. When the camera’s sensitivity reaches maximum white, highlights are clipped. But there is a way to extend a camera’s dynamic range to improve the handling of highlight detail when using Rec. 709 or Rec. 601.

On a professional digital video camera, you can manually introduce a knee point to the sensor’s transfer characteristic (see Fig. 5-1). Normally, with no knee, the camera’s response curve is a relatively straight line that clips abruptly at 100 or 109 percent. However, using menu settings, if you add a knee point at, say, 85 percent, the straight line can be made to bend at that point, sloping more gently and intersecting the white clip level further to the right along the horizontal axis, which corresponds to higher exposure values. This technique compresses highlights above 85 percent (in this example), so that parts of the scene that would otherwise be overexposed can be retained with some detail.8

When highlights are compressed by use of a knee point, their contrast and sharpness are compressed as well. They can appear less saturated. To correct for this, professional cameras also offer menu settings for “knee aperture” (to boost sharpness and contrast in highlights) and “knee saturation level” (to adjust color saturation in highlights). These are usually located next to the knee point settings in the camera’s menu tree.

It is possible to set the knee point too low, say below 80 percent, where the knee slope can become too flat, with the result that highlights may seem too compressed, normally bright whites may seem dull, and light-skin faces may look pasty.

In addition to a knee point setting, most professional video cameras have an automatic knee function that, when engaged, introduces highlight compression on the fly. When no extreme highlights exist, this function places the knee point near the white clip level, but when highlights exceed the white clip level, it automatically lowers the knee point to accommodate the intensity of the brightest levels. Called Dynamic Contrast Control (DCC) in Sony cameras and Dynamic Range Stretch (DRS) in Panasonic cameras, automatic knee helps to preserve highlight details in high-contrast images, although sometimes the outcome is subtle to the eye. Some camera operators leave it on all the time; others feel that the manual knee is preferable. As with all knee point functions, you can experiment by shooting a high-contrast image and inspecting the results on a professional monitor. Everything you need to know will be visible on the screen.

Fig. 5-9. Gamma curves. Standard gamma rises quickly in the shadows, creates relatively bright midtones, and reaches the white clip level relatively soon. By comparison, the Cine A curve provides darker shadows and midtones but continues to rise to the right of the point at which the standard curve has stopped responding, so it’s able to capture brighter highlights. The Cine B curve is similar to Cine A, but tops out at 100 percent video level, so the picture is legal for broadcast without correction in post. These curves are for illustration only; the names and specific gamma curves in your camera may be different. (Steven Ascher)

“Cine” Gamma in Video Cameras

The technique just discussed of setting a knee point to control highlight reproduction has been with us for a while. Today’s digital video cameras accomplish a similar but more sophisticated effect using special “cine” gamma curves that remap the sensor’s output to better fit the limited dynamic range of the video signal.

All professional digital video cameras offer reduced gamma modes said to simulate the look of film negative or film print. These gamma curves typically darken midtones and compress highlight contrast, thereby extending reproducible dynamic range and allowing you to capture detail in extremely bright areas that would otherwise overexpose. The goal is to capture highlights more like the soft shoulder of a film negative’s characteristic curve does. The cine curves in Fig. 5-9 represent such filmlike video gammas. Note that they continue to rise to the right of the standard video gamma curve, capturing bright highlights where the standard gamma has stopped responding.

The principle of most cine gammas is similar, but they come in two categories: (1) display cine gammas, whose images are meant to be viewed directly on a video monitor, and (2) intermediate cine gammas not meant for direct viewing, whose dark, contrast-flattened images need to be corrected in post.

Examples of the first type include Panasonic’s CineGamma (called Cine-Like and Film-Like in some camcorders), Sony’s CinemaTone (found in low-cost pro cameras), Canon’s Cine, and JVC’s Cinema gamma. Typically they come in gradations like Canon’s Cine 1 and 2 or Sony’s CinemaTone 1 and 2.9 A more sophisticated cine gamma called HyperGamma, which extends the camera’s dynamic range without the use of a knee point, is found in high-end Sony CineAlta cameras (it’s also called CINE gamma in some Sony cameras, although it’s exactly the same thing). HyperGamma features a parabolic curve and comes in four gradations.

Some of these cine gammas cut off at 100 percent, and keep the level legal for broadcast. Some reach up to 109 percent, which extends the ability to capture extreme highlights, but the maximum level must be brought down if the video will be televised.10

The second type of cine gammas are the intermediate gammas including Panasonic’s FILM-REC (found in VariCams) and JVC’s Film Out gamma. Both produce flat-contrast images with extended dynamic range, which need to be punched up in post for normal viewing. Both were a product of the 2000s, a decade in which independent filmmakers sometimes shot low-cost digital video for transfer to 35mm film for the film festival circuit.

Camcorder manuals invariably do a poor job describing what each cine gamma actually does, and the charts, if there are any, often use different scales (making them hard to compare) or are fudged. Cine gammas can be confusing if not misleading because their very name implies a result equal to film. Color negative film possesses a very wide dynamic range (up to sixteen stops), while digital video signals must fall inside a fixed range of 0–100 percent (for broadcast) or 0–109 percent (for everything else). Cine gamma curves must shoehorn several additional stops of highlight detail into these strict signal limits, regardless of the sensor’s inherent dynamic range. It’s no easy task.

Some people prefer cine gamma settings; others think that display cine gammas look disagreeably flat and desaturated. (In some cases, whites don’t look very bright.) Many who do use cine gammas add contrast correction in postproduction to achieve a more normal-looking scene. At the end of the day, the main advantage to using a cine gamma is that you can capture extended highlights that would be unavailable in post if you hadn’t.

As in the case of adding a knee point, you can experiment with cine gammas by shooting a variety of scenes and inspecting the results on a professional monitor or calibrated computer screen like an Apple Cinema Display. Watch the image on the monitor as you open the iris. Highlight areas that might otherwise clearly overexpose may take on a flat, compressed look as you increase the exposure. You may wish to underexpose by a half stop or more to further protect these areas. You may also want to experiment with contrast and color correction in post in order to discover what impact a cine gamma has on dark detail and low-light noise levels.

In summary, most cine gammas attempt to capture the look of film for viewing on a video monitor or TV. To attempt to capture the latitude of film from a digital sensor requires something beyond the conventional video signal. To do this requires a more extreme approach, even a new kind of signal, which we will discuss next.

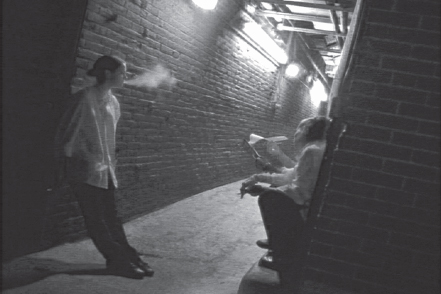

Fig. 5-10. This shot might be considered underexposed, but as a night shot it feels appropriate. The lights in the background accentuate the cigarette smoke and create a range of contrast that helps the scene feel natural by giving the eye a bright reference point that can make the blacks seem darker. (Steven Ascher)

Log and RAW Capture in Digital Cinema Cameras

High-end digital cinematography cameras offer two methods of capturing a much larger dynamic range, allowing you to record details in deeper shadows and brighter highlights.

LOG CAPTURE. Logarithmic transfer characteristic, or log for short, is one way to extract even more of a sensor’s dynamic range from an uncompressed RGB video signal. Think of it as a super gamma curve.

In a typical linear scale, each increment represents adding a fixed amount (for example, 1, 2, 3, 4, 5…). Along a logarithmic scale, however, each point is related by a ratio. In other words, each point on a logarithmic scale, although an equal distance apart, might be twice the value of the preceding point (for example, 1, 2, 4, 8, 16, 32).

Digital video is intrinsically linear, from sensors to signals (before gamma is applied), while both film and human vision capture values of light logarithmically.11 So, for example, imagine you had a light fixture with thirty-two lightbulbs; you might think that turning on all the bulbs would appear to the eye thirty-two times as bright as one bulb. However, following a logarithmic scale, the eye only sees that as five times as bright (five steps along the 1, 2, 4, 8, 16, 32 progression).12 At low light levels, the eye is very sensitive to small increases in light. At high light levels, the same small increases are imperceptible. The change in brightness you see when you go from one light to two lights (a one-light difference) is the same as going from sixteen lights to thirty-two lights (a sixteen-light difference).

Where the sampling of digital images is concerned, the advantage of a nonlinear logarithmic scale is that many more samples, and therefore bits, can be assigned to the gradations of image brightness we perceive best—namely, dark tones—and fewer bits to brightness levels we are less sensitive to, meaning whites and bright tones. Digital video with its linear capture of brightness levels can’t do this; it assigns the same number of samples and precious digital bits to highlights as to shadows, without distinction. This is particularly disadvantageous in postproduction, where vastly more samples are needed in the dark half of the tonal scale for clean image manipulation.

The logarithmic mapping of image brightness levels originated in film scanning for effects and digital intermediate work using full-bandwidth RGB signals (no color subsampling or component video encoding), 10-bit quantization for 1024 bits per sample (compared to 8-bit quantization and 256 bits per sample of most digital video), and capture to an RGB bitmap file format pioneered by Kodak known as DPX (Digital Picture Exchange).

One of the first digital cinematography cameras to output a log transfer characteristic was Panavision’s Genesis, a PL-mount, single-CCD camera introduced in 2005. Panavision was motivated to use what it called PANALOG because standard Rec. 709 gamma for HD could accommodate only 17 percent of the CCD’s saturation level (the maximum exposure a sensor can handle). By dramatically remapping the video signal using a 10-bit log function to preserve the CCD’s entire highlight range, a filmlike latitude of ten stops was achieved.13

PANALOG is output as uncompressed RGB (4:4:4) via dual-link HD-SDI cables and typically recorded to a Sony SSR-1 solid-state recorder or an HDCAM SR tape using a portable Sony field recorder.14

The equipment needed to capture and record uncompressed 10-bit log signals is expensive. The data flow is enormous: nearly 200 megabytes (not bits) per second at 24 fps. Cameras must be capable of dual-link HD-SDI output. Hard-disk recording systems used on location must incorporate a lot of bandwidth and fast RAID performance. Don’t forget you have to transfer it all, and back it up at some point too.

For its F35, F23, and F3 digital cinematography cameras, Sony has its own version of log output, called S-Log. ARRI’s Alexa uses a third type of log output, a SMPTE standard called Log C.15 Each company might boast that its version contains the best secret sauce, but in fact with the proper LUT (lookup table), it’s relatively easy to convert S-Log to Log C or PANALOG, or the other way around. In other words, they’re easily intercut, just as they’re also easily captured to standard 10-bit DPX files on hard disks.

Because a log transfer characteristic radically remaps the brightness values generated by the sensor, the video image that results is flat, milky, and virtually unwatchable in its raw state. In effect, you’ve committed your production to extensive D.I.-like color correction of every scene in post. On the upside, you’ll obtain a video image that comes closest to film negative in its latitude and handling of color grading without quality loss. Don’t forget, not only is there no color subsampling (full 4:4:4), but 10-bit log sampling of the individual RGB components also better captures the wide color gamut produced by the sensor, which is not reproducible by conventional video. All of this favors more accurate keying and pulling of mattes in effects work. With some cameras, such as the Sony F3, it is also possible to record log in 4:2:2 at a lower data rate internally or to more affordable external recorders such as the Ki Pro Mini or Atomos Ninja (see Figs. 2-19 and 5-11).

Each camera capable of log output has its own solution for displaying usable contrast in its viewfinder, as well as for providing viewing LUTs for monitoring the image on location. LUTs, simply put, convert the log image into something that looks normal. They are nondestructive, meaning they translate only the image for viewing but don’t change the image in any way. LUTs created and used on location can be stored and sent to color correction as guides to a DP’s or director’s intent. When shooting log, it’s recommended to not underexpose.

Fig. 5-11. The AJA Ki Pro Mini field recorder can be mounted on a camera. Records 10-bit HD and SD Apple ProRes files to CF cards. Inputs include SDI, HD-SDI, and HDMI. Files are ready to edit without transcoding. (AJA Video Systems)

Canon’s EOS C300 brings a new wrinkle to log output, an 8-bit gamma curve called Canon Log. (Echoes of the Technicolor CineStyle gamma curves found in Canon DSLRs.) This high-dynamic-range gamma is invoked when the C300 is switched into “cinema lock” mode. A built-in LUT permits viewing of normal contrast in the C300’s LCD screen only. (Unavailable over HDMI or HD-SDI outputs—so you can’t see it on an external monitor.) Since the C300 records compressed 50 Mbps, long-GOP MPEG-2 to CF cards and outputs uncompressed HD from a single HD-SDI connection—both only 8 bit, 4:2:2—it does not belong in the same class as the digital cinematography cameras described above.

RAW CAPTURE. For those who need the utmost in dynamic range from a digital cinematography camera, recording RAW files is the alternative to using a log transfer characteristic. RAW files are signals captured directly from a single CMOS sensor that uses a Bayer pattern filter to interpret color (see Fig. 1-13). Before being captured directly to disk, flash memory, or solid-state drive, the sensor’s analog signals are first digitized—yes, linearly—but no other processing takes place, including video encoding or gamma curves. As a result, RAW is not video. Nor is it standardized.

RAW recording first gained popularity among professional still photographers, because it provides them with a “digital negative” that can be endlessly manipulated. As a result, it is the gold standard in that world. RAW recording of motion pictures works the same way, only at 24 frames per second.

It’s called RAW for a reason. Upon recording, each frame has to be demosaicked or “debayered.” Among CMOS sensors with Bayer filters, there are different types of relationships between the number of pixels that make up the final image (for instance, 1920 x 1080) and the number of photosites on that sensor that gather light for each individual pixel. The simplest arrangement is 1:1, where each photosite equals one pixel. In this case, a Bayer filter means that there will be twice as many green pixels/photosites as either red and blue. In the final image, the color of each pixel is represented by a full RGB value (a combination of red, green, and blue signals) but each photosite on the sensor captures only one of those signals (either red, green, or blue). Debayering involves averaging together (interpolating) the color values of neighboring photosites to essentially invent the missing data for each pixel. It’s more art than science.

Next a transfer characteristic or gamma curve must be applied; otherwise, the image would appear flat and milky. White balance, color correction, sharpening—every image adjustment is made in postproduction. All of this consumes time, personnel, and computer processing power and storage, and none of it will satisfy those with a need for instant gratification. But the ultimate images can be glorious. It’s like having a film lab and video post house in your video editing workstation.

RED pioneered the recording and use of RAW motion picture images with the RED One camera and its clever if proprietary REDCODE RAW, a file format for recording of compressed 4K Bayer images (compression ratios from 8:1 to 12:1). In this instance 4K means true 4K, an image with the digital cinema standard of 4,096 pixels across, like a film scan (instead of 3,840 pixels, sometimes called Quad HD by the video industry). REDCODE’s wavelet compression enables instant viewing of lower-resolution images in Final Cut Pro and other NLEs by use of a QuickTime component, and full resolution playback or transcoding when using the RED ROCKET card (see Fig. 3-8).

Both ARRI’s D-21 and Alexa can output uncompressed 2K ARRIRAW by dual-link HD-SDI, usually to a Codex Digital or S.two disk recorder. Uniquely, it is 12 bit and log encoded. ARRI says that 12-bit log is the best way to transport the Alexa’s wide dynamic range. Actually, an ARRIRAW image is captured at 2,880 pixels across and remains that size until downscaled to 2,048 pixels (2K) upon postproduction and completion of effects.

The Sony F65 digital cinema camera captures 4K, 16-bit linear RAW with a unique 8K sensor (20 million photosites) that provides each 4K pixel with a unique RGB sample—no interpolation needed. Onboard demosaicking provides real-time RGB output files to a small docking SRMASTER field recorder that carries one-terabyte SRMemory cards.

More within reach for independent filmmakers is Silicon Imaging’s SI-2K Mini with its 2⁄3-inch sensor and 2K RAW output captured using the CineForm RAW codec, a lossless wavelet compression similar to REDCODE. The Blackmagic Cinema Camera is an even-more affordable option (see p. 29).

Like video cameras that provide log output, motion picture cameras that output RAW files let you monitor a viewable image during production. By means of a LUT, they typically output an image close to standard ITU-R 709 gamma so you can get a rough sense of how the image will look after processing.

A wide latitude is always more flexible and forgiving. A side benefit to the filmlike latitude provided by cameras with log and RAW output is that DPs can once again use their light meters for setting scene exposure, just as in film, using the camera’s ISO rating.

Other Ways to Extend Dynamic Range

When you go into the world with a camera, you’re constantly dealing with situations in which the contrast range is too great. You’re shooting in the shade of a tree, and the sunlit building in the background is just too bright. You’re shooting someone in a car, and the windows are so blown out you can’t see the street. When the lighting contrast of a scene exceeds the camera’s ability to capture it, there are a number of things you can do (see Controlling Lighting Contrast, p. 512).

Altering the gamma and adjusting the knee point and slope as discussed above are important tools in allowing you to capture greater dynamic range. Here are some other methods or factors.

USE MORE BITS, LESS COMPRESSION. When you can record video using 10 bits or 12 bits instead of the 8 bits common to consumer and many professional camcorders, you will be able to capture greater dynamic range and subtler differences between tones. With greater bit depth comes a more robust image better able to withstand color and contrast adjustment in postproduction. Often an external recording device is the answer. Convergent Design’s nanoFlash records uncompressed HD to CompactFlash cards using Sony XDCAM HD422 compression up to 280 Mbps (see Fig. 1-27). The Atomos Ninja records uncompressed HD to a bare solid-state drive (SSD) via HDMI using 10-bit ProRes (see Fig. 2-19). For no compression, Convergent Design’s Gemini 4:4:4 recorder records uncompressed HD and 2K via HD-SDI, and Blackmagic Design’s HyperDeck Shuttle records 10-bit uncompressed HD, both to SSDs (see Fig. 5-30).

HIGHLIGHTS AND ISO. Video sensors have a fixed sensitivity, and changing the ISO or gain doesn’t make the sensor more or less sensitive, it only affects how the image is processed after the sensor. Changing the ISO when shooting effectively rebalances how much dynamic range extends above and below middle gray. If you shoot with a high ISO (essentially underexposing the sensor), there’s more potential latitude above middle gray, so you actually increase the camera’s ability to capture highlights. If you decrease the ISO (overexposing), dynamic range below neutral gray increases, so you improve the camera’s ability to reach into shadows. This is counterintuitive for anyone familiar with film, where using a faster, more sensitive stock usually means curtailing highlights (because a faster negative is genuinely more light sensitive).

HIGH DYNAMIC RANGE MODE. The RED Epic camera has a mode called HDRx (high dynamic range) in which it essentially captures two exposures of each frame, one exposed normally and one with a much shorter exposure time to capture highlights that would otherwise be overexposed. The two image streams can be combined in the camera or stored separately and mixed together in post. This extends the camera’s latitude up to eighteen stops, allowing you to capture very dark and very bright areas in the same shot.

Black Level

The level of the darkest tones in the image is called the black level. The darkest black the camera puts out when the lens cap is on is known as reference black. Black level is important because it anchors the bottom of the contrast range. If the black level is elevated, instead of a rich black you may get a milky, grayish tone (see Fig. 5-5D).16 Without a good black, the overall contrast of the image is diminished and the picture may lack snap. Having a dark black can also contribute to the apparent focus—without it, sometimes images don’t look as sharp. In some scenes, blacks are intentionally elevated, for example, by the use of a smoke or fog machine. In some scenes, nothing even approaches black to begin with (for example, a close shot of a blank piece of white paper).

In all digital video, the world over, reference black is 0 percent video, also known as zero setup.17 When you’re recording digitally, or transferring from one digital format to another, the nominal (standard) black level is zero. Some systems can create black levels below the legal minimum, known as super blacks.

Fig. 5-12. Video levels in RGB and component color systems. (A) In RGB color, used in computer systems, digital still cameras, and some digital cinema cameras, the darkest black is represented by digital code 0 and the brightest white by code 255 (this is for an 8-bit system). (B) In component digital video (also called YCBCR or YUV), used in most digital video cameras, 100 percent white is at code 235. Video levels higher than 100 percent are considered “super white”; these levels can be used to capture bright highlights in shooting and are acceptable for nonbroadcast distribution (for example, on the Web), but the maximum level must be brought down to 100 percent for television. Darkest legal black is at 0 percent level, represented by code 16. RGB has a wider range of digital codes from white to black and can display a wider range of tonal values than component. Problems can sometimes result when translating between the two systems. For example, bright tones or vibrant colors that look fine in an RGB graphics application like Photoshop may be too bright or saturated when imported to component video. Or video footage that looks fine in component may appear dull or dark when displayed on an RGB computer monitor or converted to RGB for the Web. Fortunately, when moving between RGB and component, some systems automatically remap tonal values (by adjusting white and black levels and tweaking gamma for midtones).

Fig. 5-13. Most editing systems operate in component video color space. When you import a file, the system needs to know if the source is an RGB file that needs to be converted to component, or one that is already component (either standard definition Rec. 601 or high definition Rec. 709). Shown here, some options in Avid Media Composer. Compare the code numbers here and in Fig. 5-12. (Avid Technology, Inc.)

BLACK STRETCH/COMPRESS. Some cameras have a black stretch adjustment that can be set to increase or decrease contrast in the shadow areas. Increasing the black stretch a little brings out details in the shadows and softens overall contrast. On some cameras, the darkest black can be a bit noisy, and adding some black stretch helps elevate dark areas up out of the noise. Some cameras also provide a black compress setting, which you can use to darken and crush shadow areas. Since stretching or compressing blacks alters the shape of the gamma curve, some cameras simply call these settings black gamma.

Because you can always crush blacks in post, it’s a good idea not to throw away shadow detail in shooting.

Storing Picture Settings for Reuse

Professional cameras provide extensive preset and user-adjustable settings for gamma and many other types of image control. Generally, after you turn off a digital camera, your latest settings are retained in memory, available again when the camera is powered up.

Most cameras permit gathering together various image-control settings and storing them internally, to be called up as needed. These collections of settings are called scene files in Panasonic cameras, picture profiles in Sony cameras, custom picture files in Canon cameras, and camera process in JVC cameras. Typically you are able to store five to eight of these preprogrammed collections.

Many cameras also permit convenient storage of your settings on setup cards (usually inexpensive SD cards), allowing you to transfer settings from one camera to another or later restore the same settings for different scenes. These settings pertain only to the exact same model of camera.

Scene files or picture profiles make it easy to experiment with different looks. If you want to experiment with a particular gamma, for instance, hook up your digital video camera to a large video monitor (set to display standard color and brightness) and look carefully at how the image changes as you adjust various parameters of that gamma. Aim the camera at bright scenes with harsh contrast and also low-key scenes with underexposed areas. Inspect extremes of highlight and shadow detail. Often one size doesn’t fit all, but with the ability to save and instantly call up several collections of settings, you can determine what works best for you before you shoot. It’s a great way to get to know a digital video camera intimately.

VIDEO COLOR SYSTEMS

Color Systems

Be sure to read How Color Is Recorded, p. 16, and Thinking About Camera Choices, Compression, and Workflow, p. 94, before reading this section.

All digital camcorders accomplish the same basic set of tasks: (1) capture an image to a sensor; (2) measure the amounts of red, green, and blue light at photosites across the sensor; (3) process and store that data; and (4) provide a means to play back the recording, re-creating the relative values of red, green, and blue so a monitor can display the image.

Fig. 5-14. RGB, component, S-video, and composite video systems vary in the paths they use to convey the video signal from one piece of equipment to another. For systems that use multiple paths, the signal is sometimes sent on multiple cables, but often the various paths are part of one cable. See text. (Robert Brun)

Different cameras use different methods, particularly when it comes to steps 2 and 3. As noted earlier, each photosite in a digital camera sensor can measure only brightness, but we can create color values in the image by placing a microscopic red, green, or blue filter over individual photosites (a technique used for single-sensor cameras; see Fig. 1-13) or by splitting the light from the lens into three paths using a prism and recording with three sensors (the technique used in three-chip cameras; see Fig. 1-14).

Let’s briefly review how digital cameras acquire and process color; one or more of these methods may be employed by your camera.

RGB. All digital cameras internally process raw data from the sensor(s) to generate three distinct color signals—red, green, and blue (RGB). RGB (also called 4:4:4; see below) is a full-bandwidth, uncompressed signal that offers a wide gamut of hues and is capable of very high image quality. RGB output can be found in high-end cameras, including some digital cinematography cameras, and is usually recorded to a high-end format like HDCAM SR. RGB requires high bandwidth and storage space; it is particularly useful for visual effects. RGB handles brightness values differently than component video, so there may be translation issues, for instance, when moving between the RGB color of a computer graphics program and the component color of a video editing program (see Fig. 5-12).18

COMPONENT VIDEO. Most digital cameras today record component video. They acquire the three RGB signals from the sensor(s), digitize them, process them, then throw away either half or three-quarters of the color data to make a video signal that’s easier to store and transmit. Prior to output, the three color signals (R, G, and B) are encoded into a monochrome luminance (sometimes called luma) signal, represented with the letter Y, which corresponds to the brightness of the picture, as well as two “color difference” signals (R minus Y, B minus Y), which are called chrominance (or sometimes chroma). Your color TV later decodes the luma and chroma signals and reconstructs the original RGB signals. Prominent examples of this type of video are the world standards for standard definition, ITU-R 601, and high definition, ITU-R 709.

Shorthand for component video is variously Y’CBCR, YCbCr, or Y,B-Y,R-Y. Though historically inaccurate, it’s also widely referred to as YUV. Analog component is Y’PBPR.

S-VIDEO. S-video (separate video) is also called Y/C. This is for analog video only, and it’s not so much a video system as a two-path method of routing the luminance signal separately from the two chrominance signals. It provides poorer color and detail than true component video, but is noticeably better than composite. If a camera or monitor has an S-video input or output, this is a superior choice over a composite input or output.

COMPOSITE VIDEO. Analog television, the original form of video, was broadcast as a single signal. What was uploaded to the airwaves was composite video, in which the luminance and two chrominance signals were encoded together. As a result, composite video could be sent down any single path, such as an RCA cable. Many different types of gear today still have analog composite inputs and outputs, often labeled simply “video in” and “video out” (see Fig. 14-13). These can be handy for, say, monitoring a camera on a shoot. Composite video was used for decades for analog broadcast PAL and NTSC, but it delivered the lowest-quality image of all the color systems, with many sacrifices in color reproduction due to technical compromises. No digital cameras today record composite video.

Color Sampling

See Reducing Color Data, p. 17, before reading this.

When we look at the world or a picture, our eyes (assuming we have good eyesight) can perceive subtle distinctions in brightness in small details. However, the eye is much less sensitive to color gradations in those same fine details. Because of this, smart engineers realized that if a video system records less information about color than brightness, the picture can still look very good, while cutting down the amount of data. This can be thought of as a form of compression.

As discussed above, most digital camcorders record component color. In this system there are three components: Y (luma) and CB and CR (both chroma). When the signal from the camera’s sensor is processed, some of the color resolution is thrown away in a step called subsampling or chroma subsampling; how much depends on the format.

To see how this works, look at a small group of pixels, four across (see Fig. 5-15). In a 4:4:4 (“four-four-four”) digital video system there are four pixels each of Y, CB, and CR. This provides full-color resolution. Component 4:4:4 is used mostly in high-end video systems like film scanners. (RGB video, described above, is always 4:4:4, meaning that each pixel is fully represented by red, green, and blue signals.)19

In 4:2:2 systems, a pair of adjacent CB pixels is averaged together and a pair of CR pixels is averaged together. This results in half as much resolution in color as brightness. Many high-quality component digital formats in both standard and high definition are 4:2:2. This reduction in color information is virtually undetectable to the eye.

Some formats reduce the color sampling even further. In a 4:1:1 system, there are four luma samples for every CB and CR, resulting in one-quarter the color resolution. This is used in the NTSC version of DV. While the color rendering of 4:1:1 is technically inferior to 4:2:2, and the difference may sometimes be detectable in side-by-side comparisons, the typical viewer may see little or no difference. Another type of chroma sampling is 4:2:0, used in HDV and PAL DV. Here, the resolution of the chroma samples is reduced both horizontally and vertically. Like 4:1:1, the color resolution in 4:2:0 is one-quarter that of brightness.

Some people get very wrapped up in comparing cameras and formats in terms of chroma sampling, praising one system for having a higher resolution than another. Take these numbers with a grain of salt: the proof is in how the picture looks. Even low numbers may look very good. Also, bear in mind that chroma sampling applies only to resolution. The actual color gamut—the range of colors—is not affected.

The main problems with 4:1:1 and 4:2:0 formats have to do with the fact that after we’ve thrown away resolution to record the image, we then have to re-create the full 4:4:4 pixel array when we want to view the picture. During playback this involves interpolating between the recorded pixels (essentially averaging two or more together) to fill in pixels that weren’t recorded. As a result, previously sharp borders between colored areas can become somewhat fuzzy or diffuse. This makes 4:1:1 and 4:2:0 less than ideal for titles and graphics, special effects, and blue- or green-screen work (though many people successful do green-screen work with 4:2:0 HD formats). Often projects that are shot in a 4:1:1 format like DV are finished on a 4:2:2 system that has less compression.

Fig. 5-15. Color sampling. In a 4:4:4 system, every pixel contains a full set of data about brightness (Y) and color (CB and CR). In 4:2:2 and 4:1:1 systems, increasing amounts of color data are thrown away, resulting in more pixels that have only brightness (luma) information. In 4:2:0 systems, pixels with Y and CR alternate on adjacent lines with ones that have Y and CB (shown here is PAL DV; other 4:2:0 systems use slightly different layouts).

SOME IMAGE MANIPULATIONS AND ARTIFACTS

Green Screen and Chroma Keys

There are digital graphic images, and scenes in movies and TV shows, that involve placing a person or object over a graphic background or a scene shot elsewhere. A common example is a weather forecaster who appears on TV in front of a weather map. This is done by shooting the forecaster in front of a special green background, called a green screen. A chroma key is used to target areas in the frame that have that particular green and “key them out”—make them transparent—which leaves the person on a transparent background. The forecaster, minus the background, is then layered (composited) over a digital weather map. The green color is a special hue not otherwise found in nature, so you don’t accidentally key out, say, a green tie. In some cases a blue background is used instead, which may work better with subjects that are green; blue is also used for traditional film opticals.

This technique is called a green-screen or blue-screen shot or, more generically, a process or matte shot. For situations other than live television, the chroma key is usually done in postproduction, and the keyer is part of the editing system. Ultimatte is a common professional chroma key system and is available as a plug-in for various applications.

Green-screen shots are not hard to do, but they need to be lit and framed carefully. Green background material is available in several forms, including cloth that can be hung on a frame, pop-up panels for close shots, and paint for covering a large background wall. When using a small screen, keep the camera back and shoot with a long lens to maximize coverage. It’s important that the green background be evenly lit with no dark shadows or creases in the material, though some keying programs are more forgiving than others of background irregularities. For typical green-screen work shot on video, lighting the background with tungsten-balanced light should work fine, but for film and blue-screen work, filters or bulbs better matched to the screen color are often used. Don’t underexpose the background, since you want the color to be vivid.

Avoid getting any of the green background color on the subject, since that may become transparent after the key. Keep as much separation as possible between the subject and the background and avoid green light from the background reflecting on the subject (called spill—most keying software includes some spill suppression). If you see green tones in someone’s skin, reposition him or set a flag. If objects are reflecting the background, try dulling spray or soap. Fine details, like frizzy hair, feathers, and anything with small holes can sometimes be hard to key. Make sure your subject isn’t wearing green (or blue for a blue screen) or jewelry or shiny fabrics that may pick up the background. Don’t use smoke or diffusion that would soften edges. Using a backlight to put a bright rim on the subject can help define his edges.

The Chromatte system uses a special screen that reflects back light from an LED light ring around the lens. This is fast to set up (since you don’t need other light for the background) and works in situations where the subject is very close to the screen (but keep the camera back). Get your white balance before turning up the light ring.

Locked-down shots with no camera movement are the easiest to do. You can put actors on a treadmill, stationary bike, or turntable to simulate camera or subject movement. Footage shot to be superimposed behind the subject is called a background plate. If the camera moves you’ll want the background to move also, which may require motion tracking. Orange tape marks are put on the green screen for reference points for tracking. Do digital mockups or storyboards to plan your shots.

As a rule, keys work best with HD and with 4:2:2 color sampling (or even better, 4:4:4). That said, many successful keys have been done in SD, and even with formats like DV that are 4:1:1 or 4:2:0 (for more, see the previous section). Make sure the detail/enhancement on the camera isn’t set too high. Bring a laptop with keying software to the set to see how the chroma key looks.

Fig. 5-16. Chroma key. The subject is shot in front of a green or blue background, which is then keyed out. The subject can then be composited on any background. NLEs usually include a chroma key effect or you may get better results with specialized software or plug-ins such as this Serious Magic product. (Serious Magic)

Deinterlacing

See Progressive and Interlace Scanning on p. 11 before reading this section.

It’s very easy to convert from progressive to interlace. One frame is simply divided into two fields (this is PsF; see p. 602). This is done when you’ve shot using a progressive format but are distributing the movie in an interlaced one, such as 50i or 60i.

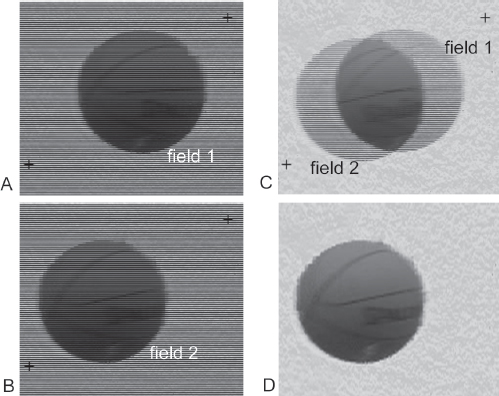

Creating progressive footage from interlace is more complex. This may be done when distributing interlaced material on the Web, for example, or when extracting still frames. Static images aren’t a problem—you can just combine the two fields. But when there’s any movement between fields they will show edge tear when you combine them (see Figs. 1-11 and 5-17). Some deinterlacing methods just throw away one of the fields and double the other (line doubling), which lowers resolution and can result in unsmooth motion. Some use field blending to interpolate between the two fields (averaging them), which may also lower resolution. “Smart” deinterlacers can do field blending when there’s motion between two fields but leave static images unchanged. For more, see p. 600.

Moiré

In terms of video, moiré (pronounced “mwa-ray”) is a type of visual artifact that can appear as weird rippling lines or unnatural color fringes. Sometimes you’ll see moiré in video when people wear clothing that has a finely spaced checked, striped, or herringbone pattern. Moiré patterns often show up on brick walls, along with odd-colored areas that may seem to move. When you convert from a higher definition format to a lower definition one, various types of aliasing can result, including moiré and “jaggies” (stair steps on diagonal lines).

With the explosion of DSLRs, moiré is often showing up in scenes that would look fine if shot with traditional video cameras. Even hair, or clothing that has no visible pattern, may end up with moiré when shot with a DSLR. This happens in part because the DSLR’s sensor is designed to shoot stills at a much higher resolution than HD or SD video. To create the video image, instead of doing a high-quality downconversion, as you might do in postproduction with specialized software or hardware, many DSLRs simply skip some of the sensor’s horizontal lines of pixels. The resulting image forces together pixels that should have been separated by some distance, causing artifacts.

Fig. 5-17. Interlace and deinterlace. (A, B) Two consecutive fields from 60i footage of a basketball being thrown. (C) Here we see both fields together as one frame. You can clearly see that each field has half the resolution (half the horizontal lines), and that the ball has moved between the two fields. Makes for an ugly frame. (D) This deinterlaced frame was made by deleting the first field and filling in the gap it left. We can create new pixels for the gap by “interpolating” (essentially averaging) the lines on either side of each missing line. Though this creates a single frame that could be used for a progressive display, it’s not as clean or sharp as true progressive footage shot in a progressive format. Deinterlacing works best when there’s relatively little camera or subject movement. See also Fig. 1-11.

Though it may seem counterintuitive, the way to minimize this problem is essentially to lower the resolution of the picture at the sensor. With a DSLR, be sure that any sharpness settings are turned down. The camera may have a picture profile for a softer, mellower look. Sometimes you have to shoot a shot slightly out of focus to get rid of a particularly bothersome pattern. True video cameras avoid moiré by using an optical low pass filter (OLPF), which softens the image, removing very fine details that can cause artifacts (if you’re shooting with a Canon EOS 5D Mark II DSLR, you could use Mosaic Engineering’s VAF-5D2 Optical Anti-Aliasing filter). Higher-end cameras that shoot both stills and video, like the RED Epic, avoid artifacts by not doing line skipping when they downconvert.

If you’re on a shoot and you’re seeing moiré in the viewfinder, it may be in the image or it may just be in the viewfinder. Try a different monitor to check. If it’s in the image, to minimize the artifacts try shooting from a different angle and not moving the camera. You may also need to change wardrobe or other items that are causing issues.

Footage that has moiré in it can be massaged in post to try to soften the most objectionable parts, but it’s a cumbersome process that usually delivers mixed results. Along with poor audio, moiré is one of the chief drawbacks of shooting with DSLRs.

Fig. 5-18. Moiré. The metal gate on the storefront has only horizontal slats, but this video image shot with a DSLR (Canon EOS 5D Mark II with full-frame sensor) shows a fan pattern of moving dark lines. This is one of several types of moiré that can occur in video, particularly with footage from DSLRs. (David Leitner)

Rolling Shutter

As described on p. 11, interlace involves scanning the picture from top to bottom, capturing each frame in two parts: one field (half of the frame) first, and the second field fractions of a second later. Progressive formats, on the other hand, capture the entire frame at the same instant in time.

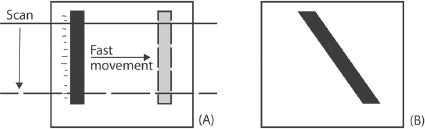

Actually, the part about progressive is not entirely true. CCD sensors can capture an entire progressive frame at once, as can CMOS cameras equipped with global shutters. However, many CMOS cameras have rolling shutters, which scan from top to bottom. There’s still only one field—it’s truly progressive—but the scan starts at the top of the frame and takes a certain amount of time to get to the bottom. The result is that fast pans and movements can seem to wobble or distort. Straight vertical lines may seem to bend during a fast pan (sometimes called skew; see Fig. 5-19). Some people call the overall effect “jello-cam.”

Some sensors scan faster than others, so you may not have a problem. To avoid rolling shutter issues, do relatively slow camera movements. Avoid whip pans. Keep in mind that fast events, like a strobe light, lightning, or photographer’s flash may happen faster than the sensor’s scan, leaving the top or the bottom of the frame dark.

Various software solutions minimize rolling shutter artifacts in postproduction, including Adobe After Effects, the Foundry’s RollingShutter plug-in, and CoreMelt’s Lock & Load.

Fig. 5-19. Rolling shutter and skew. (A) On cameras that have a rolling shutter, the sensor scans from top to bottom. If the camera or an object moves quickly, the top of an object may be in one part of the frame at the beginning of the scan, with the bottom of the object in a different place by the time the scan finishes. (B) The recorded frame shows the object tilted or skewed, even though it was in fact vertical. The slower a particular camera scans, the worse the problem.

Video Noise

As discussed elsewhere, different types of video noise can become apparent in the image when it is underexposed, enlarged, processed at a low bit depth, etc. Sometimes you can reduce the appearance of noise simply by darkening blacks in postproduction. Various types of noise-reducing software are available either as part of your NLE or as a plug-in, such as Neat Video.

VIDEO MONITORS AND PROJECTORS

See Camera and Recorder Basics, p. 5, and Viewfinder and Monitor Setup, p. 105, before reading this section.

On many productions, a lot of attention and money go into finding the best camera, doing good lighting, creating artful production design, etc. All in the service of creating a picture that looks great and captures the intent of the filmmakers.

But when showing the work in video, all that care can be undone by a display device that’s not up to the task. There are numerous reasons why a given monitor or projector may not show the picture the way it should (see below). As a moviemaker, you can control some aspects of the viewing experience (such as what type of equipment you use yourself and how you set it up). In screening situations, always do a “tech check” beforehand to make sure anything adjustable is set correctly. Unfortunately, once your movie goes out into the world, you have no control over how it looks and viewers will see something that may or may not look the way you intended it.

These days, video displays are everywhere and there are many different types. The following are some of the main varieties available. Many of these technologies come in different forms: as flat-panel screens (except CRT) and video projectors (which shine on a screen like a film projector). CRTs are analog; the rest are digital.

Fig. 5-20. Sony’s Luma series of professional LCD video monitors. (Sony Electronics, Inc.)