The discussion in Chap. 1 has shown that the classical theory of probability, based upon a finite set of equally likely possible outcomes of a trial, has severe limitations which make it inadequate for many applications. This is not to dismiss the classical case as trivial, for an extensive mathematical theory and a wide range of applications are based upon this model. It has been possible, by the use of various strategies, to extend the classical case in such a way that the restriction to equally likely outcomes is greatly relaxed. So widespread is the use of the classical model and so ingrained is it in the thinking of those who use it that many people have difficulty in understanding that there can be any other model. In fact, there is a tendency to suppose that one is dealing with physical reality itself, rather than with a model which represents certain aspects of that reality. In spite of this appeal of the classical model, with both its conceptual simplicity and its theoretical power, there are many situations in which it does not provide a suitable theoretical framework for dealing with problems arising in practice. What is needed is a generalization of the notion of probability in a manner that preserves the essential properties of the classical model, but which allows the freedom to encompass a much broader class of phenomena.

In the attempt to develop a more satisfactory theory, we shall seek in a deliberate way to describe a mathematical model whose essential features may be correlated with the appropriate features of real-world problems. The history of probability theory (as is true of most theories) is marked both by brilliant intuition and discovery and by confusion and controversy. Until certain patterns had emerged to form the basis of a clear-cut theoretical model, investigators could not formulate problems with precision, and reason about them with mathematical assurance. Long experience was required before the essential patterns were discovered and abstracted. We stand in the fortunate position of having the fruits of this experience distilled in the formulation of a remarkably successful mathematical model.

A mathematical model shares common features with any other type of model. Consider, for example, the type of model, or “mock-up,” used extensively in the design of automobiles or aircraft. These models display various essential features: shape, proportion, aerodynamic characteristics, interrelation of certain component parts, etc. Other features, such as weight, details of steering mechanism, and specific materials, may not be incorporated into the particular model used. Such a model is not equivalent to the entity it represents. Its usefulness depends on how well it displays the features it is designed to portray; that is, its value depends upon how successfully the appropriate features of the model may be related to the “real-life” situation, system, or entity modeled. To develop a model, one must be aware of its limitations as well as its useful properties.

What we seek, in developing a mathematical model of probability, is a mathematical system whose concepts and relationships correspond to the appropriate concepts and relationships in the “real world.” Once we set up the model (i.e., the mathematical system), we shall study its mathematical behavior in the hope that the patterns revealed in the mathematical system will help in identifying and understanding the corresponding features in real life.

We must be clear about the fact that the mathematical model cannot be used to prove anything about the real world, although a study of the model may help us to discover important facts about the real world. A model is not true or false; rather, a model fits (i.e., corresponds properly to) or does not fit the real-life situation. A model is useful, or it is not. A model is useful if the three following conditions are met:

1. Problems and situations in the real world can be translated into problems and situations in the mathematical model.

2. The model can be studied as a mathematical system to obtain solutions to the model problems which are formulated by the translation of real-world problems.

3. The solutions of a model problem can be correlated with or interpreted in terms of the corresponding real-world problem.

The mathematical model must be a consistent mathematical system. As such, it has a “life of its own.” It may be studied by the mathematician without reference to the translation of real-world problems or the interpretation of its features in terms of real-world counterparts. To be useful from the standpoint of applications, however, not only must it be mathematically sound, but also its results must be physically meaningful when proper interpretation is made. Put negatively, a model is considered unsatisfactory if either (1) the solutions of model problems lead to unrealistic solutions of real-world problems or (2) the model is incomplete or inconsistent mathematically.

Although long experience was needed to produce a satisfactory theory, we need not retrace and relive the mistakes and fumblings which delayed the discovery of an appropriate model. Once the model has been discovered, studied, and refined, it becomes possible for ordinary minds to grasp, in reasonably short time, a pattern which took decades of effort and the insight of genius to develop in the first place.

The most successful model known at present is characterized by considerable mathematical abstractness. A complete study of all the important mathematical questions raised in the process of establishing this system would require a mathematical sophistication and a budget of time and energy not properly to be expected of those whose primary interest is in application (i.e., in solutions to real-world problems). Two facts motivate the study begun in this chapter:

1. Although the details of the mathematics may be sophisticated and difficult, the central ideas are simple and the essential results are often plausible, even when difficult to prove.

2. A mastery of the ideas and a reasonable skill in translating real-world problems into model problems make it possible to grasp and solve problems which otherwise are difficult, if not impossible, to solve. Mastery of this model extends considerably one’s ability to deal with real-world problems.

In addition to developing the fundamental mathematical model, we shall develop certain auxiliary representations which facilitate the grasp of the mathematical model and aid in discovering strategies of solution for problems posed in its terms. We may refer to the combination of these auxiliary representations as the auxiliary model.

Although the primary goal of this study is the ability to solve real-world problems, success in achieving this goal requires a reasonable mastery of the mathematical model and of the strategies and techniques of solution of problems posed in terms of this model. Thus considerable attention must be given to the model itself. As we have already noted, the model may be studied as a thing in itself, with a “life of its own.” This means that we shall be engaged in developing a mathematical theory. The study of this mathematics can be an interesting and challenging game in itself, with important dividends in training in analytical thought. At times we must be content to play the game, until a stage is reached at which we may attempt a new correlation of the model with the real world. But as we reach these points in the development of the theory, repeated success in the act of interpretation will serve to increase our confidence in the model and to make it easier to comprehend its character and see its implications for the real world.

The model to be developed is essentially the axiomatic system described by the mathematician A. N. Kolmogorov (1903– ), who brought together in a classical monograph [1933] many streams of development. This monograph is now available in English translation under the title Foundations of the Theory of Probability [1956]. The Kolmogorov model presents mathematical probability as a special case of abstract measure theory. Our exposition utilizes some concrete but essentially sound representations to aid in grasping the abstract concepts and relations of this model. We present the concepts and their relations with considerable precision, although we do not always attempt to give the most general formulation. At many places we borrow mathematical theorems without proof. We sometimes note critical questions without making a detailed examination; we merely indicate how they have been resolved. Emphasis is on concepts, content of theorems, interpretations, and strategies of problem solution suggested by a grasp of the essential content of the theory. Applications emphasize the translation of physical assumptions into statements involving the precise concepts of the mathematical model.

It is assumed in this chapter that the reader is reasonably familiar with the elements of set theory and the elementary operations with sets. Adequate treatments of this material are readily available in the literature. A sketch of some of these ideas is given in Appendix B, for ready reference. Some specialized results, which have been developed largely in connection with the application of set theory and boolean algebra to switching circuits, are summarized in Sec. 2–6. A number of references for supplementary reading are listed at the end of this chapter.

Sets, Events, and Switching [1964]. A number of references for supplementary reading are listed at the end of this chapter.

The discussion in the previous introductory paragraphs has indicated that, to establish a mathematical model, we must first identify the significant concepts, patterns, relations, and entities in the “real world” which we wish to represent. Once these features are identified, we must seek appropriate mathematical counterparts. These mathematical counterparts involve concepts and relations which must be defined or postulated and given appropriate names and symbolic representations.

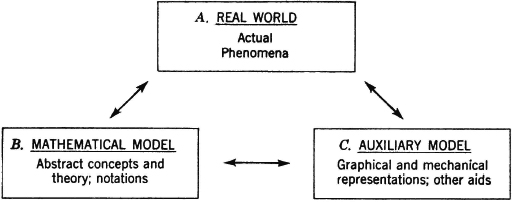

Fig. 2-1-1 Diagrammatic representation of the relationships between the “real world” and the models.

In order to be clear about the situation that exists when we utilize mathematical models, let us make a diagrammatic representation as in Fig. 2-1-1. In this diagram, we analyze the object of our investigation into three component parts:

A. The real world of actual phenomena, known to us through the various means of experiencing these phenomena.

B. The imaginary world of the mathematical model, with its abstract concepts and theory. An important feature of this model is the use of symbolic notational schemes which enable us to state relationships and facts with great precision and economy.

C. An auxiliary model, consisting of various graphical, mechanical, and other aids to visualization, remembering, and even discovering important features about the mathematical model. It seems likely that even the purest of mathematicians, dealing with the most abstract mathematical systems, employ, consciously or unconsciously, concrete mental images as the carriers of their thought patterns. We shall develop explicitly some concrete representations to aid in thinking about the abstract mathematical model; these in turn will help us to think clearly and systematically about the patterns abstracted from (i.e., lifted out of) our experience of the real world of phenomena.

Much of our attention and effort will be devoted to establishing the mathematical model B and to a study of its characteristics. In doing this, we shall be concerned to relate the various aspects of the mathematical model to corresponding aspects of the auxiliary model C, as an aid to learning and remembering the important characteristics of the mathematical model. Our real goal as engineers and scientists, however, is to use our knowledge of the mathematical model as an aid in dealing with problems in the real world. This means that we must be able to move from one part of our system to another with freedom and insight. For clarity and emphasis, we may find it helpful to indicate the important transitions in the following manner:

A → B: Translation of real-world concepts, relations, and problems into terms of the concepts of the mathematical model.

B → A: Interpretation of the mathematical concepts and results in terms of real-world phenomena. This may be referred to as the primary interpretation.

B → C: Interpretation of the mathematical concepts and results in terms of various concrete representations (mass picture, mapping concepts, etc.). This may be referred to as a secondary interpretation.

C → B: The movement from the auxiliary model to the mathematical model exploits the concrete imagery of the former to aid in discovering new results, remembering and extending previously discovered results, and evolving strategies for the solution of model problems.

A ↔ C: The correlation of features in A and C often aids both the translation of real-world problems into model problems and the interpretation of the mathematical results. In other words, the best path from A to B or from B to A may be through C.

The first element to be modeled is the relative frequency of the occurrence of an event. It is an empirical fact that in many investigations the relative frequencies of occurrence of various events exhibit a remarkable statistical regularity when a large number of trials are made. This feature of many games of chance served (as we noted in Chap. 1) to motivate much of the early development of probability theory. In fact, many of the questions posed by gamblers to the mathematicians of their time were evoked by the fact that observed frequencies deviated slightly from that which they expected.

This phenomenon of constant relative frequencies is in no way limited to games of chance. Modern statistical communication theory, for example, makes considerable use of the remarkable statistical regularities which characterize languages. The relative frequencies of occurrence of symbols of the alphabet (including all symbols such as space, numbers, punctuation, etc.), of symbol pairs, triples, etc., and of words, word combinations, etc., have been studied extensively and are known to be quite stable. Of course, exceptions are known. For example, Pierce [1961] quotes a paragraph from a novel which is written without the use of a single letter E in its entire 267 pages. In ordinary English, the letter E is used more frequently than any other letter of the alphabet. Such marked deviations from the usual patterns require special effort or indicate unusual situations. In the normal course of affairs one may expect rather close adherence to the common patterns of statistical regularity.

The whole life insurance industry is based upon so-called mortality tables, which predict the relative frequency of deaths at various ages (or give information equivalent to specifying these frequencies). In a similar manner, reliability engineering makes extensive use of the life expectancy of articles manufactured by a given process. These life expectancies are based on the equivalent of mortality tables for the products manufactured. Closely related is the theory of errors, in which the relative frequencies of errors of various magnitudes may be assumed to follow quite closely certain well-known patterns.

The empirical fact of a stable (i.e., constant) relative frequency serves as the basis in experience for choosing a mathematical model of probability. This empirical fact cannot in any way imply logically the existence of a probability number. We set up a model by postulating the existence of an ideal number, which we call the probability of an event. If this is a sound model and we have chosen the number properly, we may expect the relative frequency of occurrence of the event in a large number of trials to lie quite close to this number. But we cannot “prove” the existence in the real world of such an ideal limiting frequency. We simply set up a model. We then examine the “behavior” of the model, interpret the probabilities as relative frequencies, check or test these probabilities against observed frequencies (where possible), and try to determine experimentally whether the model fits the real-world situation to be modeled. We can build up confidence in our model; we cannot prove or disprove our model. If it works for enough different problems, we move with considerable confidence to new problems. Continued success tends to increase our confidence, so that we come to place a large degree of reliance upon the adequacy of the model to predict behavior in the real world.

If the feature of the real world to be represented by probability is the relative frequency of occurrence of a given event in a large number of trials, then the probability must be a number associated with this event. These numbers must obey the same laws as relative frequencies. We can readily list several elementary properties which must therefore be possessed by the probability model.

1. If an event is sure to occur, its relative frequency, and hence its probability, must be unity. Similarly, if an event cannot possibly occur, its probability must be 0.

2. Probabilities are real numbers, lying between 0 and 1.

3. If two events are mutually exclusive (i.e., cannot both happen on any one trial), the probability of the compound event that either one or the other of the original events will occur is the sum of the individual probabilities.

4. The probability that an event will not occur is 1 minus the probability of the occurrence of the event.

5. If the occurrence of one event implies the occurrence of a second event, the relative frequency of occurrence of the second event must be at least as great as that of the first event. Thus the probability of the second event must be at least as great as that of the first event.

Many more such properties could be enumerated. One of the concerns of the model maker is to discover the most basic list, in the sense that the properties included in this list imply as logical consequences all the other desirable or necessary properties. When we come to the formal presentation of our mathematical model in Sec. 2-3, we shall see that the basic list desired is contained in the list of properties above.

In order to realize an economy of expression, we shall need to introduce an appropriate notational scheme to be used in formulating probability statements. Before attempting to do this, however, we should take note of the fact that we have used the term event in the previous discussion without attempting to characterize or represent this concept. Since probability theory deals in a fundamental way with events and various combinations of events, it is desirable that we give attention to a precise formulation of the concept of event in a manner that will be mathematically useful and precise and that will be meaningful in terms of real-world phenomena. We turn our attention to that problem in the next section.

In order to produce a mathematical model of an event, it is necessary to have a clear idea of the situations to which probability theory may be applied. Historically, the ideas developed principally in connection with games of chance. In such games the concept of some sort of trial or test or experiment is fundamental. One draws a card from a deck, throws a pair of dice, or selects a colored ball from a jar. The trial may be composite. A single composite trial may consist of drawing, successively, five cards from a deck to form a hand, or of flipping a coin ten times and noting the sequence of heads and tails that turn up. But in any case, there must be a well-defined trial and a set of possible outcomes. In the coin-flipping trial, for example, it is physically conceivable that the coin may land neither “heads” nor “tails.” It may stand on edge. It is necessary to decide whether the latter eventuality is to be considered a satisfactory performance of the experiment of flipping the coin.

It is perhaps helpful to realize that each of the situations described above is equivalent to drawing balls from a jar or urn. Each throw of a pair of dice, for instance, results in an identifiable outcome. Each such outcome can be represented by a single ball, appropriately marked. Throwing the dice is then equivalent to choosing at random a ball from the urn. Similarly, the composite trial of drawing five cards from a deck may be equivalent to drawing a single ball. Suppose we were to lay out each of the possible hands of five cards, with the cards arranged in the order of their drawing; a picture could be taken of each possible hand; the set of all pictures (or a set of balls, each with one of the pictures of a hand printed thereon) could be put in a large urn. Drawing a single picture (or ball) from the urn would then be equivalent to the composite trial of drawing five cards from the deck to form a hand.

The concept of the outcome of a trial, which is so natural to a game of chance, is readily extended to situations of more practical and scientific importance. One may be sampling opinions or recording the ages at death of members of a population. One may be making a physical measurement in which the precise outcome is uncertain. Or one may be receiving a message on a communication system. In each case, the result observed may be considered to be the result of a trial which yields one of a set of possible outcomes. This set of possible outcomes is clearly defined, in principle, at least. Even here we may use the mental image of drawing balls from a jar, provided we are willing to suppose, our jar may contain an infinite number of balls. Making a trial, no matter what physical procedure is required, is then equivalent to choosing a ball from the jar.

We have a simple mathematical counterpart of a jar full of balls. This is the fundamental mathematical notion of an abstract set of elements. We consider a basic set S of elements  . Each element

. Each element  represents one of the possible outcomes of the trial. The basic set, or basic space (also commonly called the sample space), S represents the collection of all allowed outcomes. The single element

represents one of the possible outcomes of the trial. The basic set, or basic space (also commonly called the sample space), S represents the collection of all allowed outcomes. The single element  is referred to as an elementary outcome. Regardless of the nature of the physical trial referred to, the performance of a trial is represented mathematically as the choice of an element

is referred to as an elementary outcome. Regardless of the nature of the physical trial referred to, the performance of a trial is represented mathematically as the choice of an element  . In many considerations it is, in fact, convenient to refer to the element

. In many considerations it is, in fact, convenient to refer to the element  as the choice variable.

as the choice variable.

Events

Probability theory refers to the occurrence of events. Let us now see how this idea may be expressed in terms of sets and elements. Suppose, for example, a trial consists of drawing a hand of five cards from an ordinary deck of playing cards. We may think in terms of our equivalent urn experiment in which each ball has the picture of one of the possible hands, with one ball for each such hand. Selecting a ball is equivalent to drawing the hand pictured thereon. What is meant by the statement “A hand with one or more aces is drawn”? This can mean but one thing. The ball drawn is one of those having a picture of a hand with one or more aces. But the set of balls satisfying this condition constitutes a well-defined subset of the set of all the balls in the jar.

Our mathematical counterpart of the jar full of balls is the abstract basic space S. There is an element  corresponding to each ball in the urn. We suppose that it is possible, in principle at least, to identify each element in terms of the properties of the ball (and hence the hand) it represents. The occurrence of the event is the selection of one of the elements in the subset of those elements which have the properties determining the event. In our example, this is the property of having one or more aces. Viewed in this way, it seems natural to identify the event with the subset of elements (balls or hands) having the desired properties. The occurrence of the event is the act of choosing one of the elements in this subset. In probability investigations, we suppose the choice is “random,” in the sense that it is uncertain which of the balls or elements will be chosen before the actual trial is made. It should be apparent that the occurrence of events is not dependent upon this concept of randomness. A deliberate choice—say, one in which the balls are examined in the process of selection—would still lead to the occurrence or nonoccurrence of an event.

corresponding to each ball in the urn. We suppose that it is possible, in principle at least, to identify each element in terms of the properties of the ball (and hence the hand) it represents. The occurrence of the event is the selection of one of the elements in the subset of those elements which have the properties determining the event. In our example, this is the property of having one or more aces. Viewed in this way, it seems natural to identify the event with the subset of elements (balls or hands) having the desired properties. The occurrence of the event is the act of choosing one of the elements in this subset. In probability investigations, we suppose the choice is “random,” in the sense that it is uncertain which of the balls or elements will be chosen before the actual trial is made. It should be apparent that the occurrence of events is not dependent upon this concept of randomness. A deliberate choice—say, one in which the balls are examined in the process of selection—would still lead to the occurrence or nonoccurrence of an event.

In general, an event may be defined by a proposition. The event occurs whenever the proposition is true about the outcome of a trial. On the other hand, a proposition about elements defines a set in the following manner. Suppose the symbol πA(·) represents a proposition about elements  in the basic set S. In the example above, the symbol πA(

in the basic set S. In the example above, the symbol πA( ) is understood to mean “the hand represented by

) is understood to mean “the hand represented by  has one or more aces.” This statement may or may not be true, depending upon which element

has one or more aces.” This statement may or may not be true, depending upon which element  is being considered. Let us consider the set A of those elements

is being considered. Let us consider the set A of those elements  for which the proposition πA(

for which the proposition πA( ) is true. We use the symbolic representation A = {

) is true. We use the symbolic representation A = { :πA(

:πA( )} to mean “A is the set of those

)} to mean “A is the set of those  for which the proposition πA(

for which the proposition πA( ) is true.” Thus, in our example, A is the set of those hands which have one or more aces. We identify this set with the event A. The event A occurs iffi (if and only if) the

) is true.” Thus, in our example, A is the set of those hands which have one or more aces. We identify this set with the event A. The event A occurs iffi (if and only if) the  chosen is a member of the set A.

chosen is a member of the set A.

Let us illustrate further. Consider the result of flipping a coin three times. Each resulting sequence may be represented by writing a sequence of H’s and T’s, corresponding to throws of heads or tails, respectively. Thus the symbol THT indicates a sequence in which a tail appears on the first and third throws and a head appears on the second throw. There are eight elementary outcomes, each of which is represented by an element. We may list them as follows:

Consider the event that a tail turns up on the second throw. This is the event T2 = { 0,

0,  1,

1,  4,

4,  5}. This event occurs if any one of the elements in this list is chosen, which means that any one of the sequences represented by

5}. This event occurs if any one of the elements in this list is chosen, which means that any one of the sequences represented by  0,

0,  1,

1,  4, or

4, or  5 is thrown. Similarly, the set H1 = {

5 is thrown. Similarly, the set H1 = { 4,

4,  5,

5,  6,

6,  7} corresponds to the event that a head is obtained on the first throw. Many other events could be defined and the elements belonging to them listed in a similar manner.

7} corresponds to the event that a head is obtained on the first throw. Many other events could be defined and the elements belonging to them listed in a similar manner.

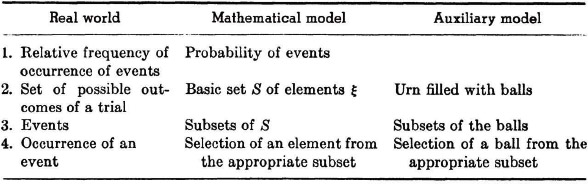

We have made considerable progress in developing a mathematical model for probability theory. The essential features may be outlined as follows:

Some aspects of the formulation of the model have not been made precise as yet. Some of the flexibility and possibilities of the representation of events as sets have not yet been demonstrated. But considerable groundwork has been laid.

Special events and combinations of events

In probability theory we are concerned not only with single events and their probabilities, but also with various combinations of events and relations between them. These combinations and relations are precisely those developed in elementary set theory. Thus, having introduced the concept of an event as a set, we have at our disposal an important mathematical resource in the theory of sets. In the following treatment, it is assumed that the reader is reasonably familiar with elementary topics in set theory. A brief exposition of the facts needed is given in Appendix B, for ready reference.

Before considering combinations of events, it may be well to note the following fact. When a trial or choice is made, one and only one element  is chosen—i.e., only one elementary outcome is observed—but a large number of events may have occurred. Suppose, in throwing a pair of dice, the pair of numbers 2, 4 turn up. Only one of the possible outcomes of the trial has been selected. But the following events (among many others) have occurred:

is chosen—i.e., only one elementary outcome is observed—but a large number of events may have occurred. Suppose, in throwing a pair of dice, the pair of numbers 2, 4 turn up. Only one of the possible outcomes of the trial has been selected. But the following events (among many others) have occurred:

1. A “six” is thrown.

2. A number less than seven is thrown.

3. An even number is thrown.

4. Both of the numbers appearing are even numbers.

5. The larger number in the pair thrown is twice the smaller.

These are distinct events, and not just different names for the same event, as may be verified by enumerating the elements in each of the events described. Each of these events has occurred; yet only one outcome has been observed.

It is important to distinguish between the elementary outcome  and the elementary event {

and the elementary event { } whose only member is the elementary outcome

} whose only member is the elementary outcome  . Whenever the result of a trial is the elementary outcome

. Whenever the result of a trial is the elementary outcome  , the elementary event {

, the elementary event { } occurs; so also, in general, do many other events. We have here an example of the necessity in set theory of distinguishing logically between an element

} occurs; so also, in general, do many other events. We have here an example of the necessity in set theory of distinguishing logically between an element  and the single-element set {

and the single-element set { }. The reader should be warned of an unfortunate anomaly in terminology found in much of the literature. In his fundamental work, Kolmogorov [1933, 1956] used the term elementary event for the elementary outcome

}. The reader should be warned of an unfortunate anomaly in terminology found in much of the literature. In his fundamental work, Kolmogorov [1933, 1956] used the term elementary event for the elementary outcome  ; he used no specific term for the event {

; he used no specific term for the event { }. Although he does not confuse logically the elementary outcome

}. Although he does not confuse logically the elementary outcome  with the event {

with the event { }, his terminology is inconsistent at this point. We shall attempt to be consistent in our usage. A little care will prevent confusion in reading the literature which follows Kolmogorov’s usage.

}, his terminology is inconsistent at this point. We shall attempt to be consistent in our usage. A little care will prevent confusion in reading the literature which follows Kolmogorov’s usage.

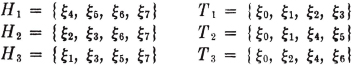

Let us consider now several special events, combinations of events, and relations between events. We shall illustrate these by reference to the coin-flipping experiment described earlier in this section. We let Hk be the event of a head on the kth throw in the sequence (k is 1, 2, or 3 in this experiment) and Tk be the event of a tail on the kth throw. For convenience, we list the corresponding sets as follows:

Suppose we are interested in the compound event of “a head on the first throw or a tail on the second throw.” To what set of elementary outcomes does this correspond? The event “a head on the first throw” is the set of elementary outcomes H1; the event “a tail on the second throw” is the set of elementary outcomes T2. The compound event occurs iffi an element from either of these sets is chosen. Thus the desired event is the set of elementary outcomes H1 ∪ T2, that is, the set of all those elementary outcomes which are in at least one of the events H1, T2. The argument carried out for this illustration could be repeated without essential change for any two events A and B. Thus, for any pair of events A, B, the event “A or B” is the event A ∪ B. We commonly refer to this as the or event; also, we may use the language of sets and refer to this as the union of the events A, B.

In a similar way, the event of “a head on the first throw and a tail on the second throw” can be represented by the intersection H1T2 = H1 ∩ T2. From the point of view of set theory, this is the set of those elements which are in both H1 and T2. This is precisely what is required by the joint occurrence of H1 and T2; the element chosen must belong to both of the events (sets) H1 and T2, which is the same as saying that it belongs to H1T2. We may refer to this as the joint event, or as the intersection of the events, H1 and T2. Again, the argument does not depend upon the specific illustration, and we may speak of the joint event AB for any two events A and B.

If we consider the intersection H1T1 of the events H1 and T1, we find that it contains no element; i.e., there is no sequence which has both a head on the first throw and a tail on the first throw. As a set, the intersection H1T1 is the empty set  ; as an event, the intersection is impossible, since no possible outcome can meet the conditions which determine the event. It is immediately evident that the impossible event always corresponds to the empty set. In abstract set theory, the symbol

; as an event, the intersection is impossible, since no possible outcome can meet the conditions which determine the event. It is immediately evident that the impossible event always corresponds to the empty set. In abstract set theory, the symbol  is commonly used to represent the empty set; we shall also use it to indicate the impossible event. When two events A,B have the relation that their joint occurrence is impossible, they are commonly called mutually exclusive events. Thus two mutually exclusive events are characterized by having an empty intersection; in the language of sets, we may refer to these as disjoint events.

is commonly used to represent the empty set; we shall also use it to indicate the impossible event. When two events A,B have the relation that their joint occurrence is impossible, they are commonly called mutually exclusive events. Thus two mutually exclusive events are characterized by having an empty intersection; in the language of sets, we may refer to these as disjoint events.

It may be noted that the set T1 has as members all the elements that are not in H1. T1 is thus the set known as the complement of H1, designated H1c. The event T1 occurs whenever H1 fails to occur—if a head does not appear on the first throw, a tail must appear there. We speak of the event H1c as the complementary event for H1. Inspection of the list above shows that, for each k = 1, 2, or 3, we must have Hkc = Tk and Tkc = Hk, as common sense requires. If we take the event Hk ∪ Tk for any k, we have the set of all possible outcomes. This means that the event Hk ∪ Tk is sure to happen. Every sequence must have either a head or a tail (but not both) in the kth place. The event S, corresponding to the basic set of all possible outcomes, is thus naturally referred to as the sure event.

We have noted that two events A and B may stand in the relation of being mutually exclusive, in the sense that if one occurs the other cannot occur on the same trial. At the other extreme, we may have the situation in which the occurrence of the event A implies the occurrence of the event B. This, in terms of set theory, means that set A is contained in set B, a relationship indicated by the notation A ⊂ B. We shall utilize the same notation for events; if the occurrence of event A requires the occurrence of event B (this means that every element  in A is also in B), we shall indicate the fact by writing A ⊂ B. In keeping with the custom in set theory, we shall suppose the impossible event

in A is also in B), we shall indicate the fact by writing A ⊂ B. In keeping with the custom in set theory, we shall suppose the impossible event  implies any event A. It certainly is true that every element which is in event

implies any event A. It certainly is true that every element which is in event  is also in event A. The convention involved in this seemingly pointless statement, denoted in symbols by

is also in event A. The convention involved in this seemingly pointless statement, denoted in symbols by  ⊂ A for any A ⊂ S, is often useful in the symbolic manipulation of events.

⊂ A for any A ⊂ S, is often useful in the symbolic manipulation of events.

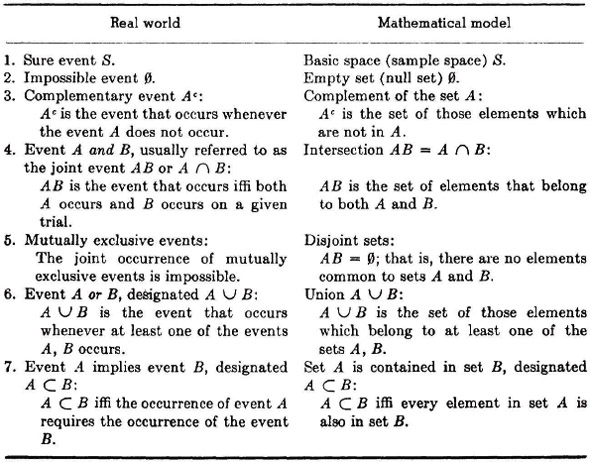

Because of the essential identity of the notions of sets and events, as we have formulated our model, we shall find it convenient to use the language of sets and that of events interchangeably, or even in a manner which mixes the terminology. We have, in fact, already done so in the discussions of the preceding paragraphs. The resulting usage is so natural and obvious that no confusion is likely to result.

A systematic tabulation of the terminology and concepts discussed above may be useful for emphasis and for reference. We emphasize the fact that the language of events comes largely from our ordinary experience by listing various terms in this language in the “real-world” column. In the same column, however, we include symbols which are taken from the set theoretic formulation of our concept of events. In spite of some anomalies here, the tabulation may be useful in making the tie between the real world, where events actually happen, and the mathematical model, in which they are represented in an extremely useful way.

We have extended our mathematical model by the use of the theory of sets. An important addition to our auxiliary model is provided by the Venn diagrams (Appendix B), used as an aid in visualizing set combinations and relations. These now afford a means of visualizing combinations of events and relations among them. The Venn diagram, in fact, provides a somewhat simplified version of the urn or jar model. Points in the appropriate regions on the Venn diagram correspond to the balls in the urn. Various subsets may be designated by designating appropriate regions. Determining the outcome of a trial corresponds to picking a point on the Venn diagram. The occurrence of an event A corresponds to the choice of one of that set of points determined by the region delineating the event A. We shall illustrate the use of Venn diagrams in the following discussion.

Classes of events

In the foregoing discussion, events are defined as sets which are subsets of the basic space S; relationships between pairs of events are specified in terms of the appropriate concepts of set theory. These relations and concepts may be extended to much larger (finite or infinite) classes of events in a straightforward manner, utilizing the appropriate features of abstract set theory. Thus we may speak of finite classes of events, sequences of events, mutually exclusive (or disjoint) classes of events, monotone classes of events, etc. The and and or (intersection and union) combinations may be extended to general classes of events. Where necessary or desirable, we make the modifications of terminology required to adapt to the language of events.

Notation for classes

It frequently occurs that the most difficult part of a mathematical development is to find a way of stating precisely and concisely various conditions or relationships pertinent to the problem. To this end, skill in the use of mathematical notation may play a key role. In dealing with classes of events (sets), we shall often find it expedient to exploit the notational devices described below.

A class of events is a set of events. We shall ordinarily use capital letters A, B, C, etc., to designate events and shall use capital script letters  ,

,  ,

,  , etc., to indicate classes of events. To designate the membership of a class, we use an adaptation of the notation employed for subsets of the basic space S. For example,

, etc., to indicate classes of events. To designate the membership of a class, we use an adaptation of the notation employed for subsets of the basic space S. For example,

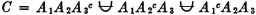

is the class having the four member events listed inside the braces.

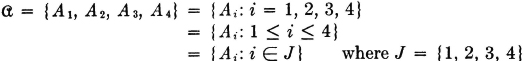

One of the common means of designating the member events in a class of events is to use the same letter for all events in the class and to distinguish between the various events in the class by appropriate subscripts (or perhaps in some cases, superscripts). When this scheme is used, the membership of the class may be designated by an appropriate specification of which indices are to be allowed. This means that we designate the membership of the class when we specify the index set, i.e., the set of all those indices such that the corresponding event is a member of the class. For example, suppose  is the class consisting of four member events A1, A2, A3, and A4. Then we may write

is the class consisting of four member events A1, A2, A3, and A4. Then we may write

We may thus (1) list the member events, (2) list the indices in the index set, (3) give a condition determining the indices in the index set, or (4) simply indicate symbolically the index set, which is then described elsewhere. It is obvious that the last two schemes may be used to advantage when the class has a very large (possibly infinite) number of members. Also, the symbolic designation of the index set J is useful in expressions in which the index set may be any of a large class of index sets. For example, we may simply require that J be any finite index set. We say class  is countable iffi the number of member sets is either finite or countably infinite, in which case the index set J is a finite or countably infinite set.

is countable iffi the number of member sets is either finite or countably infinite, in which case the index set J is a finite or countably infinite set.

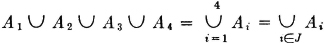

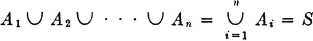

Suppose we wish to consider the union of the events in the class  just described. This is the event that occurs iffi at least one of the member events occurs. We speak of this event as the union of the class and designate it in symbols in one of the following ways:

just described. This is the event that occurs iffi at least one of the member events occurs. We speak of this event as the union of the class and designate it in symbols in one of the following ways:

The latter notation is particularly useful when we may want to consider a general statement true for a large class of different index sets.

If the class of events under consideration is a mutually exclusive class, we may designate the union of the class by replacing the symbol ∪ by the symbol  . Use of the latter symbol not only indicates the union of the class, but implies the stipulation “the events are mutually exclusive.” Thus, when we write

. Use of the latter symbol not only indicates the union of the class, but implies the stipulation “the events are mutually exclusive.” Thus, when we write

we are thereby adding the requirement that the Ai be mutually exclusive. It is not incorrect in such a case, however, to use the ordinary union symbol ∪ if we are not concerned to display the fact that the events are mutually exclusive.

In a similar way, we may deal with the intersection of the events of the class  . This is the event that occurs iffi all the member events occur. We speak of this event as the intersection of the class (or sometimes as the joint event of the class) and designate it in symbols in one of the following ways:

. This is the event that occurs iffi all the member events occur. We speak of this event as the intersection of the class (or sometimes as the joint event of the class) and designate it in symbols in one of the following ways:

Extensions of this notational scheme to various finite and infinite cases are immediate and should be obvious.

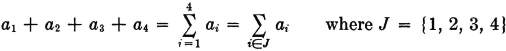

Use of the index-set notation is also useful in the case of dealing with sums of numbers. Suppose a1, a2, a3, and a4 are numbers. We may designate the sum of these numbers in any of the following ways:

Here we have used the conventional sigma Σ to indicate summation. Extensions to sums of larger classes of numbers parallels the extension of the convention for unions of events to larger classes. We shall find this notational scheme extremely useful in later developments.

Partitions

As our theory develops, we shall find that expressing an event as the union of a class of mutually exclusive events plays a particularly important role. In anticipation of this fact, we introduce and illustrate some concepts and terminology which will be quite useful. As a first step, we make the following

Definition 2-2a

A class of events is said to form a complete system of events if at least one of them is sure to occur.

It is immediately apparent that the class  = {A1, A2, …, An} consisting of the indicated n events is a complete system iffi

= {A1, A2, …, An} consisting of the indicated n events is a complete system iffi

If any element is chosen, it must be in at least one of the Ai (it could be in more than one). A class thus forms a complete system iffi the union of the class is the sure event.

One of the simplest complete systems is formed by taking any event A and its complement Ac. One of these two events is sure to occur. Suppose, for example, that during a storm a photographer sets up his camera and opens the shutter for 10 seconds. Let the event A be the event that one or more lightning strokes are recorded. Then the event Ac is the event that no lightning stroke is recorded. One of the two events A or Ac must occur, for A ∪ Ac = S, the sure event.

As a more complicated complete system, suppose we take a sample of 10 electrical resistors from a large stock. We suppose this choice of 10 resistors corresponds to one trial. The resistors are tested individually to see that their resistance values lie within specified limits of the nominal value marked upon them. Let Ak, for any integer k between 0 and 10, be the event that, for the sample taken, k or more of the resistors meet specification. Then the 11 events A0, A1, …, A10 form a complete system of events. In any sample taken, some number (possibly zero) of resistors must meet specification. Suppose in a given sample this number is three. Then the element  representing this particular sample is an element of each of the events A3, A2, A1, and A0 and hence is an element of the union of all the events. We may note that we have a class of events satisfying A0 ⊃ A1 … ⊃ A10; that is, we have a monotone-decreasing class. As a matter of fact, the event A0 is the sure event, for every sample must have zero or more resistors which meet specification.

representing this particular sample is an element of each of the events A3, A2, A1, and A0 and hence is an element of the union of all the events. We may note that we have a class of events satisfying A0 ⊃ A1 … ⊃ A10; that is, we have a monotone-decreasing class. As a matter of fact, the event A0 is the sure event, for every sample must have zero or more resistors which meet specification.

A more interesting class of events in the resistor-sampling problem is the class  = {Bk: 0 ≤ k ≤ 10}, where for each k the event Bk is the event that the sample has exactly k resistors which meet specification. In this case, the different events in the class are mutually exclusive, since no sample can have exactly k and also exactly j ≠ k resistors which meet tolerance. Thus

= {Bk: 0 ≤ k ≤ 10}, where for each k the event Bk is the event that the sample has exactly k resistors which meet specification. In this case, the different events in the class are mutually exclusive, since no sample can have exactly k and also exactly j ≠ k resistors which meet tolerance. Thus  is a mutually exclusive class. Now it is apparent that the class

is a mutually exclusive class. Now it is apparent that the class  forms a complete system of events, for every element corresponding to a sample must belong to one (and only one) of the Bk. The particular k in each case is determined by counting the number of resistors in the sample which meet specification.

forms a complete system of events, for every element corresponding to a sample must belong to one (and only one) of the Bk. The particular k in each case is determined by counting the number of resistors in the sample which meet specification.

Complete systems of events in which the members are mutually exclusive are so important that we find it convenient to give them a name as follows:

Definition 2-2b

A class of events is said to form a partition iffi one and only one of the member events must occur on each trial.

It is apparent that the class of events  = {Bk: 0 ≤ k ≤ 10} defined in the resistor-sampling problem above is a partition; so also is the complete system of events {A, Ac} illustrated in the photography example. It is also apparent that a partition is characterized by the two properties

= {Bk: 0 ≤ k ≤ 10} defined in the resistor-sampling problem above is a partition; so also is the complete system of events {A, Ac} illustrated in the photography example. It is also apparent that a partition is characterized by the two properties

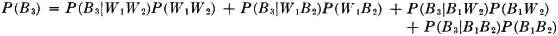

Fig. 2-2-1 Venn-diagram representation of a partition.

1. The class is mutually exclusive.

2. The union of the class is the sure event S.

The name partition is suggested by the fact that a partition divides the Venn diagram representing the basic space S into nonoverlapping sets. While the members of the partition may be quite complicated sets (representing events), the essential features of a partition are exhibited in a schematic representation such as that in Fig. 2-2-1. One deficiency of such a representation is that it is not clear to which set the points on the boundary are assigned. If it is kept in mind that disjoint sets are being represented, this need cause no difficulty in interpretation.

We may extend the notion of a partition in the following useful way:

Definition 2-2c

A mutually exclusive class  of events whose union is the event A is called a partition of the event A (or sometimes a decomposition of A).

of events whose union is the event A is called a partition of the event A (or sometimes a decomposition of A).

It is obvious that a partition of the sure event S is a partition in the sense of Def. 2-2b. A Venn diagram of a partition of an event A is illustrated in Fig. 2-2-2.

As an illustration of the partition of an event, we consider some partitions of the union of two events. A certain type of rocket is known to fail for one of two reasons: (1) failure of the rocket engine because the fuel does not burn evenly or (2) failure of the guidance system. Let the elementary outcome  correspond to the firing of a rocket. We let A be the event the rocket fails because of engine malfunction and B be the event the rocket fails because of guidance failure. The event F of a failure of the rocket is thus given by F = A ∪ B. Now we cannot assert that events A and B are mutually exclusive. We may assert, however, that

correspond to the firing of a rocket. We let A be the event the rocket fails because of engine malfunction and B be the event the rocket fails because of guidance failure. The event F of a failure of the rocket is thus given by F = A ∪ B. Now we cannot assert that events A and B are mutually exclusive. We may assert, however, that

Fig. 2-2-2 Venn-diagram representation of a partition of an event A.

We thus have three partitions of A ∪ B, namely, {A, AcB}, {B, ABc}, and {AcB, AB, ABc}. The first and third of these are illustrated in Fig. 2-2-3.

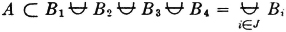

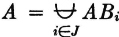

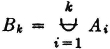

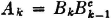

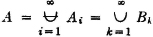

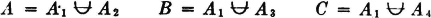

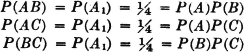

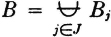

As a second example, which is quite important in probability theory, consider a mutually exclusive class of events

where in this case J = {1, 2, 3, 4}. Suppose

Then the class {AB1, AB2, AB3, AB4} = {ABi: i ∈ J} is a mutually exclusive class whose union is the event A. Thus {ABi: i ∈ J} is a partition of the event A. If the class  is a partition (of the sure event S), then every A is contained in the union of the class. This manner of producing a partition of an event A by taking the intersections of A with the members of a partition is illustrated in the Venn diagram of Fig. 2-2-4. Note that some of the members of the partition may be impossible events (empty sets). These may be ignored when the fact is known. They do not affect the union if included, since they contribute no elementary outcomes. The use of the general-index-set notation in the argument above shows that the result is not limited to the special choice of the mutually exclusive sets.

is a partition (of the sure event S), then every A is contained in the union of the class. This manner of producing a partition of an event A by taking the intersections of A with the members of a partition is illustrated in the Venn diagram of Fig. 2-2-4. Note that some of the members of the partition may be impossible events (empty sets). These may be ignored when the fact is known. They do not affect the union if included, since they contribute no elementary outcomes. The use of the general-index-set notation in the argument above shows that the result is not limited to the special choice of the mutually exclusive sets.

Fig. 2-2-3 Partitions of the union of two events A and B.

Fig. 2-2-4 A partition of an event A produced by the intersection with a partition of the sure event S.

Because of its importance, we state the general case as

Theorem 2-2A

Let  = {Bi: i ∈ J} be any mutually exclusive (disjoint) class of events. If the event A is such that

= {Bi: i ∈ J} be any mutually exclusive (disjoint) class of events. If the event A is such that  , then the class {ABi: i ∈ J} is a partition of A, so that

, then the class {ABi: i ∈ J} is a partition of A, so that  .

.

We shall return to the important topic of partitions in Sec. 2-7.

Sigma fields of events

We have defined events as subsets of the basic space S and have developed a considerable mathematical system based on these ideas. Our discussion has proceeded as if every subset of S could be considered an event, and hence as if the class  of events could be considered the class of all subsets of S. In the case of a finite number of elementary outcomes, this is the usual procedure. In many investigations in which the basic space is infinite, there are technical mathematical reasons for defining a less extensive class of subsets as the class of events. Fortunately, in applications it is usually not important to examine the question of which of the subsets of S are to be considered as events. For the purposes of this book, we shall simply suppose that in each case a suitable class of subsets of S makes up the class of events. It is usually important to describe carefully the various individual events of interest, but once these are described, they are assumed, without further examination, to belong to an appropriate class.

of events could be considered the class of all subsets of S. In the case of a finite number of elementary outcomes, this is the usual procedure. In many investigations in which the basic space is infinite, there are technical mathematical reasons for defining a less extensive class of subsets as the class of events. Fortunately, in applications it is usually not important to examine the question of which of the subsets of S are to be considered as events. For the purposes of this book, we shall simply suppose that in each case a suitable class of subsets of S makes up the class of events. It is usually important to describe carefully the various individual events of interest, but once these are described, they are assumed, without further examination, to belong to an appropriate class.

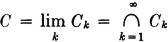

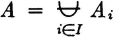

Development of the mathematical foundations has shown that the class of events must be a sigma field of sets. A brief discussion of such classes is given in Appendix B. We simply note here that such classes are closed under the formation of unions, intersections, and complements. This means that if the class of events includes a countable class  = {Ai: i ∈ J}, then the union of

= {Ai: i ∈ J}, then the union of  , the intersection of

, the intersection of  , and the complements of any of the Ai must be events also. This provides the mathematical flexibility required for building up composite events from various events defined in formulating a problem, as subsequent developments show.

, and the complements of any of the Ai must be events also. This provides the mathematical flexibility required for building up composite events from various events defined in formulating a problem, as subsequent developments show.

Having noted some of the general properties which probability numbers must have and having examined the concept of an event in considerable detail, we are now ready to make a formal definition of probability.

In Sec. 2-1 we discussed informally several properties of probability which must hold if we are to consider the probability of an event as the relative frequency of occurrences of the event to be expected in a large number of trials. This informal discussion was restricted by the fact that the concept of an event had not been formulated in a precise way. The subsequent development in Sec. 2-2 was devoted to formulating carefully the concept of an event and relating the resulting mathematical model to the real-world phenomena which it represents. An event is represented as a set of elements from a basic space; each element of that space represents one of the possible outcomes of a trial or experiment under study; the occurrence of an event A corresponds to the choice of an element from that subset of the basic space which represents the event. As a result of this development, we have adopted the following mathematical apparatus:

1. A basic space S, consisting of elements  (referred to as elementary outcomes)

(referred to as elementary outcomes)

2. Events which are subsets of S (more precisely, members of a suitable class of subsets of S)

In this model, the occurrence of the event A is the choice of an element  in the set A (thus

in the set A (thus  is sometimes referred to as the choice variable).

is sometimes referred to as the choice variable).

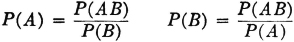

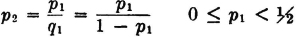

We now wish to introduce a third entity into this model: a mathematical representation of probability. It is desired to do this in a manner that extends the classical probability model and includes it as a special case. In particular, it is desired to preserve the properties which make possible the relative-frequency interpretation. We note first that probability is associated with events rather than with individual outcomes. In the classical case, the probability of an event A is the ratio of the number of elements in A (i.e., the number of ways that A can occur) to the total number of elements in the basic space S (i.e., the total number of possible outcomes). Thus, in our model, probability must be a function of sets; that is, it must associate a number with a set of elements rather than with an individual element.

An examination of the list of properties which probability must have, as developed in Sec. 2-1, shows that probability has the formal properties characterizing a class of functions of sets known in mathematics as measures. If we are able to use this well-known class of set functions as our probability model, we shall be able to appropriate a rich and extensive mathematical theory. It is precisely this notion of probability as a measure function which characterizes the highly successful Kolmogorov model for probability. An examination of the theory of measure shows that it is not necessary to postulate all the properties of probability discussed and listed in Sec. 2-1. Several of these properties can be taken as basic or axiomatic, and the others deduced from them. This trimming of the list of basic properties to a minimum or near minimum often results in an economy of effort in establishing the fact that a set function is a probability measure. It is also an aid in grasping the essential character of the function under study. At this point, we shall simply introduce the definition of probability in a formal manner; the justification for this choice rests upon the many mathematical consequences which follow and upon the success in relating these mathematical consequences in a meaningful way to phenomena of interest in the real world.

Probability systems

We are now ready to make the following

Definition 2-3a

A probability system (or probability space) consists of the triple:

1. A basic space S of elementary outcomes (elements)

2. A class  of events (a sigma field of subsets of S)

of events (a sigma field of subsets of S)

3. A probability measure P(·) defined for each event A in the class  and having the following properties:

and having the following properties:

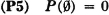

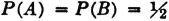

(P1) P(S) = 1 (probability of the sure event is unity)

(P2) P(A) ≥ 0 (probability of an event is nonnegative)

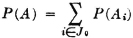

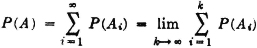

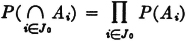

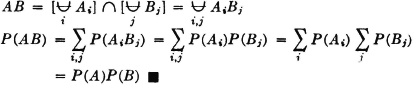

(P3) If  = {Ai: i ∈ J} is a countable partition of A (i.e., a mutually exclusive class whose union is A), then

= {Ai: i ∈ J} is a countable partition of A (i.e., a mutually exclusive class whose union is A), then

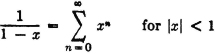

The additivity property (P3) provides an essential characterization of a probability measure P(·). It may be stated in words by saying that the probability of the union of mutually exclusive events is the sum of the probabilities of the individual events. We have seen how this property is required for a finite number of events in order to preserve a fundamental property of relative frequencies. The extension in the mathematical model to an infinity of events is needed to allow the analytical flexibility required in many common problems. For technical mathematical reasons, this property is limited to a countable infinity of events. A countably infinite class is one whose members may be put into a one-to-one correspondence with the positive integers. It is in this sense that the infinity is “countable.” As an example of a simple situation in which an infinity of mutually exclusive events is required for analysis, consider

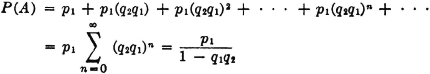

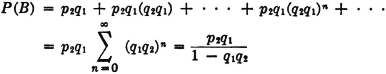

Example 2-3-1

Suppose a game is played in which two players alternately toss a coin. The player who first tosses a “head” is the winner. In principle, the game as defined may continue indefinitely, for it is not possible to specify in advance how many tosses will be required before one player wins. Let Ak be the event that the first player (i.e., the player who makes the first toss in the sequence) wins on the kth toss. We note that a win is impossible for this player on the even-numbered throws. Thus Ak =  for k even. We note that any two events Ak and Aj for different j and k must be mutually exclusive, since we cannot have a head appear for the first time in a given sequence on both the kth and the jth toss. Thus the class

for k even. We note that any two events Ak and Aj for different j and k must be mutually exclusive, since we cannot have a head appear for the first time in a given sequence on both the kth and the jth toss. Thus the class  = {A1, A3, A5, …} = {Ai: i ∈ J0}, where J0 is the set of odd positive integers, is a mutually exclusive class. Also, the event A of the first man’s winning is the union of the class

= {A1, A3, A5, …} = {Ai: i ∈ J0}, where J0 is the set of odd positive integers, is a mutually exclusive class. Also, the event A of the first man’s winning is the union of the class  . This means that the first man wins iffi he wins on one of the odd-numbered throws in the sequence which corresponds to the compound trial defining the game. We thus have

. This means that the first man wins iffi he wins on one of the odd-numbered throws in the sequence which corresponds to the compound trial defining the game. We thus have

If we can calculate the probabilities P(Ai), we can determine the probability of the event A by the relation

We shall see later that the P(Ai) may be determined quite easily under certain natural assumptions.

We shall encounter a great many problems in which it is desirable to be able to deal with an infinity of events.

The example above illustrates a basic strategy in dealing with probability problems. It is desired to calculate the probability P(A) that the first man wins the game. The event A can be expressed as the union of the mutually exclusive events Ak. Under the usual conditions and assumptions (to be discussed later), it is easy to evaluate the probabilities P(Ak). Evaluation of P(A) is then made by use of the fundamental additivity rule. The key to the strategy consists in finding a partition of the event A such that the probabilities of the member events of the partition may be determined readily.

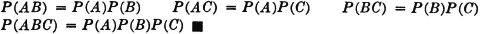

Before considering some of the properties of the probability measure P(·) which follow as consequences of the three axiomatic properties used in the definition, let us verify the fact that the new definition includes the classical probability as a special case. In order to do this, we must show that the three basic properties hold for the classical probability measure. In the classical case, the basic space has a finite number of elements and the class of events is the class of all subsets. Let the symbol n(A) indicate the number of elements in event A; that is, n(A) is the number of ways in which event A can occur. For disjoint (mutually exclusive) events, n(A  B) = n(A) + n(B). The classical probability measure is defined by the expression P(A) = n(A)/n(S). From this it follows easily that

B) = n(A) + n(B). The classical probability measure is defined by the expression P(A) = n(A)/n(S). From this it follows easily that

The property (P3) can easily be extended to any finite number of mutually exclusive events. In the case of a finite basic space, there can be only a finite number of nonempty, mutually exclusive events. The demonstration shows that the axioms are consistent, in the sense that there is at least one probability system satisfying the axioms. As we shall see, there are many others.

Other elementary properties

In order that the probability of an event be an indicator of the relative frequency of occurrence of that event in a large number of trials, it is immediately evident that several properties are required of the probability measure. Several of these are listed near the end of Sec. 2-1. In defining probability, we chose from this list three properties which are taken as basic or axiomatic. We now wish to see that the remaining properties in this list may be derived from the three basic properties. In addition, we shall obtain several other elementary properties which are fundamental to the development of the theory and to its application to real-world problems.

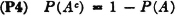

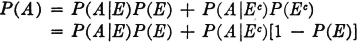

Property 4 in the list in Sec. 2-1 states that the probability that an event will not occur is 1 minus the probability of the occurrence of the event. This follows very easily from the fact that for any event A, the pair {A, Ac} forms a partition; that is, A  Ac = S. From properties (P1) and (P3) we have immediately P(A) + P(Ac) = P(S) = 1, so that we may assert

Ac = S. From properties (P1) and (P3) we have immediately P(A) + P(Ac) = P(S) = 1, so that we may assert

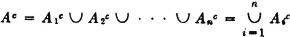

As a special case, we may let A = S, the sure event, so that Ac =  . As a result we have

. As a result we have

The probability of the impossible event is zero. This with property (P1) completes property 1 in Sec. 2-1.

We have now included in our model all the properties listed in Sec. 2-1 except property 5. This property states that if the occurrence of one event A implies the occurrence of a second event B on the same trial (i.e., if A ⊂ B), then P(B) ≥ P(A). We first note that the condition A ⊂ B implies A ∪ B = B, so that B = A  AcB. Since every element in A is also in B, the elements in B must be those which are either in A or in that part of B not in A. By property (P3) we have P(B) = P(A) + P(AcB). Since the second term in the sum is non-negative, by property (P2), it follows immediately that P(A) ≤ P(B). For reference, we state this property formally as follows:

AcB. Since every element in A is also in B, the elements in B must be those which are either in A or in that part of B not in A. By property (P3) we have P(B) = P(A) + P(AcB). Since the second term in the sum is non-negative, by property (P2), it follows immediately that P(A) ≤ P(B). For reference, we state this property formally as follows:

As an illustration of the implications of this fact, consider the following

Example 2-3-2 Serial Systems in Reliability Theory

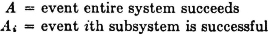

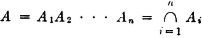

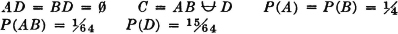

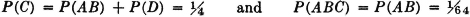

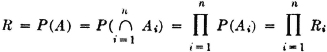

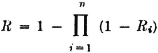

In reliability theory one is concerned with the success or failure of a system or device. Usually the system is analyzed into several subsystems whose success or failure affects the success or failure of the larger system. The larger system is called a serial system if the failure of any one subsystem causes the complete system to fail. Such may be the case for a complex missile system with more than one stage. Failure of any stage results in failure of the entire system. If we let

then

A serial system composed of n subsystems is characterized by

or equivalently by

That is, the system succeeds iffi all the subsystems succeed, or the system fails iffi any one or more of the subsystems fail. This relationship requires A ⊂ Ai for each i = 1, 2, …, n. By property (P6) we must therefore have R ≤ Ri for each i. That is, the serial system reliability is no greater than the reliability of any subsystem.

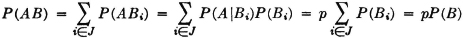

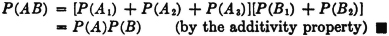

We now wish to develop several other properties of the probability measure which are used repeatedly in both theory and applications. In Sec. 2-2 we discussed in terms of an example the following partitions of the event A ∪ B: {A, AcB}, {B, ABc}, and {AcB, ABc, AB}. The first and third of these are illustrated in Fig. 2-2-3. Use of property (P3) gives several alternative expressions for the probability of A ∪ B.

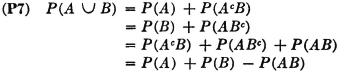

The first three expressions are obtained by direct application of property (P3) to the successive partitions. The fourth expression is obtained by an algebraic combination of the first three in the form P(A ∪ B) + P(A ∪ B) − P(A ∪ B). These expressions may be visualized with the aid of a Venn diagram, as in Fig. 2-3-1. Probabilities of nonoverlapping sets add to give the probability of the union. If one simply adds P(A) + P(B), he includes P(AB) twice; hence it is necessary to subtract P(AB) from the sum, as in the last expression in (P7). If the events A and B are mutually exclusive (i.e., if the sets are disjoint), AB =  and, by property (P5), P(AB) = 0. Thus, for mutually exclusive events, property (P7) reduces to a special case of the additivity rule (P3).

and, by property (P5), P(AB) = 0. Thus, for mutually exclusive events, property (P7) reduces to a special case of the additivity rule (P3).

The next example provides a simple illustration of a property which is frequently useful in analysis.

Example 2-3-3

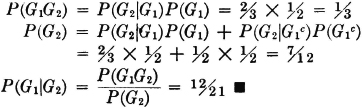

A manufacturing process utilizes a basic raw material supplied by companies 1, 2, and 3. A batch of this material is drawn from stock and used in the manufacturing process. The material is selected at random but is packaged in such a way that the batch drawn is from only one of the suppliers. Let A be the event that the finished product meets specifications. Let Bk be the event that the raw material chosen is supplied by company numbered k (k = 1, 2, or 3). When event A occurs, one and only one of the events B1, B2, or B3 occurs. We thus have the situation formalized in Theorem 2-2A. The Bk form a mutually exclusive class. Occurrence of the event A implies the occurrence of one of the Bk. That is, A  B1

B1  B2

B2  B3. From this it follows that A = AB1

B3. From this it follows that A = AB1  AB2

AB2  AB3. By property (P3) we have P(A) = P(AB1) + P(AB2) + P(AB3). If the information about the selection process and the characteristics of the material supplied by the various companies is sufficient, it may be possible to evaluate each of the joint probabilities P(ABk). A discussion of the manner in which this is usually done must await the introduction of the idea of conditional probability, in Sec. 2-5.

AB3. By property (P3) we have P(A) = P(AB1) + P(AB2) + P(AB3). If the information about the selection process and the characteristics of the material supplied by the various companies is sufficient, it may be possible to evaluate each of the joint probabilities P(ABk). A discussion of the manner in which this is usually done must await the introduction of the idea of conditional probability, in Sec. 2-5.

Fig. 2-3-1 A partition of the union A ∪ B of two events.

The pattern illustrated in this example is quite general. We may utilize the formalism of Theorem 2-2A to give a general statement of this property as follows.

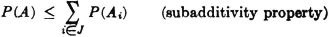

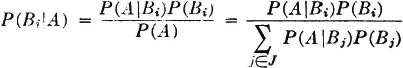

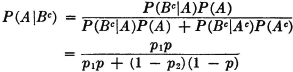

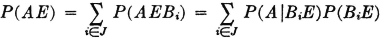

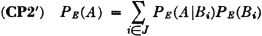

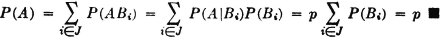

(P8) Let  = {Bi: i ∈ J} be any countable class of mutually exclusive events. If the occurrence of the event A implies the occurrence of one of the Bi (i.e., if

= {Bi: i ∈ J} be any countable class of mutually exclusive events. If the occurrence of the event A implies the occurrence of one of the Bi (i.e., if  ), then

), then  .

.

It should be noted that if the class  is a partition (of the whole space), then the occurrence of any event A implies the occurrence of one of the Bi, so that the theorem is applicable. The validity of the general theorem follows from Theorem 2-2A, which states that the class {ABi: i ∈ J} must be a partition of A, and from the additivity property (P3).

is a partition (of the whole space), then the occurrence of any event A implies the occurrence of one of the Bi, so that the theorem is applicable. The validity of the general theorem follows from Theorem 2-2A, which states that the class {ABi: i ∈ J} must be a partition of A, and from the additivity property (P3).

Example 2-3-4

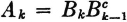

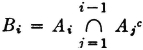

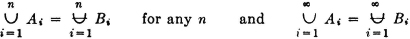

Let us return to the coin-tossing game considered in Example 2-3-1. We let Ak be the event that the first man wins by tossing the first head in the sequence on the kth toss. We may let k be any positive integer; the fact that Ak =  for k even will not affect our argument. Let us now consider the event Bk that the first man wins on the kth toss or sooner. We have a number of interesting relationships. For one thing, Bk ⊂ Bk+1 for any k since, if the man wins on the kth toss or sooner, he certainly wins on the (k + 1)st toss or sooner. Also,

for k even will not affect our argument. Let us now consider the event Bk that the first man wins on the kth toss or sooner. We have a number of interesting relationships. For one thing, Bk ⊂ Bk+1 for any k since, if the man wins on the kth toss or sooner, he certainly wins on the (k + 1)st toss or sooner. Also,  since the man wins on the kth toss or sooner iffi he tosses a head for the first time on some toss between the first and the kth. We note also that

since the man wins on the kth toss or sooner iffi he tosses a head for the first time on some toss between the first and the kth. We note also that  ; that is, the man tosses a head for the first time on the kth toss in the sequence iffi he tosses it on the kth toss or sooner but not on the (k − 1)st toss or sooner. We must also have the event A that the first man wins expressible as

; that is, the man tosses a head for the first time on the kth toss in the sequence iffi he tosses it on the kth toss or sooner but not on the (k − 1)st toss or sooner. We must also have the event A that the first man wins expressible as

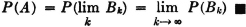

We note that the second union is not the union of a disjoint class. In fact, since the Bk form an increasing sequence, we may assert (see the discussion of limits of sequences in Appendix B) that  . By the additivity property,

. By the additivity property,

The last expression is the definition of the infinite sum given in the second expression. Again, by the additivity property,

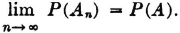

From this we infer the interesting fact that

An examination of the argument in the example above shows that the last result does not depend upon the particular situation described. It depends upon the fact that the Bk form an increasing sequence and that the union of the Bk can be expressed as the disjoint union of the Ak in the manner shown. Further examination shows that if we had started with any increasing sequence {Bk: 1 ≤ k <  } and had defined Ak by the relation

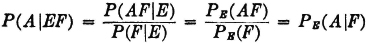

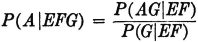

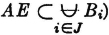

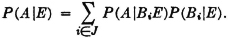

} and had defined Ak by the relation  , the Ak and Bk would have the properties and relationships utilized in the problem. Thus the final result holds generally for increasing sequences.