Linear Operators on Inner Product Spaces

13.1 Introduction

This chapter investigates the space A(V) of linear operators T on an inner product space V. (See Chapter 7.) Thus, the base field K is either the real numbers R or the complex numbers C. In fact, different terminologies will be used for the real case and the complex case. We also use the fact that the inner products on real Euclidean space Rn and complex Euclidean space Cn may be defined, respectively, by

$$\langle u, v\rangle = u^T v \qquad \text{and} \qquad \langle u, v\rangle = u^T \bar{v},$$

where u and v are column vectors.

The reader should review the material in Chapter 7 and be very familiar with the notions of norm (length), orthogonality, and orthonormal bases. We also note that Chapter 7 mainly dealt with real inner product spaces, whereas here we assume that V is a complex inner product space unless otherwise stated or implied.

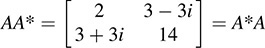

Lastly, we note that in Chapter 2, we used $A^H$ to denote the conjugate transpose of a complex matrix A; that is, $A^H = \bar{A}^T$. This notation is not standard. Many texts, especially advanced texts, use A* to denote such a matrix; we will use that notation in this chapter. That is, now $A^* = \bar{A}^T$.

13.2 Adjoint Operators

We begin with the following basic definition.

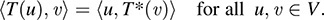

DEFINITION: A linear operator T on an inner product space V is said to have an adjoint operator T* on V if $\langle T(u), v\rangle = \langle u, T^*(v)\rangle$ for every u, v ∈ V.

The following example shows that the adjoint operator has a simple description within the context of matrix mappings.

EXAMPLE 13.1

(a) Let A be a real n-square matrix viewed as a linear operator on Rn. Then, for every u, v ∈ Rn,

$$\langle Au, v\rangle = (Au)^T v = u^T A^T v = \langle u, A^T v\rangle.$$

Thus, the transpose $A^T$ of A is the adjoint of A.

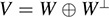

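(b) Let B be a complex n-square matrix viewed as a linear operator on Cn. Then, for every u, v ∈ Cn,

$$\langle Bu, v\rangle = (Bu)^T \bar{v} = u^T B^T \bar{v} = u^T \overline{B^* v} = \langle u, B^* v\rangle.$$

Thus, the conjugate transpose B* of B is the adjoint of B.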
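A minimal numerical sketch of Example 13.1, assuming Python with NumPy is available. It uses the inner products $\langle u, v\rangle = u^T v$ and $\langle u, v\rangle = u^T\bar{v}$; the helper `inner` and the random test data are illustrative choices, not part of the text. It checks the defining identity of the adjoint for the transpose (real case) and the conjugate transpose (complex case), and shows that the plain transpose fails in the complex case.

```python
import numpy as np

def inner(u, v):
    """Inner product <u, v> = u^T conj(v): linear in u, conjugate-linear in v."""
    return np.dot(u, np.conj(v))

rng = np.random.default_rng(0)
n = 4

# (a) Real case: A^T acts as the adjoint of A on R^n.
A = rng.standard_normal((n, n))
u, v = rng.standard_normal(n), rng.standard_normal(n)
real_ok = np.isclose(inner(A @ u, v), inner(u, A.T @ v))

# (b) Complex case: the conjugate transpose B* acts as the adjoint of B on C^n.
B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
w = rng.standard_normal(n) + 1j * rng.standard_normal(n)
z = rng.standard_normal(n) + 1j * rng.standard_normal(n)
B_star = B.conj().T
complex_ok = np.isclose(inner(B @ w, z), inner(w, B_star @ z))

# Using the plain transpose instead generally breaks the identity for complex B.
transpose_fails = not np.isclose(inner(B @ w, z), inner(w, B.T @ z))

print(real_ok, complex_ok, transpose_fails)
```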

Remark: B* may mean either the adjoint of B as a linear operator or the conjugate transpose of B as a matrix. By Example 13.1(b), the ambiguity makes no difference, because they denote the same object.

The following theorem (proved in Problem 13.4) is the main result in this section.

THEOREM 13.1: Let T be a linear operator on a finite-dimensional inner product space V over K. Then

(i) There exists a unique linear operator T* on V such that $\langle T(u), v\rangle = \langle u, T^*(v)\rangle$ for every u, v ∈ V. (That is, T has an adjoint T*.)

(ii) If A is the matrix representation of T with respect to any orthonormal basis S = {ui} of V, then the matrix representation of T* in the basis S is the conjugate transpose A* of A (or the transpose $A^T$ of A when K is real).

We emphasize that no such simple relationship exists between the matrices representing T and T* if the basis is not orthonormal. Thus, we see one useful property of orthonormal bases. We also emphasize that this theorem is not valid if V has infinite dimension (Problem 13.31).
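The role of orthonormality can be seen numerically. In the sketch below (NumPy assumed; all matrices are random illustrative choices), T is an operator on $C^3$ with standard matrix M, so T* has standard matrix M* by Example 13.1(b). Relative to the basis formed by the columns of an invertible matrix Q, the matrix of T is $Q^{-1}MQ$ and that of T* is $Q^{-1}M^*Q$; these agree with "conjugate transpose of the matrix of T" when Q is unitary, but generally not otherwise.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 3
M = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
M_star = M.conj().T  # standard matrix of T*

def matrices_in_basis(Q):
    """Matrices of T and T* relative to the basis given by the columns of Q."""
    Q_inv = np.linalg.inv(Q)
    return Q_inv @ M @ Q, Q_inv @ M_star @ Q

# Orthonormal basis: the columns of a unitary matrix from a QR factorization.
Q_orth, _ = np.linalg.qr(rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))
A, A_adj = matrices_in_basis(Q_orth)
orthonormal_ok = np.allclose(A_adj, A.conj().T)   # matrix of T* is A*

# Non-orthonormal basis: the columns of a generic invertible matrix.
Q_gen = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A, A_adj = matrices_in_basis(Q_gen)
general_differs = not np.allclose(A_adj, A.conj().T)

print(orthonormal_ok, general_differs)
```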

The following theorem (proved in Problem 13.5) summarizes some of the properties of the adjoint.

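THEOREM 13.2: Let T, T1, T2 be linear operators on V and let k ∈ K. Then

(i) $(T_1 + T_2)^* = T_1^* + T_2^*$

(ii) $(kT)^* = \bar{k}T^*$

(iii) $(T_1T_2)^* = T_2^*T_1^*$

(iv) $(T^*)^* = T$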

Observe the similarity between the above theorem and Theorem 2.3 on properties of the transpose operation on matrices.
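The four adjoint rules $(T_1 + T_2)^* = T_1^* + T_2^*$, $(kT)^* = \bar{k}T^*$, $(T_1T_2)^* = T_2^*T_1^*$ and $(T^*)^* = T$ can be spot-checked for matrix operators on $C^n$, where the adjoint is the conjugate transpose. This is a sketch assuming NumPy; the helper names `adj` and `rand_c` and the random matrices are illustrative, and a check on random data is evidence rather than proof.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 3

def rand_c(shape):
    """Random complex array (illustrative test data)."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

def adj(X):
    """Adjoint of a matrix operator on C^n: its conjugate transpose."""
    return X.conj().T

T, T1, T2 = rand_c((n, n)), rand_c((n, n)), rand_c((n, n))
k = complex(2.0, -3.0)

checks = {
    "(i) sum": np.allclose(adj(T1 + T2), adj(T1) + adj(T2)),
    "(ii) scalar": np.allclose(adj(k * T), np.conj(k) * adj(T)),
    "(iii) product": np.allclose(adj(T1 @ T2), adj(T2) @ adj(T1)),
    "(iv) double adjoint": np.allclose(adj(adj(T)), T),
}
print(checks)
```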

Linear Functionals and Inner Product Spaces

Recall (Chapter 11) that a linear functional ϕ on a vector space V is a linear mapping ϕ : V → K. This subsection contains an important result (Theorem 13.3) that is used in the proof of the above basic Theorem 13.1.

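Let V be an inner product space. Each u ∈ V determines a mapping û: V → K defined by

$$\hat{u}(v) = \langle v, u\rangle.$$

Now, for any a, b ∈ K and any v1, v2 ∈ V,

$$\hat{u}(av_1 + bv_2) = \langle av_1 + bv_2, u\rangle = a\langle v_1, u\rangle + b\langle v_2, u\rangle = a\hat{u}(v_1) + b\hat{u}(v_2).$$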

That is, û is a linear functional on V. The converse is also true for spaces of finite dimension and it is contained in the following important theorem (proved in Problem 13.3).

THEOREM 13.3: Let ϕ be a linear functional on a finite-dimensional inner product space V. Then there exists a unique vector u ∈ V such that ϕ(v) = $\langle v, u\rangle$ for every v ∈ V.

We remark that the above theorem is not valid for spaces of infinite dimension (Problem 13.24).

13.3 Analogy Between A(V) and C, Special Linear Operators

Let A(V) denote the algebra of all linear operators on a finite-dimensional inner product space V. The adjoint mapping T → T* on A(V) is quite analogous to the conjugation mapping $z \mapsto \bar{z}$ on the complex field C. To illustrate this analogy we identify in Table 13-1 certain classes of operators T ∈ A(V) whose behavior under the adjoint map imitates the behavior under conjugation of familiar classes of complex numbers.

The analogy between these operators T and complex numbers z is reflected in the next theorem.

THEOREM 13.4: Let λ be an eigenvalue of a linear operator T on V.

(i) If $T^* = T^{-1}$ (i.e., T is orthogonal or unitary), then |λ| = 1.

(ii) If T* = T (i.e., T is self-adjoint), then λ is real.

(iii) If $T^* = -T$ (i.e., T is skew-adjoint), then λ is pure imaginary.

(iv) If T = S*S with S nonsingular (i.e., T is positive definite), then λ is real and positive.

Proof. In each case let v be a nonzero eigenvector of T belonging to λ; that is, T(v) = λv with v ≠ 0. Hence, $\langle v, v\rangle$ is positive.

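Proof of (i). We show that $\lambda\bar{\lambda}\langle v, v\rangle = \langle v, v\rangle$:

$$\lambda\bar{\lambda}\langle v, v\rangle = \langle \lambda v, \lambda v\rangle = \langle T(v), T(v)\rangle = \langle v, T^*T(v)\rangle = \langle v, I(v)\rangle = \langle v, v\rangle.$$

But $\langle v, v\rangle \neq 0$; hence, $\lambda\bar{\lambda} = 1$ and so |λ| = 1.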

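Proof of (ii). We show that $\lambda\langle v, v\rangle = \bar{\lambda}\langle v, v\rangle$:

$$\lambda\langle v, v\rangle = \langle \lambda v, v\rangle = \langle T(v), v\rangle = \langle v, T^*(v)\rangle = \langle v, T(v)\rangle = \langle v, \lambda v\rangle = \bar{\lambda}\langle v, v\rangle.$$

But $\langle v, v\rangle \neq 0$; hence, $\lambda = \bar{\lambda}$, and so λ is real.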

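Proof of (iii). We show that $\lambda\langle v, v\rangle = -\bar{\lambda}\langle v, v\rangle$:

$$\lambda\langle v, v\rangle = \langle \lambda v, v\rangle = \langle T(v), v\rangle = \langle v, T^*(v)\rangle = \langle v, -T(v)\rangle = \langle v, -\lambda v\rangle = -\bar{\lambda}\langle v, v\rangle.$$

But $\langle v, v\rangle \neq 0$; hence, $\lambda = -\bar{\lambda}$, and so λ is pure imaginary.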

Proof of (iv). Note first that S(v) ≠ 0 because S is nonsingular; hence, $\langle S(v), S(v)\rangle$ is positive. We show that $\lambda\langle v, v\rangle = \langle S(v), S(v)\rangle$:

$$\lambda\langle v, v\rangle = \langle \lambda v, v\rangle = \langle T(v), v\rangle = \langle S^*S(v), v\rangle = \langle S(v), S(v)\rangle.$$

But $\langle v, v\rangle$ and $\langle S(v), S(v)\rangle$ are positive; hence, λ is positive.
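Theorem 13.4 can be illustrated numerically with matrices built from one random complex matrix X (NumPy assumed; X and the tolerance are illustrative choices): $X + X^*$ is self-adjoint, $X - X^*$ is skew-adjoint, the Q factor of a QR factorization is unitary, and $X^*X$ is positive definite because a random X is nonsingular with probability 1. The eigenvalues should then lie on the unit circle, the real axis, the imaginary axis, and the positive real axis, respectively.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 4
X = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))

H = X + X.conj().T        # self-adjoint: H* = H
K = X - X.conj().T        # skew-adjoint: K* = -K
U, _ = np.linalg.qr(X)    # unitary: U* = U^{-1}
P = X.conj().T @ X        # S*S with S = X (nonsingular with probability 1)

tol = 1e-10
ev = np.linalg.eigvals
unit_circle = np.allclose(np.abs(ev(U)), 1.0)
real_axis = bool(np.all(np.abs(ev(H).imag) < tol))
imag_axis = bool(np.all(np.abs(ev(K).real) < tol))
positive = bool(np.all(np.abs(ev(P).imag) < tol) and np.all(ev(P).real > 0))
print(unit_circle, real_axis, imag_axis, positive)
```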

Remark: Each of the above operators T commutes with its adjoint; that is, TT* = T*T. Such operators are called normal operators.

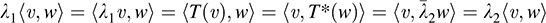

13.4 Self-Adjoint Operators

Let T be a self-adjoint operator on an inner product space V; that is, suppose

$$T^* = T.$$

(If T is defined by a matrix A, then A is symmetric or Hermitian according as A is real or complex.) By Theorem 13.4, the eigenvalues of T are real. The following is another important property of T.

THEOREM 13.5: Let T be a self-adjoint operator on V. Suppose u and v are eigenvectors of T belonging to distinct eigenvalues. Then u and v are orthogonal; that is, $\langle u, v\rangle = 0$.

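Proof. Suppose T(u) = λ1u and T(v) = λ2v, where λ1 ≠ λ2. We show that $\lambda_1\langle u, v\rangle = \lambda_2\langle u, v\rangle$:

$$\lambda_1\langle u, v\rangle = \langle \lambda_1 u, v\rangle = \langle T(u), v\rangle = \langle u, T^*(v)\rangle = \langle u, T(v)\rangle = \langle u, \lambda_2 v\rangle = \bar{\lambda}_2\langle u, v\rangle = \lambda_2\langle u, v\rangle.$$

(The fourth equality uses the fact that T* = T, and the last equality uses the fact that the eigenvalue λ2 is real.) Because λ1 ≠ λ2, we get $\langle u, v\rangle = 0$. Thus, the theorem is proved.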
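As a sketch of Theorem 13.5 (NumPy assumed; the random Hermitian matrix is illustrative), the general eigen-solver `np.linalg.eig` is used rather than `eigh`, so that orthogonality is not built into the algorithm. A random Hermitian matrix has distinct eigenvalues with probability 1, and its unit eigenvectors should then be mutually orthogonal up to rounding.

```python
import numpy as np

rng = np.random.default_rng(4)
X = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
H = X + X.conj().T                     # a Hermitian (self-adjoint) matrix

lam, V = np.linalg.eig(H)              # unit eigenvectors as the columns of V

# Entry (i, j) of V* V is <v_j, v_i> with <x, y> = x^T conj(y).
gram = V.conj().T @ V
off_diag = gram - np.diag(np.diag(gram))
orthogonal = np.allclose(off_diag, 0, atol=1e-8)

print(np.round(lam.real, 6), orthogonal)
```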

13.5 Orthogonal and Unitary Operators

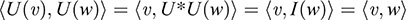

Let U be a linear operator on a finite-dimensional inner product space V. Suppose

$$U^* = U^{-1} \qquad \text{or equivalently} \qquad UU^* = U^*U = I.$$

Recall that U is said to be orthogonal or unitary according as the underlying field is real or complex. The next theorem (proved in Problem 13.10) gives alternative characterizations of these operators.

THEOREM 13.6: The following conditions on an operator U are equivalent:

(i) $U^* = U^{-1}$; that is, UU* = U*U = I. [U is unitary (orthogonal).]

(ii) U preserves inner products; that is, for every v, w ∈ V, $\langle U(v), U(w)\rangle = \langle v, w\rangle$.

(iii) U preserves lengths; that is, for every v ∈ V, ‖U(v)‖ = ‖v‖.

EXAMPLE 13.2

(a) Let T: R3 → R3 be the linear operator that rotates each vector v about the z-axis by a fixed angle θ, as shown in Fig. 10-1 (Section 10.3). That is, T is defined by

$$T(x, y, z) = (x\cos\theta - y\sin\theta,\; x\sin\theta + y\cos\theta,\; z).$$

We note that lengths (distances from the origin) are preserved under T. Thus, T is an orthogonal operator.
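A short numerical check of the rotation example, assuming NumPy. The matrix below is the standard-basis matrix of $T(x, y, z) = (x\cos\theta - y\sin\theta, x\sin\theta + y\cos\theta, z)$; the angle 0.7 and the test vectors are arbitrary illustrative values. It confirms the three equivalent conditions of Theorem 13.6 for this T.

```python
import numpy as np

def rotation_z(theta):
    """Matrix (standard basis of R^3) of the rotation about the z-axis by theta."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

T = rotation_z(0.7)
v = np.array([1.0, -2.0, 3.0])
w = np.array([0.5, 4.0, -1.0])

preserves_length = np.isclose(np.linalg.norm(T @ v), np.linalg.norm(v))
preserves_inner = np.isclose((T @ v) @ (T @ w), v @ w)
is_orthogonal = np.allclose(T.T @ T, np.eye(3))   # T^T = T^{-1}
print(preserves_length, preserves_inner, is_orthogonal)
```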

(b) Let V be l2-space (Hilbert space), defined in Section 7.3. Let T: V → V be the linear operator defined by T(a1, a2, a3, …) = (0, a1, a2, a3, …).

Clearly, T preserves inner products and lengths. However, T is not surjective, because, for example, (1, 0, 0, …) does not belong to the image of T; hence, T is not invertible. Thus, we see that Theorem 13.6 is not valid for spaces of infinite dimension.

An isomorphism from one inner product space into another is a bijective mapping that preserves the three basic operations of an inner product space: vector addition, scalar multiplication, and inner products. Thus, the above mappings (orthogonal and unitary) may also be characterized as the isomorphisms of V into itself. Note that such a mapping U also preserves distances, because

$$d(U(v), U(w)) = \|U(v) - U(w)\| = \|U(v - w)\| = \|v - w\| = d(v, w).$$

Hence, U is called an isometry.

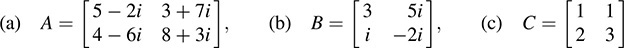

13.6 Orthogonal and Unitary Matrices

Let U be a linear operator on an inner product space V. By Theorem 13.1, we obtain the following results.

THEOREM 13.7A: A complex matrix A represents a unitary operator U (relative to an orthonormal basis) if and only if $A^* = A^{-1}$.

THEOREM 13.7B: A real matrix A represents an orthogonal operator U (relative to an orthonormal basis) if and only if $A^T = A^{-1}$.

The above theorems motivate the following definitions (which appeared in Sections 2.10 and 2.11).

DEFINITION: A complex matrix A for which $A^* = A^{-1}$ is called a unitary matrix.

DEFINITION: A real matrix A for which $A^T = A^{-1}$ is called an orthogonal matrix.

We repeat Theorem 2.6, which characterizes the above matrices.

THEOREM 13.8: The following conditions on a matrix A are equivalent:

(i) A is unitary (orthogonal).

(ii) The rows of A form an orthonormal set.

(iii) The columns of A form an orthonormal set.
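Theorem 13.8 can be checked on a unitary matrix produced by a QR factorization (NumPy assumed; the random input is illustrative). With $\langle x, y\rangle = x^T\bar{y}$, entry (i, j) of $AA^*$ is $\langle R_i, R_j\rangle$ for the rows $R_i$, and entry (i, j) of $A^*A$ is $\langle C_j, C_i\rangle$ for the columns $C_i$, so both products equal I exactly when the rows, respectively columns, are orthonormal.

```python
import numpy as np

rng = np.random.default_rng(5)
Z = rng.standard_normal((3, 3)) + 1j * rng.standard_normal((3, 3))
A, _ = np.linalg.qr(Z)          # a unitary matrix

I = np.eye(3)
is_unitary = np.allclose(A.conj().T, np.linalg.inv(A))
rows_orthonormal = np.allclose(A @ A.conj().T, I)    # (A A*)_ij = <R_i, R_j>
cols_orthonormal = np.allclose(A.conj().T @ A, I)    # (A* A)_ij = <C_j, C_i>
print(is_unitary, rows_orthonormal, cols_orthonormal)
```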

13.7 Change of Orthonormal Basis

Orthonormal bases play a special role in the theory of inner product spaces V. Thus, we are naturally interested in the properties of the change-of-basis matrix from one such basis to another. The following theorem (proved in Problem 13.12) holds.

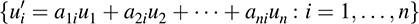

THEOREM 13.9: Let {u1, …, un} be an orthonormal basis of an inner product space V. Then the change-of-basis matrix from {ui} into another orthonormal basis is unitary (orthogonal). Conversely, if P = [aij] is a unitary (orthogonal) matrix, then the following is an orthonormal basis:

$$\{u_i' = a_{1i}u_1 + a_{2i}u_2 + \cdots + a_{ni}u_n : i = 1, \ldots, n\}.$$

Recall that matrices A and B representing the same linear operator T are similar; that is, $B = P^{-1}AP$, where P is the (nonsingular) change-of-basis matrix. On the other hand, if V is an inner product space, we are usually interested in the case when P is unitary (or orthogonal), as suggested by Theorem 13.9. (Recall that P is unitary if the conjugate transpose $P^* = P^{-1}$, and P is orthogonal if the transpose $P^T = P^{-1}$.) This leads to the following definition.

DEFINITION: Complex matrices A and B are unitarily equivalent if there exists a unitary matrix P for which $B = P^*AP$. Analogously, real matrices A and B are orthogonally equivalent if there exists an orthogonal matrix P for which $B = P^TAP$.

Note that orthogonally equivalent matrices are necessarily congruent.

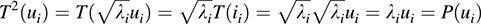

13.8 Positive Definite and Positive Operators

Let P be a linear operator on an inner product space V. Then

(i) P is said to be positive definite if P = S*S for some nonsingular operator S.

(ii) P is said to be positive (or nonnegative or semidefinite) if P = S*S for some operator S.

The following theorems give alternative characterizations of these operators.

THEOREM 13.10A: The following conditions on an operator P are equivalent:

(i) $P = T^2$ for some nonsingular self-adjoint operator T.

(ii) P is positive definite.

(iii) P is self-adjoint and $\langle P(u), u\rangle > 0$ for every u ≠ 0 in V.
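A sketch of Theorem 13.10A for a matrix $P = S^*S$ with S a random (hence, with probability 1, nonsingular) complex matrix, assuming NumPy. Sampling vectors only spot-checks condition (iii); it does not prove it. The Cholesky factorization, which succeeds for positive definite Hermitian matrices, is a standard numerical test that is not mentioned in the text.

```python
import numpy as np

def inner(x, y):
    """<x, y> = x^T conj(y) on C^n."""
    return np.dot(x, np.conj(y))

rng = np.random.default_rng(6)
n = 4
S = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
P = S.conj().T @ S                                  # P = S*S

self_adjoint = np.allclose(P, P.conj().T)

# Spot-check <P(u), u> > 0 on random nonzero vectors.
samples = [rng.standard_normal(n) + 1j * rng.standard_normal(n) for _ in range(100)]
quad = np.array([inner(P @ u, u) for u in samples])
quad_positive = bool(np.all(np.abs(quad.imag) < 1e-9) and np.all(quad.real > 0))

eig_positive = bool(np.all(np.linalg.eigvalsh(P) > 0))

# numpy.linalg.cholesky returns lower-triangular L with P = L L*.
L = np.linalg.cholesky(P)
chol_ok = np.allclose(L @ L.conj().T, P)
print(self_adjoint, quad_positive, eig_positive, chol_ok)
```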

The corresponding theorem for positive operators (proved in Problem 13.21) follows.

THEOREM 13.10B: The following conditions on an operator P are equivalent:

(i) $P = T^2$ for some self-adjoint operator T.

(ii) P is positive; that is, P = S*S for some operator S.

(iii) P is self-adjoint and $\langle P(u), u\rangle \geq 0$ for every u ∈ V.

13.9 Diagonalization and Canonical Forms in Inner Product Spaces

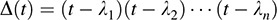

Let T be a linear operator on a finite-dimensional inner product space V over K. Representing T by a diagonal matrix depends upon the eigenvectors and eigenvalues of T, and hence, upon the roots of the characteristic polynomial Δ(t) of T. Now Δ(t) always factors into linear polynomials over the complex field C but may not have any linear factors over the real field R. Thus, the situation for real inner product spaces (sometimes called Euclidean spaces) is inherently different from the situation for complex inner product spaces (sometimes called unitary spaces), and we treat them separately.

Real Inner Product Spaces, Symmetric and Orthogonal Operators

The following theorem (proved in Problem 13.14) holds.

THEOREM 13.11: Let T be a symmetric (self-adjoint) operator on a real finite-dimensional inner product space V. Then there exists an orthonormal basis of V consisting of eigenvectors of T; that is, T can be represented by a diagonal matrix relative to an orthonormal basis.

We give the corresponding statement for matrices.

THEOREM 13.11: (Alternative Form) Let A be a real symmetric matrix. Then there exists an orthogonal matrix P such that $B = P^{-1}AP = P^TAP$ is diagonal.

We can choose the columns of the above matrix P to be normalized orthogonal eigenvectors of A; then the diagonal entries of B are the corresponding eigenvalues.
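In numerical practice, `numpy.linalg.eigh` returns exactly such a matrix P: its columns are orthonormal eigenvectors of a real symmetric matrix, with eigenvalues in ascending order. The sketch below assumes NumPy and uses an illustrative tridiagonal matrix whose eigenvalues are known to be $2 - \sqrt{2}$, 2 and $2 + \sqrt{2}$.

```python
import numpy as np

A = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 2.0]])          # an illustrative real symmetric matrix

lam, P = np.linalg.eigh(A)              # orthonormal eigenvectors as columns of P

B = P.T @ A @ P                          # P^T A P = P^{-1} A P
print(np.round(lam, 6))
print(np.allclose(P.T @ P, np.eye(3)))  # P is orthogonal
print(np.allclose(B, np.diag(lam)))     # B is diagonal, with the eigenvalues
```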

On the other hand, an orthogonal operator T need not be symmetric, and so it may not be represented by a diagonal matrix relative to an orthonormal basis. However, such an operator T does have a simple canonical representation, as described in the following theorem (proved in Problem 13.16).

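THEOREM 13.12: Let T be an orthogonal operator on a real inner product space V. Then there exists an orthonormal basis of V in which T is represented by a block diagonal matrix M of the form

$$M = \operatorname{diag}\left(I_s,\; -I_t,\; \begin{bmatrix}\cos\theta_1 & -\sin\theta_1\\ \sin\theta_1 & \cos\theta_1\end{bmatrix},\; \ldots,\; \begin{bmatrix}\cos\theta_r & -\sin\theta_r\\ \sin\theta_r & \cos\theta_r\end{bmatrix}\right)$$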

The reader may recognize that each of the 2 × 2 diagonal blocks represents a rotation in the corresponding two-dimensional subspace, and each diagonal entry −1 represents a reflection in the corresponding one-dimensional subspace.
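The canonical form $M = \operatorname{diag}(I_s, -I_t, R(\theta_1), \ldots, R(\theta_r))$, with $R(\theta)$ the 2 × 2 rotation block, can be assembled and checked directly. This is a sketch assuming NumPy; the helper names `rot2` and `canonical_orthogonal` and the choice s = t = 1 with angles 0.4 and 2.0 are illustrative. M should be orthogonal, its eigenvalues should all have modulus 1, and its determinant here is $(-1)^t = -1$.

```python
import numpy as np

def rot2(theta):
    """2 x 2 rotation block R(theta)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def canonical_orthogonal(s, t, thetas):
    """Block diagonal diag(I_s, -I_t, R(theta_1), ..., R(theta_r))."""
    blocks = [np.eye(s), -np.eye(t)] + [rot2(th) for th in thetas]
    n = sum(b.shape[0] for b in blocks)
    M = np.zeros((n, n))
    i = 0
    for b in blocks:
        k = b.shape[0]
        M[i:i + k, i:i + k] = b
        i += k
    return M

M = canonical_orthogonal(1, 1, [0.4, 2.0])
print(np.allclose(M.T @ M, np.eye(M.shape[0])))
print(np.round(np.linalg.eigvals(M), 6))
```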

Complex Inner Product Spaces, Normal and Triangular Operators

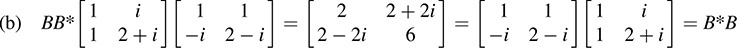

A linear operator T is said to be normal if it commutes with its adjoint—that is, if TT* = T*T. We note that normal operators include both self-adjoint and unitary operators.

Analogously, a complex matrix A is said to be normal if it commutes with its conjugate transpose—that is, if AA* = A*A.

Also  . Thus, A is normal.


The following theorem (proved in Problem 13.19) holds.

THEOREM 13.13: Let T be a normal operator on a complex finite-dimensional inner product space V. Then there exists an orthonormal basis of V consisting of eigenvectors of T; that is, T can be represented by a diagonal matrix relative to an orthonormal basis.

We give the corresponding statement for matrices.

THEOREM 13.13: (Alternative Form) Let A be a normal matrix. Then there exists a unitary matrix P such that $B = P^{-1}AP = P^*AP$ is diagonal.
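A sketch of Theorem 13.13 for a normal matrix with distinct eigenvalues, assuming NumPy. The test matrix $A = QDQ^*$ (random unitary Q, illustrative diagonal D) is normal but neither Hermitian nor unitary. Because the eigenvalues are distinct, the unit eigenvectors returned by `np.linalg.eig` are orthogonal, so they form a unitary P. With a repeated eigenvalue, the eigenvectors returned for that eigenspace need not be orthogonal and would have to be orthonormalized first.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))
D = np.diag([1.0, 2j, -3.0, 4 + 1j])     # distinct eigenvalues (illustrative)
A = Q @ D @ Q.conj().T                    # a normal matrix

normal = np.allclose(A @ A.conj().T, A.conj().T @ A)

lam, P = np.linalg.eig(A)                 # unit eigenvectors as the columns of P
unitary = np.allclose(P.conj().T @ P, np.eye(n))
diagonal = np.allclose(P.conj().T @ A @ P, np.diag(lam))
print(normal, unitary, diagonal)
```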

The following theorem (proved in Problem 13.20) shows that even nonnormal operators on unitary spaces have a relatively simple form.

THEOREM 13.14: Let T be an arbitrary operator on a complex finite-dimensional inner product space V. Then T can be represented by a triangular matrix relative to an orthonormal basis of V.

THEOREM 13.14: (Alternative Form) Let A be an arbitrary complex matrix. Then there exists a unitary matrix P such that $B = P^{-1}AP = P^*AP$ is triangular.
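The inductive argument behind Theorem 13.14 (Problem 13.20) translates into a short recursive procedure: take one unit eigenvector, extend it to an orthonormal basis, and recurse on the compression of the operator to the orthogonal complement. The sketch below assumes NumPy; the function name `unitary_triangularize` is hypothetical, and library routines such as a Schur decomposition are the usual practical choice instead. The QR factorization of the matrix [v | I] supplies a unitary Q whose first column is a unit multiple of v.

```python
import numpy as np

def unitary_triangularize(A):
    """Return unitary P with P* A P upper triangular, following the induction
    in the proof: eigenvector first, then recurse on the complement."""
    A = np.asarray(A, dtype=complex)
    n = A.shape[0]
    if n == 1:
        return np.eye(1, dtype=complex)
    _, V = np.linalg.eig(A)
    v = V[:, 0]                                        # an eigenvector of A
    # Column 0 of [v | I] equals R[0, 0] * Q[:, 0], so Q[:, 0] is a unit multiple of v.
    Q, _ = np.linalg.qr(np.column_stack([v, np.eye(n, dtype=complex)]))
    B = Q.conj().T @ A @ Q                             # first column is (lambda, 0, ..., 0)
    P2 = unitary_triangularize(B[1:, 1:])              # compression to the complement
    U = np.eye(n, dtype=complex)
    U[1:, 1:] = P2
    return Q @ U

rng = np.random.default_rng(8)
A = rng.standard_normal((5, 5)) + 1j * rng.standard_normal((5, 5))
P = unitary_triangularize(A)
T = P.conj().T @ A @ P
print(np.allclose(P.conj().T @ P, np.eye(5)))
print(np.max(np.abs(np.tril(T, -1))))                  # size of the entries below the diagonal
```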

13.10 Spectral Theorem

The Spectral Theorem is a reformulation of the diagonalization Theorems 13.11 and 13.13.

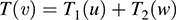

THEOREM 13.15: (Spectral Theorem) Let T be a normal (symmetric) operator on a complex (real) finite-dimensional inner product space V. Then there exist linear operators E1, …, Er on V and scalars λ1, …, λr such that

(i) $T = \lambda_1E_1 + \lambda_2E_2 + \cdots + \lambda_rE_r$.

(ii) $E_1 + E_2 + \cdots + E_r = I$.

(iii) $E_1^2 = E_1,\ E_2^2 = E_2,\ \ldots,\ E_r^2 = E_r$.

(iv) $E_iE_j = 0$ for i ≠ j.

The above linear operators E1, …, Er are projections in the sense that $E_i^2 = E_i$. Moreover, they are said to be orthogonal projections because they have the additional property that EiEj = 0 for i ≠ j.
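The projections $E_i$ of the Spectral Theorem can be computed from an orthonormal eigenbasis: $E_i$ is the sum of $uu^*$ over the basis vectors u belonging to the eigenvalue $\lambda_i$. The sketch below assumes NumPy and restricts itself to the Hermitian (self-adjoint) case so that `np.linalg.eigh` applies; the function name `spectral_projections`, the rounding used to group equal eigenvalues, and the test matrix $Q\,\operatorname{diag}(2, 3, 3, 5)\,Q^*$ are illustrative choices. It checks $T = \sum \lambda_iE_i$, $\sum E_i = I$, $E_i^2 = E_i$ and $E_iE_j = 0$ for i ≠ j.

```python
import numpy as np

def spectral_projections(A, decimals=8):
    """Distinct eigenvalues of a Hermitian A and the orthogonal projections
    onto the corresponding eigenspaces (eigenvalues grouped after rounding)."""
    lam, U = np.linalg.eigh(A)
    keys = np.round(lam, decimals)
    values, projections = [], []
    for key in np.unique(keys):
        cols = U[:, keys == key]                   # orthonormal basis of one eigenspace
        values.append(lam[keys == key].mean())
        projections.append(cols @ cols.conj().T)
    return np.array(values), projections

rng = np.random.default_rng(9)
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4)))
A = Q @ np.diag([2.0, 3.0, 3.0, 5.0]) @ Q.conj().T

lam, E = spectral_projections(A)
r = len(E)
decomposition_ok = np.allclose(A, sum(l * Ei for l, Ei in zip(lam, E)))
sum_ok = np.allclose(sum(E), np.eye(4))
idempotent_ok = all(np.allclose(Ei @ Ei, Ei) for Ei in E)
orthogonal_ok = all(np.allclose(E[i] @ E[j], 0) for i in range(r) for j in range(r) if i != j)
print(np.round(lam, 6), decomposition_ok, sum_ok, idempotent_ok, orthogonal_ok)
```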

The following example shows the relationship between a diagonal matrix representation and the corresponding orthogonal projections.

EXAMPLE 13.4 Consider the following diagonal matrices A, E1, E2, E3:

The reader can verify that

SOLVED PROBLEMS

Adjoints

13.1. Find the adjoint of F: R3 → R3 defined by

First find the matrix A that represents F in the usual basis of R3, that is, the matrix A whose rows are the coefficients of x, y, z, and then form the transpose $A^T$ of A. This yields

The adjoint F* is represented by the transpose of A; hence,

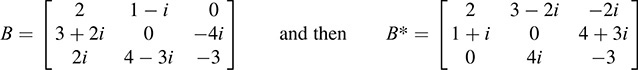

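13.2. Find the adjoint of G: C3 → C3 defined by

$$G(x, y, z) = [2x + (1 - i)y,\; (3 + 2i)x - 4iz,\; 2ix + (4 - 3i)y - 3z].$$

First find the matrix B that represents G in the usual basis of C3, and then form the conjugate transpose B* of B. This yields

$$B = \begin{bmatrix} 2 & 1 - i & 0\\ 3 + 2i & 0 & -4i\\ 2i & 4 - 3i & -3\end{bmatrix} \qquad \text{and} \qquad B^* = \begin{bmatrix} 2 & 3 - 2i & -2i\\ 1 + i & 0 & 4 + 3i\\ 0 & 4i & -3\end{bmatrix}.$$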

Then G*(x, y, z) = [2x + (3 − 2i)y − 2iz, (1 + i)x + (4 + 3i)z, 4iy − 3z].
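A numerical cross-check of Problem 13.2, assuming NumPy. The matrix B below is the coefficient matrix of G consistent with the stated answer for G* (it is the conjugate transpose of that answer's coefficient matrix). The sketch confirms that $B^*$ reproduces the coefficients of G* and that $\langle G(u), v\rangle = \langle u, G^*(v)\rangle$ for random test vectors.

```python
import numpy as np

def inner(x, y):
    """<x, y> = x^T conj(y) on C^n."""
    return np.dot(x, np.conj(y))

# Rows: coefficients of x, y, z in each component of G(x, y, z).
B = np.array([[2, 1 - 1j, 0],
              [3 + 2j, 0, -4j],
              [2j, 4 - 3j, -3]], dtype=complex)
B_star = B.conj().T

# Rows of G*(x, y, z) = [2x + (3 - 2i)y - 2iz, (1 + i)x + (4 + 3i)z, 4iy - 3z].
G_star_rows = np.array([[2, 3 - 2j, -2j],
                        [1 + 1j, 0, 4 + 3j],
                        [0, 4j, -3]], dtype=complex)
adjoint_matches = np.allclose(B_star, G_star_rows)

rng = np.random.default_rng(16)
u = rng.standard_normal(3) + 1j * rng.standard_normal(3)
v = rng.standard_normal(3) + 1j * rng.standard_normal(3)
identity_ok = np.isclose(inner(B @ u, v), inner(u, B_star @ v))
print(adjoint_matches, identity_ok)
```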

13.3. Prove Theorem 13.3: Let ϕ be a linear functional on an n-dimensional inner product space V. Then there exists a unique vector u ∈ V such that ϕ(v) = $\langle v, u\rangle$ for every v ∈ V.

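Let {w1, …, wn} be an orthonormal basis of V. Set

$$u = \overline{\varphi(w_1)}\,w_1 + \overline{\varphi(w_2)}\,w_2 + \cdots + \overline{\varphi(w_n)}\,w_n.$$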

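Let û be the linear functional on V defined by $\hat{u}(v) = \langle v, u\rangle$ for every v ∈ V. Then, for i = 1, …, n,

$$\hat{u}(w_i) = \langle w_i, u\rangle = \langle w_i,\ \overline{\varphi(w_1)}\,w_1 + \cdots + \overline{\varphi(w_n)}\,w_n\rangle = \varphi(w_i).$$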

Because û and ϕ agree on each basis vector, û = ϕ.

Now suppose u′ is another vector in V for which ϕ(v) = $\langle v, u'\rangle$ for every v ∈ V. Then $\langle v, u\rangle = \langle v, u'\rangle$, or $\langle v, u - u'\rangle = 0$. In particular, this is true for v = u − u′, and so $\langle u - u', u - u'\rangle = 0$. This yields u − u′ = 0 and u = u′. Thus, such a vector u is unique, as claimed.
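The construction in this proof can be run directly on $C^4$ (NumPy assumed). The functional $\varphi(v) = c_1v_1 + \cdots + c_nv_n$, the vector c, and the orthonormal basis (columns of a random unitary matrix) are illustrative choices. The vector $u = \sum_i \overline{\varphi(w_i)}\,w_i$ should satisfy $\varphi(v) = \langle v, u\rangle$, and in the standard inner product it must equal $\bar{c}$.

```python
import numpy as np

def inner(x, y):
    """<x, y> = x^T conj(y) on C^n."""
    return np.dot(x, np.conj(y))

rng = np.random.default_rng(10)
n = 4
c = rng.standard_normal(n) + 1j * rng.standard_normal(n)

def phi(v):
    """Illustrative linear functional phi(v) = c_1 v_1 + ... + c_n v_n."""
    return np.dot(c, v)

# Orthonormal basis w_1, ..., w_n: the columns of a unitary matrix.
W, _ = np.linalg.qr(rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))

# u = conj(phi(w_1)) w_1 + ... + conj(phi(w_n)) w_n
u = sum(np.conj(phi(W[:, i])) * W[:, i] for i in range(n))

v = rng.standard_normal(n) + 1j * rng.standard_normal(n)
represents = np.isclose(phi(v), inner(v, u))
matches_conj_c = np.allclose(u, np.conj(c))
print(represents, matches_conj_c)
```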

13.4. Prove Theorem 13.1: Let T be a linear operator on an n-dimensional inner product space V. Then

(a) There exists a unique linear operator T* on V such that

$$\langle T(u), v\rangle = \langle u, T^*(v)\rangle \quad \text{for every } u, v \in V.$$

(b) Let A be the matrix that represents T relative to an orthonormal basis S = {ui}. Then the conjugate transpose A* of A represents T* in the basis S.

(a) We first define the mapping T*. Let v be an arbitrary but fixed element of V. The map $u \mapsto \langle T(u), v\rangle$ is a linear functional on V. Hence, by Theorem 13.3, there exists a unique element v′ ∈ V such that $\langle T(u), v\rangle = \langle u, v'\rangle$ for every u ∈ V. We define T*: V → V by T*(v) = v′. Then $\langle T(u), v\rangle = \langle u, T^*(v)\rangle$ for every u, v ∈ V.

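We next show that T* is linear. For any u, vi ∈ V and a, b ∈ K,

$$\langle u, T^*(av_1 + bv_2)\rangle = \langle T(u), av_1 + bv_2\rangle = \bar{a}\langle T(u), v_1\rangle + \bar{b}\langle T(u), v_2\rangle = \bar{a}\langle u, T^*(v_1)\rangle + \bar{b}\langle u, T^*(v_2)\rangle = \langle u, aT^*(v_1) + bT^*(v_2)\rangle.$$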

But this is true for every u ∈ V; hence, T*(av1 + bv2) = aT*(v1)+ bT*(v2). Thus, T* is linear.

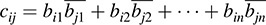

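(b) The matrices A = [aij] and B = [bij] that represent T and T*, respectively, relative to the orthonormal basis S are given by $a_{ij} = \langle T(u_j), u_i\rangle$ and $b_{ij} = \langle T^*(u_j), u_i\rangle$ (Problem 13.67). Hence,

$$b_{ij} = \langle T^*(u_j), u_i\rangle = \overline{\langle u_i, T^*(u_j)\rangle} = \overline{\langle T(u_i), u_j\rangle} = \overline{a_{ji}}.$$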

Thus, B = A*, as claimed.

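13.5. Prove Theorem 13.2:

(i) For any u, v ∈ V,

$$\langle (T_1 + T_2)(u), v\rangle = \langle T_1(u), v\rangle + \langle T_2(u), v\rangle = \langle u, T_1^*(v)\rangle + \langle u, T_2^*(v)\rangle = \langle u, (T_1^* + T_2^*)(v)\rangle.$$

The uniqueness of the adjoint implies $(T_1 + T_2)^* = T_1^* + T_2^*$.

(ii) For any u, v ∈ V,

$$\langle (kT)(u), v\rangle = k\langle T(u), v\rangle = k\langle u, T^*(v)\rangle = \langle u, \bar{k}T^*(v)\rangle = \langle u, (\bar{k}T^*)(v)\rangle.$$

The uniqueness of the adjoint implies $(kT)^* = \bar{k}T^*$.

(iii) For any u, v ∈ V,

$$\langle (T_1T_2)(u), v\rangle = \langle T_1(T_2(u)), v\rangle = \langle T_2(u), T_1^*(v)\rangle = \langle u, T_2^*(T_1^*(v))\rangle = \langle u, (T_2^*T_1^*)(v)\rangle.$$

The uniqueness of the adjoint implies $(T_1T_2)^* = T_2^*T_1^*$.

(iv) For any u, v ∈ V,

$$\langle T^*(u), v\rangle = \overline{\langle v, T^*(u)\rangle} = \overline{\langle T(v), u\rangle} = \langle u, T(v)\rangle.$$

The uniqueness of the adjoint implies (T*)* = T.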

13.6. Show that (a) I* = I, and (b) 0* = 0.

(a) For every u, v ∈ V, $\langle I(u), v\rangle = \langle u, v\rangle = \langle u, I(v)\rangle$; hence, I* = I.

(b) For every u, v ∈ V, $\langle 0(u), v\rangle = \langle 0, v\rangle = 0 = \langle u, 0\rangle = \langle u, 0(v)\rangle$; hence, 0* = 0.

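13.7. Suppose T is invertible. Show that $(T^{-1})^* = (T^*)^{-1}$.

By Problem 13.6(a) and Theorem 13.2(iii), $I = I^* = (TT^{-1})^* = (T^{-1})^*T^*$; hence, $(T^{-1})^* = (T^*)^{-1}$.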

13.8. Let T be a linear operator on V, and let W be a T-invariant subspace of V. Show that W⊥ is invariant under T*.

Let u ∈ W⊥. If w ∈ W, then T(w) ∈ W and so $\langle w, T^*(u)\rangle = \langle T(w), u\rangle = 0$. Thus, T*(u) ∈ W⊥ because it is orthogonal to every w ∈ W. Hence, W⊥ is invariant under T*.
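A concrete instance, assuming NumPy: if W = span(e1, …, ek) is T-invariant, the matrix of T has a zero lower-left block, so T* has a zero upper-right block and maps W⊥ = span(e_{k+1}, …, e_n) into itself. The sizes n = 5, k = 2 and the random entries are illustrative.

```python
import numpy as np

rng = np.random.default_rng(11)
n, k = 5, 2

# T = [[A, B], [0, C]] leaves W = span(e_1, ..., e_k) invariant.
T = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
T[k:, :k] = 0
T_star = T.conj().T

# A vector of W-perp has zeros in its first k coordinates.
u = np.zeros(n, dtype=complex)
u[k:] = rng.standard_normal(n - k) + 1j * rng.standard_normal(n - k)

image = T_star @ u
stays_in_w_perp = np.allclose(image[:k], 0)   # T*(u) is again in W-perp
print(stays_in_w_perp)
```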

13.9. Let T be a linear operator on V. Show that each of the following conditions implies T = 0:

(i) $\langle T(u), v\rangle = 0$ for every u, v ∈ V.

(ii) V is a complex space, and $\langle T(u), u\rangle = 0$ for every u ∈ V.

(iii) T is self-adjoint and $\langle T(u), u\rangle = 0$ for every u ∈ V.

Give an example of an operator T on a real space V for which $\langle T(u), u\rangle = 0$ for every u ∈ V but T ≠ 0. [Thus, (ii) need not hold for a real space V.]

(i) Set v = T(u). Then $\langle T(u), T(u)\rangle = 0$, and hence, T(u) = 0, for every u ∈ V. Accordingly, T = 0.

(ii) By hypothesis,  T(v + w); v + w

T(v + w); v + w = 0 for any v, w ∈ V. Expanding and setting

= 0 for any v, w ∈ V. Expanding and setting  T(v); v

T(v); v = 0 and

= 0 and  T(w); w

T(w); w = 0, we find

= 0, we find

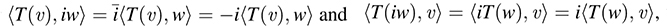

Note w is arbitrary in (1). Substituting iw for w, and using  we find

we find

Dividing through by i and adding to (1), we obtain  T(w), v

T(w), v = 0 for any v, w, ∈ V. By (i), T = 0.

= 0 for any v, w, ∈ V. By (i), T = 0.

(iii) By (ii), the result holds for the complex case; hence we need only consider the real case. Expanding $\langle T(v + w), v + w\rangle = 0$, we again obtain (1). Because T is self-adjoint and V is a real space, we have $\langle T(w), v\rangle = \langle w, T(v)\rangle = \langle T(v), w\rangle$. Substituting this into (1), we obtain $\langle T(v), w\rangle = 0$ for any v, w ∈ V. By (i), T = 0.

For an example, consider the linear operator T on R2 defined by T(x, y) = (y, −x). Then $\langle T(u), u\rangle = yx - xy = 0$ for every u = (x, y) ∈ V, but T ≠ 0.
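The reason (ii) works only over C is the complex polarization identity $\langle T(v), w\rangle = \frac{1}{4}\sum_{k=0}^{3} i^k\langle T(v + i^kw), v + i^kw\rangle$, which recovers every $\langle T(v), w\rangle$ from the values $\langle T(u), u\rangle$; this identity is a standard fact not stated in the text, and it follows from the same expansion as (1). The sketch below (NumPy assumed; random data illustrative) checks it and also checks the real counterexample T(x, y) = (y, −x).

```python
import numpy as np

def inner(x, y):
    """<x, y> = x^T conj(y)."""
    return np.dot(x, np.conj(y))

def q(T, x):
    """The quadratic form <T(x), x>."""
    return inner(T @ x, x)

rng = np.random.default_rng(12)
n = 3
T = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
v = rng.standard_normal(n) + 1j * rng.standard_normal(n)
w = rng.standard_normal(n) + 1j * rng.standard_normal(n)

# Complex polarization: <T(v), w> = (1/4) * sum_k i^k <T(v + i^k w), v + i^k w>.
recovered = sum((1j ** k) * q(T, v + (1j ** k) * w) for k in range(4)) / 4
polarization_ok = np.isclose(recovered, inner(T @ v, w))

# Real counterexample: T(x, y) = (y, -x) has <T(u), u> = 0 for all u, yet T != 0.
R = np.array([[0.0, 1.0], [-1.0, 0.0]])
us = rng.standard_normal((100, 2))
real_counterexample_ok = np.allclose([u @ (R @ u) for u in us], 0.0) and not np.allclose(R, 0)
print(polarization_ok, real_counterexample_ok)
```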

Orthogonal and Unitary Operators and Matrices

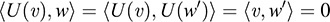

13.10. Prove Theorem 13.6: The following conditions on an operator U are equivalent:

(i) $U^* = U^{-1}$; that is, U is unitary. (ii) $\langle U(v), U(w)\rangle = \langle v, w\rangle$. (iii) ‖U(v)‖ = ‖v‖.

Suppose (i) holds. Then, for every v, w ∈ V,

$$\langle U(v), U(w)\rangle = \langle v, U^*U(w)\rangle = \langle v, I(w)\rangle = \langle v, w\rangle.$$

Thus, (i) implies (ii). Now if (ii) holds, then

$$\|U(v)\| = \sqrt{\langle U(v), U(v)\rangle} = \sqrt{\langle v, v\rangle} = \|v\|.$$

Hence, (ii) implies (iii). It remains to show that (iii) implies (i).

Suppose (iii) holds. Then for every v ∈ V,

$$\langle U^*U(v), v\rangle = \langle U(v), U(v)\rangle = \langle v, v\rangle = \langle I(v), v\rangle.$$

Hence, $\langle (U^*U - I)(v), v\rangle = 0$ for every v ∈ V. But U*U − I is self-adjoint (Prove!); then, by Problem 13.9, we have U*U − I = 0 and so U*U = I. Thus, $U^* = U^{-1}$.

13.11. Let U be a unitary (orthogonal) operator on V, and let W be a subspace invariant under U. Show that W⊥ is also invariant under U.

Because U is nonsingular, U(W) = W; that is, for any w ∈ W, there exists w′ ∈ W such that U(w′) = w. Now let v ∈ W⊥. Then, for any w ∈ W,

$$\langle U(v), w\rangle = \langle U(v), U(w')\rangle = \langle v, w'\rangle = 0.$$

Thus, U(v) belongs to W⊥. Therefore, W⊥ is invariant under U.

13.12. Prove Theorem 13.9: The change-of-basis matrix from an orthonormal basis {u1, …, un} into another orthonormal basis is unitary (orthogonal). Conversely, if P = [aij] is a unitary (orthogonal) matrix, then the vectors $u_i' = \sum_j a_{ji}u_j$ form an orthonormal basis.

Suppose {vi} is another orthonormal basis and suppose

$$v_i = b_{i1}u_1 + b_{i2}u_2 + \cdots + b_{in}u_n, \qquad i = 1, \ldots, n. \qquad (1)$$

Because {vi} is orthonormal,

$$\delta_{ij} = \langle v_i, v_j\rangle = b_{i1}\overline{b_{j1}} + b_{i2}\overline{b_{j2}} + \cdots + b_{in}\overline{b_{jn}}. \qquad (2)$$

Let B = [bij] be the matrix of coefficients in (1). (Then $B^T$ is the change-of-basis matrix from {ui} to {vi}.) Then BB* = [cij], where $c_{ij} = b_{i1}\overline{b_{j1}} + b_{i2}\overline{b_{j2}} + \cdots + b_{in}\overline{b_{jn}}$. By (2), $c_{ij} = \delta_{ij}$, and therefore BB* = I. Accordingly, B, and hence $B^T$, is unitary.

It remains to prove that {u′i} is orthonormal. By Problem 13.67,

$$\langle u_i', u_j'\rangle = a_{1i}\overline{a_{1j}} + a_{2i}\overline{a_{2j}} + \cdots + a_{ni}\overline{a_{nj}} = \langle C_i, C_j\rangle,$$

where Ci denotes the ith column of the unitary (orthogonal) matrix P = [aij]. Because P is unitary (orthogonal), its columns are orthonormal; hence, $\langle u_i', u_j'\rangle = \langle C_i, C_j\rangle = \delta_{ij}$. Thus, {u′i} is an orthonormal basis.
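Both halves of Theorem 13.9 can be checked in $C^3$ (NumPy assumed). Two orthonormal bases are taken as the columns of random unitary matrices U and V (illustrative); the coefficients in $v_i = \sum_j b_{ij}u_j$ are $b_{ij} = \langle v_i, u_j\rangle$, and B should be unitary. Conversely, for a unitary $P = [a_{ij}]$, the vectors $u_i' = \sum_j a_{ji}u_j$ are the columns of UP and should be orthonormal.

```python
import numpy as np

def inner(x, y):
    """<x, y> = x^T conj(y)."""
    return np.dot(x, np.conj(y))

rng = np.random.default_rng(13)
n = 3

def random_unitary():
    Z = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    Q, _ = np.linalg.qr(Z)
    return Q

U = random_unitary()   # columns u_1, ..., u_n
V = random_unitary()   # columns v_1, ..., v_n

# b_ij = <v_i, u_j>, so that v_i = b_i1 u_1 + ... + b_in u_n.
B = np.array([[inner(V[:, i], U[:, j]) for j in range(n)] for i in range(n)])
reproduces_v = np.allclose(U @ B.T, V)
b_unitary = np.allclose(B @ B.conj().T, np.eye(n))

# Converse: column i of U @ P is sum_j a_ji u_j.
P = random_unitary()
U_prime = U @ P
primes_orthonormal = np.allclose(U_prime.conj().T @ U_prime, np.eye(n))
print(reproduces_v, b_unitary, primes_orthonormal)
```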

Symmetric Operators and Canonical Forms in Euclidean Spaces

13.13. Let T be a symmetric operator. Show that (a) The characteristic polynomial Δ(t) of T is a product of linear polynomials (over R); (b) T has a nonzero eigenvector.

(a) Let A be a matrix representing T relative to an orthonormal basis of V; then $A = A^T$. Let Δ(t) be the characteristic polynomial of A. Viewing A as a complex self-adjoint operator, A has only real eigenvalues by Theorem 13.4. Thus,

$$\Delta(t) = (t - \lambda_1)(t - \lambda_2)\cdots(t - \lambda_n),$$

where the λi are all real. In other words, Δ(t) is a product of linear polynomials over R.

(b) By (a), T has at least one (real) eigenvalue. Hence, T has a nonzero eigenvector.

13.14. Prove Theorem 13.11: Let T be a symmetric operator on a real n-dimensional inner product space V. Then there exists an orthonormal basis of V consisting of eigenvectors of T. (Hence, T can be represented by a diagonal matrix relative to an orthonormal basis.)

The proof is by induction on the dimension of V. If dim V = 1, the theorem trivially holds. Now suppose dim V = n > 1. By Problem 13.13, there exists a nonzero eigenvector v1 of T. Let W be the space spanned by v1, and let u1 be a unit vector in W, e.g., let $u_1 = v_1/\|v_1\|$.

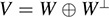

Because v1 is an eigenvector of T, the subspace W of V is invariant under T. By Problem 13.8, W⊥ is invariant under T* = T. Thus, the restriction $\hat{T}$ of T to W⊥ is a symmetric operator. By Theorem 7.4, V = W ⊕ W⊥. Hence, dim W⊥ = n − 1, because dim W = 1. By induction, there exists an orthonormal basis {u2, …, un} of W⊥ consisting of eigenvectors of $\hat{T}$ and hence of T. But $\langle u_1, u_i\rangle = 0$ for i = 2, …, n because ui ∈ W⊥. Accordingly, {u1, u2, …, un} is an orthonormal set and consists of eigenvectors of T. Thus, the theorem is proved.

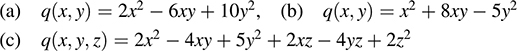

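13.15. Let $q(x, y) = 3x^2 - 6xy + 11y^2$. Find an orthonormal change of coordinates (linear substitution) that diagonalizes the quadratic form q.

Find the symmetric matrix A representing q and its characteristic polynomial Δ(t). We have

$$A = \begin{bmatrix} 3 & -3\\ -3 & 11\end{bmatrix} \qquad \text{and} \qquad \Delta(t) = t^2 - \operatorname{tr}(A)\,t + |A| = t^2 - 14t + 24 = (t - 2)(t - 12).$$

The eigenvalues are λ = 2 and λ = 12. Hence, a diagonal form of q is

$$q(s, t) = 2s^2 + 12t^2$$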

(where we use s and t as new variables). The corresponding orthogonal change of coordinates is obtained by finding an orthogonal set of eigenvectors of A.

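Subtract λ = 2 down the diagonal of A to obtain the matrix

$$M = \begin{bmatrix} 1 & -3\\ -3 & 9\end{bmatrix}, \qquad \text{corresponding to} \qquad x - 3y = 0.$$

A nonzero solution is u1 = (3, 1). Next subtract λ = 12 down the diagonal of A to obtain the matrix

$$M = \begin{bmatrix} -9 & -3\\ -3 & -1\end{bmatrix}, \qquad \text{corresponding to} \qquad 3x + y = 0.$$

A nonzero solution is u2 = (−1, 3). Normalize u1 and u2 to obtain the orthonormal basis

$$\hat{u}_1 = (3/\sqrt{10},\ 1/\sqrt{10}), \qquad \hat{u}_2 = (-1/\sqrt{10},\ 3/\sqrt{10}).$$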

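Now let P be the matrix whose columns are û1 and û2. Then

$$P = \begin{bmatrix} 3/\sqrt{10} & -1/\sqrt{10}\\ 1/\sqrt{10} & 3/\sqrt{10}\end{bmatrix} \qquad \text{and} \qquad D = P^{-1}AP = P^TAP = \begin{bmatrix} 2 & 0\\ 0 & 12\end{bmatrix}.$$

Thus, the required orthogonal change of coordinates is

$$\begin{bmatrix} x\\ y\end{bmatrix} = P\begin{bmatrix} s\\ t\end{bmatrix} \qquad \text{or} \qquad x = \frac{3s - t}{\sqrt{10}}, \quad y = \frac{s + 3t}{\sqrt{10}}.$$

One can also express s and t in terms of x and y by using $P^{-1} = P^T$; that is,

$$s = \frac{3x + y}{\sqrt{10}}, \qquad t = \frac{-x + 3y}{\sqrt{10}}.$$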
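The computation in Problem 13.15 can be verified numerically, assuming NumPy. The sketch builds A and P from the values in the solution, checks that P is orthogonal and that $P^TAP = \operatorname{diag}(2, 12)$, and spot-checks $q(x, y) = 2s^2 + 12t^2$ at one arbitrary point (s, t) = (0.8, −1.7).

```python
import numpy as np

A = np.array([[3.0, -3.0],
              [-3.0, 11.0]])                # symmetric matrix of q(x, y) = 3x^2 - 6xy + 11y^2
P = np.array([[3.0, -1.0],
              [1.0, 3.0]]) / np.sqrt(10)    # columns: normalized u1 = (3, 1), u2 = (-1, 3)

orthogonal_P = np.allclose(P.T @ P, np.eye(2))
D = P.T @ A @ P

# With (x, y) = P (s, t): x = (3s - t)/sqrt(10), y = (s + 3t)/sqrt(10).
s, t = 0.8, -1.7
x, y = P @ np.array([s, t])
form_ok = np.isclose(3 * x**2 - 6 * x * y + 11 * y**2, 2 * s**2 + 12 * t**2)
print(orthogonal_P, np.round(D, 10), form_ok)
```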

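13.16. Prove Theorem 13.12: Let T be an orthogonal operator on a real inner product space V. Then there exists an orthonormal basis of V in which T is represented by a block diagonal matrix M of the form

$$M = \operatorname{diag}\left(I_s,\; -I_t,\; \begin{bmatrix}\cos\theta_1 & -\sin\theta_1\\ \sin\theta_1 & \cos\theta_1\end{bmatrix},\; \ldots,\; \begin{bmatrix}\cos\theta_r & -\sin\theta_r\\ \sin\theta_r & \cos\theta_r\end{bmatrix}\right)$$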

Let $S = T + T^{-1} = T + T^*$. Then $S^* = (T + T^*)^* = T^* + T = S$. Thus, S is a symmetric operator on V. By Theorem 13.11, there exists an orthonormal basis of V consisting of eigenvectors of S. If λ1, …, λm denote the distinct eigenvalues of S, then V can be decomposed into the direct sum V = V1 ⊕ V2 ⊕ ⋯ ⊕ Vm, where each Vi consists of the eigenvectors of S belonging to λi. We claim that each Vi is invariant under T. For suppose v ∈ Vi; then S(v) = λiv and

$$S(T(v)) = (T + T^{-1})T(v) = T(T + T^{-1})(v) = TS(v) = T(\lambda_i v) = \lambda_i T(v).$$

That is, T(v) ∈ Vi. Hence, Vi is invariant under T. Because the Vi are orthogonal to each other, we can restrict our investigation to the way that T acts on each individual Vi.

On a given Vi, we have $(T + T^{-1})v = S(v) = \lambda_i v$. Multiplying by T, we get

$$(T^2 - \lambda_i T + I)(v) = 0. \qquad (1)$$

We consider the cases λi = ±2 and λi ≠ ±2 separately. If λi = ±2, then $(T \mp I)^2(v) = 0$, which leads to $(T \mp I)(v) = 0$ (because T ∓ I is normal, it has the same kernel as its square), that is, T(v) = ±v. Thus, T restricted to this Vi is either I or −I.

If λi ≠ ±2, then T has no eigenvectors in Vi, because, by Theorem 13.4, the only eigenvalues of T are 1 or −1. Accordingly, for v ≠ 0, the vectors v and T(v) are linearly independent. Let W be the subspace spanned by v and T(v). Then W is invariant under T, because using (1) we get

$$T(T(v)) = T^2(v) = \lambda_i T(v) - v \in W.$$

By Theorem 7.4, Vi = W ⊕ W⊥. Furthermore, by Problem 13.8, W⊥ is also invariant under T. Thus, we can decompose Vi into the direct sum of two-dimensional subspaces Wj where the Wj are orthogonal to each other and each Wj is invariant under T. Thus, we can restrict our investigation to the way in which T acts on each individual Wj.

Because $T^2 - \lambda_i T + I = 0$, the characteristic polynomial Δ(t) of T acting on Wj is $\Delta(t) = t^2 - \lambda_i t + 1$. Thus, the determinant of T is 1, the constant term in Δ(t). By Theorem 2.7, the matrix A representing T acting on Wj relative to any orthonormal basis of Wj must be of the form

$$A = \begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix}.$$

The union of the bases of the Wj gives an orthonormal basis of Vi, and the union of the bases of the Vi gives an orthonormal basis of V in which the matrix representing T is of the desired form.

Normal Operators and Canonical Forms in Unitary Spaces

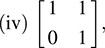

13.17. Determine which of the following matrices is normal:

Because AA* ≠ A*A, the matrix A is not normal.

Because BB* = B*B, the matrix B is normal.
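Normality is a direct computation, so it is easy to automate. The sketch below assumes NumPy; the helper name `is_normal` is hypothetical, and the two matrices are illustrative choices, not the ones of this problem: N is a normal matrix (one checks $NN^* = N^*N$ by hand), while the Jordan block J is not normal.

```python
import numpy as np

def is_normal(A, tol=1e-12):
    """Check whether A commutes with its conjugate transpose."""
    A = np.asarray(A, dtype=complex)
    A_star = A.conj().T
    return np.allclose(A @ A_star, A_star @ A, atol=tol)

N = np.array([[1, 1], [1j, 3 + 2j]])   # illustrative normal matrix
J = np.array([[1, 1], [0, 1]])         # illustrative non-normal Jordan block
print(is_normal(N), is_normal(J))      # True False
```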

13.18. Let T be a normal operator. Prove the following:

(a) T(v) = 0 if and only if T*(v) = 0. (b) T − λI is normal.

(c) If T(v) = λv, then $T^*(v) = \bar{\lambda}v$; hence, any eigenvector of T is also an eigenvector of T*.

(d) If T(v) = λ1v and T(w) = λ2w where λ1 ≠ λ2, then $\langle v, w\rangle = 0$; that is, eigenvectors of T belonging to distinct eigenvalues are orthogonal.

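(a) We show that $\langle T(v), T(v)\rangle = \langle T^*(v), T^*(v)\rangle$:

$$\langle T(v), T(v)\rangle = \langle v, T^*T(v)\rangle = \langle v, TT^*(v)\rangle = \langle T^*(v), T^*(v)\rangle.$$

Hence, by [I3] in the definition of the inner product in Section 7.2, T(v) = 0 if and only if T*(v) = 0.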

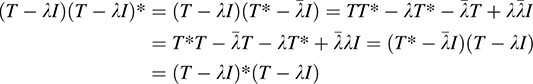

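(b) We show that T − λI commutes with its adjoint:

$$\begin{aligned}(T - \lambda I)(T - \lambda I)^* &= (T - \lambda I)(T^* - \bar{\lambda}I) = TT^* - \bar{\lambda}T - \lambda T^* + \lambda\bar{\lambda}I\\ &= T^*T - \lambda T^* - \bar{\lambda}T + \bar{\lambda}\lambda I = (T^* - \bar{\lambda}I)(T - \lambda I) = (T - \lambda I)^*(T - \lambda I).\end{aligned}$$

Thus, T − λI is normal.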

(c) If T(v) = λv, then (T − λI)(v) = 0. Now T − λI is normal by (b); therefore, by (a), (T − λI)*(v) = 0. That is, $(T^* - \bar{\lambda}I)(v) = 0$; hence, $T^*(v) = \bar{\lambda}v$.

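(d) We show that $\lambda_1\langle v, w\rangle = \lambda_2\langle v, w\rangle$:

$$\lambda_1\langle v, w\rangle = \langle \lambda_1 v, w\rangle = \langle T(v), w\rangle = \langle v, T^*(w)\rangle = \langle v, \bar{\lambda}_2 w\rangle = \lambda_2\langle v, w\rangle.$$

But λ1 ≠ λ2; hence, $\langle v, w\rangle = 0$.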
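Part (c) can be seen numerically, assuming NumPy: for a normal matrix $T = Q\,\operatorname{diag}(\lambda)\,Q^*$ (random unitary Q, illustrative eigenvalues), each column of Q is an eigenvector of T for $\lambda_j$ and should be an eigenvector of T* for $\bar{\lambda}_j$. For contrast, the non-normal Jordan block J satisfies $Je_1 = e_1$, but $J^*e_1 = (1, 1)$ is not $\bar{1}\,e_1$.

```python
import numpy as np

rng = np.random.default_rng(14)
n = 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n)))
lam = np.array([1 + 2j, -1j, 3.0, 2 - 2j])
T = Q @ np.diag(lam) @ Q.conj().T       # a normal matrix with these eigenvalues
T_star = T.conj().T

# Column j of Q: eigenvector of T for lam[j]; check T* Q[:, j] = conj(lam[j]) Q[:, j].
ok = all(np.allclose(T_star @ Q[:, j], np.conj(lam[j]) * Q[:, j]) for j in range(n))

J = np.array([[1.0, 1.0], [0.0, 1.0]])
e1 = np.array([1.0, 0.0])               # J e1 = 1 * e1
jordan_fails = not np.allclose(J.T @ e1, e1)
print(ok, jordan_fails)
```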

13.19. Prove Theorem 13.13: Let T be a normal operator on a complex finite-dimensional inner product space V. Then there exists an orthonormal basis of V consisting of eigenvectors of T. (Thus, T can be represented by a diagonal matrix relative to an orthonormal basis.)

The proof is by induction on the dimension of V. If dim V = 1, then the theorem trivially holds. Now suppose dim V = n > 1. Because V is a complex vector space, T has at least one eigenvalue and hence a nonzero eigenvector v. Let W be the subspace of V spanned by v, and let u1 be a unit vector in W.

Because v is an eigenvector of T, the subspace W is invariant under T. However, v is also an eigenvector of T* by Problem 13.18; hence, W is also invariant under T*. By Problem 13.8, W⊥ is invariant under T** = T. The remainder of the proof is identical with the latter part of the proof of Theorem 13.11 (Problem 13.14).

13.20. Prove Theorem 13.14: Let T be any operator on a complex finite-dimensional inner product space V. Then T can be represented by a triangular matrix relative to an orthonormal basis of V.

The proof is by induction on the dimension of V. If dim V = 1, then the theorem trivially holds. Now suppose dim V = n > 1. Because V is a complex vector space, T has at least one eigenvalue and hence at least one nonzero eigenvector v. Let W be the subspace of V spanned by v, and let u1 be a unit vector in W. Then u1 is an eigenvector of T and, say, T(u1) = a11u1.

By Theorem 7.4, V = W ⊕ W⊥. Let E denote the orthogonal projection of V onto W⊥. Clearly W⊥ is invariant under the operator ET. By induction, there exists an orthonormal basis {u2, …, un} of W⊥ such that, for i = 2, …, n,

$$ET(u_i) = a_{i2}u_2 + a_{i3}u_3 + \cdots + a_{ii}u_i.$$

(Note that {u1, u2, …, un} is an orthonormal basis of V.) But E is the orthogonal projection of V onto W⊥; hence, we must have

$$T(u_i) = a_{i1}u_1 + a_{i2}u_2 + \cdots + a_{ii}u_i$$

for i = 2, …, n. This, together with T(u1) = a11u1, gives us the desired result.
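The inductive step of Problem 13.20 can be mirrored numerically. The sketch below (hypothetical helper `schur_by_deflation`) takes an eigenvector from `np.linalg.eig`, extends it to an orthonormal basis with a QR factorization, and recurses on the block that acts on W⊥. It is meant to illustrate the proof, not to replace a library Schur routine such as `scipy.linalg.schur`, which uses a more stable algorithm.

```python
import numpy as np

def schur_by_deflation(A):
    """Return (U, R) with U unitary, R upper triangular and A = U R U*.

    Mirrors the induction: pick an eigenvector u1, extend it to an orthonormal
    basis of V, and recurse on the block acting on W-perp.
    """
    A = np.asarray(A, dtype=complex)
    n = A.shape[0]
    if n == 1:
        return np.eye(1, dtype=complex), A.copy()
    _, vecs = np.linalg.eig(A)
    v = vecs[:, 0]                                    # a nonzero eigenvector
    # QR of [v | I] gives an orthonormal basis whose first vector spans W = span{v}.
    Q, _ = np.linalg.qr(np.column_stack([v, np.eye(n, dtype=complex)]))
    B = Q.conj().T @ A @ Q                            # first column is (a11, 0, ..., 0)
    U2, _ = schur_by_deflation(B[1:, 1:])             # induction hypothesis on W-perp
    U = Q.copy()
    U[:, 1:] = Q[:, 1:] @ U2                          # U = Q diag(1, U2)
    R = U.conj().T @ A @ U
    return U, R

rng = np.random.default_rng(1)
T = rng.standard_normal((4, 4))
U, R = schur_by_deflation(T)
print(np.allclose(U @ R @ U.conj().T, T))     # True
print(np.allclose(np.tril(R, -1), 0))         # True: R is upper triangular
```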

Miscellaneous Problems

13.21. Prove Theorem 13.10B: The following are equivalent:

(i) P = T² for some self-adjoint operator T.

(ii) P = S*S for some operator S; that is, P is positive.

(iii) P is self-adjoint and ⟨P(u), u⟩ ≥ 0 for every u ∈ V.

Suppose (i) holds; that is, P = T², where T = T*. Then P = TT = T*T, and so (i) implies (ii). Now suppose (ii) holds. Then P* = (S*S)* = S*S** = S*S = P, and so P is self-adjoint. Furthermore,

$$\langle P(u), u\rangle = \langle S^*S(u), u\rangle = \langle S(u), S(u)\rangle \ge 0.$$

Thus, (ii) implies (iii), and so it remains to prove that (iii) implies (i).

Now suppose (iii) holds. Because P is self-adjoint, there exists an orthonormal basis {u1, …, un} of V consisting of eigenvectors of P; say, P(ui) = λiui. By Theorem 13.4, the λi are real. Using (iii), we show that the λi are nonnegative. We have, for each i,

$$0 \le \langle P(u_i), u_i\rangle = \langle \lambda_i u_i, u_i\rangle = \lambda_i\langle u_i, u_i\rangle.$$

Thus, ⟨ui, ui⟩ > 0 forces λi ≥ 0, as claimed. Accordingly, √λi is a real number. Let T be the linear operator defined by

$$T(u_i) = \sqrt{\lambda_i}\,u_i, \qquad i = 1, \ldots, n.$$

Because T is represented by a real diagonal matrix relative to the orthonormal basis {ui}, T is self-adjoint. Moreover, for each i,

$$T^2(u_i) = T\big(\sqrt{\lambda_i}\,u_i\big) = \sqrt{\lambda_i}\sqrt{\lambda_i}\,u_i = \lambda_i u_i = P(u_i).$$

Because T² and P agree on a basis of V, P = T². Thus, the theorem is proved.

Remark: The above operator T is the unique positive operator such that P = T²; it is called the positive square root of P.
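The construction in the proof of (iii) ⇒ (i) translates directly into a few lines of NumPy. In the sketch below, `positive_sqrt` is a hypothetical helper; it relies on `np.linalg.eigh`, which returns real eigenvalues and an orthonormal eigenbasis for a Hermitian matrix, and the sample S is an arbitrary choice used to produce a positive P = S*S as in (ii).

```python
import numpy as np

def positive_sqrt(P):
    """Positive square root of a positive (Hermitian, positive semidefinite) matrix P.

    Diagonalize P in an orthonormal eigenbasis {u_i} and set T(u_i) = sqrt(lambda_i) u_i.
    """
    lam, U = np.linalg.eigh(P)          # P = U diag(lam) U*, lam real and ascending
    if -lam.min() > 1e-12:
        raise ValueError("P has a negative eigenvalue, so it is not positive")
    lam = np.clip(lam, 0.0, None)       # discard tiny negative round-off
    return U @ np.diag(np.sqrt(lam)) @ U.conj().T

S = np.array([[1.0, 2.0], [0.0, 1.0]])
P = S.conj().T @ S                      # positive by condition (ii)
T = positive_sqrt(P)
print(np.allclose(T @ T, P), np.allclose(T, T.conj().T))   # True True
```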

13.22. Show that any operator T is the sum of a self-adjoint operator and a skew-adjoint operator.

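Set S = ½(T + T*) and U = ½(T − T*). Then T = S + U, where

$$S^* = \left(\tfrac{1}{2}(T + T^*)\right)^* = \tfrac{1}{2}(T^* + T^{**}) = \tfrac{1}{2}(T^* + T) = S$$

and

$$U^* = \left(\tfrac{1}{2}(T - T^*)\right)^* = \tfrac{1}{2}(T^* - T) = -U;$$

that is, S is self-adjoint and U is skew-adjoint.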
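For matrices, the decomposition of Problem 13.22 is a one-liner. The sketch below (hypothetical helper `hermitian_parts`, arbitrary sample matrix) forms S = ½(T + T*) and U = ½(T − T*) and checks the three required properties.

```python
import numpy as np

def hermitian_parts(T):
    """Split T = S + U with S = (T + T*)/2 self-adjoint and U = (T - T*)/2 skew-adjoint."""
    T_star = T.conj().T
    return (T + T_star) / 2, (T - T_star) / 2

T = np.array([[1 + 2j, 3], [4j, -1]])
S, U = hermitian_parts(T)
print(np.allclose(S + U, T))            # True
print(np.allclose(S, S.conj().T))       # True: S* = S
print(np.allclose(U, -U.conj().T))      # True: U* = -U
```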

13.23. Prove: Let T be an arbitrary linear operator on a finite-dimensional inner product space V. Then T is a product of a unitary (orthogonal) operator U and a unique positive operator P; that is, T = UP. Furthermore, if T is invertible, then U is also uniquely determined.

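By Theorem 13.10, T*T is a positive operator; hence, there exists a (unique) positive operator P such that P² = T*T (Problem 13.43). Observe that

$$\|P(v)\|^2 = \langle P(v), P(v)\rangle = \langle P^2(v), v\rangle = \langle T^*T(v), v\rangle = \langle T(v), T(v)\rangle = \|T(v)\|^2. \tag{1}$$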

We now consider separately the cases when T is invertible and noninvertible.

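If T is invertible, then we set Û = PT⁻¹. We show that Û is unitary:

$$\hat U^* = (PT^{-1})^* = (T^{-1})^* P^* = (T^*)^{-1} P \quad\text{and}\quad \hat U^*\hat U = (T^*)^{-1} P P T^{-1} = (T^*)^{-1} T^*T\, T^{-1} = I.$$

Thus, Û is unitary. We next set U = Û⁻¹. Then U is also unitary, and T = UP, as required.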

To prove uniqueness, we assume T = U0P0, where U0 is unitary and P0 is positive. Then

$$T^*T = P_0^* U_0^* U_0 P_0 = P_0 I P_0 = P_0^2.$$

But the positive square root of T*T is unique (Problem 13.43); hence, P0 = P. (Note that the invertibility of T is not used to prove the uniqueness of P.) Now if T is invertible, then P is also invertible by (1). Multiplying U0P = UP on the right by P⁻¹ yields U0 = U. Thus, U is also unique when T is invertible.

Now suppose T is not invertible. Let W be the image of P; that is, W = Im P. We define U1: W → V by

$$U_1(w) = T(v), \quad\text{where } P(v) = w. \tag{2}$$

We must show that U1 is well defined; that is, that P(v) = P(v′) implies T(v) = T(v′). This follows from the fact that P(v − v′) = 0 is equivalent to ‖P(v − v′)‖ = 0, which forces ‖T(v − v′)‖ = 0 by (1). Thus, U1 is well defined. We next define U2: W⊥ → V. Note that, by (1), P and T have the same kernels. Hence, the images of P and T have the same dimension; that is, dim(Im P) = dim W = dim(Im T). Consequently, W⊥ and (Im T)⊥ also have the same dimension. We let U2 be any (inner product space) isomorphism between W⊥ and (Im T)⊥.

We next set U = U1 ⊕ U2. [Here U is defined as follows: If v ∈ V and v = w + w′, where w ∈ W, w′ ∈ W⊥, then U(v) = U1(w) + U2(w′).] Now U is linear (Problem 13.69), and, if v ∈ V and P(v) = w, then, by (2),

$$T(v) = U_1(w) = U(w) = UP(v).$$

Thus, T = UP, as required.

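It remains to show that U is unitary. Now every vector x ∈ V can be written in the form x = P(v) + w′, where w′ ∈ W⊥. Then U(x) = UP(v) + U2(w′) = T(v) + U2(w′), where ⟨T(v), U2(w′)⟩ = 0 by definition of U2. Also, ⟨T(v), T(v)⟩ = ⟨P(v), P(v)⟩ by (1). Thus,

$$\begin{gathered}
\langle U(x), U(x)\rangle = \langle T(v) + U_2(w'),\, T(v) + U_2(w')\rangle = \langle T(v), T(v)\rangle + \langle U_2(w'), U_2(w')\rangle \\
= \langle P(v), P(v)\rangle + \langle w', w'\rangle = \langle P(v) + w',\, P(v) + w'\rangle = \langle x, x\rangle.
\end{gathered}$$

[We also used the fact that ⟨P(v), w′⟩ = 0.] Thus, U is unitary, and the theorem is proved.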
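Numerically, a polar decomposition T = UP is usually obtained from the singular value decomposition rather than by the case analysis above; this is a different route to the same factorization (SciPy also offers `scipy.linalg.polar`). In the sketch below (hypothetical helper `polar`), writing T = W Σ V* gives P = V Σ V*, the positive square root of T*T, and U = W V*. The example uses a singular T, where, as noted in the proof, U is not unique.

```python
import numpy as np

def polar(T):
    """Return (U, P) with U unitary, P positive and T = U P, via the SVD T = W diag(s) V*."""
    W, s, Vh = np.linalg.svd(T)               # square W and Vh by default
    P = Vh.conj().T @ np.diag(s) @ Vh         # positive square root of T*T
    U = W @ Vh                                # product of unitaries, hence unitary
    return U, P

T = np.array([[1.0, 2.0], [2.0, 4.0]])        # rank 1, so T is not invertible
U, P = polar(T)
print(np.allclose(U @ P, T))                  # True
print(np.allclose(U.conj().T @ U, np.eye(2))) # True: U is unitary
print(np.allclose(P @ P, T.conj().T @ T))     # True: P^2 = T*T
```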

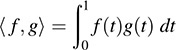

13.24. Let V be the vector space of polynomials over R with inner product defined by

$$\langle f, g\rangle = \int_0^1 f(t)g(t)\,dt.$$

Give an example of a linear functional ϕ on V for which Theorem 13.3 does not hold; that is, for which there is no polynomial h(t) such that ϕ(f) = ⟨f, h⟩ for every f ∈ V.

Let ϕ: V → R be defined by ϕ(f) = f(0); that is, ϕ evaluates f(t) at 0, and hence maps f(t) into its constant term. Suppose a polynomial h(t) exists for which

$$\phi(f) = f(0) = \int_0^1 f(t)h(t)\,dt \tag{1}$$

for every polynomial f(t). Observe that ϕ maps the polynomial tf(t) into 0; hence, by (1),

$$\int_0^1 t f(t)h(t)\,dt = 0 \tag{2}$$

for every polynomial f(t). In particular, (2) must hold for f(t) = th(t); that is,

$$\int_0^1 t^2 h^2(t)\,dt = 0.$$

This integral forces h(t) to be the zero polynomial (the integrand t²h²(t) is continuous and nonnegative, so it must vanish on [0, 1], and a polynomial with infinitely many roots is zero); hence, ϕ(f) = ⟨f, h⟩ = ⟨f, 0⟩ = 0 for every polynomial f(t). This contradicts the fact that ϕ is not the zero functional; hence, the polynomial h(t) does not exist.
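The failure of Theorem 13.3 here can also be seen quantitatively. On the finite-dimensional subspace of polynomials of degree less than n, Theorem 13.3 does supply a representer hₙ with f(0) = ⟨f, hₙ⟩; if a polynomial h as above existed, each hₙ would be the orthogonal projection of h onto that subspace, so ‖hₙ‖ ≤ ‖h‖. The sketch below (hypothetical helpers `solve_exact` and `representer_norm_sq`, using the inner product ⟨f, g⟩ = ∫₀¹ f(t)g(t) dt) computes ‖hₙ‖² with exact rational arithmetic, because the Gram matrix of 1, t, …, tⁿ⁻¹ is the badly conditioned Hilbert matrix.

```python
from fractions import Fraction

def solve_exact(A, b):
    """Solve A x = b exactly by Gauss-Jordan elimination over the rationals."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

def representer_norm_sq(n):
    """Squared norm of h_n = sum c_j t^j with ⟨t^i, h_n⟩ = (t^i)(0) for i = 0, ..., n-1.

    Gram entries are ⟨t^i, t^j⟩ = 1/(i + j + 1); h_n solves G c = e_0,
    so ‖h_n‖^2 = ⟨h_n, h_n⟩ = c_0.
    """
    G = [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]
    e0 = [Fraction(1 if i == 0 else 0) for i in range(n)]
    return solve_exact(G, e0)[0]

print([int(representer_norm_sq(n)) for n in range(1, 7)])   # [1, 4, 9, 16, 25, 36]
```

The values agree with the known fact that the (1, 1) entry of the inverse n × n Hilbert matrix is n², so ‖hₙ‖ = n grows without bound and no single h can serve for all of V.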

SUPPLEMENTARY PROBLEMS

Adjoint Operators

13.26. Let T: R³ → R³ be defined by T(x, y, z) = (x + 2y, 3x − 4z, y). Find T*(x, y, z).

13.27. Let T: C³ → C³ be defined by T(x, y, z) = (ix + (2 + 3i)y, 3x + (3 − i)z, (2 − 5i)y + iz). Find T*(x, y, z).
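In the standard bases these adjoints are the transpose and the conjugate transpose of the coefficient matrices, as in Section 13.2. The NumPy sketch below assumes the reading T(x, y, z) = (x + 2y, 3x − 4z, y) for Problem 13.26 and also checks ⟨T(u), v⟩ = ⟨u, T*(v)⟩ on random vectors, using ⟨u, v⟩ = Σ uₖv̄ₖ, which equals `np.vdot(v, u)`; the helper `inner` is an illustrative name.

```python
import numpy as np

def inner(u, v):
    """⟨u, v⟩ = sum_k u[k] * conj(v[k]); np.vdot conjugates its first argument."""
    return np.vdot(v, u)

# Row k lists the coefficients of x, y, z in the k-th component of T(x, y, z).
A = np.array([[1, 2, 0],
              [3, 0, -4],
              [0, 1, 0]])              # Problem 13.26 (real)
B = np.array([[1j, 2 + 3j, 0],
              [3, 0, 3 - 1j],
              [0, 2 - 5j, 1j]])        # Problem 13.27 (complex)

A_adj = A.T                # real case: the adjoint is the transpose
B_adj = B.conj().T         # complex case: the adjoint is the conjugate transpose
print(A_adj)               # rows give T*(x, y, z) = (x + 3y, 2x + z, -4y)
print(B_adj)

rng = np.random.default_rng(2)
u = rng.standard_normal(3) + 1j * rng.standard_normal(3)
v = rng.standard_normal(3) + 1j * rng.standard_normal(3)
print(np.isclose(inner(B @ u, v), inner(u, B_adj @ v)))   # True
```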

13.28. For each linear functional ϕ on V, find u ∈ V such that ϕ(v) = ⟨v, u⟩ for every v ∈ V.

(a) ϕ: R³ → R defined by ϕ(x, y, z) = x + 2y − 3z.

(b) ϕ: C³ → C defined by ϕ(x, y, z) = ix + (2 + 3i)y + (1 − 2i)z.
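For Problem 13.28, writing ϕ(v) = Σ aₖvₖ and comparing with ⟨v, u⟩ = Σ vₖūₖ shows that u = (ā₁, ā₂, ā₃). A minimal sketch (hypothetical helper `representer`):

```python
import numpy as np

def representer(coeffs):
    """u with phi(v) = ⟨v, u⟩ for phi(v) = sum_k coeffs[k] * v[k].

    Since ⟨v, u⟩ = sum_k v[k] * conj(u[k]), we need conj(u) = coeffs.
    """
    return np.conj(np.asarray(coeffs, dtype=complex))

u_a = representer([1, 2, -3])            # 13.28(a)
u_b = representer([1j, 2 + 3j, 1 - 2j])  # 13.28(b)

# Check phi(v) = ⟨v, u⟩ = np.vdot(u, v) on a sample vector.
v = np.array([1 + 1j, -2, 0.5j])
print(np.isclose(np.dot([1j, 2 + 3j, 1 - 2j], v), np.vdot(u_b, v)))   # True
```

This yields u = (1, 2, −3) for (a) and u = (−i, 2 − 3i, 1 + 2i) for (b).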

13.29. Suppose V has finite dimension. Prove that the image of T* is the orthogonal complement of the kernel of T; that is, Im T* = (Ker T)⊥. Hence, rank(T) = rank(T*).

13.30. Show that T*T = 0 implies T = 0.

13.31. Let V be the vector space of polynomials over R with inner product defined by ⟨f, g⟩ = ∫₀¹ f(t)g(t) dt. Let D be the derivative operator on V; that is, D(f) = df/dt. Show that there is no operator D* on V such that ⟨D(f), g⟩ = ⟨f, D*(g)⟩ for every f, g ∈ V. That is, D has no adjoint.

Unitary and Orthogonal Operators and Matrices

13.32. Find a unitary (orthogonal) matrix whose first row is

(b) a multiple of (1, 1 − i), (c) a multiple of (1, −i, 1 − i).
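One systematic way to attack Problem 13.32 is to normalize the given row and extend it to an orthonormal basis. The sketch below (hypothetical helper `unitary_with_first_row`) does the extension with `np.linalg.qr`; since the columns of U* are the conjugates of the rows of U, it extends the conjugated row and then takes the conjugate transpose. The matrix it returns is one valid answer among many, not necessarily the one in a solution key.

```python
import numpy as np

def unitary_with_first_row(r):
    """Return a unitary matrix whose first row is r / ||r||."""
    u = np.asarray(r, dtype=complex)
    u = u / np.linalg.norm(u)
    n = u.size
    # Orthonormal basis whose first vector is a unit-modulus multiple of conj(u).
    Q, _ = np.linalg.qr(np.column_stack([u.conj(), np.eye(n, dtype=complex)]))
    Q[:, 0] *= np.vdot(Q[:, 0], u.conj())    # remove that phase, so Q[:, 0] = conj(u)
    return Q.conj().T                        # rows of U are conjugated columns of Q

U = unitary_with_first_row([1, -1j, 1 - 1j])               # part (c)
print(np.allclose(U @ U.conj().T, np.eye(3)))              # True: U is unitary
print(np.allclose(U[0], np.array([1, -1j, 1 - 1j]) / 2))   # True: the norm is 2
```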

13.33. Prove that the products and inverses of orthogonal matrices are orthogonal. (Thus, the orthogonal matrices form a group under multiplication, called the orthogonal group.)

13.34. Prove that the products and inverses of unitary matrices are unitary. (Thus, the unitary matrices form a group under multiplication, called the unitary group.)

13.35. Show that if an orthogonal (unitary) matrix is triangular, then it is diagonal.

13.36. Recall that the complex matrices A and B are unitarily equivalent if there exists a unitary matrix P such that B = P*AP. Show that this relation is an equivalence relation.

13.37. Recall that the real matrices A and B are orthogonally equivalent if there exists an orthogonal matrix P such that B = PᵀAP. Show that this relation is an equivalence relation.

13.38. Let W be a subspace of V. For any v ∈ V, let v = w + w′, where w ∈ W, w′ ∈ W⊥. (Such a sum is unique because V = W ⊕ W⊥.) Let T: V → V be defined by T(v) = w − w′. Show that T is a self-adjoint unitary operator on V.

13.39. Let V be an inner product space, and suppose U: V → V (not assumed linear) is surjective (onto) and preserves inner products; that is, ⟨U(v), U(w)⟩ = ⟨v, w⟩ for every v, w ∈ V. Prove that U is linear and hence unitary.

Positive and Positive Definite Operators

13.40. Show that the sum of two positive (positive definite) operators is positive (positive definite).

13.41. Let T be a linear operator on V, and let f: V × V → K be defined by f(u, v) = ⟨T(u), v⟩. Show that f is an inner product on V if and only if T is positive definite.

13.42. Suppose E is an orthogonal projection onto some subspace W of V. Prove that kI + E is positive (positive definite) if k ≥ 0 (k > 0).

13.43. Consider the operator T defined by T(ui) = √λi ui, i = 1, …, n, in the proof of Theorem 13.10A. Show that T is positive and that it is the only positive operator for which T² = P.

13.44. Suppose P is both positive and unitary. Prove that P = I.

13.45. Determine which of the following matrices are positive (positive definite):

13.46. Prove that a 2 × 2 complex matrix A = [a, b; c, d] is positive if and only if (i) A = A*, and (ii) a, d, and |A| = ad − bc are nonnegative real numbers.

13.47. Prove that a diagonal matrix A is positive (positive definite) if and only if every diagonal entry is a nonnegative (positive) real number.

Self-adjoint and Symmetric Matrices

13.48. For any operator T, show that T + T* is self-adjoint and T – T* is skew-adjoint.

13.49. Suppose T is self-adjoint. Show that T²(v) = 0 implies T(v) = 0. Use this to prove that Tⁿ(v) = 0 also implies T(v) = 0 for n > 0.

13.50. Let V be a complex inner product space. Suppose ⟨T(v), v⟩ is real for every v ∈ V. Show that T is self-adjoint.

13.51. Suppose T1 and T2 are self-adjoint. Show that T1T2 is self-adjoint if and only if T1 and T2 commute; that is, T1T2 = T2T1.

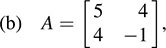

13.52. For each of the following symmetric matrices A, find an orthogonal matrix P and a diagonal matrix D such that D = PᵀAP:
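The matrices for Problem 13.52 are not reproduced here, so the sketch below uses a hypothetical symmetric matrix. `np.linalg.eigh` returns the eigenvalues in ascending order together with orthonormal eigenvectors as the columns of P, so PᵀAP is diagonal; the same P gives the change of coordinates X = PX′ asked for in Problem 13.53, because q(PX′) = X′ᵀ(PᵀAP)X′.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])          # hypothetical symmetric matrix, not one from the text
eigvals, P = np.linalg.eigh(A)      # eigenvalues 1 and 3 (ascending); P orthogonal
D = P.T @ A @ P

print(np.allclose(P.T @ P, np.eye(2)))       # True: P is orthogonal
print(np.allclose(D, np.diag(eigvals)))      # True: P^T A P is diagonal

# q(X) = X^T A X becomes q(P X') = X'^T D X' = 1*x1'^2 + 3*x2'^2.
Xp = np.array([0.3, -1.2])
print(np.isclose((P @ Xp) @ A @ (P @ Xp), eigvals @ Xp**2))   # True
```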

13.53. Find an orthogonal change of coordinates X = PX′ that diagonalizes each of the following quadratic forms and find the corresponding diagonal quadratic form q(x′):

Normal Operators and Matrices

13.54. Let  . Verify that A is normal. Find a unitary matrix P such that P*AP is diagonal. Find P*AP.


13.55. Show that a triangular matrix is normal if and only if it is diagonal.

13.56. Prove that if T is normal on V, then ‖T(v)‖ = ‖T*(v)‖ for every v ∈ V. Prove that the converse holds in complex inner product spaces.

13.57. Show that self-adjoint, skew-adjoint, and unitary (orthogonal) operators are normal.

13.58. Suppose T is normal. Prove that

(a) T is self-adjoint if and only if its eigenvalues are real.

(b) T is unitary if and only if its eigenvalues have absolute value 1.

(c) T is positive if and only if its eigenvalues are nonnegative real numbers.

13.59. Show that if T is normal, then T and T* have the same kernel and the same image.

13.60. Suppose T1 and T2 are normal and commute. Show that T1 + T2 and T1T2 are also normal.

13.61. Suppose T1 is normal and commutes with T2. Show that T1 also commutes with T2*.

13.62. Prove the following: Let T1 and T2 be normal operators on a complex finite-dimensional inner product space V, and suppose T1 and T2 commute. Then there exists an orthonormal basis of V consisting of eigenvectors of both T1 and T2. (That is, T1 and T2 can be simultaneously diagonalized.)

Isomorphism Problems for Inner Product Spaces

13.63. Let S = {u1, …, un} be an orthonormal basis of an inner product space V over K. Show that the mapping v ↦ [v]S is an (inner product space) isomorphism between V and Kⁿ. (Here [v]S denotes the coordinate vector of v in the basis S.)

13.64. Show that inner product spaces V and W over K are isomorphic if and only if V and W have the same dimension.

13.65. Suppose {u1, …, un} and {u′1, …, u′n} are orthonormal bases of V and W, respectively. Let T: V → W be the linear map defined by T(ui) = u′i for each i. Show that T is an isomorphism.

13.66. Let V be an inner product space. Recall that each u ∈ V determines a linear functional û in the dual space V* by the definition û(v) = ⟨v, u⟩ for every v ∈ V. (See the text immediately preceding Theorem 13.3.) Show that the map u ↦ û is linear and nonsingular, and hence an isomorphism from V onto V*.

Miscellaneous Problems

13.67. Suppose {u1, …, un} is an orthonormal basis of V. Prove:

(a)

(b) Let A = [aij] be the matrix representing T: V → V in the basis {ui}. Then aij = ⟨T(uj), ui⟩.

13.68. Show that there exists an orthonormal basis {u1, …, un} of V consisting of eigenvectors of T if and only if there exist orthogonal projections E1, …, Er and scalars λ1, …, λr such that (i) T = λ1E1 + ⋯ + λrEr, (ii) E1 + ⋯ + Er = I, and (iii) EiEj = 0 for i ≠ j.

13.69. Suppose V = U ⊕ W, and suppose T1: U → V and T2: W → V are linear. Show that T = T1 ⊕ T2 is also linear. Here T is defined as follows: If v ∈ V and v = u + w, where u ∈ U, w ∈ W, then

$$T(v) = T_1(u) + T_2(w).$$

ANSWERS TO SUPPLEMENTARY PROBLEMS

Notation: [R1; R2; …; Rn] denotes a matrix with rows R1, R2, …, Rn.

13.25. (a) [5 + 2i, 4 + 6i; 3 − 7i, 8 − 3i], (b) [3, i, 5i, 2i], (c) [1, 2; 1, 3]

13.26. T*(x, y, z) = (x + 3y, 2x + z, −4y)

13.27. T*(x, y, z) = (−ix + 3y, (2 − 3i)x + (2 + 5i)z, (3 + i)y − iz)

13.28. (a) u = (1, 2, −3), (b) u = (−i, 2 − 3i, 1 + 2i)

13.45. Only (i) and (v) are positive. Only (v) is positive definite.

13.52.

(a) D = [2, 0; 0, −3], (b) D = [7, 0; 0, −3], (c) D = [8, 0; 0, −2]

13.53.

(a) q(x′) = diag(1, 11); (b) q(x′) = diag(3, −7); (c) q(x′) = diag(1, 17)
