The first four chapters of this text have been concerned with probability models and statistical analyses of these models. The emphasis has been on finding a realistic description of an unpredictable, natural phenomenon. Although scientific description or explanation may be an intermediate goal, in engineering practice the use of any analysis is ultimately in situations where the engineer must make a decision. This decision might be: (1) the choice of a flood-control channel’s dimensions, when future flood magnitudes are unpredictable; (2) whether to install a traffic control at an intersection where the number of long gaps between randomly passing vehicles determines the delays and safety risks to crossing vehicles on an intersecting street; or (3) the choice among alternate pavement designs when the amount of useful aggregate in the available borrow area is not well established. In this chapter and the next we shall deal with the analysis of decision making when unknown or unpredictable elements are involved in decisions.

In Chaps. 2 and 3 there was little discussion of the influence of the particular application and particular engineer on the description of the random variable. There was a tacit assumption that all engineers in all situations would arrive at substantially the same description of the random variable. In Chap. 4 it was recognized, however, that once we had left the world of purely mathematical models and were forced to relate these models to real data or to make elementary decisions about these models, there were no longer hard and fast rules of “correct” analysis. Two or more reasonable models may exist, different rules for choosing parameter estimators are available, and significance-test conclusions depend upon the convention for the choice of acceptable error probabilities. It is clear, then, that the particular engineer and hence the particular situation necessarily influence the results of applied probability and statistics. The discussions of the methods of previous chapters do not go far enough, however, in revealing the central role of engineering decisions and their influences.

In recent years a new philosophy of applied probability and statistics has been under development. This approach recognizes not only that the ultimate use of probabilistic methods is decision making but also that the individual, subjective elements of the analysis are inseparable from the more objective aspects. This new theory, Bayesian statistical decision theory, provides a mathematical model for making engineering decisions in the face of uncertainty. It derives its name from Thomas Bayes, an English mathematician who introduced the equation now used to relate certain probabilities in the decision model.

In this chapter we shall be concerned with situations where the consequences of a decision depend on some factor which is not known with certainty. We call this factor the “state of nature.” The factor might be the total settlement of the soil below a proposed bridge footing, or the fraction of a suburban population which will use a proposed rapid-transit system, or the average annual maximum daily rainfall in a stream’s watershed, or whether or not a potentially active geological fault exists in the bedrock several hundred feet below the site of a nuclear power plant. Recognizing that uncertainty about the true state of nature can be expressed through probabilities, the engineer can analyze the alternative decisions facing him to determine which is the optimal choice. In this chapter, we present this method of analysis.

When uncertainty exists regarding the true state of nature, it is often feasible, but not necessarily economical, to obtain more information concerning the state. With the problems given above, for example, the engineer may use drill holes to learn the values of certain soil characteristics at a limited number of points below the footing; he may interview a representative group of the suburban population; he may install gauges and wait to collect several observations of the annual maximum daily rainfall; he may drill a pattern of holes hoping to intersect a fault plane and, if found, examine the recovered cores to assess the likelihood that it is potentially active. The data seldom permit a perfectly confident statement to be made about the true state of nature of interest, but they do provide new information.

Two major questions that we consider in this chapter are:

1. How to combine these new data with the previous probability assignments before making the decision analysis

2. Whether (and how) one should obtain more such information before making a final decision

Section 5.1 discusses the analysis of decisions with a given set of information. Sections 5.2 and 5.3 treat the processing of new or potential information. When this incorporation of new data has been completed, the method of analysis of the decision reverts to that given in Sec. 5.1.

In Chap. 6 we shall treat in some detail decision analysis when the consequences depend upon future outcomes of a process which generates a sequence of random variables, such as successive annual maximum floods or a sequence of concrete cylinders. The uncertain state of nature will be defined as the value of the parameters of the process. Information on the value of the parameters is made available through past observations of the sequence.

In this section we treat those situations in which a decision must be made based on the available information about nature. In later sections we treat the questions of, first, deciding whether more information should be obtained and, second, how the new information should be processed.

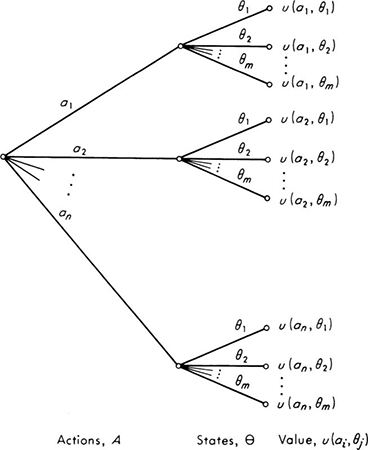

Components of the model Our concern is decision. What course of action should be taken when uncertainty exists regarding the “true state of nature,” i.e., when some aspects of the problem have been treated by the engineer as probabilistic? The decision-making process will be formulated as the process of choosing an action a from among the available alternative actions a1, a2, . . ., an, the members of an action space A. In practice this set or space will have already been reduced to a limited number by the engineer’s exclusion of all but the potentially optimal courses of action. Once the decision has been made, the engineer can only wait to see which state of nature θ in the space of possible states Θ is the true one. As a result of having taken action a and having found true state θ, the engineer will receive value u(a, θ), a numerical measure (say, dollars) of the consequences of this action-state pair.

Fig. 5.1.1 Decision tree for discrete-state space.

This formulation is displayed graphically in Fig. 5.1.1 as a decision tree. The process can be viewed as a game: the engineer chooses an action, a state is chosen in a probabilistic manner “by chance,” and the engineer receives a payoff dependent upon his action and the outcome.

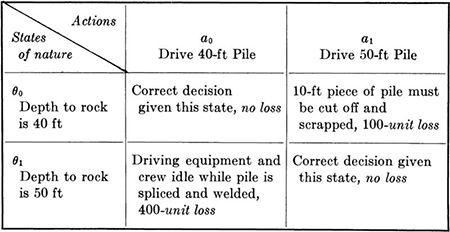

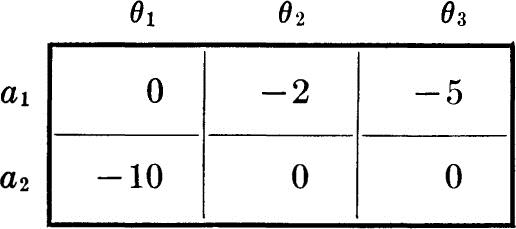

Examples The formulation of decision problems will be demonstrated by examples. They include a construction engineer’s problem of selecting a steel pile length when the depth to rock is uncertain. The available actions are driving a 40- or 50-ft pile, and the possible states of nature are a 40- or 50-ft depth to bedrock. The consequences of any action-state pair can be given in a payoff table:

Table 5.1.1 A simple payoff table

This situation is shown in decision-tree form in Fig. 5.1.2. The losses have been entered as negative values. (In this case they represent the “opportunity loss” associated with not making the best choice of action possible in light of the true state. This is discussed more thoroughly in Prob. 5.7.)

Other examples of decisions under uncertainty include an engineer’s choosing one of four cofferdam designs when the state of nature is the magnitude of next year’s maximum flow. Here the state space Θ might logically be treated as continuous, 0 to ∞. The magnitude of that future flow is uncertain to the designer. Value to the engineer results from the initial cost of his design and its performance under the observed flood. The decision tree is shown in Fig. 5.1.3.

Fig. 5.1.2 Decision tree: pile-length choice.

Fig. 5.1.3 Decision tree: cofferdam.

The performance and hence the value of a facility might depend both upon its capacity and upon the demand. If both are uncertain, the possible states are the possible pairs of capacity and demand levels. These pairs can be represented as a two-dimensional state θij, representing the joint occurrence of demand level i and capacity level j. If these levels are discrete, the situation can be indicated by another level of branches in the decision tree, as in Fig. 5.1.4. The probabilities of the capacity levels, and possibly of the demand levels,† will depend upon the action or design chosen.

Another engineer might be responsible for designing a small flood-control channel for a 20-year lifetime. The state of nature could be taken as a set of values, the annual maximum flows in each of the next 20 years; that is, Θ is a highly multidimensional continuous-state space. The value received from taking an action and finding a point in this state space will depend on the initial cost and future performance associated with these 20 flows.

When choosing between two types of pavement, an engineer may recognize that the cost and performance of one type will depend on the volume of a convenient aggregate borrow pit. A more expensive or lower-quality aggregate will have to be obtained if the volume in the available pit is inadequate. This quantity, the true state of nature, is uncertain (and, in fact, may never become known unless the designer chooses the pavement type requiring use of the pit). The decision tree might appear as in Fig. 5.1.5. Other examples will be found throughout this chapter.

Fig. 5.1.4 Demand-capacity decision tree.

Fig. 5.1.5 Decision tree: pavement choice.

Our problem now is to analyze such decision problems, that is, to develop a method which makes consistent use of all the engineer’s information: his degrees of belief in the various possible states, his subsequent observed data, and his preferences among the various possible action-state pairs. We shall see that the method suggests the use of relative likelihoods or probabilities—no matter how subjectively defined and evaluated—to express the engineer’s uncertainty, and the use of expected value to rank order the available actions. These concepts, probability and expectation, are not new to us. The validity of the expected-value criterion, however, relies on a properly chosen measure of preference among the outcomes. Hence we require a brief discussion of a new idea: value theory.
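The ranking of actions by expected value can be sketched in a few lines for the pile-length example. This is only an illustration: the payoff entries and the state probabilities below are hypothetical assumptions chosen for the sketch (they are not the entries of Table 5.1.1), and the names `payoff`, `prob`, and `expected_value` are likewise introduced here.

```python
# Hypothetical numbers throughout: the payoffs and probabilities are
# illustrative assumptions, not the values of Table 5.1.1.
actions = ["drive 40-ft pile", "drive 50-ft pile"]
states = ["rock at 40 ft", "rock at 50 ft"]

# payoff[action][state]: opportunity losses entered as negative values.
payoff = {
    "drive 40-ft pile": {"rock at 40 ft": 0.0, "rock at 50 ft": -400.0},
    "drive 50-ft pile": {"rock at 40 ft": -100.0, "rock at 50 ft": 0.0},
}

# Assumed degrees of belief in each state (they must sum to 1).
prob = {"rock at 40 ft": 0.6, "rock at 50 ft": 0.4}


def expected_value(action):
    """Probability-weighted average payoff over the states for one action."""
    return sum(prob[s] * payoff[action][s] for s in states)


expected = {a: expected_value(a) for a in actions}
best_action = max(expected, key=expected.get)

for a in actions:
    print(f"{a}: expected payoff = {expected[a]:.1f}")
print("highest expected payoff:", best_action)
```

With different assumed probabilities the ranking can reverse, which is why the probability assignments and the value measure both need the careful treatment that follows.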

Our concern in this section is to demonstrate that if preferences or values of outcomes are properly expressed, then expected value is a logical basis for choosing among alternative actions. As early as Sec. 2.1 this criterion was suggested as reasonable. Here we shall explore its validity. Actually, this discussion is little more than suggestive of the more formal treatment that the subject requires in order that the criterion be firmly based. The reader is referred to other sources† for such discussions. Our purpose here is to suggest their content and necessity.

Consider a simple decision situation where the engineer must choose between actions a1 and a2. Action a1 will lead with certainty to consequences B. Action a2, on the other hand, involves uncertainty. The state of nature may be θ1, in which case the consequences are A, or it may be θ2, in which case C will be the consequences of this action and state. The decision tree is shown in Fig. 5.1.6. A simple example is the choice of pavement type mentioned in the previous section. Another example involves a construction engineer who must decide between a1, paying for insurance against work stoppage due to rain during a critical 1-day erection operation, and a2, buying no such insurance. In the latter case, if the true state of nature is θ1, good weather on that day, the consequences are A, which includes no insurance costs. If, on the other hand, bad weather forces delay (that is, if state of nature proves to be θ2), the consequences include monetary losses (and, possibly, professional embarrassment for having failed to buy the insurance!). Before the true state is known, however, the optimal decision depends upon the likelihood of θ2 and the relative degrees of seriousness of these various consequences.

Fig. 5.1.6 A basic decision process.

Utility function In order to proceed with the decision-making analysis, then, we require a numerical assessment by the designer of his preferences among these outcomes. Let us assume that he would prefer A to B and B to C. (Formally the theory also requires transitivity, i.e., that the decision maker also prefers A to C. We shall not include in our present discussion a number of similar but equally reasonable formal requirements.) This simple preference statement can be expressed numerically by any function u (that is, by any numerical values assigned to A, B, and C) such that

$$u(A) > u(B) > u(C)$$

For example, u(A) = 1.0, u(B) = 0.5, and u(C) = 0; or, alternatively, u(A) = +100, u(B) = 93.6, and u(C) = –106. Our purpose is to find a particular function u, which we will call a utility function, such that it is logically consistent to make the decision between actions a1 and a2 by comparing u(B) and the expected value of the utility of action a2, pu(A) + (1 – p)u(C). Here p is the probability that θ1 is the true state. Actually, there is no need for such a utility function in these simple decision problems, but once we have established expected utility as a valid criterion, we can continue to use it in very complicated problems.

We shall see that the choice of any two values of u may be arbitrary; we may assign the two utilities u(A) and u(C), for example, the values 1 and 0, say, or +10 and –10. It is somewhat advantageous to assign the two arbitrary values to A and C, the most preferable and the least preferable outcomes. All other values of u will thus lie between u(A) and u(C) in numerical value. Assume then that u(A) and u(C) have been given convenient values. What value should be given u(B) to make expected value a valid decision criterion? To answer this, we must return to our decision maker for a more precise preference statement. Notice that if p, the probability of θ1 being the true state, were unity, the engineer would choose action a2 over a1 because he prefers A (now a sure consequence of a2) to B. On the other hand, if p were zero, a1 would be chosen over a2 if the engineer were going to act consistently with his stated preferences. As for other values of p, it can be said that as p grows from zero, a1 will be preferred less and less strongly over a2 until some point p*, after which a2 will be preferred over a1. We assume that by some means of interrogation we can find the crossover value p* between 0 and 1, such that the engineer is indifferent between choosing a1, with its certain consequence B, and choosing action a2, the lottery with chance p* of receiving A and 1 – p* of receiving C.
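One way to picture the interrogation is as a bisection on p. The sketch below assumes, as the paragraph above does, that preference switches exactly once from a1 to a2 as p increases. The callback `prefers_lottery` is a hypothetical stand-in for questioning the decision maker, and the simulated respondent with a crossover at 0.3 is an assumed value; real answers would be noisier than this.

```python
def find_indifference_probability(prefers_lottery, tol=1e-3):
    """Bisect on p until the preference switches from a1 to a2.

    prefers_lottery(p) is a hypothetical stand-in for asking: "with chance p
    of A and 1 - p of C, would you take the lottery (a2) rather than the
    sure consequence B (a1)?"
    """
    lo, hi = 0.0, 1.0  # at p = 0 the sure B is preferred; at p = 1 the lottery
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prefers_lottery(mid):
            hi = mid  # crossover lies at or below mid
        else:
            lo = mid  # crossover lies above mid
    return 0.5 * (lo + hi)


def simulated_engineer(p):
    """Simulated respondent whose crossover is 0.3 (an assumed value)."""
    return p > 0.3


p_star = find_indifference_probability(simulated_engineer)
print(f"estimated p* = {p_star:.3f}")
```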

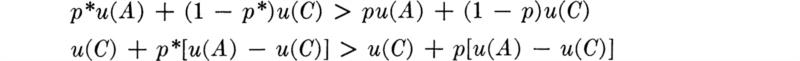

Validity of expected-value criterion Once we have that value p*, we choose to assign u(B) the value

$$u(B) = p^*u(A) + (1 - p^*)u(C)$$

(Note, in passing, that for any p* between 0 and 1 the assigned value u(B) will lie between u(A) and u(C) in value; this is consistent with the original preference statements.) By this assignment we have accomplished our intended purpose, that of establishing expected utility value as the consistent criterion by which to choose actions. The decision maker should choose a1 if and only if the expected utility given this action choice, E[u | a1], is greater than E[u | a2]. That this is true is verified by noting that:

1. For all p < p*:

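(a) The engineer should, to be consistent with his preferences, as reflected in his p* choice, choose a1 over a2, and

(b) It is also true that for these values of p (and only these values of p)

$$E[u \mid a_1] > E[u \mid a_2]$$

as can be seen by evaluating the expectations

$$E[u \mid a_1] = u(B) \qquad E[u \mid a_2] = p\,u(A) + (1 - p)\,u(C)$$

and substituting for u(B) its assigned value:

$$E[u \mid a_1] = p^*u(A) + (1 - p^*)u(C) > p\,u(A) + (1 - p)\,u(C) = E[u \mid a_2]$$

The inequality holds because p* > p and u(A) – u(C) is positive.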

2. Similarly, for all p > p*:

(a) The engineer should choose a2 over a1, and also

(b) E[u | a1] < E[u | a2], since

$$E[u \mid a_1] = p^*u(A) + (1 - p^*)u(C) < p\,u(A) + (1 - p)\,u(C) = E[u \mid a_2]$$
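Both cases can be read from a single identity. As a short worked step, using only the assignment u(B) = p*u(A) + (1 – p*)u(C) and the fact that u(A) > u(C):

$$E[u \mid a_1] - E[u \mid a_2] = u(B) - \bigl[p\,u(A) + (1 - p)\,u(C)\bigr] = (p^* - p)\,\bigl[u(A) - u(C)\bigr]$$

The difference therefore has the sign of p* – p: it is positive when p < p*, negative when p > p*, and zero at the indifference point p = p*.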

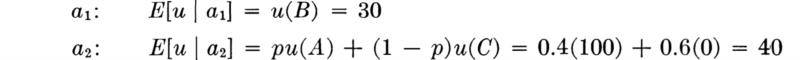

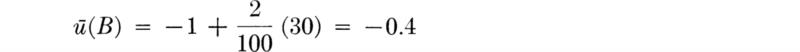

For example, assume that the engineer was given choices between action a1 and a2 for a selection of values of p until he showed by his choices to be indifferent at p* = 0.3. We arbitrarily let u(A) = 100 and u(C) = 0. Then we should assign

$$u(B) = p^*u(A) + (1 - p^*)u(C) = 0.3(100) + 0.7(0) = 30$$

If the available information suggests that the probability of θ1 is p = 0.4, then the expected utilities of the actions are:

$$E[u \mid a_1] = u(B) = 30 \qquad E[u \mid a_2] = 0.4(100) + 0.6(0) = 40$$

The engineer should choose action a2, since 40 > 30.
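The arithmetic of this example can be checked with a short sketch. The numbers (u(A) = 100, u(C) = 0, p* = 0.3, p = 0.4) are those of the example; the function and variable names are introduced here for illustration only.

```python
def assigned_utility(p_star, u_best, u_worst):
    """Utility of the sure outcome B implied by the indifference probability p*."""
    return p_star * u_best + (1.0 - p_star) * u_worst


def expected_utility_lottery(p, u_best, u_worst):
    """Expected utility of a2: chance p of A, chance 1 - p of C."""
    return p * u_best + (1.0 - p) * u_worst


u_A, u_C = 100.0, 0.0  # arbitrary end-point utilities from the example
p_star = 0.3           # indifference probability from the example
p = 0.4                # assessed probability that theta_1 is the true state

u_B = assigned_utility(p_star, u_A, u_C)       # equals E[u | a1]
eu_a2 = expected_utility_lottery(p, u_A, u_C)  # equals E[u | a2]
choice = "a2" if eu_a2 > u_B else "a1"

print(f"E[u|a1] = {u_B:.1f}, E[u|a2] = {eu_a2:.1f}, choose {choice}")
```

Rerunning the sketch with any p below 0.3 reverses the choice, in agreement with the engineer's stated indifference point.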

In other words, if u(B) is properly assigned to be consistent with the decision maker’s stated preferences (here expressed in the statement A preferred to B preferred to C and in the indifference probability p*), then ranking of the expected values of utility for the actions determines the ranking of the actions which is consistent with those preference statements. The logic may appear circular and unnecessary. It is, however, precisely the circular reasoning which guarantees that the decision criterion remains valid when more complicated model structures make the decision choice far from obvious and the need for a consistent, formal analysis overwhelming.

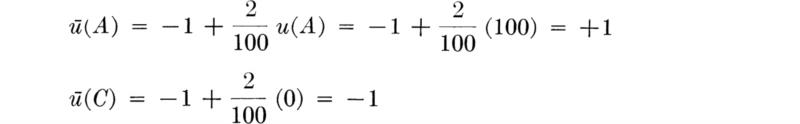

Linear transformations We note in passing, because it will be useful shortly, the validity of the arbitrary choice of any two values of u. To assign two other values, ū(A) and ū(C), to these two consequences is equivalent to saying ū = c0 + c1u, that is, that a scaling and shifting (or linear transformation) is being made in the utility function. It is trivial to show that our conclusions remain true for a positive linear transformation of the utilities. Let us simply demonstrate this fact through the previous numerical example. Suppose that we assign a new utility function ū = –1 + (2/100)u. Then

$$\bar{u}(A) = -1 + \tfrac{2}{100}(100) = +1 \qquad \bar{u}(C) = -1 + \tfrac{2}{100}(0) = -1$$

and

$$\bar{u}(B) = -1 + \tfrac{2}{100}(30) = -0.4$$

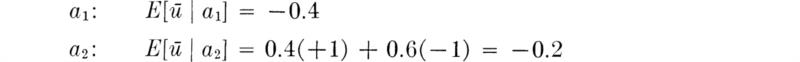

This is the same value for the utility of B that we would have reached had we assigned, initially, A a utility of +1 and C a utility of –1, since then ū(B) = p*ū(A) + (1 – p*)ū(C) = 0.3(1) + 0.7(–1) = –0.4. The decision also remains unchanged, of course. Now we have, with p = 0.4,

$$E[\bar{u} \mid a_1] = \bar{u}(B) = -0.4 \qquad E[\bar{u} \mid a_2] = 0.4(1) + 0.6(-1) = -0.2$$

The expected utility of action a2 still exceeds that of action a1.

The freedom in assigning the utility function is analogous to that in assigning a temperature scale. Note that, owing to this degree of arbitrariness, no significance can be attached to the relative magnitude of the expected utility values. In the example above, action a1 cannot be said to be, say |(40 – 30)/40| or “¼ worse” than a2, for with the ū utility function it is |[–0.2 – (–0.4)] / – 0.2| or “100% worse.” It can only be said that “a1 ranks below a2.”
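The two observations above (the ranking survives a positive linear transformation, but relative magnitudes do not) can be illustrated with a brief sketch. It uses the numbers of the running example; the helper names `expected_utilities` and `transform` are assumptions made for this illustration.

```python
def expected_utilities(u_A, u_C, p_star, p):
    """Return (E[u|a1], E[u|a2]) for the two-action problem of Fig. 5.1.6."""
    u_B = p_star * u_A + (1.0 - p_star) * u_C  # sure consequence of a1
    return u_B, p * u_A + (1.0 - p) * u_C      # lottery offered by a2


def transform(u, c0, c1):
    """Positive linear transformation u_bar = c0 + c1 * u, with c1 > 0."""
    return c0 + c1 * u


p_star, p = 0.3, 0.4
c0, c1 = -1.0, 2.0 / 100.0

eu1, eu2 = expected_utilities(100.0, 0.0, p_star, p)
eu1_bar, eu2_bar = expected_utilities(
    transform(100.0, c0, c1), transform(0.0, c0, c1), p_star, p
)

# The ranking of the actions is the same on both scales ...
same_ranking = (eu2 > eu1) == (eu2_bar > eu1_bar)
# ... but the "relative difference" depends on the scale chosen.
rel = abs((eu2 - eu1) / eu2)
rel_bar = abs((eu2_bar - eu1_bar) / eu2_bar)

print(same_ranking, round(rel, 2), round(rel_bar, 2))
```

The expected utilities themselves transform by the same rule as the utilities, which is why the ordering cannot change when c1 is positive.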

In simple decision situations such as this, nothing is gained by the steps of finding p*, finding u(B), and then finding the expected utilities in order to make a decision. It would be easier in this case simply to find p* and ascertain whether p is greater or less than p*. We shall become interested, however, in more complex decision problems where the latter approach is impossible, but where the determination of expected utilities will require nothing but familiar operations. The analysis of such complex decision situations will find us replacing lotteries (some embedded within others) by their expected utilities, † in order to reduce each action to an associated single number, the expected utility if that action is taken. By extension of the simple case above, we can say that if our utility assignment has been made in the manner outlined above, maximization of expected utility is a criterion of action choice which is consistent with the decision maker’s stated preferences.

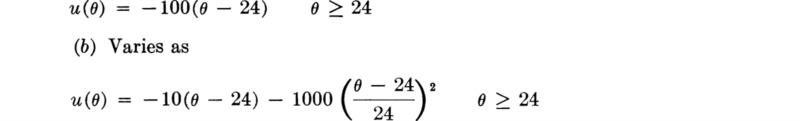

Monetary value versus utility; risk aversion It is common in business and engineering practice to express the consequences of decisions and outcomes in monetary terms. It may or may not be appropriate to replace expected utility by expected dollar value as a decision criterion. Let us investigate this problem briefly.

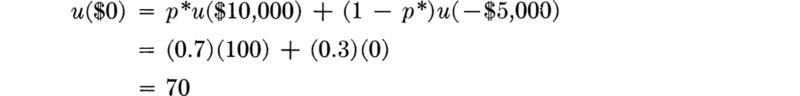

If a decision tree has a number of terminal points, each the consequence of an action-state pair, and if dollar values are attached to these consequences, it is in general necessary to replace each of these dollar values by a proper utility in order to justify the use of the expected-value criterion. If the number of such terminal points is large, it is often easier to attempt to plot a smooth function of utility versus dollars, u(d). Then one may simply read the utility value from this curve for each particular dollar value of a consequence. The determination of this utility function follows the established pattern. Suppose the terminal-point dollar consequences are as shown in Fig. 5.1.7. The most preferable outcome is +$10,000; assign this, arbitrarily, a utility of u($10,000) = 100. The least preferable value is a loss, –$5,000; assign this a utility value of 0, u(– $5,000) = 0. Then to find the utility of any other dollar value, say $0, the engineer must be quizzed to determine at what value of p he would be indifferent between a sure gain of $0 and a lottery in which he would win $10,000 with probability p and lose $5,000 with probability 1 – p. In personal financial matters most individuals would undoubtedly find themselves in the position where a gain of $10,000 would be welcome but a loss of $5,000 would be very serious. For any value of p less than, say, 0.7, they might prefer to take no risk at all, i.e., a sure $0. Above p = 0.7 they might feel that the potential large income was so likely that the small risk of loss was “worth the gamble.” Arriving in this way at a p* of 0.7, $0 is assigned a utility of

$$u(\$0) = 0.7\,u(\$10{,}000) + 0.3\,u(-\$5{,}000) = 0.7(100) + 0.3(0) = 70$$

Fig. 5.1.7 Terminal-point dollar-value consequences.

Fig. 5.1.8 Utility curves.

A number of similar processes obtaining preferences for dollar values of, say, –$2,000, +$2,000, and +$6,000 versus lotteries involving a gain of $10,000 and a loss of $5,000 will lead to corresponding values of p*, and thence to the utilities of –$2,000, +$2,000, and +$6,000. For indifference p*’s of 0.5, 0.85, and 0.95, respectively,

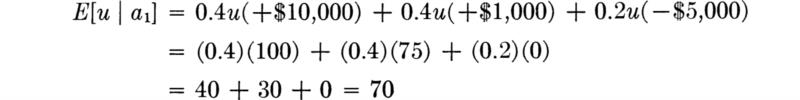
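The construction of a utility-versus-dollars curve from indifference probabilities can be sketched as follows. The indifference probabilities are the ones quoted in the text (0.7 for $0 and 0.5, 0.85, 0.95 for –$2,000, +$2,000, +$6,000). The piecewise-linear interpolation is an assumption made here for simplicity; it is a stand-in for a smooth curve drawn through the points, so interpolated readings will differ somewhat from values read off such a curve.

```python
from bisect import bisect_right

U_BEST, U_WORST = 100.0, 0.0  # utilities assigned to +$10,000 and -$5,000

# Indifference probabilities quoted in the text, keyed by the sure dollar amount.
indifference = {-2000: 0.50, 0: 0.70, 2000: 0.85, 6000: 0.95}

# u(d) = p* u(best) + (1 - p*) u(worst) for each interrogated dollar value.
points = {-5000: U_WORST, 10000: U_BEST}
for dollars, p_star in indifference.items():
    points[dollars] = p_star * U_BEST + (1.0 - p_star) * U_WORST

xs = sorted(points)
ys = [points[x] for x in xs]


def utility(d):
    """Piecewise-linear interpolation between the assessed points.

    This is an assumption of the sketch; a smooth hand-drawn curve
    would give slightly different intermediate values.
    """
    if not xs[0] <= d <= xs[-1]:
        raise ValueError("dollar value outside the assessed range")
    if d == xs[-1]:
        return ys[-1]
    i = bisect_right(xs, d) - 1
    frac = (d - xs[i]) / (xs[i + 1] - xs[i])
    return ys[i] + frac * (ys[i + 1] - ys[i])


for d in xs:
    print(f"u({d:+d}) = {utility(d):.1f}")
```

The function refuses to extrapolate, since no preferences were elicited outside the range –$5,000 to +$10,000.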

A plot of these points and a smooth curve drawn through them is shown as a solid line in Fig. 5.1.8. From this curve utilities can be assigned to all the dollar-valued consequences of the terminal points of the decision tree in Fig. 5.1.7. The utility of +$1,000, for example, is found to be about 75. Given probabilities on θ1, θ2, and θ3, say, 0.4, 0.4, and 0.2, the expected utility of taking action a1 (Fig. 5.1.7) could be evaluated as

Similar calculations for the other actions would lead to a choice of the action which is optimal in the light of the preferences reflected in the utility curve. We shall discuss these steps in detail in Sec. 5.1.4.

The shape of the solid-line utility curve in Fig. 5.1.8 has been commonly encountered (Grayson [1960]) among individuals making decisions involving dollar values which are large in relation to their working capital. This concave-downward curve reflects the facts that in such situations the utility of a gain of d dollars is less in magnitude than that of a loss of the same amount, and that, after a gain of d, a gain of d more has, incrementally, a smaller utility. Decision makers with such utility functions are said to be “risk averters.”

In many practical circumstances the decisions may not involve dollar sums which are “large” for the individual or body (corporate or social) involved. For a large professional office, for a major business, or for a large political body the dollar values of +$10,000 and –$5,000 are not large. Many decisions may be made each day involving such numbers. For some individuals the comparable “not large” numbers might be +$10 and –$5. In these cases a strongly curved, concave utility assignment may be too conservative. As individuals we might assign an indifference probability of almost ⅓ to a choice between a sure $0 (no gamble) and a lottery with a chance p of gaining $10 or 1 – p of losing $5. So too, a large firm might assign a breakeven probability of about ⅓ to the earlier situation involving a sure $0 versus a lottery with payoffs +$10,000 or –$5,000. Assigning the largest and smallest dollar-value utilities of 100 and 0, as before, the proper utility assignment to $0 is 33 for the large firm.

This point and others for the same situation of a large firm or political body are shown as crosses in Fig. 5.1.8. The dashed line through them is linear. The implication is that utility can be expressed as u = c1 + c2d or, inversely, that dollars can be expressed as d = c3 + c4u. Since, as we have seen, we retain a valid utility function after any linear transformation, it is clear that under these special circumstances dollars themselves are a valid utility measure. The important consequence is that if utility is linear in dollars, expected monetary value is a valid criterion for choosing among alternative actions. In most examples to follow, we shall assume that this linearity exists and hence that expected dollar values are adequate for decision-making purposes.
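The breakeven probability of ⅓ quoted for the large firm follows directly from linearity. As a short check, assuming u = c1 + c2d with c2 > 0, indifference between the sure $0 and the lottery requires that the lottery's expected dollar value also be $0:

$$p^*(10{,}000) + (1 - p^*)(-5{,}000) = 0 \;\Longrightarrow\; p^* = \frac{5{,}000}{15{,}000} = \frac{1}{3}, \qquad u(\$0) = \tfrac{1}{3}(100) + \tfrac{2}{3}(0) \approx 33$$

The same ratio results for the individual's lottery of +$10 versus –$5, since only the proportions of the payoffs enter.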

Extracting preferences Methods for interrogating individuals to determine their utility assignments can be found in other references (e.g., Schlaifer [1959] or Pratt, Raiffa, and Schlaifer [1965]). They usually involve choices among hypothetical lotteries in search of indifference points. The assessment may in practice prove difficult. Individuals may, for example, respond less favorably to hypothetical risky situations than to real ones. Some consequences (e.g., dam failures, formwork collapses, highway accidents) involve difficult, but fundamentally unavoidable problems in assessing utilities. When a highway planner puts the limited funds available to him into a new, time-saving highway link rather than into accident-reducing modifications at an existing intersection, he has made such an assessment implicitly. Explicit utility assessments could potentially bring more consistency to the decisions that must be made under such difficult circumstances. † Experience comparable to that being gained in major business decision applications (Howard [1966]) is needed in engineering situations.

Discussion A number of comments are in order about the validity of the expected utility decision criterion and about aspects of utility assignment which are peculiar to civil engineering.

It is important to note that for expected (or average) utility to become the valid criterion of choice, there has been no need for the situation to involve repeated trials. The decision may be, as most civil-engineering decisions are, a “one-shot” affair. Nor does the criterion say that one should always prefer a 50–50 lottery with yields $100,000 or $0 to a sure gain of, say, $40,000. For any number of reasons the decision maker may have an aversion to risk taking. The one-shot and risk-aversion elements will be reflected in the utility assignments which express the individual’s preferences among outcomes of the situation at hand. For example, the engineer might feel that in this decision situation p* is 0.7, in which case u($40,000) = 0.7u($100,000) + 0.3u($0) = 0.7, if we set u($100,000) = 1 and u($0) = 0. Then the preferred decision is the sure $40,000, since its expected utility is 0.7, whereas the lottery has an expected utility of only (0.5) (1) + (0.5) (0) = 0.5. Thus, the utility assignment has expressed the engineer’s aversion to risking a sure $40,000 against a possible $100,000. He will accept the risk only if the likelihood of success is sufficiently high, for example, p > 0.7.

It should be clear that the utility assignments to consequences may be highly subjective. Depending on circumstances, such assignments may differ from individual to individual and even from time to time for one individual. They should represent the preferences of the moment of the individual responsible for the decision.

Civil engineers make many professional decisions under conditions of uncertainty about the future. Do these professional decisions bring them value? Can the value accruing from a particular professional decision be directly identified? Or is value only received over a long period of time from many decisions with few apparent direct relationships to the engineer, beyond the generalization that consistently wise decisions are associated with long-term economic gain and increase in professional stature?

In the field of business, where much of applied decision theory has been developed, an executive can often specifically identify value accruing from a specific decision. He is operating for himself or in the name of the concern, and an economic gain or loss is more apparent. The operations of a professional civil-engineering office do parallel ordinary business operations in that a profit or loss is found, but only a small part of the decisions made pertain to the management of the engineering business itself. Most of the decisions of interest are professional in nature. That is, the engineer is acting as an agent for his client, and he has a professional obligation to attempt to make a design with characteristics optimal to the owner. The situation is complicated since some aspects of decisions are predominantly client-related, whereas others are more closely engineer-related. For example, absolute minimum cost to the owner is rarely a goal for the engineer because of other professional factors that must be considered, such as public safety, appearance, etc. The values of these latter factors to the owner may be extremely difficult to ascertain. But the saving of $10,000 in the cost of construction of a building is of immediate importance to the client or owner. How important is this saving to the engineer who must make the critical design decision? Utility to the client is not equivalent to the utility to the engineer. The reverse may be in part true; the saving of $10,000 in construction costs may actually reduce the fee received by the engineer for the job. Another example will follow in a bridge-design illustration in Sec. 5.1.4.

How, in short, should the preferences of the client be reflected or transformed into the preference assessments of the engineer responsible for the decision? These are questions which have received no quantitative answers. But these are not new problems generated by the methodology of statistical decision theory. They are, in fact, only new ways of expressing some of the key issues underlying professionalism. By dealing with them in a more formal, mathematical framework, decision theory may provide a basis for further discussion of them and for a rational, quantitative resolution.

Despite the immediate practical difficulties in assessing utilities, the concept remains fundamental to the understanding of rational decision making under uncertainty.

Closure It should be repeated that our development of the value theory underlying statistical decision theory has been nonrigorous and only suggestive of the basis for the expected utility criterion. Briefly, the use of the criterion is justified by the method of making the utility assignments. The assignments are consistent both with the engineer’s preferences and with the use of the expected utility criterion. In the analysis of decision trees in Sec. 5.1.4, we shall use this fact over and over to replace an array of branches and associated outcomes by a single number—the expected utility.

We next must reconsider briefly the probability assignments on the decision tree. We shall find that some of the earlier statements in Chap. 2 about the degree of belief or operational interpretation of probability can now be made more explicit.

The probability assignments on a decision tree are more familiar than utilities. Their treatment has been the subject of all the preceding chapters. The sources of these probabilities may include observed frequencies, deductions from mathematical models, and, in addition, measures of an engineer’s subjective degree of belief regarding the possible states of nature. The former two sources have been emphasized up to this point, but the broader, subjective interpretation can now be given a firmer basis. As mentioned briefly in Sec. 2.1, such statements as “The odds are 2 to 1 that this job will be completed in 2 months,” “I’ll bet a quarter to your nickel that the depth to bedrock is less than 50 ft,” or “The most likely value of the mean trip time is 8 minutes, but it may be as large as 10 or as small as 7” all represent expressions of the engineer’s judgment about unknown quantities. These quantities may represent repetitive experiments, one-of-a-kind situations, fixed but unknown factors, or even parameters of the distributions of repetitive random variables. The subjective expressions above can all be related to probability distributions assigned to these quantities. An excellent engineering argument for this position is presented by Tribus [1969].

Both observed frequencies and these “more subjective” probability assignments can now be interpreted as those numerical summaries of an individual’s degrees of belief or relative likelihoods upon which that individual is prepared to base his decision. The implication seems to be that another individual might make different probability assignments to the same decision tree. This is true; it is consistent with the observation that different engineers have had different experiences and use different reasoning processes.

This operational interpretation of probability as an individual’s decision-making aid is not, however, inconsistent in practice with the more restricted relative-frequency interpretation; if a large amount of relevant observed data is available, two reasonable individuals will undoubtedly use it in the same way, obtaining the same personal probability of the event. The techniques for determining probability assignments when sufficient data and/or theoretical models are available have been discussed.

In those cases where the probabilities depend strongly upon judgment, however, the extraction of an engineer’s degrees of belief in terms of personal or subjective probabilities may not be an easy task. When accustomed to the idea, an engineer may be able to make direct statements. For example, he might say:

Based on the information available to me at the moment, the probability is 0.3 that the volume of useful aggregate in this borrow pit is about 4000 yd³ and 0.7 that it is about 5000 yd³.

Or, if a continuous-state space is desirable:

There is a 50–50 chance that the volume is less than 4750 yd³. If it proves to be less than 4750 yd³, however, there is a 50–50 chance that it is less than 3800 yd³. Given that it is greater than 4750 yd³, on the other hand, the probability is 50 percent that it is greater than 5000 yd³. There is no significant chance that the volume is less than 3000 yd³ nor more than 5250 yd³.

The points implied by these latter statements and a smooth curve through them yield the CDF in Fig. 5.1.9.

Alternatively, these quantifications of degrees of belief can be extracted in a manner which more strongly reflects the decision situation at hand. The engineer can be asked to state a preference between two lotteries, one artificial, the other involving the state of nature or event under consideration. His preference will indicate a bound on the probability sought. For example, assume that an engineer states a preference for an artificial “lottery,” lottery I, which offers a sure $5, over lottery II, which offers him $0 if the amount of useful aggregate is found to be less than 4500 yd³, and $8 if it is found to be more than 4500 yd³. The implication of this preference statement is that the expected utility of the first lottery is greater than that of the second, or

$$u(\$5) > p\,u(\$0) + (1 - p)\,u(\$8)$$

in which p = P[volume ≤ 4500]. Assuming, for simplicity, that utility and dollars have been found to be linearly related (Sec. 5.1.2) in this range, and letting u($d) = d, the engineer’s preference implies that

$$5 > p(0) + (1 - p)(8)$$

or

$$p > \tfrac{3}{8} = 0.375$$

Fig. 5.1.9 Subjective cumulative probability distribution on usable aggregate volume.

By changing the values of the rewards or the values of the probabilities (or both) in lotteries I and II, one can presumably find a situation where the engineer states that he is indifferent between the two lotteries. In this case the expected utilities can be set equal and the implied value of p solved for. This probability is clearly the one upon which the engineer is prepared to make a decision which rests in part, at least, upon the quantity of aggregate in the borrow pit.
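The bound and the indifference calculation can be sketched numerically. The short Python sketch below assumes, as in the text, that utility is linear in dollars over this range. The function name `implied_probability` and the indifference amount of $4 are illustrative assumptions, not values from the text.

```python
def implied_probability(sure_amount, reward_if_low, reward_if_high):
    """Probability p = P[volume <= threshold] at which a sure payment has the
    same expected utility as the lottery, assuming u($d) = d (linear utility).

    The lottery pays reward_if_low with probability p and reward_if_high
    with probability 1 - p.
    """
    if reward_if_high == reward_if_low:
        raise ValueError("the two lottery rewards must differ")
    return (reward_if_high - sure_amount) / (reward_if_high - reward_if_low)


# Lottery I (a sure $5) preferred over lottery II ($0 or $8):
# the engineer's p must exceed this value.
lower_bound = implied_probability(5.0, 0.0, 8.0)

# HYPOTHETICAL follow-up: the engineer is indifferent when the sure amount is $4.
p_indifferent = implied_probability(4.0, 0.0, 8.0)

print(f"preference for the sure $5 implies p > {lower_bound:.3f}")
print(f"indifference at a sure $4 implies p = {p_indifferent:.3f}")
```

With a stated preference the calculation yields only a bound on p; a single value follows only once the rewards have been adjusted to the point of indifference.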

Subjective probabilities have proved (Howard [1966]) a useful way to transmit judgments within an organization where numerous individuals have to contribute to the components of a larger decision. For example, they can help a consulting engineer communicate the uncertainty in his estimate of a factor in his field specialty. A soils consultant, who has pulled together various kinds of data and experience to estimate that the mean shear strength of a particular soil layer is 400 lb/ft², might go one step further and state that he is “50 percent sure” that the strength is within ±10 percent of that estimate. The figure 10 percent might have been arrived at by varying that fraction until he was indifferent between lottery I, a coin flip offering $0 or $1, and lottery II, offering $1 if the true mean strength is found to be within ±10 percent of his estimate and $0 if it is outside those limits. By transmitting two numbers (a best estimate of 400 lb/ft² and a “probable error” of ±10 percent) rather than only a conservative estimate, say, 350 lb/ft², this engineering specialist will permit a balanced, economical design decision to be made by those supervising engineers who have gathered all such individual judgments together with the costs and the alternatives. This approach avoids the uneconomical compounding of “safety factors” which can arise when each opinion is transmitted simply as a conservative estimate. This is true whether or not a formal decision analysis is made.

We can afford no further discussion on the subject of trying to get probability assessments from individuals, but we refer the reader to other sources (e.g., Schlaifer [1959], Pratt, Raiffa, and Schlaifer [1965], Grayson [1960], and Howard [1966]) for additional discussion. Interesting questions remain concerning the “policing” of inconsistent probability statements and lottery choices, the influence of the hypothetical nature of the lotteries, and the extension of the procedures to small probability values.

Like the procedure for utility assignments, the assessment of judgment-based probabilities utilizes the decision maker’s preferences among simple, obvious lotteries to extract numbers which will become parts of the actual larger, more complicated decision problem. Many of the probabilities in a decision analysis will be determined by the methods developed in earlier chapters, that is, by manipulation of probabilities and random variables in a manner consistent with the rules of probability theory. Nonetheless, all applications rest fundamentally on an individual’s probability assignment, whether it is extracted by preference statements or implied in the adoption of a particular distribution.

In the following section we will put together the pieces of the decision problem and analyze the problem to determine the best decision consistent with the probability and utility assignments.

We have discussed how, in principle, the elements of the decision problem are assembled. These elements are the available actions, the possible states with their assigned probabilities (representing the information available to the decision maker), and the utilities associated with the action-state pairs (representing the decision maker’s preferences). The analysis of the problem determines the optimal action consistent with the individual’s probability and preference assignments.

The criterion of choice among actions is maximum expected utility. That this is a valid criterion has been guaranteed by the method of utility assignment. The analysis of the decision situation reduces then to calculation of expected utilities and selection of the action which shows the largest expected value.

Finding expected values is a familiar process by this time, but we include several examples to illustrate its application in decision analysis. We shall adopt the short-hand notation P[θi] to mean “the probability that the true state of nature is θi.” Notice in the examples that, if it is numerically valued, the state of nature θ will be treated as if it were a random variable. Since there is no opportunity for ambiguity in this chapter, we shall not, however, distinguish by upper and lower case between the random variable and its specific values.

Illustration: Pavement design, discrete-state space As a simple example we shall complete the analysis of the decision between two pavement types, Fig. 5.1.5. Uncertainty exists regarding the amount of aggregate in the available borrow pit. Assuming that it is sufficient to use just a pair of discrete states (θ1 = “large” volume of aggregate, say about 5000 yd³, and θ2 = “small” volume, about 4000 yd³), we shall use probability assignments extracted as in Sec. 5.1.3: 0.7 and 0.3, respectively. The engineer’s utility assignments for the action-state pairs have been assessed to be 60, 200, and 0 for u(a1), u(a2, θ1), and u(a2, θ2), respectively. The final tree with the numerical assignments indicated appears in Fig. 5.1.10. The expected value promised by choosing a particular action is indicated within a box at the right-hand end of the action branch.

For action a2 it is 140. This expected value can be interpreted, recall, as the certain gain equivalent in value (in the decision maker’s eyes) to the existing a2 “lottery.” In essence this is the “selling price” he would accept for his “ticket” in the lottery. Because 140 is greater than 60, the indication is that a2 is the choice which is consistent with the engineer’s assessment of the preferences and judgments (probabilities). It is important to realize that the value which will be received if a2 is taken will not be 140 but rather either 0 or 200.

Fig. 5.1.10 Pavement-type decision tree.

It is also important to recognize the distinction between a good decision and a good outcome. The analysis suggests that a2 is the better decision, but there is a possibility that the volume will turn out to be inadequate; that is, the outcome may be bad. Such an outcome does not imply that a bad decision was made. At the earlier time when the choice had to be made, the available information did not include the fact that the volume was inadequate. The available information at that time was summarized by the engineer as a probability of only 0.3 that volume was inadequate.

Notice, too, that even if the engineer had judged that the volume was “somewhat more likely than not” to be inadequate, say P[θ1] = 0.4 and P[θ2] = 0.6, action a2 would have remained the better decision; the conclusion is that a decision should not necessarily be based on the “most likely” state, without consideration of the consequences.
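The arithmetic of this illustration can be checked with a few lines of Python. This is a minimal sketch using the utilities and probabilities quoted above; the helper name `expected_utility` and the dictionary layout are illustrative choices, not part of the text.

```python
def expected_utility(probabilities, utilities):
    """Expected utility of one action: sum of P[state] * u(action, state)."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("state probabilities must sum to 1")
    return sum(p * u for p, u in zip(probabilities, utilities))


# Utilities from the illustration, listed for states (theta1, theta2):
# a1 yields 60 in either state; a2 yields 200 if the volume is large
# (theta1) and 0 if it is small (theta2).
utilities = {"a1": [60.0, 60.0], "a2": [200.0, 0.0]}

for label, probs in [("assessed", [0.7, 0.3]), ("less favorable", [0.4, 0.6])]:
    values = {a: expected_utility(probs, u) for a, u in utilities.items()}
    best = max(values, key=values.get)
    print(label, values, "-> choose", best)

# Probability of theta1 at which the two actions have equal expected utility.
break_even = utilities["a1"][0] / utilities["a2"][0]
print("a2 is preferred whenever P[theta1] exceeds", break_even)
```

With these utilities a2 remains the better action for any P[θ1] above 0.3, which is why the choice survives the change from 0.7 to 0.4.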

Illustration: Pavement design, continuous-state space The engineer may have preferred a more detailed model of the state space in the pavement-choice example, a space which permitted the possibility of any of a range of values. Assume, then, that the state space of aggregate available in the borrow pit is the positive line, 0 to ∞. Assume, too, that utility is linearly related to construction cost dollars. We shall assume arbitrarily a scale of u(–$100,000) = –100,000 and u($0) = 0. In other words, we shall use expected monetary value as a criterion. Pavement type II requires 4750 yd³ of aggregate. If this amount is not available in the borrow pit, the additional material, (4750 – θ) yd³, will have to be hauled a great distance at added cost. Assume, then, that the construction cost of type II is $95,000 if the pit volume exceeds 4750 yd³. But if the volume is less than 4750 yd³, the cost will be $95,000 plus $10 per imported cubic yard, or $95,000 + $10(4750 – θ). Pavement type I has a cost of $100,000. In short, the utility of choosing type I is –$100,000 and the utility of the type II choice is

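$$u(a_2, \theta) = \begin{cases} -95{,}000 & \theta \ge 4750 \\ -95{,}000 - 10(4750 - \theta) & \theta < 4750 \end{cases}$$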

Assume that the engineer’s probability assignment on the available volume θ is the CDF indicated in Fig. 5.1.9. The decision tree can be indicated as shown in Fig. 5.1.11. Since the discrete-state “branches” are no longer appropriate, the value of the tree as a visual aid is weakened when continuous variables are involved. It remains useful conceptually, however. The expected values associated with actions a1 and a2 are again shown in boxes.

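The determination of the expected value of a2 now requires integration:

$$E[u \mid a_2] = \int_0^\infty u(a_2, \theta)\, f(\theta)\, d\theta = -95{,}000 - 10 \int_0^{4750} (4750 - \theta)\, f(\theta)\, d\theta$$

in which f(θ) is the PDF of the available volume θ.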

This evaluation requires that a PDF be determined from the given CDF and that, analytically or numerically, the integration be accomplished. The approximate result is indicated in Fig. 5.1.11 to be –97,900. Action a2 is preferable. The continuous-state model may be more detailed than the discrete-state representation, but any model which is accurate enough to give the proper action choice is sufficient. In all further examples in this chapter we shall restrict our attention to discrete models.
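The integration can be sketched numerically. The Python sketch below is an illustration under a stated assumption: it joins the CDF points quoted in Sec. 5.1.3 by straight lines instead of the smooth curve of Fig. 5.1.9. Integration by parts turns the expected shortage E[max(4750 – θ, 0)] into the area under the CDF from 0 to 4750, so no PDF is needed. Because the interpolation differs from the smooth curve, the result (an expected shortage of 456.25 yd³ and an expected utility of about –99,560 for a2) does not match the approximate value read from the figure, although the ranking of the actions is the same. All names in the code are illustrative.

```python
# Points of the subjective CDF stated in Sec. 5.1.3 (volume in cubic yards).
# ASSUMPTION: straight lines between points, not the smooth curve of the figure.
CDF_POINTS = [(3000.0, 0.0), (3800.0, 0.25), (4750.0, 0.5),
              (5000.0, 0.75), (5250.0, 1.0)]

REQUIRED_VOLUME = 4750.0   # aggregate needed by pavement type II
BASE_COST = 95_000.0       # cost of type II when the pit suffices
HAUL_COST = 10.0           # dollars per imported cubic yard
COST_TYPE_I = 100_000.0


def expected_shortage(cdf_points, required):
    """E[max(required - theta, 0)] for a piecewise-linear CDF.

    Equals the integral of F(theta) from 0 to `required`; F is taken as 0
    below the first point and 1 above the last. Each linear segment is
    integrated exactly by the trapezoidal rule.
    """
    total = 0.0
    for (x0, f0), (x1, f1) in zip(cdf_points, cdf_points[1:]):
        if x0 >= required:
            break
        if x1 > required:  # truncate the segment at the required volume
            f1 = f0 + (f1 - f0) * (required - x0) / (x1 - x0)
            x1 = required
        total += 0.5 * (f0 + f1) * (x1 - x0)
    last_x = cdf_points[-1][0]
    if required > last_x:  # region where F = 1
        total += required - last_x
    return total


shortage = expected_shortage(CDF_POINTS, REQUIRED_VOLUME)
eu_a1 = -COST_TYPE_I
eu_a2 = -BASE_COST - HAUL_COST * shortage

print(f"expected shortage = {shortage:.2f} cubic yards")
print(f"E[u | a1] = {eu_a1:,.1f}   E[u | a2] = {eu_a2:,.1f}")
```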

Fig. 5.1.11 Continuous-state-space model of pavement-type decision.

Recall that the expected utility criterion is sufficient only to obtain a ranking of actions. It should not be concluded, after inspecting the expected values –$100,000 and –$97,900, that a2 is about 2 percent “better” than a1, only that a2 is better. Recall that assignment of a different, linearly transformed utility scale could yield a different proportion between the expected values, but never a different conclusion as to the better decision.

Illustration: John Day River bridge Two spans of a bridge over the John Day River near Rufus, Oregon, failed during the floods of December, 1964. The primary cause was “abnormal” flooding of the river. The foundation of the center pier was undermined by the unusually fast current, and two 200-ft spans collapsed. The bridge was 1 year old and had cost $2,500,000.

The original plans for the bridge called for this pier to bear on bedrock, but after the contractor had excavation difficulties, he was allowed to found the pier on compacted sand and gravel. The outcome was bad, but the decision may not have been. The highway department explained that the change was allowed because the bridge would eventually be in the reservoir formed by the John Day dam, and by then it would not be exposed to fast currents. The change speeded construction and saved $150,000 in construction cost. It is interesting to note that after the failure the decision was publicly called a “clear error in judgment.”

The foundation was “designed” for 37,000 cfs. The river reached 40,000 cfs during the flood. Three older bridges on the same river were undamaged even though some of their piers also bear on gravel; performance under a given “load” cannot be precisely predicted when such complex processes as scour and erosion are involved. The cost of reconstruction was estimated to be $765,000. †

This type of decision situation is very often encountered in civil-engineering practice. It is common to consider design changes when new information is obtained during construction. Reanalysis of this foundation-design decision provides an excellent opportunity to illustrate the use of statistical decision theory. All probability statements and conclusions that follow are entirely fictitious and are designed only to illustrate the methods.

The decision tree is shown in Fig. 5.1.12. The engineer must choose between action a1, requiring strict compliance with original plans, and action a2, accepting the contractor’s proposal to found the pier on sand and gravel at a saving of construction money and time.

Fig. 5.1.12 Decision tree: foundation design.

Based on historical records, the engineer assigns probabilities to the four possible discrete flood states that he thinks are sufficient to describe this aspect of the problem. These must be the probabilities of the largest flow in the time before the foundations are protected by the dam. The states selected are:

Their probabilities are independent of the action taken and are estimated semi-subjectively from available records of past flows (see, for example, Sec. 4.5.2):

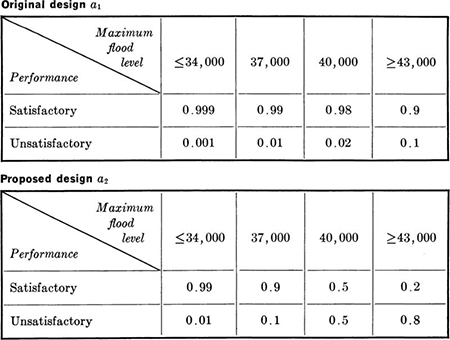

The conditional probabilities of successful or unsuccessful performance of the original design and the proposed design, given the maximum flood level, appear in Table 5.1.2 and in the decision tree. Note, for example, that the (fictitious) engineer has estimated that the probability of the original design’s failing under a peak flow of 37,000 cfs is about 10 percent of that of the revised design. The states are labeled θi1 and θi2 for flood state i and satisfactory or unsatisfactory performance, respectively. Such performance probabilities can only be subjective assignments reflecting the engineer’s judgment about the reliability of these particular designs under these particular conditions. Related experience and professional knowledge represent the only sources of information. Data cannot be obtained before the designs are completed.

Table 5.1.2 Conditional probabilities of performance given the design and the maximum flood level

The gains and losses in dollars are estimated as shown in the decision tree. The cost of poor performance, i.e., foundation and pier failure, will be taken as $765,000; the construction-cost savings associated with the proposed design are $150,000. Failure of a revised design has a net cost of $765,000 – $150,000 or $615,000. Assume that the engineer is making a decision to maximize the expected utility of the people of Oregon and hence that utility to the people and dollars can be assumed to be linearly related.

Analysis of the tree proceeds by finding the expected utilities or monetary values at each branching point. The right-hand-most expected values on the decision tree are the expected values given a particular action and flood level θi. For example, the top boxed value is:

The expected utilities for each action are found in turn by weighting the expected utilities given the flood level by the probabilities of these levels. For example:

The better decision is to choose the proposed revised design because E[u | a2] is greater than E[u | a1].

Several observations are in order. First, the problem could also have been represented by a simple tree with only one set of state branches, as shown in Fig. 5.1.13. There are eight such states, the combinations of four flood levels and two performance levels. Call them ![](), k = 1, 2, 3, . . ., 8. Note that each ![]() corresponds to an earlier θi1 or θi2, i = 1, 2, 3, 4. For each action, there is associated with each state its probability of occurrence. Each probability is the product of a probability of flood level and a conditional probability of performance level given that flood level. The products of these eight probabilities and the eight dollar values give the expected monetary value of an action. In symbols, the calculation is

Fig. 5.1.13 Alternate decision tree: foundation design.

The reader can quickly convince himself that this is the same number obtained above, where that computation can be shown as:

The equivalence of the two computations implies that the expected utilities in the original tree, Fig. 5.1.12, can be computed either by working to the left with sets of conditional expectations to obtain the expected utility of an action (as done) or by working to the right with sets of conditional probabilities to find the probability of each possible terminal point (and taking expectations only as a final step).
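The equivalence of the two routes through the tree can be illustrated with a short Python sketch. The flood-level probabilities and the conditional failure probabilities below are HYPOTHETICAL stand-ins chosen only to show the arithmetic; they are not the values of Table 5.1.2. The dollar values are those quoted in the text, with a zero payoff assumed for satisfactory performance of the original design. All function and variable names are illustrative.

```python
# HYPOTHETICAL probabilities, chosen only to illustrate the arithmetic.
flood_probs = [0.90, 0.06, 0.03, 0.01]      # P[flood level i], i = 1..4
p_fail = {                                  # P[unsatisfactory | action, level i]
    "a1": [0.000, 0.001, 0.010, 0.100],
    "a2": [0.000, 0.010, 0.100, 0.500],
}

# Dollar consequences from the text (utility assumed linear in dollars).
# ASSUMPTION: satisfactory performance of the original design is the zero point.
payoff = {
    "a1": {"ok": 0.0, "fail": -765_000.0},
    "a2": {"ok": 150_000.0, "fail": -615_000.0},
}


def rollback(action):
    """Work right to left: conditional expectation at each flood level first."""
    total = 0.0
    for p_flood, pf in zip(flood_probs, p_fail[action]):
        given_level = (1.0 - pf) * payoff[action]["ok"] + pf * payoff[action]["fail"]
        total += p_flood * given_level
    return total


def joint_state(action):
    """Work left to right: probability of each of the eight terminal states."""
    total = 0.0
    for p_flood, pf in zip(flood_probs, p_fail[action]):
        total += (p_flood * (1.0 - pf)) * payoff[action]["ok"]
        total += (p_flood * pf) * payoff[action]["fail"]
    return total


for action in ("a1", "a2"):
    print(f"{action}: roll-back = {rollback(action):,.0f}, "
          f"joint-state = {joint_state(action):,.0f}")
```

Both functions return the same expected monetary value for a given action, since they differ only in the order in which the products are summed.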

As a third alternative for the decision tree, the engineer might have preferred only two states, those prompted by observing that there is only a pair of utilities appearing in the larger decision trees (Figs. 5.1.12 or 5.1.13). These two states could be called “satisfactory performance” and “unsatisfactory performance.” Their probabilities could be found by adding the probabilities of the mutually exclusive events which make them up. For example, the probability of satisfactory performance is the sum of the probabilities of ![]() in Fig. 5.1.13. The purpose of mentioning this large number of alternative decision trees is simply to make the point that there is no single “proper” formulation. The engineer has freedom in how he chooses to view a problem, and the several possible views will lead to the same action.


A second major observation is that the decision may well have been altered had the engineer assessed his personal preferences in making the utility assignments. For illustration, let us simplify the decision tree to the elementary one shown in Fig. 5.1.14. (Note that the engineer has chosen now to assume that there is no probability of unsatisfactory performance with action a1.) The engineer responsible for the decision might recognize that the consequences to him of each action-state pair are quite complicated. Failure to accept the immediate, sure construction savings (i.e., choosing action a1) might brand him as inflexible and overconservative. If he takes this action, the possible poor performance that might have been (and was!) found under a2 could never take place, and the engineer’s conservatism (appropriate, after the fact) would never be appreciated. If the engineer takes action a2, either he will obtain the benefits for himself and the people which he represents (θ1) or the poor performance will be observed (θ2). In the latter case, the consequences to the engineer will depend in part upon the review of his decision which is likely to follow. If the flood which causes the failure is truly a large one, larger at least than any on record, the engineer may escape personal criticism even though financial losses are quite large.

Viewed in this light, the professional decision situation, like most, is quite personal in nature. The methodology of decision theory remains applicable, however. Only a preference statement on the part of the engineer is needed. Assign, arbitrarily, u(a2, θ1) = 10 and u(a2, θ2) = –10. Then, through interrogation, an indifference probability p* is sought. It is the value at which the engineer has no preference between (1) obtaining the consequences of a1 for sure and (2) being given a chance in a lottery where the consequences of a2 and θ1 will be found with probability p* and the consequences of a2 and θ2 will be his with probability 1 – p*. Then

$$u(a_1) = p^*\,u(a_2, \theta_1) + (1 - p^*)\,u(a_2, \theta_2)$$

Fig. 5.1.14 Simplified tree: bridge-foundation design.

Assume that the engineer’s preference statements imply that p* = 0.98. (That is, 1 – p* is about equal to the likelihood that the engineer can correctly name the top card on a deck of cards.) Then

$$u(a_1) = 0.98(10) + 0.02(-10) = 9.6$$

With these preferences the engineer should still take action a2, since

![]()

while

![]()
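The comparison can be sketched in Python. The utility scale (10 and –10) and p* = 0.98 are from the text; the two trial probabilities of satisfactory performance under a2 are HYPOTHETICAL and serve only to show where the choice changes. The function names are illustrative.

```python
def utility_of_sure_outcome(p_star, u_best, u_worst):
    """u(a1) implied by indifference between a1 and a (p*, 1 - p*) lottery."""
    return p_star * u_best + (1.0 - p_star) * u_worst


u_best, u_worst = 10.0, -10.0      # arbitrary scale: u(a2, theta1), u(a2, theta2)
u_a1 = utility_of_sure_outcome(0.98, u_best, u_worst)


def expected_utility_a2(p_satisfactory):
    """Expected utility of a2 given P[theta1] = p_satisfactory."""
    return p_satisfactory * u_best + (1.0 - p_satisfactory) * u_worst


# HYPOTHETICAL probabilities of satisfactory performance under a2.
for p in (0.97, 0.99):
    eu_a2 = expected_utility_a2(p)
    choice = "a2" if eu_a2 > u_a1 else "a1"
    print(f"P[theta1] = {p:.2f}: E[u | a2] = {eu_a2:.1f}, "
          f"u(a1) = {u_a1:.1f} -> choose {choice}")
```

Because both expressions are linear in the same two utilities, a2 is preferred exactly when the engineer's probability of satisfactory performance exceeds his indifference probability p*.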

As a final observation, notice that the dollar consequences of an action-state pair may also be uncertain. In this case, still another level of branches can be established to represent the various levels of costs associated with a given action and outcome. Conditional probability assignments must be made to each new branch. The first step in the analysis will be to calculate the rightmost expected value, which will now be the conditional expectation of the dollar consequences given the action and outcome. With this value the analysis proceeds exactly as above. In this sense the dollar estimates in the original tree (Fig. 5.1.12) can be thought of as expected dollar consequences given the action and outcome. Notice, however, that this interpretation is valid only if utility and dollars are linearly related for the decision maker. If not, the dollar values must all be converted to utilities before taking expected values.

Illustration: General capacity-demand problem The preceding problem can be considered to be a special case of a more general capacity-demand type of problem. The flow rate is a measure of demand; the resistance of the foundation to this flow is a measure of capacity. The relationship between capacity and demand levels determines the performance of the system and hence the payoff or consequences: capacity greater than demand defines a more-or-less satisfactory behavior; demand greater than capacity implies a system failure of some magnitude.

Both capacity and demand may be uncertain. Commonly the probabilities of demand levels are largely independent of the action or design, whereas the specified design level or action determines the most likely capacity as well as the dispersion about that value. Performance and consequences depend upon the action and the value of the two-dimensional state, demand and capacity. If for a given action, 10 discrete demand levels and 10 discrete capacity levels are considered, 100 combinations of levels exist and each must be considered.

As mentioned in the bridge-foundation-design illustration, it may be possible to simplify the decision-tree analysis by lumping many of the demand-capacity pairs into a smaller number of common performance levels. This is possible if the consequences are equivalent for each action-state pair in a particular performance level.

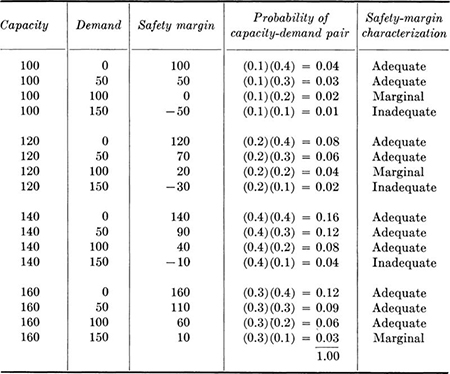

Table 5.1.3 Safety margin levels

For example, for a particular action, the decision maker may consider the four possible demand levels 0, 50, 100, and 150 and the four possible capacity levels 100, 120, 140, and 160. Assume that the probabilities of the demands are 0.4, 0.3, 0.2, and 0.1, respectively, whereas the probabilities of the capacities are 0.1, 0.2, 0.4, and 0.3. If performance can be related to simply the “safety margin” or the difference between capacity and demand, it is appropriate to calculate the possible values of the safety margin with their likelihoods. Assuming independence of demand and capacity, these values all appear in Table 5.1.3. The performance levels associated with each safety margin are shown. Negative values of the safety margin are termed “inadequate,” non-negative values less than 30 are called “marginal,” and larger values are considered “adequate.” In these terms the probabilities of the three performances are 0.07, 0.09, and 0.84 for the inadequate, marginal, and adequate safety margins. Analysis of the tree could continue based on only these three outcomes, with their respective probabilities and utility values.
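The lumping of demand-capacity pairs into performance levels can be reproduced with a short Python sketch. The demand and capacity levels, their probabilities, and the performance thresholds are those given above; the variable and function names are illustrative.

```python
from collections import defaultdict

demand = {0: 0.4, 50: 0.3, 100: 0.2, 150: 0.1}        # level: probability
capacity = {100: 0.1, 120: 0.2, 140: 0.4, 160: 0.3}   # level: probability


def performance(margin):
    """Classify a safety margin using the thresholds stated in the text."""
    if margin < 0:
        return "inadequate"
    if margin < 30:
        return "marginal"
    return "adequate"


margin_probs = defaultdict(float)   # distribution of the safety margin
level_probs = defaultdict(float)    # distribution of the performance level
for c, p_c in capacity.items():
    for d, p_d in demand.items():
        margin = c - d
        joint = p_c * p_d           # independence of demand and capacity
        margin_probs[margin] += joint
        level_probs[performance(margin)] += joint

for level in ("inadequate", "marginal", "adequate"):
    print(f"{level:>10}: {level_probs[level]:.2f}")
```

The 16 demand-capacity pairs collapse to three outcomes with probabilities 0.07, 0.09, and 0.84, in agreement with the values quoted above.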

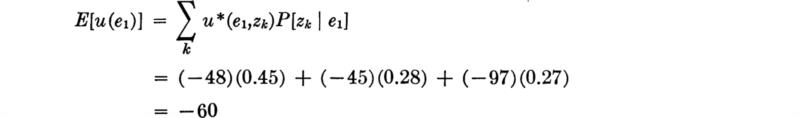

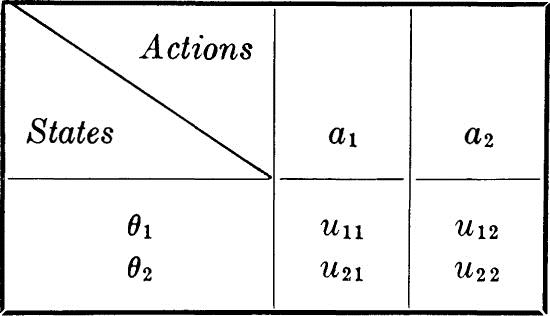

The simple decision model consists of a set of possible actions, a1, a2, . . ., an, and a set of possible states, θ1, θ2, . . ., θm. Decision theory prescribes how one should choose among the actions when the state is uncertain. The prescribed decision criterion is maximization of expected utility.

The criterion is appropriate if the decision maker’s preferences among the various possible action-state pairs are expressed in terms of a particular utility measure u(aj, θi) (or any positive linear transformation of that measure). The proper utility measure may or may not coincide with simple monetary units.

In decision analysis, probability assignments p(θi) are also interpreted with reference to the particular decision maker, and hence they are, in general, subjective quantities rather than, say, physical attributes of the uncertain states.

Analysis of the decisions involves only the computation of expected utilities E[u | aj] and choice of the action with the largest expected utility (or minimum expected loss).

In the previous section we discussed the analysis of a decision tree when the various probability assignments were available. In this section we consider terminal analysis, the analysis of the tree when new information about the states has become available. We shall learn in Sec. 5.2.1 to incorporate the new information with the old to yield new probability assignments. After this has been done, the decision-tree analysis is identical to that discussed in Sec. 5.1.

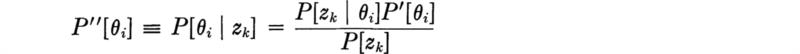

Our interest turns now to the problem of reassessment of the probabilities of state in the light of new information. No new ideas are involved; we wish only to reconsider some familiar ideas in the context of a decision situation. For clarity we denote our initial or prior probabilities as P'[θi] and our revised or posterior probabilities as Pʺ[θi]. (For notational simplicity we suppress possible dependence of these state probabilities on the action a.)

Calculation of posterior probabilities The prior probabilities of state are known. As the result of an experiment e, † we have observed new information, namely, an outcome zk in the space Z of all possible outcomes of that experiment. The problem is to combine this new information with the prior probabilities of the state P'[θi] to obtain posterior probabilities of state Pʺ[θi]. We do this through Bayes’ rule, Eq. (2.1.13). This rule, recall, is derived as follows. Consider the two alternative products which equal the probability of zk and θi:

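$$P[z_k \cap \theta_i] = P[z_k \mid \theta_i]\, P'[\theta_i] = P[\theta_i \mid z_k]\, P[z_k]$$

in which an obvious short-hand notation has been adopted, e.g., P[zk | θi] is the probability that the outcome of the experiment would be zk if θi is the true state of nature.

Solving for P[θi | zk] or Pʺ[θi],

$$P''[\theta_i] = P[\theta_i \mid z_k] = \frac{P[z_k \mid \theta_i]\, P'[\theta_i]}{P[z_k]}$$

Substituting for P[zk] in the denominator,

$$P''[\theta_i] = \frac{P[z_k \mid \theta_i]\, P'[\theta_i]}{\sum_j P[z_k \mid \theta_j]\, P'[\theta_j]}$$

Bayes’ rule states that the posterior probability of a state is the product of three factors:

$$P''[\theta_i] = (\text{normalizing factor}) \times (\text{sample likelihood}) \times (\text{prior probability})$$

in which

$$\text{normalizing factor} = \frac{1}{\sum_j P[z_k \mid \theta_j]\, P'[\theta_j]}, \qquad \text{sample likelihood} = P[z_k \mid \theta_i], \qquad \text{prior probability} = P'[\theta_i]$$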

The normalizing factor simply ensures that the Pʺ[θi] form a proper set of probabilities. The mixing of new information and old appears through the product of the sample likelihood, P[zk | θi], and the prior probability P'[θi]. The sample likelihood is the probability of obtaining the observed sample as a function of the true state of nature θi. Or (as discussed in Sec. 4.1.4) the sample likelihoods can also be interpreted as the relative likelihoods of the various states given the observation zk. If this likelihood is relatively higher for θi than other states, the posterior probability of this state will be increased over its prior probability. If θi is a relatively unlikely state to be associated with observation zk, Pʺ[θi] will reflect this by being smaller than P'[θi]. If either the sample likelihood or the prior is sharply defined, strongly favoring one or a small group of states, the subsequent posterior probabilities will be predominantly influenced by this function (unless the other is also strongly peaked). (This notion is demonstrated in Chap. 6.)

Once the posterior probabilities have been computed, the decision analysis proceeds exactly as in Sec. 5.1.
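The updating step can be sketched in Python. The function below is a direct transcription of Bayes' rule for discrete states; the function name and the two-state numbers in the usage lines are HYPOTHETICAL and are not taken from any example in the text.

```python
def posterior(prior, likelihood):
    """Bayes' rule for discrete states.

    prior[i]      = P'[theta_i]
    likelihood[i] = P[z_k | theta_i] for the observed outcome z_k
    Returns the list of posterior probabilities P''[theta_i].
    """
    joint = [lk * pr for lk, pr in zip(likelihood, prior)]
    p_z = sum(joint)   # P[z_k]; its reciprocal is the normalizing factor
    if p_z == 0.0:
        raise ValueError("the observed outcome has zero probability under the prior")
    return [j / p_z for j in joint]


# HYPOTHETICAL two-state example: the observation is far more likely
# under the second state than under the first.
prior = [0.7, 0.3]
likelihood = [0.1, 0.6]
post = posterior(prior, likelihood)
print([round(p, 3) for p in post])   # prints [0.28, 0.72]
```

Here the observation reverses the prior ranking of the two states, because the likelihood ratio of 6 to 1 outweighs the prior odds of 7 to 3.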

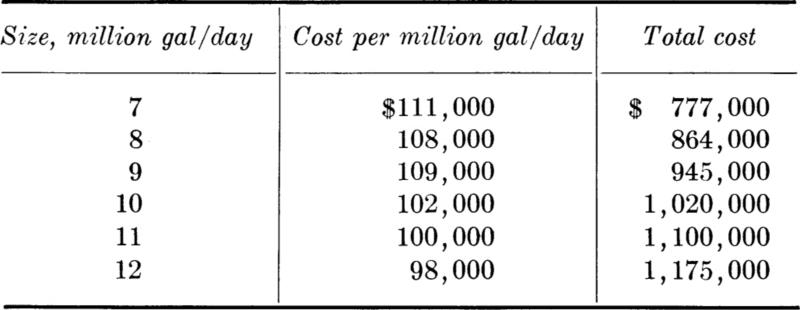

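A simple example We reconsider the pile decision discussed in Sec. 5.1. A construction engineer must choose a length of steel section to be driven to a firm layer below. The decision elements of that problem are as follows. The engineer has a choice between two actions:

a0: select the shorter (40-ft) steel section

a1: select the longer (50-ft) steel section

The possible states of nature are assumed to be just two:

θ0: the depth to the firm stratum is 40 ft

θ1: the depth to the firm stratum is 50 ft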

The utilities † are shown in Table 5.1.1 and in the decision tree shown in Fig. 5.2.1.

Fig. 5.2.1 Pile-selection problem.

The prior probabilities of state, representing the engineer’s assessment of such information as large-scale geological maps, depths of piles driven several hundred feet away, etc., are assumed to be

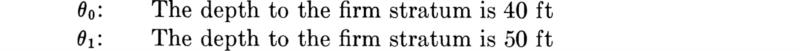

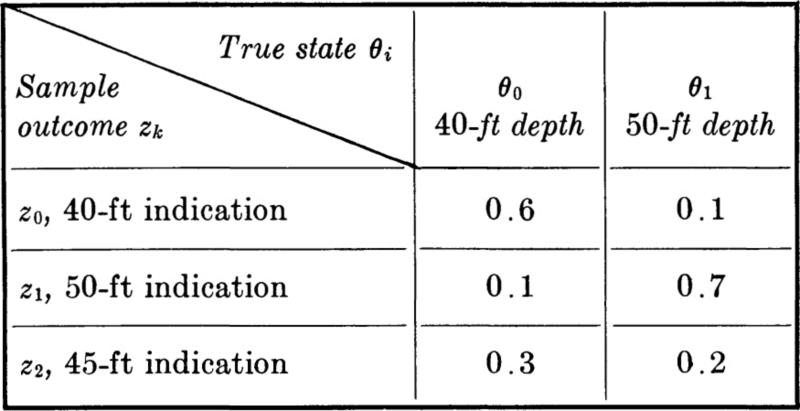

The engineer plans, as the experiment or source of information, to use a simple sonic test to give a depth indication. An instrument records the time required for the sound wave created by a hammer blow on the surface to travel to the firm stratum and return. Owing to soil irregularities, measuring errors, etc., the indicated depth Z is not a wholly reliable estimate of the true depth. Assume that the sample space of possible experimental outcomes is discrete with only three values, 40, 45, and 50 ft. Two of the possible outcomes might be said to favor a particular state of nature, while the third possible outcome, 45 ft, is ambiguous. The engineer summarizes the test’s properties in a table of sample likelihoods or conditional probabilities of observing particular test outcomes given each of the states of nature.

Sample likelihoods P[zk | θi]

In words, the right-hand column of the table states that if the true depth is 50 ft, the engineer believes that one of the three indications (40, 45, or 50 ft) will result, with the probabilities shown. Given this true depth and the local conditions, the instrument is 70 percent reliable; it has a 10 percent chance of being flatly wrong (by indicating 40 ft); and it will give an ambiguous 45-ft reading with probability 20 percent. The total is, of course, unity. Comparing the two columns, the instrument is apparently somewhat more likely to give a too-deep indication than a too-shallow indication. These sample likelihoods may be in part subjective, since in addition to the manufacturer’s calibration tests and stated “tolerances,” they may depend on the engineer’s judgment, based on his own previous experience with similar tests on a number of different soil types and depths using the same instrument.

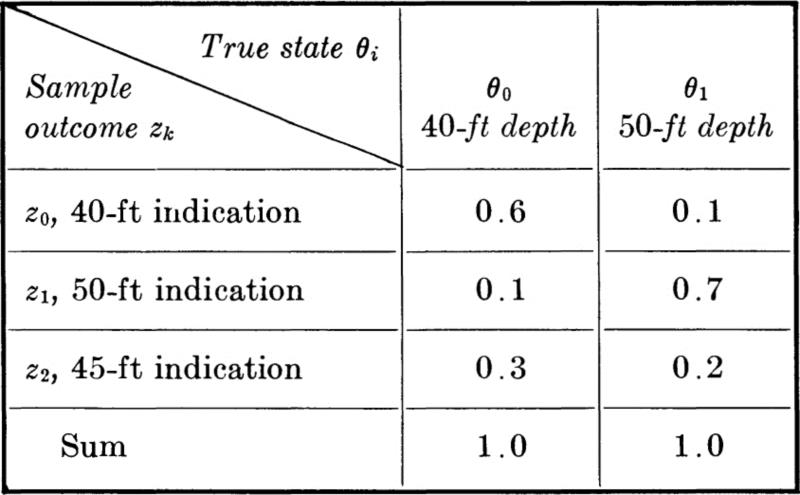

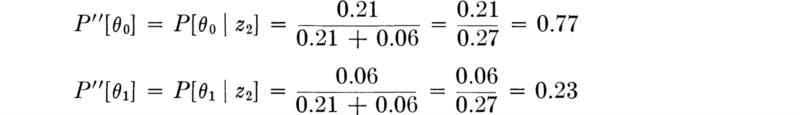

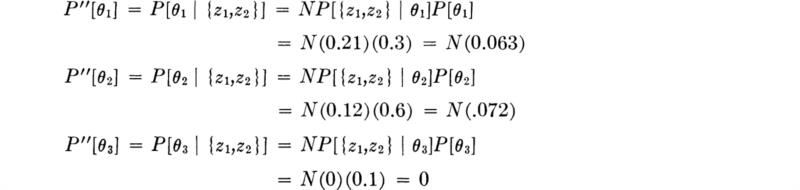

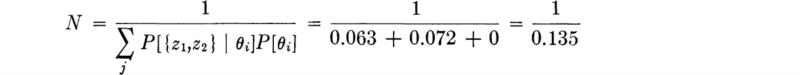

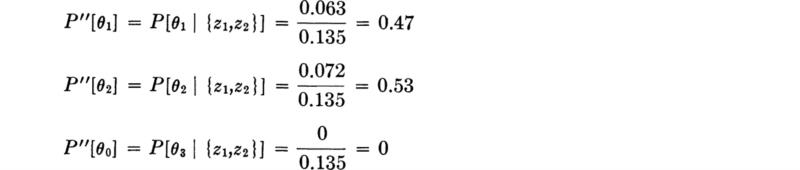

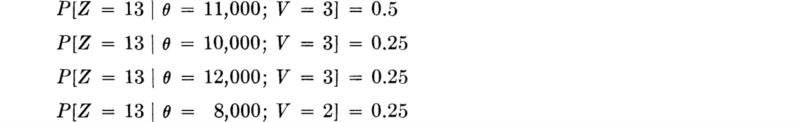

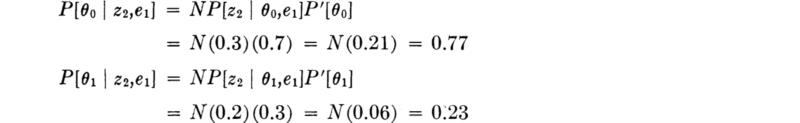

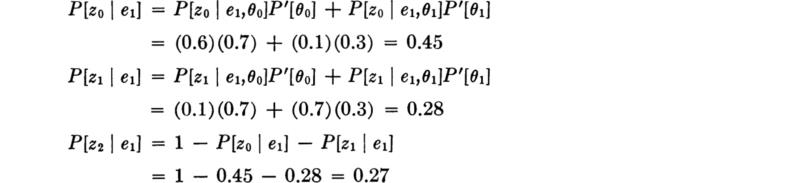

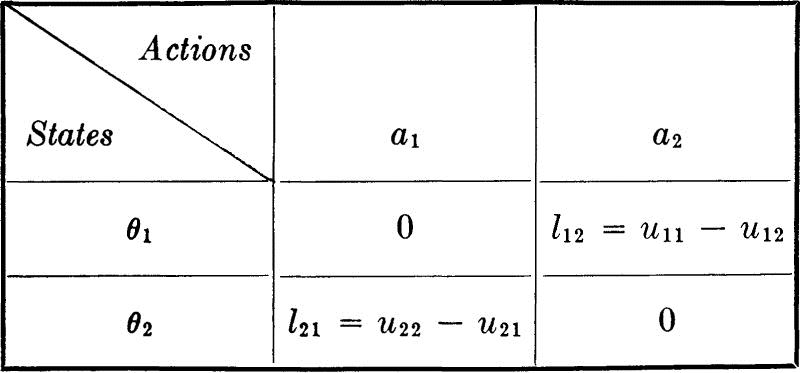

The engineer makes the test and the instrument indicates 45 ft; i.e., z2 is observed. The posterior probabilities of state, given the observation of a 45-ft depth indication, are [Eq. (5.2.1)]:

The true state is either θ0 or θ1; therefore the sum of the posterior probabilities must equal unity. Normalizing these posterior likelihoods by their sum,

Notice that the ambiguous result has, in fact, altered the probabilities of state, owing to the asymmetry of the “error” probabilities, 0.3 versus 0.2.
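The effect of the “ambiguous” 45-ft reading can be checked numerically. In the sketch below the likelihoods 0.3 and 0.2 are the error probabilities quoted in the text; the prior probabilities (0.7 and 0.3) are assumed purely for illustration, since the section’s own values are not reproduced in the text here, and the function name `update` is hypothetical.

```python
def update(prior, likelihood):
    """Normalize likelihood-times-prior products so that they sum to unity."""
    products = [lik * p for lik, p in zip(likelihood, prior)]
    total = sum(products)
    return [x / total for x in products]


ASSUMED_PRIOR = [0.7, 0.3]  # hypothetical P'[theta_0], P'[theta_1]
READING_45_FT = [0.3, 0.2]  # P[z_2 | theta_0], P[z_2 | theta_1] as quoted in the text

if __name__ == "__main__":
    # Asymmetric likelihoods shift probability toward theta_0.
    print(update(ASSUMED_PRIOR, READING_45_FT))
    # A truly uninformative reading (equal likelihoods) returns the prior unchanged.
    print(update(ASSUMED_PRIOR, [0.25, 0.25]))
```

The second call illustrates the converse point: only when the two likelihoods are equal does the observation leave the state probabilities unaltered.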

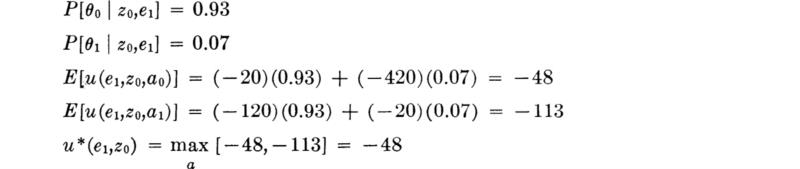

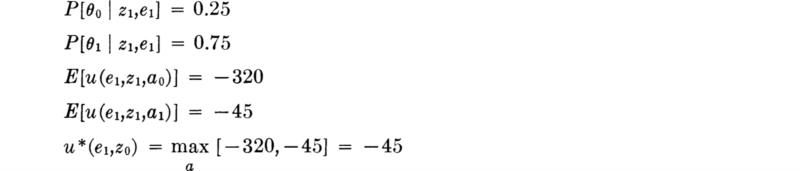

The decision analysis uses these posterior probabilities of state as indicated in Fig. 5.2.1. The expected utility of each action is found as the sum of products of utilities and posterior probabilities. (In this problem, state probabilities are independent of the action.) The better decision is a1, i.e., the longer pile. Although the engineer believes that the true state is more likely to be θ0, the shallower one, his prior information and the ambiguous sonic test result do not give sufficient confidence to offset the relatively large loss (−400) that he will absorb if the action appropriate to θ0 is taken and θ1 is found to be the true state.

Note, in passing, that if the engineer must drive a number of piles in a close area, the choice of piles, after driving the first, can be made without risk since the true state will have been determined. In decision terms, by driving one pile the engineer will have carried out a perfectly reliable experiment for which the conditional probabilities are all zero except two:

$$
P[z = 40\ \text{ft} \mid \theta_0] = 1 \qquad P[z = 50\ \text{ft} \mid \theta_1] = 1
$$

The implication is that Bayes’ rule will lead to a posterior probability of zero for one state and unity for the other, depending on whether the first pile hits a firm stratum at 40 or 50 ft. A more detailed, but similar, illustration appears in Sec. 5.3.1.

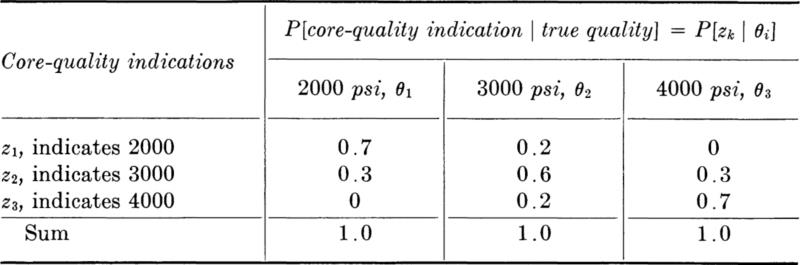

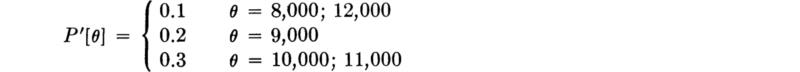

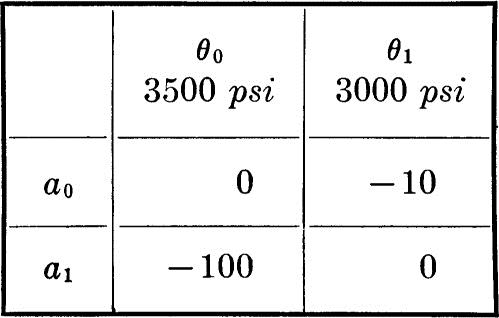

Illustration: Remodeling decision with imperfect testing In an illustration of Sec. 2.1 an engineer is concerned with the quality of concrete in an old building which is being considered for a new purpose. The concrete qualities are classed as 2000 psi (θ1), 3000 psi (θ2), or 4000 psi (θ3). The engineer assigns the states prior probabilities of 0.3, 0.6, and 0.1, respectively. His available actions are either to report that the floor slabs of this concrete should be replaced (a1), to restrict the use of the rooms they support to a light office category (a2), or to give permission that the rooms be used for all office-use types including file rooms (a3). The performance of any slab will depend upon its concrete strength, plus a number of other random factors such as the loads to which it is subjected.

The engineer summarizes these factors in the decision tree (Fig. 5.2.2) (perhaps after an analysis of additional branches representing load levels, etc., given each concrete state and action pair).

To better his information he has two cores taken and tested. The reliability of any one of these core tests in defining in-place concrete quality is defined by the table of sample likelihoods or conditional probabilities:

Fig. 5.2.2 Remodeling decision.

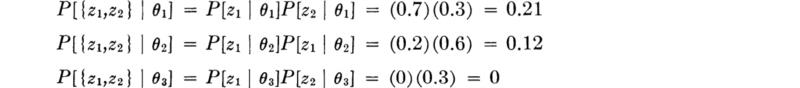

The two cores are taken, one indicating 2000, one indicating 3000; that is, the outcome is {z1,z2}. As discussed in detail in Sec. 2.1, we can find the posterior probabilities of state in either of two ways: (1) by first finding the conditional probabilities of the {z1,z2} outcome given the various possible states and then finding the posterior probabilities of state; or (2) by first finding the posterior probabilities of the states given the first outcome z1 and then, using these posterior probabilities as priors, finding the posterior probabilities given the second outcome z2. In either case, the results are the same. With the former approach, we find first (assuming independence of the samples) the conditional probabilities or sample likelihoods.

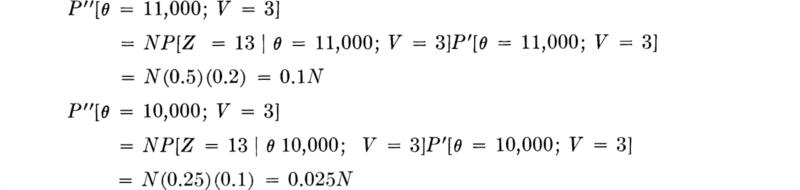

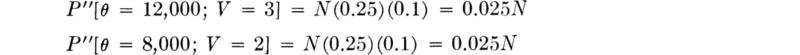

Then the posterior probabilities are

in which N is the normalizing factor needed to make these posterior probabilities sum to 1.

The core outcomes have eliminated the possibility that the concrete is of high strength, and they suggest that the lower-strength state is more likely than the engineer had originally judged.
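The equivalence of the two routes to the posterior (joint likelihood of both cores versus updating one core at a time) can be verified with a short sketch. The prior probabilities 0.3, 0.6, and 0.1 are those stated in the text; the likelihood table `LIKELIHOOD` below is hypothetical, invented only so that the example runs, and the function names are likewise assumptions.

```python
PRIOR = {"2000": 0.3, "3000": 0.6, "4000": 0.1}  # priors stated in the text

# Hypothetical single-core likelihoods P[z | theta]; each row sums to 1.
LIKELIHOOD = {
    "2000": {"z1": 0.7, "z2": 0.3, "z3": 0.0},
    "3000": {"z1": 0.2, "z2": 0.6, "z3": 0.2},
    "4000": {"z1": 0.0, "z2": 0.3, "z3": 0.7},
}


def update(prior, outcome):
    """One Bayes update for a single core outcome."""
    products = {s: LIKELIHOOD[s][outcome] * p for s, p in prior.items()}
    total = sum(products.values())
    return {s: v / total for s, v in products.items()}


def update_joint(prior, outcomes):
    """Bayes update using the joint likelihood of independent core outcomes.

    The combinatorial factor for the order of the outcomes is the same for
    every state, so it cancels in the normalization and is omitted.
    """
    products = {}
    for s, p in prior.items():
        joint = 1.0
        for z in outcomes:
            joint *= LIKELIHOOD[s][z]
        products[s] = joint * p
    total = sum(products.values())
    return {s: v / total for s, v in products.items()}


if __name__ == "__main__":
    print(update_joint(PRIOR, ["z1", "z2"]))     # approach (1)
    print(update(update(PRIOR, "z1"), "z2"))     # approach (2)
```

Under the assumed table, a zero likelihood for the high-strength state under outcome z1 is what drives its posterior probability to zero.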

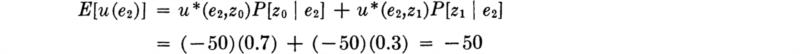

Using these probabilities in the decision tree, Fig. 5.2.2, the best decision seems to be a2, limiting the use of rooms.

In fact the engineer might reconsider his position, which now finds the concrete quality quite uncertain. He may also recognize that he can, at some expense, carry out further experimentation to gain further information. In this case, he might want to order another or several more cores or even a load test. In Sec. 5.3, we shall treat such questions as, “When should he postpone the decision and pay for more information?” and, “Which experiment should he pay for?”

Illustration: A traffic-sampling problem In a study of the dispersion of traffic throughout a city, one car in every r cars entering the city between 8 and 9 A.M. via a certain route, route A, was stopped and small, easily visible stickers were placed on its bumpers. During the day various points in the city were monitored by men who counted the total number of marked autos passing certain points during a certain time interval. A decision as to whether to restrict a particular street to one-way traffic is going to be based on the total number of cars that enter the city via route A and then use this street during the time interval. The performance of the total traffic system will be improved if the change is made and this number is large. If the decision to restrict the street is made, but the traffic on it from route A is now small, the inconvenience to others will outweigh the gain for route A commuters.

The engineer summarizes these potential consequences in a utility assignment of

$$
u(a_1, \theta) = -c_1 + b_1\theta + b_2\theta^2
$$

in which a1 is the action to restrict the street to one-way flow. The action will incur cost c1 to others, but it will bring benefits to route A users which the engineer assumes are an increasing function of θ, the total number of cars from route A using the street during the time interval (on the day of the study, for simplicity). To take action a2 is to leave the street as is, with (relative) utility of zero:

$$
u(a_2, \theta) = 0
$$

We need information about the state of nature θ. The engineer summarizes the information contained in a number of related, but less specific, past traffic surveys and traffic-assignment analyses by assigning θ a prior distribution, P'[θ], θ = 0, 1, 2, ... . His decision will be determined by an expected utility criterion based on the posterior distribution of θ.

The results of the day’s survey are an observation that z0 cars with stickers used the street during the specified time interval. What is the posterior distribution of θ?

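We ask first for the sample likelihood P[Z = z | θ], in which Z is the random number of marked route A cars using the street. The probability that any one of the θ route A cars using the street has a sticker is 1/r. Assuming independence of cars, Z, the total number of marked cars in the total of θ cars, has a binomial distribution:

$$
P[Z = z \mid \theta] = \binom{\theta}{z}\left(\frac{1}{r}\right)^{z}\left(1 - \frac{1}{r}\right)^{\theta - z} \qquad z = 0, 1, \ldots, \theta
$$

Thus, given the observation that Z = z0, the likelihood function, a function of θ, is

$$
L(\theta \mid z_0) =
\begin{cases}
0 & \theta < z_0 \\[4pt]
\dbinom{\theta}{z_0}\left(\dfrac{1}{r}\right)^{z_0}\left(1 - \dfrac{1}{r}\right)^{\theta - z_0} & \theta \ge z_0
\end{cases}
\tag{5.2.6}
$$

since any value of θ equal to or larger than z0 could lead to the observation of Z = z0. As a function now of θ, the likelihood function gives the sample-implied relative likelihoods of the various possible values of the state θ. It should be emphasized that in this case the sample likelihoods were derived from a theoretical analysis of the experiment, not from purely subjective assignments.

The posterior distribution of θ is thus

$$
P''[\theta] = N\,L(\theta \mid z_0)\,P'[\theta] =
\begin{cases}
0 & \theta < z_0 \\[4pt]
N \dbinom{\theta}{z_0}\left(\dfrac{1}{r}\right)^{z_0}\left(1 - \dfrac{1}{r}\right)^{\theta - z_0} P'[\theta] & \theta \ge z_0
\end{cases}
\tag{5.2.7}
$$

The interesting part (θ ≥ z0) can be rewritten (for r > 1, absorbing all factors that do not depend on θ into the constant)

$$
P''[\theta] = N'\,\frac{\theta!}{(\theta - z_0)!}\left(1 - \frac{1}{r}\right)^{\theta} P'[\theta] \qquad \theta \ge z_0
$$

in which the new normalizing constant is

$$
N' = \left[\sum_{\theta = z_0}^{\infty} \frac{\theta!}{(\theta - z_0)!}\left(1 - \frac{1}{r}\right)^{\theta} P'[\theta]\right]^{-1}
$$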
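The binomial sample likelihood and the resulting posterior distribution are easy to evaluate numerically. The following Python sketch assumes the binomial model described above, with marking probability 1/r; the function names and the uniform prior used in the demonstration are hypothetical.

```python
from math import comb


def likelihood(theta, z0, r):
    """P[Z = z0 | theta]: binomial probability of z0 marked cars among theta."""
    if theta < z0:
        return 0.0  # fewer cars than stickers observed is impossible
    p = 1.0 / r
    return comb(theta, z0) * p**z0 * (1.0 - p) ** (theta - z0)


def posterior(prior, z0, r):
    """Posterior P''[theta] from a prior given as a dict {theta: P'[theta]}."""
    products = {t: likelihood(t, z0, r) * p for t, p in prior.items()}
    total = sum(products.values())
    if total == 0.0:
        raise ValueError("the prior excludes every theta consistent with z0")
    return {t: v / total for t, v in products.items()}


if __name__ == "__main__":
    # Hypothetical prior: uniform over theta = 40, ..., 100.
    thetas = range(40, 101)
    uniform = {t: 1.0 / len(thetas) for t in thetas}
    post = posterior(uniform, z0=32, r=2)
    print(max(post, key=post.get))  # most probable theta under this prior
```

Normalizing by the sum of the products, as done here, makes it unnecessary to cancel the θ-independent factors by hand.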

It should be expected that the larger the proportion of cars that are stopped for stickers, the “better” the survey. In the limit, if every car is stopped, r = 1, the information on θ is perfect. The likelihood function [Eq. (5.2.6)] becomes simply

$$
L(\theta \mid z_0) =
\begin{cases}
1 & \theta = z_0 \\
0 & \theta \ne z_0
\end{cases}
$$

in which z0 might equal, say, 64 cars. In this case Eq. (5.2.7) becomes

$$
P''[\theta] =
\begin{cases}
1 & \theta = 64 \\
0 & \theta \ne 64
\end{cases}
$$

This result is true no matter what the engineer’s prior distribution is (as long as that distribution does not specifically exclude the possibility of θ = 64, that is, as long as P'[64] > 0). The expected utilities of the actions are

$$
E[u(a_1)] = -c_1 + 64\,b_1 + 4096\,b_2 \qquad E[u(a_2)] = 0
$$

implying that a1 is the better decision only if the cost of the change c1 is less than 64b1 + 4096b2.
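The same comparison can be made for any posterior distribution, not only the degenerate one. The sketch below assumes that the utility of a1 is quadratic in θ, u(a1, θ) = −c1 + b1θ + b2θ², which is the form suggested by the threshold 64b1 + 4096b2 quoted above; the coefficient values and function names are hypothetical.

```python
def expected_utility_a1(posterior, c1, b1, b2):
    """E[u(a1)] under the assumed utility u(a1, theta) = -c1 + b1*theta + b2*theta**2."""
    return sum(p * (-c1 + b1 * t + b2 * t * t) for t, p in posterior.items())


def best_action(posterior, c1, b1, b2):
    """Choose a1 only if its expected utility exceeds that of a2, which is zero."""
    return "a1" if expected_utility_a1(posterior, c1, b1, b2) > 0.0 else "a2"


if __name__ == "__main__":
    perfect_information = {64: 1.0}  # every car stopped and 64 observed
    # Hypothetical coefficients: 64*b1 + 4096*b2 = 64 + 40.96 = 104.96
    print(best_action(perfect_information, c1=100.0, b1=1.0, b2=0.01))
    print(best_action(perfect_information, c1=110.0, b1=1.0, b2=0.01))
```

With the assumed coefficients the decision switches from a1 to a2 as c1 crosses 104.96, in agreement with the stated condition.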

The cost of a survey that stops every car may be high. Had the engineer decided to stop only every other car, the sample likelihood function would, of course, not be concentrated solely at θ = z0. If every other car were stopped (r = 2) and the number of marked cars observed were z0 = 32, the sample-likelihood function [Eq. (5.2.6)] would appear as shown in Fig. 5.2.3. Combined with the prior distribution [through Eq. (5.2.8)], this would yield the posterior distribution Pʺ[θ] and finally E[u(a1)]. The shapes of two posterior distributions are shown in Fig. 5.2.4. In both cases the engineer’s prior distribution was triangular, either increasing from θ = 50 to θ = 90 (Fig. 5.2.4a) or decreasing from θ = 50 to θ = 90 (Fig. 5.2.4b). Compare these with the likelihood function, Fig. 5.2.3. Even for these diverse prior shapes, the posterior distribution and hence the decision are still influenced predominantly by the shape of the sample-likelihood function, that is, by the information in the experimental results.

Fig. 5.2.3 Selected values of the sample-likelihood function given an observation of 32 marked cars; every second car marked.

Fig. 5.2.4 Posterior distributions based on every second car marked.

If an even smaller proportion of cars were stopped and marked, say one in four, and 16 were observed† on the street, the likelihood function becomes much more diffuse (Fig. 5.2.5), implying that it contains less information about the value of θ. In this case the engineer’s prior information will be of more relative significance. Figure 5.2.6, parts a and b, shows posterior distributions based on the two triangular prior distributions mentioned above. Clearly, these prior distributions have a strong influence on the final shape, and hence potentially on the decision.

The trend is clear. If only every eighth car is marked, we know that unless the value of Z observed is very large or very small, the information to be gained will be of such small value relative to the engineer’s triangular prior distribution as to have little influence on the posterior distribution of θ or the decision. This conclusion is influenced, of course, by the shape of the prior distribution.
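The growing influence of the prior as fewer cars are marked can be quantified roughly by comparing posterior means under the two triangular priors. The sketch below is one possible measure, not the text’s own; it assumes discrete triangular priors proportional to (θ − 50) or (90 − θ) on θ = 50, ..., 90, and all function names are hypothetical.

```python
from math import comb


def likelihood(theta, z0, r):
    """Binomial probability of z0 marked cars among theta, marking rate 1/r."""
    if theta < z0:
        return 0.0
    p = 1.0 / r
    return comb(theta, z0) * p**z0 * (1.0 - p) ** (theta - z0)


def posterior(prior, z0, r):
    """Posterior over theta from a prior dict {theta: probability}."""
    products = {t: likelihood(t, z0, r) * p for t, p in prior.items()}
    total = sum(products.values())
    return {t: v / total for t, v in products.items()}


def triangular_prior(lo, hi, increasing):
    """Assumed discrete triangular prior on lo..hi (linear weights, normalized)."""
    weights = {t: float(t - lo if increasing else hi - t) for t in range(lo, hi + 1)}
    total = sum(weights.values())
    return {t: w / total for t, w in weights.items()}


def mean(dist):
    return sum(t * p for t, p in dist.items())


def prior_sensitivity(z0, r, lo=50, hi=90):
    """Difference in posterior means between the increasing and decreasing priors."""
    up = posterior(triangular_prior(lo, hi, True), z0, r)
    down = posterior(triangular_prior(lo, hi, False), z0, r)
    return mean(up) - mean(down)


if __name__ == "__main__":
    for z0, r in [(32, 2), (16, 4), (8, 8)]:
        print(r, prior_sensitivity(z0, r))
```

A larger difference indicates that the choice of prior matters more, i.e., that the survey carries less information about θ.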

Fig. 5.2.5 Selected values of the sample-likelihood function given an observation of 16 marked cars; every fourth car marked.

In Sec. 5.3 we shall investigate the method by which the engineer here could have planned whether to mark every car, every second car, or every rth car, or whether even to dispense with the new survey entirely and base his decision on his prior distribution. We should anticipate that the decision to experiment and the level of experimentation will depend on the nature of the engineer’s prior distribution, the costs of various experiments, and the quality of information we expect to gain from them.

Illustration: A flow-measurement problem In this example we shall illustrate several factors commonly appearing in engineering problems. These include the use of inexact, empirical functional relationships, the observation of an easily measured variable to help predict the state of interest, and the presence of joint state variables, one or more of which may not be of direct interest, but whose influence must be accounted for.

In the decision as to the amount of pollutant that can be safely released into a river in any day, the flow rate θ for that day must be estimated. This flow rate is the integrated effect of the distribution of the downstream component of the velocity across the cross-sectional area of the channel on that day. Owing to the difficulty and expense of making all the velocity and depth measurements necessary to calculate θ, however, it is predicted using only an easily measured depth Z at a convenient reference point.

Fig. 5.2.6 Posterior distributions based on every fourth car marked.

To “calibrate” the measuring procedure, the river was carefully surveyed a number of times under different flow conditions to measure θ, the total flow rate. At the same time the reference depth Z was observed and a reference, midstream, velocity V was measured. A regression analysis (Sec. 4.3) of the observations of θ/V versus Z led to the conclusion that a straight line gave a reasonable fit in the region of interest, but the scatter of the observations about this line was significant. †