In the application of probability theory a number of models arise time and time again. In many, diverse, real situations, engineers will often make assumptions about their physical problems which lead to analogous descriptions and hence to mathematically identical forms of the model. Only the numbers, for example, the values of the parameters of a model, and their interpretations differ from application to application. The random variables of interest in these situations have distributions which can be derived and studied independently of the specific application. These distributions have become so common that they have been given proper names and have been tabulated to facilitate their use.

The familiarity of these distributions in fact leads to their frequent adoption simply for reasons of computational expediency. Even though there may exist no argument (no model of the underlying physical mechanism) suggesting that a particular distribution is appropriate, it is often convenient to have a simple mathematical function to describe a variable. The distributions studied in this chapter are the ones most commonly adopted for this empirical use by the engineer. This is simply because they are well known, well tabulated, and easily worked with. In such situations the choice among common distributions is usually based on a comparison between the shape of a histogram of data and the shape of a PDF or PMF of the mathematical distribution. Examples will appear in this chapter, and the technique of comparison will be more fully discussed in Sec. 4.5. †

Such an empirical path is often the only one available to the engineer, but it should be remembered that in these cases the subsequent conclusions, being based on the adopted distribution, are usually dependent to a greater or lesser degree upon its properties. In order to make the conclusions as accurate and as useful as possible, and in order to justify, when necessary, extrapolation beyond available data, it is preferable that the choice of distribution be based whenever possible on an understanding of how the physical situation might have given rise to the distribution. For this reason this chapter is written to give the reader not simply a catalog of common distributions but rather an understanding of at least one mechanism by which each distribution might arise. To the engineer, the existence of such mechanisms, which may describe a physical situation of interest to him, is fundamentally a far more important reason for gaining familiarity with a particular common distribution than, say, the fact that it is a good mathematical approximation of some other distribution, or that it is widely tabulated.

From the pedagogical point of view this chapter has the additional purpose of reinforcing all the basic theory presented in Chap. 2. After the statement of the underlying mechanism, the derivation of the various distributions involved is only an application of those basic principles. In particular, derived distribution techniques will be applied frequently in this chapter.

Perhaps the single most common basic situation is that where the outcomes of experiments can be separated into two exclusive categories— for example, satisfactory or not, high or low, too fast or not, within specifications or not, etc. The following distributions arise from random variables of interest in this situation.

We are interested in the simplest kind of experiment, one where the outcomes can be called either a “success” or a “failure”; that is, only two (mutually exclusive, collectively exhaustive) events are possible outcomes. Examples include testing a material specimen to see if it meets specifications, observing a vehicle at an intersection to see if it makes a left turn or not, or attempting to load a member to its predicted ultimate capacity and recording its ability or lack of ability to carry the load.

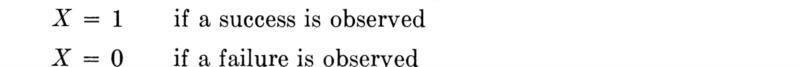

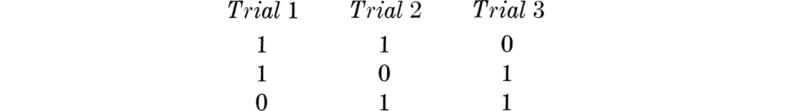

Although at the moment it may hardly seem necessary, one can define a random variable on the experiment so that the variable X takes on one of two numbers—one associated with success, the other with failure. The value of this approach will be demonstrated later. We define, then, the “Bernoulli” random variable X and assign it values. For example,

The choice of values 1 and 0 is arbitrary but useful. Clearly, the PMF of X is simply

where p is the probability of success. The random variable has mean

and variance

As mentioned, another choice of values other than 0,1 might have been made, but this particular choice yields a random variable with an expectation equal to the probability of success p.

Thus, if one has a large stock of items, say high-strength bolts, and he proposes to select and inspect one to determine whether it meets specifications, the mean value of the Bernoulli random variable in such an experiment (with a “success” defined as finding an unsatisfactory bolt) is p, the proportion of defective bolts, † This direct correspondence between mX and p will prove useful when in Chap. 4 we discuss methods for estimating the mean value of random variables. Notice that the variance of X is a maximum when p is ½.

A sequence of simple Bernoulli experiments, when the outcomes of the experiments are mutually independent and when the probability of success remains unchanged, are called Bernoulli trials. One might be interested, for example, in observing five successive cars, each of which turns left with probability p; under the assumption that one driver’s decision to turn is not affected by the actions of the other four drivers, the set of five cars’ turning behaviors represents a sequence of Bernoulli trials. From a batch of concrete one extracts three test cylinders, each with probability p of failing at a stress below the specified strength; these represent Bernoulli trials if the conditional probability that any one will fail remains unchanged given that any of the others has failed. In each of a sequence of 30 years, the occurrence or not of a flood greater than the capacity of a spillway represents a sequence of 30 Bernoulli trials if maximum annual flood magnitudes are independent and if the probability p of an occurrence in any year remains unchanged throughout the 30 years, †

We shall be interested in determining the distributions of various random variables related to the Bernoulli trials mechanism.

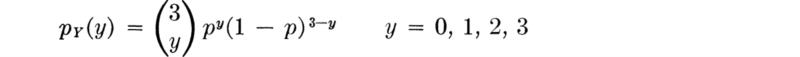

Distribution Let us determine the distribution of the total number of successes in n Bernoulli trials, each with probability of success p. Call this random number of successes Y. Consider first a simple case, n = 3. There will be no successes (that is, Y = 0) in three trials only if all trials lead to failures. This event has probability

![]()

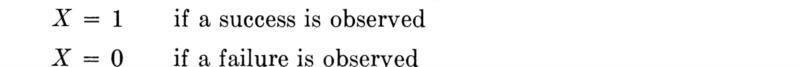

Any of the following sequences of successes 1 and failures 0 will lead to a total of one success in three trials:

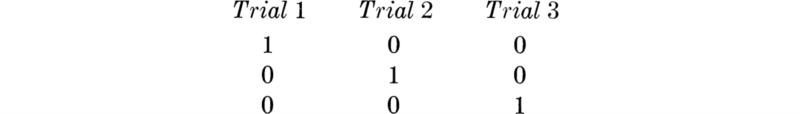

Each sequence is an event with probability of occurrence p(l – p)2. Therefore the event Y = 1 has probability 3p(1– p)2, since the sequences are mutually exclusive events. Similarly, the mutually exclusive sequences

each occuring with probability p2(l – p), lead to Y = 2. Hence

![]()

Similarly, P[Y = 3] = p3, since only the sequence 1, 1, 1 corresponds to Y = 3. In concise notation,

where  indicates the binomial coefficient:

indicates the binomial coefficient:

This coefficient is equal to the number of ways that exactly y successes can be found in a sequence of three trials. (Recall that 0! = 1, by definition.)

If the probability that a contractor’s bid will be low is ½ on each of three independent jobs, the probability distribution of Y, the number of successful bids, is

Contrary to some popular beliefs, it is not certain that the contractor will get a job; the likelihood is almost ⅓ that he will get no job at all!

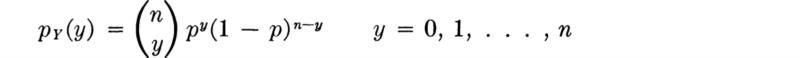

In general, if there are n Bernoulli trials, the PMF of the total number of successes Y is given by

It is clear that the parameter n must be integer and the parameter p

must be 0 ≤ p ≤ 1. The binomial coefficient

is the number of ways a sequence of n trials can contain exactly y successes Parzen [I960].

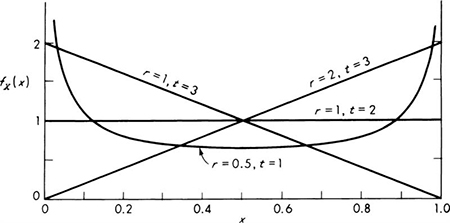

A number of examples of this, the “binomial distribution,’ are plotted in Fig. 3.1.1. The shape depends radically on the values of the parameters n and p. Notice the use of the notation B(n,p) to indicate a binomial distribution with parameters n and p; for example, the random variable Y in the bidding example above is B(3,⅓).

Fig. 3.1.1 Binomial distribution B(n,p).

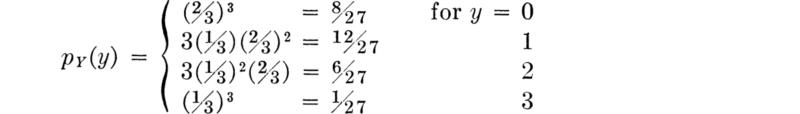

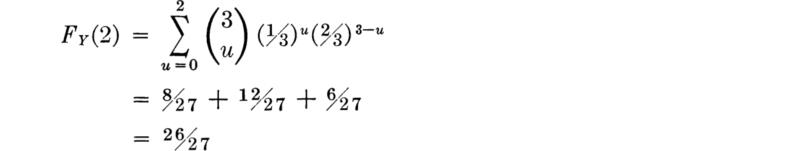

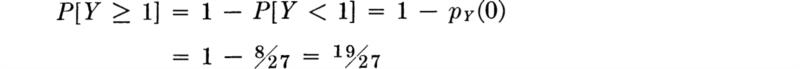

Formally, the CDF of the binomial distribution is

Thus in the bidding example, the probability that the contractor will receive two or fewer bids is

Or, more easily,

It is often the case that the probabilities of complementary events are more easily computed than those of the events themselves. As another example, the probability that the contractor gets at least one bid is

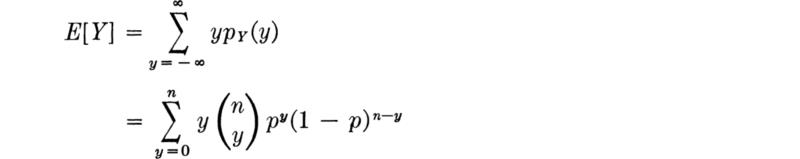

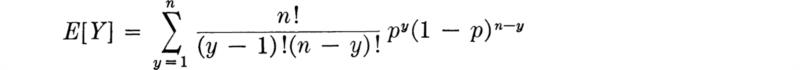

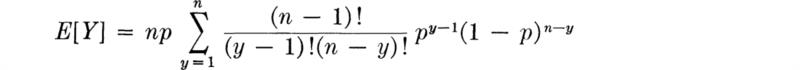

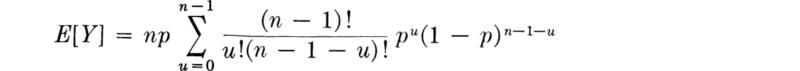

Moments The mean and variance of a binomial random variable Y are easily determined, and the exercise permits us to demonstrate some of the techniques of dealing with sums which many readers find unfamiliar. Thus,

Since the first term is zero, it can be dropped. The y cancels a term in y!:

Bringing np in front of the sum,

Letting u = y – 1,

Notice now that the sum is over all the elements of a B(n – 1, p) mass function and hence equals 1, yielding

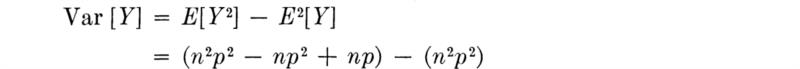

This is an example of a most common method of approach in probability problems, namely, manipulating integrals and sums into integrals and sums over recognizable PDF or PMF’s, which are known to equal unity. In a similar manner, we can find

or

Y as a sum Notice that the total number of successes in n trials Y, can be interpreted as the sum

![]()

of n independent identically distributed Bernoulli random variables; thus Xi = 1 if there is a success on the ith trial, and Xi = 0 if there is a failure. As such, the results above could have been more easily obtained as

using Eqs. (3.1.2) and (3.1.3). The techniques of derived distributions (Sec. 2.3) could also have been used to find the distribution of Y from those of Xi The interpretation of a binomial variable as the sum of Bernoulli variables also explains why the sum of two binomial random variables, B(n1,p) and B(n2,p), also has a binomial distribution, B(n1 + n2, p) as long as p remains constant. This fact is easily verified using the techniques of Sec. 2.3.

The binomial distribution is tabulated, † but its evaluation, which is time-consuming for large n, can frequently be carried out approximately using more readily available tables of the Poisson distribution (Sec. 3.2.1) or the normal distribution (Sec. 3.3.1); Prob. 3.18 explains these approximations. The latter approximation is justified in Sec. 3.3.1 in the light of the binomial random variable’s interpretation as the sum of n Bernoulli random variables.

Rather than asking the question, “How many successes will occur in a fixed number of repeated Bernoulli trials?” the engineer may alternatively be interested in the question, “At what trial will the first success occur?” For example, how many bolts will be tested before a defective one is encountered if the proportion defective is p? When will the first critical flood occur if the probability that it will occur in any year is p?

Geometric distribution Assuming independence of the trials and a constant value of p, the distribution of N, the number of trials to the first success, is easily found. The first success will occur on the nth trial if and only if (1) the first n – 1 are failures, which occurs with probability (1 – p)n–1 and (2) the nth trial is a success, which occurs with probability p, or

Notice that N may, conceptually at least, take on any value from one to infinity. ‡

This distribution is called the geometric § distribution with parameter p. Symbolically we say that N is G(p). A plot of a geometric distribution appears in Fig. 3.1.2.

The cumulative distribution function of the geometric distribution is

Fig. 3.1.2 Geometric distribution G(p).

where use is made of the familiar formula for the sum of a geometric progression. This result could have been obtained directly by observing that the probability that N ≤ n is simply the probability that there is at least one occurrence in n trials, or

![]()

The geometric distribution also follows from a totally different mechanism (see Sec. 3.5.3).

Moments of the geometric The first moment of a geometric random variable is found by substitution and a number of algebraic manipulations similar to those used in the binomial case. The reader can show that

In words, the average number of trials to the first occurrence is the reciprocal of the probability of occurrence on any one trial. For example, if the proportion of defective bolts is 0.1, on the average, 10 bolts would be tested before a defective would be found, assuming the conditions of independent Bernoulli trials hold.

The variance of N can easily be shown to be

Negative binomial distribution We have just determined the answer to the question, “On which trial will the first success occur?” Next consider the more general question, “On which trial will the kth success occur?” We are dealing now with a random variable, say Wk, which is the sum of random variables N1N2, . . ., Nk, where Ni is the number of trials between the (i – l)th and ith successes. Thus

![]()

Because of the assumed independence of the Bernoulli trials, it is clear that the Ni: (i = 1,2, . . . k) are mutually independent random variables, each with a common geometric distribution with parameter p. As a result of this observation, we can obtain partial information about Wk, namely, its moments, very easily. The mean and variance of Wk can be written down immediately as

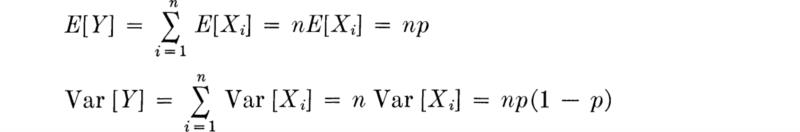

The distribution of W can be found using the methods discussed in Sec. 2.3. For example, for k = 2,

![]()

Writing Eq. (2.3.43) for the discrete case, we have

which states that the probability that W2 = w is the probability that N1= n and N2= w – n, summed over all values of n up to w. Notice that when n = w in the sum, we get ![]() , which is zero; hence

, which is zero; hence

or, simply,

This result could have been arrived at directly by arguing that the second success will be achieved at the wth trial only if there are w – 2 failures, a success on the wth trial, and one success in any one of the w – 1 preceding trials.

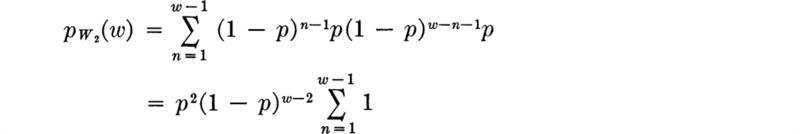

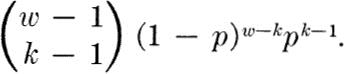

With this result for W2, one could find the distribution of W3, knowing W3 = W2+ N3. In turn the distribution for any k could be arrived at. If this exercise is carried out, a pattern emerges immediately, and one can conclude that

The result is reasonable if one argues that the kth success occurs on wth trial only if there are exactly k – 1 successes in the preceding w – 1 trials and there is a success on the wth trial. The probability of exactly k – 1 successes in w – 1 trials we know from our study of binomial random variables to be

This distribution is known as the Pascal, or negative binomial, distribution with parameters k and p, denoted here NB(k,p).† It has been widely used in studies of accidents, ‡ cosmic rays, learning processes, and exposure to diseases. It should not be surprising, owing to its possible interpretation as the number of trials to the (k1 + k2)th event, that a random variable which is the sum of a NB(k1,p) and a NB(k2,p) pair of (independent) random variables is itself negatively binomially distributed, NB(k1 + k2, p).

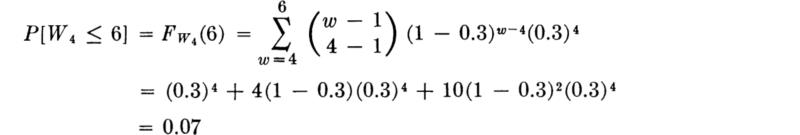

Illustration: Turn lanes If a left-turn lane at a traffic light has a capacity of three cars, what is the probability that the lane will not be sufficiently large to hold all the drivers who want to turn left in a string of six cars delayed by a red signal? In the mean, 30 percent of all cars want to turn left. The desired probability is simply the probability that the number of trials to the fourth success (left-turning car) is less than 6. Letting WA equal that number, W4 has a negative binomial distribution. Therefore,

Alternatively, one could have deduced this answer by asking for the probability of four, five, or six successes in six Beroulli trials and used the PMF of the appropriate binomial random variable.

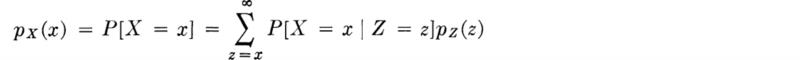

A more realistic question might be, “What is the probability that the left-turn lane capacity will be insufficient when the number of red-signal-delayed cars is unspecified?” This number is itself a random variable, say Z, with a probability mass function, pz(z), z = 0, 1, 2, .... Then the probability of the event A, that the lane is inadequate, is found by considering all possible values of Z:

or, since Fw4(z)= 0 for z < 4,

A logical choice for the mass function of Z will be considered in the following section, Sec. 3.2.1.

Illustration: Design values and return periods In the design of civil engineering systems which must withstand the effects of rare events such as large floods or high winds, it is necessary to consider the risks involved for any particular choice of design capacity. Given a design, the engineer can usually estimate the largest magnitude of the rare event which the design can withstand, e.g., the maximum flow possible through a spillway or the maximum wind velocity which a structure can resist. The engineer then seeks (from past data, say) an estimate of the probability p that in a given time period, usually a year, this critical magnitude will be exceeded.

If, as is commonly assumed, the magnitude of the maximum annual flow rates in a river or the maximum annual wind velocities are independent, and if p remains constant from year to year, then the successive years represent independent Bernoulli trials. A magnitude greater than the critical value is denoted a success. With the knowledge of the distributions in this section the engineer is now in a position to answer a number of critical questions.

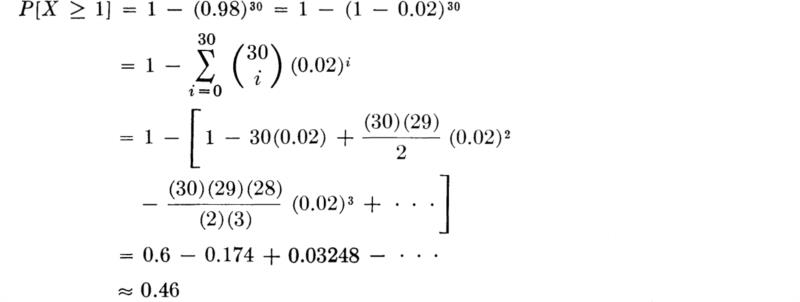

Let us assume, for example, that p = 0.02; that is, there is 1 chance in 50 that a flood greater than the critical value will occur in any particular year. What is the probability that at least one large flood will take place during. the 30-year economic lifetime of a proposed flood-control system? Let X equal the number of large floods in 30 years. Then X is B(30,0.02), and

![]()

Using the familiar binomial theorem,

If this risk of at least one critical flood is considered too great relative to the consequences, the engineer might increase the design capacity such that the magnitude of the critical flood would only be exceeded with probability 0.01 in any one year. Then X is B(30,0.01), and

![]()

The engineer seeks, of course, to weigh increased initial cost of the system versus the decreased risk of incurring the damage associated with a failure of the system to contain the largest flood.

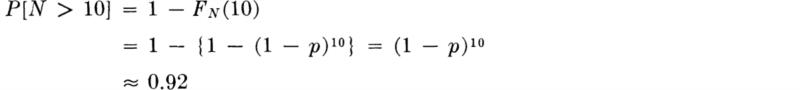

The number of years N to the first occurrence of the critical flood is a random variable with a geometric distribution, G(0.01), in the latter case. The probability that it is greater than 10 years is

What is the probability that N > 30? Clearly it is just equal to the probability that there are no floods in 30 years, i.e., that X = 0, where X is B(30,0.01). Here, using a previous result,

Average Return Periods The expected value of N is simply

This is the average number of trials (years) to the first flood of magnitude greater than the critical flood. In civil engineering this is referred to as the average return period or simply the return period. The critical magnitude is often referred to as the “mN-year flood,” here the “100-year flood.” The term is somewhat unfortunate, since its use has led the layman to conclude that there will be 100 years between such floods when in fact the probability of such a flood in any year remains 0.01 independently of the occurrence of such a flood in the previous or a recent year (at least according to the engineer’s model).

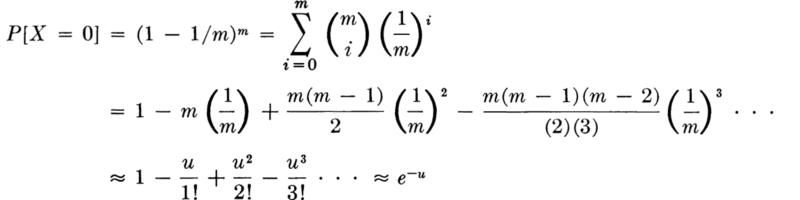

The probability that there will be no floods greater than the m-year flood in m years is, since X is then B[m,l/m],

where u = m(l/m) = 1; hence, for large m,

![]()

That is, the likelihood that one or more m-year events will occur in m years is approximately 1 – e–1= 0.632. Thus a system “designed for the m-year flood” will be inadequate with a probability of about 2/3 at least once during a period of m years.

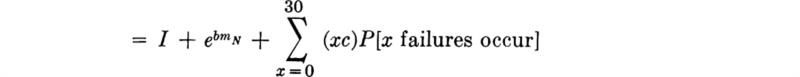

Cost Optimization What is the optimum design to minimize total expected cost? Assume that the cost c associated with a system failure is independent of the flood magnitude, and that, in the range of designs of interest, the cost is a constant I plus an incremental cost whose logarithm is proportional to the mean return period mN of the design demand, † For an economic design life of 30 years,

Since the cost of failure is cX,

![]()

Assume that the system itself remains in use with an unaltered capacity after any failure. Then, if X is the number of failures in 30 years, it is distributed

B(30, 1/mN) (assuming no more than one failure per year). The mean of X is 30/mN. The expected cost, given a value of mN, is

![]()

The design flood magnitude ![]() , which minimizes this expected total cost, is found by setting the derivative of total cost equal to zero:

, which minimizes this expected total cost, is found by setting the derivative of total cost equal to zero:

This equation can be solved by trial and error for given values of b and c. For example, for b = 0.1 year-1 and c = $200,000, ![]() years. Thus the designer should provide a system with a capacity sufficient to withstand the demand which is exceeded with probability 1/90 = 0.011 in any year. In Sec. 3.3, we will encounter distributions commonly used to describe annual maximum floods from which such design magnitudes could be easily obtained, once the design return period is fixed.

years. Thus the designer should provide a system with a capacity sufficient to withstand the demand which is exceeded with probability 1/90 = 0.011 in any year. In Sec. 3.3, we will encounter distributions commonly used to describe annual maximum floods from which such design magnitudes could be easily obtained, once the design return period is fixed.

The basic model discussed here is that of Bernoulli trials. They are a sequence of independent experiments, the outcome of any one of which can be classified as either success or failure (or, numerically, 0 or 1). The probability of success p remains constant from trial to trial.

If this model holds, then:

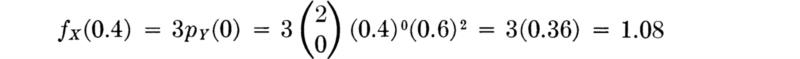

1. Y, the total number of successes in n trials, has a binomial distribution:

with mean np and variance np(1 – p).

2. N, the trial number at which the first success occurs, has a geometricdistribution:

![]()

with mean 1/p and variance (1 – p)/p2. The former is called the mean return period.

3. Wk, the trial number at which the kth success will occur, has a negative binomial distribution:

with mean k/p and variance k (l – p)/p2.

In many situations it is not possible to identify individual discrete trials at which events (or successes) might occur, but it is known that the number of such trials is many. The models in this section arise out of consideration of situations where the number of possible trials is infinitely large. Examples include situations where events can occur at any instant over an interval of time or at any location along the length of a line or on the area of a surface.

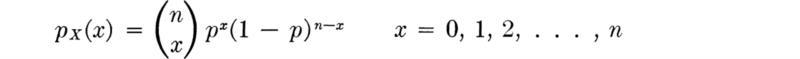

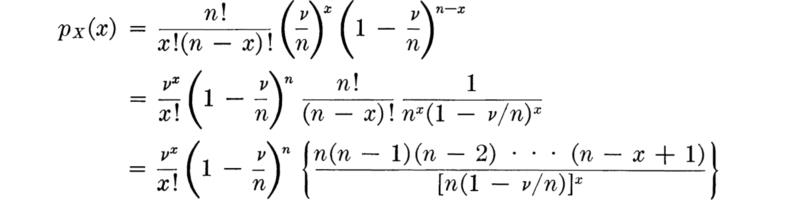

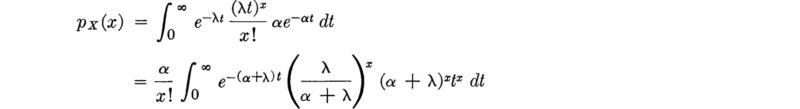

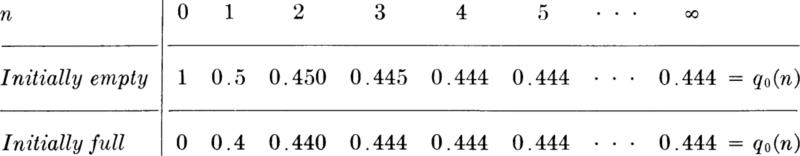

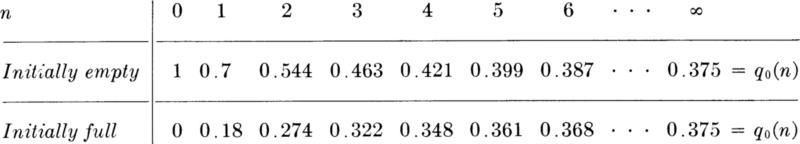

A derivation of the distribution Suppose that a traffic engineer is interested in the total number of vehicles which arrive at a specific location in a fixed interval of time, t sec long. If he knows that the probability of a vehicle occurring in any second is a small number p, (and if he assumes that the probability of two or more vehicles in any one second is negligible), then the total number of vehicles X in the n = t (assumed) independent trials is binomial, B(n,p):

Consider what happens as the engineer takes smaller and smaller time durations to represent the individual trials. The number of trials n increases and the probability p of success on any one trial decreases, but the expected number events in the total interval must remain constant at pn. Call this constant ν and consider the PMF of X in the limit as the trial duration shrinks to zero, such that

Substituting for p = ν/n in the PMF of X and rearranging,

The term in braces has x terms in the numerator and x terms in the denominator. For large n each of these terms is very nearly equal to n; hence in the limit, as n goes to infinity, the term in braces is simply nx/nx or 1. The term (1 – ν/n)n is known to equal e–ν in the limit. Hence the PMF of X is

Moments This extremely useful distribution is known as the Poisson distribution, denoted here P(ν). Notice it contains but a single parameter ν, compared with the two required for the binomial distribution, B(n,p). Its mean and variance are both equal to this parameter. Following the same steps used to find the mean of the binomial distribution,

Letting y = x – 1,

since the sum is now simply the sum over a Poisson PMF. A similar calculation shows that also

Plots of Poisson distributions are displayed in Fig. 3.2.1. † Notice the fading of skew as ν increases.

Also, consideration of the derivation should make it clear that the sum of two Poisson random variables with parameters vx and v2 must again be a Poisson random variable with parameters ν= ν1 + ν2- (How might this fact be verified?) Distributions with the peculiar and valuable property that the sum of independent random variables with the distribution has the same distribution are said to be “regenerative.” The binomial and negative binomial distributions are, recall, regenerative only on the condition that the parameter p is the same for all the distributions.

Poisson process It is clear from the derivation that if a time interval of a different duration, say 2t, is of interest, then the number of trials at any stage in the limit would be twice as great and the parameter of the resulting Poisson distribution would be 2ν. In such cases, the parameter of the Poisson distribution can be written advantageously as λt rather than ν:

Fig. 3.2.1 Poisson distribution P(ν).

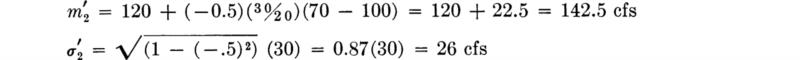

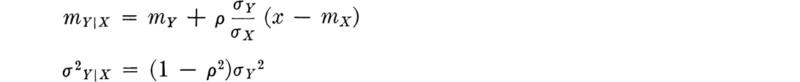

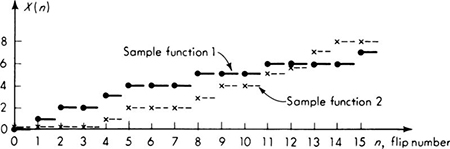

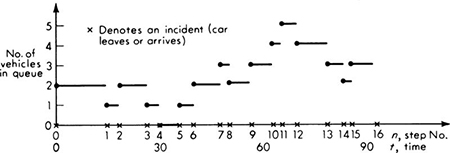

This form of the Poisson distribution is suggestive of its association with the Poisson process. A stochastic process is a random function of time (usually). In this case we are interested in a stochastic process X(t), whose value at any time t is the (random) number of arrivals or incidents which have occurred since time t = 0. Just as samples of a random variable X are numbers, x1, x2, . . ., so observations of a random process X(t) are sample functions of time, x1(t), x2(t), . . ., as shown in Fig. 3.2.2.

Fig. 3.2.2 Sample functions of a Poisson process X(t).

The samples shown there are of a Poisson process which is counting the number of vehicle arrivals versus time. Other examples of stochastic processes include wave forces versus time, total accumulated rainfall versus time, and strength of soil versus depth. We will encounter other cases of stochastic processes in Secs. 3.6 and 3.7 and in Chap. 6.

At any fixed value of the (time) parameter t, say t = t0, the value X(t0) of a stochastic process is a simple random variable, with an appropriate distribution ![]() which has an appropriate mean

which has an appropriate mean ![]() variance

variance ![]() etc.† In general, this distribution, mean, and variance are functions of time. In addition, the joint behavior of two (or more) values, say X(t0) and X(t1), of a stochastic process is governed by a joint probability law. Typically one might be interested in studying the conditional distribution of a future value X(t1), given an observation at the present time X(t0), in order to “predict” that future value (e.g., see Sec. 3.6.2).

etc.† In general, this distribution, mean, and variance are functions of time. In addition, the joint behavior of two (or more) values, say X(t0) and X(t1), of a stochastic process is governed by a joint probability law. Typically one might be interested in studying the conditional distribution of a future value X(t1), given an observation at the present time X(t0), in order to “predict” that future value (e.g., see Sec. 3.6.2).

An elementary result of the study of stochastic processes‡ is that the distribution of the random variable X(t0) is the Poisson distribution with parameter λt0 if the stochastic process X(t) is a Poisson process with parameter λ. To be a Poisson process, the underlying physical mechanism generating the arrivals or incidents must satisfy the following important assumptions:

1. Stationarity. The probability of an incident in a short interval of time t to t + h is approximately λh, for any t.

2. Nonmultiplicity. The probability of two or more events in a short interval of time is negligible compared to λh (i.e., it is of smaller order than λh).

3. Independence. The number of incidents in any interval of time is independent of the number in any other (nonoverlapping) interval of time.

The close analogy to the assumptions underlying discrete Bernoulli trials (Sec. 3.1) is evident. Therefore, the observed convergence of the binomial distribution to the Poisson distribution is to be expected.

In short, if we are observing a Poisson process, the distribution of X(t) at any t is the Poisson distribution [Eq. (3.2.4)], with mean mX(t)=λt [from Eq. (3.2.2)]. Therefore, the parameter λ is usually referred to as the average rate (of arrival) of the Poisson process.

The basic mechanism from which the Poisson process arises, namely, independent incidents occurring along a continuous (time) axis with a constant average rate of occurrence, suggests why it is often referred to as the “model of random events” (or random arrivals). It has been successfully employed to describe such diverse problems as the occurrences of storms (Borgman [1963]), major floods (Shane and Lynn [1964]), overloads on structures (Freudenthal, Garrelts, and Shinozuka [1966]), and, distributed in space rather than time, flaws in materials and particles of aggregate in surrounding matrices of material,† It is also widely employed in other fields of engineering to describe the arrival of telephone calls at a central exchange and the demands upon service facilities.

The Poisson process is often used by traffic engineers to model the flow of vehicles past a point when traffic is freely flowing and not dense (e.g., Greenshields and Weida [1952], Haight [1963]). For this reason the Poisson distribution describes well the number of vehicles which arrive at an intersection during a given time interval, say a cycle of a traffic light. The distribution logically might have been used in the illustrations dealing with traffic lights in Secs. 2.3 and 3.1.

Illustration: Left-turn lane and the model of random selection If, in the illustration in Sec. 3.1.2 involving left-turning cars at a traffic light, the number Z of cars arriving during one cycle is Poisson-distributed with parameter ν = 6, the

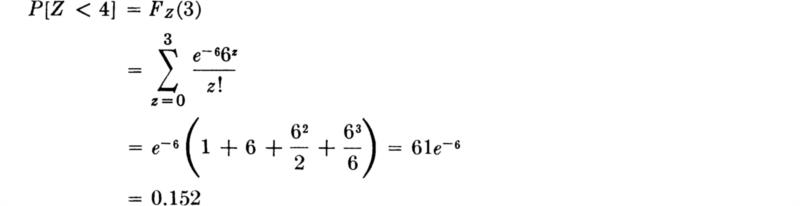

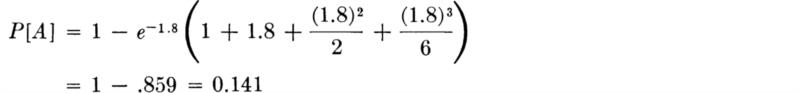

probability that less than four cars will arrive is

If this event occurs, there is no chance that the left-turn lane capacity will be exceeded. The probability of the event A, that the lane will be inadequate on any cycle (assuming there are no cars remaining from a previous cycle), is given in Eq. (3.1.17b):

where p is the proportion of cars turning left. This result could be evaluated as it stands for any values of p and v, but it is more informative to reason to another, simpler form.

If the probability is p that any particular car will turn left, then we can consider directly the arrival only of those cars which desire to turn left. Returning to the derivation of the Poisson distribution from the binomial, one can see that, since such left-turning cars arrive with a probability † p times the probability that any car arrives, the number X of these left-turning cars is also Poisson-distributed, but with parameter pv.‡ Hence the probability that the left-turn lane is inadequate is simply the probability that X is greater than or equal to four, or§:

For ν = 6 and p = 0.3, pν = 1.8 and

If this number is considered by the engineer to be too large, he might consider increasing the capacity of the lane in order to reduce the likelihood of inadequate performance.

It is important to realize the generality of the result used here. The implication is that if a random variable Z is Poisson-distributed, then so too is the random variable X, which is derived by (independently) selecting only with probability p each of the incidents counted by Z; that is if Z is P(ν), then X is P(pν). More formally, the distribution of X is found as

The conditional term is simply the probability of observing x successes in z Bernoulli trials; thus

which, upon changing the variable to u = z – x, reduces to

Examples of application of this result might include X being the number of hurricane-caused floods greater than critical magnitude when Z is the total number of hurricane arrivals, or X being the number of vehicles recorded by a defective counting device when Z is the total number of vehicles passing in a given interval. The latter example was encountered in Sec. 2.2.2 when the joint distribution of X and Z was investigated.

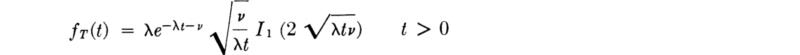

The traffic engineer observing a traffic stream is often concerned with the length of the time interval between vehicle arrivals at a point. If an interval is too short, for example, it will cause a car attempting to cross or merge with the traffic stream to remain stationary or to interrupt the stream. Let us seek the distribution for this time between arrivals under the conditions describing traffic flow used in the preceding section, namely, that the vehicles follow a Poisson arrival process with average arrival rate λ.

Distribution and moments If we denote by random variable T the time to the first arrival, then the probability that T exceeds some value t is equal to the probability that no events occur in that time interval of length t. The former probability is 1 – FT(t). The latter probability is px(0) the probability that a Poisson random variable X with parameter λt is zero. Substituting into Eq. (3.2.4),

![]()

Therefore,

while

This defines the “exponential” distribution which we shall denote EX(λ). It describes the time to the first occurrence of a Poisson event. Therefore it is a continuous analog of the geometric distribution [Eq. (3.1.3)]. But, owing to the stationarity and independence oroperties of the Poisson process, e–λt is the probability of no events in xny interval of time of length t, whether or not it begins at time 0. If we use the arrival time of the nth event as the beginning of the time interval, then e–λt is the probability that the time to the (n + 1)th event is greater than t. In short, the interarrival times of a Poisson process are independent and exponentially distributed.

The mean of the exponential distribution is

![]()

Letting u = λt,

Recall that in the previous section, λ was found to be the average number of events per unit time, while here 1/λ is revealed as the average time between arrivals.

In a similar manner,

Notice that the coefficient of variation of T is unity for any value of the parameter λ.

The exponential distribution is plotted in Fig. 3.2.3 as a function of λt, the ratio of t to the mean interarrival time. The distribution of

Fig. 3.2.3 Exponential distribution EX(λ).

the sum of two independent exponential random variables with different parameters α. and β was discussed in Sec. 2.3.2.

Memoryless property The Poisson process is often said to be “memory-less,” meaning that future behavior is independent of its present or past behavior. This memoryless character of the Poisson arrivals and of the exponential distribution is best understood by determining the conditional distribution of T given that T > t0, that is, the distribution of the time between arrivals given that no arrivals occurred before t0:

For t less than t0, the numerator is zero; for t ≥ t0 it is simply equal to P[t0 < T ≤t]. Thus

Or if time r is reckoned from t0, τ = t — t0,

In words, failure to observe an event up to t0 does not alter one’s prediction of the length of time (from t0) before an event will occur. The future is not influenced by the past if events are Poisson arrivals. An implication is that any choice of the time origin is satisfactory for the Poisson process.

Applications The very tractable exponential distribution is widely adopted in practice. The close association between the Poisson distribution and the exponential distribution, both arising out of a mechanism of events arriving independently and “at random” suggests that the exponential is applicable to the description of interarrival times (or distances, in the case of flaw distribution, for example) in those situations mentioned in Sec. 3.2.1 in which the number of events in a fixed interval is Poisson distributed. In addition, observed data is often suggestive of an exponential distribution even when the assumptions of a Poisson-arrivals mechanism may not seem wholly appropriate. Times between vehicle arrivals, for example, are influenced (for small values at least) by effects such as minimum spacings between vehicles and “platooning” of vehicles behind a slower vehicle, implying a lack of independence between event arrivals in two neighboring short intervals. Nonetheless, experience† has indicated that in many circumstances the adoption of the exponential distribution of vehicle interarrival times is reasonable. In studies of the lengths of the lifetimes in service of mechanical and electrical components, the analytical tractability of the exponential distribution has led to its wide adoption, even though gradual wearout of such components would suggest that the risk of failure in intervals of equal length would not be constant in time. This time dependence is, of course, in contradiction to the stationarity assumption in the Poisson process model, which predicts that the exponential distribution will describe interarrival times. In short, the exponential distribution, like many others we will encounter, is often adopted simply as a convenient representation of a phenomenon when no more than the shape of the observed data or the analytical tractability of the exponential function seem to suggest it.

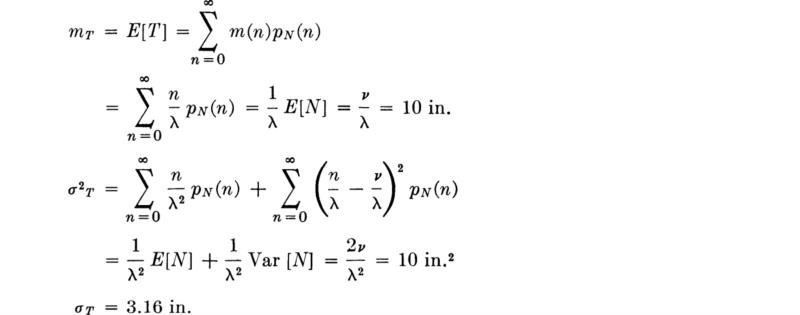

Distribution and moments As in the case with discrete trials, it is also of interest to ask for the distribution of the time Xk to the kth arrival of a Poisson process. Now, the times between arrivals, Ti, i = 1,2, . . ., k, are independent and have exponential distributions with common parameter λ. Xk is the sum T1 + T2+ · · · + Tk. Its distribution follows from repeated application of the convolution integral, Eq. (2.3.43). † For any k = 1, 2, 3, . . . ,

We say X is gamma-distributed with parameters k and λ, G(k,λ)‡ if its density function has the form in Eq. (3.2.15). By integration or, more simply, by consideration of X as the sum of k independent exponentially distributed random variables, if X is G(k,λ), then

and

In fact, the gamma distribution is more broadly defined than is implied by its derivation as the distribution of the sum of k independently, identically distributed exponential random variables. More generally the parameter k need not be integer-valued § when the gamma distribution is written

the only restrictions being λ > 0 and k > 0. The gamma function Γ(k) (from which the distribution gets its name) is equal to (k – 1)! if k is an integer, but more generally is defined by the definite integral

The integral arises here as a constant needed to normalize the function to a proper density function. The gamma function is widely tabulated,

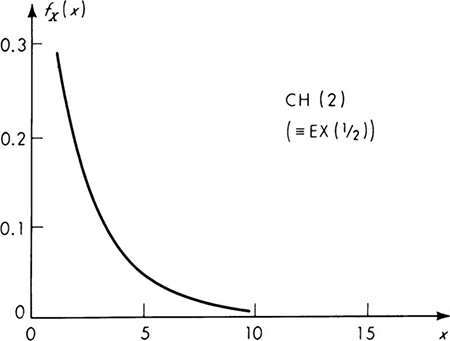

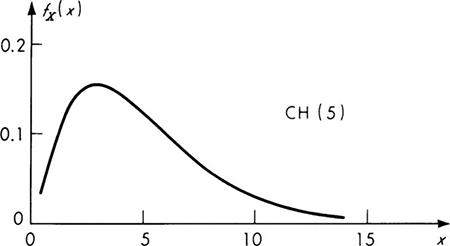

Fig. 3.2.4 Gamma distributions G(k,λ).

as is the “incomplete gamma function”:

which can be used to evaluate the cumulative distribution function Fx(x):†

The equations given above for the mean and variance of X hold for noninteger k as well.

The shape of the gamma density function, Fig. 3.2.4, indicates why it is widely used in engineering applications. Like observed data from many phenomena it is limited to positive values and is skewed to the right. Notice that λ can be interpreted as a scaling parameter and k as a shape parameter of the distribution. The skewness coefficient is

Owing to this shape and its convenient mathematical form (rather than to any belief that it arose from a fundamental, underlying mechanism where the time to the kth occurrence of some event was critical), the gamma distribution has been used by civil engineers to describe such varied phenomena as maximum stream flows (Markovic [1965]), the yield strength of reinforced concrete members (Tichy and Vorlicek [1965]), and the depth of monthly precipitation (Whitcomb [1940]).

In addition to its ability to describe observed data, the gamma distribution is, of course, important as the time to the occurrence of the kth [or the time between the nth and (n + k)th occurrence] in a Poisson process, † Applications include the time to the arrival of a fixed number of vehicles, and the time to the failure of a system designed to accept a fixed number of overloads before failing, when the vehicles and the overloads are Poisson arrivals. The sum of exponentials interpretation explains (at least for integer k) why the gamma is regenerative under fixed λ; that is, if X is G(k1,λ), Y is G(k2,λ), and Z = X + Y, then Z is G(k1+ k2, λ) if X and Y are independent. The result is true for noninteger k as well.

Illustration: Maximum flows Based on a histogram of data of maximum annual river flows in the Weldon River at Mill Grove, Missouri, for the years 1930 to 1960, the distribution was considered as a model by Markovic [1965]. The parameters were estimated ‡ as k = 1.727 and λ = 0.00672 (cfs)–1.

The mean is

The probability that the maximum flow is less than 400 cfs in any year is (λ400 = 2.70):

These values were taken from tables of the gamma function. In Sec. 3.4 more convenient tables will be introduced exploiting a simple relationship between the gamma and the widely tabulated “chi-square distribution.”

In a Poisson stochastic process incidents occur “at random” along a time (or other parameter) axis. If the conditions of stationarity, non-multiplicity, and independence of nonoverlapping intervals hold, then for an average arrival rate λ,

1. For any interval of time of length t, the number of events X which occur has a Poisson distribution with parameter ν = λt:

The mean and variance are both equal to ν.

2. The distribution of time T between incidents has an exponential distribution with parameter λ:

![]()

with mean 1/λ and variance 1/λ2.

3. The time Sk between k incidents has a gamma distribution:

with mean k/λ and variance k/λ2. The distribution is also defined for noninteger k when (k – 1)! should be replaced by Γ(k).

The single most important class of models is that in which the model arises as a limit to an argument about the relationship between the phenomenon of interest and its (many) “causes.” The uncertainty in a physical variable may be the result of the combined effects of many contributing causes, each difficult to isolate and observe. In several important situations, if we know the mechanism by which the individual causes affect the variable of interest, we can determine a model (or distribution) for the latter variable without studying in detail the individual effects. In particular, we need not know the distributions of the causes. Three important cases will be considered—that where the individual causes are additive, that where they are multiplicative, and that where their extremes are critical.

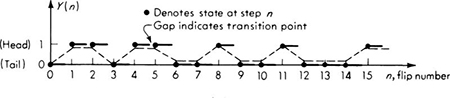

Convergence of the shape of the distribution of sums We shall introduce this case through an example. The total length of an item may be made up of the sum of the lengths of a number of similar individualparts. Examples are the total length of a line of cars in a queue, the total error in a long line surveyed in 100-ft increments, or the total time spent repeating a number of identical operations in a construction schedule.

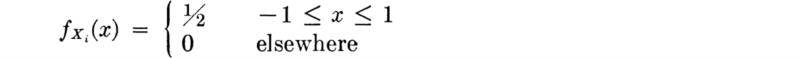

Suppose, as a specific example, that the total length of several pieces is desired, and each has been measured on an accurate rule and recorded to the nearest even number of units (for example, 248 mm). It may be reasonable to assume that each “error,” that is, each difference between the recorded length and the true length, is in this case equally likely to lie anywhere between plus and minus one unit (e.g., the true length is between 247 and 249 mm).

Let us define a random variable:

Xi = the measurement error in the ith piece

Then by our argument this variable has a constant (or uniform, Sec. 3.4.1) density function

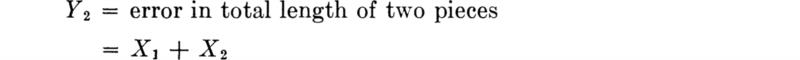

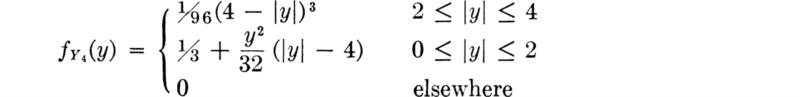

Consider first the total error in the combined length of two pieces, that is, the difference between the sum of their recorded lengths and the sum of their true lengths. Let

Assuming that the two measurement errors are unrelated, i.e., that X1 and X2 are independent random variables, we can determine the density function of Y2 using Eq. (2.3.43). The following result will be found:

The distributions of Xi (or Y1) and Y2 are shown in Fig. 3.3.1.

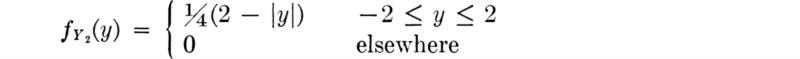

The density function of Y3 = X1 + X2 + X3 is found in the same manner:

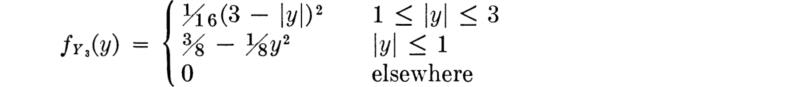

Similarly, we find too

These results are sketched in Fig. 3.3.1, along with the corresponding cumulative distribution functions. Note the scale changes.

Looking at the sequence of curves, it is evident that the distribution of the sum of a number of uniformly distributed random variables quickly takes on a bell-shaped form. By proper adjustment of the parameter c in each case,† a density function of the form

will closely approximate the density functions of the successive Yi. Such curves are shown in Fig. 3.3.1 as dotted lines. The approximations are successively better as more pieces are considered, and the approximation is always better around the mean, y = 0, than in the tails. This very important “double exponential” density function is called the normal or gaussian distribution.

Central limit theorem The ability of a curve of this shape to approximate the distribution of the sum of a number of uniformly distributed random variables is not coincidental. It is, in fact, one of the most important results of probability theory that:

Under very general conditions, as the number of variables in the sum becomes large, the distribution of the sum of random variables will approach the normal distribution.

Several of the phrases in this rather loose statement of the central limit theorem deserve elaboration. Some idea of the “very general conditions” can be obtained by considering some special cases. The theorem ‡ holds for most physically meaningful § random variables: (1) if the variables involved are independent and identically distributed; (2) if the variables are independent, but not identically distributed (provided that each individual variable has a small effect on the sum); or (3) if the variables are not independent at all, but jointly distributed such that correlation is effectively zero between any variable and all but a limited number of others. The word “approach” in the theorem’s statement would be interpreted by a mathematician as “converge to,” but an engineer will read “be approximated by.” The question of how many is “large” depends, as in any such approximation, on what accuracy is demanded, but in this case it depends too on the shape of the distributions of the random variables being summed (highly skewed distributions are relatively slow to converge). The degree of dependence is also a factor. For example, if all variables were perfectly linearly dependent on the first, Xi = ai + biX1 then the distribution of the sum of any number of them would have the same shape as the distribution of the first, since the sum  could always be written Yn = c + dX1.

could always be written Yn = c + dX1.

Fig. 3.3.1 Distributions of the error in the total length of one, two, three, or four pieces, (a) Distribution of Y1; (6) distribution of Y2; (c) distribution of Y3; (d) distribution of Y4.

The important fact is that, even if the number of variables involved is only moderately large, as long as no one variable dominates and as long as the variables are not highly dependent, the distribution of their sum will be very near normal. The immense practical importance of the normal distribution lies in the fact that this statement of the central limit theorem can be made without exact knowledge (1) of the marginal distributions of the contributing random variables, (2) of their number, or (3) of their joint distribution. Since the random variation in many phenomena arises from a number of additive variations, it is not surprising that histograms approximating this distribution are frequently observed in nature and that this distribution is frequently adopted as a model in practice. In fact, owing to its analytic tractability and to the familiarity of many engineers with the distribution, the normal model is very often used in practice when there is no reason to believe that an additive physical mechanism exists. For all these reasons the distribution deserves special attention.

Parameters: Mean and variance First, it should be pointed out that the distributions need not be centered at the origin. Consider then in general the shifted case

The distance to the center of the distribution is labeled m, for, by the symmetry of the distribution, it is its mean. We can determine the normalizing constant k by integration:

![]()

We find

Hence

or

Let us next determine the variance of the normal distribution.

A change of variable and integration by parts yield

Noting that

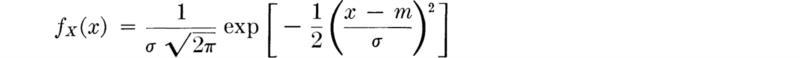

we replace c as a parameter of the normal distribution and write the density function in the form

In this, its usual form, the mean and standard deviation (or variance) are used as parameters of the distribution, and we would write X is N(m,σ2).” The effect of changes in m and α is shown in Fig. 3.3.2. The normal curves plotted in dotted lines in Fig. 3.3.1 are those with mean and standard deviation equal to the corresponding moments of the true distributions.

Using normal tables The normal distribution is widely tabulated in the literature.† This job is simplified by tabulating only the standardized

Fig. 3.3.2 Normal density functions.

variable

This variable has mean 0 and standard deviation 1 (as the reader was asked to show in Prob. 2.47). The density of the standardized normal random variable is consequently N(0,1) or

which is shown in Fig. 3.3.2a. The desired density function, that of X = mX + σXU, is found using Eq. (2.3.15):

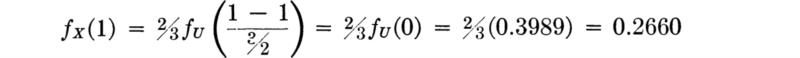

For example, the value of the density function in Fig. 3.3.2d at x = 1 is

Tables give only half the range of u, u ≥ 0, owing to the symmetry of the PDF.

To determine the probability that a normal random variable lies in any interval, the integral of fX(x) over the interval is required; alternatively of course, the difference between the two values of the cumulative distribution function will yield this information. There is no simple expression for the CDF of the normal distribution, but it has been evaluated numerically and tabulated, again for the standardized random variable. In general

in which u = (x – mX)/σX. Note that u can be interpreted as the number of standard deviations by which x differs from the mean. Tables yield values of FU(u). Because of the symmetry of the PDF, tables give only half of the range of u, usually for u ≥ 0.

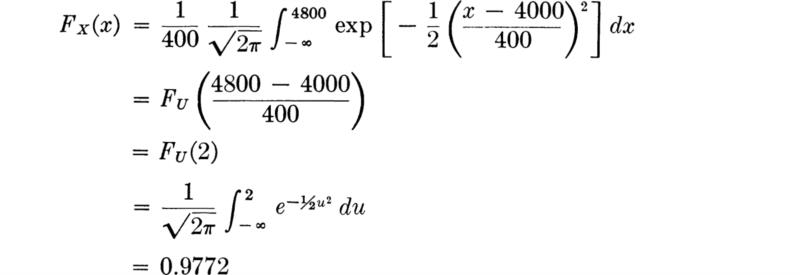

For example, the probability that a normal random variable X, with mean 4000 and standard deviation 400, will be less than 4800 is:

Concrete compressive strength is commonly assumed to be normally distributed, and a particular mix might have parameters near the values used here.

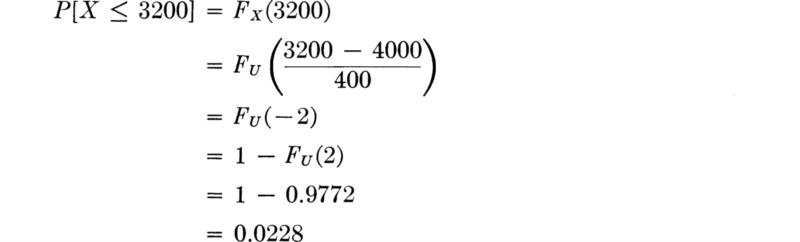

The symmetry of the distribution permits one to find values for negative arguments. A quick sketch should convince the reader that for the standardized normal variable,

Consequently, for the variable just cited:

In other tables 1 – FU(u) is tabulated for u ≥ 0, while still other tables give 1 – 2FU(– u), u ≥ 0.

The last form is related to the common situation in the study of errors or tolerances where it is desired to know the probability that a normal random variable will fall within k standard deviations of its mean:

If one recalls the discussion in Sec. 2.4.1, he will recognize this value as the probability of a normal random variable remaining within the “k-sigma” bounds. In fact, the “rules of thumb” stated there are approximately those values one obtains for the probabilities of a normal variable. Therefore they are appropriate if the distribution is approximately bell-shaped.

Higher moments The symmetry of the normal distribution about its mean implies that all odd-order central moments (and the skewness coefficient) are zero. The even-order moments must be related to only the mean and standard deviation because these two moments serve as the parameters of the family of normal distributions. It can be shown by integration that the even-order moments are given by

Note that

Hence the coefficient of kurtosis [Eq. (2.4.19)] is

It is not uncommon to compare the coefficient of kurtosis of a random variable with this “standard” value of 3, that of the normal distribution. If a distribution has γ2> 3, it is said to be “flatter” than standard. In fact, one often sees defined the coefficient of excess, γ2 – 3. Positive coefficients of excess indicate distributions flatter than the normal, negative, more peaked than normal.

Distribution of the sum of normal variables Since by the central limit theorem the sum of many variables tends to be normally distributed, it should be expected that the sum of two independent random variables each normally distributed would also be normally distributed. That is, in fact, the case. The proof is a direct application of Eq. (2.3.45), but will not be demonstrated here. Accepting the statement, we conclude that the distribution of Z = X + Y when X is ![]() and Y is

and Y is ![]() is also normal if X and Y are independent. We can use the results of Sec. 2.4.3 to determine the parameters of Z:

is also normal if X and Y are independent. We can use the results of Sec. 2.4.3 to determine the parameters of Z:

Thus Z is ![]()

If we are interested in Z = X1 + X2+ · · · + Xn, where the Xi, are independent, normally distributed random variables, we can conclude, by considering pairs of the variables in sequence, that, in general, the sum of independent normally distributed random variables is normally distributed with a mean equal to the sum of their means and a variance equal to the sum of their variances. A generalization of this statement for nonindependent, jointly normally distributed random variables will be found in Sec. 3.6.2.

Illustration: Footing loads For example, suppose that a foundation engineer wanting to estimate the long-term settlement of a footing states that the total sustained load on the footing is the sum of the dead load of the structure and the load imposed by furniture and the occupancy loads. Since each is the sum of many relatively small weights, the engineer adopts the assumption that the dead load X and sustained occupancy load Y are normally distributed. Unable to see any important correlation between them, he also decides to treat X and Y as independent. Data from numerous buildings of a similar type suggest to him that

mX = 100 kips

σX = 10 kips

mY = 40 kips

σY = 10 kips

The distribution of the total sustained load, Z = X + Y, then is also normally distributed with mean

mz = 100 + 40 = 140 kips

and

![]()

A reasonable design load might be that load which will be exceeded only with probability 5 percent. This is the value z* such that

1 – Fz(z*)= 5 percent

or

Fz(z*)= 95 percent

In terms of U, the tabulated N(0,l) variable, we find u* = (z* – mz)/σz such that

FU(u*) = 95 percent

From tables,

u* = 1.65

implying that

z* = mz + 1.65σz

= 140 + (1.65) (14.1) = 163 kips

Applications It can be said without qualification that the normal distribution is the single most used model in applied probability theory. We have seen that a sound reason may exist for its adoption as a description of certain natural phenomena. Namely, it can be expected to represent those variables which arise as the sum of a number of random effects, no one of which dominates the total. As a consequence the normal model has been used fruitfully to describe the error in measurements, to represent deviations from specified values of manufactured products and constructed items that result from a number of pieces and/or operations each of which may add some deviation to the total, and to model the capacity of a system which fails only after saturation of a number of parallel, redundant (random capacity) components has taken place. Specific examples of the last case include the capacity of a road which is the sum of the capacities of its lanes, the strength of a collapse mechanism of a ductile, elastoplastic frame, which is a sum of constants times the yield moments of certain joints, and the deflection of an elastic material which is the sum of the deflections of a number of small elementary volumes. The normal model was adopted in Fig. 2.2.5 to represent the distribution of total annual runoff (given that it was not zero) based on the assumption that this runoff was the sum of a number of individual daily contributions.

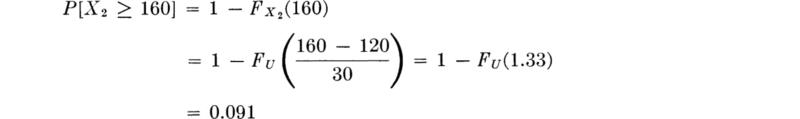

The normal distribution is also often adopted as a convenient approximation to other distributions which are less widely tabulated. Those cases in which the normal distribution is a good numerical approximation can usually be anticipated by considering how this distribution arises and then invoking the central limit theorem. The gamma distribution with parameters λ and integer k, for example, was shown in Sec. 3.2.3 to be the distribution of the sum of k exponentially distributed random variables. For k large (integer or not) the normal distribution will closely approximate the gamma. Figure 3.2.4 shows that the approximation is not good for k ≤ 3 but that it rapidly improves as k increases.

Similarly, discrete random variables such as the binomial or Poisson variables can be considered to represent the sum of a number of “zero-one” random variables and can be expected, by the central limit theorem, to be approximated well by the continuous, normal random variable for proper values of their parameters. In particular, if the approximation is to be good for the binomial, n should be large and p not near zero or one, and for the Poisson, ν should be large. Figures 3.1.1 and 3.2.1 verify these conclusions. The ability to use the normal approximation may be particularly appreciated in such discrete cases where the evaluation of factorials of large numbers is involved. Many references treat these approximations in some detail (Hald [1952], Parzen [1960], Feller [1957], etc.)

Although certain corrections associated with representing discrete by continuous variables are available to increase the accuracy of the approximation, † it is usually sufficient simply to equate first and second moments of the two distributions (discrete or continuous) in order to achieve a satisfactory approximation. For example, the probability that X, a gamma-distributed random variable, G(12.5,3.16), is less than 3 is approximately equal to the probability that a normally distributed random variable, say X*, with the same mean, mX* = mX = 12.5/3.16 = 3.95, and same standard deviation, ![]() , is less than 3. That is, X* is N(3.95,(1.12)2). Thus

, is less than 3. That is, X* is N(3.95,(1.12)2). Thus

U is, as above, N(0,l). The exact value of FX(3) can be found using tables of the incomplete gamma function or of the χ2 distribution (Sec. 3.4). This value is 0.2000.

Whether the normal model is adopted following a physical argument or as an approximation to other distributions, it should be noted that its validity may break down outside the region about its mean. Tails of the distribution are much more sensitive to errors in the model formulation than the central region. Recall, for example, the original illustration, Fig. 3.3.1. To say that the sum of four uniformly distributed random errors is normally distributed is a valuable approximation in the range close to the mean, but it clearly falls down in the tails, where the normal distribution predicts a small, but nonzero probability of occurrence of errors larger than four units. The limits on the argument of a normal density function are theoretically plus and minus infinity, yet it may still be useful in practice to assume that some variable, such as load, weight, or time, which is physically limited to nonnegative values, is normally distributed. Caution must be urged in drawing conclusions from models based on such assumptions, if, as is often the case in civil engineering, it is just these extreme values (large or small) which are of most concern. In short, the engineer must never forget the range of validity of his model nor the accuracy to which he can meaningfully use it. This statement holds true for all probabilistic models, as well as for deterministic formulations of engineering problems, although it is too frequently ignored in both.

The ease with which one can work with the normal distribution, † its many tables, and its well-known properties causes its adoption as a model in many situations when little or no physical justification exists. Frequently it is used simply because an observed histogram is roughly bell-shaped and approximately symmetrical. In this case the reason for the choice is simply mathematical convenience, with a continuous, easily defined curve replacing empirical data. Even with little or no data, the normal distribution is often adopted as a “not-unreasonable” model. The American Concrete Institute specifications for concrete strength are based on the normal distribution because it seems to fit observed data. The U.S. Bureau of Public Roads, in initial development of statistical quality-control techniques for highway construction, has adopted the normal assumption for material and construction properties, often when no information is yet available. Such uses of the normal distribution serve a valid computational and operational purpose, but the engineer should be quick to question highly refined conclusions or high-order accuracy leading from such premises. ‡ In particular, results highly dependent upon the tail probabilities in or beyond the region where little data has been observed should be suspect unless there is a valid physical reason to expect the model to hold.

Multiplicative models While the normal distribution arose from the sum of many small effects, it is desirable also to consider the distribution of a phenomenon which arises as the result of a multiplicative mechanism acting on a number of factors. An example of such a mechanism occurs in breakage processes, such as in the crushing of aggregate or transport of sediments in streams. The final size of a particle results from a number of collisions of particles of many sizes traveling at different velocities. Each collision reduces the particle by a random proportion of its size at the time (Epstein [1947]). Therefore, the size Yn of a randomly chosen particle after the nth collision is the product of Yn–1 (its size prior to that collision) and Wn (the random reduction factor). Extending this argument back through previous collisions,

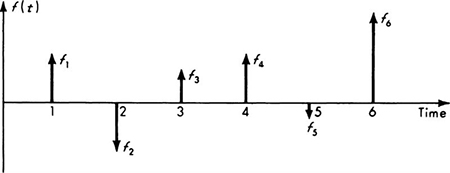

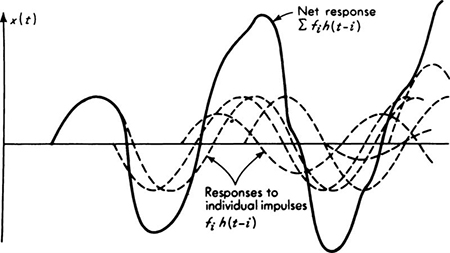

Also, a number of physical systems can be characterized by a mechanism which dictates that the increment Yn – Yn–1 in the response of the system when it is subjected to a random impulse of input Zn is proportional to the present value of the response Yn–1. Formally,

or

Letting Wi = 1 + Zi, this function is in the same multiplicative form as the breakage model above. The growth of certain economic systems may follow this model.

Finally, the fatigue mechanism in materials has been described as follows (Fruedenthal [1951]†). The internal damage Yn, after n cycles of loading, is

In this expression, Wn is the internal stress state resulting from the nth load application, subject to variation because of the internal differences in materials at the microscopic level. If, as a first approximation, g(Yn–1) is taken equal to cn–1 Yn–1,

Distribution In all these cases the variable of interest Y is expressed as the product of a large number of variables, each of which is, in itself, difficult to study and describe. In many cases something can be said, however, of the distribution of Y. Take natural logarithms ‡ of both sides of any of the equations above. The result is of the form

Since the Wi are random variables, the functions In Wi are also random variables (Sec. 2.3.1). Calling upon the central limit theorem, one may predict that the sum of a number of these variables will be approximately normally distributed. In this case, then, we expect In Y to be normally distributed. Let

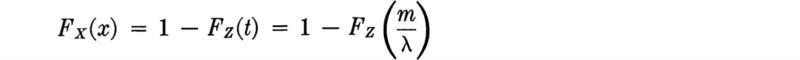

Our problem is, knowing that X is normally distributed, to determine the distribution of Y or

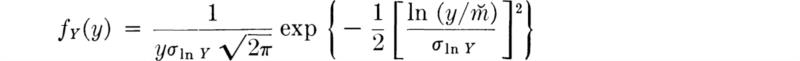

A random variable Y whose logarithms are normally distributed is said to have the logarithmiconormal or lognormal distribution. Its form is easily determined.

In Sec. 2.3.1 we found that the density function in such a case of one-to-one, monotonic transformation is [Eq. (2.3.13)]

Here

and X is normally distributed:

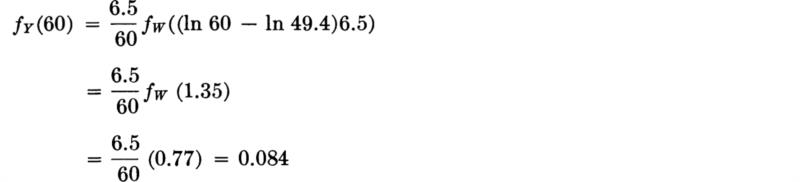

Therefore, upon substituting,

The random variable Y is lognormally distributed, whereas its logarithm X is normally distributed. The range of Y is zero to infinity, whereas that for X is minus to plus infinity. If Y = 1, X = 0, and if Y > 1, X is positive. In the range of 0 ≤ Y ≤ 1, X is in the range minus infinity to zero, since the logarithm of a number between zero and unity is negative. Y cannot have negative values, since the logarithm of a negative number is not defined.

Common parameters In Eq. (3.3.24), for the PDF of Y, the parameters used, σX and mX, are the mean and standard deviation of X or In F, not of Y. A somewhat more natural pair of parameters involving the median of Y is available. The median ![]() of a random variable was defined in Sec. 2.3.1 as that value below which one-half of the probability mass lies. That is,

of a random variable was defined in Sec. 2.3.1 as that value below which one-half of the probability mass lies. That is,

![]()

When X equals In Y,

![]()

Thus,

In ![]()

For the normal and other symmetrical distributions, the median equals the mean: ![]() . Consequently,

. Consequently,

![]()

Thus

![]()

Substituting into Eq. (3.3.24) for In y – mX,

![]()

And, writing σX as σIn Y, we obtain the most common form of the lognormal PDF:

If y has this density function, we say Y is ![]() , where

, where ![]() and σIn Y are the two parameters of the distribution.

and σIn Y are the two parameters of the distribution.

Using normal tables In terms of the PDF of a standardized N(0,1) variable U,

That is,

where

The function fU(u) is widely tabulated.

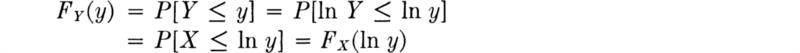

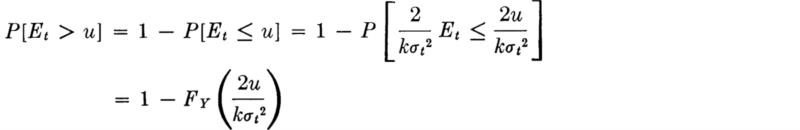

The CDF of Y is also most easily evaluated using a table of the normal distribution, since

Because X is N(mx,σX2) or ![]() ,

,

with u given above in Eq. (3.3.28).

Moments Through experimental evidence one usually has available the moments of the random variable Y. To determine the parameters of the PDF and CDF, it then becomes necessary to compute ![]() and the standard deviation of X or In Y from these moments. To determine the equations to do this, we seek first the moments of Y in terms of its parameters. By integration, it is easily shown that the moments of Y are

and the standard deviation of X or In Y from these moments. To determine the equations to do this, we seek first the moments of Y in terms of its parameters. By integration, it is easily shown that the moments of Y are

In particular

and

Thus

It follows that the desired relationships for the parameters in terms of the moments are

It is also important to note that the mean of In Y or X is

The mean of In Y is not equal to In mY; that is, the mean of the log is not the log of the mean, illustrating again Eq. (2.4.29).

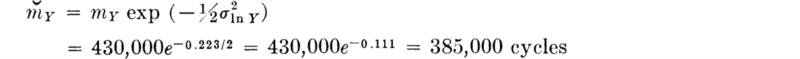

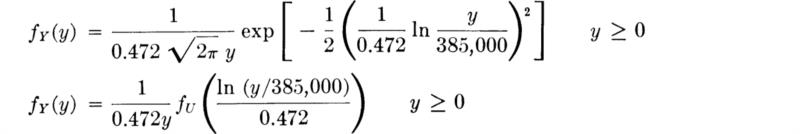

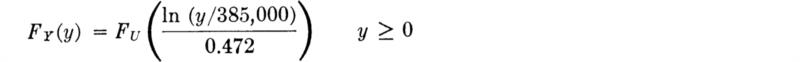

Illustration: Fatigue life From a number of tests on rotating beams of A285 steel, the number of cycles Y to fatigue failure were concluded to be lognormally distributed with an estimated mean of 430,000 cycles and a standard deviation of 215,000 cycles (when tested to ±36,400 psi). Let us plot fY(y) and FY(y). We are given from data

Using the previous results,

If VY is less than 0.2, one can assume that ![]() with less than 2 percent error in

with less than 2 percent error in ![]() . This follows from an expansion of ln

. This follows from an expansion of ln ![]() as

as

for VY < 0.2.

The other parameter of the lognormal distribution is

Again, if VY is less than 0.2, a good approximation is ![]() These two approximations can also be derived using the technique described in Sec. 2.4.4.

These two approximations can also be derived using the technique described in Sec. 2.4.4.

In this example, Eqs. (3.3.25) and (3.3.26) become

while the CDF is

Fig. 3.3.3 Lognormal distributions showing influence of σIn y.

To plot these functions, one might pick y at a number of selected locations and evaluate. It is somewhat easier, however, to select values of the standardized normal variable u, locate fU(u) and FU(u) in the tables, calculate the corresponding values of y, and finally compute fY(y). The functions fY(y) and FY(y) are shown graphed in Fig. 3.3.3. Also shown in Fig. 3.3.3 are plots of lognormal distributions with the same means but with coefficients of variation equal to 1.32 and 0.1. In these cases the parameters of the distributions are σInY, equal to 1.0 and 0.1, and ![]() equal to 260,000 and 429,800, respectively.

equal to 260,000 and 429,800, respectively.

Skewness and small V approximations Compared to a normal distribution, the most salient characteristic of the lognormal is its skewed shape. Through Eq. (3.3.30) the third central moment and thence the skewness coefficient of the lognormal distribution can be computed to be

Through Eqs. (3.3.38) and (3.3.35) it is clear that the coefficient ![]() and the skewness of the distribution depend only on the coefficient of variation of Y. The ratio of the mean to the median [Eq. (3.3.31)] also depends only on this factor. Furthermore, for small values of V (less than about 0.2), σIn Y and γ1 are very nearly linear in V [Eqs. (3.3.37) and (3.3.38)], and the mean of the logarithm of Y is approximately equal to the logarithm of the mean of Y. The distribution of Y is approximately normal for small V (Sec. 2.4.4).

and the skewness of the distribution depend only on the coefficient of variation of Y. The ratio of the mean to the median [Eq. (3.3.31)] also depends only on this factor. Furthermore, for small values of V (less than about 0.2), σIn Y and γ1 are very nearly linear in V [Eqs. (3.3.37) and (3.3.38)], and the mean of the logarithm of Y is approximately equal to the logarithm of the mean of Y. The distribution of Y is approximately normal for small V (Sec. 2.4.4).

Distribution of a product of lognormals The lognormal distribution does not have the additive regenerative property; that is, the distribution of a random variable which is the sum of two lognormal distributed random variables is no longer lognormal. But the distribution is rare in possessing a multiplicative type of regeneration ability. If

and the Yi are independent and all lognormally distributed with parameters ![]() and σInY, then Z is also lognormally distributed with

and σInY, then Z is also lognormally distributed with

and

By Eq. (3.3.36),

![]()

Hence

![]()

and

That Z is lognormally distributed follows [after logarithms of both sides of Eq. (3.3.39) have been taken] from the fact established in Sec. 3.3.1 that the distribution of the sum of independent normally distributed random variables is normally distributed. What is more, this argument also implies that under the same conditions on the Yi the random variable W:

is also lognormally distributed with easily determined constants.

Applications The lognormal probability law has a long history in civil engineering. It was adopted early in the statistical studies of hydro-logical data (Hazen [1914]) and of fatigue failures. It seems to have been adopted originally only because the observed data were found to be skewed, and better fit was obtained using this simple transformation of the familiar normal distribution. This skewed quality, † not uncommon in many kinds of data, plus the fact that the distribution avoids the nonzero probability (however small) of negative values associated with the normal model, have combined to make this distribution remain one commonly used in civil engineering practice. It is particularly frequently encountered in hydrology studies (for example, Chow [1954], Beard [1953], and Beard [1962]) to model daily stream flow, flood peak discharges, annual floods, and annual, monthly, and daily rainfall. Chow [1954] argues that the hydrological event is the result of the joint action of many hydrological and geographical factors which can be expressed in the mathematical form

![]()

where n is large. This form is typical of those which we have seen to lead to the lognormal distribution.

Lomnitz [1964] has used the multiplicative model to describe the distribution of earthquake magnitudes. He found the lognormal distribution to fit both the magnitudes and the interarrival times between earthquakes.

The distribution has also been found to describe the strength of elementary volumes of plastic materials (Johnso [1953]), the distribution of small particle sizes (Kottler [1950]), and the yield stress in steel reinforcing bars (Fruedenthal [1948]). A thorough treatment of the distribution is available in Aithchison and Brown [1957].

In civil engineering applications concern often lies with the largest or smallest of a number of random variables. Success or failure of a system may rest solely on its ability to function under the maximum demand or load to which it is subjected, not simply the typical values. Floods, winds, and floor loadings are all variables whose largest value in a sequence may be critical to a civil engineering system. The capacity, too, of a system may depend only on extremes, for example, on the strength of the weakest of many elementary components.

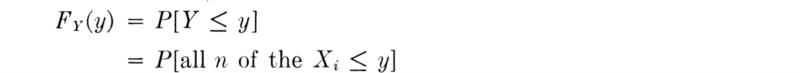

If the key variable Y is the maximum † of n random variables X1, X2, . . ., Xn, then the probability

If the Xi are independent,

In the special case where all the Xi are identically distributed with CDF FX(x):

If the Xi in the last case are continuous random variables ‡ with common density function fX(x),

Knowing from past experience the distribution of Xi the flood in any, say the ith., year, one might need the distribution of Y, the largest flood in 50 years, this being the design lifetime of a proposed water resource system. Equations (3.3.45) and (3.3.46) define the distribution of Y if the Xi can be assumed to be (1) mutually independent and (2) identically distributed. Related to these two assumptions are questions of long (multiyear) weather cycles, meteorological trends, changes (natural or man-made) in the drainage basin, etc. It may be possible to estimate the magnitude of these effects, if they exist, and, after alteration of the distributions of the Xi, make use of the more general form Eq. (3.3.44).

Fig. 3.3.4 Merging-traffic illustration. X values are times between vehicles.

Illustration: Merging lengths An engineer is studying how drivers merge into freely flowing traffic (Fig. 3.3.4). A certain class of drivers will merge only if the time Xi between passing cars is at least y sec. (The value of y will depend upon the class of drivers being studied.) If he assumes that the traffic is following a Poisson arrival process, then the Xi’s are independent, exponentially distributed random variables with parameter λ (Sec. 3.2). What is the probability that after n cars have passed, a driver will not have been able to merge?

Consider the maximum Y of the n times between cars, X1, X2, . . ., Xn. Then there will have been no merge if Y is less than y. For any particular value of y.

P[no merge] = ![]()

The density function of Y, the maximum of times, is simply

![]()

which is compared in Fig. 3.3.5 with the distribution of Xi for n = 2 and 10. Note that the maximum of say 10 values is likely to be (i.e., its mode is) in the right-hand tail of the distribution of Xi.

Fig. 3.3.5 comparison of PDF of interval times Xiwith that of maximum Y of n times.

Asymptotic distributions If the conditions of independence and common distribution hold among the Xi then in a number of cases of great practical importance the shape of the distribution of Y is relatively insensitive to the exact shape of the distribution of the Xi. In these cases, limiting forms (as n grows) of the distribution of Y can be found, which can be expected to describe the behavior of that random variable even when the exact shape of the distribution of the Xi is not known precisely. In this sense this situation is not unlike those already encountered in the previous two sections on the normal and lognormal distributions. When dealing with extreme values, however, no single limiting distribution exists. The limiting distribution depends, obviously, on whether largest or smallest values are of interest, and also on the general way in which the appropriate tail of the underlying distribution, that of the Xi behaves. Three specific cases, which have been studied in some detail (Gumbel [1958]) and applied widely, will be mentioned here.

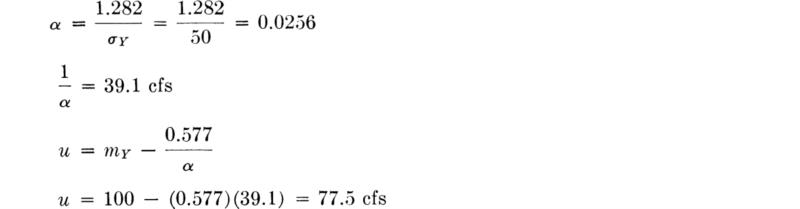

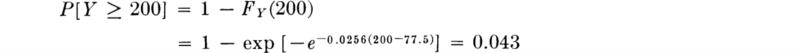

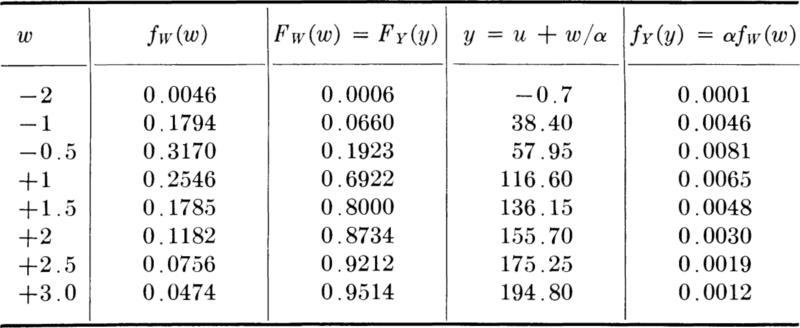

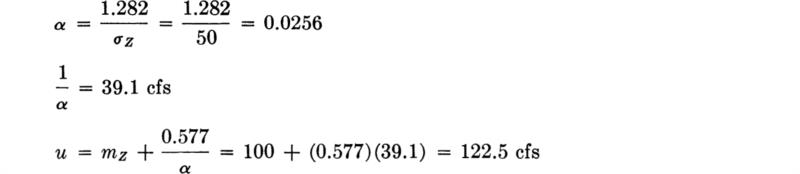

Type I: Distribution of largest value The so-called Type I limiting distribution arises under the following circumstances. We wish to know the limiting distribution of the largest of n values of Xi as n gets large. Suppose that it is known only that the distribution of the xi is unlimited in the positive direction and that the upper tail falls off “in an exponential manner”; that is, suppose that, in the upper tail, at least, the common CDF of the Xi may be written in the form