A probabilistic model remains an abstraction until it has been related to observations of the physical phenomenon. These data yield numerical estimates of the model’s parameters, and provide an opportunity to verify the model by comparing the observations against model predictions. The former process is called estimation; it is the subject of Sec. 4.1. The latter, comparative, process includes verification of the entire model (Sec. 4.4), but more broadly it includes the search for significance in a batch of statistical data. Conventional methods of significance testing are discussed in Sec. 4.2.

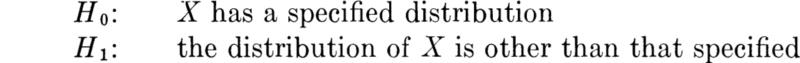

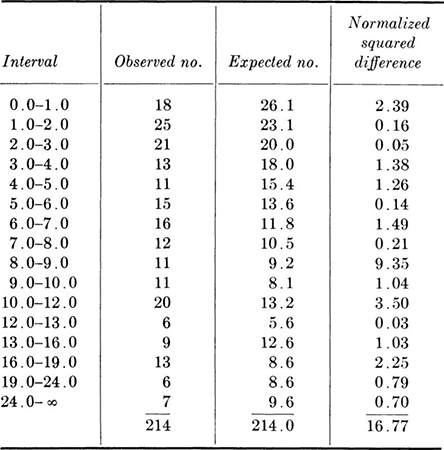

Consider the engineer who has developed a model of some physical phenomenon leading to a proposed functional form (e.g., lognormal) of the governing probability distribution. He must next estimate its parameters and then judge the validity of the model. Both these processes, estimation and verification, require data for the resolution. Repeated experiments will yield a sample of observed values of the random variable, say n in number. The resulting set of n numbers might be treated by the data-reduction techniques discussed in Chap. 1, yielding sample moments and a histogram. If we were to repeat the sequence of n tests, it is the nature of the probabilistic phenomenon that we would get (except coincidentally) a different set of values for the n numbers and therefore for the sample moments and histograms. Any reasonable rule for extracting parameter estimates from such sequences of numbers (e.g., a rule which says “estimate the parameter m by the average of the sample”) would consequently yield different estimates of the values of the model’s parameters. No single sequence of observations finite in number can be expected to give us exact model parameter values, because the data itself is a product of the “randomness” which characterizes the phenomenon. We must be prepared then to accept a data-derived parameter value as no more than an estimate of the true value, subject to an error of uncertain but, as we shall see, not unquantifiable magnitude.

We can also use data to evaluate the validity of an hypothesized model by comparing the model’s predictions with observed occurrences. The relative frequency of particular events, for example, can be measured in the experiment and compared with probabilities computed from the model. Consistent and large discrepancies between such numbers would cast doubt on the ability of the hypothesized model to describe the physical phenomenon, while close correspondence would tend to lend credence to the proposed model. A moment’s reflection, however, will reveal that, again owing to the probabilistic nature of the situation, one can seldom state that certain observed data are sufficient to reject or accept categorically a proposed model. An apparent discrepancy between model and reality may simply be the result of the occurrence in that particular data of a relatively rare but not impossible set of events. On the other hand, there may be a number of other models capable of yielding (with small or large likelihood) a set of observed data judged to be in accordance with our model’s predictions. Consequently such evaluations will have to be qualified and phrased in a way that reflects the possibilities of such errors in the conclusions.

In short, if parameter estimation and model verification are based on practical sample sizes, there will remain uncertainty in the parameter values and perhaps uncertainty in the validity of the model itself. We recognize, then, that the engineer faces at least three distinct types of uncertainty. The first is the natural, intrinsic, irreducible, or fundamental uncertainty of the probabilistic phenomenon itself. This was emphasized in previous chapters. The second type of uncertainty can be called statistical. It is associated with failure to estimate the model parameters with precision. It is not fundamental, in that it can be virtually eliminated at the expense of a very large sample size. The third kind of uncertainty, that in the form of the model itself (the model uncertainty), can, in principle, also be eliminated with sufficient data. For example, a Bernoulli trial model says the number of heads in k flips of an unbalanced coin is a binomial random variable B(k,p). The outcome of any experiment (of k flips) is fundamentally uncertain, even given the validity of the binomial distribution and given a known value of p. With only a limited number of experiments upon which to base an estimate, however, the true value of p will remain in some doubt, i.e., subject to statistical uncertainty. A limited amount of data will not provide enough comparisons between histograms and the binomial PMF to guarantee that the model holds (e.g., there may not be independence among the flips); model uncertainty remains. More data will tend to reduce the magnitude of statistical and model uncertainty. The fundamental uncertainty remains unchanged by the number of past experiments.

This chapter represents a small but appropriate sampling of applied statistics† a widely studied and broadly applied discipline dealing with the relationships among abstract probabilistic models and real data gathered from situations in engineering, industry, and the sciences. We shall see that convenient conventions have been established for reporting and discussing the very difficult questions surrounding the second and third kinds of uncertainty, those associated with estimation and model verification.

Having hypothesized the type or functional form of his model, the engineer’s first problem upon being confronted with numerical data is the estimation of the values of the parameters of these functions. In this operation he chooses the parameters’ estimates so that the model, in some sense, best fits the observed behavior. We seek estimators (certain functions of the random variables in the sample) which promise this fit, and then we study the nature of these estimators as random variables.

We choose to introduce this topic through illustrations. A structural engineer concerned with strength of reinforced-concrete structures needs information concerning the force at which the tensile reinforcement will yield. A yield-strength theory for ductile steel argues that a bar yields only when its many individual “fibers” have all yielded, and, since each such fiber contributes in an additive manner to the total force, it should be expected that X, the yield force of a bar, would be normally distributed†:

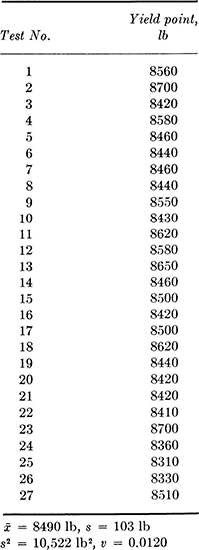

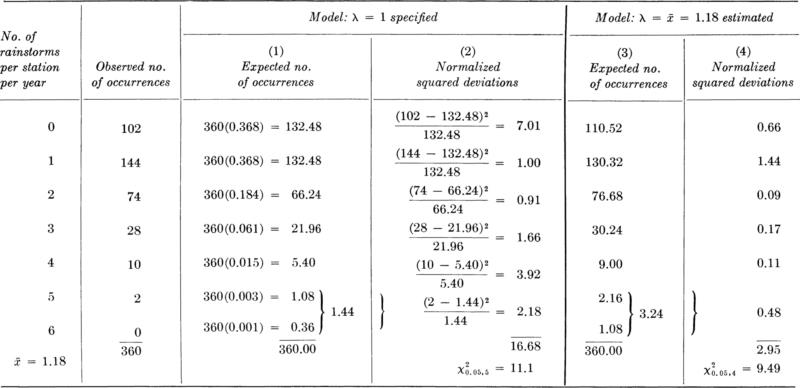

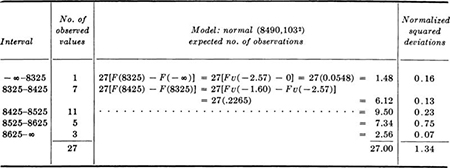

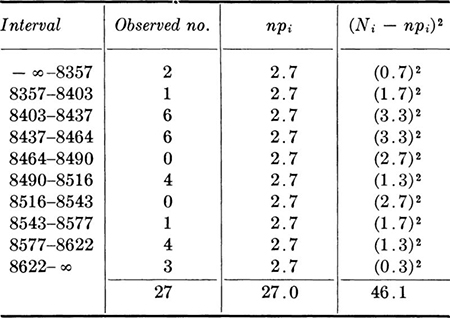

The engineer tests a number of specimens of no. 4 (nominally ![]() in diameter) mild steel reinforcing bars. The data from 27 specimens are shown in Table 4.1.1. The sample mean

in diameter) mild steel reinforcing bars. The data from 27 specimens are shown in Table 4.1.1. The sample mean ![]() and sample standard deviation s (Sec. 1.2), are 8490 and 103 lb, respectively.† Accepting the conjecture that the yield force of such a bar has a normal distribution, what values of the parameters m and σ should be used when describing the behavior of a no. 4 reinforcing bar in this lot?

and sample standard deviation s (Sec. 1.2), are 8490 and 103 lb, respectively.† Accepting the conjecture that the yield force of such a bar has a normal distribution, what values of the parameters m and σ should be used when describing the behavior of a no. 4 reinforcing bar in this lot?

Table 4.1.1 Tests of no. 4 rein forcing bars from a single lot

It would be contrary to the engineer’s common sense to consider estimates of m and σ which differ much, if any, from the sample statistics ![]() and s. The moments of the numerical data provide obvious and reasonable estimates of the corresponding moments of the proposed mathematical model.

and s. The moments of the numerical data provide obvious and reasonable estimates of the corresponding moments of the proposed mathematical model.

This intuitively satisfactory rule for calculating model parameter estimates from data is given the name the method of moments. It says that the estimator ![]() of mX should be the sample mean, and the estimator

of mX should be the sample mean, and the estimator ![]() of the variance σ2 is the sample variance. Similar rules hold for higher moments of the variable and the data, although these two estimators are sufficient for two-parameter distributions.

of the variance σ2 is the sample variance. Similar rules hold for higher moments of the variable and the data, although these two estimators are sufficient for two-parameter distributions.

In the example, the estimators (rules) lead to the following estimates (values) ‡ of the mean and variance

Given these parameter values the engineer can define completely his model [Eq. (4.1.1)] of the bar-force random variable and he is prepared to make such statements as “The probability is 5 percent that the yield force of any particular bar will be less than m – 1.65σ = 8490 – 1.65(103) = 8490 – 173 = 8317 lb.”

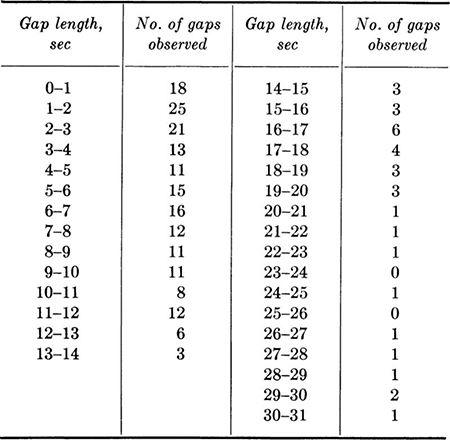

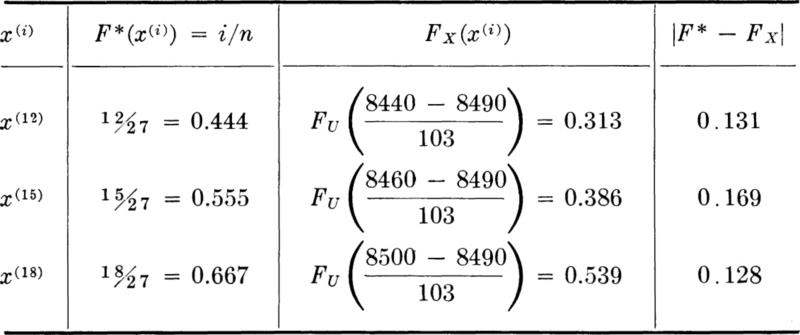

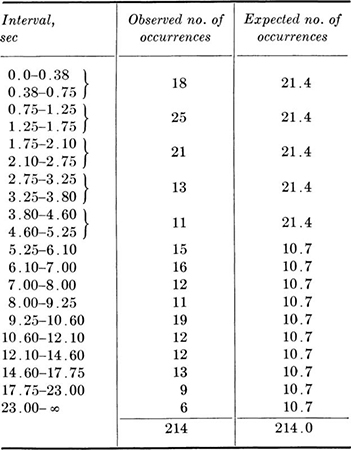

Illustration: Exponential distribution It is not always true, of course, that the parameters of a distribution are equivalent to moments. The parameters n and p of the binomial distribution (Sec. 3.1.3) are examples. The moments are, however, functions of the parameters, and these equations can normally be solved to yield the parameters in terms of the moments.§ Consider the following simple example. A traffic engineer who had adopted the common assumption of Poisson traffic (Sec. 3.2) measured the gap or time-between-successive-cars data shown in Table 4.1.2. The sample mean of this data is 7.66 sec. The mathematical model states that this distribution is exponential with parameter λ:

Table 4.1.2 Observed gaps in traffic: Arroyo Seco Freeway (Los Angeles) *

Interval midpoint is used as value of ti; Σxi = 1640; total no. of observations = 214; ![]() sec; Σti2 = 21,396 sec2.

sec; Σti2 = 21,396 sec2.

* Source: Gerlough [1955].

The mean of this distribution is (Sec. 3.2.2)

Hence the parameter, as a function of the moments, is simply

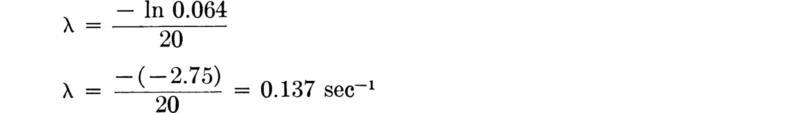

By the method of moments [Eq.(4.1.2)] the estimator of mT is the sample mean. The corresponding estimator of the parameter is the reciprocal of the sample mean. For this data the estimate of λ is

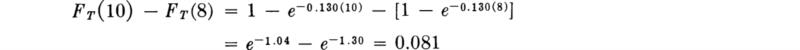

Adopting this parameter value, the engineer now can state, for example, that the probability of a gap length of 8 to 10 sec is

The sample moments have been proposed as reasonable estimators of the moments of the mathematical model. The sample moments, it should be remembered, were proposed in Chap. 1 only as logical numbers to summarize a batch of numbers observed in some physical experiment. They were given definitions [Eqs. (4.1.2) and (4.1.3)] in terms of those observed numbers, x1 x2, . . ., xn. The moments m and σ2, on the other hand, are constants. They are parameters of some distribution defining the behavior of a random variable X, which is a purely mathematical model. Once we have adopted the assumption, however, that this mathematical model is controlling the physical experiment, we can interpret the xi’s as observed values of a random variable X. In this new light, the sample average ![]() is the numerical average of n observations of the random variable X.

is the numerical average of n observations of the random variable X.

Sample statistics as random variables With the ultimate goal of gaining some knowledge of how good an estimator of the mean m the sample average ![]() is, let us back up one step to a point before the physical experiments have been carried out, i.e., to a point before the observed values x1, x2, . . ., xn are known, and then let us see what can be said about this sample mean. Define the random variables X1, X2, . . ., Xn as the first, second, . . ., nth outcomes of the sequence of n experiments involved in the process† of sampling. Each Xi has the same distribution as X, sometimes called in this context the population to be sampled. In particular, each variable has mean and variance equal to the population mean mX and population variation σX2. Then the sample mean which we intend to compute can be written

is, let us back up one step to a point before the physical experiments have been carried out, i.e., to a point before the observed values x1, x2, . . ., xn are known, and then let us see what can be said about this sample mean. Define the random variables X1, X2, . . ., Xn as the first, second, . . ., nth outcomes of the sequence of n experiments involved in the process† of sampling. Each Xi has the same distribution as X, sometimes called in this context the population to be sampled. In particular, each variable has mean and variance equal to the population mean mX and population variation σX2. Then the sample mean which we intend to compute can be written

and the sample variance is

Capital letters are now used for the sample mean and the sample variance to emphasize the fact that, as functions of the random variables X1, X2, . . ., Xn, these sample averages are themselves random variables. After the experiments, the outcomes X1 = x1, X2 = x2, . . ., Xn = xn will be recorded, and the observed values ![]() and s2 of the random variables

and s2 of the random variables ![]() and S2 will be computed. Functions g(X1X2, . . ., Xn) of a sample X1 X2, . . ., Xn are called sample statistics. The sample mean and sample variance are just two of many such sample statistics of potential interest. Others include X(n) = max [X1X2, . . ., Xn], the largest observed value, and X(i), the ith smallest value in the sample. (The latter is called an “order statistic.” See Prob. 3.46.) Our present interest in sample statistics is due to their role as estimators of unknown parameters.

and S2 will be computed. Functions g(X1X2, . . ., Xn) of a sample X1 X2, . . ., Xn are called sample statistics. The sample mean and sample variance are just two of many such sample statistics of potential interest. Others include X(n) = max [X1X2, . . ., Xn], the largest observed value, and X(i), the ith smallest value in the sample. (The latter is called an “order statistic.” See Prob. 3.46.) Our present interest in sample statistics is due to their role as estimators of unknown parameters.

Moments of the sample mean ![]() As a random variable, the sample mean,

As a random variable, the sample mean, ![]() is open to study of such questions as, “What is its average value?” “What is its variance?” and “What is its distribution?” In particular, since we use

is open to study of such questions as, “What is its average value?” “What is its variance?” and “What is its distribution?” In particular, since we use ![]() as its estimator, what is the relationship between answers to these questions and the mean mX of the random variable X? In this section we concentrate only on first- and second-order moments of this estimator. The moments of

as its estimator, what is the relationship between answers to these questions and the mean mX of the random variable X? In this section we concentrate only on first- and second-order moments of this estimator. The moments of ![]() are independent of the form of the distribution of X and they are sufficient to answer many questions about estimators. To go further we will have to specify the PDF of X. Do not forget that we study

are independent of the form of the distribution of X and they are sufficient to answer many questions about estimators. To go further we will have to specify the PDF of X. Do not forget that we study ![]() only because we ultimately intend to use observations of

only because we ultimately intend to use observations of ![]() to estimate an unknown mX.

to estimate an unknown mX.

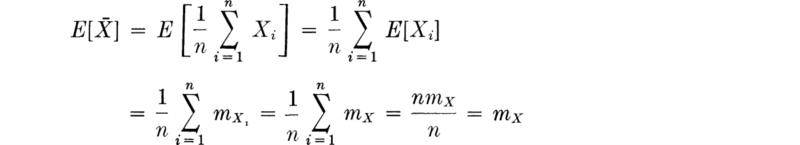

Consider first, then, the mean of the sample mean [Eq. (4.1.8)]:

In short,

In words, the expected value of the sample mean is equal to the mean of the underlying random variable. Any other answer would undoubtedly leave us somewhat uneasy as to the merit of ![]() as an estimator of mX.

as an estimator of mX.

As a random variable it cannot be expected, however, that ![]() will often, if ever, take on values exactly equal to the mean and hence give a perfect estimate of mX. The estimation error,

will often, if ever, take on values exactly equal to the mean and hence give a perfect estimate of mX. The estimation error, ![]() is a random variable with a mean of zero. A measure of the average magnitude of the random error associated with an estimator can be gained through the expected value of its square (called the mean square error). This second- order moment reduces in this case to the variance of

is a random variable with a mean of zero. A measure of the average magnitude of the random error associated with an estimator can be gained through the expected value of its square (called the mean square error). This second- order moment reduces in this case to the variance of ![]() :

:

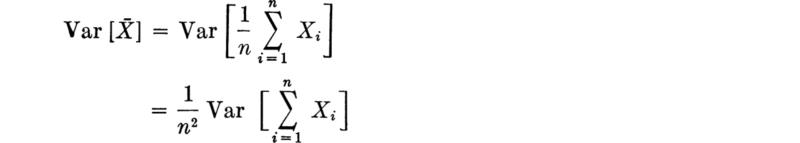

The next question is then how Var ![]() depends on Var

depends on Var![]() :

:

Using Eq. (2.4.81b) for the variance of a sum of random variables,

We shall restrict our attention to those cases where the sampling of X is carried on in such a manner that it may be assumed that the individual observations X1 X2, . . ., Xn are mutually independent random variables. Such sampling is called random sampling. The main reason for this restriction is simply that, even if the stochastic dependence involved lent itself to description, nonindependent types of sampling are usually more difficult to analyze. †

It should be pointed out that in practice it is virtually impossible to ascertain that true random sampling is being achieved, but, if the engineer wishes to exploit the theory of statistics to give him a quantifiable measure of the errors in estimation, it remains necessary that he take precautions to insure that there is little systematic variation or “persistence” from experiment to experiment. In sampling a population of reinforcing bars, for example, one would avoid taking more than one specimen from any one bar because correlation among specimens within a bar is to be expected.

An immediate consequence of assumption of random sampling is the great simplification of Eq. (4.1.12). For independent samples, the correlations are zero and

The simplicity of these formulas belies their great practical significance. We now have a measure of the expected error associated with the rule that states that mX should be estimated by![]() . No matter what the distributions of X and

. No matter what the distributions of X and ![]() , through Chebyshev’s inequality [Eq. (2.4.11)] we are now in a position to make probability statements regarding the likelihood that the estimator

, through Chebyshev’s inequality [Eq. (2.4.11)] we are now in a position to make probability statements regarding the likelihood that the estimator ![]() will be within certain limits about mX, say

will be within certain limits about mX, say ![]() Equation (4.1.14) also shows how, for fixed probability (or fixed k), the width of these limits decreases as the sample size increases. Notice, for example, that to halve the interval width from that associated a single observation (n = 1) of X, one must increase the sample size to n = 4. To halve it again, n must equal 16, and so forth. The distributions of

Equation (4.1.14) also shows how, for fixed probability (or fixed k), the width of these limits decreases as the sample size increases. Notice, for example, that to halve the interval width from that associated a single observation (n = 1) of X, one must increase the sample size to n = 4. To halve it again, n must equal 16, and so forth. The distributions of ![]() for these cases are sketched in Fig. 4.1.1. It becomes increasingly expensive to continue to reduce the magnitude of

for these cases are sketched in Fig. 4.1.1. It becomes increasingly expensive to continue to reduce the magnitude of![]() , the measure of error in the estimator

, the measure of error in the estimator![]() . It should be remembered, however, that

. It should be remembered, however, that ![]() is a measure of expected error. In a state of ignorance regarding the true mean we can only say that the sample averages based on larger n are more likely to fall closer to mX than those derived from less information.

is a measure of expected error. In a state of ignorance regarding the true mean we can only say that the sample averages based on larger n are more likely to fall closer to mX than those derived from less information.

Fig. 4.1.1 Distribution of ![]() for n = 1, 4, 16.

for n = 1, 4, 16.

Moments of the sample variance; bias A brief, similar study of the first- and second-order moment behavior of the (random variable) sample variance S2 will lead us to some additional conclusions. We are interested in S2 because we have proposed using S2 as an estimator of the parameter σ2. By definition,

Expanding the square and taking expectations leads directly to the conclusion that (for random samples)

The striking and somewhat disturbing conclusion is that the expected value of the second central moment of the sample E[S2] is not equal to the second central moment of the probability distribution σ2. On the average over many different samples, S2 will not equal the parameter σX2 for which it has been proposed to serve as an estimator, but will equal something smaller.†

Estimators, like S2, whose expected values are not equal to the parameter they estimate are said to be biased estimators. On the assumption that bias is an undesirable property, one can easily determine an unbiased estimator of the variance.

The estimator S2*, which we define as

or

is a sample statistic whose expected value is n/(n ‒ 1) times the expected value of S2 or

Hence S2* is an unbiased estimator of σX2. For this reason the formulas

are found in many texts discussing data reduction in place of Eqs. (1.2.2a) and (1.2.2b) for s2. However, without the formal mechanism of probability and statistics and without the number of assumptions (e.g., independence and desirability of an unbiased estimator) which led us to Eq. (4.1.19), there is no theoretical reason to favor s2* over the more natural s2 as a numerical summary of a batch of numbers.

In the bar-yield-force illustration, the estimator S2 led to an estimate of (103)2 for σ2, and the unbiased estimator S2* yields an estimate of (105)2.

Even if S2* is unbiased, however, it is not necessarily true that S2* is a “better” estimator of σ2 than S2. In general, the error between any (biased or unbiased) estimator ![]() and the parameter it estimates θ is

and the parameter it estimates θ is![]() . (The former value is random; the second is a constant.) The mean square error

. (The former value is random; the second is a constant.) The mean square error ![]() is found easily upon expanding the square to equal

is found easily upon expanding the square to equal

which depends on both the variance and the bias, ![]() , of the estimator. Therefore, the mean square errors of the two estimators S2 and S2* are

, of the estimator. Therefore, the mean square errors of the two estimators S2 and S2* are

and

It is not immediately clear which estimator has the smaller mean square error. For a special case, that where X is normally distributed, some simple algebra will show that †

Substituting into the previous two equations for the mean square errors, we can calculate the ratio q(n) of the expected squared error of S2 to that of S2*:

The ratio is less than 1 for all values of n. For example, ![]() . In other words, although S2 has an expected value different from σ2, the expected value of its squared error is smaller than that of the unbiased estimator S2*. The latter estimator leads more frequently to larger errors. This condition is sketched in Fig. 4.1.2 for small n where it is most extreme. In customary statistical terminology, although unbiased, S2* is not a minimum-mean-square-error estimator† of σ2.

. In other words, although S2 has an expected value different from σ2, the expected value of its squared error is smaller than that of the unbiased estimator S2*. The latter estimator leads more frequently to larger errors. This condition is sketched in Fig. 4.1.2 for small n where it is most extreme. In customary statistical terminology, although unbiased, S2* is not a minimum-mean-square-error estimator† of σ2.

Choice of estimator One can conclude generally that the choice of the sample statistic (the function of the observed values) which he should use to estimate a parameter is not necessarily obvious. Any reasonable statistic can be considered as a contending estimator. The sample median, sample mode, or even the average of the maximum and minimum value in the sample are not unreasonable estimators of the mean of many distributions. The method of moments is one general rule for selecting estimators. The method of maximum likelihood (Sec. 4.1.4) is yet another, very common general rule. The question of choosing the

Fig. 4.1.2 Comparison of distributions of S2 and S2*, an extreme case.

best estimator is ambiguous until “best” is defined. Such intuitively desirable properties† of an estimator as its being unbiased and as its being the estimator which promises the minimum mean square error are unfortunately not necessarily combined in the same sample statistic, as was seen in comparing S2 and S2* as contending estimators of σ2. The search for and study of “best” or just “good” estimators is one of the tasks of mathematical statisticians. We refrain from venturing further into the questions of defining other desirable properties, or of choosing among contending estimators. In Sec. 4.1.4 we present an alternative general method, the method of maximum likelihood. It is an intuitively appealing method of choosing estimators which are, on the whole, equal to or better (in the mean-square-error sense) than those estimators suggested by the method of moments.

We have seen that a sample statistics mean and variance, which we were able to find without reference to the distribution of X, are sufficient to describe the salient characteristics of the statistic as an estimator of a model parameter. They define its central tendency relative to the parameter and its dispersion (mean square error) about the true parameter; in particular they indicate the dependence of this latter factor on sample size. More precise, quantitative statements of the uncertainty in parameter values are possible if the entire distribution of the sample statistic is utilized. (This distribution depends upon the distribution of X.) We shall find that one vehicle for such statements is the confidence interval.

The distribution of a sample statistic, which is a function of the random variables X1, X2, . . ., Xn in the sample, depends upon their (common) distribution. The methods of derived distributions (Sec. 2.3) are in general necessary to determine the distribution of the statistic. ‡ We shall focus our attention here on the distribution of the sample mean, which is the distribution of a constant times a sum of random variables.

Distribution of the sample mean of Bernoulli variables For example, suppose the sampled random variable X has a simple Bernoulli distribution with

and

Then the distribution of the average of a sample of size n,

where each Xi has the distribution above, can be determined by inspection. The sum

representing the number of successes (observations of “1’s”) in n Bernoulli trials, is binomial, B(n,p) [Sec. 3.1.2, Eq. (3.1.4a)]. Hence the distribution of ![]() = Y/n is [Sec. 2.3.1]

= Y/n is [Sec. 2.3.1]

which is a binomial-like distribution defined on noninteger values.

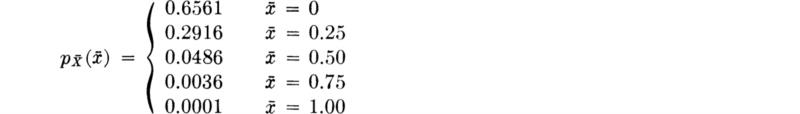

Illustration: Quality indicator In the process of setting up proposed statistical methods of quality control in highway construction, engineers have investigated data from old tests of a typical material quality indicator, such as the density of the subbase. The data were selected only from those sections of roads which had subsequently proved with use to show good performance. The purpose of this effort is to determine the reliability of the material tests as indicators of final road performances. It is desired to estimate what proportion p of these tests indicated poor material and consequently incorrectly suggested that the road would be of unsatisfactory quality. If X is a (0,1) Bernoulli random variable, indicating by a value “1” that the test would have rejected the (satisfactory) road, then in a sample of four sections of a road, the estimator ![]() of mX and hence of p has the distribution

of mX and hence of p has the distribution

Clearly the estimate of p is restricted to one of these five values, since only an integer number, 0, 1, 2, 3, or 4, of tests can be observed to be “bad.” The mean and variance of the estimator are

![]()

For example, if the true value of p were 10 percent, then![]() , the estimator of p, would have this distribution:

, the estimator of p, would have this distribution:

Thus, for example, if the engineers look at only four observations of a test of this kind, i.e., one which is actually incorrect only 10 percent of the time, there is a 5 percent chance that they will estimate that the test “rejects” potentially good roads half the time. If many independent samples of size four were taken (say, four sections from many different roads) and p estimated from each sample, the average (mean) of the estimate would be

![]()

and the square root of the mean square error (rms) of these many estimates would be

Normally distributed sample mean ![]() From Chap. 3 we know that the distribution of the sample means in the two illustrations in Sec. 4.1.1 are, respectively, normal,

From Chap. 3 we know that the distribution of the sample means in the two illustrations in Sec. 4.1.1 are, respectively, normal, ![]() , and gamma, † G(n,nλ), for the normally distributed bar-yield-force variable and the exponentially distributed traffic-interval variable. Assuming that the true distribution of the bar-force data is normal, with mean 8500 lb and standard deviation 100 lb, this distribution and those of the sample means for two sample sizes, n = 9 and n = 27, are shown in Fig. 4.1.3. The distribution of

, and gamma, † G(n,nλ), for the normally distributed bar-yield-force variable and the exponentially distributed traffic-interval variable. Assuming that the true distribution of the bar-force data is normal, with mean 8500 lb and standard deviation 100 lb, this distribution and those of the sample means for two sample sizes, n = 9 and n = 27, are shown in Fig. 4.1.3. The distribution of ![]() , the mean of the exponentially distributed traffic intervals is skewed right for small sample sizes. As n increases, this gamma distribution will be almost perfectly symmetrical. In a few such cases the distribution of the sample mean is one of the common distributions discussed in Chap. 3. But in general when dealing with the distribution of the sample mean we rely upon another observation.

, the mean of the exponentially distributed traffic intervals is skewed right for small sample sizes. As n increases, this gamma distribution will be almost perfectly symmetrical. In a few such cases the distribution of the sample mean is one of the common distributions discussed in Chap. 3. But in general when dealing with the distribution of the sample mean we rely upon another observation.

Fig. 4.1.3 Bar-yield-force illustration—distributions of X and of two sample means ![]() ; n = 9, n = 27.

; n = 9, n = 27.

If n is reasonably large, we can invoke the central limit theorem (Sec. 3.3.1) to state that, no matter what the distribution of X, the sample mean will be approximately normal. Its mean is mx and its standard deviation is![]() . As the most important single case, we choose the normally distributed sample mean to illustrate the use to which the distribution of the sample statistic is put in estimation situations, namely, in the determination of interval estimates.

. As the most important single case, we choose the normally distributed sample mean to illustrate the use to which the distribution of the sample statistic is put in estimation situations, namely, in the determination of interval estimates.

Confidence intervals Consider again the problem of the yield force in reinforcing bars. Let us assume that through a history of tests on similar material and other bar sizes the engineer knows with relative certainty that the standard deviation of the yield force is 100lb. † His problem is to estimate the mean, and he focuses attention quite logically upon the sample mean![]() . This statistic is, prior to making the sample, a random variable normally distributed ‡ with unknown mean m and known standard deviation 100

. This statistic is, prior to making the sample, a random variable normally distributed ‡ with unknown mean m and known standard deviation 100 ![]() . Thus the variable

. Thus the variable ![]() /

/![]() is N(0,l). Anticipating an experiment involving 27 specimens, the engineer can say, for example,

is N(0,l). Anticipating an experiment involving 27 specimens, the engineer can say, for example,

or

The statement, before experimentation, is that the sample average will with probability 0.95 lie within 37.7 lb of the true mean.

But what can be said after the experiment when the results in Table 4.1.1 are observed and ![]() is found to take on the value

is found to take on the value ![]() = 8490 lb? It is tempting to substitute

= 8490 lb? It is tempting to substitute ![]() for

for ![]() in the formula above, yielding

in the formula above, yielding

As inviting as it is to use the statement “the probability that the true mean lies in the interval 8452.3 and 8527.7 is 0.95” as a concise way of incorporating our uncertainty about the true value of the mean, the statement is, strictly speaking, inadmissible. The true mean of X has been considered, to this point, † to be a constant, not a random variable. As such, mx has a specific (although unknown) value, and it is not free to vary from experiment to experiment. It either lies in the stated interval or it does not.

Although the probability statement is not strictly correct in the present context, intervals formed in the manner above do convey a measure of the uncertainty associated with incomplete knowledge of parameter values. Such intervals are termed confidence intervals or interval estimates. The number which is the measure of this uncertainty is called the confidence coefficient. In this case the “95 percent confidence interval” that was observed in this particular experiment is 8452.3 to 8527.7.

The confidence interval provides an interval estimate on a parameter as opposed to the point estimates we considered earlier in the chapter. When the distribution whose parameter is being estimated is to be embedded in a still larger probabilistic model, the engineer can seldom afford the luxury of carrying along anything more than a point estimate, i.e., a single number, to represent the parameter. But the statement of an interval estimate does serve to emphasize that the value of a parameter is not known precisely and to quantify the magnitude of that uncertainty.

Confidence intervals on the mean Consider a more general statement concerning the confidence interval of the mean when σ is known. The statistic ![]() is assumed to be approximately (if not exactly) distributed, N(0,l). Hence

is assumed to be approximately (if not exactly) distributed, N(0,l). Hence

U being the standardized normal random variable. The limits a and b are usually chosen symmetrically, † and we write

in which k α/2 is denned to be that value such that 1 – FU(k α/2) = α/2. It is found in tables of the standardized normal distribution. Then

or

which is of the form

In this form it is clear that the two limits of the interval, g1![]() and g2

and g2![]() ,are sample statistics and random variables. ‡ Equation (4.1.40) states that the probability that these two random variables will bracket the true mean is 1 – α. The probability that either the lesser random variable g1

,are sample statistics and random variables. ‡ Equation (4.1.40) states that the probability that these two random variables will bracket the true mean is 1 – α. The probability that either the lesser random variable g1![]() will exceed m or the greater, g2

will exceed m or the greater, g2![]() , will be less than m is α. Figure 4.1.4 demonstrates these points.

, will be less than m is α. Figure 4.1.4 demonstrates these points.

The confidence interval on m with confidence coefficient (1 – α) 100 percent is formed after the experiment by substituting the observed value ![]() for

for ![]() . Thus the (1 – α) 100 percent confidence interval on m is

. Thus the (1 – α) 100 percent confidence interval on m is ![]() or

or ![]()

Fig. 4.1.4 (a) Distributions of confidence-limit statistics, g1![]() and g2

and g2![]() ; (b) three possible observed values of confidence limits g1

; (b) three possible observed values of confidence limits g1![]() ) to g2

) to g2![]() (two contain m, the third does not).

(two contain m, the third does not).

Factors influencing confidence intervals The engineer would prefer to report very tight intervals, that is, well-defined estimates of m. But notice that the width of the interval between these limits depends on three factors: σ, α, and n. The larger the standard deviation of X, the wider these intervals will be, and the intervals will be narrower if 1 – α is permitted to be smaller. The value of 1 – α can be interpreted as the long-run proportion of many (1 – α) 100 percent confidence intervals which will include the true mean. In the long run α 100 percent will fail to bracket the true mean. The choice of the value of α is the engineer’s, and depends in principle upon the importance associated with making the mistake of stating that an observed interval contains m when in fact it does not. In practice, the 90, 95, or 99 percent confidence intervals are reported. Decreasing α requires increasing the interval width, i.e., making a less precise statement about the value of m.

It is most important to observe that these confidence limits depend upon the sample size n. For a fixed confidence level, 1 – α, the interval can be made as short as the engineer pleases at the expense of additional sampling. If, on the other hand, the sample size has been fixed (and α is prescribed), the engineer must be content with the interval width associated with this amount of information. Precise statements with high confidence come only at the price of a sufficiently large sample.

Confidence intervals: typical procedure Confidence limits on a parameter are obtained, in general, in the following way:

1. Writing a probability statement about an appropriate sample statistic

2. Rearranging the statement to isolate the parameter

3. Substituting in the inequality the observed value of the sample statistic

Illustrations will make this procedure clear and bring out some additional points.

Illustration: The exponential case: one-sided intervals and confidence intervals on functions Recall the exponential illustration in Sec. 4.1.1. There the sample size was large, so we may assume that the estimator ![]() of the mean 1/λ is approximately normally distributed with mean

of the mean 1/λ is approximately normally distributed with mean ![]() and variance

and variance ![]() . The large sample size implies that the coefficient of variation of

. The large sample size implies that the coefficient of variation of ![]() , is small and that consequently the method-of-moments estimator of λ,

, is small and that consequently the method-of-moments estimator of λ, ![]() , is also approximately normally distributed † with approximate mean

, is also approximately normally distributed † with approximate mean ![]() and variance

and variance![]() . The implication is that the sample statistic

. The implication is that the sample statistic ![]() is approximately N(0,l).

is approximately N(0,l).

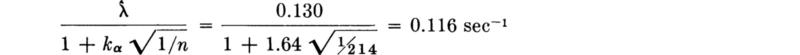

In the traffic-interval study suppose that interest lies in the possibility of the average arrival rate λ being so great that the gaps between cars are not long enough on the average to permit proper merging of traffic from a side road. The engineer would like a “conservative” lower bound estimate on the value of λ.

A one-sided probability statement on the sample statistic is called for. It should be

in which kα is that value of an N(0,l) variable which will be exceeded with probability α. Isolating the parameter of interest within the statement,

The random variable in this statement is, recall, ![]() . After the sample is taken, a one-sided (1 – α) 100 percent confidence interval on the parameter λ is found by substituting for the observed value of the random variable

. After the sample is taken, a one-sided (1 – α) 100 percent confidence interval on the parameter λ is found by substituting for the observed value of the random variable![]() . In this example, suppose a 95 percent level of confidence is desired; then α = 5 percent and k0.05 = 1.64 (Table A.l). A sample (Table 4.1.2) of size n = 214 yielded an observed value of

. In this example, suppose a 95 percent level of confidence is desired; then α = 5 percent and k0.05 = 1.64 (Table A.l). A sample (Table 4.1.2) of size n = 214 yielded an observed value of ![]() = 0.130 sec-1. Thus “with 95 percent confidence” λ is greater than

= 0.130 sec-1. Thus “with 95 percent confidence” λ is greater than

in words, 0.166 is a lower one-sided 95 percent confidence limit on λ.

Exact Distribution It is worth outlining how such a confidence interval would be obtained if the sample size were not sufficiently large to justify the assumption of normality. In principle the exact distribution of ![]() is needed, when, as pointed out above,

is needed, when, as pointed out above, ![]() has a gamma distribution. In this case, however, we can use the distribution of

has a gamma distribution. In this case, however, we can use the distribution of ![]() directly, since

directly, since

For given α and n, tables (Sec. 3.2.3) of the gamma distribution will give 1 / α as a function of the parameter λ. A rearrangement will isolate λ in the probability statement permitting the substitution of the observed value of ![]() and yielding an exact confidence interval on λ.

and yielding an exact confidence interval on λ.

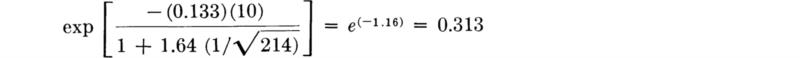

Confidence Interval on a Function of a Parameter Engineering interest in estimated quantities usually goes beyond the values of the parameters themselves. If the model is incorporated into the framework of a larger problem, confidence intervals on functions of the parameters may be required. Consider the following elementary example. In the traffic situation above, the engineer might be interested in an estimate of the proportion of gaps which are larger than t0, the value required to permit another car to enter from a side road.† This proportion is

which is a function of the unknown parameter λ.

A point estimate of ![]() is simplyng

is simplyng![]() . If an upper, one-sided confidence interval on

. If an upper, one-sided confidence interval on ![]() is desired, the starting point is again an appropriate, rearranged probability statement on the sample statistic [Eq. (4.1.42)]:

is desired, the starting point is again an appropriate, rearranged probability statement on the sample statistic [Eq. (4.1.42)]:

Manipulating the inequality to isolate, not λ, but the function of λ of interest, namely, ![]() we obtain

we obtain

In words, a (1 – α) 100 percent upper confidence interval on the proportion of gaps greater than t0 is exp ![]() with the observed value of

with the observed value of ![]() substituted.

substituted.

In the numerical example above, with t0 = 10 sec, the 95 percent upper confidence interval on the proportion is

In words, the engineer would report that “with 95 percent confidence the proportion of gaps greater than 10 sec is less than 0.313.”

Notice that in this case the result coincides with the value obtained by substituting the lower bound of the 95 percent confidence interval on λ into the function of λ, ![]() . When a monotonic function is involved, such a simple substitution is always possible provided that thought is given to the type of bound on the parameter, upper or lower, which corresponds to the desired bound on the function. More difficult operations, analogous to those used in Sec. 2.3, are needed when confidence intervals on nonmontonic functions are encountered.

. When a monotonic function is involved, such a simple substitution is always possible provided that thought is given to the type of bound on the parameter, upper or lower, which corresponds to the desired bound on the function. More difficult operations, analogous to those used in Sec. 2.3, are needed when confidence intervals on nonmontonic functions are encountered.

Illustration: Variance of the normal case Confidence intervals on the variance of a random variable can be formed once the distribution of S2 is known. We consider here a special case—that in which the underlying variable X is normally distributed.

The determination of the distribution of S2 follows from available results. Consider the statistic S2 in Eq. (4.1.15). Adding and subtracting m and expanding the square gives

Multiplying by n/σ2, we find

The first term on the right-hand side is the sum of the squares of n independent normally distributed random variables each with zero mean and standard deviation 1. According to the results in Sec. 3.4.2, this sum has the χ2 distribution with n degrees of freedom. But the second term is also the square of a N (0,l) random variable since ![]() has been shown to be

has been shown to be![]() . Hence the second term has a χ2 distribution with 1 degree of freedom. Rewriting Eq. (4.1.47), we obtain

. Hence the second term has a χ2 distribution with 1 degree of freedom. Rewriting Eq. (4.1.47), we obtain

Equation (4.1.48) states that a ![]() random variable is the sum of nS2/σ2 and a

random variable is the sum of nS2/σ2 and a ![]() random variable. Knowing, as shown in Sec. 3.4.2, that the sum of two independent χ2 random variables, one with r degrees of freedom and the other with p, is χ2-distributed with r + p degrees of freedom, it is not unexpected (what is shown here is not a proof†) that nS2/ σ2 is χ2-distributed with n – 1 degrees of freedom. The same is true of (n – 1)S2* /σ2.

random variable. Knowing, as shown in Sec. 3.4.2, that the sum of two independent χ2 random variables, one with r degrees of freedom and the other with p, is χ2-distributed with r + p degrees of freedom, it is not unexpected (what is shown here is not a proof†) that nS2/ σ2 is χ2-distributed with n – 1 degrees of freedom. The same is true of (n – 1)S2* /σ2.

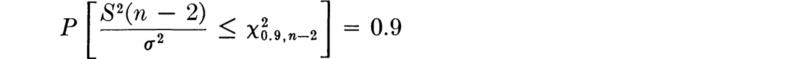

With this knowledge confidence intervals follow easily. For example, begin with the probability statement

in which ![]() that (tabulated) value ‡ such that, if Y is CH(n – 1), then

that (tabulated) value ‡ such that, if Y is CH(n – 1), then ![]() We are led to a (1 – α) 100 percent one-sided confidence interval on σ2 by rearrangement:

We are led to a (1 – α) 100 percent one-sided confidence interval on σ2 by rearrangement:

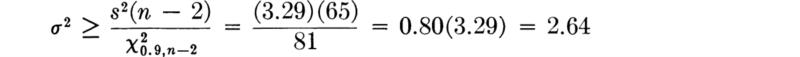

For example, in the bar-yield-force illustration, the 99 percent upper confidence limit on σ2 is (Table A.2)

![]()

Owing to the monotonic relationship between the variance and standard deviation, the square roots of variance confidence interval bounds are the bounds of the corresponding confidence limits on the standard deviation. In this example a 99 percent one-sided upper confidence interval on σ is ![]() or

or ![]() = 158 lb.

= 158 lb.

Illustration: Mean of the normal case, variance unknown; experimental design Interval estimates on the mean are usually based on the assumption that the sample statistic ![]() is normally distributed with mean m and variance σ2/n. This case has been considered in detail above under the assumption that σ2 was known. If, as is more common, the variance is not known, other approaches are necessary. In some cases the unknown variance may be simply related to the unknown mean. This is the case if the distribution of X has only a single unknown parameter. (Examples are: the exponential distribution, the binomial distribution, or the normal distribution with known coefficient of variation.) In this case relatively simple intervals are possible, as before. See, for example, the first paragraph of the preceding illustration of the exponential parameter. In other cases, if the sample size is sufficiently large, it is reasonable (see Brunk [I960]) to set up the confidence interval assuming that σ2 is known and then simply replace σ2 by its estimate S2 after the test results are obtained. In other words, the statistic

is normally distributed with mean m and variance σ2/n. This case has been considered in detail above under the assumption that σ2 was known. If, as is more common, the variance is not known, other approaches are necessary. In some cases the unknown variance may be simply related to the unknown mean. This is the case if the distribution of X has only a single unknown parameter. (Examples are: the exponential distribution, the binomial distribution, or the normal distribution with known coefficient of variation.) In this case relatively simple intervals are possible, as before. See, for example, the first paragraph of the preceding illustration of the exponential parameter. In other cases, if the sample size is sufficiently large, it is reasonable (see Brunk [I960]) to set up the confidence interval assuming that σ2 is known and then simply replace σ2 by its estimate S2 after the test results are obtained. In other words, the statistic ![]() used in Eq. (4.1.36) is replaced by the statistic

used in Eq. (4.1.36) is replaced by the statistic![]() , but the uncertainty associated with not knowing σ2 is ignored.

, but the uncertainty associated with not knowing σ2 is ignored.

In smaller samples this contribution cannot be ignored, however, and, if one of the latter statistics is to be used, its true distribution must be found. This has been done for the case when X is normal. Multiplying numerator and denominator by![]() , the second statistic † above, i.e., the so-called t statistic, takes on a recognizable form:

, the second statistic † above, i.e., the so-called t statistic, takes on a recognizable form:

The numerator is ![]() times a N(0,l) random variable. The denominator being the square root of the χ2-distributed variable, (n – 1)S2*/σ2, is χ-distributed, CHI(n – 1), as defined in Sec. 3.4.3. In that same section a variable of the form of T was said to have the “t distribution” with n – 1 degrees of freedom, if numerator and denominator are independent. That S2* and

times a N(0,l) random variable. The denominator being the square root of the χ2-distributed variable, (n – 1)S2*/σ2, is χ-distributed, CHI(n – 1), as defined in Sec. 3.4.3. In that same section a variable of the form of T was said to have the “t distribution” with n – 1 degrees of freedom, if numerator and denominator are independent. That S2* and ![]() , both functions of the same n normally distributed random variables X1, X2, . . ., Xn, are independent is hardly to be expected, but is in fact true. The verification is lengthy and will not be shown here.‡

, both functions of the same n normally distributed random variables X1, X2, . . ., Xn, are independent is hardly to be expected, but is in fact true. The verification is lengthy and will not be shown here.‡

The t statistic, Eq. (4.1.51), has a somewhat broader distribution than the corresponding normally distributed statistic, ![]() , associated with a known standard deviation σ. See Fig. 3.4.4. This fact merely reflects the greater uncertainty which is introduced if there is a lack of knowledge of the true value of σ. For the same value of 1 – α, we should expect somewhat wider confidence intervals on m in this case. This will be demonstrated.

, associated with a known standard deviation σ. See Fig. 3.4.4. This fact merely reflects the greater uncertainty which is introduced if there is a lack of knowledge of the true value of σ. For the same value of 1 – α, we should expect somewhat wider confidence intervals on m in this case. This will be demonstrated.

Having at hand the distribution of the statistic ![]() (see Table A.3), we can find a confidence interval on m by following the usual pattern.

(see Table A.3), we can find a confidence interval on m by following the usual pattern.

Write an appropriate probability statement:

in which tα/2,n–1 is that (tabulated) value which a random variable with a t distribution with parameter n – 1 exceeds with probability α/2.

Isolate the parameter of interest:

Substitute in the inequality the observed values of the sample statistics. The (1 – α) 100 percent two-sided confidence interval on m is

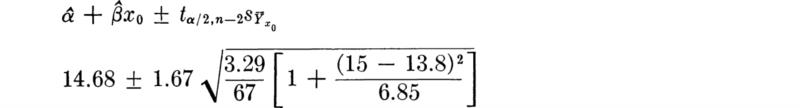

In the bar-yield force example with n = 27, ![]() = 8490, and s* = 105, the 95 percent confidence intervals on m are

= 8490, and s* = 105, the 95 percent confidence intervals on m are

which, upon substitution from Table A.3 of t0.025,26 = 2.056, yields

![]()

These limits are wider by a factor 2.056/1.963 = 1.05 than those which would be obtained if σ had been assumed to be known with certainty to equal the observed yalue of S*, namely, 105 lb. For sample sizes of 5, 10, and 15 this factor is 1.31, 1.16, and 1.08. For sample sizes larger than about 25, it is clearly usually sufficient to use the test based on the normal distribution assuming σ = s*. In other words, for large n, T is very nearly N(0,l) (see Fig. 3.4.4).

Choice of Sample Size The engineer is occasionally in a situation where he can “design” his sampling procedure to yield confidence limits of a desired width with a prescribed confidence. In other words he can determine n, the sample size needed to yield an estimate of m, say, with specified accuracy and confidence.

Consider the following example. It is typical of practice recommended by the American Concrete Institute for determining the required number of times to sample a batch of concrete in order to estimate its mean strength for quality control purposes. The strength is assumed to be normally distributed. Assume that we desire a one-sided, lower, 90 percent confidence interval on the mean which has an interval width of no more than about 10 percent of the mean. Considering a one-sided version of Eq. (4.1.54), we want to pick n such that

It has been observed that the coefficient of variation of concrete strength under fixed conditions of control remains about constant when the mean is altered. (See Prob. 2.45.) Here, say it is about 15 percent. Thus we expect s* to be about 0.15![]() , and we seek n such that

, and we seek n such that

Using Table A.3, we find that a value of n equal to 5 gives

A sample of size five should be used to meet the stated specifications.

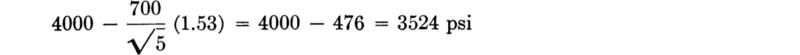

After taking a sample of size 5 and observing, for example, ![]() = 4000 psi and s* = 700 psi, the engineer could say, with 90 percent confidence, that the true mean is greater than

= 4000 psi and s* = 700 psi, the engineer could say, with 90 percent confidence, that the true mean is greater than

Notice that the required number of samples depends upon the desired confidence, the desired width of the interval, and the estimated coefficient of variation of the concrete strength (the last is considered a measure of the quality of the control practiced by the contractor).

Comments For the reasons stated in this section the engineer who has, for example, observed ![]() and calculated confidence limits should not say “The probability that m lies between

and calculated confidence limits should not say “The probability that m lies between![]() ,” but rather just “the (1 – α) 100 percent confidence limits on m are

,” but rather just “the (1 – α) 100 percent confidence limits on m are ![]()

![]() ” Superficially, the distinction may seem to be one of semantics only, but it is of fundamental importance in the theory of statistics. The reader will, however, hear statements like the former one made by engineers who use statistical methods frequently. Such “probability statements” remain the most natural way of describing the situation and of conveying the second kind of uncertainty, that surrounding the value of a parameter. Consequently, such statements should probably not be discouraged, as they seem to express the way engineers operate with such limits. Fortunately, the more liberal interpretation of probability, namely, that associated with degree of belief (Sec. 2.1 and Chap. 5) permits and encourages this pragmatic, operational use of confidence limits, as we shall see in Chap. 6.

” Superficially, the distinction may seem to be one of semantics only, but it is of fundamental importance in the theory of statistics. The reader will, however, hear statements like the former one made by engineers who use statistical methods frequently. Such “probability statements” remain the most natural way of describing the situation and of conveying the second kind of uncertainty, that surrounding the value of a parameter. Consequently, such statements should probably not be discouraged, as they seem to express the way engineers operate with such limits. Fortunately, the more liberal interpretation of probability, namely, that associated with degree of belief (Sec. 2.1 and Chap. 5) permits and encourages this pragmatic, operational use of confidence limits, as we shall see in Chap. 6.

The use of confidence limits is most appropriate in reporting of data. It provides a convenient and concise method of reporting which is understood by a wide audience of readers, and which encourages communication of measures of uncertainty as well as simply “best” (or point) estimates of parameters. In spite of the somewhat arbitrary nature of the conventionally used confidence levels, and in spite of the philosophical difficulties surrounding their interpretation, confidence limits remain, therefore, useful conventions.

A widely used rule for choosing the sample† statistic to be used in estimation often replaces the method of moments described in Sec. 4.1.1. This is called the method of maximum likelihood. In addition to prescribing the statistic which should be used, it provides an approximation of the distribution of that statistic, so that approximate confidence intervals may be constructed.

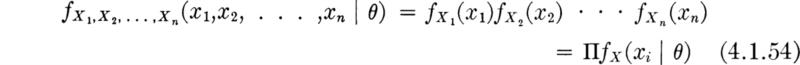

The likelihood function The method of maximum likelihood makes use of the sample-likelihood function. Suppose for the moment that we know the parameter(s) θ of the distribution of X. Then we can write down the joint probability distribution of a (random) sample X1, X2, . . ., Xn,

The expression has been written in a manner, f(· | θ), which emphasizes that the parameter is known. If, for example, the underlying random variable is T and it has an exponential distribution with parameter λ,

Placing ourselves now in a position of having sampled and observed specific values X1 = x1, X2 = x2, . . ., Xn = xn, but not knowing the parameter θ, we can look upon the right-hand side of the function above [Eq. (4.1.54)] as a function of θ only. It gives the relative likelihood of having observed this particular sample, x1, x2, . . ., xn, as a function of θ. To emphasize this, it is written

and is called the likelihood function of the sample. For the exponential example, the observed values t1, t2, .. ., tn determine a number ![]() , and the likelihood function of the parameter λ is

, and the likelihood function of the parameter λ is

The likelihood function is sketched in Fig. 4.1.5 for the data reported in Table 4.1.2. The vertical scale is arbitrary, since only relative values will be of interest.

The likelihood function is subject also to a looser, inverse interpretation. It can be said to give, in addition to the relative likelihood of the particular sample for a given θ, the relative likelihood of each possible value of θ given the sample. This intuitively appealing interpretation suggests that values of θ for which the observed sample was relatively speaking more likely, are, relatively speaking, the more likely values of the unknown parameter θ. In Fig. 4.1.5, for example, values of λ between 0.12 and 0.14 are, in light of the observed sample, “more likely” to be the true value of λ than a value near 0.10 or 0.16. Likelihood functions will be used extensively in Chaps. 5 and 6 to represent the information contained in the observed sample about the relative likelihoods of the various possible parameter values.

Fig. 4.1.5 Traffic-interval illustration: likelihood function on λ.

Maximum-likelihood criterion for estimation The likelihood function is used to determine parameter estimators. Formally, the rule for obtaining a maximum-likelihood estimator is:

The maximum-likelihood estimator of θ is the value ![]() which causes the likelihood function L(θ) to be a maximum.

which causes the likelihood function L(θ) to be a maximum.

For example, in the exponentially distributed traffic-interval illustration, it is clear from Fig. 4.1.5 that the most likely value of λ, i.e., the maximum-likelihood estimate of λ, is about 0.13. More generally we seek ![]() to maximize Eq. (4.1.57):

to maximize Eq. (4.1.57):

Any of the many techniques for seeking the maximum of a function are acceptable. One which is frequently appropriate in maximum-likelihood estimation problems is to find the maximum of the logarithm of the function† by setting its derivative equal to zero. Here:

The derivative (with respect to λ), when set equal to zero, yields

Solving for ![]() , the value which maximizes In L (and L),

, the value which maximizes In L (and L),

which equals ![]() and hence (only coincidentally) is equivalent to the estimator suggested by the method of moments, Eq. (4.1.7).

and hence (only coincidentally) is equivalent to the estimator suggested by the method of moments, Eq. (4.1.7).

The two estimators, given by the method of moments and the method of maximum likelihood, may not be identical. The reader is asked to show in Prob. 4.20 that the method-of-moments estimator of the parameter b of a random variable known to have distribution uniform on the interval 0 to b is 2![]() , and the maximum-likelihood estimator is X(n) =

, and the maximum-likelihood estimator is X(n) = ![]() , the largest observed value in the sample. Note that the method of moments might lead to an estimated value of the theoretical upper value b that is less than the largest value observed!

, the largest observed value in the sample. Note that the method of moments might lead to an estimated value of the theoretical upper value b that is less than the largest value observed!

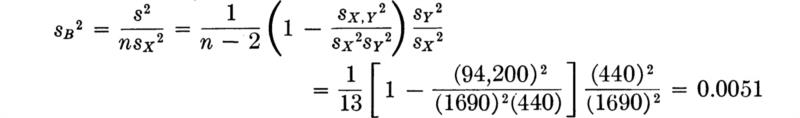

Two or more parameters It should be clear that these notions can be easily generalized to those situations where two or more (say r) parameters must be estimated to define a distribution. Call this vector of parameters θ = {θ1, θ2, . . . , θr}. The method of maximum likelihood states: choose the estimators ![]() to maximize the likelihood

to maximize the likelihood

or, equivalently, the log likelihood (if appropriate to the particular problem)

This maximization will typically require solving a set of r equations

The solution may also be subject to certain constraints, for example, ![]() > 0 or

> 0 or ![]() max [xi]. The solution of the system is not always possible in closed form; in complex problems, computer-automated trial-and-error search techniques are often employed to find the

max [xi]. The solution of the system is not always possible in closed form; in complex problems, computer-automated trial-and-error search techniques are often employed to find the ![]() values which maximize the likelihood function.

values which maximize the likelihood function.

Illustration: Maximum-likelihood estimators in the normal case As an illustration, we seek the maximum-likelihood estimators of the parameters m and σ of the normal distribution when both are unknown. The likelihood function is

The presence of exponentials suggests in this case an attack on the log-likelihood function

To choose ![]() and

and ![]() to maximize this function, we set the partial derivatives equal to zero:

to maximize this function, we set the partial derivatives equal to zero:

and solve these simultaneous equations for ![]() and

and ![]() . Since 0 < σ < ∞, the former equation is satisfied only if

. Since 0 < σ < ∞, the former equation is satisfied only if

Substituting this expression into the second equation and solving for ![]() gives

gives

Properties of maximum-likelihood estimators As sample statistics or functions of the observations, the estimators are, before the experiment, random variables and can be studied as such. In fact, the major advantage of maximum-likelihood estimators is that their properties have been thoroughly studied and are well known, virtually independently of the particular density function under consideration. Noting the summation form of Eq. (4.1.64), one should not be surprised to learn that the estimators ![]() are approximately (for large n), normally distributed.† Their means are (asymptotically, as n → ∞) equal to the true parameter values θ ; that is, the estimators are asymptotically unbiased. Their variances (or mean square errors) are (asymptotically) equal to

are approximately (for large n), normally distributed.† Their means are (asymptotically, as n → ∞) equal to the true parameter values θ ; that is, the estimators are asymptotically unbiased. Their variances (or mean square errors) are (asymptotically) equal to

For example, the approximate variance of the estimator of the parameter λ in the exponential illustration is found as follows:

In this case the result is simply a constant independent of the random variable T. Therefore, the expectation is simply that of a constant or the constant itself:

and [Eq. (4.1.71)]

An estimate of this approximate variance is found by substituting ![]() for λ, which gives

for λ, which gives ![]() .

.

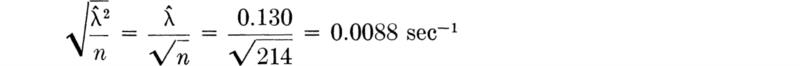

In the car-following data the standard deviation of ![]() , the estimator of λ, is approximately

, the estimator of λ, is approximately

Note once again the characteristic decrease in the expected square error of an estimator by a factor 1/n.

In addition to being asymptotically unbiased (that is, ![]() ), the maximum-likelihood estimators can be shown (e.g., Freeman [1963]) to have, at least asymptotically, the additional desirable properties that they have minimum expected squared error among all possible unbiased estimators (hence they are said to be efficient). They also generally give the same estimator for a function of a parameter g(θ) as that found by simply substituting the estimator† of θ, that is,

), the maximum-likelihood estimators can be shown (e.g., Freeman [1963]) to have, at least asymptotically, the additional desirable properties that they have minimum expected squared error among all possible unbiased estimators (hence they are said to be efficient). They also generally give the same estimator for a function of a parameter g(θ) as that found by simply substituting the estimator† of θ, that is, ![]() =

= ![]() (i.e., they are said to be invariant); they will with increasingly high probability be arbitrarily close to the true values (i.e., they are said to be consistent)) and they make maximum use of the information contained in the data (i.e., they are said to be sufficient). If the available sample sizes are large, there seems little doubt that the maximum-likelihood estimator is a good choice. It should be emphasized, however, that the properties above are asymptotic (large n), and better (in some sense) estimators† may be available when sample sizes are small.

(i.e., they are said to be invariant); they will with increasingly high probability be arbitrarily close to the true values (i.e., they are said to be consistent)) and they make maximum use of the information contained in the data (i.e., they are said to be sufficient). If the available sample sizes are large, there seems little doubt that the maximum-likelihood estimator is a good choice. It should be emphasized, however, that the properties above are asymptotic (large n), and better (in some sense) estimators† may be available when sample sizes are small.

In this section we have investigated the problem of obtaining from data estimates of the parameters of a probabilistic model, such as a random variable X. We worked with estimators (i.e., certain functions of the sample, X1, X2, . . ., Xn); the estimators considered were those suggested by general rules, either the method of moments (Sec. 4.1.1) or the method of maximum likelihood (Sec. 4.1.4).

The customary estimator of the population mean mX is the sample mean ![]() :

:

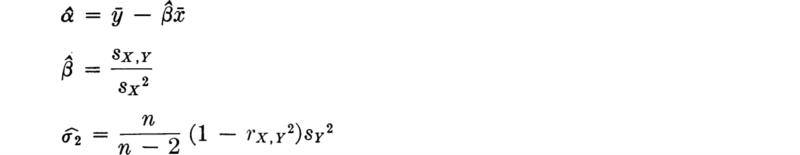

while the conventional estimate of the variance σx2 is the sample variance, defined either as

or as

The choice between these estimators is not clear-cut, but the latter is used somewhat more frequently in practice.

An estimator ![]() is studied by familiar methods once it is recognized that it is a random variable and a function of X1 X2 . . ., Xn. The estimator’s mean E

is studied by familiar methods once it is recognized that it is a random variable and a function of X1 X2 . . ., Xn. The estimator’s mean E ![]() is compared with the true value of the parameter d. If they are equal, the estimator is said to be unbiased. The mean square error of any estimator is the sum of its variance and its squared bias, E

is compared with the true value of the parameter d. If they are equal, the estimator is said to be unbiased. The mean square error of any estimator is the sum of its variance and its squared bias, E![]() – θ is,

– θ is,

![]()

Generally we seek unbiased estimators with small mean square errors.

The distribution of an estimator can be used to obtain confidence-interval estimates on a parameter at prescribed confidence levels. These are obtained by “turning inside out” probability statements on the estimator ![]() to obtain random limits

to obtain random limits ![]() and

and ![]() which are (1 – α) 100 percent likely to contain the true parameter. After a sample has been observed, these limits are evaluated and called the (1 – α) 100 percent confidence interval on the true parameter.

which are (1 – α) 100 percent likely to contain the true parameter. After a sample has been observed, these limits are evaluated and called the (1 – α) 100 percent confidence interval on the true parameter.

A very common and most vexing problem that engineers face is the assessment of significance in scattered statistical data. For example, given a set of observed concrete cylinder strengths, say 4010, 3880, 3970, 3780, and 3820 psi, should a materials engineer report that the true mean strength of the batch of concrete is less than the design value of 4000 psi? Or to put the question differently, in light of the inherent variation in such observations, is the observed sample average

![]()

significantly less than the presumed value of 4000 psi? If the engineer had twice as many samples, but their average happened to be the same, 3892 psi, would this not be a “more significant” deviation from the presumed mean? Suppose an engineer has installed a new traffic-control device in an attempt to enhance the safety of an intersection. In the 3 months prior to the installation there were four major accidents there; in the 3 months following the installation there were two. Can the engineer conclude with confidence that the device has been effective? That is, has it reduced the mean accident rate? Is this limited data sufficient to justify such a conclusion? In different words, is the difference between 4 and 2 significant when one recognizes the inherently probabilistic nature of accident occurrences?

Generally speaking we state such problems as follows. The engineer considers a particular assumption about his model (e.g., “the true mean strength is 4000 psi” or “the mean accident rate is unchanged”). Given data, he wants to evaluate this tentative hypothesis. If the hypothesis is assumed to be correct, the engineer can calculate the expected results of an experiment. If the data are observed to be significantly different from the expected results, then the engineer must consider the assumption to be incorrect. The technical question to be answered is, “What represents a significant difference?”

Although many such problems in engineering are most effectively treated in the context of the modern decision theory to be presented in Chaps. 5 and 6, a simpler procedure has also been developed. Like confidence limits, this method of assessing the significance of data has the merit of being a widely used convention. Its use is therefore a convenient way to communicate to others the method by which the data were analyzed and the criterion by which the assumption or model was judged.

In this section we shall concentrate on relatively simple model assumptions; in particular, we shall test hypotheses about model parameter values. In Sec. 4.4 we shall treat the verification of the model or distribution as a whole, including its shape as well as its parameters.

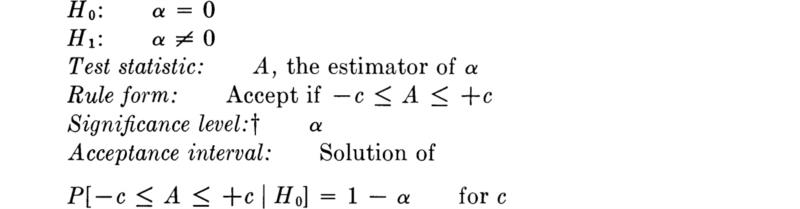

This conventional procedure for drawing simple conclusions from observed statistical data is called hypothesis testing. Typical examples include: a product-development engineer who wants to decide whether or not a new concrete additive has a significant influence on curing time; a soils engineer who wants to report whether a simple, in-the-field “vane test” provides an unbiased estimate of the lab-measured unconfined compressive strength of soil; and a water-resources engineer who wants to verify an assumption used in his system model, namely, that the correlation coefficient between the monthly rainfalls on two particular watersheds is zero.

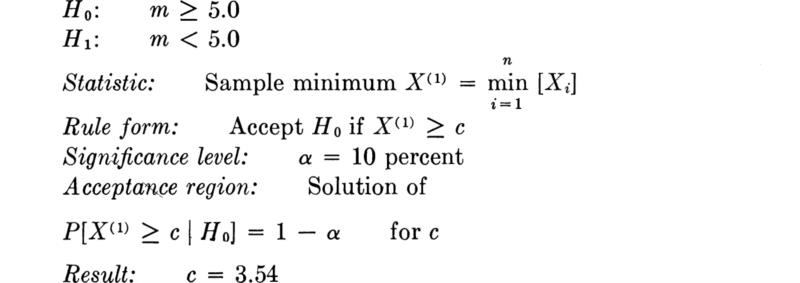

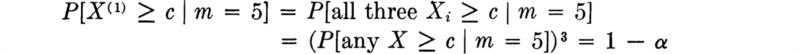

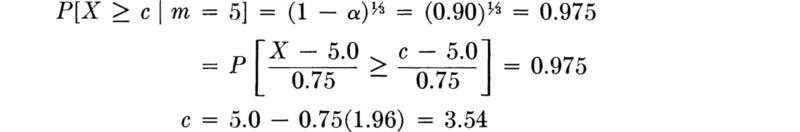

We shall develop the conventional treatment of such problems through the following simple example. Even under properly controlled production, asphalt content varies somewhat from batch to batch. A materials-testing engineer must report, on the basis of three batches, whether the mean asphalt content of all batches of material leaving a particular plant is equal to the desired value of 5 percent. The concern is that unknown changes in the mixing procedure or raw materials supply might have increased or decreased this mean. Based on previous experience the engineer is certain that, no matter what the mean, the asphalt content in different batches can be assumed to be normally distributed with a known standard deviation of 0.75 percent.

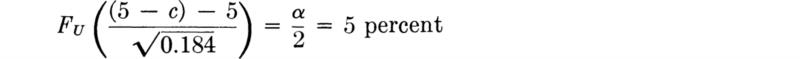

It is important to appreciate the basic dilemma. The engineer must use information in the sample to make his decision. This information is usually summarized in some sample statistic, such as the sample average ![]() . The finite size of the sample implies that any sample statistic calculated from the observations is a random variable with some nonzero variance. For example,

. The finite size of the sample implies that any sample statistic calculated from the observations is a random variable with some nonzero variance. For example, ![]() is a normally distributed random variable with a variance of (0.75) 2/3 = (0.43)2 = 0.184 and a mean m equal to the (unknown) true mean of the plant’s present production. Therefore the observed value of this sample statistic may lie some distance from the expected value of 5 even if this is the true value of the mean. On the other hand, if the true value of the mean asphalt content is no longer 5 but has slipped to 4.5 or increased to 5.6, the observed sample average may nonetheless lie close to the hypothesized value of 5. Thus, with limited data, the engineer cannot hope to be correct every time he makes a report. In some cases he will report that the mean is not 5 when it is; and in other cases he will report that it is 5 when it is not. At best he can ask for an operating rule which recognizes the inherent variability of the data and the size of his sample, and which promises some consistency in approach to this and similar questions he must report on. Preferably he would like to have some measure, too, of the percentage of times he will make one mistake or the other.

is a normally distributed random variable with a variance of (0.75) 2/3 = (0.43)2 = 0.184 and a mean m equal to the (unknown) true mean of the plant’s present production. Therefore the observed value of this sample statistic may lie some distance from the expected value of 5 even if this is the true value of the mean. On the other hand, if the true value of the mean asphalt content is no longer 5 but has slipped to 4.5 or increased to 5.6, the observed sample average may nonetheless lie close to the hypothesized value of 5. Thus, with limited data, the engineer cannot hope to be correct every time he makes a report. In some cases he will report that the mean is not 5 when it is; and in other cases he will report that it is 5 when it is not. At best he can ask for an operating rule which recognizes the inherent variability of the data and the size of his sample, and which promises some consistency in approach to this and similar questions he must report on. Preferably he would like to have some measure, too, of the percentage of times he will make one mistake or the other.

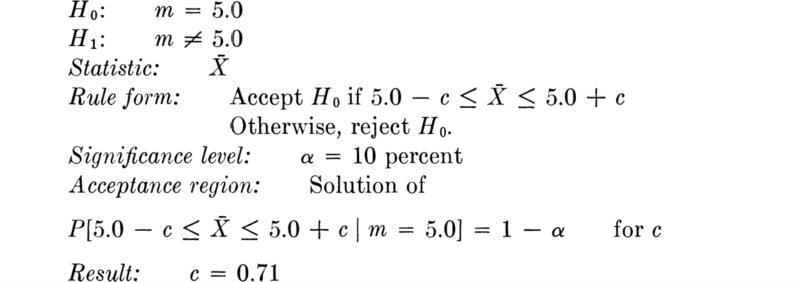

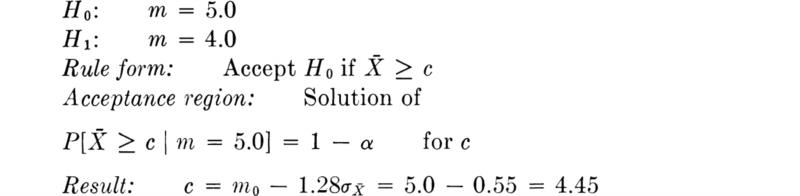

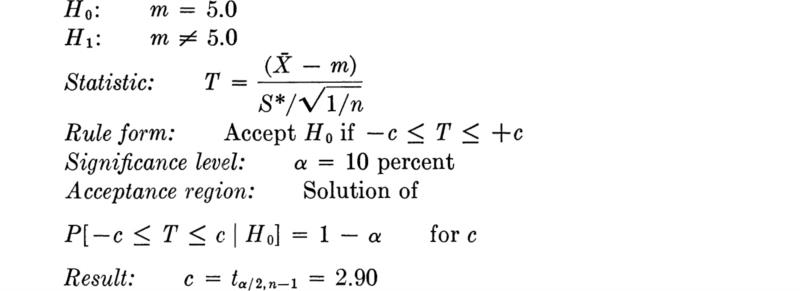

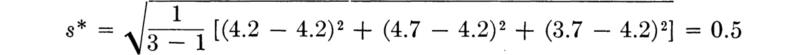

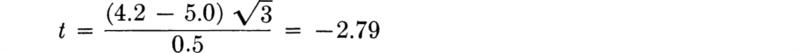

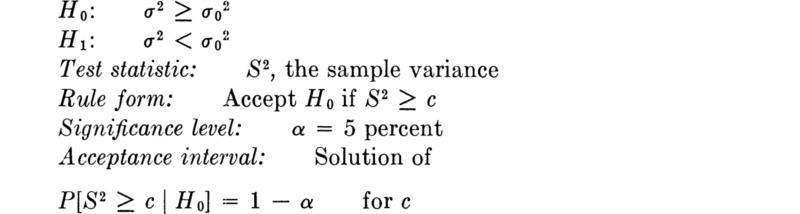

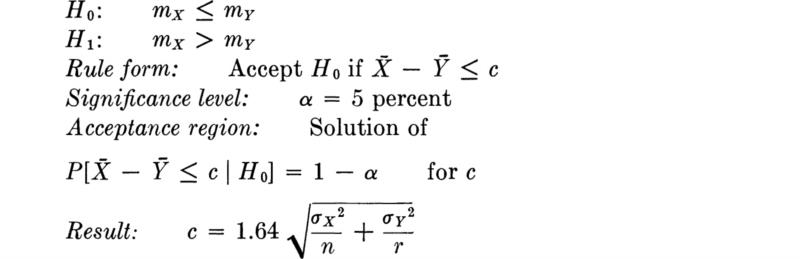

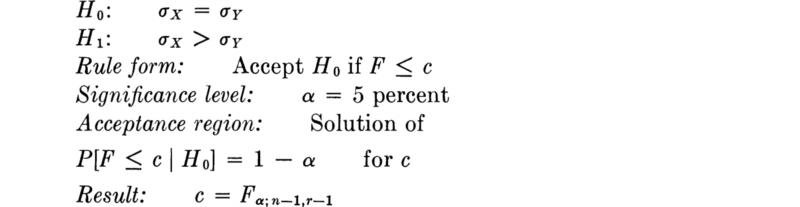

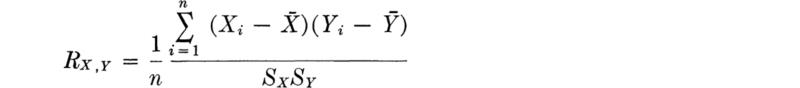

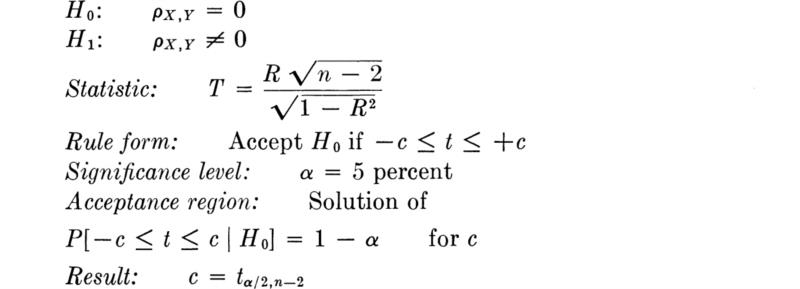

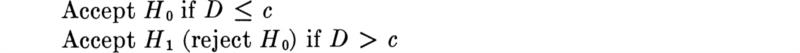

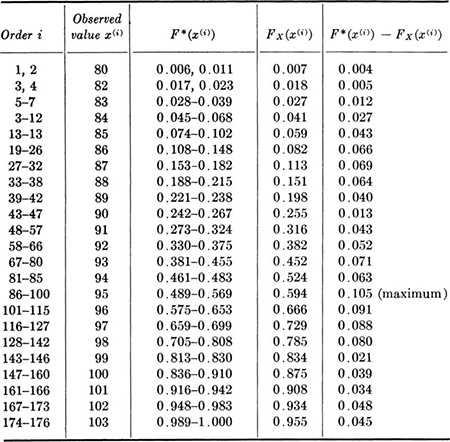

Hypothesis testing is a method which is commonly used in such routine decisions. It provides most of these desired consistency properties, and, being a widely used convention, it simplifies communication among engineers. If the materials engineer reports that he “accepted the hypothesis that the true mean is 5 at the 10 percent significance level/’ trained readers of the report will know first, and most importantly, that the engineer has studied and considered the scatter in the data, and, second, precisely the way by which he reached his conclusion, complete with the implied risks and limitations. The method is objective in that, under the convention, two engineers faced with the same data will report the same conclusion.